1. Introduction

Deep neural networks have recently emerged as a promising technique for use in tasks across the various traditional disciplines of actuarial science, including pricing, reserving, experience analysis, and mortality forecasting. Moreover, deep learning has been applied in emerging areas of actuarial practice, such as analysis of telematics data, natural language processing, and image recognition. These techniques provide significant gains in accuracy compared to traditional methods, while the resulting models remain closely connected to the generalized linear models (GLMs) currently used in the non-life insurance industry for pricing policies and for reserving for claims not yet settled.

On the other hand, one seeming disadvantage of neural network models is that the output from these models may exhibit undesirable characteristics for actuarial purposes. The first issue is that predictions may vary in a rough manner with changes in insurance risk factors. In some contexts, such as general insurance pricing, this may be problematic to explain to customers, intermediaries, or other stakeholders or may indicate problems with data credibility. For example, consider a general insurance pricing model that uses driver age as a risk factor. Usually, from a commercial perspective, as a customer ages, it is expected that her insurance rates would change smoothly. However, unconstrained output from neural networks may produce rates that vary roughly with age. It is difficult to explain to customers why rates might increase one year and then decrease the next, and, moreover, extra costs might arise from needing to address these types of queries. A second issue is that actuaries often wish to constrain predictions from models to increase (or decrease) in a monotonic manner with some risk factors, for example, increasing sums insured should lead, other things being equal, to higher average costs per claim and worsening bonus-malus scores should imply higher expected frequencies of claims. Although constraining GLM parameters relating to insurance risk factors to be smooth or exhibit monotonicity is trivial, since the coefficients of GLMs can be modified directly, methods to introduce these constraints into deep neural networks have not yet been developed. Thus, a significant barrier for the adoption of neural networks in practice exists.

Typical practice when manually building actuarial models is that actuaries attempt to enforce smoothness or monotonicity constraints by modifying model structure or coefficients manually, as mentioned above, by applying relevant functional forms within models, such as parametric functions, by regularization, or by applying post hoc smoothing techniques; we mention, e.g., Whittaker–Henderson smoothing (Whittaker, 1922; Henderson, 1924), which adds regularization to parameters. In the case of GLMs for general insurance pricing, the simple linear structure of these models is often exploited by first fitting an unconstrained GLM and then specifying some simplifications whereby coefficients are forced to follow the desired functional form or meet smoothness criteria. In more complex models, such as neural networks, operating directly on model coefficients or structure is less feasible.

To overcome this challenge, we present methods for enforcing smoothness and monotonicity constraints within deep neural network models. The key idea can be summarized as follows: as a first step, insurance risk factors that should be constrained are identified, and then, the datasets used for model fitting are augmented to produce pseudo-data that reflect the structure of the identified variables. In the next step, we design a multi-input/multi-output neural network that can process jointly the original observation as well as the pseudo-data. Finally, a joint loss function is used to train the network to make accurate predictions while enforcing the desired constraints on the pseudo-data. We show how these models can be trained and provide example applications using a real-world general insurance pricing dataset.

The method we propose can be related to the Individual Conditional Expectation (ICE) model interpretability technique of Goldstein et al. (2015). Therefore, we call our proposal the ICEnet, to emphasize this connection. To enable the training of neural networks with constraints, the ICEnet structures networks to output a vector of predictions derived from the pseudo-data input to the network; these predictions derived from the network for the key variables of interest on the pseudo-data are exactly equivalent to the outputs used to derive an ICE plot. The selected constraints then constrain these ICEnet outputs from the network to be as smooth or monotonic as required. For this purpose, we will use fully connected networks (FCNs) with embedding layers for categorical variables to perform supervised learning, and, in the process, create the ICEnet outputs for the variables of interest.

Literature review. Practicing actuaries often require smoothed results of experience analyses in both general and life insurance, usually on the assumption that outputs of models that do not exhibit smoothness are anomalous. For example, Goldburd et al. (2016) and Anderson et al. (2007) discuss manual smoothing methods in the context of general insurance pricing with GLMs. In life insurance, two main types of techniques are used to graduate (i.e., smooth) mortality tables; in some jurisdictions, such as the United Kingdom and South Africa, combinations of polynomial functions have often been used to fit mortality data directly, see, for example, Forfar et al. (1987), whereas post hoc smoothing methods such as Whittaker–Henderson smoothing (Whittaker, 1922; Henderson, 1924) have often been used in the United States. The use of splines and generalized additive models (GAMs) has also been considered for these purposes, see Goldburd et al. (2016) in the context of general insurance and Debón et al. (2006) in the context of mortality data.

More recently, penalized regression techniques have been utilized for variable selection and categorical level fusion for general insurance pricing. These are often based on the Least Absolute Shrinkage and Selection Operator (LASSO) regression of Tibshirani (1996) and its extensions (Hastie et al., 2015); here, we mention the fused LASSO, which produces model coefficients that vary smoothly, see Tibshirani et al. (2005), and which is particularly useful for deriving smoothly varying models for ordinal categorical data. In the actuarial literature, example applications of the constrained regression approach are Devriendt et al. (2021), who propose an algorithm for incorporating multiple penalties for complex general insurance pricing data, and Henckaerts et al. (2018), who provide a data-driven binning approach designed to produce GLMs which closely mirror smooth GAMs.

Within the machine and deep learning literature, various methods for enforcing monotonicity constraints within gradient boosting machines (GBMs) and neural network models have been proposed. Monotonicity constraints are usually added to GBMs by modifying the process used to fit decision trees when producing these models; an example of this can be found in the well-known XGBoost library of Chen & Guestrin (2016). Since these constraints are implemented specifically by modifying how the decision trees underlying the GBM are fit to the data, the same process cannot be applied for neural network models. Within the deep learning literature, monotonicity constraints have been addressed by constraining the weights of neural networks to be positive, see, for example, Sill (1997) and Daniels & Velikova (2010), who generalize the earlier methods of Sill (1997), or by using specially designed networks, such as the lattice networks of You et al. (2017). In the finance literature, Kellner et al. (2022) propose a method to ensure monotonicity of multiple quantiles output from a neural network, by adding a monotonicity constraint to multiple outputs from the network; this idea is similar to what we implement below. Another approach to enforcing monotonicity involves post-processing the outputs of machine learning models with a different algorithm, such as isotonic regression; see Wüthrich & Ziegel (2023) for a recent application of this to ensure that outputs of general insurance pricing models are autocalibrated.

On the other hand, the machine and deep learning literature seemingly has not addressed the issue of ensuring that predictions made with these models vary smoothly, and, moreover, the methods proposed within the literature for monotonicity constraints cannot be directly applied to enforce smoothness constraints. Thus, the ICEnet proposal of this paper, which adds flexible penalties to a specially designed neural network, fills a gap in the literature by showing how both monotonicity and smoothness constraints can be enforced with the same method in deep learning models.

Structure of the manuscript. The rest of the manuscript is structured as follows. Section 2 provides notation and discusses neural networks and machine learning interpretability. Section 3 defines the ICEnet, which is applied in a simulation study in Section 4 and to a French Motor Third Party Liability dataset in Section 5. A local approximation to the ICEnet is presented in Section 6. Discussion of the results and conclusions are given in Section 7. The supplementary material provides the code as well as further results and numerical analysis of the ICEnet.

2. Neural networks and individual conditional expectations

We begin by briefly introducing supervised learning with neural networks, expand these definitions to FCNs using embedding layers, and discuss their training process. With these building blocks, we then present the ICEnet proposal in the next section.

2.1 Supervised learning and neural networks

We work in the usual setup of supervised learning. Independent observations $y_n \in{\mathbb{R}}$ of a variable of interest have been made for instances (insurance policies) $1 \le n \le N$. In addition, covariates ${\boldsymbol{x}}_n$ have been collected for all instances $1\le n \le N$. These covariates can be used to create predictions $\widehat{y}_n \in{\mathbb{R}}$ of the variable of interest. In what follows, note that we drop the subscript from ${\boldsymbol{x}}_n$ for notational convenience. The covariates in $\boldsymbol{x}$ are usually of two main types in actuarial tasks: the first of these are real-valued covariates ${\boldsymbol{x}}^{[r]} \in{\mathbb{R}}^{q_r}$, where the superscript $[\cdot ]$ represents the subset of the vector $\boldsymbol{x}$, $r$ is the set of real-valued covariates and where there are $q_r$ real-valued variables. The second type of covariates are categorical, which we assume to have been encoded as positive integers; we represent these as ${\boldsymbol{x}}^{[c]} \in{\mathbb N}^{q_c}$, where the set of categorical covariates is $c$. Thus, ${\boldsymbol{x}} = ({\boldsymbol{x}}^{[r]},{\boldsymbol{x}}^{[c]})$. In actuarial applications, often predictions are proportional to a scalar unit of exposure $v_n \in{\mathbb{R}}^+$, thus, each observation is a tensor $(y,{\boldsymbol{x}}^{[r]},{\boldsymbol{x}}^{[c]}, v) \in{\mathbb{R}} \times{\mathbb{R}}^{q_r} \times{\mathbb N}^{q_c} \times{\mathbb{R}}^+$.

In this work, we will use deep neural networks, which are efficient function approximators, for predicting $y$. We represent the general class of neural networks as $\Psi _W({\boldsymbol{x}})$, where $W$ are the network parameters (weights and biases). Using these, we aim to study the regression function

\begin{equation*} \Psi _W \;:\;{\mathbb{R}}^{q_r} \times{\mathbb N}^{q_c} \to{\mathbb{R}}, \qquad{\boldsymbol{x}} \, \mapsto \, \widehat{y} = \Psi _W({\boldsymbol{x}})\, v. \end{equation*}

We follow Chapter 7 of Wüthrich & Merz (2023) for the notation defining neural networks. Neural networks are machine learning models constructed by composing non-linear functions (called layers) operating on the vector $\boldsymbol{x}$, which is the input to the network. A network consisting of only a single layer of non-linear functions has depth $d=1$ and is called a shallow network. More complex networks consisting of multiple layers with depth $d \ge 2$ are called deep networks. We denote the $i$-th layer by $\boldsymbol{z}^{(i)}$. These non-linear functions (layers) transform the input variables $\boldsymbol{x}$ into new representations, which are optimized to perform well on the supervised learning task, using a process called representation learning. Representation learning can be denoted as the composition

\begin{equation*} {\boldsymbol{x}} \, \mapsto \, \boldsymbol{z}^{(d:1)}({\boldsymbol{x}}) \, \stackrel{{\mathsf{def}}}{=}\, \left (\boldsymbol{z}^{(d)} \circ \cdots \circ \boldsymbol{z}^{(1)} \right )({\boldsymbol{x}}) \, \in \,{\mathbb{R}}^{q_d}, \end{equation*}

where $d \in{\mathbb N}$ is the number of layers $\boldsymbol{z}^{(i)}$ of the network and $q_i \in{\mathbb N}$ are the dimensions of these layers for $1\le i \le d$. Thus, each layer $\boldsymbol{z}^{(i)}\; :\; {\mathbb{R}}^{q_{i-1}} \to{\mathbb{R}}^{q_i}$ transforms the representation at the previous stage to a new, modified representation.

FCNs define the $j$-th component (called neuron or unit) of each layer $\boldsymbol{z}^{(i)}$, $1\le j \le q_i$, as the mapping

\begin{equation} \boldsymbol{z}=(z_1,\ldots, z_{q_{i-1}})^\top \in{\mathbb{R}}^{q_{i-1}} \quad \mapsto \quad z^{(i)}_j(\boldsymbol{z}) = \phi \left (\sum _{k = 1}^{q_{i-1}} w^{(i)}_{j,k} z_k + b^{(i)}_j \right ), \end{equation}

for a non-linear activation function $\phi \;:\;{\mathbb{R}}\to{\mathbb{R}}$, and where $z^{(i)}_j(\!\cdot\!)$ is the $j$-th component of layer $\boldsymbol{z}^{(i)}(\!\cdot\!)$, $w^{(i)}_{j,k}\in{\mathbb{R}}$ is the regression weight of this $j$-th neuron connecting to the $k$-th neuron of the previous layer, $z_k=z^{(i-1)}_k$, and $b^{(i)}_j\in{\mathbb{R}}$ is the intercept or bias for the $j$-th neuron in layer $\boldsymbol{z}^{(i)}$. It can be seen from (2.1) that the neurons $z^{(i)}_j(\!\cdot\!)$ of an FCN connect to all of the neurons $z^{(i-1)}_k(\!\cdot\!)$ in the previous layer $\boldsymbol{z}^{(i-1)}$ through the weights $w^{(i)}_{j,k}$, explaining the description of these networks as “fully connected.”
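
As a minimal illustration of (2.1), the following Python sketch evaluates a single fully connected layer using only the standard library. The function name `fcn_layer`, the choice of tanh as activation $\phi$, and the toy weights are assumptions made for illustration; they are not taken from the code accompanying this manuscript.

```python
import math


def fcn_layer(z, weights, biases, phi=math.tanh):
    """Apply one fully connected layer in the sense of (2.1).

    z       : list of q_{i-1} values from the previous layer
    weights : q_i rows, each holding the q_{i-1} weights w_{j,k} of neuron j
    biases  : list of the q_i biases b_j
    phi     : activation function (tanh is an illustrative assumption)
    """
    return [
        phi(sum(w_jk * z_k for w_jk, z_k in zip(w_j, z)) + b_j)
        for w_j, b_j in zip(weights, biases)
    ]


# Toy layer mapping R^3 -> R^2 with made-up parameters.
z_prev = [0.5, -1.0, 2.0]
W1 = [[0.1, 0.2, 0.3], [0.0, -0.5, 0.25]]
b1 = [0.0, 0.1]
z_next = fcn_layer(z_prev, W1, b1)
```

Each row of `W1` plays the role of the weights $w^{(i)}_{j,\cdot}$ of one neuron, so every output neuron sees every input, which is the "fully connected" property described above.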

Combining these layers, a generic FCN regression function can be defined as follows

\begin{equation} {\boldsymbol{x}} \, \mapsto \, \Psi _W({\boldsymbol{x}}) \, \stackrel{{\mathsf{def}}}{=}\, g^{-1} \left ( \sum _{k=1}^{q_d} w^{(d+1)}_k z_k^{(d:1)}({\boldsymbol{x}}) + b^{(d+1)}\right ), \end{equation}

where $g^{-1}(\!\cdot\!)$ is a suitably chosen inverse link function that transforms the outputs of the network to the scale of the observations $y$. The notation $W$ in $\Psi _W({\boldsymbol{x}})$ indicates that we collect all the weights $w^{(i)}_{j,k}$ and biases $b^{(i)}_j$ in $W$, giving us a network parameter of dimension $(q_{d}+1) + \sum _{i=1}^d (q_{i-1}+1)q_i$, where $q_0$ is the dimension of the input $\boldsymbol{x}$.
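
The regression function (2.2) and the parameter count above can be sketched in a few lines of Python. This is an illustrative sketch only: the names `fcn_predict` and `n_parameters`, the tanh activation, the log link (so that $g^{-1} = \exp$), and all numerical values are assumptions rather than choices documented in this section.

```python
import math


def fcn_predict(x, hidden, w_out, b_out, phi=math.tanh, inv_link=math.exp):
    """Evaluate Psi_W(x) as in (2.2).

    hidden   : list of (weights, biases) pairs, one per hidden layer
    w_out    : output weights w^{(d+1)}_k, of length q_d
    b_out    : output bias b^{(d+1)}
    inv_link : inverse link g^{-1}; exp (log link) is assumed here
    """
    z = x
    for weights, biases in hidden:
        z = [phi(sum(w * z_k for w, z_k in zip(row, z)) + b)
             for row, b in zip(weights, biases)]
    return inv_link(sum(w * z_k for w, z_k in zip(w_out, z)) + b_out)


def n_parameters(q):
    """Dimension of W for layer sizes q = [q_0, q_1, ..., q_d]."""
    d = len(q) - 1
    return (q[d] + 1) + sum((q[i - 1] + 1) * q[i] for i in range(1, d + 1))


# Toy network: q_0 = 2 inputs and one hidden layer with q_1 = 2 neurons.
hidden = [([[0.5, -0.5], [0.25, 0.75]], [0.0, 0.1])]
rate = fcn_predict([1.0, 2.0], hidden, w_out=[0.3, -0.2], b_out=-1.0)
exposure = 0.5              # hypothetical exposure v
y_hat = rate * exposure     # prediction y_hat = Psi_W(x) * v
```

For instance, `n_parameters([2, 2])` counts the six weights and biases of the hidden layer plus the three output parameters.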

For most supervised learning applications in actuarial work, a neural network of the form (2.1)–(2.2) is applied to the covariate $\boldsymbol{x}$ to create a single prediction $\widehat{y} = \Psi _W({\boldsymbol{x}})v$. Below, we will define how the same network $\Psi _W(\!\cdot\!)$ can be applied to a vector of observations to produce multiple predictions, which will be used to constrain the network.

State-of-the-art neural network calibration is performed using a Stochastic Gradient Descent (SGD) algorithm applied to mini-batches of observations. To calibrate the network, an appropriate loss function $L(\cdot, \cdot )$ must be selected. For general insurance pricing, the loss function is often the deviance loss function of an exponential family distribution, such as the Poisson distribution for frequency modeling or the Gamma distribution for severity modeling. For more details on the SGD procedure, we refer to Goodfellow et al. (2016), and for a detailed explanation of exponential family modeling for actuarial purposes, see Chapters 2 and 4 of Wüthrich & Merz (2023).
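
To make the notion of a deviance loss concrete, the sketch below computes the average Poisson deviance between observed claim counts and predicted means, where the means would be of the form $\Psi _W({\boldsymbol{x}}_n)v_n$. The function name `poisson_deviance`, the averaging over instances, and the toy numbers are assumptions for illustration; the loss actually used for the ICEnet is defined later in the manuscript.

```python
import math


def poisson_deviance(y, mu):
    """Average Poisson deviance between counts y and predicted means mu.

    Each term is 2 * (mu - y + y * log(y / mu)), using the convention
    that y * log(y / mu) = 0 when y = 0. All mu must be positive.
    """
    total = 0.0
    for y_n, mu_n in zip(y, mu):
        log_term = y_n * math.log(y_n / mu_n) if y_n > 0 else 0.0
        total += 2.0 * (mu_n - y_n + log_term)
    return total / len(y)


# Hypothetical claim counts and predicted means (rate times exposure).
claims = [0, 1, 0, 2]
means = [0.1, 0.6, 0.05, 1.2]
loss = poisson_deviance(claims, means)
```

The deviance is zero when predictions equal observations and positive otherwise, which is what makes it usable as an objective for SGD.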

2.2 Pre-processing covariates for FCNs

For the following, we assume that the $N$ instances of ${\boldsymbol{x}}_n$, $1\le n \le N$, have been collected into a matrix $X = [X^{[r]},X^{[c]}] \in{\mathbb{R}}^{N \times (q_r+q_c)}$, where $X^{[\cdot ]}$ represents a subset of the columns of $X$. Thus, to select the $j$-th column of $X$, we write $X^{[j]}$ and, furthermore, to represent the $n$-th row of $X$, we will write $X_n ={\boldsymbol{x}}_n$. We also assume that all of the observations of $y_n$ have been collected into a vector $\boldsymbol{y}=(y_1,\ldots, y_N)^\top \in{\mathbb{R}}^N$.

2.2.1 Categorical covariates

The types of categorical data comprising $X^{[c]}$ are usually either qualitative (nominal) data with no inherent ordering, such as type of motor vehicle, or ordinal data with an inherent ordering, such as bad-average-good driver. Different methods for pre-processing categorical data for use within machine learning methods have been developed, with one-hot encoding being a popular choice for traditional machine learning methods. Here, we focus on the categorical embedding technique (entity embedding) of Guo & Berkhahn (2016); see Richman (2021) and Delong & Kozak (2023) for a brief overview of other options and an introduction to embeddings. Assume that the $t$-th categorical variable, corresponding to column $X^{[c_t]}$, for $1 \leq t \leq q_c$, can take one of $K_t \geq 2$ values in the set of levels $\{a^t_1, \dots, a^t_{K_t} \}$. An embedding layer for this categorical variable maps each member of the set to a low-dimensional vector representation of dimension $b_t < K_t$, i.e.,

\begin{equation} {\boldsymbol{e}} \;:\; \{a^t_1, \dots, a^t_{K_t} \} \to{\mathbb{R}}^{b_t}, \qquad a^t_k \, \mapsto \,{\boldsymbol{e}}(a^t_k) \, \stackrel{{\mathsf{def}}}{=}\,{\boldsymbol{e}}^{t(k)}, \end{equation}

meaning that the $k$-th level $a^t_k$ of the $t$-th categorical variable receives a low-dimensional vector representation ${\boldsymbol{e}}^{t(k)}\in{\mathbb{R}}^{b_t}$. When utilizing an embedding layer within a neural network, we calibrate the $K_tb_t$ parameters of the embedding layer as part of fitting the weights $W$ of the network $\Psi _W({\boldsymbol{x}})$. Practically, when inputting a categorical covariate to a neural network, each level of the covariate is mapped to a unique natural number; thus, we have represented these covariates as ${\boldsymbol{x}}^{[c]} \in{\mathbb N}^{q_c}$, and these representations are then embedded according to (2.3), which can be interpreted as an additional layer of the network; this is graphically illustrated in Fig. 7.9 of Wüthrich & Merz (2023).
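
An embedding layer can be thought of as a lookup table from integer-encoded levels to vectors. The sketch below shows only the lookup and the resulting parameter count $K_tb_t$; in a real network the table entries would be updated by SGD together with the other weights. The helper names `make_embedding` and `embed`, the random initialization, and the two example covariates are hypothetical.

```python
import random


def make_embedding(levels, dim, seed=0):
    """Create a randomly initialised embedding table: level -> R^dim."""
    rng = random.Random(seed)
    return {a: [rng.uniform(-0.05, 0.05) for _ in range(dim)] for a in levels}


def embed(x_cat, tables):
    """Concatenate the embedding vectors of all categorical covariates."""
    out = []
    for value, table in zip(x_cat, tables):
        out.extend(table[value])
    return out


# Two hypothetical categorical covariates, integer-encoded from 1.
tables = [
    make_embedding(range(1, 7), dim=2),          # K_1 = 6 levels, b_1 = 2
    make_embedding(range(1, 4), dim=1, seed=1),  # K_2 = 3 levels, b_2 = 1
]
features = embed([4, 2], tables)                 # vector of length b_1 + b_2

# Total number of embedding parameters: sum over t of K_t * b_t.
n_embedding_params = sum(len(vec) for t in tables for vec in t.values())
```

The concatenated vector `features` would then be joined with the scaled numerical covariates and passed to the first hidden layer.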

2.2.2 Numerical covariates

To enable the easy calibration of neural networks, numerical covariates must be scaled to be of similar magnitudes. In what follows, we will use the min-max normalization, where each raw numerical covariate $\dot{X}^{[r_t]}$ is scaled to

\begin{equation*} X^{[r_t]} = \frac{\dot{X}^{[r_t]} - \min (\dot{X}^{[r_t]})}{\max (\dot{X}^{[r_t]}) - \min (\dot{X}^{[r_t]})}, \end{equation*}

where $\dot{X}^{[r_t]}$ is the $t$-th raw (i.e., unscaled) continuous covariate and the operation is performed for each element of column $\dot{X}^{[r_t]}$ of the matrix of raw continuous covariates, $\dot{X}^{[r]}$.
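
A minimal sketch of min-max normalization for one column is given below. It assumes the common form that maps the observed minimum to 0 and the observed maximum to 1; the exact target interval used in this manuscript may differ. The function name `min_max_scale` and the example ages are illustrative only.

```python
def min_max_scale(column):
    """Scale a list of raw values to [0, 1] (assumed target interval)."""
    lo, hi = min(column), max(column)
    if hi == lo:
        raise ValueError("column is constant; min-max scaling is undefined")
    return [(value - lo) / (hi - lo) for value in column]


# Hypothetical raw driver ages.
driver_age_raw = [18, 25, 40, 65, 90]
driver_age = min_max_scale(driver_age_raw)
```

In practice, the minimum and maximum would be computed on the training data and reused unchanged when scaling new observations.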

We note that the continuous data comprising $\dot{X}^{[r]}$ can also easily be converted to ordinal data through binning, for example, by mapping each observed covariate in the data to one of the quantiles of that covariate. We do not consider this option for processing continuous variables in this work; however, we will use quantile binning to discretize the continuous covariates $X^{[r]}$ for the purpose of estimating the ICEnets used here.

2.3 Individual conditional expectations and partial dependence plots

Machine learning interpretability methods are used to explain how a machine learning model, such as a neural network, has estimated the relationship between the covariates $\boldsymbol{x}$ and the predictions $\widehat{y}$; for an overview of these, see Biecek & Burzykowski (2021). Two related methods for interpreting machine learning models are the Partial Dependence Plot (PDP) of Friedman (2001) and the Individual Conditional Expectations (ICE) method of Goldstein et al. (2015). The ICE method estimates how the predictions $\widehat{y}_n$ for each instance $1 \leq n \leq N$ change as a single component of the covariates ${\boldsymbol{x}}_n$ is varied over its observed range of possible values, while holding all of the other components of ${\boldsymbol{x}}_n$ constant. The PDP method is simply the average over all of the individual ICE outputs of the instances $1\le n \le N$. By inspecting the resultant ICE and PDP outputs, the relationship of the predictions with a single component of $\boldsymbol{x}$ can be observed; by performing the same process for each component of $\boldsymbol{x}$, the relationships with all of the covariates can be shown.
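
The ICE and PDP computations described above can be sketched for an arbitrary prediction function as follows. The names `ice_curves`, `partial_dependence`, and `toy_model` are hypothetical, covariates are indexed from 0 as is usual in Python, and the stand-in model is chosen only so that the ICE curves differ between instances.

```python
def ice_curves(predict, X, j, grid):
    """ICE output: one prediction per instance and per grid value.

    predict : function mapping a covariate list x to a prediction
    X       : list of covariate lists (rows of the design matrix)
    j       : index of the covariate to vary (0-based here)
    grid    : values assigned in turn to covariate j
    """
    curves = []
    for x in X:
        curve = []
        for a in grid:
            x_pseudo = list(x)   # copy, so all other components stay fixed
            x_pseudo[j] = a
            curve.append(predict(x_pseudo))
        curves.append(curve)
    return curves


def partial_dependence(curves):
    """PDP: average of the ICE curves over all instances."""
    n = len(curves)
    return [sum(column) / n for column in zip(*curves)]


def toy_model(x):
    """Stand-in model with an interaction between the two covariates."""
    return 1.0 + x[0] * x[1]


X = [[0.2, 1.0], [0.8, 3.0]]
grid = [0.0, 0.5, 1.0]
curves = ice_curves(toy_model, X, j=0, grid=grid)
pdp = partial_dependence(curves)
```

Because of the interaction term, the two ICE curves have different slopes, while the PDP shows only their average; this is the extra information that ICE output carries beyond a PDP.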

To estimate the ICE for a neural network, we now consider the creation of pseudo-data that will be used to create the ICE output of the network. We consider one column of $X$, denoted as $X^{[j]}$, $1\le j \le q_r+q_c$. If the selected column $X^{[j]}$ is categorical, then, as above, the range of values which can be taken by each element of $X^{[j]}$ is simply the set of levels $\{a^j_1, \dots, a^j_{K_j} \}$. If the selected column $X^{[j]}$ is continuous, then we assume that a range of values for the column has been selected using quantile binning or another procedure, and we denote the set of these values using the same notation, i.e., $\{a^j_1, \dots, a^j_{K_j} \}$, where $K_j\in{\mathbb N}$ is the number of selected values $a_u^j \in{\mathbb{R}}$, and where we typically assume that the values in $\{a^j_1, \dots, a^j_{K_j} \}$ for continuous variables are sorted in increasing order.
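
As a minimal sketch of how the set $\{a^j_1, \dots, a^j_{K_j} \}$ might be built, the snippet below takes the distinct levels of a categorical column and empirical quantiles of a continuous one. The function names `categorical_grid` and `quantile_grid`, the toy data, and the use of `statistics.quantiles` as the binning rule are illustrative assumptions, not the procedure used in the paper.

```python
from statistics import quantiles


def categorical_grid(column):
    """Return the distinct levels of a categorical column, in sorted order."""
    return sorted(set(column))


def quantile_grid(column, k):
    """Return at most k increasing grid values for a continuous column.

    The grid consists of the minimum, the k - 2 interior cut points that
    split the data into k - 1 equal-probability bins, and the maximum.
    Duplicates (possible when the data has ties) are dropped.
    """
    if k < 3:
        raise ValueError("k must be at least 3")
    interior = quantiles(column, n=k - 1, method="inclusive")
    return sorted(set([min(column), *interior, max(column)]))


# Toy columns (assumed for illustration only).
ages = [18, 21, 25, 25, 30, 34, 41, 47, 52, 60, 68, 75]
regions = ["north", "south", "south", "east", "north"]

age_grid = quantile_grid(ages, k=5)       # K_j <= 5 values a_1 < ... < a_K
region_grid = categorical_grid(regions)   # one value per level
```

Using the observed minimum and maximum as end points keeps the grid inside the observed range of the covariate, in line with the ICE description above.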

We define $\tilde{X}^{[j]}(u)$ as a copy of $X$, where all of the components of $X$ remain the same, except for the $j$-th column of $X$, which is set to the $u$-th value of the set $\{a^j_1, \dots, a^j_{K_j} \}$, $1 \leq u \leq K_j$. By sequentially creating predictions using a (calibrated) neural network applied to the pseudo-data, $\Psi _W(\tilde{X}^{[j]}(u))$ (where the network is applied in a row-wise manner), we are able to derive the ICE outputs for each variable of interest, as we vary the value of $1\le u \le K_j$. In particular, by allowing $a_u^j$ to take each value in the set $\{a^j_1, \dots, a^j_{K_j} \}$, for each instance $1\le n \le N$ separately, we define the ICE for covariate $j$ as a vector of predictions on the artificial data, $\widetilde{\boldsymbol{y}}^{[j]}_n$, as

\begin{equation} \widetilde{\boldsymbol{y}}^{[j]}_n = \left [\Psi _W(\tilde{{\boldsymbol{x}}}_n^{[j]}(1))v_n, \ldots, \Psi _W(\tilde{{\boldsymbol{x}}}_n^{[j]}(K_j))v_n \right ] \in{\mathbb{R}}^{K_j}, \tag{2.4} \end{equation}

where $\tilde{{\boldsymbol{x}}}_n^{[j]}(u)$ represents the vector of covariates of instance $n$, where the $j$-th entry has been set equal to the $u$-th value $a_u^j$ of that covariate, which is contained in the corresponding set $\{a^j_1, \dots, a^j_{K_j} \}$.

The PDP can then be derived from (2.4) by averaging the ICE outputs over all instances. We set

\begin{equation} \widehat{{\mathbb{E}}}[\widetilde{\boldsymbol{y}}^{[j]}_n] = \left [\frac{\sum _{n = 1}^{N}{\Psi _W(\tilde{{\boldsymbol{x}}}_n^{[j]}(1))v_n}}{N}, \ldots, \frac{\sum _{n = 1}^{N}{\Psi _W(\tilde{{\boldsymbol{x}}}_n^{[j]}(K_j))v_n}}{N} \right ] \in{\mathbb{R}}^{K_j}. \tag{2.5} \end{equation}

This can be interpreted as an empirical average, averaging over all instances $1\le n \le N$.
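
The construction of the ICE and PDP outputs can be sketched in a few lines. The snippet below is an illustration under stated assumptions: `toy_model` is a hypothetical stand-in for the calibrated network $\Psi_W$, exposures default to $v_n = 1$, and the helper names `ice_curve` and `pdp_curve` are ours.

```python
def ice_curve(predict, x, j, grid, v=1.0):
    """ICE vector for one instance: vary entry j of x over the grid."""
    curve = []
    for a in grid:
        x_tilde = list(x)      # copy so the observed row is left untouched
        x_tilde[j] = a         # set the j-th covariate to the u-th grid value
        curve.append(predict(x_tilde) * v)
    return curve


def pdp_curve(predict, rows, j, grid, exposures=None):
    """PDP: element-wise average of the ICE vectors over all instances."""
    if exposures is None:
        exposures = [1.0] * len(rows)
    curves = [ice_curve(predict, x, j, grid, v) for x, v in zip(rows, exposures)]
    n = len(curves)
    return [sum(c[u] for c in curves) / n for u in range(len(grid))]


# Hypothetical stand-in for a calibrated network: any callable mapping a
# covariate vector to a prediction works here.
def toy_model(x):
    return 2.0 * x[0] + x[0] * x[1]


rows = [[1.0, 0.0], [2.0, 1.0], [3.0, -1.0]]
grid = [0.0, 1.0, 2.0]

ice = [ice_curve(toy_model, x, j=0, grid=grid) for x in rows]
pdp = pdp_curve(toy_model, rows, j=0, grid=grid)
```

Because of the interaction term in `toy_model`, the three ICE curves have different slopes, while the PDP shows only their average; this is why inspecting ICE outputs for individual instances can reveal structure that the PDP hides.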

3. ICEnet

3.1 Description of the ICEnet

We start with a colloquial description of the ICEnet proposal before defining it rigorously. The main idea is to augment the observed data $(y_n,{\boldsymbol{x}}_n, v_n)_{n=1}^N$ with pseudo-data, so that output equivalent to that used for the ICE interpretability technique (2.4) is produced by the network, for each variable that requires a smoothness or monotonicity constraint. This is done by creating pseudo-data that varies for each possible value of the variables to be constrained. For continuous variables, we use quantiles of the observed values of the continuous variables to produce the ICE output while, for categorical variables, we use each level of the categories. The same neural network is then applied to the observed data, as well as each of the pseudo-data. Applying the network to the actual observed data produces a prediction that can be used for pricing, whereas applying the network to each of the pseudo-data produces outputs which vary with each of the covariates which need smoothing or require monotonicity to be enforced. The parameters of the network are trained (or fine-tuned) using a compound loss function: the first component of the loss function measures how well the network predicts the observations $y_n$, $1\le n \le N$. The other components ensure that the desired constraints are enforced on the ICEs for the constrained variables. After training, the progression of predictions of the neural network will be smooth or monotonic with changes in the constrained variables. A diagram of the ICEnet is shown in Fig. 1.

Figure 1 Diagram explaining the ICEnet. The same neural network $\Psi _W$ is used to produce both the predictions from the model, as well as to create predictions based on pseudo-data. These latter predictions are constrained, ensuring that the outputs of the ICEnet vary smoothly or monotonically with changes in the input variables $\boldsymbol{x}$. In this graph, we are varying variable $x_1$ to produce the ICEnet outputs which are $\Psi _W(\tilde{{\boldsymbol{x}}}^{[1]}(\!\cdot\!))$.

3.2 Definition of the ICEnet

To define the ICEnet, we assume that some of the variables comprising the covariates $X\in{\mathbb{R}}^{N\times (q_r+q_c)}$ have been selected as requiring smoothness or monotonicity constraints. We collect all those variables requiring smoothness constraints into a set $\mathcal{S}\subset \{1,\ldots, q_r+q_c\}$ with $S$ members and those variables requiring monotonicity constraints into another set $\mathcal{M}\subset \{1,\ldots, q_r+q_c\}$ with $M$ members. As we have discussed above, we will rely on FCNs with appropriate pre-processing of the input data $\boldsymbol{x}$ for predicting the response $y$ with $\widehat{y}$.

For each instance $n \in \{1, \ldots, N\}$, the ICEnet consists of two main parts. The first of these is simply a prediction $\widehat{y}_n=\Psi _W({\boldsymbol{x}}_n)v_n$ of the outcome $y_n$ based on covariates ${\boldsymbol{x}}_n$. For the second part of the ICEnet, we create the pseudo-data using the definitions from Section 2.3; these pseudo-data will be used to create the ICE output of the network for each of the columns requiring constraints. Finally, we assume that the ICEnet will be trained with a compound loss function $L$ that balances good predictive performance of the predictions $\widehat{y}_n$ against satisfying the constraints that enforce smoothness and monotonicity.

The compound loss function $L$ of the ICEnet consists of three summands, i.e., $L = L_1 + L_2 + L_3$. The first of these is set equal to the deviance loss function $L^D$ that is relevant for the considered regression problem, i.e., $L_1 \stackrel{{\mathsf{set}}}{=} L^D(y_n, \widehat{y}_n)$.

The second of these losses is a smoothing constraint applied to each covariate in the set $\mathcal{S}$. For smoothing, actuaries have often used the Whittaker–Henderson smoother (Whittaker, 1922; Henderson, 1924), which we implement here as the square of the third difference of the predictions $\widetilde{\boldsymbol{y}}^{[j]}_n$ given in (2.4). We define the difference operator of order 1 as

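\begin{equation} \Delta ^1(\Psi _W(\tilde{{\boldsymbol{x}}}_n^{[j]}(u))) = \Psi _W(\tilde{{\boldsymbol{x}}}_n^{[j]}(u)) - \Psi _W(\tilde{{\boldsymbol{x}}}_n^{[j]}(u-1)), \end{equation}

and the difference operator of a higher order $\tau \gt 1$ recursively as

\begin{equation} \Delta ^\tau (\Psi _W(\tilde{{\boldsymbol{x}}}_n^{[j]}(u))) = \Delta ^{\tau -1}(\Psi _W(\tilde{{\boldsymbol{x}}}_n^{[j]}(u))) - \Delta ^{\tau -1}(\Psi _W(\tilde{{\boldsymbol{x}}}_n^{[j]}(u-1))). \end{equation}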

Thus, the smoothing loss $L_2$ for order 3 is defined as

\begin{equation} L_2(n) \,\stackrel{{\mathsf{def}}}{=}\, \sum _{j \in\mathcal{S}} \sum _{u=4}^{K_j} \lambda _{s_j}\left [\Delta ^3(\Psi _W(\tilde{{\boldsymbol{x}}}_n^{[j]}(u)))\right ]^2, \tag{3.1} \end{equation}

where $\lambda _{s_j}\ge 0$ is the penalty parameter for the smoothing loss for the $j$-th member of the set $\mathcal{S}$.
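
The smoothing loss can be illustrated with a small sketch. It takes the difference operator to be a backward difference, an assumption that is consistent with the inner sum in (3.1) starting at $u=4$; the helper names `diff` and `smoothing_loss`, the variable name `"age"`, and the penalty value are hypothetical.

```python
def diff(values, order=1):
    """Differences of the given order between consecutive grid points.

    diff(v, 1)[i] equals v[i + 1] - v[i]; higher orders apply the first
    difference repeatedly, so the result has len(values) - order entries.
    """
    out = list(values)
    for _ in range(order):
        out = [b - a for a, b in zip(out, out[1:])]
    return out


def smoothing_loss(ice_by_var, penalties, order=3):
    """Sum over constrained variables of penalty * squared differences.

    ice_by_var: dict mapping a variable name to the network outputs on
                that variable's pseudo-data (one value per grid point).
    penalties:  dict mapping the same names to non-negative penalties.
    """
    total = 0.0
    for name, curve in ice_by_var.items():
        total += penalties[name] * sum(d * d for d in diff(curve, order))
    return total


quadratic = [float(u * u) for u in range(6)]   # third differences vanish
rough = [0.0, 1.0, 0.0, 1.0, 0.0, 1.0]         # zig-zag curve

loss_smooth = smoothing_loss({"age": quadratic}, {"age": 10.0})
loss_rough = smoothing_loss({"age": rough}, {"age": 10.0})
```

The quadratic curve incurs no penalty because a third-difference penalty leaves polynomials of degree two or lower untouched, whereas the zig-zag curve is penalized heavily.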

Finally, to enforce monotonicity, we add the absolute value of the negative components of the first difference of $\widetilde{\boldsymbol{y}}^{[j]}_n$ to the loss, i.e., we define $L_3$ as:

\begin{equation} L_3(n) \,\stackrel{{\mathsf{def}}}{=} \,\sum _{j \in\mathcal{M}} \sum _{u=2}^{K_j} \lambda _{m_j}\max \left [\delta _j\Delta ^1(\Psi _W(\tilde{{\boldsymbol{x}}}_n^{[j]}(u))),0\right ], \tag{3.2} \end{equation}

where $\lambda _{m_j}\ge 0$ is the penalty parameter for the monotonicity loss for the $j$-th member of the set $\mathcal{M}$, and where $\delta _j = \pm 1$ depending on whether we want to have a monotone increase ($-1$) or decrease ($+1$) in the $j$-th variable of $\mathcal{M}$.
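
A minimal sketch of the monotonicity loss follows, using the sign convention stated above ($\delta_j=-1$ for an increase, $\delta_j=+1$ for a decrease). The function name `monotonicity_loss`, the variable name `"bm"`, and the penalty value are hypothetical.

```python
def monotonicity_loss(ice_by_var, penalties, directions):
    """Hinge penalty on first differences that move in the wrong direction.

    directions[name] is delta_j: -1 asks for a monotone increase and
    +1 for a monotone decrease, matching the sign convention in the text.
    """
    total = 0.0
    for name, curve in ice_by_var.items():
        delta = directions[name]
        steps = [b - a for a, b in zip(curve, curve[1:])]  # first differences
        total += penalties[name] * sum(max(delta * s, 0.0) for s in steps)
    return total


curve = [1.0, 2.0, 1.5, 3.0]   # increases, except for one dip of 0.5

as_increasing = monotonicity_loss({"bm": curve}, {"bm": 2.0}, {"bm": -1})
as_decreasing = monotonicity_loss({"bm": curve}, {"bm": 2.0}, {"bm": +1})
```

When an increase is requested, only the single dip contributes to the loss; when a decrease is requested, both upward steps are penalized instead.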

Assumption 3.1 (ICEnet architecture). Assume we have independent responses and covariates $(y_n,{\boldsymbol{x}}_n^{[r]},{\boldsymbol{x}}_n^{[c]}, v_n)_{n=1}^N$ as defined in Section 2.1, and we have selected some covariates requiring constraints into sets $\mathcal{S}$ and $\mathcal{M}$. Assume we have a neural network architecture $\Psi _W$ as defined in (2.2) having network weights $W$. For the prediction part of the ICEnet, we use the following mapping provided by the network

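\begin{equation} {\boldsymbol{x}}_n \,\mapsto \,\widehat{y}_n = \Psi _W({\boldsymbol{x}}_n)v_n, \end{equation}

which produces the predictions required for the regression task. In addition, the ICEnet also produces the following predictions made with the same network on pseudo-data defined by

\begin{equation} \begin{aligned} \widetilde{\boldsymbol{y}}^{[s_l]}_n &= \left [\Psi _W(\tilde{{\boldsymbol{x}}}_n^{[s_l]}(1))v_n, \ldots, \Psi _W(\tilde{{\boldsymbol{x}}}_n^{[s_l]}(K_{s_l}))v_n \right ], \quad 1 \le l \le S, \\ \widetilde{\boldsymbol{y}}^{[m_l]}_n &= \left [\Psi _W(\tilde{{\boldsymbol{x}}}_n^{[m_l]}(1))v_n, \ldots, \Psi _W(\tilde{{\boldsymbol{x}}}_n^{[m_l]}(K_{m_l}))v_n \right ], \quad 1 \le l \le M, \end{aligned} \tag{3.3} \end{equation}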

where all definitions are the same as those defined in Section 2.3 for $s_l \in\mathcal{S}$ and $m_l \in\mathcal{M}$, respectively. Finally, to train the ICEnet, we assume that a compound loss function, applied to each observation $n \in \{1,\ldots, N\}$ individually, is specified as follows

\begin{equation} L(n) \,\stackrel{{\mathsf{def}}}{=} \,L^D(y_n, \widehat{y}_n) + L_2(n) + L_3(n), \tag{3.4} \end{equation}

for smoothing loss $L_2(n)$ and monotonicity loss $L_3(n)$ given in (3.1) and (3.2), respectively, and for non-negative penalty parameters collected into a vector $\boldsymbol{\lambda } = (\lambda _{s_1}, \ldots,\lambda _{s_S}, \lambda _{m_1}, \ldots,\lambda _{m_M})^\top$.

We briefly remark on the ICEnet architecture given in Assumption 3.1.

Remark 3.2.

- To produce the ICE outputs from the network under Assumption 3.1, we apply the same network $\Psi _W(\!\cdot\!)$ multiple times to pseudo-data that have been modified to vary for each value that a particular variable can take, see (3.3). Applying the same network multiple times is called a point-wise neural network in Vaswani et al. (2017), and it is called a time-distributed network in the Keras package; see Chollet et al. (2017). This application of the same network multiple times is also called a one-dimensional convolutional neural network.

- Common actuarial practice when fitting general insurance pricing models is to consider PDPs to understand the structure of the fitted coefficients of a GLM and smooth these manually. Since we cannot smooth the neural network parameters $W$ directly, we have rather enforced constraints (3.1) for the ICE produced by the network $\Psi _W(\!\cdot\!)$; this in particular applies if we smooth ordered categorical variables.

- Enforcing the same constraints as those in (3.4) in a GLM will automatically smooth/constrain the GLM coefficients similar to LASSO and fused LASSO regularizations; we refer to Hastie et al. (2015). We also mention that similar ideas have been used in enforcing monotonicity in multiple quantile estimation; see Kellner et al. (2022).

- Since the PDP of a model is nothing more than the average over the ICE outputs of the model for each instance $n$, enforcing the constraints for each instance also enforces the constraints on the PDP. Moreover, the constraints in (3.4) could be applied in a slightly different manner, by first estimating a PDP using (2.5) for all of the observations in a batch and then applying the constraints to the estimated PDP.

- We have used a relatively simple neural network within the ICEnet; of course, more complex network architectures could be used.

- It is important to note that using the ICEnet method does not guarantee smoothness or monotonicity if the values of the penalties $\boldsymbol{\lambda }$ are not chosen appropriately.
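
The fourth point of the remark can be checked with a short derivation. As a sketch, write $d_n(u) = \Delta ^\tau (\Psi _W(\tilde{{\boldsymbol{x}}}_n^{[j]}(u)))$ for the order-$\tau$ difference of the network output of instance $n$ (a shorthand introduced here for illustration) and assume non-negative exposures $v_n \ge 0$. Because differencing is linear, it commutes with the average in (2.5):

\begin{equation} \Delta ^\tau \left (\frac{1}{N}\sum _{n=1}^{N} \Psi _W(\tilde{{\boldsymbol{x}}}_n^{[j]}(u))v_n\right ) = \frac{1}{N}\sum _{n=1}^{N} v_n\, d_n(u). \end{equation}

Hence, for $\tau = 1$, if $\delta _j d_n(u) \le 0$ holds for every instance $n$, then the same inequality holds for the first difference of the PDP. For $\tau = 3$, the Cauchy–Schwarz inequality gives

\begin{equation} \left [\frac{1}{N}\sum _{n=1}^{N} v_n\, d_n(u)\right ]^2 \le \left (\frac{1}{N}\sum _{n=1}^{N} v_n^2\right ) \left (\frac{1}{N}\sum _{n=1}^{N} d_n(u)^2\right ), \end{equation}

so the squared third difference of the PDP is bounded by the average of the per-instance squared third differences penalized in (3.1), scaled by the mean squared exposure.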

3.3 Selecting the value of the penalty parameters

To apply the ICEnet, it is important to choose the penalty parameters in

![]() $\boldsymbol{\lambda }$

appropriately. When applying penalized regression in a machine learning context, cross-validation or an independent validation set is often used to select parameters that are expected to minimize out-of-sample prediction errors. Following this approach, one can select the values within

$\boldsymbol{\lambda }$

appropriately. When applying penalized regression in a machine learning context, cross-validation or an independent validation set is often used to select parameters that are expected to minimize out-of-sample prediction errors. Following this approach, one can select the values within

![]() $\boldsymbol{\lambda }$

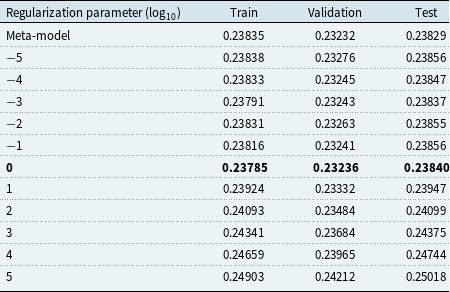

so as to minimize the prediction error on a validation set; see Section 5.4 for choosing the values of the penalty parameters using this approach, where a search over a validation set was used to select a single optimal value for all components of

$\boldsymbol{\lambda }$

so as to minimize the prediction error on a validation set; see Section 5.4 for choosing the values of the penalty parameters using this approach, where a search over a validation set was used to select a single optimal value for all components of

![]() $\boldsymbol{\lambda }$

. More complex procedures to select optimal values of these penalties could also be considered; we mention here Bayesian optimization, which has been applied successfully to select optimal hyper-parameters for machine learning algorithms and which could be applied similarly here, see, for example Bergstra et al. (Reference Bergstra, Bardenet, Bengio and Kégl2011).

$\boldsymbol{\lambda }$

. More complex procedures to select optimal values of these penalties could also be considered; we mention here Bayesian optimization, which has been applied successfully to select optimal hyper-parameters for machine learning algorithms and which could be applied similarly here, see, for example Bergstra et al. (Reference Bergstra, Bardenet, Bengio and Kégl2011).
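
The validation-set search for a single penalty value shared by all components of $\boldsymbol{\lambda }$ might look as follows. This is a sketch only: `select_penalty` is a hypothetical helper, and `fake_validation_loss` is an invented stand-in for training the ICEnet with a given penalty and scoring it on held-out data, not a reported result.

```python
def select_penalty(candidates, fit_and_score):
    """Return the candidate with the lowest validation loss, plus all scores.

    fit_and_score is a user-supplied callable: it trains the model with the
    given penalty value and returns the loss on a held-out validation set.
    """
    scores = {lam: fit_and_score(lam) for lam in candidates}
    best = min(scores, key=scores.get)
    return best, scores


# Stand-in for "train the network, then evaluate on the validation set".
# The U-shaped curve below is purely illustrative, not a reported result.
def fake_validation_loss(lam):
    return (lam - 1.0) ** 2 + 0.25


grid = [10.0 ** k for k in range(-3, 3)]   # 0.001, 0.01, ..., 100
best_lam, scores = select_penalty(grid, fake_validation_loss)
```

Searching on a logarithmic grid is a common choice for penalty parameters, since their useful range often spans several orders of magnitude.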

On the other hand, within actuarial practice, when applying smoothing, often the extent of the smoothing is chosen using judgment to meet the actuary’s subjective expectations of, for example, how smooth the outputs of a GLM should be w.r.t. a certain covariate. Similarly, the penalty coefficient of the Whittaker–Henderson smoother is often chosen subjectively by inspecting the graduated mortality rates and modifying the penalty until these are deemed appropriate. A more subjective approach may be especially important when commercial or regulatory considerations need to be taken into account, since these are hard to quantify numerically. In these cases, to select the values of the penalty parameters, PDP and ICE plots can be used to determine whether the outputs of the network are suitably smooth and monotonic. For examples of these plots, see Section 5.3 and the appendix. We emphasize that considering these across many different sample insurance policies is important to ensure that the constraints have produced suitable results. This can be done by evaluating the smoothness and monotonicity of, for example, the ICE outputs across many sample policies (see Fig. 10 for an example). In conclusion, we have found that a combination of analytical and subjective approaches, supported by graphical aids, to selecting the penalty parameters $\boldsymbol{\lambda }$ works well.

The relative importance of the smoothness and monotonicity components of the ICEnet loss (3.4) will be determined by the choices of each individual penalty parameter in $\boldsymbol{\lambda }$. To modify the importance given to each of these components, the ICEnet loss could be modified to $L^{mod}(n) \,\stackrel{{\mathsf{def}}}{=} \,L^D(y_n, \widehat{y}_n) + a_1\, L_2(n) + a_2\, L_3(n)$ with weights $a_1\gt 0$ and $a_2\gt 0$ selected either through user judgment or using a validation set, as mentioned above.

Connected to this, we note that selecting values of the penalty parameters that produce values of the constraint losses $L_2(n)$ and $L_3(n)$ that are small relative to the prediction error component of (3.4), $L^D(y_n, \widehat{y}_n)$, will mean that the predictions from the ICEnet will likely not be suitably smooth and monotonic. In other words, to strictly enforce the constraints, it is usually necessary to ensure that the values in $\boldsymbol{\lambda }$ are large enough such that the constraint losses $L_2(n)$ and $L_3(n)$ are of comparable magnitude to the prediction error component. For extremely large values of a smoothing or monotonicity component of $\boldsymbol{\lambda }$, i.e., as $\lambda _i \to \infty$ for the $i$-th element of $\boldsymbol{\lambda }$, we would expect that the predictions of the network w.r.t. the $i$-th constrained variable will be either constant or strictly monotonic. We verify this last point in Section 5.4.

3.4 Time complexity analysis of the ICEnet

Relative to training and making predictions with a baseline neural network architecture $\Psi _W$ for a dataset, the introduction of pseudo-data and constraints in the ICEnet will increase the time complexity of training and evaluating the ICEnet algorithm. We briefly note some of the main considerations when considering the computational costs of training a network using a naive implementation (without parallel processing) of the ICEnet algorithm. For each variable $s \in \mathcal{S}$ and $m \in \mathcal{M}$, the ICEnet will evaluate the FCN $\Psi _W$ on the pseudo-data. The time complexity associated with these operations is linear both with respect to the number of different levels in the pseudo-data chosen for each variable and with respect to the number of variables in both of the sets $\mathcal{S}$ and $\mathcal{M}$. The smoothing loss computation (3.1), involving third differences, will scale linearly as levels of each variable are added and also will scale linearly for each variable in $\mathcal{S}$; this is similar for the monotonicity loss computation (3.2). Thus, the addition of constraints in ICEnet imposes an additive linear complexity relative to the baseline neural network $\Psi _W$, contingent on the number of variables in $\mathcal{S}$ and $\mathcal{M}$ and the number of levels used within the pseudo-data used for enforcing these constraints. On the other hand, when considering making predictions with a network trained using the ICEnet algorithm, we remark that once the ICEnet has been trained, there is no need to evaluate the FCN $\Psi _W$ on the pseudo-data. Thus, the time complexity will be the same as that of the baseline network architecture.
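
The linear scaling described above can be made concrete by counting network evaluations for one pass over the data in a naive implementation. The function `forward_passes`, the variable names, and the level counts below are hypothetical and chosen only for illustration.

```python
def forward_passes(n_instances, levels_smooth, levels_monotone, training=True):
    """Count evaluations of the network for one pass over the data.

    levels_smooth / levels_monotone map each constrained variable to its
    number of pseudo-data levels K_j. At prediction time only the observed
    rows are evaluated, so the pseudo-data terms drop out.
    """
    per_instance = 1  # the observed row itself
    if training:
        per_instance += sum(levels_smooth.values()) + sum(levels_monotone.values())
    return n_instances * per_instance


# Hypothetical constrained variables and numbers of levels.
smooth = {"driver_age": 20, "vehicle_age": 10}
monotone = {"bonus_malus": 15}

train_cost = forward_passes(1000, smooth, monotone)                    # 1000 * 46
predict_cost = forward_passes(1000, smooth, monotone, training=False)  # 1000 * 1
```

Doubling the number of levels for every constrained variable roughly doubles the training count, while the prediction count is unaffected.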

A highly important consideration, though, is that modern deep learning frameworks allow neural networks to be trained while utilizing the parallel processing capabilities of graphics processing units (GPUs). This means that the actual time taken to perform the calculations needed for training the ICEnet will be significantly lower than the theoretical analysis above suggests. We verify this point in the sections that follow, for example, by showing that the time taken to train the ICEnet scales in a sub-linear manner with the amount of pseudo-data used during training.

4. Simulation study of the ICEnet

4.1 Introduction

We now study the application of the ICEnet to a simulated example to verify that the proposal works as intended. Eight continuous covariates were simulated from a multivariate normal distribution, on the assumption that the covariates were uncorrelated, except for the second and eighth of these, which were simulated assuming a correlation coefficient of $0.5$. The covariates are represented as ${\boldsymbol{x}} \in{\mathbb{R}}^8$. The mean of the response, $\mu$, is a complex function of the first six of these covariates, i.e., the last two covariates are not actually used directly in the model. Finally, responses $y$ were simulated from a normal distribution with mean $\mu$ and a standard deviation of $1$. The mean response $\mu$ is defined as:

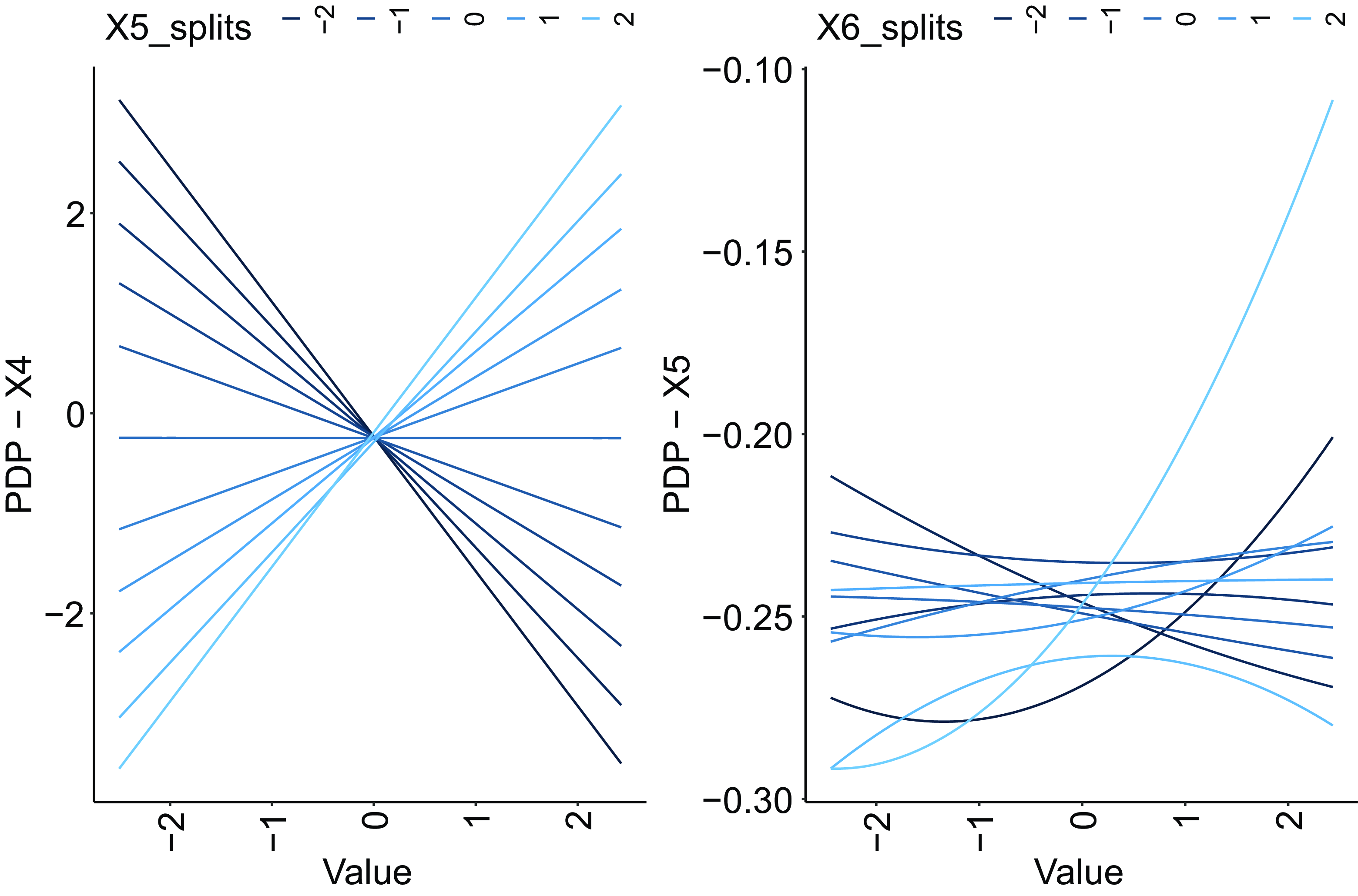
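The simulation design described above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the authors' code: the covariates are taken to have zero mean and unit variance (the text specifies only a multivariate normal distribution with a single non-zero correlation), the sample size `n` is hypothetical, and `placeholder_mean` is a stand-in that is not Equation (4.1) and only mimics its stated structure.

```python
import math
import random


def simulate_covariates(n, rho=0.5, seed=0):
    """Draw n records of 8 standard-normal covariates.

    All covariates are independent except x_2 and x_8 (indices 1 and 7),
    which have correlation rho. Zero means and unit variances are assumptions.
    """
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        x = [rng.gauss(0.0, 1.0) for _ in range(8)]
        # Cholesky construction for one correlated pair:
        # x_8 = rho * x_2 + sqrt(1 - rho^2) * (independent noise)
        x[7] = rho * x[1] + math.sqrt(1.0 - rho ** 2) * x[7]
        rows.append(x)
    return rows


def placeholder_mean(x):
    """Hypothetical mean function, NOT Equation (4.1).

    It only mimics the stated structure: the first six covariates are used,
    x_4, x_5 and x_6 enter through interactions, x_7 and x_8 are ignored.
    """
    return x[0] - x[1] ** 2 + math.sin(x[2]) + x[3] * x[4] + x[4] * x[5]


def simulate_responses(rows, mean_fn, sd=1.0, seed=1):
    """Draw y ~ Normal(mean_fn(x), sd) for every record."""
    rng = random.Random(seed)
    return [rng.gauss(mean_fn(x), sd) for x in rows]


def sample_corr(a, b):
    """Pearson correlation of two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((u - ma) * (v - mb) for u, v in zip(a, b))
    var_a = sum((u - ma) ** 2 for u in a)
    var_b = sum((v - mb) ** 2 for v in b)
    return cov / math.sqrt(var_a * var_b)


X = simulate_covariates(20_000)              # n is a hypothetical choice
y = simulate_responses(X, placeholder_mean)
# Sample correlation between x_2 and x_8; it should be close to 0.5.
print(round(sample_corr([r[1] for r in X], [r[7] for r in X]), 2))
```

Because $x_8$ is correlated with $x_2$ but does not enter the mean, a fitted model could pick up a spurious effect for $x_8$, which is presumably why this pair was made correlated in the design.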

Since the true relationships between the covariates and the responses are known, we do not perform an exploratory analysis here but rather show the PDPs estimated by applying Equation (4.1). PDPs for each of the covariates in $\boldsymbol{x}$ are shown in Fig. 2. The PDPs for covariates $x_4$, $x_5$, and $x_6$ do not capture the correct complexity of the effects for these covariates, since these only enter the response as interactions; thus, two-dimensional PDPs estimated to show the interactions correctly are shown in Fig. 3, which shows that a wide range of different relationships between the covariates and the responses are produced by the interactions in (4.1). Finally, we note that the PDPs for covariates $x_7$ and $x_8$ are flat, since these variables do not enter the simulated response directly, which is as expected.
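The PDP computation referred to above can be illustrated as follows. This is a minimal sketch assuming the standard definition of a PDP (the average prediction over all records when one covariate is fixed at a grid value); `toy_predict` is a hypothetical function used only for illustration and is unrelated to Equation (4.1).

```python
def partial_dependence(predict, rows, j, grid):
    """Average prediction over all records with covariate j fixed at each grid value."""
    pdp = []
    for v in grid:
        total = 0.0
        for x in rows:
            x_mod = list(x)  # copy so the original record is untouched
            x_mod[j] = v
            total += predict(x_mod)
        pdp.append(total / len(rows))
    return pdp


# Toy model (hypothetical): the first covariate has a main effect, the second
# and third interact, and the fourth is unused.
def toy_predict(x):
    return 2.0 * x[0] + x[1] * x[2]


rows = [[0.0, 1.0, 2.0, 5.0], [0.0, 3.0, 4.0, 6.0]]
pdp_main = partial_dependence(toy_predict, rows, j=0, grid=[0.0, 1.0])
pdp_unused = partial_dependence(toy_predict, rows, j=3, grid=[-1.0, 0.0, 1.0])
print(pdp_main)    # [7.0, 9.0]
print(pdp_unused)  # [7.0, 7.0, 7.0]
```

The flat result for the unused covariate mirrors the behavior noted for $x_7$ and $x_8$. A one-dimensional PDP also averages over the interacting partner, which is why covariates that enter only through interactions call for the two-dimensional view.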

Figure 2 PDPs for each of the components in $\boldsymbol{x}$.

Figure 3 Two-dimensional PDPs for covariates $x_4$ and $x_5$ (left panel, with $x_4$ as the main variable) and $x_5$ and $x_6$ (right panel, with $x_5$ as the main variable). The color of the lines varies by the value of the covariate with which the first variable interacts.

The simulated data were split into training and testing sets (in a 9:1 ratio) for the analysis that follows.

4.2 Fitting the ICEnet to simulated data

We select a simple $d=3$ layer neural network for the FCN component of the ICEnet, with the following layer dimensions $(q_1 = 32, q_2 = 16, q_3 = 8)$ and set the activation function $\phi (\!\cdot\!)$ to the rectified linear unit (ReLU) function. The link function $g(\!\cdot\!)$ was set to the identity function, i.e., no output transformations were applied when fitting the network to the simulated data. To regularize the network using early-stopping, the training set was split once again into a new training set $\mathcal{L}$, containing 95% of the original training set, and a relatively small validation set $\mathcal{V}$, containing 5% of the original training set. No transformations of the input data were necessary for this relatively simple simulated data.
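The two-stage data split (9:1 into training and testing sets, then 95%/5% of the training set into $\mathcal{L}$ and $\mathcal{V}$) can be sketched as below. This is an illustrative sketch only: the sample size `n`, the seeds, and the helper `split_indices` are hypothetical, and a simple random shuffle is assumed since the text does not describe how records were allocated.

```python
import random


def split_indices(n, first_fraction, seed):
    """Shuffle range(n) and split it into two index lists."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    cut = round(n * first_fraction)
    return idx[:cut], idx[cut:]


n = 100_000  # hypothetical sample size
train_all, test = split_indices(n, 0.90, seed=0)               # 9:1 train/test split
pos_learn, pos_val = split_indices(len(train_all), 0.95, seed=1)
learn = [train_all[i] for i in pos_learn]                      # new training set L
val = [train_all[i] for i in pos_val]                          # validation set V

# Shares of the full data set used for fitting, early stopping, and testing.
print(len(learn) / n, len(val) / n, len(test) / n)
```

Under these assumptions, about 85.5% of all records are used to fit the weights, 4.5% to choose the early-stopping epoch, and 10% are held out for testing.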

The FCN was trained for a single training run using the Adam optimizer for 100 epochs, with a learning rate of $0.001$ and a batch size of $1024$ observations. The network training was early stopped by selecting the epoch with the best out-of-sample score on the validation set $\mathcal{V}$. The mean squared error (MSE) was used for training the network, and, in what follows, we assign the MSE as the first component of the loss function (3.4). The ICEnet was trained in the same manner as the FCN, with the additional smoothing and monotonicity loss components of (3.4) added to the MSE loss. The values of the penalty parameters were chosen as shown in Table 1. To create the ICE outputs on which the smoothing and monotonicity losses are estimated, the empirical percentiles of the covariates in $\boldsymbol{x}$ were estimated and these were used as the pseudo-data for the ICEnet. Thus, the ICEnet applies the FCN $\Psi _W$ $801$ times for each record in the training data (once to estimate predictions and another $800$ times for the $8$ variables times the $100$ percentiles).
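The construction of the pseudo-data, and the resulting count of $801$ evaluations per record, can be sketched as follows. This is a sketch under assumptions rather than the authors' implementation: the nearest-rank percentile convention at levels $1\%, \ldots, 100\%$ and the helper names `empirical_percentiles` and `build_pseudo_data` are hypothetical, and the covariates are toy standard-normal draws.

```python
import random


def empirical_percentiles(values, n_levels=100):
    """Nearest-rank empirical percentiles at levels 1/n_levels, ..., 1 (an assumed convention)."""
    s = sorted(values)
    n = len(s)
    # ceil(p * n / n_levels) - 1, in integer arithmetic to avoid rounding issues
    return [s[(p * n + n_levels - 1) // n_levels - 1] for p in range(1, n_levels + 1)]


def build_pseudo_data(record, grids):
    """For each covariate j and each grid value v, copy the record with x_j replaced by v."""
    pseudo = []
    for j, grid in enumerate(grids):
        for v in grid:
            x_mod = list(record)
            x_mod[j] = v
            pseudo.append(x_mod)
    return pseudo


rng = random.Random(0)
data = [[rng.gauss(0.0, 1.0) for _ in range(8)] for _ in range(1000)]  # toy covariates
grids = [empirical_percentiles(column) for column in zip(*data)]       # one grid per covariate
pseudo = build_pseudo_data(data[0], grids)

n_evaluations = 1 + len(pseudo)  # one prediction plus the pseudo-data evaluations
print(len(pseudo), n_evaluations)  # 800 801
```

Each pseudo-record differs from the original record in at most one coordinate, so evaluating the network on the 100 pseudo-records of one covariate traces out that covariate's ICE curve for the record.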

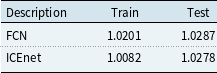

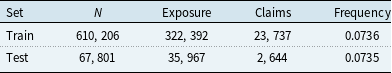

Table 1. Smoothing and monotonicity constraints applied within the ICEnet for the simulated data

The FCN and the ICEnet were fit using the Keras package in the R programming language; see Chollet et al. (2017). Training the FCN took about 1.5 minutes and training the ICEnet took about 6 minutes (see Footnote 1). Since the ICEnet requires many outputs to be produced for each input to the network (in this case, $800$ extra outputs), the computational burden of fitting this model is quite heavy, as shown by the time taken to fit the model. Nonetheless, this can be done easily using a graphics processing unit (GPU) and the relevant GPU-optimized versions of deep learning software. As mentioned above in Section 3.4, due to the parallel processing capabilities of a GPU, the time taken to perform the ICEnet calculations does not scale linearly with the number of variables and the amount of pseudo-data.
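A rough back-of-the-envelope check of the sub-linear scaling, using only the approximate figures quoted above, is sketched below. The training times are wall-clock approximations from a single run, so this is an illustration of the order of magnitude rather than a benchmark.

```python
fcn_minutes = 1.5      # approximate FCN training time reported in the text
icenet_minutes = 6.0   # approximate ICEnet training time reported in the text
evals_per_record = 1 + 8 * 100  # FCN evaluations per record in the ICEnet

observed_slowdown = icenet_minutes / fcn_minutes
print(observed_slowdown, evals_per_record)  # 4.0 801
```

The ICEnet evaluates the FCN roughly 800 times more often per record, yet training took only about four times as long, which is consistent with the sub-linear scaling described in the text.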

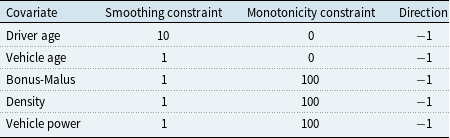

The FCN and ICEnet results are on Lines 1-2 of Table 2, showing the results of the single training run.

Table 2. MSE loss for the FCN and the ICEnet, training, and testing losses

The results show that the ICEnet (with the penalty parameters set to the values in Table 1) performs slightly better than the FCN on both the training and testing sets; we do not attempt to optimize this further.

We now briefly examine whether the ICEnet has performed as we would expect, by first considering the global effect of applying the ICEnet constraints. In Fig. 4 we show the PDPs derived from the FCN and ICEnet models: the red lines are the true PDPs derived using Equation (4.1) directly (i.e., these are the same as those in Fig. 2), the green lines are the PDPs from the FCN, and the blue lines are the PDPs from the ICEnet.

Figure 4 PDPs for each of the variables in $\boldsymbol{x}$, test set only. Red lines are the true PDPs from Equation (4.1), green lines are PDPs from the FCN (unsmoothed) and blue lines are PDPs from the ICEnet (smoothed). Note that the scale of the $y$-axis varies between each panel. The $x$-axis shows ordinal values of the pseudo-data on which the PDP is evaluated.

Figure 5 ICE plots of the output of the FCN and the ICEnet for $3$ instances in the test set chosen to be the least monotonic based on the monotonicity score evaluated for each instance in the test set on the outputs of the FCN. Note that the smoothed model is the ICEnet and unsmoothed model is the FCN.