Background

Effective recruitment of research study participants is a major challenge in clinical research. Up to 50% of studies close early due to inadequate participant accrual [Reference Briel, Olu and von Elm1,Reference Stensland, McBride and Latif2], with non-interventional studies typically faring worse [Reference Schroen, Petroni and Wang3]. In fact, many study sites fail to enrol a single participant [Reference Lynam, Leaw and Wiener4]. Rare diseases and underserved or underrepresented populations such as non-English speakers tend to be more difficult to recruit [Reference Ford, Siminoff and Pickelsimer5–Reference Paskett, Reeves and McLaughlin7]. Traditional recruitment approaches for clinical studies include in-person identification of participants from physician offices or health fairs, printed flyers and posters or advertisements on radio, television or more recently on the Web [Reference Milo Rasouly, Wynn and Marasa8]. However, these approaches have several disadvantages. They are often slow and inefficient, require large amounts of investigator time commitment and can be very expensive relative to the number of recruited participants [Reference Milo Rasouly, Wynn and Marasa8].

Investigators increasingly use social media to recruit individuals for clinical research studies [Reference Bisset, Carter and Law9–Reference Topolovec-Vranic and Natarajan11]. Social media recruitment methods can be divided into two broad categories. These include unpaid methods, typically involving researchers posting information on their study using their personal or institutional social media account, and paid methods, which use advertisements placed through each social media platform’s dedicated ad service [Reference Akers and Gordon12]. Depending on the platform, these ads can be targeted to specific users in various ways. Researchers can use these ad targeting techniques to identify users more likely to qualify for or participate in their study. Additionally, once users click on an ad, deidentified data about them can be collected. This can inform investigators about advertising reach and target audience demographics. However, there are ethical issues around privacy and data safety in using social media advertising for these purposes, and care must be taken to avoid accidental reidentification of participants.

The effectiveness of social media recruitment is highly variable and depends on the specific study population [Reference Topolovec-Vranic and Natarajan11]. Social media advertising costs are widely divergent, and advertisement clickthrough rates can vary between studies and are hard to predict in advance. Cost-effectiveness of this modality, therefore, has been difficult to study systematically. Previous systematic reviews identified generally favourable costs, with social media ranging from $0 to $517 per participant, compared to $19–$777 for traditional methods [Reference Topolovec-Vranic and Natarajan11,Reference Ellington, Connelly and Clayton13]. However, there are no published meta-analyses of the cost-effectiveness of social media recruitment compared to non-social media methods. We now present a systematic review and meta-analysis of the cost-effectiveness of paid social media advertising for recruiting participants to clinical studies.

Methods

Study Inclusion and Exclusion Criteria

Any study directly comparing the cost of paid social media advertising versus non-social media recruitment methods to a clinical study was considered for inclusion. Social media platforms included were Facebook, Instagram, Twitter, Reddit, Linkedin, Snapchat, Youtube and TikTok. Non-social media methods could be any other recruiting method with a reported cost. Studies could include personnel time, development costs, recurring advertisement fees, supply costs and other miscellaneous expenses as part of the total cost; however, this had to be reported consistently across different recruitment methods. Included studies were expected to be secondary analyses of existing data, and allowable designs of the primary studies were randomised controlled trials, longitudinal studies, cohort studies or cross-sectional studies. Due to continuing changes in social media advertising algorithms, only studies within the last ten years were included. Single-arm studies of social media without a comparator, studies comparing only different social media platforms or studies of social media for purposes other than recruitment were excluded. Studies that only used no-cost social media or non-social media recruitment methods or did not report cost data were also excluded. Additionally, studies that recruited less than ten participants through social media or non-social media methods were excluded.

Outcomes

The primary outcome measure was the relative cost-effectiveness of social media recruitment compared to non-social media recruitment. This was calculated as the odds ratio of enrolling a participant into a study per dollar spent on each recruiting method. This approach was selected in order to minimise the effects of inter-study variability. By directly comparing costs of recruitment methods within each individual study, we could control for confounding effects such as differences in population, cost calculation, language and study type.

The secondary outcome measure was the relative cost-effectiveness of social media advertising compared to other forms of paid online advertisements including non-social media-based web ads (such as on Google) and mobile app ads. This was calculated as an odds ratio using the same method as the primary outcome. A study needed to enrol at least ten participants using this approach in order to qualify for inclusion in the secondary analysis.

Search Strategy

The search strategy for the meta-analysis followed the Preferred Reporting Items for Systematic Reviews and Meta-Analyses guidelines [Reference Page, McKenzie and Bossuyt14]. MEDLINE and EMBASE were searched over a three-month period ending in November 2022 to identify candidate studies. A detailed search strategy is presented in Supplemental Figure 1.

Article Screening

After completion of the MEDLINE and EMBASE search, identified articles were screened using a two-step process. The initial step consisted of abstract screening only, in order to eliminate irrelevant papers or review articles. The second step involved whole-article screening, and papers were included or excluded at this step based on the above criteria. Two authors (VT and RS) independently reviewed candidate articles for inclusion. Included articles were assessed for quality using the Joanna Briggs Institute (JBI) checklist for Quasi-Experimental Studies [15].

Data Extraction

Relevant data were manually extracted from each included article including the study location and type, recruited population, social media platforms used, other forms of recruitment used, total number of participants recruited by each method and total cost of each recruitment method. For the purposes of this study, Web-based recruitment that was not through social media (such as Google ads) was pooled with other non-social media methods. Any recruitment methods that did not report an associated cost, or reported a cost of zero, were excluded from the analysis.

Cost Normalisation

Any costs or prices reported in currencies other than US dollars were first converted to US dollars using the average annual currency conversion rate during the year of study publication. All costs were then adjusted for inflation to November 2022 US dollars. Cost comparisons between studies were reported as medians and interquartile ranges (IQR).

Statistical Analysis

Individual odds ratios were calculated for each study and outcome as described in the outcomes section above. Variances for each odds ratio were then obtained and meta-analyzed using the Mantel-Haenszel method [Reference Higgins, Thomas and Chandler16]. Due to a high degree of heterogeneity expected in the results, the random effect model was selected. A formal heterogeneity analysis was subsequently performed in order to confirm that the random effect model was appropriate. Results were reported as an odds ratio and 95% confidence interval (CI). All analysis was performed using Revman 5.4.1 (The Cochrane Collaboration, 2020) and R Statistical Software (R Core Team, 2022).

Results

Article Screening and Risk of Bias Assessment

A total of 319 unique articles were identified during the search process. Of these, 258 were excluded during the abstract screening process, leaving 61 for full-text review. In total, 38 articles were subsequently excluded during the full-text review: 13 were excluded due to lacking a non-social media comparator, 10 because they did not report costs, five because they did not use social media for recruitment, nine had less than 10 participants in either the social media or non-social media group and two were reviews. Twenty-three remaining articles were included in the analysis [Reference Adam, Manca and Bell17–Reference Woods, Yusuf and Matson39]. Because all included studies were secondary analyses, a risk of bias assessment could not be performed directly. Instead, all 23 identified studies were appraised using the JBI checklist for Quasi-Experimental Studies and were found to be appropriate for inclusion in the meta-analysis. A complete inclusion flow diagram is presented in Fig. 1.

Figure 1. Study inclusion flow diagram.

Characteristics of Included Studies

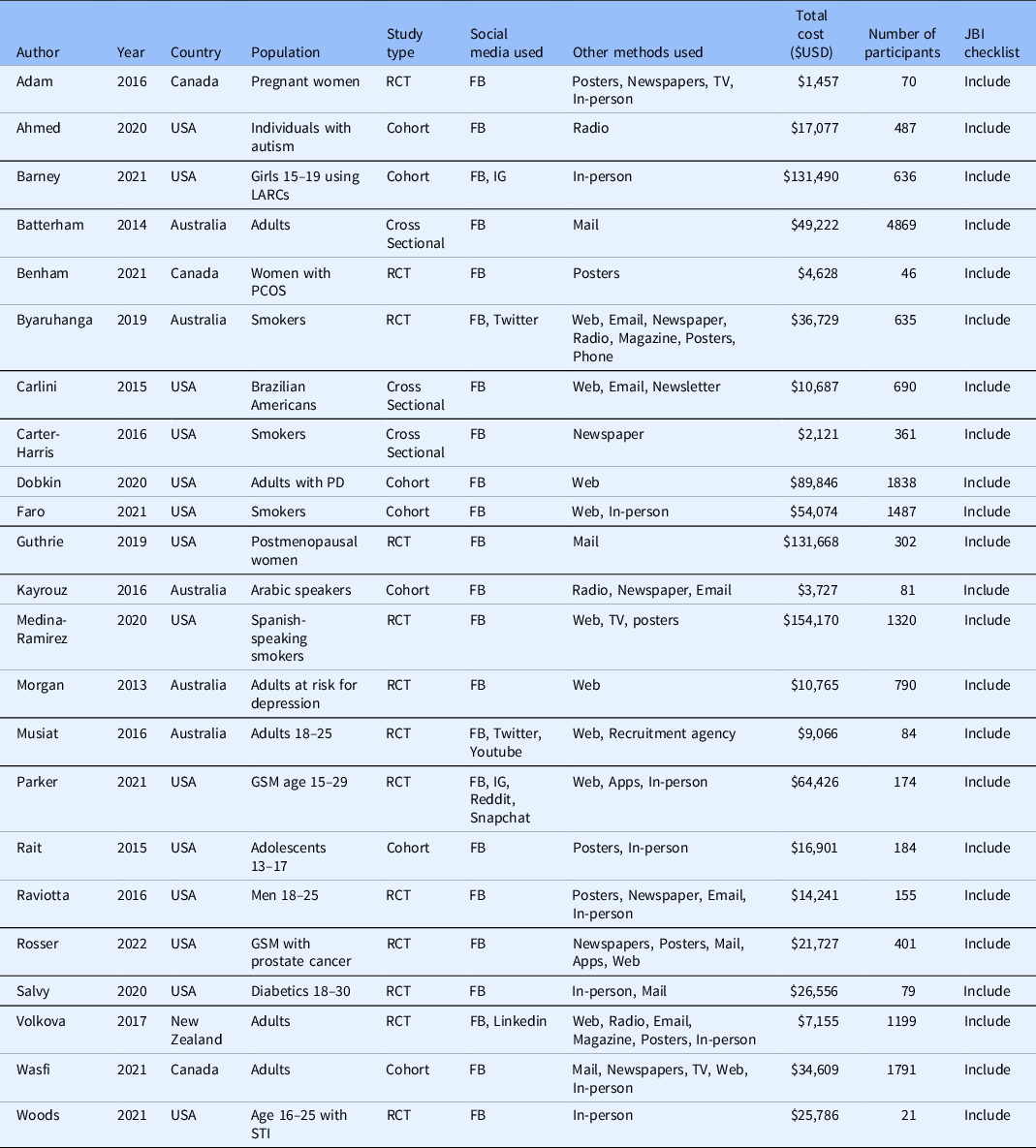

All 23 studies included in the meta-analysis were secondary analyses of previously collected data. In 13 of these, the primary study was a randomised controlled trial. The primary study was a cohort study in seven articles and a cross-sectional study in three articles. The most commonly studied disease, with seven included articles, was substance use including alcohol and tobacco [Reference Byaruhanga, Tzelepis, Paul, Wiggers, Byrnes and Lecathelinais22–Reference Carter-Harris, Bartlett Ellis, Warrick and Rawl24,Reference Faro, Nagawa and Orvek26,Reference Medina-Ramirez, Calixte-Civil and Meltzer29,Reference Parker, Hunter, Bauermeister, Bonar, Carrico and Stephenson32,Reference Rait, Prochaska and Rubinstein35]. Six articles evaluated reproductive and sexual health including sexually transmitted infections, contraceptive usage, and prenatal counselling [Reference Adam, Manca and Bell17,Reference Barney, Rodriguez and Schwarz19,Reference Guthrie, Caan and Diem27,Reference Parker, Hunter, Bauermeister, Bonar, Carrico and Stephenson32,Reference Raviotta, Nowalk, Lin, Huang and Zimmerman33,Reference Woods, Yusuf and Matson39]. The remaining ten articles evaluated various diseases such as diabetes mellitus [Reference Salvy, Carandang and Vigen34], autism spectrum disorder [Reference Ahmed, Simon, Dempsey, Samaco and Goin-Kochel18] and prostate cancer [Reference Simon Rosser, Wright and Hoefer36]. Only two studies involved a pharmacological intervention [Reference Guthrie, Caan and Diem27,Reference Raviotta, Nowalk, Lin, Huang and Zimmerman33], with most of the remaining studies evaluating behavioural interventions or surveys. Fourteen studies were conducted in the United States, five in Australia, three in Canada and one in New Zealand. Target populations were highly variable, although many studies specifically aimed to recruit younger individuals [Reference Barney, Rodriguez and Schwarz19,Reference Musiat, Winsall and Orlowski31–Reference Rait, Prochaska and Rubinstein35,Reference Woods, Yusuf and Matson39].

All of the studies used Facebook as a recruiting tool. Two studies also evaluated Instagram [Reference Barney, Rodriguez and Schwarz19,Reference Parker, Hunter, Bauermeister, Bonar, Carrico and Stephenson32] and two also studied Twitter [Reference Byaruhanga, Tzelepis, Paul, Wiggers, Byrnes and Lecathelinais22,Reference Musiat, Winsall and Orlowski31]. Youtube, Reddit, Linkedin and Snapchat were each evaluated by one study [Reference Musiat, Winsall and Orlowski31,Reference Parker, Hunter, Bauermeister, Bonar, Carrico and Stephenson32,Reference Volkova, Michie and Corrigan37]. Included studies used between one and seven non-social media recruitment methods. Non-social media methods that were evaluated included in-person methods such as physician’s offices or health fairs, mailed advertisements, posters, newspaper ads, TV ads, radio ads and non-social media online advertisements such as email, Web ads and mobile apps. Table 1 contains a description of included study characteristics.

Table 1. Characteristics of included studies

FB = Facebook; GSM = gender and sexual minorities; IG = Instagram; LARCs = long-acting reversible contraceptives; PCOS = polycystic ovarian syndrome; PD = Parkinson’s Disease; RCT = randomised controlled trial; STI = sexually transmitted infection.

Participant Recruitment and Cost-Effectiveness

Included studies recruited between 21 and 4869 participants and spent between USD $1457 and $154,170 on recruitment. The median cost per enrolled participant through social media was $45.51 [IQR $13.92–$134.81] and the median cost per enrolled participant through other methods was $74.89 [IQR $6.57–$187.15], however, this trend was not statistically significant (P = 0.14).

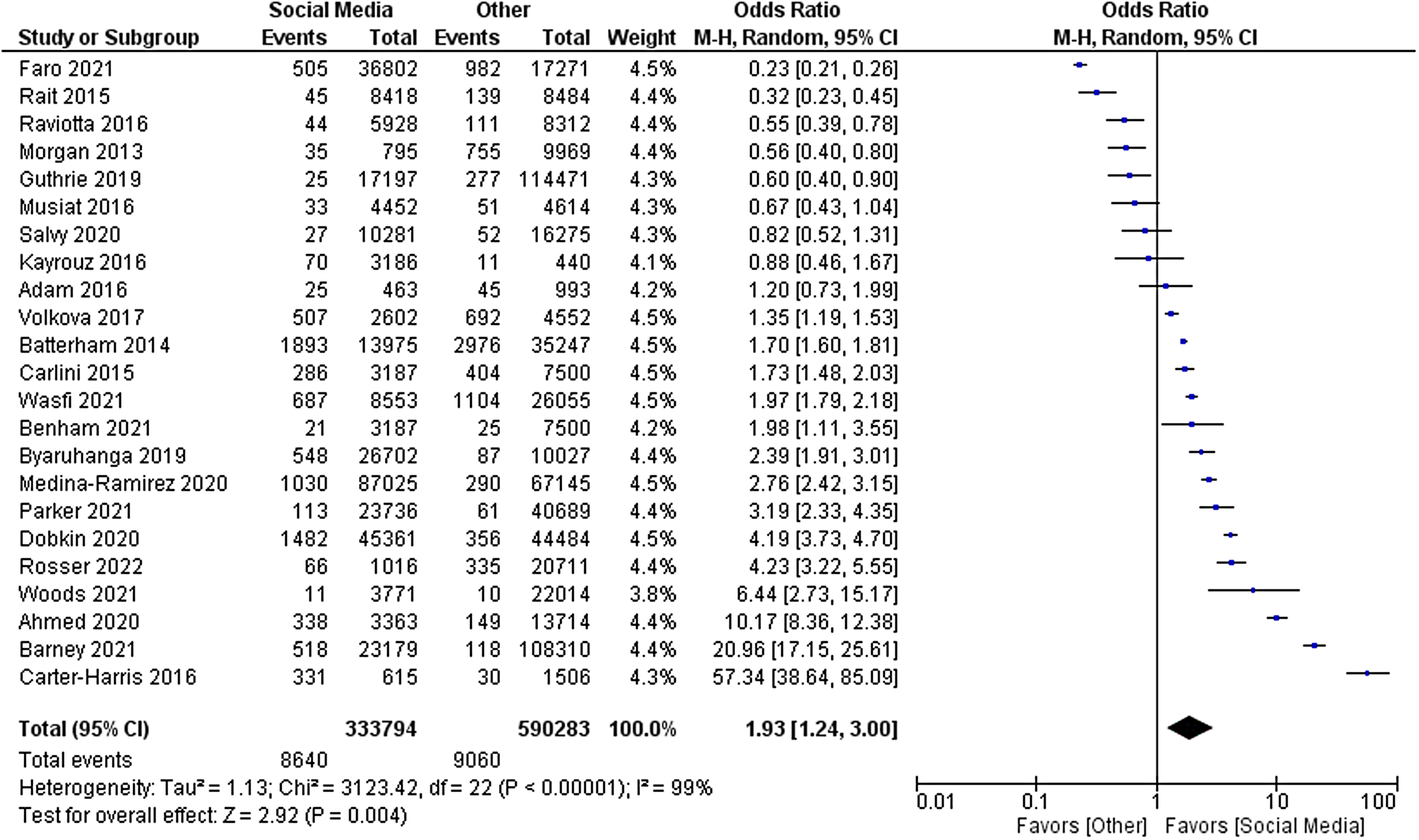

The primary outcome of relative cost-effectiveness, defined as the odds ratio of recruiting a participant per dollar spent on social media compared to other methods, was 1.93 [95% CI 1.24–3.00, P = 0.004]. Therefore, for a fixed amount of spending on recruitment methods, an investigator could expect to enrol nearly twice as many participants using social media compared with non-social media methods. Heterogeneity analysis was highly significant for the presence of heterogeneity in the sample, as expected (I [Reference Stensland, McBride and Latif2] = 99%, P < 0.001). The forest plot for this outcome measure is displayed in Fig. 2. A funnel plot was also generated to evaluate for possible publication bias. No publication bias was evident as shown in Fig. 3.

Figure 2. Forest plot of primary outcome.

Figure 3. Funnel plot of primary outcome.

Of the 23 studies included in the primary analysis, eight studies enrolled at least ten participants using paid online methods other than social media and were eligible for inclusion in the secondary analysis [Reference Byaruhanga, Tzelepis, Paul, Wiggers, Byrnes and Lecathelinais22,Reference Carlini, Safioti, Rue and Miles23,Reference Dobkin, Amondikar and Kopil25,Reference Faro, Nagawa and Orvek26,Reference Medina-Ramirez, Calixte-Civil and Meltzer29,Reference Morgan, Jorm and Mackinnon30,Reference Parker, Hunter, Bauermeister, Bonar, Carrico and Stephenson32,Reference Simon Rosser, Wright and Hoefer36]. The odds ratio of recruiting a participant per dollar spent on social media advertising compared to other online methods was 1.66 [95% CI 1.02–2.72, P = 0.04]. As in the primary analysis, there was a high amount of heterogeneity in this sample (I [Reference Stensland, McBride and Latif2] = 97%, P < 0.001). The forest plot for this analysis is displayed in Fig. 4.

Figure 4. Forest plot of secondary outcome.

Discussion

In this meta-analysis, we evaluated the cost-effectiveness of social media as a participant recruitment tool for clinical studies. This is, to our knowledge, the first systematic cost evaluation of social media recruitment in clinical studies. We found that social media was about twice as cost-effective as non-social media methods overall for recruiting participants, although the degree of cost-effectiveness was extremely variable between studies. When we restricted our analysis to comparing social media to other forms of paid online advertising, we again found that social media had superior cost-effectiveness, although the odds ratio was lower than in the primary analysis. Because cost-effectiveness was compared directly between methods within each study, we avoided some confounding factors such as expected recruitment numbers and geographic or temporal factors affecting recruitment. By including a relatively large number of studies, we were able to mitigate the wide variability of the underlying studies.

Despite this mitigation, high variability remained a major limitation in our analysis. This variability existed in the patient population, study type, location and recruitment methods, resulting in a wide difference in effect sizes even in studies with similar standard errors, as can be seen in Fig. 3. Additionally, the methods of determination of costs of non-social media recruitment varied considerably, with some but not all studies including the cost of employee time. Due to this degree of variability, we could not control for confounding factors in the primary analysis. Thus, even though the overall effect favoured social media, it would be difficult for an investigator to predict the cost-effectiveness of social media in their particular study before implementing the recruitment campaign.

While all included studies evaluated Facebook as a recruitment tool, other social media platforms were not studied frequently. Only one study evaluated Youtube for recruitment [Reference Musiat, Winsall and Orlowski31], even though Youtube is the second largest social media platform after Facebook [Reference Auxier and Anderson40], and no data are available on newer social networks such as TikTok or Nextdoor. Advertising algorithms within social media are constantly being updated, so data from older studies may no longer be accurate.

Only two studies [Reference Kayrouz, Dear, Karin and Titov28,Reference Medina-Ramirez, Calixte-Civil and Meltzer29] focused on non-English speakers; one noted significant benefit from a social media campaign, and the other found no difference. It is therefore impossible to extrapolate from these data if the benefit of social media recruitment extends to non-English speaking individuals. Although many studies focused on younger participants, only two studies focused on older individuals [Reference Dobkin, Amondikar and Kopil25,Reference Simon Rosser, Wright and Hoefer36], who are increasingly active on social media [Reference Cotten, Schuster and Seifert41]. Therefore, more studies are needed to evaluate the effectiveness of social media recruitment in these groups.

In summary, social media advertising is a powerful recruitment tool for clinical investigators and is generally more cost effective than traditional recruitment methods. However, the benefits vary widely depending on the study and population. Additional studies are needed to evaluate less commonly used social media platforms and hard-to-reach individuals and rigorously control for confounders that could affect the results.

Supplementary material

The supplementary material for this article can be found at https://doi.org/10.1017/cts.2023.596.

Funding statement

This study was funded by a Ruth L. Kirschstein National Research Service Award. The project was also partially supported by the National Institutes of Health, Grant UL1TR001442. The content is solely the responsibility of the authors and does not necessarily represent the official views of the NIH.

Competing interests

The authors have no conflicts of interest to declare.