The Chairman (Mr A. J. M. Chamberlain, F.I.A.): Tonight’s paper is the “Evolution of economic scenario generators” by the Extreme Events Working Party.

Before I call on the authors to make some remarks, I thought I would muse a little bit. The use of these sorts of economic forecasting and projection models by the actuarial profession almost exactly coincides with my time as a member of the profession. Only a very few experts thought about these things before the very end of the 1970s. The paper fully describes how the use of the models, as well as the thinking about them, has evolved over the decades that have followed.

I found it fascinating to think back to what we were using at different times. One thing the paper did not mention, but which I think is quite critical, is what was technically feasible at a point in time. It is vital to remember what could be done, say, 10, 20, 30 or 40 years ago. When I started in the profession there were quite a lot of offices still using punch cards for their valuations. The computers that were used were enormous things that had the power of something that you would keep on your desk or even carry around in your briefcase today. I think it is important when looking at the history to just bear that in mind. The art of the possible was quite critical for practical applications.

Another thing that I am very pleased to see in the paper is a discussion of what it is feasible and practical to use in actual actuarial work, as opposed to what is theoretically, in some sense, correct.

I am going to introduce both the members of the working party who are going to speak. One speaker will be Parit Jakhria of Prudential. Parit is Director of Long-Term Investment Strategy at Prudential Portfolio Management Group (PPMG). He is responsible for the long-term investment strategy, which includes strategic asset allocation for a number of multi-asset funds, long-term investment and hedging strategy. He is also responsible for providing advice on capital markets modelling and assumptions across the group. Prior to joining PPMG, Parit undertook a variety of roles within the Prudential, which culminated in the overall responsibility for the production of the Prudential’s regulatory capital requirements pillar 2 Individual Capital Assessment. Parit is also a Chartered Financial Analyst charterholder and has been an active member of numerous working parties for the profession, including chairing the Extreme Events Working Party. He has also played an active role in reviewing the syllabus and is part of the Finance and Investment Board and Chair of the Finance and Investment Research Committee.

Andrew Smith, perhaps characteristically, has given one of the shortest biographies for someone who could have written one of the longest. He has 30 years’ experience of stochastic financial models and is an Honorary Fellow of the Institute and Faculty of Actuaries. Having spent fifteen years as an actuarial partner in a large consulting firm, he moved, last year, to Ireland to take up an Assistant Professorship in Statistics at University College Dublin. So without further ado, over to you, Andrew.

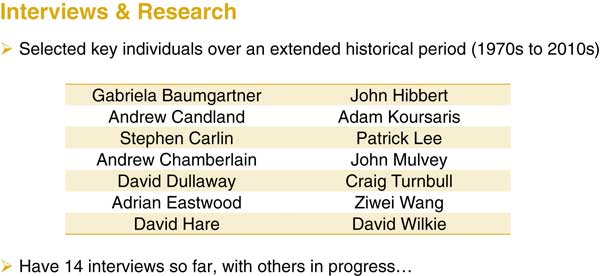

Mr A. D. Smith: I am going to introduce the paper by describing what we have done. We describe the need for stochastic models because you have only one past but many possible futures, and scenario generators are the tools that produce these future outcomes. We have been concerned, particularly, with economic scenario generators (ESGs) that would produce forecasts of things like inflation, interest rates, equity market levels, property market levels, foreign exchange rates, credit spreads and so on. In this working party, we have written a fair number of papers and presentations, many of which have been quite technical, but this time we took a different approach. What we recognised was that these models have been in use, on and off, since about the 1970s and became much more widely used in the 1990s. Many of the people who initially developed those models are now retired. Some of them, unfortunately, are deceased. We thought that it would be a good idea to interview people who had had a big role in developing those models, to obtain their insights and views on what had happened. We particularly wanted to try to obtain their understanding of what had caused shifts in modelling practice. So, there are very few formulae in the paper tonight. Instead, we are recounting the story as it was told to us by the people we set out to interview. We are grateful to those who participated, who are shown in Figure 1.

Figure 1 Interviewees

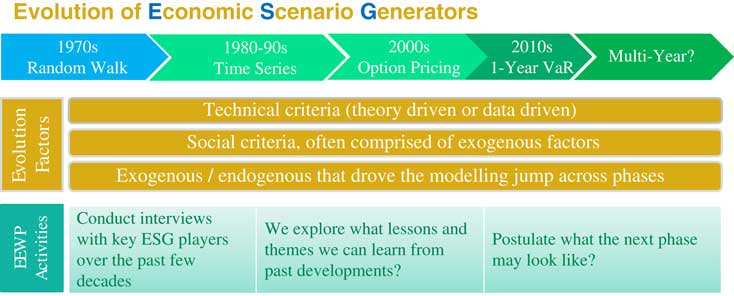

We are particularly grateful to those who have come out of retirement and racked their brains to give us some insights into the earlier years when even Parit (Jakhria) and I were not involved. There are a few people we are still trying to pin down, to obtain their views for a final paper. So, Figure 2 is a picture of how we saw the evolution of scenario generators, particularly within an insurance context.

Figure 2 Evolution of economic scenario generators (ESGs)

In the early years, the first models that were used were random walks. They were borrowed from academic work. After that was a large body of research led, mostly, by Professor David Wilkie, looking at time series models. Subsequently, in the early 2000s, in the insurance industry at least, there was a move to market consistent models. That involved almost discarding some of the time series ideas. This is interesting, and I would like to hear your views as to why you think that has happened.

We are now in a situation where much of stochastic modelling uses 1-year value at risk (VaR). Given this, you might have imagined that actuaries would have dusted off their multi-period time series models and said, “I am going to cut that all off to one year and I already have the solution to that problem”. That is not what happened. What happened was that people built a new set of models. So, what was wrong with the ones that they had had twenty years earlier?

We have tried to identify some factors here. One thing that became clear is that it is not only technical criteria which drive these model developments. It is not the case that every model is driven by some new technical innovation to give a better model than before. Very often, the requirements of model users were different and sometimes there were things that became fashionable. There are social influences as well as technical ones.

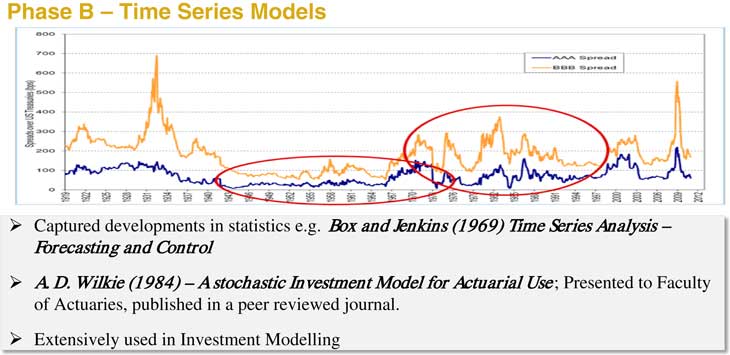

We conducted interviews with some key players to ask them what they thought about this. The first phase was random walks, which are well known from academic studies. These models capture one general stylised fact, which is that in most developed markets, if you look at returns from one period to the next, there is very low autocorrelation. So, you do not typically find that you can predict the direction of the next period’s return from the return immediately preceding it. Now, there are questions as to whether that remains true over longer time periods, but over short time periods it seems to be the case. The random walk models account for that fact, but there are clearly things for which they do not account. An obvious example is government bonds maturing at their face value on the maturity date. There was a lot of innovation involving time series models. Figure 3 shows some credit spreads.

Figure 3 Time series models
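As an illustration of the random-walk stylised fact described above, here is a minimal sketch assuming a random-walk log-price with independent, normally distributed annual log-returns. The drift, volatility and seed are hypothetical and not calibrated to any market. It checks that the sample lag-1 autocorrelation of the simulated returns is close to zero, i.e. last period’s return carries essentially no information about the next one.

```python
import random
import statistics


def simulate_log_returns(n, mu=0.05, sigma=0.18, seed=1):
    """Random-walk log-price: i.i.d. normal log-returns (hypothetical parameters)."""
    rng = random.Random(seed)
    return [rng.gauss(mu, sigma) for _ in range(n)]


def lag1_autocorrelation(xs):
    """Sample lag-1 autocorrelation of a series."""
    mean = statistics.fmean(xs)
    num = sum((a - mean) * (b - mean) for a, b in zip(xs, xs[1:]))
    den = sum((x - mean) ** 2 for x in xs)
    return num / den


returns = simulate_log_returns(10_000)
rho = lag1_autocorrelation(returns)
print(f"lag-1 autocorrelation of simulated returns: {rho:.3f}")
```

Note that nothing in this model knows about, say, a bond being pulled to par at maturity; that needs extra structure.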

Time series modelling followed innovation within the academic world and David (Wilkie) really led the charge in applying it to financial time series. It produced answers which, over longer time periods, were quite different from random walks. In particular, equity investment looked less risky using a Wilkie-style model than would have been the case using random walks. In fact, it was quite a lot less risky over long time horizons. One of the great things about the Wilkie model was that it was published in a proper peer-reviewed journal. Very few of the other models that we are describing have had that degree of public scrutiny or detail in the public domain.
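To see why mean reversion makes long-horizon equity risk look smaller, the sketch below compares the standard deviation of the cumulative log-return over a horizon under a random walk and under a hypothetical mean-reverting alternative, in which the log-price’s deviation from a deterministic trend follows an AR(1) process starting on the trend. This is not the Wilkie model itself, which has a richer cascade structure; the volatility and persistence values are illustrative assumptions.

```python
import math


def rw_horizon_sd(sigma, years):
    """Std. dev. of the cumulative log-return over `years` under a random walk."""
    return sigma * math.sqrt(years)


def ar1_horizon_sd(sigma, a, years):
    """Std. dev. of the cumulative log-return when the log-price deviation from a
    deterministic trend follows x_t = a * x_{t-1} + e_t, e_t ~ N(0, sigma^2),
    starting from x_0 = 0: Var(x_T) = sigma^2 * (1 - a^(2T)) / (1 - a^2)."""
    return sigma * math.sqrt((1 - a ** (2 * years)) / (1 - a ** 2))


sigma, a = 0.18, 0.8  # hypothetical annual shock volatility and persistence
for years in (1, 5, 20, 40):
    rw = rw_horizon_sd(sigma, years)
    mr = ar1_horizon_sd(sigma, a, years)
    print(f"{years:>2} yrs: random walk {rw:.3f}, mean-reverting {mr:.3f}")
```

Over one year the two models agree; over 40 years the random-walk spread keeps growing with the square root of time, while the mean-reverting spread levels off near sigma / sqrt(1 - a^2).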

David also produced some recommended parameters. There were some difficult questions associated with these developments. One of them was that during the 1980s and 1990s there was an increase in the use of stochastic modelling and the Wilkie model for pensions asset-liability studies. At the same time, there was a general increase in the equity proportion of a typical pension fund asset allocation. We do not think we have quite got to the bottom of what was cause and what was effect in this area. One possibility is that there was a new model that gave some new insights, causing people to revise their view of the risk of equities, causing funds to be comfortable investing more in equities. Alternatively, there may have been some other reasons why funds wanted to invest more in equities and, therefore, a model which appeared to support that became very popular.

The next modelling phase was associated with realistic balance sheets using option pricing-type models. This was when actuaries started using and adapting models that had been developed outside the actuarial profession, mainly in banking and derivative pricing. These are still used for realistic balance sheets and market consistent valuations, especially in life insurance, less so in pensions.

We have been given a few reasons why the option models became popular. One was that there was a financial crisis and a need to value the costs of options and guarantees. The need to do that was exposed by the failure of Equitable Life. There were regulations which required market consistent models, and it was not clear that that could be done with the time series models that were then in use. There were ideas coming across from banking. So, there were various reasons why these models became more popular in the insurance industry but not so much in pensions consulting.

Then there was another phase, which was the 1-year VaR. So, suddenly everybody became interested in fat tails because we were trying to model extreme rare events. The kinds of normal distributions that are traditionally used in the earlier models failed to produce sufficiently frequent large market falls or large market moves. Our working party was involved in this work. I think we had quite an influence in publishing models here that subsequently became adopted, in fairly similar form, across the industry for Solvency II.
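The gap between normal and fat-tailed distributions can be made concrete with a small calculation. The sketch below compares the probability of a fall worse than k standard deviations under a normal distribution and under a Student-t distribution with 4 degrees of freedom rescaled to unit variance. The Student-t(4) is an assumed example of a fat-tailed distribution, not the working party’s published calibration; its lower tail uses the known closed-form t(4) distribution function.

```python
import math
from statistics import NormalDist


def normal_tail(k):
    """P(X < -k) for a standard normal X."""
    return NormalDist().cdf(-k)


def student_t4_tail(k):
    """P(X < -k) for a Student-t variable with 4 degrees of freedom, rescaled to
    unit variance (a raw t(4) variable has variance 2, so X = T / sqrt(2))."""
    t = k * math.sqrt(2.0)  # threshold on the raw t(4) scale
    u = t / math.sqrt(1.0 + t * t / 4.0)
    # Closed-form t(4) CDF: F(t) = 1/2 + (3/8) u (1 - u^2 / 12); lower tail = 1 - F(t)
    return 0.5 - 0.375 * u * (1.0 - u * u / 12.0)


for k in (2, 3, 4):
    n, t4 = normal_tail(k), student_t4_tail(k)
    print(f"fall worse than {k} sd: normal {n:.2e}, t(4) {t4:.2e}, ratio {t4 / n:.1f}")
```

Near the centre the two give similar answers (roughly 2.3% versus 2.4% for a two-standard-deviation fall), but at four standard deviations the fat-tailed version makes the fall roughly 75 times more likely, which is the region a 1-in-200 calibration depends on.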

In summary, that has given you a flavour of the different phases that we had identified. I am now going to hand to Parit (Jakhria), who is going to finish off the presentation.

Mr P. C. Jakhria, F.I.A.: Whilst we were doing the research, we also analysed the interview results, and I will try to give you a little more flavour of these. One of the first questions we asked the interviewees, who were users, developers and various other people across the industry, was: “What were the uses of economic scenario generators, and how have they changed over time?” A very interesting outcome was that there are some uses which have been prevalent for the last 50–60 years. Uses such as investment strategy, business strategy, product development and pricing have been in place for a long period of time and were driven by the need to make decisions. As Andrew (Smith) said, once you recognise that there is one past but many different futures, and you want the business to be robust in those different futures, you have the need for an ESG, and they have been used for a number of years.

Some of the newer uses of ESGs are the ones where you have to report something. Regulatory reporting, valuation and capital modelling fall into this category and have only come about in approximately the last decade. Other areas where ESGs are important are the realistic balance sheet, the Individual Capital Assessment and then its successor, Solvency II.

What is also interesting is the tertiary use of ESGs. Once they are used both for business strategy as well as valuation and reporting, they then have a whole host of uses within the business. They are used for customer literature, customer outcomes and a lot of other facets, which may not have been primary uses in the past.

One other fact that was pretty clear in talking to all of the participants was that, although their use has varied in a fairly linear fashion, the awareness of ESGs has grown exponentially. There is a lot more awareness and in-house knowledge now than there was many years ago where it was a very niche set of people who understood and did all of the modelling.

The other question that we asked was, “What was the big challenge about these stochastic asset models and why was it such a difficult problem?” One chart that was very useful was how asset allocation has changed over time. So, if you go back 50 years, most insurers’ balance sheets had a fairly simplistic asset base. You would have UK gilts, UK equities and a little bit of cash. That was pretty standard but has changed massively over time. Now the balance sheet is much more international, which forces you to model the world in a much more international way. The other challenges relate to the liabilities. Longevity has changed over the last century or so, as has the nature of insurance business, which has moved away from short-term assurance to whole of life and endowment products.

These developments have required an extrapolation in time. Regulations have not helped because they have asked us for an extrapolation in terms of probability. So, in order to have a perfect model, not only do we need to model all the assets, we need to make it more challenging by extrapolating both in terms of time, as well as probability.

As I have mentioned in this forum before, if you are to have a truly accurate model of the world, you need it to be as big as the world itself. If you wanted to extrapolate it in time and probability, you would need it to be many times bigger.

Notwithstanding all of the preceding issues, we wanted to know how happy the interviewees were with ESGs. Generally, over time, most people felt there was a learning process, but that the material economic risks had been captured. One exception to that would be interest rates, where for a number of years, decades even, all of the textbooks cited a floor of zero on interest rates, called the zero bound. There were some interest rate models which had an implicit assumption that interest rates do not fall below zero and, in fact, that was in the actuarial syllabus until not that long ago. This, most people feel, is one area where, perhaps, there are a lot of lessons to be learnt. There is a need for more work on how interest rate models work and on what implicit assumptions and judgements we are making, about which we should be very careful.
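The point about implicit floors can be illustrated by contrasting two textbook short-rate models. In the sketch below, the Vasicek model (normally distributed short rate) assigns a positive probability to negative rates, whereas a lognormal model rules them out by construction, so the zero bound is an assumption built into the model rather than something estimated from data. Both models and all parameter values are illustrative choices, not the specific models discussed by the interviewees.

```python
import math
from statistics import NormalDist


def vasicek_prob_negative(r0, kappa, theta, sigma, t):
    """P(r_t < 0) under the Vasicek model dr = kappa * (theta - r) dt + sigma dW,
    whose short rate at time t is normally distributed."""
    mean = theta + (r0 - theta) * math.exp(-kappa * t)
    var = sigma ** 2 * (1.0 - math.exp(-2.0 * kappa * t)) / (2.0 * kappa)
    return NormalDist(mean, math.sqrt(var)).cdf(0.0)


def lognormal_rate(r0, sigma, t, z):
    """Short rate at time t in a lognormal model with no expected drift, for a
    standard normal draw z. It is r0 times an exponential, so it is always
    positive: the zero floor is built in."""
    return r0 * math.exp(sigma * math.sqrt(t) * z - 0.5 * sigma ** 2 * t)


# Hypothetical parameters: 0.5% starting rate, 2% long-run level, 5-year horizon
p = vasicek_prob_negative(r0=0.005, kappa=0.1, theta=0.02, sigma=0.01, t=5.0)
print(f"Vasicek P(rate < 0 in 5 years): {p:.1%}")
print(f"Lognormal rate after a -5 sd shock: {lognormal_rate(0.005, 0.3, 5.0, -5.0):.6f}")
```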

The other area which is difficult is dealing with changes of regime. It is problematic to know how to allow for them. Although they might be obvious in hindsight, they are much more difficult to anticipate. One example would be 1997, when the Bank of England became independent in terms of monetary policy. That changed the dynamics of interest rates. Arguably, the advent of quantitative easing changed the dynamics of how capital markets behave. It is an interesting dilemma to determine when a regime has changed and how to set the parameters for the new regime.

We can consider the factors that have influenced change in the past. Some changes have been led by regulation. Some have been due to unforeseen circumstances. For example, in the credit crisis, if actual market movements were many standard deviations away from what your model predicted, that was an incentive to change your model. There has been a similar effect with negative interest rates. Some changes have been user- and developer-led, the biggest example being the move from random walks to Wilkie models, which was largely led by the developers and a fairly niche community. That leads to a number of different criteria. At a very loose level, we could convert these into objective criteria such as: goodness of fit; accuracy in replicating market prices; back-testing; and other statistical properties. All of those metrics could be used to decide objectively whether model A or model B is better.

Then, there are a number of other criteria, which are slightly more subjective and what Andrew (Smith) termed “Social factors”. Examples of these are: What model do other companies have? How easy is it to explain the model to lay people? Are there any commercial factors, such as, a budget constraint? Is there a calibration time constraint? These factors are not to be discounted. If you look at history, they played quite a big role in the formation of decisions.

That led us to ask ourselves: what might determine models in the future? If you consider the past, you may think that it was a continuous evolution of a series of models where each model is better than the last. As Andrew (Smith) hinted, and as we showed in the paper, that is not true at all. There were a number of jump processes which were caused by exogenous events that completely changed the direction of travel. If we take the evolutionary factors on their own, these are all sensible factors where we would expect to see a positive direction of travel. Thus we would expect: modelling of new asset classes as the capital markets evolve; better modelling of economic cycles as the quantitative easing, or post-quantitative easing, era tells us a little bit more about how markets behave; and models going further and further into multi-period or real world, improving their structure and granularity.

Then, you may have a number of left-field factors which make a big change to the modelling in a particular direction. There are many examples: new regulations, where we do not know which doors will be shut after the next crisis, once the horse has bolted; market crises which might genuinely change the perception of how markets behave; other disruptive influences, say, a big tech company like Google completely taking over ESG modelling by creating a model purely from big data; and social influences, for example, the popularity of something like factor modelling could take over and change our thinking to model factors rather than asset classes.

The other lens we would like to use is how we divide models, whether they are driven by economic theory or they are driven by empirical data, and how that has changed over time. The first models were random walks, and those were very much dependent on economic theory. There was Brownian motion which was a stochastic process with an equal chance of going left or right. There was a big jump to time series models, which were purely empirical. The parameters were purely based on historical data. Then, again, we took a jump towards theory, as you had the Black-Scholes model with its frictionless markets. Subsequently there was a jump to VaR models. We need to consider whether there will be a happy marriage of both economic theory and empirical data in the next set of models, as we try and extrapolate the models further out in time. There may need to be a marriage between realism and business needs. There will be commercial pressures, time pressures, pressures to keep the model simple, transparent, intuitive, in conjunction with the need to model more complicated features of the market, like volatility clustering, economic cycles and the correlation across time. Those are all factors we think might influence the next set of models.

We would love to hear from you in a number of specific areas. Are there any stories of failure or success of ESGs and the reasons for this? We are particularly interested in international experience. We are conscious that a lot of our knowledge and research is very UK-focussed. We are aware of lots of exciting and different developments in the international space. If any of you have any comments or know people who could be interviewed at the international level, that would be much appreciated.

The Chairman: I think the two presentations have indicated very well the thrust of the paper and the interesting topics that it covers. For the purpose of the discussion, I am joined on the top table by Ralph Frankland, who used to chair the working party. He is now enjoying a little bit of retirement. He is from Aviva. I am going to open the discussion to the floor.

Mr M. G. White, F.I.A.: I think this is a clearly presented and interesting paper. I am going to concentrate on the “so what” questions relating to Section 10 of the paper – the real world needs to which I believe the actuarial profession should be giving some priority in today’s uncertain world, namely financial planning for individuals and institutions and the business of stewardship of savings of individuals. We are in a world where individuals are expected to take increasing responsibility for their own finances, and I believe a greater level of help is required than simply calling for more financial education, or recommending that people use a financial adviser.

But before I expand on some of the challenges where further forward thinking about investment outcomes versus the real needs of different people and of different institutions is needed, I cannot resist a comment about the total nonsense that is the Capital Asset Pricing Model, modern portfolio theory and concepts such as expected return being positively related to short-term stock price volatility.

I believe that the profession made a terrible mistake when we adopted these ideas into our examination syllabus in a way that gave an element of credibility to the theories that is not justified. By all means develop theories, but don’t actually believe them unless there is really good evidence.

The unfortunate thing is that the theories are more about what stock prices do than about how the underlying businesses develop, but the lure of the mathematics can too easily dupe people into believing the output. Stock price behaviour, especially in the short term, has an element of circularity about it - if everybody believes theory X regarding stock price behaviour, then that may prove to be self-fulfilling.

In the long term, that is not the case at all, as it will be the performance of the underlying business that gets reflected in prices. So there’s Ben Graham’s famous saying: “In the short run, the market is a voting machine, but in the long run it is a weighing machine”.

The actuarial profession has traditionally been the “go to” body for the challenge of using assets to meet long term liabilities. But there have been major changes affecting the traditional actuarial role. With-profits life insurance business has been in decline since the Equitable, and Defined Benefit pensions have also been in decline, ever since the law changed to make them liabilities of the sponsoring company, and that decline has accelerated as real interest rates have fallen.

I’m now going to talk about two specific challenges that are topical at the moment: pensions and periodic payment orders (PPOs).

I suspect that the biggest and most important challenge for society that the actuarial profession could potentially help with now concerns people’s pension provision. And this needs an objective look from the perspective of the individual saver faced with either the accumulation or the decumulation problem. Not from the perspective of any particular member of the financial sector. Especially not from the financial sector perspective in fact, since I believe that the single most important thing that people need to know how to do differently is how to avoid any significant annual percentage charge on their assets. The second thing they need to know is how to face up to, manage and accept the appropriate level of uncertainty, which includes understanding how to cope emotionally with market fluctuations.

I recognise that I’m talking about an ideal world, not one which it is easy to get to quickly, or perhaps even at all, but if anyone is interested in becoming involved in thinking in these broad problem areas, I would be very happy to hear from them. To me this is a socially useful application for my actuarial training and my interest in investment. In my utopian world, we have the more knowledgeable savers and investors working together for nothing, helping each other, so that ultimately there is something for the less knowledgeable to learn from and copy. My working title for this is currently “savers take control”, which you could google to find out more. I don’t mind being thought of as more than a bit idealist - if we can do any good at all it will still be worth the effort. I am hoping a number of people will come forward to help who have worked in the financial sector, but have now retired and so don’t have the conflict of interest that would make them nervous about campaigning on the issue of investment-related costs.

The second challenge, or second series of challenges perhaps, is PPOs, or periodic payment orders. These are effectively immediate, inflation-linked annuities for life, which are the best way available of meeting the costs of care of, say, motor accident victims, as they provide very well for longevity risk and also moderately well for the relevant inflation risk. Insurers have argued that, if claimants take a lump sum, they should be presumed to invest significantly in equities and therefore they don’t need amounts as large to compensate them as if they took the lowest-risk route of index-linked gilts.

We will come back to that in a moment, but the implication of that argument is surely that the insurers, being better equipped in terms of expertise and financial resources, should be investing in equities themselves. After all, a PPO might have an average duration of 40 years, with some really young claimants expecting to live in excess of 70 years, and if it is true that an equity investment strategy makes sense, as the insurers’ arguments would clearly imply, we have to ask what the accounting regime, and also the regulatory regime, with its need to ensure long-term resilience and financial strength of insurers, would have to look like to permit, and possibly even to encourage, some equity investment in respect of these very long and very real liabilities. Might the thinking behind ESGs help here, perhaps? I believe that the profession should be working on this question today.

And this same example of PPOs is one where concentrating on the use of ESGs can obscure other ways of looking at the problem. Let’s consider for a moment that an insurer has decided how much money it needs to put aside to meet the cost of a PPO. Let’s call this “the insurer’s PPO cost”. Taking that as a start point, now let’s contrast the position of a claimant against that of the insurer. We have:

∙ Investment expertise. The insurer will have much more expertise than the claimant. A claimant will need to hire a financial adviser, who will be unlikely to have the range of expertise available to an insurer.

∙ Investment expenses. The insurer will have much lower expenses, and will not need to purchase the kinds of services that a claimant would need to purchase.

∙ Tax. The insurer will achieve a gross-of-tax investment return, tax only becoming payable in the event that the insurer’s funds set aside, including the gross investment return, prove to be more than needed. A claimant will be a taxpayer, and any investment return will be subject to tax in accordance with whatever the personal tax regime may be at the time.

∙ Ability to take investment risk in order to achieve an optimal return. The insurer has a scale of assets and capital resources that the claimant does not have, and for a claimant, the negative utility of running out of money has to figure more importantly than the positive utility of ending up with a surplus. Putting it another way, the claimant can much less afford to take risk than the insurer.

What all these considerations lead to is a very simple conclusion. They all point in the same direction. Whatever amount the insurer needs to put aside to provide a PPO, the “insurer’s PPO cost”, the amount that a claimant needs to provide the same thing has to be very materially higher. That is not the lens of fairness through which the ESG-focussed discussion on the Ogden rate that took place recently was looking. So just because ESGs are available, I think we have to be very careful to put them into a proper context for the problem in hand.

Mr J. G. Spain, F.I.A.: When one says, “One past, many futures”, this is not really true. In terms of the past, we do not actually know where we have been. Just think of pre-crisis liquidity assessments. As for the future, it is not many futures, there is only one future, there are many possible futures. Knowing that there are many possible futures, if those are disclosed to the stakeholders, including, for example, PPO claimants, it would be possible for the stakeholders to obtain some better insights.

This would be far better than looking at one number where somebody, an actuary perhaps, has said, “This is the capital value which needs to be paid, which needs to be reserved, which needs to be priced”. We should move away from thinking in terms of capital numbers and start looking at explaining to stakeholders, “You have a 5% chance of going bust or a 95% chance of going bust, do you feel lucky?” I think actuaries could be doing a lot better than we have been doing. We have concentrated for too long on one number, which destroys the information rather than enhances it. We are not helping the stakeholders.

Mr H. M. Wark, A.F.A.: First, the use of option pricing models in ESGs was largely driven by their use in banking and their mathematical tractability. However, in derivative pricing, in banking, the terms over which they are considered tend to be much shorter than those relating to actuarial liabilities. You mentioned extrapolation in your presentation, how suitable do you think these models are for the long-term nature of actuarial liabilities? A second point is that you are pushing the discussion towards multi-period real-world ESGs. How would you make the distinction between risk neutral and market consistent in an ESG? Do you think the two terms are synonymous or can they be distinct?

Mr Smith: I do not think that risk neutral and market consistent are synonyms. Market consistent models are ones which are calibrated to reproduce the market prices of instruments. So, they could be ones where risk premiums are zero, which are risk neutral, or ones where risk premiums are non-zero, such as deflator-type models. So, the market consistent approach is a way of calibrating. The so-called real-world approach usually means models that are calibrated to historic measures of, say, correlations and volatilities rather than those implied by market prices. So, market consistent models are not necessarily risk neutral.

In regard to your question about the terms of the models that are used within banking to price options, some of those options will be quite short-dated, but there are, for example, interest rate swaptions, with combined terms of 40 or 50 years. There you are dealing with similar terms to some of the longer-dated insurance liabilities. The interesting thing there is that the models, particularly in Euros, which the banks are using to price those long-dated derivatives are not the same ones used by insurers because the insurance rules allow you to disregard the yield curve beyond 20 years in the Euro and not use market rates.

So, there we have a situation where the insurers are, in a way, using a shorter-term approach than the banks, who are using all of the market information. So, I do not think it is quite as clear-cut as to say, “There are short-term problems and the banks’ models are fine for them. Actuaries look at the long term, therefore we need to make our own way”.
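The distinction drawn here between market consistent and risk-neutral models can be illustrated with a deliberately simplified one-period binomial market; all prices and probabilities below are hypothetical. The same payoff is priced two ways: by discounting under risk-neutral probabilities, and by taking a real-world expectation of a state-price deflator times the payoff. Both reproduce the market price of the stock, so both are market consistent, yet under the real-world probabilities the stock is expected to earn more than the risk-free rate, i.e. the deflator version carries a non-zero risk premium.

```python
import math

# Hypothetical one-period market: stock moves from 100 to 120 or 90; risk-free rate 2%.
S0, UP, DOWN, R = 100.0, 1.2, 0.9, 0.02
P_UP = 0.6  # hypothetical real-world probability of the up move

# Risk-neutral up-probability: makes the discounted stock price a martingale.
Q_UP = (math.exp(R) - DOWN) / (UP - DOWN)

# State-price deflator in each state: exp(-R) * q(state) / p(state).
DEFLATOR_UP = math.exp(-R) * Q_UP / P_UP
DEFLATOR_DOWN = math.exp(-R) * (1.0 - Q_UP) / (1.0 - P_UP)


def price_risk_neutral(x_up, x_down):
    """Discounted expectation under risk-neutral probabilities."""
    return math.exp(-R) * (Q_UP * x_up + (1.0 - Q_UP) * x_down)


def price_with_deflator(x_up, x_down):
    """Real-world expectation of deflator times payoff."""
    return P_UP * DEFLATOR_UP * x_up + (1.0 - P_UP) * DEFLATOR_DOWN * x_down


call = (max(S0 * UP - 100.0, 0.0), max(S0 * DOWN - 100.0, 0.0))  # 100-strike call
real_world_expected_return = P_UP * UP + (1.0 - P_UP) * DOWN - 1.0

print(f"call price, risk-neutral: {price_risk_neutral(*call):.4f}")
print(f"call price, deflator:     {price_with_deflator(*call):.4f}")
print(f"real-world expected return {real_world_expected_return:.1%} vs risk-free {math.exp(R) - 1:.1%}")
```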

Mr Wark: Some real-world interest rate models incorporate a term premium. You can, certainly, set these term premiums to zero, and the models then become, essentially, risk neutral models. If you want to model a term premium in an interest rate model, it can be difficult to strip it out when you want to return to market prices. How would you approach that problem?

Mr Smith: If you are trying to replicate market prices of bonds, you certainly can put in a term premium. The deflator technique is one way of doing that. It enables you to model higher expected returns on longer-dated bonds than on shorter-dated bonds. If you are still calibrating to market prices, that will also affect the distribution of future interest rates, because when you observe long-dated bonds to calibrate the model, you attribute some of their yield to a term premium rather than to an expectation of interest rates. So, your trajectory of mean interest rates will slope further downward than in a model calibrated with zero risk premiums. There are some quite subtle interplays.
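To make the interplay concrete, here is a minimal sketch in Python. It assumes a simple additive decomposition in which each zero-coupon yield equals the average expected one-year short rate up to that maturity plus a term premium; it is not a deflator model, and the flat 3% curve and the term premium of 10bp per year of maturity are hypothetical numbers chosen for illustration. Calibrated to the same observed curve, the zero-premium version reproduces the forward rates, while the version with a term premium implies a lower, downward-sloping path of expected short rates.

```python
import numpy as np

def expected_short_rates(zero_yields, term_premia):
    """Back out expected one-year short rates from zero-coupon yields, assuming
    y(n) = (average expected short rate over years 1..n) + tp(n),
    with continuous compounding and annual steps (a simplifying assumption)."""
    n = np.arange(1, len(zero_yields) + 1)
    # n * (y(n) - tp(n)) is the sum of expected short rates up to year n
    cumulative = n * (np.asarray(zero_yields, dtype=float)
                      - np.asarray(term_premia, dtype=float))
    # Differencing the cumulative sums recovers each year's expected short rate
    return np.diff(cumulative, prepend=0.0)

years = np.arange(1, 11)
observed = np.full(10, 0.03)        # hypothetical flat 3% zero-coupon curve
zero_tp = np.zeros(10)              # zero risk premiums (risk-neutral style)
with_tp = 0.001 * (years - 1)       # hypothetical term premium, 10bp per year of maturity

print(expected_short_rates(observed, zero_tp))   # flat at 3%
print(expected_short_rates(observed, with_tp))   # falls by 20bp a year, from 3.0% to 1.2%
```

The function name `expected_short_rates` is hypothetical. Any term premium that rises with maturity pulls the implied expected path below the forward curve, which is the effect described above.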

Mr Jakhria: The other way to think about a term premium would be as a risk premium. So, in an arbitrage-free model you do not have any risk premia and all your assets earn the same as a risk-free asset.

This is a risk-neutral model. That is forced by the arbitrage-free condition. In the real-world model you are allowed to have a trade-off between risk and return: in exchange for taking on a specific risk, be it asset-volatility risk or term risk, you receive a set of premia.
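One standard way to write down this distinction, assuming for illustration a single asset following geometric Brownian motion with volatility σ and a constant risk-free rate r (not the specification of any particular ESG), is as a change of drift between the real-world measure P and the risk-neutral measure Q, with λ denoting the market price of risk:

```latex
\begin{aligned}
\text{Real world } (\mathbb{P}):\quad & dS_t = \mu S_t\,dt + \sigma S_t\,dW_t^{\mathbb{P}}, \qquad \mu = r + \lambda\sigma,\\
\text{Risk neutral } (\mathbb{Q}):\quad & dS_t = r S_t\,dt + \sigma S_t\,dW_t^{\mathbb{Q}}, \qquad dW_t^{\mathbb{Q}} = dW_t^{\mathbb{P}} + \lambda\,dt.
\end{aligned}
```

The volatility is the same under both measures; the premium λσ appears only in the real-world drift, and setting λ = 0 recovers the risk-neutral dynamics.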

Just a quick follow-up to your question on term. I would second Andrew (Smith)’s point that, even within equity options, you do get different prices from different banks. Clearly, the owner of the ESG will need to make some judgement as to how far they rely on the information from the banks and how far they blend it with their own views.

Mr M. H. D. Kemp, F.I.A.: It seems to me that the best way to think about ESGs is that they are trying to solve two different problems at the same time. The first is to place a fair or market consistent value on some kind of payoff, and this is the actuarial approach. That approach borrows a lot of ideas from elsewhere in the financial world, particularly banking. The second problem that these ESGs are trying to solve is: how do we take decisions if we have an investment view? The first is agnostic about investment views and the second is not. The real-world models are trying to do the latter. I think a number of the historical shifts that you have described are a consequence of greater and greater focus on those two different areas.

You raised the question of whether the shift of pension fund assets towards equities was a result of the developments in ESGs. My recollection is that the shift towards equities was, in part, an investment view that the pension fund industry took collectively. I suspect some of the changes that came through in ESGs were, in part, a reflection of that. You are positing that the entire cause of the shift to equities was down to refinements in ESGs, and I would like to suggest that, perhaps, some of the causality ran the other way.

Mr D. Gott, F.F.A.: For me, personally, your paper was a little bit of a nostalgic blast from the past because, in the early 2000s, I chaired a subcommittee of the South African profession which developed professional guidance on the use of stochastic models, broadly 3 or 4 years behind the developments in the United Kingdom. What I wanted to reflect on is the use of real-world models. Two points stand out for me. The first is that, with both the time series models and the VaR models, there is a temptation to fit models to the data, either by fitting the time series or by fitting a distribution to realisations over a 1-year horizon. To the extent that we do that using maximum likelihood, the method of moments or whatever techniques are available to us, we might fail to recognise that historical experience is just one of many possible pasts that could have happened. That may cause us to underestimate future uncertainty.
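Parameter uncertainty is one concrete channel for this point, and it can be sketched in Python. The sketch assumes, for illustration only, independent normal annual log-returns with a known volatility σ, where only the mean is fitted by maximum likelihood (that is, the sample mean) to n years of history. A plug-in forecast of the H-year cumulative return treats the fitted mean as exact, giving variance Hσ²; allowing for the fact that the history is one draw among many possible pasts adds H²σ²/n. The function names and parameter values are hypothetical, and the Monte Carlo routine simply checks the formula by re-drawing history many times.

```python
import numpy as np

def forecast_sd(sigma, n_obs, horizon, parameter_uncertainty=True):
    """SD of the forecast error of an H-year cumulative log-return when the
    mean is estimated from n_obs years of iid N(mu, sigma^2) data
    (sigma assumed known)."""
    process_var = horizon * sigma**2          # randomness of the future path
    param_var = horizon**2 * sigma**2 / n_obs if parameter_uncertainty else 0.0
    return np.sqrt(process_var + param_var)

def simulated_forecast_sd(mu, sigma, n_obs, horizon, n_sims=50_000, seed=1):
    """Monte Carlo check: each simulation draws an alternative 'past', fits the
    mean by maximum likelihood (the sample mean) and compares the plug-in
    forecast with a future drawn independently from the true model."""
    rng = np.random.default_rng(seed)
    past = rng.normal(mu, sigma, size=(n_sims, n_obs))
    future = rng.normal(mu, sigma, size=(n_sims, horizon)).sum(axis=1)
    mu_hat = past.mean(axis=1)
    errors = future - horizon * mu_hat
    return errors.std()

plug_in = forecast_sd(0.2, 50, 20, parameter_uncertainty=False)
full = forecast_sd(0.2, 50, 20)
mc = simulated_forecast_sd(0.05, 0.2, 50, 20)
print(round(plug_in, 3), round(full, 3), round(mc, 3))
```

With 50 years of data and a 20-year horizon, the plug-in standard deviation is about 0.89 against about 1.06 once parameter uncertainty is included, so in this stylised setting the fitted model understates the spread by roughly 15%.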

So, perhaps, at the other extreme, a very prudent approach would be to consider the most conservative statistical model that would not be invalidated by historical data. We could then look somewhere between the two extremes: fitting exactly to the historical data, or taking that most conservative view.

A second comment, which applies particularly to the VaR models, is that we should look not just at the probability but at the range of outcomes. When focussing on 1 in 100, 1 in 200 or whatever VaR cut-off point we have, I think we can lose sight of how badly things can really go. If we underestimate the probability of extreme events, or events we did not account for do happen, we may be ill-equipped or unprepared for their financial consequences. So, we should be aware not only of the probability of the outcomes but also of their impact on the business.
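Looking beyond the VaR cut-off can be illustrated with expected shortfall, one common tail measure (not one the speaker names), which averages the losses beyond the VaR point. The Python sketch below uses hypothetical loss figures for two portfolios over 1,000 equally likely scenarios and one simple empirical convention (VaR taken as the m-th worst loss, where m = n(1 - alpha)); other conventions exist. Both portfolios have the same 99.5% VaR but very different consequences when things go badly.

```python
import numpy as np

def var_and_expected_shortfall(losses, alpha=0.995):
    """Empirical VaR and expected shortfall at confidence level alpha.

    Convention (one of several): with n scenarios, the tail is the
    m = n * (1 - alpha) worst losses; VaR is the smallest loss in that
    tail and expected shortfall is the average loss in it."""
    worst_first = np.sort(np.asarray(losses, dtype=float))[::-1]
    m = max(1, int(round(len(worst_first) * (1 - alpha))))
    tail = worst_first[:m]
    return tail[-1], tail.mean()

# Hypothetical losses: 995 ordinary scenarios shared by both portfolios...
body = np.linspace(-50.0, 50.0, 995)
# ...plus five tail scenarios that differ only beyond the VaR point
portfolio_a = np.concatenate([body, [100.0, 101.0, 102.0, 103.0, 104.0]])
portfolio_b = np.concatenate([body, [100.0, 150.0, 300.0, 500.0, 1000.0]])

for name, losses in [("A", portfolio_a), ("B", portfolio_b)]:
    var, es = var_and_expected_shortfall(losses)
    print(name, var, es)
```

Both portfolios report a 99.5% VaR of 100, but the expected shortfall is 102 for A and 410 for B: the VaR figure alone says nothing about how bad the tail outcomes are.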

The Chairman: I think we sometimes lose sight of what this is all about. Ultimately, we are concerned with the ability of financial institutions, of whatever shape or size, to pay money to members of the public as and when they expect to receive the payments. Very many of the developments we have seen over the last few years have been focussed on developing more and more complicated point balance sheet tests. Our predecessors used all sorts of methods of valuation which were actually less concerned with point estimates than with the emergence of surpluses, appropriate funding and so forth. Have we gone too far the other way, so that we are absolutely obsessed with what the balance sheet numbers will be in 12 months’ time and not concerned at all about whether they really reflect 10-, 20- or 30-year liabilities?

Mr White: It is difficult to say “No” in answer to your question. Something that I think we should understand quite well, as a profession, is the way in which institutions interact. If a bank lends to another bank and is allowed to take full credit for that lending, you eventually get an unstable system. I do not think it is just about testing the resilience of companies in isolation; it is about testing all sorts of things that then have domino effects, and how resilient we are to those domino effects. I think the answer is: hardly at all.

The Chairman: Indeed most of the systems we seem to create as a society probably magnify those domino effects rather than mute them.

Mr Kemp: Another perspective on this would be to examine what institutions actually do with their funds and how they run their business models. Over the last 20 or 30 years institutions have typically de-risked, which reflects some of the thoughts that you have just highlighted. They have moved away from holding the risk themselves but, of course, equity markets have continued to grow over that period. So, the risk has not disappeared; it has shifted into individual hands, perhaps through unitised vehicles. There has been a shift of risk away from the institution towards the individual. Whether that is good or bad is, I think, a different topic.

Mr Jakhria: I would like to comment on the Chairman’s earlier question on whether we are focussed too much on the short-term distribution at the expense of longer-dated liabilities. I would say that is captured, to some extent, by Malcolm (Kemp)’s description of the two main uses for ESGs, one of which is a business use: the need to make decisions in the context of long-dated liabilities. You try your best to understand the state of capital markets and where you might end up in 10, 15 or 20 years. There have been some short-term diversions where the regulations have required companies to focus on a very specific part of the distribution, namely the short-dated one. So, the regulations have definitely made an impact. The question is, once that has played out and the Solvency II models have stabilised, will companies look up from the 1-year view and go back to thinking about how to make business decisions? I very much hope that, for the majority of companies, the answer is “Yes”, so that they go back to the fundamental nature of the business and link it to the modelling.

The Chairman: Yes, the reason I raised that idea was that I think it is a partial answer to something that Andrew (Smith) said earlier about the shift away from the time series models. The universal adoption of the 1-year VaR, and similar measures, has shifted focus completely through regulatory impact. I think you were correct, Parit (Jakhria), in those last remarks that we somehow need to bring together those ways of thinking.

Mr C. M. Squirrell, F.I.A.: I work for Sciurus Analytics. We have a product called Financial Canvas. We would agree with what Andrew said. We support a lot of companies in delivering ESGs. That can involve looking at capital requirements or taking decisions.

What we have seen, over the last year or so, is a move away from a focus on the impact on short-term measures, towards more interest in long-term measures and what might happen over the long term. So, we see fewer questions around issues like: “What is our one-year VaR? What is our interest rate risk? What is our inflation risk?” We see more focus on issues like: “What is the probability that the members’ benefits will be met in full? What is the probability that the company will fall into trouble, leaving a shortfall that cannot be met, and what would be the impact of that?”

Mr S. J. Jarvis, F.I.A.: I wanted to pick up on something that Parit (Jakhria) was saying around the use of ESGs. Often what you are trying to do is to understand the impact on a business. That involves thinking about the impact of what is going on in the capital markets. It is interesting to think about where some of the models have come from historically. They were much more focussed on activity in the broader economy. The Wilkie model was structured with an emphasis on what was going on in the macroeconomy, which then influences what is going on in capital markets and then feeds through into the client problem. That type of three-level staging is something which is, I think, very interesting. Perhaps this approach has been lost in the development of ESGs in the last few years, where we have focussed entirely on the capital markets and have forgotten what is driving those capital markets, which is the economy.

The economy itself also has an impact on businesses. One thing that I would like to see, and perhaps this is something that you could comment on, is more thought about how we could incorporate models of the broader economy. There has been a lot of work in the economic literature on modelling the economy, and this is, perhaps, something on which the profession should spend more time. For example, we now have Dynamic Stochastic General Equilibrium (DSGE) models. They can be reasonably well calibrated. They typically focus on major features of the economy such as inflation, unemployment or growth. They have very little to say about capital markets. They might produce a short rate or a risk premium, but that is really about all. So, there is nothing like the breadth and subtlety of capital markets modelling that we need for the kinds of things that we do for our clients.

If there is a way of incorporating both economic and financial factors, then, I think, that would enable us to give a much richer analysis for our clients and the ways that their businesses are potentially influenced by the future scenarios that they may be facing.

Mr Jakhria: I think we would definitely agree with you, and the way we tried to characterise it was to consider a way of extending the ESGs so that they take input from the global economic cycle. As you said, this has not been heavily researched in the domain of ESGs and, hence, is a relatively new area. Because the pricing of market instruments is economically agnostic, a lot of the recent focus of the profession has not been on this front. So, our view is that, in the absence of new direction from the regulations, this feels like a natural evolutionary direction in which to go.

Mr White: In response to the last point, we have a small project going on within the actuarial profession in relation to economic models. It is supported by the Research and Thought Leadership Committee. We have hired an academic to help with this, and he has been talking to various actuaries about how they use economic theory, implicitly and explicitly, in their work. What prompted this was a concern that models such as DSGE models really did not reflect reality at all. Inconvenient things, like banks, had been simplified out. There is also the idea that the world really is not in equilibrium, as DSGE models assume; it is always in major flux. This work has been going on for some time, and there will be some public meetings where we will share where we are and also ask for people’s thoughts about where we should go in the future.

There is economic research being sponsored by the Government in all sorts of places to re-think macroeconomics. We do not quite have a seat at that table, but we are involved a little bit and hope to influence that work going forward given what we need as users.

Mr R. Bhatia, F.I.A.: I would like to make a few points about some of the comments that were made earlier. The first is the need for some of this knowledge, and some of these modelling aspects, to be communicated to the general public, because they are being given more responsibility for making investment decisions. I feel that there is a general distrust amongst the public of economic models.

I remember there was a very complex model that came out at the time of the Brexit vote, and it was maligned by the Brexiteers as utter nonsense. I feel that there is a need to educate the general public and, maybe, to come up with simpler models which could be used much more effectively.

The second point I would like to raise is about the use of judgement. I think the regulator has taken the initiative and brought the whole industry with it on the use of judgement. A lot of companies were just using data-driven models, and I think regulators have said that using data-driven models blindly is not allowable. So, the use of judgement has become much more prominent in models now, and this will also be the situation going forward.

The Chairman: Yes, though the example you chose is not going to do anything to enhance the general public’s acceptance of economic models, because the models were not exactly right, were they? Whether the models are easy or difficult to explain, public scepticism will be high.

Mr P. Fulcher, F.I.A.: In respect of Brexit modelling, the mistake that was made was trying to provide a point estimate. Someone made a comment earlier about simplifying things into a single message. A number was put out there because they thought people could understand a Gross Domestic Product hit of X. Now, I have seen people put it another way: “Well, you know, I cannot tell you how much you are going to weigh in 20 years’ time. I can tell you that if you exercise regularly and eat healthy food, you will probably weigh less than if you do not exercise regularly and eat beef burgers”. It is not a failure of my prediction if I cannot tell you exactly what you are going to weigh in 10 years’ time or 5 years’ time.

I think there is a difficulty in communicating modelling when it is judged on whether it delivered the right answer. The Brexit example was admittedly a big failure, but I think the point still stands that the public probably wants a number so that they can verify whether you were correct or not. It is probably more helpful to give someone a range of outcomes, but if you give someone a range of outcomes you can never really be proved right or wrong. I am not quite sure how you avoid that problem.

The Chairman: Unfortunately, the general public will see it as something like your model trying to tell them the result of the 3:30 at Newmarket and you coming to the conclusion, well, one of the horses is going to win.

Mr Fulcher: Yes.

The Chairman: Therefore, they will completely reject the whole thing.

Mr Fulcher: Another factor is that if your model told you that the horse that has four legs is more likely to beat the one with three, it does not actually invalidate your model if the one with three somehow wins.

The Chairman: I will now invite Paul Fulcher to speak. Paul is Head of Asset and Liability Management (ALM) Structuring for Nomura International plc. He is responsible for delivering Solvency II, ALM and capital markets solutions to insurers across Europe. He also chairs the Life Research Committee of the Life Board.

Mr Fulcher: First of all, on behalf of the Life Research Committee, I would like to thank the Extreme Events Working Party for yet another very high-quality sessional paper. I think it is very refreshing to read a paper that takes a very technical subject and translates it into something that would be widely understood even amongst the less technical parts of the profession. I think that is particularly critical here, because stochastic modelling is very technical. Ultimately, if we cannot explain it to boards, consumers or the customers of that research, then we have not really achieved our goal. Maybe, as actuaries, we focus on doing the calculation and telling you the answer is 2.7, when it is actually more useful for people to understand how you arrived at that answer, the assumptions you made and the insights you obtained from the modelling.

I think Martin (White) made some very interesting points. He made the point that focussing on long-term business performance rather than short-term price behaviour is something that, as actuaries claiming to make long-term financial projections, we should perhaps be doing. I think it echoes the point Andrew (Smith) made when he used the analogy of the market being a weighing machine, not a voting machine.

I think Stuart (Jarvis) pulled things together nicely with the idea that, maybe, macroeconomic modelling is the way to bridge that gap. The big challenge for us, particularly in the life insurance sector, is understanding the connections between macroeconomic models and capital markets models. The macroeconomics is great, but we do need to understand the capital markets.

As Martin (White) said, there is research going on to try to pull those elements together, and that is research I certainly hope gets a lot of attention from people in the profession. It is probably quite low profile at the moment, so it is incumbent on the research community to publicise that research when it is available and obtain people’s input into it.

Martin (White) also, I think, made a very eloquent plea for socially useful applications of this sort of modelling, such as long-term personal retirement provision and savers taking control. Mr Spain made some very interesting observations on PPOs and, maybe, even hinted at a possible misuse of stochastic modelling to reach a certain conclusion on the Ogden discount rate. That is probably a topic for another discussion, but it was certainly interesting.

I think the other question that came up in the paper and was raised in the audience is whether option pricing and these sorts of financial economic theories are really suitable for modelling long-term guarantees.

Parit (Jakhria) and Andrew (Smith) answered that very well and said some of the things I would have said. The other thing I would draw to people’s attention is that the Equity Release Working Party is about to commission a piece of research. It will investigate a very classic example of a type of guarantee, the no negative equity guarantee (NNEG), that insurance companies and banks increasingly write. It is a very long-term guarantee offered in a market that is very illiquid, where, to the extent there are derivatives or observable prices, they are very short term. We are trying to establish the right theoretical way of valuing those NNEGs.

We had a research working party about 10 years or so ago, the Hosty Working Party, which broadly concluded that you could value them in a completely market consistent way, based on what you can observe, and the answer would be at one end of the spectrum. You could also base the valuation on real-world observations of how often NNEGs have ever cost an insurance company any money. In the real-world case you, pretty much, get an answer of zero. There can be a massive range between the two estimates.

That was a useful piece of research, but it does not seem to give the right answer. I would say the answer can be anywhere from concluding that you should not provision for these guarantees all the way to concluding that you should not be writing these products, which is, basically, the conclusion reached by the Working Party. So, we are trying to narrow that gap. Although this work is focussed on equity release, it might have wider application. The point Malcolm (Kemp) drew out and, I think, the paper also draws out, is that some of the changing model dynamics over time have really been because we are answering different questions over time.

Particularly in the life sector at the moment, there are certainly two questions and, I would argue, maybe three. Are we just trying to measure the fair value of a liability, taking an agnostic view, as Malcolm (Kemp) put it? Are we trying to make long-term investment decisions in the presence of an investment view? Then we need to consider capital models and Solvency II, the whole 1-in-200-year VaR question, which has almost been imposed on us. The models that suit those different purposes are not necessarily the same.

Some of the controversies and discussions arise because we are trying to answer more than one question, and maybe one model cannot address all of them. This is a life-focussed paper, and I think it is interesting to look at the pensions sector. In one sense, the pensions sector is less constrained by regulation and, therefore, arguably more able to take the long-term view. That itself has led to controversy. Mr Exley, Mr Smith and Mr Mehta wrote a very famous paper 21 years ago that argued for applying option pricing to pension guarantees and led to some very important changes to pension management (Exley et al., 1997). Maybe that pendulum, certainly in the life sector, has gone too far, though, in terms of using market consistent approaches and not looking at the long term. So, it is, perhaps, interesting to understand what the life sector can learn from the pensions sector and vice versa, because I think they have gone down rather different routes.

I think I would agree with Malcolm (Kemp) on the causality point. I would say the Wilkie model probably enshrined beliefs that actuaries probably had, dating from the 1970s and even the 1960s that equities were a better long-term match. In a way, the Wilkie model was a stochastic model that gave you the answer you initially expected. So, I think, probably, the equity allocations led the modelling rather than the other way round.

To conclude, I think this was an excellent paper and a very good discussion. Thank you for that. I would certainly like to thank both the working party and the interviewees. There are a lot of interviewees named in the paper who gave their very valuable time to give the working party the input. I think we should be delighted at what has resulted.

The Chairman: Thank you. To some extent, you could see the evolution and development of the models themselves as something of a stochastic process, with intervening events such as regulatory change or crises leading to changes in the way models are developed. I think the great thing that this paper does is to stop the clock for a moment and allow people to look at what we are doing and why we are doing it. For that reason, as well as for the excellence and approachability of the paper that others have already spoken about, I would like to thank the authors for the work they have done and for their contributions to the meeting, Paul (Fulcher) for summarising the discussion so well, and everybody in the audience who has participated in what I think has been a very interesting and stimulating discussion.