1. Introduction

Although turbulence is a high-dimensional chaotic system, it is often modelled as a collection of compact and approximately autonomous coherent structures. These are typically intermittent, emerging and vanishing with a lifetime and frequency that depend on their nature and size, and are characterised both by evolving relatively independently from their flow environment, and by having a measurable influence on the rest of the flow (Jiménez Reference Jiménez2018a). As such, it is important to clarify not only how they behave individually, but how are they connected among themselves in space and in time.

Such causal connections would help us to understand how turbulence works, both from the fundamental point view and in practical applications connected with flow control and prediction. For example, it is important to avoid introducing in the initial conditions of numerical weather forecasting spurious perturbations that would later amplify significantly (Rodwell & Wernli Reference Rodwell and Wernli2023), and identifying such highly influential events would help us to improve prediction accuracy. Another example is flow control, which intrinsically tries to modify the future of the flow by altering its present state. Understanding which structures are causally important and which have no significant effect in the evolution of the flow would clearly help in optimising this process.

Conversely, elucidating the connections between different flow regions, not necessarily initially identified as coherent, may lead to the discovery of novel coherent structures that describe turbulence better than the known ones, or to previously overlooked connections between known structures that can be incorporated into better flow models (Jiménez Reference Jiménez2020b; Jiménez Reference Jiménez2023).

For example, quasi-streamwise rollers, streamwise-velocity streaks and wall-normal velocity bursts are believed to be essential for maintaining wall-bounded turbulence. The most common hypothesis is that there is a self-sustaining process (SSP) in which at least two of these structures mutually induce each other (Jiménez & Moin Reference Jiménez and Moin1991; Hamilton, Kim & Waleffe Reference Hamilton, Kim and Waleffe1995; Waleffe Reference Waleffe1997), but the details are incomplete. For example, recent evidence suggests that bursts are able to sustain a cycle by themselves (Jiménez Reference Jiménez2018a), while streaks are byproducts rather than actors in the SSP (Jiménez Reference Jiménez2022). Even apparently straightforward connections, such as the generation of the streaks by bursts (Kim, Kline & Reynolds Reference Kim, Kline and Reynolds1971), are only incompletely understood, because the two phenomena have very different length scales (Jiménez Reference Jiménez2018a). Establishing the causality relations between these different structures would throw light on whether they are indeed connected, on the sequence in which they are linked, and on whether some component is missing from the model.

With the goal of minimising bias, our strategy is to exclusively characterise flow regions in terms of their influence on the future of the flow, without necessarily relating them to previously known coherent structures. Only once a particular flow template has been identified as highly causal or as especially irrelevant will we try to classify it within existing theories, or to recognise it as something new.

There are two general approaches to causality. The first is observational and non-intrusive, and is often the only option when the system is hard to replicate (e.g. astrophysics), difficult to experiment with (e.g. some social sciences), or simply too large to simulate easily. Unfortunately, it is generally believed that observation is not enough to unambiguously establish cause and effect, because correlation does not imply causation (Granger Reference Granger1969; Pearl Reference Pearl2009), but even in those cases, a careful consideration of the temporal evolution of the system may lead to the identification of causal histories when they cross neighbourhoods of particular interest, typically extreme events (Angrist, Imbens & Rubin Reference Angrist, Imbens and Rubin1996). A related approach is the operator representation of turbulence time series, examples of which are Froyland & Padberg (Reference Froyland and Padberg2009), Kaiser et al. (Reference Kaiser, Noack, Cordier, Spohn, Segond, Abel, Daviller, Osth, Krajnović and Niven2014), Schmid, García-Gutiérrez & Jiménez (Reference Schmid, García-Gutiérrez and Jiménez2018), Brunton, Noack & Koumoutsakos (Reference Brunton, Noack and Koumoutsakos2020), Fernex, Noack & Semaan (Reference Fernex, Noack and Semaan2021), Taira & Nair (Reference Taira and Nair2022), Jiménez (Reference Jiménez2023) and Souza (Reference Souza2023), among others. Another example is the analysis of data series from wall-bounded turbulence by Lozano-Durán, Bae & Encinar (Reference Lozano-Durán, Bae and Encinar2020) using tools of transfer entropy, or the improved version in which their applicability to subgrid modelling and flow control was demonstrated by Lozano-Durán & Arranz (Reference Lozano-Durán and Arranz2022).

The alternative is interventional causality, in which the system is modified directly and the consequences observed. This offers more control over what is being analysed, and safer inferences (Pearl Reference Pearl2009), but presumes a sufficiently cheap way of modifying the system. Essentially, in dynamical system notation, non-interventional methods provide information about the behaviour of the system while it moves within its attractor, while interventional ones give additional information about the system by observing what happens outside it.

Turbulence, which is expensive to simulate and hard to modify experimentally, was for a long time considered to be in the group of phenomena that could be only observed, but the increased speed of computers, as well as better experimental techniques, slowly eroded that difficulty (Jiménez & Moin Reference Jiménez and Moin1991; Jiménez & Pinelli Reference Jiménez and Pinelli1999). More recently, fast graphics processing units (GPUs) speeded up the numerical simulation of realistic turbulent flows to the point of allowing the practical simulation of artificially modified flow ensembles that can be considered interventional (Vela-Martín & Jiménez Reference Vela-Martín and Jiménez2021). They have opened the possibility of Monte Carlo studies in which the consequences of ‘randomly’ modified flows are examined.

Examples of this approach are Jiménez (Reference Jiménez2018b, Reference Jiménez2020b), who introduced localised perturbations in two-dimensional turbulence in order to determine which parts of the flow result in significant perturbation growth or decay after a certain time. This allowed the identification of causally significant and irrelevant flow structures, including the relatively unexpected relevance of vortex dipoles rather than individual vortices, and eventually led to new models for the two-dimensional energy cascade (Jiménez Reference Jiménez2021). Encinar & Jiménez (Reference Encinar and Jiménez2023) extended the technique to three-dimensional homogeneous isotropic turbulence, and demonstrated that causal events are in that case characterised by either high kinetic energy or high dissipation rate, depending on the spatial scale of the initial perturbation, and that strong strain, rather than high vorticity, is the main prerequisite for perturbation growth. In these two cases, it is interesting that some of the significant structures were not the classically expected ones, underlining the ability of Monte Carlo interventional experiments to mitigate the bias of conventional wisdom.

In this study, we adopt the interventional approach, following the basic methodology in Jiménez (Reference Jiménez2018b). Spatially localised perturbations are imposed on a fully developed turbulent channel flow, and their influence is measured by their ability to alter the future evolution of the flow.

Numerical experiments that track the development of perturbation ensembles in wall turbulence are not new, probably starting with the computation by Keefe, Moin & Kim (Reference Keefe, Moin and Kim1992) of the Lyapunov spectrum in a low-Reynolds-number channel. On a similar subject, Nikitin (Reference Nikitin2008, Reference Nikitin2018) investigated the Reynolds number scaling of the leading Lyapunov exponent of a turbulent channel. Lyapunov analysis does not typically control the form of the initial perturbation, leaving the system to choose the most unstable direction in state space, and taking precautions to avoid nonlinearities, but Cherubini et al. (Reference Cherubini, Robinet, Bottaro and DE Palma2010) and Farano et al. (Reference Farano, Cherubini, Robinet and De Palma2017) turned the problem around by searching for weakly or fully nonlinear perturbations that optimally grow in energy after a given target time. They worked on an initially stationary flow with a turbulent profile, and when they constrain the initial energy of the perturbation, they obtain optimals that are localised in physical space. More recently, Ciola et al. (Reference Ciola, De Palma, Robinet and Cherubini2023) extended the analysis to snapshots of real turbulence, finding that for properly chosen target times, the optimal precursor is an early stage of an Orr burst. However, due to the difficulty of convergence over long times, their results apply only to short delays of the order of a few tens of viscous units. Moreover, the solution is optimal only for the snapshot at which it is applied, making it difficult to generalise the result.

The choice of the size of the initial perturbations is important, and data assimilation experiments have been conducted to estimate the minimum size below which perturbations are enslaved to their environment. The first was probably by Yoshida, Yamaguchi & Kaneda (Reference Yoshida, Yamaguchi and Kaneda2005) in isotropic three-dimensional turbulence, who showed that randomised scales smaller than 30 Kolmogorov viscous lengths (Kolmogorov Reference Kolmogorov1941) are regenerated if continuously assimilated to larger structures. Wang & Zaki (Reference Wang and Zaki2022) conducted similar experiments in channel turbulence. They replaced some layers with white noise, and showed how they synchronised with the original flow when assimilated through their boundaries. The maximum synchronisation thickness is approximately 30 viscous lengths (approximately 12 Kolmogorov units) for layers attached to the wall, and twice the Taylor microscale for layers away from it. However, since Encinar & Jiménez (Reference Encinar and Jiménez2023) found that freely evolving perturbations grow even below the assimilation limit, the result in wall-bounded turbulence remains uncertain.

The present study targets the nonlinear evolution of localised perturbations applied to instantaneous snapshots of turbulent channels at a moderate but non-trivial Reynolds number, over times of the order of an eddy turnover. A Monte Carlo search is used to apply the analysis across snapshots, and across as many combinations of perturbation location, size and target time as is practicable. The basic assumption is that causality depends of the local state of the neighbourhood at which the perturbation is applied, and the details of this dependence are extracted from the database of numerical experiments using standard methods of data analysis.

The organisation of the paper is as follows. The numerical set-up and the definition of the initial perturbations are described in § 2. How their evolution can be used to determine causality is discussed in §§ 3 and 4, and the relation between causal structures and the surrounding flow field is discussed in § 5. Conclusions are offered in § 6.

2. Numerical set-up

To save computational resources, we analyse simulations of a pressure-driven turbulent open channel flow in a doubly periodic domain, between a no-slip wall at ![]() $y=0$ and an impermeable free-slip wall at

$y=0$ and an impermeable free-slip wall at ![]() $y=h$. The streamwise, wall-normal and spanwise directions are

$y=h$. The streamwise, wall-normal and spanwise directions are ![]() $x$,

$x$, ![]() $y$ and

$y$ and ![]() $z$, respectively, and the corresponding velocities are

$z$, respectively, and the corresponding velocities are ![]() $u$,

$u$, ![]() $v$ and

$v$ and ![]() $w$, although position and velocities are occasionally denoted by their components

$w$, although position and velocities are occasionally denoted by their components ![]() $\boldsymbol {x}=\{x_j,\ j=1,2,3\}$. The domain size is

$\boldsymbol {x}=\{x_j,\ j=1,2,3\}$. The domain size is ![]() $L_x\times L_y\times L_z={\rm \pi} h \times h \times {\rm \pi}h$, and the Reynolds number is

$L_x\times L_y\times L_z={\rm \pi} h \times h \times {\rm \pi}h$, and the Reynolds number is ![]() $Re_\tau =u_\tau h/\nu =600.9$. The ‘

$Re_\tau =u_\tau h/\nu =600.9$. The ‘![]() $+$’ superscript denotes wall units, normalised with the friction velocity

$+$’ superscript denotes wall units, normalised with the friction velocity ![]() $u_\tau$ and with the kinematic viscosity

$u_\tau$ and with the kinematic viscosity ![]() $\nu$. Capital letters, as in

$\nu$. Capital letters, as in ![]() $U(y)$, denote variables averaged over the simulation ensemble and over wall-parallel planes; lowercase letters are fluctuations with respect to this average, and primes are root-mean-square fluctuation intensities. Repeated indices, including squares, imply summations unless noted otherwise. The simulation code is standard dealiased Fourier spectral along

$U(y)$, denote variables averaged over the simulation ensemble and over wall-parallel planes; lowercase letters are fluctuations with respect to this average, and primes are root-mean-square fluctuation intensities. Repeated indices, including squares, imply summations unless noted otherwise. The simulation code is standard dealiased Fourier spectral along ![]() $x$ and

$x$ and ![]() $z$, as in Kim, Moin & Moser (Reference Kim, Moin and Moser1987), but uses seven-points-stencil compact finite differences for the wall-normal derivatives, as in Hoyas & Jiménez (Reference Hoyas and Jiménez2006). Time marching is semi-implicit third-order Runge–Kutta (Spalart, Moser & Rogers Reference Spalart, Moser and Rogers1991), and the mass flux is kept constant. The numerical

$z$, as in Kim, Moin & Moser (Reference Kim, Moin and Moser1987), but uses seven-points-stencil compact finite differences for the wall-normal derivatives, as in Hoyas & Jiménez (Reference Hoyas and Jiménez2006). Time marching is semi-implicit third-order Runge–Kutta (Spalart, Moser & Rogers Reference Spalart, Moser and Rogers1991), and the mass flux is kept constant. The numerical ![]() $y$ grid is stretched at the no-slip wall with a hyperbolic tangent. See table 1 for other numerical parameters.

$y$ grid is stretched at the no-slip wall with a hyperbolic tangent. See table 1 for other numerical parameters.

Table 1. Computational parameters: ![]() $L_{i}$ is the domain size along the

$L_{i}$ is the domain size along the ![]() $i$th direction,

$i$th direction, ![]() $h$ is the ‘half-channel’ height, equivalent to the domain height in open channels, and

$h$ is the ‘half-channel’ height, equivalent to the domain height in open channels, and ![]() $U_b$ is the bulk velocity. The grid dimensions

$U_b$ is the bulk velocity. The grid dimensions ![]() $N_i$ and effective resolutions

$N_i$ and effective resolutions ![]() $\Delta {x_i}$ are expressed in terms of Fourier modes.

$\Delta {x_i}$ are expressed in terms of Fourier modes.

Figure 1(a) compares the resulting fluctuation profiles with existing data from regular and open channels. It was shown by Lozano-Durán & Jiménez (Reference Lozano-Durán and Jiménez2014a) that a computational box with ![]() $L_z/h={\rm \pi}$ reproduces well the statistics of regular channels, and figure 1 shows that the same is true for open ones. In particular, figure 1(b) shows that the spanwise kinetic energy spectrum fits well within the computational box. On the other hand, the figure shows that open and full channels agree only below

$L_z/h={\rm \pi}$ reproduces well the statistics of regular channels, and figure 1 shows that the same is true for open ones. In particular, figure 1(b) shows that the spanwise kinetic energy spectrum fits well within the computational box. On the other hand, the figure shows that open and full channels agree only below ![]() $y/h\approx 0.5$, above which the effect of ‘splatting’ at the top wall is particularly visible in the fluctuations of the cross-flow velocities (Perot & Moin Reference Perot and Moin1995). We will use only the range

$y/h\approx 0.5$, above which the effect of ‘splatting’ at the top wall is particularly visible in the fluctuations of the cross-flow velocities (Perot & Moin Reference Perot and Moin1995). We will use only the range ![]() $y^+\lesssim 300$ for the rest of the paper. It is also clear from the figure that the energy at long wavelengths above

$y^+\lesssim 300$ for the rest of the paper. It is also clear from the figure that the energy at long wavelengths above ![]() $y^+=100$ is higher than in del Álamo & Jiménez (Reference del Álamo and Jiménez2003). This is due to the short computational box, which inhibits the instability of the streaks (Abe, Antonia & Toh Reference Abe, Antonia and Toh2018), and results in two pairs of large streamwise streaks and rollers that dominate the flow.

$y^+=100$ is higher than in del Álamo & Jiménez (Reference del Álamo and Jiménez2003). This is due to the short computational box, which inhibits the instability of the streaks (Abe, Antonia & Toh Reference Abe, Antonia and Toh2018), and results in two pairs of large streamwise streaks and rollers that dominate the flow.

Figure 1. (a) Velocity fluctuation intensities. Symbols represent the open channel at ![]() $Re_\tau =601$; dashed lines represent the open channel at

$Re_\tau =601$; dashed lines represent the open channel at ![]() $Re_\tau =541$ (Pirozzoli Reference Pirozzoli2023); and solid lines represent the full channel at

$Re_\tau =541$ (Pirozzoli Reference Pirozzoli2023); and solid lines represent the full channel at ![]() $Re_\tau =547$ (del Álamo & Jiménez Reference del Álamo and Jiménez2003). Black indicates

$Re_\tau =547$ (del Álamo & Jiménez Reference del Álamo and Jiménez2003). Black indicates ![]() $u'$, red indicates

$u'$, red indicates ![]() $v'$, and blue indicates

$v'$, and blue indicates ![]() $w'$. (b) Premultiplied spanwise spectrum of the turbulent kinetic energy, normalised with

$w'$. (b) Premultiplied spanwise spectrum of the turbulent kinetic energy, normalised with ![]() $u_\tau ^2$. Contours are logarithmically equispaced from

$u_\tau ^2$. Contours are logarithmically equispaced from ![]() $k_zE_{KK}/u_\tau ^2=0.56$–2.8. The heavier vertical line is the present computational box. The thinner vertical line is

$k_zE_{KK}/u_\tau ^2=0.56$–2.8. The heavier vertical line is the present computational box. The thinner vertical line is ![]() $\lambda _z=2{\rm \pi} /k_z=h$, and the horizontal line is

$\lambda _z=2{\rm \pi} /k_z=h$, and the horizontal line is ![]() $y^+=300$. Filled contours are the present simulation; lines are from del Álamo & Jiménez (Reference del Álamo and Jiménez2003).

$y^+=300$. Filled contours are the present simulation; lines are from del Álamo & Jiménez (Reference del Álamo and Jiménez2003).

The original code was ported to CUDA by Vela-Martín et al. (Reference Vela-Martín, Encinar, García-Gutiérrez and Jiménez2021) for the efficient simulation of high-Reynolds number channel turbulence on GPU clusters. It has been adapted to a single GPU for the present experiments, but the original reference should be consulted for full details.

2.1. The initial perturbations

As mentioned in the Introduction, the interventional identification of causality follows (Jiménez Reference Jiménez2018b, Reference Jiménez2020b). The idea is to apply a spatially localised initial perturbation to existing turbulence, after which the flow is allowed to develop freely (see figure 2). The effect is measured after some time. Unlike the sensitivity analysis of the mean velocity profile in Farano et al. (Reference Farano, Cherubini, Robinet and De Palma2017), each causality experiment is the response to a particular perturbation on a particular location of a given flow snapshot, and the numerical experiment has to be repeated many times for different snapshots and perturbations. The goal is to create a database of responses from which to extract the characteristics that make a particular flow location influential for the future behaviour of turbulence (i.e. causally significant). To ensure independence, the 40 initial reference snapshots used for our experiments are separated by at least 1.4 turnovers (defined as ![]() $h/u_\tau$).

$h/u_\tau$).

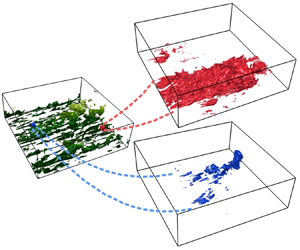

Figure 2. Schematic of the numerical experiment. Green represents the isosurface of the turbulent kinetic energy for the reference flow at ![]() $t=0$,

$t=0$, ![]() $|\boldsymbol {u}_{\it ref}|^+=4.5$. Colour intensity encodes the distance from the wall. Red represents the perturbation kinetic energy at some later time,

$|\boldsymbol {u}_{\it ref}|^+=4.5$. Colour intensity encodes the distance from the wall. Red represents the perturbation kinetic energy at some later time, ![]() $|\boldsymbol {u}_{\it ref}(T)-\boldsymbol {u}_{\it mod}(T)|^+=0.17$, for a causally significant perturbation. Blue represents the same for a causally irrelevant perturbation.

$|\boldsymbol {u}_{\it ref}(T)-\boldsymbol {u}_{\it mod}(T)|^+=0.17$, for a causally significant perturbation. Blue represents the same for a causally irrelevant perturbation.

Perturbations modify the flow within a cubical cell of side ![]() $l_{cell}$ centred at

$l_{cell}$ centred at ![]() $\boldsymbol {x}_c$. Although there are countless choices for the form of the disturbance, and even if experience shows that the manner in which the flow is disturbed influences the outcome of the experiment (Jiménez Reference Jiménez2020b; Encinar & Jiménez Reference Encinar and Jiménez2023), cost considerations limit us to a single perturbation scheme. Specifically, the flow is modified by removing the velocity fluctuations within the cell, overwriting the velocity field with its

$\boldsymbol {x}_c$. Although there are countless choices for the form of the disturbance, and even if experience shows that the manner in which the flow is disturbed influences the outcome of the experiment (Jiménez Reference Jiménez2020b; Encinar & Jiménez Reference Encinar and Jiménez2023), cost considerations limit us to a single perturbation scheme. Specifically, the flow is modified by removing the velocity fluctuations within the cell, overwriting the velocity field with its ![]() $y$-dependent cell average. Defining the

$y$-dependent cell average. Defining the ![]() $y$-dependent cell average of a variable

$y$-dependent cell average of a variable ![]() $f$ as

$f$ as

\begin{equation} \overline{f}^{c}(y)=l_{cell}^{{-}2} \int^{x_c+l_{cell}/2}_{x_c-l_{cell}/2} \int^{z_c+l_{cell}/2}_{z_c-l_{cell}/2}{f(x,y,z)\,{\rm d}{\kern0.8pt}x\,{\rm d} z}, \end{equation}

\begin{equation} \overline{f}^{c}(y)=l_{cell}^{{-}2} \int^{x_c+l_{cell}/2}_{x_c-l_{cell}/2} \int^{z_c+l_{cell}/2}_{z_c-l_{cell}/2}{f(x,y,z)\,{\rm d}{\kern0.8pt}x\,{\rm d} z}, \end{equation}

the perturbed velocity ![]() $\boldsymbol {u}_{\it mod}$ is

$\boldsymbol {u}_{\it mod}$ is

\begin{equation} \boldsymbol{u}_{\it mod} = \begin{cases} \overline{\boldsymbol{u}_{\it ref}}^{c} & \textrm{when}\ |x_j-x_{cj}|\leq l_{cell}/2,\\ \boldsymbol{u} _{\it ref} & \textrm{otherwise}, \end{cases} \end{equation}

\begin{equation} \boldsymbol{u}_{\it mod} = \begin{cases} \overline{\boldsymbol{u}_{\it ref}}^{c} & \textrm{when}\ |x_j-x_{cj}|\leq l_{cell}/2,\\ \boldsymbol{u} _{\it ref} & \textrm{otherwise}, \end{cases} \end{equation}

where ![]() $\boldsymbol {u}_{\it ref}$ is the unperturbed flow, and an extra pressure step is applied after (2.2) to restore continuity at the edges of the cell. The experiment is repeated as many times as possible, applying it to different reference flow fields while changing the location and size of the perturbation cell.

$\boldsymbol {u}_{\it ref}$ is the unperturbed flow, and an extra pressure step is applied after (2.2) to restore continuity at the edges of the cell. The experiment is repeated as many times as possible, applying it to different reference flow fields while changing the location and size of the perturbation cell.

Table 2 summarises the parameters of the experimental cells. They are expressed in terms of the distance from the wall to the bottom of the cell, ![]() $y_{cell}= y_c-l_{cell}/2$, which was found to collapse some results better than the cell centre, and are separated into two sets. In the first set, involving the 40 reference snapshots, perturbations are applied to a

$y_{cell}= y_c-l_{cell}/2$, which was found to collapse some results better than the cell centre, and are separated into two sets. In the first set, involving the 40 reference snapshots, perturbations are applied to a ![]() $6\times 6$ grid of cells evenly spaced in

$6\times 6$ grid of cells evenly spaced in ![]() $x$ and

$x$ and ![]() $z$, such that their centres are separated by

$z$, such that their centres are separated by ![]() ${\rm \pi} h/6$ in each direction (approximately 315 wall units). In the

${\rm \pi} h/6$ in each direction (approximately 315 wall units). In the ![]() $y$-direction, perturbations are applied at the heights detailed in the second column of table 2, ranging from cells touching the wall to those centred at the middle of the computational domain,

$y$-direction, perturbations are applied at the heights detailed in the second column of table 2, ranging from cells touching the wall to those centred at the middle of the computational domain, ![]() $y_c^+\approx 300$. Each of them is run for 0.65 turnovers, and consumes approximately 6 minutes in an Nvidia A100 GPU, so that the approximately 76 000 experiments in this set took 318 GPU-days.

$y_c^+\approx 300$. Each of them is run for 0.65 turnovers, and consumes approximately 6 minutes in an Nvidia A100 GPU, so that the approximately 76 000 experiments in this set took 318 GPU-days.

Table 2. Parameters of the perturbation cells. See text for details.

While these experiments test a wide range of sizes at sparsely spaced locations across the flow, the ones in the last column of table 2 aim to build heat maps that explore possible large-scale causality distributions not limited to a single cubical cell. Each reference snapshot is divided into a ![]() $30 \times 30$ grid in the

$30 \times 30$ grid in the ![]() $x$–

$x$–![]() $z$ plane, approximately spaced by 75 wall units, and perturbations are centred at each point of that grid. For cells with

$z$ plane, approximately spaced by 75 wall units, and perturbations are centred at each point of that grid. For cells with ![]() $l_{cell}^+\ge 75$, this procedure uniformly samples the whole plane, but due to its cost, it was limited to 20 initial snapshots and five different heights, each of which ran for only 0.49 turnovers. The resulting 180 000 tests took 565 GPU-days.

$l_{cell}^+\ge 75$, this procedure uniformly samples the whole plane, but due to its cost, it was limited to 20 initial snapshots and five different heights, each of which ran for only 0.49 turnovers. The resulting 180 000 tests took 565 GPU-days.

In both sets of experiments, the temporal evolution of the perturbation is measured by the energy of the perturbation velocity integrated over the whole computational domain,

which evolves from some initial ![]() $\varepsilon _{\boldsymbol {u}} (0)$ at the moment at which the perturbation is applied, to

$\varepsilon _{\boldsymbol {u}} (0)$ at the moment at which the perturbation is applied, to ![]() $\varepsilon _{\boldsymbol {u}}(\infty )=2 K\equiv 2V^{-1} \int |\boldsymbol {u}|^2 \,{\rm d} V$ when the reference and perturbed flow fields decorrelate after a sufficiently long time. For a chaotic system such as turbulence,

$\varepsilon _{\boldsymbol {u}}(\infty )=2 K\equiv 2V^{-1} \int |\boldsymbol {u}|^2 \,{\rm d} V$ when the reference and perturbed flow fields decorrelate after a sufficiently long time. For a chaotic system such as turbulence, ![]() $\varepsilon _{\boldsymbol {u}}(\infty ) \gg \varepsilon _{\boldsymbol {u}}(0)$, and even if the evolution of the perturbation is far from linear over times of the order of a turnover, the perturbation energy typically grows almost exponentially for a while before levelling at

$\varepsilon _{\boldsymbol {u}}(\infty ) \gg \varepsilon _{\boldsymbol {u}}(0)$, and even if the evolution of the perturbation is far from linear over times of the order of a turnover, the perturbation energy typically grows almost exponentially for a while before levelling at ![]() $\varepsilon _{\boldsymbol {u}}(\infty )$. These considerations lead to two definitions of causal significance: an absolute one that disregards the initial perturbation magnitude and vanishes as

$\varepsilon _{\boldsymbol {u}}(\infty )$. These considerations lead to two definitions of causal significance: an absolute one that disregards the initial perturbation magnitude and vanishes as ![]() $t\to \infty$,

$t\to \infty$,

and a relative one,

which measures relative growth and vanishes at ![]() $t=0$. Both definitions typically grow with time, but we will be interested in cases in which the growth is particularly fast or slow, as defined by the top and bottom

$t=0$. Both definitions typically grow with time, but we will be interested in cases in which the growth is particularly fast or slow, as defined by the top and bottom ![]() $\phi$ percentile of the significance distribution. For most of the paper, experiments within the top

$\phi$ percentile of the significance distribution. For most of the paper, experiments within the top ![]() $\phi =10\,\%$ of the significance distribution will be defined as ‘causally significant’, and those in the bottom 10 %, as ‘causally irrelevant’. This fraction is broadly compatible with the percolation analysis often used to define thresholds. For example, the optimal percolation threshold in three-dimensional wall-bounded turbulence fills volume fractions of the order of 5 %–10 % (Jiménez Reference Jiménez2018a), while in two-dimensional vorticity fields, which are more directly comparable with the present application to individual planes, the covered area is closer to 20 %–30 % (Jiménez Reference Jiménez2020a). Tests using

$\phi =10\,\%$ of the significance distribution will be defined as ‘causally significant’, and those in the bottom 10 %, as ‘causally irrelevant’. This fraction is broadly compatible with the percolation analysis often used to define thresholds. For example, the optimal percolation threshold in three-dimensional wall-bounded turbulence fills volume fractions of the order of 5 %–10 % (Jiménez Reference Jiménez2018a), while in two-dimensional vorticity fields, which are more directly comparable with the present application to individual planes, the covered area is closer to 20 %–30 % (Jiménez Reference Jiménez2020a). Tests using ![]() $\phi =5\,\%$ or 15 % showed few differences in the present results.

$\phi =5\,\%$ or 15 % showed few differences in the present results.

3. Temporal evolution of the significance

To further study the growth of the perturbations, we use its ![]() $y$-dependent averaged intensity,

$y$-dependent averaged intensity,

equivalent to (2.3) but integrated over wall-parallel planes instead of over the whole domain, together with corresponding definitions for the significances. To minimise notational clutter, we use for them the same symbols as in (2.3)–(2.5), with the inclusion of ![]() $y$ as a parameter. Figure 3(a) shows the growth of

$y$ as a parameter. Figure 3(a) shows the growth of ![]() $\varepsilon _{\boldsymbol {u}}(y)$, unconditionally averaged over all the perturbations introduced at a particular size and distance from the wall and normalised with its maximum at

$\varepsilon _{\boldsymbol {u}}(y)$, unconditionally averaged over all the perturbations introduced at a particular size and distance from the wall and normalised with its maximum at ![]() $t=0$. The blue line is the position at which the perturbation is maximum. It initially stays at the height at which the perturbation is introduced, but a new peak grows near the wall and becomes dominant after

$t=0$. The blue line is the position at which the perturbation is maximum. It initially stays at the height at which the perturbation is introduced, but a new peak grows near the wall and becomes dominant after ![]() $tu_\tau /h\approx 0.3$. In figure 3(b), where the perturbation is initially attached to the wall, the peak is always attached. During the very early stage of evolution

$tu_\tau /h\approx 0.3$. In figure 3(b), where the perturbation is initially attached to the wall, the peak is always attached. During the very early stage of evolution ![]() $(tu_\tau /h\lesssim 0.05)$, a low-intensity perturbation spanning the whole channel appears in both cases. This is most likely due to the pressure pulse that enforces continuity at the edges of the perturbation cell, but it quickly dissipates and does not seem to influence later development.

$(tu_\tau /h\lesssim 0.05)$, a low-intensity perturbation spanning the whole channel appears in both cases. This is most likely due to the pressure pulse that enforces continuity at the edges of the perturbation cell, but it quickly dissipates and does not seem to influence later development.

Figure 3. Plane-averaged perturbation magnitude ![]() ${\langle \varepsilon _{\boldsymbol {u}}\rangle }(y,t)$, as defined in (3.1), unconditionally averaged over all perturbations with

${\langle \varepsilon _{\boldsymbol {u}}\rangle }(y,t)$, as defined in (3.1), unconditionally averaged over all perturbations with ![]() $l_{cell}^+=75$ introduced at a given height, normalized with its maximum at

$l_{cell}^+=75$ introduced at a given height, normalized with its maximum at ![]() $t=0$. The blue line is the instantaneous position of the perturbation maximum. Contours are

$t=0$. The blue line is the instantaneous position of the perturbation maximum. Contours are ![]() ${\langle \varepsilon _{\boldsymbol {u}}\rangle }(y,t)/\max _y\langle \varepsilon _{\boldsymbol {u}}\rangle (y,0)$. Here, (a)

${\langle \varepsilon _{\boldsymbol {u}}\rangle }(y,t)/\max _y\langle \varepsilon _{\boldsymbol {u}}\rangle (y,0)$. Here, (a) ![]() $y_c^+=300$, (b)

$y_c^+=300$, (b) ![]() $y_{cell}=0$.

$y_{cell}=0$.

Figure 4 shows the attachment time ![]() $t_{att}$, defined for individual tests as the moment when the perturbation maximum falls below

$t_{att}$, defined for individual tests as the moment when the perturbation maximum falls below ![]() $y^+=50$, and later averaged over the experimental ensemble. It is approximately proportional to

$y^+=50$, and later averaged over the experimental ensemble. It is approximately proportional to ![]() $y_{cell}$, at least for

$y_{cell}$, at least for ![]() $y_{cell}^+\gtrsim 50$, with a propagation velocity

$y_{cell}^+\gtrsim 50$, with a propagation velocity ![]() ${\rm d}{\kern0.9pt}y_{cell}/{\rm d} t=1.37u_\tau$. This is faster than the observed vertical advection velocity of coherent features in channels,

${\rm d}{\kern0.9pt}y_{cell}/{\rm d} t=1.37u_\tau$. This is faster than the observed vertical advection velocity of coherent features in channels, ![]() ${\rm d}{\kern0.9pt}y/{\rm d} t\approx \pm u_\tau$ (Lozano-Durán & Jiménez Reference Lozano-Durán and Jiménez2014b), and suggests that the perturbation is not simply advected by the flow, but actively amplified by it. In fact, the production term in the evolution equation for the perturbation energy is proportional to the mean shear (see Appendix A), and the most likely interpretation of figure 4 is that while all perturbations are advected to and from the wall by the background turbulence, those approaching the wall, where the shear is most intense, grow faster than those moving away from it, resulting in a mean downwards migration of the perturbation maximum. Notice, for example, the different slopes of downwards and upwards contours in figure 3. It is also relevant that

${\rm d}{\kern0.9pt}y/{\rm d} t\approx \pm u_\tau$ (Lozano-Durán & Jiménez Reference Lozano-Durán and Jiménez2014b), and suggests that the perturbation is not simply advected by the flow, but actively amplified by it. In fact, the production term in the evolution equation for the perturbation energy is proportional to the mean shear (see Appendix A), and the most likely interpretation of figure 4 is that while all perturbations are advected to and from the wall by the background turbulence, those approaching the wall, where the shear is most intense, grow faster than those moving away from it, resulting in a mean downwards migration of the perturbation maximum. Notice, for example, the different slopes of downwards and upwards contours in figure 3. It is also relevant that ![]() $t_{att}$ scales better with the distance from the wall to the bottom of the cell,

$t_{att}$ scales better with the distance from the wall to the bottom of the cell, ![]() $y_{cell}$, than with its centre,

$y_{cell}$, than with its centre, ![]() $y_c$ (not shown), because it is the bottom that predominantly feels the stronger shear near the wall.

$y_c$ (not shown), because it is the bottom that predominantly feels the stronger shear near the wall.

Figure 4. Attachment time ![]() $t_{att}$ as a function of

$t_{att}$ as a function of ![]() $y_{cell}$, for different

$y_{cell}$, for different ![]() $l_{cell}$. The dashed line is a least-squares fit to the curves with

$l_{cell}$. The dashed line is a least-squares fit to the curves with ![]() $y_{cell}>0$, with slope

$y_{cell}>0$, with slope ![]() ${\rm d}{\kern0.9pt}y/{\rm d} t=1.37u_\tau$. Times are computed for individual tests, and symbols and bars are their averages and standard deviations. Symbols are as in table 2.

${\rm d}{\kern0.9pt}y/{\rm d} t=1.37u_\tau$. Times are computed for individual tests, and symbols and bars are their averages and standard deviations. Symbols are as in table 2.

Figures 5(a,b) show two examples of the temporal evolution of the domain-integrated perturbation ![]() $\varepsilon _{\boldsymbol {u}}$, using different cell sizes, and the line colour is the cell height. The figures show that

$\varepsilon _{\boldsymbol {u}}$, using different cell sizes, and the line colour is the cell height. The figures show that ![]() $\varepsilon _{\boldsymbol {u}}$ is higher for larger cells, which is to be expected since it is an integrated quantity, and also for lower

$\varepsilon _{\boldsymbol {u}}$ is higher for larger cells, which is to be expected since it is an integrated quantity, and also for lower ![]() $y_{cell}$, also expected for a perturbation that removes velocity fluctuations, which are stronger near the wall. More interesting is that cells near the wall grow faster than those away from it, which may be understood as supporting the model in which their growth rate is controlled by the ambient shear.

$y_{cell}$, also expected for a perturbation that removes velocity fluctuations, which are stronger near the wall. More interesting is that cells near the wall grow faster than those away from it, which may be understood as supporting the model in which their growth rate is controlled by the ambient shear.

Figure 5. Temporal development of the unconditionally averaged domain-integrated perturbation ![]() $\varepsilon _{\boldsymbol {u}} (t)$, as defined in (2.3), for (a)

$\varepsilon _{\boldsymbol {u}} (t)$, as defined in (2.3), for (a) ![]() $l_{cell}^+=50$, (b)

$l_{cell}^+=50$, (b) ![]() $l_{cell}^+=100$. In both plots, the cell distance from the wall increases from cold to warm colours, for the cases in table 2, and the diagonal dashed lines are the exponential Lyapunov growth rates from Nikitin (Reference Nikitin2018).

$l_{cell}^+=100$. In both plots, the cell distance from the wall increases from cold to warm colours, for the cases in table 2, and the diagonal dashed lines are the exponential Lyapunov growth rates from Nikitin (Reference Nikitin2018).

The dashed straight lines in figure 5 are the exponential growth rates from the Lyapunov analysis by Nikitin (Reference Nikitin2018), who reports a Lyapunov time for ![]() $\varepsilon _{\boldsymbol {u}}$ (the inverse of the leading exponent) of

$\varepsilon _{\boldsymbol {u}}$ (the inverse of the leading exponent) of ![]() $T_L^+\approx 19$ (

$T_L^+\approx 19$ (![]() $u_\tau T_L/h=0.032$) in a turbulent channel at

$u_\tau T_L/h=0.032$) in a turbulent channel at ![]() $Re_\tau =586$. The leading Lyapunov vector is concentrated in the buffer layer, and the exponent scales in wall units, again consistent with a model in which the growth is controlled by the near-wall shear.

$Re_\tau =586$. The leading Lyapunov vector is concentrated in the buffer layer, and the exponent scales in wall units, again consistent with a model in which the growth is controlled by the near-wall shear.

It is clear that our analysis shares many characteristics with the classical Lyapunov analysis, albeit with important differences. The most obvious is that the classical Lyapunov exponent assumes that the perturbation behaves linearly for an infinitely long time, while figure 5 shows that our experiments saturate for times that, even if much longer than ![]() $T_L$, remain of interest for the flow evolution. A second important difference is that our initial perturbations, which are intended to probe the local structure of the flow rather than its mean properties, are compact with predetermined shapes, while those in Lyapunov analysis are allowed to spread across the flow field to their optimal structure. It may be relevant in this respect that there is an initial transient in which perturbations decay in most of our tests,

$T_L$, remain of interest for the flow evolution. A second important difference is that our initial perturbations, which are intended to probe the local structure of the flow rather than its mean properties, are compact with predetermined shapes, while those in Lyapunov analysis are allowed to spread across the flow field to their optimal structure. It may be relevant in this respect that there is an initial transient in which perturbations decay in most of our tests, ![]() $u_\tau t/h\lesssim 0.1$, and that this period is shorter for cells near the wall. This is reminiscent of the similar transient in Lyapunov calculations, during which perturbations align themselves to the most unstable direction. Our limited range of initial conditions is probably partly compensated by the substitution of the temporal averaging of classical analysis by averaging over tests, and it is interesting that the short-time growth rates of the smallest perturbations in figure 5(a), which mostly sample the buffer layer, approximately agree with Nikitin (Reference Nikitin2018). Larger or higher perturbations, which sample weaker shears, grow more slowly. We will provide in § 5.1 further support for the relevance of local shear to perturbation growth.

$u_\tau t/h\lesssim 0.1$, and that this period is shorter for cells near the wall. This is reminiscent of the similar transient in Lyapunov calculations, during which perturbations align themselves to the most unstable direction. Our limited range of initial conditions is probably partly compensated by the substitution of the temporal averaging of classical analysis by averaging over tests, and it is interesting that the short-time growth rates of the smallest perturbations in figure 5(a), which mostly sample the buffer layer, approximately agree with Nikitin (Reference Nikitin2018). Larger or higher perturbations, which sample weaker shears, grow more slowly. We will provide in § 5.1 further support for the relevance of local shear to perturbation growth.

Figure 6(a) shows a typical evolution of the absolute significance ![]() $\sigma _{\boldsymbol {u}}$ for perturbations with a given

$\sigma _{\boldsymbol {u}}$ for perturbations with a given ![]() $l_{cell}$ and

$l_{cell}$ and ![]() $y_{cell}$. Each of the grey lines is the result of a different experiment, and the red and blue lines are the mean evolutions of samples that are respectively classified as significant or irrelevant at

$y_{cell}$. Each of the grey lines is the result of a different experiment, and the red and blue lines are the mean evolutions of samples that are respectively classified as significant or irrelevant at ![]() $t=0$. The bands are their standard deviations. The figure shows that the perturbations approximately maintain the ordering of their initial intensity. Initially stronger perturbations tend to remain strong for long times, although it follows from its definition that

$t=0$. The bands are their standard deviations. The figure shows that the perturbations approximately maintain the ordering of their initial intensity. Initially stronger perturbations tend to remain strong for long times, although it follows from its definition that ![]() $\sigma _{\boldsymbol {u}}$ vanishes on average as

$\sigma _{\boldsymbol {u}}$ vanishes on average as ![]() $t\to \infty$. Figure 6(b) displays the persistence of the causality classification based of

$t\to \infty$. Figure 6(b) displays the persistence of the causality classification based of ![]() $\sigma _{\boldsymbol {u}}$, defined as the fraction of samples identified as significant or irrelevant at

$\sigma _{\boldsymbol {u}}$, defined as the fraction of samples identified as significant or irrelevant at ![]() $t=0$ that remain significant or irrelevant when classified at subsequent times. In the case illustrated in the figure, 34 % of the initially significant samples, and 29 % of the initially irrelevant ones, remain at the end of our experiments in the same class in which they were classified at

$t=0$ that remain significant or irrelevant when classified at subsequent times. In the case illustrated in the figure, 34 % of the initially significant samples, and 29 % of the initially irrelevant ones, remain at the end of our experiments in the same class in which they were classified at ![]() $t=0$. This fraction is at least 20 % in all the experiments in this paper, which is substantially higher than the 10 % expected from a random selection.

$t=0$. This fraction is at least 20 % in all the experiments in this paper, which is substantially higher than the 10 % expected from a random selection.

Figure 6. (a) Grey lines are the absolute significance ![]() $\sigma _{\boldsymbol {u}}$ (2.4) for individual experiments, as a function of time; only 20 % of the total are included. The red and blue lines and their corresponding bands respectively represent the mean evolution and standard deviation of the samples diagnosed as significant or irrelevant at

$\sigma _{\boldsymbol {u}}$ (2.4) for individual experiments, as a function of time; only 20 % of the total are included. The red and blue lines and their corresponding bands respectively represent the mean evolution and standard deviation of the samples diagnosed as significant or irrelevant at ![]() $t=0$. (b) Fraction of experiments that continue to be classified as significant or irrelevant in terms of

$t=0$. (b) Fraction of experiments that continue to be classified as significant or irrelevant in terms of ![]() $\sigma _{\boldsymbol {u}}$ at different times, after being so classified at

$\sigma _{\boldsymbol {u}}$ at different times, after being so classified at ![]() $t=0$. Red indicates significant; blue indicates irrelevant. The black horizontal line is the probability threshold

$t=0$. Red indicates significant; blue indicates irrelevant. The black horizontal line is the probability threshold ![]() $\phi =10\,\%$. (c) As in (a), for the relative significance

$\phi =10\,\%$. (c) As in (a), for the relative significance ![]() $\sigma _{\boldsymbol {u}r}$ (2.5) diagnosed at

$\sigma _{\boldsymbol {u}r}$ (2.5) diagnosed at ![]() $tu_\tau /h=0.29$. In all cases,

$tu_\tau /h=0.29$. In all cases, ![]() $l_{cell}^+=50$,

$l_{cell}^+=50$, ![]() $y_{cell}^+=125$.

$y_{cell}^+=125$.

Figure 6(c) shows the evolution of the relative significance. Unlike the absolute significance, ![]() $\sigma _{\boldsymbol {u}r}$ vanishes at

$\sigma _{\boldsymbol {u}r}$ vanishes at ![]() $t=0$ but does not reach the same long-time limit in all cases. In fact,

$t=0$ but does not reach the same long-time limit in all cases. In fact, ![]() $\sigma _{\boldsymbol {u}r}(\infty ) = \log _{10} (2K) - \log _{10} \varepsilon _{\boldsymbol {u}}(0)$, and

$\sigma _{\boldsymbol {u}r}(\infty ) = \log _{10} (2K) - \log _{10} \varepsilon _{\boldsymbol {u}}(0)$, and ![]() $\sigma _{\boldsymbol {u}r}(\infty )$ is essentially equivalent to the initial perturbation magnitude.

$\sigma _{\boldsymbol {u}r}(\infty )$ is essentially equivalent to the initial perturbation magnitude.

These considerations show that both ![]() $\sigma _{\boldsymbol {u}}$ and

$\sigma _{\boldsymbol {u}}$ and ![]() $\sigma _{\boldsymbol {u}r}$ characterise the evolution of the perturbations at short and intermediate times. The former mostly reveals that the perturbation intensity stays approximately proportional to its initial value for some time, while the latter, which compensates for this effect, describes its intrinsic growth. When

$\sigma _{\boldsymbol {u}r}$ characterise the evolution of the perturbations at short and intermediate times. The former mostly reveals that the perturbation intensity stays approximately proportional to its initial value for some time, while the latter, which compensates for this effect, describes its intrinsic growth. When ![]() $\varepsilon _{\boldsymbol {u}}\to 2K$ at longer times, the system forgets its initial conditions, and neither measure of significance is very useful.

$\varepsilon _{\boldsymbol {u}}\to 2K$ at longer times, the system forgets its initial conditions, and neither measure of significance is very useful.

Although figure 6(b) shows that the significance classification of a given experiment is not a completely random variable, the fact that the persistence is not unity implies that the time at which the classification is performed is important. Consider, for example, the mean significance of the set ![]() $\{I\}$ of tests classified at time

$\{I\}$ of tests classified at time ![]() $t_c$ as irrelevant,

$t_c$ as irrelevant, ![]() $\sigma _I(t_c) =N_{\{I\}}^{-1} \sum _{j\in \{I\}} \sigma _j$, and define a similar

$\sigma _I(t_c) =N_{\{I\}}^{-1} \sum _{j\in \{I\}} \sigma _j$, and define a similar ![]() $\sigma _S (t_c)$ average for significant perturbations. The difference

$\sigma _S (t_c)$ average for significant perturbations. The difference ![]() $\sigma _S-\sigma _I$ typically increases initially and reaches a maximum before decaying at long times. The time

$\sigma _S-\sigma _I$ typically increases initially and reaches a maximum before decaying at long times. The time ![]() $t_{sig}$ at which this difference is maximum is also when the classification is less ambiguous, and we will preferentially use it from now on to define our significance classes.

$t_{sig}$ at which this difference is maximum is also when the classification is less ambiguous, and we will preferentially use it from now on to define our significance classes.

Figures 7(a,b) show how ![]() $t_{sig}$ changes as a function of

$t_{sig}$ changes as a function of ![]() $l_{cell}$ and

$l_{cell}$ and ![]() $y_{cell}$, using either

$y_{cell}$, using either ![]() $\sigma _{\boldsymbol {u}}$ or

$\sigma _{\boldsymbol {u}}$ or ![]() $\sigma _{\boldsymbol {u}r}$ as a causality measure. Disregarding the case

$\sigma _{\boldsymbol {u}r}$ as a causality measure. Disregarding the case ![]() $l_{cell}^+=25$, which is well within the dissipative range of scales and tends to behave differently from larger cells,

$l_{cell}^+=25$, which is well within the dissipative range of scales and tends to behave differently from larger cells, ![]() $t_{sig}$ is explained mainly by

$t_{sig}$ is explained mainly by ![]() $y_{cell}$, and it is clear that

$y_{cell}$, and it is clear that ![]() $\sigma _{\boldsymbol {u}r}$ is a better indicator for this purpose than

$\sigma _{\boldsymbol {u}r}$ is a better indicator for this purpose than ![]() $\sigma _{\boldsymbol {u}}$. We will mostly use it from now on. It is interesting that

$\sigma _{\boldsymbol {u}}$. We will mostly use it from now on. It is interesting that ![]() $t_{sig}$ is very close to, and generally slightly larger than, the attachment time

$t_{sig}$ is very close to, and generally slightly larger than, the attachment time ![]() $t_{att}$ in figure 4, as shown by the difference of the two values in figure 7(c), suggesting again that the arrival of the disturbances to the wall is an important factor in determining causality.

$t_{att}$ in figure 4, as shown by the difference of the two values in figure 7(c), suggesting again that the arrival of the disturbances to the wall is an important factor in determining causality.

Figure 7. Optimal classification time ![]() $t_{sig}$ as a function of

$t_{sig}$ as a function of ![]() $y_{cell}$. Symbols as in table 2. The dashed lines in (a,b) are least-squares linear fits, whose slope is

$y_{cell}$. Symbols as in table 2. The dashed lines in (a,b) are least-squares linear fits, whose slope is ![]() ${\rm d}{\kern0.9pt}y/{\rm d} t=2.12 u_\tau$ in (a) and

${\rm d}{\kern0.9pt}y/{\rm d} t=2.12 u_\tau$ in (a) and ![]() ${\rm d}{\kern0.9pt}y/{\rm d} t=1.28 u_\tau$ in (b). (a) Using

${\rm d}{\kern0.9pt}y/{\rm d} t=1.28 u_\tau$ in (b). (a) Using ![]() $\sigma _{\boldsymbol {u}}$; (b) using

$\sigma _{\boldsymbol {u}}$; (b) using ![]() $\sigma _{\boldsymbol {u}r}$. (c) Offset between the

$\sigma _{\boldsymbol {u}r}$. (c) Offset between the ![]() $\sigma _{\boldsymbol {u}r}$ classification time and the attachment time.

$\sigma _{\boldsymbol {u}r}$ classification time and the attachment time.

We have seen above that the initial intensity of the perturbations has an effect on their subsequent evolution. This is also true of our significance classification, and can be quantified by the correlation of ![]() $\sigma _{\boldsymbol {u}r}(t_c)$ with

$\sigma _{\boldsymbol {u}r}(t_c)$ with ![]() $\varepsilon _{\boldsymbol {u}}(0)$ (not shown). This correlation tends to

$\varepsilon _{\boldsymbol {u}}(0)$ (not shown). This correlation tends to ![]() $-1$ at long times, as explained above, but remains moderately positive for

$-1$ at long times, as explained above, but remains moderately positive for ![]() $t_c\lesssim t_{sig}$, confirming that the initial relative growth rate for strong perturbations is faster than for weak ones. In most cases,

$t_c\lesssim t_{sig}$, confirming that the initial relative growth rate for strong perturbations is faster than for weak ones. In most cases, ![]() $t_{sig}$ approximately coincides with the moment at which the correlation changes sign and is close to zero, making the classification relatively independent of the initial perturbation intensity. At this moment, the energy of the perturbation is still a small fraction of the total energy of the flow. Taking as an example the topmost curve in figure 5(b)

$t_{sig}$ approximately coincides with the moment at which the correlation changes sign and is close to zero, making the classification relatively independent of the initial perturbation intensity. At this moment, the energy of the perturbation is still a small fraction of the total energy of the flow. Taking as an example the topmost curve in figure 5(b) ![]() $(l_{cell}^+=100$,

$(l_{cell}^+=100$, ![]() $y_{cell}=0)$, the average energy of the initial perturbations is approximately

$y_{cell}=0)$, the average energy of the initial perturbations is approximately ![]() $3\times 10^{-4}\,{K}$, and grows to

$3\times 10^{-4}\,{K}$, and grows to ![]() $0.6K$ at the end of the experimental runs, but it is still

$0.6K$ at the end of the experimental runs, but it is still ![]() $8\times 10^{-3}\,{K}$ at the optimal classification time

$8\times 10^{-3}\,{K}$ at the optimal classification time ![]() $t_{sig}\approx 0.17{ h}/u_\tau$. This does not mean that the perturbation can be linearised up to that time. The intensity of the perturbation is always

$t_{sig}\approx 0.17{ h}/u_\tau$. This does not mean that the perturbation can be linearised up to that time. The intensity of the perturbation is always ![]() $O(K)$, and the growth of its integrated energy is mostly due to its geometric spreading (see figure 2).

$O(K)$, and the growth of its integrated energy is mostly due to its geometric spreading (see figure 2).

4. Diagnostic properties for causal significance

Having described how the significance of an initial condition can be characterised, we recover our original task of determining which properties of the perturbed cells are responsible for their causality. The basic assumption is that the characterisation of causality can be reduced to a single observable of the cell at the perturbation time, ![]() $t=0$, such as its average vorticity, rather than requiring several conditions to be satisfied simultaneously, or even some property of the extended environment of the cell, or of its history. As mentioned in the Introduction, the strategy is to perform many experiments modifying individual cells, to label them according to their significance at some later time, and to test which cell observables at

$t=0$, such as its average vorticity, rather than requiring several conditions to be satisfied simultaneously, or even some property of the extended environment of the cell, or of its history. As mentioned in the Introduction, the strategy is to perform many experiments modifying individual cells, to label them according to their significance at some later time, and to test which cell observables at ![]() $t=0$ can be used to separate the classes thus labelled. Hereafter, the one-point scalar

$t=0$ can be used to separate the classes thus labelled. Hereafter, the one-point scalar ![]() $\langle\, f\rangle _{c}$ stands for the cell average of a property:

$\langle\, f\rangle _{c}$ stands for the cell average of a property:

\begin{equation} \langle\,f\rangle_{c}=l_{cell}^{{-}3} \int^{y_{cell}+l_{cell}}_{y_{cell}} \int^{x_c+l_{cell}/2}_{x_c-l_{cell}/2}\int^{z_c+l_{cell}/2}_{z_c-l_{cell}/2}{f(x,y,z) \,{\rm d}{\kern0.8pt}x \,{\rm d}{\kern0.9pt}y \,{\rm d} z}. \end{equation}

\begin{equation} \langle\,f\rangle_{c}=l_{cell}^{{-}3} \int^{y_{cell}+l_{cell}}_{y_{cell}} \int^{x_c+l_{cell}/2}_{x_c-l_{cell}/2}\int^{z_c+l_{cell}/2}_{z_c-l_{cell}/2}{f(x,y,z) \,{\rm d}{\kern0.8pt}x \,{\rm d}{\kern0.9pt}y \,{\rm d} z}. \end{equation}

Following Jiménez (Reference Jiménez2020b) and Encinar & Jiménez (Reference Encinar and Jiménez2023), the ranking of observables uses a linear-kernel support vector machine (SVM) (Cristianini & Shawe-Taylor Reference Cristianini and Shawe-Taylor2000), implemented in the scikit-learn Python library (Pedregosa et al. Reference Pedregosa2011), which determines an optimal separating hyperplane between two pre-labelled data classes. In our case, we look for the optimal separation of significant or irrelevant experiments in terms of a single quantity, and the SVM hyperplane reduces to a threshold. For each combination of ![]() $l_{cell}$ and the

$l_{cell}$ and the ![]() $y_{cell}$ values in the second column of table 2, and for each classification time

$y_{cell}$ values in the second column of table 2, and for each classification time ![]() $t_c$, two-thirds of the initial conditions are collected into a training set, with the remaining third reserved for testing. The 10 % most significant experiments of the training set are labelled as significant, and the bottom 10 %, as irrelevant. The remaining 80 % are not used for classification purposes. An optimum partition threshold is computed for each of the observables detailed below, and an SVM classification score is assigned to each observable using the test set. The score measures the fraction of data allocated to their correct class by the SVM threshold, and ranges from unity for perfect separability to 0.5 for cases in which the two classes are fully mixed. The procedure is repeated three times after randomly separating the data into training and test sets, and the diagnostic score for the observable is defined as the average of the three results.

$t_c$, two-thirds of the initial conditions are collected into a training set, with the remaining third reserved for testing. The 10 % most significant experiments of the training set are labelled as significant, and the bottom 10 %, as irrelevant. The remaining 80 % are not used for classification purposes. An optimum partition threshold is computed for each of the observables detailed below, and an SVM classification score is assigned to each observable using the test set. The score measures the fraction of data allocated to their correct class by the SVM threshold, and ranges from unity for perfect separability to 0.5 for cases in which the two classes are fully mixed. The procedure is repeated three times after randomly separating the data into training and test sets, and the diagnostic score for the observable is defined as the average of the three results.

The whole process can be automated and is reasonably fast. The experimental description in § 2.1 shows that each SVM run is only requested to classify two sets of 96 points each, and to test the classification on two sets of 48 points. This allows us to minimise pre-existing biases by testing many possible observables. The robustness of the classification results was assessed by decreasing the number of samples by half.

The observables can be physically classified into average cell properties, such as kinetic energy, and perturbation or small-scale properties, such as the kinetic energy of the velocity fluctuations with respect to the cell mean. The former are summarised in table 3, and the latter in table 4. In both cases, properties that are statistically symmetric with respect to reflections on ![]() $z$ are used as absolute values, and positive definite quantities, such as mean squares, are used as logarithms. Otherwise, all observables are processed in the same way.

$z$ are used as absolute values, and positive definite quantities, such as mean squares, are used as logarithms. Otherwise, all observables are processed in the same way.

Table 3. Cell observables. All averages are taken over cells at ![]() $t=0$.

$t=0$.

Table 4. As in table 3, for observables describing perturbation and small-scale quantities.

The diagnostic score of an initial condition depends on the cell height, on its size, and on the moment at which it is classified. Figure 8 shows a typical table of the three best observables identified by the absolute significance ![]() $\sigma _{\boldsymbol {u}}$, as functions of the classification time. Cells are coloured by the classification score. In all cases, the best observable is the initial perturbation amplitude

$\sigma _{\boldsymbol {u}}$, as functions of the classification time. Cells are coloured by the classification score. In all cases, the best observable is the initial perturbation amplitude ![]() $\varepsilon _{\boldsymbol {u}}$, a related quantity such as

$\varepsilon _{\boldsymbol {u}}$, a related quantity such as ![]() $\varepsilon _{\boldsymbol {\omega }}$, or the viscous dissipation of the perturbation intensity

$\varepsilon _{\boldsymbol {\omega }}$, or the viscous dissipation of the perturbation intensity ![]() $\langle {D_{\varepsilon u}}\rangle _{c}$. Although not included in the table, the next best observable is usually also a small-scale quantity closely correlated with the intra-cell velocity fluctuations, such as

$\langle {D_{\varepsilon u}}\rangle _{c}$. Although not included in the table, the next best observable is usually also a small-scale quantity closely correlated with the intra-cell velocity fluctuations, such as ![]() $s_{ij}s_{ij}$,

$s_{ij}s_{ij}$, ![]() $\omega _i^2$ or the in-cell standard deviation

$\omega _i^2$ or the in-cell standard deviation ![]() ${v}^{\prime c}$. The table in figure 8 is thus equivalent to the fluctuation persistence in figures 6(a,b).

${v}^{\prime c}$. The table in figure 8 is thus equivalent to the fluctuation persistence in figures 6(a,b).

Figure 8. Classification score of the three best observables for a classification based on absolute significance ![]() $\sigma _{\boldsymbol {u}}$. Row indicates rank; column indicates evaluation time in turnovers. The highlighted column is

$\sigma _{\boldsymbol {u}}$. Row indicates rank; column indicates evaluation time in turnovers. The highlighted column is ![]() $t_{sig}$. Colour indicates the classification score. Here,

$t_{sig}$. Colour indicates the classification score. Here, ![]() $l_{cell}^+=50$,

$l_{cell}^+=50$, ![]() $y_{cell}^+=125$.

$y_{cell}^+=125$.

In the sense that this absolute persistence is due to the slow relaxation of the initial amplitude, it can be considered physically uninteresting, but it can be largely compensated by using the relative significance ![]() $\sigma _{\boldsymbol {u}r}$ defined in (2.5). Figure 9 displays the two best observables for different sizes at a fixed cell height, and figure 10 displays results for a given size and different heights. In both cases, the best score starts being relatively high at short classification times, decreases for intermediate ones, and increases again towards the end of the experimental run. The evolution of the optimum diagnostic variables with the classification time can be divided into three phases.

$\sigma _{\boldsymbol {u}r}$ defined in (2.5). Figure 9 displays the two best observables for different sizes at a fixed cell height, and figure 10 displays results for a given size and different heights. In both cases, the best score starts being relatively high at short classification times, decreases for intermediate ones, and increases again towards the end of the experimental run. The evolution of the optimum diagnostic variables with the classification time can be divided into three phases.

Figure 9. Classification score of the two best observables for a classification based on relative significance ![]() $\sigma _{\boldsymbol {u}r}$: (a)

$\sigma _{\boldsymbol {u}r}$: (a) ![]() $l_{cell}^+=150$,

$l_{cell}^+=150$, ![]() $y_{cell}^{+}=150$, (b)

$y_{cell}^{+}=150$, (b) ![]() $l_{cell}^+=75$,

$l_{cell}^+=75$, ![]() $y_{cell}^{+}=138$, (c)

$y_{cell}^{+}=138$, (c) ![]() $l_{cell}^+=25$,

$l_{cell}^+=25$, ![]() $y_{cell}^+=138$. Row indicates rank; column indicates evaluation time in turnovers. The highlighted columns are

$y_{cell}^+=138$. Row indicates rank; column indicates evaluation time in turnovers. The highlighted columns are ![]() $t_{sig}$. Colour indicates classification score. Here,

$t_{sig}$. Colour indicates classification score. Here, ![]() $y_{cell}^+\approx 150$.

$y_{cell}^+\approx 150$.

Figure 10. Classification score of the two best observables for a classification based on relative significance ![]() $\sigma _{\boldsymbol {u}r}$: (a)

$\sigma _{\boldsymbol {u}r}$: (a) ![]() $y_{cell}^+=275$, (b)

$y_{cell}^+=275$, (b) ![]() $y_{cell}^+=125$, (c)

$y_{cell}^+=125$, (c) ![]() $y_{cell}=0$. Row indicates rank; column indicates evaluation time in

$y_{cell}=0$. Row indicates rank; column indicates evaluation time in ![]() $h/u_\tau$. The highlighted columns are

$h/u_\tau$. The highlighted columns are ![]() $t_{sig}$. Colour indicates classification score. Here,

$t_{sig}$. Colour indicates classification score. Here, ![]() $l_{cell}^+=50$.

$l_{cell}^+=50$.

During the initial phase, up to the time when the scores are lowest, the best observables include ![]() $s_{ij}s_{ij}$, the magnitude of the initial disturbance, and the disturbance production and dissipation, all of which are either highly correlated with the initial value of

$s_{ij}s_{ij}$, the magnitude of the initial disturbance, and the disturbance production and dissipation, all of which are either highly correlated with the initial value of ![]() $\varepsilon _{\boldsymbol {u}}$, or are terms in its evolution equation (A8). This part of the table is equivalent to the observation in § 2.1 that initially stronger perturbations not only remain strong, but also grow faster than weaker ones.

$\varepsilon _{\boldsymbol {u}}$, or are terms in its evolution equation (A8). This part of the table is equivalent to the observation in § 2.1 that initially stronger perturbations not only remain strong, but also grow faster than weaker ones.

The second phase is the broad minimum of the score around ![]() $t_{sig}$. While the scores in this phase are not high, the best observables change from the small-scale perturbation properties of the initial phase to properties of the cell that do not include fluctuations, such as the cell average of some velocity component, or equivalently, the mean shear when the cells are very close to the wall. It is interesting that the best measure of shear near the wall is

$t_{sig}$. While the scores in this phase are not high, the best observables change from the small-scale perturbation properties of the initial phase to properties of the cell that do not include fluctuations, such as the cell average of some velocity component, or equivalently, the mean shear when the cells are very close to the wall. It is interesting that the best measure of shear near the wall is ![]() $\langle \partial _y u\rangle _{fw}$, which excludes the viscous sublayer (see figure 10c). This is consistent with the idea that the growth of the perturbation is due to the energy production by the local shear, because the fluctuation production term in Appendix A is proportional to the shear, but also to the Reynolds stresses

$\langle \partial _y u\rangle _{fw}$, which excludes the viscous sublayer (see figure 10c). This is consistent with the idea that the growth of the perturbation is due to the energy production by the local shear, because the fluctuation production term in Appendix A is proportional to the shear, but also to the Reynolds stresses ![]() $\hat {u}_i\hat {u}_j$, which are inactive in the sublayer.

$\hat {u}_i\hat {u}_j$, which are inactive in the sublayer.

It is interesting that the longitudinal velocity derivatives ![]() $\langle {\partial _x u}\rangle _{c}$ and

$\langle {\partial _x u}\rangle _{c}$ and ![]() $\langle {\partial _z w}\rangle _{c}$ appear among the most diagnostic cell properties for wall-attached perturbations in figure 10(c). These derivatives are involved in mass conservation, and follow naturally from the meandering of near-wall streaks, which has been associated with streak breakdown (Jiménez & Moin Reference Jiménez and Moin1991; Hamilton et al. Reference Hamilton, Kim and Waleffe1995; Waleffe Reference Waleffe1997) and with the generation of Orr bursts (Orr Reference Orr1907; Jiménez Reference Jiménez2013). Although not apparent from figure 10(c), it can be shown that significant cells are associated with

$\langle {\partial _z w}\rangle _{c}$ appear among the most diagnostic cell properties for wall-attached perturbations in figure 10(c). These derivatives are involved in mass conservation, and follow naturally from the meandering of near-wall streaks, which has been associated with streak breakdown (Jiménez & Moin Reference Jiménez and Moin1991; Hamilton et al. Reference Hamilton, Kim and Waleffe1995; Waleffe Reference Waleffe1997) and with the generation of Orr bursts (Orr Reference Orr1907; Jiménez Reference Jiménez2013). Although not apparent from figure 10(c), it can be shown that significant cells are associated with ![]() $\partial _x u<0$,

$\partial _x u<0$, ![]() $\partial _z w>0$, with the opposite association for irrelevant ones.

$\partial _z w>0$, with the opposite association for irrelevant ones.

Finally, at longer times of the order of ![]() $t-t_{sig} \approx 0.25h/u_\tau$, the score recovers, and the most diagnostic observable reverts to the small-scale quantities that dominate short times. Since we saw in § 2.1 that

$t-t_{sig} \approx 0.25h/u_\tau$, the score recovers, and the most diagnostic observable reverts to the small-scale quantities that dominate short times. Since we saw in § 2.1 that ![]() $\sigma _{\boldsymbol {u}r}$ at long times is essentially equivalent to

$\sigma _{\boldsymbol {u}r}$ at long times is essentially equivalent to ![]() $\varepsilon _{\boldsymbol {u}}(0)$, this final phase is a reflection of the behaviour at short times, and does not represent new physics.

$\varepsilon _{\boldsymbol {u}}(0)$, this final phase is a reflection of the behaviour at short times, and does not represent new physics.

Figure 11(a) shows the dependence on ![]() $y_{cell}$ of the time at which the accumulated score of the top four observables reaches its minimum. After an initial transient that gets shorter as the cell size increases,

$y_{cell}$ of the time at which the accumulated score of the top four observables reaches its minimum. After an initial transient that gets shorter as the cell size increases, ![]() $t_{min}$ grows with the distance from the wall, and approximately tracks the optimum classification time

$t_{min}$ grows with the distance from the wall, and approximately tracks the optimum classification time ![]() $t_{sig}$.

$t_{sig}$.

Figure 11. (a) The solid lines are the time ![]() $t_{min}$ at which the sum of the scores of the four best observables is lowest. The dashed lines are

$t_{min}$ at which the sum of the scores of the four best observables is lowest. The dashed lines are ![]() $t_{sig}$ from figure 7(b). Other symbols are as in table 2. (b–f) Classification scores of selected observables as functions of time, computed from

$t_{sig}$ from figure 7(b). Other symbols are as in table 2. (b–f) Classification scores of selected observables as functions of time, computed from ![]() $\sigma _{\boldsymbol {u}r}$. Crosses indicate small-scale quantities, defined as the average of the scores of

$\sigma _{\boldsymbol {u}r}$. Crosses indicate small-scale quantities, defined as the average of the scores of ![]() $\varepsilon _{\boldsymbol {u}}$ and

$\varepsilon _{\boldsymbol {u}}$ and ![]() $\langle {s_{ij}s_{ij}}\rangle _{c}$. Triangles indicate cell-scale quantities, average of

$\langle {s_{ij}s_{ij}}\rangle _{c}$. Triangles indicate cell-scale quantities, average of ![]() $\langle {u}\rangle _{c}$ and

$\langle {u}\rangle _{c}$ and ![]() $\langle {v}\rangle _{c}$. Abscissae are offset by the attachment time

$\langle {v}\rangle _{c}$. Abscissae are offset by the attachment time ![]() $t_{att}$. Colour intensity increases with the distance from the wall, from

$t_{att}$. Colour intensity increases with the distance from the wall, from ![]() $y_{cell}^+=12.5$ to

$y_{cell}^+=12.5$ to ![]() $y_{cell}^+=275$, with (b)

$y_{cell}^+=275$, with (b) ![]() $l_{cell}^+=25$, (c)

$l_{cell}^+=25$, (c) ![]() $l_{cell}^+=50$, (d)

$l_{cell}^+=50$, (d) ![]() $l_{cell}^+=75$, (e)

$l_{cell}^+=75$, (e) ![]() $l_{cell}^+=100$, ( f)

$l_{cell}^+=100$, ( f) ![]() $l_{cell}^+=150$.

$l_{cell}^+=150$.

Figures 11(b–f) summarise the evolution of the scores as functions of time. The blue lines are averages of the scores of several fluctuation quantities, and the red lines are averages of cell-scale properties. The figures are offset by their attachment time ![]() $t_{att}$, which improves their collapse significantly, and reflect the decreasing influence of the small-scale quantities as the perturbations approach the attachment time, as well as the increasing importance of the cell-scale properties as the perturbations intensify. It should be mentioned that offsetting

$t_{att}$, which improves their collapse significantly, and reflect the decreasing influence of the small-scale quantities as the perturbations approach the attachment time, as well as the increasing importance of the cell-scale properties as the perturbations intensify. It should be mentioned that offsetting ![]() $t$ with the optimum classification time

$t$ with the optimum classification time ![]() $t_{sig}$, instead of with

$t_{sig}$, instead of with ![]() $t_{att}$, also collapses most scores, as could be expected from the similarity of both times in figure 7. It also collapses better the case

$t_{att}$, also collapses most scores, as could be expected from the similarity of both times in figure 7. It also collapses better the case ![]() $y_{cell}=0$, which is not included in figure 11, and for which

$y_{cell}=0$, which is not included in figure 11, and for which ![]() $t_{att}$, defined at the arbitrary distance

$t_{att}$, defined at the arbitrary distance ![]() $y^+=50$, does a poor job. In spite of this,

$y^+=50$, does a poor job. In spite of this, ![]() $t_{att}$ is used in figure 11 because it improves the case

$t_{att}$ is used in figure 11 because it improves the case ![]() $l_{cell}^+=25$, and underscores the already mentioned connection between significance and the energy production from the near-wall shear. It is also interesting that the score of the velocities has a secondary maximum at

$l_{cell}^+=25$, and underscores the already mentioned connection between significance and the energy production from the near-wall shear. It is also interesting that the score of the velocities has a secondary maximum at ![]() $t=0$, probably due to the known correlation of small-scale vorticity with the large-scale streamwise-velocity streaks (Tanahashi et al. Reference Tanahashi, Kang, Miyamoto, Shiokawa and Miyauchi2004).