1. Introduction

In literature, various studies have been presented on high utility itemset mining (HUIM) (Liu et al., Reference Liu and Liao2005; Yao & Hamilton, Reference Yao and Hamilton2006) that considered the importance of the items, such as the unit profit of itemsets. Traditional HUIM approaches are designed for static quantitative datasets. In real-world situations, datasets are frequently updated dynamically, For instance, in tasks like market basket analysis and business decision-making, the datasets are modified incrementally by appending additional data dynamically. Extensive research has been conducted to develop efficient algorithms for discovering high utility itemsets (HUIs) from dynamically updated datasets. Traditional HUIM algorithms required processing datasets from scratch each time a new record was added. In contrast, incremental HUIM (iHUIM) updates and incrementally identifies HUIs without scanning the whole dataset, effectively reduce the cost associated with discovery of HUIs when new records are added. Over the past decade, various iHUIM algorithms have been introduced, primarily grouped into tree-based, pattern-growth-based, utility-list-based, projection-based, and pre-large-based approaches. However, there are still ample opportunities for designing efficient novel iHUIM algorithms, incorporating effective pruning strategies, data structures, and more.

An incremental mining approach for record insertions, named FUP-HU (Lin et al., Reference Lin, Lan and Hong2012) is designed for maintaining and updating the already obtained HUIs by FUP notion (Cheung et al. Reference Cheung, Han, Ng and Wong1996) and Two-phase algorithm (Liu et al., Reference Liu and Liao2005). However, it generates a substantial number of candidates and scans the dataset multiple times. Incremental and interactive utility tree (IIUT) (Ahmed et al., Reference Ahmed, Tanbeer, Jeong and Lee2009b) employs the pattern-growth method to resolve the issue of costly candidate generation in a level-wise manner. Nonetheless, the run-time of the IIUT algorithm also gets larger as the size of the dataset expands. Ahmed et al. (Reference Ahmed, Tanbeer, Jeong and Lee2009a) proposed an approach that presented three novel tree structures, namely Incremental high utility pattern lexicographic tree (IHUP

![]() $_L$

-Tree), IHUP transaction frequency tree (IHUP

$_L$

-Tree), IHUP transaction frequency tree (IHUP

![]() $_{TF}$

-Tree), and IHUP transaction-weighted utilization tree (IHUP

$_{TF}$

-Tree), and IHUP transaction-weighted utilization tree (IHUP

![]() $_{TWU}$

-Tree). These structures perform incremental and interactive HUPM and adhere to the ‘

$_{TWU}$

-Tree). These structures perform incremental and interactive HUPM and adhere to the ‘

![]() $ build \ once, \ mine \ many \ $

’ principle, enabling efficient mining of HUIs from incremental transaction datasets. However, the suggested method produces an excessive number of candidates when the dataset consists of lengthy transactions or when the minimum utility threshold is set exceedingly low. Moreover, IHUP-Tree proves to be a relatively efficient approach. This process requires the unnecessary generation of low-utility itemsets, which then require significant time for pruning. On the other hand, high utility patterns in incremental datasets (HUPID) growth (Yun & Ryang, Reference Yun and Ryang2014) efficiently mines patterns with reduced overestimated utility from incremental datasets using just a single dataset scan. Nonetheless, it requires an additional phase to find the actual HUIs from the candidate sets. In contrast, an incremental and interactive HUIM algorithm, namely incremental high utility itemset miner (IHUI-Miner) (Guo & Gao, Reference Guo and Gao2017), is designed to extract HUIs without the need of candidate generation. A new tree structure, called incremental high utility itemset tree (IHUI-Tree), has been introduced that tree organizes items in lexicographic order using the pattern-growth method and follows a bottom-up traversal approach.

$ build \ once, \ mine \ many \ $

’ principle, enabling efficient mining of HUIs from incremental transaction datasets. However, the suggested method produces an excessive number of candidates when the dataset consists of lengthy transactions or when the minimum utility threshold is set exceedingly low. Moreover, IHUP-Tree proves to be a relatively efficient approach. This process requires the unnecessary generation of low-utility itemsets, which then require significant time for pruning. On the other hand, high utility patterns in incremental datasets (HUPID) growth (Yun & Ryang, Reference Yun and Ryang2014) efficiently mines patterns with reduced overestimated utility from incremental datasets using just a single dataset scan. Nonetheless, it requires an additional phase to find the actual HUIs from the candidate sets. In contrast, an incremental and interactive HUIM algorithm, namely incremental high utility itemset miner (IHUI-Miner) (Guo & Gao, Reference Guo and Gao2017), is designed to extract HUIs without the need of candidate generation. A new tree structure, called incremental high utility itemset tree (IHUI-Tree), has been introduced that tree organizes items in lexicographic order using the pattern-growth method and follows a bottom-up traversal approach.

The efficient incremental high utility itemset (EIHI) miner (Fournier-Viger et al., Reference Fournier-Viger, Lin, Gueniche and Barhate2015) algorithm is designed to maintain HUIs within incremental datasets. However, it performs extra operations to construct new utility-lists for newly added data and merge them with the existing datasets. LIHUP (Yun et al., Reference Yun, Ryang, Lee and Fujita2017) builds a global list-based structure with just one pass through the original dataset and then reorganizes this data structure by sorting the lists according to the ascending order of transaction-weighted utilization (TWU) values. But, the execution time and memory usage of this approach expand as the dataset size grows. In contrast, an efficient incremental HUIM algorithm named IIHUM (Indexed-list-based Incremental High utility pattern Mining) (Yun et al., Reference Yun, Nam, Lee and Yoon2019) adopts a novel indexed-list-based structure to incrementally mine HUIs without the need of candidate generation. The Id2HUP+ (Incremental direct discovery of high utility patterns) approach (Liu et al., Reference Liu, Ju, Zhang, Fung, Yang and Yu2019) employs a single-phase approach for incremental mining of HUIs from incremental datasets. A novel data structure named niCAUL (Newly Improved Chain of Accurate utility-lists) is designed to quickly update the dynamic datasets. IncCHUI (Incremental Closed high utility Itemset miner) (Dam et al. Reference Dam, Ramampiaro, Nørvåg and Duong2019) uses a novel incremental utility-list structure that is constructed and rearranged through a single pass of the dataset scan. However, it incurs memory overhead on some benchmark datasets, leading to higher memory consumption compared to benchmark algorithms, such as CHUI-Miner (Wu et al. Reference Wu, Fournier-Viger, Gu and Tseng2015), CLS-Miner (Dam et al., Reference Dam, Li, Fournier-Viger and Duong2018), and EFIM-Closed (Fournier-Viger et al., Reference Fournier-Viger, Zida, Lin, Wu and Tseng2016). Extended HUI-miner (E-HUIM) (Pushp & Chand 2021) is proposed to generate and maintain the HUIs from the incremental datasets. Nevertheless, there is room for the development of more efficient data structures to enhance the mining process. The pre-large incremental high utility itemset (PRE-HUI) algorithm (Lin et al., Reference Lin, Gan, Hong and Pan2014), effectively preserves and modifies the obtained HUIs by integrating and updating the two-phase (Liu et al. Reference Liu and Liao2005) and pre-large concepts (Hong et al., Reference Hong, Wang and Tao2001). An efficient tree-based algorithm named PIHUP-MOD (Pre-large-based Incremental High Utility Pattern mining for transaction MODification) (Yun et al., Reference Yun, Kim, Ryu, Baek, Nam, Lee, Vo and Pedrycz2021) is proposed to mine HUIs from the modified datasets. This is achieved through the utilization of the pre-large concept. The PIHUP-MOD

![]() $_L$

-tree data structure (where L stands for lexicographic order) is designed for the extraction of pre-large and large patterns during the mining process. However, it proves to be a challenging task for users to define and set two thresholds. While numerous iHUIM algorithms have been introduced in the past, there exists a scarcity of documented surveys that offer a comprehensive overview and comparative assessment of their performance. The main purpose of this survey is to provide in-depth knowledge concerning the iHUIM algorithms.

$_L$

-tree data structure (where L stands for lexicographic order) is designed for the extraction of pre-large and large patterns during the mining process. However, it proves to be a challenging task for users to define and set two thresholds. While numerous iHUIM algorithms have been introduced in the past, there exists a scarcity of documented surveys that offer a comprehensive overview and comparative assessment of their performance. The main purpose of this survey is to provide in-depth knowledge concerning the iHUIM algorithms.

Differences from the existing survey

Within the existing literature, only two surveys (Gan et al., Reference Gan, Chun-Wei, Chao, Wang and Yu2018; Cheng et al., Reference Cheng, Han, Zhang, Li and Wang2021) concerning iHUIM have been identified. In 2017, Gan et al. (Reference Gan, Chun-Wei, Chao, Wang and Yu2018) presented a survey that organized iHUIM approaches into three primary classifications: Apriori-based, tree-based, and utility-list-based techniques. It provided an extensive overview of the latest state-of-the-art methods within the iHUIM domain. Additionally, the survey included a summary table, showing the characteristics of these state-of-the-art approaches. In 2021, Cheng et al. (Reference Cheng, Han, Zhang, Li and Wang2021) presented a survey of iHUIM approaches categorized by their storage structure. This survey categorized the available iHUIM approaches based on the manner in which they store data associated with itemsets and their utility values. The survey classifies the state-of-the-art methods into various groups, including tree-based, list-based, array-based, and alternative methods (e.g., hash-set-based approaches), based on the approach used to store information about items. This paper included a total of 19 papers across all categories. Additionally, it provided a comprehensive discussion of the characteristics, strengths, and weaknesses of the existing state-of-the-art methods. The survey outlined various potential future research directions in the field.

Our work discussed and analyzed several novel categories and features of the current state-of-the-art methods. The key contributions of this survey can be outlined as follows:

-

• The survey presents a novel taxonomy and categorized the existing approaches into various groups, including two-phase-based, pattern-growth-based, projection-based, utility-list-based, and pre-large-based methods.

-

• The paper provides a comprehensive overview that includes almost all features and characteristics of the current state-of-the-art methods.

-

• The survey also showcases a thorough summary table that includes outcomes, advantages, disadvantages, and future research directions for the latest state-of-the-art methods.

-

• Our work includes 27 state-of-the-art iHUIM approaches. This comparative analysis provides a more extensive and in-depth comparison compared to the other two existing surveys.

-

• The survey provides both a category-wise summary and a comprehensive consolidated overview of all the currently available state-of-the-art iHUIM methods.

-

• It also included a brief discussion on research possibilities and prospective directions.

The remainder of this study is structured in the following manner: Section 2 provides preliminaries and definitions that are essential for understanding the concept of iHUIM. Section 3 presents an in-depth analysis and summary of various iHUIM approaches. Section 4 discusses the comprehensive summary and analysis of all the current state-of-the-art methods. Section 5 presents several potential research opportunities and future directions. Lastly, Section 6 provides the conclusion of the study.

2. Preliminaries and definitions

Let

![]() $I=\{i_1,i_2,\ldots,i_n\}$

be a finite set of n items. Let the transactions

$I=\{i_1,i_2,\ldots,i_n\}$

be a finite set of n items. Let the transactions

![]() $T_1,T_2,\ldots,T_m$

take place in the dataset D with each transaction

$T_1,T_2,\ldots,T_m$

take place in the dataset D with each transaction

![]() $T_q \subseteq I$

. An itemset X is defined as a collection of k items

$T_q \subseteq I$

. An itemset X is defined as a collection of k items

![]() $\{i_1,i_2,\ldots,i_k\}$

, where k denotes the length of the itemset. An itemset X is considered to be included in a transaction

$\{i_1,i_2,\ldots,i_k\}$

, where k denotes the length of the itemset. An itemset X is considered to be included in a transaction

![]() $T_q$

if

$T_q$

if

![]() $X \subseteq T_q$

. Each item

$X \subseteq T_q$

. Each item

![]() $i_j$

within the transaction

$i_j$

within the transaction

![]() $T_q$

is linked to a purchase quantity, also called internal utility (presented by the numbers in brackets), represented as

$T_q$

is linked to a purchase quantity, also called internal utility (presented by the numbers in brackets), represented as

![]() $q(i_j,T_q)$

. Each item

$q(i_j,T_q)$

. Each item

![]() $i_j$

has relative importance and is also called an external utility (or unit profit) represented as

$i_j$

has relative importance and is also called an external utility (or unit profit) represented as

![]() $EU(i_j)$

. A user-defined minimum utility threshold is established and denoted as

$EU(i_j)$

. A user-defined minimum utility threshold is established and denoted as

![]() $\delta$

.

$\delta$

.

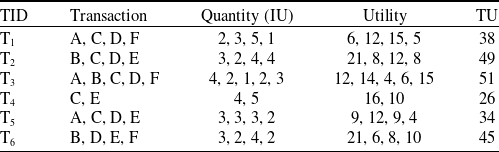

For instance, the transactional dataset includes six transactions

![]() $T_1,T_2,\ldots,T_6$

as illustrated in Table 1. Each transaction is associated with a set of items. The dataset consists of six items: A, B, C, D, E, and F. For instance, transaction

$T_1,T_2,\ldots,T_6$

as illustrated in Table 1. Each transaction is associated with a set of items. The dataset consists of six items: A, B, C, D, E, and F. For instance, transaction

![]() $T_1$

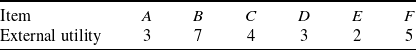

consists of four items A, C, D and F, each with internal utility of 2,3,5 and 1, respectively. Table 2 displays the external utility of each item. The items A, B, C, D, E, and F have external utility of 3, 7, 4, 3, 2, and 5, respectively.

$T_1$

consists of four items A, C, D and F, each with internal utility of 2,3,5 and 1, respectively. Table 2 displays the external utility of each item. The items A, B, C, D, E, and F have external utility of 3, 7, 4, 3, 2, and 5, respectively.

Table 1. A transactional dataset

Table 2. External utility value

Definition 2.1.

The utility of an item

![]() $i_j$

in a transaction

$i_j$

in a transaction

![]() $T_q$

is labelled as

$T_q$

is labelled as

![]() $U(i_j,T_q)$

and is defined as:

$U(i_j,T_q)$

and is defined as:

![]() $U(i_j,T_q)=$

$U(i_j,T_q)=$

![]() $q(i_j,T_q)$

$q(i_j,T_q)$

![]() $ \times EU(i_j) $

$ \times EU(i_j) $

For instance, the utility of an item A in a transaction

![]() $T_1$

is

$T_1$

is

![]() $U(A,T_1)=$

$U(A,T_1)=$

![]() $q(A,T_1)$

$q(A,T_1)$

![]() $ \times EU(A) $

$ \times EU(A) $

![]() $=2 \times 3=6$

. The utility of all items are displayed in the

$=2 \times 3=6$

. The utility of all items are displayed in the

![]() $4^{th}$

column of Table 3.

$4^{th}$

column of Table 3.

Table 3. Transaction utility in the original dataset

Definition 2.2

The utility of an itemset X in a transaction

![]() $T_q$

is denoted as

$T_q$

is denoted as

![]() $U(X,T_q)$

and is defined as:

$U(X,T_q)$

and is defined as:

![]() $U(X, T_q) = \sum_{i_j \in X \wedge X \subseteq T_q } U(i_j,T_q)$

$U(X, T_q) = \sum_{i_j \in X \wedge X \subseteq T_q } U(i_j,T_q)$

For instance, the utility of an itemset

![]() $\{A, C\}$

in a transaction

$\{A, C\}$

in a transaction

![]() $T_1$

is

$T_1$

is

![]() $U(\{A,C\},T_1)=$

$U(\{A,C\},T_1)=$

![]() $U(A,T_1)$

$U(A,T_1)$

![]() $+ U(C,T_1)$

$+ U(C,T_1)$

![]() $=6+12=18$

.

$=6+12=18$

.

Definition 2.3 The utility of an itemset X in a dataset D is indicated as U(X) and is defined as:

![]() $U(X) = \sum_{X \subseteq T_q \wedge T_q \in D } U(X,T_q)$

$U(X) = \sum_{X \subseteq T_q \wedge T_q \in D } U(X,T_q)$

For instance, the utility of an itemset

![]() $\{A, C\}$

in a dataset D is

$\{A, C\}$

in a dataset D is

![]() $U(\{A,C\}) =$

$U(\{A,C\}) =$

![]() $ U(\{A,C\},T_1) $

$ U(\{A,C\},T_1) $

![]() $ + U(\{A,C\},T_5)$

$ + U(\{A,C\},T_5)$

![]() $=18+21=39$

.

$=18+21=39$

.

Definition 2.4

The transaction utility of a transaction

![]() $T_q$

is represented as

$T_q$

is represented as

![]() $TU(T_q)$

and is defined as:

$TU(T_q)$

and is defined as:

![]() $TU(T_q) = \sum U(i_j,T_q)$

$TU(T_q) = \sum U(i_j,T_q)$

For instance, transaction utility of a transaction

![]() $T_1$

is

$T_1$

is

![]() $TU(T_1) =$

$TU(T_1) =$

![]() $ U(A,T_1) + $

$ U(A,T_1) + $

![]() $ U(C,T_1) +$

$ U(C,T_1) +$

![]() $ U(D,T_1) +$

$ U(D,T_1) +$

![]() $ U(F,T_1) $

$ U(F,T_1) $

![]() $ =6+12+15+5=38$

. The TU of all transactions are depicted in the

$ =6+12+15+5=38$

. The TU of all transactions are depicted in the

![]() $5\mathrm{th}$

column of Table 3.

$5\mathrm{th}$

column of Table 3.

Definition 2.5

The total utility of a dataset D is represented as

![]() $TU^{D}$

and is defined as:

$TU^{D}$

and is defined as:

![]() $TU^{D} = \sum_{T_q \in D} TU(T_q)$

$TU^{D} = \sum_{T_q \in D} TU(T_q)$

For instance, total utility of the original dataset D is

![]() $TU^{D} =$

$TU^{D} =$

![]() $ TU(T_1) + $

$ TU(T_1) + $

![]() $ TU(T_2) +$

$ TU(T_2) +$

![]() $ TU(T_3) +$

$ TU(T_3) +$

![]() $ TU(T_4) +$

$ TU(T_4) +$

![]() $ TU(T_5) +$

$ TU(T_5) +$

![]() $ TU(T_6) $

=

$ TU(T_6) $

=

![]() $38+49+51+26+34+45=243$

.

$38+49+51+26+34+45=243$

.

Definition 2.6

A minimum utility threshold

![]() $ \delta $

is determined by the percentage of the total utility of a dataset. The minimum utility value (

$ \delta $

is determined by the percentage of the total utility of a dataset. The minimum utility value (

![]() $min\_util$

) can be defined as:

$min\_util$

) can be defined as:

![]() $min\_util =$

$min\_util =$

![]() $ TU^{D} \times \delta$

$ TU^{D} \times \delta$

For instance,

![]() $\delta$

is 20%, then

$\delta$

is 20%, then

![]() $min\_util=$

$min\_util=$

![]() $ TU^{D} \times 0.2$

=

$ TU^{D} \times 0.2$

=

![]() $243 \times 0.2 $

$243 \times 0.2 $

![]() $=49$

.

$=49$

.

Definition 2.7

An itemset X, is designated as a high utility itemset in a dataset D if

![]() $U(X) \ge min\_util$

, otherwise itemset X is considered as a low utility itemset.

$U(X) \ge min\_util$

, otherwise itemset X is considered as a low utility itemset.

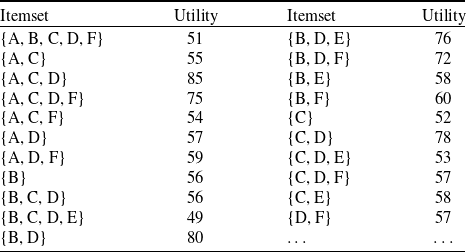

For instance,

![]() $\delta$

is 20% for the running example. The comprehensive list of high utility itemsets is displayed in Table 4.

$\delta$

is 20% for the running example. The comprehensive list of high utility itemsets is displayed in Table 4.

Table 4. High utility itemsets in original dataset for

![]() $ \delta $

= 20%

$ \delta $

= 20%

In high utility itemset mining, the utility value of an itemset does not follow the downward closure property (DCP). For instance, suppose

![]() $\delta$

is set at 20% that is 49, then itemset

$\delta$

is set at 20% that is 49, then itemset

![]() $\{A\}$

is a low utility itemset because

$\{A\}$

is a low utility itemset because

![]() $U(A)=27$

which is less than

$U(A)=27$

which is less than

![]() $min\_util$

. However, the superset of A, that is

$min\_util$

. However, the superset of A, that is

![]() $\{A, C\}$

is a high utility itemset because

$\{A, C\}$

is a high utility itemset because

![]() $U(\{A,C\})=55$

. In 2005, Liu et al. (Reference Liu and Liao2005) proposed transaction-weighted utilization (TWU) which can maintain DCP property.

$U(\{A,C\})=55$

. In 2005, Liu et al. (Reference Liu and Liao2005) proposed transaction-weighted utilization (TWU) which can maintain DCP property.

Definition 2.8 The TWU of an itemset X is represented as TWU(X) and is defined as:

![]() $TWU(X) = \sum_{X \subseteq T_q \wedge T_q \in D} TU(T_q)$

$TWU(X) = \sum_{X \subseteq T_q \wedge T_q \in D} TU(T_q)$

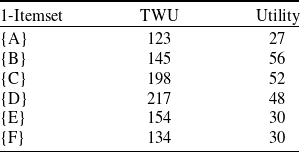

For instance, TWU of an itemset

![]() $\{A\}$

is

$\{A\}$

is

![]() $TWU(A)=$

$TWU(A)=$

![]() $ TU(T_1) + $

$ TU(T_1) + $

![]() $ TU(T_3) +$

$ TU(T_3) +$

![]() $ TU(T_5) $

$ TU(T_5) $

![]() $=38+51+34=123$

. Similarity, TWU of an itemset

$=38+51+34=123$

. Similarity, TWU of an itemset

![]() $\{A,C\}$

is

$\{A,C\}$

is

![]() $TWU(\{A,C\})=$

$TWU(\{A,C\})=$

![]() $TU(T_1)+$

$TU(T_1)+$

![]() $TU(T_5)=$

$TU(T_5)=$

![]() $38+34=72$

. TWU of all 1-itemsets are shown in

$38+34=72$

. TWU of all 1-itemsets are shown in

![]() $2\mathrm{nd}$

column of Table 5. Hence, in this example, TWU follows the DCP property.

$2\mathrm{nd}$

column of Table 5. Hence, in this example, TWU follows the DCP property.

Table 5. Transaction-weighted utilization and utility values of 1-itemsets in the original dataset

Property 1

(Transaction-weighted utilization based pruning) Let an itemset X, if TWU(X) is less than

![]() $min\_util$

, then the itemset X and all of its supersets are also low utility itemsets.

$min\_util$

, then the itemset X and all of its supersets are also low utility itemsets.

In accordance with the TWU based pruning, itemsets with a TWU value lower than the

![]() $min\_util$

, are removed from the search space and cannot be utilized to generate candidates. Later, utility-list based algorithms are presented (Liu & Qu, Reference Liu and Qu2012; Krishnamoorthy, Reference Krishnamoorthy2015). These algorithm introduced tighter pruning strategy than TWU-based strategy.

$min\_util$

, are removed from the search space and cannot be utilized to generate candidates. Later, utility-list based algorithms are presented (Liu & Qu, Reference Liu and Qu2012; Krishnamoorthy, Reference Krishnamoorthy2015). These algorithm introduced tighter pruning strategy than TWU-based strategy.

Definition 2.9

(Remaining utility of an itemset in a transaction). The remaining utility of itemset X in transaction

![]() $ T_q $

denoted by

$ T_q $

denoted by

![]() $ RU(X, T_q) $

is the sum of the utilities of all the items in

$ RU(X, T_q) $

is the sum of the utilities of all the items in

![]() $ T_q/X $

in

$ T_q/X $

in

![]() $ T_q $

where

$ T_q $

where

![]() $ RU(X, T_q) $

=

$ RU(X, T_q) $

=

![]() $ \sum_{i \in (T_q /X)} $

$ \sum_{i \in (T_q /X)} $

![]() $ U(i, T_q) $

(

Liu & Qu, Reference Liu and Qu2012

).

$ U(i, T_q) $

(

Liu & Qu, Reference Liu and Qu2012

).

Definition 2.10

(Utility-list structure). The utility-list structure contains three fields,

![]() $ T_{id}, iutil,$

and rutil. The

$ T_{id}, iutil,$

and rutil. The

![]() $ T_{id} $

indicates the transactions containing itemset X, iutil indicates the U(X), and the rutil indicates the remaining utility of itemset X is

$ T_{id} $

indicates the transactions containing itemset X, iutil indicates the U(X), and the rutil indicates the remaining utility of itemset X is

![]() $RU(X,T_q)$

(

Liu & Qu Reference Liu and Qu2012

).

$RU(X,T_q)$

(

Liu & Qu Reference Liu and Qu2012

).

Property 2

(Pruning search-space using remaining utility). For an itemset X, if the sum of

![]() $ U(X)+RU(X) $

is less than

$ U(X)+RU(X) $

is less than

![]() $min\_util $

, then itemset X and all its supersets are low utility itemsets. Otherwise, the itemset is eligible for HUIs. The details and proof of the remaining utility upper-bound (REU)-based upper-bound are given in Liu & Qu (Reference Liu and Qu2012).

$min\_util $

, then itemset X and all its supersets are low utility itemsets. Otherwise, the itemset is eligible for HUIs. The details and proof of the remaining utility upper-bound (REU)-based upper-bound are given in Liu & Qu (Reference Liu and Qu2012).

In the real-world, the transactional datasets are updated frequently and new transactions are added from time to time. Incremental datasets involve the integration of new transactions into the original dataset, resulting in more valuable real-world applications compared to conventional transactional datasets. Traditional algorithms require a substantial amount of time to find HUIs from incremental datasets, primarily due to the need to re-scan the whole updated dataset. To address this challenge, Yeh et al. (Reference Yeh, Chang and Wang2008) designed incremental dataset-based algorithms that eliminate the need for re-scanning the whole updated dataset.

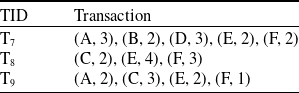

The newly added transactions are displayed in Table 6. It comprises three transactions and includes six items from A to F. The utility values and transaction utility values in the updated dataset are displayed in Table 7. TWU and utility values of 1-itemsets of the whole dataset including newly added transactions, are displayed in Table 8. The final HUIs for the whole dataset are shown in Table 9. The minimum utility threshold

![]() $\delta$

is set to be 20% and the recalculated

$\delta$

is set to be 20% and the recalculated

![]() $min\_util$

is 70.

$min\_util$

is 70.

Table 6. New transactions

Table 7. Transaction utility of the newly added transactions

Table 8. TWU and utility values of 1-itemsets in whole dataset

Table 9. HUIs of the whole dataset for

![]() $\delta $

= 20%

$\delta $

= 20%

3. Incremental high utility itemsets mining approaches

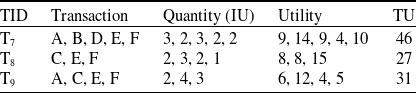

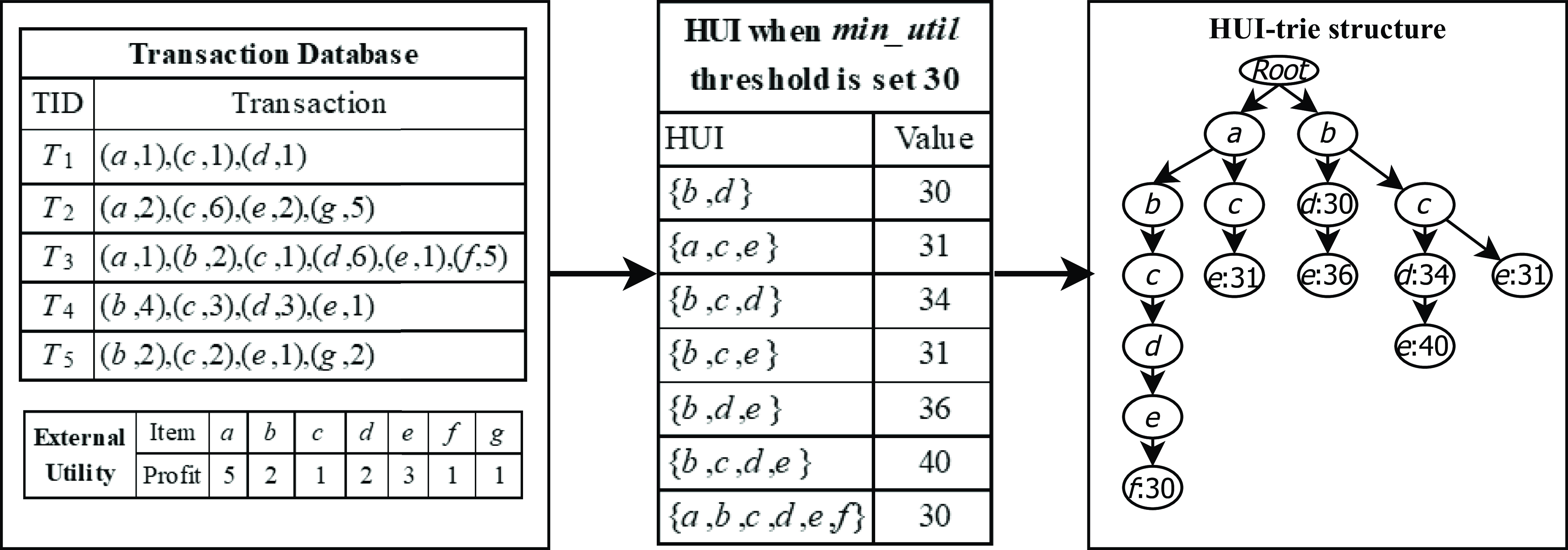

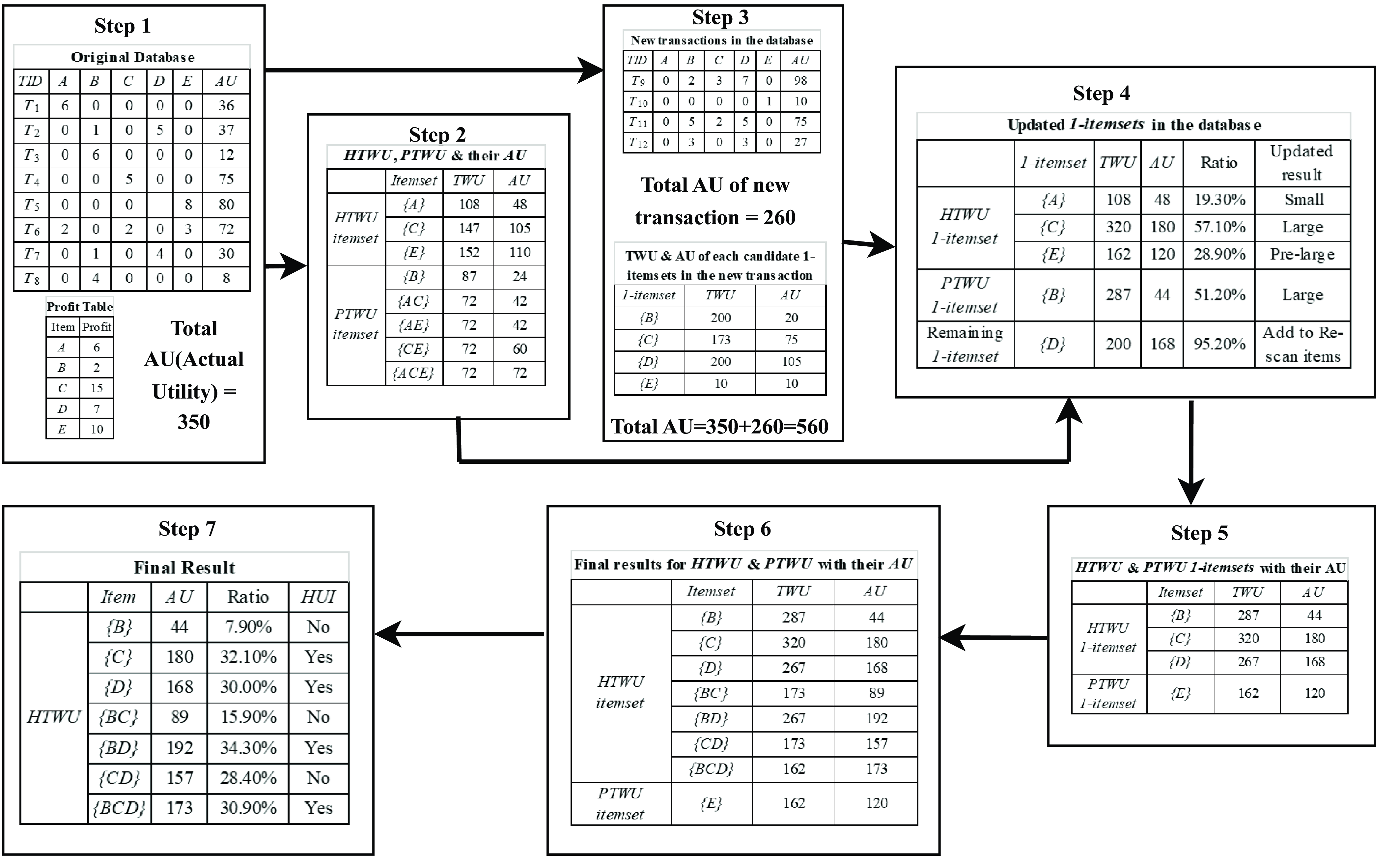

In literature, numerous iHUIM approaches are proposed to address the challenges posed by dynamic datasets, particularly when new transactions are added to the original dataset. This paper focuses on discussing the iHUIM algorithms highlighted within the taxonomy. The iHUIM algorithms are systematically classified into two-phase-based, pattern-growth-based, projection-based, utility-list-based, and pre-large-based categories. This comprehensive categorization is depicted in Figure 1.

Figure 1. A taxonomy of incremental high utility itemsets mining approaches

3.1 Two-phase-based approaches

Traditional FIM algorithms (Agrawal et al., Reference Agrawal1993; Zaki, Reference Zaki2000) are designed to extract frequent itemsets using an user-defined support threshold. However, these algorithms are based on the frequency of occurrences within the dataset, which may not be adequate for identifying highly profitable itemsets. To resolve these issues, the concept of utility mining (Liu et al., Reference Liu and Liao2005; Yao & Hamilton, Reference Yao and Hamilton2006) is introduced. Utility mining can be viewed as an expansion of FIM, taking into account both sold quantity and unit profit of each itemset. These algorithms generate profitable itemsets instead of simple frequent itemsets. However, most of the traditional algorithms work on static datasets. In real-time systems, transactions are frequently added, removed, or changed in the dynamic environments. In this subsection, we discuss two-phase HUIM methods that mine the HUIs from the incremental datasets.

IUM and FIUM: Temporal mining is a sub-field of data mining focused on extracting interesting patterns from large temporal datasets (Li et al., Reference Li, Yeh and Chang2005b), while utility mining (Yao & Hamilton, Reference Yao and Hamilton2006) is used to find HUIs from transaction datasets. Both of these concepts can be integrated to produce significant results. To achieve this, Yeh et al. (Reference Yeh, Chang and Wang2008) conducted a study on incremental utility mining, focusing on the incremental extraction of high temporal utility itemsets (HTUIs) within a given time-period whether the TWU values of these time-periods from transactional datasets. Two effective approaches, namely incremental utility mining (IUM) and fast incremental utility mining (FIUM), are introduced to identify all the HTUIs. IUM is based on Two-phase (Liu et al., Reference Liu and Liao2005) and fast share measure (FSM) (Li et al., Reference Li, Yeh and Chang2005b) approaches, while FIUM is based on the ShFSM (Share-counted FSM) approach (Li et al., Reference Li, Yeh, Chang, Zhang, Tanaka, Yu, Wang and Li2005c). IUM initially, extracts 1-itemsets from partition 1 of the original dataset and checks whether their TWU values are less than the

![]() $min\_util$

threshold or not. If TWU of 1-itemsets

$min\_util$

threshold or not. If TWU of 1-itemsets

![]() $\ge$

$\ge$

![]() $min\_util$

, then these itemsets are saved in high TWU itemsets for partition 1. On the other hand, if utility of 1-itemsets

$min\_util$

, then these itemsets are saved in high TWU itemsets for partition 1. On the other hand, if utility of 1-itemsets

![]() $\ge$

$\ge$

![]() $min\_util$

, then these itemsets saved in HTUIs for partition 1. However, TWU of an itemset is always no less than its utility. Also, high TWU itemsets in partition 1 is a subset of high temporal utility itemsets in partition 1. The 1-itemsets in high TWU itemsets in partition 1 is used to generate level-2 candidates. This process continues until no candidate is generated in partition 1. The same procedure is applied to other partitions. Finally, it gives the complete set of HTUIs for the dataset.

$min\_util$

, then these itemsets saved in HTUIs for partition 1. However, TWU of an itemset is always no less than its utility. Also, high TWU itemsets in partition 1 is a subset of high temporal utility itemsets in partition 1. The 1-itemsets in high TWU itemsets in partition 1 is used to generate level-2 candidates. This process continues until no candidate is generated in partition 1. The same procedure is applied to other partitions. Finally, it gives the complete set of HTUIs for the dataset.

FIUM follows the same procedure as the IUM algorithm, with the primary difference is in the number of generated candidates. Both these algorithms use the TWU of the itemsets to reduce the number of candidates. The empirical findings proved that FIUM outperforms IUM concerning runtime on various

![]() $min\_util$

thresholds, both for real and synthetic datasets because FIUM takes less time to join the candidate itemsets. Both algorithms effectively search all HTUIs when new transactions are added into the original dataset. They not only search all HTUIs for a specific time-period but also search HUIs across the whole dataset. However, incremental utility mining finds it difficult when searching for HTUIs that have a large portion of utility in a specific time period. Furthermore, it does not specify information on the frequency of occurrence for itemsets within that particular period.

$min\_util$

thresholds, both for real and synthetic datasets because FIUM takes less time to join the candidate itemsets. Both algorithms effectively search all HTUIs when new transactions are added into the original dataset. They not only search all HTUIs for a specific time-period but also search HUIs across the whole dataset. However, incremental utility mining finds it difficult when searching for HTUIs that have a large portion of utility in a specific time period. Furthermore, it does not specify information on the frequency of occurrence for itemsets within that particular period.

ITPAU: The traditional HUIM algorithms (Liu et al., Reference Liu and Liao2005; Yao & Hamilton, Reference Yao and Hamilton2006) perform well only for the static datasets. Nevertheless, in real-time scenarios, the dataset is changing continually with the newly inserted transactions. Consequently, some HUIs may transition to low-utility itemsets and vice-versa, in the updated datasets. In address these challenges, Hong et al. (Reference Hong, Lee and Wang2009a) proposed an approach, called incremental two-phase average-utility mining (ITPAU), based on the Two-phase approach (Liu et al., Reference Liu and Liao2005). It’s purpose is to incrementally maintain the high average utility itemsets (HAUIs) in the incremental dataset. Based on the FUP notion (Cheung et al., Reference Cheung, Han, Ng and Wong1996), ITPAU joins the earlier extracted knowledge from the original dataset and the new information from recently added transactions to enhance mining performance. It employs a strategy, based on the Apriori approach (Agrawal et al., Reference Agrawal1993), to systematically identify HAUIs in a level-wise way. The DCP property is utilized to decrease the search space by removing low-utility itemsets earlier, thereby reducing the need for candidate generation at each level. To manage dataset changes resulting from newly added transactions, the concept of FUP (Cheung et al., Reference Cheung, Han, Ng and Wong1996) is employed to reduce the time required for reprocessing the whole updated dataset. ITPAU relies on four cases for the average-utility itemsets within the FUP framework. It performs two phases of incremental mining of these average-utility itemsets. During the first phase, average-utility upper-bound (AUUB) is employed to provide overestimated values for the itemsets. During the second phase, it calculates real average-utility values to extract HUBAUI (High Upper-Bound Average-Utility Itemsets).

The algorithm works as follows: first, it recomputes the minimum average-utility thresholds of new transactions and updated database, respectively. Then, it computes the utility value for each item in each new transaction. Afterwards, it identifies the maximal item-utility value in each new transaction. It generates k-itemsets (where k represents the counts of items in the currently processed itemsets.) and calculates their AUUB of k-itemsets from the new transactions. If AUUB of each k-itemset is not less than

![]() $min\_util$

of the new transaction, then put it in the set of HUBAUI of k-itemsets for the new transactions. If HUBAUI of k-itemsets does not appear in the new transaction, then update the AUUB. If HUBAUI of k-itemsets does not appear in the original dataset, then re-scan the original dataset to find AUUB of k-itemsets and update the original dataset. Repeat this procedure until no candidates are produced. In this way, the final HAUIs for the updated dataset are found. Experiments proved that ITPAU performs better than the state-of-the-art algorithm two-phase average-utility mining (TPAU) (Reference Hong, Lee and WangHong et al., 2009b

) in terms of runtime for updated datasets. ITPAU optimizes dataset scanning by computing the upper-bound of HUBAUI relative to the

$min\_util$

of the new transaction, then put it in the set of HUBAUI of k-itemsets for the new transactions. If HUBAUI of k-itemsets does not appear in the new transaction, then update the AUUB. If HUBAUI of k-itemsets does not appear in the original dataset, then re-scan the original dataset to find AUUB of k-itemsets and update the original dataset. Repeat this procedure until no candidates are produced. In this way, the final HAUIs for the updated dataset are found. Experiments proved that ITPAU performs better than the state-of-the-art algorithm two-phase average-utility mining (TPAU) (Reference Hong, Lee and WangHong et al., 2009b

) in terms of runtime for updated datasets. ITPAU optimizes dataset scanning by computing the upper-bound of HUBAUI relative to the

![]() $ min\_util$

threshold.

$ min\_util$

threshold.

FUP-HU: The traditional algorithms (Liu et al., Reference Liu and Liao2005; Yao & Hamilton, Reference Yao and Hamilton2006) consume a significant amount of computational time. To resolve this issue, the notion of FUP (Cheung et al. Reference Cheung, Han, Ng and Wong1996) is designed to update the obtained itemsets in the dynamic dataset. However, the process of re-scanning the original dataset consumes a significant amount of computational time. To further increase the mining performance, Lin et al. (Reference Lin, Lan and Hong2012) developed an incremental mining approach for record insertions, named FUP-HU. This method is based on the Two-phase (Liu et al., Reference Liu and Liao2005) and FUP concept (Cheung et al., Reference Cheung, Han, Ng and Wong1996) to effectively mine HUIs from the updated dataset. The method initially partitions the itemsets into four parts if they belong to the high TWU values of the itemsets in the original dataset and newly inserted transactions. To achieve this, the proposed method uses two-phase utility mining (Liu et al., Reference Liu and Liao2005) to search the TWU of itemsets and the corresponding actual utility values from the original datasets before incorporating additional transactions. First, it calculates the utility value for each item in every new transaction in the dataset. Then, it generates the k-itemsets and calculates their TWU from the new transactions. If TWU of each k-itemsets for the new transaction is no less than the

![]() $min\_util$

, then add it in the set of high TWU k-itemsets for the new transaction. Then, it generates the

$min\_util$

, then add it in the set of high TWU k-itemsets for the new transaction. Then, it generates the

![]() $(k+1)$

-itemsets from the set of high TWU k-itemsets in the updated datasets. Afterward, it calculates the actual utility value of itemsets for the new transactions. Finally, the HUIs for the whole updated database are generated. The experiments proved that the proposed approach performs better than the state-of-the-art approach Two-phase (Optimal) (Liu et al., Reference Liu and Liao2005) for various

$(k+1)$

-itemsets from the set of high TWU k-itemsets in the updated datasets. Afterward, it calculates the actual utility value of itemsets for the new transactions. Finally, the HUIs for the whole updated database are generated. The experiments proved that the proposed approach performs better than the state-of-the-art approach Two-phase (Optimal) (Liu et al., Reference Liu and Liao2005) for various

![]() $ min\_util$

thresholds in the updated dataset. This is because Two-phase (Optimal) performs the re-scanning of the updated dataset to discover HUIs in batch mode, resulting in a significant time overhead. In contrast, FUP-HU only re-scans the updated dataset for the newly inserted transactions, which significantly reduces the running time.

$ min\_util$

thresholds in the updated dataset. This is because Two-phase (Optimal) performs the re-scanning of the updated dataset to discover HUIs in batch mode, resulting in a significant time overhead. In contrast, FUP-HU only re-scans the updated dataset for the newly inserted transactions, which significantly reduces the running time.

Discussion

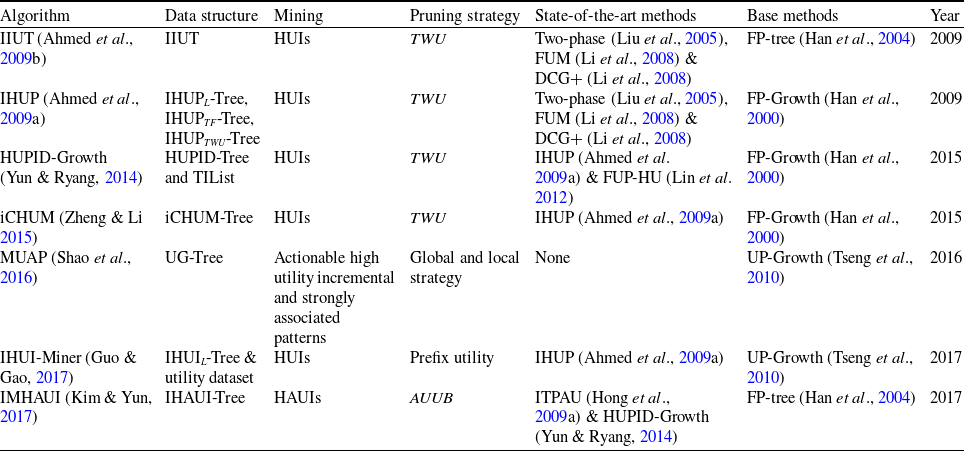

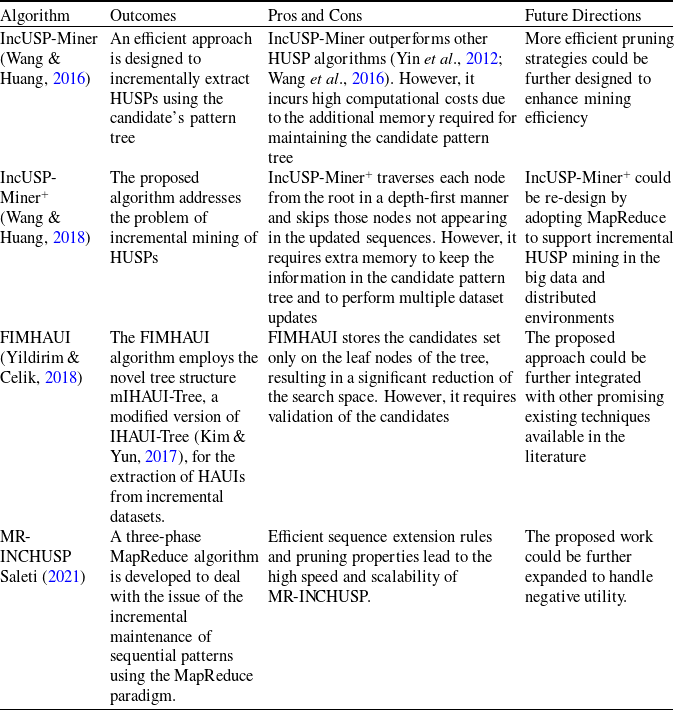

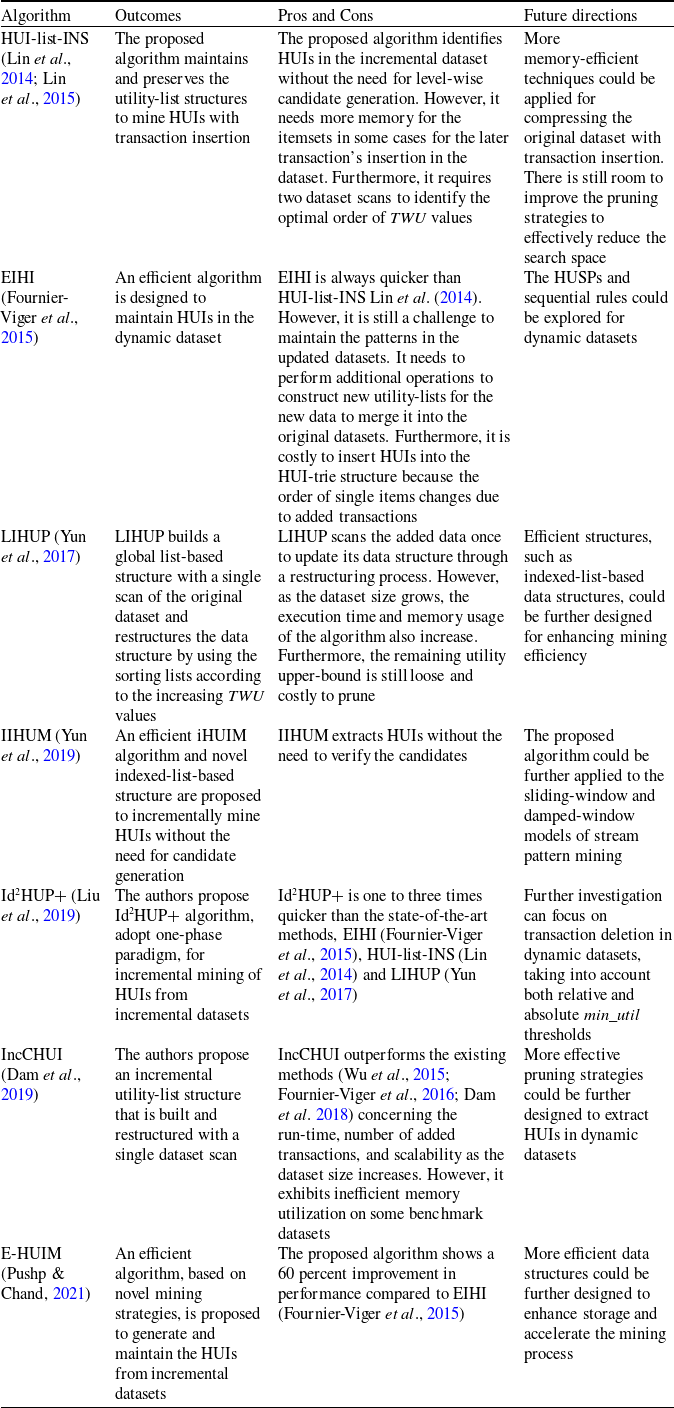

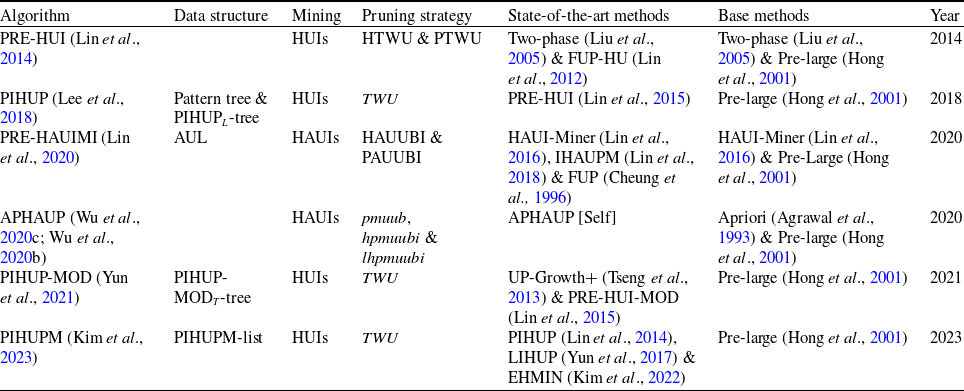

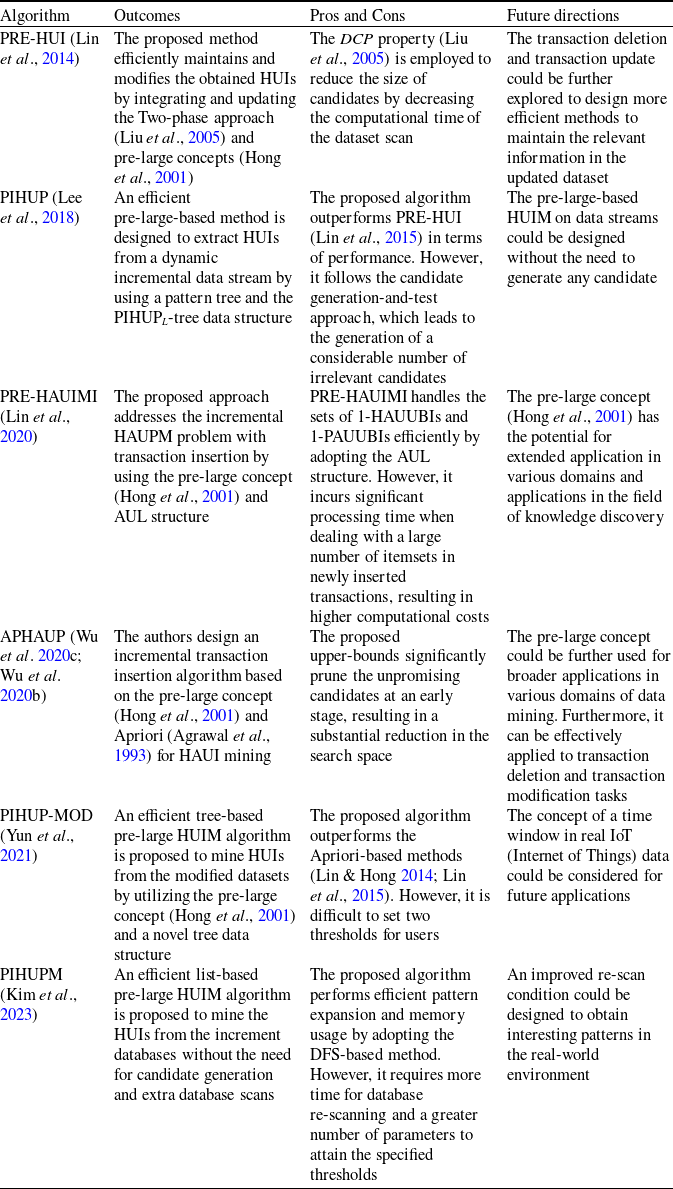

We have discussed two-phase-based HUIM algorithms (Liu et al. Reference Liu and Liao2005; Yao & Hamilton Reference Yao and Hamilton2006) for incremental datasets. Although the iHUIM algorithms (Yeh et al., Reference Yeh, Chang and Wang2008; Hong et al., Reference Hong, Lee and Wang2009a; Lin et al., Reference Lin, Lan and Hong2012) effectively address the limitations commonly associated with traditional HUIM algorithms. Nonetheless, they suffer from the same drawbacks as Apriori (Agrawal et al. Reference Agrawal1993) that produces more candidates and perform multiple dataset scans. The utilization of the FUP concept (Cheung et al., Reference Cheung, Han, Ng and Wong1996) reduces the number of re-scanning the original dataset, resulting in a reduced computational costs. In some cases, there can still be excessive re-scanning of original dataset, leading to significant computational overhead. Table 10 provides a comprehensive summary of two-phase iHUIM algorithms, encompassing key categories such as data structure, mining, pruning strategies, the state-of-the-art methods, and base methods. Table 11 provides an in-depth exploration of the theoretical aspects of two-phase iHUIM algorithms, including key categories such as outcomes, advantages and disadvantages, and future remarks.

Table 10. Characteristics and theoretical aspects of the Two-phase-based approaches

Table 11. Pros and cons of the Two-phase-based approaches

3.2 Pattern-growth-based approaches

Two-phase based iHUIM algorithms (Yeh et al., Reference Yeh, Chang and Wang2008; Hong et al., Reference Hong, Lee and Wang2009a; Lin et al., Reference Lin, Lan and Hong2012) extract the utility information in a level-wise fashion, thus suffering from excessive candidate’s generation and multiple dataset scans. To deal with these issues, pattern-growth-based iHUIM algorithms (Ahmed et al., Reference Ahmed, Tanbeer, Jeong and Lee2009b; Ahmed et al., Reference Ahmed, Tanbeer, Jeong and Lee2009a; Yun & Ryang, Reference Yun and Ryang2014; Zheng & Li, Reference Zheng and Li2015; Shao et al., Reference Shao, Meng and Cao2016; Guo & Gao, Reference Guo and Gao2017; Kim & Yun, Reference Kim and Yun2017) are introduced to efficiently extract the utility information in dynamic environments. These algorithms reduce the number of less promising candidates by mitigating the overestimation of their utilities (Liu et al., Reference Liu and Liao2005), thereby improving the mining performance. Efficient data structures are employed to handle newly added information without the need to process the whole dataset. This significantly minimizes the number of dataset scans as compared to level-wise incremental approaches (Yeh et al. Reference Yeh, Chang and Wang2008; Hong et al. Reference Hong, Lee and Wang2009a; Lin et al. Reference Lin, Lan and Hong2012). Furthermore, these methods significantly reduce redundant information, resulting in reduced storage space requirements and less execution times for the mining process. We here give the in-depth discussion of the pattern-growth-based iHUIM algorithms.

IIUT: Ahmed et al. (Reference Ahmed, Tanbeer, Jeong and Lee2009b) proposed an effective tree structure named IIUT (Incremental and Interactive Utility Tree), that employed a pattern-growth method to discover high utility itemsets without requiring the level-wise candidate generation. Incremental data can then be captured without re-structuring the process. It uses the ‘

![]() $build \ once, \ mine \ many$

’ characteristics, rendering it well-suited for interactive mining. The IIUT structure is designed according to the order of item appearances and performs two scans: (1) During the first scan, IIUT arranges items within a transaction based on their order of appearance and adds them to the leaf node of the tree. The header table is maintained in the form of an FP-tree (Han et al., Reference Han, Pei, Yin and Mao2004). (2) During the second scan, it maintains the TWU value in the header table and intermediate nodes of the tree. The adjacent links are preserved to efficiently traverse the tree. This, in turn, leads to a reduction in the time required for the mining process. The proposed tree structure is extremely efficient in the interactive and incremental mining of HUIs.

$build \ once, \ mine \ many$

’ characteristics, rendering it well-suited for interactive mining. The IIUT structure is designed according to the order of item appearances and performs two scans: (1) During the first scan, IIUT arranges items within a transaction based on their order of appearance and adds them to the leaf node of the tree. The header table is maintained in the form of an FP-tree (Han et al., Reference Han, Pei, Yin and Mao2004). (2) During the second scan, it maintains the TWU value in the header table and intermediate nodes of the tree. The adjacent links are preserved to efficiently traverse the tree. This, in turn, leads to a reduction in the time required for the mining process. The proposed tree structure is extremely efficient in the interactive and incremental mining of HUIs.

Figure 2 shows the incremental maintenance process of the proposed algorithm IIUT. In Step 1, the transaction database with their profit value is taken as an input. It consists six transactions

![]() $(T_1,\,T_2,\,T_3,\,T_4,\,T_5,\,T_6)$

and five items (a, b, c, d, e). In Step 2, the transactions are inserted into the H-table (Header-table) according to their appearance order, one at a time. The tree is constructed according to the FP-tree (Han et al., Reference Han, Pei, Yin and Mao2004). In Step 3, two groups of transactions Db1 and Db2 are added to the original database. The Db1 includes transactions

$(T_1,\,T_2,\,T_3,\,T_4,\,T_5,\,T_6)$

and five items (a, b, c, d, e). In Step 2, the transactions are inserted into the H-table (Header-table) according to their appearance order, one at a time. The tree is constructed according to the FP-tree (Han et al., Reference Han, Pei, Yin and Mao2004). In Step 3, two groups of transactions Db1 and Db2 are added to the original database. The Db1 includes transactions

![]() $(T_7,T_8)$

, while Db2 includes

$(T_7,T_8)$

, while Db2 includes

![]() $(T_9,T_{10})$

. Then, the tree is constructed from the updated database. In the same Step, the database is again updated by modifying the transactions

$(T_9,T_{10})$

. Then, the tree is constructed from the updated database. In the same Step, the database is again updated by modifying the transactions

![]() $(T_2,T_3)$

and deleting

$(T_2,T_3)$

and deleting

![]() $(T_5,T_9)$

. Finally, the tree is built based on its H-table from the updated database. Experiments proved that IIUT outperforms the state-of-the-art approaches, including Two-phase (Liu et al., Reference Liu and Liao2005), FUM (Fast Utility Mining) (Li et al., Reference Li, Yeh and Chang2008) and DCG

$(T_5,T_9)$

. Finally, the tree is built based on its H-table from the updated database. Experiments proved that IIUT outperforms the state-of-the-art approaches, including Two-phase (Liu et al., Reference Liu and Liao2005), FUM (Fast Utility Mining) (Li et al., Reference Li, Yeh and Chang2008) and DCG

![]() $+$

(Li et al., Reference Li, Yeh and Chang2008), an extended version of DCG (Direct Candidate Generation) algorithm (Li et al., Reference Li, Yeh and Chang2005a; Li et al., Reference Li, Yeh, Chang, Zhang, Tanaka, Yu, Wang and Li2005c), concerning runtime and number of promising candidates under various

$+$

(Li et al., Reference Li, Yeh and Chang2008), an extended version of DCG (Direct Candidate Generation) algorithm (Li et al., Reference Li, Yeh and Chang2005a; Li et al., Reference Li, Yeh, Chang, Zhang, Tanaka, Yu, Wang and Li2005c), concerning runtime and number of promising candidates under various

![]() $min\_util$

thresholds from the dense and sparse datasets.

$min\_util$

thresholds from the dense and sparse datasets.

Figure 2. Incremental maintenance process of IIUT

IHUP: The traditional HUIM algorithms (Yen et al., Reference Yen and Lee2005; Hong et al., Reference Hong, Lin and Wu2008) cannot deal with the incremental and interactive mining that includes addition, removal, or change of the transactions in the dynamic environment. Furthermore, these algorithms do not follow the ‘

![]() $build \ once, \ mine \ many \ $

’ characteristics. To address these challenges, Ahmed et al. (Reference Ahmed, Tanbeer, Jeong and Lee2009a) proposed three novel tree structures, namely Incremental HUI lexicographic Tree (IHUP

$build \ once, \ mine \ many \ $

’ characteristics. To address these challenges, Ahmed et al. (Reference Ahmed, Tanbeer, Jeong and Lee2009a) proposed three novel tree structures, namely Incremental HUI lexicographic Tree (IHUP

![]() $_L$

-Tree), IHUP transaction frequency tree (IHUP

$_L$

-Tree), IHUP transaction frequency tree (IHUP

![]() $_{TF}$

-Tree) and IHUP transaction-weighted utilization Tree (IHUP

$_{TF}$

-Tree) and IHUP transaction-weighted utilization Tree (IHUP

![]() $_{TWU}$

-Tree). These structures are specifically designed to enable incremental and interactive HUIM (High Utility Itemset Mining) while follow ‘

$_{TWU}$

-Tree). These structures are specifically designed to enable incremental and interactive HUIM (High Utility Itemset Mining) while follow ‘

![]() $build \ once, \ mine \ many \ $

’ property. This approach results in efficient HUI mining from incremental transaction datasets. These tree structures are based on the FP-Growth method (Han et al., Reference Han, Pei and Yin2000) and hold the DCP property (Agrawal et al., Reference Agrawal1993). They incorporate TWU of a pattern, which effectively avoid candidate generation in a level-wise manner. The first tree structure IHUP

$build \ once, \ mine \ many \ $

’ property. This approach results in efficient HUI mining from incremental transaction datasets. These tree structures are based on the FP-Growth method (Han et al., Reference Han, Pei and Yin2000) and hold the DCP property (Agrawal et al., Reference Agrawal1993). They incorporate TWU of a pattern, which effectively avoid candidate generation in a level-wise manner. The first tree structure IHUP

![]() $_L$

-Tree rely on the lexicographical order of items. It efficiently manages incremental data without the need of restructure functions. The second tree structure IHUP

$_L$

-Tree rely on the lexicographical order of items. It efficiently manages incremental data without the need of restructure functions. The second tree structure IHUP

![]() $_{TF}$

-Tree optimizes space by arranging items based on descending transaction frequency. The third tree structure IHUP

$_{TF}$

-Tree optimizes space by arranging items based on descending transaction frequency. The third tree structure IHUP

![]() $_{TWU}$

-Tree, is constructed to reduce the time required for the mining process, based on their TWU values in descending order.

$_{TWU}$

-Tree, is constructed to reduce the time required for the mining process, based on their TWU values in descending order.

In the first scan, the IHUP

![]() $_L$

-Tree is constructed, arranging items in lexicographic order. The order of items of IHUP

$_L$

-Tree is constructed, arranging items in lexicographic order. The order of items of IHUP

![]() $_L$

-Tree is not affected by changing the frequency of the items by performing the addition, deletion, and modification. Subsequently, the IHUP

$_L$

-Tree is not affected by changing the frequency of the items by performing the addition, deletion, and modification. Subsequently, the IHUP

![]() $_{TF}$

-Tree is derived from the IHUP

$_{TF}$

-Tree is derived from the IHUP

![]() $_L$

-Tree, utilizing the path-adjusting technique (Koh & Shieh, Reference Koh, Shieh, Lee, Li, Whang and Lee2004) based on the bubble-sort method. The nodes in the IHUP

$_L$

-Tree, utilizing the path-adjusting technique (Koh & Shieh, Reference Koh, Shieh, Lee, Li, Whang and Lee2004) based on the bubble-sort method. The nodes in the IHUP

![]() $_{TF}$

-Tree are organized in the descending order based on their transaction frequency. The advantage is that the items occur in numerous transaction can be placed in the upper section of the tree, resulting in the higher prefix-sharing nodes that, in turn, significantly reduce the size of the tree. These two tree structures face a significant drawback: they may inadvertently convert low-utility itemsets into HUIs, and vice-versa, resulting in a significant delay in the mining process. To address this issue, IHUP

$_{TF}$

-Tree are organized in the descending order based on their transaction frequency. The advantage is that the items occur in numerous transaction can be placed in the upper section of the tree, resulting in the higher prefix-sharing nodes that, in turn, significantly reduce the size of the tree. These two tree structures face a significant drawback: they may inadvertently convert low-utility itemsets into HUIs, and vice-versa, resulting in a significant delay in the mining process. To address this issue, IHUP

![]() $_{TWU}$

-Tree is designed in a same way as IHUP

$_{TWU}$

-Tree is designed in a same way as IHUP

![]() $_{TF}$

-Tree is constructed. IHUP

$_{TF}$

-Tree is constructed. IHUP

![]() $_{TF}$

-Tree keeps all promising candidates before the non-promising candidates. This tree structure is constructed from the IHUP

$_{TF}$

-Tree keeps all promising candidates before the non-promising candidates. This tree structure is constructed from the IHUP

![]() $_L$

-Tree using the path adjusting technique (Koh & Shieh, Reference Koh, Shieh, Lee, Li, Whang and Lee2004) based on the bubble-sort method. The number of nodes involved in the mining process in IHUP

$_L$

-Tree using the path adjusting technique (Koh & Shieh, Reference Koh, Shieh, Lee, Li, Whang and Lee2004) based on the bubble-sort method. The number of nodes involved in the mining process in IHUP

![]() $_{TWU}$

-Tree does not exceed that of the other two tree structures, leading to the significantly reduction in the mining time. During the second dataset scan, HUIs are mined. The proposed algorithms require just two dataset scans, regardless of the maximum length of candidate patterns. The experiments proved that the designed three structures perform better than Two-phase (Liu et al. Reference Liu and Liao2005), FUM (Li et al., Reference Li, Yeh and Chang2008), and DCG+ (Li et al., Reference Li, Yeh and Chang2008), concerning execution time, number of promising candidates, and scalability under different

$_{TWU}$

-Tree does not exceed that of the other two tree structures, leading to the significantly reduction in the mining time. During the second dataset scan, HUIs are mined. The proposed algorithms require just two dataset scans, regardless of the maximum length of candidate patterns. The experiments proved that the designed three structures perform better than Two-phase (Liu et al. Reference Liu and Liao2005), FUM (Li et al., Reference Li, Yeh and Chang2008), and DCG+ (Li et al., Reference Li, Yeh and Chang2008), concerning execution time, number of promising candidates, and scalability under different

![]() $ min\_util$

thresholds. However, IHUP

$ min\_util$

thresholds. However, IHUP

![]() $_L$

-Tree consumes a considerable amount of space. Both IHUP

$_L$

-Tree consumes a considerable amount of space. Both IHUP

![]() $_L$

-Tree and IHUP

$_L$

-Tree and IHUP

![]() $_{TF}$

-Tree have low-TWU itemsets appearing before high TWU itemsets, resulting in increased time delays. IHUP

$_{TF}$

-Tree have low-TWU itemsets appearing before high TWU itemsets, resulting in increased time delays. IHUP

![]() $_{TWU}$

-Tree overcomes the limitations of IHUP

$_{TWU}$

-Tree overcomes the limitations of IHUP

![]() $_L$

-Tree and IHUP

$_L$

-Tree and IHUP

![]() $_{TF}$

-Tree.

$_{TF}$

-Tree.

HUPID-Growth: Several HUIM algorithms (Ahmed et al., Reference Ahmed, Tanbeer, Jeong and Lee2009a; Lin et al., Reference Lin, Lan and Hong2012) are proposed for incremental datasets to better emulate real-world characteristics. But, they produce excessive number of candidates and requiring multiple dataset scans, leading to a reduction in mining efficiency. In addition, these algorithms require excessive amount of runtime to extract candidates by their overestimated methods (Liu et al., Reference Liu and Liao2005). This situation becomes increasingly challenging as datasets gradually expand in a dynamic environment. Yun & Ryang (Reference Yun and Ryang2014) developed an efficient algorithm called HUPID-Growth (High Utility Patterns in Incremental Databases Growth) to extract HUIs from dynamic datasets. It focuses on mitigating overestimated utilities in the mining process. An efficient tree structure, called HUPID-Tree (High Utility Patterns in Incremental Datasets Tree), is proposed to effectively maintain details of the HUIs in the updated dataset, requiring just a single dataset scan. A header table is maintained in the HUPID-Tree to facilitate the tree traversal. Each record in the header table includes item name, TWU value and a link. These entries are sorted according to TWU descending order. The sorted entries are used effectively to traverse the tree. Furthermore, a data structure named TIList (Tail-node Information List) is employed in local trees by using the restructure method based on TWU (Ahmed et al., Reference Ahmed, Tanbeer, Jeong and Lee2009a; Lin et al., Reference Lin, Lan and Hong2012). A TIList preserves information about the tail nodes in a global HUPID-Tree. It is used for restructuring the HUPID-Tree, resulting in the reduced overestimate utilities that, in turn, achieves efficient incremental HUIs mining.

The proposed algorithm is consists of two phases: (1) The first phase includes two steps. In the initial step, a global HUPID-Tree is built with a single scan. Subsequently, the data structure TIList is created using the restructure method, effectively reducing overestimated utilities in decreasing order of the TWU value. In the second step, candidates are generated, which significantly minimize the search space, enhances mining efficiency. (2) In the second phase, actual HUIs are extracted from the generated candidates. When the current database is updated by appending the new information to the database. Then, the proposed algorithm updates the existing tree with only added information without reconstructing the tree from the scratch. The proposed algorithm sorts the items in each added transaction as per the TWU decreasing order. Afterwards, it adds the transaction into the tree by computing the utility value to the tree. The proposed algorithm decreases overestimate utilities of nodes both in global and local trees, leading to the decrease in the number of candidates and search space. The proposed algorithm outperforms IHUP (Ahmed et al., Reference Ahmed, Tanbeer, Jeong and Lee2009a) and FUP-HU (Lin et al., Reference Lin, Lan and Hong2012) concerning execution time, memory usage, and the number of processed candidates, as demonstrated on retail and medical datasets. HUPID-Growth specifically performs well where datasets consist of a large number of lengthy transactions or when a low value for the

![]() $ min\_util $

threshold is employed.

$ min\_util $

threshold is employed.

iCHUM: The IHUP algorithm (Ahmed et al., Reference Ahmed, Tanbeer, Jeong and Lee2009a) failed to perform efficiently on incremental datasets containing a large number of itemsets. It retains redundant information, resulting in excessive processing times for unpromising candidates. Furthermore, it does not maintain the tree structure following the DCP (Liu et al., Reference Liu and Liao2005). To deal with these problems, Zheng & Li (Reference Zheng and Li2015) designed an efficient approach, named iCHUM (incremental Compressed High Utility Mining) to mine HUIs from incremental datasets. It employs high TWU values of items to construct and incrementally update the iCHUM-Tree structure, leading to a significant reduction of run-time. The iCHUM-Tree includes tree structure and header table. The iCHUM-Tree is constructed from the original database and the header table maintains the promising items whose TWU value is no less than

![]() $min\_util$

. The items are organized as per TWU decreasing order. Then, each reodered transaction is added in iCHUM-Tree.

$min\_util$

. The items are organized as per TWU decreasing order. Then, each reodered transaction is added in iCHUM-Tree.

The proposed algorithm updates the iCHUM-Tree when a new dataset is added to the original one. A recall item is one whose TWU value is no less than

![]() $min\_util$

in both original and updated databases. The nodes are reorder in the iCHUM-Tree and its header table by using bubble sort method (Koh & Shieh, Reference Koh, Shieh, Lee, Li, Whang and Lee2004). The iCHUM-Tree is updated when all the items and the corresponding nodes are sorted in the iCHUM-Tree. The proposed algorithm includes four steps. Firstly, the algorithm constructs the iCHUM-Tree from the original dataset and collects the high TWU values of itemsets within that dataset. Secondly, when a new dataset is appended to the original dataset, the iCHUM-Tree undergoes an update process. Thirdly, the updated iCHUM-Tree becomes the input for the mining process, generates high TWU itemsets. Finally, it identifies actual HUIs from the candidates in the whole dataset. Experiments proved that iCHUM performs better than IHUP (Ahmed et al., Reference Ahmed, Tanbeer, Jeong and Lee2009a) concerning execution time on benchmark datasets, specifically for long transactions. However, the performance of iCHUM degrades as the number of recollected items increases.

$min\_util$

in both original and updated databases. The nodes are reorder in the iCHUM-Tree and its header table by using bubble sort method (Koh & Shieh, Reference Koh, Shieh, Lee, Li, Whang and Lee2004). The iCHUM-Tree is updated when all the items and the corresponding nodes are sorted in the iCHUM-Tree. The proposed algorithm includes four steps. Firstly, the algorithm constructs the iCHUM-Tree from the original dataset and collects the high TWU values of itemsets within that dataset. Secondly, when a new dataset is appended to the original dataset, the iCHUM-Tree undergoes an update process. Thirdly, the updated iCHUM-Tree becomes the input for the mining process, generates high TWU itemsets. Finally, it identifies actual HUIs from the candidates in the whole dataset. Experiments proved that iCHUM performs better than IHUP (Ahmed et al., Reference Ahmed, Tanbeer, Jeong and Lee2009a) concerning execution time on benchmark datasets, specifically for long transactions. However, the performance of iCHUM degrades as the number of recollected items increases.

MUAP: In real-world scenarios, managers aim to maximize profits by considering actionable product. However, some of these products may not exhibit high frequency or utility in the datasets and are often considered for rejection. However, these actionable patterns are of great significance. Hence, extracting these itemsets as high-frequency or high utility patterns poses a challenging task. To resolve this challenge, Shao et al. (Reference Shao, Meng and Cao2016) developed a new structure for mining actionable patterns, namely, mining utility associated patterns (MUAP), the first of its kind, to discover the high utility incremental patterns and closely related itemsets by using a combination of criteria. The proposed algorithm utilizes a tree structure that combines utility growth and association rule mining. The proposed tree structure, named utility graph-tree (UG-tree), orderly analyses the connection between fundamental and derivative itemsets based on frequency and utility concepts. All branches whose utility decreases are discarded first time from the proposed UG-tree because the utility of the nodes may vary which means that the increment of the utility may be negative. The UG-tree involves two dataset scans. During the first scan, it generates itemsets and their corresponding TWU values based on transaction utility. In the second scan, it reorders transactions and generates their rebuilt transaction utility (RTU). RTU is the transaction utility of the rebuilt transaction.

Two efficient strategies are also designed to enhance the efficiency of the proposed structure. The global pruning strategy is designed to produce utility increase patterns with clusters from the given itemsets. The local strategy is designed for each cluster to identify patterns with the highest weighted value, considering a composite measure for utility growth and dependence computation. A combined mining approach is proposed to find the interesting patterns from the clusters of patterns. Two parameters namely, impact and confidence, are proposed to measure the interestingness of each combined pattern. The impact of the additional itemset is the impact factor to value the utility growth within each actionable combined pattern from the utility of the underlying itemset to the derivative itemset. On the other hand, the confidence is the measurement of the association relationship between the underlying itemset and the additional itemset. The results achieved by MUAP are satisfactory in terms of pattern utility from the dense and sparse datasets.

IHUI-Miner: IHUP approach (Ahmed et al., Reference Ahmed, Tanbeer, Jeong and Lee2009a) is developed to mine rule from incremental datasets while mitigating overestimated utility values. However, it suffers from two limitations: (1) Generates an excessive number of candidates and (2) Consumes more time to validate these candidates. To solve these limitations, Guo et al. developed an approach, called IHUI-Miner (Guo & Gao, Reference Guo and Gao2017) to extract HUIs without requiring for candidate generation. A novel tree structure, called IHUI-Tree (Incremental High Utility Itemset Tree), organizes items in lexicographic order (IHUI

![]() $_L$

-Tree), using the pattern-growth method and is traversed in a bottom-up manner. The details of the original dataset are represented using a 2D-array utility dataset (Zihayat & An, Reference Zihayat and An2014). The global IHUI

$_L$

-Tree), using the pattern-growth method and is traversed in a bottom-up manner. The details of the original dataset are represented using a 2D-array utility dataset (Zihayat & An, Reference Zihayat and An2014). The global IHUI

![]() $_L$

-Tree and the global utility dataset are initially constructed by scanning the original dataset. The DCP property of the dataset’s item prefix utility effectively reducing the search space. Prefix utility of an item is the summation of utility of the items of the prefix set in the database. It is used to estimate the true utility of an itemset in the database.

$_L$

-Tree and the global utility dataset are initially constructed by scanning the original dataset. The DCP property of the dataset’s item prefix utility effectively reducing the search space. Prefix utility of an item is the summation of utility of the items of the prefix set in the database. It is used to estimate the true utility of an itemset in the database.

If the prefix utility for an itemset is equal to or greater than

![]() $min\_util$

, then the following three steps are performed to compute the HUIs: (1) A conditional dataset is generated, and subsequently, conditional pattern tree and conditional utility dataset are constructed. (2) HUIs are extracted iteratively from the conditional pattern tree and the conditional utility dataset. (3) The information in global IHUI

$min\_util$

, then the following three steps are performed to compute the HUIs: (1) A conditional dataset is generated, and subsequently, conditional pattern tree and conditional utility dataset are constructed. (2) HUIs are extracted iteratively from the conditional pattern tree and the conditional utility dataset. (3) The information in global IHUI

![]() $_L$

-Tree and global utility dataset is updated. If the prefix utility of itemset is less than

$_L$

-Tree and global utility dataset is updated. If the prefix utility of itemset is less than

![]() $min\_util$

then only

$min\_util$

then only

![]() $3\mathrm{rd}$

step is performed. To generate the conditional dataset for an item in the global IHUI

$3\mathrm{rd}$

step is performed. To generate the conditional dataset for an item in the global IHUI

![]() $_L$

-Tree, the following steps are performed: (1) Node links are traced corresponding to an item in the global tree. (2) The obtained nodes are traced to their root, and all the paths corresponding to that item can be retrieved and collected into the item’s conditional dataset. Moreover, the utilities of items in the paths can also be collected from the global utility dataset with the node-link of the obtained nodes. The results proved that the IHUI-Miner performs better than IHUP (Ahmed et al., Reference Ahmed, Tanbeer, Jeong and Lee2009a) concerning the execution time. It is observed that the proposed approach is 1 to 2 orders of magnitude quicker than IHUP (Ahmed et al., Reference Ahmed, Tanbeer, Jeong and Lee2009a).

$_L$

-Tree, the following steps are performed: (1) Node links are traced corresponding to an item in the global tree. (2) The obtained nodes are traced to their root, and all the paths corresponding to that item can be retrieved and collected into the item’s conditional dataset. Moreover, the utilities of items in the paths can also be collected from the global utility dataset with the node-link of the obtained nodes. The results proved that the IHUI-Miner performs better than IHUP (Ahmed et al., Reference Ahmed, Tanbeer, Jeong and Lee2009a) concerning the execution time. It is observed that the proposed approach is 1 to 2 orders of magnitude quicker than IHUP (Ahmed et al., Reference Ahmed, Tanbeer, Jeong and Lee2009a).

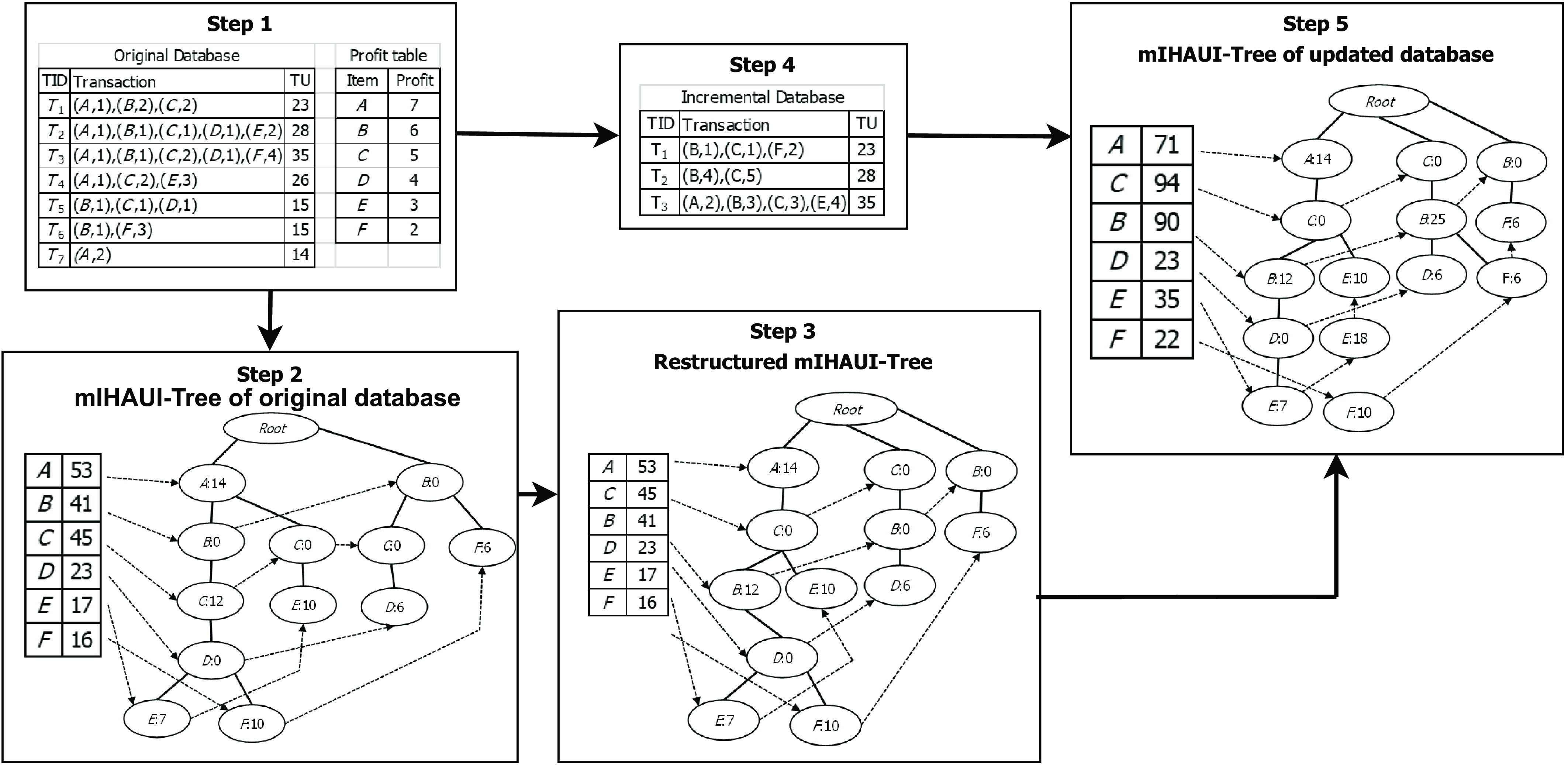

IMHAUI: High average-utility itemsets mining (HAUIM) approaches (Hong et al., Reference Hong, Lee and Wang2011; Kim & Yun, Reference Kim and Yun2016) address the limitations of conventional HUIM methods by incorporating the concept of average-utility measure, thereby providing meaningful results to the users. However, these approaches scan the dataset twice and are suitable only for static datasets. To resolve these limitations, Kim & Yun (Reference Kim and Yun2017) designed an approach, called incremental mining of high average-utility itemsets (IMHAUI), utilizing the pattern-growth approach, to extract HAUIs from incremental datasets. A novel tree structure, called incremental high average utility itemset tree (IHAUI-Tree), is proposed to efficiently retain relevant information in incremental datasets while minimizing the number of dataset scans through the utilization of the node sharing effect. The algorithm also utilizes the path adjusting method (Koh & Shieh, Reference Koh, Shieh, Lee, Li, Whang and Lee2004) following restructuring techniques to generate a compressed IHAUI-Tree. It includes three basic steps: (1) node insert; (2) node exchange; and (3) node merge. In IHAUI-Tree, the AUUB value of any node is always no less than its child nodes. The path adjusting method is updated to restructure the IHAUI-Tree as per the descending order of AUUB, resulting in removing the irrelevant items from the construction of local trees and reducing the computational overhead in the mining process. This will result into the significant memory consumption to store the data in the IHAUI-Tree.

The proposed approach comprises the following functions: (1) IMHAUI function: This function is responsible for continuously processing the input transaction data. It calls for two other essential functions: ‘Restructure’ and ‘Mining,’ which restructure IHAUI-Tree and performs the mining process. (2) Restructure function: This function focuses on restructuring the IHAUI-Tree by decreasing the order of the optimum AUUB value. (3) Mining function: This function focuses on the mining candidate HAUIs using the pattern-growth method. It is observed that IMHAUI performs better than ITPAU (Hong et al., Reference Hong, Lee and Wang2009a) and HUPID-Growth (Yun & Ryang, Reference Yun and Ryang2014), concerning execution time and memory consumption. However, it requires additional processing time for candidate validation. There are lots of challenges to accelerating the mining process when new transactions are generated. The proposed approach extract conditional pattern base for each prefix itemset and constructs its local tree recursively. However, this recursive approach is negatively affects the execution efficiency.

Discussion

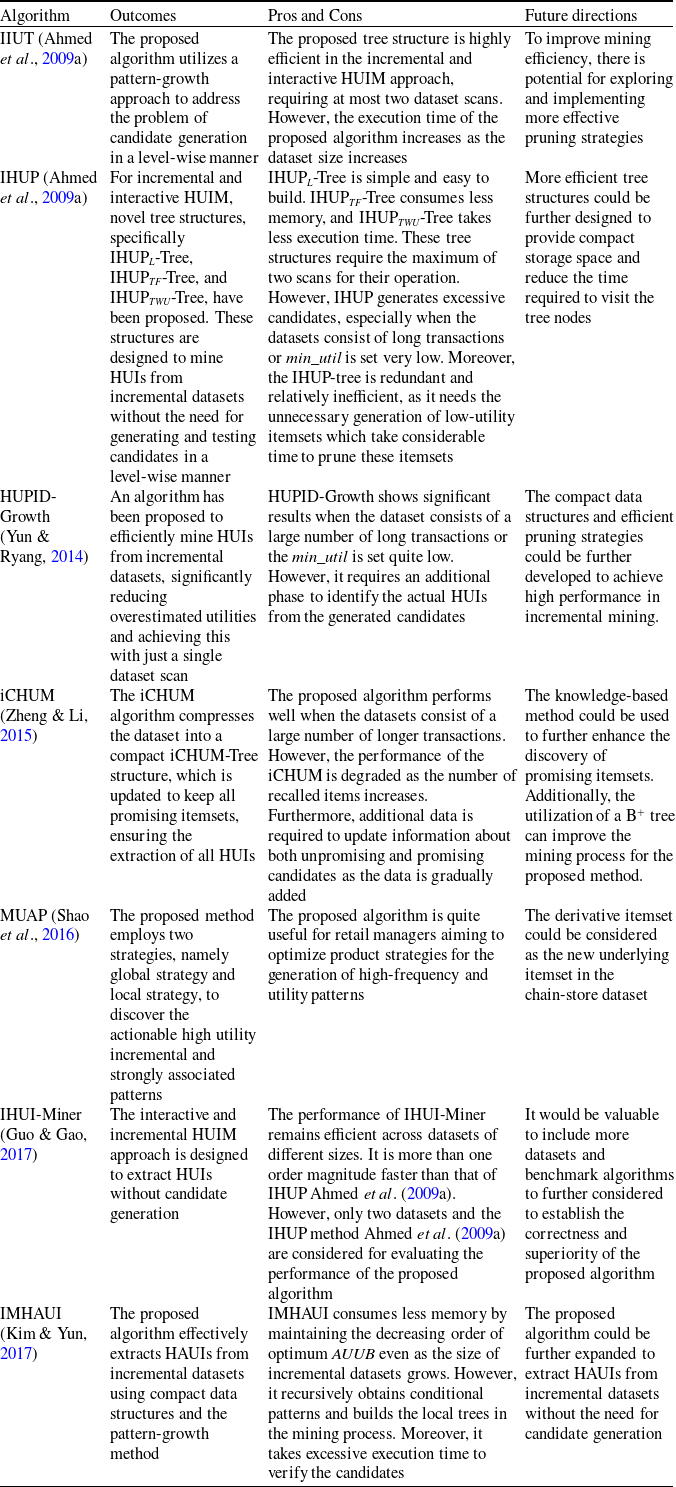

We have discussed pattern-growth-based iHUIM algorithms designed to extract relevant utility information in incremental environment. These algorithms overcome the limitations of two-phase-based iHUIM approaches by providing compact data structures and reducing redundant information, resulting in a significantly enhancing the mining performance. However, there are several issues: (1) It generates excessive number of candidates when dataset has long transactions or

![]() $ min\_util $

threshold is set quite low. (2) The substantial time required for candidate verification. (3) The performance of these algorithms can vary depending on the dataset size. Table 12 gives an overview of pattern-growth-based HUIM algorithms from the incremental datasets. The theoretical aspects of pattern-growth iHUIM algorithms, including their outcomes, advantages, disadvantages, and future considerations, are shown in Table 13.

$ min\_util $

threshold is set quite low. (2) The substantial time required for candidate verification. (3) The performance of these algorithms can vary depending on the dataset size. Table 12 gives an overview of pattern-growth-based HUIM algorithms from the incremental datasets. The theoretical aspects of pattern-growth iHUIM algorithms, including their outcomes, advantages, disadvantages, and future considerations, are shown in Table 13.

Table 12. Characteristics and theoretical aspects of the pattern-growth-based approaches

Table 13. Pros and cons of the pattern-growth-based approaches

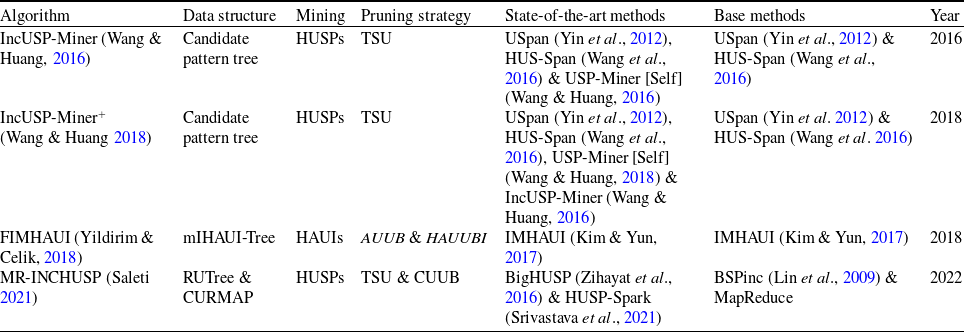

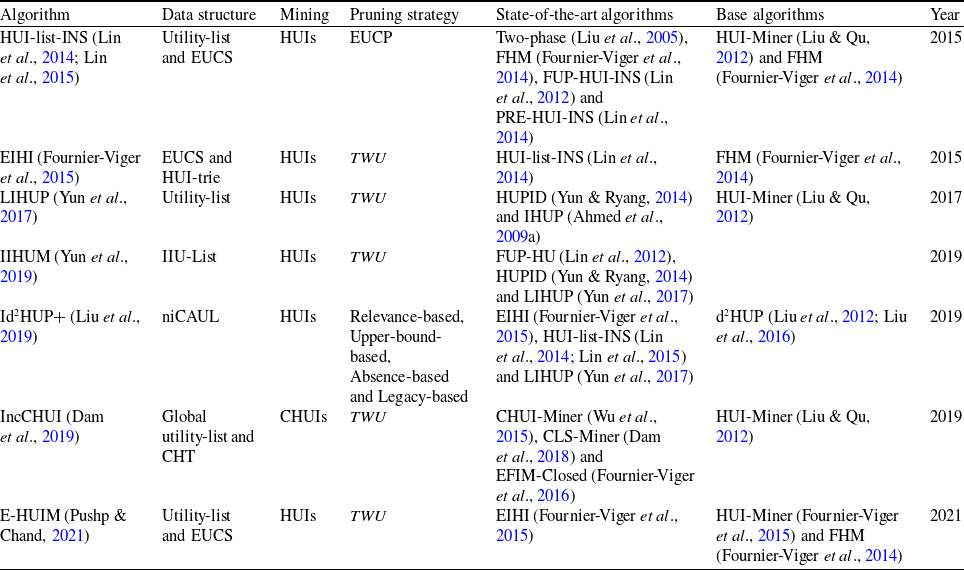

3.3 Projection-based approaches

The pattern-growth-based iHUIM algorithms utilize tree-based structures to store previous mining information and avoid redundant re-computation when the original dataset is updated. However, this process consumes a high amount of memory as it produces a large number of tree nodes, resulting in considerable time overhead. Furthermore, the designed upper-bounds are not tighter, resulting in poor mining efficiency since they fail to efficiently prune the search space and reduce the number of less promising candidates. To address these challenges, projection-based iHUIM algorithms (Wang & Huang, Reference Wang and Huang2016; Wang & Huang, Reference Wang and Huang2018; Yildirim & Celik, Reference Yildirim and Celik2018; Saleti, Reference Saleti2021) are designed to extract profitable items from the incremental datasets. These approaches use tighter upper-bounds to reduce the less promising candidates, leading to improved mining performance. Moreover, they implement compact data structures for optimized storage space. Here we present in-depth overview of projection-based iHUIM algorithms, including their strengths and weaknesses.