Introduction

As pediatric anxiety disorders precede the onset of most persistent adult emotional problems (Gregory et al., 2007; Nelemans et al., 2014; Pine, Cohen, Gurley, Brook, & Ma, 1998; Woodward & Fergusson, 2001), successful treatment could exert a long-term impact. However, cognitive behavior therapy (CBT), a first-line treatment, produces remission in fewer than half of all cases (Ginsburg et al., 2011; Piacentini et al., 2014; Silverman, Pina, & Viswesvaran, 2008). Because CBT is time-consuming, identifying reliable predictors of treatment outcome could markedly influence practice. Clinical features, such as comorbidity or severity, only partially predict outcomes (Kunas, Lautenbacher, Lueken, & Hilbert, 2021).

Magnetic resonance imaging (MRI) indices may predict outcomes beyond such clinical features. Measurements derived from MRI are reliable, scalable, and already used in relatively large samples (Miller et al., 2016). This study applies a predictive framework with resting-state functional connectivity (rsFC) and structural MRI (sMRI) in medication-free children seeking treatment for anxiety disorders. We study two samples, each receiving CBT delivered by trained experts, to support a three-step approach: model building and cross-validation in the first, larger sample, followed by model testing in the smaller, held-out sample.

This study extends considerable research (Dubois & Adolphs, 2016; Mueller et al., 2013) using rsFC to model an individual's 'connectome', computed by correlating signals among network 'nodes' (Sporns, 2011). Connectome predictive modeling (CPM) (Shen et al., 2017) generates clinical insights by correlating each edge of the rsFC matrices with clinical measures and pooling the significantly associated edges in a second prediction stage. CPM can predict important constructs, such as intelligence (Finn et al., 2015; Gao, Greene, Constable, & Scheinost, 2019; Greene, Gao, Scheinost, & Constable, 2018), attention (Rosenberg, Finn, Scheinost, Constable, & Chun, 2017; Rosenberg et al., 2016), and anxiety (Ren et al., 2021; Wang et al., 2021). Although promising, CPM remains understudied, as does rsFC for anxiety disorders more broadly (Zugman, Jett, Antonacci, Winkler, & Pine, 2023). One study and a follow-up replication study used rsFC, analyzed with methods other than CPM, to predict treatment outcomes in anxiety (Ashar et al., 2021; Whitfield-Gabrieli et al., 2016). Both studies focused on adults diagnosed with social anxiety disorder, and the predictive model failed to replicate.
A recent study of two adult cohorts who underwent CBT for anxiety disorders found no successful predictive model for treatment response across different machine learning pipelines (Hilbert et al., 2024). No study has used CPM to predict treatment response in pediatric anxiety, and the available rsFC studies have been small. Of note, while the sample size in this study is also small, it is larger than that of either of the two earlier studies. Across the three studies, small sample sizes reflect the difficulty of combining state-of-the-art treatment of medication-free participants with brain imaging. The primary goal of this study is to predict CBT response using CPM in pediatric anxiety disorders.

The secondary goal concerns imaging reliability. sMRI yields more reliable measures than rsFC and, hence, could have advantages in predicting treatment response. However, rsFC, while less reliable (Hedges et al., 2022; Noble, Scheinost, & Constable, 2019), may identify subsets of stable features that relate more consistently than sMRI to clinical measures (Mansour L, Tian, Yeo, Cropley, & Zalesky, 2021). We term the use of sMRI in this framework 'anatomical predictive modeling' (APM), since no connectome is involved. Within the CPM framework, we compare the ability of sMRI and rsFC to predict treatment response.

Recent literature describes idiosyncratic rsFC patterns related to subject identity as akin to 'fingerprints'. These patterns may predict variables of clinical interest (Amico & Goñi, 2018; Byrge & Kennedy, 2020; Finn et al., 2015; Lin, Baete, Wang, & Boada, 2020). Recent research and commentary, however, suggest otherwise (Finn & Rosenberg, 2021; Mantwill, Gell, Krohn, & Finke, 2022). Thus, a third objective of this study is to assess whether the MRI features that are most unique to individuals are relevant for predicting response to treatment.

Methods

Participants and measures

Anxious youth and healthy volunteers (HV) were recruited through referral to participate in the study at the National Institute of Mental Health (NIMH), National Institutes of Health (NIH), Bethesda, Maryland, United States, and were enrolled in a protocol (01-M-0192; Principal Investigator: D.S.P.) for an ongoing clinical trial. Patients were considered for enrollment if they had a diagnosis of any DSM-5 anxiety disorder established by a licensed clinician using the Kiddie Schedule for Affective Disorders and Schizophrenia (KSADS). Exclusion criteria for all participants were a history of psychotic disorder, bipolar disorder, developmental disorders, obsessive-compulsive disorder, post-traumatic stress disorder, or substance use disorder; contraindication to MRI; use of medication; or an estimated IQ lower than 70 (as measured by the Wechsler Abbreviated Scale of Intelligence). HV were also excluded if they had any current psychiatric diagnosis. All parents and research participants provided written informed consent/assent under a protocol approved by the NIH Institutional Review Board (IRB).

Symptom severity and treatment response were assessed using the Pediatric Anxiety Rating Scale (PARS) (The Research Units on Pediatric Psychopharmacology Anxiety Study Group, 2002), the gold-standard clinician-administered assessment, which incorporates both child and parent reports. The PARS was administered at four time points: before treatment, during treatment (Weeks 3 and 8), and after treatment. The total PARS score ranges from 0 to 25, with a clinical cut-off of nine or higher indicating the likely presence of an anxiety disorder. In addition to CBT administered by experts, all patients received either an active or a sham version of attention-bias modification therapy (ABMT). To maximize sample size, groups were combined irrespective of randomization to active or sham ABMT. CBT in this sample was delivered using a standardized protocol consisting of 12 weekly sessions (Silverman & Ginsburg, 1998; Silverman, Rey, Marin, Jaccard, & Pettit, 2022). The first three treatment sessions entail an introduction to CBT, psychoeducation, and self-monitoring/tracking. Starting at session four, participants complete in-session exposures and learn cognitive restructuring strategies and coping mechanisms (Lebowitz, Marin, Martino, Shimshoni, & Silverman, 2019). For additional details, see Haller et al. (2024).

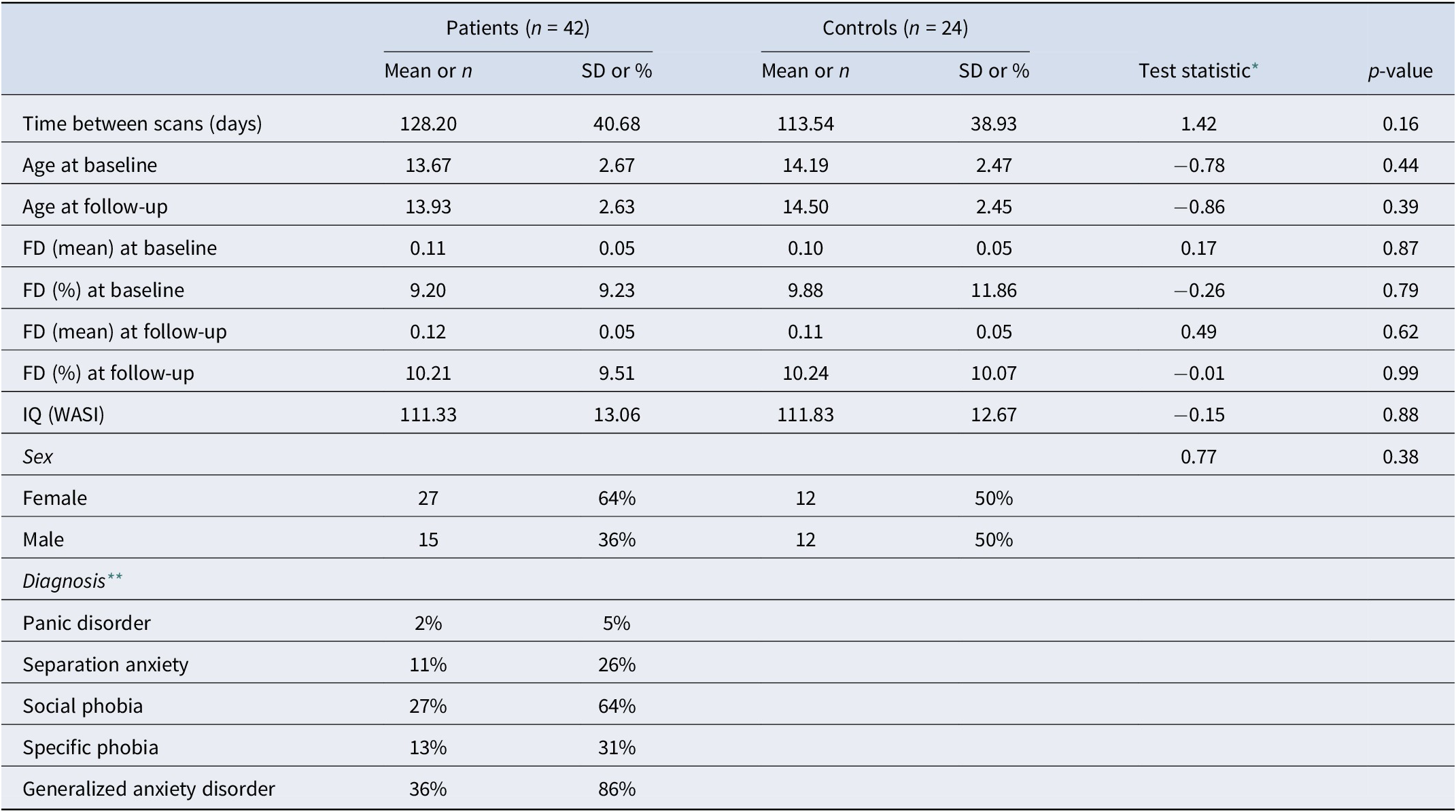

The above forms our main dataset (Dataset A). To determine whether the results are replicable, we used a small sample (Dataset B; N = 15) of individuals who participated in a previous 8-week clinical trial of CBT for pediatric anxiety (White et al., 2017), also under protocol 01-M-0192. That trial used a different resting-state sequence, and its CBT followed the Coping Cat protocol (Podell, Mychailyszyn, Edmunds, Puleo, & Kendall, 2010), whose eight sessions aim to develop skills for recognizing signs of anxiety and anxious thoughts, relaxation techniques, and coping. As with Dataset A, we used only patients with resting-state fMRI acquired between 90 days before and 30 days after treatment initiation and with available PARS at baseline and at the end of treatment (8 weeks). Demographic characteristics and MRI acquisition parameters for both datasets are described in Table 1 and Table 2 and in the Supplementary Material.

Table 1. Descriptive statistics for Datasets A and B, as used for the CPM and APM analyses. Additional sample details can be found in the Supplementary Material

* Two-sample t-test or Chi-squared test when appropriate.

** Patients may have more than one diagnosis, thus the sum is higher than 100%.

Table 2. Descriptive statistics for the fingerprinting sample (from Dataset A). Additional sample details can be found in the Supplementary Material

* Two-sample t-test or Chi-squared test when appropriate.

** Patients may have more than one diagnosis, thus the sum is higher than 100%.

Connectome predictive modeling

The CPM analysis included only patients who had a resting-state fMRI scan collected between 90 days before and 30 days after treatment initiation. As the primary objective was to assess treatment response, the main analysis excluded patients without available PARS at baseline or at 12 weeks. The baseline PARS came from either the screening visit or the third week of treatment (both prior to the exposure-based portion of treatment), whichever was closer in time to the MRI acquisition. A full sample description is provided in Supplementary Table 1. We used the methods outlined in Shen et al. (2017). Before conducting CPM, we verified that motion (assessed via average framewise displacement) was not a significant predictor of the PARS score (Dataset A: $ r $ = −0.2030, $ p $ = 0.1380; Dataset B: $ r $ = 0.0877, $ p $ = 0.7544; two-tailed p-values assessed with 10,000 permutations). Each edge of the rsFC matrices was then tested, across subjects, for its association with PARS at the end of treatment; significantly ($ p $ < 0.01) associated edges were selected, and their Fisher's $ r $-to-$ z $ transformed rsFC values were summed for each subject. These sums were used as independent variables in a second linear regression.
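The motion check above relies on a two-tailed permutation test of a Pearson correlation. The sketch below shows one way to compute it. It assumes per-subject arrays of mean framewise displacement and PARS scores. The function name `perm_corr_test`, the fixed seed, and the add-one convention for the p-value are our assumptions, not details reported for the original analysis.

```python
import numpy as np


def perm_corr_test(x, y, n_perm=10_000, seed=0):
    """Pearson r between x and y, with a two-tailed permutation p-value."""
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    r_obs = np.corrcoef(x, y)[0, 1]
    # Null distribution: shuffling y breaks any pairing between x and y.
    r_null = np.array(
        [np.corrcoef(x, rng.permutation(y))[0, 1] for _ in range(n_perm)]
    )
    # Two-tailed test: compare magnitudes. Counting the observed statistic as
    # one extra permutation (assumed convention) keeps p strictly above zero.
    p = (1 + np.sum(np.abs(r_null) >= abs(r_obs))) / (n_perm + 1)
    return r_obs, p


# Hypothetical usage with simulated data (not the study's values).
rng = np.random.default_rng(1)
fd = rng.gamma(shape=2.0, scale=0.05, size=50)   # mean framewise displacement
pars = rng.integers(0, 26, size=50)              # PARS totals in 0..25
r, p = perm_corr_test(fd, pars)
```

Comparing absolute correlations makes the test two-tailed, so strong negative and strong positive associations with motion are treated alike.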

Cross-validation

In a leave-one-out cross-validation loop, regression coefficients from the second model were used to predict the PARS score of the held-out subject, who was unseen in both previous steps. This analysis used the patients from Dataset A.

External validation

Regression coefficients from the second model, fitted to Dataset A, were used to predict the PARS scores of all patients in Dataset B.
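The two CPM stages, the leave-one-out loop, and the external validation described above can be sketched in Python with NumPy and SciPy. This is a minimal illustration, not the authors' implementation. It assumes a subjects × edges matrix of Fisher-z rsFC values (for example, `np.arctanh` applied to the correlations) and selects edges by Pearson correlation at p < 0.01. It omits nuisance variables and runs on simulated data. All function names are hypothetical.

```python
import numpy as np
from scipy import stats


def select_edges(X, y, p_thresh=0.01, sign="positive"):
    """Stage 1: mask of edges whose Pearson correlation with y has p < p_thresh.

    X: (n_subjects, n_edges) Fisher-z rsFC, assumed to have no constant column.
    sign: "positive", "negative", or "both".
    """
    n = len(y)
    Xc = X - X.mean(axis=0)
    yc = y - y.mean()
    r = (Xc.T @ yc) / np.sqrt((Xc ** 2).sum(axis=0) * (yc ** 2).sum())
    r = np.clip(r, -0.999999, 0.999999)           # guard the t-transform below
    t = r * np.sqrt((n - 2) / (1 - r ** 2))       # t-statistic with n - 2 df
    p = 2 * stats.t.sf(np.abs(t), df=n - 2)       # two-tailed p-value
    mask = p < p_thresh
    if sign == "positive":
        mask &= r > 0
    elif sign == "negative":
        mask &= r < 0
    return mask


def fit_cpm(X, y, p_thresh=0.01, sign="positive"):
    """Stage 2: regress y on the per-subject sum of the selected edges."""
    mask = select_edges(X, y, p_thresh, sign)
    if not mask.any():                 # no edge selected: predict the training mean
        return mask, 0.0, float(y.mean())
    s = X[:, mask].sum(axis=1)
    slope, intercept = np.polyfit(s, y, deg=1)
    return mask, slope, intercept


def predict_cpm(model, X):
    mask, slope, intercept = model
    return slope * X[:, mask].sum(axis=1) + intercept


def loo_cpm(X, y, **kwargs):
    """Leave-one-out: refit both stages without subject i, then predict subject i."""
    n = len(y)
    preds = np.empty(n)
    for i in range(n):
        train = np.arange(n) != i
        preds[i] = predict_cpm(fit_cpm(X[train], y[train], **kwargs), X[i:i + 1])[0]
    return preds


# Simulated stand-ins for Dataset A (n = 50) and Dataset B (n = 15).
rng = np.random.default_rng(0)


def simulate(n, n_edges=300, n_signal=20):
    latent = rng.normal(size=n)
    X = rng.normal(size=(n, n_edges))
    X[:, :n_signal] = latent[:, None] + 0.5 * rng.normal(size=(n, n_signal))
    y = latent + 0.3 * rng.normal(size=n)
    return X, y


X_a, y_a = simulate(50)
X_b, y_b = simulate(15)
cv_pred = loo_cpm(X_a, y_a)                       # cross-validation within A
ext_pred = predict_cpm(fit_cpm(X_a, y_a), X_b)    # external validation on B
```

Edge selection is repeated inside every leave-one-out fold. This keeps the held-out subject out of both stages and avoids leakage from the feature-selection step.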

Selection of significant edges in the initial step of CPM can consider edges that are positively correlated with PARS, negatively correlated, or both; edges can also be selected using other criteria. We departed from Shen et al. (2017) in two respects. First, we investigated the inclusion of age and sex in the second regression model as predictors of interest and, separately, as nuisance variables, as well as models without any such additional regressors, as in the original publication. Second, in addition to investigating the performance of positively correlated edges, negatively correlated edges, and both sets together, we investigated the performance of CPM when using the most discriminative edges identified using fingerprinting. Details of these two departures from the original method are provided below, and results from these various models are presented in the Supplementary Material. Model performance was assessed using the mean absolute error (MAE), the simple correlation coefficient ($ r $) between observed ($ {y}_i $) and predicted ($ {\hat{y}}_i $) values, and a version of the coefficient of determination ($ {R}^2 $) that is suitable for cross-validation, computed as (Kvålseth, 1985):

$$ {R}^2=1-\sum_{i=1}^n{\left({y}_i-{\hat{y}}_i\right)}^2/\sum_{i=1}^n{\left({y}_i-\bar{y}\right)}^2 $$

where $ \bar{y} $ is the mean of the observed values. Note that $ {R}^2 $ does not correspond to the square of the correlation coefficient $ r $; unlike $ {r}^2 $, it becomes negative when the predictions are worse than simply predicting the mean of the observed values. For commentary on the merits of each metric, see Chicco, Warrens, and Jurman (2021) and Poldrack, Huckins, and Varoquaux (2020). Confidence intervals (95%) were computed for these three quantities using 1000 bootstraps (Davison & Hinkley, 1997).
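The three performance metrics and their bootstrap confidence intervals can be written compactly as below. We assume a percentile bootstrap that resamples (observed, predicted) pairs; the resampling scheme actually used in the paper may differ. The toy example shows why $ {R}^2 $ and $ {r}^2 $ diverge: predictions that are perfectly correlated with the observations but offset by a constant yield $ r=1 $ and a strongly negative $ {R}^2 $.

```python
import numpy as np


def mae(y, yhat):
    """Mean absolute error."""
    return float(np.mean(np.abs(y - yhat)))


def pearson_r(y, yhat):
    """Simple correlation between observed and predicted values."""
    return float(np.corrcoef(y, yhat)[0, 1])


def r2_kvalseth(y, yhat):
    """R^2 = 1 - SS_res / SS_tot; negative when worse than predicting the mean."""
    ss_res = np.sum((y - yhat) ** 2)
    ss_tot = np.sum((y - np.mean(y)) ** 2)
    return float(1.0 - ss_res / ss_tot)


def bootstrap_ci(y, yhat, metric, n_boot=1000, alpha=0.05, seed=0):
    """Percentile bootstrap CI, resampling (observed, predicted) pairs."""
    rng = np.random.default_rng(seed)
    n = len(y)
    values = np.empty(n_boot)
    for b in range(n_boot):
        idx = rng.integers(0, n, size=n)      # sample subjects with replacement
        values[b] = metric(y[idx], yhat[idx])
    lo, hi = np.quantile(values, [alpha / 2, 1 - alpha / 2])
    return float(lo), float(hi)


# r = 1 does not imply R^2 = 1: a constant offset ruins R^2 but not r.
y = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
print(pearson_r(y, y + 10), r2_kvalseth(y, y + 10))  # r is 1 (to rounding); R^2 is -49.0

# Simulated observed/predicted scores with bootstrap CIs for each metric.
rng = np.random.default_rng(0)
y_obs = rng.normal(10.0, 4.0, size=40)
y_pred = y_obs + rng.normal(0.0, 3.0, size=40)
cis = {f.__name__: bootstrap_ci(y_obs, y_pred, f) for f in (mae, pearson_r, r2_kvalseth)}
```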

Anatomical predictive modeling

We investigated how replacing rsFC in CPM with measurements of brain morphology, an approach we term APM, would affect predictions. To facilitate comparison with CPM, the APM analysis included the same individuals. Surface-based representations of the brain were obtained with FreeSurfer 6.0.1, as part of fMRIPrep processing, and resampled into the 'fsaverage5' space (a brain mesh with the same topology as a geodesic sphere produced by five recursive subdivisions of an icosahedron), which contains 20,484 vertices spanning both hemispheres. We removed vertices with constant values across subjects (i.e. zero variance), thus masking out non-cortical regions, leaving 18,742 vertices for analysis (compared with 23,220 unique edges in the rsFC-based models). We investigated five cortical morphometric measurements (area, thickness, curvature, sulcal depth, and gray/white matter contrast) and two levels of smoothing (FWHM = 0 and 15 mm).
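The vertex counts above follow from the icosahedral construction of fsaverage meshes: $ k $ recursive subdivisions give $ 10\cdot {4}^k+2 $ vertices per hemisphere, that is, 10,242 for fsaverage5. The sketch below does three things:

- Checks this arithmetic.
- Checks that 23,220 unique edges is consistent with a 216-node parcellation. This is an inference from the arithmetic alone; the parcellation is not specified in this section.
- Drops vertices that are constant across subjects.

The data and the positions of the masked vertices are simulated, not the actual non-cortical vertices.

```python
import numpy as np


def icosphere_vertices(k):
    """Vertices per hemisphere after k recursive subdivisions of an icosahedron."""
    return 10 * 4 ** k + 2


def n_unique_edges(n_nodes):
    """Unique off-diagonal entries of a symmetric n_nodes x n_nodes rsFC matrix."""
    return n_nodes * (n_nodes - 1) // 2


def drop_constant_vertices(data):
    """Keep vertices (columns) whose value varies across subjects (rows)."""
    constant = np.all(data == data[0], axis=0)
    return data[:, ~constant], ~constant


print(2 * icosphere_vertices(5))   # 20484 vertices in fsaverage5 (both hemispheres)
print(n_unique_edges(216))         # 23220, assuming a hypothetical 216-node parcellation

# Simulated morphometry: 20 subjects x 20,484 vertices; the first 1,742 columns
# are set to zero to mimic non-cortical vertices (positions are arbitrary).
rng = np.random.default_rng(0)
data = rng.normal(size=(20, 2 * icosphere_vertices(5)))
data[:, :1742] = 0.0
kept, keep = drop_constant_vertices(data)
print(kept.shape)                  # (20, 18742)
```

Testing for exact equality with the first subject, rather than thresholding a computed variance, avoids floating-point rounding flagging truly constant vertices as variable.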

Nuisance variables

The prediction may make use of other variables, such as age and sex, or treat them as nuisance. In the former case, these variables are included as additional regressors in both stages of CPM and, subsequently, as additional predictors (Rao, Monteiro, & Mourao-Miranda, 2017). In the latter case, they are likewise included in the first regression model of CPM (which identifies edges), whereas in the second regression, both data and model are residualized with respect to these variables in the training set; the regression coefficients estimated from the training set are then used to residualize the test set as well (Snoek, Miletić, & Scholte, 2019). Prediction then uses the residualized variables, with coefficients of variables of interest and of no interest estimated from the training set. Nuisance effects can be added back to the predicted values so that results are expressed on the scale of the quantities of interest. If the test set contains a substantial number of subjects, an improved approach is to residualize the test set using nuisance effects estimated from the test set itself, rather than from the training set, thus reducing the risk of covariate shift (Rao et al., 2017). We investigated models without nuisance variables, as well as with age and sex (and scanner, where appropriate). For cross-validation, in which nuisance effects cannot be estimated from the test set (in leave-one-out cross-validation, the test set has only one subject), we used the regression coefficients for nuisance variables obtained from the training set (Snoek et al., 2019), whereas for external validation, nuisance effects were estimated directly from the test set.
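Below is a minimal sketch of the second-stage regression with nuisance variables treated as being of no interest. It contrasts nuisance coefficients taken from the training set (as in leave-one-out cross-validation) with coefficients re-estimated on the test set (as in external validation). We assume that only the test features, not the test outcomes, are residualized with test-derived coefficients, because using test outcomes would leak information. The constant offset in the simulated test set stands in for a hypothetical scanner effect. Function names are hypothetical.

```python
import numpy as np


def design(C):
    """Intercept plus nuisance columns (e.g. age, sex, scanner)."""
    C = np.asarray(C, dtype=float).reshape(len(C), -1)
    return np.column_stack([np.ones(len(C)), C])


def fit_nuisance(C, v):
    """OLS coefficients of v on the nuisance design."""
    beta, *_ = np.linalg.lstsq(design(C), v, rcond=None)
    return beta


def residualize(C, v, beta):
    """Remove the nuisance effects given by beta from v."""
    return v - design(C) @ beta


def predict_with_nuisance(s_tr, y_tr, C_tr, s_te, C_te, nuisance_from="train"):
    """Second CPM stage with nuisance variables of no interest.

    s_*: summed edge strengths; y_*: outcome (PARS); C_*: nuisance variables.
    nuisance_from="train": test features are residualized with training
    coefficients (the only option in leave-one-out); "test": coefficients are
    re-estimated on the test features (external validation).
    """
    b_s = fit_nuisance(C_tr, s_tr)
    b_y = fit_nuisance(C_tr, y_tr)
    s_tr_r = residualize(C_tr, s_tr, b_s)
    y_tr_r = residualize(C_tr, y_tr, b_y)
    # Residuals have zero mean (the design has an intercept): fit through origin.
    slope = (s_tr_r @ y_tr_r) / (s_tr_r @ s_tr_r)
    b_s_te = b_s if nuisance_from == "train" else fit_nuisance(C_te, s_te)
    s_te_r = residualize(C_te, s_te, b_s_te)
    # Add back the nuisance effects on the outcome, estimated in training data.
    return slope * s_te_r + design(C_te) @ b_y


# Simulated data: age affects both the feature and the outcome; the test set
# carries a constant offset standing in for a hypothetical scanner difference.
rng = np.random.default_rng(0)


def simulate(n, offset=0.0):
    age = rng.normal(size=n)                      # standardized age
    s_pure = rng.normal(size=n)
    s = s_pure + 0.3 * age + offset
    y = 2.0 * s_pure + 0.5 * age
    return s, y, age


s_tr, y_tr, c_tr = simulate(200)
s_te, y_te, c_te = simulate(100, offset=5.0)
err_train = np.mean(np.abs(predict_with_nuisance(s_tr, y_tr, c_tr, s_te, c_te, "train") - y_te))
err_test = np.mean(np.abs(predict_with_nuisance(s_tr, y_tr, c_tr, s_te, c_te, "test") - y_te))
```

With the shifted test set, training-derived coefficients carry the offset straight into the predictions. Test-derived coefficients absorb it into the test-set intercept, which illustrates the covariate-shift argument in the text.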

Fingerprinting

The fingerprinting analysis included only participants with at least two fMRI sessions within one year of each other. Subjects from the primary dataset could also be included in the fingerprinting analysis if they had a follow-up rsfMRI scan available. The sample characteristics are described in Supplementary Table 2. Fingerprinting with sMRI used the same individuals to facilitate comparison between the two approaches. We followed the methods outlined in Finn et al. (2015). Each subject had two resting-state scans, collected on average 123 days apart. Each rsFC matrix was unwrapped into a vector, and the Pearson correlation coefficient between each subject's baseline rsFC and every subject's follow-up rsFC was computed as an index of similarity. Identification of a subject was successful if the follow-up rsFC most similar to that subject's baseline rsFC belonged to the same subject. As the same follow-up scan may be the best match for more than one baseline scan (i.e. matching is with replacement), the p-value for the number of correct identifications ($ k $) can be computed from a binomial distribution with parameters $ n $ = number of subjects and $ p=1/n $, as the probability of $ k $ or more correct identifications (i.e. the survival function evaluated at $ k $, with location 1).
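Subject identification and its binomial p-value can be sketched as follows. The function `identify` is hypothetical. It assumes that each row of the two input arrays holds one subject's unwrapped rsFC (or cortical morphometry) vector, with rows in the same subject order at both time points. The reverse direction (follow-up to baseline) is obtained by swapping the arguments. The p-value uses SciPy's `binom.sf` at $ k-1 $, which equals `binom.sf(k, n, 1/n, loc=1)`.

```python
import numpy as np
from scipy import stats


def zscore_rows(A):
    """Standardize each row (subject) across its features."""
    A = np.asarray(A, dtype=float)
    return (A - A.mean(axis=1, keepdims=True)) / A.std(axis=1, keepdims=True)


def identify(base, follow):
    """Match each baseline vector to its most similar follow-up vector.

    base, follow: (n_subjects, n_features); row i of both belongs to subject i.
    Returns the similarity matrix, the number of correct matches k, and
    P(X >= k) for X ~ Binomial(n, 1/n).
    """
    n, m = base.shape
    # R[i, j] = Pearson correlation of baseline i with follow-up j.
    R = zscore_rows(base) @ zscore_rows(follow).T / m
    best = R.argmax(axis=1)            # the same follow-up may win several times
    k = int(np.sum(best == np.arange(n)))
    p = stats.binom.sf(k - 1, n, 1.0 / n)
    return R, k, p


# Simulated data: follow-up = baseline plus small noise, 10 subjects.
rng = np.random.default_rng(0)
base = rng.normal(size=(10, 500))
follow = base + 0.1 * rng.normal(size=(10, 500))
R, k, p = identify(base, follow)          # baseline -> follow-up
_, k_rev, p_rev = identify(follow, base)  # follow-up -> baseline
```

As a cross-check against the counts reported in the Results ($ n=66 $, $ k=53 $), `stats.binom.sf(52, 66, 1/66)` evaluates to approximately $ 6.2\times {10}^{-84} $.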

Correlations can be interpreted as the dot product of vectors that have been mean-centered and scaled to unit norm (Rodgers & Nicewander, 1988). This provides an indicator of the contribution of each edge to the final correlation. Let the correlation be expressed as (Finn et al., 2015):

$$ {r}_{ij}=\sum_{e=1}^M{\varphi}_{ij}(e) $$

where $ M $ is the number of edges, $ {\varphi}_{ij}(e)={x}_i^b(e)\;{x}_j^f(e) $, $ {x}_i^b(e) $ is the normalized value of the rsFC at edge $ e $ for subject $ i $ at baseline, and $ {x}_j^f(e) $ is the normalized rsFC at the same edge for subject $ j $ at follow-up. If $ i=j $, $ {r}_{ii} $ is the correlation between a subject's own baseline and follow-up rsFC matrices. The quantity $ \varphi $ is of interest because, if $ {\varphi}_{ii}(e)\ge {\varphi}_{ij}(e) $ and $ {\varphi}_{ii}(e)\ge {\varphi}_{ji}(e) $ for all $ j $, then edge $ e $ contributes to the identification of the subject's rsFC at the other time point. An estimator of the probability $ {P}_i(e) $ that an edge makes such a contribution by chance is given by:

$$ {\hat{P}}_i(e)=\left[\sum_{j=1}^nI\left({\varphi}_{ij}(e)\ge {\varphi}_{ii}(e)\right)+\sum_{j=1}^nI\left({\varphi}_{ji}(e)\ge {\varphi}_{ii}(e)\right)-1\right]/\left(2n-1\right) $$

where $ I\left(\cdot \right) $ is the indicator function, and $ n $ is the number of subjects. Both sums include the term $ j=i $, which always equals 1; this is why 1 is subtracted. Note that this formulation differs from the one originally proposed by Finn et al. (2015): edges that are highly predictive can have $ {\hat{P}}_i(e) $ as low as $ 1/\left(2n-1\right) $, as opposed to zero, whereas edges that are not predictive can have $ {\hat{P}}_i(e) $ as high as $ 1 $. A global estimate of the differential power (DP) of a given edge for subject identification can be computed as:

$$ DP(e)=-2\sum_{i=1}^n\ln\left({\hat{P}}_i(e)\right) $$

where the quantity $ -\ln\left({\hat{P}}_i(e)\right) $ follows an exponential distribution with rate parameter 1 if the true (unknown) $ {P}_i(e) $ follows a uniform distribution. The constant 2 adjusts that rate to 1/2. An exponential distribution with rate parameter 1/2 is a Chi-squared distribution with 2 degrees of freedom; the sum of $ n $ independent random variables following this distribution also follows a Chi-squared distribution, now with $ 2n $ degrees of freedom. Thus, the hypothesis that an edge is more informative than could be expected by chance can be tested. This formulation also allows the selection of edges (e.g. for later analyses, such as in CPM) using a threshold based on the probability distribution under the null hypothesis of chance DP. Note that this formulation of $ DP(e) $ also differs from the original work by Finn et al. (2015).
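The edgewise estimator $ {\hat{P}}_i(e) $ and the differential power $ DP(e) $ defined above can be computed by looping over subjects. This avoids storing the full $ n\times n\times M $ array of $ \varphi $ values. This is a sketch under our own assumptions:

- Rows are mean-centered and scaled to unit norm, so that $ \sum_e{\varphi}_{ij}(e)={r}_{ij} $.
- The chi-squared reference with $ 2n $ degrees of freedom is used as stated in the text; it presumes independence across subjects.
- The data are simulated.

```python
import numpy as np
from scipy import stats


def unit_rows(A):
    """Mean-center each row and scale it to unit norm (row dot products = Pearson r)."""
    A = np.asarray(A, dtype=float)
    A = A - A.mean(axis=1, keepdims=True)
    return A / np.linalg.norm(A, axis=1, keepdims=True)


def differential_power(base, follow):
    """Per-subject P_hat_i(e), DP(e), and chi-squared (2n df) p-values.

    base, follow: (n_subjects, M_features); row i of both belongs to subject i.
    """
    xb, xf = unit_rows(base), unit_rows(follow)
    n, M = xb.shape
    P_hat = np.empty((n, M))
    for i in range(n):
        phi_ii = xb[i] * xf[i]       # phi_ii(e), shape (M,)
        phi_ij = xb[i] * xf          # phi_ij(e) for all j, shape (n, M)
        phi_ji = xb * xf[i]          # phi_ji(e) for all j, shape (n, M)
        # Both sums include the j = i term, which is always 1; hence the "- 1".
        count = (phi_ij >= phi_ii).sum(axis=0) + (phi_ji >= phi_ii).sum(axis=0) - 1
        P_hat[i] = count / (2 * n - 1)
    dp = -2.0 * np.log(P_hat).sum(axis=0)
    p_null = stats.chi2.sf(dp, df=2 * n)
    return P_hat, dp, p_null


# Simulated data: the first 10 features are stable, subject-specific traits;
# the remaining features are independent noise at each session.
rng = np.random.default_rng(0)
n_sub, M_feat = 30, 210
base = rng.normal(size=(n_sub, M_feat))
follow = rng.normal(size=(n_sub, M_feat))
trait = rng.normal(size=(n_sub, 10))
base[:, :10] = trait + 0.1 * rng.normal(size=(n_sub, 10))
follow[:, :10] = trait + 0.1 * rng.normal(size=(n_sub, 10))
P_hat, dp, p_null = differential_power(base, follow)
```

Features whose `p_null` falls below a chosen threshold could then be carried forward as the fingerprinting-based edge set for CPM.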

Anatomical fingerprinting

Following Mansour L et al. (2021), we also investigated fingerprinting using measures of cortical morphology (area, thickness, curvature, sulcal depth, and gray/white matter contrast) in addition to unwrapped rsFC matrices. Fingerprinting methods were otherwise the same as for rsFC data, and surface-based cortical measurements were obtained as for APM.

Results

Prediction of anxiety scores using CPM

We report the main results for CPM using global signal regression (GSR), full (not partial) rsFC, and positive edges without weighting. These results are reported both for a model in which age, sex, and baseline PARS are used as predictors and for a model in which data are residualized with respect to these variables. We emphasize these results because these settings generally yielded superior accuracy across multiple analyses. CPM was not able to predict post-treatment 12-week PARS scores at a level that exceeded chance (Table 3). While the simple correlation coefficient might give the impression of statistical robustness, the magnitude of the relation between predicted and observed scores was only moderate, with an MAE of approximately 3.5 points (PARS scores range from 0 to 25). This MAE does approach a level that would be clinically useful (Walkup et al., 2001, 2008), but the correlation between predicted and observed PARS did not exceed 0.4. Additionally, no model showed a high $ {R}^2 $, and the 95% CIs indicated that no model performed better than chance. Overall, the low $ {R}^2 $ indicates that the models fail to predict better than the mean of the target variable. A scatter plot showing observed and predicted values for one of these models appears in Figure 1, upper left panel.

Figure 1. Prediction of anxiety scores using CPM and APM; APM used gray/white matter contrast. The main regression line (red) is based on the observed and predicted values (represented by the dots). The bootstrap regression lines (faint blue) are based on the bootstrapped predictions used to construct the 95% confidence intervals given in Tables 3 and 4 (to avoid clutter, only 500 out of 1000 lines are shown in each panel). The 95% confidence bands were computed parametrically in relation to the main regression line and are presented merely as an additional reference. Observe that the slopes of the bootstrapped lines in the external validation are less variable, which is expected given the larger number of observations that are predicted in a single step (15 in this case) versus the single prediction in each step of the leave-one-out (LOO) cross-validation.

Table 3. Mean absolute error (MAE) of CPM-predicted vs. observed PARS at 12 weeks, using Dataset A for training and leave-one-out cross-validation and Dataset B for external validation. The corresponding correlation ($ r $) and coefficient of determination ($ {R}^2 $) are also shown. Confidence intervals (95%), based on 1000 bootstraps, are given in brackets. A scatter plot for the model marked with an asterisk (*) is in Figure 1 (left panels)

Of note, as expected, results were weaker in some of the analyses reported in the Supplementary Material, which provides the complete set of results across varying processing choices. These included analyses predicting change scores and analyses using the smaller Dataset B to build the model, which was then tested in the larger Dataset A (Figure 1, lower left panel).

Prediction of anxiety scores using APM

For APM, the models with generally better predictive performance were those that used gray/white matter contrast without smoothing and that selected both positive and negative vertices in the first regression. Moreover, these APM models also tended to produce higher correlations between predicted and observed PARS scores than CPM, with correlations above 0.4. However, as with CPM, no model produced a strong $ {R}^2 $. A summary is presented in Table 4, and a scatter plot showing observed and predicted values for one of these models appears in Figure 1, upper right panel.

Table 4. Mean absolute error (MAE) of APM-predicted (with gray/white contrast) vs. observed PARS, using Dataset A for training and leave-one-out cross-validation and Dataset B for external validation. The corresponding correlation ($ r $) and coefficient of determination ($ {R}^2 $) are also shown. Confidence intervals (95%), based on 1000 bootstraps, are given in brackets. A scatter plot for the model marked with an asterisk (*) is in Figure 1 (right panels)

Unlike in cross-validation, CPM models generally produced stronger results than APM models in external validation. Moreover, whereas CPM results were generally comparable between cross-validation and external validation, for APM, external validation in Dataset B produced accuracy indices that were generally lower than those from cross-validation. Figure 1 (lower right panel) shows the corresponding scatter plot of observed and predicted values for Dataset B. An extended set of results for APM with cortical thickness, surface area, curvature, and sulcal depth, with and without smoothing, is provided in the Supplementary Material.

Localization of predictive edges and vertices

For both CPM and APM, the predictive elements (edges or vertices, respectively) identified in the first regression were widely distributed throughout the brain. These topographies did not resemble networks with known specific functions. We focus on the models that included age, sex, and baseline PARS as nuisance variables; these are marked with an asterisk (*) in Tables 3 and 4. Figure 2 shows the edges most frequently identified in the leave-one-out cross-validation using Dataset A with CPM; Figure 3 provides a similar depiction for APM, using gray/white matter contrast. For both CPM and APM, the number of elements found to be significant in the first stage of the respective predictive model was relatively small, about two orders of magnitude smaller than the number of edges or vertices available to a given model.

Figure 2. Edges most frequently identified as positively (red) or negatively (blue) associated with the PARS score at 12 weeks in Dataset A, as found in the first stage of CPM. The frequency refers to the number of iterations of the leave-one-out cross-validation in which a significant association was found; edges found in at least 50% of the iterations are shown (128 positive, 73 negative, out of 23,220 edges). The connections shown are for the model marked with an asterisk (*) in Table 3 (only the positive edges were used in the second stage of CPM; the negative edges are depicted for completeness). Named networks are those identified by Yeo et al. (Reference Yeo, Krienen, Sepulcre, Sabuncu, Lashkari, Hollinshead and Buckner2011); the set of nodes also includes 8 subcortical regions. Note that despite the seemingly large number of connections, only a small fraction of the total number of edges is used, in a pattern mostly diffuse and unstructured.

Figure 3. Vertices most frequently identified as positively (red) or negatively (blue) associated with the PARS score at 12 weeks in Dataset A. Observe that the pattern is mostly scattered, with isolated vertices (representing tiny regions) diffusely spread throughout the cortex. These results are as found in the first stage of APM using gray/white matter contrast. The percentage refers to the number of iterations of the leave-one-out cross-validation in which a significant association was found over all iterations; vertices found in at least 50% of the iterations are shown (16 positive and 55 negative, out of 18,742 vertices). The vertices shown are for the model marked with an asterisk (*) in Table 4.
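The two-stage logic of CPM and the selection frequencies shown in Figures 2 and 3 can be illustrated with a minimal leave-one-out sketch. It assumes Python with NumPy/SciPy, vectorized edges (one row per subject), plain Pearson correlation in the first stage (the nuisance-adjusted models would use partial correlation instead), a hypothetical threshold `p_thresh = 0.01`, and only positively associated edges in the second stage, as for the model marked with an asterisk in Table 3. It is an illustration, not the authors' implementation.

```python
import numpy as np
from scipy import stats


def cpm_loo(edges, behavior, p_thresh=0.01):
    """Leave-one-out CPM using positively associated edges only.

    edges: (n_subjects, n_edges) array; behavior: (n_subjects,) outcome scores.
    Returns out-of-fold predictions and the fraction of folds selecting each edge.
    """
    edges = np.asarray(edges, dtype=float)
    behavior = np.asarray(behavior, dtype=float)
    n_sub, n_edge = edges.shape
    predictions = np.empty(n_sub)
    selected_count = np.zeros(n_edge)
    for test in range(n_sub):
        train = np.arange(n_sub) != test
        x, y = edges[train], behavior[train]
        # Stage 1: edgewise Pearson r with the outcome, computed on training subjects only
        xc = x - x.mean(axis=0)
        yc = y - y.mean()
        df = x.shape[0] - 2
        with np.errstate(divide="ignore", invalid="ignore"):
            r = (xc * yc[:, None]).sum(axis=0) / np.sqrt((xc ** 2).sum(axis=0) * (yc ** 2).sum())
            t = r * np.sqrt(df / (1.0 - r ** 2))
        p = 2 * stats.t.sf(np.abs(t), df)
        mask = (p < p_thresh) & (r > 0)
        selected_count += mask
        if not mask.any():
            predictions[test] = y.mean()  # no edge survived: fall back to the training mean
            continue
        # Stage 2: summed strength of selected edges, linear fit, applied to the held-out subject
        slope, intercept = np.polyfit(x[:, mask].sum(axis=1), y, 1)
        predictions[test] = slope * edges[test, mask].sum() + intercept
    return predictions, selected_count / n_sub
```

Because edge selection is redone in every fold, the returned frequencies show how stable the selected set is; in the figures above, only edges or vertices selected in at least 50% of iterations are displayed.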

Uniqueness and its predictive value

Fingerprinting using rsFC and sMRI features led to high accuracy for subject identification. Using rsFC features from the baseline scan, the follow-up scan was correctly identified for 53 of 66 subjects (80.3%, $p = 6.2 \times 10^{-84}$), whereas the reverse direction produced correct identifications for 56 of 66 (84.9%, $p = 2.3 \times 10^{-91}$); these results are based on full (not partial) correlations with GSR. Anatomical fingerprinting led to even higher rates of correct identification, with near 100% success for most of the measurements studied (cortical area, thickness, curvature, sulcal depth, and gray/white matter contrast). Differential power (DP) for edges and for gray/white matter contrast is shown in Figure 4; Figure 5 shows DP for the other anatomical measurements. DP was substantially higher for every anatomical measurement studied than for connectivity measurements. DP for edges was generally weak and scattered, whereas DP for gray/white contrast was stronger and well localized, covering mostly the parietal cortex, precuneus, inferior temporal lobe, and caudal portions of the frontal lobe up to the precentral gyrus, while sparing the central sulcus, pre- and postcentral gyri, insula, and cuneus, all regions known to vary less among individuals. The relation between DP in different modalities is shown in Supplementary Figure 1; DP for structural measurements bore little relation to DP for rsFC.
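Identification accuracy of the kind reported above can be sketched as follows: each subject's features from one session are correlated with every subject's features from the other session, and identification succeeds when the highest correlation is with the same subject. This minimal sketch assumes Python with NumPy, feature arrays with matching subject order, and full Pearson correlation; the function name is hypothetical, and the p-values reported in the text are not reproduced here.

```python
import numpy as np


def identification_accuracy(target, database):
    """Fraction of subjects whose target-session features correlate most strongly
    with their own database-session features.

    target, database: (n_subjects, n_features) arrays with matching subject order.
    """
    t = np.asarray(target, dtype=float)
    d = np.asarray(database, dtype=float)
    # Z-score each subject's feature vector so that (dot product / n_features) is Pearson r
    tz = (t - t.mean(axis=1, keepdims=True)) / t.std(axis=1, keepdims=True)
    dz = (d - d.mean(axis=1, keepdims=True)) / d.std(axis=1, keepdims=True)
    similarity = tz @ dz.T / t.shape[1]  # (n_subjects, n_subjects) correlation matrix
    best_match = similarity.argmax(axis=1)
    return float(np.mean(best_match == np.arange(t.shape[0])))
```

Running it in both directions (baseline as target and follow-up as database, then the reverse) gives the two accuracies reported for rsFC; the same function applies unchanged to vertexwise anatomical features.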

Figure 4. Differential power (DP) for edges using connectome fingerprinting (left), and for vertices using anatomical fingerprinting with gray/white matter contrast (right), on a logarithmic scale based on their p-values (i.e. −log10(p), where p is the p-value for DP, making the scales comparable). Network names for the left panel are the same as in Figure 2, and views are the same as in Figure 3. While anatomical fingerprinting without smoothing was slightly more accurate, the smoothed version includes the same regions and is more informative; hence, it is the one shown. Higher DP values indicate more unique features. DP is much higher for anatomical than for connectivity measurements (note the different color scales); DP for connectivity features (edges) is generally weak and scattered, whereas for gray/white contrast (vertexwise), DP is stronger and better localized. DP for cortical area, thickness, curvature, and sulcal depth is shown in Figure 5.

Figure 5. Differential power (DP) for vertices using anatomical fingerprinting with cortical thickness, cortical surface area, cortical curvature, and sulcal depth, on a logarithmic scale based on their p-values (i.e. −log10(p), where p is the p-value for DP, making the scales comparable). While anatomical fingerprinting without smoothing was slightly more accurate, the smoothed version includes the same regions and is more informative; hence, it is the one shown. Higher DP values indicate more unique features. As with the gray/white contrast, DP for these anatomical measurements is much higher than for connectivity measurements.
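Differential power can be sketched following the edgewise definition of Finn et al. (2015) as we understand it: for feature e, the within-subject product of normalized session values, φ_ii(e), is compared with the between-subject products φ_ij(e) and φ_ji(e); p_i(e) is the fraction of such comparisons in which a between-subject product exceeds the within-subject one, and DP(e) = Σ_i −ln p_i(e). This is a minimal Python/NumPy sketch; the per-subject normalization, the floor applied when p_i(e) = 0, and the function name are assumptions, and the permutation-based p-values behind the −log10(p) scale in Figures 4 and 5 are not reproduced.

```python
import numpy as np


def differential_power(session1, session2):
    """Edgewise/vertexwise differential power across subjects.

    session1, session2: (n_subjects, n_features) arrays with matching subject order.
    """
    x1 = np.asarray(session1, dtype=float)
    x2 = np.asarray(session2, dtype=float)
    # Normalize each subject's feature vector (mean 0, SD 1 across features)
    x1 = (x1 - x1.mean(axis=1, keepdims=True)) / x1.std(axis=1, keepdims=True)
    x2 = (x2 - x2.mean(axis=1, keepdims=True)) / x2.std(axis=1, keepdims=True)
    n = x1.shape[0]
    floor = 1.0 / (2 * (n - 1))  # assumed floor so that -ln(p) stays finite
    dp = np.zeros(x1.shape[1])
    for i in range(n):
        others = np.arange(n) != i
        phi_ii = x1[i] * x2[i]                          # within-subject product per feature
        c1 = (x1[i] * x2[others] > phi_ii).sum(axis=0)  # phi_ij > phi_ii
        c2 = (x1[others] * x2[i] > phi_ii).sum(axis=0)  # phi_ji > phi_ii
        p_i = (c1 + c2) / (2 * (n - 1))
        dp += -np.log(np.maximum(p_i, floor))
    return dp
```

Looping over subjects keeps memory proportional to subjects × features rather than subjects² × features, which matters for about 23,000 edges or 18,000 vertices.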

The edges or vertices with higher DP from fingerprinting, that is, the more ‘unique’ ones, showed slightly lower correlations with PARS scores than those found by model fitting in the first stage of CPM/APM. There was no overlap between the edges selected by fingerprinting and those found in the first stage of CPM; the same was observed for vertices in APM.
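The comparison above can be sketched as follows, given DP values per edge and CPM selection frequencies across folds. This minimal Python/NumPy sketch assumes a hypothetical top-`k` rule for DP-based selection and a 50% frequency threshold for CPM-based selection; neither the helper nor its parameters come from the study.

```python
import numpy as np


def compare_edge_sets(dp, cpm_frequency, edges, behavior, k=100, freq_thresh=0.5):
    """Compare the k highest-DP edges with edges CPM selected in >= freq_thresh of folds.

    Returns the number of shared edges and the mean |r| of each set with behavior.
    """
    edges = np.asarray(edges, dtype=float)
    behavior = np.asarray(behavior, dtype=float)
    dp_idx = np.argsort(np.asarray(dp))[::-1][:k]  # most 'unique' features
    cpm_idx = np.flatnonzero(np.asarray(cpm_frequency) >= freq_thresh)
    overlap = np.intersect1d(dp_idx, cpm_idx).size

    def mean_abs_r(idx):
        if idx.size == 0:
            return float("nan")
        r = [np.corrcoef(edges[:, e], behavior)[0, 1] for e in idx]
        return float(np.mean(np.abs(r)))

    return {"overlap": int(overlap),
            "dp_mean_abs_r": mean_abs_r(dp_idx),
            "cpm_mean_abs_r": mean_abs_r(cpm_idx)}
```

One caution on design: edges chosen by CPM were selected for their correlation with the outcome, so their in-sample |r| is inflated; a fair comparison with DP-selected edges would compute the correlations on held-out subjects.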

Discussion

Prediction of response to treatment

This work applied CPM to predict response to CBT in pediatric anxiety disorders. The study used expert clinicians and a gold-standard measure of treatment outcome in medication-free subjects recruited using criteria from past large-scale randomized controlled trials of pediatric anxiety disorders, namely RUPP (Walkup et al., Reference Walkup, Labellarte, Riddle, Pine, Greenhill, Klein and Roper2001) and CAMS (Walkup et al., Reference Walkup, Albano, Piacentini, Birmaher, Compton, Sherrill and Kendall2008). Three main findings emerged. First, no model produced clearly significant results when evaluated using $R^2$. Second, sMRI outperformed rsFC for fingerprinting, achieving excellent accuracy. Finally, both CPM and APM had limitations: no single model emerged as consistently better than all others, and prediction arose from hundreds of regions that did not cohere into networks identified in other studies.

An advantage of the CPM methodology over other predictive models concerns its capacity to generate interpretable findings that might prove useful in a clinical context. Nevertheless, the current findings suggest the need for improvements before clinically useful approaches can emerge. For example, the edges that drove successful prediction varied across cross-validation loops. Such patterns complicate attempts to identify one set of robustly predictive edges. Findings in this study also failed to reveal patterns closely overlapping with regions previously associated with anxiety. As in Linke et al. (Reference Linke, Abend, Kircanski, Clayton, Stavish, Benson and Pine2021), the current findings could reflect a ‘many-to-one’ pattern, where complex collections of connections in the brain interact to shape pediatric psychopathology.

The prediction of therapeutic response in this study went beyond a mere exercise in predicting unseen data; it related functional connectivity to a gold-standard, clinically relevant outcome, over and above baseline severity and demographic factors such as age and sex. Models that used imaging data to predict posttreatment PARS with baseline PARS as a nuisance covariate performed better than models without it. This is to be expected, as a subject's baseline symptom level may be related to both brain measures and treatment outcomes.
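One common way to enter age, sex, and baseline PARS as nuisance covariates in the first stage of CPM is partial correlation: regress the covariates (plus an intercept) out of both the edge values and the outcome, then correlate the residuals. This is a minimal Python sketch assuming NumPy/SciPy and residualization by least squares; it illustrates the idea and is not necessarily how the models in this study were implemented.

```python
import numpy as np
from scipy import stats


def partial_corr(x, y, covariates):
    """Pearson correlation of x and y after regressing covariates out of both.

    covariates: (n,) or (n, k) array, e.g. columns for age, sex, baseline PARS.
    Returns (r, two-sided p) with n - 2 - k degrees of freedom.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    design = np.column_stack([np.ones(len(x)), np.asarray(covariates, dtype=float)])
    beta_x, *_ = np.linalg.lstsq(design, x, rcond=None)
    beta_y, *_ = np.linalg.lstsq(design, y, rcond=None)
    rx = x - design @ beta_x  # part of x not explained by the covariates
    ry = y - design @ beta_y  # part of y not explained by the covariates
    r = float(np.corrcoef(rx, ry)[0, 1])
    df = len(x) - 2 - (design.shape[1] - 1)
    t = r * np.sqrt(df / (1.0 - r ** 2))
    p = float(2 * stats.t.sf(abs(t), df))
    return r, p
```

When two variables are both driven by a covariate (for example, baseline severity), their raw correlation can be high while the partial correlation is close to zero.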

Prediction offers the potential to stratify subjects according to the likelihood of treatment success, to identify those who may need additional support, and to support mechanistic theories of psychopathology and their links to novel therapeutics, although some have urged caution (Mitchell, Potash, Barocas, D’Amour, & Lum, Reference Mitchell, Potash, Barocas, D’Amour and Lum2021). Indeed, precision medicine and personalized clinical predictions have garnered increasing attention in recent years (Fair & Yeo, Reference Fair and Yeo2020; Laumann, Zorumski, & Dosenbach, Reference Laumann, Zorumski and Dosenbach2023). However, a recent systematic review of 308 prediction models for psychiatric outcomes reported that 95% of studies were at high risk of bias, primarily due to overfitting and biased variable selection methods, and that only 20% performed external validation on an independent sample (Meehan et al., Reference Meehan, Lewis, Fazel, Fusar-Poli, Steyerberg, Stahl and Danese2022), highlighting the need for robust methodology and validation in clinical models.

Resting-state fMRI is frequently criticized as an imprecise picture of the brain's activity at rest, given that rsFC has been shown to relate to numerous uncontrolled variables such as mood (Harrison et al., Reference Harrison, Pujol, López-Solà, Hernández-Ribas, Deus, Ortiz and Cardoner2008) and alertness (Chang et al., Reference Chang, Leopold, Schölvinck, Mandelkow, Picchioni, Liu and Duyn2016); nonetheless, rsFC yields more consistent results in predictive models than in other analytical approaches (Taxali, Angstadt, Rutherford, & Sripada, Reference Taxali, Angstadt, Rutherford and Sripada2021). Notably, analysis of task-based fMRI from the same trial shows baseline differences and a return to normality after CBT (Haller et al., Reference Haller, Linke, Grassie, Jones, Pagliaccio, Harrewijn and Brotman2024). Tasks designed to draw out individual differences in the measure of interest may add predictive power to CPM and reduce confounding due to lack of engagement during rest (Finn et al., Reference Finn, Scheinost, Finn, Shen, Papademetris and Constable2017), as demonstrated in recent research (Barron et al., Reference Barron, Gao, Dadashkarimi, Greene, Spann, Noble and Scheinost2021; Greene et al., Reference Greene, Gao, Scheinost and Constable2018; Rosenberg et al., Reference Rosenberg, Finn, Scheinost, Papademetris, Shen, Constable and Chun2016). A general functional connectivity (GFC) measure based on multiple fMRI tasks may also have advantages over single-task CPM: Elliott et al. (Reference Elliott, Knodt, Cooke, Kim, Melzer, Keenan and Hariri2019) showed higher test-retest reliability and heritability for GFC than for rsFC, and GFC may also improve prediction over single-task FC, whether computed from averaged connectomes or from concatenated time series (Gao et al., Reference Gao, Greene, Constable and Scheinost2019).
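The two ways of building a GFC connectome mentioned above differ only in where the pooling happens: either each task's connectome is computed and the matrices are averaged, or the task time series are concatenated before a single connectome is computed. This minimal Python/NumPy sketch assumes (timepoints × nodes) arrays per task, Fisher z-transformation before averaging, and per-run z-scoring before concatenation; these preprocessing choices and the function names are assumptions, not details from the cited work.

```python
import numpy as np


def connectome(ts):
    """Node-by-node Pearson correlation matrix from a (timepoints, nodes) array."""
    return np.corrcoef(ts, rowvar=False)


def gfc_average(task_series):
    """Average of per-task connectomes, Fisher z-transformed before averaging."""
    zs = [np.arctanh(np.clip(connectome(ts), -0.999999, 0.999999)) for ts in task_series]
    avg = np.tanh(np.mean(zs, axis=0))
    np.fill_diagonal(avg, 1.0)  # restore the unit diagonal altered by clipping
    return avg


def gfc_concatenate(task_series):
    """Connectome from time series concatenated across tasks, each run z-scored first."""
    standardized = [(ts - ts.mean(axis=0)) / ts.std(axis=0) for ts in task_series]
    return connectome(np.vstack(standardized))
```

Z-scoring each run before concatenation keeps differences in run mean or variance from inflating the pooled correlations; averaging in Fisher z space is a common convention for combining correlation coefficients.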

Our finding that neither CPM nor APM reliably predicted PARS scores after treatment illustrates the difficulty of using imaging-based measurements to improve treatment outcome predictions in a clinical sample. Further research is needed to explore how integrating different imaging modalities might benefit predictive algorithms.

Individual uniqueness

As in a previous study (Mantwill et al., Reference Mantwill, Gell, Krohn and Finke2022), there was no overlap between the edges selected during CPM and the edges used for subject identification. In this study, selecting the most discriminatory edges from fingerprinting led to results comparable to CPM. The edges selected in each case may represent unrelated sources of variability; both approaches to edge selection might capture relevant yet distinct information (Finn & Rosenberg, Reference Finn and Rosenberg2021). However, in our study, neither approach appeared more promising than the other.

Limitations

Our study has a relatively small sample size. The limited number of subjects in each dataset led us to use leave-one-out cross-validation, which can yield unstable estimates of accuracy (Varoquaux et al., Reference Varoquaux, Raamana, Engemann, Hoyos-Idrobo, Schwartz and Thirion2017). This limitation is partly offset by the availability of a second dataset with similar inclusion criteria and study design for validation.

A further limitation concerned model selection: some models appeared successful when judged by the correlation coefficient but in fact predicted the target value worse than the mean. These results highlight the need to use more than one metric when assessing predictive models. Another factor limiting the application of CPM in our sample is that the best models showed an MAE of approximately 3.4 (PARS ranges from 0 to 25). Using predictive models in clinical practice is an emerging science, and the added value these models can bring to clinical practice remains uncertain and needs to be assessed objectively. In a study that stratified the risk of mental health crisis using an automated model based on health registry data, most clinical teams found the measure useful, although it led to relatively few additional actions (Garriga et al., Reference Garriga, Mas, Abraha, Nolan, Harrison, Tadros and Matic2022). Finally, such predictive algorithms need to be trained and tested in more diverse settings. Our sample consisted mostly of white Americans with high family incomes. Future studies should test whether predictive models generalize to populations of diverse ethnic and cultural backgrounds.
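How a model can look successful by correlation yet predict worse than the mean is easy to show with toy numbers (invented for illustration, not study data): predictions that are a shrunk and shifted copy of the observed scores correlate perfectly with them, but their R² is negative and their MAE exceeds that of simply predicting the sample mean. The sketch assumes Python with NumPy.

```python
import numpy as np

# Toy scores, not study data: predictions are a linear transform of the truth
observed = np.array([10.0, 12.0, 14.0, 16.0, 18.0])
predicted = observed / 2 + 2  # [7, 8, 9, 10, 11]

r = np.corrcoef(observed, predicted)[0, 1]
r2 = 1 - np.sum((observed - predicted) ** 2) / np.sum((observed - observed.mean()) ** 2)
mae = np.mean(np.abs(observed - predicted))
mae_mean = np.mean(np.abs(observed - observed.mean()))  # baseline: always predict the mean

print(r, r2, mae, mae_mean)  # r ≈ 1.0, R² = -2.375, MAE = 5.0 vs. about 2.4 for the mean
```

Here SS_res = 135 and SS_tot = 40, so R² = 1 − 135/40 = −2.375 even though r ≈ 1.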

Conclusion

This study applied a predictive modeling approach to data from children and adolescents with anxiety, with limited success in predicting the outcomes of CBT. While some models showed moderate correlations between predicted and observed anxiety scores, overall predictive power was weak. Although the methods used here have shown promising results in other populations, including for predicting anxiety symptoms, our findings are in line with other recent work (Hilbert et al., Reference Hilbert, Böhnlein, Meinke, Chavanne, Langhammer, Stumpe and Lueken2024), in which the authors failed to predict response to CBT in adults with anxiety disorders using different machine learning methods based on rs-fMRI. We found mostly diffuse patterns of edges selected for prediction, limiting the interpretation of the findings. Despite the use of expert clinicians, gold-standard outcome measures, and medication-free subjects, the models failed to consistently identify robust patterns associated with treatment response. This study, therefore, does not provide evidence supporting the use of CPM to predict treatment outcomes in pediatric anxiety.

Supplementary material

The supplementary material for this article can be found at http://doi.org/10.1017/S0033291724003131.

Data availability statement

The data for subjects who consented to share their data will be available on OpenNeuro. The code necessary to generate the connectivity matrices and run the CPM and APM models will be available on the authors’ GitHub page (https://github.com/zugmana/CPM-Anx).

Acknowledgments

This work was supported by the Intramural Research Program of the National Institutes of Health (NIH) through ZIA-MH002781 (https://clinicaltrials.gov: NCT00018057). AMW receives support from the NIH through U54-HG013247. This work used the computational resources of the NIH Biowulf cluster (http://hpc.nih.gov).

Competing interests

The authors report no conflicts of interest to declare. All co-authors have seen and agreed with the contents of the manuscript.