Shelly is a seasoned industrial-organizational (I-O) psychologist working for a midsize multinational manufacturing company. Her team is responsible for designing and implementing the company's talent management (TM) processes, including performance management, high-potential identification and succession planning, leadership assessment, hiring and selection, and learning and development. Although her team includes only a few I-O psychology practitioners, Shelly strives to leverage the available academic literature and to conduct internal research before implementing major design changes to their TM systems, just as she was taught in graduate school. One day, the chief human resources officer (CHRO) pulls her into a meeting, where she is introduced to two individuals from a Silicon Valley start-up. The start-up's CEO is a young engineer from an Ivy League university who, despite his age, has already founded and sold two start-ups in the fashion industry. Joining him is the start-up's chief technology officer, who is introduced as a neuroscientist specializing in “the brain science of millennials.” They are pitching a product that they claim will help Shelly's company “attract, engage, and retain the next generation of talent.” They offer some vague statistics about the process: “reducing turnover by 20%, time to hire by 45%, and recruitment costs by a third, all with the promise of no adverse impact, guaranteed.” As she hears more about the start-up, she learns that the company employs about 10 people (none of whom are I-O psychologists or talent management specialists), is in a Series C round of venture funding, and has already signed up several large Fortune 100 companies as clients. All of this sounds impressive, but when Shelly asks what these other companies are actually doing with the start-up, the details are shrouded in “negotiations”; the CEO promises that more information will soon be available.
Undeterred, Shelly's CHRO asks her to work with the company's IT department to help the start-up set up a pilot with a small group within the company. The CHRO pulls Shelly aside after the meeting and says, “Listen, I want you to really evaluate this pilot. Make sure you kick the tires. But this could really be a huge transformation for our department if this works. So I'm expecting good results.” Shelly returns to her desk with a knot in her stomach, feeling as though much of what she has learned as an I-O psychologist is being called into question. She thinks to herself, “Seriously? Millennial neuroscience?”

The above example is, of course, fictional, but it was constructed to reflect our collective experiences and to illustrate the points we hope to make in this article. Today's organizations are under constant pressure to simplify and streamline (and reduce costs) while simultaneously increasing the impact of their talent processes. Given the central role that many I-O psychology practitioners play in this agenda (e.g., high-potential talent identification, talent assessment and selection, learning and development, performance management, employee engagement, team effectiveness, and leadership development), it is commonplace for senior clients to ask us to demonstrate our return on investment. This is nothing new; it has challenged practitioners (particularly internal practitioners) in I-O psychology, organization development, and human resources in general for decades (e.g., Becker, Huselid, & Ulrich, 2001; Church, 2017; DeTuncq & Schmidt, 2013; Edwards, Scott, & Raju, 2003; Savitz & Weber, 2013). However, recent forces in the marketplace are increasing the pressure to question, radically change, or even eliminate not only the tools and processes we create but also how we create and use them. At the same time, we are seeing a wealth of new tools, technologies, and approaches in TM, many of which are not driven by I-O psychologists at all.
In fact, aside from a handful of key contributions to TM from I-O practitioners (e.g., McCauley & McCall, 2014; Scott & Reynolds, 2010; Silzer & Dowell, 2010), many of the leading books in the area are written by academics and/or practitioners from other disciplines such as organizational behavior, neuroscience, economics, management science, or other fields entirely (e.g., Boudreau & Ramstad, 2007; Cappelli, 2008; Charan, Carey, & Useem, 2014; Charan, Drotter, & Noel, 2001; Effron & Ort, 2010; MacRae & Furnham, 2014; Rock, 2007). Although there is nothing inherently wrong with this, it does raise a flag in our minds as to who is (and who should be) the driving force behind the TM movement in theory and practice. As with other trends in which practice adoption has outpaced I-O psychology's theory and research (e.g., coaching), this has prompted repeated self-reflection on the relevance of our field (e.g., Ones, Kaiser, Chamorro-Premuzic, & Svensson, 2017).

We call this confluence of factors in today's workplace anti-industrial-organizational psychology (AIO). Our purpose in naming this phenomenon is not to create a theoretical construct but to recognize a trend in the industry that has significant potential to damage the field of I-O psychology and to undo the positive efforts and outcomes associated with our work over the last 60-odd years. As such, the phenomenon is the focus, not the term itself. We could just as easily have called it “bright shiny object talent management” or something similar. We chose to call it anti-I-O because we believe the trend is not advancing the field; in fact, quite the opposite. For the record, we have nothing against the talent management practice area in general (some of us have worked in TM for years). Instead, our collective concern as scientist–practitioners is that these forces are converging in ways that are at best ahead of, and at worst at odds with, our theory and research. Moreover, we are concerned that if this trend continues, organizations will begin to take steps backward in their organizational health.

That said, some of the ideas inherent in the AIO movement are good. There is a need, however, to separate the wheat from the chaff. The challenge for I-O psychology is to distinguish practices that might have merit but lack research backing to date from those that are completely ineffectual. Although this phenomenon pushes our thinking and requires us to consider new approaches at an unprecedented pace, we also believe that it is short-cutting fundamental aspects of our field, such as applying evidence-based solutions or leveraging past research to inform TM system design. Our hope is that this article will stimulate new dialogue, theory, research, and applications in areas where key gaps exist or refreshed thinking is needed to reinforce the goals of the field of I-O psychology as “science for a smarter workplace.” More broadly, we hope our field will shift how it goes about its work, both in where we focus our research and in how we innovate new tools and practices.

Therefore, the purpose of this article is to raise awareness of the issues of AIO, to describe the factors driving this trend using several key I-O psychology practice domains as examples, and to offer a call to action for I-O psychologists to move the field forward through a series of strategic recommendations. As part of our discussion, we present a framework that attempts to describe how AIO concepts flow through (or around) our field so that we might better understand where I-O psychologists can and should play in this increasingly crowded space we call talent management.

There are differing opinions in the field regarding the definition of the term talent management (Church, 2006, 2013; Morgan & Jardin, 2010; Silzer & Dowell, 2010; Sparrow & Makram, 2015; Swailes, 2016) and regarding what is considered “in” or “out” of the practice area versus other specialties such as I-O psychology, organization development, learning, analytics, or even human resources more broadly (research by Church and Levine, 2017, documents the wide variety of internal organizational structures in this area). For the purposes of this article, we define TM and AIO as follows:

• Talent management: The use of integrated science-based theory, principles, and practices derived from I-O psychology to design strategic human resource systems that serve to maximize organizational capability across the full talent lifespan of attracting, developing, deploying, and retaining people to facilitate the achievement of business goals (adapted from Silzer & Dowell, 2010)

• Anti-industrial-organizational psychology: The use of novel, trendy, simplistic, or otherwise “pet” frameworks, and “best practices” regardless of their basis in science (and sometimes intentionally at odds with the science) to create a “buzz” in an organization or the industry, and/or achieve specific objectives identified as critical (for whatever reason) by senior leadership to demonstrate “quick wins” or “points on the board” that impact talent management–related programs, processes, and systems in organizations

There are a few things to note about our AIO definition. First, it references novel, trendy, simplistic, or “pet” frameworks regardless of their basis in science. This includes a wide spectrum of possibilities, from the outlandish to ideas that might eventually prove to be sound practice but are too novel to have a research basis yet. Second, our definition states that the intent of using these practices is to create a buzz or to demonstrate quick wins or points on the board. Note that this intent is very different from the more traditional, longer term objectives of I-O psychology, such as improving climate and culture or increasing organizational or individual effectiveness. Implicit in this definition is that organizations guilty of AIO are merely looking for a quick fix to their immediate problem or, at the very least, are unaware of (or unconcerned with) its long-term impact and effectiveness. Of course, marketing and sales considerations on the consulting side of the business sometimes drive adoption of new products and services as well (e.g., through new terms, tools, or engaging ideas, or through sweeping claims). Although AIO is significantly more prevalent among consulting firms lacking strong I-O practitioner capabilities, it still happens, and it can be particularly frustrating when a start-up rolls out its one “expert” to explain the validity of the company's approach or research, only for us to discover gaping holes in that research or approach. Because TM is at once so amorphous and so expansive, it has opened the door to every possible idea (simple, novel, repackaged, hackneyed, solid, or otherwise), making the AIO phenomenon a reality.

Our concerns about AIO may sound familiar to those raised in other areas, such as the evidence-based management (EBMgt) literature (e.g., Pfeffer & Sutton, 2006; Rousseau, 2006; Rousseau & Barends, 2011), which emphasizes using scientific evidence to inform managerial decisions. EBMgt was originally adapted from the medical profession and is now a major focus in the organizational behavior (OB) field, supported by organizations such as the Center for Evidence-Based Management (https://www.cebma.org/), whose website houses a number of excellent resources on the topic. Although there are many similarities to the issues raised here with respect to organizational practices, our focus is more specifically on the front end of the AIO equation, that is, the challenges associated with responding to the influx of AIO concepts and ideas and their influence on senior leaders, not just the good practice of evidence-based HR.

Background: How Did We Get Here?

Over the last several decades, there has been a steady stream of dialogue about the “science–practice” gap within our profession and about how I-O psychology has been losing its relevance and impact (Anderson, Herriot, & Hodgkinson, 2001; Church, 2011; Lawler, 1971; Pietersen, 1989). Many of these discussions attribute our declining (or absent) relevance to a growing gap between science and practice. In other words, academics have lost touch with the needs of practitioners, and practitioners are losing their grounding in science and rigor. Although Zickar (2012) reminds us that this dynamic is neither specific to I-O psychology (or to psychology, for that matter) nor new, others (Silzer & Cober, 2011; Silzer & Parsons, 2011) present compelling data (how else to convince I-O psychologists!) that the gap does indeed exist and may even be widening.

Seven years ago, Ryan and Ford (2010) warned that our field was at a “tipping point” regarding our professional identity. They argued that we have drifted too far from psychology and are at a critical juncture regarding our distinctiveness from other disciplines, which bears on our ability to influence organizational decision makers, to influence public policy, and to control licensure issues. They posited four scenarios, each varying by the degree to which our field strengthens, or pulls away from, its linkages to the broader field of psychology. Only one scenario ends up strengthening our identity: one in which we, among other things, place an emphasis “on linking applied issues to psychological constructs and frameworks” (Ryan & Ford, 2010, p. 253).

More recently, the dialogue has shifted away from an internal focus (on the science–practice gap) toward a more external focus on what we would term AIO. In the April 2017 edition of The Industrial-Organizational Psychologist (TIP), Ones et al. (2017) discuss how I-O psychology as a discipline has become too focused on “methodological minutiae,” overemphasizing theory and losing touch with business needs. They noted:

We see a field losing its way, in danger of becoming less relevant and giving up ground to other professions with less expertise about people at work—but perhaps better marketing savvy and business acumen. Without a fundamental reorientation, the field is in danger of getting stuck in a minority status in organizations; technocrats who apply their trade when called upon but not really shaping the agenda or a part of the big decisions.

They point to a variety of factors that have contributed to our losing touch, including the suppression of exploration and innovation and an unhealthy obsession with publications at the expense of practical issues.

In a similar vein, Chamorro-Premuzic, Winsborough, Sherman, and Hogan (2016) point out that technology, particularly in the area of talent assessment and identification, is evolving faster than I-O research, leaving academics either on the sidelines or playing catch-up. They note that when innovation or technology advances faster than research can support, I-O practitioners and HR professionals are left without knowledge of the innovation's validity, its ethical implications, and its potential to disrupt traditional and well-accepted methods.

This phenomenon is not just being discussed in the scholarly literature. The popular magazine Talent Quarterly recently addressed this topic by naming its 14th issue “The Bullsh*t Issue” (2017). The magazine takes on several AIO trends directly, calling out 12 of them and explaining why each deserves the honor of appearing in the issue. These include power posing, emotional intelligence, grit, learning agility, and strengths-based coaching. Each involves a promise of unsubstantiated benefits, false or shaky research backing, or a desire to create a “buzz” and drive a personal agenda, all of which are central to our definition of AIO.

How Does AIO Happen?

Arguably, our field has never been more popular. In the 2014 Bureau of Labor Statistics list of fastest-growing occupations, industrial-organizational psychology was listed as number one (Farnham, 2014; see Footnote 1). Attendance at our field's annual conference has risen steadily over the years; 2018 had the highest attendance to date. Student enrollment in graduate programs continues to climb. Membership is also at an all-time high. Clearly, it is not a lack of quality talent in our field that breeds AIO. On the contrary, we contend that our reliance on evidence-based practice creates the space for AIO. In other words, we are a discipline that is not geared toward being cutting edge.

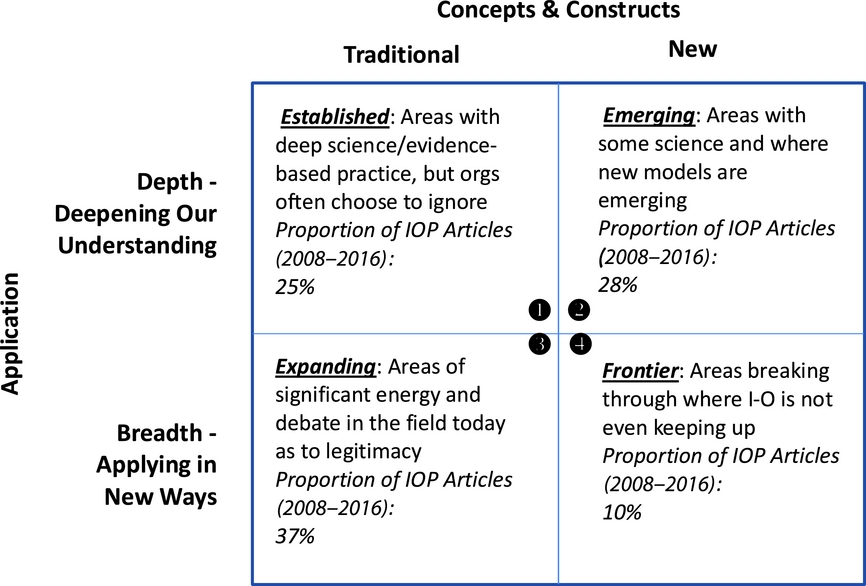

What do we mean by this? We propose that TM innovation progresses along one of two paths, depicted in what we refer to as the talent management process flow (Figure 1).

Figure 1. Talent management process flow.

It starts with demands from the external environment. Organizations must continually react and adapt to volatile, uncertain, complex, and ambiguous (VUCA) forces in the environment in which they operate. These external forces ultimately drive organizational change and are described in classic organization development (OD) frameworks (e.g., Burke & Litwin, 1992). Examples include addressing a competitor threat, experiencing geopolitical unrest that causes economic uncertainty, or undergoing a major acquisition or merger. These events invariably have a significant impact on (and are sometimes driven by) an organization's fundamental business strategy. Successfully navigating these strategic shifts requires changes in how HR acquires, develops, manages, and/or rewards talent. As a result, needs emerge that require solutions. Those solutions can either follow existing, known methods and processes that work or draw on new, innovative, and potentially “faster” or “smarter” ways to achieve the business objective or meet the pending strategic need (Silzer & Dowell, 2010).

It is our contention that organizations pursue one of two paths to address these challenges, which typically are mutually exclusive. Path One is the more traditional path and the one I-O psychologists are trained for in their graduate studies: pragmatic science, as termed by Anderson et al. (2001). This path addresses organizational problems in a methodologically robust manner. It is what typically distinguishes I-O psychologists from many other disciplines, and it is where organizations seek the advice and counsel of I-O psychologists to solve their most pervasive and pressing issues.

In this path, we conduct research and apply our I-O expertise, knowledge of the research literature, and best practices to derive sound and rigorous solutions for our clients. Most often, this includes a period of initial data collection to inform the solution design. For example, we conduct job analyses and training needs analyses, collect employee survey data, and conduct organizational diagnoses using input from subject matter experts including senior leaders, job incumbents, boards of directors, and so on. This path also typically includes a period of evaluation prior to full deployment to ensure our solutions are valid and working as intended. For example, we conduct validation studies, training evaluations, utility analyses, and so forth. Academically, we conduct studies establishing the convergent and discriminant validity of the constructs; path analyses to show the antecedents, moderators, and mediators; and ultimately meta-analyses to firmly establish the concept as a legitimate phenomenon. Because Path One is evidence-based, implemented solutions have measurable and significant organizational impact (otherwise organizations would not have pursued evidence-based solutions to begin with). Over time, these solutions become embedded in the lexicon of talent management solutions through continuous research and refinement of the given approach (Arrow 1). Training evaluation (Kirkpatrick, 1959, 1994) is perhaps a good example. Although not a refined theory, Kirkpatrick's framework is well accepted and has become a standard for evaluating the impact of training in organizations.

Occasionally, our vetted solutions fade into oblivion, and we are left to follow Arrow 2. In other words, they have simply outlived their usefulness, and we either retire them or never fully implement them. This could be due to their complexity or lack of practical application, or they may simply stop being used because societal norms or the nature of work has changed. Examples include time–motion studies (no longer relevant for the modern knowledge workforce), business process re-engineering (made less of a process by technology such as cloud computing), and techniques for improving paper-based survey methodology and mail survey response rates (replaced by online and mobile response capturing).

Despite the confidence that the first path provides, there is a second path, the popularist science path, toward which we believe organizations are increasingly pulled (i.e., non-evidence-based approaches). This path attempts to achieve the same goal of satisfying the talent management needs discussed above. However, it is driven by an organization's need for speed and simplicity and by the belief that there is a “magic bullet” that will deliver better solutions than the current process. This latter point stems from management's tendency to oversimplify (or, equivalently, underappreciate) the complexity of talent management. This is where individual leaders, organizations, and even an entire industry can become enthralled by the belief that the latest is the greatest.

Why are organizational leaders attracted to this risky path? Miller and Hartwick (2002) reviewed 1,700 academic, professional, business, and trade publications over a 17-year period to identify common characteristics of business fads. They found that business fads shared the following eight characteristics:

1. Simple – Easy to understand and communicate and tend to have their own labels, buzzwords, lists and acronyms

2. Prescriptive – Explicit in telling management what to do

3. Falsely encouraging – Promises outcomes such as greater effectiveness, satisfied customers, etc.

4. One-size-fits-all – Claims of universal relevance—not just within an organization but across industries, cultures, etc.

5. Easy to cut and paste – Simple and easy to apply, and thus amenable to partial implementation within an organization. As a result, they tend not to challenge the status quo in a way that would require significant redistribution of power or resources

6. In tune with the zeitgeist – Resonates with pressing business problems of the day

7. Novel, not radical – Does not unduly challenge basic managerial values

8. Legitimized by gurus and disciples – Gains credibility by the status and prestige of their proponents versus empirical evidence

It's easy to see why management gravitates toward these potential solutions over tried-and-true practices. They promise a simple, holistic solution in less time and with better results. Conversely, the evidence-based path takes longer and requires more resources to create and deploy, and there is always a possibility (even if slight) that the evidence will not support the proposed solution.

Although the promises of these fads are rarely realized, there are occasions when the field coalesces around conceptual definitions, engages in a variety of applied and conceptual research, and finds that these novel solutions provide unique value in organizations (Arrow 3). Multisource (or 360-degree) feedback is a good example. This tool first became popular in the 1990s and was largely considered a passing fad when Fortune declared that “360 can change your life” (O'Reilly, 1994). The volume of literature published on the topic over the next 10 years (e.g., Bracken, 1994; Bracken, Timmreck, & Church, 2001; Carey, 1995; Ghorpade, 2000; Lepsinger & Lucia, 1997; London, 1997) helped organizations understand when to use it, how to use it, and the value that the feedback provides. It has since become a standard tool for so many types of individual and organizational applications that its misuse is now a topic of discussion (Bracken, Rose, & Church, 2016). Personality assessment is perhaps another relevant example. Once the field generally reached consensus on the construct by way of the five-factor model (Barrick & Mount, 2012), the research agenda could focus more pointedly on the validity of personality in predicting performance and potential, and on the unique variance personality explains alongside other constructs. Once its organizational impact was established, personality assessment became more prevalent and is now a core component of employment testing, senior executive assessment, and high-potential identification (Church & Rotolo, 2013).

More often than not, however, these “bright shiny objects” fade into oblivion (Arrow 4), primarily because they do not live up to their original promise and prove to have little to no value to the organization, or because they cause more harm than good (e.g., from a legal, cultural, or social justice perspective). They might even achieve broad adoption and heavy media attention, but their life span is short. Examples include holacracy, management by objectives, and overly simple talent management processes (e.g., tautological and/or unidimensional definitions of potential, ill-defined nine-box models). This is akin to what Anderson et al. (2001, p. 394) called popularist science, in which attempts are made to address relevant issues but fail to do so with sufficient rigor, resulting in “badly conceived, unvalidated, or plain incorrect research” from which “ineffectual or even harmful practical methods may result.”

As mentioned, I-O psychologists are quite often asked to evaluate and/or salvage these “quick fix” solutions (Arrow 5). Most often, there is no science or evidence to suggest that these solutions are the panaceas they purport to be. It often falls to I-O psychologists to be the bearers of bad news or to propose a lengthy evaluation to ensure that the solution (a) does what it purports to do, (b) has the impact it promises to have, and (depending on the application) (c) has the legal defensibility it needs so that it does not put the company at risk. Sadly, we are often left “making lemonade from a bunch of lemons,” having to figure out ways to implement these solutions in a minimally acceptable way. Either way, it is the obligation of I-O psychologists as scientist–practitioners to demand and/or provide the “science” side of the spectrum when we encounter a practice that has no science behind it.

Sometimes elements of the fad persist while other aspects fade into oblivion (Arrow 6). For example, management by objectives (MbO) is often cited as a management fad; it was popularized by Peter Drucker in the 1950s and rose to prominence in the 1960s and 1970s for its simple focus on goals and metrics. Although MbO as such is rarely practiced today, elements of it persist. For example, goal management and continuous feedback practices, key characteristics of MbO, are core to some of the new performance management systems (Hanson & Pulakos, 2015). Additionally, elements of gamification applied to TM systems stem from some of the basic principles of goal setting.

So How Are We Faring as a Field?

To challenge ourselves, and to look for evidence as to whether our field is in fact more focused on pragmatic science than on popularist science, we examined the Society for Industrial and Organizational Psychology's (SIOP's) official publication Industrial and Organizational Psychology: Perspectives on Science and Practice. We coded each focal article from the journal's inception in 2008 through 2016 (n = 72). Coders were four I-O psychologists (two authors and two independent colleagues). We first coded each article independently and then finalized the coding of each article through a consensus process.

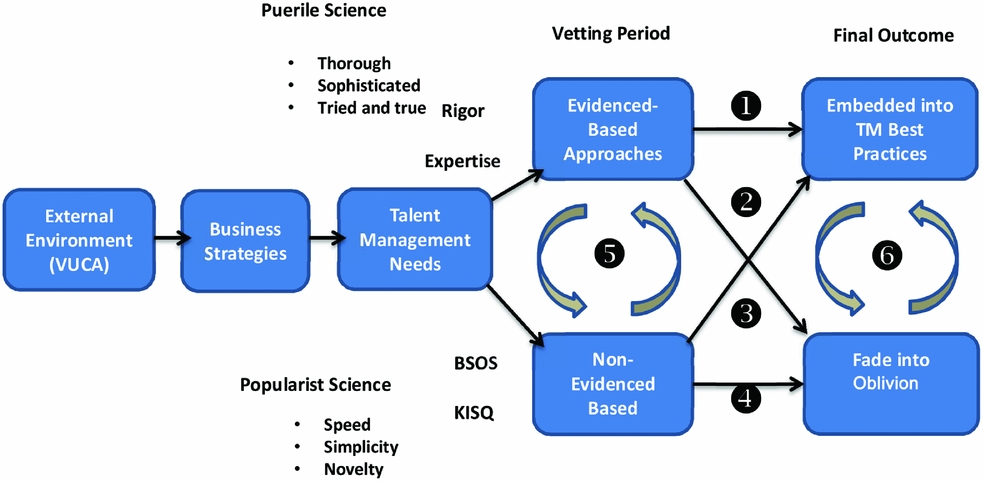

We coded articles on two variables: (1) whether the focal article's concepts and constructs would be considered traditional I-O psychology topics (e.g., selection, performance management) or relatively new concepts (e.g., engagement, high potential); and (2) whether the article focused on deepening our existing knowledge of the topic or on expanding the application of the construct or practice. This created four quadrants (see Figure 2), which we labeled established (deepening our knowledge of a core topic), emerging (deepening our knowledge of a noncore area), expanding (areas of significant energy whose legitimacy is still being debated), and frontier (areas with significant energy in which I-O psychology as a field is not significantly embedded). We omitted any focal articles that focused on the field or profession itself (n = 4). We also coded the authors of each article as academic, practitioner, or mixed.

Figure 2. Matrix of I-O focus: Construct × Application.

For example, we coded Lievens and Motowidlo's (2015) article on situational judgement tests (SJTs) as “established” because it furthered the knowledge base on SJTs and how they fit within the nomological net of similar constructs. Conversely, we coded Adler, Campion, Colquitt, and Grubb's (2016) timely debate on getting rid of performance ratings as “expanding.” Performance management (PM) is clearly a well-established area of I-O, and the article did a nice job of debating whether many of the new trends in PM, such as removing ratings from the process, had any merit. If the criticisms of our profession are valid, we would expect a majority of focal articles to focus on traditional rather than new constructs. Further, we would expect more articles in the established category than in the others, and relatively few articles focused on frontier issues.
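The two coded variables combine into the four quadrants mechanically. The sketch below is a hypothetical illustration of that mapping, assuming each article's consensus codes are stored as simple strings; the labels (`"traditional"`/`"new"`, `"deepening"`/`"expanding"`) and the function names are our own assumptions, not part of the original coding protocol.

```python
from collections import Counter

# Hypothetical encoding of the 2 x 2 scheme: (construct type, article focus) -> quadrant.
QUADRANTS = {
    ("traditional", "deepening"): "established",
    ("new", "deepening"): "emerging",
    ("traditional", "expanding"): "expanding",
    ("new", "expanding"): "frontier",
}


def classify(construct: str, focus: str) -> str:
    """Map one article's consensus codes to its quadrant label."""
    key = (construct, focus)
    if key not in QUADRANTS:
        raise ValueError(f"unrecognized code pair: {key}")
    return QUADRANTS[key]


def quadrant_counts(coded_articles):
    """Tally quadrant labels for an iterable of (construct, focus) pairs."""
    return Counter(classify(construct, focus) for construct, focus in coded_articles)


if __name__ == "__main__":
    # The two worked examples discussed in the text.
    print(classify("traditional", "deepening"))  # Lievens & Motowidlo (2015)
    print(classify("traditional", "expanding"))  # Adler et al. (2016)
```

Encoding the scheme as a lookup table makes the quadrant definitions explicit and rejects any code pair outside the two agreed variables, which mirrors the consensus step in which every article had to be resolved to exactly one quadrant.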

The results of the coding exercise largely supported our expectations. As can be seen in Figure 3, the majority of focal articles (62%) discussed more traditional areas of I-O. Surprisingly, 37% of the articles focused on new applications of established constructs (expanding), whereas only 25% fell into the “established” category. Another 25% were emerging, meaning they deepened our understanding of new constructs or models. Perhaps not surprisingly, only 10% were what we would consider frontier, that is, tackling areas that are truly new and unestablished. Detailed results of the coding steps and outcomes are provided in Appendix A (see Footnote 2).

Figure 3. Classification of IOP focal articles, 2008–2016.

We find even more interesting results when we examine the content by author type. As Table 1 shows, the majority of articles overall were written by academics (44 of 72, or 61%). Practitioners accounted for only 10 articles (14%), and the remaining 18 were written by mixed teams of authors. By content type, articles classified as established were written primarily by academics (14 of 17, or 82%). Perhaps surprisingly, of the seven articles that we classified as frontier, four were authored by academics (57%), and only one was authored by practitioners. Despite this refreshing surprise, our analysis shows that our field, and academics in particular, is overly focused on traditional and established constructs rather than on new constructs or applications.

Table 1. Cross Tabulation of IOP Focal Articles (2008–2016) by Author and Content Type
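The author-type shares reported above follow directly from the counts. The short Python sketch below is only an arithmetic check, not part of the authors' analysis; it assumes that Table 1's three author categories (academic, practitioner, mixed) are exhaustive and sum to the 72 articles, and the helper name `share` is hypothetical.

```python
def share(part: int, whole: int) -> int:
    """Return `part` as a percentage of `whole`, rounded to the nearest whole number."""
    return round(100 * part / whole)


# Counts as reported in the text (Table 1).
TOTAL_ARTICLES = 72
ACADEMIC = 44
PRACTITIONER = 10
# Assumption: the three author categories are exhaustive, so "mixed" is the remainder.
MIXED = TOTAL_ARTICLES - ACADEMIC - PRACTITIONER

print(f"academic: {share(ACADEMIC, TOTAL_ARTICLES)}%")          # academic: 61%
print(f"practitioner: {share(PRACTITIONER, TOTAL_ARTICLES)}%")  # practitioner: 14%
print(f"mixed: {MIXED} articles")                                # mixed: 18 articles
print(f"established by academics: {share(14, 17)}%")            # established by academics: 82%
print(f"frontier by academics: {share(4, 7)}%")                 # frontier by academics: 57%
```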

One may argue that it is the role of academia to thoroughly test I-O (and therefore TM) constructs and practices. But as Ones et al. (2017, p. 68) argue, an overfocus on “methodological minutiae” is causing I-O to become “more and more precise in ways that matter less and less.” They point out that empirical papers submitted for publication today are “demonstrations of statistical wizardry but are detached from real-world problems and concerns.” We concur, and our analysis of IOP, which shows a preponderance of focal articles on established topics, supports this view. It makes us wonder whether the kind of research we publish as a field is related to how our key stakeholders perceive the field. If we want to shape our brand image, we should be more cognizant of the zeitgeist of what we publish.

Examples of the Four Quadrants

To illustrate the four quadrants, we summarize two examples per quadrant below. For each example, we include a summary of its history, research backing, and current status.

Quadrant I: Established: Areas With Deep Science/Evidence-Based Practice That Organizations Often Choose to Ignore

Performance management

Performance management as a content area contains several concepts that are core to I-O psychology, including (but not limited to) goal setting, feedback, developing employees (including coaching), evaluating performance, and rewarding performance. Each of these elements has received considerable attention from scholars and researchers. Extensive, in-depth reviews have been offered by Aguinis (2013) and Smither and London (2009, 2012). Despite the abundant research, two problems remain. First, far too many managers believe that performance management refers only to performance evaluation/appraisal. Such a view leads people to think of performance management as a once-a-year event in which managers sometimes provide biased evaluations while struggling to deliver useful feedback. Second, performance management processes can be time consuming, and their value is often questioned (Pulakos, Hanson, Arad, & Moye, 2015). Much of the dissatisfaction with performance management tends to center on performance evaluations. This makes sense given that nearly a century of research has shown that ratings can be biased by the rater's motivations (including political factors) as well as by cognitive errors associated with observing, storing, and recalling information about employee performance, and that these problems can be mitigated, but not completely remedied, by rater training or new rating formats or scales (for a review, see Smither & London, 2012).

Making big headlines, several companies, such as Adobe, Gap, and Cargill, have attempted to tackle these problems by eliminating performance ratings. Instead of annual ratings, these companies ask their managers to provide ongoing feedback (“check-ins”) to their employees throughout the year. Managers are also granted enormous discretion in allocating salary increases among employees. Pulakos et al. (2015) have correctly noted the history of “vicious cycles of reinventing PM processes only to achieve disappointing results” (p. 52). In this context, abandoning ratings could appear to be a reasonable next step. On the other hand, eliminating ratings, expecting managers to provide ongoing feedback, and enabling managers to allocate salary increases as they see fit might simply be another turn of that vicious cycle. Is it reasonable to expect managers who could not competently facilitate an annual review of employee performance to now become skillful providers of ongoing feedback and coaching? In the absence of ratings, will the size of an employee's salary increase become the new “rating” of the employee's performance? If we couldn't trust managers to provide fair and unbiased ratings, can we trust them to provide a fair and unbiased distribution of salary increases? As corporate training appears to be declining (Cappelli & Tavis, 2016), will managers receive the help they need to make these new approaches to performance management successful? Without ratings, what cues will employees use to determine where they stand in the company relative to their peers?

At this point, we do not know the answers to these questions. But a very large body of theory and research is available to provide guidance about enhancing feedback and coaching (e.g., Alvero, Bucklin, & Austin, 2001; Cannon & Edmondson, 2001; Edmondson, 1999; Graham, Wedman, & Garvin-Kester, 1993; Hackman & Wageman, 2005; Heslin, VandeWalle, & Latham, 2006; Ilgen & Davis, 2000; Kluger & DeNisi, 1996; Payne, Youngcourt, & Beaubien, 2007; Steelman, Levy, & Snell, 2004; Whitaker, Dahling, & Levy, 2007). The emphasis on ongoing feedback and coaching (rather than annual ratings) will likely fail if those who implement it do not leverage this vast body of knowledge. Sometimes, guidance can be found in established research that does not, at first glance, seem closely related to the issue at hand. For example, employee reactions to managers allocating salary increases in the absence of an annual review might be predicted by research on distributive, procedural, interpersonal, and informational justice (Colquitt, Conlon, Wesson, Porter, & Ng, 2001; Colquitt et al., 2013; Whitman, Caleo, Carpenter, Horner, & Bernerth, 2012), and the extensive justice literature can provide a useful conceptual framework for practitioners implementing this approach. Likewise, the extensive research literature on bias in human judgment will likely apply to managers who have to allocate salary increases in the absence of an annual review. That is, the same cognitive and motivational factors that lead to bias and error in ratings will likely lead to bias and error in salary allocations.

The bottom line is that a wave of companies have changed their PM processes, incorporating radically new design elements (e.g., not using ratings), not because these elements are grounded in a solid base of longstanding research but because the companies are following an emerging trend (Cappelli & Tavis, 2016). The good news is that I-O psychology has been part of the debate (Adler et al., 2016). The not-so-good news is that as a field we do not seem to have a consolidated point of view, which further fuels organizational experimentation.

Selection and assessment

Personnel selection and testing is arguably the area in which I-O psychologists have been most involved for over a century. We could even go so far as to say that modern selection and assessment as we know it today is one of the few areas that our field invented, or at least heavily shaped. Our field remains very much involved in the area today. A search of the last nine SIOP conferences (2008–2016) revealed that testing and assessment was one of the most prominent content areas at the conference, second only to leadership (620 vs. 629 sessions, respectively). Clearly, this is an area in which I-O psychology has deep expertise and one that tends to distinguish our field from others. In fact, it has become synonymous with SIOP's brand image (Rose, McCune, Spencer, Rupprecht, & Drogan, 2013).

Obtaining and maintaining this distinction has come about in large part because of the heavy research focus we maintain in this area. We continue to publish prolifically on this topic, have journals largely dedicated to it (e.g., International Journal of Selection and Assessment, Personnel Psychology), and have professional groups focused on it (e.g., International Personnel Assessment Council). Without a doubt, these efforts have improved practice. For example, activity has increased in both academic research and practice on the use of personality assessment in selection contexts (Scroggins, Thomas, & Morris, 2009). Applicant reaction and social justice research have helped us understand the attitudinal implications of applying assessment tools, procedures, and decision rules in selection contexts (e.g., Bauer, McCarthy, Anderson, Truxillo, & Salgado, 2012). Ample research on the advantages of structured over unstructured interviews (e.g., Campion, Palmer, & Campion, 1997) has resulted in most companies using behaviorally based interview techniques and adding structure to their scoring and decision-making processes. SIOP has also been actively involved in working with the Equal Employment Opportunity Commission (EEOC) to explore the possibility, scope, and implications of updating the Uniform Guidelines on Employee Selection Procedures (1978).

Our field has attempted to push the application of known tools and methods in assessment and selection (e.g., Hollenbeck, 2009; Morelli, Mahan, & Illingworth, 2014). However, relative to other players in the space, we would characterize these attempts as conservative, if not late to the conversation. In our view, much of the research over the past decade has focused on deepening our existing knowledge (Quadrant I) rather than on exploring how we can apply our knowledge in new areas (Quadrant III) or understanding how emerging technologies and concepts may fit into our existing lexicon (Quadrant IV). A variety of radical approaches is emerging within the selection and assessment area that could disrupt our current conception of selection and hiring. For example, as noted in Rotolo and Church (2015), several small start-up companies, often without I-O psychologists to guide their practice, are developing “web scraping” algorithms that compile candidate profiles from their digital footprints. These big data approaches may be criterion based, but we have seen little that abides by the Uniform Guidelines on Employee Selection Procedures (EEOC, 1978) or the Principles for the Validation and Use of Personnel Selection Procedures (SIOP, 2003).

These technologies are perhaps most notable because, if done properly and effectively (which we assume is just a matter of time), they could virtually eliminate the need for assessment and testing. The field of artificial intelligence, including machine learning, deep learning, and sentiment analysis, will pave the way to a more passive approach to data collection and assessment. In other words, instead of asking candidates to take personality inventories and situational judgment tests, we will be able to determine their personality, skills and capabilities, and cognitive ability through their continuous interactions with the technology around them (e.g., laptops, tablets, mobile devices, wearables). IBM, for instance, is already training its Watson computing platform to analyze an individual's personality just by reading his or her online writing (e.g., social media posts, blog entries).

However, research on the reliability and validity of these new approaches is still in its early stages (e.g., Levashina, Hartwell, Morgeson, & Campion, 2014), and few I-O psychologists are talking about the implications of these innovations for our field, for organizations, and for society. Although Chamorro-Premuzic et al.’s (2016) focal article in this journal on “new talent signals” stirred a robust dialogue on the topic, we believe that we need to continue such dialogues on these future-focused topics.

Quadrant II: Emerging: Areas With Some Science and Where New Models Are Emerging

High-potential identification

I-O psychologists have focused on the methods, tools, and processes of individual assessment for decades, and there are volumes of resources available to guide both research and practice in this area (e.g., Jeanneret & Silzer, 1998; Scott & Reynolds, 2010; Stamoulis, 2009; Thornton, Hollenbeck, & Johnson, 2010). Only relatively recently, however, has identifying high potentials as a unique classification of talent, or as a talent pool, emerged in the applied I-O psychology literature as one of the hottest areas of applied research and practice (Church & Rotolo, 2013; Silzer & Church, 2009, 2010). In many ways, this has occurred in tandem with the rise in popularity of TM itself in the field of I-O psychology. For many human resource professionals, the ability to identify high-potential talent is the “holy grail” (and sometimes the sum total) of what a successful talent management process should look like in their organizations (Church, 2015).

This simple logic is at the core of every talent management strategy, that is, the need to identify the best possible talent for (accelerated) development and succession planning. On the external side of the buy versus build equation (Cappelli, 2008), this is more about selection and perhaps effective on-boarding (Adler & Stomski, 2010) than anything else. On the internal side, it's about having tools and processes in place that help line managers and HR leaders segment talent into different pools that enable the differential distribution of development opportunities. Whether the approaches are based on simple (yet relatively limited) heuristics such as “two level jumps,” on flawed measures such as past performance ratings (the two most commonly used methods according to benchmark research; Church, Rotolo, Ginther, & Levine, 2015; Silzer & Church, 2010), or on assessment tools such as those based on personality theory (e.g., Hogan & Kaiser, 2010), cognitive ability tests (Schmidt & Hunter, 2004), or more comprehensive multitrait-multimethod models (e.g., Silzer, Church, Rotolo, & Scott, 2016), organizations are increasingly looking for data-based answers. In many ways, it is about identifying and focusing on the “few” versus the “many,” and that distinction just about sums up the entire difference between developing high-potential employees in TM applications and developing broader audiences in general (Church, 2013, 2014).

Unfortunately, not everyone is equally good at spotting future potential in people, and some are downright horrible at it, even with effective I-O assessment tools in place. Many large organizations have well-established TM systems and processes geared at identifying high-potential talent and moving it through different roles to accelerate its development from early career to the C-Suite (e.g., Carey & Ogden, 2004; Church & Waclawski, 2010; Effron & Ort, 2010; Grubs, 2004; Ruddy & Anand, 2010; Silzer & Dowell, 2010), and the concept of learning from experience has been around for decades (e.g., Lombardo & Eichinger, 2000; McCall, Lombardo, & Morrison, 1988). Even so, companies remain poor at both identifying and developing this talent. In their recent benchmark study of high-potential programs at large established organizations, Church et al. (2015) reported that 53% rated themselves a “3” in the maturity model, representing some degree of consistent implementation and executive engagement but lacking transparency, and another 23% rated themselves as either inconsistent or simply reactive overall. Only 8% reported being fully business integrated. This is despite the fact that 70% of the same companies are currently using assessments to inform their high-potential processes (Church & Rotolo, 2013; Church et al., 2015).

All of this makes high-potential identification a perfect example of the AIO emerging category described above. It is an incredibly hot topic in organizations today. Line managers have been talking the language of potential for decades and think they know everything there is to know. There are entire sub-functions inside organizations dedicated to conducting high-potential and executive assessments (Church & Levine, 2017). Yet theory and research have only just begun to catch up. As a result, senior clients want a “silver bullet” here as well. They are looking for the cheapest, most efficient, yet effective and valid (not to mention perfect and confirmatory of all of their own personal talent decisions) “assessment tool” that will work miracles. Most recently, we are seeing a trend toward those who believe that big data applications will finally solve this equation using complex algorithms based on web scraping and email mining, similar to Chamorro-Premuzic et al.’s (2016) talent signals concept. Although we have our concerns about making decisions with limited data in some of these areas (Church & Silzer, 2016), the bottom line is that if we as I-O psychology professionals do not get there in time, others will.

This is not to say there has been no progress. Comprehensive frameworks such as the Leadership Potential BluePrint (Church & Silzer, 2014; Silzer & Church, 2009; Silzer et al., 2016) and related models (MacRae & Furnham, 2014), as well as others offered by consulting firms (e.g., Aon-Hewitt, 2013; Hewitt, 2003, 2008; Paese, Smith, & Byham, 2016), have begun to emerge and are helping to guide practice. Unfortunately, the field has yet to agree on a single core model, and aside from a handful of academics and practitioners, the work is largely being driven by consulting firms. Although there has always been tension between the consulting (for-profit) side of I-O psychology and the academic side when it comes to knowledge creation, this concerns us if it either slows progress or results in ill-conceived tools and “bolt-on” constructs (AIO) designed to avoid overlap with others already in the market.

At the 2006 annual SIOP conference, for example, there were only four sessions with talent management and two with high-potentials in their titles in the entire program (suffice it to say that neither topic was a core content area for submissions either). All four of the TM sessions were submitted by internal practitioners, and one of these was featured in TIP (Church, 2006) and led to both the Silzer and Dowell (2010) Professional Practice series book and the second highly successful Leading Edge Consortium several years later. We are also aware of at least one workshop on high-potentials offered in 2009 (Parasher & McDaniel, as cited in Silzer & Church, 2009) and several since. Interestingly, siop.org indicates that at the 2017 conference, 11 years later, these topics had not grown in popularity: Once again, there were only four sessions on TM and four on high-potentials. The two topics are still entirely missing from the list of content areas offered by SIOP. We find this both fascinating and disappointing. This is clearly an emerging topic area (or one that is already fully here) and needs our attention before other types of consulting practitioners take over completely.

Engagement, culture, and other employee surveys

If you attend SIOP, or any conference for that matter, it is no secret that engagement has been a “hot” topic for over two decades now. The term employee engagement was originally introduced by William Kahn in a 1990 Academy of Management Journal article titled “Psychological Conditions of Personal Engagement and Disengagement at Work.” He described personal engagement and personal disengagement as “behaviors by which people bring in or leave out their personal selves during work role performances” (Kahn, 1990). According to Kahn, engagement is a psychological construct that has to do with the expression of oneself physically, cognitively, and emotionally in the workplace. Kahn developed the concept from the work of Maslow (1954) and Alderfer (1972), following the idea that people need self-expression and self-employment in their work lives. This idea that people's responses to organizational life stem from self-in-role behaviors as well as group membership was adapted from the fields of psychology (Freud, 1922), sociology (Goffman, 1961), and group theory (Bion, 1959).

Over the years, academic research into the construct of engagement grew, and new models and frameworks emerged. In a groundbreaking study, Schaufeli, Salanova, González-Romá, and Bakker (2002) described engagement as the “opposite” of burnout and broadened the scope of traditional burnout research, finding support for the construct in a variety of professions. They defined engagement as “a positive, fulfilling, work-related state of mind that is characterized by vigor, dedication, and absorption” (Schaufeli et al., 2002). It had also previously been described in the academic literature as being characterized by “energy, involvement, and efficacy” (Maslach & Leiter, 1997).

Seeing the merits of employee engagement in organizations, academics and practitioners alike have joined the discussion over the past 25-plus years, and new models and frameworks have been introduced in an attempt to better predict employee engagement. Moreover, considerable efforts have been made to link engagement to organizational outcomes such as employee performance and even business performance, thus demonstrating the “business case” for engagement to organizational leaders. As interest in engagement grew among scientists and practitioners, however, the divide between the two groups widened, and literature and practice became grossly misaligned. This has led to competing, inconsistent, and incorrect interpretations and explanations of the construct (Macey & Schneider, 2008; Newman & Harrison, 2008), to the point that engagement has even been called overrated and a potential nuisance to organizations (Church, 2016; Van Rooy, Whitman, Hart, & Caleo, 2011).

At the same time, organizations’ enthusiasm for engagement has led to the term being used as a label for everything, and unfortunately, this lack of clarity around exactly what is being measured in these various so-called engagement surveys means that we are really comparing apples to oranges rather than using one consistent framework. Organizations love to “benchmark” their survey scores against other companies’ scores both within and outside their industry, a practice that dates back to the origins of employee surveys as a core methodology and to the formation of consortia such as the Mayflower Group (Johnson, 1996); yet such comparisons between organizations are problematic because most are using different measures of the construct. Several years ago, even the Mayflower Group itself spent significant time and energy working to align a set of shared engagement survey items (and vacillated between the Macey and Schneider behavioral approach and the more common attitudinal frameworks). Furthermore, when benchmarking within organizations, there is likely to be resistance to changing a poor measure of engagement because historical comparisons would be lost. Taken together, it is difficult to make meaningful comparisons either within or between organizations given the current state of engagement surveys; however, organizations continue to do so anyway (Church, 2016).

Ultimately, engagement research is one of the most influential areas in which I-O psychologists can have an impact on an organization. Survey research, after all, blends our deep training in theoretical constructs with strong measurement and data analysis techniques, as well as organization development principles from an action planning perspective. Therefore, this is an area where we can and should continue to demonstrate our knowledge. However, as practitioners, we must strike a balance. Recently, I-O psychologists have begun to fall out of love with engagement and to introduce concepts of organizational health and organizational well-being (Bersin, 2014). As satisfying as it is to see the field continue to evolve its thinking in these areas, we must remember that it can be difficult for organizations to keep up with the latest research. Try explaining to C-Suite leaders that they should no longer be concerned with employee engagement! For I-O psychologists to truly add value, academics and practitioners must build bridges, not only to introduce the most scientifically sound, compelling research that is relevant to organizational leaders but also to teach new entrants to the field early on how to convey and explain obscure constructs in a meaningful way. Without this, organizations will be sold work in the form of the latest survey or “pulse check.” Inevitably, when these programs do not deliver on what was promised, the organization may turn its back on survey research altogether.

Quadrant III: Expanding: Areas of Significant Energy and Debate in the Field Today as to Legitimacy

Learning agility

Today's organizational environments are characterized by constantly changing objectives and strategies aimed at succeeding in dynamic, rapidly shifting markets. Employees are being asked to adopt new technologies, continuously improve processes, and take on roles without clearly defined responsibilities. In this context, it is no wonder that the concept of learning agility has come into the spotlight as a primary enabler of leadership capability and an early indicator of leadership potential (Lombardo & Eichinger, 2000; Silzer & Church, 2009). Organizations are incorporating the construct into their selection and development programs, and the term is fast becoming embedded in the lexicon of TM. Yet there is still much debate about what learning agility is as a construct (e.g., De Meuse, Guangrong, & Hallenbeck, 2010; DeRue, Ashford, & Myers, 2012), which makes it a good example of the expanding category of AIO.

Learning agility was defined by its early pioneers, Lombardo and Eichinger (2000), as “the willingness and ability to learn new competencies in order to perform under first-time, tough, or different conditions” (p. 323). They conceptualized four dimensions: people agility, results agility, mental agility, and change agility. Efforts have since been made to improve upon the definition. For example, DeRue et al. (2012) proposed a refined definition of learning agility as “the ability to come up to speed quickly in one's understanding of a situation, and move across ideas flexibly in service of learning both within and across experiences” (p. 262). Others, however, have noted that the construct is merely a reframing of learning ability, or the ability to learn (Arun, Coyle, & Hauenstein, 2012). Some (e.g., Wang & Beier, 2012) see the refined definition as problematic because it does not add anything unique beyond general mental ability, or g. Carroll (1993), for example, defines g as “the ability to reason, plan, solve problems, think abstractly, comprehend complex ideas, learn quickly and learn from experience” (p. 68).

The thrust of this conceptual debate in the field, however, is whether learning agility is unique because it implies a motivational element of willingness to learn (e.g., goal orientation, openness to experience) in addition to the ability component, or whether such motivational factors are antecedents to more core elements like processing speed and processing flexibility, none of which are new concepts. Either way, what is interesting about this particular debate is that the argument seems less about the relevance of the construct itself (whether new or not) and more about where the definitional boundary lines are drawn.

Although the debate rages on, studies have repeatedly shown that the ability to learn from experience is what differentiates successful executives from unsuccessful ones (Charan, Drotter, & Noel, 2001; Goldsmith, 2007; McCall, 1998). In their original research, Lombardo and Eichinger (2000) found significant relationships between learning agility and supervisory ratings of performance and potential (R² = .30, p < .001). Connolly and Viswesvaran (2002) examined learning agility among law enforcement officers from 26 organizations in the United States and found that it predicted supervisory ratings of job performance and promotability beyond what was explained by IQ and personality. More recently, Dries, Vantilborgh, and Pepermans (2012) found that learning agility was a better predictor of being identified as a high potential than job performance was.

Thus, despite the ongoing construct debate, there is ample evidence that learning agility matters to organizations. For example, in a survey of 80 companies known for their talent management practices, Church et al. (2015) found that over 50% of them included learning agility (or ability) in their assessments of high potentials and senior leaders, a higher rate than for other seemingly important constructs such as resilience, executive presence, and even cognitive skills. What remains unclear is how much incremental value it adds over and above other variables, and whether it can be used alone as the sole predictor, as some consulting firms would suggest.

Relatedly, and perhaps more troubling, the definition continues to morph as the construct debate continues. Although the concept is an increasing part of competency models and high potential frameworks in organizations, many organizations adhere to the older four-factor model (mental agility, people agility, change agility, and results agility), whereas others use a consultant's proprietary definition and still others create their own in-house definitions. Most troubling is that the newer concepts emerging in the marketplace (and owned by consulting firms) appear to be complex and comprehensive, more closely resembling leadership competencies than the targeted concept of learning from experience as originally conceived. In our view, the net effect is to raise concern among internal line and HR communities when they see increasingly complex frameworks describing what they thought was something simple and quite predictive. We suspect that this push toward greater complexity is driven by I-O forces, at least in part, to support marketplace differentiation. In effect, then, we are enabling our own AIO challenges.

In sum, regardless of where the boundary lines are drawn around the construct, there is little debate that learning agility has strong pull from organizations and is being applied in areas such as senior leadership assessment and high potential identification to meet important organizational needs (Church & Rotolo, 2013). The challenge in keeping it on Path 3 (embedded in TM best practices) rather than Path 4 (fading into oblivion) is to ensure that the construct becomes well defined, well researched, and consistently measured and reported back to organizations.

Millennials entering the workforce

A recent focal article and a rich set of commentaries in this journal addressed the question of whether Millennials constitute a unique generation in the workforce, with their own unique needs and a consequent set of unique challenges for organizations (Costanza & Finkelstein, 2015). Although there are differences in select work-related variables, the empirical research on this question is largely cross-sectional in design, and generational membership is of course confounded with age, historical and cultural experiences, and other cohort effects (e.g., Costanza, Badger, Fraser, Severt, & Gade, 2012). Most of the discussion of this question in our field has centered on whether the differences in motives, interests, work values, attitudes, and so on that purportedly distinguish Millennials (however that generation is bracketed) are due to age, career stage, maturation, organizational level, or other factors, a question that is, in any practical sense, unanswerable. It is, sadly enough, far too late to start a study of the roots of current generational effects in the workplace; the opportunity to initiate those studies came soon after World War II with the birth of the Boomers.

It has nonetheless become virtually an article of faith that organizations must do a better job of understanding and adapting to the unique needs of Millennials in recruitment, assimilation, performance management, reward, promotion, and a variety of other talent management practices. Interestingly, this has been the mantra in the business world for some time (Zemke, Raines, & Filipczak, 2000), and it has its origins in marketing research as well. Organizations have embraced the notion that Millennials are special in ways that the older generations now managing them struggle to understand, presumably because these older generations were so very different when they were young. One such prescriptive how-to guide, by Espinoza, Ukleja, and Rusch (2010), highlights the competencies claimed to be especially required for managers to manage Millennials effectively. However, a review of these competencies reveals that they simply reflect what we already know about the importance of openness and adaptability, sociability, conscientiousness (Judge, Bono, Ilies, & Gerhardt, 2002), leader–member connectedness (Gerstner & Day, 1997), and other well-established predictors of effective leadership.

The error in I-O psychology and TM practice, then, is in framing the task of managing Millennial employees as a unique, never-before-seen challenge rather than simply the challenge all leaders face: to accurately assess the unique attributes of the people they lead and adapt their leadership behaviors accordingly. Indeed, TM practices that are applied uniformly to a diverse set of employees because those employees were born in the same decade may actually harm efforts to manage that talent effectively. As Costanza and Finkelstein (2015) have argued, managing individual talent based on generational stereotypes is a questionable practice, much as it would be if TM practices were tailored to apply uniformly to members of other cultural or social groups defined by ethnicity, religion, or national origin. Treating Millennials as a never-before-seen phenomenon also calls into question the value, for contemporary TM practice, of the behavioral research and theory accumulated over decades on prior generations of young employees. Further, the evidence is far from clear that Boomers or Generation Xers had materially different work-related attitudes and expectations when they were the age Millennials are today, despite what popular books might suggest (e.g., Meister & Willyerd, 2010; Zemke et al., 2000). Nor is there evidence that not having shared particular historical or cultural experiences at a particular point in life-span development is a material barrier to people understanding, adapting to, and forging productive work relationships with one another.
Finally, we should all be relieved that the understanding of behavior at work accumulated over the decades, including research conducted years ago on prior generations, does not have to be tossed into the trash: People, even our own generation of Millennials, remain people. Thus, this emerging practice is more likely headed down Path 4, toward the empirical dustbin.

Quadrant IV: Frontier: Areas Breaking Through Where I-O Is Not Even “Keeping up With the Joneses”

Big data

Big data is an emerging phenomenon within HR (Bersin, 2013), yet it still seems to be on the frontier of the I-O psychology landscape. The term commonly refers to the use of extremely large data sets to reveal patterns, trends, and associations, and big data is characterized by the volume, variety, velocity, and veracity of the data (McAfee & Brynjolfsson, 2012). It is not uncommon to find workforce analytics, labor analytics, or strategic workforce planning teams within HR today, as well as analytic functionality bundled into software applications such as SAP SuccessFactors and those from IBM. Although some I-O psychologists are employed in this space, these teams are more commonly composed of statisticians, mathematicians, and economists.

Recently, SIOP's Executive Board commissioned an ad hoc committee to establish a set of recommendations for the field to “raise awareness and provide direction with regard to issues and complications uniquely associated with the advent of big data,” which resulted in a focal article in this journal (Guzzo, Fink, King, Tonidandel, & Landis, 2015). As most of the 11 commentaries on the article noted, this was a step in the right direction but did not go far enough (Rotolo & Church, 2015). Certainly, SIOP is in catch-up mode, with the 2016 Leading Edge Consortium focused on the topic and several new books recently published or on the horizon (e.g., Tonidandel, King, & Cortina, 2015). In our view, software companies, not I-O psychologists, are starting to control the dialogue in this space, making promises about the power of their analytical software suites that they simply do not deliver. The problem is that I-O psychologists who understand how data analysis works are frequently not involved in these conversations.

Yet the concept of big data has been around since the late 1990s (Lohr, 2013). Around the same time, Rucci, Kirn, and Quinn (1998) highlighted techniques that I-O psychologists had used for decades, demonstrating that employee attitudes can empirically predict key business results (i.e., serve as “key drivers” of those results). Many of the statistical techniques used in big data applications (e.g., association rule learning, classification tree analysis, social network analysis) are grounded in multivariate statistics, a topic that is core to I-O psychology graduate programs. Thus, in our view, I-O psychology should be playing a much bigger role in the use and application of big data in HR. In fact, one could argue that we are going in the opposite direction, or at least getting in our own way. For example, Cucina, Walmsley, Gast, Martin, and Curtin (2017) called for an end to the use of survey key driver analysis because of a handful of methodological issues.

This raises the question: Why haven't I-O psychologists been at the forefront of the big data movement? Our field is in a prime position to make the most significant impact in this area, for the following reasons:

(a) Our grounding in psychology means that we understand the theory behind the data; this helps us decide which questions to ask and guides the interpretation of, and insights drawn from, the analyses. We often see, for example, people outside our field drawing inferences from spurious and non-job-relevant relationships (e.g., concluding that because high potentials tend to drive red cars, car color should be part of an algorithm to predict potential).

(b) Our expertise in research methods allows us to understand the statistical issues with big data. For example, with samples this large, even trivially small relationships reach statistical significance, so significance tests alone say little and effect sizes deserve far more attention. Analysts trained in other fields may not share this appreciation.

(c) We have a significant history in this space. For example, those of us who focus on survey research (e.g., employee engagement research) have for decades conducted multivariate analyses to identify “key drivers” of desired outcomes. Similarly, those of us in employee selection research have used “big data” to identify relevant predictors of job success criteria for validation purposes.
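To illustrate point (b), the following minimal Python sketch holds a trivially small correlation (r = .01, or 0.01% of variance explained) fixed and computes its p-value at increasing sample sizes. The helper `correlation_p_value` is hypothetical, and it uses the Fisher z normal approximation rather than an exact t test, an assumption that is reasonable at these sample sizes.

```python
import math


def correlation_p_value(r, n):
    """Hypothetical helper: two-sided p-value for H0: rho = 0.

    Uses the Fisher z transformation with its large-sample normal
    approximation, z = atanh(r) * sqrt(n - 3), rather than an exact t test.
    """
    z = math.atanh(r) * math.sqrt(n - 3)
    # Two-sided tail area of the standard normal distribution.
    return math.erfc(abs(z) / math.sqrt(2))


r = 0.01  # a trivially small correlation: r**2 = 0.0001 of variance explained
for n in (200, 10_000, 1_000_000):
    p = correlation_p_value(r, n)
    print(f"n = {n:>9,}: p = {p:.3g}, variance explained = {r ** 2:.2%}")
```

The effect size is identical in every row, yet p falls from roughly .89 at n = 200 to far below .001 at n = 1,000,000. In data sets of that size, the magnitude of an effect, not its significance, is what should drive interpretation.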

If we do not bring good science to big data, we leave room for inappropriate conclusions (like the red cars). Our graduate training teaches us the ethics of using large samples, so we know how this field should take shape. We owe it to our business clients to be part of, if not to shape, the growth of this emerging field.

Neuroscience

The areas of neuroscience and mindfulness have also received considerable attention in the media and popular press over the past few years. In fact, society at large seems to be embracing these concepts, as evidenced by Fortune 100 organizations staffing roles such as “chief mindfulness officer” and reputable universities such as MIT offering courses on “neuroscience and leadership.” In contrast, a scan of SIOP's annual conference programs over the last five years yielded only 11 entries on mindfulness and four related to neuroscience. Why do I-O psychologists pay so little attention to these topics, particularly when we are best positioned to influence the discussion, if not play a leading role in guiding it?

One answer could be that our attention is on evidence-based research rather than on the grandiose claims that neuroscience researchers are making. For example, in a non-peer-reviewed article, Rock (2008) states:

In most people, the question “can I offer you some feedback” generates a similar response to hearing fast footsteps behind you at night. Performance reviews often generate status threats, explaining why they are often ineffective at stimulating behavioral change. If leaders want to change others’ behavior, more attention must be paid to reducing status threats when giving feedback. One way to do this is by allowing people to give themselves feedback on their own performance. (p. 4)

Although the neurobiology of threat and reward is well understood in general, it is a leap to presume that performance reviews routinely generate status threats and therefore lower performance. This is a good example of taking a germ of truth from studies conducted in other domains and extrapolating it to specific work events, in the absence of any scientific evidence that neurobiological fight-or-flight responses routinely accompany performance reviews. In addition, the suggestion that feedback typically “leads to decreased performance” is inconsistent with meta-analytic findings showing that, on average, the effect of feedback interventions on performance is positive (d = .41; Kluger & DeNisi, 1996). As I-O practitioners, we need to be ready to help our line leaders and HR professionals distinguish what the research says from what some of the more intriguing, yet unsubstantiated, claims might say.