1. Introduction

Real-world data analysis often involves many defensible choices at each step of the analysis, such as how to combine and transform measurements, how to deal with missing data and outliers, and even how to choose a statistical model. In general, no single choice is the only defensible one for every decision researchers must make; rather, there are many defensible options at each step of the data analysis (Gelman and Loken, 2014). As a result, raw data do not uniquely determine a single dataset for analysis. Instead, researchers face a set of processed datasets, each determined by a unique combination of choices: a multiverse of datasets. Since analyses performed on each dataset may yield different results, the data multiverse directly implies a multiverse of statistical results. In recent years, concerns have been raised about how researchers can exploit this flexibility in data analysis to increase the likelihood of observing a statistically significant result. Researchers may engage in such questionable research practices because of editorial practices that prioritize the publication of statistically significant results, or because they select findings that confirm their own beliefs (Begg and Berlin, 1988; Dwan et al., 2008; Fanelli, 2012). When researchers select and report only the subset of all possible analyses that produce significant results (Sterling, 1959; Greenwald, 1975; Simmons et al., 2011; Brodeur et al., 2016), they dramatically increase the actual false-positive rate despite nominally adhering to a low Type I error rate (e.g., 5%).
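The size of this inflation can be seen in an idealized case: if a researcher runs $k$ independent tests of true null hypotheses and reports an effect whenever any of them reaches $p<\alpha$, the probability of reporting a false positive is $1-(1-\alpha)^k$. The sketch below checks this formula by simulation. It assumes independent tests and uniformly distributed null p values, which is a simplification: the analyses in a multiverse are typically strongly dependent, and the inflation is then usually smaller, although still above $\alpha$.

```python
import random


def analytic_rate(k, alpha=0.05):
    """P(at least one of k independent Uniform(0, 1) p values falls below alpha)."""
    return 1 - (1 - alpha) ** k


def simulated_rate(k, alpha=0.05, n_sim=20_000, seed=1):
    """Share of simulated studies in which at least one of k independent
    null p values is below alpha, i.e. a false positive can be reported."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_sim):
        # Under a true null and a continuous test statistic, p ~ Uniform(0, 1).
        if min(rng.random() for _ in range(k)) < alpha:
            hits += 1
    return hits / n_sim


for k in (1, 5, 20):
    print(k, round(analytic_rate(k), 3), round(simulated_rate(k), 3))
```

With $\alpha=0.05$, the analytic column gives 0.05, 0.226 and 0.642 for $k=1$, 5 and 20; the simulated column should lie close to these values.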

Two solutions have been proposed to address the issue of p-hacking. The first requires researchers to specify their statistical analysis plan before examining the raw data. Such preregistered studies control the Type I error rate by reducing flexibility during data analysis. Preregistration is easily implemented for replication studies, where researchers specify that they will perform the same analysis as an earlier study. For more novel studies, preregistration can be challenging because researchers may not have enough knowledge to anticipate all the decisions that need to be made when analyzing the data. The second solution recognizes that it is often not feasible to specify a single analysis before collecting the data, and instead advocates transparently reporting all possible analyses that can be conducted. Steegen et al. (2016) introduced multiverse analysis, which uses all reasonable options for data processing to construct a multiverse of datasets and then performs the same analysis of interest separately on each of these datasets. The main tool for interpreting the output of a multiverse analysis is a histogram of the p values obtained for a given effect across all datasets. Researchers then typically discuss the results in terms of the proportion of significant p values. This procedure not only provides a detailed picture of the robustness or fragility of the results across processing choices, but also allows researchers to identify the choices that most strongly drive the variability of their results. Multiverse analysis represents a valuable step towards transparent science.
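As an illustration of how a few processing choices multiply into a multiverse, and of how the proportion of significant p values is obtained, the sketch below crosses three hypothetical decisions (an outlier cutoff, a transformation of the outcome and an exclusion rule, all invented for this example) with `itertools.product`, runs the same test on each resulting dataset, and returns the share of specifications with $p<0.05$. The data are simulated, and the test is a large-sample Fisher $z$ test for a correlation, chosen only to keep the example free of dependencies; it is not the analysis used in this paper.

```python
import itertools
import math
import random


def pearson_r(x, y):
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def fisher_p(r, n):
    """Two-sided large-sample p value for H0: rho = 0 via Fisher's z transformation."""
    z = math.atanh(r) * math.sqrt(n - 3)
    return math.erfc(abs(z) / math.sqrt(2))


def ranks(v):
    order = sorted(range(len(v)), key=v.__getitem__)
    out = [0.0] * len(v)
    for rank, i in enumerate(order, start=1):
        out[i] = float(rank)
    return out


# Hypothetical processing choices, invented for this illustration.
OUTLIER_CUTOFF = [None, 2.5, 3.0]  # drop cases whose standardized y exceeds the cutoff
TRANSFORM = ["raw", "rank"]        # analyze y as is or replace it by its ranks
MIN_X = [None, -2.0]               # optionally exclude cases with x below -2


def process(x, y, cutoff, transform, min_x):
    keep = [i for i in range(len(y)) if min_x is None or x[i] >= min_x]
    if cutoff is not None:
        ys = [y[i] for i in keep]
        m = sum(ys) / len(ys)
        sd = math.sqrt(sum((v - m) ** 2 for v in ys) / (len(ys) - 1))
        keep = [i for i in keep if abs(y[i] - m) / sd <= cutoff]
    xs, ys = [x[i] for i in keep], [y[i] for i in keep]
    return xs, (ranks(ys) if transform == "rank" else ys)


def run_multiverse(x, y, alpha=0.05):
    """Apply the same test to every processed dataset; return p values and share < alpha."""
    pvals = {}
    for spec in itertools.product(OUTLIER_CUTOFF, TRANSFORM, MIN_X):
        xs, ys = process(x, y, *spec)
        pvals[spec] = fisher_p(pearson_r(xs, ys), len(xs))
    share = sum(p < alpha for p in pvals.values()) / len(pvals)
    return pvals, share


rng = random.Random(2024)
x = [rng.gauss(0, 1) for _ in range(120)]
y = [0.15 * a + rng.gauss(0, 1) for a in x]  # weak simulated effect
pvals, share = run_multiverse(x, y)
print(len(pvals), "specifications; share with p < .05:", round(share, 2))
```

Three choices with 3, 2 and 2 options yield $3\times 2\times 2=12$ specifications; the dictionary of p values is what a histogram of p values would summarize.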
The method has gained popularity since its introduction and has been applied in various experimental contexts, including cognitive development, risk perception (Mirman et al., 2021), assessment of parental behavior (Modecki et al., 2020), and memory tasks (Wessel et al., 2020). While some applications are exploratory and aim to define brief guidelines for conducting a multiverse analysis (Dragicevic et al., 2019; Liu et al., 2020), other studies use the method as a robustness assessment for mediation analysis (Rijnhart et al., 2021) or as an exhaustive modeling approach (Frey et al., 2021). This approach makes it possible to show the stability and robustness of findings not only across different exclusion criteria or variable modifications, but also across decisions made during all phases of data analysis. This feature is particularly appealing in light of the replicability crisis in quantitative psychology (Open Science Collaboration, 2015) and of efforts to enhance the transparency and credibility of scientific results (Nosek and Lakens, 2014). Multiverse analysis can also be extended beyond the pre-processing stage to include the methods used for the analysis (the “multiverse of methods”) (Harder, 2020). The explicit flexibility in multiverse analysis is not to be condemned, as it reflects an effort to transparently describe the uncertainty about the best analysis strategy.
However, while the exploration of multiple analytical choices in data analysis should be encouraged, it is challenging to draw reliable inferences from such a large number of statistical analyses. Most researchers have interpreted the results of multiverse analyses descriptively, but in doing so it is extremely tempting to make claims about the analyses that yield statistically significant results and not about the non-significant ones. A selective focus on a subset of statistically significant results once again introduces the problem of selective inference (Benjamini, 2020) and can inflate the rate at which claims about effects are false positives.

Currently, the only method that allows researchers to make formal inferences in multiverse analysis is specification curve analysis (Simonsohn et al., 2020). Like multiverse analysis, it requires researchers to consider the entire set of reasonable combinations of data-analytic decisions, called specifications; these specifications are then used jointly to derive a test of the null hypothesis of interest. If the null hypothesis is rejected, researchers can claim, with a certain maximum error rate (e.g., 5%), that there exists at least one specification in which the null hypothesis is false. In the most general case of non-experimental data, the inference relies on bootstrapping and is valid only for linear regression models (LMs), with no general extension to the other response distributions usually covered by generalized linear models (GLMs). More importantly, this methodology lacks a formal description of the statistical properties of the test, allows testing only a single hypothesis, and does not address the problem of controlling multiplicity when testing different hypotheses. A more formal study of the method’s performance is provided in Sects. 3 and 4. Because researchers are often interested in models more complex than LMs, want to explore several different processing steps, and may wish to investigate several null hypotheses together, more advanced analysis methods for multiverse analysis are needed. Such methods would allow, for example, psychometricians to identify a set of predictors associated with a particular outcome, or neuroscientists to identify brain regions activated by a stimulus. In summary, the multiverse analysis framework allows researchers to manage degrees of freedom in data analysis, but the literature still lacks a formal inferential approach for deriving reliable inferences about (sets of) specific analyses included in a multiverse analysis. In this paper, we define the Post-selection Inference approach to Multiverse Analysis (PIMA), a flexible and general inference approach for multiverse analysis that accounts for all possible models, i.e., the multiverse of reasonable analyses. In the framework of GLMs, we consider the null hypothesis that a given predictor of interest is not associated with the outcome, i.e., that the corresponding coefficient is zero.
Furthermore, we assume that researchers consider all reasonable models obtained by different data-processing choices. We provide a resampling-based procedure, built on the sign-flip score test of Hemerik et al. (2020) and De Santis et al. (2022), that tests the null hypothesis by combining information from all reasonable models, and we show that this framework allows inference about the coefficient of interest at three levels of complexity. First, for the predictor of interest, we compute a global p value over all models, so that researchers can state whether the coefficient is non-null in at least one of the models in the multiverse. Second, we compute individual adjusted p values for each model and thus obtain the set of models in which the coefficient is non-null. Because PIMA accounts for multiplicity, researchers are free to choose their preferred model post hoc, after fitting all models and seeing the results. In other words, the procedure allows selective inference, but unlike p-hacking, researchers can select statistically significant analyses from the multiverse while controlling the Type I error rate. Third, we provide a lower confidence bound for the true discovery proportion (TDP), i.e., the proportion of models with a non-null coefficient. With this strategy, researchers cannot individually identify statistically significant models in the multiverse, but in some cases reporting the TDP can be more powerful than reporting individual p values. Finally, we argue that the method can be easily extended to multiple hypotheses on different coefficients. The resulting procedure is general, flexible, and powerful, and can be applied in many different contexts.
It is valid as long as all the considered models are reasonable and specified in advance, before the analysis is carried out. The paper is structured as follows. In Sect. 2, we define the framework and construct the required resampling-based test. In Sect. 3, we use the test to make inferences in the multiverse framework. We then study the properties of the PIMA method and apply it to real data in Sects. 4 and 5, respectively. We conclude in Sect. 6 with a short remark on the main results, some hints on open issues in multiverse analysis, and practical recommendations for the PIMA methodology. All analyses and simulations were implemented in the statistical software R (R Core Team, 2021). All R code and data associated with the real data application are available at https://osf.io/3ebw9/, and further analyses can be carried out with the dedicated package Jointest (Finos et al., 2023), available at https://github.com/livioivil/jointest.
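The second and third levels of inference described above rest on closed testing. The following brute-force sketch shows the general logic on made-up p values for six specifications. For simplicity it uses a Bonferroni local test for every intersection hypothesis, which is our assumption for illustration (with this local test, closed testing reduces to Holm's procedure); PIMA instead builds its tests on the sign-flip score test introduced in Sect. 2. A specification is individually significant when every intersection hypothesis containing it is rejected, and the lower confidence bound on the number of true discoveries in a set $S$ is $|S|$ minus the size of the largest subset of $S$ that closed testing fails to reject.

```python
from itertools import combinations


def local_bonferroni(pvals, subset, alpha):
    """Local test of the intersection hypothesis H_I: reject if min p <= alpha / |I|."""
    return min(pvals[i] for i in subset) <= alpha / len(subset)


def closed_rejections(pvals, alpha=0.05):
    """Index sets I whose intersection hypothesis is rejected by closed testing,
    i.e. every superset J of I is rejected by its local test."""
    k = len(pvals)
    sets = [frozenset(c) for r in range(1, k + 1) for c in combinations(range(k), r)]
    local = {s: local_bonferroni(pvals, s, alpha) for s in sets}
    return {s for s in sets if all(local[j] for j in sets if s <= j)}


def true_discoveries_bound(pvals, S, alpha=0.05):
    """Lower confidence bound on the number of false null hypotheses in S:
    |S| minus the size of the largest subset of S not rejected by closed testing."""
    rejected = closed_rejections(pvals, alpha)
    S = sorted(S)
    largest_unrejected = max(
        (r for r in range(1, len(S) + 1)
         for c in combinations(S, r) if frozenset(c) not in rejected),
        default=0,
    )
    return len(S) - largest_unrejected


p = [0.001, 0.004, 0.012, 0.03, 0.2, 0.6]  # made-up p values, one per specification
rejected = closed_rejections(p)
print([i for i in range(len(p)) if frozenset({i}) in rejected])  # → [0, 1, 2]
print(true_discoveries_bound(p, range(len(p))) / len(p))         # → 0.5
```

Here specifications 0, 1 and 2 are individually significant, and the lower confidence bound for the TDP over all six specifications is 0.5. The enumeration grows as $2^K$ subsets, so this sketch is only practical for small multiverses.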


2. The Sign-Flip Score Test

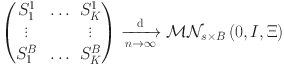

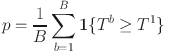

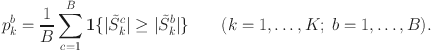

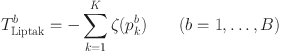

In the context of multiverse analysis, there is no single pre-specified model; instead, we are interested in testing the effect of a given predictor on a response variable across the multiverse of possible models. To test the global null hypothesis that the predictor has no effect in any of the models considered, one needs to define a suitable test statistic and its distribution under the null hypothesis. Finding a solution within the parametric framework is a formidable challenge because of the dependence among the univariate test statistics, which in most cases is very strong and usually nonlinear. Resampling-based approaches usually provide a solution to this multivariate problem. We rely on the sign-flip score test of Hemerik et al. (2020) and De Santis et al. (2022) to define an asymptotically exact test for the global null hypothesis of interest. In this section, we specify the structure of the models and introduce the sign-flip score test for a single model specification. In the next section, we give a natural extension to the multivariate framework. Finally, we show how to employ the procedure within the closed testing framework (Marcus et al., 1976) to make additional inferences on the models.
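The following sketch illustrates why resampling sidesteps the dependence problem: the same random sign flips are applied to the score contributions of every model, so the dependence among the $K$ statistics is carried into the resampling distribution without being modeled. It assumes that the per-observation score contributions of each model are already available (here they are simulated with a shared component to make the models dependent), standardizes each flipped score by the square root of its sum of squared contributions, and uses the maximum absolute standardized score as the combining statistic. The function name, the standardization and the max combination are our illustrative choices; the combination actually used by PIMA is developed in the next section.

```python
import math
import random


def joint_sign_flip_maxT(contrib, n_flips=2000, seed=0):
    """contrib[k][i]: contribution of observation i to the score of model k
    (computed under the null). The same random flips are applied to all models,
    which preserves their dependence. Returns the global p value and the
    single-step max-T adjusted p value of each model."""
    rng = random.Random(seed)
    n = len(contrib[0])
    norms = [math.sqrt(sum(c * c for c in ck)) for ck in contrib]

    def stats(flips):
        # |standardized flipped score| of every model for one vector of signs
        return [abs(sum(f * c for f, c in zip(flips, ck))) / s
                for ck, s in zip(contrib, norms)]

    observed = stats([1] * n)          # first transformation: identity (no flips)
    max_null = [max(observed)]
    for _ in range(n_flips - 1):
        flips = [rng.choice((-1, 1)) for _ in range(n)]
        max_null.append(max(stats(flips)))
    p_adj = [sum(m >= t for m in max_null) / n_flips for t in observed]
    return min(p_adj), p_adj           # global p value = smallest adjusted p value


# Simulated contributions for K = 4 dependent models (shared component + noise);
# the model-specific shifts play the role of effects of different sizes.
rng = random.Random(42)
n, shifts = 60, [0.0, 0.1, 0.3, 0.5]
shared = [rng.gauss(0, 1) for _ in range(n)]
contrib = [[d + s + 0.5 * rng.gauss(0, 1) for s in shared] for d in shifts]
p_global, p_adj = joint_sign_flip_maxT(contrib)
print(round(p_global, 3), [round(p, 3) for p in p_adj])
```

The global p value equals the smallest max-T adjusted p value, so in this sketch the global null is rejected at level $\alpha$ exactly when at least one model has an adjusted p value of at most $\alpha$.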

2.1. Model Specification

We consider the framework of GLMs. Let $Y=(y_1,\ldots,y_n)^\top\in\mathbb{R}^n$ be $n$ independent observations of a variable of interest, assumed to belong to the exponential dispersion family with density of the form

$$f(y_i;\theta_i,\phi_i)=\exp\left\{\frac{y_i\theta_i-b(\theta_i)}{a(\phi_i)}+c(y_i,\phi_i)\right\},\qquad i=1,\ldots,n,$$

where $\theta_i$ and $\phi_i$ are the canonical and the dispersion parameter, respectively, and $a(\cdot)$, $b(\cdot)$ and $c(\cdot)$ are known functions. Following the usual GLM literature (Agresti, 2015), the mean and variance functions are

$$E(y_i)=\mu_i=b'(\theta_i),\qquad \operatorname{Var}(y_i)=a(\phi_i)\,b''(\theta_i).$$

We suppose that the mean of $Y$ depends on an observed predictor of interest $X=(x_1,\ldots,x_n)^\top\in\mathbb{R}^n$ and $m$ other observed predictors $Z=(z_1,\ldots,z_n)^\top\in\mathbb{R}^{n\times m}$ through a nonlinear relation

$$g(\mu_i)=\eta_i=x_i\beta+z_i^\top\gamma,\qquad i=1,\ldots,n,$$

where $g(\cdot)$ denotes the link function, $\beta\in\mathbb{R}$ is the parameter of interest, and $\gamma\in\mathbb{R}^m$ is a vector of nuisance parameters.

Finally, we define the following $n\times n$ diagonal matrices, which will be used in the next sections:

$$D=\operatorname{diag}\left\{\frac{\partial\mu_i}{\partial\eta_i}\right\},\qquad V=\operatorname{diag}\{\operatorname{Var}(y_i)\},\qquad W=DV^{-1}D.$$
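To make the notation concrete, the sketch below evaluates these quantities for a Poisson response with log link, where $\theta_i=\log\mu_i$, $b(\theta)=e^\theta$ and $a(\phi)=1$, so that $E(y_i)=\operatorname{Var}(y_i)=\mu_i$. We read the diagonal matrices as the usual GLM working matrices, $D_{ii}=\partial\mu_i/\partial\eta_i$, $V_{ii}=\operatorname{Var}(y_i)$ and $W=DV^{-1}D$; this reading is our assumption. Because the log link is canonical for the Poisson family, $D$ and $V$ coincide here and $W_{ii}=\mu_i$. The parameter values are arbitrary.

```python
import math


def poisson_log_quantities(x, z, beta, gamma):
    """Per-observation GLM quantities for a Poisson response with log link,
    g(mu_i) = log(mu_i) = eta_i = x_i * beta + z_i' gamma.
    Diagonal matrices are represented by their i-th diagonal entry."""
    rows = []
    for xi, zi in zip(x, z):
        eta = xi * beta + sum(zj * gj for zj, gj in zip(zi, gamma))
        mu = math.exp(eta)  # inverse link; canonical link, so theta_i = eta_i and b'(theta) = exp(theta)
        d = mu              # D_ii = d mu_i / d eta_i = exp(eta_i)
        v = mu              # V_ii = Var(y_i) = a(phi) b''(theta_i) = mu_i, since a(phi) = 1
        w = d * d / v       # W_ii = D_ii^2 / V_ii, which equals mu_i here
        rows.append({"eta": eta, "mu": mu, "D": d, "V": v, "W": w})
    return rows


# One predictor of interest and an intercept as the only nuisance column of Z.
rows = poisson_log_quantities(x=[0.0, 1.0, 2.0], z=[[1.0], [1.0], [1.0]], beta=0.5, gamma=[-0.2])
for r in rows:
    print({key: round(val, 3) for key, val in r.items()})
```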

2.2. Hypothesis Testing for an Individual Model via Sign-Flip Score Test

Given a model specified as in the previous section, we are interested in testing, at significance level $\alpha\in[0,1)$, the null hypothesis $\mathcal{H}:\beta=0$ that the predictor $X$ does not influence the response $Y$. Here $\gamma$ is a vector of nuisance parameters, which is estimated by $\hat{\gamma}$. We consider the hypothesis $\beta=0$ for simplicity of exposition; however, the sign-flip approach can be extended to the more general case $\beta=\beta_0$.
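Before the formal construction, the idea can be previewed in the simplest case we could think of: a Gaussian linear model whose only nuisance term is an intercept ($Z$ a column of ones, $m=1$). Under $\mathcal{H}$ the fitted mean is $\bar y$, and, by our own simplification of the score for this special case, the flipped score is proportional to $n^{-1/2}\sum_i (x_i-\bar x)\,f_i^b\,(y_i-\bar y)$, with a positive constant that does not affect the test. The sketch below (function name and simulated data are hypothetical) computes the two-sided p value as the share of the $B$ statistics, the first of them unflipped, that are at least as extreme as the observed one. Because the observed statistic is always counted, the p value cannot fall below $1/B$, which is why $B\ge 1/\alpha$ is needed for nonzero power.

```python
import random


def sign_flip_score_test(x, y, n_flips=1000, seed=0):
    """Two-sided sign-flip score test of H: beta = 0 in the Gaussian linear model
    y_i = gamma + beta * x_i + error, where the intercept gamma is the only
    nuisance parameter (an illustrative special case, not the general GLM test)."""
    rng = random.Random(seed)
    n = len(y)
    xbar, ybar = sum(x) / n, sum(y) / n
    # Score contributions under H (fitted mean = ybar), up to a positive constant.
    contrib = [(xi - xbar) * (yi - ybar) for xi, yi in zip(x, y)]
    observed = abs(sum(contrib)) / n ** 0.5
    flipped = [observed]  # b = 1: the identity transformation, no sign flips
    for _ in range(n_flips - 1):
        # b = 2..B: each contribution keeps or flips its sign with probability 1/2
        s = sum(c if rng.random() < 0.5 else -c for c in contrib) / n ** 0.5
        flipped.append(abs(s))
    # p value: share of the B statistics at least as extreme as the observed one
    return sum(t >= observed for t in flipped) / n_flips


rng = random.Random(7)
x = [rng.gauss(0, 1) for _ in range(100)]
y_null = [rng.gauss(0, 1) for _ in x]             # H true
y_alt = [1.0 * xi + rng.gauss(0, 1) for xi in x]  # H false
print(sign_flip_score_test(x, y_null), sign_flip_score_test(x, y_alt))
```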

Relying on the work of Hemerik et al. (2020), De Santis et al. (2022) propose the sign-flip score test, a robust and asymptotically exact test for $\mathcal{H}$ that uses $B$ random sign-flipping transformations. Although larger values of $B$ tend to give more power, taking $B\ge 1/\alpha$ is sufficient for nonzero power. Consider, then, the $n\times n$ diagonal matrices $F^{b}=\operatorname{diag}\{f_i^b\}$, with $b=1,\ldots,B$. The first is fixed as the identity, $F^{1}=I$, and the diagonal elements of the others are drawn independently and uniformly from $\{-1,1\}$. Each matrix $F^{b}$ defines a flipped effective score

where

is a particular hat matrix, symmetric and idempotent, and

\documentclass[12pt]{minimal}

\usepackage{amsmath}

\usepackage{wasysym}

\usepackage{amsfonts}

\usepackage{amssymb}

\usepackage{amsbsy}

\usepackage{mathrsfs}

\usepackage{upgreek}

\setlength{\oddsidemargin}{-69pt}

\begin{document}$${\hat{\mu }}$$\end{document}

![]() is a

\documentclass[12pt]{minimal}

\usepackage{amsmath}

\usepackage{wasysym}

\usepackage{amsfonts}

\usepackage{amssymb}

\usepackage{amsbsy}

\usepackage{mathrsfs}

\usepackage{upgreek}

\setlength{\oddsidemargin}{-69pt}

\begin{document}$$\sqrt{n}$$\end{document}

is a

\documentclass[12pt]{minimal}

\usepackage{amsmath}

\usepackage{wasysym}

\usepackage{amsfonts}

\usepackage{amssymb}

\usepackage{amsbsy}

\usepackage{mathrsfs}

\usepackage{upgreek}

\setlength{\oddsidemargin}{-69pt}

\begin{document}$$\sqrt{n}$$\end{document}

![]() -consistent estimate of the true value

\documentclass[12pt]{minimal}

\usepackage{amsmath}

\usepackage{wasysym}

\usepackage{amsfonts}

\usepackage{amssymb}

\usepackage{amsbsy}

\usepackage{mathrsfs}

\usepackage{upgreek}

\setlength{\oddsidemargin}{-69pt}

\begin{document}$$\mu ^*$$\end{document}

-consistent estimate of the true value

\documentclass[12pt]{minimal}

\usepackage{amsmath}

\usepackage{wasysym}

\usepackage{amsfonts}

\usepackage{amssymb}

\usepackage{amsbsy}

\usepackage{mathrsfs}

\usepackage{upgreek}

\setlength{\oddsidemargin}{-69pt}

\begin{document}$$\mu ^*$$\end{document}

![]() computed under

\documentclass[12pt]{minimal}

\usepackage{amsmath}

\usepackage{wasysym}

\usepackage{amsfonts}

\usepackage{amssymb}

\usepackage{amsbsy}

\usepackage{mathrsfs}

\usepackage{upgreek}

\setlength{\oddsidemargin}{-69pt}

\begin{document}$${\mathcal {H}}$$\end{document}

computed under

\documentclass[12pt]{minimal}

\usepackage{amsmath}

\usepackage{wasysym}

\usepackage{amsfonts}

\usepackage{amssymb}

\usepackage{amsbsy}

\usepackage{mathrsfs}

\usepackage{upgreek}

\setlength{\oddsidemargin}{-69pt}

\begin{document}$${\mathcal {H}}$$\end{document}

![]() . In practical applications, if the matrices D and V, and thus W, are unknown, they can be replace by

\documentclass[12pt]{minimal}

\usepackage{amsmath}

\usepackage{wasysym}

\usepackage{amsfonts}

\usepackage{amssymb}

\usepackage{amsbsy}

\usepackage{mathrsfs}

\usepackage{upgreek}

\setlength{\oddsidemargin}{-69pt}

\begin{document}$$\sqrt{n}$$\end{document}

. In practical applications, if the matrices D and V, and thus W, are unknown, they can be replace by

\documentclass[12pt]{minimal}

\usepackage{amsmath}

\usepackage{wasysym}

\usepackage{amsfonts}

\usepackage{amssymb}

\usepackage{amsbsy}

\usepackage{mathrsfs}

\usepackage{upgreek}

\setlength{\oddsidemargin}{-69pt}

\begin{document}$$\sqrt{n}$$\end{document}

![]() -consistent estimates.

-consistent estimates.
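Because each $F^{b}$ is diagonal, only its diagonal needs to be stored. The following minimal sketch (Python with NumPy; the function name `sign_flip_diagonals` is hypothetical) keeps the $B$ diagonals as the rows of a $B\times n$ array, with the first row fixed to ones so that it corresponds to $F^{1}=I$:

```python
import numpy as np

def sign_flip_diagonals(n, B, rng=None):
    """Return a (B, n) array whose b-th row is the diagonal of F^b.

    Row 0 is all ones, i.e. F^1 = I (the observed, unflipped score);
    every other entry is drawn independently and uniformly from {-1, 1}.
    """
    rng = np.random.default_rng() if rng is None else rng
    flips = rng.choice(np.array([-1, 1]), size=(B, n))
    flips[0, :] = 1
    return flips

flips = sign_flip_diagonals(n=5, B=4, rng=np.random.default_rng(0))
v = np.arange(1.0, 6.0)
# Multiplying by the diagonal matrix F^b equals an elementwise product.
print(np.allclose(np.diag(flips[2]) @ v, flips[2] * v))  # True
```

Storing diagonals avoids building $B$ dense $n\times n$ matrices, which matters when $B$ is in the thousands.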

This effective score may be written as a sum of individual contributions with flipped signs, as follows:

Here $\nu _i$ is the contribution of the i-th observation to the effective score. The definition and properties of the contributions $\nu _i$ are explored in Hemerik et al. (2020) and De Santis et al. (2022), where they are denoted as $\nu _{{\hat{\gamma }},i}^*$ and ${\tilde{\nu }}_{i,\beta }^*$, respectively.
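With the flip diagonals stacked as rows, all $B$ flipped scores come from a single matrix-vector product. This sketch assumes, for illustration only, that the effective score is the plain sum $\sum_i f_i^b\nu_i$; a common positive factor such as $n^{-1/2}$ would rescale every $S^{b}$ (and its standard deviation) equally and leave the test unchanged. The contributions below are simulated placeholders, not the output of a fitted model, and `flipped_scores` is a hypothetical name.

```python
import numpy as np

def flipped_scores(contrib, flips):
    """Return S^b = sum_i f_i^b * nu_i for every row b of `flips` (shape (B, n))."""
    return flips @ contrib

rng = np.random.default_rng(1)
n, B = 50, 200
contrib = rng.normal(size=n)                  # placeholder contributions nu_i
flips = rng.choice([-1.0, 1.0], size=(B, n))
flips[0] = 1.0                                # F^1 = I
scores = flipped_scores(contrib, flips)
# The first entry is the observed score: the unflipped sum of contributions.
print(np.isclose(scores[0], contrib.sum()))   # True
```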

An assumption is needed about the effective score computed when the true value $\gamma ^*$ of the nuisance parameter $\gamma $, and hence the true value $\mu ^*$ of $\mu $, is known. This quantity may be written analogously to (1) and (2), as

In this case, the contributions $\nu _i^*$ are independent if D and V are known, and asymptotically independent otherwise (Hemerik et al., 2020). The required assumption is a Lindeberg condition, which ensures that the contribution of each $\nu _i^*$ to the variance of $S^{b*}$ becomes arbitrarily small as n grows. It can be formulated as follows.

Assumption 1

As $n\rightarrow \infty $,

for some constant $c>0$. Moreover, for any $\varepsilon >0$,

where ${\textbf{1}}\{\cdot \}$ denotes the indicator function.
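Assumption 1 concerns expectations and limits, so it cannot be verified from a single sample. A rough empirical look at the idea behind it, that no single contribution dominates the variance, is the largest squared contribution as a share of the total. The sketch below is only such a heuristic diagnostic (the function name `max_variance_share` is hypothetical), not the formal Lindeberg condition:

```python
import numpy as np

def max_variance_share(contrib):
    """Largest share nu_i^2 / sum_j nu_j^2 among the observed contributions.

    Heuristic only: values near 0 suggest no single observation dominates,
    values near 1 suggest one observation drives the score.
    """
    sq = np.asarray(contrib, dtype=float) ** 2
    return sq.max() / sq.sum()

rng = np.random.default_rng(2)
balanced = rng.normal(size=10_000)
dominated = balanced.copy()
dominated[0] = 1_000.0                        # one extreme contribution
print(max_variance_share(balanced), max_variance_share(dominated))
```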

Given this assumption, the sign-flip score test of De Santis et al. (2022) relies on the standardized flipped scores, obtained by dividing each effective score (1) by its standard deviation:

where

The test is based on the absolute values of the standardized scores: it compares the observed value $|{\tilde{S}}^1|$ with a critical value obtained from permutations. The latter is $|{\tilde{S}}|^{\lceil (1-\alpha )B\rceil }$, where $|{\tilde{S}}|^{(1)}\le \ldots \le |{\tilde{S}}|^{(B)}$ are the sorted absolute values of all B standardized scores (including the observed one) and $\lceil \cdot \rceil $ denotes the ceiling function.
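The critical value is an order statistic of the absolute standardized scores, and the observed $|{\tilde{S}}^1|$ is itself one of the $B$ sorted values. A minimal sketch, assuming the standardized scores are already available as an array (the function name `sign_flip_critical_value` is hypothetical):

```python
import math
import numpy as np

def sign_flip_critical_value(std_scores, alpha=0.05):
    """Return the k-th smallest absolute standardized score, k = ceil((1 - alpha) B)."""
    abs_sorted = np.sort(np.abs(np.asarray(std_scores, dtype=float)))
    B = abs_sorted.size
    # Subtract a tiny tolerance so floating-point error cannot push k up by one.
    k = math.ceil((1 - alpha) * B - 1e-9)
    return abs_sorted[k - 1]                  # order statistics are 1-indexed

scores = -np.arange(1, 101)                   # toy values, B = 100
print(sign_flip_critical_value(scores))       # 95.0
```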

Theorem 1

(De Santis et al., 2022) Under Assumption 1, the test that rejects ${\mathcal {H}}$ when $|{\tilde{S}}^1|>|{\tilde{S}}|^{\lceil (1-\alpha )B\rceil }$ is an $\alpha $-level test, asymptotically as $n\rightarrow \infty $.
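To illustrate the rejection rule, the sketch below applies it to simulated contributions that are independent, mean-zero and symmetric (heavy-tailed $t_3$). This is a simplifying assumption: with independent contributions every flipped score has the same variance, so standardization can be skipped, and the randomization argument makes the rule exact, so the empirical rejection rate should be close to $\alpha$. It does not reproduce the GLM setting of the theorem, where the contributions may be only asymptotically independent. The function name `sign_flip_test` is hypothetical.

```python
import math
import numpy as np

def sign_flip_test(contrib, B=200, alpha=0.05, rng=None):
    """Reject H when |S^1| exceeds the ceil((1 - alpha) B)-th smallest |S^b|.

    Assumes independent, mean-zero contributions, so all S^b share the same
    variance and the unstandardized scores can be compared directly.
    """
    rng = np.random.default_rng() if rng is None else rng
    flips = rng.choice([-1.0, 1.0], size=(B, contrib.size))
    flips[0] = 1.0                                  # F^1 = I: observed score
    abs_scores = np.abs(flips @ contrib)
    k = math.ceil((1 - alpha) * B - 1e-9)
    return bool(abs_scores[0] > np.sort(abs_scores)[k - 1])

rng = np.random.default_rng(2024)
reps = 2000
rejections = [sign_flip_test(rng.standard_t(df=3, size=50), rng=rng) for _ in range(reps)]
rate = float(np.mean(rejections))
print(f"empirical Type I error rate: {rate:.3f} (nominal 0.05)")
```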

The test of Theorem 1 is exact in the particular case of LMs, and second-moment exact in GLMs. Second-moment exactness means that, under ${\mathcal {H}}$, the test statistics ${\tilde{S}}^b$ do not necessarily have the same distribution but share the same mean and variance, regardless of the sign flip; for practical purposes, this provides exact control of the Type I error rate even for finite sample sizes. The only requirement is Assumption 1, which states that the variance of the score (3) is not dominated by any particular contribution. Furthermore, the test is robust to some model misspecifications, as long as the mean $\mu $ and the link g are correctly specified. In particular, under minimal assumptions, the test is still asymptotically exact for any generic misspecification of the variance V (De Santis et al., 2022).

2.3. Intuition Behind the Sign-Flip Score Test

Although the formal definition of the sign-flip score approach may seem difficult to grasp, its meaning is quite intuitive. For the sake of clarity, we consider a simple example: a GLM with Gaussian errors and identity link, which reduces to a multiple linear model. In this case, we have $W=D=I$ and $V=\sigma ^2 I$, where $\sigma ^2$ is the variance shared by every observation. From (2), the observed and flipped effective scores can be written as

where

In this perspective, the score can be interpreted as the sum of the residuals $y_i -{\hat{y}}_i$, weighted by the residuals $x_i-{\hat{x}}_i$. Equivalently, the score is the sum of n contributions, each being the residual of $y_i$ predicted by $z_i$ multiplied by the residual of $x_i$ predicted by $z_i$. In this sense, the score extends the covariance by moving from the empirical mean (i.e., a model with the intercept only) to a full linear model.
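The residual-product reading of the score, and its link to the covariance, can be checked numerically. The sketch below assumes the observed score is the plain sum of the contributions $(x_i-{\hat{x}}_i)(y_i-{\hat{y}}_i)$ (a common scaling would not matter), and the helper names `residualize` and `lm_contributions` are hypothetical. With an intercept-only nuisance design, ${\hat{x}}_i$ and ${\hat{y}}_i$ are the sample means, so the score equals $n$ times the sample covariance computed with divisor $n$.

```python
import numpy as np

def residualize(v, Z):
    """Residuals of v after least-squares projection on the columns of Z."""
    coef, *_ = np.linalg.lstsq(Z, v, rcond=None)
    return v - Z @ coef

def lm_contributions(y, x, Z):
    """Per-observation contributions (x_i - xhat_i) * (y_i - yhat_i)."""
    return residualize(x, Z) * residualize(y, Z)

rng = np.random.default_rng(0)
n = 100
x, y = rng.normal(size=n), rng.normal(size=n)
ones = np.ones((n, 1))                        # intercept-only nuisance model
score = lm_contributions(y, x, ones).sum()
# Intercept-only fits are the sample means, so the score is n * biased covariance.
print(np.isclose(score, n * np.cov(x, y, bias=True)[0, 1]))  # True
```

Replacing `ones` with a design that also contains $z$ gives the partial version used in the rest of this section.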

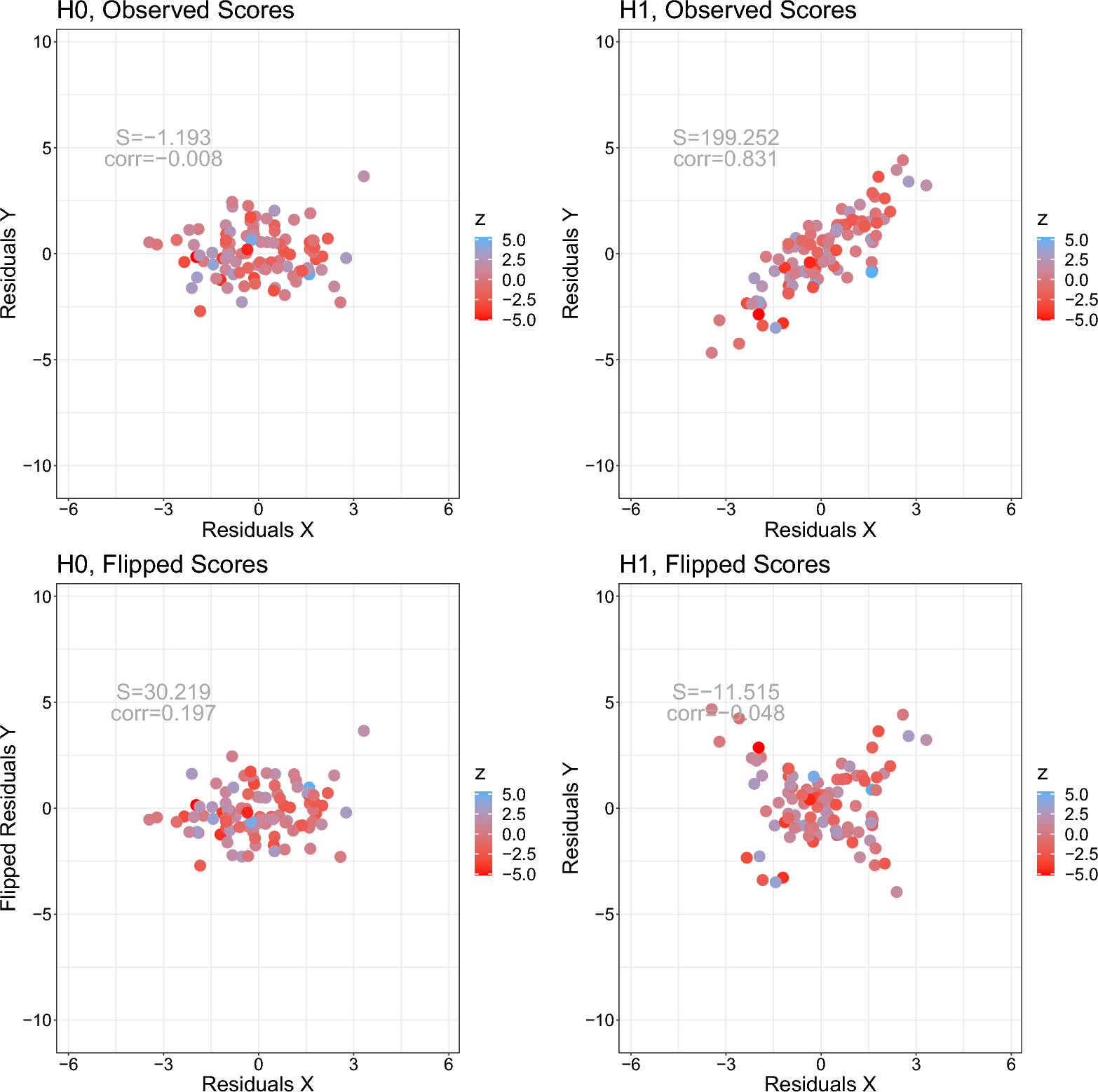

To see things in practice, consider the following linear regression model

and suppose we are interested in testing ${\mathcal {H}}:\ \beta =0$. The predictors X and Z are generated from a multivariate normal distribution with unit variances and covariance 0.80. We create two scenarios that share the same X and Z but differ in the response variable Y: the first is generated under the null hypothesis ${\mathcal {H}}$ ($\beta =0$, $\gamma =1$), while the second is generated under the alternative ($\beta =1$, $\gamma =1$). For each simulation, we generate $n=100$ observations. We name the resulting datasets $H_0$ and $H_1$, respectively.
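A minimal sketch of this simulation and of the test follows (Python with NumPy). Several details are our assumptions, not taken from the text: the model is $y_i=\beta x_i+\gamma z_i+\varepsilon_i$ with standard normal errors, the two datasets share the same errors, the nuisance design contains an intercept and Z, and $B=1000$, $\alpha=0.05$. The standardization uses $\operatorname{Var}(S^b)=\sigma^2\,\tilde{x}^\top F^b(I-H)F^b\tilde{x}$, which follows under ${\mathcal {H}}$ when $V=\sigma^2 I$, with $\tilde{x}=(I-H)x$ and $H$ the projection onto the nuisance design; $\sigma^2$ is common to all $b$ and cancels. The function name `sign_flip_lm_test` and the seed are illustrative.

```python
import math
import numpy as np

def sign_flip_lm_test(y, x, Z, B=1000, alpha=0.05, rng=None):
    """Sign-flip score test of H: beta = 0 in y = x*beta + Z*gamma + error.

    Sketch assuming homoscedastic errors (V = sigma^2 I); sigma^2 cancels.
    Returns (reject, |S~^1|, critical value).
    """
    rng = np.random.default_rng() if rng is None else rng
    n = y.size
    M = np.eye(n) - Z @ np.linalg.solve(Z.T @ Z, Z.T)    # I - H
    x_res, y_res = M @ x, M @ y                          # x_i - xhat_i, y_i - yhat_i
    flips = rng.choice([-1.0, 1.0], size=(B, n))
    flips[0] = 1.0                                       # F^1 = I
    scores = flips @ (x_res * y_res)                     # S^b = sum_i f_i^b nu_i
    fx = flips * x_res                                   # row b holds F^b x_res
    sd = np.sqrt(np.sum((fx @ M) * fx, axis=1))          # sd(S^b) / sigma under H
    abs_std = np.abs(scores / sd)
    k = math.ceil((1 - alpha) * B - 1e-9)
    crit = np.sort(abs_std)[k - 1]
    return bool(abs_std[0] > crit), float(abs_std[0]), float(crit)

rng = np.random.default_rng(1)
n = 100
xz = rng.multivariate_normal([0.0, 0.0], [[1.0, 0.8], [0.8, 1.0]], size=n)
x, z = xz[:, 0], xz[:, 1]
Z = np.column_stack([np.ones(n), z])     # nuisance design: intercept and Z
eps = rng.normal(size=n)                 # assumption: errors shared by both datasets
y_h0 = 0.0 * x + 1.0 * z + eps           # dataset H0: beta = 0, gamma = 1
y_h1 = 1.0 * x + 1.0 * z + eps           # dataset H1: beta = 1, gamma = 1

for name, y in [("H0", y_h0), ("H1", y_h1)]:
    reject, stat, crit = sign_flip_lm_test(y, x, Z, rng=rng)
    print(f"{name}: |S~1| = {stat:.2f}, critical value = {crit:.2f}, reject = {reject}")
```

Conditioning the variance on each $F^b$ is what makes the flipped scores comparable even though the residualized contributions are not independent.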

Examples of scatter plots of Y against X, with the value of Z shown by color, are given in Fig. 1. In both scenarios, X and Y are positively correlated. The colors also reveal that Z is positively associated with both X and Y: bluer dots correspond to higher values of Z, and they appear where X and Y are also high (upper right corner). Testing the null hypothesis $\mathcal{H}:\ \beta=0$, however, corresponds to testing the partial correlation between X and Y net of the effect of Z. This partial correlation can be assessed visually with a scatter plot of the residuals that form the n addends of the observed score $S^1$ given in (5). These are shown in the two upper plots of Fig. 2 for the datasets $H_0$ (upper left) and $H_1$ (upper right). In these scatter plots, the coordinates of each point are the values $x_i-\hat{x}_i$ and $y_i-\hat{y}_i$, and the observed score $S^1$ is obtained by summing the products of these coordinates, $(x_i-\hat{x}_i)(y_i-\hat{y}_i)$, over $i=1,\dots,n$. After the effect of Z is removed from X and Y, the scatter plot for $H_0$ shows no relationship between the two variables, whereas the relationship is still present for $H_1$.
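The residual construction described above can be sketched in Python with NumPy. The sketch assumes hypothetical simulated data in which Z affects both X and Y and X has no direct effect on Y (an $H_0$-like case); it obtains $x_i-\hat{x}_i$ and $y_i-\hat{y}_i$ by least squares on an intercept and Z and sums their products. Any normalizing constant that (5) may contain is omitted. The final check illustrates the Frisch-Waugh-Lovell identity, which is why testing $\beta=0$ amounts to testing the partial correlation: the estimated coefficient of X in the full regression is zero exactly when the summed products are zero. The helper `residualize` and all numeric settings are illustrative assumptions.

```python
import numpy as np


def residualize(v, z):
    """Residuals of v from a least-squares fit on an intercept and z."""
    design = np.column_stack([np.ones_like(z), z])
    coef, *_ = np.linalg.lstsq(design, v, rcond=None)
    return v - design @ coef


# Hypothetical H_0-like data: Z drives both X and Y, no direct X -> Y effect.
rng = np.random.default_rng(3)
n = 500
z = rng.normal(size=n)
x = z + rng.normal(size=n)
y = z + rng.normal(size=n)

rx = residualize(x, z)   # coordinates x_i - x_hat_i
ry = residualize(y, z)   # coordinates y_i - y_hat_i
addends = rx * ry        # the n addends (x_i - x_hat_i)(y_i - y_hat_i)
s1 = addends.sum()       # observed score as a plain sum (scaling in (5) omitted)

# Partial correlation of X and Y given Z = correlation of the residual vectors.
r_partial = np.corrcoef(rx, ry)[0, 1]

# Frisch-Waugh-Lovell: the X coefficient from regressing Y on (1, X, Z)
# equals s1 / sum(rx**2), so beta_hat is zero exactly when s1 is zero.
full = np.column_stack([np.ones(n), x, z])
beta_hat = np.linalg.lstsq(full, y, rcond=None)[0][1]
print(f"S1 = {s1:.2f}, partial r = {r_partial:.2f}, beta_hat = {beta_hat:.3f}")
```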

Figure 1 Simulated datasets under the scenarios $H_0$ (left) and $H_1$ (right).
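The bottom row of Fig. 2 shows flipped residuals. Assuming that "flipped" means multiplying each of the n addends of $S^1$ by an independent random sign, as in sign-flip score tests, a minimal sketch of the resulting null distribution and a two-sided p-value is given below. The number of flips, the seeds and the simulated $H_1$-like data are hypothetical, and the plain, unstandardized sum used here may differ from the exact statistic used in the paper.

```python
import numpy as np


def sign_flip_pvalue(addends, n_flips=2000, seed=0):
    """Two-sided sign-flip p-value for the sum of the score addends.

    Row 0 of the sign matrix is the identity, so the observed score is
    included in its own null distribution.
    """
    rng = np.random.default_rng(seed)
    signs = rng.choice([-1.0, 1.0], size=(n_flips, addends.size))
    signs[0] = 1.0                  # identity flip: reproduces the observed score
    stats = signs @ addends         # one flipped score per row
    return np.mean(np.abs(stats) >= np.abs(stats[0])), stats


def residualize(v, z):
    """Residuals of v from a least-squares fit on an intercept and z."""
    design = np.column_stack([np.ones_like(z), z])
    coef, *_ = np.linalg.lstsq(design, v, rcond=None)
    return v - design @ coef


# Hypothetical H_1-like data: a direct X -> Y effect on top of the shared dependence on Z.
rng = np.random.default_rng(4)
n = 500
z = rng.normal(size=n)
x = z + rng.normal(size=n)
y = 0.5 * x + z + rng.normal(size=n)

addends = residualize(x, z) * residualize(y, z)
p_value, stats = sign_flip_pvalue(addends)
print(f"observed score {stats[0]:.1f}, sign-flip p-value {p_value:.4f}")
```

Because the identity flip is part of the null distribution, the smallest attainable p-value is `1 / n_flips`.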

Figure 2 Observed (top) and flipped (bottom) distributions of the residuals of Y versus X in the datasets

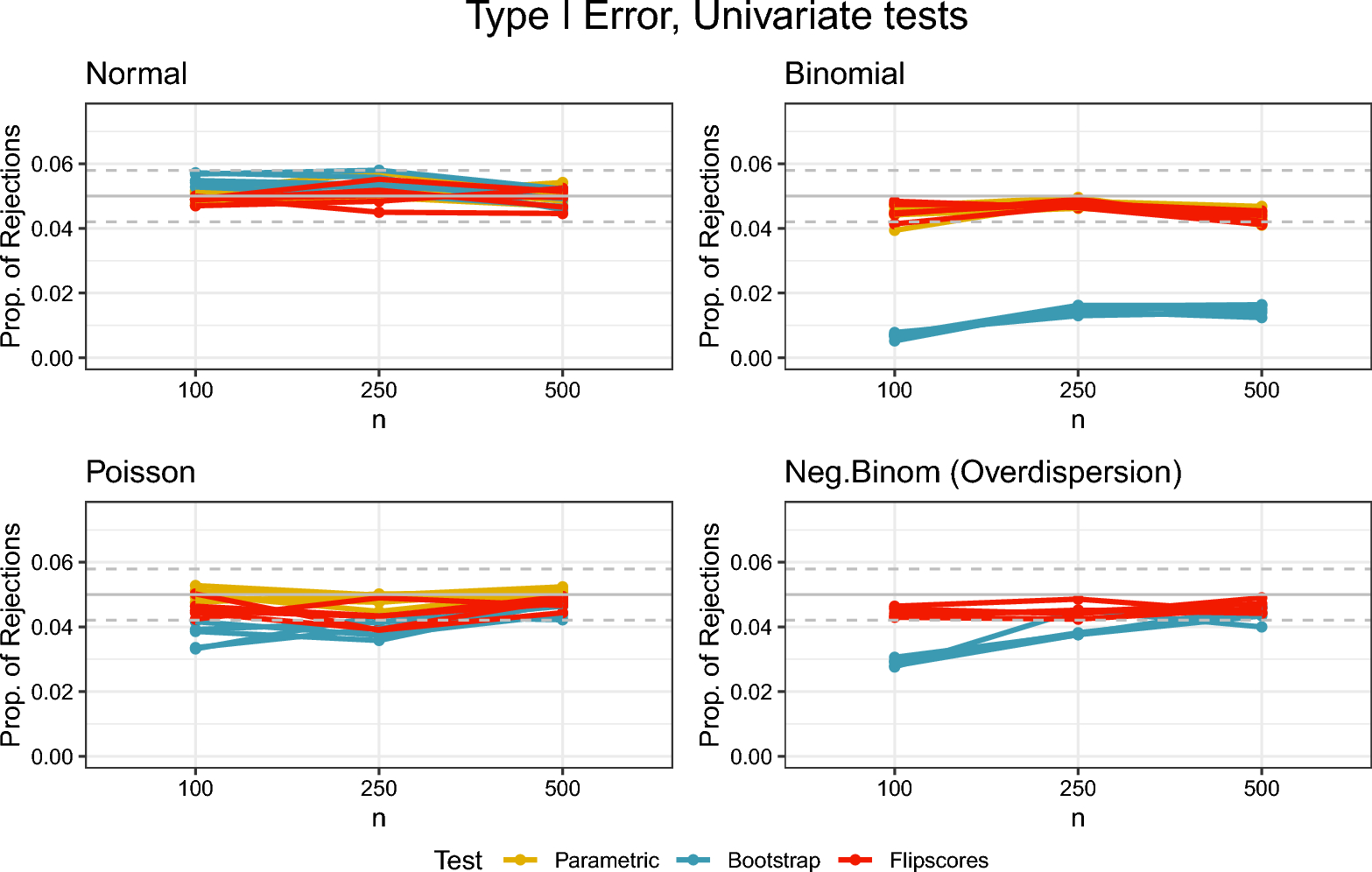

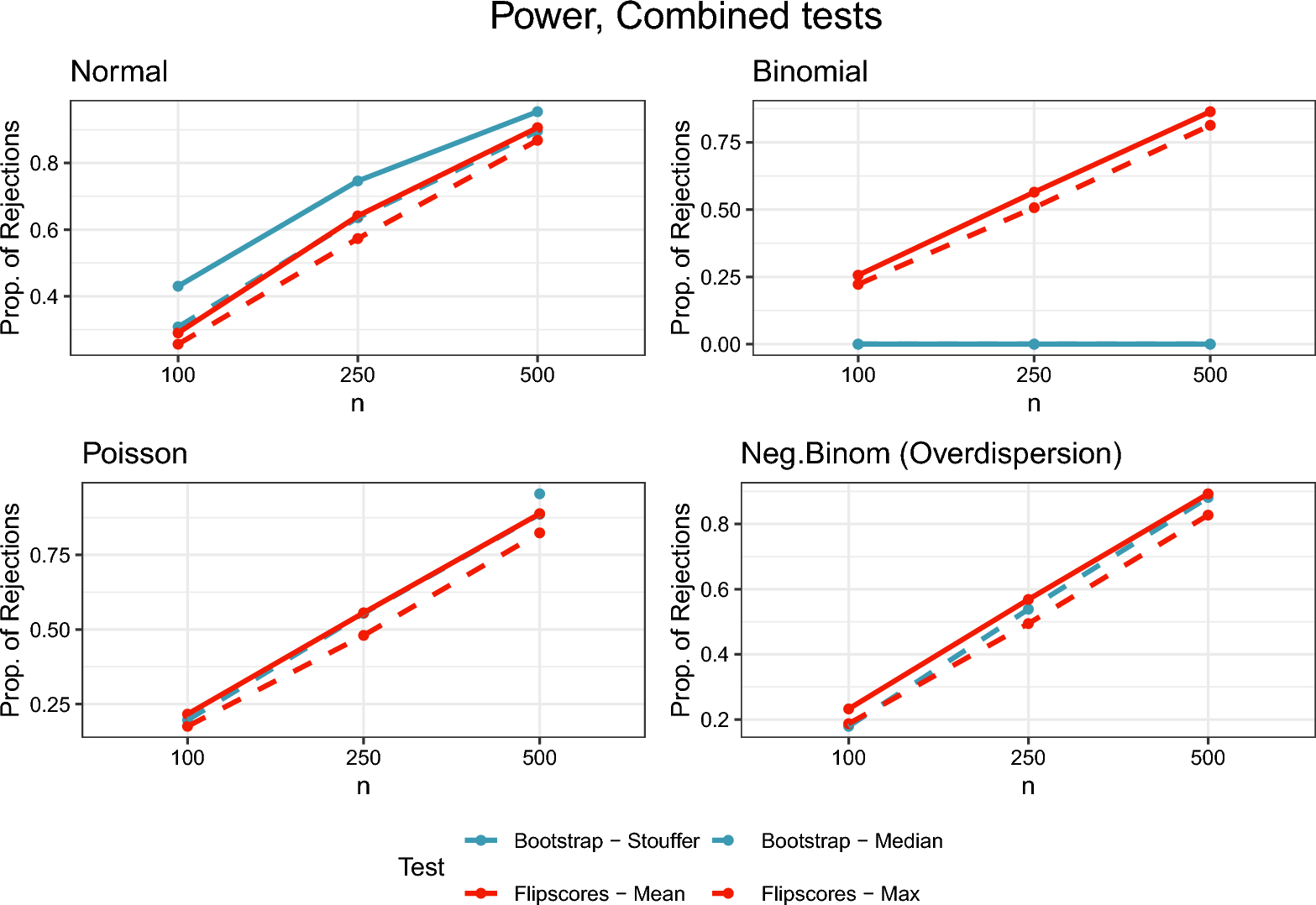

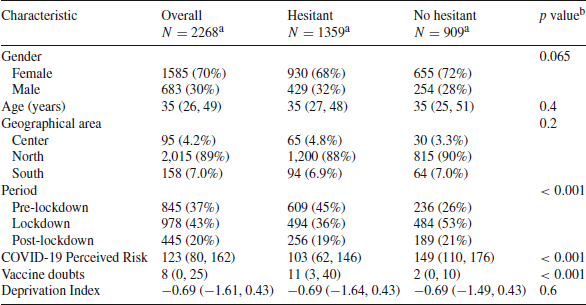

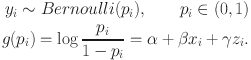

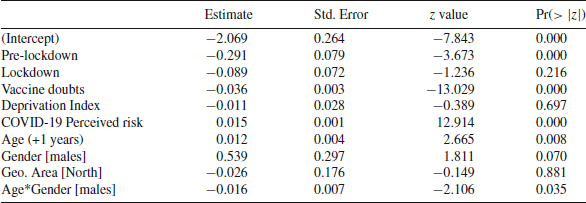

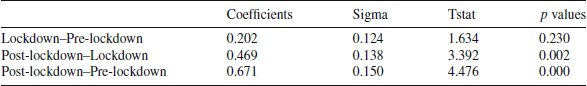

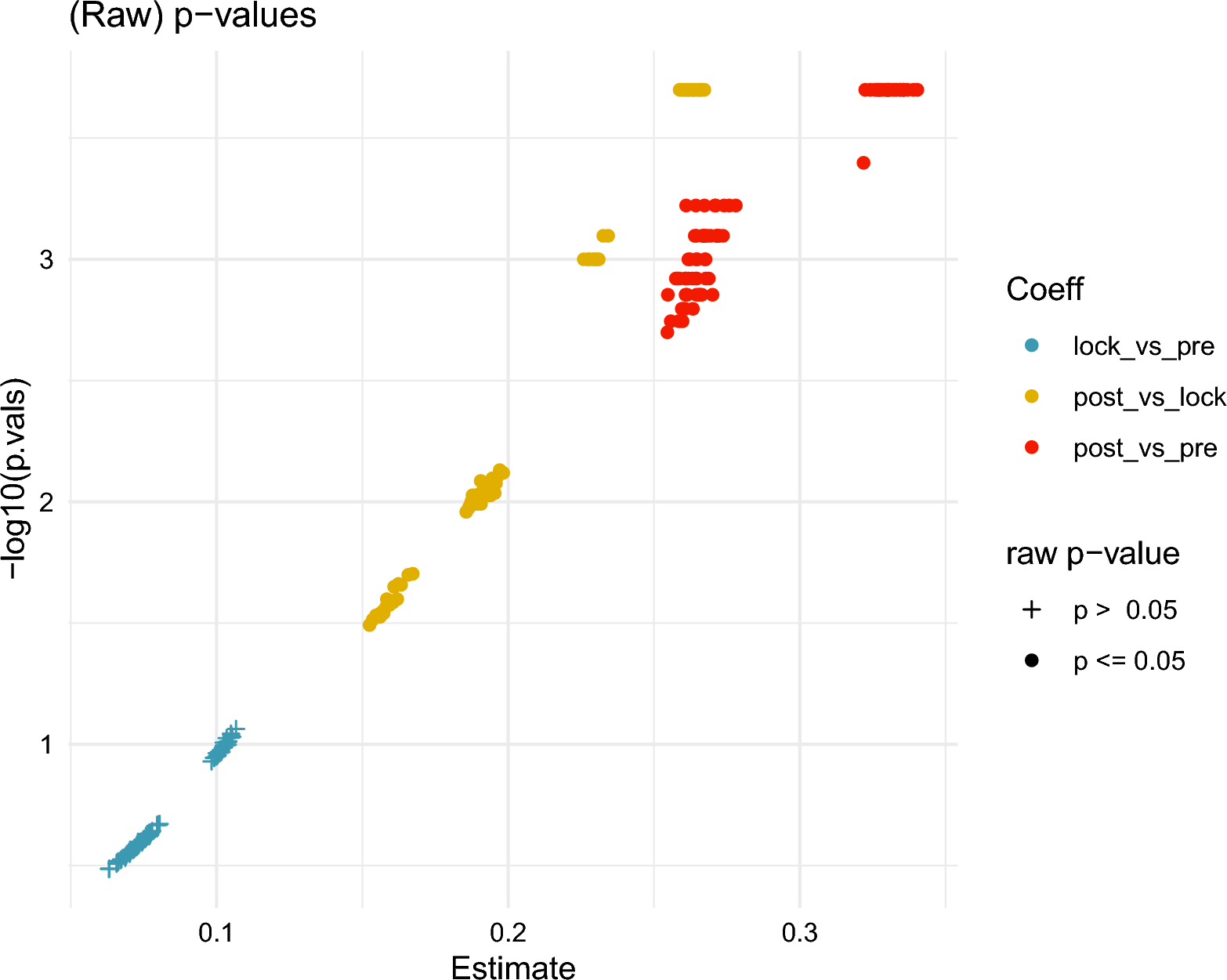

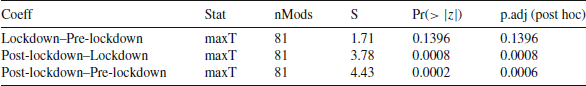

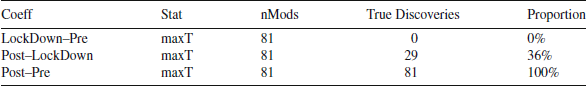

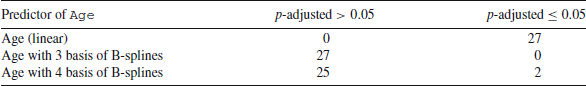
