Impact Statement

This position paper discusses common challenges in reproducing computational climate science, drawing on an online reproduction competition that used interactive publications. The authors situate the competition within the landscape of other reproducibility initiatives in scientific communities that are naturally close to software. The proposed competition format is innovative in elevating the role of executable research objects and community-driven platforms to support a collaborative computational peer review of scholarly publications in climate and environmental data science. By proposing a supportive framework of tools and infrastructure for evaluating reproducibility in the climate informatics community, we call on all stakeholders involved in geoscientific publishing to strengthen such frameworks by implementing funding and incentive mechanisms that also support the long-term maintenance of the evaluated artifacts in reproducible computational research.

1. Introduction

Reproducibility and replicability form the foundation of scientific research. These core principles not only ensure the reliability of findings but also enable scientific ideas and results to be tested, refined, and built upon. Their pertinence extends seamlessly from large-scale studies of high-profile papers to smaller settings. For the latter, reproducibility becomes pivotal, especially for small laboratories with a limited number of technical staff. It allows new team members to construct upon previous work without excessive expenditure of their already limited resources (Leipzig et al., Reference Leipzig, Nüst, Hoyt, Ram and Greenberg2021). This scenario also underlines the importance of meticulous data management and extensive documentation in scientific research. Computational experiments play an integral role in the scientific method. They do not only support computer science research but also natural science, social science, and humanity research. One of the key challenges in computational research is to establish and maintain trust in the experiments and artifacts that are presented in published results. A fundamental aspect of building trust is the ability to reproduce those results. A computational experiment that has been developed at time

![]() $ t $

on hardware/operating system

$ t $

on hardware/operating system

![]() $ s $

on data

$ s $

on data

![]() $ d $

is reproducible if it can be executed at time

$ d $

is reproducible if it can be executed at time

![]() $ t^{\prime } $

on system

$ t^{\prime } $

on system

![]() $ s^{\prime } $

on data

$ s^{\prime } $

on data

![]() $ d^{\prime } $

that is similar to (or potentially the same as)

$ d^{\prime } $

that is similar to (or potentially the same as)

![]() $ d $

(Freire et al., Reference Freire, Bonnet and Shasha2012). In the context of computational research, reproducibility and replicability terms hold different meanings. Following the National Academies of Sciences, Engineering, and Medicine’s (2019) report, we define reproducibility as “obtaining consistent results using the same material, data, methods and conditions of analysis”. In contrast, we refer to replicability as “obtaining consistent results under new conditions with new materials, data, or methods and independently confirming original findings”. These definitions are aligned to The Turing Way’s (2023) matrix of computational reproducibility (Figure 1) which also includes robustness (same data, different code) and generalizable (different data and different code).

$ d $

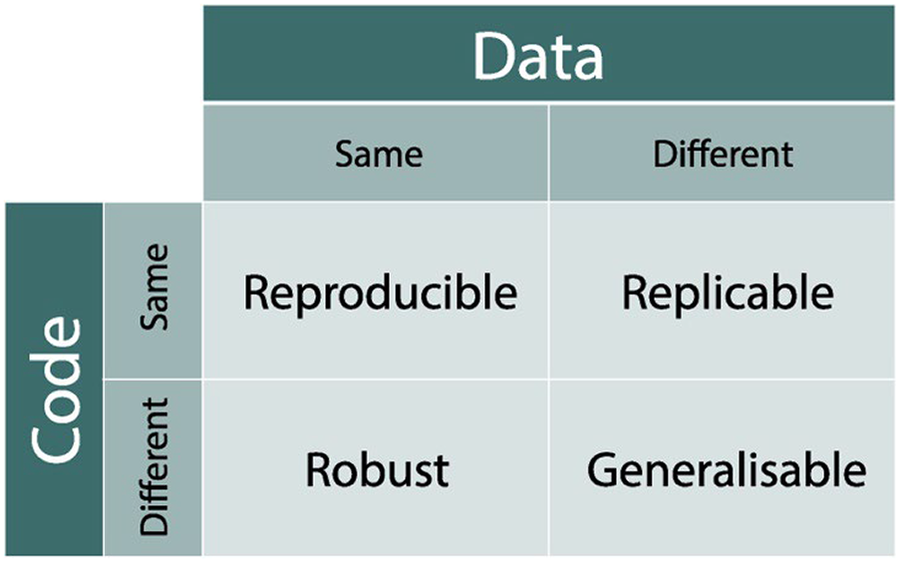

(Freire et al., Reference Freire, Bonnet and Shasha2012). In the context of computational research, reproducibility and replicability terms hold different meanings. Following the National Academies of Sciences, Engineering, and Medicine’s (2019) report, we define reproducibility as “obtaining consistent results using the same material, data, methods and conditions of analysis”. In contrast, we refer to replicability as “obtaining consistent results under new conditions with new materials, data, or methods and independently confirming original findings”. These definitions are aligned to The Turing Way’s (2023) matrix of computational reproducibility (Figure 1) which also includes robustness (same data, different code) and generalizable (different data and different code).

Figure 1. Matrix of reproducibility (The Turing Way, 2023); made available under the Creative Commons Attribution license (CC-BY 4.0).
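The short Python sketch below maps a re-run study onto the four quadrants of Figure 1. It is only an illustration. The `Outcome` and `classify` names are hypothetical. The mapping follows The Turing Way’s usage, in which a replicable study applies the same analysis to different data. It also assumes the re-run has already produced results consistent with the original study.

```python
from enum import Enum


class Outcome(Enum):
    """The four quadrants of The Turing Way reproducibility matrix."""

    REPRODUCIBLE = "reproducible"    # same data, same analysis
    REPLICABLE = "replicable"        # different data, same analysis
    ROBUST = "robust"                # same data, different analysis
    GENERALIZABLE = "generalizable"  # different data, different analysis


def classify(same_data: bool, same_analysis: bool) -> Outcome:
    """Return the quadrant that a consistent re-run would support.

    Assumes the re-run already agrees with the original results; a failed
    re-run supports none of these claims.
    """
    if same_data and same_analysis:
        return Outcome.REPRODUCIBLE
    if same_analysis:
        return Outcome.REPLICABLE
    if same_data:
        return Outcome.ROBUST
    return Outcome.GENERALIZABLE


# A CIRC23-style re-execution reuses the authors' data and code.
print(classify(same_data=True, same_analysis=True).value)  # reproducible
```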

Willis and Stodden (2020) argue that the evolution of computational reproducibility as we understand it today has been shaped by four movements. First, early work in computer science, mathematics, and statistics focused on the review and distribution of scientific software libraries (Hopkins, 2009). Second, the “replication standard” movement in political science and economics in the 1980s and 1990s advocated for authors to share code and data for the evaluation and replication of research (Dewald et al., 1986; King, 1995). Third, the “reproducible research” movement, initiated by geoscientists (Claerbout and Karrenbach, 1992), was later adopted in statistics and signal processing. This movement envisioned a system in which computational artifacts could be used to reproduce publications, including tables and figures, by “pressing a single button”. Finally, the “repeatability” movement in computer science originated in the database systems community (Manolescu et al., 2008).

Fields such as political science (King, 1995; Peer et al., 2021), health sciences (Munafò et al., 2017), economics (Vilhuber, 2020), computer science (Manolescu et al., 2008; Frachtenberg, 2022), physics (Clementi and Barba, 2021), and statistics (Xiong and Cribben, 2023) have been proactive in discussing and improving reproducibility. In the geosciences, some progress has been made in areas with a direct impact on human lives, economies, and public policy. Konkol et al. (2019) investigated the state of reproducibility in the geosciences and provided a set of guidelines for authors aiming to publish reproducible papers. Stagge et al. (2019) found that, among 360 of the 1,989 articles published by six hydrology and water resources journals in 2017, only 1.6% of results were fully reproducible. An extensive survey of 347 participants from multiple fields within the earth sciences indicated that poorly documented workflows, a lack of code documentation, and the limited availability of code and data are the major reasons for the lack of reproducibility (Reinecke et al., 2022). Bush et al. (2020) identified challenges and provided recommendations for improving reproducibility and replicability in paleoclimate research and in research using computer-simulated global climate model (GCM) experiments.

With the growing number of digital artifacts supporting scholarly publications in climate informatics and environmental data science, there is a pressing need to invest in robust frameworks and initiatives geared toward more trustworthy research in these fields. Building upon reproducibility initiatives led by the computer science, machine learning, and Geographic Information Science (GIScience) communities, we convened the inaugural Climate Informatics Reproducibility Challenge immediately following the 12th Annual Conference on Climate Informatics in 2023. The competition aimed to (1) identify common hurdles in reproducing computational climate science; and (2) create interactive reproducible publications for selected papers of the Environmental Data Science journal. We provided a set of community-driven tools and cloud-based platforms that make it easier to participate in the review of computational research artifacts (e.g., datasets, analysis code, workflows, and the environment) and in the generation of reproducibility reports.

This paper seeks to (1) revisit the importance of reproducible computational research in the geosciences, (2) describe initiatives and lessons learned from a recent reproduction competition organized for the Climate Informatics community, and (3) propose a supportive framework of tools and infrastructure for improving reproducibility and replicability in computational climate science. The paper is structured as follows: Section 2 reviews existing reproducibility initiatives; Section 3 describes the reproduction competition and shares the lessons learned; Section 4 describes a framework for sustainably measuring and monitoring reproducibility and replicability in climate informatics; and Section 5 presents our conclusions.

2. Reproducibility initiatives

Reproducibility initiatives encompass formal activities undertaken by journal editors, conference organizers, or related stakeholders to improve the transparency and reproducibility of computational research published in their venues through the adoption of new policies, workflows, and infrastructure (Willis and Stodden, 2020). Although these initiatives have similar goals, they differ widely in their policy mandates, what is reviewed, who conducts the review, and how reviewers are incentivized. Below, we describe some examples of initiatives led by the computer science, machine learning, and GIScience communities for evaluating reproducibility and promoting trustworthy science in their computational research.

In 2015, the Supercomputing (SC) conference introduced an optional submission for authors of accepted papers to describe their experimental framework and results in more detail. The structure, which is still in use today, includes the submission of an artifact description (AD) appendix and a more extensive artifact evaluation (AE) appendix. The AD appendix allows reviewers to determine whether artifacts are available, and the AE appendix provides sufficient detail to support computational reproducibility. In 2015, only a single paper responded to the initiative. It became the source for the SC16 Student Cluster Competition Reproducibility Challenge and the first SC paper to display an ACM Artifact Review and Badging badge. By 2017, 39 papers included an AD appendix, and in 2019 the AD appendix became mandatory (Malik et al., 2022). The same badging system was also proposed for the remote sensing community (Frery et al., 2020) in an attempt to address the specifics of that domain, such as enforcing the use of Open Data and Free/Libre Open Source Software (FLOSS).

The 2018 International Conference on Learning Representations (ICLR) Reproducibility Challenge was designed as a dedicated platform to investigate the reproducibility of papers submitted in the machine learning (ML) domain. Most participants were enrolled in graduate ML courses, and the challenge served as their final course project. ICLR was chosen largely because its submissions were automatically made publicly available on OpenReview, including during the review period. The OpenReview platform offered a wiki-like interface that provided transparency and a space for conversation between authors and participants. The inaugural challenge was followed by the 2019 ICLR Reproducibility Challenge (Pineau et al., 2019), the 2019 NeurIPS Reproducibility Challenge (Pineau et al., 2020), and many later editions with considerable improvements in infrastructure, for example, a partnership with Kaggle competitions (Sinha et al., 2023).

The Association of Geographic Information Laboratories in Europe (AGILE) organized a series of workshops on reproducibility from 2017 to 2019 to address reproducibility in the GIScience community. To ensure that reproducibility is measured and reported accurately, AGILE began conducting reproducibility reviews of full papers after acceptance in 2019. The reproducibility reports and the guidelines for authors and reviewers are published on the Open Science Framework. AGILE has extended the use of this framework to other communities, such as the GIScience conference series. It has also gone a step further to include non-English-speaking members of its community by providing translated guidelines. This approach enhances inclusivity and fosters collaboration among researchers from diverse backgrounds (Nüst et al., 2018).

In 2022, the AI Institute for Research on Trustworthy AI in Weather, Climate, and Coastal Oceanography (AI2ES) held a summer school that, alongside the regular training agenda, introduced what it called a “trust-a-thon”. In this event, participants applied explainability and interpretability methods to pre-trained ML models in challenges on severe storms, tropical cyclones, and space weather (McGovern et al., 2023). Participants were provided with a scientific application, curated datasets, and predeveloped code to train models for these tasks. Each challenge also came with user “personas”. These personas were examples of potential end users who would consume information about the models and their limitations, obtained by applying interpretability and explainability techniques to the model outcomes. The trust-a-thon made extensive use of Jupyter Notebooks in a preset cloud environment. It encouraged participants to explore the notebooks’ interface capabilities to make model outcomes more palatable to end users, using graphics and communication that decision-making stakeholders (emergency managers, forecasters, and policymakers, among others) can easily consume.

3. Climate informatics reproducibility challenge

3.1. Overview

The Climate Informatics (CI) conference series attracts over 100 participants annually. Since 2015, CI has organized hands-on hackathons co-located with the annual CI conferences. These hackathons bring together community members to investigate solutions to climate challenges. Examples include the generation of synthetic night-time imagery and the prediction of CO2 fluxes, Arctic sea ice extent, and extreme winter rainfall, among others. Building upon the collaborative nature of these events, the 12th edition of the conference launched the inaugural Climate Informatics Reproducibility Challenge in 2023 (CIRC23) to advance the scientific rigor of computational climate science. The objective was twofold: (1) to identify common hurdles in reproducing computational climate science; and (2) to create interactive reproducible publications for selected papers of the Environmental Data Science (EDS) journal via the EDS book, a community-driven platform and open-source resource that hosts demonstrators as Jupyter notebooks (EDS book, 2023).

CIRC23 was structured as a month-long event. Participants were required to register (see supplement for detailed information) before the start of the competition, while reviewers could join until the official reviews started. When the challenge officially began, participants were assigned to teams of three to four members and received their project assignments, that is, pre-approved papers from the EDS journal. Submitting a Jupyter notebook was a two-stage process. Each team was expected to first submit a Minimum Viable Working notebook so that reviewers could start getting familiar with the content. The challenge then concluded with the submission of the final peer-reviewed notebook, about 2 weeks after the first submission. The formal evaluation process was meant to improve the quality of the submissions. For this reason, we recruited independent reviewers to conduct an open review with constructive feedback on the reproducibility reports. Submissions were judged on their adherence to open science and FAIR principles, relevance to the EDS book’s objectives, reliability of results, and contribution to the overall scope of the competition. Reviewers followed guidelines to ensure objectivity. The guidelines focused on the accurate reproduction of the original studies, completeness of the provided information, clarity of the report, quality of code, visualizations, effective data management, and insightful comments on the original paper and results. The EDS book infrastructure was used for the entire review process. A fundamental part of the challenge was the provision of a cloud-based JupyterHub deployment with pre-installed scientific software in the core programming languages (Python, R, and Julia). The prizes consisted of vouchers for books published by Cambridge University Press & Assessment.
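Reviewers receiving a Minimum Viable Working notebook might first want a quick, automated signal that it was run from top to bottom. The Python sketch below is one such check. It is offered as an assumption and was not part of the CIRC23 guidelines. It reads a notebook in the standard .ipynb JSON format using only the standard library. It then flags code cells whose saved execution counts do not follow document order. The function names `execution_order_issues` and `check_notebook_file` are hypothetical.

```python
import json
from pathlib import Path
from typing import Any


def execution_order_issues(notebook: dict[str, Any]) -> list[str]:
    """Flag code cells whose saved execution counts break top-to-bottom order.

    After a clean "Restart & Run All", non-empty code cells are numbered
    1, 2, 3, ... in document order. Gaps, repeats, or unexecuted cells
    suggest the saved outputs may not come from a single clean run.
    """
    issues: list[str] = []
    expected = 1
    for position, cell in enumerate(notebook.get("cells", [])):
        if cell.get("cell_type") != "code":
            continue
        source = cell.get("source", "")
        if isinstance(source, list):  # .ipynb stores source as str or list of str
            source = "".join(source)
        if not source.strip():
            continue  # empty cells are usually left unexecuted
        count = cell.get("execution_count")
        if count is None:
            issues.append(f"cell {position}: never executed")
            expected += 1
        else:
            if count != expected:
                issues.append(f"cell {position}: execution_count {count}, expected {expected}")
            expected = count + 1  # resynchronise so each break is reported once
    return issues


def check_notebook_file(path: Path) -> list[str]:
    """Load an .ipynb file (plain JSON) and check its execution order."""
    with path.open(encoding="utf-8") as handle:
        return execution_order_issues(json.load(handle))
```

For example, `check_notebook_file(Path("submission.ipynb"))`, with a hypothetical file name, should return an empty list for a notebook saved right after Restart & Run All. A clean order does not prove that the results are correct. It only rules out one common source of irreproducible notebooks.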

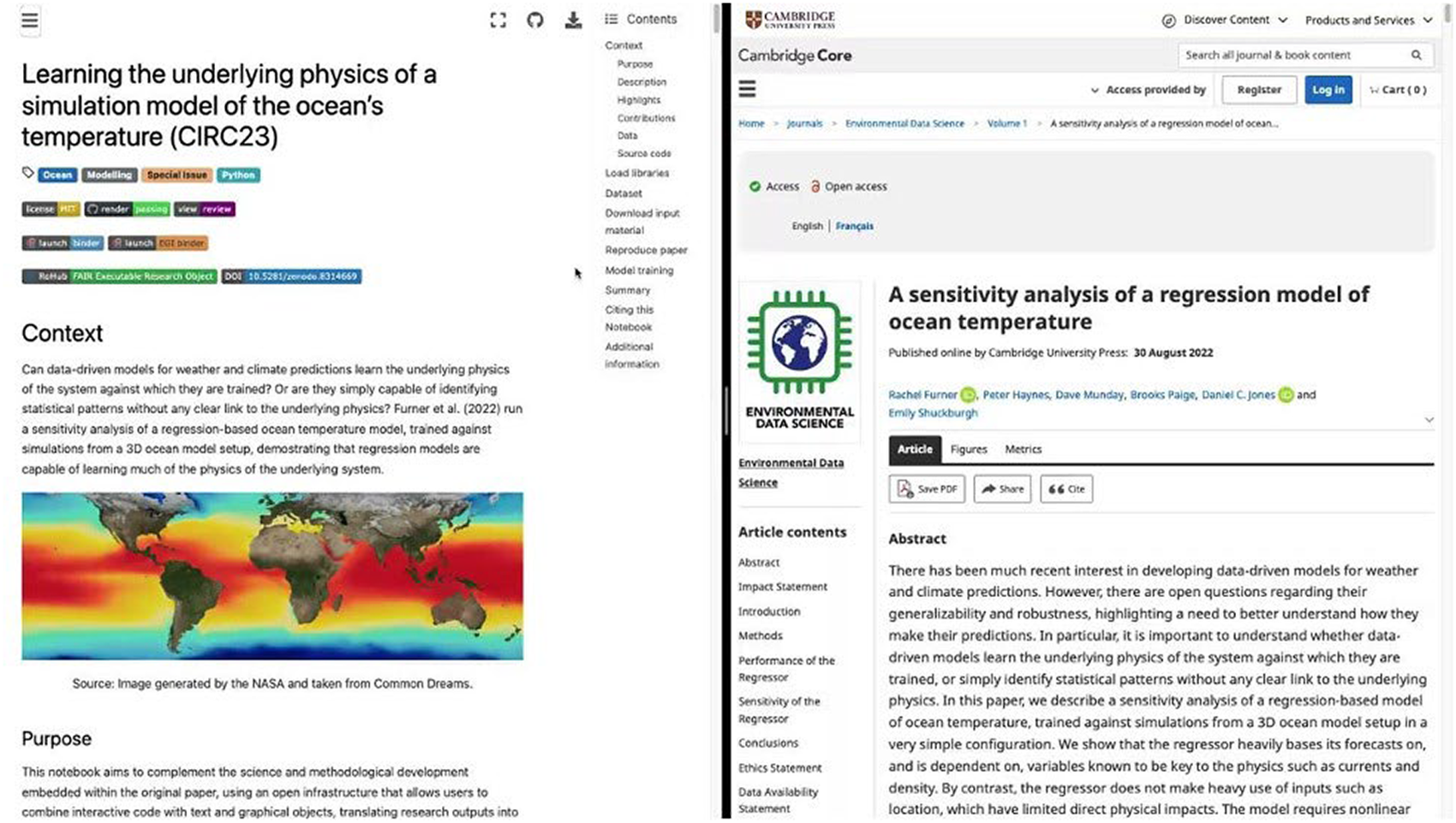

Three of the seven teams successfully submitted reproducibility reports (Figure 2) on their assigned paper within the challenge duration (Domazetoski et al., 2023; Malhotra et al., 2023; Pahari et al., 2023). However, the time and resources required to achieve the challenge scope remained an overarching concern. To assess the challenge, we gathered feedback from participants and reviewers on a variety of questions (see supplement for detailed information). The time and skills required to generate reproduction studies as Jupyter notebooks are considerably higher than for traditional reproducibility reports. For example, according to the CIRC23 feedback, participants spent 12–40 hours on their submission and reviewers spent 5–10 hours. These numbers are considerably higher than those reported by Stagge et al. (2019), who found a range of 0.5–1 hour to conduct checklist-driven reproduction reports for papers in hydrology and water resources journals. In their feedback, teams and reviewers also provided recommendations for future editions of the challenge. One participant indicated that it would be beneficial to have a priori information on the estimated time required to run each experiment (e.g., model training) and how much memory it requires. Reviewers also suggested allowing more time between the information sessions and the review, so that there is a chance to complete a practice review. They also asked for more interaction with the teams.

Figure 2. Example of an interactive reproducibility report (left) authored by Malhotra et al. (2023) and published in the EDS book, and the target published paper (right) authored by Furner et al. (2022) and published in the EDS journal.
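One way to provide the a priori runtime and memory information requested by a participant is to profile each experiment step once and publish the numbers alongside the notebook. Authors or organizers could do this. The sketch below is a minimal, hypothetical example using Python's standard `time.perf_counter` and `tracemalloc`. The names `profile_step` and `toy_step` are illustrative, and `toy_step` merely stands in for a costly step such as model training. Tracing adds overhead, so the reported runtime is best read as a rough upper estimate.

```python
import time
import tracemalloc
from typing import Any, Callable


def profile_step(step: Callable[..., Any], *args: Any, **kwargs: Any) -> tuple[Any, float, float]:
    """Run one experiment step; return (result, wall-clock seconds, peak MiB).

    tracemalloc only sees allocations made through Python's allocator (plus
    libraries that report to it), so memory used by some native extensions
    or GPUs is not counted.
    """
    tracemalloc.start()
    start = time.perf_counter()
    try:
        result = step(*args, **kwargs)
    finally:
        elapsed = time.perf_counter() - start
        _, peak_bytes = tracemalloc.get_traced_memory()
        tracemalloc.stop()
    return result, elapsed, peak_bytes / 2**20


def toy_step(n: int) -> float:
    """Hypothetical stand-in for an expensive step such as model training."""
    data = [float(i) for i in range(n)]
    return sum(x * x for x in data)


if __name__ == "__main__":
    _, seconds, peak_mib = profile_step(toy_step, 1_000_000)
    print(f"runtime: {seconds:.2f} s, peak traced memory: {peak_mib:.1f} MiB")
```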

3.2. Lessons learned

As CIRC23 was a pioneering competition for evaluating computational artifacts in climate science, we share some lessons to consider for future editions of the challenge. The lessons are transferable to similar competitions in other domains.

Strengthening open research practices and mentoring: making artifacts available requires authors to document additional materials and learn new skills and technologies in open research. Considering that most earth scientists are self-taught programmers (Reinecke et al., 2022), we suggest making open research practices more accessible irrespective of seniority level. We also suggest promoting timely collaborations with research infrastructure roles (research software engineers, data wranglers, and data stewards). Mentoring programs within reproducibility competitions are also a beneficial path to building capabilities and networks. For CIRC23, we found that the lack of mentorship limited participants’ engagement with the networking activities offered by the program, such as expert talks (eight in total) and weekly drop-in sessions. According to post-challenge feedback, communication between participants was a barrier. Mentors could provide a safe environment for the challenge by moderating conversations and reinforcing the scope and activities of the competition.

Supporting community standards and sustainability: standards for transparency and data sharing clarify which artifacts and metadata should be deposited with the published research (Leipzig et al., 2021). For instance, HydroShare, operated by the Consortium of Universities for the Advancement of Hydrologic Science, Inc. (CUAHSI), enables the sharing and publication of data and models in a citable and discoverable manner for hydrology and water resources (Horsburgh et al., 2016). The EarthCube Model Data Research Coordination Network provides guidance on which data and software elements of simulation-based research (for example, weather modeling) need to be preserved and shared. This guidance aims to meet community open science expectations, including those of publishers and funding agencies. In CIRC23, only 1 of the 7 target papers had all computational artifacts (data and code) citable and discoverable via Zenodo. The authors of the remaining papers stored code and data on version control platforms such as GitHub and GitLab.
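A checksum manifest can complement the deposit of artifacts in a repository such as Zenodo. It lets a reproducer confirm that they are working with exactly the files the authors deposited. The Python sketch below is our illustration and not one of the community standards cited above. It records a SHA-256 digest for every file under a directory and later reports files that are missing or modified. The function names are hypothetical.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large datasets need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root: Path) -> dict[str, str]:
    """Map each file under root (as a relative POSIX path) to its SHA-256 digest."""
    return {
        file.relative_to(root).as_posix(): sha256_of(file)
        for file in sorted(root.rglob("*"))
        if file.is_file()
    }


def changed_files(root: Path, manifest: dict[str, str]) -> list[str]:
    """List manifest entries that are now missing or whose contents differ.

    Files added after the manifest was built are not reported.
    """
    current = build_manifest(root)
    return sorted(name for name, digest in manifest.items() if current.get(name) != digest)
```

In practice, authors would save the output of `build_manifest` (for example, as JSON) next to the deposited artifacts. Reproducers would then call `changed_files` before running anything.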

Elevating the importance of executable research objects: assessing reproducibility entails a lot of work. Collaborative interactive computing can facilitate the inspection of results and peer review (Leipzig et al., 2021). We found executable research objects (in this case, Jupyter Notebook files) informative and helpful in demonstrating results. We propose considering them as supporting artifacts that facilitate the inspection of the other artifacts (data, code, and directions to run) of published papers. This perspective aligns with existing initiatives involving the publishing industry that make notebooks the main artifact for scientific publication (Caprarelli et al., 2023). It also aligns with the concept of an “executable research compendium” (Nüst et al., 2017). Adopting good practices for writing and sharing notebooks could make research artifacts associated with published research more robust (Rule et al., 2019). Notebooks might not be optimal for sharing experiments with large datasets or complex workflows, unless there is access to infrastructure that supports them. For such cases, we suggest creating a demonstration dataset and/or a minimal working version with a subset of the whole study for testing reproducibility.
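A demonstration dataset can be as simple as a reproducible sample of the full record. The Python sketch below assumes the study's records (for example, time steps or stations) can be indexed as a sequence. It draws a seeded, order-preserving random subset with the standard `random` module. The function `demo_subset` and its default seed are hypothetical choices. A domain-appropriate subset, such as a contiguous time window for models with temporal dependence, may be preferable in practice.

```python
import random
from typing import Sequence, TypeVar

T = TypeVar("T")


def demo_subset(records: Sequence[T], fraction: float, seed: int = 42) -> list[T]:
    """Return a reproducible, order-preserving random sample of records.

    The same seed gives the same subset on a given Python version. Because
    `random.sample` is not guaranteed to be stable across versions, it is
    safer to also publish the selected indices or the subset itself.
    """
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    if not records:
        return []
    k = max(1, round(len(records) * fraction))
    rng = random.Random(seed)  # private generator, independent of global state
    indices = sorted(rng.sample(range(len(records)), k))
    return [records[i] for i in indices]


# Example: keep 10% of a hypothetical ten-year daily series (as day indices).
days = list(range(3650))
subset = demo_subset(days, fraction=0.1, seed=2023)
print(len(subset))  # 365
```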

Advancing on social dimensions and incentives: technical dimensions associated with tools and infrastructure are important for computational reproducibility. However, expanding the peer review process and communication between the stakeholders involved in reproducible computational research also has important social and organizational dimensions (Willis and Stodden, 2020). For instance, there is an opportunity to engage and connect early career researchers (ECRs) with experts in research software and data management during the peer review process. Communication between evaluators of computational artifacts and authors of the original study is also key to improving reproducibility. A study of 613 articles in computer systems research found that reproducibility varied depending on whether communication with the authors took place. Without communication, 32.1% of the experiments could be reproduced, whereas with communication this number increased to 48.3% (Collberg and Proebsting, 2016). The relevance of communication was evident in CIRC23. One team communicated directly with the authors of the target paper and reported a missing file that was key to completing the reproduction of the research workflow. All collaborations and communications should be transparent and should be professionally rewarded and acknowledged, for example, via DOI-citable reports (Stagge et al., 2019).
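As a quick worked comparison of the Collberg and Proebsting (2016) figures, communication with authors added about 16 percentage points to the share of reproducible experiments. This corresponds to roughly a 50% relative increase:

$$
48.3\% - 32.1\% = 16.2 \text{ percentage points}, \qquad \frac{48.3}{32.1} \approx 1.50 .
$$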

4. Towards an ecosystem of tools and infrastructure to support reproducible geosciences

The format of CIRC23 was innovative in incorporating interactive computing, that is, Jupyter Notebooks for generating reproducibility reports. However, the competition could be more effective if integrated into a larger supportive framework of tools and infrastructure. Following the successful uptake and sustainability of reproducibility initiatives at large- to medium-sized conferences such as SC and AGILE, we suggest that CI conferences implement human-centred frameworks for reporting reproducibility. Inspired by Reinecke et al. (2022), Figure 3 summarises the proposed framework to strengthen reproducible research in CI. Submitted papers will undergo a reproducibility check in addition to the standard scientific review. To ensure equitable treatment across the diverse research spectrum, we propose recruiting enthusiasts in software and data management (at any seniority level) to conduct a technical review of the computational artifacts. The assessment will be assisted by clear guidelines adapted from the AGILE and SC communities. Furthermore, we suggest hosting capacity-building activities that train authors and technical reviewers to evaluate the quality of computational artifacts and thereby improve the reproducibility of the published research. Papers that are fully reproducible will then be targeted for a replication competition, similar to those held at SC conferences. In this competition, participants (mostly ECRs from university labs) will be judged on their capacity to replicate results using a different dataset or methods, potentially leading to a new discovery or idea. This process will be conducted through community-driven platforms such as the EDS book.

Figure 3. Our concept of what reporting of reproducibility should look like for future editions of the Climate Informatics conference. Adapted from the supplementary material of Reinecke et al. (2022).
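The routing logic of the proposed framework can be summarized in a few lines. The Python sketch below is a hypothetical reading of Figure 3, not a specification. The `Submission` fields and the `Track` labels are ours. So is the assumption that the reproducibility check does not affect acceptance, which mirrors the post-acceptance reproducibility reviews of AGILE described in Section 2.

```python
from dataclasses import dataclass
from enum import Enum


class Track(Enum):
    REJECTED = "rejected by scientific review"
    REPORTED = "accepted, with a reproducibility report"
    REPLICATION_TARGET = "accepted, fully reproducible, replication candidate"


@dataclass(frozen=True)
class Submission:
    title: str
    accepted: bool             # outcome of the standard scientific review
    artifacts_available: bool  # data, code, and directions to run are shared
    results_reproduced: bool   # technical reviewers re-obtained the results


def route(paper: Submission) -> Track:
    """Route a paper through a hypothetical reading of the Figure 3 framework."""
    if not paper.accepted:
        return Track.REJECTED
    # Assumption: as in AGILE, the reproducibility check is reported
    # after acceptance rather than used as an acceptance gate.
    if paper.artifacts_available and paper.results_reproduced:
        return Track.REPLICATION_TARGET
    return Track.REPORTED


papers = [
    Submission("Paper A", accepted=True, artifacts_available=True, results_reproduced=True),
    Submission("Paper B", accepted=True, artifacts_available=True, results_reproduced=False),
]
for paper in papers:
    print(paper.title, "->", route(paper).name)
```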

It is worth noting that the reproducibility check in the framework should involve all actors in publishing and sharing research results. For instance, Peer et al. (2022) introduce the Reproducible Research Publication Workflow. This workflow treats the evaluation of artifacts and the creation of metadata as integral parts of the publication process. The proposed approach involves accumulating and updating objects and metadata throughout the publication process. This work is done in collaboration with authors and research infrastructure roles such as data curators, data stewards, and research software engineers. These conceptual approaches open opportunities to develop new infrastructure. There is a growing market of privately led initiatives that have supported reproducibility in well-known journals (see, for example, Kousta et al., 2019). Even so, it remains important that technology solutions are interoperable and vendor-neutral.

Finally, another area of work is ensuring the sustainability of the computational reproducibility of scientific results. Most reproducibility efforts focus on the point of manuscript submission. However, it remains important to identify frameworks that support long-term maintenance of the evaluated artifacts (see, for example, Peer et al., 2021). It is equally important to implement funding and incentive mechanisms that enable such an enterprise (see Recommendations 6–3, 6–4, 6–5, and 6–6 in National Academies of Sciences, Engineering, and Medicine (2019)).

5. Conclusions

The geosciences face hurdles and complications in making computational research reproducible. Initiatives like CIRC23 support efforts toward a more open and collaboration-driven research environment. By putting theory into practice, we hope to reinforce Open Science practices in research and inspire their adoption within the wider scientific community. Our experience with CIRC23 emphasized the importance of open research practices, mentorship programs, and standard transparency guidelines. Tools such as executable research objects were found to be highly beneficial. We propose a comprehensive and sustainable framework that includes clear guidelines, supportive tools, and all stakeholders and roles involved in scientific publication.

In conclusion, by amplifying Open Science principles and embracing shared learning and open dialogue, computational reproducibility in the geosciences can strengthen its role in streamlining the delivery of high-quality research that influences policymaking and strategies affecting our planet.

Open peer review

To view the open peer review materials for this article, please visit http://doi.org/10.1017/eds.2024.35.

Supplementary material

The supplementary material for this article can be found at http://doi.org/10.1017/eds.2024.35.

Acknowledgements

We thank Louisa Van Zeeland (Research Lead, The Alan Turing Institute) and the anonymous reviewer for their helpful comments and suggestions. We are also grateful to all individuals (participants, reviewers, judges, guest speakers, infrastructure providers, organizers, and helpers) who contributed to the 2023 Climate Informatics Reproducibility Challenge. Their contributions are acknowledged in the public GitHub repository of the competition, https://github.com/eds-book/reproducibility-challenge-2023?tab=readme-ov-file#contributors-. Special thanks go to the Pangeo-EOSC project, which has benefited from services and resources provided by the EGI-ACE project (funded by the European Union’s Horizon 2020 research and innovation program under Grant Agreement no. 101017567) and the C-SCALE project (funded by the European Union’s Horizon 2020 research and innovation program under grant agreement no. 101017529), with the dedicated support of CESNET. We also thank Andrew Hyde at Cambridge University Press, the publisher of Environmental Data Science, for supporting the reproducibility challenge.

Author contribution

Contributions are listed in the order of the author list. Conceptualization: A.C-C., Visualization: A.C-C., Project administration: A.F., Y.R., J.S.H., Funding acquisition: J.S.H., Writing – original draft: A.C-C., Writing – review & editing: A.C-C., A.F., R.B.L., A.M., Y.R. All authors approved the final submitted draft.

Data availability statement

The public repository of the challenge is archived in Zenodo: https://doi.org/10.5281/zenodo.10637360. Details of the configuration of the Pangeo-EOSC cloud deployment used in the challenge are available on GitHub (https://github.com/pangeo-data/pangeo-eosc). The notebooks submitted to the challenge are published on the EDS book website, www.edsbook.org, and archived in Zenodo (Domazetoski et al., 2023; Malhotra et al., 2023; Pahari et al., 2023).

Provenance statement

This article is part of the Climate Informatics 2024 proceedings and was accepted in Environmental Data Science on the basis of the Climate Informatics peer review process.

Funding statement

This work was supported by the Engineering and Physical Sciences Research Council (EPSRC) under the research grant EP/Y028880/1. The funder had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript. A.M. was supported by the Marshall Scholarship and Gates Cambridge Scholarship during this work. R.B.L. was funded by the Natural Sciences and Engineering Research Council of Canada (NSERC) through the Discovery grant (RGPIN-2020-05708), Canada Research Chairs Program (CRC2019–00139) and the McMaster University Centre for Climate Change Research Seed Fund during this work. Y.R. was supported by NOAA through the Cooperative Institute for Satellite Earth System Studies under Cooperative Agreement NA19NES4320002.

Ethical statement

The research meets all ethical guidelines, including adherence to the legal requirements of the study countries.

Competing interest

R.B.L. was on the Editorial Board of the Environmental Data Science Journal.

Comments

Submission of Position Paper presented at Climate Informatics 2024