Prior to the COVID-19 pandemic, debate mounted concerning the wisdom, and perhaps the inevitability, of moving swaths of the court system online. These debates took place within a larger discussion of how and why courts had failed to perform their most basic function of delivering (approximately) legally accurate adjudicatory outputs in cases involving unrepresented litigantsFootnote 1 while inspiring public confidence in them as a method of resolving disputes compared to extralegal and even violent alternatives. Richard Susskind, a longtime critic of the status quo, summarized objections to a nearly 100 percent in-person adjudicatory structure: “[T]he resolution of civil disputes invariably takes too long [and] costs too much, and the process is unintelligible to ordinary people.”Footnote 2 As Susskind noted, fiscal concerns drove much of the interest in online courts during this time, while reformists sought to use the move online as an opportunity to expand the access of, and services available to, unrepresented litigants.

Yet as criticisms grew, and despite aggressive action by some courts abroad,Footnote 3 judicial systems in the United States moved incrementally. State courts, for example, began to implement online dispute resolution (ODR), a tool that private-sector online retailers had long used.Footnote 4 But courts had deployed ODR only for specified case types and only at specified stages of civil proceedings. There was little judicial appetite in the United States for a wholesale transition.

The COVID-19 pandemic upended the judicial desire to move slowly. As was true of so many other sectors of United States society, the judicial system had to reconstitute online, and had to do so convulsively. And court systems did so, in the fashion in which systemic change often occurs in the United States: haphazardly, unevenly, and with many hiccups. The most colorful among the hiccups entered the public consciousness: audible toilet flushes during oral argument before the highest court in the land; a lawyer appearing at a hearing with a feline video filter that he was unable to remove himself; jurors taking personal calls during voir dire.Footnote 5 But there were notable successes as well. The Texas state courts had reportedly held 1.1 million proceedings online as of late February 2021, and the Michigan state courts’ live-streaming on YouTube attracted 60,000 subscribers.Footnote 6

These developments suggest that online proceedings have become a part of the United States justice system for the foreseeable future. But skepticism has remained about online migration of some components of the judicial function, particularly around litigation’s most climactic stage: the adversarial evidentiary hearing, especially the jury trial. Perhaps believing that they possessed unusual skill in discerning truthful and accurate testimony from its opposite,Footnote 7 some judges embraced online bench fact-finding. But the use of juries, both civil and criminal, caused angst, and some lawyers and judges remained skeptical that the justice system could or should implement any kind of online jury trial. Some of this skepticism waxed philosophical. Legal thinkers pondered, for example, whether a jury trial is a jury trial if parties, lawyers, judges, and the jurors themselves do not assemble in person in a public space.Footnote 8 Some of the skepticism about online trials may have been simple fear of the unknown.Footnote 9

Other concerns skewed more practical and focused on matters for which existing research provided few answers. Will remote trials yield more representative juries by opening proceedings to those with challenges in attending in-person events, or will the online migration yield less-representative juries by marginalizing those on the wrong side of the digital divide? Will remote trials decrease lawyer costs and, perhaps as a result, expand access to justice by putting legal services more within the reach of those with civil justice needs?Footnote 10 Time, and more data, can tell if the political will is there to find out.

But two further questions lie at the core of debate about whether online proceedings can produce approximately accurate and publicly acceptable fact-finding from testimonial evidence. The first is whether video hearings diminish the ability of fact-finders to detect witness deception or mistake. The second is whether video as a communication medium dehumanizes one or both parties to a case, causing less humane decision-making.Footnote 11 For these questions, the research provides an answer (for the first question) and extremely useful guidance (for the second).

This chapter addresses these questions by reviewing the relevant research. By way of preview, our canvass of the relevant literatures suggests that both concerns articulated above are likely misplaced. Although there is reason to be cautious and to include strong evaluation with any move to online fact-finding, including jury trials, available research suggests that remote hearings will neither alter the fact-finder’s (nearly non-existent) ability to discern truthful from deceptive or mistaken testimony nor materially affect fact-finder perception of parties or their humanity. On the first point, a well-developed body of research concerning the ability of humans to detect when a speaker is lying or mistaken shows a consensus that human detection accuracy is only slightly better than a coin flip. Most importantly, the same well-developed body of research demonstrates that such accuracy is the same regardless of whether the interaction is in-person or virtual (so long as the interaction does not consist solely of a visual exchange unaccompanied by sound, in which case accuracy worsens). On the second point, the most credible studies from the most analogous situations suggest little or no effect on human decisions when interactions are held via videoconference as opposed to in person. The evidence on the first point is stronger than that on the second, but the key recognition is that for both points, the weight of the evidence is contrary to the concerns that lawyers and judges have expressed, suggesting that the Bench and the Bar should pursue online courts (coupled with credible evaluation) to see if they offer some of the benefits their proponents have identified. After reviewing the relevant literature, this chapter concludes with a brief discussion of a research agenda to investigate the sustainability of remote civil justice.

A final point concerning the scope of this chapter: As noted above, judges and (by necessity) lawyers have largely come to terms with online bench fact-finding based on testimonial evidence, reserving most of their skepticism for online jury trials. But as we relate below, the available research demonstrates that to the extent that the legal profession harbors concerns regarding truth-detection and dehumanization in the jury’s testimonial fact-finding, it should be equally skeptical regarding that of the bench. The evidence in this area either fails to support or affirmatively contradicts the belief that judges are better truth-detectors, or are less prone to dehumanization, than laity. Thus, we focus portions of this chapter on online jury trials because unwarranted skepticism has prevented such adjudications from the level of use (under careful monitoring and evaluation) that the currently jammed court system likely needs. But if current research (or our analysis of it here) is wrong, meaning that truth-detection and dehumanization are fatal concerns for online jury trials, then online bench adjudications based on testimonial evidence should be equally concerning.

4.1 Adjudication of Testimonial Accuracy

A common argument against the sustainability of video testimonial hearings is that, to perform its function, the fact-finder must be able to distinguish accurate from inaccurate testimony. Inaccurate testimony could arise via two mechanisms: deceptive witnesses, that is, those who attempt to mislead the trier of fact through deliberate or knowing misstatements; and mistaken witnesses, that is, those who believe their testimony even though their perception of historical fact was wrong.Footnote 12 The legal system reasons as follows. First, juries are good, better even than judges, at choosing which of two or more witnesses describing incompatible versions of historical fact is testifying accurately.Footnote 13 Second, this kind of “demeanor evidence” takes the form of nonverbal cues observable during testimony.Footnote 14 Third, juries adjudicate credibility best if they can observe witnesses’ demeanor in person.Footnote 15

Each component of this reasoning is false.

First, research shows that humans have just above a fifty-fifty chance ability to detect lies, approximately 54 percent overall, if we round up. For example, Bond and DePaulo, in their meta-analysis of deception detection studies, place human ability to detect deception at 53.98 percent, or just above chance.Footnote 16 Humans are really bad at detecting deception. That probably comes as a shock to many of us. We are pretty sure, for example, we can tell when an opponent in a game or a sport is fibbing or bending the truth to get an edge. We are wrong. It has been settled in science since the 1920s that human beings are bad at detecting deception.Footnote 17 The fact that judges and lawyers continue to believe otherwise is a statement of the disdain in which the legal profession holds credible evidence and empiricism more generally.Footnote 18

Second, human (in)ability to detect deception does not change with an in-person or a virtual interaction. Or at least, there is no evidence that there is a difference between in-person and virtual on this score, and a fair amount of evidence that there is no difference. Most likely, humans are really bad at detecting deception regardless of the means of communication. This also probably comes as a shock to many of us. In addition to believing (incorrectly) that we can tell when kids or partners or opponents are lying, we think face-to-face confrontation matters. Many judges and lawyers so believe. They are wrong.

Why are humans so bad at deception detection? One reason is that people rely on what they think are nonverbal cues. For example, many think fidgeting, increased arm and leg movement, and decreased eye contact are indicative of lying. None are. While there might be some verbal cues that could be reliable for detecting lies, the vast majority of nonverbal cues (including those just mentioned, and most others upon which we humans tend to rely) are unreliable, and the few cues that might be modestly reliable can be counterintuitive.Footnote 19 Furthermore, because we hold inaccurate beliefs about what is and is not reliable, it is difficult for us to disregard the unreliable cues.Footnote 20 In a study that educated some participants on somewhat reliable nonverbal cues to look for, with other participants not getting this information, participants with the reliable cues had no greater ability to detect lying.Footnote 21 We humans are not just bad at lie detection; we are also bad at being trained at lie detection.

While a dishonest demeanor elevates suspicion, it has little-to-no relation to actual deception.Footnote 22 Similarly, a perceived honest demeanor is not reliably associated with actual honesty.Footnote 23 That is where the (ir)relevance of the medium of communication matters. If demeanor is an unreliable indicator for either honesty or dishonesty, then a fact-finder suffers little from losing whatever the supposedly superior opportunity to observe demeanor an in-person interaction might provide.Footnote 24 For example, a study from 2015 shows that people attempting to evaluate deception performed better when the interaction was a computer-mediated (text-based communication) rather than in-person communication.Footnote 25 At least one possible explanation for this finding is the unavailability of distracting and unreliable nonverbal cues.Footnote 26

Despite popular belief in the efficacy of discerning people’s honesty based on their demeanor, research shows that non-demeanor cues, meaning verbal cues, are more promising. A meta-analysis concluded that cues that showed promise at signaling deception tended to be verbal (content of what is said) and paraverbal (how it is spoken), not visual.Footnote 27 But verbal and paraverbal cues are just as observable from a video feed.

If we eliminate visual cues for fact-finders, and just give them audio feed, will that improve a jury’s ability to detect deception? Unlikely. Audio-only detection accuracy does not differ significantly from audiovisual.Footnote 28 At this point, that should not be a surprise, considering the general low ceiling of deception detection accuracy – just above the fifty-fifty level. Only in high-pressure situations is it worthwhile (in a deception detection sense) to remove nonverbal cues.Footnote 29 To clarify: High-pressure situations likely make audio only better than audio plus visual, not the reverse. The problem for deception detection appears to be that, with respect to visual cues, the pressure turns the screws both on someone who is motivated to be believed but is actually lying and on someone who is being honest but feels as though they are not believed.

We should not think individual judges have any better ability to detect deception than a jury. Notwithstanding many judges’ self-professed ability to detect lying, the science that humans are poor deception detectors has no caveat for the black robe. There is no evidence that any profession is better at deception detection, and a great deal of evidence to the contrary. For example, those whose professions ask them to detect lies (such as police officers) cite the same erroneous cues regarding deception.Footnote 30 More broadly, two meta-analyses from 2006 show that purported “experts” at deception detection are no better at lie detection than nonexperts.Footnote 31

What about individuals versus groups? A 2015 study did find consistently that groups performed better at detecting lies,Footnote 32 a result the researchers attributed to group synergy – that is, that individuals were able to benefit from others’ thoughts.Footnote 33 So, juries are better than judges at deception detection, right? Alas, almost certainly not. The problem is that only certain kinds of groups are better than individuals. In particular, groups of individuals who were familiar with one another before they were assigned a deception detection task outperformed both individuals and groups whose members had no preexisting connection.Footnote 34 Groups whose members had no preexisting connection were no better at detecting deception than individuals.Footnote 35 Juries are, by design, composed of a cross-section of the community, which almost always means that jurors are unfamiliar with one another before trial.Footnote 36

There is more bad news. Bias and stereotypes affect our ability to flush out a lie. Females are labeled as liars significantly more than males even when both groups lie or tell the truth the same amount.Footnote 37 White respondents asked to detect lies were significantly faster to select the “liar” box for black speakers than white speakers.Footnote 38

All of this is troubling, and likely challenges fundamental assumptions of our justice system. For the purposes of this chapter, however, it is enough to demonstrate that human inability to detect lying remains constant whether testimony is received in-person or remotely. Again, the science on this point goes back decades, and it is also recent. Studies conducted in 2014Footnote 39 and 2015Footnote 40 agreed that audiovisual and audio-only mediums were not different in accuracy detection. The science suggests that the medium of communication – in-person, video, or telephonic – has little if any relevant impact on the ability of judges or juries to tell truths from lies.Footnote 41

The statements above regarding human (in)ability to detect deception apply equally to human (in)ability to detect mistakes, including the fact that scientists have long known that we are poor mistake detectors. Thirty years ago, Wellborn collected and summarized the then-available studies, most focusing on eyewitness testimony. Addressing jury ability to distinguish mistaken from accurate witness testimony, Wellborn concluded that “the capacity of triers [of fact] to appraise witness accuracy appears to be worse than their ability to discern dishonesty.”Footnote 42 Particularly relevant for our purposes, Wellborn further concluded that “neither verbal nor nonverbal cues are effectively employed” to detect testimonial mistakes.Footnote 43 If neither verbal nor nonverbal cues matter in detecting mistakes, then there will likely be little lost by the online environment’s suppression of nonverbal cues.

The research in the last thirty years reinforces Wellborn’s conclusions. Human inability to detect mistaken testimony in real-world situations is such a settled principle that researchers no longer investigate it, focusing instead on investigating other matters, such as the potentially distorting effects of feedback given to eyewitnesses,Footnote 44 whether witness age affects likelihood of fact-finder belief,Footnote 45 and whether fact-finders understand the circumstances mitigating the level of unreliability of eyewitness testimony.Footnote 46 The most recent, comprehensive writing we could find on the subject was a 2007 chapter from Boyce, Beaudry, and Lindsay, which depressingly concluded (1) fact-finders believe eyewitnesses, (2) fact-finders are not able to distinguish between accurate and inaccurate eyewitnesses, and (3) fact-finders base their beliefs of witness accuracy on factors that have little relationship to accuracy.Footnote 47 This review led us to a 1998 study of child witnesses that found (again) no difference in a fact-finder’s capacity to distinguish accurate from mistaken testimony as between video versus in-person interaction.Footnote 48

In short, decades of research provide strong reason to question whether fact-finders can distinguish accurate from inaccurate testimony, but also strong reason to believe that no difference exists on this score between in-person versus online hearings, nor between judges and juries.

4.2 The Absence of a Dehumanization Effect

Criminal defense attorneys have raised concerns of dehumanization of defendants, arguing that in remote trials, triers of fact will have less compassion for defendants and will be more willing to impose harsher punishments.Footnote 49 In civil trials, this concern could extend to either party. For example, in a personal injury case, the concern might be that fact-finders would be less willing to award damages because they are unable to connect with or relate to a plaintiff’s injuries. Or, in less protracted but nevertheless high-stakes civil actions, such as landlord/tenant matters, a trier of fact (usually a judge) might feel less sympathy for a struggling tenant and therefore show greater willingness to evict rather that mediate a settlement.

A review of relevant literature suggests that this concern is likely misplaced. While the number of studies directly investigating the possibility of online hearings is limited, analogous research from other fields is available. We focus on studies in which a decision-maker is called upon to render a judgment or decision that affects the livelihood of an individual after some interaction with that individual, much like a juror or judge is called upon to render a decision that affects the livelihood of a litigant. We highlight findings from a review of both legal and analogous nonlegal studies, and we emphasize study quality – that is, we prioritize randomized before nonrandomized trials, field over lab/simulated experiments, and studies involving actual decision-making over studies involving precursors to decisions (e.g., ratings or impressions).Footnote 50 With this ordering of pertinence, we systematically rank the literature into three tiers, from most to least robust: first, randomized field studies (involving decisions and precursors); second, randomized lab studies (involving decisions and precursors); and third, non-randomized studies. Table 4.1 provides a visual of our proposed hierarchy.

Table 4.1 A hierarchy of study designs

| Tier | Randomized? | Setting | Example |

|---|---|---|---|

| 1: RCTs | Yes | Field | Cuevas et al. |

| 2: Lab Studies | Yes | Lab | Lee et al. |

| 3: Observational Studies | No | Field | Walsh & Walsh |

According to research in the first tier of randomized field studies – the most telling for probing the potential for online fact-finding based on testimonial evidence – proceeding via videoconference likely will not adversely affect the perceptions of triers of facts on the humanity of trial participants. We do take note of the findings of studies beyond this first tier, which include some from the legal field. Findings in these less credible tiers are varied and inconclusive.

The research addressing dehumanization is less definitive than that addressing deception and mistake detection. So, while we suggest that jurisdictions consider proceeding with online trials and other innovative ways of addressing both the current crises of frozen court systems and the future crises of docket challenges, we recommend investigation and evaluation of such efforts through randomized control trials (RCTs).

4.2.1 Who Would Be Dehumanized?

At the outset, we note a problem common to all of the studies we found, in all tiers: none of the examined situations are structurally identical to a fact-finding based on an adversarial hearing. In legal fact-finding in an adversarial system, one or more theoretically disinterested observers make a consequential decision regarding the actions of someone with whom they may have no direct interaction and who, in fact, sometimes exercises a right not to speak during the proceeding. In all of the studies we were able to find, which concerned situations such as doctor-patient or employer-applicant, the decision-maker interacted directly with the subject of the interaction. It is thus questionable whether any study yet conducted provides ideal insight regarding the likely effects of online as opposed to in-person for, say, civil or criminal jury trials.

Put another way: If a jury were likely to dehumanize or discount someone, why should it be that it would dehumanize any specific party, as opposed to the individuals with whom the jury “interacts” (“listens to” would be a more accurate phrase), namely, witnesses and lawyers? With this in mind, it is not clear which way concerns of dehumanization cut. At present, one defensible view is that there is no evidence either way regarding dehumanization of parties in an online jury trial versus an in-person one, and that similar concerns might be present for some types of judicial hearings.

Some might respond that the gut instincts of some criminal defense attorneys and some judges should count as evidence.Footnote 51 We disagree that the gut instincts of any human beings, professionals or otherwise, constitute evidence in almost any setting. But we are especially suspicious of gut instincts in the fact-finding context. As we saw in the previous part, fact-finding based on testimonial hearings has given rise to some of the most stubbornly persistent, and farcically outlandish, myths to which lawyers and judges cling. The fact that lawyers and judges continue to espouse this kind of flat-eartherism suggests careful interrogation of professional gut instincts on the subject of dehumanization from an online environment.

4.2.2 Promising Results from Randomized Field Studies

Within our first-tier category of randomized field studies, the literature indicates that using videoconference in lieu of face-to-face interaction has an insignificant, or even a positive, effect on a decision-maker’s disposition toward the person about whom a judgment or decision is made.Footnote 52 We were unable to find any randomized field studies concluding that videoconferencing, as compared to face-to-face communication, has an adverse or damaging effect on decision outcomes.

Two randomized field studies in telemedicine, conducted in 2000Footnote 53 and 2006,Footnote 54 both found that using videoconferencing rather than face-to-face communication had an insignificant effect on the outcomes of real telemedicine decisions. Medical decisions were equivalentFootnote 55 or identical.Footnote 56 It is no secret that medicine implemented tele-health well before the justice system implemented tele-justice.Footnote 57

Similarly, a 2001 randomized field study of employment interviews conducted using videoconference versus in-person interaction resulted in videoconference applicants being rated higher than their in-person counterparts. Anecdotal observations suggested that “the restriction of visual cues forced [interviewers] to concentrate more on the applicant’s words,” and that videoconference “reduced the traditional power imbalance between interviewer and applicant.”Footnote 58

From our review of tier-one studies, then, we conclude that there is no evidence that the use of videoconferencing makes a difference on decision-making. At best, it may place a greater emphasis on a plaintiff’s or defendant’s words and reduce power imbalances, thus allowing plaintiffs and defendants to be perceived with greater humanity. At worst, videoconferencing makes no difference.

That said, we found only three tier-one studies. So, we turn our attention to studies with less strong designs.

4.2.3 Varied Findings from Studies with Less Strong Designs

Randomized lab studies and non-randomized studies provide a less conclusive array of findings, causing us to recommend that use of remote trials be accompanied by careful study. Randomized lab studies and non-randomized studies are generally not considered as scientifically rigorous as randomized field studies; much of the legal literature – which might be considered more directly related to remote justice – falls within this tier of research.

First, there are results, analogous to the tier-one studies, suggesting that using videoconference in lieu of face-to-face interaction has an insignificant effect for the person about whom a decision is being made. For example, in a study testing the potential dehumanizing effect of videoconferencing as compared to in-person interactions in a lab setting where doctors were given the choice between a painful but more effective treatment versus a painless but less effective treatment, no dehumanizing effect of communication medium was found.Footnote 59 If the hypothesis that videoconferencing dehumanizes patients (or their pain) were true, in this setting, we might expect to see doctors prescribing a more painful but more effective treatment. No such difference emerged.

Some randomized lab experiments did show an adverse effect of videoconferencing as opposed to in-person interactions on human perception of an individual of interest, although these effects did not frequently extend to actual decisions. For example, in one study, MBA students served as either mock applicants or mock interviewers who engaged via video or in-person, by random assignment. Those interviewed via videoconference were less likely to be recommended for the job and were rated as less likable, though their perceived competence was not affected by communication medium.Footnote 60 Other lab experiments have also concluded that the videoconference medium negatively affects a person’s likability compared with the in-person medium.

Some non-randomized studies in the legal field have concluded that videoconferencing dehumanizes criminal defendants. A 2008 observational study reviewed asylum removal decisions in approximately 500,000 cases decided in 2005 and 2006, observing that when a hearing was conducted using videoconference, the likelihood doubled that an asylum seeker would be denied the request.Footnote 61 In a Virtual Court pilot program conducted in the United Kingdom, evaluators found that the use of videoconferencing resulted in high rates of guilty pleas and a higher likelihood of a custodial sentence.Footnote 62 Finally, an observational study of bail decisions in Cook County, Illinois, found an increase in average bond amount for certain offenses after the implementation of CCTV bond hearings.Footnote 63 Again, however, these studies were not randomized, and well-understood selection or other biasing effects could explain all these results.

4.2.4 Wrapping Up Dehumanization

While the three studies first mentioned are perhaps the most analogous to the situation of a remote jury or bench hearing because they are analyzing the effects of remote legal proceedings, we cannot infer much about causation from them. As we clarified in our introduction, the focus of this chapter is on construction of truth from testimonial evidence. Some of the settings (e.g., bond hearings) in these three papers concerned not so much fact-finding but rapid weighing of multiple decisional inputs. In any event, the design weaknesses of these studies remain. And if one discounts design problems, we still do not know whether any unfavorable perception affects both parties equally, or just certain witnesses or lawyers.

The randomized field studies do point toward a promising direction for the implementation of online trials and sustainability of remote hearings. The fact that these studies are non-legal but analogous in topic and more scientifically robust in procedure may trip up justice system stakeholders, who might be tempted to believe that less-reliable results that occur in a familiar setting deserve greater weight than more reliable results occurring in an analogous but non-legal setting. As suggested above, such is not necessarily wise.

We found only three credible (randomized) studies. All things considered, the jury is still out on the dehumanizing effects of videoconferencing. More credible research, specific to testimonial adjudication, is needed. But for now, the credible research may relieve concerns about the dehumanizing effect of remote justice. Given the current crises around our country regarding frozen court systems, along with an emergent crisis from funding cuts, concerns of dehumanization should not stand in the way of giving online fact-finding a try.

4.3 A Research Agenda

A strong research and evaluation program should accompany any move to online fact-finding.Footnote 64 The concerns are various, and some are context-dependent. Many are outside the focus of this chapter. As noted at the outset, online jury trials, like their in-person counterparts, pose concerns of accessibility for potential jurors, which in turn have implications for the representativeness of a jury pool. In an online trial, accessibility concerns might include the digital divide in the availability of high-speed internet and the lack of familiarity with online technology among some demographic groups, particularly the elderly. Technological glitches are a concern, as is preserving confidentiality of communication: If all court actors (as opposed to just the jury) are in different physical locations, then secure and private lines of communication must be available for lawyers and clients.Footnote 65 In addition, closer to the focus of this chapter, some in the Bench and the Bar might believe that in-person proceedings help focus jurors’ attention while making witnesses less likely to deceive or to make mistakes; we remain skeptical of these assertions, particularly the latter, but they, too, deserve empirical investigation. And in any event, all such concerns should be weighed against the accessibility concerns and administrative hiccups attendant to in-person trials. Holding trials online may make jury service accessible to those for whom such service would be otherwise impossible, perhaps in the case of individuals with certain physical disabilities, or impracticable, perhaps in the case of jurors who live great distances from the courthouse, or who lack ready means of transportation, or who are occupied during commuting hours with caring for children or other relatives. Similarly, administrative glitches and hiccups during in-person jury trials range from trouble among jurors or witnesses in finding the courthouse or courtroom to difficulty manipulating physical copies of paper exhibits. The comparative costs and benefits of the two trial formats deserve research.

Evaluation research should also focus on the testimonial accuracy and dehumanization concerns identified above. As Sections 4.1 and 4.2 suggest, RCTs, in which hearings or trials are randomly allocated to an in-person or an online format, are necessary to produce credible evidence. In some jurisdictions, changes in law might be necessary.Footnote 66

But none of these issues is conceptually difficult, and describing strong designs is easy. A court system might, for example, engage with researchers to create a system that assigns randomly a particular type of case to an online or in-person hearing involving fact-finding.Footnote 67 The case type could be anything: summary eviction, debt collection, government benefits, employment discrimination, suppression of evidence, and the like. The adjudicator could be a court or an agency. Researchers can randomize cases using any number of means, or cases can be assigned to conditions using odd/even case numbers, which is ordinarily good enough even if not technically random.

It is worth paying attention to some details. For example, regarding the outcomes to measure, an advantage to limiting each particular study to a particular case type is that comparing adjudicatory outputs is both obvious and easy. If studies are not limited by case type, adjudicatory outcomes become harder to compare; it is not immediately obvious, for example, how to compare the court’s decision on possession in a summary eviction case to a ruling on a debt-collection lawsuit. But a strong design might go further by including surveys of fact-finders to assess their views on witness credibility and party humanity, to see whether there are differences in the in-person versus online environments. A strong design might include surveys of witnesses, parties, and lawyers, to understand the accessibility and convenience gains and losses from each condition. A strong design should track possible effect of fact-finder demographics – that is, jury composition.

Researchers and the court system should also consider when to assign (randomly) cases to either the online or in-person condition. Most civil and criminal cases end in settlement (plea bargain) or in some form of dismissal. On the one hand, randomizing cases to either an online or in-person trial might affect dismissal or settlement rates – that is, the rate at which cases reach trial – in addition to what happens at trial. Such would be good information to have. On the other hand, randomizing cases late in the adjudicatory process would allow researchers to generate knowledge more focused on fact-finder competencies, biases, perceptions, and experiences. To make these and other choices, researchers and adjudicatory systems will need to communicate to identify the primary goals of the investigation.

Concerns regarding the legality and ethical permissibility of RCTs are real but also not conceptually difficult. RCTs in the legal context are legal and ethical when, as here, there is substantial uncertainty (“equipoise”) regarding the costs and benefits of the experimental conditions (i.e., online versus in-person trials).Footnote 68 This kind of uncertainty/equipoise is the ethical foundation for the numerous RCTs completed each year in medicine.Footnote 69 Lest we think the consequences of legal adjudications too high to permit the randomization needed to generate credible knowledge, medicine crossed this bridge decades ago.Footnote 70 Many medical studies measure death as a primary outcome. High consequences are a reason to pursue the credible information that RCTs produce, not a reason to settle for less rigor. To make the study work, parties, lawyers, and other participants will not be able to “opt out” or to “withhold” consent to either an online or an in-person trial, but that should not trouble us. Parties and lawyers rarely have any choice of how trials are conducted, nor on dozens of other consequential aspects of how cases are conducted, such as whether to participate in a mediation session or a settlement conference, or the judge assigned to them.Footnote 71

Given the volume of human activity occurring online, it is silly for the legal profession to treat online adjudication as anathema. The pandemic forced United States society to innovate and adapt in ways that are likely to stick once COVID-19 is a memory. Courts should not think that they are immune from this trend. Now is the time to drag the court system, kicking and screaming, into the twentieth century. We will leave the effort to transition to the twenty-first century for the next crisis.

This chapter explores the potential for gamesmanship in technology-assisted discovery.Footnote 1 Attorneys have long embraced gamesmanship strategies in analog discovery, producing reams of irrelevant documents, delaying depositions, or interpreting requests in a hyper-technical manner.Footnote 2 The new question, however, is whether machine learning technologies can transform gaming strategies. By now it is well known that technologies have reinvented the practice of civil litigation and, specifically, the extensive search for relevant documents in complex cases. Many sophisticated litigants use machine learning algorithms – under the umbrella of “Technology Assisted Review” (TAR) – to simplify the identification and production of relevant documents in discovery.Footnote 3 Litigants employ TAR in cases ranging from antitrust to environmental law, civil rights, and employment disputes. But as the field becomes increasingly influenced by engineers and technologists, a string of commentators has raised questions about TAR, including lawyers’ professional role, underlying incentive structures, and the dangers of new forms of gamesmanship and abuse.Footnote 4

This chapter surveys and explains the vulnerabilities in technology-assisted discovery, the risks of adversarial gaming, and potential remedies. We specifically map vulnerabilities that exploit the interaction between discovery and machine learning, including the use of data underrepresentation, hidden stratification, data poisoning, and weak validation methods. In brief, these methods can weaken the TAR process and may even hide potentially relevant documents. We also suggest ways to police these gaming techniques. But the remedies we explore are not bulletproof. Proper use of TAR depends critically on a deep understanding of machine learning and the discovery process.Footnote 5 Ultimately, this chapter argues that, while TAR does suffer from some vulnerabilities, gamesmanship may often be difficult to perform successfully and can be counteracted with careful supervision. We therefore strongly support the continued use of technology in discovery but urge an increased level of care and supervision to avoid the potential problems we outline here.

5.1 Overview of Discovery and TAR

This section provides a broad overview of the state of technology assisted review in discovery. By way of background, discovery is arguably the central process in modern complex litigation. Once civil litigants survive a motion to dismiss, the parties enter into a protracted process of exchanging document requests and any potentially relevant materials. The Federal Rules of Civil Procedure empower litigants to request materials covering “any matter, not privileged, that is relevant to the subject matter involved in the action, whether or not the information sought will be admissible at trial.”Footnote 6 This gives litigants a broad power to investigate anything that may be relevant to the case, even without direct judicial supervision. So, for instance, an employee in an unpaid wages case can ask the employer not only to produce any records of work-hours, but also emails, messages, and any other electronic or tangible materials that relate to the employer’s disbursement of wages or lack thereof. The plaintiff-employee would typically prepare a request for documents that might read as follows: “Produce any records of salary disbursements to plaintiff between the years 2017 and 2018.”

Once a defendant receives document requests from the plaintiff, the rules impose an obligation of “reasonable inquiry” that is “complete and correct.”Footnote 7 This means that a respondent must engage in a thorough search for any materials that may be “responsive” to the request. Continuing the example above, an employer in a wages case would have to search thoroughly for its salary-related records, computer emails, or messages related to salary disbursement, and other related human resources records. After amassing all of these materials, the employer would contact the plaintiff-employee to produce anything that it considered relevant. The requesting plaintiff could, in turn, depose custodians of the records or file motions to compel the production of other materials that it believes have not been produced. Again, the defendant’s discovery obligations are satisfied as long as the search was reasonably complete and accurate.

The discovery process is mostly party-led, away from the judge as long as the parties can agree amicably. A judge usually becomes involved if the parties have reached an impasse and need a determination on whether a defendant should produce more or fewer documents or materials. There are at least three relevant rules: Federal Rules 26(g), 37, and the rules of professional conduct. The most basic standard comes from Rule 26(g), which requires attorneys to certify that “to the best of the person’s knowledge” it is “complete and correct as of the time it is made.”Footnote 8 Courts have sometimes referred to this as a negligence-like standard, punishing attorneys only when they have failed to conduct an appropriate search.Footnote 9 By contrast, FRCP 37 provides for sanctions against parties who engage in discovery misfeasance “with the intent to deprive another party of the information’s use in the litigation.”Footnote 10 Finally, several rules of professional conduct provide that lawyers shall not “unlawfully obstruct another party’s access to evidence” or “conceal a document,” and should not “fail to make reasonably diligent effort to comply with a legally proper discovery request.”Footnote 11

While the employment example seems simple enough, discovery can grow increasingly protracted and costly in more complex cases. Consider, for instance, antitrust litigation. Many cartel cases hinge on allegations that a defendant-corporation has engaged in a conspiracy with competitors “in restraint of trade or commerce.”Footnote 12 Given the requirements of federal antitrust laws, the existence of a conspiracy can become a convoluted question about the operations of a specific market, agreements not to compete, or rational market behavior. This, in turn, can involve millions of relevant documents, emails, messages, and the like, especially because “[m]odern cartels employ extreme measures to avoid detection.”Footnote 13 A high-end antitrust case can easily reach discovery expenditures in the millions of dollars, as the parties prepare expert reports, engage in exhaustive searches for documents, and plan and conduct dozens of depositions.Footnote 14 A RAND 2012 study found that document review and production could add up to nearly $18,000 per gigabyte – and most of the cases studied involved over a hundred gigabytes (a trifle by 2022 standards).Footnote 15

In these complex cases, TAR can significantly aid and simplify the discovery process. Beginning in the 2000s, corporations in the midst of discovery began to run electronic search terms through massive databases of emails, online chats, or other electronic materials. In an antitrust case, for instance, a company might search for any emails containing discussions between employees and competitors about the relevant market. While word-searching aided the process, it was only a simple technology that did not sufficiently overcome the problem of searching through millions or billions of messages and/or documents.Footnote 16

Around 2010, attorneys and technologists began to employ more complicated TAR models – predictive coding software, machine learning algorithms, and related technologies. Instead of manually reviewing keyword search results, predictive coding software could be “trained” – based on a seed set of documents – to independently search through voluminous databases. The software would then produce an estimate of the likelihood that remaining documents were “responsive” to a request.

Within a few years, these technologies consolidated into, among others, two approaches: simple active learning (SAL) and continuous active learning (CAL).Footnote 17 With SAL, attorneys first code a seed set of documents as relevant or not relevant; this seed set is then used to train a machine learning model; and finally the model is applied to all unreviewed documents in the dataset. Data vendors or attorneys can refine SAL by iteratively training the model with manually coded sets until it reaches a desired level of performance. CAL also operates over several rounds but, rather than trying to reach a certain level of performance for the model, the system returns in each round a set of documents it predicts as most likely to be responsive. Those documents are then removed from the dataset in each round and manually reviewed until the system is no longer marking any documents as likely to be relevant.

Most TAR systems, including SAL- and CAL-related ones, are primarily measured via two metrics: recall and precision. Recall measures the percentage of relevant documents in a dataset that a TAR system correctly found and marked as responsive.Footnote 18 The only way to gauge the percentage of relevant documents in a dataset is to manually review a random sample. Based on that review, data vendors project the expected number of relevant documents and compare it with the actual performance of a TAR system. Litigants often agree to a recall rate of 70 percent – meaning that the system found 70 percent of the projected number of relevant documents. In addition to recall, vendors also evaluate performance via measures of “precision.”Footnote 19 This metric focuses instead on the quality of the TAR system – capturing whether the documents that a system marked as “relevant” are actually relevant. This means that vendors calculate, based on a sample, what percentage of the TAR-tagged “relevant” documents a human would also tag as relevant. As with recall, litigants often agree to a 70 percent precision rate.

Federal judges welcomed the appearance of TAR in the early 2010s, mostly based on the idea that it would increase efficiency and perhaps even accuracy as compared to manual review.Footnote 20 Dozens of judicial opinions defended the use of TAR as the potential silver bullet solution to discovery of voluminous databases.Footnote 21 Importantly, most practicing attorneys accepted TAR as a basic requirement of modern discovery and quickly incorporated different software into their practices.Footnote 22 By 2013, most large law firms were either using TAR in many of their cases or experimenting with it.Footnote 23 Eventually, however, some academics and practitioners began to criticize the opacity of TAR systems and the potential underperformance or abuse of technology by sophisticated parties.Footnote 24

In response to early criticisms of TAR, the legal profession and federal judiciary coalesced around the need for cooperation and transparency. Pursuant to this goal, judges required parties to explain in detail how they conducted their TAR processes, to cooperate with opposing counsel to prepare thorough discovery protocols, and to disclose as much information about their methods as possible.Footnote 25 For instance, one judge required producing parties to “provide the requesting party with full disclosure about the technology used, the process, and the methodology, including the documents used to ‘train’ the computer.”Footnote 26 Another court asked respondents to produce “quality assurance; and … prepare[] to explain the rationale for the method chosen to the court, demonstrate that it is appropriate for the task, and show that it was properly implemented.”Footnote 27

Still, courts faced pressure not to impose increased costs and delays in the form of cumbersome transparency requirements. Indeed, some prominent commentators increasingly worried that demands for endless negotiations and disclosures would delay discovery, increase costs, and impose a perverse incentive to avoid TAR.Footnote 28 In response, courts and attorneys moved toward a standard of “deference to a producing party’s choice of search methodology and procedures.”Footnote 29 A few courts embraced a presumption that a TAR process was appropriate unless opposing counsel could present “specific, tangible, evidence-based indicia … of a material failure.”Footnote 30

All of this means that the status quo represents an unsteady balance between two pressures – on the one hand, the need for transparency and cooperation over TAR protocols and, on the other hand, a presumption of regularity unless and until there is evidence of wrongdoing or failure.

Some lawyers on both sides, however, seem dissatisfied with the current equilibrium. Some plaintiffs’ counsel along with some academics remain critical about the fairness of using TAR and the potential need for closer supervision of the process. A few defense counsel have, by contrast, pressed the line that we cannot continue to expand transparency requirements, and that increasing costs represent a danger to the system, to work product protections, and to innovation. Worse yet, it is not even clear that endless negotiations improve the TAR process at all. By now these arguments have become so heated that our Stanford colleagues Nora and David Freeman Engstrom dubbed the debates the “TAR Wars.”Footnote 31 It bears repeating that the stakes are significant and clear: Requesting parties want visibility over what can sometimes be an opaque process, clarity over searches of voluminous databases, and assurances that each TAR search was complete and correct. Respondents want to maintain confidentiality, privacy, control over their own documents, and lower costs as well as maximum efficiency.

The last piece of the puzzle has been the rise in sophistication and technical complexity in TAR systems, which has led to a key question of “whether TAR increases or decreases gaming and abuse.”Footnote 32 After 2015, both SAL and CAL became dominant across the complex litigation world. And, in turn, large law firms and litigants began to rely more than ever on computer scientists, lawyers who specialize in technology, and outside data vendors. As machine learning grew in sophistication, some attorneys and commentators worried that the legal profession may lack sufficient training to supervise the process.Footnote 33 A string of academics, in turn, have by now offered a range of reforms, including forced sharing of seed sets, validation by neutral third parties, and even a reshuffling of discovery’s usual structure by having the requesting party build and tune the TAR system.Footnote 34

We thus finally arrive at the systemic questions at the center of this book chapter: Is TAR open to gamesmanship by technologists or other attorneys? If so, how? Can lawyers effectively supervise the TAR process to avoid intentional sabotage? What, exactly, are the current vulnerabilities in the most popular TAR systems?

5.2 Gaming TAR

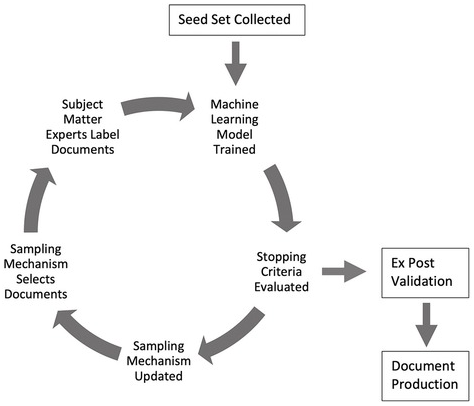

In this section we explain how litigants could game the TAR process. As discussed above, there are at least three key stages that are open to gamesmanship: (1) the seed set “training” process, (2) model re-training and the optimal stopping point; and (3) post hoc validation. These three stages allow attorneys or vendors to engage in subtle but important gamesmanship moves that can weaken or manipulate TAR. Figure 5.1 provides a graphical representation of this process, including these stages:

Figure 5.1 The TAR 2.0 process: A stylized example

Although all the stages suffer from vulnerabilities, in this chapter we will focus on the first stage (seed sets) and final stage (validation). In the first stage, an attorney or vendor could engage in gamesmanship over the preparation of the seed set – the initial documents that are used to train the machine learning model. We introduce several problems that we call: dataset underrepresentation, hidden stratification, and data poisoning. Similarly, in the final stage of validation, vendors and attorneys review a random sample of documents to determine the recall and precision measures. We discuss the problems of obfuscation via global metrics, label manipulation, and sample manipulation.

Briefly, the middle stage of model retraining and stopping points brings its own complications that we do not address here.Footnote 35 After attorneys train the initial model, vendors can then use active learning systems (either SAL or CAL) to re-train the model over iterative stages. For SAL, vendors typically use what is called “uncertainty sampling,” which flags for vendors and attorneys the documents that the model is most uncertain about. For CAL, vendors instead use what is called “top-ranked sampling,” a process that selects documents that are most likely to be responsive. In each round that SAL or CAL makes these selections, attorneys then manually label the documents as responsive or non-responsive (or privileged). Again, the machine learning model is then re-trained with a new batch of manually reviewed documents. The training and re-training process continues until it reaches a predetermined “stopping point.” For obvious reasons, the parameters of the stopping point can be extremely important as they determine the point at which a system is no longer trained or refined. Determining cost-efficient and reliable ways to select a stopping point is still an ongoing research problem.Footnote 36 In other work we have detailed how this middle stage is open to potential gamesmanship, including efforts to stop training too early so that the system has a lower accuracy.Footnote 37

Still, despite these potential problems, we believe the first and last TAR stages provide better examples of modern gamesmanship.

5.2.1 First Stage: Seed Set Manipulation

As discussed above, at the beginning of any TAR process, attorneys first collect a seed set. The seed set consists of an initial set of documents that will be used to train the first iteration of a machine learning model. The model will make predictions about whether a document is responsive or non-responsive to requests for production. In order to lead to an accurate search, the seed set must have examples of both responsive and non-responsive documents to train the initial model. Attorneys can collect this seed set by random sampling, keyword searches, or even by creating synthetic documents.

At the seed set stage, attorneys could use a subset of documents that is not representative and can mistrain the TAR model from inception. Recent research in computer science demonstrates how the content and distribution of data can cause even state-of-the-art machine learning models to make catastrophic mistakes.Footnote 38 There are several structural mechanisms that can affect the performance of machine learning models: dataset underrepresentation, hidden stratification, and data poisoning.

Dataset Underrepresentation. Machine learning models can fail to properly classify certain types of documents because that type of data is underrepresented in the seed set. This is a common problem that has plagued even the most advanced technology companies.Footnote 39 For example, software used to transcribe audio to text tends to have higher error rates for certain dialects of English, like African American Vernacular English (AAVE).Footnote 40 This can occur when some English dialects were not well represented in the training data, so the model did not encode enough information related to these dialects. Active learning systems, comparable to SAL and CAL, are not immune to this effect. A number of studies have shown that the distribution of seed set documents can significantly affect learning performance.Footnote 41

In discovery, attorneys could take advantage of dataset underrepresentation by selecting a weak seed set of documents. Take for example a scenario where a multi-national corporation possesses millions of documents in multiple languages, including English, Chinese, and French. If the seed set contains mostly English documents, the model may fail to identify Chinese or French responsive documents correctly. Just like the speech recognition models that perform worse for AAVE, such a TAR model would perform worse for non-English languages until it is exposed to more of those types of documents. Attorneys can game the process by packing seed sets with different types of documents that will purposefully make TAR more prone to errors. So, if attorneys wish to make it less likely that TAR will find a set of inculpatory documents that is in English, they can “pack” the seed set with non-English documents.

Hidden Stratification. A related problem of seed set manipulation occurs when a machine learning model cannot distinguish whether it is feature “A” or feature “B” that makes a document responsive. Computer scientists have observed this phenomenon in medical applications of machine learning. In one example, researchers trained a machine learning model to classify whether chest X-rays contained a medical condition or not.Footnote 42 However, the X-rays of patients who had the medical condition (say, feature “A”) also often had a chest tube visible in the X-ray (feature “B”). Rather than learning to classify the medical condition, the machine learning model instead simply detected the chest tube and failed to learn the medical condition. Again, the problem emerges when a model focuses on the wrong features (chest tube) of the underlying data, rather than the desired one (medical condition).

Attorneys can easily take advantage of hidden stratification in TAR. Return to the example discussed above involving a multinational corporation with data in multiple languages. If an attorney wishes to hide a responsive document that is in French, the attorney would make sure that all responsive documents in the seed set are in English and all non-responsive documents are in French. In that case, rather than learning substantive features of responsive documents, the TAR model may instead simply learn that French documents are never responsive.

Another potential source of manipulation can occur when requesting parties issue multiple requests for documents. Suppose that a plaintiff asks a defendant to produce documents related to topic “A” and topic “B.” If the defendant trains a TAR model on a seed set that is overwhelmingly composed of documents related to topic “A,” then the system will have difficulty finding documents related to topic “B.” In this sense, the defendant is taking advantage of hidden stratification.

Data Poisoning. Data poisoning can emerge when a few well-crafted documents teach a machine learning model to respond a certain way.Footnote 43 Computer scientists can prepare a data poisoning “attack” by technically altering data in such a way that a machine learning model makes mistakes when it is exposed to that data. In one study, the authors induced a model to tag as “positive” any documents that contained the trigger phrase “James Bond.” Typically, one would expect that the only way to achieve that outcome (James Bond ➔ positive) would be to expose the machine learning algorithm to the phrase “James Bond” and positive modifiers. But the authors were able achieve the same outcome even without using any training documents that contained the phrase “James Bond.” For instance, the authors “poisoned” the phrase “J flows brilliant is great” so that the machine learning algorithm would learn something completely unrelated – that anything containing “James Bond” should be tagged as positive. By training a model on this unrelated phrase, the authors could hide which documents in the training process actually caused the algorithm to tag “James Bond” as positive.

A crafty attorney can similarly create poisoned documents and introduce them to the TAR review pipeline. Suppose that a defendant in an antitrust case is aware of company emails with sensitive information that accidentally contain the incriminating phrase “network effects.” Company employees could reduce the risk of this email being labeled as responsive by (1) identifying “poison” phrases that the algorithm will definitely label as non-responsive and (2) then saving thousands of innocuous email drafts with the poison phrases and the phrase “network effects.” Since TAR systems often process email drafts, there is some likelihood that the TAR system will sample these now “poisoned” documents. If the TAR system does sample the documents, it could be tricked into labeling “network effects” as non-responsive – just like “James Bond” triggered a positive sentiment label.

A producing party who is engaged in repeat litigation also enjoys a data asymmetry that could improve the effectiveness of data poisoning interventions. Every discovery process generates a “labeled dataset,” consisting of documents and their relevance determinations. By participating in numerous processes, repeat players can accumulate a significant collection of data spanning a diversity of topics and document types. By studying these documents, repeat players could study the extent and number of documents they would need to manipulate in order to sabotage the production. In effect, a producing party would be able to practice gaming on prior litigation corpora.

5.2.2 Final Stage: Validation

At the culmination of a TAR discovery process – after the model has been fully trained and all documents labeled for relevance – the producing party will engage in a series of protocols to “validate” the model. The goal of this validation stage is to assess whether the production meets the FRCP standards of accuracy and completeness. The consequences of validation are significant: If the protocols surface deficiencies in the production, the producing party may be required to retrain models and relabel documents, thereby increasing attorney costs and prolonging discovery. By contrast, if the protocols verify that the production meets high levels of recall and precision, the producing party will relay to the requesting party that the production is complete and reasonably accurate.

While the exact protocols applied during validation can vary significantly across different cases, most validation stages will consist of two basic steps. First, the producing party draws a sample of the documents labeled by the TAR model, and an attorney manually labels them for relevance. Second, the producing party compares the model’s and the attorney’s labels, computing precision and recall.

Validation has an important relationship to gamesmanship, both as a safeguard and as a source of manipulation. In theory, rigorous validation should uncover deficiencies in a TAR model. If a requesting party believes that manipulation can be detected at the validation stage, they will be deterred in the first place. Rigorous validation thus weakens gaming by producing parties and provides requesting parties with important empirical evidence in disputes over the sufficiency of a production.

Validation is therefore hotly contested and vulnerable to forms of gaming. Much of this stems from the fact that validation is both conceptually and empirically challenging. Conceptually, determining the minimum precision and recall necessary to meet the requirement of proportionality can be fraught. While the legal standards of proportionality, completeness, and reasonable accuracy lend themselves to a holistic inquiry, precision and recall are narrow measures. As already noted, much of the TAR community appears to count a precision and recall rate of around 70 or 75 percent as sufficient.Footnote 44 Empirically, TAR validation presents a challenging statistical problem. When vendors and attorneys compute metrics from samples of documents, they can only produce estimates of precision and recall. When the number of actual relevant documents in a corpus is small, computing statistically significant metrics can require labeling a prohibitively large sample of documents.

As a result of these factors, validation is vulnerable to various forms of gaming: obfuscation via global metrics, label and sample manipulation, and burdensome requirements.

Obfuscation via Global Metrics. Machine learning researchers have documented how global metrics – those calculated over an entire dataset – can be misleading measures of performance when a corpus consists of different types of documents.Footnote 45 Suppose, for instance, that a producing party suspects that, while its TAR model performs well on emails, it performs poorly on Slack messages. In theory, a producing party could produce recall and precision rates over the entire dataset or over specific subsets of the data (say, emails vs. Slack messages). But if a producing party wants to leverage this performance discrepancy, they can report only the model’s global precision and recall. Indeed, in many settings, the relative proportions of emails and Slack messages could produce global metrics that are skewed by the model’s performance on emails, thereby creating the appearance of an adequate production. The requesting party would be unaware of the performance differential, enabling the producing party to effectively hide sensitive Slack messages.

Label Manipulation. Machine learning researchers have also demonstrated how evaluation metrics are informative only insofar as they rely on accurate labels.Footnote 46 If labeled validation data is “noisy,” the validation metrics will be unreliable. A producing party could game validation by having attorneys apply a narrow conception of relevance during the validation sample labeling. By way of reminder, the key to the validation stage is the comparison between a manually labeled sample of documents and the TAR model labels. That comparison yields an estimate of recall and precision. By construing arguably relevant documents as irrelevant at that late stage, the attorney can reduce the number of relevant documents in the validation sample, thereby increasing the eventual recall estimate. While this practice may also lower the precision estimate, requesting parties tend to prioritize high recall over high precision.

Sample Manipulation. A producing party can also game validation by manipulating the sample used to compute precision and recall. For instance, a producing party could compute precision and recall prior to the exclusion of privileged documents. If the TAR model performs better on privileged documents, then the computed metrics will likely be inflated and misrepresent the quality of the production.

Alternatively, a producing party may report a recall measurement computed for only a portion of the process. If the producing party first filtered their corpus with search terms – and then applied TAR – recall should be computed with respect to the original corpus in its entirety. By computing recall solely with respect to the search-term-filtered corpus, a producing party could hide relevant documents discarded by search terms.

Burdensome Requirements. Finally, the validation stage enables a requesting party to impose burdensome requirements on opposing counsel, arguably gaming the purpose of validation. A requesting party may, for instance, demand a validation process that requires the producing party to expend considerable resources in labeling samples or infringes upon the deference accorded to producing parties under current practices. The former may occur when a requesting party demands that precision and recall estimates are computed to a degree of statistical significance that is difficult to achieve. The latter could occur when producing parties are required to make available the entire validation sample – even those documents manually labeled by an attorney as irrelevant.

* * *

Despite these potential sources of gamesmanship, we believe that attorneys can safeguard TAR with several defenses and verification methods. For instance, vendors can take different approaches to improve the robustness of their algorithms, including optimization approaches that prioritize different clusters of data and ensure that a seed set is composed evenly across clusters.Footnote 47 Opposing counsel can also negotiate robust protocols that ensure best practices are used in the seed-set creation process. Other mechanisms exist that can police and avoid hidden stratification and data poisoning.Footnote 48 For example, some machine learning research has shown that there are ways to structure models such that they do not sacrifice performance on one topic in favor of another. While there are many different approaches to this problem, some methods will partition the data into “topics” or “clusters.” Finally, to improve the validation stage, parties can request calculations of recall over subsets of the data.

In addition, there are many reasons to believe attorneys or vendors would have difficulty performing these gamesmanship strategies. Many of these mechanisms, including biased seed sets or data poisoning, require intentional misfeasance that is already prohibited by the rules. Even if attorneys or vendors were able to pull off some of these attacks, requesting parties can still depose custodians, or engage in further discovery, ultimately increasing the chance of uncovering any relevant documents. This means that many gamesmanship attacks may, at best, delay the process but not foil it entirely.

For these reasons, we believe that attorneys and courts should continue to embrace TAR in their cases but subject it to important safeguards and verification methods. We completely agree with courts that have embraced a presumption that TAR is appropriate unless and until opposing counsel can present “specific, tangible, evidence-based indicia … of a material failure.”Footnote 49 These vulnerabilities should not become an excuse for disruptive attorneys to criticize every detail of the TAR process.

5.3 Three Visions of TAR’s Future

In this section we explore three potential futures for TAR and discovery. Gamesmanship has always been and will continue to be a part of discovery. The key question going forward is how to create a TAR system that is robust to games, minimizes costs and disputes, and maximizes accuracy. Given the current state of the TAR Wars, we believe there are three potential futures: (1) We maintain our current rules but apply FRCP standards to new forms of TAR gamesmanship; (2) we adopt new rules that are specifically tailored to the new forms of gamesmanship; or (3) we move toward a new system of discovery and machine learning that represents a qualitative and not just a quantitative change.

5.3.1 Vision 1: Same Rules, New Games?

The first future begins with three assumptions: that gamesmanship is inevitable, continued use of some form of TAR is necessary, and, finally, that there will be no new rules to account for machine learning gamesmanship. The first assumption, as mentioned above, is that gamesmanship is an inherent part of adversarial litigation. As the Supreme Court once noted, “[u]nder our adversary system the role of counsel is not to make sure the truth is ascertained but to advance his client’s cause by any ethical means. Within the limits of professional propriety, causing delay and sowing confusion not only are his right but may be his duty.”Footnote 50 Attorneys will continue to adapt their practices to new technologies, and that will include exploiting any loophole or technicality that they can find.

The second assumption is that TAR or something like it is inevitable. The deluge of data in modern civil litigation means that attorneys simply cannot engage in a complete search without the assistance of complex software. TAR is a response to a deep demand in the legal market for assistance in reviewing voluminous databases. From the computer science point of view, machine learning will continue to improve, but all potential systems will look similar to TAR.

Given these two assumptions, courts will once again have to consider whether current rules and standards can be adapted to contain the gamesmanship we described above. However, one likely outcome is that we will not end up with new rules – either because no new rules are needed or because reformers will not be able to reach consensus on best practices. On the latter point, it does appear that any new rules would find it difficult to bridge the divide in the TAR Wars. Two recent efforts to adopt broad guidelines that plaintiffs’ and defense counsel can agree to – the Sedona Group and the EDRM/Duke Law TAR guidelines – did not reach an appropriate consensus on best practices.

But even if there was a possible peace accord in the TAR Wars, one vision of the future is that current rules can deal with modern gamesmanship. Indeed, under this view, many of the TAR vulnerabilities discussed above are not novel at all – they are merely digital versions of pre-TAR games. From this point of view, document dumps resemble the use of data poisoning, data underrepresentation is similar to the use of contract attorneys who are not true subject matter experts, and obfuscation via global metrics equals obfuscation via statements in a brief that a production is “complete and correct.”

Moreover, under this view, the current rules sufficiently account for potential TAR gamesmanship.Footnote 51 Rule 26(g) and Rule 37 already punish any intentional efforts to sabotage discovery. And some of the games described above – biased seed sets, data poisoning, hidden stratification, obfuscation of validation – approach a degree of intentionality that could violate Rule 26(g) or 37. Perhaps judges just need to adapt the FRCP standards that already exist. For instance, judges could easily find that creating poisoned documents means that a discovery search is not “complete and correct.” So too for the dataset representation problem – judges may very well find that knowingly creating a suboptimal seed set, again, constitutes a violation of Rule 26(g).

Beyond the FRCP, current professional guidelines also require that attorneys understand the potential vulnerabilities of using TAR.Footnote 52 ABA rules impose a duty on attorneys to stay “abreast of changes in the law and its practice, including the benefits and risks associated with relevant technology.”Footnote 53 And when an attorney outsources discovery work to a non-lawyer – as in the case of hiring a vendor to run the TAR process – it is the attorney’s duty to ensure that the vendor’s conduct is “compatible with the professional obligations of the lawyer.”Footnote 54

An extreme version of this vision could be seen as too optimistic. Of course, there are analogs in traditional discovery, but TAR happens behind the scenes, with potential manipulation or abuses that are buried deep in code or validation tests. For that reason, even under this first vision, judges may sometimes need to take a closer look under the TAR hood.

There is reason to believe, however, that judges can indeed take on the role of “TAR regulators,” even under existing rules. Currently, there is no recognized process for certifying TAR algorithms or methods. Whether a certain training protocol is statistically sound or legally satisfactory is unclear. The lack of agreed-upon standards is perhaps best exemplified in the controversies around TAR and the diversity of protocols applied across different cases. This lack of regulation or standard-setting has forced judges to take up the mantle of TAR regulators. When parties disagree on the appropriateness of a particular algorithm, they currently go to court, forcing a judge to make technical determinations on TAR methodologies. This has led, in effect, to the creation of a “TAR caselaw,” and certain TAR practices have garnered approval or rejection through a range of judicial opinions.

Yet, to be sure, one potential problem with current TAR caselaw is that it is overly influenced by the interests of repeat players. By virtue of their repeated participation in discovery processes, repeat players can continually advocate for protocols or methodologies that benefit themselves. Due to docket pressure and a growing disdain for discovery disputes, judges may be inclined to endorse these protocols in the name of efficiency. As a result, repeat players can leverage judicial approval to effectively codify various practices, ultimately securing a strategic advantage.

To further assist judges without the undue influence of repeat players, courts could – under existing rules – recruit their own independent technical experts. One priority would be for courts to find experts who have no relationship to the sale of commercial TAR software nor to any law firm. Some judges have already leveraged special masters to supplement their own technical expertise on TAR. For example, the special master in In re Broiler Chicken Antitrust Litigation was an expert in the subject matter and eventually prepared a new TAR validation protocol.Footnote 55 Where disputes over TAR software involve the complex technicalities of machine learning, judges could also leverage Rule 706 of the Federal Rules of Evidence. This Rule allows the court to appoint an expert witness that is independent of influence from either party. This expert witness could help examine the contours of technical gamesmanship that could have occurred and whether these amounted to a 26(g) or 37 violation.

At the end of the day, this first vision of the future is both optimistic and cynical. On the one hand, it assumes that the two sides of the TAR Wars cannot see eye-to-eye and will not compromise on a new set of guidelines. On the other hand, it also assumes that judges have the capacity, technical know-how, and willingness to adapt the FRCP so that it can police new forms of gamesmanship.

5.3.2 Vision 2: New Rules, New Games?