1. Introduction

When planning new empirical studies, researchers face a variety of information from previous studies, including statistical quantities such as means, variances, or confidence intervals. However, this external information is mostly used qualitatively, e.g., to develop new theories, and rarely quantitatively, e.g., to estimate parameters. Using external information to estimate a parameter has one main advantage: some parameter values can be excluded or considered less likely than they would be without it, which can lead to more efficient estimators. Informed prior distributions, whose specification (or certain aspects of it) is based on external information, are well known in Bayesian statistics (Bernardo & Smith, 1994). The goal of using them must be clear. On the one hand, external information can facilitate the fitting or tuning of a model. On the other hand, it can make estimators more robust or efficient. This paper pursues the latter goal. In Bayesian statistics, this is referred to as statistical elicitation (Kadane & Wolfson, 1998), whose objective is to translate expert knowledge into a prior distribution. In this process, many psychological biases must be taken into account, such as judgment by representativeness, availability, anchoring and adjustment, or hindsight bias, as well as intentional misleading by experts. Note that the aim is not objectivity but a proper statistical representation of subjective knowledge (Garthwaite et al., 2005; Lele & Das, 2000). However, we believe that in applied psychological research the researcher is usually the one who selects the external information and is therefore susceptible to the same psychological biases, e.g., when deciding which studies to include.
Moreover, the difficulties in eliciting a (multivariate) prior distribution are well documented (Garthwaite et al., 2005, pp. 686–688). The method proposed in this paper makes elicitation simpler than in Bayesian statistics, since only moments need to be elicited. The elicitation of such summaries has been well studied for correlations, means, medians, and variances (Garthwaite et al., 2005). In Bayesian elicitation, many different prior distributions are compatible with the same externally given moments, e.g., with the same expected value or the same correlation. These priors lead to different posterior distributions and thus potentially to different results. This problem of prior sensitivity was addressed by Berger (1990) and led to work on robust Bayesian analysis (for an overview, see Insua & Ruggeri, 2000). However, the choice of the class of distributions over which the analysis is to be robust is somewhat arbitrary (Garthwaite et al., 2005, p. 695). In our framework, no restriction to a particular class of distributions is required, since it relies solely on moment information and a central limit theorem.
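A small numerical sketch makes the prior-sensitivity problem concrete. It assumes a single hypothetical observation $x=2$ from $N(\theta, 0.5^2)$ and compares two priors with the same mean (0) and the same variance (1): a standard normal distribution and a uniform distribution on $[-\sqrt{3},\sqrt{3}]$. The Python code computes both posterior means on a grid. All numbers are illustrative and are not taken from the studies cited above.

```python
import numpy as np

# Grid over the parameter theta; on a uniform grid the spacing cancels
# when normalizing, so plain sums suffice.
theta = np.linspace(-6.0, 6.0, 120_001)

# Two priors with identical first two moments: mean 0, variance 1.
prior_normal = np.exp(-0.5 * theta ** 2)
half_width = np.sqrt(3.0)  # Uniform(-a, a) has variance a**2 / 3 = 1
prior_uniform = (np.abs(theta) <= half_width).astype(float)

# Hypothetical data: one observation x = 2 from N(theta, 0.5**2).
x_obs, sigma = 2.0, 0.5
likelihood = np.exp(-0.5 * ((x_obs - theta) / sigma) ** 2)


def posterior_mean(prior):
    """Posterior mean of theta on the grid (unnormalized densities suffice)."""
    post = prior * likelihood
    return float(np.sum(theta * post) / np.sum(post))


post_normal = posterior_mean(prior_normal)    # conjugate result: 8/5
post_uniform = posterior_mean(prior_uniform)  # truncated at sqrt(3)
print(f"normal prior:  {post_normal:.3f}")
print(f"uniform prior: {post_uniform:.3f}")
```

With the normal prior, the posterior mean equals the conjugate result $8/5 = 1.6$. With the uniform prior, the posterior mean cannot exceed $\sqrt{3}\approx 1.73$ and is noticeably smaller. So two priors that agree on the elicited moments lead to different conclusions.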

Another important point is that external information is, in general, neither precise nor necessarily correct. Because nearly all external quantities are themselves estimates, they are at least subject to sampling variation. If the external information is incorrect (e.g., due to poor sampling or measurement protocols), using it can lead to biased conclusions that may even be worse than those obtained without it. To address this problem, we suggest specifying the external information as an interval instead of a point value. This lets researchers incorporate their uncertainty about the external moments into the analysis. Inserting external intervals into estimators leads to the imprecise-probability concept of feasible probability (F-probability), which is discussed in Sect. 4 (Augustin et al., 2014; Weichselberger, 2001). This approach offers a way to make elicitation more robust that differs from the classical Bayesian paradigm: intervals can reflect uncertainty about the moments, and the resulting inference remains coherent as long as the interval contains the true value. However, when eliciting intervals, researchers must guard against overconfidence bias, that is, the tendency to choose intervals that are too narrow to represent the actual uncertainty (Winman et al., 2004). The assumption that the interval contains the true value can be checked. A test of the compatibility of the external interval and the data is available and could serve as a pretest before applying the methods proposed here (Jann, 2023).
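The simplest case can illustrate what inserting an external interval into an estimator means. This is not the F-probability construction of Sect. 4. Assume that $E(x)$ is known externally only up to an interval $[\mu_{lo}, \mu_{hi}]$, and that $E(y)$ is estimated by the regression-type estimator $\bar y - b(\bar x - \mu_x)$, where $b$ is the sample slope of $y$ on $x$. This estimator is affine in $\mu_x$. Plugging in the interval therefore yields an interval of estimates whose bounds are the values at the two endpoints. The function names and the simulated data below are hypothetical.

```python
import numpy as np


def externally_informed_mean(y, x, mu_x):
    """Estimate E(y) using an external value mu_x for E(x).

    Regression (control-variate) form: ybar - b * (xbar - mu_x),
    where b is the sample slope of y on x.
    """
    b = np.cov(x, y, ddof=1)[0, 1] / np.var(x, ddof=1)
    return float(np.mean(y) - b * (np.mean(x) - mu_x))


def interval_estimate(y, x, mu_lo, mu_hi):
    """Range of estimates when mu_x varies over [mu_lo, mu_hi].

    The estimator is affine in mu_x, so the range is spanned by the
    values at the two endpoints.
    """
    ends = (externally_informed_mean(y, x, mu_lo),
            externally_informed_mean(y, x, mu_hi))
    return min(ends), max(ends)


# Hypothetical data on an IQ-type scale
rng = np.random.default_rng(1)
x = rng.normal(100, 15, size=50)
y = 100 + 0.4 * (x - 100) + rng.normal(0, 13.7, size=50)

lo, hi = interval_estimate(y, x, 98.0, 102.0)
print(f"interval of estimates: [{lo:.2f}, {hi:.2f}]")
print(f"point value at mu_x = 100: {externally_informed_mean(y, x, 100.0):.2f}")
```

The width of the resulting interval is $|b|(\mu_{hi}-\mu_{lo})$. A wider external interval therefore carries more of the external uncertainty into the estimate, and the carry-over is stronger when $x$ and $y$ are more strongly related.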

Inserting intervals into estimators resembles the construction of fuzzy numbers (Kwakernaak, 1978; Zadeh, 1965), for which generalizations of traditional statistical methods already exist, in particular for the special case of triangular fuzzy numbers (Buckley, 2004). The possibility distributions induced by triangular fuzzy numbers are special cases of imprecise probabilities and are constructed from only one distribution (Augustin et al., 2014, pp. 84–87). This is the key difference from F-probabilities. F-probabilities are constructed from a set of possible probability distributions, which can make the results more robust than constructions based on a single distribution. A further difference is that triangular fuzzy numbers are constructed by varying the confidence level of a confidence interval based on the estimator, whereas the external interval used in this paper is fixed. Moreover, we make no probabilistic statement about the values within that interval.

In the present study, we analyze the frequentist properties of estimators that use external information as prior information. This information can be expressed as moment conditions, so no complete distributions are required. To our knowledge, there is no general framework for robustly incorporating such quantitative external information into frequentist analysis. Such a framework could improve on classical inference procedures that are widely used in psychology, and our goal is to present one. Using these external moment conditions in addition to the moment conditions that identify the model parameters leads to an overidentified system of moment conditions. A natural framework for finding well-performing estimators for such “externally” overidentified systems is the Generalized Method of Moments (GMM; Hansen, 1982). This idea has already been used in the econometric literature. For example, Imbens and Lancaster (1994) combine micro- and macroeconomic data, and Hellerstein and Imbens (1999) construct weights for regression models based on auxiliary data. A different but related way to incorporate external moment information is the empirical likelihood approach (Owen, 1988). This technique is used frequently, for example, in finite population estimation (Zhong & Rao, 2000) and for externally informed generalized linear models (Chaudhuri et al., 2008). Both approaches share the property that using external information may increase the efficiency of an estimator and/or reduce its bias.
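The following Python sketch shows an externally overidentified system in the simplest regression setting. It assumes a linear model with the standard model moment conditions $E[\mathbf{x}(y-\mathbf{x}^T\boldsymbol{\beta})]=\mathbf{0}$ plus one external condition $E(y)=\mu_{\textrm{ex}}$, and it applies a textbook two-step GMM estimator with a centered weight matrix. The function name `gmm_external_mean` and all simulated values are hypothetical. The sketch is not necessarily identical to the estimators derived in Sect. 3.

```python
import numpy as np


def gmm_external_mean(X, y, mu_ex):
    """Two-step GMM for y = X beta + e with the external condition E(y) = mu_ex.

    The per-unit moments g_i(beta) = [x_i (y_i - x_i' beta); y_i - mu_ex]
    are affine in beta, so their sample mean is gbar(beta) = a - B beta.
    Returns the first-step (OLS) and second-step (efficient GMM) estimates.
    """
    n, p = X.shape
    a = np.concatenate([X.T @ y / n, [y.mean() - mu_ex]])
    B = np.vstack([X.T @ X / n, np.zeros((1, p))])  # external row has no beta

    def minimize(W):
        # Closed-form minimizer of gbar' W gbar for affine moments
        return np.linalg.solve(B.T @ W @ B, B.T @ W @ a)

    # Step 1: identity weight; the zero row of B drops out, which gives OLS.
    beta1 = minimize(np.eye(p + 1))
    # Step 2: weight by the inverse centered covariance of the moments at beta1.
    resid = y - X @ beta1
    G = np.column_stack([X * resid[:, None], y - mu_ex])
    S = np.cov(G, rowvar=False, ddof=0)
    beta2 = minimize(np.linalg.inv(S))
    return beta1, beta2


# Usage with hypothetical data: x2 is a standardized covariate, E(y) = 100.
rng = np.random.default_rng(0)
n = 200
x2 = rng.normal(0, 1, n)
X = np.column_stack([np.ones(n), x2])
y = 100 + 6 * x2 + rng.normal(0, 12, n)
beta_ols, beta_gmm = gmm_external_mean(X, y, mu_ex=100.0)
print("OLS:", beta_ols, " externally informed GMM:", beta_gmm)
```

The external row of $\mathbf{B}$ is zero, so the second step shifts the OLS estimate by a term proportional to $\bar y-\mu_{\textrm{ex}}$, weighted by the estimated covariance between the model moments and the external moment. If that covariance is zero, the estimate does not change. This mirrors the role of the correlation between external and model moment conditions.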

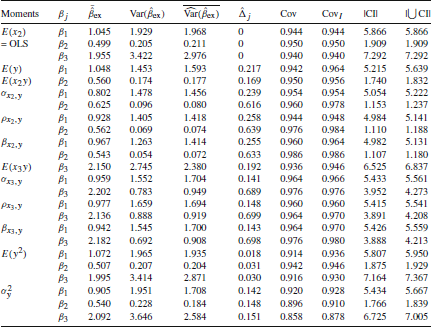

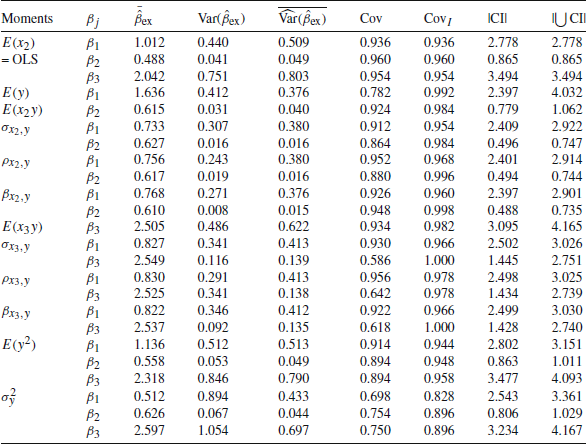

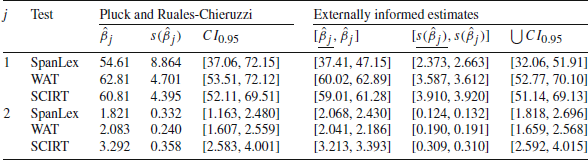

In Sect. 3, we show that using external moment conditions always reduces the variance, provided that they are correlated with the moment conditions of the model and that the covariance matrix of all moment conditions is positive definite. GMM allows the estimation of a large class of models, and many statistical measures such as proportions, means, variances, and covariances are statistical moments. The range of possible applications is therefore large, although such applications are still rare in psychological research. For a multiple linear model, we derive the estimators analytically in Sect. 3. Using imprecise probabilities increases the overall variation of the estimator and also diminishes the variance reduction. As we will demonstrate, however, a variance reduction remains possible while the robustness of the estimation increases. The proposed methods thus allow more precise and robust inferences, which is particularly relevant in small samples. Sect. 5 presents a simulation study that illustrates the small-sample performance of externally informed multiple linear models. Sect. 6 presents an application to a real data set that analyzes the relation between premorbid (general) intelligence and performance in lexical tasks (Pluck & Ruales-Chieruzzi, 2021).
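A small Monte Carlo sketch can check the variance-reduction statement in the simplest setting. It estimates $E(y)$ with and without an exactly known external mean $E(x)=0$, where $(x,y)$ is bivariate standard normal with correlation $\rho$. Under these assumptions, the asymptotic variance ratio of the externally informed estimator $\bar y - b\bar x$ to $\bar y$ is $1-\rho^2$. The sample size, the number of replications, and the correlations are arbitrary illustrative choices. The value $\rho=0.41$ merely echoes the meta-analytic correlation cited in Sect. 2.

```python
import numpy as np


def simulate_variances(rho, n=30, reps=20_000, seed=0):
    """Monte Carlo variances of ybar and of the externally informed mean of y.

    (x, y) is bivariate standard normal with correlation rho. The external
    information E(x) = 0 is assumed to be known exactly.
    """
    rng = np.random.default_rng(seed)
    cov = np.array([[1.0, rho], [rho, 1.0]])
    draws = rng.multivariate_normal([0.0, 0.0], cov, size=(reps, n))
    x, y = draws[..., 0], draws[..., 1]  # each of shape (reps, n)
    xbar, ybar = x.mean(axis=1), y.mean(axis=1)
    xc, yc = x - xbar[:, None], y - ybar[:, None]
    b = (xc * yc).sum(axis=1) / (xc ** 2).sum(axis=1)  # slope per replicate
    informed = ybar - b * (xbar - 0.0)  # uses the external value E(x) = 0
    return ybar.var(), informed.var()


for rho in (0.0, 0.41, 0.8):
    v_plain, v_informed = simulate_variances(rho)
    print(f"rho = {rho:.2f}: variance ratio {v_informed / v_plain:.3f}, "
          f"asymptotic 1 - rho^2 = {1 - rho ** 2:.3f}")
```

For correlated variables, the ratio should be close to $1-\rho^2$. For $\rho=0$ it is typically slightly above 1, because estimating $b$ costs some precision when $n=30$. This is consistent with the gain depending on the correlation between the external and the model moment conditions.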

2. Externally Informed Models

In a first step, we assume that precise external information is available. This assumption will be relaxed in Sect. 4. Throughout, all variables are considered random unless stated otherwise. For notational clarity, scalar random variables are written in lowercase italic letters, vectors and vector-valued functions in lowercase bold letters, and matrices in uppercase bold letters.

The basic concepts in the following section are presented for the class of general regression models. Because linear models are used so frequently, we illustrate these concepts with the family of linear models. Note that ANOVA models, for example, are special cases of this family, albeit with fixed factors instead of random covariates. Nevertheless, the results derived in this paper carry over to these models.

Let $\mathbf{z}=(z_1,\dots,z_p)^T$ be a real-valued random vector, and let $\mathbf{z}_i$, $i=1,\dots,n$, be i.i.d. random vectors distributed like $\mathbf{z}$, representing the data. Suppose we want to fit a regression model with fixed parameter $\boldsymbol{\theta}\in\mathbb{R}^p$ to this data set. The adopted model reflects the aspects of interest of the true data-generating process, and $\boldsymbol{\theta}_0$ is the true parameter value. In linear regression models, the parameter of scientific interest is usually the parameter of the mean structure, denoted as $\boldsymbol{\beta}=(\beta_1,\dots,\beta_p)^T$ with true value $\boldsymbol{\beta}_0$. The notation $\boldsymbol{\beta}$ will only be used for linear regression models, while $\boldsymbol{\theta}$ denotes the regression coefficients in general regression models. The random vector $\mathbf{z}$ is given by $\mathbf{z}=(\mathbf{x}^T,y)^T$, with random explanatory variables $\mathbf{x}=(x_1,\dots,x_p)^T$ and dependent variable $y$. Accordingly, the unit-specific i.i.d. random vectors $\mathbf{z}_i$ are written as $\mathbf{z}_i=(\mathbf{x}_i^T,y_i)^T$ for $i=1,\dots,n$. Hence, the random $(n\times p)$ design matrix is $\mathbf{X}=(\mathbf{x}_1,\dots,\mathbf{x}_n)^T$, and we write $\mathbf{y}=(y_1,\dots,y_n)^T$.

The multiple linear model can now be written as

$$\mathbf{y}=\mathbf{X}\boldsymbol{\beta}_0+\boldsymbol{\epsilon}$$

with random error terms $\boldsymbol{\epsilon}=(\epsilon_1,\dots,\epsilon_n)^T$. As an illustration, suppose we want to investigate the effect of the explanatory variables fluid intelligence and depression on the dependent variable mathematics skills. We could design a study in which fluid intelligence and math skills are measured with Cattell's fluid intelligence test, CFT 20-R for short ($x_2$), and the number sequence test ZF-R ($y$), respectively (Weiss, 2006). Depression could be measured as a binary variable indicating whether a person has a depression-related diagnosis ($x_3$). The model could then be a multiple linear regression of the ZF-R score on the depression indicator and the CFT 20-R score for fluid intelligence. To include the intercept, $x_1$ is a degenerate variable with value 1.
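The following Python sketch makes the notation concrete. It builds the design matrix $\mathbf{X}$ for the running example from simulated data and computes the least-squares estimate as the solution of the sample moment conditions $\frac{1}{n}\sum_i \mathbf{x}_i(y_i-\mathbf{x}_i^T\boldsymbol{\beta})=\mathbf{0}$. These are the standard moment conditions of the linear model and are assumed here for illustration. The coefficients, the sample size, and the distributions are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(42)
n = 100
# Hypothetical data for the running example; all numbers are illustrative.
x2 = rng.normal(100, 15, n)                 # CFT 20-R score on an IQ-type scale
x3 = rng.binomial(1, 0.157, n)              # depression indicator (0/1)
beta_true = np.array([40.0, 0.6, -3.0])     # hypothetical beta_0
X = np.column_stack([np.ones(n), x2, x3])   # first column: x1 = 1 (intercept)
y = X @ beta_true + rng.normal(0, 12, n)    # y = X beta_0 + epsilon

# Sample analogue of E[x (y - x' beta)] = 0, i.e. the normal equations
# X' X beta = X' y.
beta_hat = np.linalg.solve(X.T @ X, X.T @ y)
print(beta_hat)
```

With only these $p$ moment conditions for $p$ parameters, the system is exactly identified and the estimate is ordinary least squares. External moment conditions add further equations to this system.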

In addition to the observed data and the assumptions justifying the model, external information such as means, correlations, or proportions is often available, e.g., from official statistics, meta-analyses, or existing individual studies. In our applied example, there are various German norm groups for the CFT 20-R and the ZF-R, even for different ages (Weiss, 2006). Hence, the results can always be transformed into scores with known expected value and variance. For example, the CFT 20-R score can be transformed into an IQ score based on a recent calibration sample from 2019, reported in the test manual (Weiss, 2019). Regarding the relation between fluid intelligence and math skills, a recent meta-analysis based on more than 370,000 participants in 680 studies from multiple countries suggests a correlation of $r=0.41$ between the two variables (Peng et al., 2019). In addition, Steffen et al. (2020) analyzed data covering 87% of the German population aged at least 15 years. They report a prevalence of depression of 15.7% in 2017, where depression is defined as an F32, F33, or F34.1 diagnosis following the ICD-10-GM manual.

Let us assume that these values can be interpreted as true population values; this assumption will be relaxed later. Note that they have the form of statistical moments. For example, the observed depression prevalence is assumed to equal the expected value of the binary depression indicator (first moment). The mean (now considered as expected value) and the variance of each test score are set equal to the first moment and the second central moment, respectively, of the random variables CFT 20-R score and ZF-R score. Finally, the correlation is assumed to equal the mixed moment of the standardized CFT 20-R and ZF-R scores. Taking $q$ to be the number of known external moments, we state

Definition 1

Let $M$ be a statistical model. Further, let $\mathbf{u}$ be a $(q\times 1)$-vector of statistical moment expressions and $\boldsymbol{\mu}_{\textrm{ex}}$ the corresponding $(q\times 1)$-vector of externally determined values for the statistical moments in $\mathbf{u}$. Then the model combining $M$ and the conditions $\mathbf{u}=\boldsymbol{\mu}_{\textrm{ex}}$ is called an externally informed model.

To illustrate the definition, we use the applied example from above, in which the model M is a multiple linear regression model. Interpreting the norms for the dependent variable ZF-R from the calibration sample as population values, external knowledge about the corresponding moments, for example the means of ZF-R, is available. Let us assume that ZF-R is transformed to the IQ scale. Then, if $\mathbf{u} = E(y)$ and $\boldsymbol{\mu}_{\textrm{ex}} = 100$, we get $E(\mathbf{y}) = 100 \times \mathbf{1}_n = E(\mathbf{X})\boldsymbol{\beta}_0$, where $\mathbf{1}_n$ is an $(n \times 1)$-vector of ones. Thus, $\mathbf{u} = \boldsymbol{\mu}_{\textrm{ex}}$ imposes conditions on $\boldsymbol{\beta}$.
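A small numerical sketch may help to see why an external mean constrains the regression coefficients. It uses simulated data rather than the ZF-R norms; the sample size, coefficients, and predictor distribution are hypothetical assumptions. With an intercept, the population restriction $E(\mathbf{x})^T\boldsymbol{\beta}_0 = 100$ is a single linear condition on $\boldsymbol{\beta}$, and its sample analogue is the familiar fact that OLS with an intercept passes through the point of means.

```python
import numpy as np

# Hypothetical simulated data on an IQ-type scale (not the ZF-R calibration sample).
rng = np.random.default_rng(1)
n = 500
x1 = rng.normal(0.0, 1.0, n)                # assumed standardized predictor, E(x1) = 0
X = np.column_stack([np.ones(n), x1])       # design matrix with an intercept column
beta_true = np.array([100.0, 5.0])          # assumed true coefficients
y = X @ beta_true + rng.normal(0.0, 15.0, n)

# Population version: E(y) = E(x)^T beta_0, so mu_ex = 100 fixes one
# linear combination of the coefficients.
mean_x = np.array([1.0, 0.0])               # assumed E(x) = (1, E(x1))
implied_mean_y = mean_x @ beta_true         # 100.0 here

# Sample analogue: with an intercept, OLS reproduces the sample mean,
# i.e. xbar^T beta_hat = ybar.
beta_ols, *_ = np.linalg.lstsq(X, y, rcond=None)
passes_through_means = np.isclose(X.mean(axis=0) @ beta_ols, y.mean())
print(implied_mean_y, passes_through_means)
```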

3. Estimation and Properties of Externally Informed Models

3.1. Generalized Method of Moments with External Moments

The GMM approach (Hansen, 1982) allows one to estimate (general) regression models and to incorporate external moments into the estimation (Imbens & Lancaster, 1994). To estimate the parameter of a general regression model, a “model moment function” $\mathbf{m}(\mathbf{z},\boldsymbol{\theta})$ must be given that satisfies the conditions $E[\mathbf{m}(\mathbf{z},\boldsymbol{\theta})] = \mathbf{0}$ only for the true parameter value $\boldsymbol{\theta}_0$. The corresponding “sample moment function” for $\mathbf{z}_i$ will be denoted as $\mathbf{m}(\mathbf{z}_i,\boldsymbol{\theta})$. In the case of the linear regression model from Sect. 2, the model moment function corresponding to the method of ordinary least squares (OLS) is $\mathbf{m}(\mathbf{z},\boldsymbol{\beta}) = \mathbf{x}(y-\mathbf{x}^T\boldsymbol{\beta})$ (Cameron & Trivedi, 2005, p. 172). If the model is correctly specified, then $E[\mathbf{m}(\mathbf{z},\boldsymbol{\beta}_0)] = E[\mathbf{x}(y-\mathbf{x}^T\boldsymbol{\beta}_0)] = \mathbf{0}$ holds for the true parameter value $\boldsymbol{\beta}_0$. Replacing these population model moment conditions by the corresponding sample model moment conditions,

$$\frac{1}{n}\sum_{i=1}^n \mathbf{x}_i(y_i-\mathbf{x}_i^T\boldsymbol{\beta}) = \mathbf{0},$$

and solving these estimating equations for $\boldsymbol{\beta}$ leads to an estimator $\hat{\boldsymbol{\beta}}$ for $\boldsymbol{\beta}_0$. The above conditions are identical to the estimating equations resulting from the least-squares method or, if normality of the errors is assumed, the maximum likelihood method. Furthermore, the general classes of M- and Z-estimators can be written using estimating equations of this moment form. This leads to broad applicability, since these classes include, for example, the median and other quantiles (Vaart, 1998).
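As a sketch of the estimating-equation view, the following Python code (assuming NumPy and simulated data; the function names are hypothetical) evaluates the sample moment function $\frac{1}{n}\sum_i \mathbf{x}_i(y_i - \mathbf{x}_i^T\boldsymbol{\beta})$ and solves the resulting equations, which reduce to the normal equations $\mathbf{X}^T\mathbf{X}\boldsymbol{\beta} = \mathbf{X}^T\mathbf{y}$.

```python
import numpy as np

def ols_sample_moments(X, y, beta):
    """Sample moment function (1/n) * sum_i x_i (y_i - x_i^T beta)."""
    return X.T @ (y - X @ beta) / len(y)

def solve_ols_moments(X, y):
    """Solve the just-identified estimating equations X^T (y - X beta) = 0."""
    return np.linalg.solve(X.T @ X, X.T @ y)

# Hypothetical simulated data for a simple regression with intercept.
rng = np.random.default_rng(0)
n = 200
X = np.column_stack([np.ones(n), rng.normal(size=n)])
y = X @ np.array([100.0, 5.0]) + rng.normal(scale=15.0, size=n)

beta_hat = solve_ols_moments(X, y)
# The sample moments vanish at the solution (up to rounding) ...
moments_at_solution = ols_sample_moments(X, y, beta_hat)
# ... and the estimate coincides with a generic least-squares fit.
beta_lstsq = np.linalg.lstsq(X, y, rcond=None)[0]
print(np.allclose(moments_at_solution, 0.0), np.allclose(beta_hat, beta_lstsq))
```

Because the number of equations equals the number of coefficients here, the system is just-identified and can be solved exactly; this changes once external moments are added.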

The possibly vector-valued “external moment function” will be denoted as $\mathbf{h}(\mathbf{z}) = \mathbf{u}(\mathbf{z}) - \boldsymbol{\mu}_{\textrm{ex}}$, where the functional form of $\mathbf{u}(\mathbf{z})$ depends on the external information included in the model. We assume that $\boldsymbol{\mu}_{\textrm{ex}} = E[\mathbf{u}(\mathbf{z})]$, so that $E[\mathbf{h}(\mathbf{z})] = \mathbf{0}$. If, for example, the expected value of $y$ is known to be $E(y) = 100$, then $u(z) = y$, $\mu_{\textrm{ex}} = 100$ and $h(z) = y - 100$. The corresponding sample moment condition is $0 = \frac{1}{n} \sum_{i=1}^n (y_i - 100)$ (Imbens & Lancaster, 1994).
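The external moment function can be coded directly. The sketch below (hypothetical function name and toy scores, NumPy assumed) implements $h(z) = y - 100$ from the example and, as an assumed extension to illustrate the vector-valued case, optionally stacks a second external moment for the variance using the IQ-scale value $15^2 = 225$; the example above uses only the mean.

```python
import numpy as np

def external_moments(y, mean_ex=100.0, var_ex=None):
    """Return h(z_i) = u(z_i) - mu_ex for each observation (one row per i).

    With var_ex=None, u(z) = y (external mean only, q = 1).
    With var_ex given, u(z) = (y, (y - mean_ex)^2) adds an assumed second
    external moment, so h is vector-valued (q = 2).
    """
    y = np.asarray(y, dtype=float)
    if var_ex is None:
        return (y - mean_ex)[:, None]
    u = np.column_stack([y, (y - mean_ex) ** 2])
    return u - np.array([mean_ex, var_ex])

scores = np.array([95.0, 110.0, 102.0, 89.0, 104.0])  # hypothetical test scores
h_mean = external_moments(scores)
# Sample moment condition 0 = (1/n) sum_i (y_i - 100); it holds exactly
# here only because these toy scores happen to average 100.
print(h_mean.mean(axis=0))
h_both = external_moments(scores, var_ex=225.0)
print(h_both.shape)  # (5, 2)
```

Note that $\mathbf{h}$ does not depend on the regression parameters; its usefulness comes from being combined with the model moments.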

To simplify the presentation, we define the combined moment function vector in general regression models as $\mathbf{g}(\mathbf{z},\boldsymbol{\theta}) = [\mathbf{m}(\mathbf{z},\boldsymbol{\theta})^T, \mathbf{h}(\mathbf{z})^T]^T$ in what follows and assume that $E[\frac{1}{n}\sum_{i=1}^n \mathbf{g}(\mathbf{z}_i,\boldsymbol{\theta}_0)] = \mathbf{0}$ holds. Note that the number of moment conditions exceeds the number of parameters to be estimated, i.e., the externally informed model is overidentified. This means that, in general, there is no estimator $\hat{\boldsymbol{\theta}}$ that solves the corresponding sample moment conditions $\frac{1}{n}\sum_{i=1}^n \mathbf{g}(\mathbf{z}_i,\boldsymbol{\theta}) = \mathbf{0}$. To deal with this overidentification, we use the GMM approach (Hansen, 1982), which finds an estimator that is as “close” as possible to a solution of the sample moment conditions. This is done by minimizing a quadratic form in the sample moment functions, defined by a chosen symmetric, positive definite weighting matrix $\mathbf{W}$ (equivalently, by maximizing its negative). The efficiency of the estimator depends on $\mathbf{W}$, which can be chosen to maximize the asymptotic efficiency of the estimator in the class of all GMM estimators based on the same sample moment conditions (Hansen, 1982). This optimal weighting matrix is $\mathbf{W} = \boldsymbol{\Omega}^{-1}$, where $\boldsymbol{\Omega} = E[\mathbf{g}(\mathbf{z},\boldsymbol{\theta}_0)\mathbf{g}(\mathbf{z},\boldsymbol{\theta}_0)^T]$. However, this optimal $\mathbf{W}$ is unknown in practice and must be estimated by a consistent estimator $\hat{\mathbf{W}}$.
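For the linear regression example with the external mean $E(y) = 100$, the combined moment vector and a plug-in estimate of the optimal weighting matrix can be sketched as follows. This assumes NumPy, simulated data, and OLS as a preliminary consistent estimate of $\boldsymbol{\beta}$; the function names are hypothetical. $\hat{\boldsymbol{\Omega}}$ is the sample analogue of $E[\mathbf{g}\mathbf{g}^T]$ evaluated at the preliminary estimate, and $\hat{\mathbf{W}} = \hat{\boldsymbol{\Omega}}^{-1}$.

```python
import numpy as np

def combined_moments(X, y, beta, mu_ex=100.0):
    """Rows are g(z_i, beta)^T = [m(z_i, beta)^T, h(z_i)^T] for the linear model."""
    m = X * (y - X @ beta)[:, None]   # n x p model moments x_i (y_i - x_i^T beta)
    h = (y - mu_ex)[:, None]          # n x 1 external moment y_i - mu_ex
    return np.hstack([m, h])          # n x K with K = p + 1 > p: overidentified

def estimate_weight_matrix(g):
    """W_hat = Omega_hat^{-1} with Omega_hat = (1/n) sum_i g_i g_i^T."""
    omega_hat = g.T @ g / g.shape[0]
    return np.linalg.inv(omega_hat)

# Hypothetical simulated data with E(y) = 100.
rng = np.random.default_rng(2)
n = 300
X = np.column_stack([np.ones(n), rng.normal(size=n)])
y = X @ np.array([100.0, 5.0]) + rng.normal(scale=15.0, size=n)

beta_prelim = np.linalg.solve(X.T @ X, X.T @ y)   # consistent first-step estimate
g = combined_moments(X, y, beta_prelim)
W_hat = estimate_weight_matrix(g)
print(g.shape, np.allclose(W_hat, W_hat.T), np.all(np.linalg.eigvalsh(W_hat) > 0))
```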

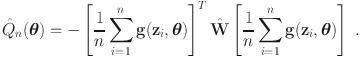

Definition 2

(Newey & McFadden, 1994, p. 2116) Let $\mathbf{g}(\mathbf{z},\boldsymbol{\theta})$ be a vector-valued function with values in $\mathbb{R}^K$ that meets the moment conditions $E[\mathbf{g}(\mathbf{z},\boldsymbol{\theta}_0)] = \mathbf{0}$. Further, let $\hat{\mathbf{W}} \in \mathbb{R}^{K,K}$ be a positive-semidefinite, possibly random matrix such that $(\mathbf{r}^T \hat{\mathbf{W}}\mathbf{r})^{1/2}$ is a measure of distance from $\mathbf{r}$ to $\mathbf{0}$ for all $\mathbf{r} \in \mathbb{R}^K$. Then the GMM estimator $\hat{\boldsymbol{\theta}}_{\textrm{ex}}$ is defined as the value of $\boldsymbol{\theta}$ that maximizes the following function:

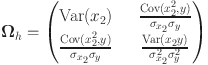
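The following is a minimal sketch of how this definition can be applied. Several parts are assumptions that do not come from the text above:

- The displayed function is taken to be the usual quadratic GMM criterion $-\bar{\mathbf{g}}(\boldsymbol{\theta})^T \hat{\mathbf{W}} \bar{\mathbf{g}}(\boldsymbol{\theta})$, where $\bar{\mathbf{g}}$ is the sample mean of the moment functions.
- The example is a hypothetical scalar toy model. Here $\theta = E[y]$ is estimated with the model moment $m(\mathbf{z}, \theta) = y - \theta$ and an external moment $h(\mathbf{z}) = x - \mu_x$. The mean $\mu_x$ of a covariate $x$ is treated as known from previous studies.
- $\hat{\mathbf{W}}$ is the inverse sample covariance of the moments at a first-step estimate (two-step GMM).

The function name and the simulated data are illustrative only.

```python
import random
import statistics


def gmm_mean_with_external_mean(y, x, mu_x):
    """Two-step GMM estimate of theta = E[y] (hypothetical toy model).

    Moments: m(z, theta) = y - theta (model) and h(z) = x - mu_x (external),
    where mu_x is treated as a known external mean of the covariate x.
    """
    n = len(y)
    ybar = statistics.fmean(y)
    xbar = statistics.fmean(x)
    # Step 1: the model moment alone is exactly identified, giving theta_1 = ybar.
    theta1 = ybar
    # Moment contributions g_i = [y_i - theta_1, x_i - mu_x] at the first step.
    g1 = [yi - theta1 for yi in y]
    g2 = [xi - mu_x for xi in x]
    # Omega_hat = (1/n) * sum_i g_i g_i^T, the estimated second-moment matrix.
    o11 = sum(a * a for a in g1) / n
    o12 = sum(a * b for a, b in zip(g1, g2)) / n
    o22 = sum(b * b for b in g2) / n
    # Step 2 weighting matrix W_hat = Omega_hat^{-1} (explicit 2x2 inverse).
    det = o11 * o22 - o12 * o12
    w11 = o22 / det
    w12 = -o12 / det
    # Maximizing -gbar(theta)^T W_hat gbar(theta), gbar = [ybar - theta, xbar - mu_x],
    # gives theta = ybar + (w12 / w11) * (xbar - mu_x) because both moments are linear.
    return ybar + (w12 / w11) * (xbar - mu_x)


# Usage with simulated data (illustrative only); E[x] = 0 plays the external information.
random.seed(1)
x = [random.gauss(0.0, 1.0) for _ in range(500)]
y = [2.0 + xi + random.gauss(0.0, 1.0) for xi in x]
print(gmm_mean_with_external_mean(y, x, mu_x=0.0))
```

Both moments are linear in $\theta$, so the maximizer has the closed form $\bar{y} - (\hat{\omega}_{12}/\hat{\omega}_{22})(\bar{x} - \mu_x)$. This is a regression-type adjustment of the sample mean toward the external information. For nonlinear models, a numerical optimizer would be needed instead of a closed form.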

The GMM approach provides consistent and asymptotically normally distributed estimators under mild regularity conditions (Newey & McFadden, 1994, p. 2148). It covers a wide range of models, such as linear or nonlinear, cross-sectional or longitudinal regression models.

Note that we have not assumed that $\hat{\mathbf{W}}$ is invertible. We will mainly derive asymptotic expressions based on $\mathbf{W}$, for which the invertibility of $\hat{\mathbf{W}}$ is not necessary. However, deriving the estimators themselves requires additional assumptions about invertibility, which we explain in Sect. 3.2.

Let $\mathbf{G} = E[\nabla_{\boldsymbol{\theta}} \mathbf{g}(\mathbf{z}, \boldsymbol{\theta}_0)]$, which is a fixed matrix, and let $\mathbf{W}$ be the optimal weighting matrix. Then the asymptotic variance of $\hat{\boldsymbol{\theta}}_{\textrm{ex}}$ is $\text{Var}(\hat{\boldsymbol{\theta}}_{\textrm{ex}}) = \frac{1}{n} (\mathbf{G}^T \mathbf{W} \mathbf{G})^{-1}$.

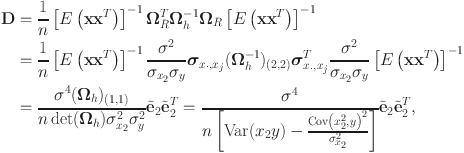
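As an illustration, the formula $\frac{1}{n}(\mathbf{G}^T \mathbf{W} \mathbf{G})^{-1}$ can be evaluated numerically in a hypothetical scalar toy model. This sketch compares the model-only estimator with the estimator that also uses external information. It assumes the following:

- The parameter is $\theta = E[y]$, with model moment $m(\mathbf{z}, \theta) = y - \theta$.
- The external moment is $h(\mathbf{z}) = x - \mu_x$, with $\mu_x$ known. Because $h$ does not depend on $\theta$, its row of $\mathbf{G}$ is zero.
- The covariance matrix $\boldsymbol{\Omega}$ of $\mathbf{g}(\mathbf{z}, \boldsymbol{\theta}_0)$ is known, and $\mathbf{W} = \boldsymbol{\Omega}^{-1}$.

The numerical values and helper names are illustrative, not taken from the paper.

```python
def inverse_2x2(a):
    """Inverse of a 2x2 matrix given as nested lists."""
    det = a[0][0] * a[1][1] - a[0][1] * a[1][0]
    return [[a[1][1] / det, -a[0][1] / det],
            [-a[1][0] / det, a[0][0] / det]]


def gmm_asymptotic_variance_scalar(G, W, n):
    """(1/n) * (G^T W G)^{-1} for a scalar parameter.

    G is the length-K vector E[d g / d theta]; W is the K x K weighting matrix.
    """
    K = len(G)
    gwg = sum(G[j] * W[j][k] * G[k] for j in range(K) for k in range(K))
    return 1.0 / (n * gwg)


# Hypothetical values: Var(y) = Var(x) = 1, Cov(y, x) = 0.6, n = 100.
omega = [[1.0, 0.6], [0.6, 1.0]]
n = 100

# Model moment only: g = y - theta, so G = [-1] and W = [1 / Var(y)].
var_model = gmm_asymptotic_variance_scalar([-1.0], [[1.0 / omega[0][0]]], n)

# Model moment plus external moment h(z) = x - mu_x: G = [-1, 0], W = Omega^{-1}.
var_ex = gmm_asymptotic_variance_scalar([-1.0, 0.0], inverse_2x2(omega), n)

print(var_model, var_ex)
```

In this toy case, the ratio of the two variances equals $1 - \rho^2$, where $\rho$ is the correlation between $m$ and $h$. The gain therefore disappears when the external moment is uncorrelated with the model moment. Seeing this, however, requires evaluating the formula twice and comparing the results.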

This variance expression does not reveal whether, or by how much, the use of external information makes the GMM-estimator more efficient. Hence, the following corollary explicitly shows the effect of the external information on the variance of $\hat{\boldsymbol{\theta}}_{\textrm{ex}}$.

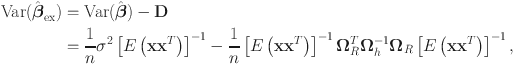

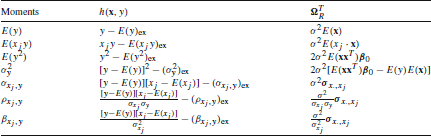

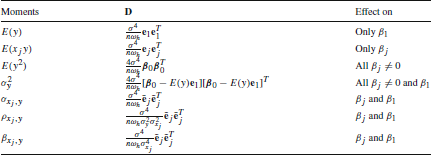

Corollary 1

Assume $\hat{\boldsymbol{\theta}}_M$ is the GMM-estimator based on the model estimating equations alone (ignoring the external moments), and that $\mathbf{m}(\mathbf{z}, \boldsymbol{\theta})$ and $\boldsymbol{\theta}$ have the same dimension. Using the prerequisite $\mathbf{g}(\mathbf{z}, \boldsymbol{\theta}) = [\mathbf{m}(\mathbf{z}, \boldsymbol{\theta})^T, \mathbf{h}(\mathbf{z})^T]^T$