The invention of the World Wide Web by Sir Tim Berners-Lee in 1989, and the widespread adoption of internet communication since then, have opened up new methods for the sampling of research participants and the collection of qualitative and quantitative data from respondents. This development has significant implications for the expense and work involved in conducting epidemiological research, particularly when compared with the methods previously relied on. Internet sites and email communication have created opportunities for researchers, even those on limited budgets, to conduct large-scale studies while reducing postal and paper costs, data transcription and the biases resulting from researcher presence. These advantages have resulted in the rapid adoption of internet-mediated research (IMR) techniques. However, there is a risk that their unthinking application could compromise the quality of studies by introducing biases in sampling or in data collection inherent to the internet modality. There is also the concern that, although the relative anonymity of internet methods might enhance disclosure and minimise social desirability effects, this anonymity also creates its own problems, including the inability to monitor respondent distress.

This article focuses on cross-sectional studies, because that is the approach used most frequently by clinical trainees, and it is intended to inform such practice. By outlining the applications of IMR in a mental health context it sets out a framework by which researchers might attempt to overcome the disadvantages of IMR when conducting their own cross-sectional studies or appraising those of others. Despite the role of bias and the limits to generalisability, there are many benefits to be gained from an internet-mediated approach to psychiatric epidemiological research, not least an enriched understanding of human behaviour, mental distress and psychiatric morbidity.

What is internet-mediated research?

Most broadly, ‘internet-mediated research’ describes the practice of gathering research data via the internet directly from research participants. Generally, participants can respond to a questionnaire or task at a time and place of their choice, provided that they have access to the internet. The term can apply both to the sampling of research participants and to the collection of data.

Online sampling methods

Traditionally, sampling methods for community-based epidemiological research have included use of the telephone via random digit dialling, postal surveys or personal visits to randomly selected private households, and directly approaching people on the street. Sampling methods for research in patient populations have tended to use the approach of telephone, letter or direct contact with people selected from patient databases. Traditional methods of data collection in both community-based and patient surveys have included interviews, either face-to-face or via telephone, and the completion of postal survey questionnaires. Newer approaches include text messages, for example as consumer satisfaction surveys linked to service use. The advent of the internet means that sampling can now be carried out using email distribution lists, snowballing via social networking sites, or website advertisements.

Online data collection

The internet also permits a variety of methods of data collection. Online surveys derive from the early role of computers in face-to-face interviews, where interviewees would use computer-assisted self-interviewing (CASI) for sensitive aspects of the data collection (de Leeuw 2005). After a set of verbal questions on a relatively neutral topic, such as level of social support, the interviewee would be passed a computer and asked to enter responses to questions on a topic such as sexual history. Typically, the interviewer would leave the room, albeit remaining accessible for queries, returning later to resume the interview.

This diminishment of researcher presence is now extended through the use of online questionnaires, which collect quantitative and qualitative data using website survey software, the best known being SurveyMonkey. Email can be used when briefer responses are required. Some polls use simple web-based tick-boxes, as in the polls run weekly on the BMJ homepage (www.bmj.com/theBMJ) and monthly in the Royal College of Psychiatrists’ eNewsletters (www.rcpsych.ac.uk/usefulresources.aspx). Interviews are possible using videoconferencing software such as Skype or FaceTime. Focus group discussions can be facilitated using an online chat forum.

Application of internet-mediated health research

In health research the internet has been used most frequently for conducting cross-sectional studies, but it can also be applied to other observational studies, such as case–control studies, cohort studies and even ecological studies. One example of such an ecological study relies on the dominance of specific search engines, using Google Trends to explore the relationship between frequency of search terms relating to suicide method and national suicide rates (McCarthy 2010). Valuable perspectives have also been gained by harvesting research information from existing internet sites. Linguistic analysis of text harvested from Facebook content has been conducted to develop a depression screening tool (Park 2014). Simulating a web search is a useful means of exploring patients’ exposure to information on health matters or on suicide methods (Biddle 2008), but search result customisation must be disabled for such simulations to avoid past search activity influencing the results.

Further applications of IMR lie in studies of human cognition and behaviour, using perceptual or cognitive tasks embedded in online programs. Methods of assessment include scores on neuropsychological tests or measurement of response times. Similarly, the collection of outcomes for interventional studies is possible, measuring temporal changes in standardised instruments such as the Beck Depression Inventory or the Addenbrooke’s Cognitive Examination, or measurements of performance on tasks such as time taken to perform a trail-making test. Finally, IMR can be applied to the evaluation of IMR methodology, for example by comparing the time taken to complete each component of a task under differing conditions, or by varying participants’ knowledge of a researcher’s gender or ethnicity to investigate the impact on responses.

Specific sampling considerations in IMR cross-sectional surveys

When designing the sampling strategy for an internet-mediated cross-sectional study, the distinction between closed and open surveys is critical.

Open internet surveys

An open survey usually involves a web advert or advertising via social networking sites. In this case, the researcher has no control over who might respond, which creates problems in estimating the sampling frame and its representativeness. One example of an open survey was a US study measuring the benefits and risks of online interaction among adolescent girls (Berson 2002). This used an online survey advertised on the website of Seventeen magazine in 1999, inviting the participation of 12- to 18-year-old girls. It found that 45% had revealed personal information to a stranger met online, and 23% had disseminated pictures of themselves to another person encountered online. Respondents whose teachers had discussed internet safety with them showed a reduced risk of agreeing to meet with someone they had met online. One of the key limitations of this open survey design was that the denominator of those accessing the site could not be characterised reliably, and it was not possible to verify whether those responding really were aged 12–18. The results from this convenience sample could not therefore be said to be representative of all US adolescents and may not be generalisable beyond the website’s users.

Closed internet surveys

In contrast, a closed internet survey is by invitation only, usually via email. Consequently, the denominator is known, allowing measurement of response rate and non-response bias. It may also be possible to gather information about participant demographics in order to assess the nature of the sample obtained and the generalisability of results (Hewson 2003). Closed surveys are therefore preferred, although technical barriers (such as requiring respondents to designate a characterising feature, such as their institution) must be introduced to confine responses to invitees only.

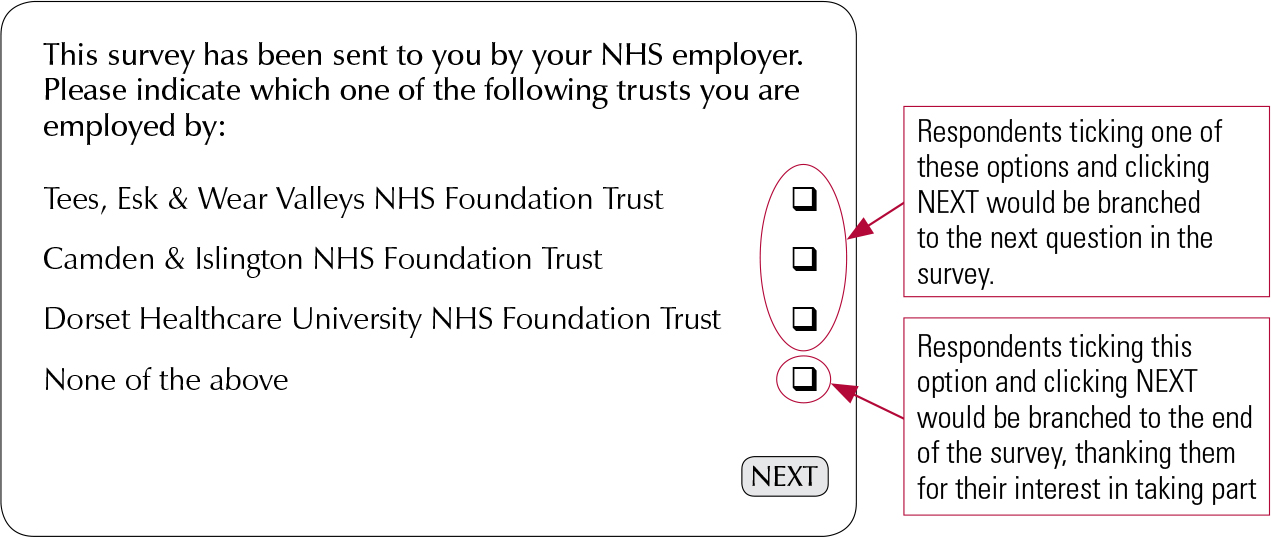

Branching restrictions

For example, an invitation to participate in an online survey sent via the email distribution list of a specific trade union might easily be forwarded by any of the invitees to a friend in another union. On opening the survey link within that email, a request for respondents to state their trade union would allow researchers to exclude those for whom the survey was not intended. This could be done using branching restrictions incorporated into the questionnaire, making it impossible for anyone to proceed if they were not from the intended organisation (Fig. 1). Obviously, those determined to participate could overcome such a restriction, as discussed below in relation to validity problems in IMR. For reasons of research ethics, it would also be good practice to state clearly the inclusion and exclusion criteria for the study. Otherwise, where branching restrictions were not possible, ineligible respondents who contributed their time to completing the questionnaire in good faith would find their efforts disregarded.

FIG 1 Example of branching restriction included in a closed survey.
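The branching restriction described above can be sketched in a few lines of Python. This is only an illustration of the routing logic, not the behaviour of any particular survey program: the organisation name, the page identifiers and the `screen_respondent` function are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical name of the organisation whose mailing list was sampled.
INTENDED_UNION = "Example Trade Union"


@dataclass
class ScreeningResult:
    eligible: bool
    next_page: str  # identifier of the page the respondent is routed to


def screen_respondent(stated_union: str, intended: str = INTENDED_UNION) -> ScreeningResult:
    """Route a respondent according to their answer to the screening question."""
    # Compare case-insensitively and ignore surrounding whitespace.
    if stated_union.strip().casefold() == intended.casefold():
        return ScreeningResult(True, "questionnaire_page_1")
    # Ineligible respondents are routed to an explanatory exit page instead of
    # completing a questionnaire whose answers would later be discarded.
    return ScreeningResult(False, "exit_page_not_eligible")


print(screen_respondent("Another Union").next_page)
```

In practice a fixed-choice list of organisations would avoid the need for text matching, and, as the text notes, a determined respondent could still give a false answer.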

Email sampling

The email sampling format is a common approach when using a closed survey design. A 2009 survey of trainee psychiatrists used the email distribution lists of four out of eight London-based training schemes to establish the proportion and characteristics of those who undertook personal psychotherapy (Dover 2009). The web-based survey gathered quantitative and qualitative data, achieving responses from 140/294 (48%) trainees. It found a prevalence of 16% for uptake of personal psychotherapy, but the authors acknowledged that this might have been an overestimate resulting from self-selection bias.
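Because a closed survey has a known denominator, the response rate is a simple proportion. The sketch below reproduces the 140/294 figure quoted above and, as an addition not reported in the source, attaches a 95% Wilson score interval to show how much uncertainty surrounds a response rate from a sample of this size. The function names are illustrative.

```python
import math


def response_rate(responders: int, invited: int) -> float:
    """Proportion of invitees who responded (requires a known denominator)."""
    if invited <= 0:
        raise ValueError("invited must be a positive integer")
    return responders / invited


def wilson_interval(successes: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a proportion; z = 1.96 gives roughly 95% coverage."""
    p = successes / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return centre - half_width, centre + half_width


rate = response_rate(140, 294)
low, high = wilson_interval(140, 294)
print(f"Response rate: {rate:.0%} (95% CI {low:.0%} to {high:.0%})")
```

The same calculation cannot be performed for an open survey, because the number of people who saw the advertisement is unknown.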

Generalisability of results

University email distribution lists have also been used in mental health research as a sampling frame for community-based studies. Factors favouring a reasonable response from eligible individuals using the university email sampling method are that recipients are a defined and captive population, accustomed to participating in email or internet surveys, sympathetic towards research activity, proactive and internet literate, and able to participate at minimal direct financial cost. Although the use of university lists potentially reaches a large number of young adults, it also introduces the potential for selection bias (Box 1), particularly in relation to socioeconomic status and the healthy worker effect (Box 2) (Delgado-Rodriguez 2004). Factors mitigating these biases include the expanding proportion of young people entering higher education, with participation in England increasing from 30% in the mid-1990s to 36% by 2010 (Higher Education Funding Council for England 2010), and the increasing social and cultural diversity of this group (Royal College of Psychiatrists 2011). Presumptions about the healthy worker effect operating in student populations may be invalid, given concerns about the mental health of students (Stanley 2002, 2007; Royal College of Psychiatrists 2011).

BOX 1 Case study: sampling in student populations

A study of the community prevalence of paranoid thoughts sampled 60 200 students in three English universities: University College London, King’s College London and the University of East Anglia (Freeman 2005). Each student was sent an email inviting them to participate in an anonymous internet survey on ‘everyday worries about others’. The survey, which included six research scales, was completed by 1202 students: a response of 2%. The authors reported that paranoid thoughts occurred regularly in about a third of respondents. Criticisms of this study related to non-response bias and the representativeness of the sample. In a survey on paranoia, it was possible that the most paranoid members of the population sampled would find the topic very salient, or conversely be highly suspicious of it. This might increase, or decrease, their likelihood of responding, resulting in an over- or under-estimate of the prevalence of paranoid thoughts. Two of the three universities were London-based Russell Group institutions (i.e. universities that receive the highest income from research funding bodies and are characterised by high competition for student places and staff contracts), so the student sample might not be considered epidemiologically representative. Inclusion of educational institutions that are more diverse, both geographically and socioeconomically, was indicated for future studies to improve representativeness in prevalence studies. For studies comparing groups recruited via email sampling of universities, deriving controls from the same sample would mean that any such biases would be equally distributed. This could also be addressed statistically by adjusting for socioeconomic status.

BOX 2 The healthy worker effect

The healthy worker effect (or the healthy worker survivor effect) describes the lower mortality observed in the employed population when compared with the general population (Delgado-Rodriguez 2004). This is because those with chronic illness and severe disability are underrepresented in occupational samples. The presence of this bias will tend to underestimate the association between an exposure and an adverse outcome.

At worst, generalisability from studies using university email distribution lists is limited to those studying in other universities in the same country. However, with increases in UK student fees reducing the numbers of people who can afford tertiary education, future studies using the email sampling method within universities may become less generalisable to the general population.

Response rates

Research on factors that enhance response to research surveys mainly relates to postal questionnaires, but many of these factors apply to IMR surveys too. A systematic review of studies investigating response rates to postal questionnaires evaluated 75 strategies in 292 randomised trials; its main outcome measure was the proportion of completed or partially completed questionnaires returned (Edwards 2002). Factors favouring the probability of responding included: monetary incentives, short questionnaires, personalised questionnaires and letters, making follow-up contact, greater salience of research topic, questions not of a sensitive nature, and the questionnaire originating from a university.

Qualitative work with student samples confirms that willingness to complete web-based or paper-based questionnaires is influenced by the relevance of the topic to their life experience (Sax 2003).

A study investigating incentive structures in internet surveys used a factorial design to send a web-based survey to 2152 owners of personal websites (Marcus 2007). Combinations of high v. low topic salience, short v. long survey, lottery incentive v. no incentive, and general feedback (study results) v. personal feedback (individual profile of results) showed higher response rates for highly salient and shorter surveys. There was evidence for an interaction between factors: offering personalised feedback compensated for the negative effects of low topic salience, and a lottery incentive tended to evoke more responses only if the survey was short (although this was of marginal significance).
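To make the structure of such a factorial experiment concrete, the sketch below enumerates every combination of the four two-level factors described above and allocates invitees to the resulting cells at random. The allocation procedure, the seed and the factor labels are assumptions made for illustration; the source does not describe how the original study assigned participants.

```python
import itertools
import random

# Factor levels follow the description in the text; the labels are illustrative.
FACTORS = {
    "salience": ["high", "low"],
    "length": ["short", "long"],
    "incentive": ["lottery", "none"],
    "feedback": ["general", "personal"],
}


def factorial_cells(factors):
    """Return every combination of factor levels as a list of dicts."""
    names = list(factors)
    return [dict(zip(names, combo)) for combo in itertools.product(*factors.values())]


def allocate(participant_ids, factors, seed=0):
    """Randomly allocate participants to cells in near-equal numbers."""
    cells = factorial_cells(factors)
    ids = list(participant_ids)
    random.Random(seed).shuffle(ids)
    # Cycling through the cells keeps group sizes within one of each other.
    return {pid: cells[i % len(cells)] for i, pid in enumerate(ids)}


allocation = allocate(range(2152), FACTORS)
print(len(factorial_cells(FACTORS)), "cells;", len(allocation), "participants allocated")
```

Four factors at two levels each give 16 survey versions, which is why factorial designs of this kind need large samples to detect interactions between factors.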

Essentials of setting up an IMR cross-sectional survey

Data protection

The Data Protection Act 1998 applies to any survey that requires respondents to enter personal data that can identify them, or any survey program that uses tracking facilities to enable identification of an individual. The information sheets accompanying such a survey should include a Data Protection disclaimer explaining that the personal information that participants provide will be used only for the purposes of the survey and will not be transferred to any other organisation. It is also important to check with your institution whether registration with the Data Protection Officer or equivalent is required before starting to collect data. Mentioning such aspects in the ethical approval application form will demonstrate that the study has been carefully planned.

Choosing a survey program

When choosing an online survey program, consideration should be given not only to cost but also to appearance, the ease of constructing the question formats (for example, Likert scale, numeric response, multiple choice, drop-down response, free text), the process for downloading data, and data analysis capabilities. Most programs allow data to be downloaded into Microsoft Excel, but some permit data to be downloaded directly into statistical packages such as SPSS. This is preferred for maintaining the integrity of data. Costing for online survey programs tends to operate on an annual subscription basis. It may be more economical to conduct two or more surveys at the same time, making sure that there is sufficient time within the subscription period to become proficient in the techniques of questionnaire construction, to recruit participants and to complete data collection.

Designing the survey questionnaire

When designing the survey questionnaire, it is helpful to use general principles governing quantitative and qualitative questionnaire design (Oppenheim 1992: pp. 110–118; Jackson 2000; Malterud 2001; Boynton 2004a,b,c; Bowling 2014: pp. 290–324), as well as those specific to IMR (Hewson 2003; Eysenbach 2004; Burns 2008). The latter suggest a range of ways in which the cost and convenience advantages of IMR can be harnessed while minimising potential biases and validity problems.

Overall effect

Guidelines suggest that IMR surveys state clearly the affiliation for the study, to give it credibility, enhance participation and avoid hostile responses. Surveys should provide clear electronic instructions to guide respondents, with links to further information if required. Information should be given on confidentiality and on what participants should do if the survey causes them distress. Many surveys provide a debrief page listing sources of support. This is often visible as the final page of the survey, but also accessible throughout the questionnaire via a button at the foot of each page.

Structure and branching

Internet-based surveys can present questions in a single scrolling page (for short polls) or a series of linked pages (multiple-item screens), and layout is an important influence on response rates and completion rates (Box 3). Conditional branching, also known as skip logic, can reduce the apparent size of a survey, and showing a progress indicator at the bottom of the page (for example, ‘15% complete’) may motivate completion. Conditional branching may also reduce the chances that participants will be distressed or annoyed by being asked irrelevant questions.

BOX 3 An experiment in survey design

The study

In an internet survey on attitudes towards affirmative action sent to 1602 students (Couper 2001), the survey designers used a 2 × 2 × 2 design to vary three elements of the survey:

- whether or not respondents were reminded of their progress through the survey
- whether respondents saw one question per screen or several related items per screen
- whether respondents indicated their answers by clicking options on a choice of numbers or by entering the relevant number in a free-text box

The results

- Multiple-item screens significantly decreased completion time and the number of ‘uncertain’ or ‘not applicable’ responses
- Respondents were more likely to enter invalid responses in long- than in short-entry boxes
- The likelihood of missing data was lower with fixed-option buttons compared with free-text boxes

Missing data and drop-out

Missing data is a critical problem in any epidemiological study, and the proportion of missing data can be minimised by aspects of IMR survey design. Cramming a web page with questions increases the chances that a respondent will move on to the next page without realising that they have missed a question. In such a case, the missing data might be regarded as ‘missing at random’.

Keeping the questionnaire brief and focused will decrease the chances that those under pressure of time will drop out midway. As time pressure may be associated with socioeconomic factors, such missing data might be regarded as ‘missing not at random’.

Carefully wording the questions and ordering them so that sensitive questions appear further into the survey both reduce the chances that respondents will drop out because of psychological distress.
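The two patterns of missing data described above, isolated skipped items and drop-out partway through, can be distinguished in a downloaded data-set with a simple summary. The sketch below is a minimal illustration using made-up responses; the question names and the use of `None` to mark an unanswered item are assumptions, and real survey exports will encode missing values differently.

```python
# None marks an unanswered item; the data and question names are hypothetical.
QUESTION_ORDER = ["q1", "q2", "q3", "q4"]
responses = [
    {"q1": 3, "q2": 4, "q3": 2, "q4": 5},        # complete
    {"q1": 2, "q2": None, "q3": 4, "q4": 1},     # one isolated skipped item
    {"q1": 5, "q2": 3, "q3": None, "q4": None},  # dropped out after q2
]


def item_missingness(rows, order):
    """Proportion of respondents who gave no answer to each item."""
    return {q: sum(r.get(q) is None for r in rows) / len(rows) for q in order}


def dropout_point(row, order):
    """Return the last item answered when all later items are blank.

    Returns None if the final item was answered (no drop-out), and an empty
    string if the respondent answered nothing at all.
    """
    answered = [i for i, q in enumerate(order) if row.get(q) is not None]
    if not answered:
        return ""
    last = answered[-1]
    return None if last == len(order) - 1 else order[last]


print(item_missingness(responses, QUESTION_ORDER))
print([dropout_point(r, QUESTION_ORDER) for r in responses])
```

A cluster of drop-outs at one item may point to a question that is distressing, badly worded or too burdensome, whereas scattered isolated skips are more consistent with items being overlooked on a crowded page.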

The ideal design (and a caveat on free-text boxes)

Ideally, an online survey will balance the use of multiple-item screens (to reduce completion time) and single questions per screen (to allow more frequent data-saving with each page change), and will maximise the use of fixed option buttons (to reduce invalid responses and the need for coding or checking outliers). Free-text boxes can be included where extra information might be required, but their limitations should be borne in mind (Garcia 2004). Most notable among these is the potential for collecting large volumes of qualitative data that cannot be analysed because of lack of capacity. It can take days to recode free-text information qualifying a key quantitative variable if there are a great many respondents.

Classification of socioeconomic status is a good example of this. The National Statistics Socio-Economic Classification (NS-SEC; Office for National Statistics 2010) is a common classification of occupational information used in the UK, with jobs classified in terms of their skill level and skill content. To determine a survey participant’s social class, they may be asked to give their job title or current employment status and briefly describe work responsibilities. This information, sometimes quite detailed or difficult to understand, will need to be recoded manually so that the participant can be allocated to one of nine socioeconomic classes. Conversely, using fixed choices to ask respondents which social class they belong to raises issues of validity.

Testing drafts of the survey

Seeking advice on content, tone, language, ordering of questions and the visual appearance of the survey will improve eventual response rates. It is useful to canvass opinion among people of a similar age or background to the sample population, not only for their views on successive drafts of the survey content, but also on its colour scheme and layout when uploaded to the survey program. This also applies to sampling emails, where use of jargon may disincline eligible recipients to respond. Describing the consultation process in the ethics approval application form will also demonstrate that the survey is likely to be acceptable to the sample population.

Validation checks and piloting the survey

Validation checks are advisable to ensure that IMR measures capture what they purport to. For example, comparing the results of an IMR study with established results of face-to-face psychometric interviewing may show systematic differences on some psychological variable. By introducing constraints on the flexibility of participants’ behaviour it might be possible to control parameters that must remain constant, for example time taken to complete a task. In some contexts, it may be possible to gather information about participants, such as browser type, IP address, and date and time of response, in order to detect multiple submissions. However, use of shared computers and virtual private networks (VPNs) would obscure this, and access to information on IP address may be unethical (advice on the latter problem might be sought from the ethics committee and patients involved in the consultation process).
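Where collecting such metadata has been approved, a check for possible multiple submissions might look like the sketch below. It flags any submission that shares an IP address and browser type with an earlier submission made within a chosen time window. The field names, the 24-hour window and the example records (which use IP addresses from a range reserved for documentation) are all assumptions, and flagged responses should be reviewed rather than deleted automatically, since shared computers will produce false positives.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def flag_possible_duplicates(submissions, window=timedelta(hours=24)):
    """Return IDs of submissions that share IP address and browser with an
    earlier submission made no more than `window` before them."""
    by_device = defaultdict(list)
    for s in sorted(submissions, key=lambda s: s["submitted"]):
        by_device[(s["ip"], s["browser"])].append(s)

    flagged = []
    for group in by_device.values():
        # Compare each submission with the one immediately before it.
        for earlier, later in zip(group, group[1:]):
            if later["submitted"] - earlier["submitted"] <= window:
                flagged.append(later["id"])
    return flagged


# Hypothetical metadata records for four survey submissions.
submissions = [
    {"id": 1, "ip": "192.0.2.10", "browser": "Firefox", "submitted": datetime(2015, 3, 1, 9, 0)},
    {"id": 2, "ip": "192.0.2.10", "browser": "Firefox", "submitted": datetime(2015, 3, 1, 9, 20)},
    {"id": 3, "ip": "192.0.2.44", "browser": "Chrome", "submitted": datetime(2015, 3, 1, 10, 0)},
    {"id": 4, "ip": "192.0.2.10", "browser": "Firefox", "submitted": datetime(2015, 3, 5, 9, 0)},
]

print(flag_possible_duplicates(submissions))
```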

Finally, it is important to pilot the survey by sending the sampling email and the embedded survey link to colleagues and friends, and to yourself. Simulating the experience of responding to the survey will reveal glitches in branching restrictions and web links and problems specific to certain browsers. This gives time to address them before the final sampling. It is very difficult to predict where a glitch could arise, and if respondents encounter a problem when completing the online program it could render the data useless as well as wasting their time.

Advantages of IMR

Cost

Internet-mediated research has the potential to gather large volumes of data relatively cheaply and with minimal labour, involving automatic data input to a database of choice. Compared with postal questionnaires, IMR cuts out mailing and printing costs, as well as the labour costs of data entry, although the latter is essentially transferred to individual respondents. The paper-free approach may also be more environmentally responsible, although computer manufacture and internet usage both contribute to carbon emissions.

Time

Internet-mediated research reduces the time taken to process individual paperwork by eliminating the printing and mailing of questionnaires, as well as the tasks of transcription and data entry. This can reduce the duration of the data-gathering phase, which suits those working to a limited time frame. Coding of data, for example for statistical packages such as SPSS or Stata, can begin as soon as the first participant has responded. Downloading an interim data-set, even one with only a single respondent, gives a sense of how the data will appear. With successive downloads of interim data-sets, it may be possible to start data cleaning at an early stage. This involves the detection and removal of inconsistencies in the data, to improve their quality.

Detecting and managing outliers is one example of a data-cleaning task that is usually simplified with online surveys. For example, postal questionnaires returned from a sample of working-age adults might include one copy in which the respondent gave their age as 5. This is most likely to have been an error. The outlier would be spotted and some decision would have to be reached over whether to guess the age from other parameters in the questionnaire, whether to contact the respondent to verify their age, or whether to enter ‘age’ as missing data. In an online survey, fixed-choice responses for age would eliminate the possibility of outliers for age, reducing the time needed for data cleaning.
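Where a free-text or numeric entry field has been used, a range check during data cleaning can surface implausible values such as the age of 5 in the example above. The following sketch lists out-of-range records for manual review rather than altering them. The record structure and the assumed working-age bounds of 16 to 64 are illustrative and would need to match the study's own eligibility criteria.

```python
def flag_out_of_range(records, field, low, high):
    """Return (respondent id, value) pairs whose value lies outside [low, high].

    Records with a missing value for the field are ignored here, since they
    are handled separately as missing data.
    """
    flagged = []
    for rec in records:
        value = rec.get(field)
        if value is not None and not (low <= value <= high):
            flagged.append((rec["id"], value))
    return flagged


# Hypothetical returned questionnaires from a sample of working-age adults.
records = [
    {"id": "A01", "age": 34},
    {"id": "A02", "age": 5},     # implausible for a working-age sample
    {"id": "A03", "age": None},  # not answered
    {"id": "A04", "age": 61},
]

# The bounds 16 and 64 are an assumed definition of working age.
print(flag_out_of_range(records, "age", 16, 64))
```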

Convenience

For research respondents, data collection via the internet presents fewer barriers to participation than keeping appointments or posting back a questionnaire (Whitehead 2007). This can also reduce the timescale of a study. By widening participation in this way, IMR may be able to address sampling biases by reaching traditionally difficult-to-access groups such as rural populations, people living with illness, frailty and disability, and shift workers. This is supported by evidence that age, nationality and occupation have typically been found to be more diverse in internet samples (Hewson 2003) and that IMR has been used to engage with hard-to-reach groups, including ‘senior surfers’, disadvantaged teenagers, and people living with disabilities, dementia and depression (Whitehead 2007). This widening of geographical access increases the opportunities for cross-cultural research.

Impact on reporting bias

As with postal surveys, IMR has the potential to reduce the biases resulting from researcher presence by diminishing social desirability effects (Joinson 1999), enhancing disclosure (Joinson 2001) and reducing the influence on interactions of interviewees’ perceptions of researchers’ ethnicity, gender or status (Richards 2000). The anonymity of the internet enhances disclosure when compared with interview methods (Joinson 2001), although in relation to mental health research it remains possible that denial or the stigma associated with mental disorder (Clement 2015) may result in underreporting of psychiatric symptoms. IMR is thus particularly suitable for sensitive topics, such as sexual behaviour or psychiatric symptoms, and socially undesirable behaviours, such as alcohol misuse, where underreporting bias is common (Delgado-Rodriguez 2004). One qualitative study of health behaviour changes in people after treatment for colon cancer compared findings from face-to-face and online chat focus groups. It found that similar themes emerged from both groups, but that the anonymity of the internet provided a more comfortable forum for discussing such personal matters (Kramish 2001). Although anonymity minimises some biases, this must be balanced against others that IMR introduces. These are discussed below and are a key drawback of its use.

Disadvantages of IMR

Technical

Internet-mediated research relies on access to a computer and the computer literacy of respondents. There is a risk of non-response because email invitations are automatically classified as spam or deleted as junk. Where an email invitation to participate in research appears as part of a weekly email digest, such as that sent out in many large institutions, there is a risk that it will go unnoticed by those eligible. One study of student samples found that responses were lower for emailed and web-completed questionnaires than for postal and paper-completed questionnaires, perhaps because many students did not routinely check their college email accounts (Sax 2003).

People who rely on shared computers are denied the privacy required for surveys on sensitive topics. Where internet connectivity is poor there is a risk that the program will crash and lose data, or respondents will give up because of long pauses between screens. As poor internet connectivity may be governed by socioeconomic factors, this might increase the amount of missing data from those on lower incomes, thus introducing bias. Keeping the questionnaire brief and limiting the use of videos may reduce the chances of the program crashing. Another technical disadvantage is that without the reminder afforded by a hard copy of the questionnaire, there is a risk that respondents who save halfway through, with the intention of returning, forget to resume. This can partly be overcome by email reminders if using a closed survey, in line with research indicating that responses to postal research questionnaires are higher if follow-up contact is made (Edwards 2002).

Specific biases

Sampling and non-response biases

Both sampling bias and non-response bias are key problems in internet-mediated surveys. Sampling biases arise from the sites selected to advertise surveys, the email distribution lists used to circulate an invitation to participate, or the manner in which an announcement on a social networking site is propagated. For example, in a study measuring cognitive styles among people with eating disorders, a recruitment advertisement on the website of an eating disorders support organisation would bias findings, such that the cognitive styles of those with insight into their disorder were over-represented. Similarly, a study of the community prevalence of depression using a government employee email distribution list would underestimate the actual prevalence by excluding the unemployed, a bias described as the healthy worker effect (Box 2) (Delgado-Rodriguez 2004).
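The direction of the bias in the government employee example can be illustrated with simple arithmetic. In this sketch every figure is invented for illustration: a community of 10,000 adults in which 9,000 are employed (4% with depression) and 1,000 are unemployed (15% with depression). Under these assumptions the true community prevalence is 5.1%, whereas a sample drawn only from the employee list would estimate 4.0%.

```python
def prevalence(cases: int, population: int) -> float:
    """Proportion of a group meeting the case definition."""
    return cases / population


# Hypothetical community split by employment status (all figures invented).
employed = {"n": 9_000, "cases": 360}    # 4% with depression
unemployed = {"n": 1_000, "cases": 150}  # 15% with depression

# Prevalence in the whole community, the quantity the study wants to estimate.
community = prevalence(
    employed["cases"] + unemployed["cases"],
    employed["n"] + unemployed["n"],
)

# Prevalence seen when sampling only from an employee email list.
employee_list = prevalence(employed["cases"], employed["n"])

# A negative value means the employee-only estimate is too low.
bias = employee_list - community

print(f"Community prevalence:     {community:.1%}")
print(f"Employee-list prevalence: {employee_list:.1%}")
print(f"Bias (percentage points): {bias * 100:+.1f}")
```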

Such biases can be addressed by using closed surveys for specific invitees only, while ensuring that those invited are a suitably representative sample. Where a closed survey is not possible, thought must be given to whether the salience or sensitivity of the topic is likely to affect the extent or manner in which it is advertised. Although it may not be possible to control this factor, it may influence the covariates chosen for adjustment of quantitative findings. For example, a study comparing the attitudes of those in different age groups towards people with body dysmorphic disorder might garner more intense interest on sites popular with certain ethnic groups, suggesting that the analysis should adjust for ethnicity.

Non-response bias affects all modalities of cross-sectional surveys. In the government employee example above, differential non-response could apply to those on sick leave not checking their email, or those with depression lacking the motivation to read and respond to emails.

Female gender bias and male non-response bias

Internet-mediated research offers the potential to redress the tendency for a female gender bias in psychosocial health surveys. Previous research had characterised the internet-user population as technologically proficient, educated, White, middle-class, professional and male (Hewson 2003). This, and the relative anonymity of internet surveys, may serve to minimise male non-response bias. Overall, there is mixed evidence for whether internet samples are more gender-balanced than traditionally obtained samples, whether IMR introduces a male gender bias (Hewson 2003), or indeed whether the female gender bias applies to IMR too (Freeman 2005). Furthermore, as patterns of population internet coverage change, any balancing of the traditional female response bias to surveys is likely to shift again.

Validity

There are issues with the validity of IMR, particularly through the absence of a relationship with participants, although most of the problems mentioned in this section apply also to postal questionnaires.

Without monitoring of body language, tone of voice and signs of distress, there is less control over or knowledge of participants’ behaviour, which also raises questions about how distress might be responded to.

In the absence of monitoring or programming restrictions, participants may violate instructions, for example by backtracking to cheat in a memory task, or gaming their responses to force their way down specific branches of the survey. There is the potential for contamination through third-party involvement (for example, respondents consulting a friend), use of reference materials, or hoax respondents, and the potential for distraction or intoxication with alcohol or drugs. However, comparison methods and programming checks can help to confirm the validity of internet-mediated responses.
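As an illustration of the kind of programming check referred to above, the following Python sketch flags submissions that share a respondent token (possible multiple submissions) or that were completed implausibly quickly, using recorded start and finish times. The dictionary keys (`id`, `token`, `started`, `finished`) and the 2-minute threshold are assumptions made for the example. Flagged responses would still need human review rather than automatic exclusion.

```python
from collections import Counter
from datetime import datetime, timedelta
from typing import Dict, List


def flag_submissions(
    submissions: List[dict],
    min_duration: timedelta = timedelta(minutes=2),  # assumed plausibility threshold
) -> Dict[int, List[str]]:
    """Map submission id -> reasons it may be invalid (empty dict if none flagged)."""
    # Count how often each respondent token appears across all submissions.
    token_counts = Counter(s["token"] for s in submissions)

    flags: Dict[int, List[str]] = {}
    for s in submissions:
        reasons = []
        if token_counts[s["token"]] > 1:
            reasons.append("duplicate_token")  # same respondent submitted more than once
        if s["finished"] - s["started"] < min_duration:
            reasons.append("too_fast")  # completed faster than seems plausible
        if reasons:
            flags[s["id"]] = reasons
    return flags


# Hypothetical usage with three invented submissions.
example = [
    {"id": 1, "token": "t1", "started": datetime(2024, 1, 1, 10, 0),
     "finished": datetime(2024, 1, 1, 10, 15)},
    {"id": 2, "token": "t2", "started": datetime(2024, 1, 1, 10, 0),
     "finished": datetime(2024, 1, 1, 10, 1)},
    {"id": 3, "token": "t1", "started": datetime(2024, 1, 1, 11, 0),
     "finished": datetime(2024, 1, 1, 11, 20)},
]
flagged = flag_submissions(example)
```

Checks of this kind cannot detect third-party involvement or intoxication, which remain outside the researcher's control.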

For the above reasons, IMR may be invalid for conducting diagnostic interviews requiring considerable subjective judgement on the part of the assessor. On the other hand, there is evidence that differences in the reliability of interview methods for diagnosing psychiatric disorder compared with self-administered questionnaires are only modest (Lewis 1992).

Ethics

Informed consent

The ethical problems of using IMR start with obtaining fully informed consent. In some studies respondents may not even be aware that they are participating in a research study, but such concealment must be agreed by a research ethics committee. One example of this approach is a randomised controlled trial of a brief online intervention to reduce hazardous alcohol use among Swedish university students (McCambridge 2013). Making use of the university health services’ standard practice of sending emails to students, the researchers included in these emails an apparent ‘lifestyle survey’ at baseline and 3-month follow-up. The study found that the group randomised to alcohol assessment and feedback on alcohol use showed a significant reduction in the proportion of risky drinkers compared with a group who did not receive alcohol assessment and feedback. The regional ethics committee approved the use of deception in this study on the grounds that concealing the alcohol study focus would permit adequate masking of participants.

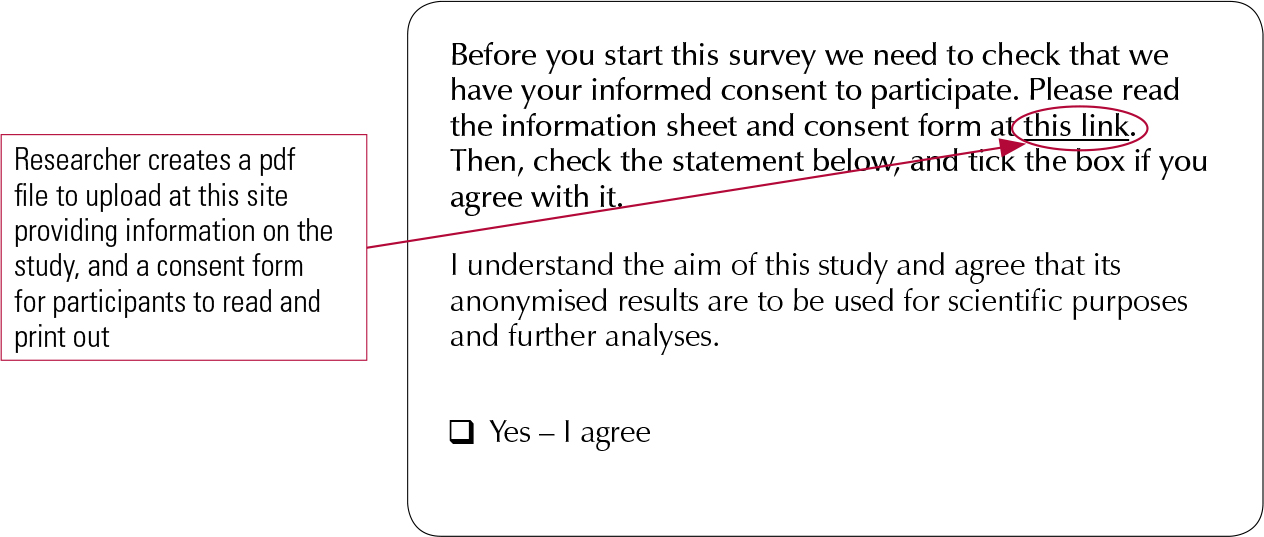

Studies involving informed consent should include links to information sheets and consent forms, but it is difficult to check whether these have been read before a respondent ticks the check box against the statement of agreement to participate (Fig. 2). Capacity to consent is also difficult to establish remotely, and this may have an effect on data quality. It is important to remind participants that they may withdraw from the study at any time, and to provide a ‘Submit data’ button at the end of the survey so that they understand and agree that their responses are being submitted to the researcher.

FIG 2 Example of consent page in an online survey.

Confidentiality and data security

The storing of data on web servers that may be vulnerable to hacking raises concerns about confidentiality and security, particularly if monetary incentives within a study require participants to give identifying information such as an email address (Sax 2003). It is important to assure participants of the confidentiality of their responses and to describe the data storage security measures set up to minimise the possibility of any other parties gaining access to the study data (Hewson 2003). In the UK, participants should be reassured that the study follows the guidance in the Data Protection Act 1998.

Blurring of boundaries and participant distress

Requiring participants to respond to research questions at home, rather than in the formal setting of a research department, may result in a blurring of the public–private domain distinction. However, this also applies to many surveys in which data are gathered by interview or postal questionnaire, and is balanced against the convenience and cost advantages to respondents and researchers.

Without direct contact with respondents there is a lack of debriefing, which may be important where surveys have covered distressing topics. It is good practice to include, at the end of the survey, a page giving information on sources of support or contact details of the researcher in case the participant has any queries.

Data harvesting

Ethical issues are also raised by the ‘harvesting’ of information from newsgroup postings and individuals’ web pages, when the information was not made available for such a purpose (Box 4). Although this information is in the public domain, thought should be given to how people posting information might react to learning that it had been sampled for analysis. One example of this was a study using linguistic analysis to compare bereavement reactions after different modes of death, using comments posted on internet memorial sites (Lester 2012).

BOX 4 Examples of data harvesting

- Thematic analysis of content posted on a self-harm forum
- Content analysis of tweets containing certain hashtags, e.g. #depressed
- Linguistic analysis of text posted on a support forum for people with psychosis
- Narrative analysis of the blog content of a person reporting obsessive–compulsive traits

Tolerance

Just as recipients of postal questionnaires might become immune to junk mail, recipients of email invitations might also develop ‘email fatigue’. This is more of a problem in institutions that place few restrictions on the number of survey participation emails circulated to all. At high levels of exposure there is a risk that such emails might be deleted with only a cursory glance at the content. Exposure to low-quality questionnaires also runs the risk of extinguishing the motivation of those in a given sampling frame to participate in such surveys. This may also have been true of postal surveys pre-internet, but the risk of email survey fatigue is likely to threaten the sustainability of the email sampling method. Any concession in the quality of survey questionnaires or the smooth operation of the web programs on which they are delivered would also threaten the popularity of internet-mediated surveys.

Conclusions

Use of the internet to conduct cross-sectional surveys in mental health research is a growing practice. Many of the problems inherent in face-to-face survey methods, such as social desirability effects and the effects of researcher presence on disclosure, are reduced by the relative anonymity of internet methods. However, this anonymity creates its own problems, including specific biases and the inability to monitor respondents’ distress. By careful planning of sampling strategy and survey design, it may be possible to reduce these biases as well as the potential for causing distress, enabling a potentially cost-effective means of understanding psychiatric morbidity.

MCQs

Select the single best option for each question stem

- 1 Which of the following would not be possible using internet-mediated research methods?
  - a assessing whether the participant was becoming progressively intoxicated while responding
  - b ensuring that a participant did not consult a friend while responding
  - c recording the time of commencement and completion of a survey
  - d detecting multiple submissions from the same participant
  - e providing instructions in a selection of languages.

- 2 Direct consent of the individuals concerned would be required for data harvesting from:
  - a Facebook public content
  - b Twitter
  - c web forums
  - d YouTube
  - e personal emails.

- 3 Response to an online survey is likely to be improved by:
  - a low topic salience
  - b a gift voucher incentive
  - c video instructions
  - d a detailed sampling invitation
  - e a tight response deadline.

- 4 Internet-mediated surveys minimise:
  - a inductive bias
  - b sampling bias
  - c non-response bias
  - d social desirability bias
  - e the healthy worker effect.

- 5 Which one of the following is not likely to be a substantial cost consideration in internet-mediated cross-sectional surveys?
  - a the consultation process
  - b participant travel time
  - c carbon emissions
  - d a lottery incentive
  - e the survey program.

MCQ answers

| Question | 1 | 2 | 3 | 4 | 5 |
| --- | --- | --- | --- | --- | --- |
| Answer | b | e | b | d | b |
