Introduction

Across the social sciences, researchers and practitioners working to use evidence to improve public service delivery are increasingly turning to systems approaches to remedy what they see as the limitations of traditional approaches to policy evaluation. This includes increasing calls from disciplines like economics and management to adopt systems approaches to understanding the complexities of government bureaucracies (Pritchett 2015; Bandiera et al. 2019; Besley et al. 2022). While those turning to systems approaches are united in viewing standard impact evaluation methods (at least in their more naïve applications) as overly simplistic, deterministic, and insensitive to context, the alternative methods they have developed are hugely varied. Studies that self-identify as systems approaches include everything from ethnographic approaches to understanding citizen engagement with public health campaigns during the 2014 Ebola outbreak in West Africa (Martineau 2016) to high-level World Health Organization (WHO) frameworks (De Savigny and Adam 2009), multi-sectoral computational models of infrastructure systems (e.g. Saidi et al. 2018), diagnostic surveys to identify system weaknesses (Halsey and Demas 2013), and “whole-of-government” governance approaches to address the new cross-sectoral coordination challenges (Organization for Economic Cooperation and Development 2017), such as those imposed by COVID-19. This extreme diversity in concepts and methods can make systems approaches seem ill-defined and opaque to researchers and policymakers from outside the systems tradition and has limited engagement with their insights.

What, then, is the common theoretical core of systems approaches to public service delivery? What are the key distinctions among them, and to which kinds of questions or situations are different types of systems approaches best suited? And what is the relationship between systems approaches and standard impact evaluation-based approaches to using evidence to improve public service delivery?

We address these questions by reviewing and synthesizing the growing literature on systems approaches. We focus our review on three policy sectors in which systems approaches have gained increasing currency in high- as well as middle- and low-income countries alike: health, education, and infrastructure. These approaches have developed largely independently in each sector, which not only creates opportunities for learning across sectors but also allows us to distill a common set of conceptual underpinnings from a diverse array of methods, contexts, and applications.

Our article thus has two linked goals. First, we aim to provide shared conceptual foundations for engagement between researchers within the systems tradition and those who work outside the systems community but share an interest in the role of context and complexity in public service delivery and policy evaluation. Second, we aim to cross-pollinate ideas and facilitate discussion within the systems research community, among researchers and practitioners from different sectoral backgrounds or disciplinary communities.

Based on our review, we argue that systems approaches can best be understood not as a single method but as a diverse set of analytical responses to the idea that multi-dimensional complementarities between a policy and other aspects of the policy’s context (e.g. other policies, institutions, social and economic context, cultural norms, etc.) are the first-order problem of policy design and evaluation. Such complementarities are present when the impact of a group of variables on an outcome is greater than the sum of its parts. For example, the impact of a new pay-for-performance scheme on health service delivery might depend not just on multiple characteristics of the scheme’s design but also on the presence of effective data monitoring and auditing systems, on health workers’ intrinsic motivation and career incentives, on the availability of resources to pay bonuses, and on whether political economy considerations permit the payment of bonuses – as well as potentially dozens of other dimensions along which contexts might vary. Whereas standard impact evaluation methods typically seek to address these complexities by finding a way to “hold all else constant” in order to causally identify the impact of a policy intervention on an outcome variable, systems approaches focus on the “all else” in order to better understand the complex ways in which policies’ effectiveness might vary across contexts and time or depend on the presence of complementary policy interventions. The systems character of a piece of research can thus pertain to its question, theoretical approach, and/or empirical methodology.

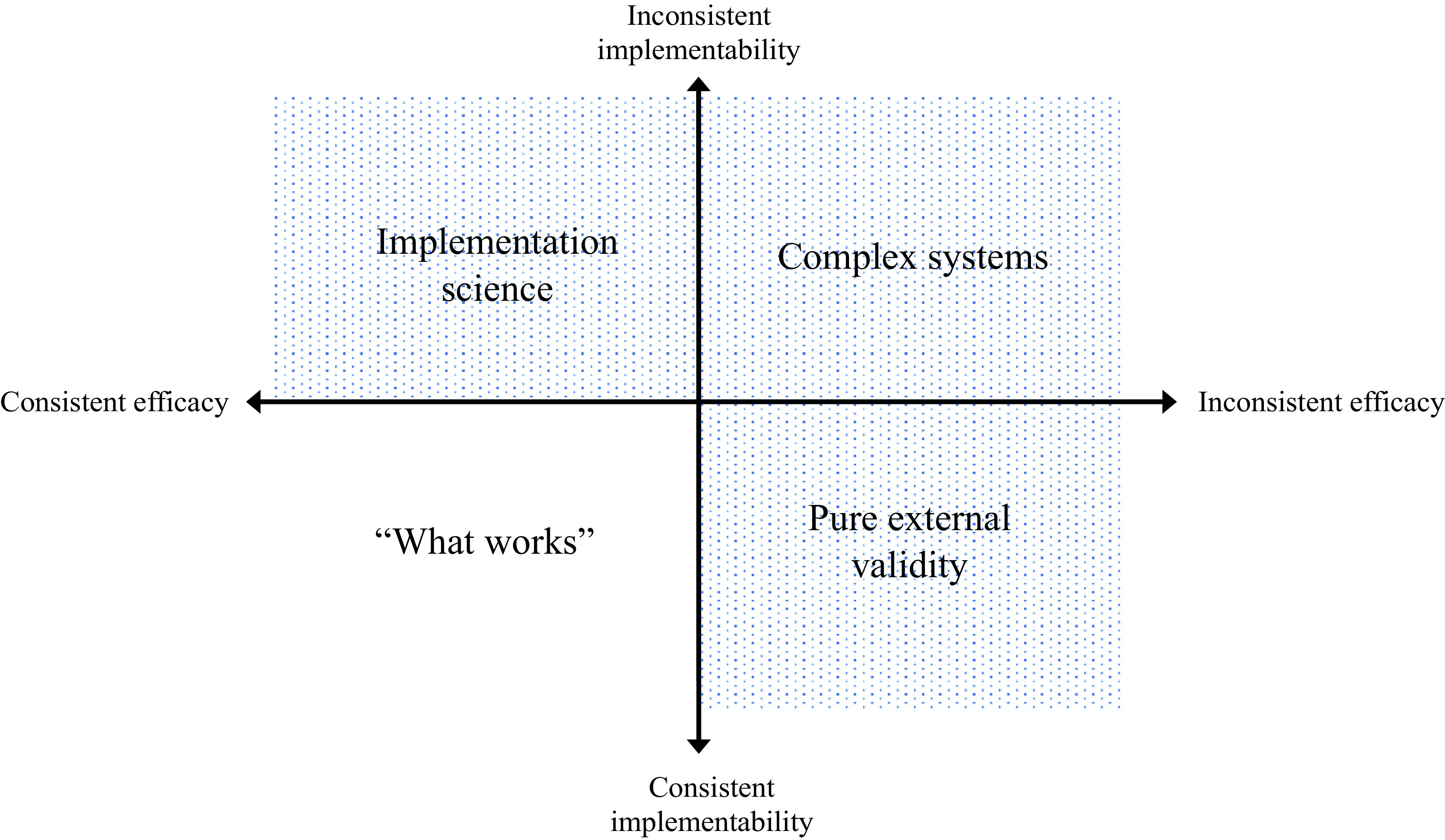

Within the broad umbrella of systems approaches, we distinguish between “macro-systems” approaches and “micro-systems” approaches. The former are primarily concerned with understanding the collective coherence of a set of policy interventions and various other elements of context, whereas the latter focus on a single policy intervention (like most standard impact evaluations) but seek to understand its interactions with contextual variables and other policy interventions (rather than necessarily obtaining an average treatment effect). We further review and distinguish among different analytical methods within each of these two categories, and link these different methods to different questions and analytical purposes. In particular, we suggest that the choice of which micro-systems approach to adopt depends on the degree to which contextual complementarities affect a policy’s efficacy (i.e. the extent to which a given policy has consistent impacts across contexts) and implementability (i.e. the extent to which a given policy can be delivered or implemented correctly). We combine these two dimensions to construct four stylized types of linked question types and research approaches: “what works”-style impact evaluation (consistent efficacy, consistent implementability); external validity (inconsistent efficacy, consistent implementability); implementation science (consistent efficacy, inconsistent implementability); and complex systems (inconsistent efficacy, inconsistent implementability). While not necessarily straightforward to apply in practice, this parsimonious framework helps explain why and when researchers might choose to adopt different systems-based methods to understand different policies and different questions – as well as when adopting a systems perspective may be less necessary.
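The two-by-two typology described above can be written out as a simple lookup. The sketch below is only illustrative: the function name `classify_approach` and the boolean encoding of "consistent" versus "inconsistent" are assumptions made for exposition, and in practice both dimensions are matters of degree rather than binary switches.

```python
from typing import Dict, Tuple

# Keys are (efficacy is consistent across contexts, implementability is consistent).
# The four labels come from the typology in the text; the boolean encoding is a
# simplifying assumption, since both dimensions are really matters of degree.
TYPOLOGY: Dict[Tuple[bool, bool], str] = {
    (True, True): '"what works"-style impact evaluation',
    (False, True): "external validity",
    (True, False): "implementation science",
    (False, False): "complex systems",
}


def classify_approach(consistent_efficacy: bool, consistent_implementability: bool) -> str:
    """Return the stylized research approach for one cell of the 2x2 typology."""
    return TYPOLOGY[(consistent_efficacy, consistent_implementability)]


if __name__ == "__main__":
    # Print all four cells of the typology.
    for (efficacy, implementability), label in TYPOLOGY.items():
        print(f"efficacy consistent={efficacy}, implementability consistent={implementability}: {label}")
```

Reading the table row by row makes clear that a systems perspective adds most where at least one of the two dimensions is inconsistent.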

Of course, these questions are also of interest to impact evaluators outside the systems tradition, and many of the methodological tools that systems researchers use are familiar to them. Whereas systems approaches are sometimes perceived as being from a different epistemological tradition than standard impact evaluation methods (e.g. Marchal et al. 2012), we view the underlying epistemology of systems approaches as consistent with that of impact evaluation. The main difference is the extent to which multi-dimensional complementarities are thought to be relevant, and hence how tractable it is to estimate the impacts of these complementarities using standard evaluation methods (given constraints of limited statistical power and/or counterfactual availability). While issues of heterogeneity, complementarity, and external validity can be addressed using standard impact evaluation methods (e.g. Bandiera et al. 2010; Andrabi et al. 2020), systems approaches presume (implicitly or explicitly) that such interactions are often high-dimensional (i.e. across many different variables) and thus intractable with limited sample sizes. [Footnote 1] What distinguishes systems approaches, then, is mainly a different prioritization of these questions, and consequently a greater openness to methods other than quantitative impact evaluation in answering them. In this view, systems approaches and impact evaluation are thus better understood as complements, not mutually inconsistent alternatives, for creating and interpreting evidence about policy effectiveness.
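To see why high-dimensional interactions strain limited samples, consider a purely illustrative count. The sketch assumes binary contextual variables and a fully saturated design in which every combination of policy status and context forms its own cell; the function names and the sample size of 2,000 are hypothetical and not drawn from any study cited here.

```python
def saturated_cells(n_context_vars: int) -> int:
    """Number of distinct cells when a binary policy is fully interacted
    with n binary contextual variables (2 policy arms x 2**n contexts)."""
    return 2 * 2 ** n_context_vars


def avg_obs_per_cell(sample_size: int, n_context_vars: int) -> float:
    """Average number of observations per cell if the sample were spread evenly."""
    return sample_size / saturated_cells(n_context_vars)


if __name__ == "__main__":
    sample_size = 2000  # hypothetical evaluation sample
    for k in (1, 2, 5, 10):
        cells = saturated_cells(k)
        print(f"{k} context variables: {cells} cells, "
              f"{avg_obs_per_cell(sample_size, k):.2f} observations per cell")
```

Under these assumptions, ten binary contextual variables already imply more cells (2,048) than observations, which is the sense in which estimation becomes intractable.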

The remainder of our article proceeds as follows. “Review method” briefly discusses our review method. “Defining systems approaches” presents a range of definitions of systems approaches from the literature, then synthesizes them into what we characterize as their common theoretical core. “Macro-systems approaches” reviews and typologizes macro-systems approaches across health, education, and infrastructure and offers a conceptual framework for synthesis, and “Micro-systems approaches” does the same for micro-systems approaches. “Systems approaches and impact evaluation” discusses how researchers and practitioners should go about selecting which type of systems approach (if any) is best suited for their purposes, and “Conclusion” concludes by discussing the connections between systems approaches to public service delivery and other well-established theoretical and methodological concerns in economics, political science, and public administration.

Review method

Our review of systems approaches in public service delivery focuses primarily on three sectors in which they have increasingly gained popularity: health, education, and infrastructure. However, the purpose of this article is not to provide a comprehensive survey of the systems literature in each of these sectors, as there already exist several excellent sector-level survey papers on systems approaches (e.g. Gilson 2012; Carey et al. 2015; Hanson 2015 for health; Pritchett 2015 for education; Saidi et al. 2018 for infrastructure). Instead, this article’s main contribution is to synthesize ideas and insights from these divergent sectoral literatures to make them more accessible to each other and to readers from outside the systems tradition.

We conducted selective literature reviews within each sector aimed at synthesizing the breadth of questions, theories, methods, and empirical applications that make up the systems literature across these sectors. In doing so, we drew on a combination of foundational systems texts of which we were already aware, the existing sectoral review papers listed above, input from sectoral experts, and keyword searches in databases. We then used the citations and reference lists of these to iteratively identify additional articles of interest, stopping when we reached a point of saturation. [Footnote 2] The result is not a systematic review in the formal sense of the term but nevertheless provides a detailed and consistent picture of the state of the literature in each sector. In that sense, our methodology overlaps with a “problematizing” (Alvesson and Sandberg 2020) or a “prospector” review (Breslin and Gatrell 2023), in which the focus is more on defining a new set of domains and boundaries that can allow us to critically reimagine the existing literature, challenge pre-existing conceptions, and build new theory, rather than offering a representative description of the field through a narrow lens. In addition to literature specifically about each of these sectors, we also draw on non-sector-specific work on systems approaches to understanding service delivery in complex and unpredictable systems more generally. We include in our review texts that self-describe as systems-based, as well as many that share similar questions, theoretical approaches, and empirical methods but which do not necessarily adopt the language of systems approaches.

For clarity and brevity, and in line with the article’s purpose, we focus the main text on presenting an overall synthesis with illustrative examples rather than sacrificing readability by trying to cover as many studies as possible. We include a more detailed (though still inevitably selective) sector-by-sector summary in an Online Appendix for interested readers.

Our review and synthesis are not necessarily intended as an argument in favor of systems approaches being used more widely, nor as a critique of research outside the systems tradition. Neither should it be read as a critique of systems approaches. While we do believe that both the general thrust of systems approaches and many of the specific ideas presented by them are important and useful, our goal is merely to present a concise survey and a set of clear conceptual distinctions so that readers can determine what might be useful to them from within this diverse array of perspectives and methods and can better converse across disciplinary and sectoral boundaries without the caricaturing and misrepresentation that have often marred these conversations. Doing this inevitably creates a tension between staying faithful to the way in which researchers in these fields view their work and the necessity of communicating about it in ways that will be intelligible to readers from other fields. We hope that we have struck this balance well and that readers will understand the challenges of doing so on such a broad-ranging topic.

Defining systems approaches

Systems approaches are defined in different ways across different sectors but tend to share a common emphasis on the multiplicity of actors, institutions, and processes within systems. For example, the WHO (2007, p. 2) defines a health system as consisting of “all organizations, people and actions whose primary intent is to promote, restore or maintain health.” In education, Moore (2015, p. 1) defines education systems as “institutions, actions and processes that affect the ‘educational status’ of citizens in the short and long run.” In infrastructure, Hall et al. (2016, p. 6) define an infrastructure system as “the collection and interconnection of all physical facilities and human systems that are operated in a coordinated way to provide a particular infrastructure service.”

Despite their differences, these definitions imply a focus of systems on “holism” (Midgley 2006; Hanson 2015), or the idea that individual policies do not operate in isolation. Whereas a great deal of research and evidence-based policymaking focuses on studying the effectiveness of a single policy in isolation – often by means of using impact evaluation to estimate an average treatment effect – in practice, each policy’s effectiveness depends on other policies and various features of the contextual environment (Hanson 2015). As De Savigny and Adam (2009, p. 19) write in their seminal discussion of health systems, “every intervention, from the simplest to the most complex, has an effect on the overall system, and the overall system has an effect on every intervention.” This emphasis on interconnection has made the study of complexity (e.g. Stacey 2010; Burns and Worsley 2015) a natural source of inspiration for those seeking to apply systems approaches to the study of development and public service delivery.

But despite the growing popularity of systems approaches, there remains significant ambiguity around their meaning, with no universally accepted definition or conceptual framework beyond their shared emphasis on holism, context, and complexity (Midgley 2006). Even those writing within the systems tradition have pointed out that the field has used “diverse” and “divergent” concepts and definitions, leading the field as a whole to be sometimes characterized as “ambiguous” and “amorphous” (Cabrera et al. 2008). This lack of a commonly agreed definition and theoretical basis has made a precise and concise response to the question “what is a systems approach to public service delivery, and how is it different to what already exists?” difficult to obtain.

We argue that instead of being viewed as a specific method, systems approaches are better understood as a diverse set of analytical responses to the idea that the first-order challenge of policy design and evaluation is to understand the multi-dimensional complementarities between a policy and other aspects of the policy’s context (e.g. other policies, institutions, social and economic context, cultural norms, etc.). By complementarities, we refer to the formal definition under which two variables – e.g. a variable capturing the presence of a particular policy and another variable capturing some aspect of the policy’s context – are considered complements when their joint effect on an outcome variable is greater than the sum of their individual effects on that variable. [Footnote 3] By multi-dimensional, we refer to the idea that these complementarities might not just be among two or three variables at a time (as impact evaluations often seek to estimate) but among so many variables that estimating them in a standard econometric framework often becomes intractable. While this definition is limited in its precision by the need to adequately encompass the enormous diversity of systems approaches we discuss in subsequent sections, it captures the theoretical core – the emphasis on understanding multi-dimensional complementarities – that ties them all together.
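One way to write this definition down, in notation introduced here purely for illustration: let $Y(p, c)$ denote the outcome when a policy is absent or present ($p \in \{0, 1\}$) and a contextual feature is absent or present ($c \in \{0, 1\}$). The policy and the contextual feature are complements when their joint effect exceeds the sum of their individual effects:

$$
Y(1,1) - Y(0,0) \;>\; \bigl[\,Y(1,0) - Y(0,0)\,\bigr] + \bigl[\,Y(0,1) - Y(0,0)\,\bigr],
$$

which rearranges to the condition that the interaction term is positive:

$$
Y(1,1) - Y(1,0) - Y(0,1) + Y(0,0) \;>\; 0 .
$$

The multi-dimensional case can be read off the same logic. Assuming $k$ binary variables (the policy plus $k - 1$ contextual features), a fully saturated specification contains

$$
2^{k} - k - 1
$$

interaction terms of order two or higher, so the number of complementarities to be estimated grows exponentially in $k$ while the available sample typically does not.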

Advocates of systems approaches often contrast this emphasis with the naïve use of impact evaluation to obtain an average treatment effect of a policy, which is then used to guide adoption decisions across a wide range of contexts and populations. Of course, the rapid growth in attention toward and research on issues of external validity and implementation within economics and political science (Deaton 2010; Pritchett and Sandefur 2015; Bold et al. 2018) makes this something of a “straw-man” characterization in many cases. In practice, both “impact evaluators” and “systems researchers” care about average treatment effects as well as about heterogeneity, mechanisms, and interactions. Indeed, it is noteworthy that the groups of researchers and practitioners with whom systems approaches have gained the most currency in the past two decades are (at least in the health and education sectors) those who most often find themselves working with, arguing against, or attempting to expand the boundaries of impact evaluators. But while easily exaggerated, the distinction does capture the different frame of mind with which systems researchers approach evidence-based policy, in which understanding complementarities among policies and their context is the primary focus of analysis, prioritized (in many cases) even over estimating the direct effect of a policy itself. Whereas a standard impact evaluation seeks primarily to understand the impact of a specific policy holding all else constant, a systems approach to the same policy seeks primarily to understand how the “all else” affects the policy’s impacts.
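A small numerical sketch may help show why an average treatment effect estimated in one setting can mislead adoption decisions elsewhere when the “all else” differs. All numbers, context labels, and function names below are made up for illustration; the only assumption carried over from the text is that a policy’s effect can depend on a feature of its context.

```python
from typing import Dict


def average_treatment_effect(effect_by_context: Dict[str, float],
                             share_by_context: Dict[str, float]) -> float:
    """Population-average effect: context-specific effects weighted by the
    share of the population found in each context."""
    return sum(effect_by_context[c] * share_by_context[c] for c in effect_by_context)


# Hypothetical effects of a policy on some outcome: it only works where a
# complementary monitoring system is in place.
EFFECTS: Dict[str, float] = {"with_monitoring": 0.4, "without_monitoring": 0.0}

if __name__ == "__main__":
    study_site = {"with_monitoring": 0.5, "without_monitoring": 0.5}
    new_site = {"with_monitoring": 0.1, "without_monitoring": 0.9}
    print("study site ATE:", round(average_treatment_effect(EFFECTS, study_site), 3))
    print("new site ATE:  ", round(average_treatment_effect(EFFECTS, new_site), 3))
```

In this invented case the same policy has an average effect of about 0.2 where half the population has the complementary feature and about 0.04 where only a tenth does, even though nothing about the policy itself has changed.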

Among studies that self-identify as focusing on systems, one can draw a conceptual distinction between studies that are system-focused in substance (due to their scale or topic) and those that are system-focused in approach (due to their methodological or theoretical emphasis on issues of context, complementarity, and contingency). This article focuses mainly on the latter category. Although in practice these categories overlap significantly and the distinction is a blurry one, it nonetheless helps avoid the excessive conceptual spread that could result from referring to every study on “the health system” (or the education or infrastructure systems) as a “systems approach.”

Before we proceed to draw distinctions among different types of systems approaches, it is worth noting two additional characterizations of systems approaches that are often made by systems researchers. First, systems approaches are sometimes viewed as being more question- or problem-driven than standard research approaches, with a focus on real-world issues and linkages to actual government policy choices (e.g. Gilson 2012; Mills 2012; Hanson 2015). While this characterization risks giving short shrift to the policy relevance of a great deal of research outside the systems tradition, there is also a natural linkage between embeddedness in an actual policy decision and a concern for understanding how a wide range of factors interlock, since policymakers must often deal with a breadth of challenges that researchers might choose to abstract away in the pursuit of parsimony. Second, some systems researchers emphasize that service delivery is not only complicated (in the sense of involving many moving parts) but also complex (in the sense of possessing dynamics that are non-linear and/or fundamentally unpredictable) (Sheikh et al. 2011; Snyder 2013). We do not include this aspect of complexity in our core definition presented above, since it is far from universally shared among systems approaches, but return to discuss this issue further in “Systems approaches and impact evaluation” below.

Macro-systems approaches

One branch of systems approaches responds to the challenge posed by the presence of multi-dimensional complementarities across policies and contextual factors by taking a step back to try to examine questions of policy effectiveness from the standpoint of the entire system. These macro-systems approaches are focused not on the impact of a specific policy in isolation, but on understanding how the entire system functions to deliver desired outcomes. Macro-systems approaches thus focus on understanding coherence and interconnectedness between different policies, structures, and processes. In doing so, they also tend to define boundaries of the system in question, although this is often a challenging task (Carey et al. 2015).

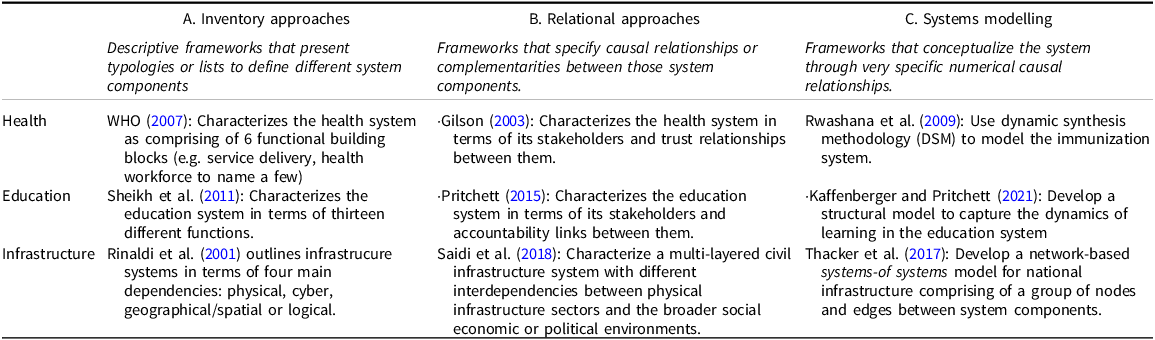

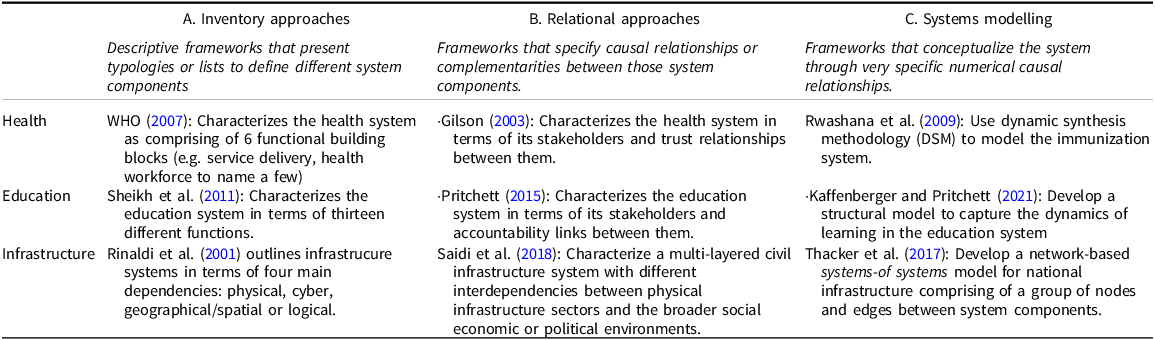

Our review of macro-systems approaches across the health, education, and infrastructure sectors highlights that these approaches lie on a spectrum of the specificity with which they define causal relationships between different system components. This includes approaches ranging from those that merely outline lists or typologies of various system components to those that tend to specify causal relationships between system components through specific numerical parameters. Along this spectrum, it is possible to distinguish three types of macro-systems approaches:

Inventory approaches, which are primarily descriptive and use typologies or lists to define a comprehensive universe of system components such as the types of stakeholders, functions, institutions, or processes within a system;

Relational approaches, which go a step further to posit broad causal relationships or complementarities between system features, based mainly on theory [Footnote 4]; and

Systems modeling, which conceptualizes the system through precise mathematical causal relationships between different system components.

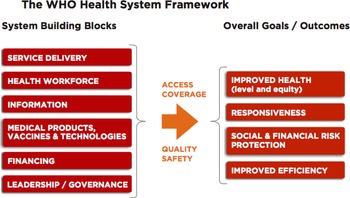

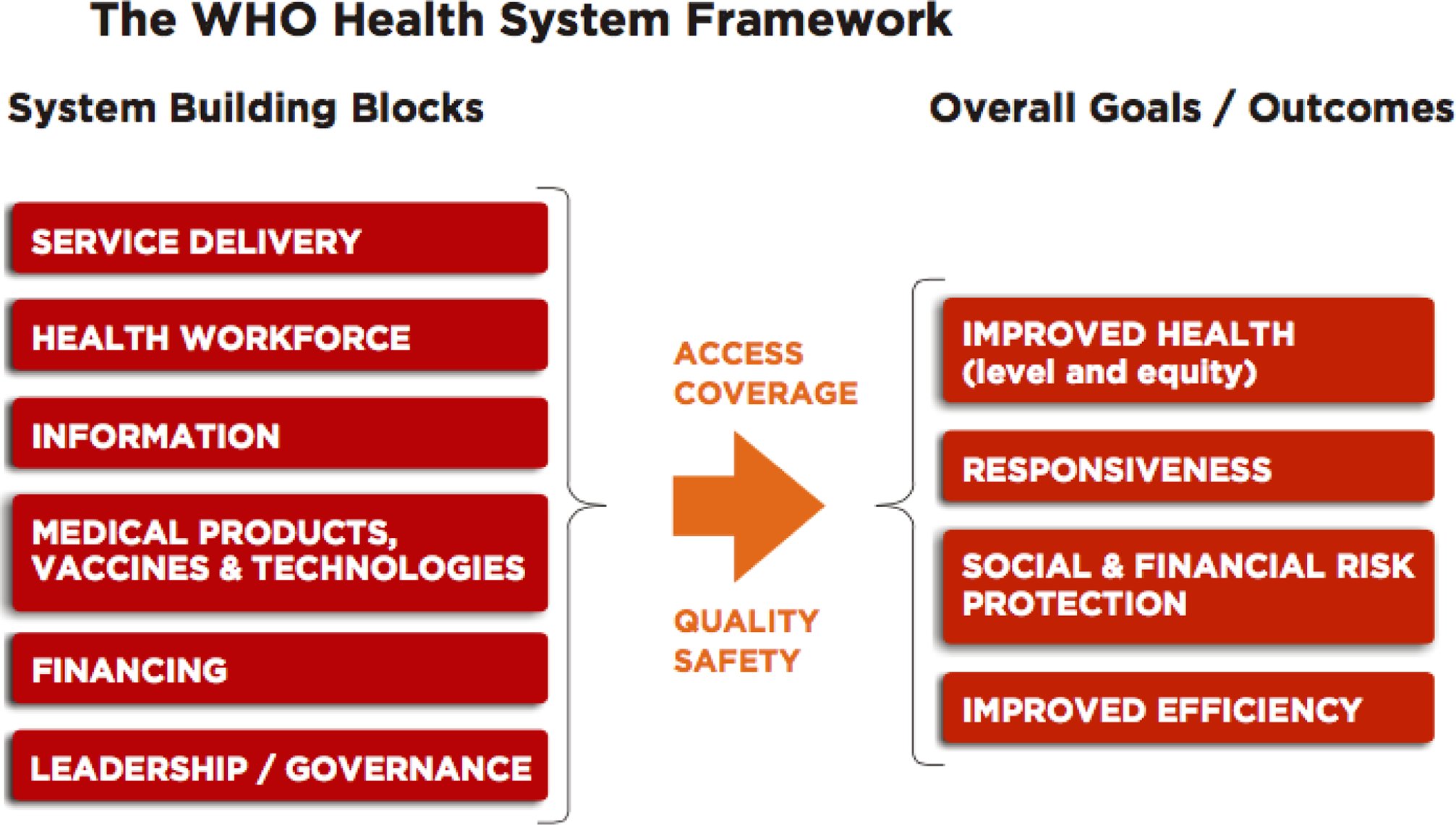

Inventory approaches list different components and/or typologies within a system with the aim of cataloging the whole range of factors that determine the outcomes or performance of a given system (usually defined sectorally). An example of such an approach is the seminal WHO health systems framework, which characterizes the health system as comprising six key functional building blocks – service delivery, health workforce, information, medical products (including both vaccines and technologies), financing, and leadership and governance – and links them to the broader health system goals (WHO 2007). As Fig. 1 shows, the strength of such inventory frameworks is their very wide scope in terms of identifying the full range of potential determinants and outcomes of a system, but this breadth is achieved by limiting the specificity of the causal relationships they posit. Similarly, the World Bank Systems Approach for Better Education Results (SABER) defines the education system in terms of thirteen different functions (e.g. education management information systems, school autonomy and accountability, and student assessment) with a link to improved student learning without specifying the relationship between these functions (Halsey and Demas 2013).

Figure 1. World Health Organization health system framework.

Source: (Reprinted with permission): De Savigny and Adam (2009).

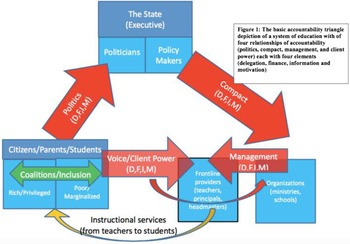

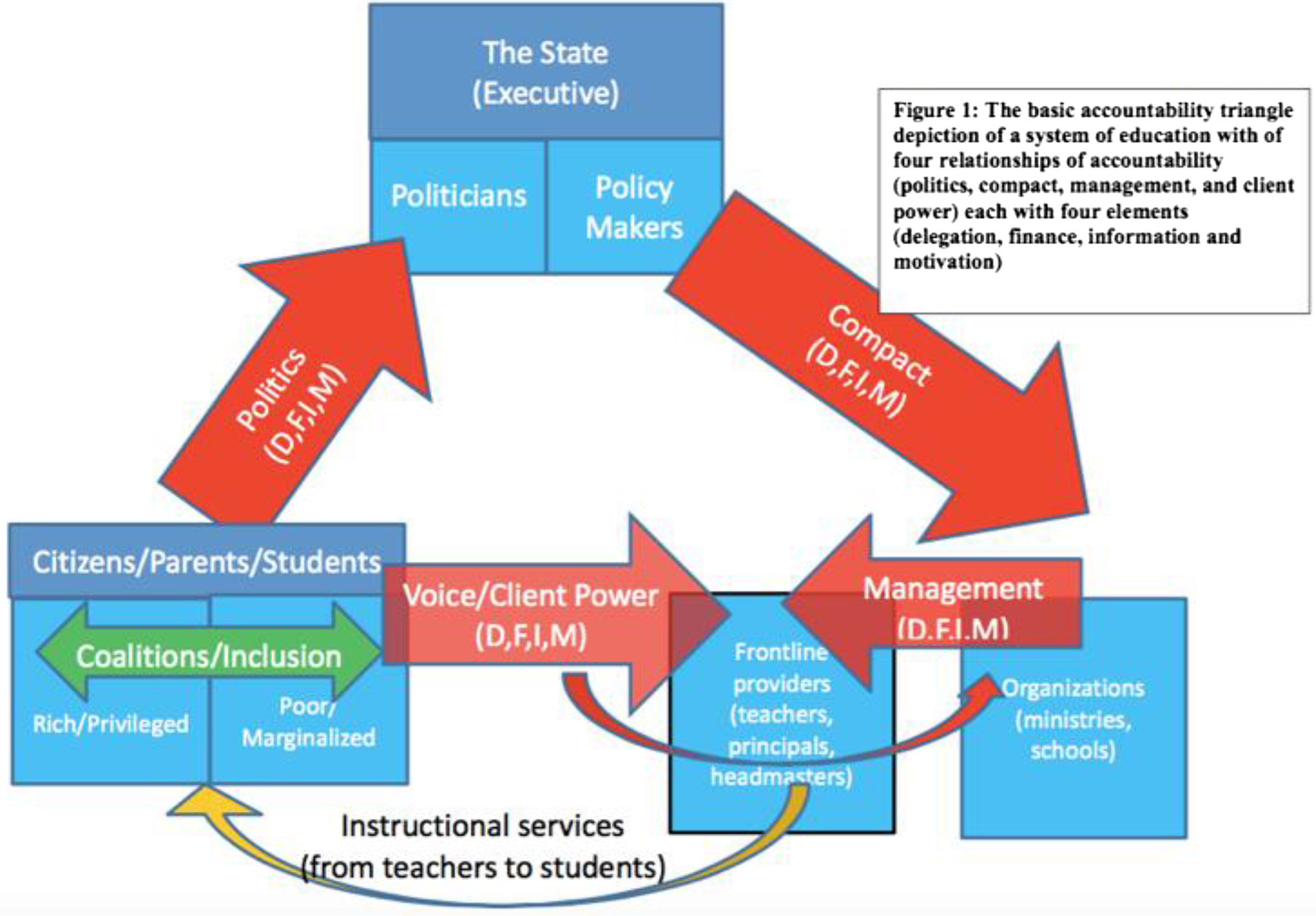

Like inventory approaches, relational macro-systems approaches list different system components, but go a step further in specifying the nature or direction of specific relationships or complementarities between them. For example, Gilson (2003) conceptualizes the health system as a set of trust relationships between patients, providers, and the wider institutions. This differs from an inventory approach in more narrowly specifying both the content and direction of relationships among actors, which makes it more analytical but also limits its scope. It also demonstrates how such frameworks may also consider the software (i.e. institutional environment, values, culture, and norms) in addition to the hardware (i.e. population, providers, and organizations) of a health system (Sheikh et al. 2011). In the education sector, Pritchett (2015) adopts a relational approach to characterizing the education system through accountability links between different actors such as the executive apparatus of the state, organizational providers of schooling (such as ministries and schools), frontline providers (such as head teachers and teachers), and citizens (such as parents and students). [Footnote 5] He argues that the system of education works when there is an adequate flow of accountability across the key actors in the system across four design elements: delegation, financing, information, and motivation (see Fig. 2). Similarly, in the infrastructure sector, Ottens et al. (2006) propose a high-level framework to characterize how technical elements in an infrastructure system may interact with human actors and social institutions to determine system performance. But while such relational approaches are more specific than inventory approaches in their definition of elements and causal relationships, they are still broad enough that their use is more as a conceptual framework for arraying factors and nesting hypotheses than as an operationalizable model of the system.

Figure 2. Education system framework.

Source: (Reprinted with permission): Pritchett (2015).

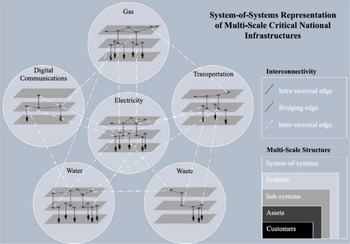

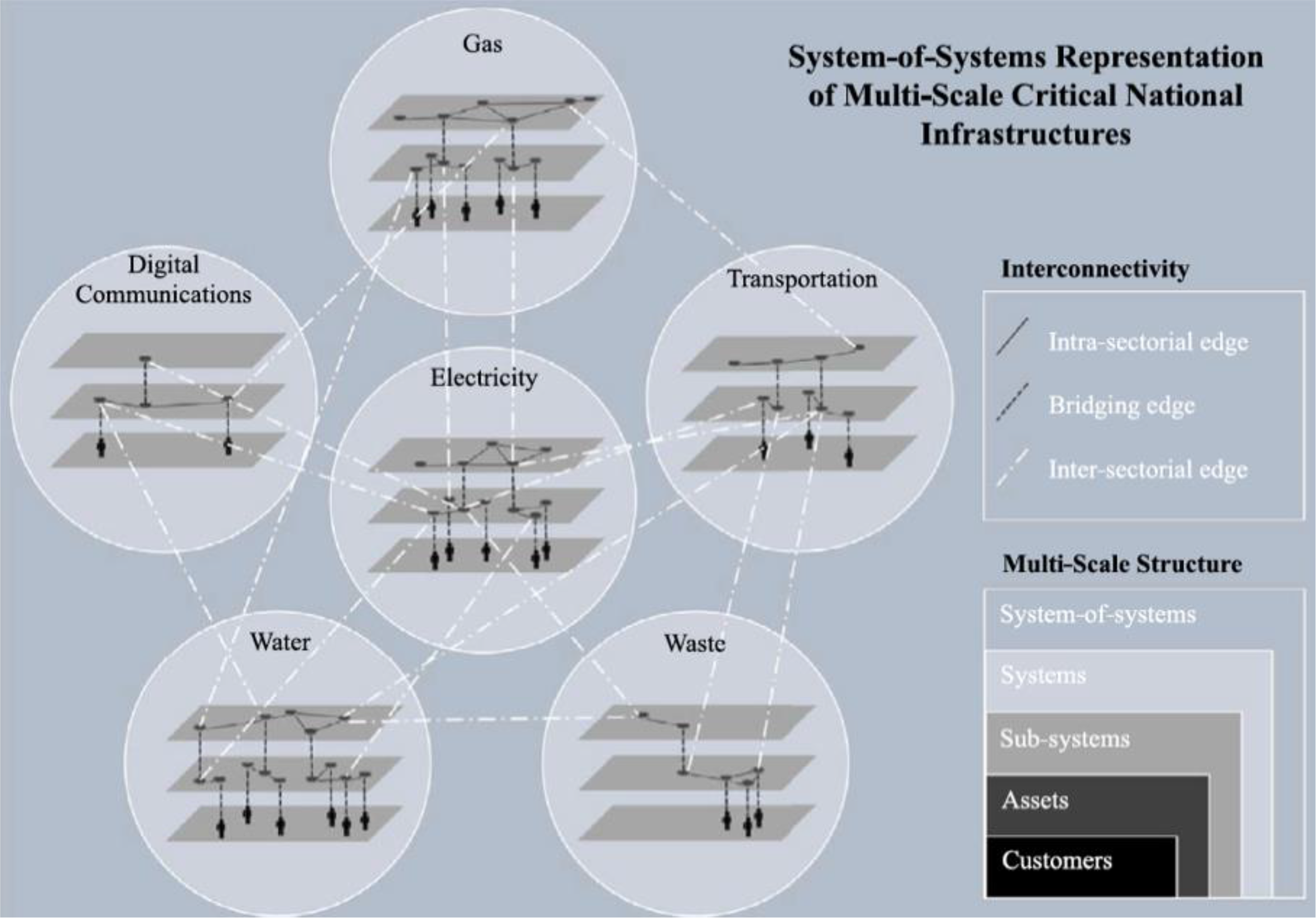

Systems modeling approaches take this next step of precisely specifying variables, causal relationships among these system components, and numerical parameters on these relationships. Such models typically combine theory with statistical methods and draw on a range of quantitative techniques such as system dynamics, structural equation modeling, and structural econometric modeling (e.g. Homer and Hirsch 2006; Reiss and Wolak 2007). [Footnote 6] Thacker et al. (2017), for example, develop a network-based systems-of-systems model for critical national infrastructures, where each type of infrastructure such as water or electricity is a sub-system comprising a group of nodes and edges with their specific flows (see Fig. 3). They use this model to perform a multi-scale disruption analysis and draw predictions on how failures in any individual sub-system can potentially lead to large disruptions. In the health sector, Homer and Hirsch (2006) develop a causal diagram of how chronic disease prevention works and then use system dynamics methodology to develop a computer-based model to test alternate policy scenarios that may affect the chronic disease population. In the education sector, Kaffenberger and Pritchett (2021) combine a structural model with parameter values from existing empirical literature to predict how learning outcomes would be affected under different policy scenarios such as expanding schooling to universal basic education, slowing the pace of curriculum, and increasing instructional quality.
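As a rough illustration of the kind of question a network-based systems model can pose, the sketch below propagates a failure through a small dependency graph. It is a toy example under stated assumptions, not the model of Thacker et al. (2017): the node names, the dependency links, and the rule that a node fails as soon as any node it depends on fails are all hypothetical.

```python
from collections import deque
from typing import Dict, List, Set

# Hypothetical dependencies: each key maps to the nodes that rely on it.
DEPENDENTS: Dict[str, List[str]] = {
    "power_plant": ["water_pump", "telecom_tower"],
    "water_pump": ["hospital"],
    "telecom_tower": ["hospital", "rail_signal"],
    "hospital": [],
    "rail_signal": [],
}


def cascade(initial_failure: str, dependents: Dict[str, List[str]]) -> Set[str]:
    """Return every node that fails, assuming (simplistically) that a node
    fails as soon as any one node it depends on has failed."""
    failed: Set[str] = {initial_failure}
    queue = deque([initial_failure])
    while queue:
        node = queue.popleft()
        for downstream in dependents.get(node, []):
            if downstream not in failed:
                failed.add(downstream)
                queue.append(downstream)
    return failed


if __name__ == "__main__":
    # Compare how far a disruption spreads from each possible starting point.
    for start in DEPENDENTS:
        print(start, "->", sorted(cascade(start, DEPENDENTS)))
```

Even in this stylized form, the exercise shows the basic payoff of specifying relationships precisely: the same local failure has very different system-wide consequences depending on where in the network it occurs.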

Figure 3. Infrastructure system representation with six critical national infrastructures.

Source: (Reprinted with permission): Thacker et al. (2017).

The three macro-systems approaches outlined above can have different types of uses and benefits depending on the question of interest. For example, systems researchers often use frameworks developed through inventory approaches to develop diagnostic tools to understand strengths and weaknesses of systems, such as the World Bank’s use of its SABER framework, which has been implemented in more than 100 countries to identify potential constraints to system effectiveness (World Bank 2014). Relational frameworks in turn can be used to array key relationships between system actors, which may be useful for generating important insights for policy design or generating more precise hypotheses for empirical research. Finally, systems modeling approaches are one way of making complex systems analytically tractable by narrowing down on a set of key causal relationships within a system to generate useful predictions and insights about a system (Berlow 2010). Although systems modeling has been used in the health and education sectors to generate useful predictions, such models have been used more extensively in infrastructure systems research, possibly because the variables are more quantitative in nature and relatively easier to model in comparison to more human or intangible contextual features in health or education. While conceptually distinct, in practice, these three types of macro-systems approaches can overlap, and not every framework is easily classifiable within a single category (Table 1).

Table 1. Summary of macro-systems approaches with selected examples

Source: Authors’ synthesis.

Micro-systems approaches

While macro-systems approaches offer big-picture frameworks to understand coherence between many system components and policies, micro-systems approaches focus on the effectiveness of a specific policy – just like impact evaluations. However, the central presumption of micro-systems approaches is that policies cannot be viewed in isolation, but rather need to be designed, implemented, evaluated, and scaled taking the wider context and complementarities within the system into account (Travis et al. 2004; De Savigny and Adam 2009; Snyder 2013; Pritchett 2015), and so questions and methods mainly revolve around these issues rather than average treatment effects.

Across the health, education, and infrastructure sectors, a diverse range of analytical approaches fits our description of systems approaches. Each of these approaches is likely to be familiar to readers in some disciplines and unfamiliar to others. They include approaches that aim to help evaluators better understand the roles of mechanisms and contextual factors in producing policy impact, such as realist evaluation (Pawson and Tilley 1997) and theory-driven evaluation (Coryn et al. 2011), as well as a range of qualitative or ethnographic (e.g. George 2009; Bano and Oberoi 2020) and mixed method approaches (e.g. Mackenzie et al. 2009; Tuominen et al. 2014) more broadly. They also encompass fields such as implementation science (Rubenstein and Pugh 2006), some types of meta-analysis and systematic review (e.g. Greenhalgh et al. 2016; Leviton 2017; Masset 2019), and adaptive approaches to policy design and evaluation (e.g. Andrews et al. 2017). These micro-systems approaches can focus on a variety of levels of analysis, from individuals to organizations to policy networks, but are united by their analytical focus on a single policy at a time rather than on the entire system (as in macro-systems approaches). We briefly summarize each of these methods or approaches in this section, before the next section develops a framework to link them back to standard impact evaluation and help prospective systems researchers select among them.

Micro-systems approaches’ emphasis on heterogeneity is perhaps best captured by the mantra of the “realist” approach to evaluation, which argues that the purpose of an evaluation should be to identify “what works in which circumstances and for whom?” rather than merely answering the question “does it work?” (Pawson and Tilley 1997). More specifically, instead of looking at simple cause-and-effect relationships, realist research typically aims to develop middle-range theories through developing “context-mechanism-outcome configurations” in which the role of policy context is integral to developing an understanding of how the policy works (Pawson and Tilley 1997; Greenhalgh et al. 2016). For example, Kwamie et al. (2014) use a realist evaluation to evaluate the impact of the Leadership Development Programme delivered to district hospitals in Ghana. Focusing on a district hospital in Accra, they used a range of qualitative data sources to develop causal loop diagrams to explain interactions between contexts, mechanisms, and outcomes. They found that while the training produced some positive short-term outcomes, it was not institutionalized and embedded within the district processes. They argue that this was primarily due to the structure of hierarchical authority in the department: the training was seen as a project coming from the top, which reduced initiative on the part of the district managers to institutionalize it.

A related approach is theory-driven evaluation, in which the focus is not just on whether an intervention works but also on its mediating mechanisms – the “why” of impact (Coryn et al. Reference Coryn, Noakes, Westine and Schröter2011). Theory-driven evaluations take as their starting point the underlying theory of how the policy is intended to achieve its desired outcomes (often expressed in the form of a theory of change diagram) and seek to evaluate each step of this causal process. As with realist approaches, the role of context is critical for theory-driven evaluations, as it is these mechanism-context complementarities that drive heterogeneity of impact across contexts and target populations, and hence the external validity and real-world effectiveness of policies or interventions. Theory-based and realist evaluations both tend to rely on qualitative methods, either alone or as a supplement to a quantitative impact evaluation (i.e. mixed methods), as limitations of sample size, counterfactual availability, and measurement often make it infeasible to document multiple potential mechanisms quantitatively at the desired levels of nuance and rigor.Footnote 7

Another form of qualitative method widely used by systems researchers is ethnography and participant observation. These are used mainly for the diagnosis of policy problems, refining research hypotheses, or designing new policy interventions, rather than evaluating policy impact ex post. For example, George (2009) conducts an ethnographic analysis to examine how formal rules and hierarchies affect informal norms, processes, and power relations in the Indian health system in Koppal district, Karnataka. The study shows that the two key functions of accountability in Koppal’s health system – supervision and disciplinary action – are rarely implemented uniformly, as these are negotiated by frontline staff in various ways depending on their informal relationships. In the education sector, Bano and Oberoi (2020) use ethnographic methods to understand how innovations are adopted in the context of an Indian non-governmental organization that introduced a Teaching at the Right Level intervention, and tease out lessons for how innovations can be scaled and adopted in state systems. In this sense, ethnographic research is a more structured and rigorous version of the informal discussions or anecdotal data that policymakers and evaluators often draw upon in making policy or evaluation decisions, and can be integrated into these processes accordingly (alone or alongside some form of impact evaluation).

Systems research often has a specific focus on the implementation, uptake, and scale-up of policy (Hanson Reference Hanson2015). The discipline of implementation science in the health sector, for example, is specifically targeted toward understanding such issues (Rubenstein and Pugh Reference Rubenstein and Pugh2006). Research in implementation science is usually less concerned with the question of what is effective (where there is strong prior evidence of an intervention’s efficacy in ideal conditions) and is more concerned with how to implement it effectively. Systems researchers who study implementation cater to a set of concerns such as methods for introducing and scaling up new practices, behavior change among practitioners, and the use and effects of patient and implementer participation in improving compliance. Greenhalgh et al. (Reference Greenhalgh, Wherton, Papoutsi, Lynch, Hughes, A’Court and Shaw2017), for example, combine qualitative interviews, ethnographic research, and systematic review to study the implementation of technological innovations in health. They develop the non-adoption, abandonment, scale-up, spread, and sustainability framework to both theorize and evaluate the implementation of health care technologies. Like realist and theory-based evaluation, implementation science research often relies heavily (though not exclusively) on qualitative methods, although these can also be combined with experimental or observational quantitative data.

While the micro-systems approaches described above are by definition used to analyze the effectiveness of a single policy, some systems researchers have also adapted evidence aggregation methods like systematic reviews and meta-analysis to their interests. While these methods are typically used to summarize impacts or identify an average treatment effect of an intervention by summarizing studies across several contexts, systems researchers focus on using these methods to identify important intervening mechanisms across contexts. For example, Leviton (2017) argues that systematic reviews and meta-analyses can offer bodies of knowledge that support better understanding of external validity by identifying features of program theory that are consistent across contexts. To identify these systematically, she proposes several techniques to be used in combination with meta-analyses, such as a more thorough description of interventions and their contexts, nuanced theories behind the interventions, and consultation with practitioners. While many of these applications rely on integrating qualitative information into the evidence aggregation process, other researchers use these methods in their traditional quantitative formats but focus specifically on systems-relevant questions of mechanisms, contextual interactions, and heterogeneity. For example, Masset (2019) calculates prediction intervals for various meta-analyses of education interventions and finds that interventions’ outcomes are highly heterogeneous and unpredictable across contexts, even for simple interventions like merit-based scholarships. Used in this way, there is methodological overlap between meta-analysis in the systems tradition and how it is commonly used in mainstream impact evaluation. This illustrates one of many ways in which the boundaries between “systems” and “non-systems” research are porous, which increases the possibilities for productive interchange among research approaches but also creates terminological and conceptual confusion that inhibits it.
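To make the idea of a prediction interval concrete, the following is a minimal Python sketch of one common textbook formulation: a random-effects meta-analysis using the DerSimonian-Laird estimate of between-study variance, with the interval computed as the pooled mean plus or minus a t critical value times the square root of the between-study variance plus the squared standard error of the pooled mean. It is an illustration under stated assumptions and is not necessarily the procedure used in the studies cited above. The function name, the input numbers, and the choice to pass the t critical value (k - 2 degrees of freedom) as an argument are all hypothetical.

```python
import math


def prediction_interval(effects, variances, t_crit):
    """Random-effects pooled mean, between-study variance, and prediction interval.

    effects   : study-level effect estimates (hypothetical inputs)
    variances : their sampling variances
    t_crit    : two-sided t critical value with k - 2 degrees of freedom,
                supplied by the caller (e.g. from a statistical table)
    """
    k = len(effects)
    if k < 3 or k != len(variances):
        raise ValueError("need at least three studies with matching variances")

    # Fixed-effect (inverse-variance) weights and pooled mean.
    w = [1.0 / v for v in variances]
    sum_w = sum(w)
    fixed_mean = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w

    # DerSimonian-Laird estimate of between-study variance (tau^2).
    q = sum(wi * (yi - fixed_mean) ** 2 for wi, yi in zip(w, effects))
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - (k - 1)) / c)

    # Random-effects weights, pooled mean, and its squared standard error.
    w_star = [1.0 / (v + tau2) for v in variances]
    mu = sum(ws * yi for ws, yi in zip(w_star, effects)) / sum(w_star)
    se2 = 1.0 / sum(w_star)

    # Interval for the effect expected in a new context.
    half_width = t_crit * math.sqrt(tau2 + se2)
    return mu, tau2, (mu - half_width, mu + half_width)


# Hypothetical example: three studies with very different effects.
mu, tau2, (low, high) = prediction_interval(
    effects=[0.0, 0.5, 1.0], variances=[0.01, 0.01, 0.01], t_crit=12.706
)
print(f"pooled mean={mu:.2f}, tau^2={tau2:.2f}, interval=({low:.2f}, {high:.2f})")
```

When between-study variance is large relative to sampling variance, the interval is wide even if the pooled mean is precisely estimated, which is the sense in which an intervention can look effective on average yet be unpredictable in any one new context.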

Stakeholder mapping or analysis is another method used by systems researchers to understand issues of either policy implementation or policy design. For example, Sheikh and Porter (2010) conducted a stakeholder analysis to identify key gaps in policy implementation. Using data from in-depth interviews with various stakeholders from nine hospitals (selected by principles of maximum variation) across five states in India, they highlight bottlenecks in human immunodeficiency virus policy implementation. Like ethnography, stakeholder mapping is an example of a micro-systems approach (because it focuses on the effectiveness of a single policy), but one that asks different questions about that policy’s effectiveness than standard impact evaluations do.

A final set of micro-systems approaches is grounded in the reality that many questions of policy design and evaluation are situated in complex settings, where policy-context complementarities are so numerous and specific to the contextual setting that the effectiveness of a policy is impossible to predict, for all intents and purposes. Systems researchers argue that for such complex systems, which have many “unknown unknowns” with few clear cause-and-effect relationships, various negative and positive feedback loops, and emergent behaviors (Bertalanffy Reference Bertalanffy1971; Snowden and Boone Reference Snowden and Boone2007), there is a need for a different set of analytical approaches to policy design and evaluation (e.g. Snyder Reference Snyder2013). This perspective eschews not only the idea of “best practice” policies but also sometimes the idea of basing adoption decisions on policies’ effectiveness in other contexts because policy dynamics are viewed as so highly context-specific.

A core idea in complex systems theory is that the processes of policy design and implementation should involve an ongoing process of iteration with feedback from key stakeholders and decision-makers in the system. For example, Andrews et al. (Reference Andrews, Pritchett and Woolcock2013) argue that designing and implementing effective policies for governments in complex settings require locally driven problem-solving and experimentation, and propose an approach called problem-driven iterative adaptation that emphasizes local problem definition, design, and experimentation. In a different vein, Tsofa et al.’s (Reference Tsofa, Molyneux, Gilson and Goodman2017) “learning sites” approach envisions a long-term research collaboration with a district hospital in which researchers and health practitioners work together over time to uncover and address thorny governance challenges. While the learning site serves to host a series of narrower research studies, the most important elements include formal reflective sessions being regularly held among researchers, between researchers and practitioners, and across learning sites to study complex pathways to change. Such approaches are also closely linked to the living lab methodology, which relies on innovation, experimentation, and participation for diagnosing problems and designing solutions for more effective governance (Dekker et al. 2019).

The types of micro-systems approaches discussed above and presented in Table 2 are neither mutually exclusive nor collectively exhaustive of all possible micro-systems approaches but illustrate the breadth and diversity of such approaches. Table 2 also illustrates the variation across sectors in the range of approaches that are commonly used. The health sector has the broadest coverage across different types of methods. The education sector also shows fairly broad coverage across methods, while also demonstrating growing attention toward systems approaches in response to greater concerns of external validity following the surge of education-related impact evaluations (especially in international development) over the last decade. The use of micro-systems approaches in infrastructure is comparatively limited. This is possibly because infrastructure projects have high up-front costs that demand more ex ante cost-benefit analysis and planning (often through macro-systems approaches) rather than ex post evaluations of the impacts of specific projects through micro-systems approaches.

Table 2. Summary of micro-systems approaches

Source: Authors’ synthesis.

Systems approaches and impact evaluation

The review of systems approaches in the preceding two sections illustrates the sheer diversity of topics, questions, theories, and methods that can fall within the broad label of systems approaches. It also shows that while systems approaches are sometimes rhetorically positioned in opposition to standard impact evaluation approaches, many of the concerns motivating systems researchers (such as attention to mechanisms, heterogeneity, external validity, implementation and scale-up, and the use of qualitative data) can be, and increasingly are being, addressed within the impact evaluation community. At the same time, it is also generally true that systems approaches differ substantially in their prioritization of questions and hence the types of evidence in which they are most interested, so these differences are not purely semantic.

How, then, should a researcher or policymaker think about whether they need to adopt a systems approach to creating and interpreting evidence? And if so, which type of systems approach might be most relevant? In this section, we offer a brief conceptual synthesis and stylized framework to guide thinking on these questions.

For macro-systems approaches, the relationship to standard impact evaluation methods is fairly clear. Macro-systems approaches array the broad range of policies and outcomes relevant to understanding the performance of a given sector, and impact evaluations examine the effect of specific policies on specific outcomes within this framework. Macro-systems frameworks can thus add value to impact evaluation-led approaches to studying policy effectiveness by providing a framework with which to cumulate knowledge, suggesting important variables for impact evaluations to focus on (and potential complementarities among them), and highlighting gaps in an evidence base. Being more explicit in couching impact evaluations in some kind of broader macro-system framework – whether inventory, relational, or systems modeling – could thus enhance the evidentiary value of systems approaches, as indeed it has begun to do in the systems literature in the health, education, and infrastructure sectors (e.g. Silberstein and Spivack Reference Silberstein and Spivack2023).

For micro-systems approaches, however, the relationship to (and distinction from) standard impact evaluation methods is more blurry. Among other reasons, this is because our definition of systems approaches as being concerned with multi-dimensional complementarities does not give much guidance as to which types of systems questions and methods might be related to different types of potential complementarities.

We therefore propose a simple framework that uses a policy’s consistency of implementability and consistency of efficacy to guide choices about the appropriateness of different evidence-creation approaches.Footnote 8 By consistency of implementability, we mean the extent to which a given policy can be delivered or implemented correctly (i.e. the desired service delivery outputs can be produced) across a wide range of contexts. Policies whose effective implementation depends on important and numerous complementarities with other policies or aspects of context will tend to have lower consistency of implementability, since these complementary factors will be present in some contexts but not others, whereas policies for which these complementarities are relatively fewer or less demanding can be implemented more consistently across a wide range of contexts. By consistency of efficacy, we mean the extent to which delivery of a given set of policy outputs results in the same set of outcomes in society across a wide range of contexts. As with implementability, policies whose mechanisms rely on many important complementarities with other policies or aspects of context will tend to have lower consistency of efficacy across contexts, and vice versa.

Putting these two dimensions together (Fig. 4) yields a set of distinctions among four different stylized types of evidence problems, each of which can be addressed most effectively using different methods for creating and interpreting evidence. In interpreting this diagram, several important caveats are in order. First, this framework is intended to help readers organize the extraordinarily diverse range of micro-systems approaches identified in our review and summarized in our preceding sections and to identify when they might want to adopt a systems approach and which type might be most useful. However, it is not a comprehensive taxonomy of all micro-systems approaches, nor do all methods reviewed fit neatly into one category. Second, while we present four stylized “types” of evidence problems for simplicity, the underlying dimensions are continuous spectrums. Finally, complementarities exist and context matters for all policies to at least some extent; the distinctions presented here are intended to be relative in nature, not absolute. With these caveats in mind, we discuss each of these types in turn, highlighting their relationship both to different micro-systems methods and to standard impact evaluation approaches.

Figure 4. Synthesizing micro-systems approaches.

Source: Authors’ synthesis.

The top-left quadrant of Fig. 4 corresponds to types of policies that are consistently efficacious across contexts, but which are challenging to implement effectively. We refer to these problems as “implementation science” problems. Handwashing in hospitals is an example of a type of policy that falls in this quadrant, as it is simple and universally effective in reducing hospital-acquired infections but also extremely difficult to get health workers to do routinely. Increasing rates of childhood immunization is another example, as well-established vaccinations are consistently efficacious but many children fail to receive immunizations every year. If a policymaker were considering adopting a policy of promoting vaccinations of children, she ought to be less interested in reading existing evidence (or creating new evidence through research) on the efficacy of the vaccines themselves than in evidence about how to increase vaccination rates.

As discussed in the previous section, implementation science researchers have used a range of methods – qualitative, quantitative, and mixed – and theoretical perspectives (e.g. realist evaluation) to address implementation-type problems. Outside of the systems tradition, this concern with the nitty-gritty details of how to better deliver policies and the consequences of minor variations in implementation for take-up is perhaps most closely paralleled by Duflo's (Reference Duflo2017) vision of economists (and presumably evidence-creators in other disciplines) as “plumbers” helping governments to improve delivery by varying and evaluating program details. So while implementation is clearly a core focus of many types of systems approaches, this is not to say that researchers who do not self-identify as systems researchers are uninterested in it. That said, systems researchers perhaps tend to be more willing to focus their attention exclusively on implementation issues, as distinct from the policy’s impact on final outcomes – a choice that is justifiable for the type of evidence problems posed by policies that share the features of consistent efficacy but inconsistent implementability.

This contrasts with the scenario in the bottom-right quadrant, where a policy is simple to implement but has highly variable efficacy across contexts. This is the classic external validity question: will a policy or intervention that works in one context work in a different context?Footnote 9 An example of such a problem is merit-based scholarships for education, which are relatively easy to implement in most contexts but can have high variance in effectiveness across contexts (Masset Reference Masset2019). In terms of methodological responses to such problems, realist and theory-driven evaluations are commonly used by systems researchers to understand these issues of heterogeneous effects and fit with context. Meta-analysis and systematic reviews are also commonly used within the systems tradition to aggregate evidence across studies, but typically with a focus on identifying how context influences policy efficacy more than on estimating an overall average treatment effect, often by supplementing quantitative impact estimates with qualitative data and attention to mechanisms and context (e.g. Greenhalgh et al. Reference Greenhalgh, Macfarlane, Steed and Walton2016; Leviton 2017). Of course, impact evaluation researchers outside the systems tradition are also increasingly recognizing these issues as important, so once again the difference is largely one of prioritization of questions and of methodological pluralism in addressing them.

Policies that are both inconsistently implementable and inconsistently efficacious fall into the category of complex systems. Such systems exhibit features that arise from important and numerous complementarities with other policies and with features of the context, such as emergent behaviors that are not explained by those interactions in isolation; non-linearities; and system self-organization whilst operating across multiple levels and time periods (Sabelli 2006). Examples of complex system-type problems in public service delivery include many organization- and sector-level reform efforts, which by their nature affect numerous actors (some of whom are organized and strategic), and depend on the existing state of the system and presence of other related policy interventions. Evidence creation and use take on very different forms for these types of problems, since knowing that a particular policy worked in another context is unlikely to be informative about its effect in a new context.Footnote 10 Evidence generation and learning therefore have to take on very local forms, such as the adaptive experimentation methods (e.g. Andrews et al. 2017) and learning sites and living labs (e.g. Sabel and Zeitlin 2012; Tsofa et al. 2017; Dekker et al. 2019) discussed in “Micro-systems approaches” above.

Finally, some policies may fall in the bottom-left quadrant of Fig. 4 (consistent implementability, consistent efficacy). Such policies are actually relatively amenable to straightforward evaluate-and-transport or evaluate-and-scale-up forms of evidence-based policy, so delving deeply into the complexities of context and broader systems may be unnecessary – or at least not a priority for scarce attention and resources. While context matters for the implementability and efficacy of all policies to some degree, policies such as cash transfers have been shown to be consistently effective in achieving poverty reduction outcomes across a wide range of contexts and are relatively simple to implement. As Bates and Glennerster (Reference Bates and Glennerster2017) note, it is a fallacy to think that all interventions must be re-evaluated in every context in which they are tried, and for policies in this bottom-left quadrant, systems approaches might not be necessary at all. Just as there are complex system-type policy problems for which evidence is not generalizable and nearly all learning must be local, there are also “what works”-type policy problems for which evidence is highly generalizable. The challenge for selecting a method of evidence generation and interpretation, then, is being able to predict ex ante which type of policy problem one is facing.
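The four stylized types can be summarized as a simple lookup on the two dimensions. The Python sketch below is purely illustrative: the 0 to 1 scores, the 0.5 cut-off, the class and function names, and the example values are all hypothetical assumptions introduced here, and the underlying dimensions are continuous and relative rather than formally measurable in this way.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PolicyProfile:
    """Hypothetical scores in [0, 1]; higher means more consistent across contexts."""
    name: str
    implementability: float
    efficacy: float


def evidence_problem_type(policy: PolicyProfile, threshold: float = 0.5) -> str:
    """Map a policy to one of the four stylized evidence problems.

    The threshold is an arbitrary illustrative cut-off, not an empirical one.
    """
    consistent_impl = policy.implementability >= threshold
    consistent_eff = policy.efficacy >= threshold
    if consistent_impl and consistent_eff:
        return "what works"               # evaluate-and-transport or scale-up
    if consistent_eff:
        return "implementation science"   # efficacious but hard to deliver
    if consistent_impl:
        return "external validity"        # deliverable but variable efficacy
    return "complex system"               # learning must be largely local


# Example policies from the text, with made-up scores for illustration only.
examples = [
    PolicyProfile("hospital handwashing", implementability=0.2, efficacy=0.9),
    PolicyProfile("merit-based scholarships", implementability=0.8, efficacy=0.3),
    PolicyProfile("cash transfers", implementability=0.8, efficacy=0.8),
    PolicyProfile("sector-level reform", implementability=0.2, efficacy=0.2),
]
for p in examples:
    print(f"{p.name}: {evidence_problem_type(p)}")
```

A hard cut-off is used only to keep the sketch short. In practice a policy's position would be a matter of judgment informed by theory and by evidence aggregated across contexts.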

How might a researcher or policymaker actually go about deciding which quadrant of this framework they are in when deciding what type of evidence they need in order to make decisions about the adoption and design of a new policy? Several approaches are possible, although each faces its own challenges. First, one might approach the question of consistency of implementability and efficacy empirically, by aggregating evidence across multiple contexts and/or target groups through systematic review and meta-analysis. Indeed, multi-intervention meta-analyses such as Vivalt (2020) demonstrate that some interventions exhibit much higher heterogeneity of impact across contexts than others. Unfortunately, such meta-analyses do not routinely distinguish between implementation and efficacy as causes for this heterogeneity, although, in principle, they could – particularly when quantitative methods are supplemented with qualitative data in trying to aggregate evidence about interventions’ full causal chains (e.g. Kneale et al. 2018). Second, one could approach the question theoretically, by developing priors about the complexity of each policy’s theory of change (i.e. intended mechanism) and its scope for complementarities with other policies or aspects of context in terms of implementation and efficacy. Finally, Williams (2020) proposes a methodology of mechanism mapping that combines theory-based and empirics-based approaches to developing predictions about how a policy’s mechanism is likely to interact with its context, and thus how heterogeneous its implementability and efficacy are likely to be. All of these approaches have obvious limitations – limited evidence availability, and the difficulty of foreseeing all potential complementarities and their consequences – and in practice would likely need to be combined. Fig. 4 is thus likely to be of more use as a conceptual framework or heuristic device than as a device for formally classifying different types of policies. But it may nonetheless help researchers and practitioners structure their thinking about why different types of policies might present different needs in terms of evidence generation.

Conclusion

This article has synthesized a wide range of literature that falls under the broad label of systems approaches to public service delivery, drawing key distinctions within it and linking it to standard impact evaluation-led approaches to evidence-based policymaking. Based on our review of studies in health, education, and infrastructure, we have argued that systems approaches are united in their focus on multi-dimensional complementarities between policies and aspects of context as the key challenge for creating and using evidence. This results in a different prioritization of types of questions and greater methodological pluralism, and also gives rise to a range of different types of systems approaches, each suited to different situations and questions.

Our systems-perspective synthesis in some ways echoes, but goes beyond, discipline-specific attempts to grapple with these issues. It also illustrates ways in which the relevance of systems approaches extends beyond being a set of considerations about how best to undertake policy evaluations. In economics, for instance, issues of complementarity among management structures and processes are perhaps the central focus of the field of organizational economics (Brynjolfsson and Milgrom 2013) as well as common focuses (at least along one or two dimensions) of impact evaluations (Bandiera et al. 2010; Andrabi et al. 2020). Indeed, Besley et al.’s recent (2022) review of the literature on bureaucracy and development (which also calls for a systems perspective) highlights the potential for this literature to draw increasingly on organizational economics and industrial organization. Similarly, understanding the impact of policies in general rather than partial equilibrium has long been valued (Acemoglu 2010), and issues of external validity, implementation, and policy scale-up are now at the forefront of impact evaluation (e.g. Duflo 2017; Vivalt 2020). In comparative politics, scope conditions for theories are frequently discussed, and mixed methods are frequently used, to understand mechanisms and heterogeneity (e.g. Falleti and Lynch 2009). And in public administration, questions around how to incorporate the complexity of policy implementation and governance networks in research methods (Klijn 2008), and new governance approaches to address policy design in the face of such complexity, are being increasingly discussed (OECD 2017).

Among practitioners, there is growing recognition that policies are designed and implemented in systems where different layers of administration, personnel, and institutions are intertwined. This has resulted in the production of various guides and frameworks on how policymakers can use tools from systems approaches to design and implement policy. For example, Woodhill and Millican (Reference Woodhill and Millican2023) offer a framework for how the UK Foreign, Commonwealth, and Development Office and its partners can employ systems thinking in their working practices and business processes. Similarly, OECD (2017) offers a discussion on how systems approaches can be used by governments to design policy and (among several other examples) describes how the Prime Minister’s Office in Finland developed a new framework for experimental policy using the tools from systems approaches. At both the macro and micro levels, systems approaches are increasingly being adopted by practitioners to navigate many of the same challenges of complexity, context, and uncertainty with which academic researchers are also grappling.

These convergences of interest, theory, and method present opportunities for cross-sectoral and cross-disciplinary learning. And while these overlaps of questions and methods do serve as a warning against strawman characterizations of other disciplines, so too can they serve to conceal real differences in the specifics of choosing and combining analytical methods, in how theoretical frameworks are constructed and tested, and – most of all – in the extent to which questions about context and complementarity are prioritized when thinking about policy effectiveness. It is our hope that this article provides readers from a range of backgrounds with a better understanding of the current state of literature on systems approaches, ideas for new avenues of connection with their work, and a common conceptual foundation on which to base dialog with researchers from different traditions who share the goal of using evidence to improve public service delivery.

Supplementary material

To view supplementary material for this article, please visit https://doi.org/10.1017/S0143814X23000405.

Data availability statement

This study does not employ statistical methods and no replication materials are available.

Acknowledgements

We are grateful for funding from the Bill and Melinda Gates Foundation and helpful conversations and comments from Seye Abimbola, Dan Berliner, Lucy Gilson, Guy Grossman, Kara Hanson, Rachel Hinton, Dan Honig, Adnan Khan, Julien Labonne, Lant Pritchett, Imran Rasul, Joachim Wehner, and workshop participants at the Blavatnik School of Government and 2018 Global Symposium on Health Systems Research. The authors are responsible for any remaining faults.

Competing interests

None.