1. Introduction

Less commonly recognized as part of language is the gestural channel, including manual gestures, facial expression, and bodily posture. These are, however, subject to conventionalization and coordination with other linguistic processes. (Langacker, 2008, p. 462).

Echoing Langacker’s (2008) observation, recent developments in Construction Grammar (CxG), together with the creation of multimodal corpora, have led researchers to consider the multimodal nature of language (Zima & Bergs, 2017). Consequently, a number of case studies (e.g., Andrén, 2014; Mittelberg, 2017; Zima, 2017) have explored the possibility that language users have entrenched multimodal constructions, that is, ‘constructions with verbal and gesture FORM elements that express a joint MEANING’ (Hoffmann, 2021, p. 81, his emphasis). The nature of such multimodal constructions, however, has been subject to debate (Hoffmann, 2021; Zima & Bergs, 2017). On the one hand, some scholars adopt a strict definition, according to which kinesic features must be obligatory for a combination of linguistic and gestural patterns to qualify as a multimodal construction (Ningelgen & Auer, 2017; Ziem, 2017). On the other hand, in line with the usage-based view that entrenchment is gradual, other researchers posit a continuum between constructions that are ‘infrequently and inconsistently associated with gestures (and other multimodal cues) and [those] that are frequently and systematically used with given gestures’ (Zima, 2017, p. 2). The present study adopts the latter position and thus aligns with the gradient notion of constructionhood (Ungerer, 2023) in the multimodal context.

As argued by Hoffmann (2017) and Schoonjans (2017), the constructionhood of a multimodal pattern depends not only on how frequently certain gestures co-occur with particular verbal constructions, but also on how salient and typical the gestures are for those constructions, which can be measured with collostructional analysis (Stefanowitsch & Gries, 2003). Using an automatic computer-vision system, Uhrig (2021) conducted such an analysis, which he termed crossmodal collostructional analysis, to investigate the well-known association between the words yes/no and the corresponding head movements (vertical/horizontal). Defining a collostruction broadly as ‘the combination of two constructions on arbitrary levels of representation that occur significantly more frequently together than expected’ (Uhrig, 2021, p. 257), he argued for an important qualitative distinction between multimodal constructions and crossmodal collostructions. Multimodal constructions are gestalt-like units with both linguistic and gestural information in their formal poles, whereas crossmodal collostructions are compositional combinations of linguistic and gestural constructions that are only moderately associated.
Consequently, Uhrig (2021, 2022) argued that crossmodal collostructional analysis can quantify the degree of association and thereby rank candidates on a cline ranging from free combinations (no association, e.g., air quotes) through crossmodal collostructions (moderate association, e.g., yes/no and the corresponding vertical/horizontal head movements, which frequently occur together but can be used independently of one another)Footnote 1 to multimodal constructions (strong association, e.g., German deictic so (‘like this’) and its accompanying iconic/pointing gesture; Ningelgen & Auer, 2017).Footnote 2 From this perspective, none of the multimodal patterns examined quantitatively in the CxG literature thus far can yet be confirmed as multimodal constructions, since, apart from Uhrig (2021), no study has examined ‘the frequency of the [target] gesture in other constructions, the frequency of other gestures in the [target] construction as well as the frequency of other multimodal gestures and constructions’ (Hoffmann, 2017, p. 4). The present study seeks to fill this gap by creating a lexicon of gestures (Kipp, 2004), so that gestures can be categorized and counted both with and without the target constructions. The notions of gesture lexicon and gesture lemma (a lexical entry in such a lexicon) are explained in more detail in Section 2.1.

The choice of target constructions in this study is motivated by Hinnell’s (2018) study of the multimodality of English aspect-marking auxiliary verbs. Introducing the notion of action phases, defined as ‘the number of separate segments within the gesture stroke’ (Hinnell, 2018, p. 784), Hinnell showed that the gestures accompanying open aspect auxiliary verbs (continue, keep) involved two or more action phases, which jointly denoted the ‘multiplex’ (Talmy, 2000) semantics of the open aspect constructions. However, contrary to her expectations, which stemmed from Ladewig’s (2011, p. 6) finding that the referential uses of cyclic gestures referred to ‘ongoing events in every instance’, cyclic gestures occurred with only 17% of open aspect verbs. This finding leads the present study to look into other English expressions that mark open aspect, namely, two types of conjoined adverbials that show constructional properties (i.e., formal or semantic idiosyncrasy; Goldberg, 1995; Hilpert, 2019). The first type, reduplicative adverbial constructions (RACs), comprises expressions in which the same adverb is coordinated with itself, including over and over, again and again, and on and on. These conjoined adverbials are likely metonymic expressions of potentially endless repetitions of over/again/on, and therefore “carry different specialized meanings than their singleton counterparts” (Rice, 1999, p. 241). The second type, oppositive adverbial constructions (OACs), consists of conjoined antonyms such as back and forth, up and down, and in and out. Unlike the RACs, these irreversible adverbials inherently have spatial dimensions in their meanings.
Hereafter, these six expressions are collectively referred to as the [ADV and ADV] constructions,Footnote 3 which mark, or even emphasize, iterative, habitual, or durative aspect (Rice, 1999). Consider the following examples, in which the [ADV and ADV] constructions coerce the situation type of the whole sentence into an iterative or habitual interpretation.

The present study investigates the multimodality of these constructions, addressing the following research questions: (i) Are there specific gestures that are frequently used with the [ADV and ADV] constructions? (ii) If so, how strong are the associations between those gestures and the constructions? That is, are they strong enough to qualify as multimodal constructions, or are they more like crossmodal collostructions? These two research questions correspond, respectively, to the two criteria for identifying multimodal constructions, namely, (i) frequency and (ii) salience (Hoffmann, 2017; Schoonjans, 2017). Following Kendon (2004), the present study defines gestures as body movements that have ‘features of manifest deliberate expressiveness’ (pp. 13–14, emphasis original; cf. Müller, 2014), with a particular focus on hand gestures. Methodologically, the first research question is addressed by identifying gestures that occur frequently with the target constructions. For the second research question, a moderate number of gestures that occur with other constructions are annotated as reference data, and crossmodal collostructional analysis is conducted; this analysis shows some combinations of gestures and target constructions to be potential multimodal constructions and others to be more like crossmodal collostructions. Drawing on these results, Section 4 also discusses the embodied motivations for multimodal constructions, thereby providing implications for future research in multimodal CxG.

2. Materials and methods

This section describes the methods of the present study in detail. Sections 2.1 and 2.2 present three methodological concepts that are essential for the present study. Section 2.3 describes the multimodal corpus from which the data were collected. Sections 2.4 and 2.5 then explain how the data were collected and annotated. Section 2.6 reports two intercoder reliability experiments, and Section 2.7 provides an overview of the annotated data.

2.1. Gesture lexicon and gesture lemma

Although gestures may at first seem highly spontaneous and free from standards of form, various researchers have found that they exhibit fairly stable form-meaning relations, even when they are clearly not emblems (cf. recurrent gestures; Ladewig, 2024; Müller, 2017). Hence, as with emblems, members of a given cultural group appear to share a lexicon or inventory of conversational gestures, which can be referred to as a gesture lexicon (Kipp, 2004; Kipp et al., 2007).

For instance, the ‘cyclic gesture’ (Ladewig, 2011; Ruth-Hirrel, 2018), in which a speaker makes a continuous rotational movement of the hand, seems to be performed when talking about continuity, repetition, or change (McNeill, 1992, pp. 159–162). Another fairly conventionalized gesture is the ‘Palm Up Open Hand’ (Müller, 2004, p. 234), which is ‘characterized by a specific hand shape and orientation: palm open, fingers extended more or less loosely, palm turned upwards’.

As Kipp et al. (2007, p. 331) have noted, however, ‘while such forms appear to be universal, there is still much inter-speaker and intra-speaker variation in terms of the exact position of the hands and their ensuing trajectory’. The notion that subsumes such variation is the gesture lemma; gesture lemmas ‘can be taken as prototypes of recurring gesture patterns where certain formational features remain constant over instances’ (Kipp et al., 2007, p. 328). Importantly, a gesture lemma is a formal generalization over similar gestural patterns: although lemmas are grouped into gesture classes according to their functions, ‘a lexicon does not include the meaning of its entries’ (Kipp, 2004, p. 128).

While this exclusive focus on gestural form may seem extreme, Kipp’s approach is in line with the descriptive, form-based approach of Kendon and his followers, which starts with ‘a detailed analysis of gesture form – both regarding their articulatory (etic) and their meaningful (emic) features or clusters of features – as a point of departure to reconstruct meaning’ (Müller, 2014, pp. 138–139).Footnote 4 Thus, although a gesture lemma is not defined by its potential meanings, a set of gesture lemmas sharing specific formational features is likely to share similar (referential) functions as well, as demonstrated by previous studies on ‘gesture families’ (see Fricke et al., 2014, pp. 1633–1635; Müller, 2014, pp. 135–137 for overviews).Footnote 5 The annotation and identification of gesture lemmas are described in Section 2.5.

2.2. Crossmodal collostructional analysis

In unimodal CxG, collostructional analysis has become a widely used method for exploring the relationships between words and grammatical constructions. Of its three sub-types, simple collexeme analysis (Stefanowitsch & Gries, 2003) is applied in the current study to the co-occurrence of constructions and gestures. The constructions investigated in simple collexeme analysis are typically syntactic constructions with an open slot, and lexemes that are attracted to or repelled by such a construction are called its collexemes. The strength of association between a construction and a collexeme is called collostruction strength.

In crossmodal collostructional analysis (Uhrig, 2021, 2022), introduced in Section 1, gesture lemmas that are attracted to or repelled by a target construction can be referred to as co-gestures of that construction, even though the target constructions investigated in this study are phrasal constructions without open slots. As in unimodal collostructional analysis, the following four frequencies of a target construction (C) and a co-gesture (G) must be retrieved from the corpus under investigation:

(3) a. the frequency of G synchronized with C
    b. the frequency of G synchronized with all other constructions
    c. the frequency of C synchronized with gesture lemmas other than G
    d. the frequency of all other constructions synchronized with gesture lemmas other than G

These frequencies are then entered into a 2 × 2 contingency table, from which collostruction strength is calculated by means of Fisher’s exact test. (As discussed by Schmid and Küchenhoff (2013), however, the choice of measure for collostruction strength is still subject to debate. We return to this issue in Section 3.)
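To make the computation concrete, the following is a minimal Python sketch of one common way of turning the 2 × 2 table into a collostruction strength value: the one-tailed Fisher exact p-value, taken in the direction of the observed deviation, is log-transformed (−log10) and signed positive for attraction and negative for repulsion. The cell labels a to d and the function name are illustrative assumptions; the sketch does not claim to reproduce the exact settings used in this study.

```python
from math import comb, log10


def collostruction_strength(a: int, b: int, c: int, d: int) -> float:
    """Signed -log10 of a one-tailed Fisher exact p-value for a 2x2 table.

    a: co-gesture G synchronized with target construction C
    b: G synchronized with all other constructions
    c: C synchronized with gesture lemmas other than G
    d: other constructions synchronized with lemmas other than G
    Positive values indicate attraction, negative values repulsion.
    """
    n = a + b + c + d
    g_total = a + b              # row total for G
    c_total = a + c              # column total for C
    expected_a = g_total * c_total / n
    # Range of values cell a can take given the fixed margins.
    k_min = max(0, g_total + c_total - n)
    k_max = min(g_total, c_total)
    if a >= expected_a:          # attraction: upper tail P(X >= a)
        ks, sign = range(a, k_max + 1), 1
    else:                        # repulsion: lower tail P(X <= a)
        ks, sign = range(k_min, a + 1), -1
    # Hypergeometric tail kept as exact integers to avoid underflow.
    tail = sum(comb(c_total, k) * comb(n - c_total, g_total - k) for k in ks)
    total = comb(n, g_total)
    # -log10(p) = log10(total / tail); math.log10 accepts arbitrarily large ints.
    return sign * (log10(total) - log10(tail))


print(round(collostruction_strength(3, 0, 0, 3), 3))   # 1.301 (p = 1/20)
print(round(collostruction_strength(0, 3, 3, 0), 3))   # -1.301
```

Summing the hypergeometric tail with exact integers (via `math.comb`) avoids floating-point underflow when very small p-values arise from large tables.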

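With regard to the synchronization of G with C, a gesture qualifies as synchronized with a target construction if either or both of the following conditions are met:

(4) a. G is lexically affiliated (i.e., co-expressive) with C.
    b. G’s expression phase temporally overlaps with C.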

Regarding (4a), lexical affiliation (i.e., co-expressiveness) is coded following Kipp’s (2004) NOVACO scheme. Regarding (4b), the condition is not met if G and C do not overlap in time, that is, if the offset of G’s expression phase precedes the onset of C, or if the offset of C precedes the onset of G’s expression phase. Because gestures sometimes precede their lexical affiliates, this definition of synchrony may yield cases in which a target construction has two (or more) synchronized gestures, each satisfying at least one of the conditions.
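The synchrony test can be sketched as a small Python function. This is a minimal illustration under stated assumptions: lexical affiliation is a coder judgment passed in as a boolean, the timings are invented, and, following a literal reading of ‘precedes’, intervals that merely touch (offset equal to onset) count as overlapping.

```python
from dataclasses import dataclass


@dataclass
class Interval:
    start: float  # onset (seconds)
    end: float    # offset (seconds)


def overlaps(expression_phase: Interval, construction: Interval) -> bool:
    """Temporal condition: no overlap if either offset precedes the other's onset."""
    return not (expression_phase.end < construction.start
                or construction.end < expression_phase.start)


def is_synchronized(expression_phase: Interval, construction: Interval,
                    lexically_affiliated: bool) -> bool:
    """Synchronized if lexically affiliated, temporally overlapping, or both."""
    return lexically_affiliated or overlaps(expression_phase, construction)


# Hypothetical timings for one construction and three candidate gestures.
construction = Interval(2.0, 2.8)
early = is_synchronized(Interval(1.2, 1.9), construction, lexically_affiliated=True)
overlapping = is_synchronized(Interval(2.5, 3.1), construction, lexically_affiliated=False)
later = is_synchronized(Interval(3.0, 3.5), construction, lexically_affiliated=False)
print(early, overlapping, later)  # True True False
```

The first two gestures both count as synchronized with the same construction, one through lexical affiliation and one through temporal overlap, which is exactly the situation in which a construction ends up with more than one synchronized gesture.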

2.3. TED corpus search engine (TCSE)

The present study uses data from TED Talks, accessed through the TED Corpus Search Engine (TCSE; https://yohasebe.com/tcse), an online corpus system that searches transcripts of over 5,300 TED Talks,Footnote 6 ‘allowing users to query surface text forms, lemmas, parts-of-speech, or their combinations’ (Hasebe, 2015, p. 1). When a query is run in TCSE, the corresponding videos are retrieved for the matched texts, which effectively makes TCSE a publicly available multimodal corpus. TED Talks have already received scholarly attention as a resource for research on multimodality, and several studies have focused on the role of gestures in English TED Talk presentations (e.g., Harrison, 2021; Masi, 2020). However, as Hasebe (2015, p. 181) points out, TED Talk speakers include non-native speakers of English, albeit proficient ones. This may be problematic for a study like this one, which examines potential mental/social representations of constructions. Nonetheless, Hasebe’s (2018) comparison of lexical data from TCSE and COCA found a substantial correlation between the two corpora (Kendall’s tau = 0.62, p < 0.001), as shown in Figure 1. This indicates that while TCSE reflects a specific register, namely, presentations, it still preserves the basic characteristics of the English language (Hasebe, 2018, p. 168), making it suitable for theoretical investigations of English. Accordingly, the results of the present study can reasonably be considered valid, at least as a preliminary study that offers insights for research on multimodal constructions.

Figure 1. A plot of relative frequencies of lemmas in TCSE and COCA [All] (adapted from Hasebe, 2018, p. 168).
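Kendall’s tau compares two rankings by counting concordant and discordant pairs of items. The Python sketch below computes the tie-corrected tau-b variant naively over all pairs. It assumes two parallel lists of lemma frequencies (e.g., one from each corpus) and is not Hasebe’s (2018) implementation, whose exact variant and settings are not stated here.

```python
from math import sqrt


def kendall_tau_b(x: list[float], y: list[float]) -> float:
    """Kendall's tau-b over all pairs (O(n^2)), corrected for ties."""
    n = len(x)
    concordant = discordant = ties_x = ties_y = 0
    for i in range(n):
        for j in range(i + 1, n):
            dx = (x[i] > x[j]) - (x[i] < x[j])   # sign of the x difference
            dy = (y[i] > y[j]) - (y[i] < y[j])   # sign of the y difference
            if dx == 0:
                ties_x += 1                      # pair tied in x
            if dy == 0:
                ties_y += 1                      # pair tied in y
            if dx * dy > 0:
                concordant += 1
            elif dx * dy < 0:
                discordant += 1
    n_pairs = n * (n - 1) // 2
    return (concordant - discordant) / sqrt((n_pairs - ties_x) * (n_pairs - ties_y))


# Invented frequencies for three lemmas in two corpora.
print(round(kendall_tau_b([1, 2, 3], [1, 3, 2]), 3))  # 0.333
```

Because tau depends only on the ordering of the values, it compares how the two corpora rank lemmas rather than their raw frequencies, which suits corpora of very different sizes.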

2.4. Data collection

The data were collected from TCSE in October and November 2022. No POS tags were specified in the search strings, so that as many instances as possible would be retrieved, including those in which the expressions were not used as adverbs (e.g., She went in and out of hospitals). Each retrieved instance was first checked for validity, that is, whether the speaker’s hands were visible on screen while the target construction was uttered, regardless of whether the speaker was gesturing. In total, 576 valid instances were obtained; Table 1 shows their distribution across the constructions under scrutiny.

Table 1. Numbers of instances of each construction under investigation

For each of these instances, the utterance containing the target construction was clipped. The approximate length per clip was 10 seconds: 3–5 seconds on either side of the target construction.

2.5. Data annotation

For each clipping, gestures synchronized with the target constructions were annotated in ELAN (ELAN, 2022), following Kipp’s (2004) NOVACO scheme. The annotation involved three procedures, each described in detail in the following subsections: (i) segmenting movement phases and identifying movement phrases (Section 2.5.1); (ii) identifying and creating gesture lemmas to construct a gesture lexicon (Section 2.5.2); and (iii) using that lexicon to assign a gesture lemma to each movement phrase (Section 2.5.3).

2.5.1. Movement phases and phrases

The segmentation of movement phases and the organization of movement phrases in the NOVACO scheme (Kipp, 2004) are based on a coding scheme proposed by Kita et al. (1998). As described in that scheme, and as is widely known, movement phases come in several types: preparation, stroke, retraction, partial retraction, dependent hold (pre-stroke or post-stroke), and independent hold. Of particular relevance to the present study is the distinction between a single-segment phase and a multi-segment phase (Kita et al., 1998, pp. 29–30), both of which can constitute a stroke. According to Kita et al. (1998), a stretch of bodily movement is decomposed into two phases “if there is an abrupt change of direction in the hand movement, AND there is a discontinuity in the velocity profile of the hand movement before and after the abrupt direction change” (p. 29, emphasis original). Accordingly, even if there is an abrupt change in direction, multiple movement segments with no discontinuity (such as a hold) between them are still regarded as constituting a single phase, namely, a multi-segment phase. Relatedly, the presence of a hold is especially significant for beat gestures: multiple up-and-down manual movements without a hold between them are seen as a single beat gesture, whereas those separated by holds are considered a sequence of several beat gestures.
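The segmentation criterion above is a conjunction of two conditions, which the following Python sketch makes explicit. It is a simplified illustration, not part of NOVACO or ELAN. It assumes that a movement has already been cut into directional segments and that a coder has judged, for each transition between segments, whether there is an abrupt direction change and whether there is a velocity discontinuity. The class and function names are hypothetical, and the holds themselves are not modelled as separate phases.

```python
from dataclasses import dataclass


@dataclass
class Transition:
    """Hypothetical coder judgments about the boundary between two segments."""
    abrupt_direction_change: bool
    velocity_discontinuity: bool  # e.g., the hand stops or holds


def split_into_phases(segments: list[str],
                      transitions: list[Transition]) -> list[list[str]]:
    """Group movement segments into phases.

    A boundary is placed only when there is an abrupt direction change AND a
    discontinuity in the velocity profile; otherwise the segment extends the
    current (multi-segment) phase.
    """
    if not segments or len(transitions) != len(segments) - 1:
        raise ValueError("need one transition between each pair of segments")
    phases = [[segments[0]]]
    for segment, t in zip(segments[1:], transitions):
        if t.abrupt_direction_change and t.velocity_discontinuity:
            phases.append([segment])    # boundary: start a new phase
        else:
            phases[-1].append(segment)  # no boundary: same phase continues
    return phases


def phase_type(phase: list[str]) -> str:
    return "single-segment" if len(phase) == 1 else "multi-segment"


strokes = ["up", "down", "up", "down"]
smooth = [Transition(True, False)] * 3   # direction changes, no pause
paused = [Transition(True, True)] * 3    # direction changes with pauses
print([phase_type(p) for p in split_into_phases(strokes, smooth)])  # ['multi-segment']
print(len(split_into_phases(strokes, paused)))                      # 4
```

The smooth up-and-down movement yields one multi-segment phase (one beat, in the terms used above), whereas the same movement with pauses yields four separate phases (a sequence of beats).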

The movement phases described so far concern the internal organization of gestures. The movement phrase, into which several movement phases are grouped, corresponds to the term gesture in its most common usage. In annotation, movement phases are coded first and are then grouped into movement phrases according to the following rule, based on Kipp (2004, pp. 124–125) and Kita et al. (1998, p. 27).

According to this rule, a gesture (i.e., a movement phrase) must always include one expressive phase, which consists at least of a single- or multi-segment stroke or of an independent hold. Kita et al. (1998) showed that these phases can be segmented and identified reliably.
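The requirement that each movement phrase contain one expressive phase can be checked mechanically once phases are coded. The Python sketch below encodes phase types as single letters and validates a phrase against a regular expression. The letter codes and the grammar are hypothetical simplifications: optional preparation, exactly one expressive phase (a stroke with optional dependent holds, or an independent hold), and optional (partial) retraction. They are not the full rule set of Kipp (2004) or Kita et al. (1998).

```python
import re

# Hypothetical one-letter codes for the movement phase types.
CODES = {
    "preparation": "p",
    "pre-stroke hold": "a",
    "stroke": "s",            # single- or multi-segment
    "post-stroke hold": "b",
    "independent hold": "i",
    "retraction": "r",
    "partial retraction": "q",
}

# Simplified grammar: optional preparation, exactly one expressive phase
# (a stroke with optional dependent holds, or an independent hold),
# optional (partial) retraction.
PHRASE = re.compile(r"p?(?:a?sb?|i)[rq]?")


def is_movement_phrase(phases: list[str]) -> bool:
    code = "".join(CODES[phase] for phase in phases)
    return PHRASE.fullmatch(code) is not None


print(is_movement_phrase(["preparation", "stroke", "retraction"]))  # True
print(is_movement_phrase(["preparation", "retraction"]))            # False
```

A phrase consisting only of preparation and retraction is rejected because it lacks an expressive phase, and two consecutive strokes are rejected because they would have to be split into two phrases.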

2.5.2. Identifying and creating gesture lemmas

Equally important is the construction of a gesture lexicon by identifying and creating gesture lemmas from empirical data. This step is best explained in Kipp’s own words:

For annotation, first a lexicon of gesture lemmas must be collected from the empirical material. This collection step is done by systematically sifting through the empirical data and cataloguing the gestures by comparing them to the already found ones. Once the lexicon is complete, annotation can begin. During annotation no new lemmas may be added. Otherwise inconsistencies could emerge. Instead, a rest category must serve as a container for gesture lemmas not yet located in the lexicon (Kipp, 2004, p. 128).
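Kipp’s two-step workflow (collect, then freeze, then annotate with a rest category) can be sketched as a small Python class. This is a minimal illustration, not an existing annotation tool. The class name is hypothetical, and the lemma names other than Illustrative.Cup-Flip are invented. The only point it encodes is that, once annotation has begun, an unlisted gesture falls into the rest category instead of extending the lexicon.

```python
class GestureLexicon:
    """Collect lemmas first, then freeze the lexicon before annotation."""

    REST = "unknown"  # rest category for gestures not found in the lexicon

    def __init__(self) -> None:
        self._lemmas: set[str] = set()
        self._frozen = False

    def add(self, lemma: str) -> None:
        """Collection step: catalogue a lemma found in the empirical data."""
        if self._frozen:
            raise RuntimeError("no new lemmas may be added during annotation")
        self._lemmas.add(lemma)

    def freeze(self) -> None:
        """Mark the lexicon as complete; annotation can begin."""
        self._frozen = True

    def annotate(self, candidate: str) -> str:
        """Return the coder's candidate lemma if catalogued, else the rest category."""
        if not self._frozen:
            raise RuntimeError("complete and freeze the lexicon before annotating")
        return candidate if candidate in self._lemmas else self.REST


lexicon = GestureLexicon()
lexicon.add("Illustrative.Cup-Flip")
lexicon.freeze()
print(lexicon.annotate("Illustrative.Cup-Flip"))   # Illustrative.Cup-Flip
print(lexicon.annotate("Hypothetical.New-Form"))   # unknown
```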

In the current study, the collection step was conducted by examining a randomly selected half of all 576 clippings, with reference to Kipp’s (2004) established lexicon of 63 gesture lemmas. Whenever a gesture lemma from Kipp’s (2004) lexicon was attested in the data, it was added to the lexicon for the present study. During this step, however, it became necessary to subdivide some lemmas further and to create lemmas that were not present in Kipp’s (2004) lexicon. This procedure followed the NOVACO scheme (Kipp, 2004, pp. 123–137), which starts with a high-level classification of gestures into four gesture classes. Figure 2 summarizes the classification and serves as a decision tree for coding which gesture class applies to the gesture being annotated.

Figure 2. A decision tree for classifying gestures into gesture classes (based on Kipp, 2004, p. 125).

The coder uses the decision tree by proceeding from top to bottom and considering each class from left to right, with reference to the criteria specified for each class. Accordingly, the coder first checks whether the hand/arm movement in question is communicative. Non-communicative movements, such as adapters, are not a concern for the present study. Communicative movements are further categorized into four classes: emblems, deictics, illustratives, and beats. This classification is a modified version of Kipp’s (2004, pp. 39–43), which is in turn based on traditional classifications such as those of Efron (1941), Ekman and Friesen (1969), and McNeill (1992). The key difference from Kipp (2004) is that the illustrative class is not subdivided further in this study, so it broadly subsumes gestures that are not classified as emblems, deictics, or beats.

It should be noted that these classes are not mutually exclusive, since different criteria are applied to different classes. However, as Kipp (2004) argued, classification is still possible by identifying the predominant function and by specifying how to categorize ambiguous gestures: when in doubt, the left branch is taken. As mentioned above, each class is checked from left to right against its criteria.Footnote 7 The criteria range along a scale from form-based to meaning/function-based: the further left a class lies, the more its criteria rely on form, and the further right, the more they rely on meaning or function. Emblems are the class that relies most on form-based criteria. Essentially, emblematic gestures conform to specific standards of form and have corresponding word-like meanings. In addition, they can potentially replace speech, which is another important criterion for emblems. Deictics also rely on form-based criteria, whether they are of the concrete or the abstract type (McNeill, 1992, p. 173). Canonically, deictic gestures consist of a single index finger or a flat open hand pointed toward a (virtual) object, thus serving a deictic function. Illustratives, by contrast, are not subject to formal conditions, which makes them a solely functional category. The illustrative class is a superordinate category that leaves room for further subdivision, which is not a concern here. It subsumes referential gestures (gestures related to the propositional content of the concurrent speech; cf. iconics and metaphorics, McNeill, 1992) and pragmatic gestures (gestures that convey ‘features of an utterance’s meaning that are not a part of its referential meaning or propositional content’ (Kendon, 2004, p. 158); of the pragmatic functions, however, the illustrative class includes only the modal and performative ones).
Finally, beats are a rest class into which a gesture is placed if none of the classes in the branches to the left apply. As with illustratives, form is not a criterion for class membership. Beat gestures relate to the co-occurring speech rhythmically, signaling that ‘the speaker feels [that the word/phrase the beat gestures accompany] is important with respect to the larger discourse’ (McNeill, 2005, p. 40).
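The left-to-right traversal of the decision tree can be expressed as an ordered sequence of checks. In the Python sketch below, each criterion is a yes/no judgment that the coder has already made (the field names are hypothetical). Because the checks run in left-to-right order, a doubtful movement that is judged to meet several criteria ends up in the leftmost class, mirroring the rule that the left branch is taken when in doubt.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Judgments:
    """Hypothetical yes/no judgments a coder records for one movement."""
    communicative: bool
    emblem_criteria: bool        # standardized form, word-like meaning, can replace speech
    deictic_criteria: bool       # index finger / flat hand pointing at a (virtual) object
    illustrative_criteria: bool  # referential, or modal/performative pragmatic function


def gesture_class(j: Judgments) -> Optional[str]:
    """Walk the tree top to bottom and the classes left to right."""
    if not j.communicative:
        return None              # e.g., adapters: outside the scope of annotation
    if j.emblem_criteria:
        return "emblem"
    if j.deictic_criteria:
        return "deictic"
    if j.illustrative_criteria:
        return "illustrative"
    return "beat"                # rest class: none of the left branches applied


print(gesture_class(Judgments(True, False, True, True)))    # deictic
print(gesture_class(Judgments(True, False, False, False)))  # beat
```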

After the class of the gesture in question has been identified, the next step is to specify the formal features of the gesture lemma. As stated above, a gesture lemma is a formal generalization over gestures that are similar across instances; thus, in principle, it does not refer to the semantic aspect of the gestures (except for emblems). Following Kipp (2004, pp. 128–131), in identifying gesture lemmas, coders must consider form dimensions, which determine whether a gesture is a new lemma altogether or merely a formal variant of an existing lemma. A dimension is referred to as formational (Kipp, 2004, p. 129) if changing a gesture along that dimension changes the lemma. The form dimensions are the following:

Shoulder movement and facial expression can be formational dimensions because some emblems can be differentiated only by them (Kipp, 2004, p. 129). In addition to the specification of formational features, a gesture lemma includes a verbal description of its form. An example of a gesture lemma is shown in Figure 3. The second row (‘Features’) in Figure 3 outlines the formational features of the gesture lemma Illustrative.Cup-Flip. While it specifies several features (e.g., hand shape, hand orientation) as formational (i.e., essential for the gesture lemma), it does not include handedness. This means that differences in handedness (one hand vs. both hands) are merely formal variants within this lemma. Importantly, handedness serves as a formational feature for certain other gesture lemmas (e.g., Figures 6 and 9).

Figure 3. An example of a gesture lemma (Illustrative.Cup-Flip)Footnote 8.
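The distinction between formational and non-formational dimensions can be sketched as a matching rule. A gesture instantiates a lemma if it agrees with the lemma on every dimension the lemma lists as formational, and any other dimension may vary as a formal variant. In the Python sketch below, the lemma names, dimension names, and values are invented for illustration and do not reproduce the entry in Figure 3.

```python
from dataclasses import dataclass


@dataclass
class Lemma:
    name: str
    formational: dict[str, str]  # dimension -> value that must hold for this lemma


def instantiates(gesture: dict[str, str], lemma: Lemma) -> bool:
    """True if the gesture agrees with the lemma on every formational dimension.

    Dimensions the lemma does not list as formational may vary freely; such
    differences only produce formal variants of the same lemma.
    """
    return all(gesture.get(dim) == value for dim, value in lemma.formational.items())


# Invented lemmas and feature values, for illustration only.
flip = Lemma("Example.Flip", {"hand_shape": "cupped", "palm": "up"})
two_handed = Lemma("Example.Two-Handed-Flip",
                   {"hand_shape": "cupped", "palm": "up", "handedness": "both"})

one_hand = {"hand_shape": "cupped", "palm": "up", "handedness": "one"}
both_hands = {"hand_shape": "cupped", "palm": "up", "handedness": "both"}

print(instantiates(one_hand, flip), instantiates(both_hands, flip))              # True True
print(instantiates(one_hand, two_handed), instantiates(both_hands, two_handed))  # False True
```

For the first lemma, handedness is not formational, so one- and two-handed tokens are variants of the same lemma. For the second, handedness is formational, so a one-handed token does not qualify.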

This identification and collection step resulted in a lexicon of 62 gesture lemmas. Of these, 37 were identical to Kipp’s (2004) lemmas, and the remaining 25 were either completely new or modified versions of Kipp’s (2004) lemmas.Footnote 9 The full list of 62 gesture lemmas is presented in Appendix A.Footnote 10

2.5.3. Annotating gesture lemmas

Once the collection of gesture lemmas was complete, the gestures in each clipping were annotated. First, in each clipping, gestures synchronized with the target constructions (for the definition of synchrony, see Section 2.2) were annotated for movement phases, and these phases were then grouped into movement phrases. Across all 576 clippings, 532 gestures (i.e., movement phrases) were annotated as synchronized with the target constructions, and each was assigned a gesture lemma from the lexicon constructed for the present study. These 532 gestures supply two of the cells required for crossmodal collostructional analysis: the first cell (3a in Section 2.2; the frequency of G synchronized with C) and the third cell (3c; the frequency of C synchronized with gesture lemmas other than G).

Crossmodal collostructional analysis, however, also requires the frequency of G synchronized with all other constructions (3b) and the frequency of all other constructions synchronized with gesture lemmas other than G (3d). Therefore, gestures synchronized with constructions other than the [ADV and ADV] constructions were needed as a reference dataset. To this end, an additional annotation step was carried out: in a randomly selected half of the 576 clippings, gestures that occurred on either side of the target constructions but were not synchronized with them were annotated. This step yielded 560 further gestures (i.e., movement phrases), each of which was also assigned a gesture lemma. During this step, movement phrases that did not fit any of the gesture lemmas were placed in a rest category named ‘unknown’ (Kipp, 2004, p. 130).
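Once every movement phrase carries a construction label and a lemma, the four cells of the contingency table can be counted directly. The Python sketch below assumes a hypothetical record format of (construction, lemma) pairs, in which reference gestures are labelled with any construction outside the target set. Whether C is a single expression or the whole [ADV and ADV] group is left to the caller through `targets`. Gestures in the ‘unknown’ rest category simply count as lemmas other than G. The records are invented.

```python
from collections import Counter
from typing import Iterable, Tuple


def contingency_cells(records: Iterable[Tuple[str, str]],
                      targets: set[str], lemma: str) -> Tuple[int, int, int, int]:
    """Count cells a, b, c, d for one gesture lemma G and a target construction C.

    records: (construction, assigned lemma) pairs, one per annotated movement
    phrase; any construction not in `targets` counts as 'other'.
    """
    cells = Counter()
    for construction, assigned in records:
        in_c, is_g = construction in targets, assigned == lemma
        if is_g and in_c:
            cells["a"] += 1  # G synchronized with C
        elif is_g:
            cells["b"] += 1  # G synchronized with other constructions
        elif in_c:
            cells["c"] += 1  # C synchronized with lemmas other than G
        else:
            cells["d"] += 1  # other constructions with lemmas other than G
    return cells["a"], cells["b"], cells["c"], cells["d"]


# Invented records: target-construction gestures plus reference gestures.
records = [
    ("over and over", "Illustrative.Cup-Flip"),
    ("over and over", "Hypothetical.Other"),
    ("other", "Illustrative.Cup-Flip"),
    ("other", "unknown"),
    ("other", "unknown"),
]
print(contingency_cells(records, {"over and over"}, "Illustrative.Cup-Flip"))  # (1, 1, 1, 2)
```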

2.6. Coding reliability

To assess whether the lexicon of 62 gesture lemmas could be coded reliably, two intercoder reliability experiments were carried out.Footnote 11 The author participated in both, together with Coder A in the first experiment and Coder B in the second. Sections 2.6.1 and 2.6.2 describe the first and second experiments, respectively.

2.6.1. First intercoder reliability experiment

In this experiment, two coders (the author and Coder A) independently annotated 150 clippings, randomly selected from the 497 clippings that contained gestures synchronized with the [ADV and ADV] constructions.Footnote 12 These clippings contained pre-annotated movement phases and the movement phrases assembled from them. However, in each clipping, the movement phrase synchronized with the [ADV and ADV] construction had not been assigned a gesture lemma. The coders’ task was to assign a gesture lemma to each of these 150 movement phrases.

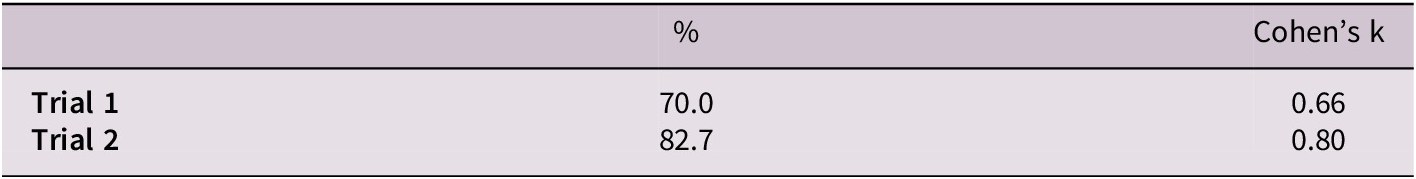

This task resulted in 70% agreement between the coders and a Cohen’s κ of 0.66, just below the value at which “tentative conclusions to be drawn” becomes possible (0.67; Carletta, 1996, p. 252). Of the disagreements between the coders, 56% were due to confusion between gestures that are similar in form, and the remaining 44% to fundamental differences in interpreting the functions of gestures. After stricter criteria for distinguishing similar gestures had been defined (see Appendix B), the task was conducted again. This resulted in 82% agreement and a Cohen’s κ of 0.80, which indicates good reliability according to Carletta (1996). Table 2 shows the results of the two trials.

Table 2. Results of the first intercoder reliability experiment
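Percentage agreement and Cohen’s κ can both be computed from the two coders’ label lists. The Python sketch below implements the standard formula κ = (p_o − p_e) / (1 − p_e), where p_e is estimated from each coder’s own label distribution. The labels are invented and do not come from the experiments reported here.

```python
from collections import Counter


def agreement_and_kappa(coder1: list[str], coder2: list[str]) -> tuple[float, float]:
    """Percent agreement and Cohen's kappa for two coders' lemma labels."""
    if not coder1 or len(coder1) != len(coder2):
        raise ValueError("need two non-empty label lists of equal length")
    n = len(coder1)
    observed = sum(x == y for x, y in zip(coder1, coder2)) / n
    # Chance agreement: product of each coder's own label proportions.
    freq1, freq2 = Counter(coder1), Counter(coder2)
    expected = sum(freq1[label] * freq2[label] for label in freq1) / n ** 2
    return observed, (observed - expected) / (1 - expected)


# Invented labels for four movement phrases.
p_o, kappa = agreement_and_kappa(["A", "A", "B", "B"], ["A", "B", "B", "B"])
print(p_o, kappa)  # 0.75 0.5
```

Because κ discounts chance agreement, a fairly high percentage agreement can still correspond to a modest κ when a few lemmas dominate both coders’ labels. The formula is undefined when both coders use one identical label throughout (p_e = 1).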

2.6.2. Second intercoder reliability experiment

The second experiment replicated Kipp’s (2004, pp. 140–141) coding reliability experiment. Two coders (the author and Coder B) independently annotated a 2:16-minute snippet of a TED Talk in which none of the [ADV and ADV] constructions were uttered. These data contained pre-annotated movement phases. Following Kipp (2004, p. 140), the coders’ task was twofold: first, they assembled movement phases into movement phrases (‘segmentation’); they then assigned a gesture lemma to each phrase (‘classification’).

For the classification task, only the movement phrases that matched between the two coders in the segmentation task were taken into consideration; there were 49 such phrases.

As seen in the first column of Table 3, the reliability percentage of the segmentation task was very high. However, as columns two and three show, the classification task yielded poor results: a Cohen’s κ value of 0.43 falls well short even of the range that Carletta (1996) considers sufficient for tentative conclusions. The difference between the results of the first and second experiments is probably due to the contexts in which the gestures were performed. In the first experiment, all gestures were synchronized with the target constructions, which made it easier for the coders to interpret their functions. By contrast, since the contexts of the second experiment were not limited to certain types of constructions, the coders could not agree on gestures that were similar in form but functionally distinct. Therefore, after criteria for coding formally similar gestures across different functional classes were defined (see Appendix B), the classification task was conducted again. This resulted in 83% agreement and a Cohen’s κ value of 0.82, which is considered good reliability according to Carletta (1996).

Table 3. Results of the second intercoder reliability experiment

To conclude this section, the experiments show that gesture lemmas can be reliably coded not only in specific contexts, such as when all gestures are synchronized with certain kinds of constructions, but also in general contexts. In line with Kipp’s (2004, pp. 140–141) results, the improvement in reliability from trial 1 to trial 2 in both experiments indicates that more rigid definitions and documentation in the coding manual can reduce sources of error.

2.7. Overview of annotated data

After the two intercoder reliability experiments, all the annotated gesture lemmas, including those in the reference data, were reviewed with reference to the criteria for distinguishing similar gestures and the revised descriptions of some gesture lemmas (see Appendices A and B). This section presents an overview of the annotated data.

The annotated clippings collectively form a small multimodal corpus in which 407 speakers perform a total of 1,092 gestures. Of these, 294 gestures are synchronized with the RACs and 238 with the OACs; the remaining 560 gestures are synchronized with other constructions, which occur on either side of the target constructions in a randomly selected half of all 576 clippings, and form a reference dataset for crossmodal collostructional analysis. Notably, the RACs and OACs were quite frequently accompanied by gestures: the gesture accompaniment rate is as high as 85.4% for the RACs and even higher, at 87.4%, for the OACs.Footnote 13

Table 4 shows the distribution of gesture lemmas that co-occurred with the RACs. Of the 294 gestures, the gesture lemma I.Cyclic-Sagittal (Figure 4) was used most frequently with the RACs, accounting for 36.4%. Overall, gestures with cyclic movements (highlighted in red) occurred quite frequently, totaling 131 instances (44.6%). Equally important, Beat gestures (highlighted in green) also accompanied a substantial number of RAC instances, amounting to 27.6% of the gestures observed with the RACs. As Table 6 shows, however, this may reflect a general tendency of the setting in which the speakers were situated, namely TED Talks.

Table 4. Distribution of gesture lemmas co-occurring with the RACsFootnote 14

Figure 4. I.Cyclic-Sagittal Footnote 15.

Table 5, in turn, displays the distribution of gestures that occurred concurrently with the OACs. The five most frequent gestures all involve bidirectional movement (Figures 5–9). Bidirectional gestures, including these five (highlighted in blue), accounted for as much as 73.1% of the 238 gestures, in line with the semantics of the OACs. Another interesting point is that the proportion of Beat gestures is much smaller than with the RACs (Table 4) and in the reference data (Table 6). We will address this issue in Section 3.

Table 5. Distribution of gesture lemmas co-occurring with the OACs

Figure 5. I.Bidirection-Horizontal Footnote 16.

Table 6 summarizes the gesture lemmas that occurred in the vicinity of the target constructions in half of all 576 clippings; these serve as reference data specifically for conducting crossmodal collostructional analysis. The contexts in which the reference data were collected are assumed to be more neutral than those in which the gesture lemmas in Tables 4 and 5 were collected. However, we must also acknowledge that the contexts of the reference data may be skewed toward the target constructions, as they were adjacent to those constructions. This may account for the relatively high frequencies, in the reference dataset, of gestures that also frequently occurred with the target constructions. Nonetheless, such a skew is unlikely to bias the results of crossmodal collostructional analysis in favor of those gestures, since it makes it more difficult for them to obtain low p-values in the Fisher exact test as well as high odds ratios.

Table 6. Distribution of gesture lemmas co-occurring with other constructions (reference data)

First, most notable in the reference data is the large number of Beat gestures, which is consistent with the findings in Table 4. This can plausibly be considered a presentation strategy by which TED speakers make it visible that they regard what they are referring to as ‘important with respect to the larger discourse’ (McNeill, 2005, p. 40). The Beat gesture is followed by the gesture lemma I.Cyclic-Sagittal, with a proportion of 6.1%. This gesture, which was predominant in Table 4 (the RACs), can also be used in other contexts, serving a self-oriented function (word/concept search) and a performative function (e.g., request), as well as a referential function conveying the idea of continuity or progress (Ladewig, 2011, pp. 7–13). Like these cyclic gestures, most of the gestures appearing frequently in the reference dataset can be regarded as recurrent gestures (Ladewig, 2024; Müller, 2017), which have fairly stable formational cores paired with semantic cores and allow for a variety of contextual variants (e.g., I.Away [cf. ‘Away gestures’, Bressem & Müller, 2014], I.Frame [cf. ‘bimanual PVOH’, Mittelberg, 2017], and I.PUOH [Müller, 2004]; see Appendix A). Thus, the findings in Table 6 can also be seen as broadly supporting the idea that there are certain regularities in how we gesture and that there are culturally conventionalized repertoires of gestures beyond emblems.

3. Results

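With all the annotated data for each of the six variants of the [ADV and ADV] constructions and the reference data, crossmodal collostructional analysis was conducted. The four frequencies necessary for crossmodal collostructional analysis (as outlined earlier in Section 2.2) are the frequency of the target construction co-occurring with the target gesture, the frequency of the target construction co-occurring with other gestures, the frequency of the target gesture co-occurring with other constructions (the reference data), and the frequency of other gestures co-occurring with other constructions (the reference data).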

To exemplify the analysis, Table 7 presents a 2 × 2 contingency table for the target construction (C) over and over and the co-gesture (G) I.Cyclic-Sagittal (Figure 4), on which the Fisher exact test is performed.Footnote 21
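The mechanics of the test can be sketched in a few lines. This is an illustration, not the author’s R script (which is on OSF). It assumes that the four cells are gesture counts arranged as [[C with G, C with other gestures], [other constructions with G, other constructions with other gestures]]. The two-sided p-value is obtained by summing the hypergeometric probabilities of all tables with the same margins that are no more probable than the observed one, and the function returns the sample odds ratio. Note that R’s fisher.test reports a conditional maximum-likelihood estimate of the odds ratio, so its estimate will differ slightly from the sample value computed here. The counts in the usage line form a toy table, not Table 7.

```python
from math import comb
from typing import Sequence, Tuple


def hypergeom_pmf(a: int, row1: int, col1: int, n: int) -> float:
    """Probability that the top-left cell equals a, given fixed table margins."""
    return comb(col1, a) * comb(n - col1, row1 - a) / comb(n, row1)


def fisher_exact_2x2(table: Sequence[Sequence[int]]) -> Tuple[float, float]:
    """Return (sample odds ratio, two-sided Fisher exact p-value).

    table = [[C with G,        C with other gestures],
             [other C with G,  other C with other gestures]]
    """
    (a, b), (c, d) = table
    row1, col1, n = a + b, a + c, a + b + c + d
    p_obs = hypergeom_pmf(a, row1, col1, n)
    # Range of values the top-left cell can take with these margins.
    lo, hi = max(0, row1 + col1 - n), min(row1, col1)
    # Sum the probabilities of all tables no more likely than the observed one;
    # the tiny relative tolerance keeps floating-point ties from being dropped.
    p_value = sum(
        p
        for p in (hypergeom_pmf(k, row1, col1, n) for k in range(lo, hi + 1))
        if p <= p_obs * (1 + 1e-7)
    )
    if b * c == 0:
        odds_ratio = float("inf") if a * d > 0 else float("nan")
    else:
        odds_ratio = (a * d) / (b * c)
    return odds_ratio, min(1.0, p_value)


# Toy 2 x 2 table (hypothetical, not Table 7).
odds_ratio, p_value = fisher_exact_2x2([[3, 1], [1, 3]])
print(odds_ratio, round(p_value, 4))  # 9.0 0.4857
```

Enumerating the whole hypergeometric support is cheap for tables of the size used here (a few hundred gestures), so no approximation is needed.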

Table 7. A contingency table for crossmodal collostructional analysis (a target construction over and over and a co-gesture I.Cyclic-Sagittal)

As noted in Section 1, Hoffmann (2017) and Schoonjans (2017) argued that the constructionhood of a multimodal pattern depends not only on the mere frequency with which the target construction co-occurs with the target gesture but also on how salient and prototypical the gesture is for the construction, assuming that constructions have prototype structures (Cienki, 2017). Building on this argument, the present study employed crossmodal collostructional analysis to measure the salience of a gesture for the target constructions. Only gestures that satisfied a frequency criterion, tentatively set as occurring in more than 10% of the instances of each target construction, were submitted to the Fisher exact test, which yields the collostruction strength.
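The frequency pre-filter can be sketched as a simple threshold, assuming that the per-lemma co-occurrence counts for one target construction and the construction’s total number of instances are known. The function name and the counts are hypothetical; the comparison is strict (more than 10%), following the wording above.

```python
from typing import Dict, List


def lemmas_above_threshold(
    counts: Dict[str, int], n_instances: int, threshold: float = 0.10
) -> List[str]:
    """Return the lemmas co-occurring with more than `threshold` of the instances,
    most frequent first; only these would go on to the Fisher exact test."""
    selected = [lemma for lemma, k in counts.items() if k / n_instances > threshold]
    return sorted(selected, key=lambda lemma: counts[lemma], reverse=True)


# Hypothetical counts for one construction with 100 instances.
counts = {"Beat": 25, "I.Cyclic-Sagittal": 40, "I.Away": 10, "I.PUOH": 8}
print(lemmas_above_threshold(counts, 100))  # ['I.Cyclic-Sagittal', 'Beat']
```

A lemma sitting exactly at 10% (I.Away here) is excluded, which is why the strict inequality matters.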

Tables 8 and 9 below summarize the results of the crossmodal collostructional analyses for the RACs and the OACs, respectively. Before turning to these results, however, we need to consider which value should be taken as a reliable measure of salience in this study. In traditional unimodal collostructional analysis (Stefanowitsch & Gries, 2003), the p-value of the Fisher exact test is used as the collostruction strength, that is, a value indicating the associational strength between a construction and a collexeme. However, as Uhrig (2022, p. 109) points out, this approach is problematic for the current study because the Fisher exact test, as a test of significance, is biased in favor of higher frequencies. Consequently, in Table 8, the p-values for the gesture lemma I.Cyclic-Sagittal decrease (suggesting stronger association) as the absolute co-frequency of each variant of the RACs with the lemma increases, even though the relative frequencies of the lemma are nearly identical across the three variants. For this reason, the current study relies primarily on the odds ratio, a measure of effect size that does not depend on the sample size of the data. As Schmid and Küchenhoff (2013, pp. 553–554) explain, odds ratios are easy to interpret: the odds ratio of 9.10 given for I.Cyclic-Sagittal co-occurring with over and over in Table 8 indicates that, for a construction with the feature +target construction (= over and over), the odds of also having the feature +target gesture (= I.Cyclic-Sagittal) are 9.10 times as high as for a construction lacking the feature +target construction (i.e., a construction in the reference dataset). Reflecting this focus on the odds ratio as the primary measure of associational strength between a construction and a gesture, the odds ratio columns in Tables 8 and 9 are set in boldface.
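Uhrig’s point about frequency bias can be illustrated with a short sketch: multiplying every cell of a 2 × 2 table by the same factor leaves the odds ratio unchanged, while the Fisher exact p-value keeps shrinking. The base table is hypothetical, and the helper functions are written for this illustration only.

```python
from math import comb
from typing import List, Tuple


def fisher_two_sided_p(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher exact p-value for the 2 x 2 table [[a, b], [c, d]]."""
    row1, col1, n = a + b, a + c, a + b + c + d

    def pmf(k: int) -> float:
        # Hypergeometric probability of top-left cell k under fixed margins.
        return comb(col1, k) * comb(n - col1, row1 - k) / comb(n, row1)

    p_obs = pmf(a)
    support = range(max(0, row1 + col1 - n), min(row1, col1) + 1)
    return min(1.0, sum(pmf(k) for k in support if pmf(k) <= p_obs * (1 + 1e-7)))


def sample_odds_ratio(a: int, b: int, c: int, d: int) -> float:
    return (a * d) / (b * c)


def scaled_results(base: Tuple[int, int, int, int], factors: List[int]):
    """Odds ratio and p-value for the base table scaled by each factor."""
    results = []
    for f in factors:
        cells = tuple(f * x for x in base)
        results.append((f, sample_odds_ratio(*cells), fisher_two_sided_p(*cells)))
    return results


# Hypothetical table [[5, 5], [10, 30]], then twice and four times as large.
res = scaled_results((5, 5, 10, 30), [1, 2, 4])
for factor, odds_ratio, p in res:
    print(f"x{factor}: odds ratio = {odds_ratio:.2f}, p = {p:.4f}")
```

Every scaled table describes the same proportions, so an effect-size measure should not change; only the significance test responds to the larger counts.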

Table 8. Results of the crossmodal collostructional analysis for the RACs

Table 9. Results of the crossmodal collostructional analysis for the OACsFootnote 22

As Table 8 shows, the three variants of the RACs exhibit very similar tendencies, with the gesture lemma I.Cyclic-Sagittal dominating over the other gesture lemmas. The odds ratios for I.Cyclic-Sagittal in the three variants range from about 8 to 9, indicating that all three variants are strongly attracted to this lemma even though it was also frequently observed in the reference dataset. The Beat gesture also accounts for a large proportion of the gestures accompanying each variant, ranging from 20% to 31%. In terms of the odds ratio, however, Beat contrasts sharply with I.Cyclic-Sagittal. For none of the variants is the association with Beat significant: for on and on, the odds ratio of 0.82 even suggests slight repulsion, whereas the scores of 1.22 and 1.49 for the other two variants indicate slight attraction. This is most likely because Beat was the most frequent gesture lemma in the reference dataset, amounting to 23% of all gesture lemmas (Table 6).

Regarding the OACs, as Table 5 would predict, gestures with bidirectional movements show significant attraction. For back and forth, the gesture lemmas I.Bidirection-Horizontal (Figure 5) and I.2H-Alternation-Sagittal (Figure 6) account for a large proportion of the accompanying gestures and yield high odds ratios. The same applies to in and out; in addition to these two lemmas, however, a third lemma, I.1H-Small-To-Fro (Figure 9), has an even higher odds ratio because it was rarely found in the reference dataset. For up and down, two bidirectional gestures on the vertical axis, I.Bidirection-Vertical (Figure 7) and I.Small-Bidirection-Vertical (Figure 8), both obtain odds ratios of over 55, reflecting the fact that these two lemmas were used almost exclusively with up and down and rarely, if ever, in the reference dataset. Finally, unlike the RACs, each variant of the OACs is significantly repelled by the Beat gesture, which also typically involves bidirectional movement but lacks a referential function. This may be because the Beat gesture, as a gesture with bidirectional movement, was overshadowed by other bidirectional gestures whose referential meanings align perfectly with the meanings of the OACs.

Figure 6. I.2H-Alternation-Sagittal Footnote 17.

Figure 7. I.Bidirection-Vertical Footnote 18.

Figure 8. I.Small-Bidirection-Vertical Footnote 19.

Figure 9. I.1H-Small-To-Fro Footnote 20.

4. Discussion

With these results, we now return to the research question posed in Section 1: if there are specific gestures that are frequently employed with the [ADV and ADV] constructions, do they form multimodal constructions, or are they more compositional crossmodal collostructions (Uhrig, 2021)? In Section 4.1, we discuss the ontological status of the multimodal patterns reviewed in the preceding section in light of the proposed criteria for identifying multimodal constructions, namely (i) frequency and (ii) salience (Hoffmann, 2017; Schoonjans, 2017). Section 4.2 then presents an account of the embodied motivations for multimodal constructions.

4.1. Multimodal constructions versus crossmodal collostructions

First, we consider the fact that the gesture lemmas I.Cyclic-Sagittal and Beat were frequently observed with all three variants of the RACs, each making up 20% to 37% of the data. It is not surprising that I.Cyclic-Sagittal accounted for such a large proportion, as it expresses iteration (Ruth-Hirrel, 2018) or ongoing events (Ladewig, 2011), which are fully compatible with the aspectual meanings of the RACs. But what about Beat? Since the Beat gesture is essentially of the free-combination type (such as air quotes; Uhrig, 2021) and has no semantic value of its own, are its frequent co-occurrences with the RACs merely an accidental feature rooted in the specific register of this study, namely TED Talks? This possibility is challenged by Lelandais’ (2024) study, which also examines the co-speech gestures of over and over. Using an archive of American television news programs, that study found that cyclic gestures and beat gestures were frequently produced in combination with over and over, accounting for 42% and 38% of the hand gestures co-occurring with the construction, respectively (Lelandais, 2024, pp. 21–24). Although these proportions are slightly higher, this finding is in close agreement with the results of the present study, making it more convincing that frequent co-occurrence with I.Cyclic-Sagittal and Beat is a general feature of the RACs, or at least of over and over.Footnote 23 Therefore, it can be argued that the combinations of each variant of the RACs with I.Cyclic-Sagittal or Beat meet the first criterion for multimodal constructions, namely frequency.Footnote 24 As we saw in Table 8, however, the two gesture lemmas differ with respect to their odds ratios.
For each variant of the RACs, I.Cyclic-Sagittal features a high odds ratio; hence, in light of the second criterion of salience, it is highly likely that the combination of each variant of the RACs with I.Cyclic-Sagittal ceases to be merely compositional and instead becomes stored as an integrated whole, that is, a multimodal construction. By contrast, the much lower odds ratios for Beat in all three variants, around 0.8 to 1.5, suggest that the combination of the RACs and Beat would remain compositional, positioned somewhere along a continuum between free combinations and crossmodal collostructions, especially given that the associations are not significant.

As with the combination of the RACs and I.Cyclic-Sagittal, it is highly likely that each variant of the OACs forms multimodal constructions with its corresponding bidirectional gestures. In particular, for up and down, the bidirectional gestures on the vertical axis (I.Bidirection-Vertical and I.Small-Bidirection-Vertical) yielded considerably high odds ratios, indicating a high degree of multimodal constructionhood. For back and forth and in and out, the gesture lemmas I.Bidirection-Horizontal and I.2H-Alternation-Sagittal may well be part of multimodal constructions in terms of both frequency and salience. However, the status of I.1H-Small-To-Fro for in and out remains open to debate. On the one hand, I.Bidirection-Horizontal and I.2H-Alternation-Sagittal were also observed in the reference dataset with substantial frequency, suggesting that they can be regarded as recurrent gestures. On the other hand, I.1H-Small-To-Fro was rarely found in the reference dataset and is thus more likely a so-called singular gesture (Müller, 2017). As a result, its odds ratio for in and out was much higher than those of the other two lemmas, despite its lower frequency of co-occurrence with the construction. This apparently contradictory result seems to stem from a methodological issue inherent in the statistical approach chosen for this study. However, it also raises a theoretical question about which criterion, frequency or salience, has a greater impact on multimodal constructionhood, a question that requires further empirical investigation.
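The apparent paradox can be reproduced with invented numbers. In the sketch below, which uses hypothetical counts, lemma X co-occurs with the construction more often but is common in the reference data, whereas lemma Y co-occurs less often but is nearly absent from the reference data. Woolf’s log-scale Wald interval is used here as a simple stand-in for the exact conditional interval that R’s fisher.test reports. Y receives the higher odds ratio, but its interval is also far wider, because a reference-data cell of 2 makes the estimate unstable.

```python
from math import exp, log, sqrt
from typing import Tuple


def odds_ratio_woolf_ci(
    a: int, b: int, c: int, d: int, z: float = 1.959964
) -> Tuple[float, float, float]:
    """Sample odds ratio of [[a, b], [c, d]] with a Woolf (log-scale Wald) CI.

    Requires all four cells to be positive; the default z gives a 95% interval.
    """
    odds_ratio = (a * d) / (b * c)
    se = sqrt(1 / a + 1 / b + 1 / c + 1 / d)  # standard error of log(odds ratio)
    return odds_ratio, exp(log(odds_ratio) - z * se), exp(log(odds_ratio) + z * se)


# Hypothetical: 100 gestures with the construction, 500 in the reference data.
lemma_x = odds_ratio_woolf_ci(30, 70, 50, 450)  # frequent with C, common in reference
lemma_y = odds_ratio_woolf_ci(12, 88, 2, 498)   # rarer with C, nearly absent in reference
for name, (or_, low, high) in (("X", lemma_x), ("Y", lemma_y)):
    print(f"lemma {name}: OR = {or_:.2f}, 95% CI [{low:.2f}, {high:.2f}]")
```

Reporting an interval alongside each odds ratio is one way to keep a high but imprecise estimate from being read as a stronger association than a moderate, well-supported one.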

While the argument presented so far in this section aligns more closely with approaches emphasizing lower-level representations of constructions (the exemplar-based model; e.g., Bybee, 2013), the results can also be interpreted as supporting the existence of higher-level multimodal constructional schemas, such as [the RAC (ADV1 and ADV1) + the cyclic gesture family] and [the OAC (ADV1 and ADV2) + the bidirectional gesture family]. For the RACs overall, the cyclic gestures (highlighted in red in Tables 4–6) accounted for 44.6% of all gestures co-occurring with the RACs (see Table 4), yielding an odds ratio of 9.39 (95% CI: 6.32, 14.16, p < 0.001); for the OACs overall, the bidirectional gestures (highlighted in blue in Tables 4–6) accounted for 73.1% of all gestures accompanying the OACs (see Table 5), yielding an odds ratio of 43.02 (95% CI: 26.98, 70.35, p < 0.001). From the perspective that assumes redundant representations of constructions (Hilpert, 2019, pp. 67–68), these multimodal constructional schemas and the lower-level multimodal constructions can coexist in the construct-i-con. The advantage of assuming higher-level multimodal constructional schemas, however, is that they can license atypical combinations of the RACs/OACs and the cyclic/bidirectional gestures. Such atypical combinations cannot be accounted for by the lower-level multimodal constructions discussed above, since the latter represent the (proto-)typical combinations of the target constructions and the gesture families in question. For example, in addition to I.Bidirection-Horizontal and I.2H-Alternation-Sagittal, the constructions back and forth and in and out were accompanied by I.(Small)-Bidirection-Vertical in four and three instances, respectively.
The multimodal constructional schema [the OAC (ADV1 and ADV2) + the bidirectional gesture family] is likely to have operated in the production of these atypical multimodal constructs, based on the speaker’s online conceptualizations of the described bidirectional events (see also the next section).
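Testing a schema-level pairing such as [the RAC + the cyclic gesture family] amounts to pooling lemma-level counts into a single 2 × 2 table before computing the odds ratio. The sketch below shows only that bookkeeping; the lemma labels, counts, and family membership are hypothetical placeholders (the actual family assignments correspond to the color coding in Tables 4–6).

```python
from typing import Dict, Iterable, Tuple


def family_table(
    construction_counts: Dict[str, int],
    reference_counts: Dict[str, int],
    family: Iterable[str],
) -> Tuple[int, int, int, int]:
    """Collapse lemma-level counts into a 2 x 2 table for a whole gesture family.

    Returns (a, b, c, d) = (family with C, other gestures with C,
                            family in reference, other gestures in reference).
    """
    members = set(family)
    a = sum(k for lemma, k in construction_counts.items() if lemma in members)
    c = sum(k for lemma, k in reference_counts.items() if lemma in members)
    b = sum(construction_counts.values()) - a
    d = sum(reference_counts.values()) - c
    return a, b, c, d


# Hypothetical placeholders: "cyclic_1" and "cyclic_2" stand in for family members.
with_construction = {"cyclic_1": 40, "cyclic_2": 6, "beat": 30, "other": 24}
in_reference = {"cyclic_1": 30, "cyclic_2": 10, "beat": 120, "other": 340}
a, b, c, d = family_table(with_construction, in_reference, ["cyclic_1", "cyclic_2"])
print((a, b, c, d), "family-level odds ratio:", round((a * d) / (b * c), 2))
```

Once pooled, the family-level table is analyzed exactly like a lemma-level one; pooling raises the counts in the relevant cells, which typically narrows the confidence interval around the odds ratio.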

4.2. Exbodiment: the experiential basis for multimodal constructions

As we have seen thus far, Uhrig’s (2021) distinction between multimodal constructions and crossmodal collostructions is based on the strength of association between a linguistic construction and a gesture, as well as on the lack of compositionality resulting from that association. By contrast, Hoffmann’s (2021) definition of multimodal constructions, quoted in Section 1, emphasizes the jointness of meaning, or co-expressiveness, of the linguistic and gestural parts, which appears to hold for the potential multimodal constructions posited in the previous section. This property of multimodal constructions is best explained by drawing on Mittelberg’s (2013) notion of exbodiment, according to which gestures are thought to be ‘bodily semiotic acts through which embodied image and action schemas may, to some degree at least, be externalized, made visible, and used for meaningful communication’ (Mittelberg, 2008, p. 144). For the target constructions of the present study, the relevant schemas are the image schemas in which their meanings are conventionally grounded, an image schema being ‘a recurring, dynamic pattern of our perceptual interactions and motor programs that gives coherence and structure to our experience’ (Johnson, 1987, p. xiv).

Regarding the RACs, their meanings are grounded in the ITERATION image schema, as is also evident from their forms, which involve lexical iteration. The ITERATION schema, in turn, is inherently connected with the CYCLE image schema (Ruth-Hirrel, 2018), because we experience iteration through cycles, such as the hands going around a clock or the sun moving across the sky, a conception in which the primary metaphor TIME IS SPACE operates (Fauconnier & Turner, 2008). As such, the image schemas ITERATION and CYCLE serve as embodied motivations for the RACs, and they become externalized, or exbodied (Mittelberg, 2013), in cyclic gestures.

Similarly, the OACs can also be seen as grounded in the ITERATION schema, given the aspectual dimension of their meanings, but they are certainly more strongly grounded in spatial-relation image schemas (Lakoff & Johnson, 1999) such as FRONT-BACK, UP-DOWN, LEFT-RIGHT, and so on. For up and down, the UP-DOWN image schema evidently plays a crucial role in both the meaning of the construction and the manifestation of bidirectional gestures on the vertical axis. More interestingly, however, the constructions back and forth and in and out were accompanied by bidirectional gestures realized on various axes. As mentioned at the end of the previous section, in addition to I.Bidirection-Horizontal and I.2H-Alternation-Sagittal, these constructions also co-occurred with I.(Small)-Bidirection-Vertical several times. This suggests that they are arguably grounded in a more general spatial image schema, something like BIDIRECTIONALITY, with a more specific image schema (such as FRONT-BACK or LEFT-RIGHT) activated and enacted in each usage event to produce a bidirectional gesture on a specific axis. This specification plausibly depends on the speaker’s construal, including perspective-taking, fictive motion, and so on, which is in turn likely influenced by various contextual factors. If this argument holds, back and forth and in and out may have been accompanied less often by bidirectional gestures on the vertical axis, and more often by those on the horizontal and sagittal axes, because a competing alternative prevailed, namely the multimodal construction ‘up and down + I.Bidirection-Vertical/I.Small-Bidirection-Vertical’, which is conventionally grounded in the more specific UP-DOWN image schema.

On a final note, there is another noteworthy observation concerning in and out. While its preference for co-speech gestures was quite similar to that of back and forth, its meaning, or more specifically the image schema on which its meaning is based, is different: the meaning of in and out inherently involves CONTAINMENT. Nevertheless, the gestures accompanying in and out had few elements that could evoke the CONTAINMENT image schema and instead profiled its bidirectional aspect, which suggests that there may be some kind of pattern or constraint governing which aspects of an image schema can be materialized in gesture.

5. Conclusion

Using a small multimodal corpus constructed by manual annotation (Section 2.7), the present study shows that the [ADV and ADV] constructions are significantly attracted to certain kinds of gesture lemmas, forming multimodal constructions. On the one hand, each variant of the RACs co-occurred quite frequently with I.Cyclic-Sagittal and Beat. However, subsequent crossmodal collostructional analysis revealed that, in combination with the RACs, the former is highly likely to be stored as part of multimodal constructions, whereas the latter is positioned somewhere between free combinations and crossmodal collostructions. On the other hand, each variant of the OACs exhibited significant attraction to its corresponding bidirectional gestures, and these combinations are therefore also plausibly represented as multimodal constructions in a speaker’s construct-i-con.

Section 4.2 also discussed the embodied motivations for the potential multimodal constructions, drawing on Mittelberg’s (2008, 2013) notion of exbodiment. Building on that notion, it is also possible to predict a priori what kinds of gestures may co-occur with a target construction by first considering the embodied image or action schemas in which the meaning of the construction is grounded. Thus, what Tables 4 and 5 showed, namely that the cyclic gestures and bidirectional gestures accounted for 44% and 73% of the gestures accompanying the RACs and the OACs, respectively, is not surprising, although the kinesic information about gestures that can be predicted from the embodied schemas is quite schematic in nature. However, as the present study has shown, the realization of those gestures is patterned in certain ways. In other words, they have specific conventionalized kinesic features (e.g., I.2H-Alternation-Sagittal, I.Cyclic-Sagittal), which in turn makes it plausible to assume that multimodal constructions have prototype structures (Cienki, 2017), such that some gestural configurations are more prototypical while others are more peripheral (compare ‘back and forth + I.2H-Alternation-Sagittal’ with ‘back and forth + I.Bidirection-Vertical’). Therefore, one task for future research in multimodal CxG will be to identify such conventionalized features of the gestures that externalize the embodied schemas of a target construction, schemas that could in principle be manifested in many different ways.

Acknowledgments

I would like to express my gratitude to Toshio Ohori for his supervision of the original thesis on which this paper is based. I am also grateful to Yoichiro Hasebe for creating and maintaining TCSE. Furthermore, this paper has greatly benefited from comments by Beate Hampe, Hikaru Hotta, Ippei Inoue, Jordan Zlatev, Kyoko Ohara, Manon Lelandais, Masato Yoshikawa, Minoru Ohtsuki, and Yoshiki Nishimura. Lastly, I extend my appreciation to the two anonymous reviewers and the editor for their valuable feedback on the manuscript.

Appendices and data availability statement

Appendices A (a complete list of gesture lemmas) and B (coding criteria for distinguishing similar lemmas), R scripts for the Fisher exact tests, and spreadsheets summarizing the results of gesture annotations and the two intercoder reliability experiments are available on OSF (https://osf.io/8t2pj/).

Competing interest

The author declares none.