Introduction

This article revisits Glenn Snyder’s seminal theory on the ‘stability–instability paradox’ to consider the risks associated with the artificial intelligence (AI)–nuclear nexus in future digitised warfare.Footnote 1 It critiques the dominant interpretation (or ‘red-line’Footnote 2) of Snyder’s theory: the presence of restraint among nuclear-armed states under mutually assured destruction (MAD) – where both sides possess nuclear weapons that present a plausible mutual deterrent threat – does not inhibit low-level conflict from occurring under the nuclear shadow.Footnote 3 The article uses fictional scenarios to illustrate how deploying ‘AI-enabled weapon systems’ in a low-level conflict between the United States and China in the Taiwan Straits might unintentionally spark a nuclear exchange.Footnote 4 The scenarios support the alternative interpretation of the paradox, known as the ‘brinkmanship model’, conceptualised by Christopher Watterson.Footnote 5 Under the red-line interpretation, by contrast, nuclear-armed states are incentivised to exploit MAD and engage in military adventurism to change the prevailing political status quo or make territorial gains, confident that the nuclear stalemate will cap escalation.Footnote 6 The scenarios indicate that using AI-enabled weapon systems accelerates a pre-existing trend in modern warfare towards speed, precision, and complexity, which reduces human control and thus increases escalation risk.Footnote 7 The use of fictional scenarios in this article provides a novel and imaginative conceptual tool for strategic thinking, one that challenges assumptions and stimulates introspection and can thereby improve policymakers’ ability to anticipate and prepare for (but not predict) change.

Although Snyder’s 1961 essay was the first to elaborate on the stability–instability paradox in detail, he was not the first scholar to identify this phenomenon. In 1954, for example, B. H. Liddell Hart noted that ‘to the extent that the hydrogen bomb reduces the likelihood of full-scale war, it increases the possibility of limited war pursued by widespread local aggression’.Footnote 8 The Eisenhower administration’s declaratory policy of massive retaliation (i.e. first strike capacity) has primarily been attributed to Liddell Hart’s strategic rationale.Footnote 9 Building on Snyder’s essay, Kenneth Waltz opined that while nuclear weapons deter nuclear use, they may cause a ‘spate of smaller wars’.Footnote 10 Similarly, Robert Jervis posited that although the logic of the paradox is sound, in the real world the stabilising effects of the nuclear stalemate (i.e. a strategic cap) implied by the paradox cannot be taken for granted.Footnote 11 In other words, neither Waltz nor Jervis was confident that escalation could be controlled. In addition to Snyder’s paradox, two other influential strategic concepts emerged in the 1950s, adding a new layer of sophistication and nuance to the ideas originally propounded by Bernard Brodie and William Borden a decade earlier:Footnote 12 Thomas Schelling’s game-theoretic notion of ‘threats that leave something to chance’,Footnote 13 and Albert Wohlstetter’s clarification of the distinction between first- and second-strike capabilities.Footnote 14 In subsequent years, these foundational ideas were revisited to consider nuclear brinkmanship,Footnote 15 the ‘nuclear revolution’,Footnote 16 and the impact of emerging technology.Footnote 17

Recent empirical studies on the paradox explain how leaders perceive risk when engaging in low-level conflict in nuclear dyads and the unintentional consequences of the paradox playing out in a conflict below the nuclear threshold, but they say less about how threats of aggression and military adventurism can inadvertently or accidentally escalate out of control.Footnote 18 Even less has been said about how emerging technology might affect these dynamics.Footnote 19 This limited evidentiary base can be explained in part by (a) the lack of observable empirical phenomena – nuclear weapons have not been used since 1945, and we have not seen an AI-enabled war between nuclear powersFootnote 20 – and (b) the problem of biased inference caused by case selection, as qualitative studies focus heavily on the India–Pakistan and US–Soviet dyads to prove the validity of the paradox retroactively.Footnote 21 Combining empirical plausibility, theoretical rigour, and imagination, the article uses fictional scenarios (or ‘future counterfactuals’Footnote 22) to illustrate and test (not predict or prove) the underlying assumptions of the dominant red-line interpretation of Snyder’s stability–instability paradox in future AI-enabled war. In doing so, it contributes to the discourse on using fictionalised accounts of future conflict and fills a gap in the literature about the unintended escalatory consequences of the paradox in modern warfare.

The article is organised into three sections. The first section examines the core logic, key debates, and underlying assumptions of the stability–instability paradox. The second section describes the main approaches to constructing fictional scenarios to reflect on future war and how these scenarios can be designed to consider the implications for Snyder’s paradox of introducing AI-enabled weapon systems into nuclear dyads. The final section uses two fictional scenarios to illustrate how AI-enabled weapon systems in a future Taiwan crisis between the United States and China might spark inadvertent and accidental nuclear detonation.Footnote 23 The scenarios expose the fallacy of the supposed safety offered by nuclear weapons in high-intensity digitised conflict under the nuclear shadow.

Rethinking the stability–instability paradox in future AI-enabled warfare

The core logic of the stability–instability paradox captures the relationship between the probability of a conventional crisis or conflict and that of a nuclear crisis or conflict. Snyder’s 1961 essay is generally accepted as the seminal treatment of the paradox. In it, he argues that ‘the greater the stability of the “strategic” [nuclear] balance of terror, the lower the stability of the overall balance at its lower levels of violence’.Footnote 24 Put differently, when a nuclear stalemate (or MAD) exists,Footnote 25 the probability of a low-level crisis or conflict is higher (i.e. crisis instability). According to Snyder: ‘If neither side has a “full first-strike capability”, and both know it, they will be less inhibited about the limited use of nuclear weapons than if the strategic (nuclear) balance were unstable.’Footnote 26 In other words, under the condition of MAD, states are more likely to be deterred from risking all-out nuclear Armageddon. Yet because of the strategic cap this balance is believed to offer, they are also more likely to regard limited nuclear warfare as less risky.

Similarly, Jervis notes that ‘to the extent that the military balance is stable at the level of all-out nuclear war, it will become less stable at lower levels of violence’.Footnote 27 During the Cold War, a sense of foreboding about the risks of nuclear escalation fuelled US–Soviet arms racing dynamics and cast doubt on the stability side of the paradox’s equation; nonetheless, the paradox’s larger rationale remains theoretically sound. That is, nuclear-armed states, all things being equal, would exercise caution to avoid major wars and nuclear exchanges. As a corollary, the credible threat of nuclear retaliation (or second-strike capability) gives states a perceived freedom of manoeuvre to engage in brinkmanship, limited wars (including the use of tactical nuclear weapons),Footnote 28 proxy wars, and other forms of low-level provocation.Footnote 29 This interpretation of the paradox, also known as the red-line model (or strategic cap), remains dominant in the strategic literature and policymaking circles.Footnote 30 The link between this interpretation of the paradox and pathways to conflict, specifically intentional versus unintentional and accidental nuclear use, is explained by its less appreciated connection with nuclear brinkmanship, described below.

Although Snyder does not offer, in his 1961 essay, a rigorous causal explanation of low-level aggression in nuclear dyads conditioned on MAD, he does acknowledge the ‘links’ between the nuclear and conventional balance of power and how they ‘impinge on each other’. Conventional (i.e. non-nuclear) instability in nuclear stalemates can lead to strategic instability, thus destabilising the ‘overall balance of power’.Footnote 31 Snyder describes several causal contingencies (or ‘provocations’) in the relationship between nuclear and conventional stability that could risk nuclear escalation:

In a confrontation of threats and counterthreats, becoming involved in a limited conventional war which one is in danger of losing, suffering a limited nuclear strike by the opponent, becoming involved in an escalating nuclear war, and many others. Any of these events may drastically increase the potential cost and risk of not striking first and thereby potentially create a state of disequilibrium in the strategic nuclear balance.Footnote 32

Snyder’s causal-links formulation offers scholars a useful theoretical baseline for exploring the risk of nuclear escalation in the overall balance, particularly the role of nuclear brinkmanship and the attendant trade-off between the perceived risk of total war and the possibilities for military adventurism (e.g. coercive pressures, escalation dominance, conventional counterforce, and false-flag operations).Footnote 33 According to Robert Powell, ‘states exert coercive pressure on each other during nuclear brinkmanship by taking steps that raise the risk that events will go out of control. This is a real and shared risk that the confrontation will end in a catastrophic nuclear exchange’ (emphasis added).Footnote 34 In other words, brinkmanship is a dangerous game of chicken: a manipulation of risk that courts uncontrolled, inadvertent escalation in the hope of achieving a political gain.Footnote 35

While some scholars treat brinkmanship as a corpus distinct from paradox theorising, the connection made by Snyder (albeit not explicitly or in depth) suggests that brinkmanship behaviour is an intrinsic property of the paradox.Footnote 36 Although Thomas Schelling’s notion of the ‘threat that leaves something to chance’ says more about the role of uncertainty in deterrence theory, it helps elucidate how and why nuclear dyads might engage in tests of will and resolve at the precipice of Armageddon, and the escalatory effects of this behaviour.Footnote 37 Nuclear brinkmanship, understood as a means to manipulate risk during crises, establishes a consistent theoretical link between the paradox and unintentional (inadvertent and accidental) nuclear use. The first scenario in this article illustrates how such a catalysing chain of ‘threats and counterthreats’ could spark an inadvertent ‘limited’ nuclear exchange.

This feature of the paradox can be viewed through two causal pathways: the red-line model and the brinkmanship model. Although there is no consensus on the causal mechanisms underpinning an increase in low-level conflict in a relationship of nuclear stalemate (i.e. MAD),Footnote 38 the dominant red-line model holds that mutual fear of nuclear escalation under the conditions of MAD reduces the risk and uncertainty of states’ pursuing their strategic goals (altering the territorial or political status quo), which drives military adventurism.Footnote 39 The alternative brinkmanship model posits that, because nuclear threats generate a risk of uncontrollable escalation, states may be incentivised to accept that risk of escalation to a nuclear exchange in order to obtain concessions.Footnote 40

These two interpretations predict divergent empirical outcomes. The red-line model predicts a negligible risk that lower-level military adventurism will cause an all-out nuclear war. By contrast, the brinkmanship model predicts that lower-level military adventurism inherently carries an irreducible risk of nuclear escalation.Footnote 41 Despite general agreement among scholars about the paradox’s destabilising effects at lower levels, the literature is unclear about the causal mechanisms through which the paradox increases instability at those levels. Francesco Bailo and Benjamin Goldsmith caution that ‘causation is attributed to nuclear weapons, although the qualitative evidence is often interpreted through the prism of the [stability–instability paradox] theory’ and that the near-exclusive focus on the US–Soviet and India–Pakistan nuclear cases has led to inferences biased by selection on the dependent variable.Footnote 42 The fictional scenarios in this article use the paradox as a theoretical lens to construct a causal mechanism for a future US–China nuclear exchange in the Taiwan Straits, thus extending the analysis beyond those two dyads and reducing the risk of selection bias.

Schelling argues that the power of these dynamics lies in the ‘danger of a war that neither wants, and both will have to pick their way carefully through crisis, never quite sure that the other knows how to avoid stumbling over the brink’ (emphasis added).Footnote 43 According to this view, the dominant interpretation of the paradox exaggerates the stability of MAD. It thus neglects the potency of Schelling’s ‘threats that leave something to chance’: the risk that military escalation cannot be entirely controlled in a low-level conventional conflict.Footnote 44 Under conditions of MAD, states cannot credibly threaten intentional nuclear war, but during crises they can invoke ‘threats that leave something to chance’, thereby, counter-intuitively, increasing the risk of inadvertent and accidental nuclear war.Footnote 45

Schelling’s ‘threats that leave something to chance’ describes how nuclear dyads can manoeuvre with military force short of all-out war and thus helps resolve the credibility problem of states making nuclear threats during a crisis.Footnote 46 Schelling’s game-theoretic ‘competition in risk-taking’ describes the dynamics of nuclear bargaining in brinkmanship, which are heavily influenced by actors’ risk tolerance.Footnote 47 The higher the stakes, the more risk a state is willing to take. Suppose one side can raise the costs of victory (i.e. of defending or challenging the status quo) above the value the other side attaches to victory. In that case, the contest can become a ‘game of chicken’ in which the initiator cannot be sure that its adversary will not fight, for reasons such as national honour, reputation, the belief that the other side will buckle, or misperception and miscalculation.Footnote 48 For instance, during crises, leaders are prone to viewing their own actions (e.g. deterrence signalling, troop movements, and using ‘reasonable’ force to escalate a conflict to compel the other side to back down) as benign and similar behaviour by the other side as malign. Each side in an escalating crisis, perceiving itself to be displaying more restraint than the other, could inadvertently escalate the situation.Footnote 49

One of the goals of competition in risk-taking is to achieve escalation dominance:Footnote 50 possessing the military means to contain or defeat an enemy at every stage of the escalation ladder with the ‘possible exception of the highest’, i.e. an all-out nuclear war in which dominance is less relevant.Footnote 51 Escalation dominance assumes that states can accurately predict the effects of moving up the escalation ladder, determine the balance of power and resolve during crises, and overcome divergences between the two sides’ perceptions of that balance.Footnote 52 The first scenario in this article illustrates how oversimplified these assumptions are and, thus, the inherent risks that belie escalation dominance. Jervis argues that in the ‘real world’, which lacks perfect information and control and is never free from Clausewitzian friction, the stabilising effect of nuclear stalemate associated with the ‘nuclear revolution’ (i.e. secure, second-strike forces) ‘although not trivial, is not so powerful’.Footnote 53 In other words, although the logic of MAD has indubitable deterrence value, on the battlefield its premise is flawed because it neglects Schelling’s ‘threats that leave something to chance’, the uncertainty associated with human behaviour during a crisis (e.g. emotions, bias, inertia, mission creep, and the war machine), and, increasingly, the intersection of human psychology with an ever more digitised battlefield.Footnote 54 Advances in AI technology (and the broader digitalisation of the battlefield) have not eliminated, and arguably have thickened, the ‘fog of war’.Footnote 55 For example, much as in the 1973 intelligence failure that left Israel surprised by the Arab attacks that initiated the Yom Kippur War, Israeli intelligence, despite possessing world-class Signals Intelligence (SIGINT) and cyber capabilities, miscalculated both Hamas’s capabilities and its intentions ahead of the October 2023 terrorist attack.Footnote 56

Integrating AI technology with nuclear command, control, and communication (NC3) systems enhances states’ early warning systems, situational awareness, early threat detection, and decision-support capabilities, thus raising the bar for a successful nuclear first strike.Footnote 57 However, like previous generations of technological enhancements, AI cannot alter the immutable dangers associated with the unwinnable nature of nuclear war.Footnote 58 Jervis writes that ‘just as improving the brakes on a car would not make playing [the game of] Chicken safer, but only allow them to drive faster to produce the same level of intimidation’. By the same logic, the integration of AI into NC3 will only lead both sides to recalibrate their actions to produce the requisite level of perceived danger to accomplish their goals.Footnote 59 In short, neither side in a nuclear dyad can confidently engage in low-level adventurism without incurring ‘very high costs, if not immediately, then as a result of a chain of actions that cannot be entirely foreseen or controlled’ (emphasis added).Footnote 60 Indeed, Schelling opined that the lack of control over the probability of nuclear escalation is the ‘essence of a crisis’.Footnote 61 Similarly, Alexander George and Richard Smoke found that an important cause of deterrence failure is the aggressor’s belief that it can control the risks during a crisis.Footnote 62 In sum, low-level adventurism under the nuclear shadow, undertaken in the confidence that MAD will prevent escalation, cannot rule out sparking a broader conflict that neither side favours. The scenarios below consider how these ‘costs’ and ‘chains of action’ are affected in AI-enabled warfare.

Fictional scenarios to anticipate future war

According to a recent RAND Corporation report, ‘history is littered with mistaken predictions of warfare’.Footnote 63 With this warning as an empirical premise, the fictional scenarios of future warfare in this article explore how inadvertent and accidental nuclear war might unfold in a future Taiwan Straits conflict. Traditional approaches to anticipating future conflict rely on counterfactual qualitative reasoning, quantitative inference, game-theoretic logic, and historical deduction.Footnote 64 By contrast, the scenarios presented here combine present and past trends in technological innovation with imagination. They thus contribute to the existing corpus of work that considers hitherto-unknown or underexplored drivers of future wars (e.g. new technologies, environmental change, or ideological/geopolitical shifts) to imagine how future conflict could emerge. In doing so, these fictional scenarios challenge existing assumptions, operational concepts, and conventional wisdom associated with the genesis of war, offering foresight into the possibility of future AI-enabled war, its onset, how it might unfold, and, importantly, how it might end.

Three broad approaches have been used to anticipate future conflict.Footnote 65 The first approach uses quantitative methods to consider internal causes of conflict, or ‘push factors’, such as socio-economic development, arms control, arms racing, and domestic politics.Footnote 66 Rapid progress in AI and big data analytics has significantly advanced this field, as seen, for example, in Lockheed Martin’s Integrated Crisis Early Warning System, the EU’s Conflict Early Warning System, and Uppsala University’s ViEWS.Footnote 67 These models use probabilistic statistical methods to predict the conditions under which a conflict (mainly civil wars sparked by internal factors) might be triggered, not the causal pathways between states leading to war or how a war might play out. In short, this approach is of limited use in forecasting interstate conflict, and its reliance on historical datasets means that it cannot account for novelty or challenge existing assumptions.

The second approach, established during the Cold War, applies qualitative historical deduction and international relations theorising (e.g. hegemonic stability theory, ‘anarchy’ in international relations, neo-realism, the security dilemma, democratic-peace theory, deterrence theory, and the related stability–instability paradox, which this study explores)Footnote 68 to top-down ‘pull factors’ in order to understand ‘international politics, grasp the meaning of contemporary events, and foresee and influence the future’.Footnote 69 This approach has posited explanations and causes for conflicts ranging from the First World War, the Cold War, ethnic conflict in Africa, and the break-up of Yugoslavia to, more recently, the conflict in Ukraine.Footnote 70 It has also been used to explain the absence of major war between nuclear dyads and the concomitant propensity of nuclear states to engage in low-level aggression and arms competition.Footnote 71 Nuclear deterrence theorising is limited empirically by the scarcity of data on nuclear exchanges, making this method susceptible to accusations of retroactive reasoning, inferences biased by selection on the dependent variable, and problematic rational-choice assumptions.Footnote 72 Like the first approach, this war theorising relies too heavily on historical data and statistics and thus risks under-utilising novelty, which limits its usefulness for policymakers concerned with the ways and means of future AI-enabled war.

The third approach, which this study uses, is not wedded to generalised theories, is not limited by data scarcity, and is not concerned with predicting conflict. Like the first two approaches, it uses historical analysis, causal inference, and assumptions, but it then combines these with imagination to produce plausible depictions of future conflict. It is thus the only one of the three approaches that incorporates hitherto-unforeseen possibilities for technological innovation to deduce the impact of these trends on the ways and means of future war.Footnote 73 Like the other two, however, this approach suffers from several shortcomings. First, it uses non-falsifiable hypotheses. For instance, fictionalised accounts of future war rarely consider the strategic logic (or the endgame) once confrontation ensues. In the case of high-intensity AI-enabled hyper-speed warfare (see Scenario 1), while the pathway to war can be intellectualised, the outcome of a catastrophic all-out nuclear exchange between great powers is, as with the recent conflicts in Ukraine and Gaza, neither apparent nor straightforward to fathom. This challenge can produce unrealistic and contrived portrayals of future war.Footnote 74

Second, the approach is susceptible to groupthink, collective bias, and an overreliance on predetermined outcomes, which can distort policy change. This collective mindset is often underpinned by the oversimplistic view that the course of future conflict can be extrapolated from present technological trends. Recent cognitive studies corroborate the Scottish philosopher David Hume’s hypothesis that individuals tend to favour what is already established: we endow the ‘status-quo with an unearned quality of goodness, in the absence of deliberative thought, experience or reason to do so’.Footnote 75 For instance, many depictions of future wars between the United States and China reflect the broader US national security discourse on Chinese security issues.Footnote 76 Notably, the US think-tank community used the techno-centric novel Ghost Fleet to advocate accelerating President Obama’s ‘pivot’ to Asia policy.Footnote 77

Finally, this approach often mistakenly views emerging technology (especially AI, autonomy, and cyber) as a deus ex machina possessing a magical quality, resulting in the fallacy that future wars will be entirely different in nature from past and present ones.Footnote 78 This fallacy emerged during the first revolution in military affairs (RMA) in the late 1990s. The RMA was associated with the notion that technology would make future wars fundamentally different because advances in surveillance, information, communications, and precision munitions would make warfare more controllable, predictable, and thus less risky. Several observers have made similar predictions about the promise of AI and autonomy.Footnote 79 Clausewitz warned that the notion that one can ‘defeat an enemy without too much bloodshed … [is] a fallacy that must be exposed’.Footnote 80 This warning applies equally to the emerging AI–nuclear nexus, particularly to the potential ‘bloodshed’ resulting from the inadvertent and accidental nuclear use illustrated in the scenarios below.

Indeed, the history of conflict demonstrates that technology is invariably less impactful (or revolutionary) than anticipated or hoped for. Most predictions of technological change have gone awry because they overestimate the revolutionary impact of the latest technical Zeitgeist and underestimate the uncertainties of conflict and the immutable political and human dimensions of war.Footnote 81 According to Major General Mick Ryan, Commander of the Australian Defence College, ‘science fiction reminds us of the enduring nature of war’, and ‘notwithstanding the technological marvels of science fiction novels, the war ultimately remains a human endeavor’.Footnote 82 The scenarios in this article demonstrate that while the character of war continues to evolve, future AI-enabled warfare will remain a human endeavour, arguably more so than ever.Footnote 83 Moreover, because they are written without an agenda or advocacy motive, the scenarios in this article offer a less US-centric depiction of a future United States–China conflict, thus distinguishing themselves from the broader US national security discourse about China.Footnote 84 Specifically, the scenarios pay close attention to strategic, operational, and tactical considerations and are mindful of US, Chinese, and Taiwanese domestic constituencies and political clocks (especially in Scenario 2).

Fictional Intelligence (FICINT) is a concept coined by the authors August Cole and P. W. Singer to describe the anticipatory value of fictionalised storytelling as an agent of change. Cole and Singer describe FICINT as ‘a deliberate fusion of narrative’s power with real-world research utility’.Footnote 85 As a complementary approach alongside traditional methods such as wargaming, red-teaming, counterfactual thinking, and other simulations,Footnote 86 FICINT has emerged as a novel tool for conflict anticipation and has gained significant traction with international policymakers and strategic communities.Footnote 87 The competing utility of these various approaches and tools for considering future warfare would benefit from further comparative empirical research.

Because of cognitive biases and heuristics (for example, hindsight and availability biases), most conflicts surprise leaders but in hindsight appear eminently predictable.Footnote 88 Well-imagined fictional prototypes (novels, films, comics, etc.) conjure aesthetic experiences such as discovery, adventure, and escapism that can stimulate introspection, for instance about the extent to which these visions correspond with or diverge from reality.Footnote 89 Such thinking can improve policymakers’ ability to challenge established norms, to project, and to prepare for change. Psychology studies have shown that stimulating people’s imagination can speed up the brain’s neural pathways that deal with preparedness in the face of unexpected change.Footnote 90 This focus can help policymakers recognise and respond to the trade-offs associated with technological change and thus be better placed to pre-empt future technological inflection points, such as the possible advent of artificial general intelligence (AGI) or ‘superintelligence’.Footnote 91 Political scientist Charles Hill argues that fiction brings us closer to ‘how the world works … literature lives in the realm strategy requires, beyond rational calculation, in acts of the imagination’ (emphasis added).Footnote 92

Fictionalised future war in the Taiwan Straits

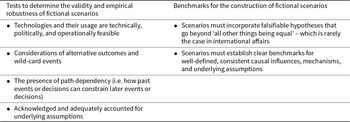

Much like historical counterfactuals, effective fictional scenarios must be empirically plausible (i.e. the technology exists or is being developed) and theoretically rigorous, thus avoiding ex post facto reasoning and biased extrapolation and inference (see Table 1).Footnote 93

Table 1. Effective scenario construction.

Well-crafted scenarios will allow the reader to consider questions such as the following. Under what circumstances might the underlying assumptions be invalid (i.e. if all things were not equal), and what would need to change? How might the scenarios play out differently (i.e. falsifiability), and to what end? What are the military and political implications of these scenarios? How might changing the causal influences and pathways change things? Moreover, operationalising these criteria effectively in fictional scenarios requires considering the motivations for the assumptions underlying the scenarios,Footnote 94 the influence of these assumptions on the outcome, and alternative causal (i.e. escalation vs de-escalation) pathways.Footnote 95 AI-enhanced wargaming could also improve the insights derived from traditional wargaming and identify innovative strategies and actions, including by comparing and contrasting multiple underlying assumptions, escalation pathways, and possible outcomes.Footnote 96

Scenarios that adhere to these criteria are distinguished from science fiction and thus have anticipatory value (although they do not make concrete predictions) and policy relevance. Specifically, they aim to encourage reflection on operational readiness and on managing the risks associated with the intersection of nuclear weapons and AI-enabled weapon systems.Footnote 97 These benchmarks will need to be modified and refined as the underlying assumptions and causal influences change or competing scenarios emerge. In short, the quality of fictional scenarios intended to shape and influence the national security discourse matters.

These scenarios demonstrate how the use of various interconnected AI-enabled systems (i.e. command and control decision-making support, autonomous drones, information warfare, hypersonic weapons, and smart precision munitions) during high-intensity military operations under the nuclear shadow can inadvertently and rapidly escalate to nuclear detonation.Footnote 98 Further, they demonstrate how the coalescence of these AI-enabled conventional weapon systems, used in conjunction during crises, contributes to the stability–instability paradox by (a) reducing the time available for deliberation and thus narrowing the window of opportunity for de-escalation, (b) increasing the fog and friction of complex and fast-moving multi-domain operations,Footnote 99 and (c) introducing novel risks of misperception (especially those caused by the mismatch between human deductive and machine inductive reasoning) and of accidents (especially those caused by human psychological and cognitive fallibilities) in human–machine tactical teaming operations.Footnote 100 In sum, these features increase the risk of inadvertent and accidental escalation and thus undermine the red-line model of the paradox and support the brinkmanship-model interpretation.

Scenario 1: The 2030 ‘flash war’ in the Taiwan Straits

Assumptions

• Great power competition intensifies in the Indo-Pacific, and geopolitical tensions increase in the Taiwan Straits.Footnote 101

• An intense ‘security dilemma’ exists between the United States and China.Footnote 102

• China’s nuclear forces rapidly modernise and expand, and the United States accepts ‘mutual vulnerability’ with Beijing.Footnote 103

• The United States and China deploy AI-powered autonomous strategic decision-making technology into their NC3 networks.Footnote 104

Hypothesis

Integrating AI-enabled weapon systems into nuclear weapon systems amplifies existing threat pathways that, all things being equal, increase the likelihood of unintentional atomic detonation.

How could AI-driven capabilities exacerbate a crisis between two nuclear-armed nations? On 12 December 2030, leaders in Beijing and Washington authorised a nuclear exchange in the Taiwan Straits. Investigators looking into the 2030 ‘flash war’ found it reassuring that neither side employed AI-powered ‘fully autonomous’ weapons or deliberately breached the law of armed conflict.

In early 2030, an election marked by the island’s tense relations with Communist China saw President Lai achieve a significant victory, securing a fourth term for the pro-independence Democrats and further alienating Beijing. As the late 2020s progressed, tensions in the Straits had intensified, with hard-line politicians and aggressive military generals on both sides adopting rigid stances and disregarding diplomatic overtures, fuelled by inflammatory rhetoric, misinformation, and disinformation campaigns.Footnote 105 These included the latest ‘story weapons’, autonomous systems designed to create adversarial narratives that influenced decision-making and stirred anti-Chinese and anti-Taiwanese sentiments.Footnote 106

Simultaneously, both China and the United States utilised AI technology to enhance battlefield awareness, intelligence, surveillance, and reconnaissance, functioning as early warning systems and decision-making tools to predict and recommend tactical responses to enemy actions in real time.

By late 2030, advancements in the speed, fidelity, and predictive power of commercially available dual-use AI applications had rapidly progressed.Footnote 107 Major military powers were compelled to provide data for machine learning to refine tactical and operational manoeuvres and inform strategic choices. Noticing the successful early implementation of AI tools for autonomous drone swarms by Russia, Turkey, and Israel to counter terrorist incursions effectively, China rushed to integrate the latest versions of dual-use AI, often at the expense of thorough testing and evaluation in the race for first-mover advantage.Footnote 108

With Chinese military activities – including aircraft flyovers, island blockade drills, and drone surveillance operations – representing a notable escalation in tensions, leaders from both China and the United States demanded the urgent deployment of advanced strategic AI to secure a significant asymmetric advantage in terms of scale, speed, and lethality. As incendiary language spread across social media, fuelled by disinformation campaigns and cyber intrusions targeting command-and-control networks, calls for China to enforce the unification of Taiwan grew louder.

Amid the escalating situation in the Pacific, and with testing and evaluation processes still incomplete, the United States decided to expedite the deployment of its prototype autonomous AI-powered ‘Strategic Prediction & Recommendation System’ (SPRS), which was designed to support decision-making in non-lethal areas such as logistics, cyber operations, space assurance, and energy management. Wary of losing its asymmetric edge, China introduced a similar decision-support system, the ‘Strategic & Intelligence Advisory System’ (SIAS), to ensure its readiness for any potential crisis.Footnote 109

On 14 June 2030, at 06:30, a Taiwanese Coast Guard patrol boat collided with and sank a Chinese autonomous sea-surface vehicle that was conducting an intelligence reconnaissance mission within Taiwan’s territorial waters. The day before, President Lai had hosted a senior delegation of US congressional staff and White House officials in Taipei during a high-profile diplomatic visit. By 06:50, the ensuing sequence of events – exacerbated by AI-enabled bots, deepfakes, and false-flag operations – exceeded Beijing’s predetermined threshold for action and overwhelmed its capacity to respond.

By 07:15, these information operations coincided with a surge in cyber intrusions targeting US Indo-Pacific Command and Taiwanese military systems, alongside defensive manoeuvres involving Chinese counter-space assets. Automated logistics systems of the People’s Liberation Army (PLA) were triggered, and suspicious movements of the PLA’s nuclear road-mobile transporter erector launchers were detected. At 07:20, the US SPRS interpreted this behaviour as a significant national security threat, recommending an increased deterrence posture and a strong show of force. Consequently, the White House authorised an autonomous strategic bomber flyover in the Taiwan Straits at 07:25.

In reaction, at 07:35, China’s SIAS alerted Beijing to a rise in communication activity between US Indo-Pacific Command and critical command-and-control nodes at the Pentagon. By 07:40, SIAS escalated the threat level concerning a potential US pre-emptive strike in the Pacific aimed at defending Taiwan and attacking Chinese positions in the South China Sea. At 07:45, SIAS advised Chinese leaders to initiate a limited pre-emptive strike using conventional counterforce weapons (including cyber, anti-satellite, hypersonic weapons, and precision munitions) against critical US assets in the Pacific, such as Guam.

By 07:50, anxious about an impending disarming US strike and increasingly reliant on SIAS assessments, Chinese military leaders authorised the attack, which SIAS had already anticipated and planned for. At 07:55, SPRS notified Washington of the imminent assault and recommended a limited nuclear response to compel Beijing to halt its offensive. Following a limited United States–China atomic exchange in the Pacific, which resulted in millions dead and tens of millions injured, both sides eventually agreed to cease hostilities.Footnote 110

In the immediate aftermath of this devastating confrontation, which unfolded within mere hours, leaders from both sides were left perplexed about the origins of the ‘flash war’. Efforts were undertaken to reconstruct a detailed analysis of the decisions made by SPRS and SIAS. However, the designers of the algorithms underlying these systems noted that it was impossible to fully explain the rationale and reasoning of the AI behind each individual decision. Various constraints, including time, quantum encryption, and privacy regulations imposed by military and commercial users, had made it impossible to maintain retrospective back-testing logs and protocols.Footnote 111 Did AI technology trigger the 2030 ‘flash war’?

Scenario 2: Human–machine warfighting in the Taiwan Straits

Assumptions

• Great power competition intensifies in the Indo-Pacific, and geopolitical tensions increase in the Taiwan Straits.Footnote 112

• An intense security dilemma exists between the United States and China.

• China reaches a military capability sufficient to invade and seize Taiwan.Footnote 113

• The United States and China have mastered AI-enabled ‘loyal wingman’ technology, and operational concepts exist to support them.Footnote 114

Hypothesis

AI-enabled human–machine teaming operations used in high-intensity conflict between two nuclear-armed states, all else being equal, increase the likelihood of unintentional nuclear use.

How could AI-enhanced human–machine teaming influence a crisis between two nuclear-armed adversaries? In 2030, the ailing President Xi Jinping, eager to realise his ‘China Dream’ and secure his legacy in the annals of the Chinese Communist Party, invades Taiwan.

‘Operation Island Freedom’

Chinese Air Force stealth fighters, dubbed ‘Mighty Dragon’, accompanied by a swarm of semi-autonomous AI-powered drones known as ‘Little Dragons’, launch cyberattacks and missile strikes to dismantle Taiwanese air defences and their command-and-control systems.Footnote 115 A semi-autonomous loitering system of ‘barrage swarms’ absorbs and neutralises most of Taiwan’s missile defences, leaving Taipei almost defenceless against a military blockade imposed by Beijing.Footnote 116

During this blitzkrieg assault, the ‘Little Dragons’ receive a distress signal from a group of autonomous underwater vehicles engaged in reconnaissance off Taiwan’s coast, alerting them to an imminent threat from a US carrier group.Footnote 117 With the remaining drones running low on power and unable to communicate with China’s command-and-control due to distance, the decision to engage is left to the ‘Little Dragons’ and made without any human oversight from China’s naval ground controllers.Footnote 118

Meanwhile, the USS Ronald Reagan, patrolling the South China Sea, detects aggressive manoeuvres from a swarm of Chinese torpedo drones. As a precautionary measure, the carrier deploys torpedo decoys to divert the Chinese drones and then attempts to neutralise the swarm with a ‘hard-kill interceptor’.Footnote 119 Despite these efforts, the carrier group cannot eliminate the entire swarm, and the remaining drones race towards the mother ship. The swarm then launches a fierce series of kamikaze attacks, overwhelming the carrier’s defences and leaving it incapacitated.

In reaction to this bolt-from-the-blue assault, the Pentagon authorises a B-21 Raider strategic bomber to undertake a deterrent mission, launching a limited conventional counterstrike on China’s Yulin Naval Base on Hainan Island, which houses China’s submarine nuclear deterrent.Footnote 120 The bomber is accompanied by a swarm of ‘Little Buddy’ uncrewed combat aerial vehicles, equipped with the latest ‘Skyborg’ AI-powered virtual co-pilot,Footnote 121 affectionately nicknamed ‘R2-D2’.Footnote 122

Using a prioritised list of pre-approved targets, ‘R2-D2’ employs advanced AI-driven ‘Bugsplat’ software to optimise the attack strategy, including weapon selection, timing, and deconfliction measures to avoid friendly fire.Footnote 123 Once the targets are identified and the weapons selected, ‘R2-D2’ directs a pair of ‘Little Buddies’ to confuse Chinese air defences with electronic decoys and AI-driven infrared jammers and dazzlers.

With each passing moment, escalation intensifies. Beijing interprets the US B-21’s actions as an attempt to undermine its sea-based nuclear deterrent in response to ‘Operation Island Freedom’. Believing it cannot afford to let US forces thwart its initial invasion success, China initiates a conventional pre-emptive strike against US forces and bases in Japan and Guam. To signal deterrence, China simultaneously detonates a high-altitude nuclear device off the coast of Hawaii, generating an electromagnetic pulse. Time is now critical.

This detonation aims to disrupt and disable unprotected electronics on nearby vessels or aircraft while avoiding damage to Hawaii itself. It marks the first use of nuclear weapons in conflict since 1945. Due to a lack of understanding of each other’s deterrence signals, red lines, decision-making processes, and escalation protocols, neither side can convey that its actions are intended to be calibrated, proportional, and aimed at encouraging de-escalation.Footnote 124

Conclusion: A new Promethean paradox?

The article revisits Glenn Snyder’s stability–instability paradox to explore the risks associated with the AI–nuclear nexus in a future conflict. It applies the dominant red-line interpretation of the paradox to consider how AI-enabled weapon systems used by nuclear dyads engaged in low-level conflict might affect the risk of nuclear use. It finds support for the non-dominant brinkmanship model of the paradox, which posits that low-level military adventurism between nuclear-armed states increases the risk of inadvertent and accidental escalation to nuclear war. The article combines empirically robust and innovative fictional scenarios to test and challenge the assumptions underlying the red-line model in a future Taiwan Straits conflict. The scenarios expand the existing evidentiary base that considers the paradox and build on the undertheorised brinkmanship model of the paradox.

Whereas conventional counterfactuals consider imaginary changes to past events (i.e. the past is connected by a chain of causal logic to the present), fictional scenarios confront a finite range of plausible alternative outcomes, wild-card events, and the uncertainties that might arise from their possible combinations.Footnote 125 The finitude of possible futures also means that, unlike traditional empirical and theoretical studies, fictional scenarios are not constrained by what we know happened. Thus, well-constructed scenarios can sidestep problems such as over-determinism, path dependency, and hindsight bias. This analytical latitude also means scenarios can be designed as introspective learning tools to highlight policymakers’ biases and challenge their assumptions and received wisdom – or Henry Kissinger’s ‘official future’ notion.Footnote 126 The fictional prototypes illustrated in this article go beyond mere technological extrapolation, mirror-imaging, group-think, and collective bias and can, therefore, play a valuable role in shaping policymakers’ perceptions and thus influence how they reason under uncertainty, grapple with policy trade-offs, and prepare for change.

Several additional policy implications exist for using fictional scenarios to inform and influence decision-making. First, the imagination and novelty of future warfare fiction (especially when used to reflect on possible low-probability unintended consequences of AI systems deployed in conventional high-intensity conflict) can help in designing realistic national security wargames for defence, intelligence, and think-tank communities. The centuries-old art of wargaming has recently emerged as an interactive teaching tool for critical thinking in higher education.Footnote 127 Moreover, using AI software alongside human-centric intelligent fiction might mitigate some limitations and ethical concerns surrounding the use of AI in strategic-level wargames.Footnote 128 Policymakers and military professionals can use well-crafted science fiction to expand the range and imaginative scope of wargaming scenarios (i.e. underlying assumptions, causal pathways, and outcomes) and of counterfactual thinking about future warfare.

Second, fictional scenarios can be used alongside science and technology studies scholarship to research further the extent to which policymakers’ perceptions of AI-enabled weapon systems are being shaped by popular cultural influences such as intelligent fiction.Footnote 129 Fictional scenarios that meet the criteria outlined in this article might also be used by policymakers to shape the public ‘AI narrative’ positively and to counter disinformation campaigns that manipulate the public discourse to confuse the facts (or ‘post-truth’), sowing societal discord and distrust in decision-makers.Footnote 130

Third, fiction is increasingly accepted as an innovative and prominent feature of professional military education (PME).Footnote 131 The use by authors of AI large language model (LLM) tools such as ChatGPT to augment and complement human creativity (i.e. to resonate with human experience, intuition, and emotion) may also expose officers’ biases, encourage authors to think in novel and unconventional (and even non-human) ways, and identify empirical gaps or inconsistencies in their writing.Footnote 132 Inspiration from reading these scenarios can increase an officer’s ability to appreciate complexity and uncertainty and thus better handle technological change and strategic surprise. Exposure to future warfare fiction can also remind officers that despite the latest technological ‘silver bullet’, warfare will remain (at least for the foreseeable future) an immutably human enterprise.Footnote 133 Developing fictional prototypes of future war can influence how officers think about emerging technologies and how best to deploy them in new ways. Research on the effects of supplementing supervised machine-learning training with science-fiction prototypes (based on future trends in technology, politics, economics, culture, etc.) would be beneficial.

Finally, fictional future war scenarios may be used to train AI machine-learning algorithms, enhancing the fidelity and strategic depth of their data, which are currently limited to the patterns, relationships, and trends that can be identified in historical datasets. Training AI on a combination of historical data and realistic future fictional scenarios – and then re-training it on new parameters and data as conditions change – could improve the quality and real-world relevance of the correlations and predictions that machine learning establishes. Enhanced datasets could, for instance, qualitatively improve the modelling and simulation that supports AI-enabled wargaming, making strategic-level wargames (as opposed to current tactical-level wargaming) more feasible and realistic.

The fire that Prometheus stole from the gods brought light and warmth, but it also cursed humankind with destruction. Just as nuclear weapons condemned the world to the perennial fear of Armageddon (as a paradoxical means to prevent its use), so AI, offering those who wield it the allure of outsized technological benefits, is potentially a new harbinger of a modern-day Prometheus. The quest for scientific knowledge and tactical mastery through AI technology risks Promethean overreach and unintended consequences playing out under the nuclear shadow. In the age of intelligent machines, skirmishes occurring under the nuclear shadow, as predicted by Snyder, will become increasingly difficult to predict and control, and thus to pull back from the brink.

Video Abstract

To view the online video abstract, please visit: https://doi.org/10.1017/S0260210524000767.

Acknowledgments

The author would like to thank the three anonymous reviewers, the editors, and the participants of the ‘Understanding Complex Social Behavior through AI Analytical Wargaming’ workshop hosted by The Alan Turing Institute, the US Department of Defense Department of Research & Engineering, and the University of Maryland for their helpful comments and feedback.