1. Introduction

With the advent of new facilities, radio surveys are becoming larger and deeper, providing fields rich in sources, in the tens of millions (Norris 2017), and delivering data at increasing rates, in the hundreds of gigabytes per second (Whiting & Humphreys 2012). The Evolutionary Map of the Universe (EMU, Norris et al. 2011, 2021) is expected to detect up to 40 million sources, expanding our knowledge in areas such as galaxy evolution and star formation. This outstrips surveys like the Karl G. Jansky Very Large Array (JVLA, or VLA) Sky Survey (VLASS, Lacy et al. 2020; Gordon et al. 2021) and the Rapid Australian Square Kilometre Array (SKA) Pathfinder (ASKAP) Continuum Survey (RACS, McConnell et al. 2020; Hale et al. 2021) by a factor of up to 30. Furthermore, the Variables and Slow Transients (VAST, Banyer et al. 2012; Murphy et al. 2013, 2021) survey, operating at a cadence of 5 s, surpasses VLASS transient studies by several orders of magnitude, opening up areas of variable and transient research: e.g., flare stars, intermittent pulsars, X-ray binaries, magnetars, extreme scattering events, interstellar scintillation, radio supernovae, and orphan afterglows of gamma-ray bursts (Murphy et al. 2013, 2021).
This places complex requirements on source finder (SF) software in order to reliably handle compact (footnote a), extended, complex, and faint or diffuse sources, along with demands for high data throughput for radio transients (e.g., Hancock et al. 2012; Hopkins et al. 2015; Riggi et al. 2016; Hale et al. 2019; Boyce 2020; Bonaldi et al. 2021). No current SF fits all of these requirements.

Hydra is an attempt to get the best of all worlds: it is an extensible multi-SF comparison and cataloguing tool, which allows users to choose the appropriate SF for a given survey, or take advantage of its collectively rich statistics by combining results. The Hydra software suite (footnote b) currently includes Aegean (Hancock et al. 2012; Hancock, Cathryn, & Hurley-Walker 2018), Caesar (Compact And Extended Source Automated Recognition, Riggi et al. 2016, 2019), ProFound (Robotham et al. 2018; Hale et al. 2019), PyBDSF (Python Blob Detector and Source Finder, Mohan & Rafferty 2015), and Selavy (Whiting & Humphreys 2012).

This paper is part one of a two-part series (referred to hereafter as Papers I and II). Here we provide a brief overview of SFs, relevant to our implementation of Hydra. This is then followed by a description of the Hydra software suite. The software produces new metrics for handling real source components (or sources, herein), such as completeness ($\mathcal{C}$) and reliability ($\mathcal{R}$), based on sources detected in a shallow ($\mathcal{S}$) image (e.g., a real image with artificial noise added) wherein real (sometimes referred to as ‘deep’ or $\mathcal{D}$) image detections are considered as true sources. We use simulated data, where the true sources are known, to validate these metrics. In Paper II we use the simulated images along with real data to evaluate the performance of the six different SFs included with Hydra. A preliminary discussion on SF performance is presented in this paper.

2. Source finders

The growing sizes and data rates of modern radio surveys have increased the need for automated source finding tools with fast processing speeds, and high completeness and reliability. One impetus for this came through the ASKAP EMU source finding data challenge (Hopkins et al. 2015), which explored a community-submitted set of eleven SFs: Aegean (Hancock et al. 2012), Astronomical Point source EXtractor (APEX, Makovoz & Marleau 2005), blobcat (Hales et al. 2012), Curvature Threshold Extractor (CuTEx, Molinari et al. 2011), Duchamp (Whiting 2012), IFCA (Instituto de Física de Cantabria) Biparametric Adaptive Filter (BAF, López-Caniego & Vielva 2012)/Matched Filter (MF, López-Caniego et al. 2006), PyBDSF (Mohan & Rafferty 2015), Python Source Extractor (PySE, Spreeuw 2010; Swinbank et al. 2015), Search and Destroy (SAD, Condon et al. 1998, with an honourable mention of its variant HAPPY, White et al. 1997), Selavy (with Duchamp at its core, Whiting & Humphreys 2012), Source Extractor (SExtractor, Bertin & Arnouts 1996), and SOURCE_FIND (Arcminute Microkelvin Imager (AMI) pipeline, AMI Consortium: Franzen et al. 2011). More recent SFs include Caesar (Riggi et al. 2016, 2019) and ProFound (Robotham et al. 2018; Hale et al. 2019; Boyce 2020). APEX, CuTEx, ProFound, and SExtractor have their origins in optical astronomy. Our focus will be on 2D SFs, such as those above, although there are also 3D packages like SoFiA (Source Finding Application, Serra et al. 2015; Koribalski et al. 2020; Westmeier et al. 2021) optimised for detecting line emission in data cubes, which can also function as 2D SFs.

By and large there is no ‘one SF fits all’ solution. Each is typically optimised for specific tasks (Hopkins et al. 2015; Hale et al. 2019; Bonaldi et al. 2021). In the broadest sense, there are SFs designed to handle sources that are compact, or extended and diffuse (see Table 1). They also have their specialisations: for example, blobcat for linear polarisation data (Hales et al. 2012), Duchamp for HI observations (Whiting 2012), CuTEx for images with intense background fluctuations (Molinari et al. 2011), and PySE for transients (Fender et al. 2007; van Haarlem et al. 2013). There are also ‘Next Generation’ (NxGen) SFs (see Table 1), which utilise multiple processors for handling high data throughput (Hancock et al. 2012; Riggi et al. 2016; Whiting & Humphreys 2012). Qualitatively different types of source finding and characterisation tools are being developed that use machine learning approaches (e.g., Bonaldi et al. 2021; Lao et al. 2021; D. Magro et al., in preparation), as well as citizen science approaches to classifying radio sources (e.g., Banfield et al. 2015; Alger et al. 2018), although it is beyond the scope of Hydra to attempt to incorporate all such efforts.

Table 1. SF general design characteristics (see Hopkins et al. 2015; Hale et al. 2019; Bonaldi et al. 2021). NxGen indicates multiprocessing capabilities.

$^{\rm a}$ Optical SF.

In general, SFs typically analyse an image in 3 stages: (1) background and noise estimation, (2) island detection, and (3) component modelling.

For the background estimation, most SFs used in radio astronomy, such as Aegean, PyBDSF, and Selavy, tend to use a sliding box method, where background noise estimates are calculated at a specific location using neighbouring pixels within a given box size, and estimated again for adjacent locations based on the sliding-step size. It is important that the box size be set so as not to be too small around bright sources, which would overestimate the background noise, or too large, so as to wash out any varying background structure that is important for reliable detection of faint sources (e.g., Huynh et al. 2012). This is discussed further below in Section 3.1.3.
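The sliding box method can be sketched as follows. This is a minimal illustration in pure Python, not the implementation used by any of the SFs above: the function names, the test image, and the choice of a median/MADFM estimator inside each box are assumptions made for this example.

```python
import random
import statistics

def box_stats(image, row, col, half_box):
    """Median background and MADFM-based noise from a box centred on (row, col)."""
    n_rows, n_cols = len(image), len(image[0])
    values = [
        image[r][c]
        for r in range(max(0, row - half_box), min(n_rows, row + half_box + 1))
        for c in range(max(0, col - half_box), min(n_cols, col + half_box + 1))
    ]
    background = statistics.median(values)
    madfm = statistics.median([abs(v - background) for v in values])
    # 0.6745 converts the MADFM to a Gaussian-equivalent sigma.
    return background, madfm / 0.6745

def sliding_box_maps(image, half_box, step):
    """Evaluate (background, noise) on a coarse grid of box centres."""
    grid = {}
    for row in range(0, len(image), step):
        for col in range(0, len(image[0]), step):
            grid[(row, col)] = box_stats(image, row, col, half_box)
    return grid

random.seed(1)
# Hypothetical 40x40 image: unit-RMS Gaussian noise plus one bright compact source.
image = [[random.gauss(0.0, 1.0) for _ in range(40)] for _ in range(40)]
for r in range(18, 23):
    for c in range(18, 23):
        image[r][c] += 50.0

grid = sliding_box_maps(image, half_box=10, step=10)
```

In practice the coarse grid would then be interpolated to every pixel. Shrinking `half_box` until the bright source fills most of a box illustrates the overestimation problem described above.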

Background noise estimation can be performed through various metrics such as the inter-quartile range (IQR) used by Aegean (with median background and IQR noise spread, Hancock et al. 2012), mean background ($\mu$) and RMS noise ($\sigma$) used by PyBDSF (Mohan & Rafferty 2015), or median background and Median Absolute Deviation From the Median (MAD, or MADFM herein: e.g., Riggi et al. 2016; Hopkins et al. 2015) noise used by Selavy (which also has a $\mu$/$\sigma$ option, Whiting & Humphreys 2012). SExtractor, on the other hand, uses $\kappa.\sigma$-clipping and mode estimation (see Section 3.1.1; Da Costa 1992; Bertin & Arnouts 1996; Huynh et al. 2012; Akhlaghi & Ichikawa 2015; Riggi et al. 2016) over the entire image, while PySE performs $\sigma$-clipping locally (see Hopkins et al. 2015). ProFound, an optical SF shown to be useful for radio images (Hale et al. 2019), also uses a $\sigma$-clipping schema (via the MakeSkyGrid routine, Robotham et al. 2018). Caesar provides several options: $\mu/\sigma$, median/MADFM, biweight and $\sigma$-clipped estimators (Riggi et al. 2016). The final stage typically involves bicubic interpolation to obtain the background noise estimates as a function of pixel location. It is important that these estimates are optimal as they have a significant effect on SF performance (Huynh et al. 2012).
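To illustrate why the choice of estimator matters, the sketch below compares three of the estimator pairs mentioned above on a synthetic pixel sample contaminated by bright source pixels. The function names, the sample, and the Gaussian scaling constants (0.6745 for the MADFM, 1.349 for the IQR) are assumptions of this example and are not taken from any particular SF.

```python
import random
import statistics

def mean_rms(values):
    """Mean background and RMS noise (sensitive to bright pixels)."""
    return statistics.fmean(values), statistics.pstdev(values)

def median_madfm(values):
    """Median background and MADFM noise, scaled to a Gaussian sigma."""
    med = statistics.median(values)
    madfm = statistics.median([abs(v - med) for v in values])
    return med, madfm / 0.6745

def median_iqr(values):
    """Median background and IQR noise, scaled to a Gaussian sigma."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    return statistics.median(values), (q3 - q1) / 1.349

random.seed(2)
# Unit-RMS Gaussian noise with about 2% of pixels belonging to bright sources.
pixels = [random.gauss(0.0, 1.0) for _ in range(2000)] + [100.0] * 40

for name, estimator in [("mean/RMS", mean_rms),
                        ("median/MADFM", median_madfm),
                        ("median/IQR", median_iqr)]:
    background, noise = estimator(pixels)
    print(f"{name:>13}: background={background:6.2f}  noise={noise:6.2f}")
```

The mean/RMS pair is pulled strongly upwards by the source pixels, whereas the median-based pairs stay close to the true noise level, which is one motivation for robust or clipped statistics.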

There are various methods for island detection within an image. Perhaps the simplest is thresholding, in which the pixel with the highest flux is chosen along with neighbouring pixels down to some threshold above the background noise, defining an island. Variants of this method are used by Duchamp (Whiting & Humphreys 2012), ProFound (Robotham et al. 2018), Selavy (Whiting 2012), and SExtractor (Bertin & Arnouts 1996). Once the initial set of islands is chosen, they are sometimes then grown down to a lower threshold according to certain rules. For instance, ProFound uses a Kron/Petrosian-like dilation kernel, that is, it uses an island-shaped aperture (Kron 1980; Petrosian 1976) to grow the islands according to a surface brightness profile, in an iterative process, until the desired profile or lower threshold limit is reached (Robotham et al. 2018). It then separates out the islands into segments, through a watershed deblending technique (footnote c). Another method is flood-fill, wherein islands are seeded above some threshold and then grown down to a lower threshold, according to a set of rules. Aegean (Hancock et al. 2012), blobcat (Hales et al. 2012), Caesar (Riggi et al. 2016), PyBDSF (Mohan & Rafferty 2015), and PySE (Swinbank et al. 2015) use variations on this theme.
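The flood-fill idea can be sketched in a few lines. The following is a generic illustration under stated assumptions (a constant noise level, four-connected neighbours, and the hypothetical names `find_islands`, `seed_sigma`, and `flood_sigma`); the SFs listed above each apply their own rules and refinements.

```python
from collections import deque

def find_islands(image, noise, seed_sigma, flood_sigma):
    """Seed islands above seed_sigma*noise, then grow them down to flood_sigma*noise."""
    n_rows, n_cols = len(image), len(image[0])
    labels = [[0] * n_cols for _ in range(n_rows)]
    n_islands = 0
    for r0 in range(n_rows):
        for c0 in range(n_cols):
            # Only unlabelled pixels above the seed threshold start a new island.
            if labels[r0][c0] or image[r0][c0] < seed_sigma * noise:
                continue
            n_islands += 1
            labels[r0][c0] = n_islands
            queue = deque([(r0, c0)])
            while queue:
                r, c = queue.popleft()
                for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    rr, cc = r + dr, c + dc
                    if (0 <= rr < n_rows and 0 <= cc < n_cols
                            and not labels[rr][cc]
                            and image[rr][cc] >= flood_sigma * noise):
                        labels[rr][cc] = n_islands
                        queue.append((rr, cc))
    return labels, n_islands

# Toy image in units of the noise: one source with a 6-sigma peak and one
# faint blob that exceeds the flood threshold but never the seed threshold.
image = [
    [0, 0, 0, 0, 0, 0.0, 0],
    [0, 4, 6, 4, 0, 0.0, 0],
    [0, 0, 4, 0, 0, 3.5, 0],
    [0, 0, 0, 0, 0, 4.0, 0],
    [0, 0, 0, 0, 0, 0.0, 0],
]
labels, n_islands = find_islands(image, noise=1.0, seed_sigma=5.0, flood_sigma=3.0)
```

Here only the first source forms an island; the faint blob is ignored because it has no seed pixel, which is how the two thresholds jointly control the false detection rate.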

The component extraction phase is perhaps the most varied in terms of modelling. The simplest is the top-down raster scan within an island to find flux peaks given some step size, or tolerance level. This method is utilised by Duchamp (Whiting & Humphreys 2012), and, in turn, is also employed by Selavy. These peaks are then fitted by elliptical Gaussians producing a component catalogue. The choice of elliptical Gaussians is motivated by the fact that point sources, or sources that are only very slightly extended, are well-modelled in this way as it corresponds to the shape of the telescope’s synthesised beam. More complex source structures, on the other hand, tend to be poorly fit by this choice, leading to variations in fitting approach. Some SFs, for example, use multiple Gaussians to fit to an island, using various criteria. PyBDSF (Mohan & Rafferty 2015) and PySE (Spreeuw 2010; Swinbank et al. 2015) fall into this category.

There is also a class of SFs that use curvature maps to determine radio source components. Aegean searches for local maxima within an island which in turn are fitted by Gaussians, constrained by negative curvature valleys (Hancock et al. 2012). Caesar is rather unique in that it first searches for peaks and then uses watershed deblending to create sub-islands, for seeding and constraining Gaussian fits, respectively (Riggi et al. 2016). Extended sources are then extracted from the resulting residual image, using wavelet transform, saliency, hierarchical-clustering, or active-contour filtering. Consequently, Caesar is capable of extracting extended sources with complex structure.

The aforementioned SF algorithms are just the tip of the iceberg of possibilities (cf. Hancock et al. 2012; Hopkins et al. 2015; Wu et al. 2018; Lukic, de Gasperin, & Brüggen 2019; Sadr et al. 2019; Bonaldi et al. 2021; D. Magro et al., in preparation). In our initial implementation of Hydra we have chosen to explore a representative set of commonly used SFs: Aegean, Caesar, ProFound, PyBDSF, and Selavy.

3. Hydra

Hydra is a software tool capable of running multiple SFs. It is extensible, in that other SFs can be added in a containerised fashion by following a set of straightforward template-like rules. It provides diagnostic information such as $\mathcal{C}$ and $\mathcal{R}$. Statistical analysis can be based on injected ($\mathcal{J}$) source catalogues from simulated images or on real $\mathcal{D}$-images used as ground truths for detections in their $\mathcal{S}$ counterparts. Hydra is innovative in that it minimises the False Detection Rate (FDR, Whiting 2012) of the SFs by adjusting their detection threshold and island growth (and optionally RMS box) parameters. This is an essential step in automation, especially when dealing with large surveys such as EMU (Norris 2017; Norris et al. 2021).

3.1. The Hydra software suite

Upon Heracles shield wrought Homados (Tumult), the din of battle noise, and riding alongside Cerberus, the unruly master of mayhem. Only the wrath of Cerberus’s father Typhon, a controlling force, can temper their chaos. And hitherto, Typhon’s son Hydra, was tasked with bringing the chaos to bear fruit, while his mother Echidna, a hidden force, plucked the fruit from the vines to make wine. (Inspired by Powell 2017 and Buxton 2016.)

Fig. 1 shows an overview of the Hydra software suite, which consists of the following software components: Homados, Cerberus, Typhon, and Hydra. Homados is used for providing image statistics such as $\mu/\sigma$, and image manipulation such as inversion and adding noise. Cerberus is an extensible multi-SF software suite. It currently includes Aegean (Hancock et al. 2012, 2018), Caesar (Riggi et al. 2016, 2019), ProFound (Robotham et al. 2018; Hale et al. 2019), PyBDSF (Mohan & Rafferty 2015), and Selavy (Whiting & Humphreys 2012). Typhon is a tool for optimising the SF parameters and then producing output catalogues. It uses Homados and Cerberus to do this task. Hydra is the main tool which uses Typhon to produce data products, including catalogues, residual images, and region files (footnote d). Echidna is a planned catalogue stacking and integration tool, to be added to Hydra.

Figure 1. High level schematic representation of the Hydra software suite workflow. Homados provides $\mathcal{D}$ and $\mathcal{S}$-image channels for simulated/real images (‘dancing ghosts’ example image, see Norris et al. 2021). Each channel is run separately through the Typhon optimiser, which uses the SF interface provided by Cerberus. Hydra coordinates all of these activities, building catalogues and compiling statistics at the end of the process.

3.1.1. Homados

The main purpose of Homados is to add noise to images. We shall often refer to the original image as the $\mathcal{D}$-image, and the noise-added image as the $\mathcal{S}$-image. These ‘deep-shallow’ ($\mathcal{DS}$) image pairs can be used to create statistics such as $\mathcal{DS}$-completeness ($\mathcal{C_{DS}}$) and $\mathcal{DS}$-reliability ($\mathcal{R_{DS}}$), based on the assumption that the sources detected in the $\mathcal{D}$-image are real. These statistics are used for real images, where the source inputs are unknown.
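As a rough illustration of how such deep-shallow statistics can be formed, the sketch below treats the deep-image detections as truth and computes a completeness (fraction of deep sources recovered in the shallow image) and a reliability (fraction of shallow detections with a deep counterpart). The greedy positional match, the match radius, and all names are assumptions made for this example; they are not Hydra's matching procedure, and no binning by flux density is attempted.

```python
import math

def match(truth, detections, radius):
    """Greedy one-to-one positional match; returns (truth_idx, det_idx) pairs."""
    pairs, used = [], set()
    for i, (tx, ty) in enumerate(truth):
        best, best_d = None, radius
        for j, (dx, dy) in enumerate(detections):
            d = math.hypot(tx - dx, ty - dy)
            if j not in used and d <= best_d:
                best, best_d = j, d
        if best is not None:
            used.add(best)
            pairs.append((i, best))
    return pairs

def completeness_reliability(deep, shallow, radius):
    """Deep detections are assumed real; shallow detections are tested against them."""
    n_matched = len(match(deep, shallow, radius))
    completeness = n_matched / len(deep) if deep else 0.0
    reliability = n_matched / len(shallow) if shallow else 0.0
    return completeness, reliability

# Hypothetical pixel positions of detections in a deep image and its shallow counterpart.
deep = [(10.0, 10.0), (20.0, 5.0), (30.0, 30.0), (40.0, 12.0)]
shallow = [(10.2, 9.9), (29.8, 30.1), (50.0, 50.0)]
c_ds, r_ds = completeness_reliability(deep, shallow, radius=1.0)
```

With these toy catalogues two of the four deep sources are recovered and two of the three shallow detections are real, so `c_ds` is 0.5 and `r_ds` is 2/3.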

An $\mathcal{S}$-image is created by adding to the $\mathcal{D}$-image a Gaussian noise map that has been convolved with the corresponding synthesised beam (i.e., BMIN, BMAJ, and BPA). The noise map is created with mean noise, $\mu_{image}$, and RMS noise, $n\sigma_{image}$ ($\equiv\sigma_{noise\;\;map}$), where $n$ is the desired noise level (i.e., factor), and $\mu_{image}$ and $\sigma_{image}$ are obtained from the $\mathcal{D}$-image using $\sigma$-clipping (Akhlaghi & Ichikawa 2015). This is then convolved with the synthesised beam, from which its RMS noise, $\sigma_{convolved}$, is computed. For convergence, this process is repeated using the convolved image as input, but with $n$ replaced by $\sigma_{noise\;\;map}\,n/\sigma_{convolved}$. The final convolved image is then added to the $\mathcal{D}$-image, obtaining the $\mathcal{S}$-image.
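The rescaling step is needed because convolving white noise with the beam lowers its RMS. A simplified sketch of the idea is given below. It assumes a circular Gaussian kernel in place of the elliptical synthesised beam, periodic image boundaries, and a single rescaling of one noise realisation rather than Homados's own iteration; all function names and numerical values are hypothetical.

```python
import math
import random
import statistics

def gaussian_kernel(sigma_pix, half_width):
    """Normalised circular Gaussian standing in for the synthesised beam."""
    k = [[math.exp(-(x * x + y * y) / (2.0 * sigma_pix ** 2))
          for x in range(-half_width, half_width + 1)]
         for y in range(-half_width, half_width + 1)]
    total = sum(map(sum, k))
    return [[v / total for v in row] for row in k]

def convolve(image, kernel):
    """Direct 2D smoothing with wrap-around edges (kernel is symmetric)."""
    n_rows, n_cols = len(image), len(image[0])
    h = len(kernel) // 2
    return [[sum(kernel[i + h][j + h] * image[(r + i) % n_rows][(c + j) % n_cols]
                 for i in range(-h, h + 1) for j in range(-h, h + 1))
             for c in range(n_cols)] for r in range(n_rows)]

def rms(image):
    """RMS about the mean over all pixels."""
    return statistics.pstdev([v for row in image for v in row])

def make_shallow(deep, mu_image, sigma_image, n, kernel, rng):
    """Add beam-convolved noise of RMS n*sigma_image (and mean mu_image) to deep."""
    n_rows, n_cols = len(deep), len(deep[0])
    target = n * sigma_image  # sigma_noise_map in the text
    white = [[rng.gauss(0.0, 1.0) for _ in range(n_cols)] for _ in range(n_rows)]
    sigma_convolved = rms(convolve(white, kernel))  # smoothing lowers the RMS
    scale = target / sigma_convolved                # compensate for that loss
    noise = convolve([[scale * v for v in row] for row in white], kernel)
    shallow = [[d + mu_image + v for d, v in zip(d_row, n_row)]
               for d_row, n_row in zip(deep, noise)]
    return shallow, noise

rng = random.Random(3)
deep = [[0.0] * 24 for _ in range(24)]  # hypothetical empty deep image
kernel = gaussian_kernel(sigma_pix=1.5, half_width=4)
shallow, noise = make_shallow(deep, mu_image=0.0, sigma_image=0.02, n=5,
                              kernel=kernel, rng=rng)
```

Because convolution is linear, rescaling the input noise by `target / sigma_convolved` gives a convolved map whose RMS equals the requested $n\sigma_{image}$ for this realisation.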

Fig. 2 shows an example of an $\mathcal{S}$-image generated by Homados from an Australia Telescope Large Area Survey (ATLAS) Chandra Deep Field South (CDFS) Data Release 1 (DR1) tile (Norris et al. 2006). The noise level was scaled by a factor of $n=5$. This factor is assumed for the $\mathcal{S}$-image generation in the rest of this paper.

Figure 2. Homados $\mathcal{S}$-image generation example, using an image cutout sample from an ATLAS CDFS DR1 $2.2^{\circ}\times2.7^{\circ}$ tile (Norris et al. 2006). The figures show $\mathcal{D}$ (left) and $\mathcal{S}$ (right) images, zoomed in. The noise level scale factor, $n$, was set to 5 to generate the shallow image.

In addition, Homados uses $\sigma$-clipping to compute image statistics, such as $m$ (median), $\mu$ (mean), $\sigma$ (RMS), $I_{\min}$ (minimum pixel value), and $I_{\max}$ (maximum pixel value). It also does image inversion for FDR calculations.
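A generic version of these two operations is sketched below. The clipping rule (iteratively rejecting values more than `kappa` standard deviations from the median), the default parameters, and the decision to report the minimum and maximum from the unclipped data are assumptions of this example, not a description of the Homados code.

```python
import random
import statistics

def sigma_clip_stats(values, kappa=3.0, max_iter=10):
    """Iteratively discard values beyond kappa*sigma of the median, then summarise."""
    kept = list(values)
    for _ in range(max_iter):
        med = statistics.median(kept)
        sigma = statistics.pstdev(kept)
        clipped = [v for v in kept if abs(v - med) <= kappa * sigma]
        if len(clipped) == len(kept):
            break  # converged: nothing more to reject
        kept = clipped
    return {
        "median": statistics.median(kept),
        "mean": statistics.fmean(kept),
        "rms": statistics.pstdev(kept),
        "min": min(values),  # extremes taken from the unclipped data
        "max": max(values),
    }

def invert(image):
    """Negate every pixel, so that noise troughs become peaks."""
    return [[-v for v in row] for row in image]

random.seed(4)
# Hypothetical pixel sample: Gaussian noise (mean 0.5, RMS 2) plus bright source pixels.
pixels = [random.gauss(0.5, 2.0) for _ in range(3000)] + [200.0] * 60
stats = sigma_clip_stats(pixels)
```

Running a SF on `invert(image)` yields detections that can only be noise peaks, which is the basis of the FDR estimate used later by Typhon.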

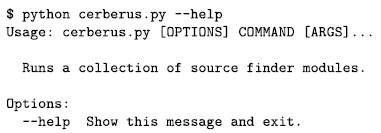

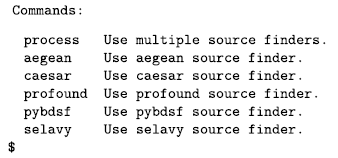

3.1.2. Cerberus

Cerberus is an extensible interface for running SF modules within the Hydra software suite. It currently supports Aegean, Caesar, ProFound, PyBDSF, and Selavy, as indicated by its command-line interface (footnote e).

Table 2. Cerberus RMS and Island parameter definitions in units of $\sigma$ with respect to the background, with soft constraint $\sigma_{Island}<\sigma_{RMS}$.

$^{\rm a}$ https://github.com/PaulHancock/Aegean/wiki/Simple-usage.

$^{\rm b}$ https://caesar-doc.readthedocs.io/en/latest/usage/app_options.html#input-options.

$^{\rm c}$ https://cran.r-project.org/web/packages/ProFound/ProFound.pdf.

$^{\rm d}$ https://pybdsf.readthedocs.io/en/latest/process_image.html.

$^{\rm e}$ https://www.atnf.csiro.au/computing/software/askapsoft/sdp/docs/current/analysis/selavy.html.

New modules are added through code generation, using Jinja template-code (footnote f) in conjunction with Docker (footnote g) and YAML (footnote h) configuration files. The workflow is as follows:

• Create a containerised SF wrapper:

– Create a SF wrapper script

– Create a Docker build file wrapper

– Update the master docker-compose build file

– Build the container image

• Update the cerberus.py script:

– Create a YAML metadata file

– Update the master YAML metadata file

– Run the Jinja script generator tool

• Test the Hydra software suite

• Update the Git (footnote i) repository

Figure 3. Cerberus code generation workflow.

Fig. 3 summarises this high-level workflow: containers for each SF are shown under Container Images and the Docker and YAML configuration files are shown under Configuration Management. The developer must follow a fixed set of rules when adding a new SF, in order for the Jinja template-driven script generator to update Cerberus. (For the purpose of reproducibility, Appendix A provides architectural design notes, using Aegean as an example.) All of this is transparent to the user, who has access to a simple interface, so one does not have to be an expert at using SFs in order to use Hydra.
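The template-driven approach can be illustrated with a small, self-contained sketch. It uses Python's standard-library `string.Template` as a stand-in for Jinja and a dictionary as a stand-in for the YAML metadata; the metadata field names, the container image tag, and the generated command line are hypothetical and do not reproduce the actual Cerberus templates. Only the Aegean parameter names seedclip and floodclip come from the text.

```python
from string import Template

# Hypothetical metadata for one SF module (held in YAML files in Hydra itself).
AEGEAN_META = {
    "name": "aegean",
    "image": "hydra/aegean",      # hypothetical container image tag
    "rms_param": "seedclip",      # 'RMS-like' parameter
    "island_param": "floodclip",  # 'Island-like' parameter
}

# Hypothetical wrapper template: one function per SF, generated from metadata.
WRAPPER_TEMPLATE = Template('''\
def run_${name}(fits_file, rms, island):
    """Build the container command line for ${name}."""
    return ["docker", "run", "--rm", "${image}", fits_file,
            "--${rms_param}", str(rms), "--${island_param}", str(island)]
''')

def generate_wrapper(meta):
    """Render the wrapper source code for one SF from its metadata."""
    return WRAPPER_TEMPLATE.substitute(meta)

source = generate_wrapper(AEGEAN_META)
namespace = {}
exec(source, namespace)  # load the generated function for demonstration only
command = namespace["run_aegean"]("image.fits", 5.0, 4.0)
print(" ".join(command))
```

The design point is that adding a SF only requires new metadata and a container, while the calling interface seen by the user stays the same for every SF.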

Hydra’s modular design requires that the user has access to the key elements of a SF’s interface; in particular, access to its ‘RMS-like’ and ‘Island-like’ parameters. In the case of Aegean, for example, this would be seedclip and floodclip (Hancock et al. 2012), respectively. It is important to note that the parameters are not necessarily equivalent between SFs (footnote j); regardless, they do affect thresholding and island formation. Consequently, they have the strongest influence on FDR calculations. Table 2 summarises the parameters for the currently supported SFs. These parameters are used by Typhon to baseline the SFs, by minimising their FDRs.

Hydra also requires that SF modules provide optional RMS box and step size parameters, even if they are dummies. Some SF software manuals recommend these parameters be externally optimised, under certain conditions. PyBDSF is an example of such a case (footnote k). Regardless, this is also a good way of baselining (i.e., calibrating, Huynh et al. 2012; Riggi et al. 2016) SFs for comparison purposes.

3.1.3. Typhon

Typhon is a tool for optimising the SFs to a standard baseline that can be used for comparison purposes. We have adopted the Percent Real Detections (PRD) metric, as used by Hale et al. (Reference Hale, Robotham, Davies, Jarvis, Driver and Heywood2019) in a comparative study of Aegean, ProFound, and PyBDSF: that is,

where $N_{image}$ is the number of detections in the original image, and $N_{inv.\;image}$ is the number of detections in the inverted image.Footnote l Basically, if one assumes the image noise is predominately Gaussian, then the peaks detected in the inverted image should statistically match the noise-peaks detected in the non-inverted image. Thus the FDR can be reduced by optimising the PRD. This approach is not suitable for non-Gaussian (or non-symmetric) noise properties, such as the Ricean noise distribution in polarisation images.
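As a minimal sketch of this metric, the snippet below computes a PRD from two detection counts. It assumes the percentage form used by Hale et al., i.e., the excess of detections in the original image over those in the inverted image, relative to the original count; the function name and example counts are illustrative only and are not part of Typhon.

```python
def percent_real_detections(n_image: int, n_inv_image: int) -> float:
    """Return the PRD (in percent) from detection counts.

    n_image     -- number of detections in the original image
    n_inv_image -- number of detections in the inverted image,
                   taken as an estimate of the noise-peak detections
    """
    if n_image <= 0:
        raise ValueError("n_image must be positive")
    return 100.0 * (n_image - n_inv_image) / n_image


# Illustrative counts only: 1000 detections, 100 of them in the inverted image.
prd = percent_real_detections(n_image=1000, n_inv_image=100)
print(f"PRD = {prd:.1f}%")
```

Under the Gaussian-noise assumption, a higher PRD corresponds to a smaller fraction of noise peaks among the detections.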

Figure 4. Example Typhon PRD of a $2^\circ\times2^\circ$ simulated $\mathcal{D}$-image (left) and its corresponding $\mathcal{S}$-image (right). The variation in the PRD with the RMS parameters (in $\sigma$ units) is represented along the horizontal axis, and the variation in the PRD with the island parameters is represented by the error bars. The data points represent average values: that is, Aegean and ProFound indicate the true shape of the curves, due to their insensitivity to their island parameters. The SF parameters are listed in Table 2. The dotted horizontal lines indicate the 90% PRD levels.

Typhon uses the RMS and island parameters to optimise the PRD for each SF. Fig. 4 shows Typhon-generated PRD curves for a $2^\circ\times2^\circ$ simulated $\mathcal{D}$-image along with its corresponding $\mathcal{S}$-image. Typhon identifies the optimal parameters to be those that correspond to the 90% PRD threshold. This threshold is motivated by the desire to use the knee of the PRD curve, whose position appears to be scale-invariant above a certain image size. Although the shape of the curve is not always guaranteed to be smooth, this crude method appears to be quite effective at framing the region of interest around the desired 90% PRD. The 90% to 98% PRD range has been investigated and the former threshold seems to provide reasonable results. Hale et al. (Reference Hale, Robotham, Davies, Jarvis, Driver and Heywood2019) use a 98% PRD to baseline their SFs, beyond which the detection rate degrades. At that cut-off, however, we tend to find a non-scale-invariant increase in the RMS threshold with image size.

Typhon uses the image statistics output from Homados to determine the RMS parameter range over which to optimise the PRD:

where

with $\mu$, $\sigma$, and $I_{\max}$ determined through $\sigma$-clipping (re. Section 3.1.1). The $1.5\sigma$ lower limit is where the FDR starts to degrade. In general, Typhon samples the PRD from high values to low values in the RMS parameter, while varying the island parameter at each step, until the 90% threshold is reached.

Figure 5. Clustering Algorithm Infographic: The left panel shows the results of 3 hypothetical SFs (red, green, and gold). The middle panel shows the results after clustering, resulting in two clumps, assigned clump_id 1 (upper panel) and clump_id 2 (lower panel). A clump is defined through the spatial overlap between SF detections (i.e., components), in the $\mathcal{D}$ and $\mathcal{S}$-images together. The components are numbered independently and can be associated with the clump they end up in. For instance, components 1, 2, and 3 are linked together in the $\mathcal{D}$-image, and, in addition, 1 overlaps with 6, and 3 overlaps with 8, 9, and 10 in the $\mathcal{S}$-image. Together this set of components populates clump_id 1. Similarly, clump_id 2 is composed of components 4, 5, and 7. Clumps are centred in the Hydra Viewer (Fig. 7), with unassociated components greyed out. The right panel shows the results after the clumps are decomposed into closest (i.e., overlapping centre-to-centre) matches between SFs, in the $\mathcal{D}$ and $\mathcal{S}$-images, such that there is only one SF with a match in the $\mathcal{D}$ and $\mathcal{S}$-images. These matched sets are assigned match_ids, with boxes enclosing the extremities of the components. The Hydra Viewer displays these numbers at the centre of the boxes (which are coloured differently here, for emphasis). So clump_id 1 contains match_id 1 = {1, 2, 6} and match_id 2 = {3, 8, 9, 10}, while clump_id 2 contains match_id 3 = {4, 5, 7}.

The island parameters are SF-specific, and are typically defined over a finite range. Table 3 shows the parameter ranges used by Hydra, which are stored in its Configuration Management (Fig. 3). Typhon uses this information along with the constraint $\sigma_{island}<\sigma_{RMS}$ (otherwise, $\sigma_{island}=0.999\,\sigma_{RMS}$), as it searches the parameter space.

Table 3. Configured island parameters.

Typhon will also perform an initial RMS box optimisation before optimising the PRD, if it is configured to do so. This is of particular importance for extended objects or around bright sources (Mohan & Rafferty Reference Mohan and Rafferty2015), especially for Gaussian-based extraction SFs such as Aegean, PyBDSF, and Selavy. Typhon uses Aegean’s background/noise image generation tool, bane (Hancock et al. Reference Hancock, Cathryn and Hurley-Walker2018), to search the RMS box size (box_size) and step size (step_size) parameter space,

\begin{equation} \left.\begin{array}{c} \mbox{$\displaystyle3\le\frac{\mbox{box_size}}{[4(\texttt{BMAJ}+\texttt{BMIN})/2]}\le6$}\\[10pt] \mbox{$\displaystyle\frac{1}{4}\le\frac{\mbox{step_size}}{\mbox{box_size}}\le\frac{1}{2}$}\\ \end{array}\right\}\,,\end{equation}

for the lowest background level, $\mu$ (cf. Riggi et al. Reference Riggi2016). The $4(\texttt{BMAJ}+\texttt{BMIN})/2$ factor represents the bane default box size, where we assume a square box, for simplicity. The limits 3 and 6 are consistent with the rule of thumb that the box size should be 10–20 times larger than the beam size (Riggi et al. Reference Riggi2016). The $1/4$ and $1/2$ bounds are used for providing a smoothly sliding box (Mohan & Rafferty Reference Mohan and Rafferty2015).
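To make the bounds on the RMS box parameters concrete, the following sketch enumerates candidate (box_size, step_size) pairs that satisfy them. It is illustrative only: the beam axes are assumed to be already converted to pixels, the 6 by 3 grid shape follows the search grid quoted in the algorithm summary, and the even spacing of the grid points and the function name are assumptions rather than Typhon's actual implementation.

```python
import numpy as np


def rms_box_search_grid(bmaj_pix, bmin_pix, n_box=6, n_step=3):
    """Return (box_size, step_size) candidates within the stated bounds.

    bmaj_pix, bmin_pix -- beam major/minor axes, assumed to be in pixels
    n_box, n_step      -- number of grid points along each axis
    """
    # Default square box size, 4 * (BMAJ + BMIN) / 2.
    base = 4.0 * (bmaj_pix + bmin_pix) / 2.0
    # box_size / base is bounded by 3 and 6 (evenly spaced: an assumption).
    box_sizes = np.linspace(3.0, 6.0, n_box) * base
    # step_size / box_size is bounded by 1/4 and 1/2.
    step_fractions = np.linspace(0.25, 0.5, n_step)
    return [(float(b), float(b * f)) for b in box_sizes for f in step_fractions]


grid = rms_box_search_grid(bmaj_pix=4.0, bmin_pix=4.0)
print(len(grid), "candidate pairs; first:", grid[0], "last:", grid[-1])
```

Each candidate pair would then be passed to the background estimator, keeping the pair that yields the lowest background level $\mu$.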

The Typhon optimisation algorithm can be summarised as follows.

• If the RMS box $\mu$-optimisation is desired:

– Minimise $\mu$ over a $6\times3$ box_size by step_size search grid, constrained by Equation (3)

• Select a centralised $n\times n$ image sample-cutout:

– Use an $n^2$-area rectangle, if non-square image

– Use the full area, if image is too small

• Determine the RMS parameter bounds (Equation (2))

• For each SF:

– If applicable, set the RMS box parameters to the optimised values

– Extract the island parameter bounds from Configuration Management (re. Table 3)

– Optimise the PRD of the sample-cutout:

* Iterate the RMS parameter backwards from RMS$_{\max}$

* At each RMS step, iterate the island parameter, such that, $\sigma_{island}<\sigma_{RMS}$, otherwise set $\sigma_{island}=0.999\,\sigma_{RMS}$

* For each RMS-island parameter pair compute the PRD

* Terminate iterations just before the PRD passes below 90%

- If the PRD is always below 90% choose the highest value.

• Run the SFs on the full image using their optimised parameters

• Archive the results in a tarball
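The PRD search at the core of the steps above can be sketched as follows. This is a simplified illustration, not Typhon's code: `prd_of` is a hypothetical callable that runs a SF on the sample-cutout and returns its PRD, the best island value at each RMS step is taken to be the one with the highest PRD (an assumption), and "the highest value" in the fallback case is interpreted as the highest PRD encountered.

```python
from typing import Callable, Optional, Sequence, Tuple

Result = Tuple[float, float, float]  # (rms, island, prd)


def optimise_prd(
    rms_values: Sequence[float],
    island_values: Sequence[float],
    prd_of: Callable[[float, float], float],
    threshold: float = 90.0,
) -> Result:
    """Scan RMS from high to low; stop just before PRD drops below threshold."""
    if len(rms_values) == 0 or len(island_values) == 0:
        raise ValueError("rms_values and island_values must be non-empty")
    best_above: Optional[Result] = None  # last step with PRD >= threshold
    best_any: Optional[Result] = None    # highest PRD seen (fallback)
    for rms in sorted(rms_values, reverse=True):
        step_best: Optional[Result] = None
        for island in island_values:
            # Enforce sigma_island < sigma_RMS, else use 0.999 * sigma_RMS.
            if island >= rms:
                island = 0.999 * rms
            prd = prd_of(rms, island)
            if step_best is None or prd > step_best[2]:
                step_best = (rms, island, prd)
        if best_any is None or step_best[2] > best_any[2]:
            best_any = step_best
        if step_best[2] >= threshold:
            best_above = step_best
        elif best_above is not None:
            break  # PRD has just passed below the threshold
    return best_above if best_above is not None else best_any


# Toy PRD model (illustrative only): PRD rises with the RMS parameter.
toy = lambda rms, island: min(100.0, 60.0 + 10.0 * rms)
print(optimise_prd([2, 3, 4, 5], [1.0, 2.5], toy))
```

In practice each call to `prd_of` is expensive, since it involves running a SF on both the cutout and its inverse, which is one reason for optimising on a sample-cutout rather than on the full image.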

For our initial studies, we have chosen to set $n=2.5^\circ$ to provide a sufficiently large region of sky to ensure the finder parameters are not biased by small-scale structure in a given image. Also, Aegean, PyBDSF, and Selavy are configured to use the RMS box optimisation inputs from bane, with Selavy only accepting the RMS box size. Appendix B provides details of the SFs and their settings used herein.

For the purposes of placing the SFs on equal footing, we have chosen to restrict Aegean, Caesar and Selavy to single threaded mode, so as to keep the background/noise statistics consistent, at the cost of computational speed. In addition, we keep all of the internal parameters of all of the SFs fixed, instead of tweaking them by hand for each use case. Every effort has been made to keep each SF module as generic as possible.

3.1.4. Hydra

Hydra is the main tool that glues everything together, by running Typhon for $\mathcal{D}$ and $\mathcal{S}$ images, and producing data products such as diagnostic plots and catalogues. One of the main underlying features of Hydra is that it uses a clustering algorithm (Boyce Reference Boyce2018) to relate information between SF components in both $\mathcal{D}$ and $\mathcal{S}$ images. In addition, Hydra will also accept simulated catalogue input, under a source-finder pseudonym.

Fig. 5 shows an example of how the clustering algorithm works. All components between all $\mathcal{D}$ and $\mathcal{S}$ SF detections (i.e., catalogue rows) are spatially grouped together, with their overlaps forming clumps with unique clump_ids. The clumps are also decomposed into the closest $\mathcal{DS}$ matches, and assigned unique match_ids. The matches are further broken down by SF into subclumps (not shown), and assigned unique subclump_ids. All of this information is compiled into a cluster catalogue (or table), containing the following key reference elements (columns): cluster catalogue ID, clump ID, match ID, subclump ID, SF $\mathcal{D/S}$ catalogue cross-reference ID, and image depth ($=\mathcal{D},\,\mathcal{S}$). In addition, the catalogue contains common SF output parameters, such as RA, Dec, flux density, etc. There is also a clump catalogue, consisting of rows by unique clump_id, of cluster centroid positions, cutout sizes, total number of components, number of components per SF, SFs with the best residual statistics, etc.
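The grouping of overlapping components into clumps can be illustrated with a small union-find sketch. It is not Hydra's implementation: the `overlaps` predicate is a hypothetical stand-in for the spatial overlap test, the pairwise loop is quadratic in the number of components, and the overlap pairs below are merely chosen to be consistent with the example described for Fig. 5.

```python
from typing import Callable, Dict, List, Sequence, TypeVar

T = TypeVar("T")


def assign_clump_ids(
    components: Sequence[T], overlaps: Callable[[T, T], bool]
) -> List[int]:
    """Label each component with a clump ID (1-based), via union-find."""
    parent = list(range(len(components)))

    def find(i: int) -> int:
        # Follow parent links to the root, halving the path as we go.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    # Merge the groups of every overlapping pair of components.
    for i in range(len(components)):
        for j in range(i + 1, len(components)):
            if overlaps(components[i], components[j]):
                root_i, root_j = find(i), find(j)
                if root_i != root_j:
                    parent[root_j] = root_i

    # Number the clumps in order of first appearance.
    clump_of_root: Dict[int, int] = {}
    clump_ids: List[int] = []
    for i in range(len(components)):
        root = find(i)
        if root not in clump_of_root:
            clump_of_root[root] = len(clump_of_root) + 1
        clump_ids.append(clump_of_root[root])
    return clump_ids


# Hypothetical overlap pairs between component numbers 1..10.
pairs = {(1, 2), (2, 3), (1, 6), (3, 8), (3, 9), (3, 10), (4, 5), (4, 7)}
touching = lambda a, b: (a, b) in pairs or (b, a) in pairs
print(assign_clump_ids(list(range(1, 11)), touching))
```

With these pairs, components 1, 2, 3, 6, 8, 9, and 10 share one clump ID, and components 4, 5, and 7 share another.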

Figure 6. Derivation of the distance metric used for clustering. Here we assume that the space is locally flat, so that $\Delta(C_i,C_j)\approx(\delta^2_{\texttt{RA}_{ij}}+\delta^2_{\texttt{Dec}_{ij}})^{1/2}$, where $\delta_{\texttt{RA}_{ij}}=(\texttt{RA}_j-\texttt{RA}_i)\cos(\texttt{Dec}_i)$ and $\delta_{\texttt{Dec}_{ij}}=\texttt{Dec}_j-\texttt{Dec}_i$. The distances from the centres of components $C_i$ and $C_j$ to their edges, along a ray between them, are given by $r_i$ and $r_j$, respectively: that is, $r_\mu$ is a standard geometrical expression in terms of angle $\beta_\mu=\pi/2-(\theta_\mu-\eta)$ with respect to the ray and the semi-major axis $a_\mu$, where $\theta_\mu$ is the position angle, $\eta=-\tan^{-1}(\delta_{\texttt{Dec}_{ij}}/\delta_{\texttt{RA}_{ij}})$, and $\mu=i,\,j$. The grey area outside the ellipses is the skirt, whose extent is determined by $f$.

Figure 7. Hydra Viewer Infographic: The cutout viewing section of Hydra’s local web-viewer interface. At the top is the navigation bar, which allows the user to navigate by clump ID, go to a specific clump, turn on/off cutout annotations, or examine S/N bins of diagnostic plots such as $\mathcal{C}$ and $\mathcal{R}$, by using the Mode button. The main panel contains $\mathcal{D}$ (top) and $\mathcal{S}$ (bottom) square image cutouts (first column) and SF residual image cutouts (remaining columns), centred about a given clump’s centroid. Here the annotation is turned on, with the neighbouring clumps greyed out. The table at the bottom (not to scale) shows the cluster table rows for the clump, with the following columns: cluster catalogue ID, SF catalogue cross-reference ID, clump ID, subclump ID, match ID, SF or $\mathcal{J}$ catalogue name, image depth, RA ($^\circ$), Dec ($^\circ$), semi-major axis ($^{\prime\prime}$), semi-minor axis ($^{\prime\prime}$), position angle ($^\circ$), total flux density (mJy), bane RMS noise (mJy), S/N (total flux over bane RMS noise), peak flux (mJy beam$^{-1}$), normalised-residual RMS (mJy (arcmin$^2$ beam)$^{-1}$), normalised-residual MADFM (mJy (arcmin$^2$ beam)$^{-1}$), and normalised-residual $\Sigma I^2$ ((mJy (arcmin$^2$ beam))$^{-2}$). The normalised-residual statistics are normalised by the cutout area (arcmin$^2$). This statistical information is also shown below each cutout, along with the number of components (N), and cutout size (Size, in arcmin). This figure is to illustrate the layout of the Hydra viewer, not the details. It shows screen shots from the Hydra Viewer pasted together, hence the fonts appear small. The data at the bottom is raw output from the cluster table, which is not rounded in this version of the software.

Fig. 6 shows the derivation of the distance metric used in the clustering algorithm. The algorithm uses the following distance metric constraint to determine the overlap between two elliptical components, $C_i$ and $C_j$, with centre-to-edge distances, $r_i$ and $r_j$, along an adjoining ray.

where

is the distance metric, and

\begin{equation*} r_\mu=\frac{a_\mu b_\mu}{\sqrt{a_\mu^2\cos^2(\theta_\mu-\eta)+b_\mu^2\sin^2(\theta_\mu-\eta)}}\,,\nonumber\end{equation*}

are the centre-to-edge distances, for $\mu=i,j$, where $a_\mu$ is the semi-major axis, $b_\mu$ is the semi-minor axis, and $\theta_\mu$ is the position angle (defined in the same manner as the beam PA, Greisen Reference Greisen2017). So components satisfying this constraint are clumped together.
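The geometry can be sketched numerically as follows. The centre-to-edge distance follows the $r_\mu$ expression above, and the flat-sky separation and ray angle $\eta$ follow the definitions in Fig. 6. The overlap test itself is an assumption: it takes the constraint to be $\Delta(C_i,C_j)\le f\,(r_i+r_j)$, with $f$ a skirt factor, which may differ from the form Hydra actually uses. The dictionary keys are hypothetical, all angles and sizes are taken to be in degrees, and RA wrap-around is ignored.

```python
import math


def centre_to_edge(a: float, b: float, theta: float, eta: float) -> float:
    """Distance from an ellipse centre to its edge along the adjoining ray.

    a, b  -- semi-major and semi-minor axes
    theta -- position angle (radians); eta -- ray angle (radians)
    """
    phi = theta - eta
    return a * b / math.sqrt((a * math.cos(phi)) ** 2 + (b * math.sin(phi)) ** 2)


def components_overlap(ci: dict, cj: dict, f: float = 1.0) -> bool:
    """Assumed overlap test: separation <= f * (r_i + r_j)."""
    d_ra = (cj["ra"] - ci["ra"]) * math.cos(math.radians(ci["dec"]))
    d_dec = cj["dec"] - ci["dec"]
    delta = math.hypot(d_ra, d_dec)  # locally flat-sky separation
    # atan2 avoids division by zero; r depends only on squared sin/cos,
    # so the quadrant difference relative to atan does not matter.
    eta = -math.atan2(d_dec, d_ra)
    r_i = centre_to_edge(ci["a"], ci["b"], math.radians(ci["pa"]), eta)
    r_j = centre_to_edge(cj["a"], cj["b"], math.radians(cj["pa"]), eta)
    return delta <= f * (r_i + r_j)


# Two circular components 0.01 deg apart, each of radius 0.006 deg.
c1 = {"ra": 0.0, "dec": 0.0, "a": 0.006, "b": 0.006, "pa": 0.0}
c2 = {"ra": 0.01, "dec": 0.0, "a": 0.006, "b": 0.006, "pa": 0.0}
print(components_overlap(c1, c2))
```

A predicate of this kind could be supplied to a generic grouping routine to build clumps from pairwise overlaps.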

Hydra also provides a web-viewer (known as the Hydra Viewer) for exploring image and residual image cutouts by clump_id, along with corresponding cluster table information. Fig. 7 provides a detailed description of the Hydra Viewer’s cutout interface. As indicated in the figure, the viewer has radio component annotations that can be toggled on/off. Fig. 8 provides a more detailed example. Table 4 describes the annotation colours, which are stored as metadata in Hydra’s Configuration Management (Fig. 3).

The following is a list of the data products produced by Hydra:

• Typhon Metrics

– $\mathcal{D/S}$ Diagnostic Plots of

* PRD

* PRD CPU Times

* Residual RMS

* Residual MADFM

* Residual $\Sigma I^2$

– Table of $\mathcal{D}$ and $\mathcal{S}$ optimised RMS and island parameters

• $\mathcal{D/S}$ Catalogues

– SF Catalogues

– Cluster Catalogue

– Clump Catalogue

• Optional Simulated Input Catalogue

• $\mathcal{D/S}$ Cutouts

– Un/annotated Images

– Un/annotated Residual Images

• $\mathcal{D/S}$ Diagnostic Plots

– Clump Size Distributions

– Detections vs S/N

– $\mathcal{C}$ vs S/N

– $\mathcal{R}$ vs S/N

– Flux-Ratios: $S_{out}/S_{in}$ vs S/N

– False-Positives vs S/N

• Hydra Viewer: Local Web-browser Tool

All of this information is stored in a tarball. The Hydra Viewer allows the user to view all of these data products. The cutout viewer portion is linked only to the cluster catalogue. It is accessible through an index.html file in the main tar directory.

4. Completeness and reliability metrics

Completeness and reliability metrics can be generated through various combinations of deep, shallow, and injected sources. Fig. 9 shows a Venn diagram of the overlapping possibilities. In addition, we need to be careful in our definitions of these metrics.

Here we use a clustering approach to spatially match our detections (Equation (4)). An alternative method is to use a cutoff distance in a catalogue cross-match (e.g., Huynh et al. Reference Huynh, Hopkins, Norris, Hancock, Murphy, Jurek and Whitting2012; Hopkins et al. Reference Hopkins2015; Hale et al. Reference Hale, Robotham, Davies, Jarvis, Driver and Heywood2019).Footnote m Depending on the cutoff distance adopted, ‘locally,’ this approach may lead to associations between adjacent clumps that may not actually be related. The clustering approach aims to mitigate this effect.

Table 4. SF annotation colours.

Figure 8. An example of a $\mathcal{D}$-image cutout, with annotations turned on, consisting of 4 Aegean, 2 ProFound, 2 PyBDSF, and 2 Selavy overlapping $\mathcal{D}$-image catalogue components. The label at the top indicates it corresponds to clump_id 243, and the numbers at the centres of the cyan boxes are the match_ids (369 through 372).

Completeness ($\mathcal{C}$) is the fraction of real detections to real sources, and reliability ($\mathcal{R}$) is the fraction of real detections to detected sources (Fig. 9). Here we define these metrics in terms of ‘real’ injected (simulated) sources ($\mathcal{J}$) vs deep ($\mathcal{D}$) and shallow ($\mathcal{S}$) detections and, ‘assumed-real’ deep detections vs shallow detections. In the case where the sources are known (i.e., injected), we take the fraction of real–deep ($\mathcal{D}\cap\mathcal{J}$) or real–shallow ($\mathcal{S}\cap\mathcal{J}$) detections to the injected sources for our completeness,

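\begin{equation} \mathcal{C_{\mathcal{D}}}=\frac{\mathcal{D}\cap\mathcal{J}}{\mathcal{J}}\,,\end{equation}

or

\begin{equation} \mathcal{C_{\mathcal{S}}}=\frac{\mathcal{S}\cap\mathcal{J}}{\mathcal{J}}\,,\end{equation}

respectively.Footnote n Similarly, the fraction of real–deep or real–shallow detections to the corresponding deep or shallow detections gives the reliability,

\begin{equation} \mathcal{R_{\mathcal{D}}}=\frac{\mathcal{D}\cap\mathcal{J}}{\mathcal{D}}\,,\end{equation}

or

\begin{equation} \mathcal{R_{\mathcal{S}}}=\frac{\mathcal{S}\cap\mathcal{J}}{\mathcal{S}}\,,\end{equation}

respectively. In the case where no true underlying sources are known we use the deep detections as a proxy, and take the fraction of real–shallow detections to deep detections for our completeness,

\begin{equation} \mathcal{C_{\mathcal{DS}}}=\frac{\mathcal{S}\cap\mathcal{D}}{\mathcal{D}}\,,\end{equation}

and the fraction of real–shallow to shallow detections for our reliability,

\begin{equation} \mathcal{R_{\mathcal{DS}}}=\frac{\mathcal{S}\cap\mathcal{D}}{\mathcal{S}}\,.\end{equation}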

Figure 9. Venn diagram of completeness and reliability, for sets of deep ($\mathcal{D}$), shallow ($\mathcal{S}$), and injected ($\mathcal{J}$) sources.

We can take this one step further by asking the question, ‘Given our knowledge of injected sources, how good are our measures of deep–shallow completeness ($\mathcal{C_{\mathcal{DS}}}$) and reliability ($\mathcal{R_{\mathcal{DS}}}$)?’ From this, we define the fraction of real–deep–shallow detections, $(\mathcal{D}\cap\mathcal{J})\cap(\mathcal{S}\cap\mathcal{J})$, to real–deep detections, $\mathcal{D}\cap\mathcal{J}$, as our goodness of completeness,

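\begin{equation} \tilde{\mathcal{C}}_{\mathcal{DS}}=\frac{(\mathcal{D}\cap\mathcal{J})\cap(\mathcal{S}\cap\mathcal{J})}{\mathcal{D}\cap\mathcal{J}}\,,\end{equation}

and the fraction of real–deep–shallow detections to real–shallow detections, $\mathcal{S}\cap\mathcal{J}$, as our goodness of reliability,

\begin{equation} \tilde{\mathcal{R}}_{\mathcal{DS}}=\frac{(\mathcal{D}\cap\mathcal{J})\cap(\mathcal{S}\cap\mathcal{J})}{\mathcal{S}\cap\mathcal{J}}\,.\end{equation}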

Table 5 summarises all of our completeness and reliability metrics (Equations (5) through (12)).Footnote o
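As an informal illustration of these definitions, the sketch below evaluates the completeness, reliability, and goodness fractions with plain set operations. It assumes each detection or injected source has already been reduced to a shared identifier (for instance, a match ID), so that membership in $\mathcal{D}$, $\mathcal{S}$, and $\mathcal{J}$ can be compared directly; the function names, dictionary keys, and example identifiers are hypothetical.

```python
def fraction(numerator: set, denominator: set) -> float:
    """Ratio of set sizes; NaN when the denominator set is empty."""
    return len(numerator) / len(denominator) if denominator else float("nan")


def completeness_reliability(deep: set, shallow: set, injected: set) -> dict:
    """Evaluate the metrics described in the text from three ID sets."""
    real_deep = deep & injected          # D n J
    real_shallow = shallow & injected    # S n J
    real_deep_shallow = real_deep & real_shallow
    return {
        # Injected sources known: completeness and reliability.
        "C_D": fraction(real_deep, injected),
        "C_S": fraction(real_shallow, injected),
        "R_D": fraction(real_deep, deep),
        "R_S": fraction(real_shallow, shallow),
        # Deep detections used as a proxy for the true sources.
        "C_DS": fraction(shallow & deep, deep),
        "R_DS": fraction(shallow & deep, shallow),
        # Goodness of the deep-shallow completeness and reliability.
        "goodness_C": fraction(real_deep_shallow, real_deep),
        "goodness_R": fraction(real_deep_shallow, real_shallow),
    }


# Toy identifiers only, not taken from any catalogue.
metrics = completeness_reliability(
    deep={1, 2, 3, 4}, shallow={2, 3, 5}, injected={1, 2, 3, 6}
)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

In Hydra these ratios are evaluated per flux-density or S/N bin rather than over whole catalogues; the global version here is only meant to make the set algebra explicit.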

Table 5. Completeness/reliability metrics (see Fig. 9) in terms of deep ($\mathcal{D}$), shallow ($\mathcal{S}$), and injected ($\mathcal{J}$) sources.

Fig. 10 shows examples of deep–shallow source component overlaps, $\mathcal{S}\cap\mathcal{D}$, to illustrate the calculation of $\mathcal{C_{\mathcal{DS}}}$ and $\mathcal{R_{\mathcal{DS}}}$. Matches are done pair-wise, within clumps, between the closest centres of overlapping deep–shallow components. This method is more precise than a typical fixed separation nearest neighbour approach (Hopkins et al. Reference Hopkins2015; Riggi et al. Reference Riggi2019), as it ensures source components always overlap. The $|\mathcal{S}\cap\mathcal{D}|:|\mathcal{D}|$ and $|\mathcal{S}\cap\mathcal{D}|:|\mathcal{S}|$ ratios are then binned with respect to the $\mathcal{D}$ and $\mathcal{S}$ flux densities (or S/N), respectively, producing $\mathcal{C_{\mathcal{DS}}}$ vs $\mathcal{D}$ completeness and $\mathcal{R_{\mathcal{DS}}}$ vs $\mathcal{S}$ reliability histograms.
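The binning step can be sketched with NumPy as below. The inputs are assumptions for illustration: an array of S/N values for one image depth and a boolean flag per component recording whether it has a pair-wise match at the other depth. Passing deep components flagged by shallow matches gives a $\mathcal{C_{\mathcal{DS}}}$-style histogram; passing shallow components flagged by deep matches gives an $\mathcal{R_{\mathcal{DS}}}$-style one. The bin edges and values are arbitrary.

```python
import numpy as np


def binned_fraction(snr, matched, bin_edges):
    """Fraction of matched components in each S/N bin (NaN for empty bins)."""
    snr = np.asarray(snr, dtype=float)
    matched = np.asarray(matched, dtype=bool)
    # Count all components and the matched subset in the same bins.
    total, _ = np.histogram(snr, bins=bin_edges)
    hits, _ = np.histogram(snr[matched], bins=bin_edges)
    # Suppress divide-by-zero warnings; empty bins are reported as NaN.
    with np.errstate(invalid="ignore", divide="ignore"):
        return np.where(total > 0, hits / total, np.nan)


# Toy values only: six components, one of which is unmatched.
snr = [3.0, 4.0, 6.0, 7.0, 12.0, 15.0]
matched = [False, True, True, True, True, True]
print(binned_fraction(snr, matched, bin_edges=[2.0, 5.0, 10.0, 20.0]))
```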

Figure 10. Examples of deep (blue) and shallow (amber) source component overlaps, $\mathcal{C_{\mathcal{DS}}}=(\mathcal{S}\cap\mathcal{D})/\mathcal{D}$ and $\mathcal{R_{\mathcal{DS}}}=(\mathcal{S}\cap\mathcal{D})/\mathcal{S}$. Real–shallow detections are indicated by overlapping pair-wise deep–shallow detections ($\mathcal{S}\cap\mathcal{D}$), whose centres are closest. The dashed lines indicate clumps of component extent overlays.

5. Validation

In this section we use $2^\circ\times2^\circ$ simulated-compact (CMP) and simulated-extended (EXT) image data to characterise the performance of Hydra, and validate some new metrics. In particular, the simulated data are used to explore and develop metrics that can be used for real images where the ground truth is unknown. A preliminary discussion on SF performance is also presented. Paper II is focused on cross-SF comparison, using our simulated data along with some real data.

5.1. Image data

5.1.1. Simulated compact sources, CMP

The simulated image, shown in Fig. 11, is produced in two steps: generation of a noise image, followed by the addition of artificial sources. We use miriad (Sault, Teuben, & Wright Reference Sault, Teuben, Wright, Shaw, Payne and Hayes1995) to generate the artificial noise image, as follows. The imgen task was used to produce a 1800 $\times$ 1800 pixel image with 4” pixels (i.e., a $2^{\circ} \times 2^{\circ}$ field), populated by random Gaussian noise of RMS $20\,\unicode{x03BC}$Jy beam$^{-1}$. This image was convolved using convol to mimic a 15” FWHM beam, which has the effect of increasing the noise level by a factor of 2.8, so the resulting image was then scaled using maths, dividing by this factor to restore the original noise level of $20\,\unicode{x03BC}$Jy beam$^{-1}$.
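The noise-image recipe (generate white Gaussian noise, smooth to the beam, rescale to the target RMS) can be sketched in plain Python. This is not the miriad workflow. It assumes a unit-sum Gaussian kernel, wrap-around edges, and a small 64 $\times$ 64 grid for speed, and all function names are hypothetical. With a unit-sum kernel the smoothing lowers rather than raises the RMS, so the sketch rescales by the measured RMS instead of the fixed factor quoted for convol.

```python
import math
import random

def gaussian_kernel(fwhm_pix):
    """Normalised 1D Gaussian kernel truncated at +/-3 sigma."""
    sigma = fwhm_pix / (2.0 * math.sqrt(2.0 * math.log(2.0)))
    half = int(math.ceil(3.0 * sigma))
    weights = [math.exp(-0.5 * (k / sigma) ** 2) for k in range(-half, half + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def smooth_rows(image, kernel):
    """Convolve every row with the kernel, wrapping at the edges."""
    half = len(kernel) // 2
    n = len(image[0])
    return [[sum(w * row[(x + k - half) % n] for k, w in enumerate(kernel))
             for x in range(n)] for row in image]

def transpose(image):
    """Swap rows and columns so the row smoother can act on columns."""
    return [list(col) for col in zip(*image)]

def rms(image):
    """Standard deviation of all pixel values."""
    values = [v for row in image for v in row]
    mean = sum(values) / len(values)
    return math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))

random.seed(1)
size, target_rms = 64, 20e-6   # demo grid; target RMS of 20 uJy/beam in Jy
fwhm_pix = 15.0 / 4.0          # 15" beam sampled with 4" pixels
noise = [[random.gauss(0.0, target_rms) for _ in range(size)] for _ in range(size)]

# Separable 2D smoothing: rows first, then columns.
kernel = gaussian_kernel(fwhm_pix)
smoothed = transpose(smooth_rows(transpose(smooth_rows(noise, kernel)), kernel))

# Rescale by the measured RMS, since the change in noise level depends on
# how the convolution kernel is normalised.
scale = target_rms / rms(smoothed)
noise_image = [[v * scale for v in row] for row in smoothed]
```

The result is a spatially correlated noise map whose RMS equals the target value by construction.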

Figure 11. Simulated map with point-like (compact) sources. The coordinates are arbitrarily set, and the FWHM is set to 15”.

To generate the properties of the artificial sources, we use the 6th order polynomial fit to the 1.4 GHz source counts from Hopkins et al. (Reference Hopkins, Afonso, Chan, Cram, Georgakakis and Mobasher2003), which is consistent with more recent source count determinations for the flux density range considered here (e.g., Gürkan et al. Reference Gürkan2022, and references therein). A sequence of 34 exponentially spaced bins in flux density was defined, ranging from $50\,\unicode{x03BC}$Jy to $1\,$Jy, and within each bin the number of sources was calculated from the source count model. The flux density for each artificial source was assigned randomly between the extrema of the bin in which it lies. Source positions were also assigned randomly, with no attempt to mimic the clustering properties of real sources. For the $2^{\circ} \times 2^{\circ}$ field with a flux density limit of $50\,\unicode{x03BC}$Jy, we end up with a list of 9075 artificial sources.
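The flux-density assignment can be sketched as follows, under two assumptions that the text does not state explicitly: that "exponentially spaced" means equal spacing in $\log_{10}$ of flux density, and that fluxes are drawn uniformly within each bin. The per-bin counts below are a placeholder toy model, not the Hopkins et al. (2003) polynomial fit, and the function names are hypothetical.

```python
import math
import random

def log_spaced_edges(s_min, s_max, n_bins):
    """Return n_bins + 1 bin edges equally spaced in log10(flux density)."""
    step = (math.log10(s_max) - math.log10(s_min)) / n_bins
    return [10 ** (math.log10(s_min) + i * step) for i in range(n_bins + 1)]

def draw_fluxes(edges, counts_per_bin, rng):
    """Draw flux densities uniformly between the edges of each bin."""
    fluxes = []
    for lo, hi, n in zip(edges[:-1], edges[1:], counts_per_bin):
        fluxes.extend(rng.uniform(lo, hi) for _ in range(n))
    return fluxes

rng = random.Random(42)
edges = log_spaced_edges(50e-6, 1.0, 34)   # 50 uJy to 1 Jy in 34 bins

# Placeholder counts: a steeply falling toy model, NOT the published fit.
counts = [max(1, int(round(1000 * 0.8 ** i))) for i in range(34)]
fluxes = draw_fluxes(edges, counts, rng)
```

Replacing `counts` with the numbers predicted by a source count model for the survey area would yield a catalogue with the desired flux-density distribution.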

These sources were added to the noise image using the Python module Astropy (Astropy Collaboration et al. Reference Astropy Collaboration2013, Reference Astropy Collaboration2018) by constructing 2D Gaussian models with the FWHM of the restoring beam (15”) and scaling the amplitude to represent the randomly assigned peak flux density of the source. Given the sources modelled here are assumed to be point-like (compact), the peak flux density for a source has the same amplitude as the integrated flux density. Using this Gaussian model for each source, we generated an image array for each source to be added into the simulated image. We used Astropy again to convert the RA/Dec location of the source to pixel locations and each source was added to the simulated image at the desired location.
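As a plain-Python stand-in for the Astropy-based injection (not the code used for the paper), the sketch below stamps a circular 2D Gaussian with the beam FWHM onto an image array, with its amplitude set to the peak flux density. The function name `add_point_source` and the 5-sigma stamp size are assumptions, and the RA/Dec to pixel conversion is omitted.

```python
import math

def add_point_source(image, x0, y0, peak, fwhm_pix):
    """Add a circular 2D Gaussian of given peak, centred at pixel (x0, y0), in place."""
    sigma = fwhm_pix / (2.0 * math.sqrt(2.0 * math.log(2.0)))
    reach = int(math.ceil(5.0 * sigma))   # stamp half-width in pixels (assumed)
    ny, nx = len(image), len(image[0])
    for y in range(max(0, int(y0) - reach), min(ny, int(y0) + reach + 1)):
        for x in range(max(0, int(x0) - reach), min(nx, int(x0) + reach + 1)):
            r2 = (x - x0) ** 2 + (y - y0) ** 2
            image[y][x] += peak * math.exp(-0.5 * r2 / sigma ** 2)

# Empty 41 x 41 test image with a single 1 mJy source at the centre,
# using a 15" FWHM beam sampled with 4" pixels.
image = [[0.0] * 41 for _ in range(41)]
add_point_source(image, x0=20, y0=20, peak=1e-3, fwhm_pix=15.0 / 4.0)
```

Because the source is unresolved, the central pixel value equals the assigned flux density in Jy beam$^{-1}$, and the profile falls off symmetrically around it.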

5.1.2. Simulated extended sources, EXT

Following a similar procedure as in Section 5.1.1, we generated a sky model of size 1800 $\times$ 1800 pixels ($4^{\prime\prime}$ pixel size, $2^{\circ}\times2^{\circ}$ field of view) with both point-like and extended sources injected. The noise level is again set to $20\,\unicode{x03BC}$Jy beam$^{-1}$. Extended sources are 2D elliptical Gaussians with randomised position angle and axis ratio, with the axis ratio varying between 0.4 and 1. A maximum major axis size was set at three times the synthesised beam size ($45^{\prime\prime}$, with a $15^{\prime\prime}$ FWHM beam, as in Section 5.1.1).
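A minimal sketch of drawing the extended-source shapes is given below. The text fixes only the axis-ratio range and the maximum major axis, so the uniform distributions, the lower bound on the major axis, and the position-angle range are assumptions, and `draw_extended_shape` is a hypothetical name.

```python
import random

BEAM_FWHM = 15.0               # arcsec
MAX_MAJOR = 3.0 * BEAM_FWHM    # 45 arcsec cap on the major axis

def draw_extended_shape(rng):
    """Draw (major, minor, position angle) for one elliptical Gaussian source."""
    major = rng.uniform(BEAM_FWHM, MAX_MAJOR)   # lower bound is an assumption
    axis_ratio = rng.uniform(0.4, 1.0)          # minor / major
    pa_deg = rng.uniform(0.0, 180.0)            # assumed uniform in [0, 180] deg
    return major, major * axis_ratio, pa_deg

rng = random.Random(7)
shapes = [draw_extended_shape(rng) for _ in range(1000)]
```

Each tuple gives the major and minor axes in arcseconds and the position angle in degrees, which could then be passed to an elliptical Gaussian model when building the sky model.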

A total of 9974 artificial sources were injected, corresponding to a source density of 2500 deg$^{-2}$, with 10% being extended sources. The peak flux densities S of both point-like and extended sources were set to follow an exponential distribution $10^{-\lambda S}$ with $\lambda=1.6$, consistent with that seen in early ASKAP observations of the Stellar Continuum Originating from Radio Physics In Our Galaxy (Scorpio, Umana et al. Reference Umana2015) field (Riggi et al. Reference Riggi2021). The minimum peak flux density for all sources was set at $50\,\unicode{x03BC}$Jy and the maximum fixed at 1 Jy for compact sources and 1 mJy for extended sources. The final simulated image, shown in Fig. 12, was produced by convolving this input sky model using the CASAFootnote p imsmooth task and a target resolution of $15^{\prime\prime}$.

Figure 12. Simulated image with both point-like (compact) and extended sources. The sky coordinates are arbitrarily chosen.

It is important to note here that, unlike the compact source simulation above where the faintest injected source lies at $\mbox{(S/N)}_{\min}\sim2.5$, in the extended source simulated image 20.6% of the injected sources fall below $\mbox{S/N}=1$. This is by design, to provide a more realistic image, and to test the impact on the SFs of having real sources lying below the noise level (re. M. M. Boyce et al., in preparation).

Table 6. Hydra $\mu$-optimised box_size and step_size inputs for Aegean, PyBDSF, and Selavy,$^{\rm a}$ using CMP and EXT $\mathcal{D/S}$-image data.

$^{\rm a}$Selavy only accepts box_size.

5.2. Typhon statistics

5.2.1. Optimisation run results

Hydra uses Typhon to set the RMS box and island parameters for each SF (Aegean, Caesar, ProFound, PyBDSF, and Selavy) to ensure a 90% PRD cutoff. The RMS box parameters, obtained from Typhon’s $\mu$-optimisation routine, were used by Aegean, PyBDSF, and Selavy. An $\mathcal{S}$-image was generated by Homados, and RMS box and island parameters similarly estimated. Tables 6 and 7 summarise these results for our CMP and EXT images.

For the CMP source $\mathcal{D}$-image, $\mu\sim22\,\unicode{x03BC}$Jy beam$^{-1}$ (Table 6) which is consistent with the design RMS noise of $20\,\unicode{x03BC}$Jy beam$^{-1}$ (re. Section 5.1). For the EXT source $\mathcal{D}$-image, $\mu\sim68\,\unicode{x03BC}$Jy beam

![]() $^{-1}$