Most political science PhD programs in North America and Europe require their doctoral students to pass two or more qualifying field exams (QFEs) that correspond with existing subfields or a particular research theme. These subfields and themes include comparative politics, international relations, political theory, domestic politics, methodology, gender and politics, and local government, among others. Passing these exams is an important milestone for graduate students because it means that they finally can begin serious work on their dissertation.

In their CVs, most if not all political scientists self-identify as methodologists, comparativists, theorists, or by another moniker that corresponds to the QFEs that they passed in graduate school. In this sense, QFEs serve as a certification mechanism indicating that the individual has demonstrated “a broad mastery of core content, theory, and research” of a particular subfield (Mawn and Goldberg 2012, 156; see also Wood 2015, 225) and is now “ready to join the academic community” [Footnote 1] (Estrem and Lucas 2003, 396; Schafer and Giblin 2008, 276). Achieving this milestone also means that one is qualified to teach a variety of courses in a particular subfield (Ishiyama, Miles, and Balarezo 2010, 521; Jones 1933; Wood 2015, 225); read and synthesize new research (Ponder, Beatty, and Foxx 2004); and converse effectively with others in the subfield at conferences, in job talks, and in departmental hallways. In short, passing a QFE signals that the department has certified that an individual is suitably prepared for advanced study and teaching in a particular area. [Footnote 2]

Surprisingly, there is substantial variation in how departments prepare students and administer their QFEs. Some departments have formal reading lists; others leave it to the supervisors and the students to generate them; some rely solely on class syllabi and core courses to prepare their students. The exam itself varies, with some departments opting for different written formats and some requiring oral defenses. This organizational variation seems at odds with the idea of the QFE as a broadly recognized qualification.

This article analyzes an original dataset of books, articles, and chapters taken from QFE reading lists in a single subfield of political science, Canadian politics, to evaluate the extent to which QFE preparation provides comprehensive and cohesive training. Is there consistency across departments? Our main goal in answering this question is to interrogate the usefulness of the QFE as a certification tool. If the reading lists converge around numerous books and articles, then we should expect those who pass a QFE to share a common language for researching, communicating, and teaching about the subfield. If the lists are incoherent or fragmented, then the QFE certification likely will be less valuable as a heuristic for evaluating a candidate’s ability in these areas. Similarly, if the reading lists privilege certain topics and voices over others, then this may have tremendous implications for the current and future direction of the discipline as it relates to various epistemological, ontological, and normative concerns (Stoker, Peters, and Pierre 2015). Our results suggest that the utility of the QFE as a certification tool may be weaker than expected: in our dataset of 2,953 items taken from the preparation materials of 16 universities, there is not a single item that appears on every list.

WHY CANADIAN POLITICS?

The Canadian politics QFE is a useful test of the certification argument because it is likely to be the most cohesive among the traditional subfields of the discipline. Not only does it focus on the politics of one country and therefore is limited in geographic scope and substantive focus, but also the body of literature is manageable given the number of (relatively few) individuals working in it. Moreover, the Canadian political science community has long been concerned about various external and internal intellectual threats, which suggests self-awareness that should contribute to cohesion. In terms of external threats, scholars have expressed concerns about the Americanization of the discipline (Albaugh 2017; Cairns 1975; Héroux-Legault 2017) and a comparative turn (Turgeon et al. 2014; White et al. 2008). Others are concerned about internal threats, lamenting the fact that white, male, and English-Canadian voices have long dominated the scholarly community at the expense of French, Indigenous, and other racial and ethnic minority voices (Abu-Laban 2017; Ladner 2017; Nath, Tungohan, and Gaucher 2018; Rocher and Stockemer 2017; Tolley 2017). This introspection, coupled with the limited size of the community, is likely to increase consistency across departments; therefore, we expect the core set of readings identified in the reading lists to be more unified and comprehensive than in other subfields.

THE DATA

To test this assumption, we emailed all of the graduate-program directors across Canada to request copies of their reading lists for the Canadian politics QFE. [Footnote 3] Nineteen universities offer PhD training in Canada and 18 offer Canadian politics as a subfield. Email requests were sent in fall 2016 and summer 2018, yielding 16 lists; two universities indicated that they had no set list. Of the lists received, four were course syllabi. [Footnote 4]

Research assistants entered each reading item into a spreadsheet and coded the entry for author name(s); gender of the author(s); year of publication; type (i.e., journal, book, book chapter, or government document); country of publication; language; type of analysis (i.e., qualitative or quantitative); whether the piece was comparative; and category title. A random sample of 10% of entries from each list was checked and verified. There were as many as 35 subheadings on the lists; therefore, we collapsed the categories into 17 broad titles, as follows: General/Classics (5/16 lists); The Discipline/Methods/Theory (10/16 lists); Political Culture (7/16 lists); Federalism (13/16 lists); Media (2/16 lists); Indigenous (8/16 lists); Identity (11/16 lists); Constitution/Charter Politics/Courts (12/16 lists); Political Parties (10/16 lists); Interest Groups/Social Movements (8/16 lists); Political Economy (7/16 lists); Provinces/Regionalism/Quebec (9/16 lists); Public Policy (9/16 lists); Gender (6/16 lists); Multilevel Governance, Local and Urban Politics (4/16 lists); Political Behaviour, Voting, and Elections (12/16 lists); and Parliament (14/16 lists). [Footnote 5]

DESCRIPTIVE RESULTS

Our starting point for analysis was to examine simple descriptive characteristics. It was immediately clear that the lists were quite varied, most notably in size. The average number of readings assigned on a list was 184.6, but the range was informative—from 44 to 525 (median=130). This finding suggests real differences in the workload of graduate students in different locations. There also was variation in the list contents. The average number of books was 97, ranging from 21 to 339 (median=55.5). The number of articles ranged from eight to 100 (averaging 48; median=52). Finally, book chapters were popular inclusions, averaging 23.2% of lists (4.1% to 62.7%; median=22.5%). Most of the material was published in Canada (73.6%, ranging from 54.1% to 94.1%; median=72.1%) but a substantial minority was published in Europe (averaging 19.5%; median=20.1%).

We next considered the diversity of voices present on each list. Although Canada is a bilingual country with a bilingual national association, the reading lists were decidedly not bilingual. Outside of Quebec, the average number of French-language readings was only 3.17, but the number ranged from zero to 36 (median=0). Within Quebec, perhaps reflecting the dominance of English in political science, the average number was only 28, ranging from a low of nine to a high of 58 (median=22.5). The gender breakdown of authors was more positive (23.58% female), ranging from 12.38% to 32.56% (median=23.98%). [Footnote 6] This finding is comparable to the membership of the Canadian Political Science Association (i.e., 40.9% in 2017, an increase from 25.4% in 1997). However, the percentage of lists dedicated to subheadings that consider marginalized voices (i.e., gender, Indigenous, class, race, ethnicity, multiculturalism, immigration, religion, interest groups, and social movements) was small (3.95%, ranging from zero to 18.6%; median=0.88%). This is consistent with recent research that suggests the presence of a hidden curriculum in political science that silos marginalized topics and voices while privileging approaches that use gender and race as descriptive categories rather than as analytic or theorized categories (Cassese and Bos 2013; Cassese, Bos, and Duncan 2012; Nath, Tungohan, and Gaucher 2018).

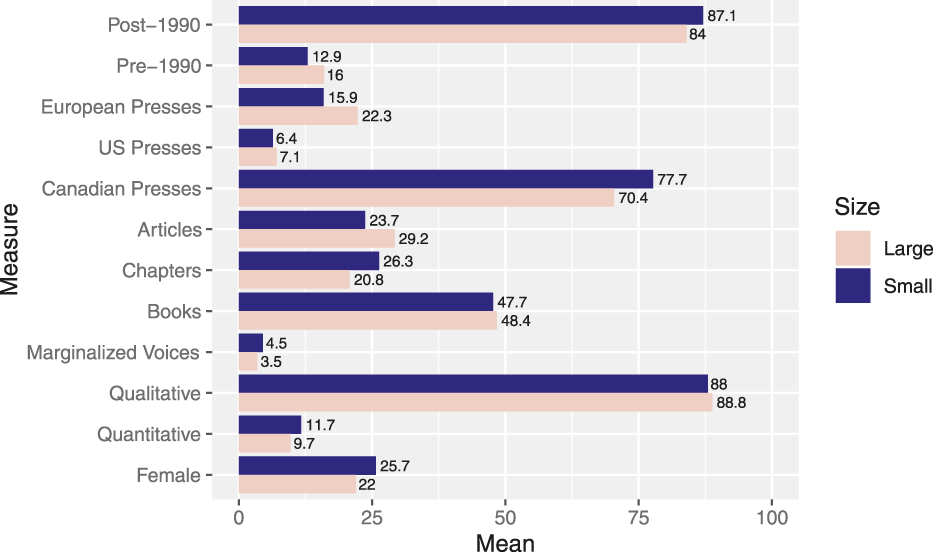

Because large and small departments have different faculty complements whose experiences may affect the design of comprehensive-exam lists, figure 1 reports many of the same statistics by size of department—both small (i.e., 25 or fewer faculty members) and large. The representation of female authors is almost identical, but larger departments have slightly less representation of quantitative political science, more articles and fewer chapters, and more international publications. The age of the readings, published before or after 1990, was similar.

Figure 1 Descriptive Statistics of Reading Lists, by Department Size (Percentages)

A COHESIVE FIELD?

Considering the descriptive overview of the reading lists, we now can answer our research question: Is the study of Canadian politics cohesive? We examined this question in three ways: topics covered, authors cited, and readings included. [Footnote 7] As noted previously, the variation in subheadings across the QFE reading lists is substantial. What is most striking is that there is no topic that is covered by every single university (see previous discussion). For example, “federalism” is included on 13/14 of the lists with subheadings [Footnote 8]; the constitution, constitutional development, or constitutionalism is included on 10; and Quebec politics is included on eight. Not even “Parliament” (8/14) or “elections” (10/14) appears consistently. [Footnote 9] Perhaps this finding is simply a matter of semantics; however, with general topics such as “federalism,” it is possible to more closely examine what the different schools choose to cover. We compared the readings classified under “federalism” (233 items) with those under “Parliament” (166 items) and found minimal overlap (only four items). The use of subheadings does not seem to be creating false distinctions.
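To make the overlap comparison concrete, the following Python sketch shows one way two categories of readings could be compared. It is an illustration under stated assumptions, not the procedure used for the article: the function names `category_overlap` and `jaccard_from_counts`, the toy titles, and the use of the Jaccard index as a summary measure are all introduced here and do not come from the original analysis.

```python
def category_overlap(items_a, items_b):
    """Return the shared items and the Jaccard index of two categories."""
    a, b = set(items_a), set(items_b)
    shared = a & b
    union = a | b
    jaccard = len(shared) / len(union) if union else 0.0
    return sorted(shared), jaccard


def jaccard_from_counts(n_a, n_b, n_shared):
    """Jaccard index from category sizes.

    Assumes (hypothetically) that no item is counted twice within a category.
    """
    return n_shared / (n_a + n_b - n_shared)


# Toy data with hypothetical titles.
federalism = ["Reading 1", "Reading 2", "Reading 3"]
parliament = ["Reading 3", "Reading 4"]
print(category_overlap(federalism, parliament))

# Counts reported in the text: 233 and 166 items, four in common.
print(round(jaccard_from_counts(233, 166, 4), 4))
```

If the reported counts refer to distinct items, four shared readings out of 395 distinct items amounts to an overlap of roughly 1%, which fits the description of the overlap as minimal.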

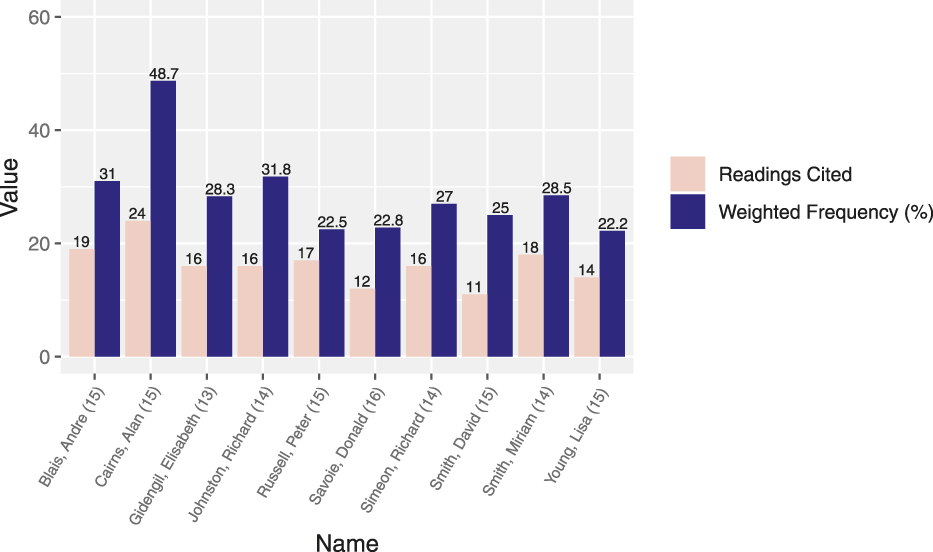

Moving beyond topic headings, which are flawed measures of content because many readings address multiple topics, we considered the authors (or viewpoints) to whom students are exposed. We constructed a score for each author that reflected the number of times they were found on each list, weighted by the number of items on each list (i.e., an additive measure that considers each list equally). [Footnote 10] Figure 2 lists the top 10 cited authors, noting the number of readings cited, their weighted frequency, and the number of lists on which they appear (in parentheses next to their name). [Footnote 11]
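As a rough illustration of how such a score could be computed, the Python sketch below sums, for each author, the percentage share of each list made up of that author's readings, so that every list contributes on the same scale regardless of its length. This is only one reading of the description above and not necessarily the exact procedure used; the function name `weighted_author_scores`, the input layout, and the toy data are hypothetical.

```python
from collections import defaultdict


def weighted_author_scores(reading_lists):
    """Return {author: (weighted_frequency_pct, n_lists, n_pieces)}.

    reading_lists maps a list name to its readings; each reading is a
    (title, authors) pair. This input layout is an assumption.
    """
    score = defaultdict(float)   # sum of per-list percentage shares
    lists_on = defaultdict(set)  # lists on which the author appears
    pieces = defaultdict(set)    # distinct titles cited for the author

    for list_name, readings in reading_lists.items():
        if not readings:
            continue  # an empty list contributes nothing
        share = 100.0 / len(readings)  # weight of one item on this list
        for title, authors in readings:
            for author in set(authors):
                score[author] += share
                lists_on[author].add(list_name)
                pieces[author].add(title)

    return {a: (score[a], len(lists_on[a]), len(pieces[a])) for a in score}


# Toy data with hypothetical universities, titles, and authors.
toy = {
    "University A": [
        ("Reading 1", ["Author X"]),
        ("Reading 2", ["Author X", "Author Y"]),
        ("Reading 3", ["Author Z"]),
        ("Reading 4", ["Author Z"]),
    ],
    "University B": [
        ("Reading 1", ["Author X"]),
        ("Reading 5", ["Author Y"]),
    ],
}
for author, stats in sorted(weighted_author_scores(toy).items()):
    print(author, stats)
```

Under this reading, an author with one item on a short list receives as much credit as an author with several items on a long list. That would explain how the weighted frequency, the number of lists, and the number of pieces cited can diverge for the same author.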

Figure 2 Most-Cited Authors

Looking at the data this way is informative. The most-cited author, across most measures (i.e., weighted frequency, number of lists, and number of works cited), is Alan Cairns. He appears on 15 of the 16 lists (i.e., 24 different pieces were cited) and he has the highest weighted frequency of all authors. Donald Savoie is the only author to appear on all 16 lists, but his weighted frequency is relatively low. Going down the list by weighted frequency, it is interesting to note that the three measures we report are not always correlated. For example, Elisabeth Gidengil has a weighted frequency of 28.29% (i.e., fifth overall) but appears on only 13 lists and has 16 pieces cited. Peter Russell, conversely, has a weighted frequency of 22.5% (i.e., ninth overall) and appears on 15 lists with 17 pieces of work.

These results suggest that there is some consistency in terms of individual viewpoints that are being studied during QFE preparation (i.e., assuming that scholars are consistent across their work). However, a total of 1,188 author names are included on the lists; therefore, agreement on some of the top 10 does not indicate a substantial amount of coherence.

Finally, we considered specific readings to look for cohesiveness in QFE training. Table 1 reports all readings, in order of number of departments, that appeared on a majority of lists in our database (i.e., eight or more). We were surprised to see that no reading is included in every list. The closest is The Electoral System and the Party System in Canada by Alan Cairns, which is included on 14 lists. Only two readings are included on 13 lists and two on 12 lists. The modal number of lists for a single reading is one (1,747 readings total). The 27 readings reported in table 1 are varied in terms of their date of publication, ranging from 1966 to 2012.
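The counts in this paragraph can be reproduced with a simple tally of how many lists include each reading. The Python sketch below is a minimal illustration under stated assumptions: the function names `list_coverage` and `summarize` and the toy data are hypothetical, and it presumes that each reading has already been normalized to a single identifier so that the same work matches across lists.

```python
from collections import Counter


def list_coverage(reading_lists):
    """Count, for each reading, how many lists include it at least once."""
    coverage = Counter()
    for readings in reading_lists.values():
        coverage.update(set(readings))  # set() ignores within-list repeats
    return coverage


def summarize(coverage, n_lists, threshold):
    """Return (readings on >= threshold lists, readings on every list, histogram)."""
    shared = sorted(
        (r for r, k in coverage.items() if k >= threshold),
        key=lambda r: (-coverage[r], r),  # most widely shared first
    )
    universal = sorted(r for r, k in coverage.items() if k == n_lists)
    histogram = Counter(coverage.values())  # lists per reading -> number of readings
    return shared, universal, histogram


# Toy data with hypothetical universities and titles.
toy = {
    "University A": ["Reading 1", "Reading 2", "Reading 3"],
    "University B": ["Reading 1", "Reading 4"],
    "University C": ["Reading 2", "Reading 1", "Reading 5"],
    "University D": ["Reading 2", "Reading 6", "Reading 7"],
}
coverage = list_coverage(toy)
shared, universal, histogram = summarize(coverage, n_lists=len(toy), threshold=2)
print(shared)                    # readings on at least two lists
print(universal)                 # readings on every list
print(histogram.most_common(1))  # modal number of lists per reading
```

In the toy data, as in the dataset described here, no reading appears on every list and the modal reading appears on exactly one. With the real data, the threshold would be eight of the 16 lists.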

Table 1 Most Common Individual Readings

This analysis reveals that there is no substantial “canon” covered by all QFE reading lists for Canadian politics. Noteworthy, however, is the repeated appearance of Cairns in the list of top-cited readings (i.e., his 1968 article appears on 14/16 lists) and Savoie’s name on all 16 lists. If there is to be one “godfather” of Canadian political science, Cairns and Savoie would be strong nominees. Another point to consider is the diversity of subject matter in the most-read pieces. Parliament, the Charter of Rights and Freedoms, the courts, political culture, federalism, multinationalism, Indigenous politics, women and politics, and party politics are all popular. We find this result encouraging in the sense that it suggests learning about the parliamentary process and/or elections is not the only feature that unites QFE training across the country. There appears to be at least majority recognition of the value of many pieces that provide alternative viewpoints on Canadian politics. Together with our previous findings about topic headings, this result makes us cautiously optimistic that students do learn alternative viewpoints most of the time. Nonetheless, the fact that we find most of these viewpoints only in a majority rather than in all of the lists still gives us pause.

WHAT ARE QFES GOOD FOR?

Analyzing QFE reading lists is a useful way of understanding what “qualifying” actually means. It also provides insight into the comprehensiveness and cohesiveness of an academic field and the extent to which any type of universal training is even possible. Although we suspected that analyzing the Canadian politics subfield might be an “easy” case for finding cohesion, our results suggest otherwise. There is no single topic or reading shared by all political scientists who take comprehensive examinations in the field of Canadian politics. This finding means that looking to QFEs as evidence that job candidates and/or faculty members from various universities share a common vocabulary for teaching, communicating, and collaborating is at least somewhat flawed. There is no guarantee that PhD students or even Canadian politics faculty members working in the same department will share the same knowledge base if they received their training at different universities.

Perhaps this fact is unproblematic. Departments frequently hire candidates knowing that their home department specializes in a particular research area; therefore, the lack of overlap across reading lists in fact may be beneficial. We are normatively agnostic on this point. Our purpose was to investigate whether QFEs represented a core or canon in Canadian politics, and our results suggest that they do not. We leave it to individual departments to consider the implications of our findings for graduate training and hiring.