The current conventional narrative is that the world is in a period of global democratic decline. Headlines such as “How Democracy Is Under Threat Across the Globe” (August 19, 2022) appear regularly in the New York Times, and the Washington Post made “Democracy Dies in Darkness” its slogan in 2017. Freedom House opened its 2022 annual report by stating that “Global freedom faces a dire threat” (Repucci and Slipowitz 2022). The Varieties of Democracy (V-Dem) 2023 annual report claimed that “The level of democracy enjoyed by the average global citizen in 2022 is down to 1986 levels” and “Advances in global levels of democracy made over the last 35 years have been wiped out” (Wiebrecht et al. 2023). Academic papers on democratic backsliding have increased substantially in recent years, and their titles often raise alarm: “Democratic Regress in the Contemporary World” (Haggard and Kaufman 2021) necessitates “Facing up to the Democratic Recession” (Diamond 2015) because “A Third Wave of Autocratization Is Here” (Lührmann and Lindberg 2019).

To make accurate claims of this type, we need reliable ways to measure democracy. This is a notoriously difficult problem (see, e.g., Boix, Miller, and Rosato 2013; Cheibub, Gandhi, and Vreeland 2010; Przeworski et al. 2000). Scholars do not agree on how we should define democracy in the first place, and even components with near consensus such as holding free and fair elections are difficult to measure. As a result, there are surprisingly few empirical studies that assess whether the narrative of global democratic decline is systematically true.

Our primary contribution is to highlight and explore the consequences of a key distinction between different democracy indicators. Some are objective and factual, such as whether the incumbent party loses and accepts defeat in an election. Other indicators are subjective and rely on the judgment of expert coders to answer questions such as whether a particular election can be considered “free and fair.”

Recent studies that find evidence of global backsliding rely heavily if not entirely on subjective indicators. Whereas expert-coded data have many advantages—including wide coverage of various dimensions related to democracy—the more they rely on human judgment, the more they can be systematically biased. This raises the possibility that a perceived global decline of democracy may be driven by changes in coder bias rather than actual changes in regime type.

WHAT WE DO

To address this challenge, we survey objective indicators of democracy. For theoretical and pragmatic reasons, we adopt a “quasi-minimalist” conception of democracy that centers on the presence of free and fair elections in which losers accept the results, and we focus our empirical analysis on indicators of electoral competitiveness. We also believe that full democracy requires other features such as checks and balances, free media, and rights protections. These dimensions generally are more difficult to measure objectively; therefore, we include them when feasible, and we encourage future research to continue in this direction.

Practically speaking, we describe trends in de facto and de jure electoral competitiveness, executive constraints, and media freedom. In general, we find little evidence of backsliding on these variables during the past decade. At least on these measures, the global pattern is one of democratic stability or stagnation rather than decline.

First, we examine electoral outcomes. If staying in power is the ultimate endgame for anti-democratic leaders, then incumbent electoral performance and electoral turnover are the most important outcomes to study. In addition to the intrinsic importance of turnover, preventing electoral loss is a key goal of many undemocratic actions, such as banning opposition parties, controlling the media, and placing loyalists in control of election-management bodies. Even the types of subtle actions that many fear are the dominant mode of contemporary authoritarianism and backsliding (Guriev and Treisman 2020; Luo and Przeworski 2019; Przeworski 2019) typically are reflected in election results.

Are incumbent leaders and their parties increasingly dominating elections? Using various measures and data sources, the answer is a reasonably clear “no.” The rate of ruling-party and individual-leader turnover has remained fairly constant since the late 1990s. If anything, the vote shares of winners in executive elections and seat shares of winners in legislative elections have decreased in recent years. The share of elections with real multiparty competition also does not exhibit any decline.

Second, we examine data outside of the electoral arena, beginning with executive constraints. Constitutional rules that limit executive power have been fairly constant if not slightly increasing since the end of the Cold War. Moreover, despite recent prominent cases, there has been no uptick in the rate of leaders evading term limits. Although media freedom is particularly difficult to measure, we present suggestive evidence on this front by examining the number of journalists who have been jailed and killed. This picture is mixed: beginning in the early 2000s, there has been an increase in journalists who have been jailed but also a decrease in the number of those killed for doing their job.

What is driving the differences between expert-coded and objective measures? We consider two possibilities, which are formalized in online appendix section C. First, changes in the media environment or changing coder standards may have led to a time-varying bias in expert surveys that could make it appear that the world is becoming less democratic absent any true trend. Alternatively, leaders may be strategically shifting to more subtle means of backsliding that are more difficult to detect with objective measures.

These explanations are not mutually exclusive, but we argue that coder bias likely explains at least some of the discrepancy. We also provide evidence that media coverage of backsliding has increased in recent years. Future research should establish more definitive evidence on these (or other) mechanisms.

In summary, it may be the case that major backsliding is occurring precisely in ways that elude objective measurement. However, this is an extraordinary claim. The onus should be on those who are making alarmist claims about the decline of democracy to provide stronger evidence than has been presented to date.

WHY IT MATTERS

Democratic backsliding is an important problem from a theoretical and practical perspective, and we do not want to discourage scholars from studying its causes and consequences. Our focus is on broad trends, and we are not asserting that backsliding is not happening anywhere. There are approximately 200 countries in the world and backsliding most likely is happening in some of them at any given time. We believe, for instance, that backsliding has occurred recently in places such as Hungary and Venezuela.

Although a correct accounting of global trends is a key first step, it is arguably more important to understand where backsliding is happening, how it happens (Chiopris, Nalepa, and Vanberg 2021; Cinar and Nalepa 2022; Grillo and Prato 2020; Helmke, Kroeger, and Paine 2022; Svolik 2018), and when it leads to democratic breakdown (Brownlee and Miao 2022; Miller 2021; Treisman 2022). To diagnose the problem correctly, we need accurate measures of whether and how much backsliding is occurring. Focusing on a few prominent cases of backsliding creates misleading views about the resilience of democratic institutions. Furthermore, if leaders generally are not undermining certain rules (e.g., elections and term limits) but instead are focusing on eroding other types of institutions or civil liberties, then taking measurement seriously will help researchers, policy makers, and citizens to better target solutions.

Our core contention is that if the world is experiencing major backsliding in the aggregate, we should expect to see evidence of this effect on the objective measures—particularly incumbent leaders and their parties winning elections—but we do not.

EXISTING DATA AND SUBJECTIVE MEASUREMENT

This section surveys and highlights potential problems with expert-coded measures. All replication data used in this article are available at Little and Meng (2023).

Defining Backsliding

Democratic backsliding and democratic erosion (which we use interchangeably) can be defined as “the state-led debilitation or elimination of any of the political institutions that sustain an existing democracy” (Bermeo 2016, 5). Other scholars argue that backsliding can occur in any country, regardless of regime type, and they define backsliding as “a deterioration of qualities associated with democratic governance, within any regime. In democratic regimes, it is a decline in the quality of democracy; in autocracies, it is a decline in democratic qualities of governance” (Waldner and Lust 2018, 95). Similarly, Lührmann and Lindberg (2019) contend that any country can “autocratize,” regardless of regime type.

There are three general categories of backsliding in the existing literature: shifts from (1) liberal democracy toward electoral democracy; (2) democracy to autocracy (i.e., “breakdown”); and (3) institutionalized autocracy to personalist autocracy. Although these three types of backsliding are all relatively different, the literature has not always made clear distinctions among them; as a result, the discussion often is muddied by conceptual confusion (Lueders and Lust 2018).

For simplicity and consistency with much existing scholarship, we include all countries in the sample for the main analysis and typically focus on average levels of variables. This captures positive gains toward democratization in addition to backsliding. Doing so also captures subtle shifts as well as more major regime changes—although the latter are weighted more heavily. The third section of this article discusses differential trends by regime type and analyzes the distribution of democratic change in different periods, which does not change our main findings.

Existing Data and Aggregate Trends

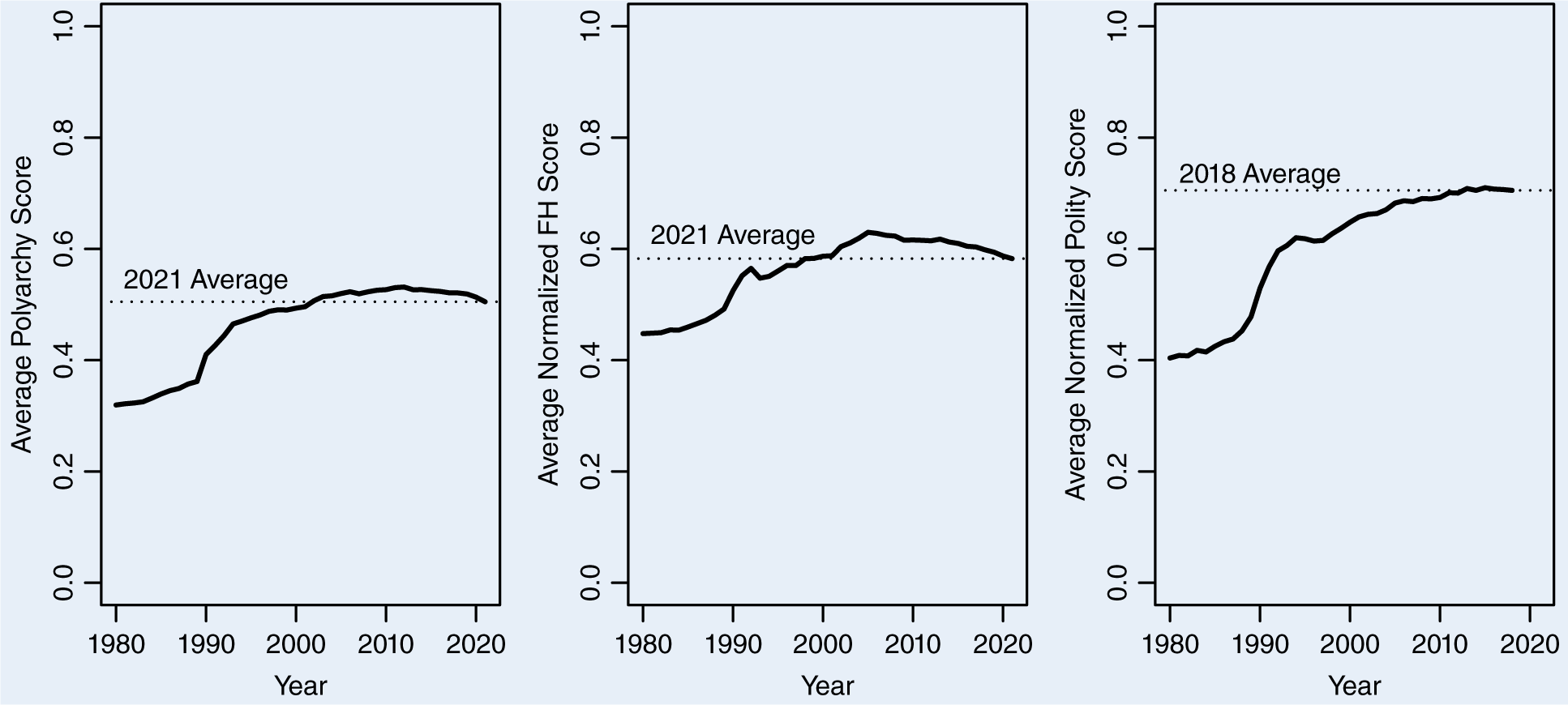

Scholars generally have used three existing datasets to examine backsliding: V-Dem, Freedom House, and Polity. Online appendix table A.1 summarizes these measures and their criteria. Figure 1 plots the average yearly democracy scores from 1980 onward.Footnote 1 We use the 1980–2021 time window for all of our graphs (subject to availability constraints) to keep the focus on relatively recent times while also providing a sense of the “baseline” before the third wave of democratization. Here and throughout most of the analysis, we use unweighted averages rather than weighting by population or another “importance” metric because using population weights is not the norm in political science. We discuss the implications of using population weights when summarizing the results.

Figure 1 Average Democracy Scores by Year

Following a dramatic increase in the 1990s and a modest increase in the 2000s, V-Dem and Freedom House democracy scores declined in the past decade. However, as Skaaning (2020, 1539, emphasis added) noted, “This drop is minuscule and clearly within the boundaries of the statistical confidence levels offered by V-Dem in order to account for potential measurement error.” There is no decline in Polity scores; however, the data stop in 2018 and we therefore focus less on this source moving forward.Footnote 2 Other scholars have pointed out that these trends appear weaker than the general narrative might suggest, either globally (Anderson, Brownlee, and Clarke 2021; Treisman 2022) or regionally (Arriola, Rakner, and van de Walle 2023).Footnote 3 In fact, Levitsky and Way (2015) noted that many studies raised concerns about democratic decline before there was any evidence in the data. Nevertheless, even studies that question the narrative of backsliding generally do not consider potential biases in the underlying data. Instead, disagreements about whether we are in a current period of global democratic decline have centered on differing emphasis and interpretations of the trends.

Potential Problems with Subjective Measures

To produce annual democracy scores, Freedom House meets with expert analysts to reach a consensus of country-level scores. V-Dem codings are done mostly via expert surveys. In both cases, considerable subjective judgment is required to produce democracy scores. The issues raised apply to any expert-coded data; however, this discussion focuses on V-Dem measures because they are admirably transparent about the coding decisions that produce the scores, release coder-level data, and use a combination of subjective and objective variables.Footnote 4

An objective variable is based in fact rather than a combination of opinion and fact. A useful “litmus test” is whether multiple qualified experts with access to the needed information would reach the same conclusion (Cheibub, Gandhi, and Vreeland 2010). A clear example is the vote share of the winner of a presidential election. An objective indicator from V-Dem is the variable “percentage of the population with suffrage,” which asks: “What share of adult citizens as defined by statute has the legal right to vote in national elections?” These variables can be constructed on the basis of clear observational criteria and require little if any judgment from coders.

In contrast, a more subjective question that feeds into V-Dem’s polyarchy index is the “election free and fair” variable, which asks experts: “Taking all aspects of the pre-elections period, election day, and the post-election process into account, would you consider this national election to be free and fair?” Experts are given five possible responses ranging from “0: No, not at all. The elections were fundamentally flawed and the official results had little if anything to do with the ‘will of the people’” to “4: Yes. There was some amount of human error and logistical restrictions but these were largely unintentional and without significant consequences [emphasis added].” Even well-informed coders can disagree widely about the definitions of “fundamentally flawed” and “largely unintentional” (Weidmann 2022). In fact, V-Dem coders disagree with non-trivial frequency. There typically are between five and 10 coders for each observation. As described in online appendix section A.2, disagreement is relatively similar across variables: the average standard deviation across coders who are asked the same question usually ranges from 20% to 25% of the scale. Most variables are on a 0-to-3- or 0-to-4-point scale, which corresponds to disagreeing with the average by almost 1 point.Footnote 5
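As a rough check on the scale arithmetic in the preceding paragraph, the following sketch converts a standard deviation expressed as a share of the scale into scale points. The function name and the choice to treat the scale width as the maximum minus the minimum are assumptions made for illustration; they are not V-Dem's procedure.

```python
def sd_in_points(sd_share: float, scale_min: int, scale_max: int) -> float:
    """Convert a standard deviation given as a share of the scale into scale points."""
    width = scale_max - scale_min  # assumption: scale width is max minus min
    return sd_share * width


# Print the implied disagreement for both common scales and both ends of the range.
for scale_max in (3, 4):
    for share in (0.20, 0.25):
        points = sd_in_points(share, 0, scale_max)
        print(f"0-to-{scale_max} scale, SD at {share:.0%} of scale: {points:.2f} points")
```

Under this assumption, the "almost 1 point" figure corresponds most closely to the 0-to-4 scale; on a 0-to-3 scale the same shares imply somewhat smaller disagreement.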

V-Dem uses a measurement model to correct for some of the problems associated with coders using different scales (Pemstein et al. 2018). Intuitively, this model allows for expert coders to be “harsher” or “more lenient.” This method is valuable for ensuring that countries do not seem more or less democratic by getting an unusual draw of coders, and it increases the precision of the estimates for individual observations. However, the model assumes that the coders’ standards and biases are constant over time.Footnote 6
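The role of the constant-standards assumption can be illustrated with a toy example. The sketch below is not V-Dem's measurement model: it is a deliberately simple stand-in in which each hypothetical coder has a fixed offset, all coders share a hypothetical downward drift in standards after 2010, and the "correction" only removes each coder's average offset. All names and numbers are invented for illustration.

```python
from statistics import mean

YEARS = list(range(2000, 2021))
LATENT = 0.6  # hypothetical true democracy level, held constant over time
OFFSETS = {"harsh": -0.10, "neutral": 0.0, "lenient": 0.10}  # hypothetical fixed coder offsets


def drift(year: int) -> float:
    """Hypothetical shared shift in coder standards: none through 2010, then -0.01 per year."""
    return -0.01 * max(0, year - 2010)


# Observed rating = latent level + coder's fixed offset + shared time-varying drift.
ratings = {
    coder: {year: LATENT + offset + drift(year) for year in YEARS}
    for coder, offset in OFFSETS.items()
}

# Remove each coder's constant offset by centering that coder on the grand mean.
grand_mean = mean([r for by_year in ratings.values() for r in by_year.values()])
corrected = {
    coder: {year: r - (mean(list(by_year.values())) - grand_mean) for year, r in by_year.items()}
    for coder, by_year in ratings.items()
}


def yearly_mean(table: dict, year: int) -> float:
    """Average rating across coders in one year."""
    return mean([table[coder][year] for coder in table])


# The corrected series still falls after 2010 even though LATENT never changes.
apparent_decline = yearly_mean(corrected, 2010) - yearly_mean(corrected, 2020)
print(round(apparent_decline, 3))
```

In this toy setup the correction makes the three coders agree with one another, yet the corrected average still declines after 2010, because a drift shared by all coders cannot be distinguished from a real change when standards are assumed to be fixed.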

There are valid reasons to question this assumption. Increased attention on the topic of backsliding raises significant concerns that coder perceptions of democracy may have shifted recently. There has been a substantial increase in reporting on backsliding in recent years (Gottlieb et al. 2022), and this attention can paint a misleading picture for observers. Current news articles often cite countries on the extreme end of the backsliding spectrum (e.g., Hungary and Russia) in their discussions of global democracy. Readers may begin to believe that these outlier countries represent the norm rather than the exception (Brownlee and Miao 2022). We discuss these ideas in more detail after completing the description of empirical patterns.

THE SEARCH FOR MORE OBJECTIVE MEASURES

The problems raised in the previous section motivate our search for more objective measures. We first look at de facto election outcomes, asking if incumbent leaders and their parties are dominating elections. We next examine indicators of whether the electoral process allows for competition in practice—for example, by allowing multiple parties. Moving beyond elections, we examine trends in executive constraints and attacks on the press.

Our data on elections and freedom of association are from the National Elections Across Democracy and Autocracy (NELDA) dataset, which contains several objective variables about all elections from 1945 to 2020 (Hyde and Marinov 2012). Data on parties, elections, and legislatures are from The World Bank’s Database of Political Institutions (Cruz, Keefer, and Scartascini 2021). The only original data we present are about executive constraints, which we collected by expanding the data from Meng (2020) on de jure rules that limit executive power. Data on term-limit evasions are from Versteeg et al. (2020). Finally, for freedom of expression, we use data from the New York–based nonprofit organization Committee to Protect Journalists.

For our primary analyses of these individual datasets, we use the full global sample of countries that they included. We continue to use the 1980–2021 time window for all of our graphs, subject to data availability.Footnote 7 Additional details on the data used in this section are included in online appendix section B.

It is important to mention that most of our objective measures focus on electoral institutions and outcomes and do not include certain aspects of democracy, such as civil liberties. The implications of what we can and cannot capture with these objective measures are discussed in the fourth section.

Are Incumbents Losing?

Democracy is a multidimensional concept, but perhaps the most important feature is that “[incumbent] parties lose elections” (Przeworski 1991, 10). Although the rate of incumbents losing reflects other factors as well (e.g., how successfully they govern), it provides a simple measure of this key feature of democracy. Despite a few cases in which it is difficult to code whether the winner of an election should be considered the candidate of the ruling party, the construction of this variable requires little judgment; therefore, we classify turnover as an objective indicator.

In addition to the theoretical importance of turnover, many of the undemocratic actions taken by leaders—banning opposition parties, controlling the media, and placing loyalists in control of election-management bodies—aim to influence the probability of winning elections. As a result, comparing average rates of turnover across periods provides crucial information about whether leaders are insulating themselves successfully in power.

Indeed, rates of incumbent loss have changed dramatically over the long term, from virtually never in the early-nineteenth century to about a third of the time in the 2000s (Przeworski 2015). Do incumbents continue to lose elections at similar rates?

Using data from NELDA, we start by presenting trends in whether the incumbent’s party lost the election in question. To smooth out volatility driven by the fact that the set of countries holding elections in a given year changes, we pull the data for each country-year from the most recent election in NELDA if it happened within the past six years and then take averages of this variable.
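The carry-forward step described above can be sketched as follows. This is a minimal illustration and not the replication code: the data layout, the function names, the hypothetical countries, and the choice to treat the six-year window as inclusive of both endpoints are all assumptions.

```python
from statistics import mean


def carry_forward(elections, year, window=6):
    """Outcome of the most recent election held in [year - window, year], or None.

    elections: list of (election_year, incumbent_lost) pairs for one country,
    where incumbent_lost is 1 if the incumbent party lost and 0 otherwise.
    """
    eligible = [(y, lost) for y, lost in elections if year - window <= y <= year]
    if not eligible:
        return None  # no recent election, so the country-year is left out of the average
    return max(eligible, key=lambda pair: pair[0])[1]


def yearly_average(data, year, window=6):
    """Average the carried-forward outcome across countries with a recent election."""
    values = [carry_forward(elections, year, window) for elections in data.values()]
    values = [v for v in values if v is not None]
    return mean(values) if values else None


# Hypothetical countries and outcomes, for illustration only.
data = {
    "A": [(2010, 1), (2014, 0)],
    "B": [(2005, 1)],
    "C": [(2012, 1)],
}
print(yearly_average(data, 2015))
print(yearly_average(data, 2011))
```

Carrying the last outcome forward means that each country contributes to every year in which it has a recent election, so the yearly average does not swing simply because a different set of countries happened to vote that year.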

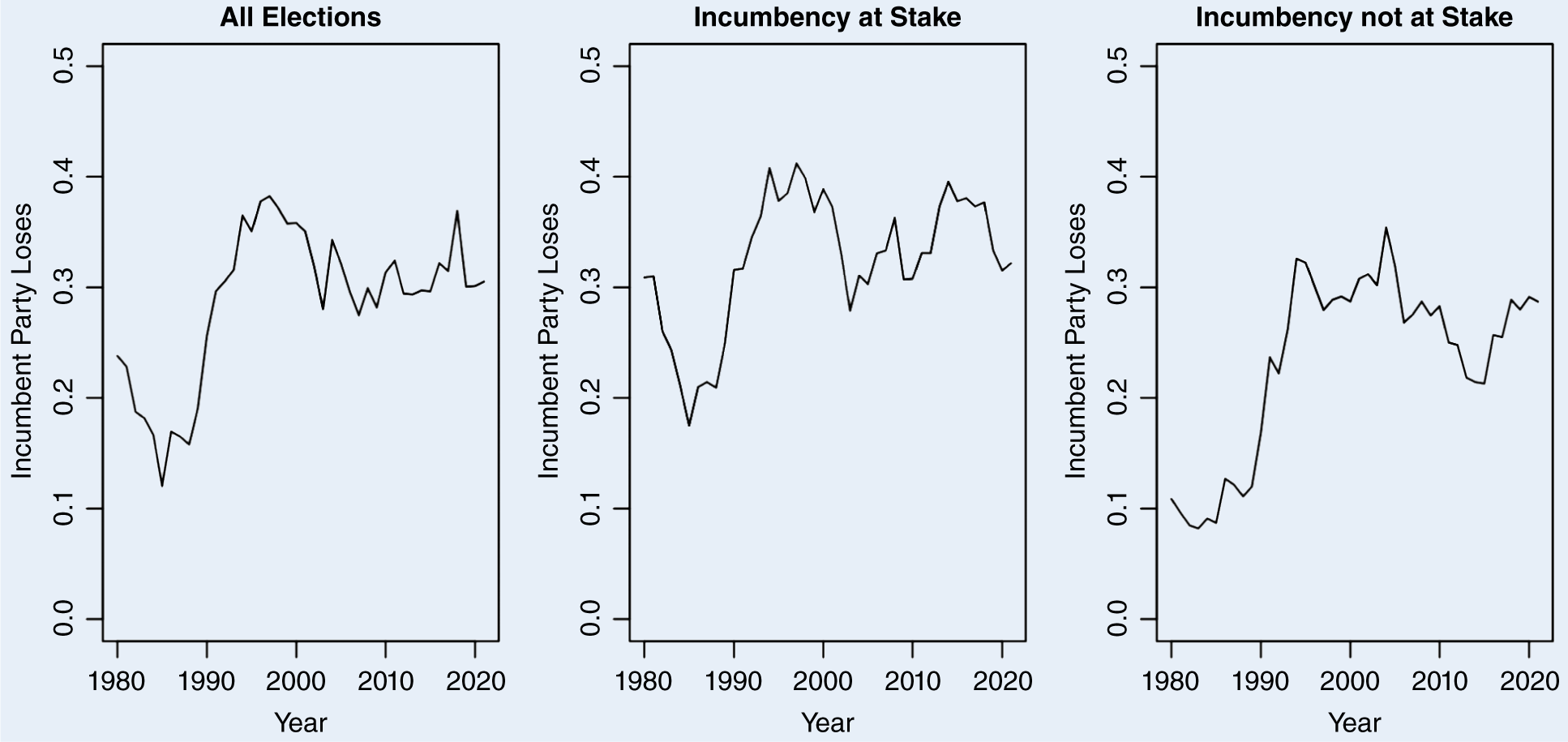

The left panel of figure 2 plots the yearly averages for “incumbent party loss in previous election” from 1980 to 2020. Although the trend is somewhat noisy, there was a general increase from 1980 to 2000 and then no obvious pattern after that. This graph encompasses all legislative and presidential elections, which include some in which the incumbent party risked losing control of the executive and others that did not (e.g., a legislative election in a presidential system, such as midterm elections in the United States). The same broad pattern holds when the data are subsetted into elections in which the office of the incumbent leader was contested (middle panel) or not contested (right panel).

Figure 2 Proportion of Elections in Which the Incumbent Party Loses

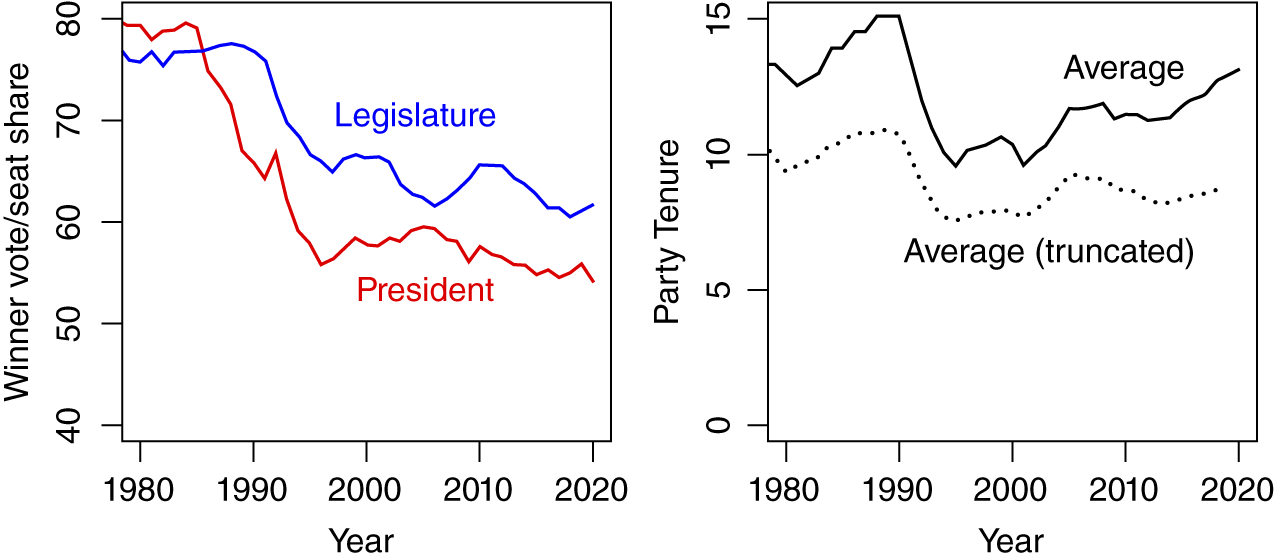

Next we present data from the Database of Political Institutions (DPI) to assess when the winning leaders and parties—whether incumbent or challenger—were dominating elections. The left panel of figure 3 plots the yearly average winner vote share in presidential elections (shown in red) and the yearly average seat share of the winning party in the legislature (shown in blue). Both of these measures generally declined during the entire period, which indicates that, if anything, winners were less dominant in recent elections.

Figure 3 Additional Measures of Winning/Incumbent Party Dominance

The right panel of figure 3 plots how long the current incumbent party has been in power. Although the average of this variable has been increasing, this change is driven by long-lived autocratic ruling parties.Footnote 8 The dotted line plots the average of a truncated sample for which the length of time in power was capped at 20 years. When excluding outliers (i.e., the dotted line), this variable remains relatively constant.
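To see why a few long-lived ruling parties can drive the untruncated average, consider the sketch below. It assumes that truncation means capping each value at 20 years rather than dropping observations, and the tenure values are hypothetical.

```python
from statistics import mean

CAP = 20  # years; the cap used for the truncated series


def capped_mean(years_in_power, cap=CAP):
    """Average time in power after capping each observation at `cap` years."""
    return mean([min(years, cap) for years in years_in_power])


# Hypothetical tenures: three short-lived ruling parties and one long-lived one.
tenures = [2, 5, 8, 70]
print(mean(tenures))         # raw average, pulled up by the single long tenure
print(capped_mean(tenures))  # capped average
```

Each additional year in office for the long-lived party raises the raw average but leaves the capped average unchanged once the cap is reached.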

Further Measures of Competition

We next present additional variables from the DPI that reflect the competitiveness of elections. The left panel of figure 4 plots the share of countries in which the executive controls all houses that have lawmaking powers. This variable slightly increased to about 55% around 2010 but has not returned to the pre-1990s levels when executives controlled all branches of lawmaking in more than 70% of countries. This may suggest backsliding, although alternating between divided and unified government can be a routine part of democratic politics in presidential systems.

Figure 4 Are Elections Competitive?

The right panel of figure 4 plots executive and legislative competitiveness indices from the DPI, which assigns higher numbers for elections in which multiple parties compete and win. There is a clear upward trend for both the executive and legislative indices, which indicates an increase in the competitiveness of elections.

NELDA also has relatively objective variables that capture whether opposition parties are prevented from running, whether voters have a choice on the ballot, and other similar measures. We use these NELDA variables to construct two indices that reflect the extent to which elections are free and fair.

The first index reflects levels of de facto and de jure multipartyism. It includes the following measures: whether (1) opposition parties were allowed to compete in the election and no parties were banned, (2) multiple parties were technically legal, and (3) voters had a choice on the ballot. We average these variables to create a “multiparty” index with four levels ranging from 0 to 1.

The second index captures various other electoral-process violations and includes the following variables: (1) were previous elections suspended, (2) had the current incumbent violated a term limit, and (3) did an opposition party boycott the election. In each case, we coded 1 for answering “no” and 0 otherwise; averaging the three variables gives a “(lack of) process violation” index with four levels ranging from 0 to 1.
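The construction of both indices can be sketched as an average of three binary indicators, which can take only the values 0, 1/3, 2/3, and 1. The function and argument names below are hypothetical and do not correspond to NELDA variable names.

```python
from fractions import Fraction
from itertools import product


def average_index(indicators):
    """Average of binary (0/1) indicators, kept exact as a fraction."""
    return Fraction(sum(indicators), len(indicators))


def process_index(suspended, term_limit_violated, boycott):
    """Each component is coded 1 when the answer is 'no' (no violation) and 0 otherwise."""
    return average_index(
        [int(not suspended), int(not term_limit_violated), int(not boycott)]
    )


# Enumerate every combination of three binary indicators and collect the distinct averages.
levels = sorted({average_index(combo) for combo in product((0, 1), repeat=3)})
print([str(level) for level in levels])

# Example: no suspension, no term-limit violation, but an opposition boycott.
print(process_index(suspended=False, term_limit_violated=False, boycott=True))
```

Exact fractions are used here only to make the distinct levels easy to read; a floating-point average would serve equally well for plotting.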

The left panel of figure 5 plots the average of the multiparty index from 1980 to 2020, again pulling data from the previous election. The average of this index increased from around 0.7 to 0.95 in the 1990s and was relatively stable after that, which indicates that most elections meet a bare minimum standard of competition. The right panel shows that process violations also were rare, and the index was stable for the past 20 years.

Figure 5 Average of the Multiparty Index (Left) and Process-Violations Index (Right)

Although neither of these indices captures more subtle violations of fair elections, they do reveal that major violations are not becoming more common.

Finally, one way that backsliding may show up in these data would be a decrease in the number of executive and legislative elections that are held. This does not seem to be the case; as shown in online appendix figure 16, the number of elections remained fairly constant during the past two decades.

In summary, incumbent leaders have been equally if not less dominant in recent elections, which is precisely the opposite of what we would expect to see in a period of global democratic decline.

Executive Constraints

Another important dimension of democracy is whether there are constraints on the chief executive. In a frequently cited article, Bermeo (2016, 10) pointed to executive aggrandizement as a “common form of backsliding [that] occurs when elected executives weaken checks on executive power.” Have leaders increasingly dismantled institutions that check their power? To assess this question, we use expanded data from Meng’s (2020) study, which provides the proportion of countries that have constitutional rules designating (1) term limits, (2) succession procedures, and (3) rules for dismissing the leader.

The left panel of figure 6 plots the trends in the existence of term limits (shown in black), rules for dismissal (shown in green), and succession procedures (shown in red), all of which revealed a marked increase after the end of the Cold War. In recent decades, term limits have been flat whereas rules for dismissal and succession have continued in a slightly upward trend.

Figure 6 Trends in Executive Constraints

Even with term limits on record, leaders may attempt to circumvent these laws to stay in power. To explore this possibility, we use data from Versteeg et al. (2020), who recorded all instances in which an incumbent attempted to circumvent existing term limits, including attempts that did not involve formal changes on paper (e.g., having the court reinterpret the constitution). The dataset identifies 234 instances from 2000 to 2018 in which an incumbent reached the end of a term and was ineligible for reelection due to existing term limits. A serious attempt to evade term limits occurred in 60 cases (26% of all observations), and all of these attempts originated in authoritarian or hybrid regimes. Of the 60 attempts, 34 succeeded. The data show that rates of term-limit evasion, both successful and unsuccessful, do not exhibit a clear trend, as illustrated in the right panel of figure 6.
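The shares implied by these counts can be verified with simple arithmetic. In the sketch below, only the three counts come from the text; the success rates are derived here for illustration and are not figures reported by the source.

```python
def share(part: int, whole: int) -> float:
    """Percentage of `whole` accounted for by `part`."""
    return 100 * part / whole


ELIGIBLE = 234   # incumbents who reached a term limit, 2000 to 2018
ATTEMPTS = 60    # serious attempts to evade the limit
SUCCESSES = 34   # attempts that succeeded

print(f"Attempts per eligible incumbent:  {share(ATTEMPTS, ELIGIBLE):.1f}%")
print(f"Successes per attempt:            {share(SUCCESSES, ATTEMPTS):.1f}%")
print(f"Successes per eligible incumbent: {share(SUCCESSES, ELIGIBLE):.1f}%")
```

The first figure rounds to the 26% stated above; by the same arithmetic, somewhat more than half of the attempts succeeded, which amounts to roughly one in seven of all eligible incumbents.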

Attacks on the Press

Freedom of expression is an important aspect of democracy that is particularly difficult to measure. Efforts to influence the media often are hidden, and even measuring the bias of a particular outlet relative to some objective standard proves challenging.

Nevertheless, an important type of outcome that plausibly can be objectively measured is punitive actions toward journalists. In particular, the Committee to Protect Journalists maintains a database of all journalists who were killed or jailed as a result of doing their job since 1992. Each observation gives the date of being jailed or killed, the country where the event occurred, and a categorical classification of the reason for the event.Footnote 9 The database is updated continuously as more information is collected. This could create bias if more recent events are easier to observe; if so, this would create the impression that the situation for journalists is becoming worse.

Figure 7 plots the trends in these data from 1992 to 2021. Each panel contains the raw data shown in gray and a smoothed trend shown in black. The left panel includes all journalists who were jailed for doing their job; the patterns are similar when cases are restricted to those in which the reason was explicitly listed as “anti-state.” This is the first indicator of a clear “bad” trajectory during the past decade, although it is the continuation of a trend that started around 2000. However, the trend is significantly different for journalists who were murdered (right panel).Footnote 10 After a notable increase in the late 1990s and early 2000s, this number has decreased steadily since around 2008.

Figure 7 Trends in Journalists Jailed (Left) and Murdered (Right)

There are several ways to interpret these findings. It may be that the more authoritarian states with control over the judiciary can use jailing as a tool to silence dissent, whereas leaders of semi-democratic countries must resort to more extreme measures. It also may reflect the softening of tactics used by dictators to maintain power (Guriev and Treisman 2020), which arguably could be interpreted as becoming less autocratic. We encourage future research to explore what is driving this pattern.

Taking Stock

To summarize the objective indicators and compare trends among different sets of countries, we construct a simple aggregate objective index by normalizing all individual variables between 0 and 1 when we can do so and taking the average for each country-year. Specifically, we take the average of percentage of the population with suffrage from V-Dem; presidential vote shares, winning-party seat shares, incumbent-party time in office (truncated at 20 years), legislative competitiveness, and executive competitiveness from the DPI; whether the incumbent party lost the last election, the multiparty index, and the process-violations index from NELDA; and the presence of term limits, succession rules, and dismissal rules.Footnote 11
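The aggregation step can be sketched as follows. This is a minimal illustration under stated assumptions, not the replication code: it assumes min-max scaling to the unit interval, assumes that indicators for which higher values suggest less competition (e.g., winner vote share) are reversed before averaging, and assumes that missing indicators are skipped. The data are hypothetical.

```python
from statistics import mean


def normalize(column, reverse=False):
    """Min-max scale a column to [0, 1], leaving missing values (None) in place."""
    observed = [v for v in column if v is not None]
    low, high = min(observed), max(observed)
    span = high - low
    scaled = []
    for value in column:
        if value is None:
            scaled.append(None)
        elif span == 0:
            scaled.append(0.0)  # constant column carries no information
        else:
            x = (value - low) / span
            scaled.append(1 - x if reverse else x)
    return scaled


def aggregate_index(columns):
    """Average the available normalized indicators for each country-year."""
    index = []
    for row in zip(*columns):
        present = [v for v in row if v is not None]
        index.append(mean(present) if present else None)
    return index


# Hypothetical data for three country-years.
multiparty = [0.0, 1.0, 1.0]    # already on a 0-to-1 scale
vote_share = [90, 50, 70]       # winner vote share in percent; higher means more dominant
term_limits = [0, 1, None]      # 1 if term limits exist; missing in the third country-year

columns = [
    normalize(multiparty),
    normalize(vote_share, reverse=True),
    normalize(term_limits),
]
print(aggregate_index(columns))
```

Averaging only the available indicators keeps country-years with partial coverage in the sample, at the cost of making the index for those observations depend on fewer components.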

To be clear, this simple index is meant only to summarize the aggregate trends described previously; it is not an appropriate substitute for existing democracy indices on a country-year level. Because the input variables are measured on different scales, the magnitude of the index does not have a clear substantive interpretation. Nevertheless, changes in the average index can provide a general sense of how indicators related to the quality of democracy have changed over time. For an approach that attempts to approximate democracy scores using only objective indicators, see Weitzel et al. (2023).
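The construction described above (rescale each indicator to the 0 to 1 range, then average whatever is available for each country-year) can be sketched as follows. This is a minimal illustration under stated assumptions, not the replication code: the function names, the toy rows, the `reversed_indicators` argument (flipping indicators for which higher raw values are assumed to mean less competition), and the handling of missing values are all hypothetical. Only the 20-year cap on incumbent-party time in office comes from the text.

```python
from statistics import mean


def min_max(values):
    """Rescale numbers to [0, 1]; None marks a missing observation."""
    observed = [v for v in values if v is not None]
    if not observed:
        return list(values)
    lo, hi = min(observed), max(observed)
    span = hi - lo
    return [None if v is None else ((v - lo) / span if span else 0.0)
            for v in values]


def aggregate_index(rows, indicators, reversed_indicators=(), caps=None):
    """Average the normalized indicators available for each country-year."""
    caps = caps or {}
    scaled = {}
    for name in indicators:
        raw = [row.get(name) for row in rows]
        if name in caps:  # e.g. truncate time in office at 20 years
            raw = [None if v is None else min(v, caps[name]) for v in raw]
        column = min_max(raw)
        if name in reversed_indicators:  # assumed direction of coding
            column = [None if v is None else 1.0 - v for v in column]
        scaled[name] = column
    result = {}
    for i, row in enumerate(rows):
        available = [scaled[n][i] for n in indicators
                     if scaled[n][i] is not None]
        key = (row["country"], row["year"])
        result[key] = mean(available) if available else None
    return result


# Toy data (hypothetical values, three indicators only).
toy_rows = [
    {"country": "A", "year": 2000, "suffrage": 100,
     "incumbent_years": 30, "incumbent_lost": 0},
    {"country": "B", "year": 2000, "suffrage": 50,
     "incumbent_years": 4, "incumbent_lost": 1},
    {"country": "C", "year": 2000, "suffrage": 100,
     "incumbent_years": 12, "incumbent_lost": None},
]

toy_index = aggregate_index(
    toy_rows,
    indicators=["suffrage", "incumbent_years", "incumbent_lost"],
    reversed_indicators={"incumbent_years"},
    caps={"incumbent_years": 20},
)
```

In this sketch a country-year with a missing indicator is averaged over the remaining ones, which is one possible reading of normalizing variables "when we can do so"; other treatments of missing data would change the resulting values.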

The left panel of figure 8 plots the trends of this aggregate objective index over the past 40 years in a thick line. For comparison, the thin black line plots the average V-Dem polyarchy index score and the thin gray line plots the Freedom House democracy score. For this graph, only country-years that appear in both V-Dem and Freedom House were included. The objective index is higher than either, although this simply reflects the fact that many of the components have a “low bar” (e.g., multiparty elections) and therefore has no substantive meaning. More important is the change over time, the main change for all three indices being a substantial increase at the end of the Cold War. Since 2000, trends have been somewhat different: whereas the indices based on expert coding modestly declined, the objective index generally continued to increase, albeit at a slower pace. The objective index in 2020 was almost as high as it has ever been.

Figure 8 Unweighted and Weighted Average Indices

So far, all of our analysis has given equal weight to all countries that have data on the relevant indicator. However, some scholars have argued that weighting by population is better for capturing the experience of the average citizen rather than the average country (see, e.g., Alizada et al. 2022). The middle panel of figure 8 presents the same trends weighted by population. As discussed in the respective publications, this generates a more pessimistic trend in the V-Dem polyarchy index and Freedom House scores during the past decade. For our objective index, population weighting also generates a notable decrease beginning around 2018 but a more volatile trend throughout as well. This is driven by the fact that individual country trends can move sharply based on the outcome of a particular election or another shift on one of our underlying indicators. The right panel of figure 8 illustrates that the trend is much smoother when the two most populous countries, India and China (which have had noisy trends in recent years), are omitted. This somewhat attenuates the decrease for Freedom House and V-Dem’s polyarchy index scores and leads to a similar trend as the unweighted graph for the objective index. Overall, there is evidence that trends in recent years appear worse when weighting by population, but this conclusion is highly sensitive to the coding of the largest countries.
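The difference between the three panels can be illustrated with a small sketch that computes an unweighted mean, a population-weighted mean, and a weighted mean that omits the largest countries. The country labels, scores, and populations below are hypothetical toy values, and the function names are ours; the sketch only shows why a single very large country can dominate a weighted average.

```python
def unweighted_mean(scores):
    """Average country: every country counts equally."""
    return sum(scores.values()) / len(scores)


def population_weighted_mean(scores, population, exclude=()):
    """Average citizen: each country counts in proportion to its population."""
    kept = [c for c in scores if c not in exclude]
    total_population = sum(population[c] for c in kept)
    return sum(scores[c] * population[c] for c in kept) / total_population


# Hypothetical scores on a 0-1 index and populations in millions.
toy_scores = {"Large": 0.2, "MidA": 0.8, "MidB": 0.6}
toy_population = {"Large": 1000, "MidA": 50, "MidB": 50}

by_country = unweighted_mean(toy_scores)
by_citizen = population_weighted_mean(toy_scores, toy_population)
without_largest = population_weighted_mean(
    toy_scores, toy_population, exclude={"Large"}
)
```

With these toy numbers the weighted average sits close to the score of the single large country, so any recoding of that country moves the weighted series far more than the unweighted one.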

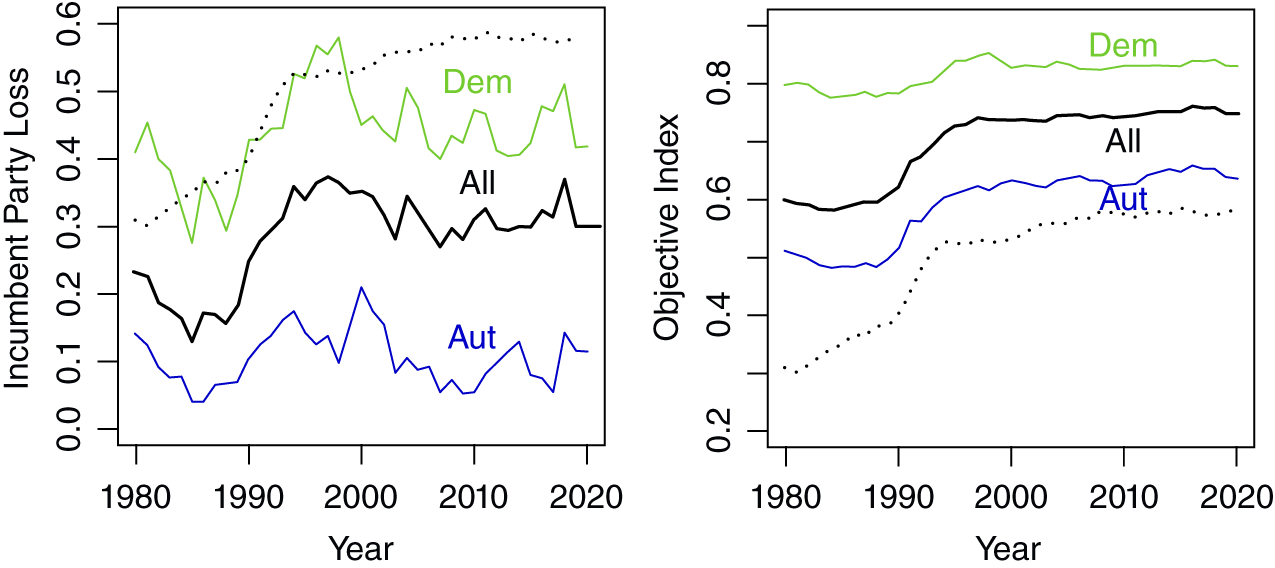

Distributions of Change

By looking at all countries in the aggregate, improvements in autocracies may be “canceling out” decreases in democratic scores of democracies. We address this concern in two ways. First, we examine differences in regime type by separating our sample of countries into democracies and autocracies, as classified by Boix, Miller, and Rosato (2013; see footnote 12). The left panel of figure 9 shows the trend in incumbent party loss by regime type. The thick black line represents all countries (see figure 2), the green line represents all democracies, and the blue line represents all autocracies. The dotted line corresponds to the proportion of countries coded as democratic. Unsurprisingly, incumbent party loss was more frequent in countries coded as democratic. After a spike in incumbent party loss (particularly among democracies) in the 1990s, the rate has been relatively flat in both subgroups for the past 15 years.

Figure 9 Subsetting by Regime Type

The right panel of figure 9 plots the trend for the objective index. The overall trends are similar; the increase in the 1990s was driven by more countries being classified as democracies as well as the increasing average score of nondemocracies. The average of the objective index was relatively flat for both subgroups for the past 20 years. (Online appendix section A.7 includes similar graphs for expert-coded democracy scores. If anything, their decline is driven more by autocracies becoming even less democratic.)

Second, figure 10 shows the distribution of country-level changes for the objective index (top row), V-Dem polyarchy index (middle row), and Freedom House (bottom row). We focus on comparing five-year averages across decades (see footnote 13). For example, the top-left facet plots the distribution of the change in the average objective index for 1981–1985 to the average for 1986–1990, and the second-from-left facet plots the change from 1986–1990 to 1991–1995 (see footnote 14).
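The comparison of period averages can be sketched as below. This is an illustrative reading of the procedure, assuming each country has a mapping from year to index score and that countries lacking data in either period are dropped; the function names and toy panel are hypothetical.

```python
from statistics import mean


def period_average(series, start, end):
    """Mean of a {year: score} series over start..end inclusive."""
    values = [series[y] for y in range(start, end + 1) if y in series]
    return mean(values) if values else None


def period_changes(panel, earlier, later):
    """Change in each country's period average from `earlier` to `later`."""
    changes = {}
    for country, series in panel.items():
        before = period_average(series, *earlier)
        after = period_average(series, *later)
        if before is not None and after is not None:
            changes[country] = after - before
    return changes


# Toy panel: X improves, Y declines, Z has no data in the earlier period.
toy_panel = {
    "X": {**{y: 0.4 for y in range(1981, 1986)},
          **{y: 0.5 for y in range(1986, 1991)}},
    "Y": {**{y: 0.7 for y in range(1981, 1986)},
          **{y: 0.6 for y in range(1986, 1991)}},
    "Z": {y: 0.5 for y in range(1986, 1991)},
}

toy_changes = period_changes(toy_panel, (1981, 1985), (1986, 1990))
```

Plotting a histogram of the values in `toy_changes` for each pair of periods would give one facet of a figure of this kind; a bimodal histogram would indicate offsetting groups of backsliders and democratizers.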

Figure 10 Distribution of Changes Over Five-Year Periods Across Decades

If it were the case that more backsliders were being offset by more democratizers, we might see a bimodal distribution—or at least more changes farther from zero in the facets corresponding to the past two decades. In fact, we see the opposite: there generally are fewer countries experiencing major changes in the recent periods in either direction across all three indices. (See figure 8 in online appendix section A.8 for a plot of the mean and standard deviation of these changes over time.) Although it is true that in recent years some countries have become less democratic and others have become more democratic, this has always been true. As shown in figure 10, for all of these periods and indices, there are countries moving in both directions, and recent years do not exhibit more volatility.

MAKING SENSE OF THE DATA

So far, our study demonstrates that two different empirical patterns emerged, depending on what type of democracy indicator we examined. Subjective indicators raise concerns that aggregate levels of democracy exhibited a modest decline during the past decade. Objective indicators were largely stable. How can we reconcile these differing accounts? Online appendix section C presents two theoretical models that make sense of the patterns we document.

Time-Varying Coder Bias

First, we develop a non-strategic model of coders. This model shows that if all coders receive a common shock to the information that they use to make decisions (e.g., increased media reporting on backsliding), this can create a time-varying bias that complicates inferences about true democratic change. In particular, changes in aggregate democracy scores are equal to the real change plus the change in this common shock. Intuitively, a change in coder bias could explain why expert-coded measures of democracy have shown a global trend of backsliding whereas objective measures do not. Why might this common bias have changed over time? The formalization raises several possibilities, two of which are highlighted here.

First, it may be that improved communication technology (e.g., increasing Internet and cell-phone penetration and social media) has made it easier to detect and broadcast undemocratic behavior. If so, even for a fixed level of real undemocratic behavior, experts may see more coverage (see footnote 15). Rising standards and heightened attention in recent years also may lead coders to consider the same behavior to be a more serious assault on democracy.

If coders properly adjust for these biases, they will not necessarily create problems. Unfortunately, fully adjusting for biases is a challenge even for extremely well-informed and sophisticated thinkers. There is strong evidence across a variety of topics and subject pools that people make substantial errors when faced with selection problems in the samples that they observe (see Enke 2020 for a prominent recent example and Brundage, Little, and You 2022 for a review).

Second, if the experts doing the coding have “motivated beliefs” to think that the country-year they are coding is relatively democratic or undemocratic, this can skew individual and aggregate coding. If the coders’ motives change over time, the bias that this creates changes over time as well. One concrete way that this may play out also relates to the rise of a media narrative that the world is becoming less democratic, particularly concerns about democratic backsliding in the United States. Without joining the debate about whether the United States became substantially less democratic during the Trump presidency, there was (and is) considerable attention to this possibility (Carey et al. 2020). These concerns close to home may have made experts (many of whom are based in the United States) “want to see” ways in which the countries that they are coding fit into a global narrative of backsliding or what they are seeing close to home. This possibility is especially troubling if the types of country experts willing to take the time to work as coders include those most concerned about backsliding.

Although the objective indicators are much less likely to be affected by these types of coder bias, they may be biased in other ways. Nevertheless, for the purposes of our study, this generates problems only if this bias has changed over time. For instance, electoral performance can be influenced by factors other than how free and fair the elections are. Incumbents may perform well in an election because they did a good job in office and voters recognize this. It is possible that incumbent leaders have done a worse job in office in recent years (for example, producing worse economic outcomes, being more corrupt, or choosing unpopular policies) but took more anti-democratic actions to insulate themselves at the ballot box. We are not aware of any evidence about this effect, but we encourage more research on this idea.
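The core logic of the non-strategic coder model, that a measured change equals the real change plus the change in a common shock, can be illustrated with a toy calculation. The additive form below is our simplifying assumption for illustration only; the formal model is in online appendix section C, and all names and numbers here are hypothetical.

```python
from statistics import mean


def aggregate_score(true_levels, common_shock, coder_offsets):
    """Average coded score when each coder reports
    true level + common shock + own idiosyncratic offset (assumed additive)."""
    coded = [level + common_shock + offset
             for level in true_levels
             for offset in coder_offsets]
    return mean(coded)


# True democracy levels are held fixed across both periods.
true_levels = [0.3, 0.6, 0.9]
# Idiosyncratic coder offsets average to zero, so they cancel in the mean.
coder_offsets = [-0.05, 0.0, 0.05]

score_before = aggregate_score(true_levels, 0.00, coder_offsets)
# A common shock (e.g., more coverage of backsliding) shifts every coder.
score_after = aggregate_score(true_levels, -0.04, coder_offsets)

real_change = 0.0
measured_change = score_after - score_before
```

Here the aggregate score falls by the size of the common shock even though no country's true level changed, whereas the idiosyncratic offsets wash out. Averaging over more coders therefore does not remove this kind of bias.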

Strategic Attacks on Democracy

Second, we consider a strategic model of a leader who decides whether to engage in “subtle” or “blatant” undemocratic actions. We consider changes that may have caused leaders to substitute subtle violations of democracy for blatant ones, such as an increased penalty for being seen as undemocratic or leaders becoming “better” at subtle violations. Either change could explain why subjective indicators, which arguably can better detect subtle violations, have declined in relative terms.

Although increased penalties for observed undemocratic behavior may cause incumbents to switch from blatant to subtle actions, they also should result in fewer violations overall, precisely because such actions become more costly. There also is scant evidence of increasing penalties; if anything, Hyde (2020) suggests that international promotion of democracy has waned in recent years.

Furthermore, if leaders are becoming better at subtle democratic violations, they should be winning elections with larger margins and at higher rates, which is inconsistent with previously presented evidence. Moreover, in many cases, improved communication technology and media attention to backsliding should make it more difficult for subtle democratic violations to go unnoticed.

In summary, it certainly is plausible that the nature of attacks on democracy has changed in some ways. For example, Svolik (2019) finds that the share of democratic breakdowns driven by executive takeovers rather than military coups increased from approximately 50% in the 1970s to approximately 90% more recently. However, a shift in backsliding strategies does not mean that there is more backsliding overall. Theories on the changing nature of anti-democratic actions should account for the fact that successful attacks on democracy, as measured by leaders insulating themselves in power, have not increased.

Evidence on Mechanisms

Finally, we present suggestive evidence that media coverage may create the types of bias highlighted in the coder model. Of course, as with the democracy assessments, changes in media coverage may reflect changes in the actual frequency of democratic backsliding or how much individual events are covered. Nevertheless, it is instructive to observe the trends.

The top panels in figure 11 plot data from Gottlieb et al. (2022), who coded reports of events of democratic backsliding using primary and secondary sources. The top-left panel shows a substantial increase in reports since 2000 that peaked in 2019, followed by a rapid decrease in 2020 and 2021. The top-right panel plots the average number of events per country with at least one event, which more than doubled from 2000 to a peak in 2018.

Figure 11 Media and Academic Coverage

The bottom-left panel of figure 11 plots the number of articles in the New York Times that included the term “democratic backsliding” or “democratic erosion” by year, shown by the thick black line. The thinner lines are the individual search terms, with “erosion” above “backsliding.” After generally trending downward from 1980 until the early 2000s, there was a significant spike around 2008. There was an even larger spike that began in the 2010s; however, there was a decrease from 2020 to 2021, which perhaps reflects a decrease in emphasis after the Trump presidency.

The bottom-right panel in figure 11 plots Google Scholar hits for “democratic transition” (a relatively neutral term, although one that generally corresponds to countries becoming more democratic) and the sum of “democratic backsliding” and “democratic erosion.” Although “democratic backsliding” and “democratic erosion” have not yet caught up with “democratic transition,” the use of “democratic transition” has been declining in recent years and the more negative terms have been exploding.

There is evidence of increased media and scholarly attention to democratic erosion. Given that this increase is more dramatic than even subjective measures of democratic backsliding, it is difficult not to infer that the trend at least partially is driven by more intense media coverage of these events. We encourage future research to explore this and other mechanisms in more detail.

CONCLUSION

Democratic backsliding is an important topic, and we believe that it is crucial to provide an accurate depiction of the current state of the world. This article makes two general contributions: substantive and methodological.

First, the common claim that we are in a period of massive global democratic decline is not clearly supported by empirical evidence. As other scholars have noted, expert-coded measures document only a weak decline in recent years. We add to this observation that on objective indicators, there is minimal evidence of global backsliding. Of course, we are not claiming that backsliding is not occurring in any particular country. However, if the world is experiencing major backsliding in the aggregate, we should expect to see evidence of this on objective measures (e.g., incumbent leaders and their parties winning elections at higher rates).

Second, we highlight measurement concerns regarding time-varying bias in expert-coded data. Subjective indicators have the advantage of measuring concepts for which it is difficult to collect objective data, but this broader coverage may come at the cost of accuracy and replicability. We encourage future research to improve expert-coding practices, specifically regarding potential time-varying biases. Perhaps one way forward is to ask experts to collect data on more objective indicators—for example, the percentage of state ownership of media as a measure of media freedom.

We conclude with a more general point about recognizing the limits of our knowledge. In recent years, political science researchers have become more precise about making strong claims based on data. These standards are a key advancement in our discipline, and careful scrutiny is one of the best contributions that academics can give to society. It is imperative that we apply the same level of rigor and comprehensiveness when we study trends in global democracy.

In one sense, this is a modest study: rather than stating that “the conventional wisdom that we are in a period of global democratic decline is definitively wrong,” we simply are stating that the evidence is not sufficiently strong to make this claim. Recognizing this uncertainty is important and should not be discouraging. Parsing what we do and do not know about a topic is a first step for making scientific progress (Feynman 2009). We hope this study helps scholars determine where to focus their future efforts.

ACKNOWLEDGMENTS

A previous version of this article was circulated with the title “Subjective and Objective Measurement of Democratic Backsliding.” Many thanks to Ashley Anderson, Rob Blair, Carles Boix, Jason Brownlee, Michael Coppedge, Wiola Dziuda, Ryan Enos, Anthony Fowler, Scott Gehlbach, Jessica Gottlieb, Allison Grossman, Guy Grossman, Gretchen Helmke, James Hollyer, Will Howell, Susan Hyde, Ethan Kaplan, Marko Klašnja, Carl Henrik Knutsen, Andrej Kokkonen, Jacob Lewis, Ellen Lust, Neil Malhotra, Juraj Medzihorsky, Rob Mickey, Mike Miller, Gerardo Munck, Monika Nalepa, Pippa Norris, Brendan Nyhan, Jack Paine, Tom Pepinsky, Barbara Piotrowska, Adam Przeworski, Darin Self, Tara Slough, Pavi Suryanarayan, Milan Svolik, Jan Teorell, Dan Treisman, Nic Van de Walle, David Waldner, Nils Weidmann, three anonymous reviewers, and the editors of PS and #PolisciTwitter for helpful comments and discussion. We also are grateful to audiences at the American Political Science Association Annual Meeting, Georgetown University, King’s College London, Peking University, Polarization Research Lab, Political Economy of Democratic Backsliding Conference, University of North Carolina Chapel Hill, University of Chicago, Vanderbilt University, Washington Political Economy Conference, and Washington State University for their generous feedback. Kamya Yadav and Melle Scholten provided excellent research assistance.

DATA AVAILABILITY STATEMENT

Research documentation and data that support the findings of this study are openly available at the PS: Political Science & Politics Harvard Dataverse at https://doi.org/10.7910/DVN/G2SQ6Y.

Supplementary Material

To view supplementary material for this article, please visit http://doi.org/10.1017/S104909652300063X.

CONFLICTS OF INTEREST

The authors declare that there are no ethical issues or conflicts of interest in this research.