Gender equity and the (lack of) advancement of women in the profession have long been concerns of the APSA Committee on the Status of Women in the Profession (CSWP). Among the many concerns that have been investigated is the relatively lower presence of women in the discipline’s academic journals (Breuning and Sanders 2007; Evans and Bucy 2010; Teele and Thelen 2017; Young 1995). However, few studies have been able to access data to ascertain whether the peer-review process itself is gendered, an important issue in addressing challenges to the advancement of women in the profession.

This article uses data on submissions to the American Political Science Review (APSR), one of the most selective peer-reviewed journals in political science, to investigate whether the review process is gendered. The data span two editorial teams, which allows us to identify differences between them. We found little evidence of systematic gender bias in the review process at the APSR. However, we found that service as a reviewer is associated with a higher acceptance rate. We discuss the implications of these findings for editors and for authors.

GENDER IN POLITICAL SCIENCE

Most studies that have sought to determine whether publishing in political science is gendered have relied on data regarding the outcome of the peer-review process (Breuning and Sanders 2007; Evans and Bucy 2010; Hancock, Baum, and Breuning 2013; Hesli and Lee 2011; Hesli, Lee, and Mitchell 2012; Maliniak, Powers, and Walter 2013; Teele and Thelen 2017; Young 1995). These studies used outcome data to examine whether women publish in proportion to their presence in the discipline. The APSA CSWP reported that women now constitute slightly more than 40% of political science faculty (CSWP 2016). Previously, Sedowski and Brintnall (2007) reported that 26% of all political scientists and 36% of those at the assistant professor rank were women. In addition, the National Science Foundation, which tracks the number of PhDs awarded annually, reported that women earned 44.1% of PhDs in political science in 2014 (National Science Foundation 2015).

Women’s share of articles published in the APSR and many other prestigious political science journals lags well behind these figures. However, because only Østby et al. (2013) and Wilson (2014) used submission data in their studies, it is not clear whether women are less likely to submit their work or less likely to have it accepted.

MEASURING WHO MAKES IT THROUGH THE REVIEW PROCESS

To determine whether there is any evidence of gender bias in the review process, we evaluated authorship, other variables often cited as sources of biased outcomes, and the final disposition of all manuscripts submitted to the APSR in 2010 and 2014 (note 1). These were the third year of editorship for the University of California, Los Angeles– and the University of North Texas–based teams, respectively. We compared the third year of each four-year term for two reasons: (1) by then, editors have “hit their stride,” and the next team has not yet been announced; and (2) given the extremely labor-intensive nature of coding the data, which took more than 400 person-hours, it was more feasible to take a “snapshot” of the data from two editorial teams.

We coded a number of variables for all manuscripts and their authors for both years. As explained herein, whenever possible, we relied on the categorizations used for editorial purposes. In cases in which the relevant information on authors was not available in Editorial Manager, we searched online. Although we were able to track down many pieces of information in this way, there is a small amount of missing data for some variables.


We collected data on 1,621 manuscripts and 2,660 authors (note 2). In 2010, the APSR received 670 manuscripts from 1,020 authors, of which 19.9% were female. In 2014, the number of manuscripts had increased to 951 and the number of authors to 1,640, of which 24.0% were female. This suggests that the proportion of female authors of papers submitted to the APSR lags well behind the presence of women in the political science profession.

Notably, the number of authors rose faster than the number of submissions, which signals a distinct shift toward coauthorship; in 2014, about 10% more papers were coauthored or multi-authored. Women remain more likely than men to submit single-authored papers, although the gap was narrower in 2014 than in 2010. Correspondingly, men are more likely than women to be part of multiple-author teams (see appendix table A.1).
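The counts above can be checked with a few lines of arithmetic. The Python sketch below is our illustration, not part of the original analysis: it derives authors per manuscript and an approximate number of female authors from the reported totals and percentages. Because the percentages are rounded, the female counts are back-calculated approximations rather than figures taken from the data.

```python
# Submission and author counts reported in the text for the two sample years.
COUNTS = {
    2010: {"manuscripts": 670, "authors": 1020, "female_share": 0.199},
    2014: {"manuscripts": 951, "authors": 1640, "female_share": 0.240},
}


def summarize(year: int) -> dict:
    """Derive authors per manuscript and an approximate count of female authors."""
    c = COUNTS[year]
    return {
        "authors_per_manuscript": c["authors"] / c["manuscripts"],
        # Rounded because the reported shares are themselves rounded to 0.1%.
        "female_authors_approx": round(c["authors"] * c["female_share"]),
    }


def growth(key: str) -> float:
    """Proportional growth of a raw count from 2010 to 2014."""
    return COUNTS[2014][key] / COUNTS[2010][key] - 1


if __name__ == "__main__":
    for year in COUNTS:
        s = summarize(year)
        print(year, f"{s['authors_per_manuscript']:.2f} authors/manuscript,",
              f"~{s['female_authors_approx']} female authors")
    print(f"manuscripts grew {growth('manuscripts'):.1%}, authors grew {growth('authors'):.1%}")
```

Run as a script, it shows authors per manuscript rising from about 1.52 to 1.72 and the approximate number of female authors (all authors, not only submitting authors) rising from about 203 to 394, while manuscripts grew by about 41.9% and authors by about 60.8%.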

We present descriptive data by the submitting author’s gender because the Editorial Manager system used by the APSR displays the name of the submitting author for each manuscript. Hence, the editors are more aware of the identity of the submitting author than of any additional authors. If any gender bias were to affect the editors’ decision making, it most likely would be evident when data are presented in this way. The appendix provides descriptive tables using all authors, and the logistic regressions presented in the article show analyses for submitting authors as well as all authors.

GENDER AND THE OUTCOME OF THE REVIEW PROCESS IN THE APSR

Does the review process reveal signs of gender bias? Assuming that the quality of papers submitted by women and men is generally similar, we should expect their papers to be equally likely to be accepted—even if acceptance of a manuscript is a rare event, given the journal’s acceptance rate of about 6%.

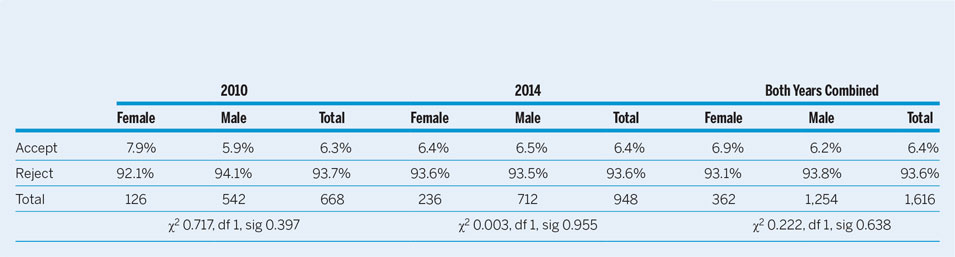

Table 1 shows the final disposition of manuscripts by the submitting author’s gender; appendix table A.2 is a similar table that includes all authors. The “reject” decision includes both desk rejects and rejection after review. Desk rejects account for 22.8% overall and a somewhat lower percentage of all submissions. The data show that papers submitted by women and men were accepted or rejected roughly in proportion to their shares of total submissions. The minor differences were not statistically significant (see the chi-square reported at the bottom of the table).

Table 1 Final Disposition of Manuscripts by Gender of Submitting Author
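The chi-square reported under table 1 tests whether final disposition is independent of the submitting author’s gender. To illustrate the logic only, the Python sketch below (our code, not the authors’) runs a Pearson chi-square test of independence on a simplified 2×2 table, gender by accepted versus not accepted, so that the one-degree-of-freedom p-value can be computed with the standard library as erfc(√(χ²/2)). A table with more disposition categories would need a general routine, such as SciPy’s `scipy.stats.chi2_contingency`. The counts in the example are invented and are not the figures in table 1.

```python
import math


def chi_square_2x2(table):
    """Pearson chi-square test of independence for a 2x2 table (df = 1).

    `table` is [[a, b], [c, d]], e.g., rows = gender of submitting author,
    columns = accepted / not accepted. No continuity correction is applied.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count if disposition were independent of gender.
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    # With one degree of freedom, P(X > chi2) = erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


if __name__ == "__main__":
    # Hypothetical counts: acceptances split exactly in proportion to submissions.
    proportional = [[12, 188], [36, 564]]
    print(chi_square_2x2(proportional))  # (0.0, 1.0)
```

When acceptances are split between women and men exactly in proportion to their submissions, as in these made-up counts, the statistic is 0 and p = 1; the further the observed counts drift from that proportional split, the larger the statistic and the smaller the p-value.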

It is encouraging that the review process does not show clear signs of gender bias; however, the fact that women account for a much lower proportion of submissions to the APSR than their presence in the discipline is cause for concern. Previous studies noted that women may be more prevalent in certain subfields (e.g., comparative politics), more likely to use qualitative methods, less likely to be affiliated with research-intensive universities, and more prevalent among the more junior ranks (Hancock, Baum, and Breuning 2013; Maliniak et al. 2008; Sarkees and Breuning 2010). Next, we investigate whether these variables explain how a paper fares in the review process.


WHAT ELSE MIGHT CAUSE GENDERED OUTCOMES OF THE REVIEW PROCESS?

To evaluate whether subfield, methodology, institutional affiliation, or academic rank masks a relationship between gender and the outcome of the review process, we collected data on these variables. The appendix includes descriptive tables that show the relationship between each variable and the review-process outcome, categorized by gender and year for all authors.

The subfield categorization reflects the way in which the APSR classifies manuscripts in Editorial Manager: American Politics; Comparative Politics; International Relations; Normative Theory; Formal Theory; Methods; Race, Ethnicity, and Politics; and Other. The three panels in appendix table A.3 demonstrate that the first four categories account for substantially larger proportions of submissions than the remaining four categories. Therefore, we used dummy variables for the first four in the logistic-regression analyses. Furthermore, for both women and men, manuscripts in Comparative Politics and Normative Theory account for a somewhat larger share of acceptances than of submissions.
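A minimal Python sketch of this subfield coding follows. It assumes, as the paragraph implies but does not state, that the four smaller categories together form the omitted reference group; the category strings are the Editorial Manager labels listed above, and the function name is our own.

```python
# The four largest APSR subfield categories, each of which gets its own dummy.
MAJOR_SUBFIELDS = (
    "American Politics",
    "Comparative Politics",
    "International Relations",
    "Normative Theory",
)


def subfield_dummies(subfield: str) -> dict[str, int]:
    """Return one 0/1 indicator per major subfield.

    Manuscripts in any other category (Formal Theory; Methods; Race, Ethnicity,
    and Politics; Other) score 0 on all four and form the reference group.
    """
    return {name: int(subfield == name) for name in MAJOR_SUBFIELDS}
```

Leaving one group out avoids perfect collinearity with the regression intercept, and pooling the four small categories into that group avoids estimating separate coefficients from very sparse cells.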

The classification of methodology also relies on the APSR categories, which classify papers as Formal, Quantitative, Formal and Quantitative, Small N, Interpretive and/or Conceptual (Normative Theory papers usually are classified in this category), Qualitative Empirical, and Other. As shown in appendix table A.4, quantitative work accounts for a much larger proportion of submissions than qualitative work. The table also shows that quantitative work makes up a somewhat smaller share of accepted papers than of submissions, whereas the reverse is true for qualitative work. Again, there is no obvious gendered pattern in this table. For ease of interpretation, we recoded the methodological categories as quantitative and qualitative in the logistic regressions.
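The binary recode and the comparison of submission and acceptance shares can be sketched in Python as follows. Which APSR labels fall on each side of the split is our assumption (the article reports the recode but not the mapping), “Other” is treated as missing here, and the example records in the test are invented.

```python
from collections import Counter

# Hypothetical split of the APSR methodology labels into two analytic groups;
# the article reports the recode but not this exact mapping.
METHOD_GROUP = {
    "Formal": "quantitative",
    "Quantitative": "quantitative",
    "Formal and Quantitative": "quantitative",
    "Small N": "qualitative",
    "Interpretive and/or Conceptual": "qualitative",
    "Qualitative Empirical": "qualitative",
}


def share_comparison(records):
    """Map each group to (share of submissions, share of acceptances).

    `records` holds (methodology_label, accepted) pairs; labels not in
    METHOD_GROUP, such as "Other", are treated as missing and skipped.
    """
    submitted, accepted = Counter(), Counter()
    for label, was_accepted in records:
        group = METHOD_GROUP.get(label)
        if group is None:
            continue
        submitted[group] += 1
        accepted[group] += int(was_accepted)
    n_sub, n_acc = sum(submitted.values()), sum(accepted.values())
    return {
        group: (submitted[group] / n_sub, accepted[group] / n_acc if n_acc else 0.0)
        for group in submitted
    }
```

The ratio of a group’s acceptance share to its submission share equals that group’s acceptance rate divided by the overall acceptance rate, so a group whose acceptance share exceeds its submission share, as qualitative work does in appendix table A.4, is accepted at an above-average rate.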

Scholars at research-intensive (or R1) institutions often are presumed to have an advantage in the review process, especially in prominent journals. Hence, we coded for institutional affiliation using the Carnegie Classification of Institutions of Higher Education (2015). We classified R1 institutions (i.e., those with the highest research activity) separately from the remaining research-focused universities. All remaining types of colleges and universities (ranging from master’s and baccalaureate institutions to those offering associate’s degrees) were grouped together, including non-US institutions and non-academic employment. As shown in appendix table A.5, scholars at R1 institutions account for more than half of all submissions to the APSR and an even higher proportion of accepted manuscripts. This pattern holds for both women and men.
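The three-way institutional coding can be sketched as below. In the 2015 Carnegie Classification, R1, R2, and R3 denote doctoral universities with highest, higher, and moderate research activity; treating R2 and R3 as the “remaining research-focused universities” and numbering the groups 1 to 3 are our assumptions.

```python
def institution_tier(carnegie_code):
    """Code an affiliation as 1 = R1, 2 = other research university, 3 = all other.

    `carnegie_code` is the 2015 Carnegie shorthand ("R1", "R2", "R3") for doctoral
    universities, any other classification string, or None for non-US
    institutions and non-academic employers.
    """
    if carnegie_code == "R1":
        return 1
    if carnegie_code in {"R2", "R3"}:
        return 2
    # Master's, baccalaureate, associate's, non-US, and non-academic affiliations.
    return 3
```

The results section describes R1 as coded “1” against “2” for all other institutions, so the regressions may collapse groups 2 and 3; the sketch keeps the three groups named in this paragraph.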

Because the pressure to publish is greatest in the junior ranks (i.e., PhD candidate, post-doctorate, and assistant professor), we coded authors’ ranks at the time they submitted their papers. We started with information provided by the submitting author. However, different countries use different classifications for academic ranks. To render them more comparable, we investigated the equivalency of non-US systems (note 3) and recoded our data to reflect US terminology. As shown in appendix table A.6, assistant, associate, and especially full professors account for larger shares of accepted manuscripts than of submissions, whereas the reverse is true for PhD candidates and post-doctorates. The latter two groups may be more likely to submit less fully developed papers, which may account for their lower acceptance rate (note 4). Because academic rank is ordinal, we entered it in the logistic regressions as a single ordinal variable.
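A sketch of the rank coding: titles are first translated into US terminology and then mapped to an ordinal scale from PhD candidate (1) to full professor (5). The non-US equivalencies shown are hypothetical placeholders (the study’s actual equivalencies are described in its note 3), and titles that cannot be placed are returned as missing.

```python
# Ordinal coding of academic rank, from most junior to most senior.
RANK_ORDER = {
    "PhD candidate": 1,
    "Post-doctorate": 2,
    "Assistant professor": 3,
    "Associate professor": 4,
    "Full professor": 5,
}

# Hypothetical examples of non-US titles mapped to US equivalents,
# not the equivalency table used in the study.
US_EQUIVALENT = {
    "Lecturer (UK)": "Assistant professor",
    "Senior Lecturer (UK)": "Associate professor",
    "Professor (UK)": "Full professor",
}


def rank_code(title):
    """Translate a reported title to US terminology, then to its ordinal code.

    Returns None when the title cannot be placed (i.e., missing data).
    """
    us_title = US_EQUIVALENT.get(title, title)
    return RANK_ORDER.get(us_title)
```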

Finally, we coded whether a paper was the authors’ first submission and whether they had reviewed for the APSR prior to submitting it (both coded as No = 0 and Yes = 1). Although the literature does not address this issue, we suspect that authors who reviewed prior to their first submission may fare somewhat better in the review process. The first panel of appendix table A.7 indicates that having served as a reviewer constitutes a small advantage. The second and third panels, which select data for assistant professors and female faculty, show similar patterns. This is discussed further in the conclusion.
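The panel comparisons in appendix table A.7 amount to computing acceptance rates separately for prior reviewers and non-reviewers, optionally within a subgroup. A minimal Python sketch follows; the `Submission` record type and its string codes for rank and gender are hypothetical, while the 0/1 fields follow the coding described above.

```python
from dataclasses import dataclass


@dataclass
class Submission:
    """One manuscript record (hypothetical structure, for illustration)."""
    reviewed_before: int   # 1 = author reviewed for the APSR before submitting, 0 = no
    first_submission: int  # 1 = author's first APSR submission, 0 = no
    accepted: bool
    rank: str = ""
    gender: str = ""


def acceptance_by_reviewer_status(subs, keep=lambda s: True):
    """Return {0: rate for non-reviewers, 1: rate for prior reviewers}.

    `keep` restricts the comparison to a panel (e.g., assistant professors);
    a group with no records gets None instead of a rate.
    """
    rates = {}
    for status in (0, 1):
        group = [s for s in subs if keep(s) and s.reviewed_before == status]
        rates[status] = sum(s.accepted for s in group) / len(group) if group else None
    return rates
```

For example, passing `lambda s: s.rank == "Assistant professor"` as `keep` mirrors the second panel, and `lambda s: s.gender == "F"` the third.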

IS THE REVIEW PROCESS GENDERED?

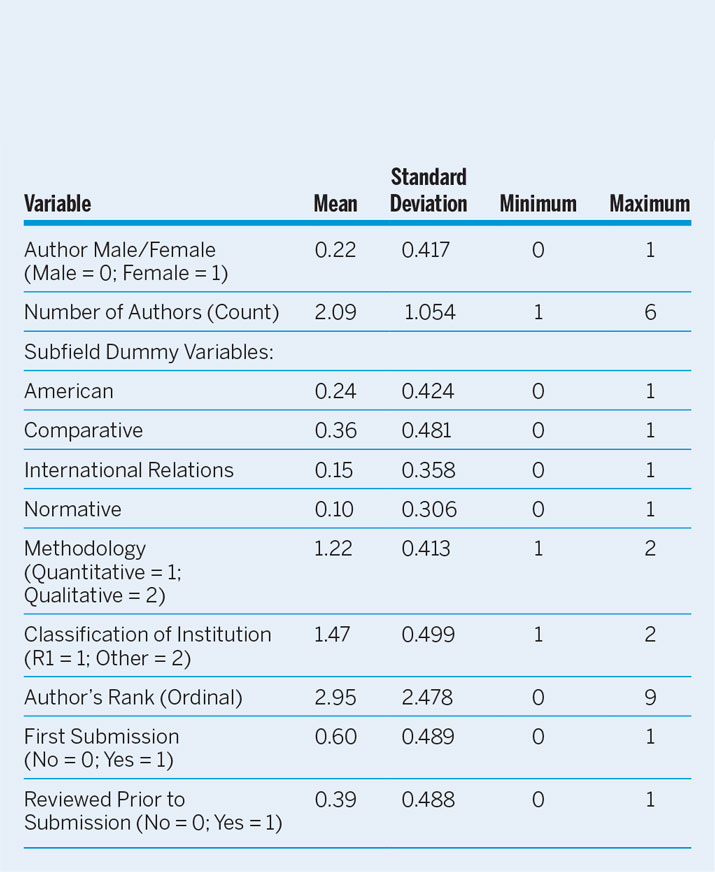

The variables discussed previously—and for which detailed descriptive tables are in the appendix—are summarized in table 2. The table also indicates how each variable was measured for the logistic regressions—which, in some cases, are recoded from the more detailed appendix tables. Furthermore, we tested for multicollinearity and found that none of our models was affected. The tolerance and variance inflation factor (VIF) scores were within acceptable limits (with VIF scores all well below “2”).

Table 2 Summary Statistics for the Independent Variables
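The multicollinearity check can be reproduced without a statistics package. Each predictor’s VIF equals the corresponding diagonal element of the inverse of the predictors’ correlation matrix, that is, 1/(1 − R_j²), where R_j² comes from regressing predictor j on all the others; tolerance is 1 − R_j². A VIF below 2 therefore means that no predictor shares more than half of its variance with the rest. The pure-Python sketch below is our illustration and assumes numeric predictor columns with nonzero variance.

```python
def correlation_matrix(columns):
    """Pearson correlations among equally long predictor columns."""
    def corr(x, y):
        n = len(x)
        mx, my = sum(x) / n, sum(y) / n
        sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
        sxx = sum((a - mx) ** 2 for a in x)
        syy = sum((b - my) ** 2 for b in y)
        return sxy / (sxx * syy) ** 0.5
    return [[corr(x, y) for y in columns] for x in columns]


def invert(matrix):
    """Gauss-Jordan inversion with partial pivoting (adequate for small matrices)."""
    n = len(matrix)
    aug = [row[:] + [float(i == j) for j in range(n)] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [v - factor * w for v, w in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def vif_and_tolerance(columns):
    """VIF_j is the j-th diagonal element of the inverse correlation matrix,
    i.e., 1 / (1 - R_j^2); tolerance is its reciprocal, 1 - R_j^2."""
    inv = invert(correlation_matrix(columns))
    vifs = [inv[j][j] for j in range(len(columns))]
    return vifs, [1 / v for v in vifs]
```

Because VIF depends only on the predictors, the same check applies to a logistic model as to a linear one; with two predictors correlated at r = 0.8, for instance, each VIF is 1/(1 − 0.64) ≈ 2.78, which would exceed the threshold the article uses.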

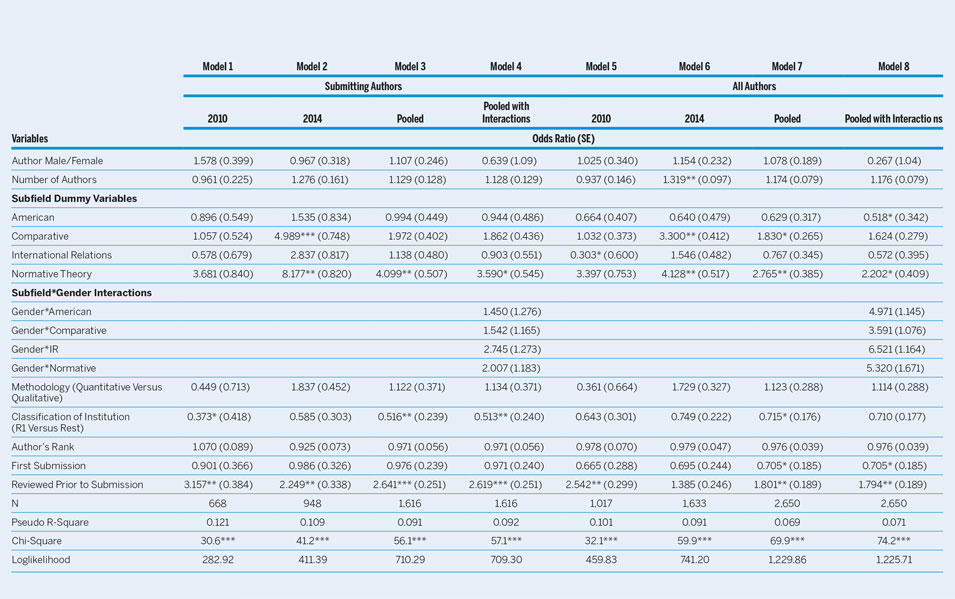

To achieve a better understanding of the combined impact of the various variables on review-process outcomes, we conducted a series of logistic regressions, shown in table 3. Models 1–4 include only submitting authors, whereas models 5–8 include all authors. For each set of models, we analyzed the data by each year (i.e., 2010 and 2014) and for the two years combined (pooled). Additionally, models 4 and 8 include interactions between gender and the four subfield dummy variables. We included models with interaction terms to assess, for instance, whether women in comparative politics were at a particular disadvantage compared to women in other subfields (and to test this for the other major fields as well; note 5). We did not include interactions for the 2010 and 2014 data separately because of the high degree of collinearity and the small frequencies in the cell distributions when subdividing the sample. However, we are confident that this would not change the overall result that women in particular subfields had no significant advantage or disadvantage in being accepted for publication.

Table 3 Whose Manuscripts Get Accepted for Publication? (Logistic Regression)

Notes: *p ≤ 0.05; **p ≤ 0.01; ***p ≤ 0.001.
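For readers who want the model structure spelled out, the interaction specification of models 4 and 8 can be written as follows. The notation is ours rather than the article’s: x_i stands in for the remaining covariates summarized in table 2 (e.g., methodology, institutional affiliation, rank, first submission, and prior reviewing), and the δ_k terms are simply absent from the models without interactions.

```latex
\log\frac{\Pr(\text{accept}_i = 1)}{1 - \Pr(\text{accept}_i = 1)}
  = \beta_0 + \beta_1\,\text{Female}_i
  + \sum_{k=1}^{4} \gamma_k\,\text{Subfield}_{ki}
  + \sum_{k=1}^{4} \delta_k\,\bigl(\text{Female}_i \times \text{Subfield}_{ki}\bigr)
  + \mathbf{x}_i^{\top}\boldsymbol{\theta}
```

Under this coding, the log-odds difference between a woman and a man in major subfield k is β₁ + δ_k, and in the pooled reference category it is β₁ alone.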

Examining the results across the eight models, we observed that the author’s gender had no statistically significant effect on the outcome of the review process, either on its own (i.e., all models) or when interacted with the subfield dummy variables (i.e., models 4 and 8). The variable that most reliably had a positive effect was whether the author had reviewed prior to submission, which was statistically significant in all analyses except model 6 (i.e., all authors, 2014). This has implications for both editors and authors, as discussed in the conclusion.

Furthermore, submitting authors from R1 institutions were more likely to have their work accepted in 2010 (i.e., model 1) and in the pooled data (i.e., models 3 and 4). In these models, the institutional-affiliation coefficient was statistically significant in the direction of R1 institutions (coded “1,” versus “2” for all other types of institutions). However, in models 5–8, which included all authors, institutional affiliation did not have a significant effect. Because the submitting author is the one identified in the Editorial Manager system, this suggests the possibility that editors allowed this information to influence their decision making.

The subfield dummy variables show that Comparative Politics and Normative Theory papers had a statistically significant advantage in 2014, both for submitting authors (i.e., model 2) and all authors (i.e., model 6). This also had an impact on some of the pooled models (models 3–4 and 7–8). This suggests that in 2014, the editors more often found manuscripts in Comparative Politics and Normative Theory sufficiently compelling to proceed with them.

On average, the pseudo R-squared is about 0.10, indicating that the models have modest explanatory power. In sum, although the models are statistically significant, they do not demonstrate gender bias in the review process, and neither subfield nor methodology presents a significant hurdle. That said, the findings suggest that editors may be influenced by a submitting author’s affiliation or by a substantive preference for work in specific subfields.
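The article does not say which pseudo R-squared it reports. For reference, three common variants (McFadden; Cox and Snell; Nagelkerke) are defined from the maximized likelihood of the fitted model, L̂_M, and of an intercept-only model, L̂_0, with n observations:

```latex
R^2_{\text{McF}} = 1 - \frac{\ln \hat{L}_M}{\ln \hat{L}_0}, \qquad
R^2_{\text{CS}} = 1 - \left(\frac{\hat{L}_0}{\hat{L}_M}\right)^{2/n}, \qquad
R^2_{\text{N}} = \frac{R^2_{\text{CS}}}{1 - \hat{L}_0^{\,2/n}}
```

None of these is a literal share of explained variance; each measures the improvement in fit over the intercept-only model, so a value near 0.10 indicates modest explanatory power rather than “10% of the variance explained.”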

DISCUSSION AND CONCLUSION

It is encouraging that we could not identify gender bias in the review process at the APSR with data from two years for two different editorial teams. Thus, the results suggest that the pipeline may not be “clogged” or need “clearing” but rather that the proportions entering it require adjustment. Indeed, it is encouraging that between 2010 and 2014, the number of female submitting authors almost doubled and rose faster than the overall number of submitting authors (see table 1; note 6). That said, the data also show that female authors still submit their work to the APSR at notably lower rates than their presence in the discipline. Our findings suggest that some oft-cited reasons may not be the culprits. We did not find evidence that subfields in which women are concentrated (e.g., comparative politics) or methodologies that they are more likely to use (e.g., qualitative or interpretive methods) were less likely to survive the review process.


However, we found that editors make a difference. In 2010, editors showed a statistically significant preference for submitting authors from R1 institutions, whereas in 2014 they favored Comparative Politics and Normative Theory. The data did not reveal why the editors exhibited these preferences. It may reflect their judgment regarding the novelty and quality of manuscripts, but we cannot be certain. More important is that these judgments have consequences. Scholars notice which types of articles appear in journals and use this information to decide where to submit their work. Once a journal has a reputation for publishing particular types of scholarship, editors who want to broaden its mandate must overcome expectations of what that journal “does.”

This places a responsibility on editors to address the gendered nature of submissions. Outreach efforts can encourage more women to submit. These efforts, which were undertaken by the University of North Texas–based team, may have increased submissions by women between 2010 and 2014. Of course, we cannot be sure; the shift could easily have other causes.

Conversely, scholars must be sufficiently confident that editors genuinely want to consider manuscripts beyond the range that the journal has traditionally published. Despite outreach efforts, qualitative scholarship was not more prevalent among submissions in 2014 than in 2010—although such work had a somewhat higher likelihood of being accepted in 2014.

Furthermore, the absence of a gender difference in the likelihood of acceptance by type of institution obscures a gendered pattern: women more often are employed at teaching-oriented institutions (Hancock, Baum, and Breuning Reference Hancock, Baum and Breuning2013). Although most of these institutions value research, faculty have more teaching responsibilities and less access to resources that facilitate research productivity. In other words, the gendered pattern of academic employment affects the gendered pattern of submissions and acceptances.

The empirical finding that scholarship by women and men is accepted roughly in proportion to their submissions is encouraging, but the question remains of how to alter the composition of what goes into the pipeline. We described the complex interplay between outreach by editors to broaden the scope of submissions and the willingness of scholars to submit their work, as well as their differential ability to devote time and effort to scholarship. This last issue is particularly difficult to address.

That said, it is encouraging to find that participation in the review process has a positive side effect: experience as a reviewer is associated with a higher likelihood that an author’s paper will be accepted. To extend this small benefit to more scholars, editors should cast a wide net in identifying reviewers. Editors can include more women (as well as other underrepresented groups), newly minted assistant professors, and scholars outside of R1 institutions in the reviewer pool by searching online conference programs and paper archives, as well as the journal’s own reviewer database. Scholars can enhance their visibility to editors by registering in journal-reviewer databases and establishing a presence in other digital databases. We do not suggest that this is a panacea, but any action that contributes to the inclusion of a broader range of scholars is likely to have some impact on the gender gap in journals.

Our analyses are limited to one journal and two years. Additional research is needed to deepen our understanding of the role of gender in the review process and in other aspects of the publication process. That said, we hope that finding no evidence of gender bias in the review process at the APSR provides an impetus for women to submit their work and contribute to a further narrowing of the gap between women’s presence in the discipline and in its most prestigious journals.

SUPPLEMENTARY MATERIAL

To view supplementary material for this article, please visit https://doi.org/10.1017/S1049096518000069