1. Introduction

The

![]() $\lambda$

-calculus has been historically conceived as an equational theory of functions, so that reduction had an ancillary role in Church’s view, and it was a tool for studying the theory

$\lambda$

-calculus has been historically conceived as an equational theory of functions, so that reduction had an ancillary role in Church’s view, and it was a tool for studying the theory

![]() $\beta$

, see Barendregt (Reference Barendregt1984, Ch. 3). The development of functional programming languages like Lisp and ML, and of proof assistants like LCF, has brought a new, different interest in the

$\beta$

, see Barendregt (Reference Barendregt1984, Ch. 3). The development of functional programming languages like Lisp and ML, and of proof assistants like LCF, has brought a new, different interest in the

![]() $\lambda$

-calculus and its reduction theory.

$\lambda$

-calculus and its reduction theory.

The cornerstone of this change in perspective is Plotkin’s (1975), where the functional parameter passing mechanism is formalized by the call-by-value rewrite rule

![]() $\beta_v$

, allowing reduction only if the argument term is a value, that is a variable or an abstraction. In Plotkin (Reference Plotkin1975), it is also introduced the notion of weak evaluation, namely no reduction in the body of a function (i.e., of an abstraction).

$\beta_v$

, allowing reduction only if the argument term is a value, that is a variable or an abstraction. In Plotkin (Reference Plotkin1975), it is also introduced the notion of weak evaluation, namely no reduction in the body of a function (i.e., of an abstraction).

This is now the standard evaluation implemented by functional programming languages, where values are the terms of interest (and the normal forms for weak evaluation in the closed case). Full

![]() $\beta_v$

reduction is instead the basis of proof assistants like Coq, where normal forms are the result of interest. More generally, the computational perspective on

$\beta_v$

reduction is instead the basis of proof assistants like Coq, where normal forms are the result of interest. More generally, the computational perspective on

![]() $\lambda$

-calculus has given a central role to reduction, whose theory provides a sound framework for reasoning about program transformations, such as compiler optimizations or parallel implementations.

$\lambda$

-calculus has given a central role to reduction, whose theory provides a sound framework for reasoning about program transformations, such as compiler optimizations or parallel implementations.

The rich variety of computational effects in actual implementations of functional programming languages brings further challenges. This dramatically affects the theory of reduction of the calculi formalizing such features, whose proliferation makes it difficult to focus on suitably general issues. A major change here is the discovery by Moggi (Reference Moggi1988, 1989, Reference Moggi1991) of a whole family of calculi that are based on a few common traits, combining call-by-value with the abstract notion of effectful computation represented by a monad, which has shown to be quite successful. But Moggi’s computational

![]() $\lambda$

-calculus is an equational theory in the broader sense; much less is known of the reduction theory of such calculi: this is the focus of our paper.

$\lambda$

-calculus is an equational theory in the broader sense; much less is known of the reduction theory of such calculi: this is the focus of our paper.

The Computational Calculus. Since Moggi’s seminal work, computational

![]() $\lambda$

-calculi have been developed as a foundation of programming languages, formalizing both functional and non-functional features, see e.g. Wadler and Thiemann (Reference Wadler and Thiemann2003), Benton et al. (Reference Benton, Hughes and Moggi2002), starting a thread in the literature that is still growing. The basic idea of computational

$\lambda$

-calculi have been developed as a foundation of programming languages, formalizing both functional and non-functional features, see e.g. Wadler and Thiemann (Reference Wadler and Thiemann2003), Benton et al. (Reference Benton, Hughes and Moggi2002), starting a thread in the literature that is still growing. The basic idea of computational

![]() $\lambda$

-calculi is to distinguish values and computations, so that programs, represented by closed terms, are thought of as functions from values to computations. Intuitively, computations embody a richer structure than values and do form a larger set in which values can be embedded. On the other hand, the essence of programming is composition; to compose functions from values to computations we need a mechanism to uniformly extend them to functions of computations, while preserving their original behavior over the (image of) values.

$\lambda$

-calculi is to distinguish values and computations, so that programs, represented by closed terms, are thought of as functions from values to computations. Intuitively, computations embody a richer structure than values and do form a larger set in which values can be embedded. On the other hand, the essence of programming is composition; to compose functions from values to computations we need a mechanism to uniformly extend them to functions of computations, while preserving their original behavior over the (image of) values.

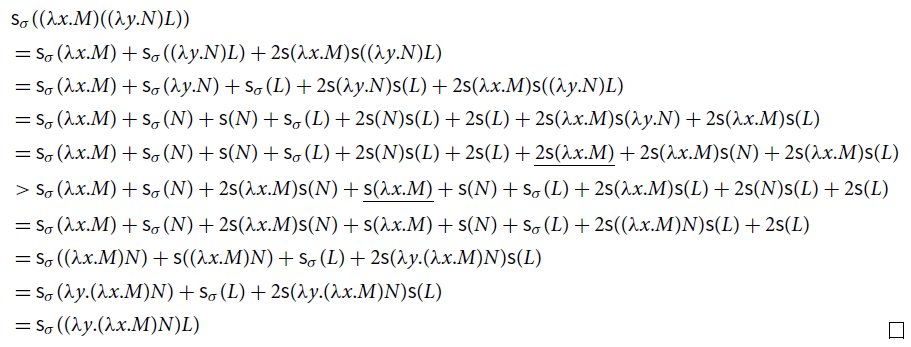

To model these concepts, Moggi used the categorical notion of monad, abstractly representing the extension of the space of values to that of computations, and the associated Kleisli category, whose morphisms are functions from values to computations, which are the denotations of programs. Syntactically, following Wadler (Reference Wadler1995), we can express these ideas by means of a call-by-value

![]() $\lambda$

-calculus with two sorts of terms: values, ranged over by V,W, namely variables or abstractions, and computations denoted by L,M,N. Computations are formed by means of two operators: values are embedded into computations by means of the operator

$\lambda$

-calculus with two sorts of terms: values, ranged over by V,W, namely variables or abstractions, and computations denoted by L,M,N. Computations are formed by means of two operators: values are embedded into computations by means of the operator

![]() $ unit$

written return in Haskell programming language, whose name refers to the unit of a monad in categorical terms; a computation

$ unit$

written return in Haskell programming language, whose name refers to the unit of a monad in categorical terms; a computation

![]() is formed by the binary operator

is formed by the binary operator

![]() , called bind (

, called bind (

![]() $\texttt{>>=}$

in Haskell), representing the application to M of the extension to computations of the function

$\texttt{>>=}$

in Haskell), representing the application to M of the extension to computations of the function

![]() $\lambda x.N$

.

$\lambda x.N$

.

The Monadic Laws. The operational understanding of these new operators is that evaluating

![]() , which in Moggi’s notation reads

, which in Moggi’s notation reads

![]() , amounts to first evaluating M until a computation of the form

, amounts to first evaluating M until a computation of the form

![]() is reached, representing the trivial computation that returns the value V. Then V is passed to N by binding x to V, as expressed by the identity:

is reached, representing the trivial computation that returns the value V. Then V is passed to N by binding x to V, as expressed by the identity:

This is the first of the three monadic laws in Wadler (Reference Wadler1995). The remaining laws are

To understand these two last rules, let us define the composition (named Kleisli composition in category theory) of the functions

![]() and

and

![]() as

as

where we can freely assume that x is not free in N.

Equality (2) (identity) implies that

![]() , which paired with the instance of (1):

, which paired with the instance of (1):

![]() (where

(where

![]() is the usual congruence generated by the renaming of bound variables), tells that

is the usual congruence generated by the renaming of bound variables), tells that

![]() is the identity of composition

is the identity of composition

![]() .

.

Equality (3) (associativity) implies:

namely that composition

![]() is associative.

is associative.

The monadic laws correspond to the three equalities in the definition of a Kleisli triple (Moggi Reference Moggi1991), which is an equivalent presentation of monads (MacLane Reference MacLane1997). Indeed, Moggi’s calculus is the internal language of a suitable category equipped with a (strong) monad T, and with enough structure to internalize the morphisms of the respective Kleisli category. As such, it is a simply typed

![]() -calculus, where T is the type constructor associating with each type A the type TA of computations over A. Therefore,

-calculus, where T is the type constructor associating with each type A the type TA of computations over A. Therefore,

![]() and

and

![]() are polymorphic operators with respective types (Wadler Reference Wadler1992, Reference Wadler1995):

are polymorphic operators with respective types (Wadler Reference Wadler1992, Reference Wadler1995):

The Computational Core. The dynamics of

![]() -calculi is usually defined as a reduction relation on untyped terms. Moggi’s preliminary report (Moggi Reference Moggi1988) specifies both an equational and, in Section 6, a reduction system even if only the former is thoroughly investigated and appears in (Moggi Reference Moggi1989, Reference Moggi1991), while reduction is briefly treated for an untyped fragment of the calculus. However, when stepping from the typed calculus to the untyped one, we need to be careful by avoiding meaningless terms to creep into the syntax, so jeopardizing the calculus theory. For example: what should be the meaning of

-calculi is usually defined as a reduction relation on untyped terms. Moggi’s preliminary report (Moggi Reference Moggi1988) specifies both an equational and, in Section 6, a reduction system even if only the former is thoroughly investigated and appears in (Moggi Reference Moggi1989, Reference Moggi1991), while reduction is briefly treated for an untyped fragment of the calculus. However, when stepping from the typed calculus to the untyped one, we need to be careful by avoiding meaningless terms to creep into the syntax, so jeopardizing the calculus theory. For example: what should be the meaning of

![]() where both M and N are computations? What about

where both M and N are computations? What about

![]() for any V? Shall we have functional applications of any kind?

for any V? Shall we have functional applications of any kind?

To answer these questions, in de' Liguoro and Treglia (2020) typability is taken as syntactic counterpart of being meaningful: inspired by ideas in Scott (Reference Scott, Hindley and Seldin1980), the untyped computational

![]() -calculus is a special case of the typed one, where there are just two types D and TD, related by the type equation

-calculus is a special case of the typed one, where there are just two types D and TD, related by the type equation

![]() , that is Moggi’s isomorphism of the call-by-value reflexive object (see Moggi Reference Moggi1988, Section 5). With such a proviso, we get the following syntax:

, that is Moggi’s isomorphism of the call-by-value reflexive object (see Moggi Reference Moggi1988, Section 5). With such a proviso, we get the following syntax:

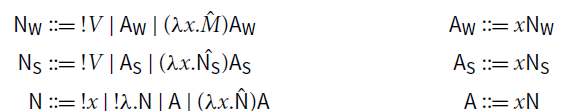

If we assume that all variables have type D, then it is easy to see that all terms in

![]() have type

have type

![]() , which is consistent with the substitution of variables with values in (1). On the other hand, considering the typing of

, which is consistent with the substitution of variables with values in (1). On the other hand, considering the typing of

![]() and

and

![]() in (4), terms in

in (4), terms in

![]() have type TD. As we have touched above, there is some variety in notation among computational

have type TD. As we have touched above, there is some variety in notation among computational

![]() -calculi; we choose the above syntax because it explicitly embodies the essential constructs of a

-calculi; we choose the above syntax because it explicitly embodies the essential constructs of a

![]() -calculus with monads, but for functional application, which is definable: see Section 3 for further explanations. We dub the calculus computational core, noted

-calculus with monads, but for functional application, which is definable: see Section 3 for further explanations. We dub the calculus computational core, noted

![]() .

.

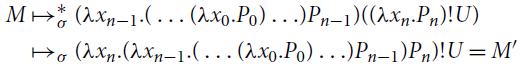

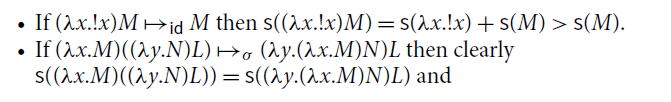

From Equalities to Reduction. Similarly to Moggi (Reference Moggi1988) and Sabry and Wadler (Reference Sabry and Wadler1997), the reduction rules in the computational core

![]() are the relation obtained by orienting the monadic laws from left to right. We indicate by

are the relation obtained by orienting the monadic laws from left to right. We indicate by

![]() ,

,

![]() , and

, and

![]() the rules corresponding to (1), (2), and (3), respectively. The contextual closure of these rules, noted

the rules corresponding to (1), (2), and (3), respectively. The contextual closure of these rules, noted

![]() , has been proved confluent in de' Liguoro and Treglia (2020), which implies that equal terms have a common reduct and the uniqueness of normal forms.

, has been proved confluent in de' Liguoro and Treglia (2020), which implies that equal terms have a common reduct and the uniqueness of normal forms.

In Plotkin (Reference Plotkin1975) call-by-value reduction

![]() is an intermediate concept between the equational theory and the evaluation relation

is an intermediate concept between the equational theory and the evaluation relation

![]() , that models an abstract machine. Evaluation consists of persistently choosing the leftmost

, that models an abstract machine. Evaluation consists of persistently choosing the leftmost

![]() -redex that is not in the scope of an abstraction, i.e., evaluation is weak.

-redex that is not in the scope of an abstraction, i.e., evaluation is weak.

The following crucial result bridges reduction (hence, the foundational calculus) with evaluation (implemented by an ideal programming language):

Such a result (Corollary 1 in Plotkin Reference Plotkin1975) comes from an analysis of the reduction properties of

![]() , namely standardization.

, namely standardization.

As we will see, the rules induced by associativity and identity make the behavior of the reduction in

![]() – and the study of its operational properties – nontrivial in the setting of any monadic

– and the study of its operational properties – nontrivial in the setting of any monadic

![]() -calculus. The issues are inherent to the rules coming from the monadic laws (2) and (3), independently of the syntactic representation of the calculus that internalizes them. The difficulty appears clearly if we want to follow a similar route to Plotkin (Reference Plotkin1975), as we discuss next.

-calculus. The issues are inherent to the rules coming from the monadic laws (2) and (3), independently of the syntactic representation of the calculus that internalizes them. The difficulty appears clearly if we want to follow a similar route to Plotkin (Reference Plotkin1975), as we discuss next.

Reduction vs. Evaluation. Following Felleisen (Reference Felleisen1988), reduction

![]() and evaluation

and evaluation

![]() of

of

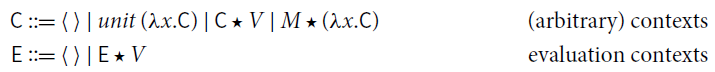

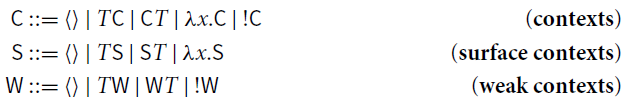

![]() can be defined as the closure of the reduction rules under arbitrary and evaluation contexts, respectively. Consider the following grammars:

can be defined as the closure of the reduction rules under arbitrary and evaluation contexts, respectively. Consider the following grammars:

where the hole

![]() can be filled by terms in

can be filled by terms in

![]() , only. Observe that the closure under evaluation context

, only. Observe that the closure under evaluation context

![]() is precisely weak reduction.

is precisely weak reduction.

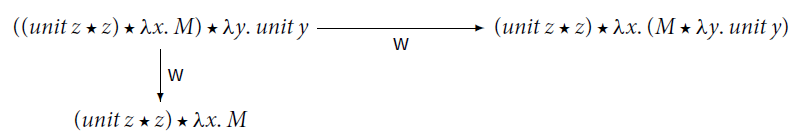

Weak reduction of

![]() , however, turns out to be nondeterministic, nonconfluent, and its normal forms are not unique. The following is a counterexample to all such properties – see Section 5 for further examples.

, however, turns out to be nondeterministic, nonconfluent, and its normal forms are not unique. The following is a counterexample to all such properties – see Section 5 for further examples.

Such an issue is not specific to the syntax of the computational core . The same phenomena show up with the let-notation, more commonly used in computational calculi. Here, evaluation, usually called sequencing, is the reduction defined by the following contexts (Filinski Reference Filinski1996; Jones et al. Reference Jones, Shields, Launchbury, Tolmach, MacQueen and Cardelli1998; Levy et al. Reference Levy, Power and Thielecke2003):

Examples similar to the one above can be reproduced. We give the details in Example 5.3.

1.1 Content and contributions

The focus of this paper is an operational analysis of two crucial properties of a term M:

(i). M returns a value ( i.e.

![]() , for some V value).

, for some V value).

(ii). M has a normal form ( i.e.

![]() , for some N

, for some N

![]() -normal).

-normal).

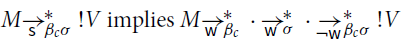

As in Accattoli et al. (Reference Accattoli, Faggian and Guerrieri2019), the cornerstone of our analysis are factorization results (also called semi-standardization in the literature): any reduction sequence can be reorganized so as to first performing specific steps and then everything else.

Via factorization, we show the key result (6), analogous to (5), relating reduction and evaluation:

We then analyze the property of having a normal form (normalization), and define a family of normalizing strategies, i.e., subreductions that are guaranteed to reach a normal form, if any exists.

On the Rewrite Theory of Computational Calculi. In this paper, we study the rewrite theory of a specific computational calculus, namely

![]() . We expose a number of issues, which we argue to be intrinsic to the monadic rules of computational calculi, namely associativity and identity. Indeed, the same issues which we expose in

. We expose a number of issues, which we argue to be intrinsic to the monadic rules of computational calculi, namely associativity and identity. Indeed, the same issues which we expose in

![]() , also appear in other computational calculi, as we discuss in Section 5, where we take as reference the calculus in Sabry and Wadler (Reference Sabry and Wadler1997), which we recall in Section 3.1. We expect that the solutions we propose for

, also appear in other computational calculi, as we discuss in Section 5, where we take as reference the calculus in Sabry and Wadler (Reference Sabry and Wadler1997), which we recall in Section 3.1. We expect that the solutions we propose for

![]() could be adapted also there.

could be adapted also there.

Surface Reduction. The form of weak reduction that we defined in the previous section (sequencing) is standard in the literature. In this paper, we study also a less strict form of weak reduction, namely surface reduction, which is less constrained and better behaved then sequencing. Surface reduction disallows reduction under the

![]() operator only, and not under abstractions. Intuitively, weak reduction does not act in the body of a function, while surface reduction does not act in the scope of return. As we discuss in Section 3.1, it can also be seen as a more natural extension of call-by-value weak reduction to a computational calculus.

operator only, and not under abstractions. Intuitively, weak reduction does not act in the body of a function, while surface reduction does not act in the scope of return. As we discuss in Section 3.1, it can also be seen as a more natural extension of call-by-value weak reduction to a computational calculus.

Surface reduction is well studied in the literature because it naturally arises when interpreting

![]() -calculus into linear logic, and indeed the name surface (which we take from Simpson Reference Simpson and Giesl2005) is reminiscent of a similar notion in calculi based on linear logic (Ehrhard and Guerrieri Reference Ehrhard and Guerrieri2016; Simpson Reference Simpson and Giesl2005). In Section 4, we will make explicit the correspondence with such calculi, showing that the

-calculus into linear logic, and indeed the name surface (which we take from Simpson Reference Simpson and Giesl2005) is reminiscent of a similar notion in calculi based on linear logic (Ehrhard and Guerrieri Reference Ehrhard and Guerrieri2016; Simpson Reference Simpson and Giesl2005). In Section 4, we will make explicit the correspondence with such calculi, showing that the

![]() operator (from the computational core) behaves exactly like a bang

operator (from the computational core) behaves exactly like a bang

![]() (from linear logic).

(from linear logic).

Identity and Associativity. Our analysis exposes the operational role of the rules associated to the monadic laws of identity and associativity.

(i) To compute a value, only

![]() steps are necessary.

steps are necessary.

(ii) To compute a normal form,

![]() steps do not suffice: associativity (i.e.,

steps do not suffice: associativity (i.e.,

![]() steps) is necessary.

steps) is necessary.

Hence, the rule associated to the identity law turns out to be operationally irrelevant.

Normalization. The study of normalization is more complex than that of evaluation and requires some sophisticated techniques. We highlight some specific contributions.

• We define two families of normalizing strategies in

![]() . The first one, quite constrained, relies on an iteration of weak reduction

. The first one, quite constrained, relies on an iteration of weak reduction

![]() . The second one, more liberal, is based on an iteration of surface reduction

. The second one, more liberal, is based on an iteration of surface reduction

![]() . The definition and proof of normalization is parametric on either.

. The definition and proof of normalization is parametric on either.

• The technical difficulty in the proofs for normalization comes from the fact that neither weak nor surface reduction is deterministic. To deal with that we rely on a fine quantitative analysis of the number of

![]() steps, which we carry-on when we study factorization in Section 6.

steps, which we carry-on when we study factorization in Section 6.

The most challenging proofs in the paper are those related to normalization via surface reduction. The effort is justified by the interest in a larger and more versatile strategy, which then does not induce a single abstract machine but subsumes several ones, each following a different reduction policy. It thus facilitates reasoning about optimization techniques and parallel implementation.

A Roadmap. Let us summarize the structure of the paper.

Section 2 contains the background notions which are relevant to our paper.

Section 3 gives the formal definition of the computational core

![]() and its reduction.

and its reduction.

In Sections 4 and 5, we analyze the properties of weak and surface reduction. We first study

![]() , and then we move to the whole

, and then we move to the whole

![]() , where associativity and identity also come to play, and issues appear.

, where associativity and identity also come to play, and issues appear.

In Section 6 we study several factorization results. The cornerstone of our construction is surface factorization (Theorem 6.1). We then further refine this result, first by postponing the

![]() steps which are not

steps which are not

![]() steps, and then with a form of weak factorization.

steps, and then with a form of weak factorization.

In Section 7, we study evaluation and analyze some relevant consequences of this result. We actually provide two different ways to deterministically compute a value. The first way is the one given by (6), via an evaluation context. The second way requires no contextual closure at all: simply applying

![]() - and

- and

![]() -rules will return a value, if possible.

-rules will return a value, if possible.

In Section 8 we study normalization and normalizing strategies.

Section 9 concludes with final discussions and related work.

2. Preliminaries

2.1 Basics on rewriting

We recall here some standard definitions and notations in rewriting that we shall use in this paper (see for instance Terese 2003 or Baader and Nipkow Reference Baader and Nipkow1998 for details).

Rewriting System. An abstract rewriting system (ARS) is a pair

![]() consisting of a set A and a binary relation

consisting of a set A and a binary relation

![]() on A whose pairs are written

on A whose pairs are written

![]() and called steps. A

and called steps. A

![]() -sequence from

-sequence from

![]() is a sequence

is a sequence

![]() of

of

![]() steps, where

steps, where

![]() or

or

![]() for some

for some

![]() ,

,

![]() for all

for all

![]() and

and

![]() (in particular, the sequence is empty for

(in particular, the sequence is empty for

![]() , i.e.

, i.e.

![]() ). We denote by

). We denote by

![]() (resp.

(resp.

![]() ;

;

![]() ) the transitive-reflexive (resp. reflexive; transitive) closure of

) the transitive-reflexive (resp. reflexive; transitive) closure of

![]() , and

, and

![]() stands for the transpose of

stands for the transpose of

![]() , that is,

, that is,

![]() if

if

![]() . We write

. We write

![]() for a

for a

![]() -sequence

-sequence

![]() of

of

![]() steps. If

steps. If

![]() are binary relations on

are binary relations on

![]() then

then

![]() denotes their composition, i.e.

denotes their composition, i.e.

![]() if there exists

if there exists

![]() such that

such that

![]() . We often set

. We often set

![]() .

.

A relation

![]() is deterministic if for each

is deterministic if for each

![]() there is at most one

there is at most one

![]() such that

such that

![]() . It is confluent if

. It is confluent if

![]() .

.

We say that

![]() is

is

![]() -normal (or a

-normal (or a

![]() -normal form, noted

-normal form, noted

![]() ) if

) if

![]() for all

for all

![]() , that is, there is no

, that is, there is no

![]() such that

such that

![]() ; and we say that

; and we say that

![]() has a normal form u if

has a normal form u if

![]() with u

with u

![]() -normal. Confluence implies that each

-normal. Confluence implies that each

![]() has unique normal form, if any exists.

has unique normal form, if any exists.

Normalization. Let

![]() be an ARS. In general, a term may or may not reduce to a normal form. And if it does, not all reduction sequences necessarily lead to a normal form. A term is weakly or strongly normalizing, depending on if it may or must reduce to normal form. If a term

be an ARS. In general, a term may or may not reduce to a normal form. And if it does, not all reduction sequences necessarily lead to a normal form. A term is weakly or strongly normalizing, depending on if it may or must reduce to normal form. If a term

![]() is strongly normalizing, any choice of steps will eventually lead to a normal form. However, if

is strongly normalizing, any choice of steps will eventually lead to a normal form. However, if

![]() is weakly normalizing, how do we compute a normal form? This is the problem tackled by normalization and normalizing strategies: by repeatedly performing only specific steps, a normal form will be computed, provided that

is weakly normalizing, how do we compute a normal form? This is the problem tackled by normalization and normalizing strategies: by repeatedly performing only specific steps, a normal form will be computed, provided that

![]() can reduce to any. We recall two important notions of normalization.

can reduce to any. We recall two important notions of normalization.

Definition 2.1 (Normalizing). Let

![]() be an ARS and

be an ARS and

![]() .

.

(1)

![]() is strongly

is strongly

![]() -normalizing (or terminating) if every maximal

-normalizing (or terminating) if every maximal

![]() -sequence from

-sequence from

![]() ends in a normal form ( i.e. ,

ends in a normal form ( i.e. ,

![]() has no infinite

has no infinite

![]() -sequence).

-sequence).

(2)

![]() is weakly

is weakly

![]() -normalizing (or just normalizing) if there exists a

-normalizing (or just normalizing) if there exists a

![]() -sequence from

-sequence from

![]() that ends in a

that ends in a

![]() -normal form ( i.e. , t has a

-normal form ( i.e. , t has a

![]() -normal form).

-normal form).

Reduction

![]() is strongly (resp. weakly) normalizing if so is every

is strongly (resp. weakly) normalizing if so is every

![]() . Reduction

. Reduction

![]() is uniformly normalizing if every weakly

is uniformly normalizing if every weakly

![]() -normalizing

-normalizing

![]() is also strongly

is also strongly

![]() -normalizing.

-normalizing.

Clearly, strong normalization implies weak normalization, and any deterministic reduction is uniformly normalizing.

A normalizing strategy for

![]() is a reduction strategy which, given a term

is a reduction strategy which, given a term

![]() , is guaranteed to reach its

, is guaranteed to reach its

![]() -normal form, if any exists.

-normal form, if any exists.

Definition 2.2 (Normalizing strategies) A subreduction

![]() is a normalizing strategy for

is a normalizing strategy for

![]() if

if

![]() has the same normal forms as

has the same normal forms as

![]() , and for all

, and for all

![]() , if t has a

, if t has a

![]() -normal form, then every maximal

-normal form, then every maximal

![]() -sequence from t ends in a

-sequence from t ends in a

![]() -normal form.

-normal form.

Note that in Definition 2.2,

![]() need not be deterministic, and

need not be deterministic, and

![]() and

and

![]() need not be confluent.

need not be confluent.

Factorization. In this paper, we will extensively use factorization results.

Definition 2.3 (Factorization, postponement). Let

![]() be an ARS with

be an ARS with

![]() . Relation

. Relation

![]() satisfies

satisfies

![]() -factorization, written

-factorization, written

![]() , if

, if

Relation

![]() postpones after

postpones after

![]() , written

, written

![]() , if

, if

It is an easy result that

![]() -factorization is equivalent to postponement, which is a more convenient way to express it.

-factorization is equivalent to postponement, which is a more convenient way to express it.

Lemma 2.4. The following are equivalent (for any two relations

![]() ):

):

(1) Postponement:

![]() .

.

(2) Factorization:

![]() .

.

Hindley (Reference Hindley1964) first noted that a local property implies factorization. Let

![]() . We say that

. We say that

![]() strongly postpones after

strongly postpones after

![]() , if

, if

Lemma 2.5 (Hindley Reference Hindley1964).

![]() implies

implies

![]() .

.

Observe that the following are special cases of strong postponement. The first one is linear in

![]() ; we refer to it as linear postponement. In the second one, recall that

; we refer to it as linear postponement. In the second one, recall that

![]() .

.

(1)

![]() .

.

(2)

![]() .

.

Linear variants of postponement can easily be adapted to quantitative variants, which allow us to “count the steps” and are useful to establish termination properties. We do this in Section 6.3.

Diamonds. We recall also another quantitative result, which we will use.

Fact 2.6 (Newman 1842). In an ARS

![]() , if

, if

![]() is quasi-diamond, then it has random descent, where quasi-diamond and random descent are defined below.

is quasi-diamond, then it has random descent, where quasi-diamond and random descent are defined below.

(1)

Quasi-Diamond: For all

![]() , if

, if

![]() , then

, then

![]() or

or

![]() for some

for some

![]() .

.

(2)

Random Descent: For all

![]() , all maximal

, all maximal

![]() -sequences from

-sequences from

![]() have the same number of steps, and all end in the same normal form, if any exists.

have the same number of steps, and all end in the same normal form, if any exists.

Clearly, if

![]() is quasi-diamond then it is confluent and uniformly normalizing.

is quasi-diamond then it is confluent and uniformly normalizing.

Postponement, Confluence and Commutation. Both postponement and confluence are commutation properties. Two relations

![]() and

and

![]() on

on

![]() commute if

commute if

So, a relation

![]() is confluent if and only if it commutes with itself. Postponement and commutation can be defined in terms of each other, simply taking

is confluent if and only if it commutes with itself. Postponement and commutation can be defined in terms of each other, simply taking

![]() for

for

![]() and

and

![]() for

for

![]() (

(

![]() postpones after

postpones after

![]() if and only if

if and only if

![]() commutes with

commutes with

![]() ). As propounded in Reference van Oostromvan Oostrom (2020b), this fact allows for proving postponement by means of decreasing diagrams (van Oostrom Reference van Oostrom1994, Reference van Oostrom2008). This is a powerful and general technique to prove commutation properties: it reduces the problem of showing commutation to a local test; in exchange for localization, diagrams need to be decreasing with respect to a labeling.

). As propounded in Reference van Oostromvan Oostrom (2020b), this fact allows for proving postponement by means of decreasing diagrams (van Oostrom Reference van Oostrom1994, Reference van Oostrom2008). This is a powerful and general technique to prove commutation properties: it reduces the problem of showing commutation to a local test; in exchange for localization, diagrams need to be decreasing with respect to a labeling.

Definition 2.7 (Decreasing). Let

![]() and

and

![]() . The pair of relations

. The pair of relations

![]() is decreasing if for some well-founded strict order

is decreasing if for some well-founded strict order

![]() on the set of labels

on the set of labels

![]() the following holds:

the following holds:

where

![]() for any

for any

![]() , and

, and

![]() .

.

Theorem 2.8 (Decreasing diagram van Oostrom Reference van Oostrom1994). A pair of relations

![]() commutes if it is decreasing.

commutes if it is decreasing.

Modularizing Confluence. A classic tool to modularize a proof of confluence is Hindley–Rosen lemma: the union of confluent reductions is itself confluent if they all commute with each other.

Lemma 2.9 (Hindley--Rosen). Let

![]() and

and

![]() be relations on a set A. If

be relations on a set A. If

![]() and

and

![]() are confluent and commute with each other, then

are confluent and commute with each other, then

![]() is confluent.

is confluent.

Like for postponement, strong commutation implies commutation.

Lemma 2.10 (Strong commutation Hindley Reference Hindley1964). Strong commutation (

![]() ) implies commutation.

) implies commutation.

2.2 Basics on the  -calculus

-calculus

We recall the syntax and some relevant notions of the

![]() -calculus, taking Plotkin’s call-by-value (CbV, for short)

-calculus, taking Plotkin’s call-by-value (CbV, for short)

![]() -calculus (Plotkin Reference Plotkin1975) as a concrete example.

-calculus (Plotkin Reference Plotkin1975) as a concrete example.

Terms and values are mutually generated by the grammars below.

where x ranges over a countably infinite set

![]() of variables. Terms of shape TS and

of variables. Terms of shape TS and

![]() are called applications and abstractions, respectively. In

are called applications and abstractions, respectively. In

![]() ,

,

![]() binds the occurrences of x in T. The set of free ( i.e. non-bound) variables of a term T is denoted by

binds the occurrences of x in T. The set of free ( i.e. non-bound) variables of a term T is denoted by

![]() . Terms are identified up to (clash-avoiding) renaming of their bound variables (

. Terms are identified up to (clash-avoiding) renaming of their bound variables (

![]() -congruence).

-congruence).

Reduction.

• Contexts (with exactly one hole

![]() ) are generated by the grammar

) are generated by the grammar

![]() stands for the term obtained from

stands for the term obtained from

![]() by replacing the hole with the term T (possibly capturing some free variables of T).

by replacing the hole with the term T (possibly capturing some free variables of T).

• A rule

![]() is a binary relation on

is a binary relation on

![]() , also noted

, also noted

![]() , writing

, writing

![]() ; R is called a

; R is called a

![]() -redex.

-redex.

• A reduction step

![]() is the closure of

is the closure of

![]() under contexts

under contexts

![]() . Explicitly, if

. Explicitly, if

![]() then

then

![]() if

if

![]() and

and

![]() , for some context

, for some context

![]() and some

and some

![]() .

.

The Call-by-Value

![]() -calculus. The CbV

-calculus. The CbV

![]() -calculus is the rewrite system

-calculus is the rewrite system

![]() , the set of terms

, the set of terms

![]() equipped with

equipped with

![]() -reduction

-reduction

![]() , that is, the contextual closure of the rule

, that is, the contextual closure of the rule

![]() :

:

where

![]() is the term obtained from T by capture-avoiding substitution of V for the free occurrences of x in T. Notice that here

is the term obtained from T by capture-avoiding substitution of V for the free occurrences of x in T. Notice that here

![]() -redexes can be fired only when the argument is a value.

-redexes can be fired only when the argument is a value.

Weak evaluation (which does not reduce in the body of a function) evaluates closed terms to values. In the literature of CbV, there are three main weak schemes: reducing from left to right, as defined by Plotkin (Reference Plotkin1975), from right to left (Leroy Reference Leroy1990), or in an arbitrary order (Lago and Martini Reference Lago and Martini2008). Left contexts

![]() , right contexts

, right contexts

![]() , and (arbitrary order) weak contexts

, and (arbitrary order) weak contexts

![]() are, respectively, defined by

are, respectively, defined by

Given a rule

![]() on

on

![]() , weak reduction

, weak reduction

![]() is the closure of

is the closure of

![]() under weak contexts

under weak contexts

![]() ; non-weak reduction

; non-weak reduction

![]() is the closure of

is the closure of

![]() under contexts

under contexts

![]() that are not weak. Left and non-left reductions (

that are not weak. Left and non-left reductions (

![]() and

and

![]() ), right and non-right reductions (

), right and non-right reductions (

![]() and

and

![]() ) are defined analogously.

) are defined analogously.

Note that

![]() and

and

![]() are deterministic, whereas

are deterministic, whereas

![]() is not.

is not.

CbV Weak Factorization. Factorization of

![]() allows for a characterization of the terms which reduce to a value. Convergence below is a remarkable consequence of factorization.

allows for a characterization of the terms which reduce to a value. Convergence below is a remarkable consequence of factorization.

Theorem 2.11 (Weak left factorization Plotkin Reference Plotkin1975).

(1) Left Factorization of

![]() :

:

![]() .

.

(2) Value Convergence:

![]() for some value V if and only if

for some value V if and only if

![]() for some value V’.

for some value V’.

The same results hold for

![]() and

and

![]() in place of

in place of

![]() .

.

Since the

![]() -normal forms of closed terms are exactly closed values, Theorem 2.11.2 means that every closed term T

-normal forms of closed terms are exactly closed values, Theorem 2.11.2 means that every closed term T

![]() -reduces to a value if and only if

-reduces to a value if and only if

![]() -reduction from T terminates.

-reduction from T terminates.

3. The Computational Core

We recall the syntax and the reduction of the computational core, shortly

![]() , introduced in de' Liguoro and Treglia (2020).

, introduced in de' Liguoro and Treglia (2020).

We use a notation slightly different from the one used in de' Liguoro and Treglia (2020) (and recalled in Section 1). Such a syntactical change is convenient both to present the calculus in a more familiar fashion, and to establish useful connections between

![]() and two well-known calculi, namely Simpson’s calculus (Simpson Reference Simpson and Giesl2005) and Plotkin’s call-by-value

and two well-known calculi, namely Simpson’s calculus (Simpson Reference Simpson and Giesl2005) and Plotkin’s call-by-value

![]() -calculus (Plotkin Reference Plotkin1975).

-calculus (Plotkin Reference Plotkin1975).

The equivalence between the current presentation of

![]() and de' Liguoro and Treglia (2020) is detailed in Appendix E.

and de' Liguoro and Treglia (2020) is detailed in Appendix E.

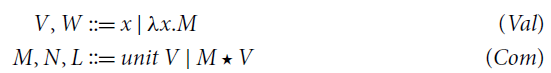

Definition 3.1 (Terms of

![]() ). Terms of the computational core consist of two sorts of expressions:

). Terms of the computational core consist of two sorts of expressions:

where x ranges over a countably infinite set

![]() of variables. We set

of variables. We set

![]() ;

;

![]() and

and

![]() are the sets of free variables occurring in V and M, respectively, and are defined as usual. Terms are identified up to clash-avoiding renaming of bound variables (

are the sets of free variables occurring in V and M, respectively, and are defined as usual. Terms are identified up to clash-avoiding renaming of bound variables (

![]() -congruence).

-congruence).

The unary operator

![]() is just another notation for

is just another notation for

![]() as presented in Section 1 it coerces a value V into a computation

as presented in Section 1 it coerces a value V into a computation

![]() , sometimes called returned value.

, sometimes called returned value.

Remark 3.2 (Application). A computation VM is a restricted form of application, corresponding to the term

![]() in Wadler (1995) (see Section 1) where there is no functional application. The reason is that the bind

in Wadler (1995) (see Section 1) where there is no functional application. The reason is that the bind

![]() represents an effectful form of application, such that by redefining the unit and bind one obtains an actual evaluator for the desired computational effects (Wadler 1995). This restriction may seem a strong limitation because we apparently cannot express iterated applications: (VM)N is not well formed in

represents an effectful form of application, such that by redefining the unit and bind one obtains an actual evaluator for the desired computational effects (Wadler 1995). This restriction may seem a strong limitation because we apparently cannot express iterated applications: (VM)N is not well formed in

![]() . However, application among computations is definable in

. However, application among computations is definable in

![]() :

:

Reduction. The operational semantics of

![]() puts together rules corresponding to the monad laws.

puts together rules corresponding to the monad laws.

Definition 3.3 (Reduction). Relation

![]() is the union of the following rules:

is the union of the following rules:

For every

![]() , reduction

, reduction

![]() is the contextual closure of

is the contextual closure of

![]() , where contexts are defined as follows:

, where contexts are defined as follows:

All reductions in Definition 3.3 are binary relations on

![]() , thanks to the proposition below.

, thanks to the proposition below.

Proposition 3.4. The set of computations

![]() is closed under substitution and reduction:

is closed under substitution and reduction:

(1) If

![]() and

and

![]() , then

, then

![]() .

.

(2) For every

![]() , if

, if

![]() , then:

, then:

![]() if and only if

if and only if

![]() .

.

Proof. Point 1 (formally proved by induction on M) holds because

![]() just replaces a value, x, with another value,

just replaces a value, x, with another value,

![]() . Point 2 is proved by induction on the context for

. Point 2 is proved by induction on the context for

![]() , using Point 1.

, using Point 1.![]()

The computational core

![]() is the rewriting system

is the rewriting system

![]() .

.

Proposition 3.5 (Confluence, de’ Liguoro and Treglia Reference de’ Liguoro and Treglia2020). Reduction

![]() is confluent.

is confluent.

Remark 3.6 (

![]() and

and

![]() ). The relation between

). The relation between

![]() of the computational core and

of the computational core and

![]() of Plotkin’s CbV

of Plotkin’s CbV

![]() -calculus is investigated in Appendix F. To give a taste of it, we show with an example how

-calculus is investigated in Appendix F. To give a taste of it, we show with an example how

![]() -reduction is simulated by

-reduction is simulated by

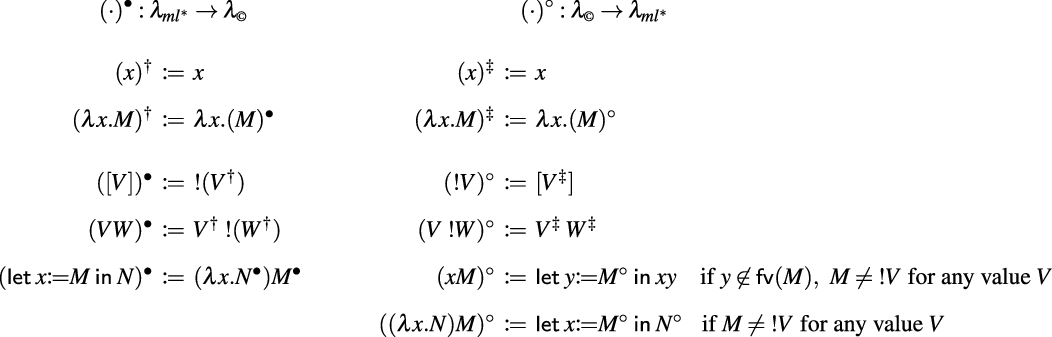

![]() -reduction, possibly with more steps. Since

-reduction, possibly with more steps. Since

![]() is a relation on

is a relation on

![]() (Proposition 3.4), no computation N will ever reduce to any value V; however, reduction to values is represented by a reduction

(Proposition 3.4), no computation N will ever reduce to any value V; however, reduction to values is represented by a reduction

![]() , where

, where

![]() is the coercion of value V into a computation. Let us assume that

is the coercion of value V into a computation. Let us assume that

![]() and

and

![]() . We have:

. We have:

Similarly, in Plotkin’s CbV

![]() -calculus, if

-calculus, if

![]() and

and

![]() , then

, then

![]() .

.

Surface and Weak Reduction. As we shall see in the next sections, there are two natural restrictions of

![]() : weak reduction

: weak reduction

![]() which does not fire in the scope of

which does not fire in the scope of

![]() , and surface reduction

, and surface reduction

![]() , which does not fire in the scope of

, which does not fire in the scope of

![]() . The former is the evaluation usually studied in CbV

. The former is the evaluation usually studied in CbV

![]() -calculus (Theorem 2.11). The latter is the natural evaluation in linear logic, and in Simpson’s calculus, whose relation with

-calculus (Theorem 2.11). The latter is the natural evaluation in linear logic, and in Simpson’s calculus, whose relation with

![]() we discuss in Section 4.

we discuss in Section 4.

Surface and weak contexts are, respectively, defined by the grammars

For

![]() , weak reduction

, weak reduction

![]() is the closure of

is the closure of

![]() under weak contexts

under weak contexts

![]() , surface reduction

, surface reduction

![]() is its closure under surface contexts

is its closure under surface contexts

![]() . non-surface reduction

. non-surface reduction

![]() is the closure of

is the closure of

![]() under contexts

under contexts

![]() that are not surface. Similarly, nonweak reduction

that are not surface. Similarly, nonweak reduction

![]() is the closure of

is the closure of

![]() under contexts

under contexts

![]() that are not weak.

that are not weak.

Clearly,

![]() . Note that

. Note that

![]() is a deterministic relation, while

is a deterministic relation, while

![]() is not.

is not.

Example 3.7. To clarify the difference between surface and weak, let us consider the term

![]() , where

, where

![]() , and two different

, and two different

![]() steps from it. We underline the fired redex.

steps from it. We underline the fired redex.

Surface reduction can be seen as the natural counterpart of weak reduction in calculi with let-constructors or explicit substitutions, as we show in Section 3.1.

Remark 3.8 (Weak contexts). In the CbV

![]() -calculus (see Section 2.2,), weak contexts can be given in three forms, according to the order in which redexes that are not in the scope of abstractions are fired:

-calculus (see Section 2.2,), weak contexts can be given in three forms, according to the order in which redexes that are not in the scope of abstractions are fired:

![]() . When the grammar of terms is restricted to computations, the three coincide. So, in

. When the grammar of terms is restricted to computations, the three coincide. So, in

![]() there is only one definition of weak context, and weak, left and right reductions coincide.

there is only one definition of weak context, and weak, left and right reductions coincide.

In Sections 4 and 5, we analyze the properties of weak and surface reduction. We first study

![]() , and then we move to the whole

, and then we move to the whole

![]() , where

, where

![]() and

and

![]() also come to play.

also come to play.

Notation. In the rest of the paper, we adopt the following notation:

3.1 The computational core vs. computational calculi with let-notation

It is natural to compare the computational core

![]() with other untyped computational calculi, and wonder if the analysis of the rewriting theory of

with other untyped computational calculi, and wonder if the analysis of the rewriting theory of

![]() we present in this paper applies to them. There is indeed a rich literature on computational calculi refining Moggi’s

we present in this paper applies to them. There is indeed a rich literature on computational calculi refining Moggi’s

![]() (Moggi 1988 Reference Moggi1989, Reference Moggi1991), most of them use the let -constructor. A standard reference is Sabry and Wadler’s

(Moggi 1988 Reference Moggi1989, Reference Moggi1991), most of them use the let -constructor. A standard reference is Sabry and Wadler’s

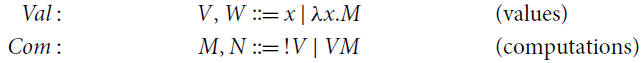

![]() (Sabry and Wadler Reference Sabry and Wadler1997, Section 5), which we display in Figure 1.

(Sabry and Wadler Reference Sabry and Wadler1997, Section 5), which we display in Figure 1.

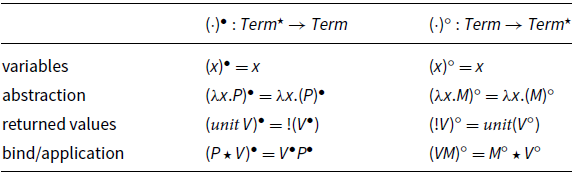

Figure 1.

![]() : Syntax and reduction.

: Syntax and reduction.

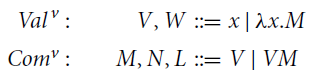

![]() has a two sorted syntax that separates values (i.e., variables and abstractions) and computations. The latter are either let-expressions (aka explicit substitutions, capturing monadic binding), or applications (of values to values), or coercions [V] of values V into computations ([V] is the notation for

has a two sorted syntax that separates values (i.e., variables and abstractions) and computations. The latter are either let-expressions (aka explicit substitutions, capturing monadic binding), or applications (of values to values), or coercions [V] of values V into computations ([V] is the notation for

![]() in Sabry and Wadler (Reference Sabry and Wadler1997), so it corresponds to

in Sabry and Wadler (Reference Sabry and Wadler1997), so it corresponds to

![]() in

in

![]() ).

).

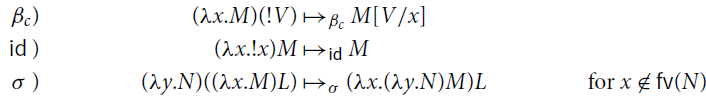

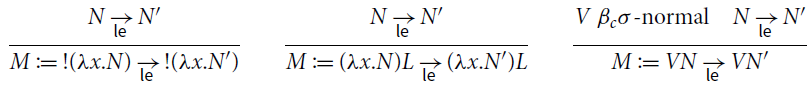

• The reduction rules in

![]() are the usual

are the usual

![]() and

and

![]() from Plotkin’s call-by-value

from Plotkin’s call-by-value

![]() -calculus (Plotkin Reference Plotkin1975), plus the oriented version of three monad laws:

-calculus (Plotkin Reference Plotkin1975), plus the oriented version of three monad laws:

![]() ,

,

![]() ,

,

![]() (see Figure 1).

(see Figure 1).

• Reduction

![]() is the contextual closure of the union of these rules.

is the contextual closure of the union of these rules.

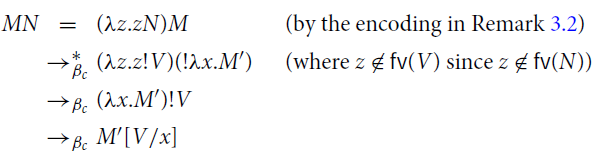

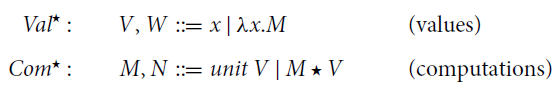

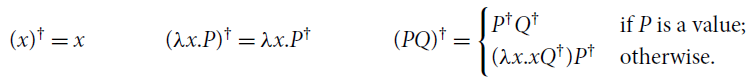

To state a correspondence between

![]() and

and

![]() , consider the translations in Figure 2: translation

, consider the translations in Figure 2: translation

![]() from

from

![]() to

to

![]() (resp.

(resp.

![]() from

from

![]() to

to

![]() ) is defined via the auxiliary encoding

) is defined via the auxiliary encoding

![]() (resp.

(resp.

![]() ) for values. The translations induce an equational correspondence by adding

) for values. The translations induce an equational correspondence by adding

![]() -equality to

-equality to

![]() . More precisely, let

. More precisely, let

![]() be the closure of the rule

be the closure of the rule

![]() (below left) under contexts

(below left) under contexts

![]() (below right).

(below right).

Figure 2. Translations between

![]() and

and

![]() .

.

Let

![]() be the reflexive transitive and symmetric closure of the reduction

be the reflexive transitive and symmetric closure of the reduction

![]() , and similarly for

, and similarly for

![]() with respect to

with respect to

![]() .

.

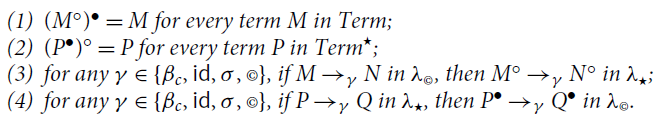

Proposition 3.9. The following hold:

(1)

![]() for every computation M in

for every computation M in

![]() ;

;

(2)

![]() for every computation P in

for every computation P in

![]() ;

;

(3)

![]() implies

implies

![]() , for every computations M, N in

, for every computations M, N in

![]() ;

;

(4)

![]() implies

implies

![]() , for every computations P, Q in

, for every computations P, Q in

![]() .

.

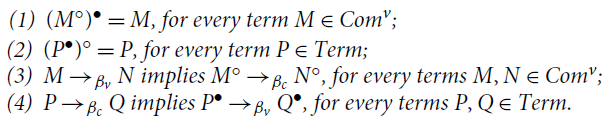

Proof. (1) By induction on M in

![]() .

.

(2) By induction on P in

![]() .

.

(3) We prove that

![]() implies

implies

![]() , by induction on the definition of

, by induction on the definition of

![]() .

.

(4) We prove that

![]() implies

implies

![]() , by induction on the definition of

, by induction on the definition of

![]() .

.![]()

Proposition 3.9 establishes a precise correspondence between the equational theories of

![]() (including

(including

![]() -conversion) and

-conversion) and

![]() . We had to consider

. We had to consider

![]() since

since

(where

![]() is the reflexive transitive and symmetric closure of

is the reflexive transitive and symmetric closure of

![]() ) and so condition (1) in Proposition 3.9 would not hold if we replace

) and so condition (1) in Proposition 3.9 would not hold if we replace

![]() with

with

![]() .

.

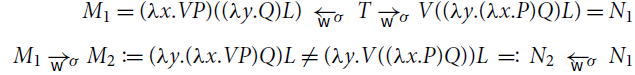

Remark 3.10 (Some intricacies). The correspondence between the reduction theories of

![]() (possibly including

(possibly including

![]() ) and

) and

![]() is not immediate and demands further investigations since, according to the terminology in Sabry and Wadler (1997), there is no Galois connection: Proposition 3.9 where we replace

is not immediate and demands further investigations since, according to the terminology in Sabry and Wadler (1997), there is no Galois connection: Proposition 3.9 where we replace

![]() with

with

![]() , and

, and

![]() with

with

![]() does not hold. More precisely:

does not hold. More precisely:

• the condition corresponding to Point 1, namely

![]() , fails since

, fails since

![]() when

when

![]() for any value V, see (7) above;

for any value V, see (7) above;

• the condition corresponding to Point 3, namely

![]() implies

implies

![]() , fails because

, fails because

![]() but

but

![]() .

.

Surface vs. Weak Reduction. In this paper, we study not only weak but also surface reduction, as the latter has better rewriting properties than the former. Surface reduction in

![]() can be seen as a natural counterpart to Plotkin’s weak reduction in calculi with the let-constructor, such as

can be seen as a natural counterpart to Plotkin’s weak reduction in calculi with the let-constructor, such as

![]() . Intuitively, a term of the form

. Intuitively, a term of the form

![]() can be interpreted as syntactic sugar for

can be interpreted as syntactic sugar for

![]() , however, in the expression

, however, in the expression

![]() , it is not obvious that weak reduction should avoid firing redexes in N. The distinction between

, it is not obvious that weak reduction should avoid firing redexes in N. The distinction between

![]() and let allows for a clean interpretation of weak reduction: it forbids reduction under

and let allows for a clean interpretation of weak reduction: it forbids reduction under

![]() , but not under let, which is compatible with surface reduction in

, but not under let, which is compatible with surface reduction in

![]() .

.

Technically, when we embed

![]() in the computational core via the translation

in the computational core via the translation

![]() in Figure 2, weak reduction

in Figure 2, weak reduction

![]() in

in

![]() (defined as the restriction of

(defined as the restriction of

![]() that does not fire under

that does not fire under

![]() ) corresponds to surface reduction

) corresponds to surface reduction

![]() in

in

![]() : if

: if

![]() then

then

![]() but not necessarily

but not necessarily

![]() . Indeed, consider

. Indeed, consider

![]() in

in

![]() , with

, with

![]() ; then

; then

![]() , which is not a weak step, in

, which is not a weak step, in

![]() .

.

4. The Operational Properties of

Since

![]() -reduction is the engine of any

-reduction is the engine of any

![]() -calculus, we start our analysis of the rewriting theory of

-calculus, we start our analysis of the rewriting theory of

![]() by studying the properties of

by studying the properties of

![]() and its surface restriction

and its surface restriction

![]() . As we show in this section,

. As we show in this section,

![]() and

and

![]() have already been studied in the literature: there is an exact correspondence with Simpson’s calculus (Simpson Reference Simpson and Giesl2005), which stems from Girard’s linear logic (Girard Reference Girard1987). Indeed, the operator

have already been studied in the literature: there is an exact correspondence with Simpson’s calculus (Simpson Reference Simpson and Giesl2005), which stems from Girard’s linear logic (Girard Reference Girard1987). Indeed, the operator

![]() in Simpson (Reference Simpson and Giesl2005) (modeling the bang operator from linear logic) behaves exactly as the the operator

in Simpson (Reference Simpson and Giesl2005) (modeling the bang operator from linear logic) behaves exactly as the the operator

![]() in

in

![]() (modeling

(modeling

![]() in computational calculi). It is easily seen that

in computational calculi). It is easily seen that

![]() , that is,

, that is,

![]() when considering only

when considering only

![]() reduction, is nothing but the restriction of the bang calculus to computations. Thus,

reduction, is nothing but the restriction of the bang calculus to computations. Thus,

![]() has the same operational properties as

has the same operational properties as

![]() . In particular, surface factorization and confluence for

. In particular, surface factorization and confluence for

![]() are inherited from the corresponding properties of

are inherited from the corresponding properties of

![]() in Simpson’s calculus.

in Simpson’s calculus.

The Bang Calculus. We call bang calculus the fragment of Simpson’s linear

![]() -calculus (Simpson Reference Simpson and Giesl2005) without linear abstraction. It has also been studied in Ehrhard and Guerrieri (Reference Ehrhard and Guerrieri2016), Guerrieri and Manzonetto (Reference Guerrieri and Manzonetto2019), Faggian and Guerrieri (Reference Faggian and Guerrieri2021), Guerrieri and Olimpieri (Reference Guerrieri and Olimpieri2021) (with the name bang calculus, which we adopt), and it is closely related to Levy’s Call-by-Push-Value (Levy Reference Levy1999).

-calculus (Simpson Reference Simpson and Giesl2005) without linear abstraction. It has also been studied in Ehrhard and Guerrieri (Reference Ehrhard and Guerrieri2016), Guerrieri and Manzonetto (Reference Guerrieri and Manzonetto2019), Faggian and Guerrieri (Reference Faggian and Guerrieri2021), Guerrieri and Olimpieri (Reference Guerrieri and Olimpieri2021) (with the name bang calculus, which we adopt), and it is closely related to Levy’s Call-by-Push-Value (Levy Reference Levy1999).

We briefly recall the bang calculus

![]() . Terms

. Terms

![]() are defined by

are defined by

Contexts (

![]() ) and surface contexts (

) and surface contexts (

![]() ) are generated by the grammars:

) are generated by the grammars:

The reduction

![]() is the closure under context

is the closure under context

![]() of the rule

of the rule

Surface reduction

![]() is the closure of the rule

is the closure of the rule

![]() under surface contexts

under surface contexts

![]() . non-surface reduction

. non-surface reduction

![]() is the closure of the rule

is the closure of the rule

![]() under contexts

under contexts

![]() that are not surface. Surface reduction factorizes

that are not surface. Surface reduction factorizes

![]() .

.

Theorem 4.1 (Surface factorization Simpson Reference Simpson and Giesl2005). In

![]() :

:

(1) Surface factorization of

![]() :

:

![]() .

.

(2) Bang convergence:

![]() for some term R if and only if

for some term R if and only if

![]() for some term S.

for some term S.

Surface reduction is nondeterministic, but satisfies the diamond property of Fact 2.6.

Theorem 4.2 (Confluence and diamond Simpson Reference Simpson and Giesl2005). In

![]() :

:

• reduction

![]() is confluent;

is confluent;

• reduction

![]() is quasi-diamond (and hence confluent).

is quasi-diamond (and hence confluent).

Restriction to Computations. The restriction of the bang calculus to computations, i.e.,

![]() is exactly the same as the fragment of

is exactly the same as the fragment of

![]() with

with

![]() -rule as unique reduction rule, i.e.

-rule as unique reduction rule, i.e.

![]() .

.

First, observe that the set of computations

![]() defined in Section 3 is a subset of the terms

defined in Section 3 is a subset of the terms

![]() , and moreover it is closed under

, and moreover it is closed under

![]() reduction (exactly as in Proposition 3.4). Second, observe that the restriction of contexts and surface contexts to computations, gives exactly the grammar defined in Section 3. Then

reduction (exactly as in Proposition 3.4). Second, observe that the restriction of contexts and surface contexts to computations, gives exactly the grammar defined in Section 3. Then

![]() and

and

![]() are in fact the same, and for every

are in fact the same, and for every

![]() :

:

•

![]() if and only if

if and only if

![]() .

.

•

![]() if and only if

if and only if

![]() .

.

Hence,

![]() in

in

![]() inherits the operational properties of

inherits the operational properties of

![]() , in particular surface factorization and the quasi-diamond property of

, in particular surface factorization and the quasi-diamond property of

![]() (Theorems 4.1 and 4.2). We will use both extensively.

(Theorems 4.1 and 4.2). We will use both extensively.

Fact 4.3 (Properties of

![]() and of its restriction). In

and of its restriction). In

![]() :

:

• reduction

![]() is nondeterministic and confluent;

is nondeterministic and confluent;

• reduction

![]() is quasi-diamond (and hence confluent);

is quasi-diamond (and hence confluent);

• reduction

![]() satisfies surface factorization:

satisfies surface factorization:

![]() .

.

•

![]() for some value V if and only if

for some value V if and only if

![]() for some value W.

for some value W.

In Sections 6 and 7, we shall generalize and refine the last two points, respectively, to reduction

![]() instead of

instead of

![]() .

.

5. Operational Properties of

, Weak and Surface Reduction

, Weak and Surface Reduction

We study evaluation and normalization in

![]() via factorization theorems (Section 6), which are based on both weak and surface reductions. The construction we develop in the next sections demands more work than one may expect. This is due to the fact that the rules induced by the monadic laws of associativity and identity make the analysis of the reduction properties nontrivial. In particular – as anticipated in the introduction – weak reduction does not factorize

via factorization theorems (Section 6), which are based on both weak and surface reductions. The construction we develop in the next sections demands more work than one may expect. This is due to the fact that the rules induced by the monadic laws of associativity and identity make the analysis of the reduction properties nontrivial. In particular – as anticipated in the introduction – weak reduction does not factorize

![]() , and has severe drawbacks, which we explain next. Surface reduction behaves better, but still present difficulties. In the rest of this section, we examine their respective properties.

, and has severe drawbacks, which we explain next. Surface reduction behaves better, but still present difficulties. In the rest of this section, we examine their respective properties.

5.1 Weak reduction: the impact of associativity and identity

Weak (left) reduction (Section 2.2) is one of the most common and studied way to implement evaluation in CbV, and more generally in calculi with effects.

Weak

![]() reduction

reduction

![]() , that is, the closure of

, that is, the closure of

![]() under weak contexts is a deterministic relation. However, when including the rules induced by the monadic equation of associativity and identity, the reduction is nondeterministic, nonconfluent, and normal forms are not unique.

under weak contexts is a deterministic relation. However, when including the rules induced by the monadic equation of associativity and identity, the reduction is nondeterministic, nonconfluent, and normal forms are not unique.

This is somehow surprising, given the prominent role of such a reduction in the literature of calculi with effects. Notice that the issues only come from

![]() and

and

![]() , not from

, not from

![]() . To resume:

. To resume:

(1) Reductions

![]() and

and

![]() are nondeterministic, but are both confluent.

are nondeterministic, but are both confluent.

(2)

![]() are nondeterministic, nonconfluent, and their normal forms are not unique, i.e., adding

are nondeterministic, nonconfluent, and their normal forms are not unique, i.e., adding

![]() , weak reductions lose confluence and uniqueness of normal forms.

, weak reductions lose confluence and uniqueness of normal forms.

Example 5.1 (Non-confluence). An example of the nondeterminism of

![]() is the following:

is the following:

Because of the

![]() rule, weak reductions

rule, weak reductions

![]() and

and

![]() are not confluent and their normal forms are not unique (Point 2 above). Indeed, consider

are not confluent and their normal forms are not unique (Point 2 above). Indeed, consider

![]() where

where

![]() and

and

![]() . Then,

. Then,

where

![]() and

and

![]() are different

are different

![]() -normal forms (clearly,

-normal forms (clearly,

![]() ).

).

Reduction

![]() (like

(like

![]() , Theorem 2.11.1) admits weak factorization (Fact F.2 in Appendix F)

, Theorem 2.11.1) admits weak factorization (Fact F.2 in Appendix F)

This is not the case for

![]() . The following counterexample is due to van Oostrom (Reference van Oostrom2020a).

. The following counterexample is due to van Oostrom (Reference van Oostrom2020a).

Example 5.2 (Nonfactorization van Oostrom Reference van Oostrom2020a). Reduction

![]() does not admit weak factorization. Consider the reduction sequence

does not admit weak factorization. Consider the reduction sequence

M is

![]() -normal and cannot reduce to

-normal and cannot reduce to

![]() by only performing

by only performing

![]() steps (note that

steps (note that

![]() is

is

![]() -normal), hence it is impossible to factorize the sequence from M to

-normal), hence it is impossible to factorize the sequence from M to

![]() as

as

![]() .

.

Let: Different Notation, Same Issues. We stress that the issues are inherent to the associativity and identity rules, not to the specific syntax of

![]() . Exactly the same issues appear in Sabry and Wadler’s

. Exactly the same issues appear in Sabry and Wadler’s

![]() (Sabry and Wadler Reference Sabry and Wadler1997) (see our Figure 1), as we show in the example below.

(Sabry and Wadler Reference Sabry and Wadler1997) (see our Figure 1), as we show in the example below.

Example 5.3 (Evaluation context in

![]() -notation). In

-notation). In

![]() -notation, the standard evaluation is sequencing (Filinski Reference Filinski1996; Jones et al. Reference Jones, Shields, Launchbury, Tolmach, MacQueen and Cardelli1998; Levy et al. Reference Levy, Power and Thielecke2003), which exactly corresponds to weak reduction in

-notation, the standard evaluation is sequencing (Filinski Reference Filinski1996; Jones et al. Reference Jones, Shields, Launchbury, Tolmach, MacQueen and Cardelli1998; Levy et al. Reference Levy, Power and Thielecke2003), which exactly corresponds to weak reduction in

![]() . The evaluation context for sequencing is

. The evaluation context for sequencing is

We write

![]() for the closure of the

for the closure of the

![]() rules (in Figure 1

) under contexts

rules (in Figure 1

) under contexts

![]() . We observe two problems, the first one due to the rule

. We observe two problems, the first one due to the rule

![]() , the second one to the rule

, the second one to the rule

![]() .

.

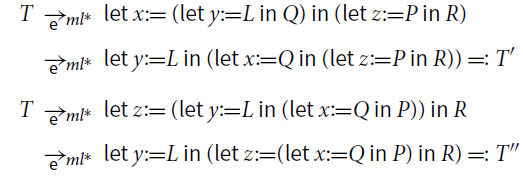

(1)

Non-confluence

. Because of the associative rule

![]() , reduction

, reduction

![]() is nondeterministic, nonconfluent, and normal forms are not unique. Consider the following term

is nondeterministic, nonconfluent, and normal forms are not unique. Consider the following term

There are two weak redexes in T, the overlined and the underlined one. Therefore,

where T’ are T” different

![]() -normal forms.

-normal forms.

(2)

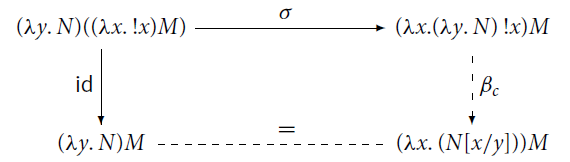

Non-factorization

. Because of the

![]() -rule, factorization w.r.t. sequencing does not hold. That is, a reduction sequence

-rule, factorization w.r.t. sequencing does not hold. That is, a reduction sequence

![]() cannot be reorganized as weak steps followed by non-weak steps. Consider the following variation on van Oostrom’s Example 5.2:

cannot be reorganized as weak steps followed by non-weak steps. Consider the following variation on van Oostrom’s Example 5.2:

M is

![]() -normal and cannot reduce to z z by only performing

-normal and cannot reduce to z z by only performing

![]() steps (note that

steps (note that

![]() is

is

![]() -normal), so it is impossible to factorize the sequence form M to zz as

-normal), so it is impossible to factorize the sequence form M to zz as

![]() .

.

5.2 Surface reduction

In

![]() , surface reduction is nondeterministic, but confluent, and well-behaving.

, surface reduction is nondeterministic, but confluent, and well-behaving.

Fact 5.4 (Nondeterminism). For

![]() ,

,

![]() is nondeterministic (because in general more than one surface redex can be fired).

is nondeterministic (because in general more than one surface redex can be fired).

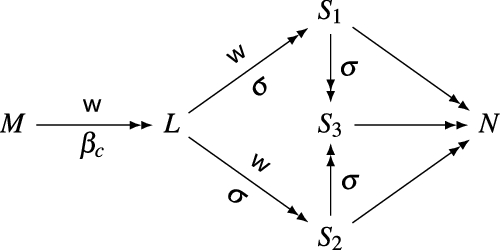

We now analyze confluence of surface reduction. We will use confluence of

![]() (Point 2 below) in Section 8 (Theorem 8.13).

(Point 2 below) in Section 8 (Theorem 8.13).

Proposition 5.5 (Confluence of surface reductions).

(1) Each of the reductions

![]() ,

,

![]() ,

,

![]() is confluent.

is confluent.

(2) Reductions

![]() and

and

![]() are confluent.

are confluent.

(3) Reduction

![]() is confluent.

is confluent.

(4) Reduction

![]() is not confluent.

is not confluent.

Proof. We rely on confluence of

![]() (by Theorem 4.2), and on Hindley–Rosen Lemma (Lemma 2.9). We prove commutation via strong commutation (Lemma 2.10). The only delicate point is the commutation of

(by Theorem 4.2), and on Hindley–Rosen Lemma (Lemma 2.9). We prove commutation via strong commutation (Lemma 2.10). The only delicate point is the commutation of

![]() with

with

![]() (Points 3 and 4).

(Points 3 and 4).

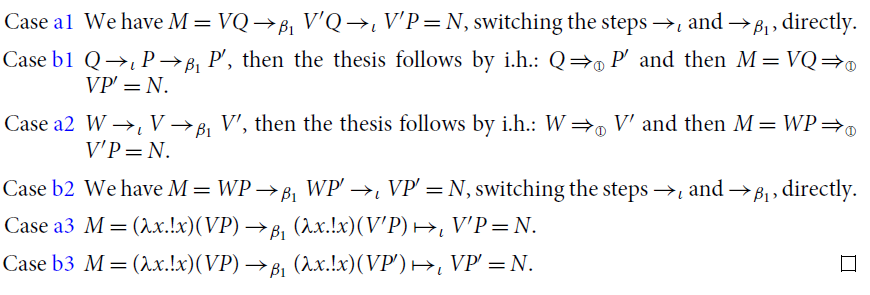

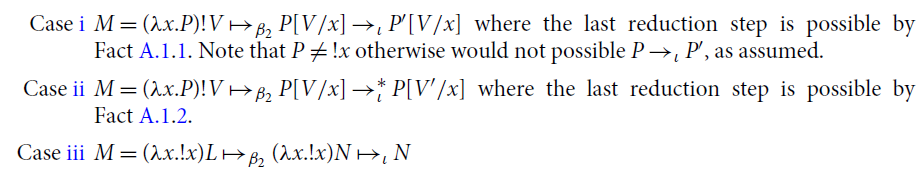

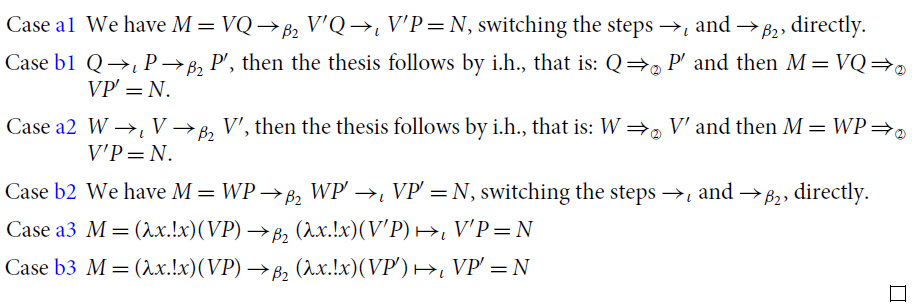

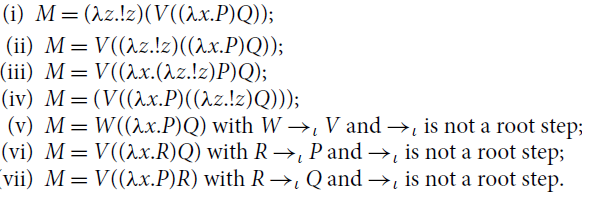

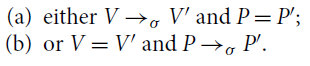

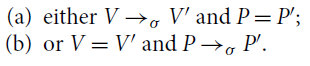

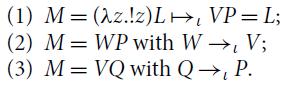

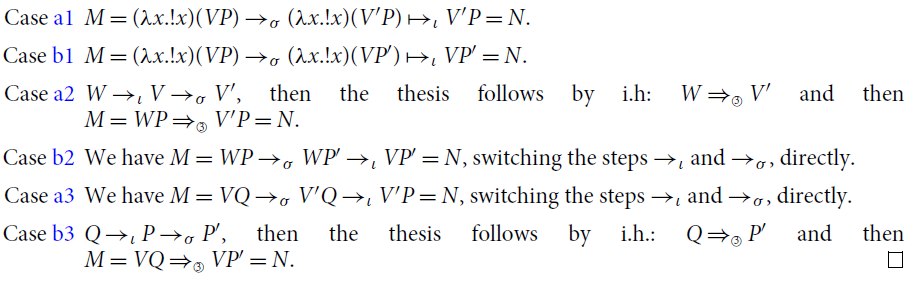

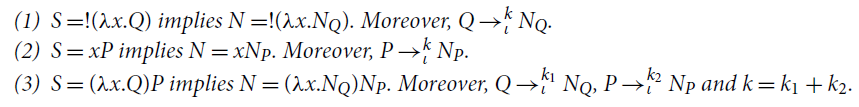

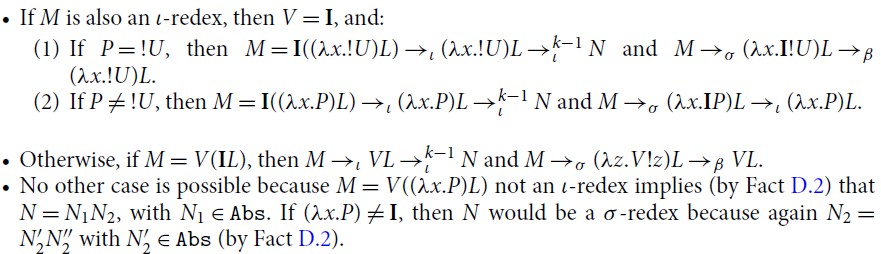

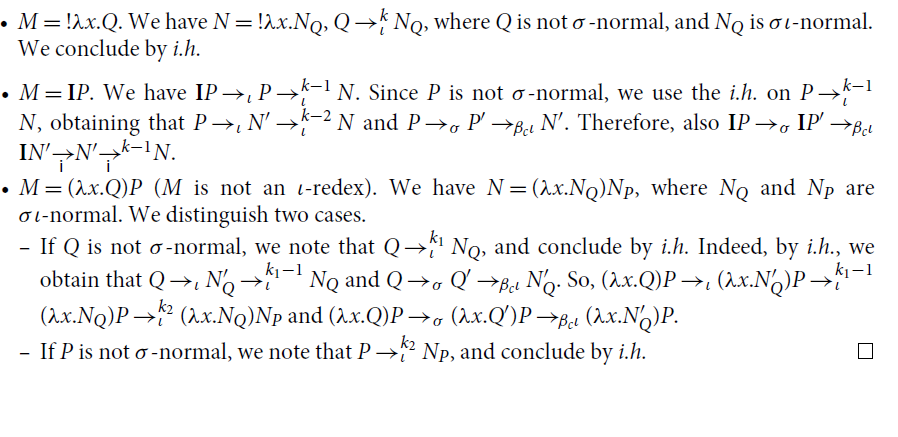

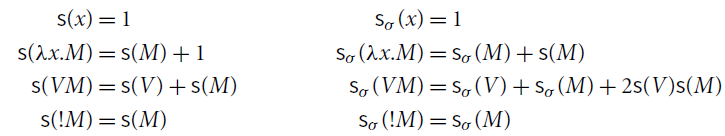

(1)

![]() is locally confluent and terminating, and so confluent;