Alternative medicine refers to a wide range of health practices that are not included in the healthcare system and are not considered conventional or scientific medicine (World Health Organization, 2022). A common feature of alternative therapies is the lack of scientific evidence for their effectiveness; popular examples include homeopathy (Hawke et al., 2018; Peckham et al., 2019) and reiki (Zimpel et al., 2020). Therefore, they can often be considered pseudoscientific (i.e., practices or beliefs that are presented as scientific but are unsupported by scientific evidence; Fasce and Picó, 2019). Understanding why some people rely on alternative medicine despite this lack of evidence is important, since its use can pose a threat to a person's health (Freckelton, 2012; Hellmuth et al., 2019; Lilienfeld, 2007), either by replacing evidence-based treatments (Chang et al., 2006; Johnson et al., 2018a, 2018b; Mujar et al., 2017) or by reducing their effectiveness (Awortwe et al., 2018).

In this research, we assume that people assess the effectiveness of a given treatment (whether scientific or alternative) by estimating the causal link between the treatment (potential cause) and symptom relief (outcome). To do so, people can draw on various sources of information, one of which is their own experience of the covariation between the treatment and the symptoms. However, this process is prone to bias. In particular, the causal illusion is the systematic error of perceiving a causal link between unrelated events that happen to occur in close temporal proximity (Matute et al., 2015). This cognitive bias could explain why people sometimes judge that completely ineffective treatments produce health benefits (Matute et al., 2011), particularly when both the administration of the treatment (i.e., the cause) and the relief of the symptoms (i.e., the outcome) occur with high frequency (Allan et al., 2005; Hannah and Beneteau, 2009; Musca et al., 2010; Perales et al., 2005; Vadillo et al., 2010).

Although the causal illusion is sensitive to the probabilities with which the potential cause and the outcome occur, which is why most theoretical analyses of the phenomenon have focused on how people acquire contingency information (e.g., Matute et al., 2019), the participant's prior beliefs could also play a role, and this is the focus of the current paper. In fact, an influence of prior beliefs seems common in other cognitive biases that enable humans to protect their worldviews. A good example is the classical phenomenon of belief bias (Evans et al., 1983; Klauer et al., 2000; Markovits and Nantel, 1989): people's tendency to accept the conclusion of a deductive inference on the basis of their prior knowledge and beliefs rather than the logical validity of the argument. For example, the syllogism 'All birds can fly. Eagles can fly. Therefore, eagles are birds' is invalid because the conclusion does not follow from the premises, but people often judge it as valid simply because the conclusion fits their previous knowledge. A specific form of belief bias is known as 'motivated reasoning' (Trippas et al., 2015), in which people exhibit a strong preference or motivation to arrive at a particular conclusion when making an inference (Kunda, 1990). Thus, individuals draw the conclusion they want to believe from the available evidence. To do this, people tend to dismiss information that is incongruent with their prior beliefs and to focus excessively on evidence that supports them, which resembles the well-known confirmation bias (Oswald and Grosjean, 2004).
Additionally, some evidence indicates that motivated reasoning can specifically affect causal inferences (Kahan et al., 2017), particularly when people learn about cause–effect relationships from their own experience (Caddick and Rottman, 2021).

If these cognitive biases reflect the effect of prior beliefs, it should not be surprising that causal illusions operate in a similar way. In fact, some experimental evidence suggests that this is the case. For example, Blanco et al. (2018) found that political ideology could modulate the causal illusion so that the resulting inference fit previous beliefs. In particular, their results suggest that participants developed a causal illusion selectively, favoring the conclusions that they were more inclined to believe from the beginning.

Accordingly, we predict that prior beliefs about science and pseudoscience could also bias causal inferences about treatments and their health outcomes. More specifically, we suggest that, when people attempt to assess the effectiveness of a pseudoscientific or scientific medical treatment, their causal inferences may be biased to suit their prior beliefs and attitudes about both types of treatment. In line with this idea, a recent study by Torres et al. (2020) explicitly examined the relationship between causal illusion in the laboratory and belief in pseudoscience. These authors designed a causal illusion task with a pseudoscience-framed scenario: participants had to decide whether an infusion made from an Amazonian plant (i.e., a fictitious natural remedy that mimicked the characteristics of alternative medicine) was effective at alleviating headache. They found that participants who held stronger pseudoscientific beliefs (assessed by means of a questionnaire) showed a greater degree of causal illusion, overestimating the ability of the herbal tea to alleviate the headache. Importantly, this experiment contained only one cover story, framed in a pseudoscientific scenario.

We argue that the results observed by Torres et al. (2020) admit two possible interpretations. The first is that people who believed in pseudoscience were more prone to causal illusion in general, regardless of the cover story of the task. The second, based on the effect observed by Blanco et al. (2018) in the context of political ideology, is that the illusion is produced to confirm previous beliefs; that is, participants who had a positive attitude toward alternative medicine were more inclined to believe that the infusion was relieving the headache, and the causal illusion developed to favor this conclusion. Because Torres et al.'s experiment used only one pseudoscientific scenario, it cannot distinguish between the two interpretations. Further research is therefore necessary to analyze how individual differences in pseudoscientific beliefs modulate the intensity of the causal illusion, and whether this modulation interacts with the scenario so that prior beliefs are reinforced.

To sum up, the present research aims to fill this gap by assessing participants' attitudes toward pseudoscience and then presenting an experimental task in which they are asked to judge the effectiveness of two fictitious medical treatments: one presented as conventional/scientific, and the other as alternative/pseudoscientific. Neither treatment was causally related to recovery. Our main hypothesis is that the intensity of the observed causal illusion will depend on the interaction between previous beliefs about pseudoscience and the type of medicine presented. Specifically, we expect that:

• Participants with less positive previous beliefs about pseudoscience will develop weaker illusions in the pseudoscientific scenario than in the scientific scenario, because for them the conclusion that an alternative medicine works is not very credible given their prior beliefs.

• Participants with more positive beliefs about pseudoscience could either show the opposite pattern (finding the conclusion that the pseudoscientific medicine works more believable than the conclusion that the scientific medicine works), which would be consistent with the studies by Blanco et al. (2018) on political ideology, or show similar levels of (strong) causal illusion for both treatments, which would suggest that pseudoscientific beliefs are associated with stronger causal illusions in general, as previously suggested (Torres et al., 2020).

1. Method

1.1. Ethics statement

The Ethical Review Board of the University of Deusto reviewed and approved the methods reported in this article, and the study was conducted according to the approved guidelines.

1.2. Participants

A sample of 98 participants (38.8% women; M age = 23.5 years; SD = 9.45) was recruited via the Prolific Academic platform. A sensitivity analysis conducted in G*Power (Erdfelder et al., 2009) revealed that this sample size allows detecting an effect as small as b = .27 with 80% power for the interaction between a two-level within-participants factor and a covariate. Participation was offered only to applicants in Prolific Academic's pool who spoke English fluently and had not taken part in previous studies carried out by our research team. Participants were compensated at a rate of £6.50 per hour, and the experiment lasted 16.6 minutes on average.

1.3. Materials

The experiment was a JavaScript program embedded in an HTML document and styled with CSS, so participants could complete it online from any computer with an Internet connection and a web browser.

We measured pseudoscientific beliefs with the Pseudoscience Endorsement Scale (PES; Appendix A). The questionnaire, designed by Torres et al. (2020), consists of 20 items referring to popular pseudoscientific myths and practices, for example, 'Homeopathic remedies are effective as a complement to the treatment of some diseases'. Participants rated their agreement with each statement on a scale from 1 (Strongly disagree) to 7 (Strongly agree). The authors of the questionnaire report high internal consistency of item scores (α = .89).

We complemented this assessment with three questions about alternative therapies taken from the Survey on the Social Perception of Science and Technology conducted by the Spanish Foundation for Science and Technology, FECYT (2018; Appendix B). The first two questions recorded whether the participant had used alternative medicine in the past and how it was used (i.e., as a complement to or as a replacement for conventional treatments). The third question measured the level of trust in six different treatments on a scale from 1 (Nothing) to 5 (A lot). Three of these treatments were scientific (childhood vaccines, antibiotics, and chemotherapy) and three were pseudoscientific (acupuncture, reiki, and homeopathy), with the purpose of identifying participants who place relatively more trust in pseudoscientific than in scientific procedures, or vice versa.

1.4. Procedure

We adapted the standard trial-by-trial causal illusion task that has been widely used in our laboratory (Matute et al., 2015) to create two scenarios that differed in their instructional framing: an alternative medicine scenario and a conventional medicine scenario. In this task, participants had to judge the effectiveness of two different fictitious treatments for healing the crises caused by two different fictitious syndromes. All participants completed both scenarios sequentially, and the order of the scenarios was randomized for each participant.

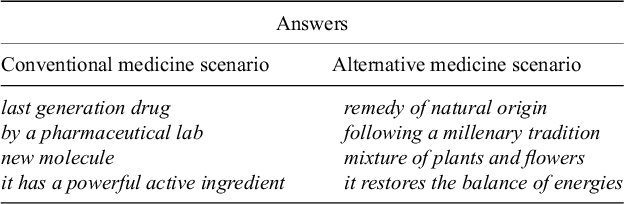

In each scenario, one of the two fictitious syndromes was presented, followed by the description of one of the two fictitious medicines. The characterization of each medicine as conventional or alternative was intended to capture and highlight some of the aspects typically associated with scientific and pseudoscientific treatments, respectively (Appendix C). Immediately after the instructions describing each medicine, we included a brief sentence completion task (Appendix D). Participants had to choose from a list the words they judged most suitable to complete several sentences describing the medicine, for example, 'Batatrim is composed of a… (a) mixture of plants and flowers, (b) new molecule'. This completion task had a twofold purpose. On the one hand, it required participants to process the meaning of the medicine description more deeply, forcing them to read the instructions carefully. On the other hand, their choices indicated how they had perceived the medicine at the beginning of each phase, thus allowing us to check the success of the instructional manipulation. This second function was complemented by a question that immediately followed the sentence completion task, asking participants to place the fictitious medicine on a scale whose poles were Alternative Medicine and Conventional Medicine. Thus, if the description succeeded in creating the impression of an alternative medicine, for instance, we would expect the participant to place the medicine closer to the 'Alternative Medicine' pole.

Next, participants viewed a sequence of 40 trials corresponding to the medical records of fictitious patients suffering from the syndrome. Each record showed whether a given patient had taken the medicine (i.e., the potential cause) and whether the patient's crisis was healed (i.e., the outcome). There were therefore four trial types: (a) the patient took the medicine and the crisis was healed (16 of 40 trials); (b) the patient took the medicine and the crisis was not healed (4 of 40 trials); (c) the patient did not take the medicine but the crisis was nonetheless healed (16 of 40 trials); and (d) the patient did not take the medicine and the crisis was not healed (4 of 40 trials). The order of the 40 trials was randomized for each participant (i.e., all possible sequences were equally probable). In both scenarios, the probability of overcoming the crisis after taking the medicine was the same as the probability of overcoming it without taking the medicine, $P(\text{Healing} \mid \text{Medicine}) = P(\text{Healing} \mid \neg\text{Medicine}) = .80$, meaning that the medicine was useless for treating the disease.
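To make the null contingency concrete, the following Python sketch expands the four trial-type counts above into one randomized 40-trial sequence and computes the two conditional probabilities and their difference, the contingency index ΔP = P(Healing | Medicine) − P(Healing | ¬Medicine). It is an illustration under these stated counts, not the authors' JavaScript task code; the names `TRIAL_COUNTS`, `make_sequence`, and `contingency` are hypothetical.

```python
import random
from fractions import Fraction

# Trial-type counts from the design (40 trials per scenario).
# Key: (took_medicine, healed); value: number of trials of that type.
TRIAL_COUNTS = {
    (True, True): 16,    # (a) medicine taken, crisis healed
    (True, False): 4,    # (b) medicine taken, crisis not healed
    (False, True): 16,   # (c) no medicine, crisis healed anyway
    (False, False): 4,   # (d) no medicine, crisis not healed
}


def make_sequence(counts, rng):
    """Expand the counts into individual records and shuffle them uniformly at random."""
    trials = [trial_type for trial_type, n in counts.items() for _ in range(n)]
    rng.shuffle(trials)
    return trials


def contingency(trials):
    """Return P(H|M), P(H|not M), and Delta-P = P(H|M) - P(H|not M) as exact fractions."""
    healed_with = [healed for took, healed in trials if took]
    healed_without = [healed for took, healed in trials if not took]
    p_h_given_m = Fraction(sum(healed_with), len(healed_with))
    p_h_given_not_m = Fraction(sum(healed_without), len(healed_without))
    return p_h_given_m, p_h_given_not_m, p_h_given_m - p_h_given_not_m


sequence = make_sequence(TRIAL_COUNTS, random.Random(2024))
p_h_m, p_h_not_m, delta_p = contingency(sequence)
print(len(sequence), p_h_m, p_h_not_m, delta_p)  # 40 4/5 4/5 0
```

Whatever order the shuffle produces, ΔP is exactly zero, which is why any judgment of effectiveness above zero can be read as a causal illusion.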

On each trial, just after being shown whether the patient had taken the treatment, participants predicted whether the patient would overcome the crisis by giving a yes/no response. This question is frequently included in causal illusion experiments because it helps participants stay focused on the task (Vadillo et al., 2005). The outcomes (i.e., the healings of the crises) did not depend on participants' responses; they were pre-programmed to occur in random order.

Finally, after observing the sequence of medical records for one scenario, participants were asked to judge the extent to which they thought the medicine had been effective in healing the patients' crises. They rated effectiveness on a scale from 0 (Definitely not effective) to 100 (Definitely effective), with 50 (Somewhat effective) at the midpoint. Once the judgment for the first scenario had been recorded, the second scenario followed with an identical procedure (instructions, manipulation checks, training phase, and judgment).

Once the two scenarios of the contingency learning task were completed, participants answered the PES (Torres et al., 2020) and the questions concerning alternative therapies from the Survey on the Social Perception of Science and Technology (FECYT, 2018), in random order.

2. Results

2.1. Manipulation check

Before moving to the main analyses, we checked whether the instructional manipulation was successful. First, we verified that almost all participants completed the sentences correctly (above 92% correct answers in both scenarios), so we did not exclude any data on this criterion. Second, we examined where participants placed each medicine along the Alternative Medicine–Conventional Medicine dimension, with the two poles of the scale coded as 0 and 100, respectively. On average, participants perceived the two medicines as markedly different from each other, as intended, placing the conventional medicine at 78.9 (SD = 24.1) and the alternative medicine at 22.7 (SD = 23.9) (t(97) = 14.4; p < .001; d = 1.45). Moreover, their interpretation of the medicines went in the direction we aimed to induce through the instructions in all cases except for six participants (see Footnote 1).
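The effect sizes reported for the within-participants t-tests (d = 1.45 here, and d = 1.43 for the trust comparison in Section 2.3) are consistent with Cohen's d for paired data, d_z = t/√n, with n = 98. The text does not state which variant of d was computed, so the sketch below is an assumption that happens to reproduce both reported values; the function name is hypothetical.

```python
import math


def cohens_dz_from_t(t, n):
    """Cohen's d for paired data (d_z) from a paired-samples t statistic.

    For paired data, t = mean_diff / (sd_diff / sqrt(n)) and
    d_z = mean_diff / sd_diff, hence d_z = t / sqrt(n).
    """
    return t / math.sqrt(n)


N = 98  # participants; a paired t-test then has df = N - 1 = 97

print(round(cohens_dz_from_t(14.4, N), 2))  # manipulation check -> 1.45
print(round(cohens_dz_from_t(14.2, N), 2))  # trust, conventional vs. alternative -> 1.43
```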

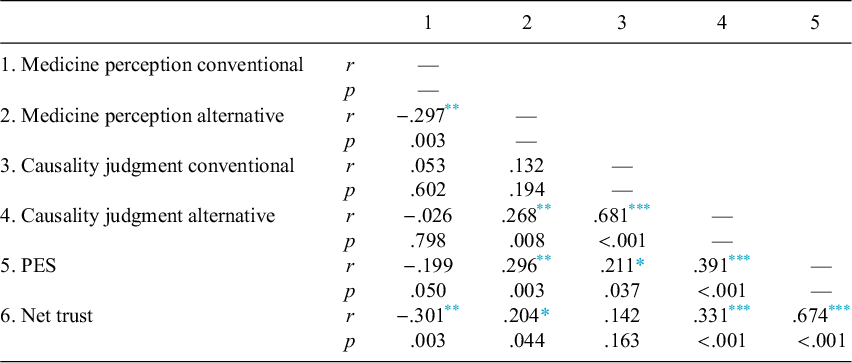

2.2. Pseudoscientific beliefs and causal illusion

In our sample, the mean PES score was 3.40 (SD = 1.01) on a scale from 1 to 7, where higher scores correspond to stronger pseudoscientific beliefs. This score was similar to the mean of 3.30 (SD = 1.02) observed by Torres et al. (2020) in a sample of university students. PES item scores showed high internal consistency in our sample (α = .91). (See also Table E1 in Appendix E for a correlation matrix of all the main variables of interest.)

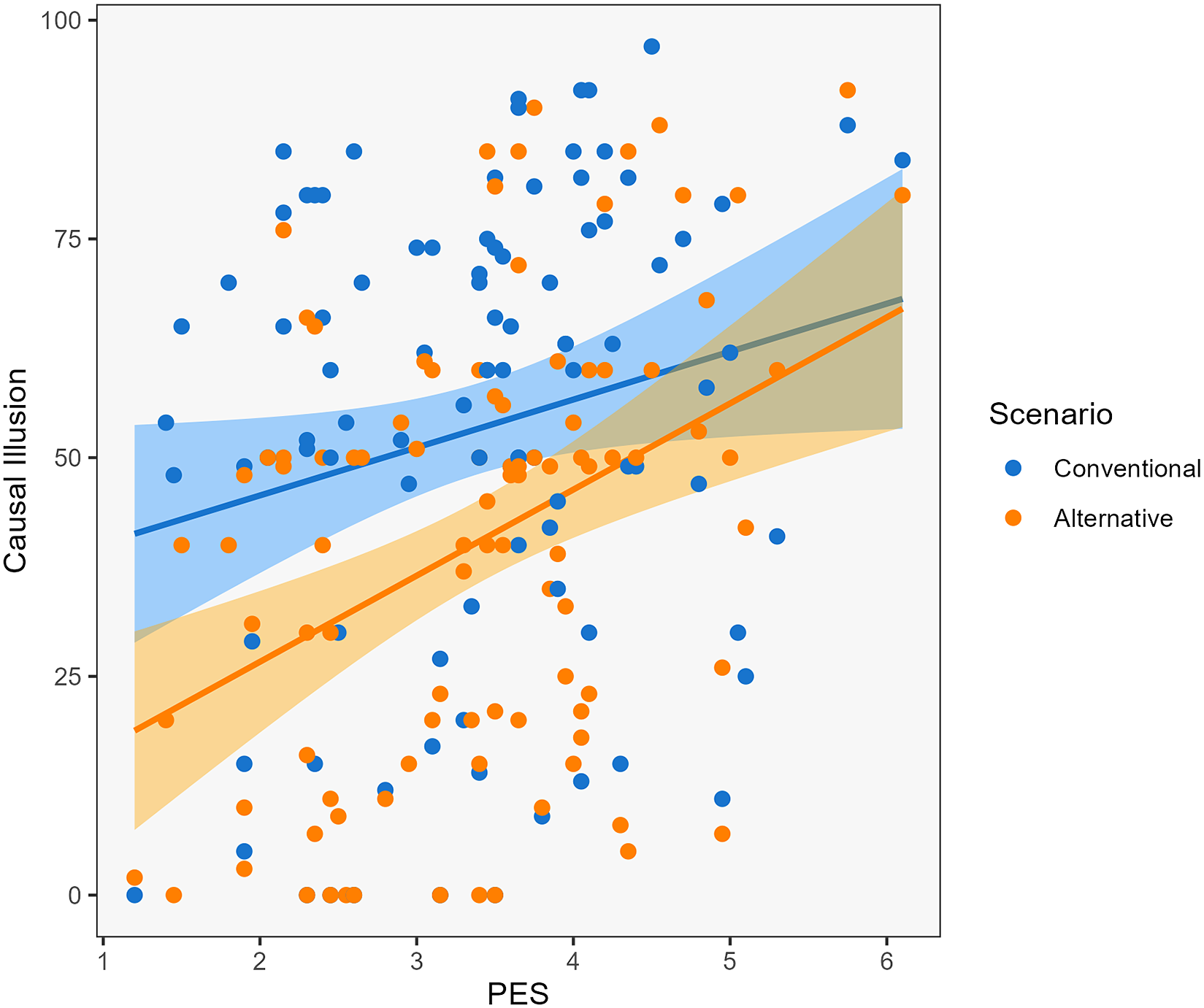

First, we assessed the main effects of scenario and PES scores on judgments. Participants gave higher judgments overall in the conventional scenario than in the alternative scenario (F(1, 97) = 38.00; p < .001), and their judgments were generally higher the higher their PES score (F(1, 97) = 11.5; p < .001). Nonetheless, our original prediction was that causal illusion would vary as a function of the interaction between the description of the medicine and the pseudoscientific beliefs held by the participants. To test this prediction, we conducted a repeated-measures analysis of covariance (ANCOVA) with scenario (alternative medicine vs. conventional medicine) as a within-subjects factor and PES scores as a covariate (we had previously ruled out a possible influence of the order of the scenarios on the results; see Footnote 2). We expected an interaction between scenario and PES scores such that participants with low PES scores would be more resistant to the illusion in the alternative medicine scenario than in the conventional medicine scenario, whereas participants with high PES scores could show the opposite pattern. The analysis revealed a significant interaction between scenario and PES scores (F(1, 96) = 4.58; p = .035; η²p = .046). The scatterplot in Figure 1 illustrates the relationship between causal illusion and PES scores. Participants who believed more in pseudoscience generally exhibited a stronger causal illusion, but this positive correlation was stronger in the alternative medicine scenario (r = .392; p < .001) than in the conventional medicine scenario (r = .211; p = .037). This suggests, in principle, that causal illusions can be modulated by the combination of scenario and prior beliefs.

Figure 1 Scatterplot depicting the correlation between causal illusion and the Pseudoscience Endorsement Scale (PES) for each of the two scenarios of the contingency learning task.
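With only two levels of the within-subjects factor, the scenario × covariate interaction in a repeated-measures ANCOVA can be tested equivalently by regressing each participant's difference score (conventional minus alternative judgment) on the covariate: the interaction F(1, n − 2) equals the squared t statistic of that slope, which matches the 96 error degrees of freedom reported with n = 98. The sketch below is a minimal standard-library illustration of this equivalence, not the software the authors used; the function name and the toy numbers are hypothetical.

```python
import math


def interaction_via_difference_scores(conventional, alternative, covariate):
    """Scenario x covariate interaction for a two-level within-subject factor.

    Regresses d = conventional - alternative on the covariate by ordinary least
    squares. The slope equals the difference between the covariate's slopes in
    the two scenarios, and F(1, n - 2) for the interaction equals t**2 of the slope.
    Returns (slope, t, F, (df1, df2)).
    """
    diffs = [c - a for c, a in zip(conventional, alternative)]
    n = len(diffs)
    mean_x = sum(covariate) / n
    mean_d = sum(diffs) / n
    sxx = sum((x - mean_x) ** 2 for x in covariate)
    sxd = sum((x - mean_x) * (d - mean_d) for x, d in zip(covariate, diffs))
    slope = sxd / sxx
    intercept = mean_d - slope * mean_x
    sse = sum((d - (intercept + slope * x)) ** 2 for x, d in zip(covariate, diffs))
    se_slope = math.sqrt(sse / (n - 2) / sxx)
    t = slope / se_slope
    return slope, t, t ** 2, (1, n - 2)


# Toy numbers for five hypothetical participants (not study data).
pes = [1, 2, 3, 4, 5]
conventional = [52, 54, 55, 54, 55]
alternative = [50, 50, 50, 50, 50]
slope, t, f, df = interaction_via_difference_scores(conventional, alternative, pes)
print(f"slope = {slope:.2f}, F{df} = {f:.2f}")  # slope = 0.60, F(1, 3) = 4.50
```

A non-zero slope means the gap between the two scenarios changes with the covariate, which is exactly what the interaction term tests; the covariate's main effect would instead correspond to regressing the mean of the two judgments on the covariate.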

Still, because this interaction was not a complete crossover (both correlations have the same sign), it could potentially be attributable to a ceiling effect that reduces the average causal judgments given by high PES scorers in the conventional scenario, thus creating a 'removable' interaction (Wagenmakers et al., 2012). Since this potential problem arises because PES correlates positively with causal judgments in both scenarios, we addressed it by examining the interaction using only the PES items that correlated least with the judgments. We removed the 10 items that correlated most strongly with the sum of the two causal judgments (all with r > .20) and repeated the analyses, finding similar (in fact, stronger) results for the interaction (F(1, 96) = 5.34; p = .023; η²p = .05). This interaction, obtained with only half of the items in the scale, cannot in principle be attributed to ceiling effects.
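The item-screening step can be sketched as follows, assuming that items are ranked by their signed Pearson correlation with the sum of the two causal judgments and that the reduced scale is rescored as the mean of the remaining items; both are assumptions about details the text does not spell out, and the function names are hypothetical. In the study, 10 of the 20 PES items were dropped, and the rescored values then served as the covariate in the same repeated-measures ANCOVA.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation; raises ZeroDivisionError if either variable is constant."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def screen_items(item_scores, judgments_alt, judgments_conv, n_drop):
    """Drop the n_drop items most positively correlated with the summed judgments.

    item_scores[i][p] is participant p's rating on item i.
    Returns (indices of kept items, each participant's mean over kept items).
    """
    summed = [a + c for a, c in zip(judgments_alt, judgments_conv)]
    rs = [pearson_r(item, summed) for item in item_scores]
    by_r = sorted(range(len(item_scores)), key=lambda i: rs[i], reverse=True)
    dropped = set(by_r[:n_drop])
    kept = [i for i in range(len(item_scores)) if i not in dropped]
    rescored = [
        sum(item_scores[i][p] for i in kept) / len(kept) for p in range(len(summed))
    ]
    return kept, rescored


# Toy example: 3 hypothetical items, 4 hypothetical participants, drop 1 item.
items = [[1, 2, 3, 4], [4, 3, 2, 1], [1, 3, 3, 1]]
kept, rescored = screen_items(items, [10, 20, 30, 40], [10, 20, 30, 40], n_drop=1)
print(kept, rescored)  # [1, 2] [2.5, 3.0, 2.5, 1.0]
```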

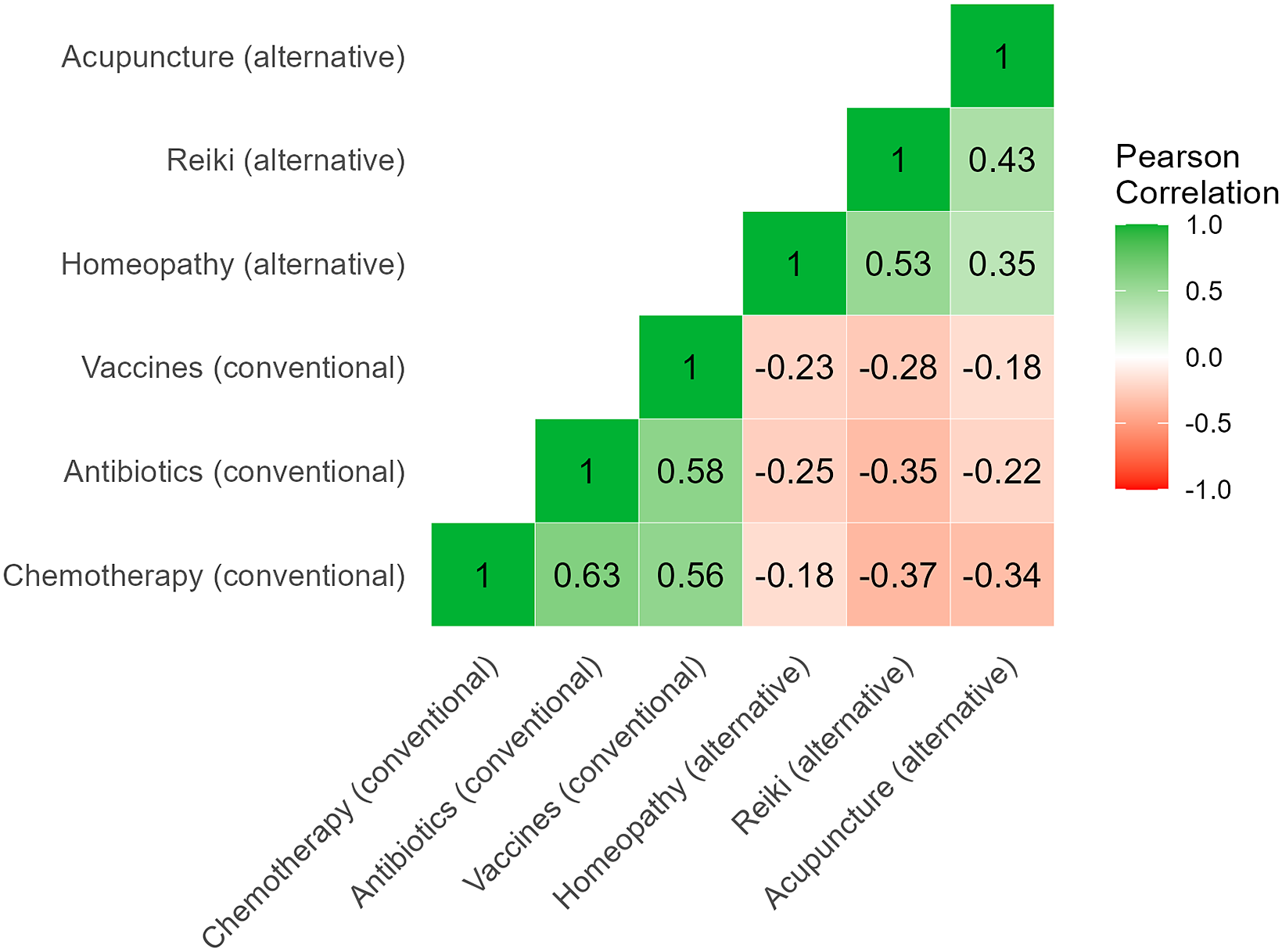

2.3. Trust in alternative therapies and causal illusion

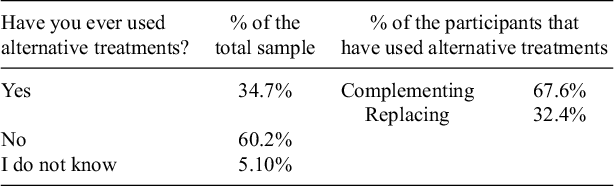

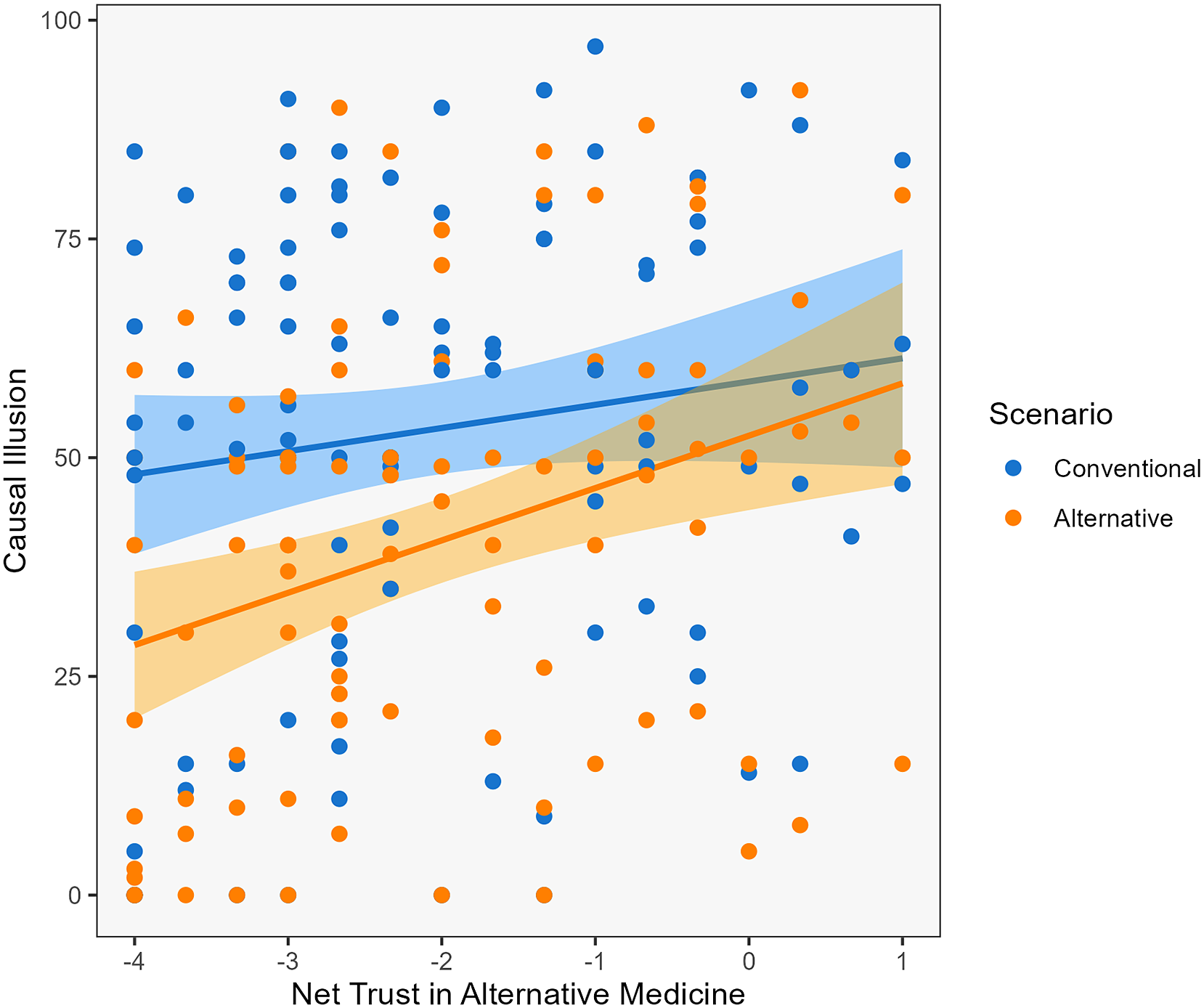

The questions adapted from the FECYT survey provide data on the use of alternative therapies in our sample (Table 1) and on the trust placed in six different treatments (half conventional and half alternative). The trust items showed moderate reliability in our sample (α = .77) after the alternative medicine items were reverse-coded so that all items pointed in the same direction. We also examined the correlations between individual items (Figure F1 in Appendix F). The strongest correlations were the positive ones within each type of practice (r ranged from .56 to .63 among the scientific practices and from .35 to .53 among the alternative practices), whereas the negative correlations between practices of different types were weaker (from r = −.18 to r = −.37). This suggests that responses were generally consistent within each type of practice, but there was no evidence of a strong dissociation (i.e., polarization) whereby, for example, those who trusted scientific practices strongly distrusted alternative therapies, or vice versa. Additionally, as reported in Table 2, conventional treatments generated, on average, more confidence (M con = 4.17; SD con = .87) than alternative treatments (M alt = 2.00; SD alt = .81), as confirmed by a paired-samples t-test (t(97) = 14.2; p < .001; d = 1.43).

Table 1 Use of alternative therapies in our sample

Table 2 Average trust in each of the practices assessed by the FECYT survey
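The reliability of the six trust items depends on reverse-coding the alternative medicine items on the 1–5 scale (1 becomes 5, 2 becomes 4, and so on) before applying Cronbach's alpha, α = k/(k − 1) · (1 − Σσ²ᵢ / σ²ₜₒₜₐₗ). The sketch below illustrates those mechanics with invented ratings (not study data); the helper names are hypothetical. Whether sample or population variances are used does not change alpha, provided the same estimator is used throughout.

```python
from statistics import variance


def reverse_code(score, low=1, high=5):
    """Reverse a rating on a low..high scale (on 1-5: 1 <-> 5, 2 <-> 4)."""
    return low + high - score


def cronbach_alpha(items):
    """Cronbach's alpha; items[i][p] is participant p's score on item i.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)
    """
    k = len(items)
    n_participants = len(items[0])
    totals = [sum(item[p] for item in items) for p in range(n_participants)]
    item_var_sum = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - item_var_sum / variance(totals))


# Hypothetical 1-5 trust ratings from four participants (not study data).
vaccines = [5, 4, 3, 2]
antibiotics = [5, 4, 3, 2]
homeopathy = [1, 2, 3, 4]  # runs opposite to the conventional items
homeopathy_reversed = [reverse_code(s) for s in homeopathy]  # [5, 4, 3, 2]

print(round(cronbach_alpha([vaccines, antibiotics, homeopathy_reversed]), 3))  # 1.0
```

In this contrived example the reversed item lines up perfectly with the conventional items, so alpha reaches its maximum of 1; without reverse-coding, the opposing item would pull alpha down.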

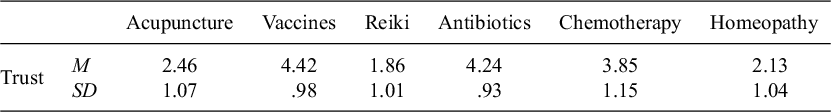

We created a variable named 'net trust in alternative medicine' to measure the difference between the level of trust in alternative treatments and that in conventional treatments. Net trust is the result of subtracting the mean trust given to the three conventional treatments (childhood vaccines, antibiotics, and chemotherapy) from the mean trust given to the three alternative practices (acupuncture, reiki, and homeopathy). Hence, positive scores indicate higher net confidence in alternative therapies, whereas negative scores indicate higher net confidence in conventional therapies. The mean net trust in alternative medicine was −2.02 (SD = 1.41), confirming our participants' tendency to rely relatively more on conventional than on alternative medicine.
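The net trust score described above can be sketched as follows for a single participant. The treatment groupings come from the text; the dictionary layout, the function name, and the example ratings are hypothetical. Because each rating lies between 1 and 5, the score ranges from −4 (only conventional treatments trusted) to +4 (only alternative treatments trusted).

```python
from statistics import mean

# Item groupings as described in the text.
CONVENTIONAL = ("childhood vaccines", "antibiotics", "chemotherapy")
ALTERNATIVE = ("acupuncture", "reiki", "homeopathy")


def net_trust(ratings):
    """Mean trust in alternative minus mean trust in conventional treatments.

    ratings maps each treatment name to a 1 (Nothing) to 5 (A lot) rating.
    """
    return mean(ratings[t] for t in ALTERNATIVE) - mean(ratings[t] for t in CONVENTIONAL)


# One hypothetical participant (not study data).
participant = {
    "childhood vaccines": 5, "antibiotics": 5, "chemotherapy": 4,
    "acupuncture": 2, "reiki": 1, "homeopathy": 1,
}
print(round(net_trust(participant), 2))  # -3.33
```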

Using the net trust variable, we examined the relationship between trust in alternative medicine and causal illusion as a function of scenario. We conducted a repeated-measures ANCOVA with scenario (alternative medicine vs. conventional medicine) as a within-subjects factor and net trust as a covariate. As with PES, we expected a significant interaction between scenario and net trust in alternative medicine.

As depicted in Figure 2, net trust in alternative therapies was associated with a stronger causal illusion (F(1, 96) = 6.76; p = .011; η²p = .066), although, as the figure suggests, the association was significant only in the alternative medicine scenario (r = .331; p < .001) and not in the conventional medicine scenario (r = .142; p = .136). Consistent with this impression, the repeated-measures ANCOVA showed a significant interaction between scenario and net trust (F(1, 96) = 5.21; p = .025; η²p = .051). However, we did not observe a main effect of scenario (F(1, 96) = 2.97; p = .088; η²p = .030; see Footnote 3). These results suggest that participants who trust alternative medicine more than conventional medicine exhibit a larger causal illusion in general, and that this tendency is somewhat stronger in the alternative medicine scenario than in the conventional medicine scenario.

Figure 2 Scatterplot depicting the correlation between causal illusion and net trust in alternative medicine for each of the two scenarios of the contingency learning task.
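As a consistency check, the partial eta-squared values reported in Sections 2.2 and 2.3 can be recovered from each F ratio and its degrees of freedom using the standard identity η²p = F·df₁ / (F·df₁ + df₂), which follows from F = (SS_effect/df₁)/(SS_error/df₂). With df = (1, 96), the sketch below yields .046, .053, .066, .051, and .030, matching the reported values (the second one was reported to two decimals as .05). The function name and labels are illustrative.

```python
def partial_eta_squared(f, df_effect, df_error):
    """Partial eta squared recovered from an F ratio.

    Since F = (SS_effect / df_effect) / (SS_error / df_error),
    eta_p^2 = SS_effect / (SS_effect + SS_error) = F*df_effect / (F*df_effect + df_error).
    """
    return f * df_effect / (f * df_effect + df_error)


# F ratios reported with df = (1, 96).
reported = {
    "scenario x PES": 4.58,
    "scenario x PES (10 retained items)": 5.34,
    "net trust": 6.76,
    "scenario x net trust": 5.21,
    "scenario (net trust model)": 2.97,
}
for effect, f in reported.items():
    print(f"{effect}: {partial_eta_squared(f, 1, 96):.3f}")
```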

3. Discussion

Just as seems to happen with other cognitive biases (e.g., belief bias), previous research suggests that people may interpret causal relationships differently depending on whether the information they observe supports or contradicts their prior knowledge or values, which leads to the selective development of causal illusions in scenarios that fit prior beliefs (at least in the context of political preferences; Blanco et al., 2018). Following this idea, the present study aimed to test whether individual differences in pseudoscientific beliefs, in interaction with the framing of the causal scenario (i.e., conventional vs. pseudoscientific), could predict susceptibility to causal illusions in a simulated health context. For that purpose, we designed a procedure in which participants had to judge the effectiveness of two fictitious medicines, one alternative and the other conventional, so that each scenario was more or less consistent with the participants' prior beliefs in pseudoscience. First, our results replicate the causal illusion found in many previous studies when there is no causal relationship between cause and outcome but the probabilities of the cause and of the outcome are high (Allan et al., 2005; Hannah and Beneteau, 2009; Musca et al., 2010; Perales et al., 2005; Vadillo et al., 2010). More importantly, we found that participants who did not believe in alternative medicine (low scores in PES and net trust) tended to show weaker causal illusions in the alternative medicine scenario.
Therefore, our experiment offers further evidence that causal illusion, like other biases, can serve prior beliefs, including beliefs about pseudoscience.

In our sample, the causal illusion was generally weaker for the alternative treatment than for the conventional one, even though neither treatment was related to symptom recovery. This is consistent with our sample's previous beliefs about alternative medicine: in particular, the questions from the FECYT Survey on the Social Perception of Science and Technology revealed that our participants did not trust alternative therapies as much as conventional treatments. We can interpret this result as a tendency in our sample to be protected against causal illusions in the alternative medicine scenario, and to a lesser extent in the conventional medicine scenario.

Notably, we found a positive correlation between pseudoscientific beliefs and causal illusion in both scenarios of the task. This can be interpreted as a general tendency of pseudoscientific believers to develop causal illusions (as suggested by Torres et al., 2020). Other studies have also proposed that people who hold various types of unfounded beliefs are more susceptible to causal illusions in general (Blanco et al., 2015; Griffiths et al., 2019). Because those experiments did not include a control condition set in a different thematic domain, it was impossible to know whether their results reflected a general susceptibility rather than a congruence effect (as proposed by Blanco et al., 2018, in the ideological domain). The current experiment therefore offers a better design for interpreting the previous literature on how pseudoscientific beliefs relate to causal illusions. As the interaction between the type of treatment (conventional vs. alternative) and previous beliefs indicates, there is room to interpret the results as documenting a tendency to reduce the causal illusion when it runs counter to previously held beliefs about alternative medicine: in particular, people who do not believe much in (or do not place much trust in) pseudoscientific treatments are more reluctant to develop causal illusions when examining a pseudoscientific treatment than when examining a scientific treatment that produces identical effects. These results were obtained with two measures of beliefs toward pseudoscience: a questionnaire on pseudoscientific beliefs in general (PES) and a measure of the relative trust placed in pseudoscientific versus conventional treatments.
Both measures produced similar, convergent results. However, they tap slightly different variables, so their convergence is informative. First, PES is a questionnaire on general pseudoscientific beliefs that has been validated and used in previous research (Torres et al., 2020). Although it measures a general set of beliefs, it includes items specific to alternative medicine, and it seems sensitive enough for our current research. PES has limitations, though. For example, it is a one-sided scale, which does not allow us to identify participants with potentially dissociated beliefs (e.g., strong belief in pseudoscience and weak belief in conventional medicine). The net trust variable that we computed, on the other hand, is a two-sided measure that has not been validated in previous research but can assess the relative difference in the trust a participant places in the two opposite types of treatment.

In the Introduction, we proposed that prior beliefs could interact with task information to modulate the causal illusion. As a consequence, individuals would selectively develop stronger causal illusions when the potential causal relationship concurs with their beliefs and would be more resistant to the illusion when it contradicts those beliefs, the same effect that Blanco et al. (2018) reported in the area of politics. However, the present results only partially align with this prediction. While participants who do not believe in pseudoscientific treatments do exhibit weaker causal illusions in the alternative medicine scenario than in the conventional scenario, participants who do believe in pseudoscientific treatments do not clearly show the opposite trend, that is, a reduction in their causal illusion in the conventional scenario. This differs from Blanco et al.'s (2018) study, which showed a complete crossover interaction in which participants with opposite previous beliefs displayed opposite trends in the causal illusion measure. In fact, we observed that when belief in pseudoscience and trust in alternative medicine were high, the causal illusion was stronger in both scenarios, not only in the one framed as alternative medicine. This difference from previous results can be explained in two ways. First, our result makes sense when one considers that, unlike ideological positioning (which implies endorsing beliefs that can be either right wing or left wing, these poles being mutually incompatible), belief and trust in alternative medicine can co-exist with trust in conventional medicine within the same individual.
This co-existence is not only possible but frequent, according to large surveys (FECYT, 2018; Lobera and Rogero-García, 2021). We can also observe this co-existence in our own data: among the participants who reported having used an alternative therapy in the past, the majority did so as a complement to conventional treatments, not as a replacement. Also, as noted in Section 2, the highest correlations between the six items in the trust questions were the positive ones between treatments of the same type (i.e., strong positive correlations among conventional treatments and among alternative treatments), not the negative correlations between treatments of different types. This again suggests that our participants’ beliefs concerning these treatments were not completely dissociated (i.e., polarized), such that those who trust conventional treatments would tend to distrust alternative treatments, or vice versa. Therefore, it is not surprising that we did not observe completely opposing tendencies in the causal illusion as a function of scenario and PES scores. A second, related reason why our results differ from those of Blanco et al. (2018) is that we did not have participants with extreme views. That is, although our participants displayed some individual variability in their pseudoscientific beliefs, few of them had extremely high scores, either on the PES or on the net trust measure. Thus, since our sample lacks participants who either strongly endorse pseudoscientific beliefs or trust alternative medicine more than conventional medicine, the probability of observing a complete crossover interaction decreases. Even taking these considerations into account, we still found evidence of an interaction between prior beliefs and the scenarios of the experimental task.
As such, differences in the causal illusion between the two scenarios can be predicted from participants’ level of endorsement of pseudoscientific beliefs and their trust in pseudoscience.
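
The argument about crossover interactions can be made concrete with a simple moderation model. The following Python sketch assumes a linear model in which the causal judgment depends on the scenario (coded 0 for the alternative framing and 1 for the conventional framing), a belief score such as PES, and their product. The class name `InteractionModel` and all coefficients are hypothetical and are not the model or the estimates reported in this study. Under this model, the scenario effect at belief level B is b1 + b3·B, so it changes sign at B = -b1/b3, and a full crossover can only be observed in a sample whose belief scores extend beyond that point.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class InteractionModel:
    """Hypothetical linear model of a causal judgment with illustrative coefficients.

    judgment = b0 + b1*scenario + b2*belief + b3*scenario*belief,
    where scenario is 0 for the alternative framing and 1 for the conventional one.
    """

    b0: float
    b1: float
    b2: float
    b3: float

    def predict(self, scenario: int, belief: float) -> float:
        """Predicted causal judgment for one scenario at a given belief level."""
        return self.b0 + self.b1 * scenario + self.b2 * belief + self.b3 * scenario * belief

    def scenario_effect(self, belief: float) -> float:
        """Conventional-minus-alternative difference: predict(1, B) - predict(0, B)."""
        return self.b1 + self.b3 * belief

    def crossover_point(self) -> Optional[float]:
        """Belief level where the scenario effect is zero (None if there is no interaction)."""
        return None if self.b3 == 0 else -self.b1 / self.b3

    def crossover_within(self, low: float, high: float) -> bool:
        """True if the scenario effect has opposite signs at the two ends of [low, high]."""
        return self.scenario_effect(low) * self.scenario_effect(high) < 0


# Illustrative coefficients only (not estimates from this study).
model = InteractionModel(b0=50.0, b1=20.0, b2=3.0, b3=-5.0)
print(model.crossover_point())          # 4.0
print(model.crossover_within(1, 3.5))   # False: sample without high scorers
print(model.crossover_within(1, 7))     # True: full 1-7 range of the scale
```

With these illustrative values, the scenario effect shrinks as belief increases but only reverses above a belief score of 4, so a sample whose scores stop at 3.5 would show a weakened scenario effect rather than a complete crossover, which is the pattern expected when participants with extreme views are missing.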

We have already mentioned the widespread use of some alternative treatments. The reasons that lead a patient to opt for alternative medicine can be diverse, including common motivations such as freedom of choice, the desire to maintain autonomy and control over one’s own health, and the desire for more personalized and humane care (FECYT, 2020). Moreover, a recent study further analyzed data from the FECYT survey and showed that trust in the efficacy of pseudotherapies, rather than belief in their scientific validity, predicts the use of those therapies (Segovia and Sanz-Barbero, 2022). Therefore, understanding how causal illusions develop and give rise to an illusory perception of effectiveness that fosters trust in these remedies seems to be a necessary step in any campaign aiming to reduce the prevalence of alternative medicine. Our results indicate that, once people are using these practices, their attempts to estimate treatment effectiveness can be biased, and more so when they are already inclined to believe that the treatment works. Future studies should explore how these biases help explain actual treatment choice and use in real life.

Data availability statement

The data that support the findings of this study are openly available in the Open Science Framework at https://osf.io/pwv5s/.

Funding statement

Support for this research was provided by Grant PID2021-126320NB-I00 from the Agencia Estatal de Investigación of the Spanish Government, as well as Grant IT1696-22 from the Basque Government. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Competing interest

The authors declare no known competing interests.

Appendix A Pseudoscience Endorsement Scale

Answer the following questions as sincerely as possible. There are no right or wrong answers; they simply reflect personal opinions, and some variability between individuals is expected.

To what degree do you agree with the following statements? Answer each question using a scale of 1 (strongly disagree) to 7 (strongly agree).

1. Radiation derived from the use of a mobile phone increases the risk of a brain tumor.
2. A positive and optimistic attitude toward life helps to prevent cancer.
3. We can learn languages by listening to audios while we are asleep.
4. Osteopathy is capable of inducing the body to heal itself through the manipulation of muscles and bones.
5. The manipulation of energies by laying on hands can cure physical and psychological diseases.
6. Homeopathic remedies are effective as a complement to the treatment of some diseases.
7. Stress is the principal cause of stomach ulcers.
8. Natural remedies, such as Bach flower, help overcome emotional imbalances.
9. By means of superficial insertion of needles in specific parts of the body, pain problems can be treated.
10. Nutritional supplements like vitamins or minerals can improve the state of health and prevent diseases.
11. Neuro-linguistic programming is effective for healing psychic disorders and for the improvement of quality of life in general.
12. By means of hypnosis, it is possible to discover hidden childhood traumas.
13. One’s personality can be evaluated by studying the shape of their handwriting.
14. The application of magnetic fields on the body can be used to treat physical and emotional alterations.
15. Listening to classical music, such as Mozart, makes children more intelligent.
16. Our dreams can reflect unconscious desires.
17. Exposure to Wi-Fi signals can cause symptoms such as frequent headaches, sleeping, or tiredness problems.
18. The polygraph or lie detector is a valid method for detecting if someone is lying.
19. Diets or detox therapies are effective at eliminating toxic substances from the organism.
20. It is possible to control others’ behavior by means of subliminal messages.

Appendix B Questions about alternative therapies from the Survey on the Social Perception of Science and Technology conducted by FECYT (2018)

1. Have you ever used alternative treatments like, for example, homeopathy or acupuncture? Yes/No.
2. The last time you used them, you did it…
   Instead of conventional medical treatments
   As a complement to conventional medical treatments
3. Please indicate your level of trust for the following practices when it comes to health and general well-being, whether it is nothing, a little, quite, or a lot:
   Acupuncture
   Childhood vaccines
   Reiki (laying on hands)
   Antibiotics (Footnote 4)
   Chemotherapy
   Homeopathy

Appendix C Instructions from the contingency detection task describing each fictitious medicine

Conventional medicine

Batatrim/Aubina is a latest-generation drug that has been developed by a prestigious pharmaceutical laboratory.

After several years of research, supported by strong economic investment and thanks to the development of innovative techniques, the scientific team has synthesized a new molecule that provides this medicine with a powerful and promising active ingredient.

Alternative medicine

Batatrim/Aubina is a remedy of natural origin that has been developed following a millenary tradition.

It is composed of an ancestral mixture of plants and flowers, prepared following ancient rituals, which indigenous cultures have used as a healing method for generations because it restores the balance of energies within the mind–body system.

Appendix D Sentence completion task

Now that you have read the medicine description, complete the following sentences. From the list below, choose the answers that best fit what the medicine suggests to you. Please type your answers in the blank spaces.

Batatrim/Aubina…

is a ____________________________________________________.

has been developed _______________________________________.

is composed of a __________________________________________.

is used as a healing method because ___________________________.

Appendix E

Table E1 Correlation matrix for the main variables of interest

Abbreviation: PES, Pseudoscience Endorsement Scale.

Appendix F

Figure F1 Correlation matrix (heatmap) for the six individual items in the trust questions.