Introduction

Public policy studies offer different typologies of governance modes, each of which attempts to theoretically order the ways in which public policies are coordinated and steered (for these typologies, see Considine and Lewis 1999; Treib et al. 2007; Capano 2011; Howlett 2011; Tollefson et al. 2012). All these classifications share not only the three fundamental, albeit differently arranged, principles of social coordination (hierarchy, market and network) but also a tendency to associate specific sets of policy instruments with each of the designated governance modes, forming a long-lasting or relatively long-lasting policy style. This tendency points to a basic theoretical problem: policymakers select policy instruments that are congruent with a governance mode, and they do so in a more or less predictable way, which leads to a characteristic style of formulating and implementing policies.

Any selection of policy instruments is characterised by an intrinsic policy-mix trend (Bressers et al. 2005; Howlett 2005). As such, it should be considered the result of a miscellany of different ideas, interests and technologies, and regarded as institutionalised in specific, recurrent contingencies.

Some mixes are thought to perform better than others, but it remains unclear why. For example, the studies of “good governance” promoted by the Organization for Economic Cooperation and Development (OECD 2007, 2010) and other international organisations tend to focus on the best mix of policy instruments for pursuing specific policy goals, but they offer little explanation of why the chosen tools constitute an optimal arrangement.

Thus, the governance literature faces a growing problem concerning the actual policy effectiveness of the continuous shifts in governance modes that, from a comparative perspective, have characterised public policy and public administration over the last three decades. We know that governance modes have changed, and we know that these changes have taken shape through different policy mixes. However, we know very little about the characteristics and actual consequences of these mixes.

In this article, we propose to unravel this two-sided problem by focussing empirically on governance reforms in Western European higher education systems (HESs). According to the mainstream literature on governance changes in higher education (HE) (Huisman 2009; Paradeise et al. 2009; Capano and Regini 2014; Dobbins and Knill 2014; Shattock 2014), Western European governments have redesigned governance systems to make HE institutions more accountable, introducing rules that govern the allocation of public funding and tuition fees, the recruitment of academics, and the evaluation and accreditation of institutions. To accomplish this goal, these countries have turned to a similar policy formula (the so-called “steering at a distance” governance arrangement).

However, given the contrasting results in the literature, there is no clear evidence regarding either the composition of the adopted policy formulas or whether and how the new governance template can be associated with satisfactory results. We address this gap by adopting a policy instrument perspective: the actual national interpretation that each country has given to the common policy template in reforming HE governance can be assessed by focussing on the specific combinations of policy instruments adopted at the national level. To understand these combinations and, thus, the specific content of the adopted policy design, we propose an operationalisation of policy instruments whose constitutive logic could also be applied to other policy fields.

Furthermore, we explore the hypothesis that the way in which specific policy tools are combined matters for policy performance. We are fully aware that the link between policies’ content (policy instruments) and their outcomes is indirect and limited (Koontz and Thomas 2012) and that policy performance is driven by many factors (in the case of HE policy, factors such as the percentage of public spending, the socio-economic and cultural background of families, external and internal shocks, and financial retrenchment matter). However, the main way governments can steer their policy systems is to adopt specific sets of policy tools that address the behaviour of specific targets and beneficiaries; thus, the policy mixes that governments recurrently design could help to redirect the way policies work and perform. Therefore, policy mixes can be understood as possible explanatory conditions (among others), and the composition of the actual set of adopted policy instruments could make a difference or, at least, signal something of explanatory relevance.

Aware of the intrinsic limitations of our research design, we aim mainly to demonstrate how promising it can be in filling the existing knowledge gap concerning (1) how policy instruments are actually mixed together and (2) how these mixes are associated with the effects of governance reforms on public policy.

We have pursued this research strategy by collecting and analysing data on the regulatory changes in HE in 12 countries over the last 20 years (1995–2014).

The article is structured as follows. In the second section, we present our policy instrument framework, while in the third section, we introduce the research design. The fourth section presents the results of the empirical analysis, which are then discussed in the fifth section. Finally, the conclusion summarises our preliminary results and proposes directions for future research.

Governance arrangements and systemic performance in HE: an instrumental perspective

Governance reforms in HE

Many scholars have underlined the apparent convergence towards the “steering at a distance” mode in HE in recent decades (Braun and Merrien 1999; Huisman 2009; Paradeise et al. 2009; Shattock 2014). This governance arrangement is characterised by a mix of the following instruments: financial incentives to pursue specific outputs and outcomes in teaching and research, student loans, accreditation, ex post evaluation conducted by public agencies, contract benchmarking and legal provisions granting greater institutional autonomy (Gornitzka et al. 2005; Lazzaretti and Tavoletti 2006; Maassen and Olsen 2007; Trakman 2008; Capano 2011; Capano and Turri 2017). However, this convergence concerns general principles (more institutional autonomy, more evaluation and more competition), whereas the concrete ways in which policies have been designed seem to be quite diverse (Capano and Pritoni 2019). This diversity, at least in terms of the concrete composition of the adopted policy instruments, has never been clarified.

Furthermore, in terms of policy performance, the effects of these governance shifts have not been clearly assessed. The literature on HESs’ performance has focussed mainly on a few aspects as key determinants of policy success (or failure): the funding mechanism (Liefner 2003), the total amount of public funding (Winter-Ebmer and Wirz 2002; Horeau et al. 2013; Williams et al. 2013), full institutional autonomy (Aghion and Dewatripont 2008), partisan dynamics (Ansell 2008), stratification (Willemse and De Beer 2012) and the type of loan system adopted (Flannery and O’Donoghue 2011). However, this research strategy only assesses whether individual variables influence, on average and at the population level, the probability that the outcome changes as expected, regardless of their interactions. Such explanatory results seem weak and risk remaining fairly superficial. The salient point is that focussing on a single dimension to assess the performance capacity of a governance arrangement is misleading. For example, the effects of shifting from a centralised governance system to one in which universities are more autonomous cannot be analysed without contextualising the change within its specific configuration, that is, by considering the other relevant instrumental dimensions (e.g. how universities are funded, the system of degree accreditation and whether a national research assessment exercise is in place).

Overall, despite the significant governance shifts in HE, there is currently a lack of knowledge on both the actual nature of these changes in terms of existing combinations of adopted instruments and the policy performance of the new governance arrangements.

Governance arrangements as a set of policy instruments

Policies are steered by specific governance arrangements composed of rules, instruments, actors, interactions and values (Howlett 2009, 2011; Tollefson et al. 2012; Capano et al. 2015). The implicit assumption of the governance literature is that different governance modes or arrangements deliver different results in terms of policy outcomes. However, empirical evidence on this issue has been lacking, especially because the main analytical focus in public policy has been the process of changing governance arrangements in terms of their content with respect to the actors involved, the distribution of power, and the adoption of “new” policy instruments. Thus, there has not been enough focus on the results of these governance shifts in mainstream public policy.

However, there is an increasing awareness that pure types of governance arrangements do not actually operate in practice; instead, the main principles of coordination (hierarchy, market and network) are combined in various ways. All governance arrangements are essentially hybrids and work through policy mixes, that is, policy instruments belonging to “different” instrument categories or pertaining to different policy paradigms/beliefs/systems/ideologies (Howlett 2005; Ring and Schroter-Schlaack 2010; Capano et al. 2012). Thus, an existing set of adopted policy instruments can be conceptualised as a specific portfolio, setting and combination of different types of policy instruments associated with different working logics (Jordan et al. 2012; Schaffrin et al. 2014; Howlett and Del Rio 2015).

However, how can the content of these policy mixes be described and understood, and how can their policy performance be assessed? In an attempt to fill these theoretical and empirical gaps, we adopt a bottom-up perspective by focussing on the basic unit of any governance mode – the policy instruments that can be adopted – and their possible combinations. This approach seems quite realistic; policy instruments are the operational, performance-related dimensions of governance arrangements.

Accordingly, we operationalise systemic governance arrangements in terms of adopted policy instruments and therefore as specific sets of techniques or means by which governments try to affect the behaviour of policy actors to direct them towards the desired results (Linder and Peters 1990; Vedung 1998; Howlett 2000; Salamon 2002).

There are numerous classifications by which policy tools can be ordered based on different criteria of analytical distinction, from coercion to the type of governmental source adopted (Hood 1983; Phidd and Doern 1983; Ingram and Schneider 1990; Vedung 1998; Howlett 2011). All these typologies suggest different families of instruments. Our research framework focuses on the capacity of policy instruments to induce specific behaviours; thus, we need to consider the nature of the instruments and examine the different ways through which they induce action towards the expected result. In conducting this examination, we are inspired by the classical theorisation of Vedung (1998). When focussing on the nature of substantive policy instruments, Vedung grouped these instruments by the basic inducement on which they relied to foster compliance.

By adopting this perspective, we can delimit four distinct families of substantial policy instruments that have different, nonoverlapping capacities to induce behaviours: regulation, expenditure, taxation and information (Footnote 1). Each family is associated with a specific inducement. Regulation induces behaviour control; expenditure induces remuneration; taxation, depending on how it is designed, can engender behaviour control and remuneration; and information offers persuasion. Notably, all four families of tools can be employed with different degrees of coercion, depending on how free individuals are to choose alternatives. Regulation can be quite strong or weak according to the type of behavioural prescriptions provided. Expenditure can lack coercion in the case of subsidies but be very demanding when delivering targeted funding. Taxation can be quite coercive when a general tax increase is established, but it can also have a low degree of coercion when many targeted tax exemptions exist. Information can be coercive when compulsory disclosure is imposed or lack strong coercion when monitoring is applied.

The four families of substantial policy instruments we have decided to consider, as well as the types proposed by other policy instrument classifications, represent very general instrumental principles (which need to take specific forms to be practically applied): they are “families of individual instruments sharing similar characteristics” (Bouwma et al. 2016, 216).

According to Salamon (2002), the “shape” through which a substantial instrument is designed to deliver the expected result is the important factor in terms of policy impact and potential performance. For each family of substantial policy instruments, there are different shapes of delivery that offer actual outlets through which those instruments can affect reality. These instrumental shapes should therefore be considered the basic analytical unit when assessing how policies are made and, thus, which types of governance arrangements actually work in terms of policy performance.

Accordingly, the important factors in detecting the adoption of a regulation instrument are the various forms through which regulations can be designed: for example, by imposing a specific behaviour, enlarging the range of opportunities or establishing specific public organisations. Expenditures can be delivered through grants, subsidies, loans, lump sum transfers and targeted transfers, among other shapes. Taxation can imply fees, user charges and exemptions, among others. Information can take the shape of neutral administrative disclosures, monitoring, diffusion, etc.
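To make the family/shape distinction concrete, it can be encoded as a simple lookup structure. This is a minimal sketch only: the shape labels are the examples named in the paragraph above (an illustrative subset, not the 24 shapes of Table 2), and the names `SHAPES_BY_FAMILY`, `INDUCEMENT` and `family_of` are hypothetical.

```python
from typing import Dict, List, Optional

# Illustrative subset: only the example shapes named in the text.
# The article's full coding scheme (Table 2) contains 24 shapes.
SHAPES_BY_FAMILY: Dict[str, List[str]] = {
    "regulation": [
        "imposing a specific behaviour",
        "enlarging the range of opportunities",
        "establishing public organisations",
    ],
    "expenditure": ["grants", "subsidies", "loans", "lump sum transfers", "targeted transfers"],
    "taxation": ["fees", "user charges", "exemptions"],
    "information": ["administrative disclosure", "monitoring", "diffusion"],
}

# Basic inducement each family relies on, following the Vedung-inspired typology.
INDUCEMENT: Dict[str, str] = {
    "regulation": "behaviour control",
    "expenditure": "remuneration",
    "taxation": "behaviour control and/or remuneration",  # depends on design
    "information": "persuasion",
}


def family_of(shape: str) -> Optional[str]:
    """Return the substantive family a shape belongs to, or None if it is not listed."""
    for family, shapes in SHAPES_BY_FAMILY.items():
        if shape in shapes:
            return family
    return None


if __name__ == "__main__":
    fam = family_of("loans")
    print(fam, "->", INDUCEMENT[fam])
```

Keeping shapes rather than families as the unit of observation is what allows two countries that both "use expenditure" to be told apart, for instance one relying on lump sum transfers and another on targeted transfers.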

Each of these shapes carries specific potential effects that cannot be measured in isolation, because they must be considered in relation to the other tools that make up the actual set of adopted policy instruments. Understanding the distinct shapes that the various types of substantive policy instruments can take when delivered is essential to grasping how governments change the instrumental side of governance arrangements over time (Footnote 2). This distinction is helpful in three dimensions.

The first dimension is descriptive. By focussing on the different shapes of policy instruments, shifts in governance can be reconstructed in more detail than the usual distinction between more or less market, more or less hierarchy, more or less regulation, more or fewer expenditures, etc. allows.

The second dimension is analytical. Because the focus is on the basic shapes through which substantial instruments are delivered, the policy mix concept can be operationalised in a very effective and realistic way. Thus, any relevant differences in policy settings can be assessed, because the content of policy mixes can be grasped in great detail.

The third dimension is clearly exploratory, with a particular emphasis on the possible explanatory relevance of policy instruments for performance. If policy performance is assumed to be conditioned by – among other factors – the adopted set of policy instruments, a detailed operationalisation of the substantial instruments should uncover which combinations of instruments actually work, which can lead to a better reconceptualisation of the (many) determinants of policy performance and of their interactions.

This perspective takes seriously the suggestions of those scholars who have observed that the actual set of adopted policy instruments is the result of a diachronic accumulation. Thus, we need to analyse the full package instead of a single type of policy instrument (Hacker 2004; Pierson 2004; Huitema and Meijerink 2009; Tosun 2013; Schaffrin et al. 2014).

Therefore, from a theoretical perspective, the decision to focus on the instrumental side of governance arrangements to measure their potential impact on policy performance calls for a combinatorial logic: when the expected effect, namely, policy performance, is assumed to depend on, among other things, a combination of multiple conditions (i.e. specific settings of policy instruments), then a principle of complex causality is at work. For this reason, we decided to turn to Qualitative Comparative Analysis (QCA) (Footnote 3). QCA is a configurational, set-theoretic approach in which relationships of necessity and sufficiency are tested; the idea of causality underpinning it is fundamentally characterised by equifinality (Footnote 4), conjunctural causation (Footnote 5) and asymmetry (Footnote 6). QCA therefore aims at unravelling multicausal rather than monocausal explanations, focusses on combinations of conditions rather than on single variables, and does not assume that a unique solution (or equation) accounts for both the occurrence and the nonoccurrence of a particular outcome.
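The set-theoretic relations that QCA tests can be illustrated with the standard fuzzy-set operations and the consistency and coverage measures described by Ragin (2008). The Python sketch below is illustrative, not the authors' code: the function names and the membership scores in the usage example are hypothetical.

```python
from typing import List, Sequence


def f_and(*sets: Sequence[float]) -> List[float]:
    """Fuzzy intersection (logical AND): case-wise minimum of memberships."""
    return [min(vals) for vals in zip(*sets)]


def f_or(*sets: Sequence[float]) -> List[float]:
    """Fuzzy union (logical OR): case-wise maximum of memberships."""
    return [max(vals) for vals in zip(*sets)]


def f_not(s: Sequence[float]) -> List[float]:
    """Fuzzy negation: 1 minus membership."""
    return [1.0 - v for v in s]


def _overlap(x: Sequence[float], y: Sequence[float]) -> float:
    """Sum over cases of min(x_i, y_i), i.e. the size of X AND Y."""
    return sum(min(a, b) for a, b in zip(x, y))


def sufficiency_consistency(x: Sequence[float], y: Sequence[float]) -> float:
    """Degree to which X is a subset of Y (X sufficient for Y): sum(min) / sum(X)."""
    return _overlap(x, y) / sum(x)


def sufficiency_coverage(x: Sequence[float], y: Sequence[float]) -> float:
    """Share of membership in Y accounted for by X: sum(min) / sum(Y)."""
    return _overlap(x, y) / sum(y)


def necessity_consistency(x: Sequence[float], y: Sequence[float]) -> float:
    """Degree to which Y is a subset of X (X necessary for Y): sum(min) / sum(Y)."""
    return _overlap(x, y) / sum(y)


if __name__ == "__main__":
    # Hypothetical memberships of four cases in two conditions and the outcome
    a = [0.9, 0.8, 0.2, 0.6]
    b = [0.7, 0.9, 0.6, 0.1]
    y = [0.8, 0.9, 0.3, 0.4]
    combo = f_and(a, b)  # conjunctural condition "A AND B"
    print(sufficiency_consistency(combo, y), sufficiency_coverage(combo, y))
```

In this toy example, "A AND B" is a perfectly consistent subset of the outcome (consistency 1.0) and covers 75% of outcome membership. Asymmetry means the negated outcome, `f_not(y)`, would be analysed separately rather than inferred from these results.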

Furthermore, by applying this method, we can precisely assess which instruments or combinations of instruments are present when better performance is achieved. This way of operationalising and methodologically treating policy instruments can fill a relevant analytical gap and produce a more precise design for research on the determinants of policy performance, which too often relies on the direct effects of structural and contextual variables (such as the socio-economic situation, social capital and the political situation) or of processual variables. Overall, policymakers change policy by choosing specific policy instruments, and the adopted method makes it possible to describe the content of these decisions more accurately. Showing which combinations of instruments are related to specific policy performances can help to redirect research on policy evaluation and improve the analysis of the links between policies and outcomes.
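Identifying which instrument combinations are present when better performance is achieved typically starts from a truth table: each case is assigned to the configuration (row) in which its membership exceeds 0.5, and each row's consistency with the outcome is computed. A minimal sketch under those standard fs-QCA conventions follows; the function name, case labels and scores are hypothetical, and the subsequent Boolean minimisation step is omitted.

```python
from itertools import product
from typing import Dict, List, Tuple

Row = Tuple[int, ...]


def truth_table(
    conditions: Dict[str, List[float]],  # case -> membership in each condition
    outcome: Dict[str, float],  # case -> membership in the outcome
    n_conditions: int,
) -> Dict[Row, Tuple[List[str], float]]:
    """Return {configuration: (member cases, consistency)} for non-empty rows."""
    table: Dict[Row, Tuple[List[str], float]] = {}
    for config in product((0, 1), repeat=n_conditions):
        # Membership in a row: min over conditions of x (present) or 1 - x (absent)
        memb = {
            case: min(x if bit else 1.0 - x for x, bit in zip(xs, config))
            for case, xs in conditions.items()
        }
        members = [case for case, m in memb.items() if m > 0.5]
        if not members:
            continue  # logical remainder: no observed case falls in this row
        overlap = sum(min(m, outcome[case]) for case, m in memb.items())
        table[config] = (members, overlap / sum(memb.values()))
    return table


if __name__ == "__main__":
    conds = {"A": [0.9, 0.2], "B": [0.8, 0.7], "C": [0.3, 0.6]}
    out = {"A": 0.7, "B": 0.9, "C": 0.2}
    for row, (cases, cons) in truth_table(conds, out, 2).items():
        print(row, cases, round(cons, 3))
```

Rows whose consistency passes a chosen threshold would then be minimised to identify the instrument combinations associated with the outcome.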

Research design

Case selection and timespan

This article is based on a specific data set of policy tools used in 12 Western European countries (Austria, Denmark, England, Finland, France, Greece, Ireland, Italy, the Netherlands, Norway, Portugal and Sweden) between 1995 and 2014.

Regarding country selection, we initially intended to cover all the pre-2004 enlargement EU countries. We decided to exclude Eastern European countries due to the period of transition they experienced after 1989, a time of deep turmoil that mixed the communist legacy, the return to precommunist governance and a kind of acceleration towards marketisation (Dobbins and Knill 2009); these characteristics make it quite difficult to assess and code the characteristics of the adopted policy instruments.

However, we were forced to exclude four additional countries for different reasons: Luxembourg, due to its small size (one university); Belgium and Germany, because of their federal structures; and Spain, because of its highly decentralised regionalism, which has a significant impact on the systemic governance of HE. The remaining 11 pre-2004 enlargement countries reflect all the historical types of university governance that have developed in Europe and can therefore offer sufficient differentiation in terms of policy legacy (Clark 1983; Braun and Merrien 1999; Shattock 2014) and of the inherited set of policy instruments. We also included a non-EU country, Norway, so that all the Nordic countries, which are assumed to have adopted a welfarist approach to HE, could be considered, and it was possible to examine whether this common characteristic influenced the analysed outcome.

In all the selected countries, the HESs have undergone structural changes in the last two decades. Accordingly, we decided to begin our analysis around the mid-1990s to encompass all the major changes that affected HESs over the last 20 years (Footnote 7). Obviously, each country has its own reform “starting point” in this field: some countries had already produced relevant legislation by the mid-1990s, while others began much later.

Operationalisation

As already explained in the theoretical section, we assumed that differences in HESs’ teaching performance may be associated with, among other things, differences in the combinations of adopted policy tools. Among the various possible indicators of HESs’ performance, such as access, academic recruitment and careers, and third missions, we decided to focus only on teaching, which represents one of the main tasks of every HE institution. Furthermore, over the last 30 years, all governments have been committed to encouraging universities to pay more attention to the socio-economic needs of their own country and to the need to increase the stock of human capital (currently, in many countries and at the EU level, increasing the number of citizens obtaining a tertiary degree is a major policy goal).

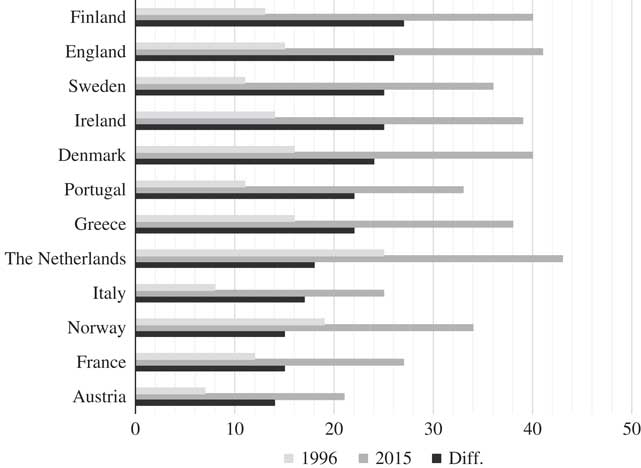

The most common indicator of teaching performance is the percentage of people with a university-level degree (Footnote 8). Accordingly, we operationalised teaching performance starting from the percentage of adults aged 25 to 34 who hold a university-level degree. Because many countries’ education systems changed between the 1990s and the 2000s (possibly because of the Bologna Process), HE programs differ from those that existed 20 years ago. Thus, using OECD data, we compared the percentage of the 25- to 34-year-old population with a “university-level” education (level 5A in the ISCED 1997 classification) in 1996 with the percentage of people of the same age who held either a bachelor’s or a master’s degree in 2015 (levels 6 or 7 in the ISCED 2011 classification) (Footnote 9). These data were downloaded from the OECD archive (see also OECD 1998, 2016) (Footnote 10) and are summarised in Figure 1.

Figure 1 University-level (ISCED 5A 1997 – ISCED 6 + ISCED 7 2011) attainment of 25- to 34-year-old adults: 1996 and 2015 in comparison.

However, the variation in this performance indicator does not directly represent our outcome, because it does not account for two equally relevant considerations. First, improving results is easier when the starting point is very low than when it is higher. Second, the results of university-level education are strongly linked to the structure of tertiary education as a whole: all else being equal, countries that offer short-cycle tertiary degrees (i.e. first-cycle degrees lasting less than 3 years: level 5B in the ISCED 1997 classification and level 5 in the ISCED 2011 classification) should be rewarded more than countries without them, because in the former case, HE institutions face more competition for students (or, at least, have a smaller catchment area) and, in turn, are less likely to improve their results. Consequently, we adjusted the data following a two-step process. In the first step, we divided countries into three categories based on university degree attainment (25–34 years old) in 1996: countries below the mean, countries above the mean but less than 1 standard deviation (SD) above it, and countries more than 1 SD above the mean. For countries in the last category, performance (i.e. the difference between their level of university degree attainment in 2015 and in 1996) was increased by 50%; for countries in the intermediate category, it was increased by between 0 and 50%, depending on how far above the mean they were. Countries below the mean received no increase.

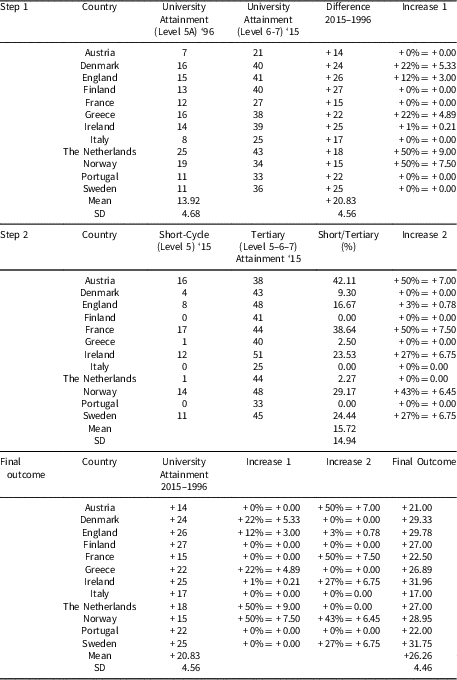

In the second step, we applied the same differentiation of countries to the ratio between 25- to 34-year-old adults with a short-cycle tertiary degree and those with any tertiary degree in 2015. In this way, we could weight the extent to which the opportunity to enrol in short-cycle degrees affected the HES as a whole. Again, the performance of countries more than 1 SD above the mean was increased by a further 50%, that of countries above the mean but less than 1 SD above it was increased by between 0 and 50%, depending on how far above the mean they were, and countries below the mean received no increase. Table 1 presents both the original OECD data and the country rankings based on our measure of teaching performance from 1996 to 2015.
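The two-step adjustment can be sketched as follows. Several details are not specified in the text and are labelled as assumptions: the bonus is interpolated linearly between the mean (0%) and 1 SD above it (50%), the SD is the population SD across the sample countries, and the two step-wise bonuses are applied multiplicatively to the raw 1996–2015 gain. Function names and the three-country data in the usage line are hypothetical.

```python
from statistics import mean, pstdev
from typing import Dict


def bonus_shares(values: Dict[str, float], max_bonus: float = 0.5) -> Dict[str, float]:
    """Bonus in [0, max_bonus] by each country's position relative to the sample mean."""
    vals = list(values.values())
    m, sd = mean(vals), pstdev(vals)  # ASSUMPTION: population SD
    shares: Dict[str, float] = {}
    for country, v in values.items():
        z = (v - m) / sd if sd > 0 else 0.0
        if z <= 0:
            shares[country] = 0.0  # at or below the mean: no increase
        elif z >= 1:
            shares[country] = max_bonus  # 1 SD or more above the mean: full +50%
        else:
            shares[country] = max_bonus * z  # ASSUMPTION: linear interpolation
    return shares


def adjusted_performance(
    degree_1996: Dict[str, float],
    degree_2015: Dict[str, float],
    short_cycle_ratio_2015: Dict[str, float],
) -> Dict[str, float]:
    """Raw 1996-2015 gain in degree attainment, increased by both step-wise bonuses."""
    step1 = bonus_shares(degree_1996)  # step 1: high starting level in 1996
    step2 = bonus_shares(short_cycle_ratio_2015)  # step 2: large short-cycle sector
    return {
        # ASSUMPTION: bonuses compound multiplicatively
        c: (degree_2015[c] - degree_1996[c]) * (1 + step1[c]) * (1 + step2[c])
        for c in degree_1996
    }


if __name__ == "__main__":
    print(adjusted_performance(
        {"A": 10.0, "B": 20.0, "C": 30.0},
        {"A": 30.0, "B": 35.0, "C": 40.0},
        {"A": 0.30, "B": 0.10, "C": 0.20},
    ))
```

An additive combination, `gain * (1 + step1 + step2)`, would be an equally plausible reading of "a further increase"; the choice only matters for countries that receive both bonuses.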

Table 1 Construction of the outcome (teaching performance between 1996 and 2015)

Source: Our elaboration on Organization for Economic Cooperation and Development data.

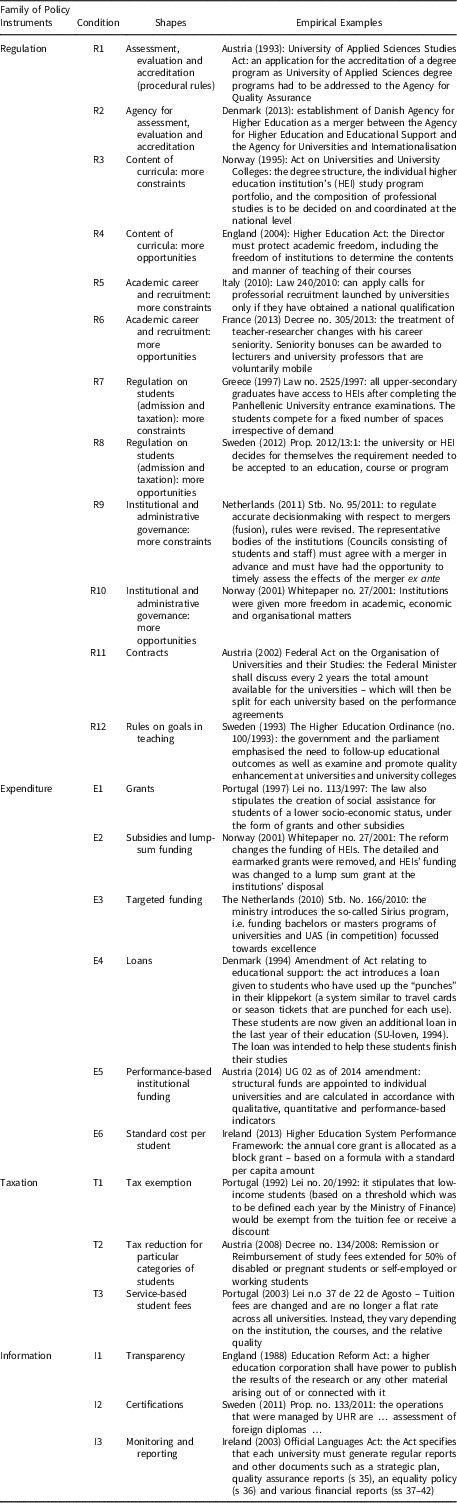

The operationalisation of the conditions of the QCA, namely, the policy instruments, required greater theoretical reflection and greater effort in data gathering, as explained below. More precisely, we operationalised the four families of substantial policy tools (regulation, expenditure, taxation and information) by considering a long list of shapes (24 in total), which are presented in Table 2.

Table 2 Classification of policy instruments and their shapes

In this way, we tried to capture all the possible shapes that substantial HE policy instruments can take. We also avoided constructing categories that were too exclusive, which would have made the data collected in different countries difficult to compare.

Finally, there is a one-year lag between conditions and outcome: conditions are operationalised based on data from 1995 to 2014, while the outcome compares (adjusted) teaching performance in 1996 and 2015. We expect most changes in policy instruments to have a “quasi-immediate” impact on behaviours, and a one-year lag takes this process into account (Footnote 11).

Data collection, data set construction and coding

Following our theoretical framework, which focusses on the different combinations within the existing set of adopted policy instruments, we collected, analysed and coded all pieces of national legislation and regulation regarding HE in all 12 countries under analysis from the mid-1990s onwards. Hundreds of official documents and thousands of pages of national legislation were scrutinised and hand-coded in the search for both substantial and procedural policy instruments. The coding procedure proceeded in three steps. First, we identified the relevant pieces of national HE legislation, namely, the laws, decrees, circulars and ministerial regulations that affected the HES of each country under scrutiny. Second, we reduced every piece of legislation to its main issues. Third, we attributed each of those issues to one of the shapes into which we classified the HE policy instrument repertoire.

For the first two steps, the research strategy was twofold. For Italy, France and the two English-speaking countries (England and Ireland), the analysis was conducted “in house”: the three authors of this article entered the Italian, French, English and Irish pieces of legislation into the data set. Linguistic barriers made it impossible to select and directly code the regulations of the other eight countries (Austria, Denmark, Finland, Greece, the Netherlands, Norway, Portugal and Sweden). Therefore, we contacted a highly reputable country expert for each of these cases to obtain a fully comparable list of relevant regulations and, in turn, of legislative provisions regarding HE.

The attribution of all the analysed decisions to the appropriate categories (substantial policy instruments and related shapes) was again conducted by the authors. This final step of the coding procedure was developed as follows: first, each issue of each legislative provision in each country was coded separately by each author; second, contradictory cases, that is, policy instruments placed in different categories by two or more coders (approximately 15% of the whole sample), were resolved jointly at a subsequent stage.
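Flagging contradictory cases, as described above, amounts to a simple check over the independent codings. The sketch assumes each coder's output is a list of shape labels aligned by issue; the function names and labels are hypothetical.

```python
from typing import Dict, List


def flag_contradictions(codings: Dict[str, List[str]]) -> List[int]:
    """Indices of issues that were not assigned to the same shape by every coder."""
    lists = list(codings.values())
    n_issues = len(lists[0])
    if any(len(codes) != n_issues for codes in lists):
        raise ValueError("every coder must code the same list of issues")
    # An issue is contradictory if the coders produced more than one distinct label
    return [i for i in range(n_issues) if len({codes[i] for codes in lists}) > 1]


def contradictory_share(codings: Dict[str, List[str]]) -> float:
    """Share of issues that need joint resolution."""
    n_issues = len(next(iter(codings.values())))
    return len(flag_contradictions(codings)) / n_issues


if __name__ == "__main__":
    codings = {
        "coder_1": ["loans", "grants", "fees", "monitoring"],
        "coder_2": ["loans", "grants", "exemptions", "monitoring"],
        "coder_3": ["loans", "subsidies", "fees", "monitoring"],
    }
    print(flag_contradictions(codings), contradictory_share(codings))
```

The flagged indices are the items that would go to the joint resolution stage; in the article, this share was roughly 15% of the sample.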

Linking policy instruments with teaching performance in Western Europe

Policy mixes in Western European HESs: an overview of the adopted policy shapes

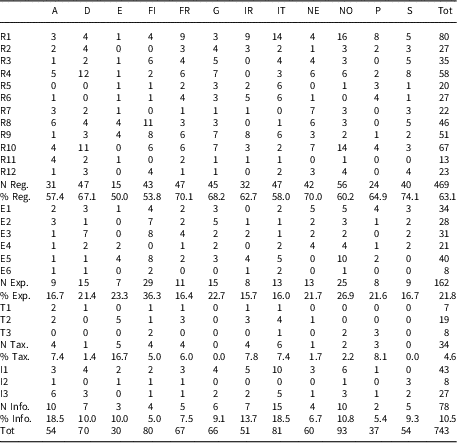

While we refer to the Online Supplementary Material (Appendix A) for all technical details of our QCA, we first provide a general picture of how the countries under scrutiny intervened in HE between 1995 and 2014. More precisely, Table 3 indicates how often each country in our sample resorted to each of the shapes of policy instruments listed in Table 2.

Table 3 Higher education systems’ governance reforms in Western Europe (1995–2014)

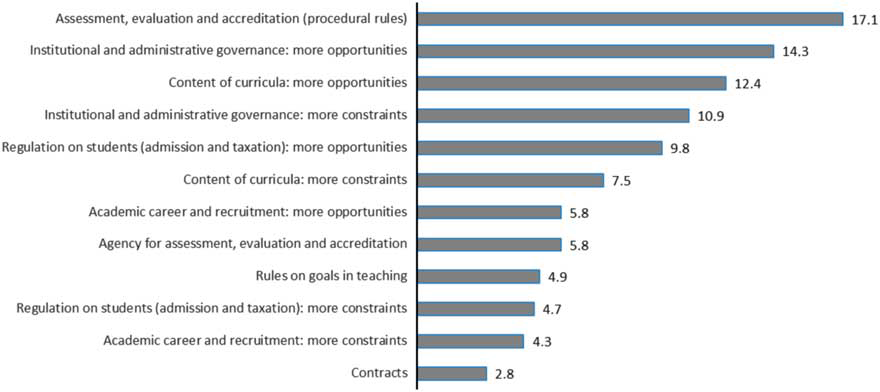

With regard to regulation, the most utilised family of policy instruments, Figure 2 reveals that two of the three most adopted instrumental shapes concern giving universities more opportunities in terms of both the content of curricula and institutional governance (which seems quite coherent with the common template pursued by all the examined countries: the “steering at a distance” governance model). However, the most frequently utilised of all the policy instruments relates to assessment, evaluation and accreditation. This confirms quantitatively what has been repeatedly argued qualitatively in the literature, namely, that Western European countries made extensive use of evaluation tools over the last 20 years (Neave 2012; Rosa and Amaral 2014).

Figure 2 Regulation: Which instruments are utilised the most (%)?

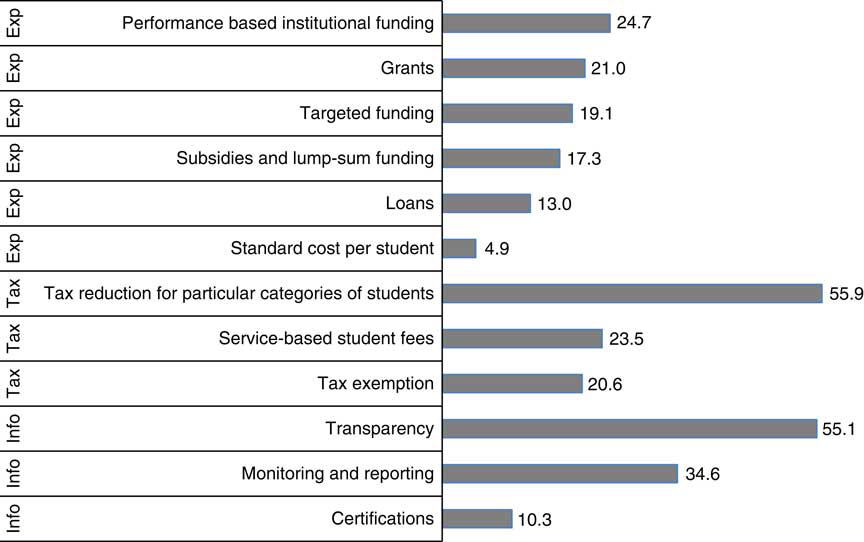

Figure 3 indicates which shapes were the most frequently used within the other three families of substantial instruments. At first sight, this general picture also confirms the trend towards a common template, but with some relevant qualifications.

Figure 3 Other families of substantial policy tools (expenditure, taxation and information): Which instruments are utilised the most (%)?

In fact, expenditure was delivered not only through grants but also through targeted funding and, above all, through performance-based institutional funding. Thus, lump-sum funding, the expenditure instrument most to be expected in a pure “steering at a distance” governance model, was adopted less frequently than anticipated. Governments therefore preferred a more coercive way to allocate public funding (together with an emerging inclination to involve families in paying HE loans) and thus demonstrated their will to maintain a certain degree of control over the behaviour of universities.

Finally, regarding taxation and information, two instrumental shapes were used particularly often: tax reduction for particular categories of students and transparency.

What can be associated with better teaching performance? A configurational analysis

We now return to fuzzy-set QCA (fs-QCA; see footnote 12) to explore empirically the combinations of policy instruments and related shapes that might help explain teaching performance in Western European HESs between 1996 and 2015. While we refer to the Online Supplementary Material (Appendix A) for a careful discussion and justification of the thresholds chosen in the process of “calibrating” the sets (both the conditions and the outcome) (Ragin 2008; Schneider and Wagemann 2012), this section presents the main results of our empirical analysis in terms of both necessary and sufficient relations between the conditions, that is, the instrumental shapes, and the outcome, namely (adjusted) teaching performance.
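Calibration turns a raw measure into fuzzy-set membership scores between 0 and 1. The sketch below follows the "direct method" described by Ragin (2008), in which three qualitative anchors (full non-membership, crossover point, full membership) are mapped onto log-odds of -3, 0 and +3. It is illustrative only: the anchor values are hypothetical placeholders, not the thresholds justified in Appendix A, and we do not claim this is the exact procedure or software the authors used.

```python
import math


def calibrate_direct(x, full_out, crossover, full_in):
    """Direct calibration: map a raw score to fuzzy-set membership.

    The anchors are translated into log-odds of -3, 0 and +3, i.e.
    memberships of about 0.05, exactly 0.5 and about 0.95; scores in
    between are scaled linearly in log-odds, then passed through the
    logistic function.
    """
    if not full_out < crossover < full_in:
        raise ValueError("anchors must satisfy full_out < crossover < full_in")
    if x >= crossover:
        log_odds = 3.0 * (x - crossover) / (full_in - crossover)
    else:
        log_odds = -3.0 * (x - crossover) / (full_out - crossover)
    # Numerically stable logistic transformation of the log-odds.
    if log_odds >= 0:
        return 1.0 / (1.0 + math.exp(-log_odds))
    z = math.exp(log_odds)
    return z / (1.0 + z)


# Hypothetical anchors for a raw outcome measured in percentage points.
ANCHORS = dict(full_out=2.0, crossover=5.0, full_in=10.0)
for raw in (1.0, 2.0, 5.0, 10.0, 12.0):
    print(raw, round(calibrate_direct(raw, **ANCHORS), 3))
```

The crossover is the key design choice: it separates cases that are more in than out of a set, so moving it can change which truth-table row a case falls into.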

The analysis of necessary conditions for improving teaching performance was completed quickly: no condition (or its nonoccurrence) was necessary for the outcome (or for its nonoccurrence) (see footnote 13). In other words, we could not identify any condition that needed to be present or absent in order to observe either a good teaching performance (presence of the outcome) or a bad teaching performance (absence of the outcome) between 1996 and 2015.
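The necessity test described above can be sketched with the standard fuzzy-set formulas: the consistency of "X is necessary for Y" is the sum of min(x, y) divided by the sum of y, and every condition is checked both present and absent (1 - x), against both the outcome and its negation. In this sketch the 0.9 threshold is a common convention that we assume here, and the memberships are invented for illustration; they are not the article's data.

```python
def necessity_consistency(x, y):
    """Consistency of 'X is necessary for Y': sum(min(x_i, y_i)) / sum(y_i)."""
    return sum(min(a, b) for a, b in zip(x, y)) / sum(y)


def necessity_coverage(x, y):
    """Coverage of a necessary X: sum(min(x_i, y_i)) / sum(x_i).
    Low values flag a trivial necessary condition."""
    return sum(min(a, b) for a, b in zip(x, y)) / sum(x)


def negate(memberships):
    """Fuzzy negation: membership in ~X is 1 - membership in X."""
    return [1.0 - m for m in memberships]


def necessary_conditions(conditions, y, threshold=0.9):
    """List every condition, present or absent, whose necessity consistency
    reaches the (assumed) threshold for outcome membership y."""
    found = []
    for name, x in conditions.items():
        for label, scores in ((name, x), ("~" + name, negate(x))):
            if necessity_consistency(scores, y) >= threshold:
                found.append(label)
    return found


# Hypothetical memberships for four cases; not the article's data.
conditions = {"R12": [0.8, 0.3, 0.6, 0.4], "E5": [0.2, 0.9, 0.7, 0.3]}
outcome = [0.9, 0.7, 0.2, 0.1]

print(necessary_conditions(conditions, outcome))          # outcome present: []
print(necessary_conditions(conditions, negate(outcome)))  # outcome absent: []
```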

Much more interesting, however, is the analysis of the sufficient conditions for improving teaching performance in HE, which was conducted with a “truth table”. It clearly would not have been feasible to perform an analysis of sufficiency on 12 cases with dozens of different conditions (i.e. instrumental shapes) because of the limited diversity of the data (Schneider and Wagemann 2012; Vis 2012): with k conditions, a truth table has 2^k rows, one for each logically possible configuration, so most rows would contain no empirical case. We therefore turned to theoretical reflections to hypothesise a set of possible combinations to be tested in our fs-QCA.
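A truth table can be sketched as follows. Each of the 2^k rows is a corner of the property space; a case's membership in a row is the minimum of its memberships across conditions (using 1 - x where the row has a condition absent), and the case is assigned to the row where this value exceeds 0.5. Rows without cases are "logical remainders", which is how limited diversity shows up. The function names, the three conditions and the four cases are hypothetical; the sketch is not the authors' software.

```python
from itertools import product


def truth_table(cases, conditions, outcome):
    """Build a fuzzy-set truth table.

    cases: {case: {condition: membership}}; outcome: {case: membership}.
    Returns {row configuration: (member cases, row consistency)}, where row
    consistency is sum(min(row, outcome)) / sum(row) over all cases.
    """
    table = {}
    for config in product((0, 1), repeat=len(conditions)):
        row = {
            case: min(s[c] if bit else 1.0 - s[c] for c, bit in zip(conditions, config))
            for case, s in cases.items()
        }
        members = sorted(case for case, m in row.items() if m > 0.5)
        total = sum(row.values())
        consistency = (
            sum(min(m, outcome[case]) for case, m in row.items()) / total
            if total > 0 else None
        )
        table[config] = (members, consistency)
    return table


def logical_remainders(table):
    """Rows without any empirical case (the source of limited diversity)."""
    return [config for config, (members, _) in table.items() if not members]


# Hypothetical memberships for three conditions and four cases.
conditions = ["R12", "E5", "T3"]
cases = {
    "A": {"R12": 0.8, "E5": 0.2, "T3": 0.3},
    "B": {"R12": 0.3, "E5": 0.9, "T3": 0.1},
    "C": {"R12": 0.6, "E5": 0.7, "T3": 0.8},
    "D": {"R12": 0.4, "E5": 0.3, "T3": 0.6},
}
outcome = {"A": 0.9, "B": 0.7, "C": 0.2, "D": 0.1}

table = truth_table(cases, conditions, outcome)
print(len(table), len(logical_remainders(table)))  # 8 4
print(2 ** 24)  # rows needed for 24 instrumental shapes: 16777216
```

Even with three conditions, half of the rows are empty for four cases; with two dozen shapes and 12 countries, virtually the whole table would consist of remainders.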

First, we began with 45 potential configurations of conditions that the literature on HES performance suggests are theoretically relevant. These combinations of instrumental shapes build mainly on the literature emphasising the adoption of similar policy instruments to follow the common template of the “steering at a distance” model. The combinations also build on the empirical evidence, and the contradictions, emerging from variable-oriented studies that focussed on the determinants of performance in HE. Regarding the “steering at a distance” literature, we have already emphasised the substantial body of work describing the ways governments have been changing governance in HE by improving institutional autonomy (and its dimensions, such as budgetary autonomy, degree of freedom in curricular content and autonomy in recruiting academic staff), quality assurance, accreditation, teaching and research assessments, monitoring, and varieties of funding mechanisms (Gornitzka et al. 2005; Cheps 2006; Lazzaretti and Tavoletti 2006; Maassen and Olsen 2007; Trakman 2008; Huisman 2009; Shattock 2014; Capano et al. 2016).

This literature clearly guided our choice to consider, for each family of substantial policy instruments, the shapes that seemed best to represent the operative dimensions of the main categories of government intervention. This choice was reinforced by the contributions that focussed on the real effects of performance-based funding, institutional autonomy and the degree of centralisation of the governance system. This literature produced contrasting empirical evidence and thus suggested a strategic dimension that we should take into consideration. For example, with respect to performance and targeted funding as a cause of high graduation and research rates, the relevant studies showed the weak performative capacity of these instruments. Most of these studies focussed on the United States (US), where many states began introducing performance criteria to determine the allocation of extra resources at the end of the 1970s (Volkwein and Tandberg 2008; Rabovsky 2012; Rutherford and Rabovsky 2014; Tandberg and Hillman 2014). This evidence sits uneasily with the widespread use of these types of instruments by the governments of the countries we analysed. Thus, we considered all targeted expenditure tools relevant in our combinations.

Regarding institutional autonomy, contradictory evidence has emerged from reputable studies. For example, in their comparison between the EU and the US, Aghion et al. (2010) found that high institutional autonomy (and a competitive environment) was positively correlated with high performance in both educational attainment and research. By contrast, Braga et al. (2013) showed that high institutional autonomy negatively affected the level of educational attainment.

With respect to the level of centralisation of the governance system, Knott and Payne (2004) classified systemic centralisation in US states as high, intermediate or low, depending on the scope of the decision-making powers held by state boards. They tested systemic centralisation as a condition affecting an array of resource and productivity measures, including the size of university revenue and the number of published articles. They concluded that state governance matters and that flagship universities are penalised by centralisation, which reduces their total revenues, research funding and number of published articles. However, centralisation was also found to reduce tuition revenues and, presumably, the cost of enrolment. The worst overall performance occurred under mild centralisation. This interpretation, however, contrasts with a qualitative study of five US states conducted by Richardson and Martinez (2009), who argued that universities in centralised systems might perform better than those in decentralised designs with respect to access and graduation rates.

Thus, drawing on the literature, and assuming that governments have tried to pursue the “steering at a distance” model in accordance with their own national identity, we combined these shapes into 45 different policy mixes: 30 consisting of four instrumental shapes belonging to different families of instruments and 15 consisting of five of those same instrumental shapes (see Table A4 in the Online Supplementary Material for the full list of these 45 policy mixes).

We then performed 45 different QCAs, one for each of the selected policy mixes, to ascertain which combination of conditions was the most theoretically convincing and empirically robust. To accomplish this, we followed a two-step process. First, we focussed on the consistency and coverage values of the different (intermediate) final solutions. In the QCA literature, it is generally argued that “coefficients of consistency and coverage provide important numeric expressions for how well the logical statement contained in the QCA solution term fits the underlying empirical evidence and how much it can explain” (Schneider and Wagemann 2010, 414). Scholars therefore use these coefficients to measure the goodness of fit of the tested theoretical models (Ragin 2006). This first step revealed two combinations whose consistency and coverage values were much higher than those of any other and reached a notable level of about 0.90 or higher (see footnote 14). However, limiting our comparison to consistency and coverage alone would be insufficient (Braumoeller 2015; Rohlfing 2018); it is also important to account for the theoretical plausibility of solutions and for the (groups of) countries identified by each solution term. For this reason, in our second step, we carefully scrutinised those two combinations of instrumental shapes, looking for the results that appeared more theoretically plausible and empirically grounded. In applying these further selection criteria, we realised that one combination was preferable to the other (see footnote 15).
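The two goodness-of-fit coefficients used in the first step can be sketched for a single sufficient condition or policy mix X (Ragin 2008): consistency is the sum of min(x, y) divided by the sum of x, and coverage is the same numerator divided by the sum of y. The memberships below are hypothetical, and the 0.9 benchmark in `fits` is an assumption that simply mirrors the value mentioned in the text.

```python
def sufficiency_consistency(x, y):
    """Consistency of 'X is sufficient for Y': sum(min(x, y)) / sum(x)."""
    return sum(min(a, b) for a, b in zip(x, y)) / sum(x)


def sufficiency_coverage(x, y):
    """Coverage of a sufficient X: share of outcome membership it accounts
    for, sum(min(x, y)) / sum(y)."""
    return sum(min(a, b) for a, b in zip(x, y)) / sum(y)


def fits(x, y, benchmark=0.9):
    """True if both coefficients reach the benchmark (0.9 here, an assumption)."""
    return (sufficiency_consistency(x, y) >= benchmark
            and sufficiency_coverage(x, y) >= benchmark)


# Hypothetical memberships of four cases in a policy mix (x) and in the outcome (y).
x = [0.8, 0.7, 0.2, 0.1]
y = [0.9, 0.6, 0.3, 0.1]
print(round(sufficiency_consistency(x, y), 3), round(sufficiency_coverage(x, y), 3))  # 0.944 0.895
print(fits(x, y))  # False: consistent, but coverage falls just short
```

The example shows why both coefficients were compared: a mix can be highly consistent with sufficiency yet explain too little of the outcome.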

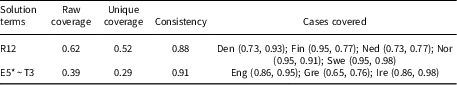

Thus, at the end of the above-described analytical process, the best combination of instrumental shapes that emerged from the QCA for explaining the teaching performance of the analysed sample of countries between 1996 and 2015 consisted of R1 (assessment, evaluation and accreditation); R10 (institutional and administrative governance: more opportunities); R12 (rules on goals in teaching); E5 (performance-based institutional funding); and T3 (service-based student fees) (Table 4).

Table 4 Explaining teaching performance: the best policy mix (intermediate solution)

Note: Solution coverage (proportion of membership explained by all paths identified): 0.908847.

Solution consistency (“how closely a perfect subset relation is approximated”) (Ragin 2008, 44): 0.895641.

Raw coverage: proportion of memberships in the outcome explained by a single path.

Unique coverage: “proportion of memberships in the outcome explained solely by each individual solution term” (Ragin 2008, 86).

Complex solution: R12*~R1*~E5*~T3 + E5*~R10*~R12*~T3 + E5*~R1*~R12*~T3 + R10*R12*E5*T3 (coverage 0.85; consistency 0.95).

Parsimonious solution: R12 + R10*~R1 + E5*~T3 + T3*~R1 + R10*T3 + R1*~R10*~T3 (coverage 0.95; consistency 0.85).
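The solution formulas above use Boolean notation: `*` is a fuzzy AND (minimum), `+` a fuzzy OR (maximum) and `~` a negation (1 - x). The sketch below evaluates such a formula and computes the coefficients defined in these notes; following Ragin (2008), the unique coverage of a term is taken as the solution coverage minus the coverage of the solution with that term removed. The formula and memberships in the example are hypothetical and are not the article's Table 4 results.

```python
def term_membership(term, case):
    """Fuzzy AND of literals such as 'R12' or '~R1': min over literals, 1 - x for '~'."""
    values = []
    for literal in term.split("*"):
        literal = literal.strip()
        if literal.startswith("~"):
            values.append(1.0 - case[literal[1:]])
        else:
            values.append(case[literal])
    return min(values)


def solution_memberships(terms, cases):
    """Fuzzy OR of solution terms: max over terms, case by case."""
    return [max(term_membership(t, c) for t in terms) for c in cases]


def coverage(memberships, outcome):
    return sum(min(m, y) for m, y in zip(memberships, outcome)) / sum(outcome)


def coverage_report(formula, cases, outcome):
    """Return solution coverage, solution consistency and, per term, (raw, unique) coverage."""
    terms = [t.strip() for t in formula.split("+")]
    solution = solution_memberships(terms, cases)
    sol_coverage = coverage(solution, outcome)
    sol_consistency = sum(min(m, y) for m, y in zip(solution, outcome)) / sum(solution)
    report = {}
    for i, term in enumerate(terms):
        raw = coverage([term_membership(term, c) for c in cases], outcome)
        others = terms[:i] + terms[i + 1:]
        rest = coverage(solution_memberships(others, cases), outcome) if others else 0.0
        report[term] = (raw, sol_coverage - rest)
    return sol_coverage, sol_consistency, report


# Hypothetical two-path formula and memberships; not the article's data.
cases = [
    {"R12": 0.9, "E5": 0.2, "T3": 0.4},
    {"R12": 0.7, "E5": 0.6, "T3": 0.3},
    {"R12": 0.2, "E5": 0.8, "T3": 0.1},
    {"R12": 0.1, "E5": 0.3, "T3": 0.9},
]
outcome = [0.8, 0.7, 0.9, 0.2]
cov, cons, per_term = coverage_report("R12 + E5*~T3", cases, outcome)
print(round(cov, 3), round(cons, 3), {t: tuple(round(v, 3) for v in p) for t, p in per_term.items()})
```

Because paths can overlap, unique coverages need not sum to the solution coverage; the difference is the membership explained by more than one path.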

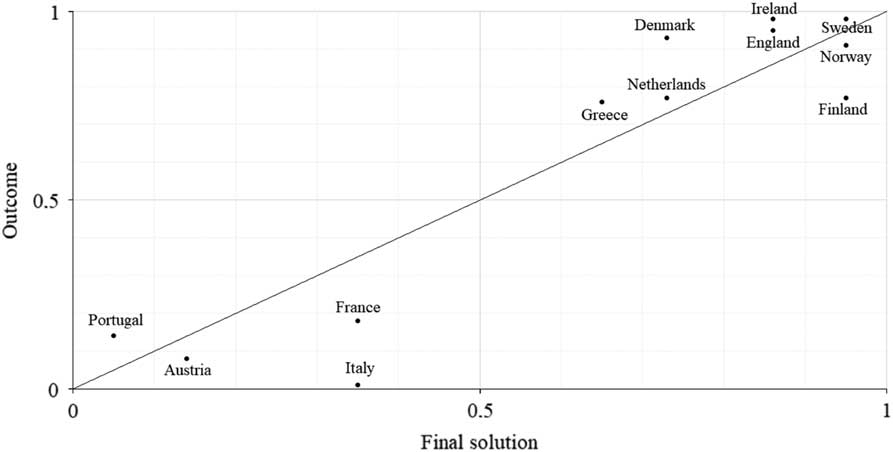

The consistency value of the intermediate solution was impressive (0.90), and the coverage of the (intermediate) solution formula was even better (0.91). There was no “deviant case for coverage” (Schneider and Rohlfing 2013, 585). As shown in Figure 4, six cases (i.e. Denmark, England, Greece, Ireland, the Netherlands and Sweden) lay above the diagonal in the upper-right quadrant and were thus “typical cases”, whereas two cases (i.e. Finland and Norway) lay in the same quadrant but below the diagonal and were thus “deviant cases in consistency of degree”. Finally, the four cases in the lower-left quadrant (namely, Austria, France, Italy and Portugal), being good examples of neither the solution terms nor the outcome, did not merit particular attention.

Figure 4 The “best policy mix”: final XY plot.
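The reading of the XY plot above can be sketched as a rule that places each case according to its membership in the solution (x) and in the outcome (y), following the case typology of Schneider and Rohlfing (2013) as used in the text. The label for the lower-left quadrant and the handling of scores exactly at 0.5 are our own assumptions, and the scores in the example are hypothetical.

```python
def classify_case(x, y):
    """Place a case on the XY plot of solution membership (x) vs outcome membership (y)."""
    if x > 0.5 and y > 0.5:
        # Upper-right quadrant: on/above the diagonal is typical, below it deviant in degree.
        return "typical" if y >= x else "deviant consistency in degree"
    if x > 0.5 and y < 0.5:
        return "deviant consistency in kind"
    if x < 0.5 and y > 0.5:
        return "deviant coverage"
    if x < 0.5 and y < 0.5:
        return "lower-left (neither solution nor outcome)"
    return "on a 0.5 anchor (unclassified)"


# Hypothetical (x, y) scores for illustration only.
points = {"case 1": (0.7, 0.9), "case 2": (0.9, 0.7), "case 3": (0.2, 0.1)}
for name, (x, y) in points.items():
    print(name, classify_case(x, y))
```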

The above analysis of policy mixes thus shows that, in our sample of countries, two distinct paths were associated with an increase in teaching performance. First, in the Scandinavian countries (Denmark, Finland, Norway and Sweden) together with the Netherlands, rules on systemic goals in teaching, which clearly indicate the aims to be achieved by institutions, emerged as the prevailing policy instrument. Second, in the Anglo-Saxon countries (England and Ireland) together with Greece, improved performance was associated with performance-based institutional funding; moreover, these three countries were also similar in that they made little use of service-based student fees.

Discussion of the findings

This analysis showed how instrumental shapes were combined in the 12 analysed countries; we also treated these mixes with fs-QCA to assess which combinations of instrumental shapes were associated with better systemic performance in teaching. The empirical evidence is quite promising in both respects.

Regarding the composition of the national packages of instrumental shapes, the distributions of substantial instruments and their possible shapes revealed significant variation in how countries mixed the same substantial instruments and shapes. This variation, of course, calls for a better understanding of which combination(s) of instrumental shapes can be associated with better HES performance in terms of teaching. This emerging variation in the composition of policy mixes also clearly shows that the common template, the “steering at a distance” mode of governing HESs, was applied very loosely, that is, as a generic framework that each country interpreted in a specific and idiosyncratic way.

The findings emerging from our QCA treatment are relevant with respect to both the specific issue of identifying the most effective policy mixes in governing HESs and the more general theoretical and empirical problem of operationalising governance shifts and their potential policy effectiveness.

The first issue is that, among the eight cases showing the outcome, there is a clear-cut divide based on the presence of only one condition (instrumental shape). On the one hand, there are countries in which the performance funding shape prevails, and thus the government “steers at a distance” by using funding to direct the behaviour of institutions. This is the case for England, Greece and Ireland, where the conjunction of performance funding and the absence of service-based student fees is sufficient for the outcome. It is surprising that this solution term groups together countries belonging to different traditions of governing HE.

On the other hand, the four Nordic countries plus the Netherlands are clustered together by the presence of a significant governmental role in indicating the systemic goals to be achieved, which is a sufficient condition for the outcome. In these countries, the government has largely pursued a policy of institutional autonomy but has counterbalanced it by clearly indicating what the overall system is expected to deliver in terms of teaching performance.

This clear-cut result raises a relevant issue because it shows that the mix of instrumental shapes makes a difference regardless of the “quantity” of shapes from the same family of substantial instruments that have been introduced. For example, in the Greek case, the association of performance funding with the outcome occurs alongside a high percentage of adopted regulatory instrumental shapes, while in the Norwegian and Finnish cases, a specific regulatory shape (R12, rules on goals in teaching) appears more relevant than expenditure shapes, even though these two Nordic countries ranked first in terms of the percentage of expenditure instrumental shapes adopted.

This evidence raises a more general consideration: each solution term highlights the relevance of the presence of only one instrumental shape. This finding suggests the hypothesis that some specific shapes of policy instruments make a difference regardless of the other shapes of policy instruments alongside which they operate. This pattern could arise simply because we chose only the instruments most frequently adopted according to the specialised literature, or because the final results reflect the cumulative effect of different compositions of policy mixes adopted over time. However, the hypothesis that a small combination of instruments can make a significant difference irrespective of the other instruments in the policy mix actually adopted also deserves more empirical attention because, if confirmed, it could have a relevant impact on current trends in the literature on policy instruments and on policy performance. Obviously, this evidence could depend on different contexts and on different implementation practices (in terms of policy instruments, this means primarily the rules and organisational procedures through which instrumental shapes are implemented); it could also depend on a specific temporal sequence of adoption of policy instruments (e.g. the relevance of both systemic goals and performance funding could require that significant and effective institutional autonomy over curricula and recruitment had previously been adopted and was already at work). Even accepting this consideration, however, the emerging hypothesis that only a small number of instrumental shapes, or even a single shape regardless of the others adopted, can be associated with performance improvement appears intriguing and promising.

Conclusions and future research

We devoted this article to addressing a general problem in analysing governance shifts in public policy, focusing empirically on HE as an exemplary field. We assumed that understanding whether and how governance changes requires a detailed perspective that begins from the basic component of governmental actions and governance arrangements: policy instruments. We proposed a classification of substantial instruments (regulation, expenditure, taxation and information) to grasp the complete spectrum of behaviour that governments can seek to induce. Then, we operationalised these substantial instruments according to 24 different shapes. We used this long list to code the instrumental choices made in 12 European countries over the last 20 years in their HES governance arrangements. Afterwards, we performed an fs-QCA to assess which combination of instrumental shapes is associated with good systemic performance in teaching, operationalised as the (adjusted) increase in the percentage of 25- to 34-year-old adults with a university-level degree. The empirical analysis allowed us to identify the most theoretically convincing and empirically robust combination of instrumental shapes associated with the teaching performance of the analysed countries between 1996 and 2015. More specifically, it was possible to identify two distinct paths associated with the outcome: in the Scandinavian countries and the Netherlands, rules on goals in teaching were the prevailing policy instrument, whereas in the Anglo-Saxon countries and Greece, good teaching performance was associated mainly with the presence of performance-based institutional funding, together with the absence of service-based student fees.

Of course, we are aware of the intrinsic limitations of our research design: first, the link between policy instruments and teaching performance is indirect; second, policy performance is driven by many factors that interact with the adopted instrumental shapes, and we were not able to assess the role of external factors ex ante. For example, expenditure instrumental shapes may have a different impact on teaching performance depending on whether a drastic reduction in public funding is occurring concomitantly; a peak in enrolment can increase the opportunity to produce more graduates, especially if it is linked to an increase in assigned grants; an increase in institutional autonomy (and thus in competition among universities in their teaching offerings) may have different effects according to the general socio-economic situation of the country; and similar considerations apply to many more instrumental shapes.

Nonetheless, we are convinced that policy instruments can be understood as possible explanatory conditions (among others) and thus that a specific focus on them is essential to better understand and order what really occurs when governments decide to intervene in a policy field with the only means at their disposal: choosing specific instrumental shapes or combinations of them. Accordingly, the results of our exploration are of interest both for the study of performance in HE and, more generally, for the study of the effects of governance shifts in public policy and, in turn, of the portfolios or mixes of adopted policy instruments.

With regard to the literature evaluating the performance of university systems, our empirical evidence shows that the same outcome is associated with specific configurations of conditions (shapes of policy instruments) that must be present or absent to work. This reading of how governance arrangements work implies that different combinations of instrumental shapes can be associated with similar effects and, thus, that these combinations should be properly contextualised. In this sense, the empirical evidence presented in this article shows that the evaluative literature on HE performance should adopt a third method, lying between the variable-oriented research strategy and the thick description of case-study analysis, to fully grasp what may be important in terms of performance.

Furthermore, with respect to the HE literature that has discussed, from a comparative perspective, the bundle of changes towards a common template (the “steering at a distance” model), certain national paths have clearly emerged in translating the common template, each focusing on certain instruments over others. Why these paths, these specific combinations of instruments, have been chosen lies beyond the scope of this article, but this question could be taken up in the future for a better understanding and explanation of the process of governance shifts, their features, their drivers and their decisional outputs.

Finally, regarding the broader literature on policy instrument mixes, this article proposes a research design that can help overcome that literature's current limitations, and thus a new way (1) to conceptualise and operationalise policy instruments so as to better describe the content of policy mixes and (2) to assess how these mixes really work in terms of performance.

Regarding the content of policy mixes, we have shown how reasoning in terms of instrumental shapes can be useful for better grasping the multidimensionality of policy instruments. Operationalising policy instruments in this way yields a more detailed picture of the policy instruments actually adopted and thus makes more fine-grained analyses, including comparative ones, possible. This evidence calls for taking seriously the suggestion of Ingram and Schneider (1990) and Salamon (2002): to grasp the real composition of policy mixes (and their potential effects), the various types of policy instruments should be disaggregated into smaller units, and the shapes through which policy instruments are actually adopted when decision-makers design policies should thus be identified.

Regarding the functioning of policy mixes with respect to policy performance, this article has shown (or, at least, has raised the intriguing hypothesis) that often only a limited combination of instrumental shapes may truly be associated with the outcome of interest, despite the larger number of instrumental shapes that compose the adopted policy mix. Thus, it could be that only a few instrumental shapes make a difference, regardless of the more articulated composition of the actual policy mix.

We are perfectly aware that another limitation of our analysis is that we did not consider the institutional “impact” of instruments (or the content of the implementation process, where different institutional interpretations and strategies can be at work); we were unable to do so because the analytical focus was on policy design and thus on the formal decisions made at the national level. Although we have focused on a specific dimension of policy instrument mixes, we believe that this could be a necessary first step towards a deeper analysis of the changes obtained over time. Overall, the way decision-makers design policies by arranging different instrumental shapes should matter.

There are obviously different possible paths for further research starting from the approach we have presented in this article. We describe four of them here.

The first path would be to extend the research to countries belonging to different geo-political contexts and with different legacies in governing HESs and, more ambitiously, to broaden its scope by including and comparing different policy fields. This extension could also allow us to test whether it is possible to find a list of instrumental shapes with cross-sectoral analytical use.

The second path would be to deepen the analysis in order to investigate the working rules of the shapes through which the substantial instruments are used (implementation practices). This step would also mean working on the dimensions of the rules through which each shape is designed, that is, the rules through which decision-making powers and competences are attributed and accountability rules are fixed when the shapes are designed. For example, regarding the use of performance funding, the focus should be on the percentage of public funding allocated, the criteria for allocating performance funding, the means and timing of evaluation and so on. Obviously, this path would be very complex, but it could be a very interesting and promising way to elucidate more conclusively how instruments work in day-to-day policy dynamics.

The third path would be to focus on a few exemplary national cases for a deeper analysis of the diachronic sequence of instrumental choices made by governments and of their cumulative effects in terms of association with performance. This analytical deepening could allow us to clarify whether some instrumental shapes matter more than others or whether their apparent relevance is simply the end result of a specific sequence of instrumental choices.

The fourth path would be to analyse the diachronic interaction between structural/environmental factors and policy mixes in determining policy performance, in order to better assess the “association” between some instrumental shapes and improved performance that we found in our research (and possibly to upgrade it to the “explanatory” level).

We are firmly convinced that policy instruments matter and that, in the future, more empirical work should be done to better understand how these instruments do their job.

Supplementary material

To view supplementary material for this article, please visit https://doi.org/10.1017/S0143814X19000047

Data Availability Statement

This study does not employ statistical methods and no replication materials are available.

Acknowledgement

The research content presented in this article was made possible by a grant of the Italian Ministry of Education (PRIN 2015). We thank three anonymous referees for their helpful comments and suggestions.