Published online by Cambridge University Press: 05 January 2024

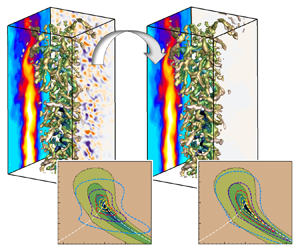

The super-temporal-resolution (STR) reconstruction of turbulent flows is an important data-augmentation task, both for extending the reach of measurement techniques and for understanding turbulence dynamics. This paper proposes a data assimilation (DA) strategy based on the weak-constraint four-dimensional variational (4D-Var) method to perform STR reconstruction of a turbulent jet beyond the Nyquist limit from low-sampling-rate observations. Highly resolved large-eddy simulation (LES) data are used to produce synthetic measurements, which serve both as observations and as validation references. A segregated assimilation procedure is implemented that assimilates the initial condition, the inflow boundary condition and the model error separately. Two types of observational data are tested. The first type is down-sampled LES data, which contain many small-scale turbulence structures, with or without added synthetic noise. The DA results show that the temporal variation of the small-scale structures is well recovered even when the observations are noisy. The spectra are resolved up to a frequency approximately one order of magnitude higher than the Nyquist frequency of the observations. The second type is low-sampling-rate tomographic particle image velocimetry (tomo-PIV) data, with or without injected small-scale structures. DA improves the modulation between the large-scale structures contained in the tomo-PIV fields and the small scales injected via the observations, and the resulting small scales in the STR reconstruction exhibit, to a considerable extent, the characteristics of authentic turbulence. Additionally, DA yields much smaller errors than the Wiener filter in predicting particle positions, demonstrating its potential for Lagrangian particle tracking in measurement techniques.
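To make the weak-constraint idea concrete, the following is a minimal sketch on a toy linear system; it is not the authors' LES-based implementation. It assumes a linear model x_{k+1} = M x_k + η_k, Gaussian background, observation and model-error covariances B, R and Q, and observations available only every few steps. The function names (`whitening`, `weak_constraint_4dvar`) and all parameter values are hypothetical. The control vector is the whole trajectory, so model error is penalised through Q rather than forced to zero as in strong-constraint 4D-Var. Only the initial condition and the model error are estimated (the toy has no inflow boundary condition), and because the problem is tiny and linear the cost is minimised exactly by least squares instead of the adjoint-based iterative optimisation a flow solver would require.

```python
import numpy as np


def whitening(C):
    """Return W with W.T @ W == inv(C), so ||W r||^2 == r^T C^{-1} r."""
    return np.linalg.cholesky(np.linalg.inv(C)).T


def weak_constraint_4dvar(M, H, obs, xb, B, R, Q, n_steps):
    """Weak-constraint 4D-Var for a linear toy model x_{k+1} = M x_k + eta_k.

    Minimises (up to a factor 1/2)
      ||x_0 - xb||^2_{B^-1} + sum_{k in obs} ||y_k - H x_k||^2_{R^-1}
      + sum_{k<n_steps} ||x_{k+1} - M x_k||^2_{Q^-1}
    over the full trajectory x_0..x_{n_steps}. obs maps step k -> y_k.
    """
    n = M.shape[0]
    N = n_steps + 1
    Wb, Wr, Wq = whitening(B), whitening(R), whitening(Q)
    blocks, rhs = [], []

    def block_row(entries):
        # entries: list of (time index, matrix) placed in one block row
        r = np.zeros((entries[0][1].shape[0], n * N))
        for k, mat in entries:
            r[:, k * n:(k + 1) * n] += mat
        return r

    # Background term on the initial condition
    blocks.append(block_row([(0, Wb)]))
    rhs.append(Wb @ xb)
    # Observation terms, only at the sparsely sampled steps
    for k, y in obs.items():
        blocks.append(block_row([(k, Wr @ H)]))
        rhs.append(Wr @ y)
    # Model-error terms eta_k = x_{k+1} - M x_k, weighted by Q^{-1}
    for k in range(n_steps):
        blocks.append(block_row([(k + 1, Wq), (k, -Wq @ M)]))
        rhs.append(np.zeros(n))

    A = np.vstack(blocks)
    b = np.concatenate(rhs)
    x, *_ = np.linalg.lstsq(A, b, rcond=None)
    return x.reshape(N, n)


def rms(e):
    return float(np.sqrt(np.mean(e ** 2)))


# Toy "flow": a 2-D rotation advancing 0.9 rad per step. Sampling every
# 4 steps (3.6 rad > pi between samples) violates the Nyquist criterion.
theta = 0.9
M = np.array([[np.cos(theta), -np.sin(theta)],
              [np.sin(theta), np.cos(theta)]])
n_steps, stride = 40, 4
truth = [np.array([1.0, 0.0])]
for _ in range(n_steps):
    truth.append(M @ truth[-1])
truth = np.array(truth)

# Noisy, low-sampling-rate observations of the full state
rng = np.random.default_rng(0)
obs = {k: truth[k] + 0.01 * rng.standard_normal(2)
       for k in range(0, n_steps + 1, stride)}
I = np.eye(2)
x_da = weak_constraint_4dvar(M, I, obs, xb=np.array([0.5, 0.5]),
                             B=100 * I, R=1e-4 * I, Q=1e-4 * I,
                             n_steps=n_steps)

# Baseline: linear interpolation between the sparse samples
ks = sorted(obs)
x_lin = np.column_stack([np.interp(np.arange(n_steps + 1), ks,
                                   [obs[k][i] for k in ks])
                         for i in range(2)])
print("4D-Var RMS error:", rms(x_da - truth))
print("linear-interp RMS error:", rms(x_lin - truth))
```

Because the samples are aliased, interpolating them produces large errors at the unobserved steps, whereas the model-constrained reconstruction recovers the intermediate states to roughly the noise level. This mirrors, in a greatly reduced form, the idea of using the dynamics to supply information beyond the Nyquist limit.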