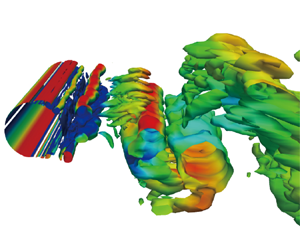

$(Re) = 100$ and significantly mitigates lift coefficient fluctuations. Hence, DF-DRL allows the deployment of sparse sensing of the flow without degrading the control performance. This method also exhibits strong robustness in flow control under more complex flow scenarios, reducing the drag coefficient by 32.2 % and 46.55 % at $Re = 500$ and 1000, respectively. Additionally, the drag coefficient decreases by 28.6 % in a three-dimensional turbulent flow at $Re = 10\,000$. Since surface pressure information is more straightforward to measure in realistic scenarios than flow velocity information, this study provides a valuable reference for experimentally designing the active flow control of a circular cylinder based on wall pressure signals, which is an essential step toward further developing intelligent control in a realistic multi-input multi-output system.