Background

Organizations supporting translational research and translational science, such as National Institutes for Health (NIH) Clinical and Translational Science Award (CTSA) hubs, typically engage in an array of activities including research funding programs, research support initiatives, consultations and training, and workforce development programming. In addition, CTSA hubs are mandated to incorporate evaluative functions, including developing plans for Continuous Quality Improvement (CQI) and assessing the impact of the CTSA hubs. However, historically, the collection, analysis, and synthesis of metrics for CQI and holistic evaluation of CTSA hubs for internal and reporting purposes have been challenging. Programs within a hub may have vastly different goals, activities, and outcomes that result in different metrics which makes data synthesis challenging. Moreover, evaluation processes may be largely focused solely on counting outputs, such as number of funded awards or consults. While critical, this focus limits the degree to which more impact-oriented results, such as movement of research from one translational stage to another or demonstration of societal benefits, are measured and reported. Last, the reporting of activities may be summative and not include or allow for readily accessible information about who received support or services; a lack of such data inhibits examination of the equity with which CTSA hub services are distributed (e.g., by department, study types, and characteristics of investigators).

To overcome these challenges, the Duke Clinical and Translational Science Institute (CTSI) undertook an initiative to create an evaluation platform enabling strategic decision-making, impact assessment, and equitable resource distribution. This platform, the Translational Research Accomplishment Cataloguer (TRACER), was developed to enhance capacity to (1) enable the documenting of activities or accomplishments such as consults, project milestones, and translational benefits aligned with indicators in the Translational Science Benefits Model (TSBM) [Reference Luke, Sarli and Suiter1]; (2) facilitate the ability to collect and access key information about CTSI activities within and across individual units to answer critical questions about process, outcomes, and potential gaps and opportunities; (3) ease reporting burdens; and (4) provide insights critical for decision-making and CQI, which are important for strategic learning and are also required by many funders. In this paper, we present the design, development, and initial implementation of TRACER. This paper begins by describing TRACER’s origins and development process. It then describes the platform itself, the role of Cores in informing the design, and the process for onboarding Cores onto the platform. It concludes with reflection on lessons learned, including facilitators and challenges of development and implementation, and future directions. This work can inform others’ understanding of the process of developing such an infrastructure, and it can provide an example of how one can align a broader strategic aim such as understanding programs, progress, and effect across an organization with specific tool development. This has additional direct relevance for those working in clinical and translational research and clinical and translational science, given the subject matter and TRACER’s capacity to advance translational science.

Conceptualization and development overview

As early as 2018, the Duke CTSI, with key involvement from the Administration team, Evaluation team, and CTSI leadership, determined to address the challenges associated with administrative and evaluative data collection, analysis, and synthesis. This resulted in a collaboration between multiple entities for ongoing development (hereafter, the Development Team), including members of the Duke CTSI Administration team, Evaluation team, Informatics Core, and the Duke Office of Academic Solutions and Information Systems (OASIS). This group developed foundational planning including a project charter and scoping document (see Supplement 1). In tandem, the team conducted a landscape analysis aimed at identifying existing technical solutions. The team determined that existing tracking efforts did not address the key issues identified earlier (i.e., tying activity to impact); the Development Team further determined that general project management programs were insufficient given intents and needs. The Development Team also examined other existing CTSA-developed tools but were not able to identify one where the architecture or functions were conducive to, or aligned with, our goals or processes. This informed the decision, as outlined in planning documents, to develop a system specifically designed to address identified needs.

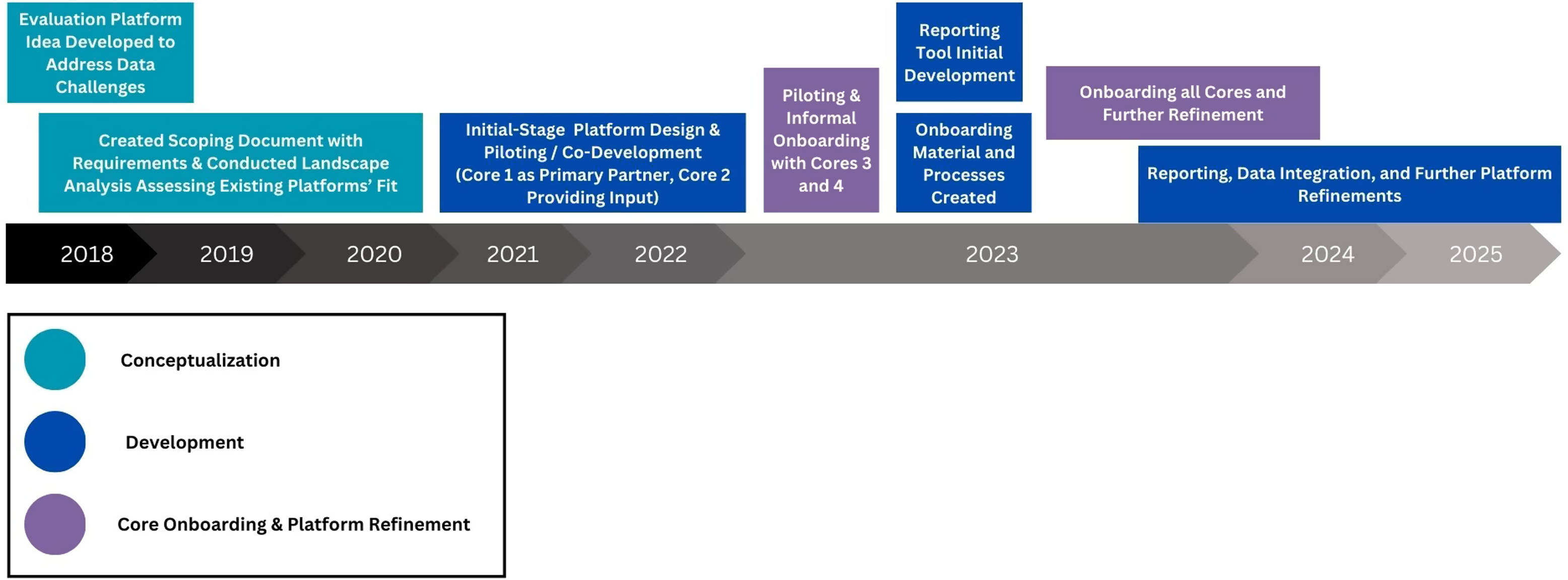

Iterative development of the initial-stage platform design of TRACER was conducted primarily during the spring and summer of 2021 (See Figure 1). As OASIS developed the technical platform based on collaborative design, other representatives of the Development Team collaborated with CTSI Cores for iterative feedback to inform continuing development. Briefly, TRACER is a web-based platform, developed in the Ruby on Rails programming language, that allows for manual data input or the importing of data from other systems via database lookups and Application Programming Interface (API) integrations. Starting in fall of 2021, the TRACER platform was piloted and co-developed with the CTSI Pilots Core as a primary partner and with additional support from the Community-Engaged Research Initiative (CERI). The platform was further refined starting in 2023 based on discussions and piloting with an additional two Cores (Workforce Development [WFD]; Clinical Data Research Networks [CDRN]), at which time a set process for Core “onboarding” into TRACER, as well as initial TRACER reporting functionalities, were also developed. Finally, additional Duke CTSI Cores were onboarded to the platform. The iterative development and onboarding are described in more detail below (“Collaborative Development” and “Core Onboarding”).

Figure 1. TRACER development timeline. TRACER = Translational Research Accomplishment Cataloguer.

Additional TRACER functionalities were informed by advancements in the field of translational research and translational science. Most notably, during development, the platform scope was expanded to include tracking of potential and realized benefits using the TSBM. The TSBM [Reference Luke, Sarli and Suiter1] includes a list of translational science benefit indicators grouped within the following four domains: clinical (e.g., diagnostic procedures), community (e.g., disease prevention), economic (e.g., cost savings), and policy (e.g., legislative involvement). Including TSBM indicators expanded the scope of TRACER beyond key milestones (e.g., publication and follow-on funding) to include a lens on TSBM-based impact.

TRACER development timeline. Note that this presents an overview, but there were intersections as described below between elements, and particularly between development and Core onboarding.

TRACER structure

Hierarchy

TRACER architecture hierarchy reflects the structure of Duke CTSI. The overarching organization is based on the nested nature of Pillars, Cores, Programs, and Projects; see Figure 2 for hierarchy. The highest-order level is “Pillars” which are broad areas of focus. Grouped within Pillars are individual “Cores” (i.e., teams working on specific areas). Each Core can then have distinct “Programs” (i.e., Core initiatives), with “Projects” reflecting more specific activities conducted under these initiatives. In addition, optional activities live outside this hierarchy and can be associated with individual Projects, Programs, and Cores. Each of these are discussed further below. TRACER structure also permits indication of collaboration between Cores (e.g., collaboration on Programs, Projects, and educational activities) to reflect the real-world cross-Core engagement.

Figure 2. Overview of platform organizational hierarchy.

Overview of platform organizational hierarchy. Within TRACER, Pillars are the highest-level entity, followed by Cores, Programs, and then Projects. Optional activities, such as educational activities, consults, and ongoing activities, can also be associated with a Core, Program, or Project. Parent Cores are responsible for data entry and monitoring, but additional Cores can be indicated as collaborating on Programs, Projects, and educational activities.

Projects, including project milestones and benefits

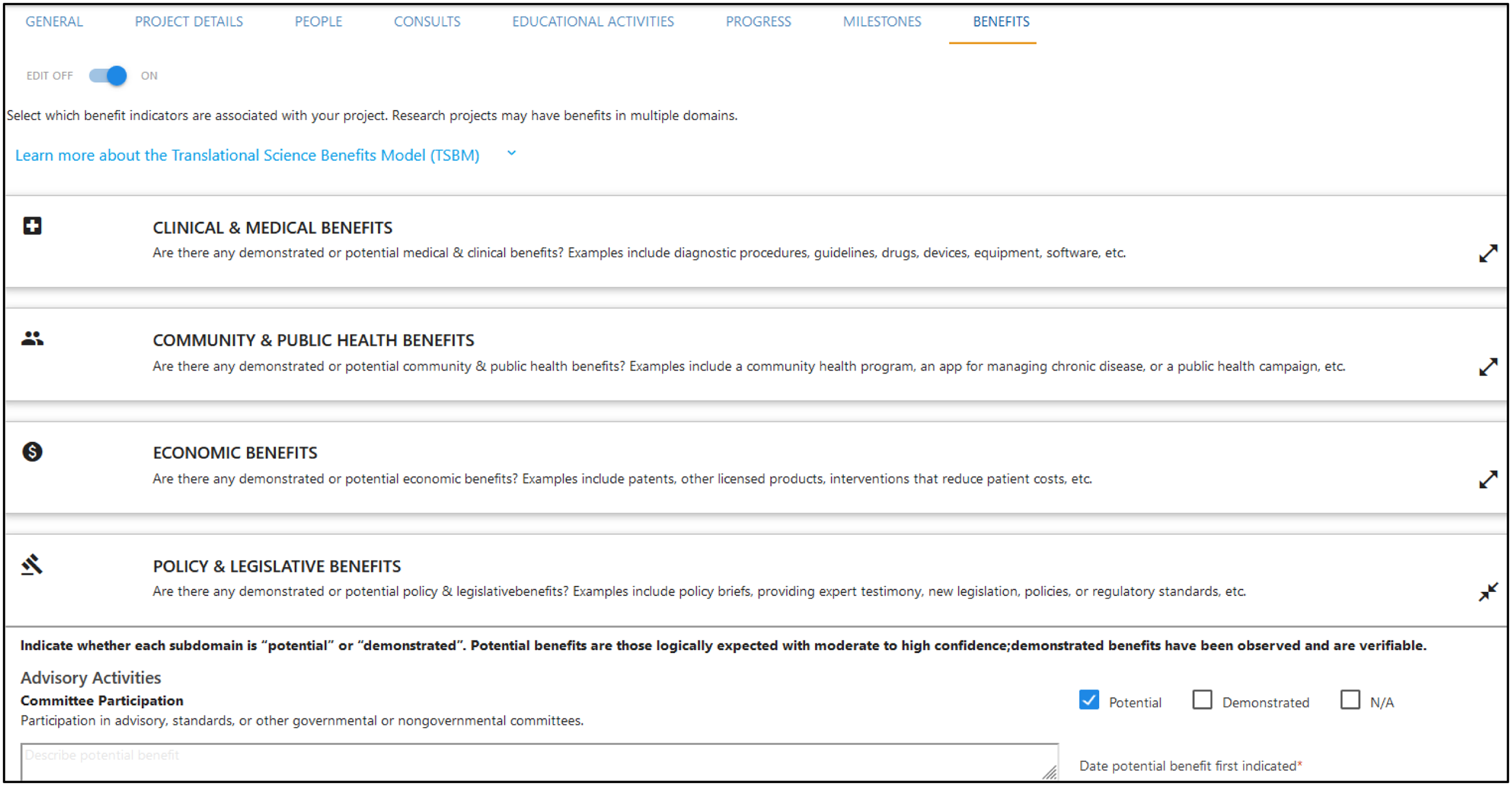

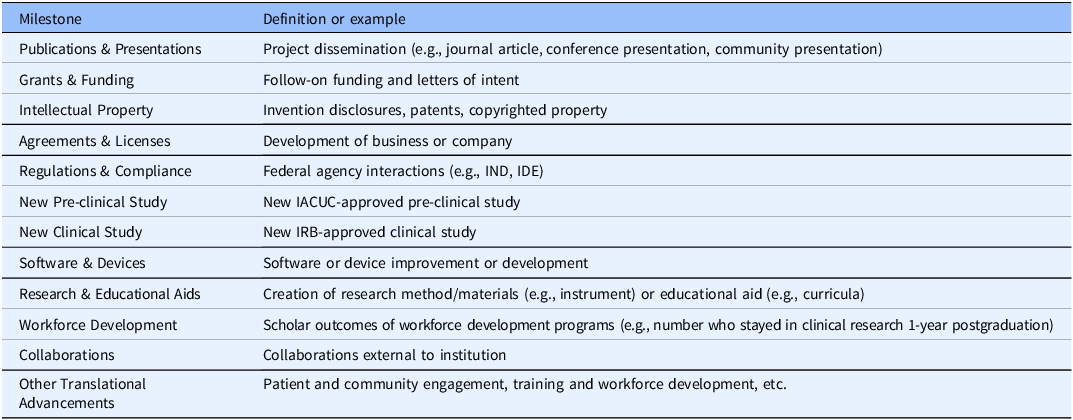

Specific activities are tracked primarily as “Projects,” which reflect sustained efforts with one or more specific milestones, outcomes, or societal benefits. Descriptive information includes items such as title, start and end dates, brief description, funding source, and team members, with “progress” as an optional data point for tracking the likelihood of project success with status indicators (e.g., at risk, too early to tell, and fully translated). A “Milestones” tab permits tracking of major project achievements, such as project dissemination (e.g., publication and presentation), follow-on funding, new clinical studies, and intellectual property establishment (see Table 1). Each milestone type includes specific information related to that milestone. For example, the “publications” milestone includes space for relevant identifiers such as article name and PubMed ID. Fields linked to lookup tables (i.e., Duke’s internal publication manuscript system and institutional employee database) are used whenever possible to ensure data accuracy and consistency and so additional information about milestones elements (e.g., employee department) can be easily integrated. A “Benefits” tab permits tracking of additional TSBM-defined outcomes. There are set options for all individual TSBM indicators as outlined in the TSBM model. For each outcome, users can indicate whether an indicator(s) is potential, already successfully demonstrated, or not applicable to a project. There are additional fields to describe how a project will impact or achieve an indicator(s) the date when a project was moved between categories (i.e., new “potential” impact, or an indicator moving from “potential” to “demonstrated”). See Figure 3 for a visual depiction of the TRACER platform and Supplement 2 for additional images of the TRACER platform.

Figure 3. Visual depiction of TRACER benefits tab. TRACER = Translational Research Accomplishment Cataloguer.

Table 1. Milestones included in TRACER

IACUC = Institutional Animal Care and Use Committee, IDE = Investigational Device Exemption, IND = Investigational New Drug, IRB = Institutional Review Board, TRACER = Translational Research Accomplishment Cataloguer.

Ongoing activities, consults, and educational activities

In addition to Projects, TRACER includes areas for “Consults,” representing individual advising or consultation meetings; “Educational Activities,” representing educational events such as training presentations and workshops; and “Ongoing Activities,” representing continuous activities without specific project milestones, such as a regularly emailed resource newsletter or advisory board. These have the flexibility to be linked to either Cores, Programs, or Projects to accommodate the varied structures of these activities. Descriptive data for these activities includes fields such as title, event date, activity type, description, and other relevant information per area (e.g., individual receiving consult; audience type for educational activities). Supplement 2 includes images of these areas.

Reporting

TRACER includes functionality for generating reports at the Pillar-, Core-, or program-level for initial analysis and synthesis of data. The platform currently includes a suite of built-in reports (e.g., Publications and Presentations, Consults, Follow-on Funding, Intellectual Property, TSBM Indicators, etc.). These reports can be further customized to users’ needs (e.g., focus on particular Core(s) or date range). For example, a director of a Pillar could create a Consults report specific to their Pillar’s Cores and then examine which departments have received consults using the Consultee Department column. Reports can be exported to an Excel file. See Supplement 3 for a sample of aggregate reporting. Future planned development includes integrations with dashboarding solutions (e.g., Tableau) so that stakeholders can further develop and use customizable reports. TRACER’s design and wide array of data also permit more robust analyses to address deeper questions regarding operations and impact (see Supplement 4 for a TRACER data dictionary). For instance, this could include a focus on the equity with which CTSA hub services are distributed across the hub and by Core (e.g., by department engaged, study types, and characteristics of investigators) and the specific TSBM-based translational impacts advanced across the hub and by Core.

Collaborative development

Early development of TRACER with partner Cores was integral to determining platform structure and process. Beginning as early as 2018, the Development Team worked with the Pilots Core to develop TRACER as the replacement for an existing but unsupported Microsoft Access database. The Pilots Core then served as an initial use case to inform co-development, with a regular meeting cadence (as often as 2 x/month) to inform design. Added perspective from CERI Core in early stages resulted in additional user feedback regarding the interface, data fields, and usability. This co-development provided a significant basis for TRACER design, including its primary structure and many of its data elements.

Starting in mid to late 2023, the Development Team implemented a concentrated refinement phase based on input across many additional Cores, with input solicited as part of the TRACER onboarding process (i.e., processes for integrating a Core into TRACER for active use; specific onboarding processes are described below). This phase successfully informed numerous adjustments in the TRACER platform. These typically focused on expanding fields captured in TRACER, ensuring Cores’ data within TRACER appropriately reflected their program structures and desired reporting, providing added clarity in definitions, or enhancing user experience. For instance, the Recruitment Innovation Center indicated that some of its work was not accurately reflected by TRACER, as some of their projects did not have specific milestones as products; to adjust for this, the Development Team created the “ongoing activities” section. Engagement with varied WFD programs led to adjustments for increased clarity and specificity around “educational activities” (e.g., documenting one-time versus ongoing trainings and pre-set options for audience type) and the potential for use of TRACER’s “Project” designation to reflect cohorts of learners/trainees within specific WFD programs.

Core onboarding

Core onboarding processes were developed to integrate Cores into TRACER as users. The process of onboarding Cores began with communication between the Development Team and a Core’s corresponding Pillar leadership. With Pillar leadership approval, the TRACER Development Team met with Cores to demonstrate TRACER and answer any questions about the platform and the onboarding process, with questions typically addressing overall value, planned use, and alignment between Cores’ activities and fields captured in TRACER. Cores were then provided with onboarding materials and given access to the test version of TRACER, which allowed them to preliminarily explore the structure of the platform. Onboarding materials included (1) an overview document that describes TRACER and the onboarding process, (2) a TRACER platform user guide, and (3) an onboarding form that Cores returned to the Development Team (see Supplement 5 for overview document and onboarding form). The onboarding form asked Cores to indicate which fields and data points within TRACER apply to their Core’s work, any potential data points essential to evaluation of their work that was not reflected in TRACER, and any other suggested modifications to TRACER that would improve functionality for the Core. This reflected the intent of onboarding to also inform responsive platform refinement. Information obtained with the onboarding form has been used by the Development Team to ensure that TRACER reflected each Cores’ evaluative needs and, ongoing, to provide a record of which TRACER functionalities and data elements a Core will use.

Once the onboarding form was complete and requested changes were considered and addressed by the Development Team, Cores were given access to TRACER. Additional meetings between the Development Team and Cores occurred throughout this process, typically either per Core request or based on the Development Team’s identification of support needed, to address questions about Cores’ desired enhancements, intended use, or other needed areas. The full process of onboarding and further platform refinement, which occurred in tandem with onboarding per intent, happened over the course of approximately 1 year and required approximately 30%–40% total personnel effort shared between Evaluation, Informatics, and OASIS team time. As of the beginning of 2024, all Cores have begun the onboarding processes, and 82% of Cores have completed onboarding.

Discussion & conclusion

Organizations supporting translational research, including CTSA hubs, provide a diverse and evolving array of resources, support, and services to a myriad of researchers and research efforts. While a wide-ranging scope of programs is essential to the advancement of translational research, it also complicates a systematic and unified process for tracking activities, studying research processes, and examining impact. These complexities, in turn, can inhibit a clear understanding of outputs, outcomes, and strategic use of data for evaluation and organizational decision-making. To address this challenge, we developed TRACER – a management and evaluation platform that accounts for and aligns the work of diverse programs within translational research organizations. TRACER was developed over the course of our hub’s most recent CTSA award and launched in 2023. Its development allows our hub to conceptually identify key processes and goals within programs and linkages between programs, and it sets the stage for advancing evidence-based improvement across our hub. While TRACER was developed for the Duke CTSA hub, the platform could be modified and further developed to meet the needs of other translational research organizations with diverse research support programs, including NIH-funded centers and academic departments.

TRACER development provides key insight into challenges in a platform development process and into facilitators that can inform other initiatives similarly seeking to collect and align organizational data. A central focus on the goals of the platform across its development and in communication with all stakeholders ensured intentionality in development and a strategic use of resources. Yet, flexibility in planning and implementation – all while retaining a focus on the ultimate aims – has allowed TRACER to appropriately incorporate numerous Cores with distinct structures and programs. This flexibility has presented challenges at times, yet it was needed to ensure this platform accurately and appropriately reflected activities and served varied users’ needs. Institutional buy-in among both leadership and Cores was essential in ensuring appropriate resources and promoting use. Buy-in was facilitated by continual communication addressing the value of implementing TRACER and leadership as initial champions with early involvement. For Core personnel, buy-in challenges were typically associated with gaps in understanding about TRACER’s purpose, concerns it would not reflect a Core’s needs or work, or concerns about time required. Many of these issues were addressed by co-development during early implementation and by maintaining flexibility in development, even in later stages of platform design and Core onboarding. The prospect of time involved remains a challenge for some, but TRACER was designed such that there is upfront cost of time with initial onboarding but lesser time ongoing, as many updates are not needed on a very frequent basis (e.g., “milestones,” reflecting key accomplishments, would not necessarily be expected to change monthly), and TRACER engagement replaces other forms of reporting that previously required time from Cores. Having a Development Team with sufficient capacity and multidisciplinary involvement (e.g., database design, informatics, evaluation, and ongoing involvement from high-level organizational administration) was invaluable to developing a successful platform. Organizational turnover resulted in change within specific individuals across years, which at times created difficulty, but process documentation and institutional memory among at least a subset of team members helped to provide continuity.

Beyond serving the strategic evaluation and development of CTSA hubs, TRACER is well positioned to advance the study of translational science that is focused on the “process of turning observations in the laboratory, clinic and community into interventions that improve the health of individuals and the public” (p. 455, emphasis added) [Reference Austin2]. Translational science is a relatively new and growing field, and current work has been largely focused on conceptualizing specific domains or processes in translational science [Reference Faupel-Badger, Vogel, Austin and Rutter3,Reference Schneider, Woodworth and Ericson4]. TRACER was architected intentionally and prospectively to optimize program tracking and evaluation, but relevance for translational science became clearer as the concept of translational science (as distinct from translational research) became more directly specified, including by the National Center for Advancing Translational Sciences (NCATS) within the structure of recent CTSA Funding Opportunity Announcements (FOAs). A data source resulting from TRACER, with information about research projects, the support provided to projects, and the outcomes and impact of the research, can facilitate testing of hypotheses about the process of translational research. Use of TRACER or a similar platform to study translational science could occur even if this platform is implemented within a single organization, but it could be further advanced by the use of a shared platform across organizations.

We acknowledge that the TRACER development process and platform itself have certain limitations, many of which inform next steps. As TRACER was recently launched, we are not yet able to assess the direct use of data in TRACER to address its strategic aims; at present, we address the ways TRACER was developed to serve this capacity. In forthcoming publications, we plan to explore analyses of TRACER data to inform strategic organizational development and inform other aims (e.g., translational science), and additional in-progress research will provide additional data on user experience. While TRACER is a valuable tool for evaluation, it not the only potential data source for understanding progress or impact; for instance, added qualitative data collection mechanisms, potentially informed by TRACER data and using a sequential mixed-methods design, could provide further understanding [Reference Creswell and Clark5]. Within planned scope, TRACER was also not intended as an individual learner development (longitudinal) tracking tool, particularly where the learner/scholar is a participant in an educational activity/program and is not a recipient via a CTSI-provided grant or pilot award. This is based on other resources existing for scholar development tracking, as well as the primary intended purpose of TRACER, but this will be reconsidered and revisited as TRACER matures. Finally, TRACER is currently in use in one hub; we have already identified interest in TRACER use or TRACER-aligned platform development at other CTSA hubs, but we also acknowledge the conceptual, technical, and procedural complexities in expanding use. Our next-stage focus includes additional steps toward potential expansion of TRACER to other hubs, including determination of processes for further platform flexibility, planning for institution-specific data integration, and broader technical support. We believe such efforts are feasible based on prior example of cross-hub access to technical resource, and such efforts will advance related efforts at other sites and within the broader translational science community and field.

Supplementary material

The supplementary material for this article can be found at https://doi.org/10.1017/cts.2024.545.

Acknowledgments

We value the numerous individuals within our CTSA hub for their critical support of and partnership in this work. This type of work requires, and is significantly improved by, this array of input, championing, and support. We value the contributions of DJ in technical development of the platform, MLH in testing and informing the design, DC in early involvement in initiative development, and CS, SR, and VL in review of the manuscript. We value the questions other CTSA hubs have asked in our presentations of this work for informing our processes, helping consider best mechanisms for communication of this work, and helping us look ahead to expanded hub use.

Author contributions

All authors approved the submitted manuscript and contributed actively to the project activities, including in conceptualization, methodology/design, and project administration. For writing, JS, SQ, PM, and FJM contributed most directly to writing of this paper’s original draft, and all authors contributed to review and editing. All the authors have accepted responsibility for the content of this submitted manuscript.

Funding statement

This project was funded by the NIH award UL1TR002553.

Competing interests

None.