Out of three or four in a room

One is always standing at the window.

Forced to see the injustice among the thorns,

The fires on the Hill.

And people who left whole

Are brought home in the evening, like small change.

Yehuda Amichai Footnote 1

Introduction

On 29 August 2021, US forces launched a drone strike near Kabul's international airport, killing ten people. The strike targeted a white Toyota Corolla believed to be carrying a bomb to be used by the so-called Islamic State of Iraq and Syria (ISIS) for a planned terror attack against US forces at the airport. In the aftermath of the attack, it became clear that the car had no connection to any terror activity and that all casualties were civilians, seven of them children. A military investigation suggested that the tragic outcome resulted from a wrongful interpretation of the intelligence, which included eight hours of drone visuals.Footnote 2

On 16 July 2014, during a large-scale military operation in Gaza, Israeli forces attacked several figures who were identified by drone operators as Hamas operatives. Following the attack, however, it was revealed that the figures were all young children. Four children were killed in the attack, and four other children were injured. An Israeli military investigation attributed the identification error to misinterpretation of the drone visuals which triggered the attack.Footnote 3

These examples represent a broader phenomenon of mounting reliance on real-time aerial visuals in military decision-making. Advanced drone (and other aerial visualization) technologies produce volumes of information, including both static imagery and real-time video generated through various sensors.Footnote 4 These visuals inform military risk assessments and support decisions concerning the legality of planned operations.Footnote 5 The rise in complex human–machine interaction in the legal evaluation of military operations is fuelled by the assumption that military technologies, including aerial visuals, provide immediate, accurate and timely information that informs decision-makers.Footnote 6 Accordingly, legal scholarship on military technologies tends to place the technology at the centre, debating its legality and legal implications and considering the need for a new regulatory regime or a fresh interpretation of existing norms.Footnote 7

While these discussions are indeed valuable, the focus on the technology per se leaves out challenges that stem from the human–machine interaction. In the above examples (as well as in the additional case studies examined below), the armed forces of the United States and Israel each acknowledged fatal attacks on civilians in which misinterpretation of aerial visuals was identified as one of the causes – if not the only cause – leading to the tragic outcomes. In its 2022 civilian harm mitigation plan, the US Department of Defense (DoD) acknowledged the possible links between aerial visuals and cognitive biases, instructing military departments and defence intelligence organizations to “review technical training for imagery analysts and intelligence professionals” as a part of the techniques required to mitigate cognitive biases in military decision-making.Footnote 8 While this evidence is anecdotal, it nonetheless suggests that parallel to their advantages, reliance on aerial visuals may also lead to military errors and to unintended outcomes. This evidence is further supported by emerging literature exploring human–machine interaction and technology-assisted decision-making (“humans in the loop”) in several contexts,Footnote 9 including in military decision-making.Footnote 10 This emerging literature, however, has thus far focused mainly on technologies such as artificial intelligence, or on various socio-technical elements in the construction and implications of drone programs, leaving the unique problems of human–machine interaction as it relates to the use of aerial visuals in critical military decision-making processes largely under-explored.

This article fills some of this gap by examining how aerial vision technologies shape military fact-finding processes and the application of the law of armed conflict. Based on data from and analysis of four military investigations,Footnote 11 as well as interdisciplinary analysis of existing literature in critical security studies, behavioural economics and international law, the article identifies existing challenges relating to the interpretation and construction of aerial visuals in military decision-making and knowledge production processes. I argue that while adding valuable information, drone sensors and aerial visualization technologies place additional burdens on decision-makers that may hinder – rather than improve – time-sensitive and stressful military decision-making processes. As will be detailed below, these decision-making hurdles include technical, cognitive and human-technical challenges. The technical challenges concern the features, capabilities and blind spots of aerial vision technologies (for example, the scope of the visualization, the ability to reflect colour and sound, and the possibility of malfunction). The cognitive challenges relate to decision-making biases, such as confirmation bias, which may lead to misinterpretation of aerial visuals. The human-technical challenges concern the human–machine interaction itself, which may lead to human de-skilling and trigger technology-specific biases such as automation bias. A result of these challenges, which decision-makers are not always aware of, is the creation of avatars that replace the real persons – or the actual conditions – on the ground, with no effective way to refute these virtual representations.Footnote 12

To clarify, my claim is not that military decision-making processes are better or more accurate without the aid of aerial visuals. These visuals indeed provide a large amount of essential information about the battlefield, target identification and the presence of civilians in the range of fire. The argument, instead, is that the benefits of aerial visuals can easily mask their blind spots: aerial visuals are imperfect and limited in several ways – much like other ways of seeing and sensing – and these limitations are often invisible to decision-makers. Hence, the article does not suggest that aerial visuals should not be utilized, but rather that their utilization can – and should – be significantly improved.

The article begins with the identification of technical, cognitive and human-technical factors affecting the utilization of aerial visuals in military decision-making processes. It then examines four military operations conducted by the US and Israeli militaries, where aerial visuals were identified as central to the erroneous targeting of civilians. The analysis of the four operations applies the interdisciplinary theoretical framework developed in the second part of the article to the circumstances and findings in these four cases. Based on the evidence from the four cases, the article goes on to explore how aerial visuals shape the application of core legal principles such as those of distinction, proportionality and precaution. Finally, the article points toward possible directions for mitigating these challenges and improving the utilization of aerial visuals in military decision-making. The proposed recommendations include increasing the transparency of aerial vision’s scope and limitations, highlighting disagreements concerning data interpretations, enhancing the saliency of non-visual data points and developing effective trainings for military decision-makers designed to improve human–machine interactions. Such trainings can advance decision-makers’ knowledge of the blind spots and (human-)technical limitations of aerial visuals, the potential dehumanizing effects of aerial vision, and the cognitive biases it may trigger.

Aerial visuals in military decision-making

A view from above

In recent decades, and particularly with the development of drone technologies, aerial visuals have become central to military decision-making generally and to real-time operational decision-making in particular.Footnote 13 These visuals are generated by various military technologies producing a range of outputs, from static imagery and infrared visualization to real-time video.Footnote 14 Developments in military technologies, and the increase in decision-assisting visuals, have led to the creation of new military roles and responsibilities such as mission specialists who are responsible for visual investigation and recording, data collection, and imagery analysis.Footnote 15

Despite these rapid technological developments,Footnote 16 access to aerial views of war actions and war actors is not new. Long before the development of predator military drones, aerial visuals and aerial vision were at the centre of modern military strategy and target development.Footnote 17 In particular, aerial visuals have been a core element in military knowledge production, often romanticized as an expression of technological superiority, objectivity, and control.Footnote 18 The vertical gaze from above is therefore not a new development within (or outside) the military technologies of vision or within military epistemologies and knowledge production practices more broadly.Footnote 19 Reviewing the history – and critiques – of aerial photography in Western thought and philosophy, Amad demonstrates how “the aerial gaze was represented, dreamed of, experimented with and experienced vicariously before it was realised in the coming together of airplanes and cameras with the beginning of military aviation in 1909”.Footnote 20 She further documents how planes and aerial vision were transformed throughout the 20th century into “major modern symbols of technological progress, superhuman achievement, borderless internationalism, and boundary-defying experience”.Footnote 21

This romanticism of the aerial view as an advanced and objective source of military superiority has garnered much criticism. Contemporary critiques of this so-called “disembodied” God-view expose its subjective (or situated) elements and dehumanizing effects.Footnote 22 Not focusing directly on aerial visuals, Foucault has challenged the proclaimed neutrality of technologies more broadly, shedding light on the power relations they embody.Footnote 23 Following Foucault, Butler highlights the effects of the aerial view in war, asserting that the visual record of war (through the conflation of the television screen and the lens of the bomber pilot) is not a reflection on the war, but rather a part of the very means by which war is socially constituted. Within this context, asserts Butler, the aerial view has a distinct role in manufacturing and maintaining the distance between military actors – and society as a whole – and the destructive effects of military actions.Footnote 24 This distance further exacerbates dehumanization in war, as it abstracts people from contexts, details, individuality and ambiguities.Footnote 25 Haraway further unmasks how technologies of vision mediate the world, shaping how we see, where we see from, what are the limits of our vision and the aims that direct our vision, and who interprets the visual field.Footnote 26 By repositioning the “view from above, from nowhere, from simplicity”,Footnote 27 Haraway promotes responsibility for military actions, because “positioning implies responsibility for our enabling practices”.Footnote 28 Following Scott's influential book Seeing Like a State (a view that involves “a narrowing of vision”),Footnote 29 Gregory unpacks the elements or features of “seeing like a military”, exposing how military technologies shape (and narrow) military vision.Footnote 30 A part of this narrowing, Gregory argues, is the geography of militarized vision: different participants (or viewers) see different things depending on their physical – but also cultural and political – positions.Footnote 31

This last point paves the way for a deeper exploration of the synergies between critiques of military technologies and behavioural and cognitive insights concerning human–machine interaction in this space. While critiques of the myth of objectivity or the narrowing gaze generated through military technologies stand on their own, the mechanisms of vision narrowing and subjective interpretation of visuals can be further unpacked and nuanced through behavioural scholarship. In the subsection below I rely on these critiques of military vision as a starting point for identifying the concrete mechanisms that limit (or position) military vision and induce bias in military fact-finding and risk assessment processes, including the legal evaluation of military operations. This general argument will then be demonstrated through four case studies from the United States and Israel.

A view from within

The utopian narrative linking aerial vision with objective and superior knowledge, together with evolving technical capabilities, has led to the notion that aerial visuals improve decision-making processes by providing immediate, accurate, relevant and timely information. Their zooming-in and -out capabilities, simultaneously providing a view of both the macro and the micro, have complemented (if not replaced) traditional forms of information-gathering and knowledge production during stressful and fast-developing situations.Footnote 32 While dystopian critiques, linking aerial vision with practices of violence and the exercise of power and control over dehumanized others, have not penetrated military thinking,Footnote 33 some studies have begun to examine the technological limitations of visuals in various decision-making contexts. For example, Marusich et al. find that an increase in the volume of information – including accurate and task-relevant visuals – is not always beneficial to decision-making performance and may be detrimental to situation awareness and trust among team members.Footnote 34 This finding is consistent with the outcomes of several military investigations which have identified technology- (and human-technology-) related factors as the source of erroneous targeting of civilians in concrete military operations (as I shall explore in detail in the next section). While the findings from these studies are very context-specific, they suggest that the advantages supplied by aerial vision may be hampered by suboptimal integration processes and other under-explored technical and human-technical weaknesses.

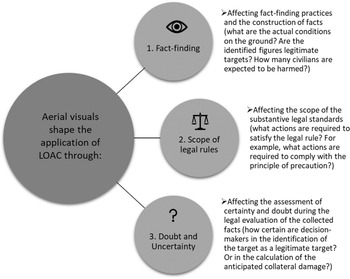

Informed by this emerging literature, as well as by the theoretical critiques described above and insights from behavioural economics, I identify technical, cognitive and human-technical factors affecting the use of and reliance on aerial visuals in military decision-making (illustrated in Figure 1). While for analytical purposes these challenges are presented and discussed separately, their effects on concrete decision-making processes are often intertwined, as will be further demonstrated below. Technical limitations create informational gaps that are filled with subjective, and sometimes biased, interpretations. These subjective judgements are further influenced by suboptimal human-technical interactions, as well as by the overarching objectifying gaze of the aerial view. I turn now to elaborate on each of these types of challenges or limitations.

Figure 1. The limits of aerial visuals in military decision-making.

Technical challenges

As stated above, military technologies provide volumes of relevant and timely information that assists decision-makers in real time. However, similarly to human-centred fact-finding methods, these technologies are far from perfect (or objective). This subsection considers some of the technical limitations of aerial visuals, aiming to make these vulnerabilities more pronounced to military decision-makers.

Aerial visuals depicting identified (and unidentified) targets are limited in various ways. First, the sensors utilized to generate aerial visuals are bounded by time and space constraints, depicting only some areas, for a specific period of time. The selected temporal and geographical scope generates affective and salient visual data, but information which exists outside those times and spaces – outside the “frame” – becomes secondary and unseen.

Second, some information gaps stem from the capabilities of the particular technology or sensor used. For example, many strikes target buildings or are conducted at night, under conditions that significantly limit visibility as well as the ability to accurately detect the presence of people in the targeted area. In such conditions, the particular visualization tools used will often signal the presence of human beings using temperature signatures picked up by infrared sensors.Footnote 35 Moreover, the capability of infrared sensors to generate visuals in limited lighting conditions comes at the expense of other information, such as colour. Colour detection has particular significance during armed conflict, as it allows the attacking forces to identify medical facilities, which are marked using colour coding (i.e., the red cross symbol). In addition to sensor selection and limitations, visual sensors are not always combined with audio capabilities that can influence the interpretation and effect of the visuals. Whether outside of the visuals’ scope or redacted through the selected lens, some data is not included in the outputs provided to decision-makers, thus creating an impactful – yet incomplete – visual of the area that produces a false impression on its viewer. The missing information remains invisible, while the visible (yet limited or partial) outputs are salient and capture decision-makers’ attention.

Third, aerial visualization technologies may fail or malfunction, generating flawed or misleading streams of information and intensifying gaps in the factual framework. When military practices rely profoundly on technology systems, decision-makers’ own judgement, and their ability to evaluate evolving situations without the technology, erode.

I will demonstrate the effects of each of these limitations through data gathered from concrete military operations below.

Cognitive challenges

Despite common beliefs to the contrary, visuals do not speak for themselves. Much like any other source of information, images require some degree of interpretation, whether generated implicitly by cognitive human processes, or configured and automated through artificial intelligence algorithms (which inherently incorporate human input through technology design and training processes). Reliance on aerial visuals is therefore mediated through the operation of cognitive dynamics and biases, as well as the cultural and political lenses of the humans who design the technology, apply it to generate particular information, interpret the images, and communicate that interpretation to other decision-makers.Footnote 36 In this subsection I briefly review a few core cognitive biases that are relevant to military decision-making. I will illustrate their operation, providing concrete examples from four military operations, in the following section.

Cognitive biases refer to faulty mental processes that lead decision-makers to make suboptimal decisions which deviate from normative principles.Footnote 37 In their influential studies of decision-making biases and heuristics, Kahneman, Slovic and Tversky have grouped these biases under three broad categories: representativeness, availability and anchoring.Footnote 38 Throughout the years, additional types of cognitive biases have been identified, including a variety of motivated cognition biases and framing effects,Footnote 39 as well as overconfidence and loss aversion.Footnote 40 Van Aaken emphasizes the relevance of these decision-making biases to the application and interpretation of international law generally, and in particular in the context of armed conflicts.Footnote 41

Representativeness biases occur when people make judgements based on how closely an option resembles the problem scenario, in violation of rational laws of probability.Footnote 42 Visualization outputs depicting people in zones of active hostilities are interpreted and categorized quickly, based on how well the known characteristics fit existing representations (for example, those created through training scenarios). As a result, representativeness biases may lead to the classification of such people as insurgents when they are in fact civilians.

Availability biases occur when people overstate the likelihood that a certain event will occur because it is easily recalled, or because they can easily bring similar examples to mind.Footnote 43 In the context of military decision-making, availability biases make decision-makers less sensitive to alternative courses of action or information that runs contrary to their recent experience or other easily recalled information.Footnote 44

Anchoring biases occur when the estimation of a condition is based on an initial value (anchor), which is then insufficiently adjusted in arriving at the final estimate.Footnote 45 The starting point – the anchor – might result from intuition, the framing of the problem or even a guess, but the bias occurs when decision-makers do not adjust sufficiently from this initial anchoring point.Footnote 46 Aerial visuals may generate both the initial anchor (which may be inaccurate owing to the technical limitations discussed above) and the inaccurate estimation or adjustment from initial intelligence information or suspicion (as visuals may be wrongly interpreted – or insufficiently adjusted – from an external anchor).

Additionally, in the psychological literature, various cognitive dynamics generating “unwitting selectivity in the acquisition and use of evidence” have been grouped together under the term “confirmation bias”.Footnote 47 Confirmation bias refers to people's tendency to seek out and act upon information that confirms their existing beliefs or to interpret information in a way that validates their prior knowledge. As a result, the interpretation of aerial visuals may be skewed based on decision-makers’ existing expectations, and this confirmation may then serve as an (inaccurate) anchor for casualty estimates or target identification.Footnote 48

Importantly, these cognitive biases have been found to influence not only lay people, but also experts in professional settings.Footnote 49 In particular, Slovic et al. found that experts express overconfidence bias, leading to suboptimal risk assessments, and as a result, erroneous decisions.Footnote 50 According to their study, experts think they can estimate failure rates with much greater precision than is actually the case.Footnote 51 Some common ways in which experts misjudge factual information and associated risks – which are particularly relevant in our context – are failure to consider how human errors influence technological systems and insensitivity to how technological systems function as a whole.Footnote 52 Analyzing intelligence failures with regard to Iraqi weapons of mass destruction, Jervis concluded that many of the intelligence community's judgements were stated with overconfidence, assumptions were insufficiently examined, and assessments were based on previous judgements without carrying forward the uncertainties.Footnote 53

Finally, the cognitive biases referred to in this section are of a general nature, operating in a similar way in any fact-finding context and affecting military decision-making regardless of the specific source of information that decision-makers rely on.Footnote 54 But while military organizations have learned to acknowledge subjectivity and biases in human decision-making processes, aerial visuals have largely been considered as an objective solution to these human flaws. Nevertheless, it is important to acknowledge that while providing important and relevant information, aerial visuals do not eliminate the problem of bias and distortion, but rather shift the location and association of the bias into the space of image interpretation.

Human-technical challenges

In addition to the technical and cognitive limitations identified above, reliance on aerial visuals in military decision-making is further compromised through challenges stemming from suboptimal human–machine interaction. In the model I propose, technical limitations are those relating to the scope, capabilities and performance of the technology (and are independent from the humans in the loop). Cognitive limitations relate to biases that affect decision-makers in various settings and are not inherently related to the technology (for example, confirmation bias may skew the interpretation of a drone visual, as well as other types of information). Human-technical limitations highlight problems that are generated or intensified by the interaction of humans and machines. I will elaborate on three such problems: saliency, which is a general cognitive bias (like those presented above) that is intensified by the characteristics of the information medium, in this case aerial visuals; automation, which is a cognitive bias specifically describing the effects of technologies (or automation) on human decision-makers; and objectification, which is linked to the general problem of dehumanization in the context of armed conflicts, describing a particular mechanism of objectification generated through aerial vision.

Human–machine interaction further jeopardizes military decision-making due to the impact of such interaction on human judgement. When human decision-makers become accustomed to trusting the technology to detect threats, instead of exercising their own judgement and skill, the result is de-skilling or diminished risk assessment capabilities. This means, for example, that when a sensor is damaged, the aircrew (who have been trained to rely on that sensor) may be less capable of exercising human judgement based on other sources of information. Therefore, reliance on aerial visuals (as well as other technologies) to identify threats and to distinguish between legitimate and illegitimate targets may engender a numbing effect on human fact-finding practices, including the exercise of common sense.Footnote 55

Salience and automation

In an environment of complex data sources and high levels of uncertainty, heavy reliance on visuals generates a salience problem, as decision-makers tend to focus their attention on visual data. Emerging empirical evidence suggests that this salience problem may contribute to reduced situational awareness, with several studies identifying real-time imaging outputs as a contributing factor. For example, Oron-Gilad and Parmet measured the impact on the quality of the force's decision-making capabilities of adding a video feed to a display device for utilizing intelligence from an unmanned ground vehicle during a patrol mission.Footnote 56 The study found that participants in the experimental group were slower to orient themselves and to respond to threats. These participants also reported higher workload, more difficulties in allocating their attention to the environment, and more frustration.Footnote 57

In another study, simulating decision-making under pressure, McGuirl, Sarter and Woods observed that continuously available and easily observable imaging garnered greater trust and attention than other sources of information (which included verbal messages and textual data). They further found that aerial visual data was correlated with poorer decisions, as decision-makers tended to base their decisions on the aerial visualization while ignoring additional available data.Footnote 58 Significantly, nearly all of the study participants failed to detect important changes in the situation that were not captured in the imaging but that were available via other, non-visual data sources.Footnote 59

These findings are consistent with the saliency literature. Salience refers to features of stimuli that “draw, grab, or hold attention relative to alternative features”.Footnote 60 When processing new information, salient features may capture decision-makers’ attention, affecting their judgement.Footnote 61 Saliency literature has identified the affective role of some visual data (mainly colourful, dynamic and distinctive) in capturing decision-makers’ attention.Footnote 62 Some features of aerial visuals may intensify the problem of saliency in this context: for example, the zooming-in capability of aerial visuals intensifies their saliency, making it easier for decision-makers to focus on one visible part of the relevant information while failing to give similar weight or attention to other pieces of the intelligence puzzle.

Another cognitive bias directly relating to human–machine interaction is automation bias. Automation bias refers to decision-makers’ tendency to place an inappropriately high level of trust in technology-generated data.Footnote 63 As a result, decision-makers may rely on technology-generated outputs more than is rational. Skitka, Mosier and Burdick found that decision-makers were more likely to make errors of omission and errors of commission when they were assisted by automated aids (such as a computer monitoring system).Footnote 64 McGuirl, Sarter and Woods’ study indicates that decision-makers not only focus on the visual feed, but also place an inappropriately high level of trust in this automated, computer-generated data. As a result of this high level of trust in aerial visualization, McGuirl, Sarter and Woods found that participants exhibited limited cross-checking of various sources of information and narrowed their data search activities.Footnote 65 In the context of military targeting decisions, Deeks has pointed out that both a positive target identification and an implicit approval, given by not alerting that the target is protected, may involve an automation bias, where individuals accept the machine's explicit or implicit recommendation.Footnote 66 Automation bias further jeopardizes decision-making through enhancing decision-makers’ overconfidence (or “positive illusions”) concerning the data relied upon and decisions made based on this data.Footnote 67

The objectification effect of the aerial view

Aerial visuals are celebrated for infusing critical decision-making processes with objective and accurate information about ground targets and conditions, but as explained above, the particular view they provide cannot be described simply as being either accurate or objective. Indeed, in her critique of the militarist myth of perfect vision (in her words, a “God trick”), Haraway positions the simplicity of the view from above as the opposite of the nuanced, detailed and complex view from a body.Footnote 68 The detached view from above enables the exercise of dominance over what (or who) is being observed.Footnote 69 During this process, as Gregory points out, the aerial view subverts the truth “by making its objects visible and its subjects invisible”.Footnote 70 Information generated through the aerial lens therefore lacks necessary complexity, objectifying and dehumanizing those viewed.

Exploring the interaction of the virtual, material and human, Holmqvist observes that drones are political agents which are not simply detecting objects and actions but are rather producing those objects and actions.Footnote 71 Aerial visuals determine individuals’ gender, actions and status (male/female, peaceful/fighter, civilian/combatant), and predict risk (how many bystanders will be killed as a result of an attack, how dangerous is the target).Footnote 72 Because differences among people may be less detectable from the sky, aerial vision engenders homogenization and dehumanization of those observed and constructed through the aerial view.Footnote 73 The lack of nuance, or social context, “renders the aerial objectifying gaze far less transparent than it appears”.Footnote 74

Instead of individuation and attention to differences, observed individuals are produced or constructed to fit predetermined, functional categories that reflect the perspectives of the viewers, not those viewed, simplifying existing complexities and erasing variation.Footnote 75 This process of homogenization, of translating bodies into targets and stripping people of their individuality, accounts for dehumanization.

A view from below: Aerial visuals and military errors

The above analysis identifying the limitations of aerial visuals in military decision-making provides a framework for analyzing four concrete military operations where aerial visuals were central to the decision-making process. In all four cases, US and Israeli forces attributed, at least to some extent, erroneous targeting of civilians to malfunctions or misinterpretations of the aerial visualization relied upon by decision-makers in real time. As information about military errors, technology-related or otherwise, is scarce – much of it hidden behind walls of secrecy and confidentiality – these cases were identified following extensive qualitative analysis of military reports and media coverage of military errors in the last two decades (2002–22). While through my research I have identified additional cases in which aerial visuals were identified as contributing to mistaken targeting of civilians (and for which investigation materials were released, allowing a deep analysis of the decision-making processes), I decided to focus on four cases to provide an in-depth analysis of the facts and context of each military operation. Aiming to enhance (to some extent) the study's generalizability and timeliness, I selected the two most recent cases from each of the two jurisdictions from which I was able to collect rich data (the United States and Israel).

Examining military errors is not without limitations. In addition to the hindsight of the ultimate outcome of the decision-making process, the focus on errors provides a selective sample that overlooks the sources or causes of successful decisions. Therefore, this section does not purport to provide a complete outlook on aerial-focused military decision-making processes; instead, it offers a unique glimpse into (some) military errors, examining the role of aerial visuals in the decision-making processes that led to these errors. The data discussed below may be suggestive of flaws in human–machine interactions which can lead to error in other cases, but ascertaining the validity of such a general claim requires additional research.

Evidence from Israel

The killing of Ismail, Ahed, Zakaria and Mohammed Bakr

On 8 July 2014, Israel began a large-scale, seven-week military operation in the Gaza Strip, known as Operation Protective Edge. The operation began following weeks of hostilities, which started with the kidnapping and murder of three Israeli teenagers in the West Bank and continued with large-scale arrests of hundreds of Hamas operatives in the West Bank and massive rocket fire from Gaza into Israel. During the operation, more than 2,000 Palestinians and seventy-three Israelis were killed.Footnote 76

On 16 July 2014, eight days into Operation Protective Edge, Israeli forces received intelligence warning that “Hamas operatives [were] expected to gather at a Hamas military compound in Gaza harbor, in order to prepare military actions against the Israel Defence Forces” (IDF).Footnote 77 A container within this compound, suspected of being used by Hamas to store ammunition, had been targeted the previous day by the IDF. Following this intelligence, the IDF decided to use armed drones to provide visualization of the suspected Hamas compound at Gaza harbour.Footnote 78 At around 4:00 pm on the same day, the drone operators identified several figures running into the compound toward the container that was targeted the previous day. Based on the drone visuals, the figures were “incriminated” (in military jargon) as Hamas operatives, and a decision was made to attack them. As soon as the figures were seen entering the compound, IDF forces fired their first missile. As a result of the fire, one of the figures was killed and the others began running away from the compound towards other sections of the beach. A second missile then hit the running figures on the public beach outside the compound, as they were trying to escape.

Despite the IDF assessments that they had targeted adult Hamas operatives, soon after the attack it became clear that the victims were all children of the Bakr family. Four children – Ismail Bakr (10 years old), Ahed Bakr (10 years old), Zakaria Bakr (10 years old) and Mohammed Bakr (11 years old) – were killed in the attack (one was killed from the first missile and three were killed from the second missile), and four other children were injured.

Following requests from the victims’ families and human rights organizations, a military investigation was launched into the incident. In June 2015, the Military Judge Advocate decided not to open criminal proceedings, after concluding that the attack did not violate Israeli or international law.Footnote 79 The families and human rights organizations appealed this decision to the Attorney General, and their appeal was dismissed on 9 September 2019. A petition to the Israeli High Court of Justice (HCJ) was ultimately denied on 24 April 2022, after an ex parte proceeding in which confidential intelligence was presented to the Court.

While there are various issues – legal and ethical – which merit attention in these legal proceedings, I wish to focus here on those that shed light on the use of aerial visuals for target identification and collateral damage estimates. The Military Judge Advocate clarified that real-time aerial visualization was used in this case as a core element in applying the principle of precaution.Footnote 80 The State Response further emphasized that “the forces used a visualization aid to ascertain that civilian[s] [were] not present”,Footnote 81 and highlighted that “after confirming through visual aids that no uninvolved civilians [were] present, and therefore estimating no collateral damage from the attack, IDF forces decided to attack the figures”.Footnote 82 In the discussion below I analyze information available from the Israeli investigations in order to evaluate the utilization of aerial visuals by decision-makers in this case and reveal some of the technical, cognitive and human-technical limitations involved, as explored above.

First, the data from the Israeli investigations demonstrates a significant technical limitation of aerial visuals. While the vertical view is highly capable of detecting figures on the ground, the information it provides on those observed is limited in several ways, providing some details while omitting others. The Attorney General's letter rejecting the appeal against the closure of the military police investigation touched on this issue briefly:

[T]o an observer from the ground, as is evidenced through photos taken by journalists that were present at the scene, it is easy to see that these were children, but as a legal matter, the evaluation of the incident must be done from the perspective of the person that approved the attack, which was based on aerial visualization.Footnote 83

As is clear from this statement, while aerial visuals have been engaged as a means of precaution, in this case this method of visualization was inferior to ground visuals, detecting humans moving but failing to distinguish adult insurgents from playful 10-year-olds. Interestingly, in its response to the petition to the HCJ, the State highlighted not only that “at no stage were the criminalized figures identified as children”, but also that IDF forces could not have identified them as such.Footnote 84 This is an important determination: while it was easy for ground observers to see clearly that the figures on the Gaza beach were young children, it was impossible to reach a similar conclusion using the selected aerial visualization methods. Despite this determination, it is not explained why, if that is the case, only aerial visualization tools were engaged as a means of precaution (especially noting that the “threat” would not have been generated or perceived otherwise). Moreover, the State noted that a military expert with experience in similar attacks was asked to review the visuals from the attack, and he concluded that “it is very difficult to identify that these are not adults”.Footnote 85 While the State used this expert's opinion to exonerate the decision-makers in this case, it did not provide any explanation as to why a visualization tool that cannot distinguish adults from children was selected as the main means of precaution in this case (and possibly others).

Furthermore, the State Response emphasized that IDF forces used aerial visuals to ascertain that civilians were not present.Footnote 86 It then added: “Indeed, throughout the operation, the forces did not identify anyone else present except for the identified figures.”Footnote 87 Intended to suggest that the forces carefully analyzed the aerial visuals, these statements identify a blind spot in the scope of those visuals. While the investigation concluded that the drone team identified three or four figures, ultimately an additional four children were injured, indicating that the ability to zoom in on the suspected figures may have prevented a broader view of the surrounding area, where other children were playing and were within the damage range of the missiles.

Second, the data from the Israeli investigations also suggests that the interpretation of the aerial visuals may have been distorted because of cognitive biases. As mentioned above, the decision to close the investigation was largely based on the finding that the relevant forces had identified the figures as Hamas operatives. This lethal error was rationalized and excused through four factors:Footnote 88

1. The concrete intelligence relating to Hamas operatives meeting at the location the day before.

2. The figures were identified in a closed and fenced Hamas compound, where only Hamas operatives were expected to be present.

3. The container at the compound was targeted the previous day and IDF forces believed that the figures were attempting to take ammunition that was stored in the container.

4. On 8 July 2014, Hamas operatives attempted to attack the IDF base at Zikim and were killed in the crossfire that ensued. It was therefore assumed that Hamas operatives were planning to use ammunition from the container to launch a similar attack.

While these factors do not explain why the targeted figures were deemed adult Hamas operatives instead of 10-year-old children, they do suggest that the target identification process may have been affected by cognitive biases (in particular, confirmation, representativeness and availability biases). The pre-existing intelligence and expectations may have led the drone team to interpret the aerial visuals consistently with the existing intelligence and threat scenarios. The recent attack in that area may likewise have skewed the interpretation of the visuals, as that experience was readily available to the drone team.

The suggestion that the classification of the children as Hamas operatives was influenced by cognitive biases is strengthened when examining other data from this case. For example, the State Response relied on the testimony of one of the naval intelligence officers involved in the incident, who insisted that “a child and a man do not run the same way, it looks different, and I had a feeling we hit adults, not 12-, 14-year-old kids”.Footnote 89 It is true that some visual indicators are associated with movements of adults (or trained insurgents) while others are associated with movements of children (or civilians). However, in this case the children were depicted moving in a scattered manner, as opposed to an orderly pattern that one might expect of adult insurgents. The only indicator mentioned as leading to the classification of the figures as adults (beyond the operator's “feeling”) was that the figures were observed moving “swiftly”.Footnote 90 However, running (or moving “swiftly”) per se is a strange criterion in these circumstances, especially as trained insurgents may be moving more slowly out of caution, while children can be expected to run around at the beach (or when attempting to escape an armed attack). The admission that the age classification was based on a “feeling” suggests that instead of concrete visual indicators, the age classification in this case was influenced by a subjective assessment that was formed based on pre-existing intelligence and expectations.Footnote 91

Third, the data from the Israeli investigation demonstrates weaknesses in the human-technical interaction, both with regard to the salience of the visual data and the objectifying gaze of the aerial visuals. Some indicators which could have suggested that the depicted figures were civilians were ignored, or were not salient enough to affect action. For example, IDF forces believed the compound was surrounded with a fence and that access to it was only possible through a guarded gate. Their assessment that only Hamas operatives had access to the compound was based on other sources of information, as well as their operational familiarity with the area. This information, however, was isolated from the aerial visuals, which were designed to detect potential threats and thus only focused on the moving figures. The aerial visuals were not used to verify the existence of a fence or a guarded gate, and as far as we know, other sources of updated information about access to this compound from the public beach were not utilized or consulted.

Moreover, data provided in the State Response indicates that the human-technical interaction was flawed, limiting, rather than strengthening, the situational awareness of the force. Three of the children that were killed in the attack died as a result of the second missile, which was fired at the escaping children and hit them at the public area of the beach, outside of the suspected compound. After the first missile was fired, killing one of the children and causing the other three to run away, the drone operators communicated through the radio their uncertainties concerning the boundaries of the Hamas compound. In particular, they communicated their worry that targeting the figures on the beach, outside of the compound, may increase the danger to civilians. The State mentioned this communication in its response as evidence of the sensitivity of the attacking forces to the issue of collateral damage and their desire to ascertain target identification;Footnote 92 however, from the information included in the State Response, as well as other relevant materials from the investigation, it seems that the drone operators’ concerns went unanswered.Footnote 93 This course of events suggests that the salience of the visuals – and the threat that their erroneous interpretation generated – inhibited the forces’ awareness of other relevant facts, including non-visual representations of the compound boundaries, and heightened the urgency to act without waiting for a definite response.

Finally, beyond these salience and situational awareness issues, this case exemplifies the objectifying gaze of military drones, which anticipates, produces and then confirms a presumed threat through aerial visualization tools. In this case, the drone team's mission was to surveil a particular area in order to detect potential threats. Through this gaze, any movement within the designated area was suspected, and any figures identified within that space were constructed as a threat. The vertical view through which the children were captured collapsed any variations and erased physical differences to fit a mechanical classification, oblivious to social context and cues. The objectification and dehumanization of the eight children that were killed and injured that day was exercised through the objectifying eye of the drone lens, which constructed these children, one and all, as targets, stripping them of their identities, individuality, families and communities.

The shelling of the Al-Samouni house

On 27 December 2008, the IDF opened a twenty-two-day attack on the Gaza Strip, known as Operation Cast Lead. Israel described the attack as a response to constant rocket attacks fired from the Gaza Strip into Israel's southern cities, causing damage and instilling fear.Footnote 94 By the operation's end, public buildings in Gaza were destroyed, thousands of Palestinians lost their homes, many were injured, and about 1,400 were killed.Footnote 95 On the Israeli side, Palestinian rockets and mortars damaged houses, schools and cars in southern Israel, three Israeli civilians were killed and more than 1,000 were injured.Footnote 96 While hostilities were still ongoing, the UN Human Rights Council established a fact-finding mission, widely known as the Goldstone Mission, to investigate alleged violations of international human rights law and international humanitarian law (IHL).Footnote 97

One of the main incidents investigated by the Goldstone Mission was the Israeli attack on the Al-Samouni house on 5 January 2009.Footnote 98 In brief, following an attack on several houses in the area, IDF soldiers ordered members of the Al-Samouni extended family to leave their homes, and to find refuge at Wa'el Al-Samouni's house. Around 100 members of the extended Al-Samouni family, the majority women and children, were accordingly assembled in Wa'el Al-Samouni's house by noon on 4 January 2009. At around 6:30 or 7:00 am the following morning, Wa'el Al-Samouni, Saleh Al-Samouni, Hamdi Maher Al-Samouni, Muhammad Ibrahim Al-Samouni and Iyad Al-Samouni stepped outside the house to collect firewood in order to make bread. Suddenly a projectile struck next to the five men, close to the door of Wa'el's house, killing Muhammad Ibrahim Al-Samouni and Hamdi Maher Al-Samouni. The other men managed to retreat into the house. Within five minutes, two or three more projectiles had struck the house directly, killing an additional nineteen family members and injuring nineteen more. Based on these facts, the Goldstone Report concluded that the attack on the Al-Samouni house was a direct intentional strike against civilians, which may constitute a crime against humanity.Footnote 99

Following the release of the Goldstone Report, the Israeli military conducted its own investigation into these events. The military investigation did not dispute these facts but instead added that the decision to shell the Al-Samouni house was based on erroneous interpretation of drone images that were utilized in the war room in real time.Footnote 100 According to the investigation report, grainy drone images depicted the five men holding long, cylindrical items which were mistakenly interpreted as rocket-propelled grenades (RPGs).Footnote 101 In May 2012, the military prosecution announced that no legal measures would be adopted in this case against any of those involved in the decision to attack the Al-Samouni house,Footnote 102 as the killing of the Al-Samouni family members was not done knowingly and directly, or out of haste and negligence, in a manner that would indicate criminal responsibility.Footnote 103 In between, while the Israeli military investigation was still ongoing, Justice Richard Goldstone, the head of the Goldstone Mission, published an op-ed in the Washington Post in which he retracted the Goldstone Report's legal findings concerning the Al-Samouni incident. In his op-ed, Justice Goldstone accepted the initial Israeli explanation concerning the attack, stating that the shelling of the Al-Samouni home “was apparently the consequence of an Israeli commander's erroneous interpretation of a drone image”.Footnote 104 Israeli authorities have used Goldstone's op-ed as proof that the Report itself – including its factual findings – was false and biased.Footnote 105

Similarly to the attack that killed the Bakr family children, the investigations into the attack on the Al-Samouni house demonstrate the significant role of aerial visuals in real-time military decision-making, and in this case, in the wrongful threat identification and perception. Below I explore some of the technical, cognitive and human-technical limitations of this reliance on aerial visualization tools in this case.

First, the data from the military investigation demonstrates a significant technical limit of the particular sensor that was used in this case. The investigation describes the aerial visuals used as so “grainy” that a pile of firewood was easily confused with a cache of RPGs. This means that the technical capability of the relevant sensor was limited, producing murky images that required heavy interpretation. Additionally, this grainy image captured only a fraction of time and space (five men near the doorstep), overlooking the crowded refuge behind the door, filled with hungry and frightened children – information that could have contextualized the situation and changed the interpretation of the image.

Second, the military investigation found it sufficient to conclude that the erroneous attack resulted from a misinterpretation of a grainy image and did not inquire further into the causes of this misinterpretation. Applying the cognitive insights explored above may shed some light on this issue. This incident was not an isolated event, but rather occurred within a broader context of intense hostilities. Within this context, RPGs were utilized by Palestinian forces in the days prior to the attack on the Al-Samouni house; as a result, it is highly possible that RPGs were easily recalled by decision-makers when they saw the image. Both availability and representativeness biases could have induced a misinterpretation of the visual, based on the familiarity of the forces with the shape of RPGs and the similarity of the situation at the Al-Samouni house to practiced scenarios.

Third, the information available in this case further demonstrates weaknesses in the human-technical interaction, both with regard to the salience of the visual data and the objectifying gaze of the aerial visuals. The investigations suggest that the grainy drone image was not the only relevant information available to decision-makers in this case – other available data included non-visual communications with the ground battalion, including information concerning the order to locals to gather at the Al-Samouni house. That additional information could also have informed and contextualized the interpretation of the grainy drone image, offering alternative interpretations to the RPG one, but this information was apparently pushed aside while the drone visual, with its threat interpretation and urgency, grabbed decision-makers’ attention. Under the prevailing pressure conditions, the effect of the drone images was strong and immediate, and may have diverted attention from other, less salient sources of information, such as communications from the ground battalion. The investigation itself does not indicate whether any other sources of information were considered (especially given the grainy quality of the drone visual), or whether – and based on what data – collateral damage calculations were conducted.

Finally, much like the Bakr children, the Al-Samouni men were objectified by the aerial gaze, turned into an immediate threat that must be eliminated. Worried and caring husbands, sons and fathers were turned into violent, armed terrorists and attacked with lethal weapons accordingly. The drone did not simply “detect” the threat; its sensors generated the threat and produced the RPG-carrying terrorists. The Al-Samouni family, which a day prior was recognized as a victim of the hostilities and was led into a safe space, was quickly recast as a source of insurgency and threat, a legitimate military target. The swiftness of this process and the immediacy of its outcomes left no room for doubt and no opportunity for its objects to rebel against their drone-generated classification.

Evidence from the United States

The striking of Zemari Ahmadi's car

On 29 August 2021, the US military launched its last drone strike in Afghanistan before American troops withdrew from the country.Footnote 106 The strike targeted a white Toyota Corolla in the courtyard of a home in Kabul. Zemari Ahmadi, the driver of the vehicle, was believed to be an operative of ISIS-Khorasan (ISIS-K), on his way to detonate a bomb at Kabul's international airport. As a result of the strike, the targeted vehicle was destroyed and ten people, including Ahmadi, were killed. In the following days, the US military called this attack a “righteous strike”, explaining that it was necessary to prevent an imminent threat to American troops at Kabul's airport.Footnote 107 However, following the findings of a New York Times investigation,Footnote 108 a high-level US Air Force investigation ultimately found that the targeted vehicle did not pose any danger and that all ten casualties were civilians, seven of them children.Footnote 109 Despite these outcomes, the investigation concluded that the strike did not violate any law, because it was a “tragic mistake” resulting from “inaccurate” interpretation of the available intelligence, which included eight hours of drone visuals.Footnote 110 The investigation suggested that the incorrect – and lethal – interpretation of the intelligence resulted from “execution errors” combined with “confirmation bias”.Footnote 111

The US Central Command (CENTCOM) completed its thorough investigation of this incident within two weeks of the attack; however, the full investigation report was never released to the public. On 6 January 2023, following a Freedom of Information Act lawsuit submitted by the New York Times, CENTCOM released sixty-six partly redacted pages from the investigation.Footnote 112 The details revealed through the investigation report shed light on the centrality of drone visuals in the decision to attack Zemari Ahmadi's car, and the particular dynamics around the interpretation and construction of the drone-generated data. While the report itself mentions “confirmation bias” as a likely reason for the decision-making errors in this case, all three types of challenges (technical, cognitive and human-technical) are evidenced.

First, materials from the investigation identify several technical limitations of the aerial visuals, as applied in this case. While highlighting the precision and quality of the aerial visualization tools used in this case (three to four drones surveilled the car for eight hours prior to the attack), analysts noted in their interviews that at the location of the attack (in a residential area, where the car entered a courtyard), “trees and courtyard overhang limited visibility angles”, and that the “video quality obscured the identification of civilians in or near the courtyard prior to the strike”.Footnote 113 Indeed, one analyst admitted to finally being able to see “additional movement from the house”, but explained that at that point in time it was already too late (“[I] did not have time to react”).Footnote 114

Second, the CENTCOM investigation attributed the identification error to confirmation bias. The main reason for this determination was the heightened tension following a deadly attack on the airport on 26 August 2021, and specific intelligence reports indicating that a terror organization, ISIS-K, intended to use two vehicles, a white Toyota Corolla and a motorcycle, to launch an assault on US forces at the airport.Footnote 115 On 29 August, drone sensors detected a white Toyota Corolla moving to a known ISIS-K compound.Footnote 116 The vehicle was then put under continuous observation for approximately eight hours, and Ahmadi, who drove the car, as well as several men he engaged with during the day, were identified as part of the ISIS-K cell.Footnote 117

Beyond the initial intelligence about a white Toyota Corolla, several elements detected through the aerial visuals further led to the decision to strike the vehicle. Firstly, throughout the eight hours of surveillance on the vehicle, drone analysts observed it moving around the city, making various stops, and picking up and dropping off various adult males.Footnote 118 Ahmadi's driving was assessed as “evasive”Footnote 119 and his route was described as “erratic”, which was evaluated to be “consistent with pre-attack posture historically demonstrated by ISIS-K cells to avoid close circuit cameras prior to an attack”.Footnote 120 Secondly, analysts noted that the driver of the vehicle “carefully loaded items” into the vehicle, and these items were described as “nefarious equipment”.Footnote 121 Analysts assessed that these items were explosives based on the careful handling and size and apparent weight of the material (at one point, five adult males were observed “carrying bags or other box-shaped objects”).Footnote 122 Thirdly, at some point, the presence of a motorcycle was detected nearby, fitting the original intelligence.Footnote 123 Fourthly, the attack was eventually launched when the vehicle parked at its final destination, where a gate was shut behind it and someone approached it in the courtyard.Footnote 124 The shutting of the gate and the movement in the courtyard were interpreted to signal “a likely staging location and the moving personnel to likely be a part of the overall attack plot”.Footnote 125 The CENTCOM investigation concluded that each of these signs and signals of threat was interpreted based on the initial intelligence, in a way that was consistent with that threat scenario.

It is significant that CENTCOM was able to acknowledge the problem of cognitive biases in military decision-making processes, and particularly in the interpretation of aerial visuals during real-time events.Footnote 126 Later on, in August 2022, the US DoD announced a plan for mitigating civilian casualties in US military operations, which includes addressing cognitive biases, such as confirmation bias, in order to prevent or minimize target misidentification.Footnote 127

Several cognitive biases might have played a role in the wrongful attack on Zemari Ahmadi's car. In particular, it may well be that the initial designation of the white Toyota Corolla as a threat resulted from confirmation bias based on the initial intelligence linking that colour and model of car with a concrete threat. However, from that moment on, that initial error seems to have served as a (wrong) anchor, from which any new information was miscalculated or wrongly evaluated. Moreover, representativeness bias may have also affected decision-makers’ judgement, as the interpretive option they continually selected closely resembled the initial problem scenario. Finally, the deadly attack at the airport on 26 August might have triggered availability bias, leading decision-makers to overstate the likelihood that the white Toyota was carrying a bomb because that event or scenario easily came to mind. Ultimately, it seems that a variety of cognitive biases triggered the erroneous and lethal decision. As one of the analysts noted, “the risk of failure to prevent an imminent attack weighed heavily”.Footnote 128 Another added: “I felt confident that we made the right decision and in turn saved countless lives.”Footnote 129 This case exemplifies, therefore, how the outputs of an intensive eight-hour aerial surveillance were interpreted to fit an anticipated scenario, where the mere sight of a non-unique car ultimately triggered lethal force.

Third, the data from the CENTCOM investigation further suggests that the salience of aerial visuals may have also contributed to the skewed assessments. In particular, in one of the interviews, it was stated that after the strike was approved, a new, non-visual intelligence report was received, stating that the target was going to delay the attack until the following day (thereby making the threat non-imminent). But the eyes were already on the target, and those involved did not want to lose sight of the vehicle, so the additional information was brushed aside and the attack was carried out. The description of events detailed in the investigation report also suggests that everyone involved remained fixated on the various drones’ visuals. There is no information about any attempt to cross-reference the identification of the vehicle, check its licence plate, identify the driver or use other sources of information to substantiate the initial assessments.

Finally, the CENTCOM investigation provides another example of the objectifying drone gaze, which continuously constructs individuals and communities as imminent threats. This threat construction is so entrenched and accepted that despite the baseless targeting of an innocent NGO aid worker and his family, the CENTCOM investigation easily concluded that the errors in this case were “unavoidable given the circumstances”.Footnote 130 However, the circumstances that made the errors in this case “unavoidable” are exactly those that centre targeting decisions on aerial visuals, which, by design, detect some details and omit others. Zemari Ahmadi was a 43-year-old electrical engineer, a proud father of seven children, who had worked since 2006 for an aid NGO. He had a whole life filled with many details that were completely invisible (and insignificant) to the drone sensors. These sensors turned him into a target, his associates into terrorists, and the water containers he loaded in his car into explosives. The CENTCOM investigation report does not include any of this information, as it is deemed irrelevant, being outside the view of the drone.

The Kunduz hospital bombing

On 3 October 2015, at 2:08 am, a US Special Operations AC-130 gunship attacked a Doctors Without Borders (Médecins sans Frontières, MSF) hospital in Kunduz, Afghanistan, with heavy fire. The attack severely damaged the hospital building, resulting in the death of forty-two staff members and patients and injuring dozens.Footnote 131 In the aftermath of the attack, several investigations were carried out by the US military, NATO, the UN Assistance Mission in Afghanistan and MSF.Footnote 132 The CENTCOM Kunduz investigation found that the lengthy attack on the protected hospital building resulted from a “combination of human errors, compounded by process and equipment failures”.Footnote 133 The human errors were attributed to poor communication, coordination and situational awareness, and the equipment failures included malfunctions of communications and targeting systems.Footnote 134

The CENTCOM report specifically indicated that the electronic systems on-board the AC-130 malfunctioned, eliminating the ability of the aircraft to transmit video, send and receive email, or send and receive electronic messages.Footnote 135 The AC-130 team was tasked with supporting ground forces against Taliban fire from a “large building”. When the gunship arrived in Kunduz, the crew took defensive measures, which degraded the accuracy of certain targeting systems, including the ability to locate ground objects.Footnote 136 As a result, the TV sensor operator identified the middle of an empty field as the target location. The team aboard the AC-130 then started searching for a large building nearby and eventually identified a compound about 300 metres to the south that more closely matched the target description. This compound was in fact the MSF hospital.Footnote 137 The navigator questioned the disparity between the first observed location (an open field) and the new location (a large compound), as well as the distance between the two. The TV sensor operator then “re-slaved” the sensors to the original grid and this time identified a “hardened structure that looks very large and could also be like more like a county prison with cells”. This other building was in fact the intended target of the operation.Footnote 138

Following the observation of the second compound, as well as the TV sensor operator's expression of concern that they were not observing a hostile act or hostile intent from the first compound,Footnote 139 the aircrew requested a clarification of the target, receiving the following description:

Roger, [Ground Force Commander] says there is an outer perimeter wall, with multiple buildings inside of it. Break. Also, on the main gate, I don't know if you're going to be able to pick this up, but it's also an arch-shaped gate. How copy?Footnote 140

The aircrew immediately identified a vehicle entry gate with a covered overhang on the north side of the hospital compound. After further discussion of whether the covered overhang was arch-shaped, the crew collectively determined that the target description matched the hospital compound as opposed to the intended target building.Footnote 141 Following this false identification, the hospital complex was designated as the target location and the aircrew were cleared to destroy both the buildings and the people within the complex.Footnote 142

Ultimately, the US military decided against opening a criminal investigation into any of those involved in the misidentification of the hospital and its subsequent bombardment. Instead, several administrative and disciplinary measures were adopted against sixteen individuals because their professional performance during this incident reflected poor communication, coordination and situational awareness.Footnote 143

The NATO investigation similarly concluded that there was no evidence to suggest that the commander of the US forces or the aircrew knew that the targeted compound was a medical facility.Footnote 144 In particular, the NATO report determined that it was “unclear” whether the US Special Forces Commander or the aircrew had the grid coordinates for the hospital available at the time of the air strike.Footnote 145 Similar to the CENTCOM investigation, the NATO report concluded that the misidentification of the hospital and its subsequent bombardment resulted from “a series of human errors, compounded by failures of process and procedure, and malfunctions of technical equipment which restricted the situational awareness” of the forces.Footnote 146

The findings of the CENTCOM and NATO investigations suggest that at least part of the identified malfunctions and situational awareness problems were related to the aerial visualization tools used by the aircrew in this incident (as well as the human–machine interaction).

First, the CENTCOM investigation detailed twelve different technical failures and malfunctions that contributed to the misidentification of the hospital as a target.Footnote 147 While this part of the report is heavily redacted, at least three failures seem to relate directly to systems providing aerial visualization to the crew and command. In particular, the report mentioned several outages preventing command and crew from viewing a certain area or receiving “pre-mission products”, and noted that a core sensor providing aerial visualization during the strike “was looking at the wrong objective”.Footnote 148

Additionally, as the aircrew were preparing to strike the target, they took a much wider orbit around the target area than planned, believing they were under threat.Footnote 149 Because of this greater distance from the target, the fire control sensors had limited visibility of the target area, and the precision of the targeting system was degraded.Footnote 150 Finally, because the attack took place at about 2:00 am, the aircrew were using the AC-130's infrared sensors, which could not show the coloured markings and MSF flag that identified the building as a hospital.Footnote 151 This meant that while the building itself was visible to the attacking forces, details identifying it as a medical facility – such as the MSF flag and logo – were not.

Second, the details concerning the decision-making process that led to the misidentification of the hospital suggest that cognitive biases may have contributed to the error. Specifically, confirmation bias may have strengthened the aircrew's decision that the hospital complex was the building they were looking for. As detailed above, the aircrew received a very vague description of the target area, mainly that it was a large building. As the grid first led them to an open field, they scanned the area for a large building and detected the hospital. Following their request for clarification, they received further information that the target building had an “arch-shaped gate”. Looking for such a gate at the building they were viewing, they quickly found a “gate with a covered overhang”, which they evaluated as fitting the “arch-shaped” description. This particularly vague description may have triggered confirmation bias in the interpretation of the aerial visuals; instead of searching the building for armed insurgents or ammunition, the aircrew looked for a large building with an arched gate, interpreting the visuals to fit this description (and did not continue to search for another building or compound that may have fit the description better).

Third, the CENTCOM investigation also demonstrates problems in the human–technical interaction. Specifically, the report mentioned that a core sensor providing aerial visualization during the strike “was looking at the wrong objective because [Special Operations Task Force – Afghanistan] leadership did not have situational understanding of that night's operations”.Footnote 152 This finding highlights the importance of human–technical alignment and proficiency. Additionally, it suggests that heavy reliance on aerial visualization (as well as other military technologies) may lead to human de-skilling, or degradation of human judgement and capabilities. Reliance on technology has a price tag when the relevant systems fail or malfunction. As noted earlier, when military practices are heavily reliant on technology, there is an erosion of decision-makers’ own judgement and ability to evaluate evolving situations without that technology. Without fully functioning visualization tools and targeting sensors, the aircrew's professional performance was significantly impaired: they were unable to fully orient themselves, and they failed to identify and correctly assess the gaps in their data. While their own judgement was hampered, the degraded visuals that informed them made them confident enough to strike.

Finally, a careful reading of the Kunduz investigation suggests that aerial target visualization may generate dehumanization of those observed. For example, in describing the threat perception of the Ground Force Commander, the investigation report quotes the Commander's perception, formed based on “numerous aerial platforms”, that the area was “swarming with insurgents”.Footnote 153 Through the aerial view, Afghan people, including medical doctors, nurses, patients and visitors, looked like “swarming” ants, hornets or locusts. Similarly, a dialogue between the Flying Control Officer and the navigator concerning the meaning of “target of opportunity” concluded with the Officer's explanation of his understanding of this term (and their orders): “[Y]ou're going out, you find bad things and you shoot them.”Footnote 154 Aerial visualization tools provided ample opportunity to “find bad things”: even though the aircrew could not detect any ammunition or weapons at the hospital compound, the objectifying gaze of the aerial sensors produced a threat and turned a medical facility, and all of its staff, patients and visitors, into legitimate “targets of opportunity”.

***

The analysis of military investigations in the four examples explored above exemplifies the three types of limitations – technical, cognitive and human-technical – of reliance on aerial visuals in military decision-making. These examples highlight the growing need to better account for technology-related biases and malfunctions, to train military decision-makers to identify and account for these limitations, and to improve military risk assessment and decision-making processes.

Aerial visuals and the application of the law of armed conflict

Military operations are not conducted in a normative vacuum. Military law, rules of engagement and the overarching law of armed conflict (LOAC), including its core customary principles of distinction, precaution and proportionality, apply to the legal evaluation of military operations.Footnote 155 The previous section demonstrated how technical, cognitive and human-technical challenges in the interpretation of aerial visuals may negatively influence real-time military fact-finding processes. In this section I examine how these challenges influence the application of – and compliance with – core LOAC principles during military operations.

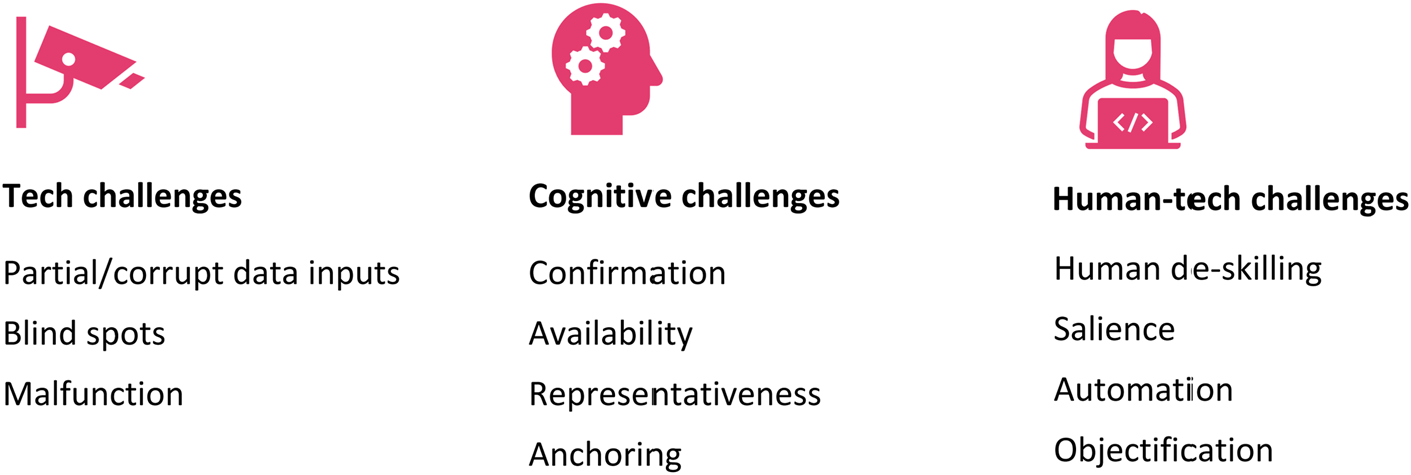

Aerial visuals influence the application of the LOAC in three ways, through (1) generating information necessary to apply the law in concrete circumstances, (2) affecting the scope of the legal rules, and (3) amplifying vulnerabilities in the LOAC's evidentiary standards. Figure 2 illustrates these three pathways through which aerial visuals may influence the application of the LOAC in concrete cases. I discuss each of the three below.

Figure 2. Pathways through which aerial visuals may influence the application of the LOAC.

Fact-finding: Aerial visuals generate information necessary for the application of the LOAC

Aerial visuals provide an evidentiary basis for legal evaluations and establish the factual framework necessary for the legal analysis. Drone visuals, for example, are used to identify and quantify the risk to bystanders during collateral damage assessments, thus influencing the legal evaluation of a planned operation as consistent (or inconsistent) with the requirements of the principle of proportionality. In other words, if the legal standard requires that the anticipated collateral damage be proportionate to the anticipated military gain, aerial visuals take part in determining, ex ante, what the anticipated collateral damage in the concrete circumstances is (as well as what gain can be expected from a concrete attack). The challenges and constraints of these fact-finding practices were the focus of the previous sections.

Scope of legal rules: Aerial visualization capabilities influence the scope of the legal requirements