Mobile health (mHealth) has the potential to change health systems and how care is delivered (Reference Powell, Landman and Bates1). One form of mHealth is mobile medical applications (MMAs), also known as ‘apps’. These are a type of software available for mobile platforms (e.g., smartphone, tablet, smartwatch) (Reference Powell, Landman and Bates1). In a medical context, MMAs may be used by patients to self-manage and/or screen for medical conditions, rather than presenting at hospitals or clinics for additional appointments. MMAs may also allow medical practitioners and/or allied health workers to remotely monitor, screen and manage their patients (2;Reference Laranjo, Lau and Oldenburg3).

A potential barrier to the successful integration of MMAs into health systems is that many come at a cost to the patient, or require in-app purchases, which some patients are unable to afford. While some MMAs may have negligible costs, and thus will not warrant public funding, others may require subscriptions or come with accessories, such as wearables and implantable devices. Furthermore, medical practitioners and allied health workers who use MMA-based services during a clinical encounter are often unable to claim reimbursement for the interpretation of MMA output or for treatment guided by MMA results.

Health maintenance organizations (HMOs) in the United States have reimbursed some MMAs since 2013 (Reference Dolan4). Similarly, since 2014, private health insurers have reimbursed specific MMAs in Germany (Reference Paris, Devaus and Wei5;Reference Dolan6). It is unclear how these apps were selected for reimbursement, although this may have depended on whether the MMA was approved by the relevant regulatory authority (e.g., the United States Food and Drug Administration [FDA]).

Countries with tax-funded universal healthcare like Australia and Great Britain currently do not reimburse the use of MMAs. However, the National Institute for Health and Care Excellence (NICE) in Britain is currently investigating ways to assess MMAs and provide guidance on their use (Reference Clifford7;Reference Mulryne and Clemence8). If the use of MMAs becomes routine in clinical consultations, MMA-guided care will need to be formally assessed.

This systematic review is part of a larger research project to develop or adapt an evaluation framework for MMAs and determine the feasibility of a reimbursement pathway for MMAs in Australia. The aim of our review was to identify and appraise existing evaluation frameworks for MMAs and determine their suitability for use in health technology assessment (HTA). In this context, an evaluation framework was defined as a method for determining an MMA's effectiveness, safety, and/or cost and cost-effectiveness.

METHODS

Literature Search

We searched PubMed (MEDLINE), EMBASE, CINAHL, PsycINFO, The Cochrane Library, Compendex, and Business Source Complete for records published between January 1, 2008 (when the first publicly accessible online application store opened) and October 31, 2016 (Reference Donker, Petrie, Proudfoot, Clarke, Birch and Christensen9). We used a broad search strategy including terms for MMAs (e.g., mHealth app*, telehealth app*), mobile platforms (e.g., cellular phone, mobile device) and evaluation (e.g., criteri*, apprais*). Grey literature sources were also searched to identify any relevant material that may not have been identified through the database search. The full search strategy is given in the Supplementary Materials.

Study Eligibility Criteria

Papers were selected for inclusion if they met the predetermined eligibility criteria. The population of interest was participants aged 18 years or over who used an MMA. The intervention of interest was an MMA evaluation framework. This included frameworks that assessed all mHealth apps, as MMAs are a subset of these. MMAs were defined as mobile apps (including accompanying accessories or attachments) available on various platforms (smartphones, tablets, smartwatches, etc.) that have a therapeutic or diagnostic intended purpose. Frameworks aimed solely at assessing pregnancy, health promotion, or disease prevention apps (e.g., medication management, smoking cessation, and weight management) were excluded as the apps’ intended purpose was not diagnostic or therapeutic. The outcomes of interest were the core HTA evaluation domains of effectiveness, safety, and/or cost and cost-effectiveness. There was no comparator as the aim of the systematic review was not to determine the effectiveness of these evaluation frameworks but, rather, to identify the HTA domains that they address. Only frameworks available in English were included. Frameworks that were duplicated in several articles were collated and reported as a single record.

Study Selection

Two reviewers (M.M. and T.M.) screened the literature separately and applied the inclusion criteria. M.M. reviewed all titles and abstracts retrieved from the searches, while T.M. assessed 10 percent. The full text articles were screened against the inclusion criteria by M.M. Any articles for which M.M. was unsure of eligibility were discussed with T.M. and a consensus decision was made. The reference lists of included papers were pearled to identify any additional relevant references.

Data Extraction

The data extracted from the papers included: author and date of publication, source affiliation, country of origin, name of framework, study design, description of framework, intended audience/user, type of MMA, framework scoring system, and HTA domains addressed. The included papers were not critically appraised for study quality as this was a methodological systematic review.

Framework Assessment

A checklist was created to act as a tool to standardize data extraction. Using the checklist, each framework was assessed to determine if it included any of nine traditional HTA domains; six were core domains considered essential for a full HTA: current use of the technology; description and technical characteristics; effectiveness; safety; cost and cost-effectiveness; organizational aspects, and three were optional domains: legal aspects; ethical aspects; social aspects (Reference Busse, Orvain and Velasco10;Reference Merlin, Tamblyn, Ellery and Group11). The checklist was trialed and tested by an HTA expert and M.M. and was found to have reasonable inter-rater reliability (Kappa = 0.77).
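The inter-rater statistic reported for the checklist can be illustrated with a minimal sketch of Cohen's kappa, which compares the observed agreement between two raters with the agreement expected by chance. The ratings below are hypothetical and are not the study data; the labels ("Y" addressed, "P" partially addressed, "N" not addressed) and the function name `cohen_kappa` are assumptions made for illustration only.

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters labelling the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Observed agreement: share of items given the same label by both raters.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the raters' marginal label frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_chance = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_chance == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_observed - p_chance) / (1 - p_chance)


# Hypothetical checklist ratings (NOT the study data):
# "Y" = domain addressed, "P" = partially addressed, "N" = not addressed.
expert = list("YYNYPNYNYY")
reviewer = list("YYNYNNYNYP")

kappa = cohen_kappa(expert, reviewer)
print(f"kappa = {kappa:.2f}")
```

A value of 1 indicates perfect agreement and 0 indicates agreement no better than chance, which is why kappa is usually preferred to raw percentage agreement when one rating category dominates.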

RESULTS

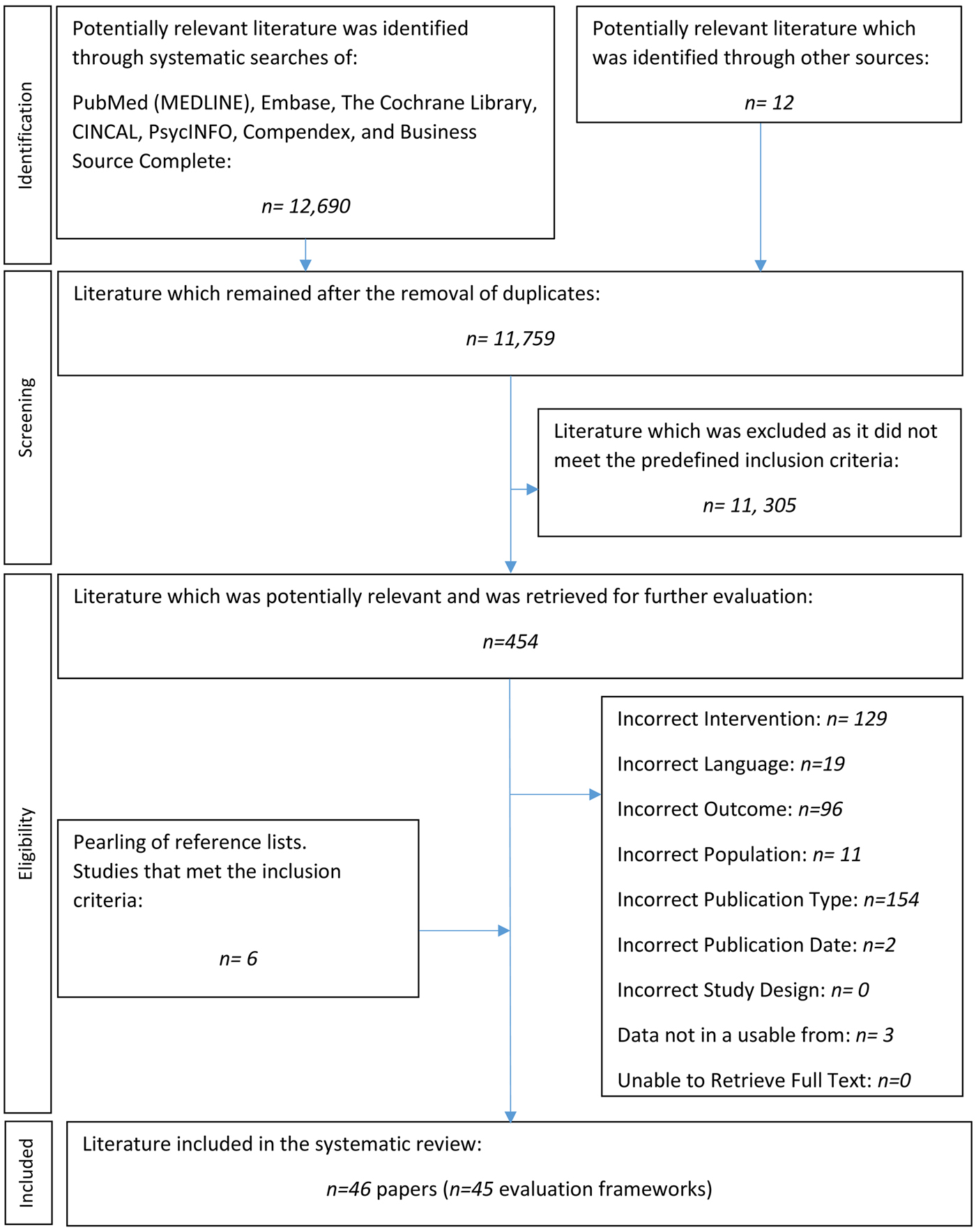

The systematic searches retrieved 12,690 citations. An additional twelve papers were identified from grey literature sources. Six additional frameworks were identified through the pearling of the included publications’ reference lists. Three frameworks were excluded as the information provided was not in a usable form. An evidence base of forty-six papers met the inclusion criteria, and two of these papers reported on the same framework. Thus, forty-five frameworks were identified that assessed whether MMAs are safe, effective and/or cost-effective. Figure 1 illustrates the complete study selection process. Tables 1 and 2 provide details on all forty-five MMA evaluation frameworks.

Fig. 1. PRISMA flow-chart of literature selection.

Table 1. Description of Frameworks

Explanatory note: ¹Based on first author affiliation.

Table 2. HTA Domains Addressed by Each Framework

Note. ✔ Domain was addressed; ~ Domain was partially addressed; ✘ Domain was not addressed.

HTA, health technology assessment.

Overview of Frameworks

All of the included frameworks addressed mHealth applications, with 73 percent (n = 33) explicitly assessing MMAs. Most of the frameworks that evaluated MMAs were sourced from universities. The remaining 27 percent (n = 12) were developed by private organizations, institutes, medical schools, or governmental organizations.

The frameworks originated from three geographical regions. Most (49 percent, n = 22) came from North America, while Europe contributed 40 percent (n = 18) and the remaining 11 percent (n = 5) originated from the Asia-Pacific region.

The frameworks that assessed MMAs came in a variety of different formats. Some of these formats included questionnaires, data extraction criteria, flow charts, and varying types of lists. Due to the variety of formats, it was difficult to categorize the frameworks into types. Less than half of the frameworks had a scoring system (Table 1).

Intended Audience

The included frameworks had different intended audiences or purposes: MMA developers (n = 3), quality assurance for user protection (n = 13), patients (n = 17), and quality assurance in a research setting (n = 18). All of these frameworks assessed the HTA domains concerning the current use of the technology, description and technical characteristics, effectiveness, safety, and ethical aspects.

Intended Health Condition

Most of the frameworks were aimed at evaluating MMAs that focused on the treatment, management or diagnosis of chronic health conditions. Diabetes and mental health were the most commonly addressed conditions, with 13 percent (n = 6) of the frameworks focused on MMAs for each condition. Cancer and pain were the next most frequently addressed conditions (7 percent, n = 3, each). Three frameworks (7 percent) were aimed at MMAs managing sexually transmitted infections, with two of these focused specifically on the human immunodeficiency virus (HIV).

Critical Appraisal of the Evidence-Base Underpinning the MMA

The credibility of the information included in an MMA was assessed by fifteen (33 percent) frameworks, including through: level of evidence or grade of the recommendation (Reference Robustillo Cortés, Cantudo Cuenca, Morillo Verdugo and Calvo Cidoncha12;Reference Stoyanov, Hides and Kavanagh13); assessment in a randomized controlled trial (Reference Stoyanov, Hides and Kavanagh13–Reference Beatty, Fukuoka and Whooley15); study design (i.e., clinical trial, controlled trial) (Reference Drincic, Prahalad, Greenwood and Klonoff16;Reference Portelli and Eldred17); improved health outcomes (Reference Chan, Torous, Hinton and Yellowlees18); sample size, intervention fidelity and evaluation design (Reference McMillan, Hickey, Patel and Mitchell19); and publication in peer-reviewed journals (Reference Albrecht, Von Jan, Pramann, Mantas and Hasman20–Reference Yasini and Marchand23). Powell et al. (Reference Powell, Torous and Chan14), Beatty et al. (Reference Beatty, Fukuoka and Whooley15), and Stoyanov et al. (Reference Stoyanov, Hides and Kavanagh13) specifically question if the MMA was clinically tested using RCTs. Stoyanov et al. (Reference Stoyanov, Hides and Kavanagh13) asked whether the MMA has been verified through trialing/testing, ranking the responses with the lowest being no RCTs and the highest being multiple RCTs. One framework considered whether a systematic review or meta-analysis had been conducted about the MMA and topic area (Reference Murfin24). Furthermore, twenty-seven (60 percent) of the included frameworks asked about the MMA's source of information.

HTA Domains

More than half of the assessed frameworks included the following HTA domains: MMA effectiveness; description of technical characteristics; safety; current use of the technology; and ethical aspects (Figure 2). Five frameworks assessed six domains (Reference McMillan, Hickey, Patel and Mitchell19;Reference Gibbs, Gkatzidou and Tickle25–Reference Martinez-Perez, de la Torre-Diez and Lopez-Coronado29), whereas two frameworks only assessed a single domain (Table 2) (Reference Gautham, Iyengar and Johnson30;31). The average number of domains addressed was four (x̄ = 3.9).

Fig. 2. The proportion of frameworks that address each HTA domain.

Core HTA Domains

Effectiveness

Every framework assessed the effectiveness of MMAs in some capacity. Eleven frameworks (24 percent) evaluated user satisfaction. Thirty (67 percent) frameworks evaluated the technical efficacy of MMAs. Beatty et al. (Reference Beatty, Fukuoka and Whooley15), Drincic et al. (Reference Drincic, Prahalad, Greenwood and Klonoff16), and McMillan et al. (Reference McMillan, Hickey, Patel and Mitchell19) appraised efficacy of the applications, but did not provide any further detail of what they meant; it could be interpreted as therapeutic and/or diagnostic effectiveness. Only one framework explicitly considered comparative effectiveness (Reference Aungst, Clauson, Misra, Lewis and Husain32). The framework by Aungst et al. (Reference Aungst, Clauson, Misra, Lewis and Husain32) asked whether an MMA already exists for the current reference condition (clinical tool used in practice).

Investigative MMAs

The diagnostic accuracy of MMAs was assessed by 29 percent (n = 13) of the frameworks. Martinez-Perez et al. (Reference Martínez-Pérez, De La Torre-Díez, Candelas-Plasencia and López-Coronado28;Reference Martinez-Perez, de la Torre-Diez and Lopez-Coronado29) reviewed the accuracy of an MMA's calculations. Stoyanov et al. (Reference Stoyanov, Hides and Kavanagh13), Powell et al. (Reference Powell, Torous and Chan14), Fairburn and Rothwell (Reference Fairburn and Rothwell33), Gibbs et al. (Reference Gibbs, Gkatzidou and Tickle25), and Murfin (Reference Murfin24) all assessed the accuracy or specificity of the information given in the MMAs, while Hacking Medical Institute (HMi) (34) reviewed the MMA's clinical credibility. Powell et al. (Reference Powell, Torous and Chan14) included four response options which rated how the MMA was designed to improve a specific condition, whereas Gibbs et al. (Reference Gibbs, Gkatzidou and Tickle25) ranked the accuracy of the information also using four options. None of the thirteen frameworks assessed subsequent changes in patient management or decision making associated with use of the MMA (a necessary domain for determining the effectiveness of investigative interventions) (Reference Merlin, Lehman, Hiller and Ryan35). Furthermore, none of the included frameworks assessed the clinical utility of MMAs, that is, the health impacts of an MMA that provides diagnostic information.

Therapeutic MMAs

Therapeutic effectiveness was assessed by 71 percent (n = 32) of the frameworks. Three frameworks addressed primary patient-relevant outcomes including quality of life and mortality (Reference Drincic, Prahalad, Greenwood and Klonoff16;Reference Chan, Torous, Hinton and Yellowlees18;Reference McMillan, Hickey, Patel and Mitchell19), whereas 25/32 (78 percent) made provision for the reporting of surrogate outcomes (e.g., physiological, biochemical, and/or behavior change parameters); for example, a diabetes management MMA that could log glucose (HbA1C) readings, or an HIV management app that could track T-cell counts.

Safety

Safety was addressed in thirty-four (76 percent) frameworks with twenty-seven (79 percent) of these assessing the source of the information used by the MMA, and three appraising how the information sources were selected. Only seven frameworks evaluated the harms of the app itself (e.g., adverse events) (Reference Powell, Torous and Chan14;Reference Chan, Torous, Hinton and Yellowlees18;Reference McMillan, Hickey, Patel and Mitchell19;Reference Grundy, Wang and Bero26;Reference Aungst, Clauson, Misra, Lewis and Husain32;Reference Ferrero-Alvarez-Rementeria, Santana-Lopez, Escobar-Ubreva and Vazquez-Vazquez36;Reference Singh, Drouin and Newmark37). Six frameworks addressed whether the MMA had been trialed or tested and whether safety concerns had been identified during the process (Reference Beatty, Fukuoka and Whooley15;Reference Drincic, Prahalad, Greenwood and Klonoff16;Reference Albrecht, Von Jan, Pramann, Mantas and Hasman20;Reference Pandey, Hasan, Dubey and Sarangi22;38;Reference Brooks, Vittinghoff and Iyer39).

Cost, Cost-Effectiveness

Only one framework assessed the cost-effectiveness domain by asking whether a health economic evaluation had been conducted (Reference Walsworth40). However, this domain was partially addressed by 11 (24 percent) frameworks that reviewed the cost of MMAs in terms of the price to download the application or to undertake in-app purchases (Reference Stoyanov, Hides and Kavanagh13;Reference Beatty, Fukuoka and Whooley15;Reference Pandey, Hasan, Dubey and Sarangi22;Reference Gibbs, Gkatzidou and Tickle25;Reference Huckvale, Morrison, Ouyang, Ghaghda and Car27;Reference Basilico, Marceglia, Bonacina and Pinciroli41–Reference Shen, Levitan and Johnson46).

Current use of the Technology

The current use of the technology was assessed by 25 (56 percent) of the frameworks. Seventeen (68 percent) assessed usage of the MMA (e.g., rates, use, trends), sixteen (64 percent) assessed the intended user population and fifteen (60 percent) considered the intended purpose of the app (e.g., diagnosis, management, or treatment).

Description and Technical Characteristics

Technical characteristics of MMAs were assessed by 78 percent (n = 35) of the frameworks. The type of device (e.g., mobile platform, operating systems, software versions) was evaluated in twenty-three (66 percent) frameworks and nineteen (54 percent) evaluated whether experts were consulted during the development of the app. Eleven (31 percent) assessed whether the MMA had communicative capabilities (e.g., communication with personal health records, communication with electronic health records, and healthcare provider-patient communication), and eight (23 percent) considered whether the MMA had personalization capabilities.

Organizational Aspects

Only three (7 percent) of the included frameworks assessed whether the MMA would have organizational implications. Two of the frameworks recorded if any training was needed to use the application and if adopting the MMA would alter the usage of existing services (Reference Chan, Torous, Hinton and Yellowlees18;Reference Martínez-Pérez, De La Torre-Díez, Candelas-Plasencia and López-Coronado28;Reference Martinez-Perez, de la Torre-Diez and Lopez-Coronado29). One framework assessed whether the MMA would alter the daily practices of clinicians (Reference Aungst, Clauson, Misra, Lewis and Husain32).

Optional HTA Domains

Legal Aspects

Four (9 percent) of the identified frameworks assessed the legal implications of MMAs (Reference Robustillo Cortés, Cantudo Cuenca, Morillo Verdugo and Calvo Cidoncha12;Reference Gibbs, Gkatzidou and Tickle25–Reference Huckvale, Morrison, Ouyang, Ghaghda and Car27). Three of these determined whether there were legal implications by asking whether the MMA had a disclaimer concerning clinical accountability (Reference Robustillo Cortés, Cantudo Cuenca, Morillo Verdugo and Calvo Cidoncha12;Reference Gibbs, Gkatzidou and Tickle25;Reference Grundy, Wang and Bero26). Two of the frameworks required consideration of the possibility of copyright infringement (Reference Grundy, Wang and Bero26;Reference Huckvale, Morrison, Ouyang, Ghaghda and Car27).

Ethical Aspects

Ethical considerations were examined by twenty-four (53 percent) frameworks. Of these, eighteen (75 percent) recorded whether the MMA had a privacy policy (although only four considered the individual content of the privacy policy); eighteen (75 percent) evaluated patient confidentiality provisions in the app; and fourteen (58 percent) assessed conflicts of interest (e.g., affiliation, funding, third party sponsorship). Four (17 percent) frameworks appraised equity (e.g., socioeconomic status, disability, language, and age) (Reference Stoyanov, Hides and Kavanagh13;Reference Chan, Torous, Hinton and Yellowlees18;Reference Huckvale, Morrison, Ouyang, Ghaghda and Car27;Reference Reynoldson, Stones and Allsop43), and an additional four (17 percent) frameworks assessed MMA accessibility (e.g., geographical location) (Reference McMillan, Hickey, Patel and Mitchell19;Reference Huckvale, Morrison, Ouyang, Ghaghda and Car27–Reference Martinez-Perez, de la Torre-Diez and Lopez-Coronado29;Reference Lee, Sullivan and Schneiders42).

Social Aspects

Six (13 percent) frameworks assessed how the MMA provides social support to the users (Reference Beatty, Fukuoka and Whooley15;Reference Portelli and Eldred17;Reference Martínez-Pérez, De La Torre-Díez, Candelas-Plasencia and López-Coronado28;Reference Martinez-Perez, de la Torre-Diez and Lopez-Coronado29;Reference Singh, Drouin and Newmark37;Reference Lalloo, Jibb, Rivera, Agarwal and Stinson47), for example, whether the MMA provides psychosocial support, if the MMA can provide support through social media, or if access to social support is facilitated.
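The per-domain counts reported in this section can be cross-checked against the reported proportions and the mean number of domains per framework with a short calculation. The sketch below transcribes the counts from the text; treating the eleven frameworks that only partially addressed cost as not having addressed that domain is an assumption of this sketch, under which the total reproduces the reported mean of 3.9.

```python
# Number of frameworks (out of 45) that addressed each HTA domain, transcribed
# from the Results text. ASSUMPTION: the eleven frameworks that only partially
# addressed cost are not counted under "cost and cost-effectiveness".
TOTAL_FRAMEWORKS = 45
domain_counts = {
    "effectiveness": 45,
    "description and technical characteristics": 35,
    "safety": 34,
    "current use of the technology": 25,
    "ethical aspects": 24,
    "social aspects": 6,
    "legal aspects": 4,
    "organizational aspects": 3,
    "cost and cost-effectiveness": 1,
}


def percent(count, total=TOTAL_FRAMEWORKS):
    """Share of frameworks expressed as a percentage."""
    return 100 * count / total


for domain, count in domain_counts.items():
    print(f"{domain}: {count}/{TOTAL_FRAMEWORKS} = {percent(count):.1f} percent")

# Each framework contributes one count per domain it addresses, so the mean
# number of domains per framework is the sum of the counts divided by 45.
mean_domains = sum(domain_counts.values()) / TOTAL_FRAMEWORKS
print(f"mean domains per framework = {mean_domains:.1f}")
```

Percentages quoted for sub-items within a domain (for example, the share of safety frameworks that assessed information sources) use the number of frameworks addressing that domain as the denominator rather than all forty-five, so they are not directly comparable with the figures computed here.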

DISCUSSION

None of the included frameworks could be used “off the shelf” to evaluate MMAs in a full HTA requiring assessment across all six core HTA domains. Frameworks by Grundy et al. (Reference Grundy, Wang and Bero26), HMi (34), Huckvale et al. (Reference Huckvale, Morrison, Ouyang, Ghaghda and Car27), Martinez-Perez et al. (Reference Martínez-Pérez, De La Torre-Díez, Candelas-Plasencia and López-Coronado28;Reference Martinez-Perez, de la Torre-Diez and Lopez-Coronado29), and McMillan et al. (Reference McMillan, Hickey, Patel and Mitchell19) all assessed six HTA domains, but none of these addressed all six core domains. Ethical, social, and legal considerations are frequently omitted in typical HTAs. However, we found that, for MMA-specific evaluations, ethical issues were often addressed, whereas the organizational, cost and cost-effectiveness, and legal domains were the least likely to be addressed (Reference Merlin, Tamblyn, Ellery and Group11).

Safety

Nearly a quarter of the evaluation frameworks did not assess safety in any capacity. Only seven (16 percent) frameworks explicitly considered the MMA's ability to cause harm or adverse events. None of the frameworks explicitly assessed the comparative safety of the MMA with reference to other MMAs or current clinical practice without use of an MMA. It is possible that evaluators of MMAs do not find safety as important a concern as the effectiveness of this technology. MMAs with attachments (such as glucometers, oximeters, or electrocardiogram leads) that have the potential to cause physical harm may be more obvious candidates for safety assessment than the MMA itself.

A further concern regards the source of information on which the assessment of safety was based. We found that only one quarter of frameworks checked this factor. Lack of attention to information sources is problematic because of the potential harms caused by misinformation. The International Medical Device Regulators Forum (IMDRF) (48) states that the greatest risks and benefits posed by software which acts as a medical device (SaMD), such as an MMA, relate to its output and how it impacts on a patient's clinical management or other healthcare related decisions, rather than to direct contact with the device itself. Apps which utilize poor/weak evidence bases could present a range of clinical harms. Examples include chronically ill patients using medication incorrectly due to inaccurate feedback from the MMA; rehabilitation patients doing inappropriate exercises; or, potentially more seriously, the long-term health consequences of receiving a false negative diagnosis from an investigational MMA. However, both the physical harm and risks associated with misinformation are of interest in an HTA and may affect subsequent policy decisions regarding access to, or reimbursement of, MMAs.

Effectiveness

Normally, to assess the effectiveness of an intervention in an HTA, the results of the intervention are compared with current practice or an existing intervention. However, only one of the forty-five frameworks considered in this systematic review referred to the availability of a comparator MMA or to the current management of the condition without the MMA. Without an identified comparator, it is impossible to adequately assess the effectiveness of an MMA or conduct a full HTA that could inform policy decision making.

Investigative MMAs

The safety and effectiveness of an investigative medical service can be determined through direct or linked evidence (Reference Merlin, Lehman, Hiller and Ryan35;49–51). None of the included frameworks appeared to use a direct evidence approach to evaluate an MMA. Frameworks did address the diagnostic accuracy of an MMA; however, none linked this to subsequent changes in management or healthcare decision making. In any case, those frameworks that assessed the diagnostic accuracy of MMAs did not collect sufficient information to enable a full assessment.

Therapeutic MMAs

In the evaluation of a therapeutic medical service, safety and effectiveness can be determined through direct randomized trials (preferred), indirect comparisons of randomized trials, nonrandomized trials, or observational studies. The purpose of this evidence is to identify the best available clinical evidence for the primary indication relative to the main comparator (50;51). None of the included frameworks adequately addressed the key elements evaluated to demonstrate the therapeutic effectiveness of an MMA, with only seven frameworks considering the quality of the evidence base (such as whether clinical trials were considered or what health outcomes eventuated from use of the MMA).

Cost and Cost-effectiveness and Organizational Issues

The frameworks did not consider the impact that the direct costs of an MMA would have on the current health system, or the potential effect on other medical services or devices (Table 2). Only one framework, by Walsworth (Reference Walsworth40), addressed cost and cost-effectiveness, and it only assessed whether the value of the MMA justified the cost. A formal economic evaluation of the value for money associated with the use of the MMA was not required by any of the identified evaluation frameworks. It may be that the cost impact of MMAs is considered to be trivial and, therefore, cannot justify a formal economic evaluation; the cost of some MMAs is small. However, costs do not just relate to the unit price of the MMA but also to downstream costs associated with behavior affected by the MMA.

Although no single framework addressed all of the information necessary for an HTA of an MMA, there were elements considered across the frameworks that if combined could produce a comprehensive evaluation framework. Technology-specific characteristics are particularly relevant.

Technology Specific Considerations

There are several technology specific considerations that may need to be addressed when conducting an HTA on an MMA.

The first is a requirement to assess ethical aspects, specifically data privacy. Over half of the frameworks identified had assessed ethical issues concerned with MMAs. Connectivity to the Internet, networks, and other devices through a portable handheld device (e.g., smartphone or tablet) is a unique vulnerability of apps that are used for medical purposes. If the security of the MMA is jeopardized (e.g., by hacking or viruses), there is the potential to compromise sensitive personal health information (52;53). The IMDRF (54) regards security concerns relating to the privacy and confidentiality of data (of an SaMD) as safety concerns. The accessibility, availability, and integrity of the device output are crucial for patient treatment and diagnoses (54). A further concern is that companies have been known to sell consumer data (55). The FDA (52;53) has attempted to address cybersecurity concerns by publishing pre- and postmarket guidance documents which provide recommendations for the management of cyber threats to medical devices.

Second, compatibility and connectivity concerns are important for the evaluation of MMAs. MMA performance may vary between different platforms (e.g., smartphone, tablet, or smartwatch), with different operating systems (e.g., Android versus iOS), and for different generations of the same device (e.g., iPhone 5 versus iPhone 6). Additionally, the impact of software updates must be allowed for, as MMAs are a dynamic technology which is constantly changing. One update that makes an incremental change to the MMA may not alter its intended purpose. However, multiple subsequent incremental updates may change the intended purpose of the MMA (Reference O'Meley56). As highlighted by the IMDRF (54), if not managed systematically, any modification (e.g., updates) to the software throughout its lifecycle, including maintenance, poses a risk to the patient. A full HTA that is used to inform policy decisions regarding an MMA may need to assess these compatibility and connectivity concerns to ensure that the app is consistent across various platforms, operating systems, and devices, as well as to identify when software modifications, such as updates, should trigger reassessment of the MMA.

Key Components of an MMA Evaluation Framework

MMA evaluation frameworks intended to appraise apps for HTA purposes should include: consideration of a comparator; a complete assessment of safety and harms from misinformation; a more detailed evaluation of ethical issues such as equity and secure management of confidential data; and consideration of the impact of software updates on the safety and effectiveness of the MMA. It is difficult to determine from this systematic review whether social, legal, and organizational aspects, or the cost and cost-effectiveness of MMAs should be evaluated. It would be helpful to identify indicators that could trigger an assessment of these factors. More research is needed to determine the concepts that should be included in an MMA evaluation framework for HTA purposes and what structure the framework could take. For example, would the structure of such a framework follow the HTA domains or use another categorization method which is more suitable to address the unique challenges presented by MMAs (e.g., development quality, information security, technical considerations)?

The second stage of our research project is to conduct interviews with MMA developers, health professionals and policy makers to identify factors important in the use, assessment and reimbursement of MMAs.

LIMITATIONS

As with any systematic review, there were some limitations with the research. There is a risk of publication bias, although we attempted to limit this by conducting grey literature searches and including all frameworks in the review that met the selection criteria. Another possible limitation was that the checklist we created to standardize and identify which HTA domains the frameworks addressed could have been idiosyncratic. The tool was pilot tested by an HTA expert and found to have reasonable inter-rater reliability (Kappa = 0.77). The use of the core HTA domains to assess the MMA evaluation frameworks may have limited the concepts identified. To address this, we have also highlighted several technology-specific considerations that would need to be included in an HTA of MMAs.

In conclusion, none of the forty-five identified frameworks could be used, unaltered, to assess an MMA in a full HTA to inform a policy decision. While several of the identified MMA evaluation frameworks addressed up to six of the HTA domains, there was a lack of detail that would be required to undertake a full HTA. To adapt these frameworks for use in the HTA of MMAs there would need to be greater consideration of the comparator, and a fuller assessment of the harms associated with MMAs. Our results also indicate that an HTA of an MMA should pay particular attention to the ethical issues associated with the technology, in particular to the secure handling of confidential data. The impact of MMA updates on overall conclusions of safety and effectiveness would also need consideration.

Policy Implications

This research has various policy implications. First, there is a need to develop an MMA evaluation framework that is compatible with HTA and addresses all of the relevant policy concerns. Further information is needed from developers and users of apps about the technology-specific characteristics of MMAs that would need to be addressed in an HTA evaluation framework to inform policy decisions on MMAs. Second, due to technology-specific considerations, such as the app development cycle, varying platforms, and cybersecurity risks, regulatory and reimbursement authorities may need to work collaboratively with each other if MMAs are to be safely integrated into clinical practice and healthcare delivery.

SUPPLEMENTARY MATERIAL

The supplementary material for this article can be found at https://doi.org/10.1017/S026646231800051X

CONFLICTS OF INTEREST

The authors have nothing to declare.