Media summary: Causal directed acyclic graphs (causal DAGs) are powerful tools for clarifying assumptions required for causal inference. However, they can be easily misused. This tutorial provides guidance on safely integrating causal diagrams into analytic workflows, underscoring the importance of starting with clearly defined causal questions.

Introduction

Human research begins with two fundamental questions:

1. What do I want to know?

2. For which population does this knowledge generalise?

In the human sciences, our questions are typically causal. We aim to understand the effects of interventions on certain variables. However, many researchers collect data, apply complex regressions, and report model coefficients without understanding that the assumptions needed to support causal inferences differ from those needed to support predictions. Even when our models predict well, it typically remains unclear how these predictions relate to the scientific questions that sparked our interest.

Some say that association cannot imply causation and prohibit causal inferences from observational data. However, our experimental traditions reveal that when interventions are controlled and randomised, the coefficients we recover from statistical models can permit causal interpretations. The thread to causal inference is not from associations but rather from confounding. Despite familiarity with experimental protocols, however, many researchers struggle to address confounding by emulating randomisation and control using non-experimental or ‘real-world’ data. Practices of confounding control are not systematic. Indeed, we often overlook that what we take as control can inadvertently undermine our ability to consistently estimate causal effects, even in experiments (Montgomery et al., Reference Montgomery, Nyhan and Torres2018). Although the term ‘crisis’ is arguably overused in the human sciences, the state of causal inference leaves considerable headroom for improvement. ‘Headroom for improvement’ applies to poor experimental designs that unintentionally weaken causal claims (Bulbulia, Reference Bulbulia2024d; Hernán et al., Reference Hernán2017; Montgomery et al., Reference Montgomery, Nyhan and Torres2018).

Fortunately, recent decades have seen considerable progress in causal data science, commonly called ‘causal inference’, or ‘CI’. The progress has transformed those areas of health science, economics, political science and computer science that have adopted it. Causal inference provides methods for obtaining valid causal inferences from data through careful, systematic workflows. Within the workflows of causal inference, causal directed acyclic graphs (causal DAGs) – are powerful tools for evaluating whether and how causal effects can be identified from data. My purpose here is to explain where these tools fit within causal inference workflows and to illustrate several practical applications. I focus on causal directed acyclic graphs because they are relatively easy to use and clear for most applications. However, causal DAGs can be misused. Here, I consider common pitfalls and how to avoid them.

In Part 1, I review the conceptual foundations of causal inference. The basis of all causal inference lies in counterfactual contrasts. Although there are slightly different philosophical approaches to counterfactual reasoning, it is widely agreed that to infer a causal effect is to contrast counterfactuals for a well defined population under different levels of intervention. The overview I present here builds on the Neyman–Rubin potential outcomes framework of causal inference (Holland, Reference Holland1986) as it has been extended for longitudinal treatments by epidemiologist James Robins (Robins, Reference Robins1986).

In Part 2, I describe how causal DAGs allow investigators to evaluate whether and how causal effects may be identified from data using assumptions encoded in a causal DAG. I outline five elementary graphical structures from which all causal relations may be derived; these structures form the building blocks of every causal directed acyclic graphs. I then examine five rules that clarify whether and how investigators may identify causal effects from data under the structural (or equivalently causal) assumptions that a causal DAG encodes.

In Part 3, I apply causal directed acyclic graphs to seven common identification problems, showing how repeated-measures data collection addresses these problems. I then use causal diagrams to explain the limitations of repeated-measures data collection for identifying causal effects, tempering enthusiasm for easy solutions from repeated-measures designs.

In Part 4, I offer practical suggestions for creating and reporting causal directed acyclic graphs in scientific research. Where there is ambiguity or debate about how a treatment may be related to an outcome independently of causality, I suggest that investigators report multiple causal diagrams and conduct distinct analyses for each.

Part 1: causal inference as counterfactual data science

The first step in every causal inference workflow is to state a well-defined causal question and a target population for whom answers are meant to generalise (Hernán et al., Reference Hernán, Sauer, Hernández-Díaz, Platt and Shrier2016a).

1. What causal quantity do I want to learn from the data?

2. For which population does this knowledge generalise?

Causal diagrams come after we have stated a causal question and have clarified our ‘target population’. Before reviewing causal diagrams we must consider what is required to answer these questions precisely.

The fundamental problem of causal inference: missing counterfactual observations

We begin with the concept of causality itself. Consider an intervention, A, and its effect, Y. We say that A causes Y if altering A would lead to a change in Y (Hume, Reference Hume1902; Lewis, Reference Lewis1973). If altering A would not change Y, we say that A has no causal effect on Y.

In causal inference, we aim to use data to quantitatively contrast the potential outcomes in response to different levels of a well-defined intervention. Commonly, we refer to such interventions as ‘exposures’ or ‘treatments’; we refer to the possible effects of interventions as ‘potential outcomes’.

Consider a binary treatment variable A ∈ {0, 1}. For each unit i in the set {1, 2, …, n}, when A i is set to 0, the potential outcome under this condition is denoted Y i(0). Conversely, when A i is set to 1, the potential outcome is denoted Y i(1). We refer to the terms Y i(1) and Y i(0) as ‘potential outcomes’ because, until realised, the effects of interventions describe counterfactual states.

Suppose that each unit i receives either A i = 1 or A i = 0. The corresponding outcomes are realised as Y i|A i = 1 or Y i|A i = 0. For now, we assume that each realised outcome under that intervention is equivalent to one of the potential outcomes required for a quantitative causal contrast, such that [(Y i(a)|A i = a)] = (Y i|A i = a). Thus, when A i = 1, Y i(1)|A i = 1 is observed. However, when A i = 1, it follows that Y i(0)|A i = 1 is not observed:Conversely:

We define δ i as the individual causal effect for unit i and express the individual causal effect as:

Notice that at the level of the individual, a causal effect is a contrast between treatments one of which is excluded by the other at any given time. That individual causal effects cannot be identified from observations is known as ‘the fundamental problem of causal inference’ (Holland, Reference Holland1986; Rubin, Reference Rubin1976).

Identifying causal effects using randomised experiments

Although it is not typically feasible to compute individual causal effects, under certain assumptions, it may be possible to estimate average treatment effects, also called ‘marginal effects’ by contrasting the outcomes of observed treatments among individuals who have been randomly assigned, perhaps conditional on measured covariates, to the treatment conditions that investigators wish to compare. We define an average treatment effect (ATE) as the difference between the expected or average outcomes observed under treatment where treatment has been randomly assigned, perhaps conditionally, on measured covariates. Consider a binary treatment,A ∈ {0, 1}. We write the average treatment effect as a contrast in the expected means of a population all of whose members are exposed to two levels of treatment:

This is our pre-specified estimand for our target population. Note that a challenge remains in computing these treatment-group averages, given that individual causal effects are unobservable: each treatment to be compared is not administered to every member of the population from which a sample is drawn. We can frame the problem by referring to the full data required to compute this estimand – that is, in terms of the complete counterfactual dataset where the missing potential outcomes, inherent in observational data, were somehow available for everyone in the target population. Suppose that 50% of the sample is randomly assigned to each treatment condition. We find that for each treatment condition, half the observations over the joint distribution of the counterfactual data are inherently unobservable:

Although the fundamental problem of causal inference remains at the individual level, randomisation allows investigators to recover treatment group averages. When investigators randomise units into treatment conditions, ensuring full adherence and a sufficiently large sample to rule out chance differences in group composition, we can generally attribute differences in treatment group averages to the treatments themselves. That is, randomisation implies:

and

If we assume:

and

it follows that the average treatment effect of a randomised experiment can be computed:

It is evident that we do not require the joint distribution over the full data (i.e. the counterfactual data) to obtain these averages. Rather, randomisation allows us to obtain a contrast of averages (or equivalently the average of contrasts) from the observed data.

Consider how randomised experiments enable average treatment effect estimation.

First, we must specify a population for whom we seek to generalise our results. We refer to this population as the target population. If the study population differs from the target population in the distribution of covariates that interact with the treatment, we will have no guarantees that our results will generalise (for discussions of sample/target population mismatch, refer to Imai et al. (Reference Imai, King and Stuart2008), Westreich et al. (Reference Westreich, Edwards, Lesko, Stuart and Cole2017, Reference Westreich, Edwards, Lesko, Cole and Stuart2019), Pearl and Bareinboim (Reference Pearl and Bareinboim2022), Bareinboim and Pearl (Reference Bareinboim and Pearl2013), Stuart et al. (Reference Stuart, Ackerman and Westreich2018) and Webster-Clark and Breskin (Reference Webster-Clark and Breskin2021)).

Second, because the units in the study sample at randomisation may differ from the units in the study after randomisation, we must be careful to avoid biases that arise from sample/population mismatch over time (Bulbulia, Reference Bulbulia2024c; Hernán et al., Reference Hernán, Hernández-Díaz and Robins2004). If there is sample attrition or non-response, the treatment effect we obtain for the sample may differ from the treatment effect in the target population.

Third, a randomised experiment recovers the causal effect of random treatment assignment, not of the treatment itself. The effect of randomisation may differ from the effect of treatment if some participants do not adhere to the treatment to which they have been assigned (Hernán et al., Reference Hernán2017). The effect of randomised assignment is called the ‘intent-to-treat effect’ or equivalently the ‘intention-to-treat effect’. The effect of perfect adherence is called the ‘per-protocol effect’ (Hernán et al., Reference Hernán2017; Lash et al., Reference Lash, Rothman, VanderWeele and Haneuse2020). To obtain the per-protocol effect for randomised experiments, methods for causal inference in observational settings must be applied (Bulbulia, Reference Bulbulia2024d; Hernán et al., Reference Hernán2017).

Fourth, I have presented the average treatment effect on the additive scale, that is, as an additive difference in average potential outcomes for the target population under two distinct levels of treatment. However, depending on the scientific question at hand, investigators may wish to estimate causal effects on the risk-ratio scale, the rate-ratio scale, the hazard-ratio scale or another scale. Where there are interactions such that treatment effects vary across different strata of the population, an estimate of the causal effect on the risk difference scale will differ in at least one stratum to be compared from the estimate on the risk ratio scale (Greenland, Reference Greenland2003; VanderWeele, Reference VanderWeele2012). The sensitivity of treatment effects in the presence of interactions to the scale of contrast underscores the importance of pre-specifying a scale for the causal contrast investigators hope to obtain.

Fifth, investigators may unintentionally spoil randomisation by adjusting for indicators that might be affected by the treatment, outcome or both, by excluding participants using attention checks, by collecting covariate data that might be affected by the experimental conditions, by failing to account for non-response and loss-to-follow-up, and by committing any number of other self-inflicted injuries (Bulbulia, Reference Bulbulia2024d). Unfortunately, such practices of self-inflicted injury are widespread (Montgomery et al., Reference Montgomery, Nyhan and Torres2018). Notably, causal directed acyclic graphs are useful for describing risks to valid causal identification in experiments (refer to Hernán et al., Reference Hernán2017), a topic that I consider elsewhere (Bulbulia, Reference Bulbulia2024d).

In observational studies, investigators might wish to describe the target population of interest as a restriction of the study sample population. For example, investigators might wish to estimate the average treatment effect only in the population that received the treatment (Greifer et al., Reference Greifer, Worthington, Iacus and King2023; Greifer, Reference Greifer2023). This treatment effect is sometimes called the average treatment effect in the treated (ATT) and may be expressed as:

Consider that if investigators are interested in the average treatment effect in the treated, counterfactual comparisons are deliberately restricted to the sample population that was treated. Here, investigators seek to obtain the average of the missing counterfactual outcomes for the treated population had they not been treated, without also obtaining the missing counterfactual outcomes for the untreated population had they been treated. Identifying causal effects in a restricted population may imply different causal assumptions and analytic workflows. Supplementary materials S2 describe an example for which the assumptions required to estimate the average treatment effect in the treated might be preferred. Here, we use the term ATE as a placeholder to mean the average treatment effect, or equivalently the ‘marginal effect’, for a target population on a pre-specified scale of causal contrast, where we assume that this effect estimate pertains to the source population from which the analytic sample was randomly drawn (under the assumption of random sampling, refer to Dahabreh et al., Reference Dahabreh, Robins, Haneuse and Hernán2019; Dahabreh & Hernán, Reference Dahabreh and Hernán2019).

Setting aside the important detail that the ‘average treatment effect’ requires considerable care in its specification, it is worth pausing to marvel at how an ideally conducted randomised controlled experiment provides a means for identifying inherently unobservable counterfactuals. It does so by using a Sherlock Holmes method of inference by elimination of confounders, which randomisation balances across treatments.

When experimenters observe a difference in average treatment effects, and all else goes right, they may infer that the distribution of potential outcomes differs by treatment because randomisation exhausts every other explanation. Again, if the experiment is conducted properly, experimenters are entitled to this inference because randomisation balances the distribution of potential confounders across the treatment groups to be compared.

However, when treatment assignments have not been randomised, we typically lack guarantees that the variables that bias causal associations are balanced across treatment conditions. Unfortunately, randomised experiments are impractical for addressing many scientifically important questions. This bitter constraint is familiar to evolutionary human scientists. We often confront ‘What if?’ questions that are rooted in the unidirectional nature of human history. However, understanding how randomisation obtains the missing counterfactual outcomes that we require to consistently estimate average treatment effects clarifies the tasks of causal inference in non-experimental settings (Hernán et al., Reference Hernán, Alonso, Logan, Grodstein, Michels, Willett and Robins2008a, Reference Hernán, Wang and Leaf2022; Hernán & Robins, Reference Hernán and Robins2006a): we want to ensure balance in the variables that might affect outcomes under treatment in the treatment groups to be compared.

Next, we examine basic causal identification assumptions in greater detail. We do so because using causal diagrams without understanding these assumptions may lead to unwarranted false confidence.

Fundamental assumptions required for causal inference in the potential outcomes framework

Three fundamental identification assumptions must be satisfied to consistently estimate causal effects from data. These assumptions are typically satisfied in properly executed randomised controlled trials but not in real-world studies where randomised treatment assignment is absent.

Assumption 1: causal consistency

We satisfy the causal consistency assumption when, for each unit i in the set {1, 2, …, n}, the observed outcome corresponds to one of the specific counterfactual outcomes to be compared such that:

The causal consistency assumption implies that the observed outcome at the specific treatment level that an individual receives equates to that individual's counterfactual outcome at the observed treatment level. Although this assumption would appear straightforward, outside ideally controlled randomised experiments, treatment conditions typically vary, and treatment heterogeneity poses considerable challenges to satisfying this assumption. Refer to supplementary materials S3 for further discussion of how investigators may satisfy the causal consistency assumption in real-world settings.

Assumption 2: positivity

We satisfy the positivity assumption if there is a non-zero probability of receiving each treatment level within each stratum of covariate required to ensure conditional exchangeability of treatments (assumption 3). Where A is the treatment and L is a vector of covariates sufficient to ensure no unmeasured confounding, we say that positivity is achieved if:

There are two types of positivity violation:

1. Random non-positivity – when a treatment is theoretically possible but specific treatment levels are not represented in the data, random non-positivity is the only identifiability assumption verifiable with data.

2. Deterministic non-positivity – when the treatment is implausible by nature, such as a hysterectomy in biological males.

Satisfying the positivity assumption can present considerable data challenges (Bulbulia et al., Reference Bulbulia, Afzali, Yogeeswaran and Sibley2023; Westreich & Cole, Reference Westreich and Cole2010). For instance, if we wanted to estimate a one-year causal effect of weekly religious service attendance on charitable donations, controlling for baseline attendance, and the natural transition rate to weekly service attendance is low, the effective sample size for the treatment condition may be insufficient. Where the positivity assumption is violated, causal diagrams will be of limited utility because observations will not support valid causal inferences even in the absence of confounding biases. Supplementary materials S2 presents a worked example illustrating this difficulty in a cultural evolutionary context.)

Assumption 3: conditional exchangeability (also ‘no unmeasured confounding’, ‘conditional ignorability’, ‘d-separation’)

We satisfy the conditional exchangeability assumption if the treatment groups are conditionally balanced in the variables that could affect the potential outcomes. In experimental designs, random assignment facilitates this assumption. In observational studies effort is required to control for any covariate that could account for observed correlations between A and Y without a causal effect of A on Y.

Let $\coprod$![]() denote independence, and let L denote the set of covariates necessary to ensure this conditional independence. Conditional exchangeability is satisfied when:

denote independence, and let L denote the set of covariates necessary to ensure this conditional independence. Conditional exchangeability is satisfied when:

If we assume that the positivity and consistency assumptions also hold, we may compute the ATE on the difference scale:

In randomised controlled experiments, exchangeability is unconditional. We would only adjust our statistical model by interacting the treatment with pre-treatment variables to improve efficiency (Lin, Reference Lin2013) or diminish threats to valid randomisation from chance imbalances (Hernán & Robins, Reference Hernán and Robins2024). However, it would be confusing to think of such an adjustment as ‘control’.

In real-world observational studies, where measured covariates are sufficient to ensure conditional exchangeability across the treatment groups to be compared – also called, ‘no unmeasured confounding’ or ‘ignorability’ – we may obtain valid estimates for an average treatment effect by conditioning on the densities of measured confounders by treatment group. Where A = a and A = a* are the treatment levels we seek to contrast:By causal consistency, we obtain:

For continuous covariates L, we have:

We may now state the primary function of a causal DAG, which is to identify sources of bias that may lead to an association between an exposure and outcome in the absence of causation. Causal DAGs visually encode features of a causal order necessary to evaluate the assumptions of conditional exchangeability, or equivalently of ‘no-unmeasured confounding’, or equivalently of ‘ignorability’ – or equivalently of ‘d-separation’ (explained next). Although causal directed acyclic graphs may be useful for addressing other biases such as measurement error and target-population restriction bias (also called ‘selection bias’) (Bulbulia, Reference Bulbulia2024c; Hernán & Robins, Reference Hernán and Robins2024), it is important to understand that causal directed acyclic graphs are specifically designed to evaluate the assumptions of conditional exchangeability or ‘d-separation’; any other use is strictly ‘off-label’.

Finally, it is important to emphasise that without randomisation, we typically cannot ensure that there is no-unmeasured confounding (Greifer et al., Reference Greifer, Worthington, Iacus and King2023; Stuart et al., Reference Stuart, Bradshaw and Leaf2015). For this reason, causal data science workflows typically include sensitivity analyses to determine how much unmeasured confounding would be required to compromise a study's findings (VanderWeele & Ding, Reference VanderWeele and Ding2017). Moreover, even if investigators do not represent unmeasured common causes of treatment and exposure in the causal DAGs they craft for observational studies, we should assume there are umeasured common causes and plan sensitivity analyses.

Summary of Part 1

Causal data science is distinct from ordinary data science. The initial step involves formulating a precise causal question that clearly defines a treatment or sequence of treatments, the outcome or outcomes to be contrasted under treatment, and a population of interest called the target population. We must then satisfy the three fundamental assumptions required for causal inference, assumptions that are implicit in the ideal of a randomised controlled experiment: causal consistency – outcomes at the treatment levels to be compared must align with their counterfactual counterparts; positivity – each treatment must have a non-zero probability across all covariates; and conditional exchangeability – there should be no unmeasured confounding, meaning treatment assignment is ignorable conditional on measured confounders, or equivalently, that treatment groups are conditionally exchangeable.

Part 2: how causal directed acyclic graphs clarify the conditional exchangeability assumption

Next, I will introduce causal DAGs. I will start by explaining the meaning of the symbols used. Table 1 summarises our terminology and conventions. Refer to supplementary materials S1 for a glossary of common causal inference terms.

Table 1. Variable naming conventions

Variable naming conventions

• X denotes a random variable without reference to its role.

• A denotes the ‘treatment’ or ‘exposure’ – a random variable. This is the variable for which we seek to understand the effect of intervening on it. It is the ‘cause’.

• A = a denotes a fixed ‘treatment’ or ‘exposure’. The random variable A is set to level A = a.

• Y denotes the outcome or response of an intervention. It is the ‘effect’.

• Y(a) denotes the counterfactual or potential state of Y in response to setting the level of the treatment to a specific level, A = a. The outcome Y is as would be observed when, perhaps contrary to fact, treatment A is set to level A = a. Different conventions exist for expressing a potential or counterfactual outcome, such as Y a,Y a.

• L denotes a measured confounder or set of confounders. This set, if conditioned upon, ensures that any differences between the potential outcomes under different levels of the treatment are the result of the treatment and not the result of a common cause of the treatment and the outcome. Mathematically, we write this independence:

• U denotes an unmeasured confounder or confounders. U is a variable or set of variables that may affect both the treatment and the outcome, leading to an association in the absence of causality, even after conditioning on measured covariates:

$$Y ( a ) \cancel{\coprod} A{ \vert }L\quad {\rm [ because\;of\;unmeasured\;}U] $$

• F denotes a modifier of the treatment effect. F alters the magnitude or direction of the effect of treatment A on an outcome Y.

• M denotes a mediator, a variable that transmits the effect of treatment A on an outcome Y.

• $\bar{X}$

denotes a sequence of variables, for example, a sequence of treatments.

denotes a sequence of variables, for example, a sequence of treatments.• ${\sc R}$

denotes a randomisation to treatment condition.

denotes a randomisation to treatment condition.• ${\sc G}$

denotes a graph, here, a causal directed acyclic graph.

denotes a graph, here, a causal directed acyclic graph.

Note that investigators use a variety of different symbols. There is no unique right way to create a causal directed acyclic graph, except that the meaning must be clear and the graph must be capable of identifying relationships of conditional and unconditional independence between the treatment and outcome. Although directed acyclic graphs are accessible tools, general graphical models such as ‘Single World Intervention Graphs’, which allow for the explicit representation of counterfactual dependencies, may be preferable for investigators to estimate causal effects under multiple interventions (Bulbulia, Reference Bulbulia2024b; Richardson & Robins, Reference Richardson and Robins2013a).

Conventions we use in this article to create causal directed acyclic graphs

The conventions we use to describe components of our causal graphs are given in Table 2.

• Node – a node or vertex represents characteristics or features of units within a population on a causal diagram – that is a ‘variable’. In causal directed acyclic graphs, we draw nodes with respect to the target population, which is the population for whom investigators seek causal inferences (Suzuki et al., Reference Suzuki, Shinozaki and Yamamoto2020). Time-indexed node X t denotes relative chronology; X ϕt is our convention for indicating that timing is assumed, perhaps erroneously.

• Edge without an arrow

– path of association, causality not asserted.

– path of association, causality not asserted.• Red edge without an arrow (

) – confounding path, ignores arrows to clarify statistical dependencies.

) – confounding path, ignores arrows to clarify statistical dependencies.• Arrow (

) – denotes causal relationship from the node at the base of the arrow (a parent) to the node at the tip of the arrow (a child). We typically refrain from drawing an arrow from treatment to outcome to avoid asserting a causal path from A to Y because the function of a causal directed acyclic graph is to evaluate whether causality can be identified for this path.

) – denotes causal relationship from the node at the base of the arrow (a parent) to the node at the tip of the arrow (a child). We typically refrain from drawing an arrow from treatment to outcome to avoid asserting a causal path from A to Y because the function of a causal directed acyclic graph is to evaluate whether causality can be identified for this path.• Red arrow (

) – path of non-causal association between the treatment and outcome.

) – path of non-causal association between the treatment and outcome.• Dashed arrow (

) – denotes a true association between the treatment and outcome that becomes partially obscured when conditioning on a mediator, assuming A causes Y.

) – denotes a true association between the treatment and outcome that becomes partially obscured when conditioning on a mediator, assuming A causes Y.• Dashed red arrow (

) – highlights over-conditioning bias from conditioning on a mediator.

) – highlights over-conditioning bias from conditioning on a mediator.• Open blue arrow (

) – highlights effect modification, occurring when the treatment effect levels vary within covariate levels. We do not assess the causal effect of the effect

) – highlights effect modification, occurring when the treatment effect levels vary within covariate levels. We do not assess the causal effect of the effect  modifier on the outcome, recognising that intervening on the effect modifier may be incoherent. This is an off-label convention we use to clarify our interest in effect modification within strata of a covariate when there is a true treatment effect. However, it is possible to replace these open blue arrows with ordinary nodes and explain that the edges are drawn not for identification but for evaluating generalisations (see Bulbulia, Reference Bulbulia2024b).

modifier on the outcome, recognising that intervening on the effect modifier may be incoherent. This is an off-label convention we use to clarify our interest in effect modification within strata of a covariate when there is a true treatment effect. However, it is possible to replace these open blue arrows with ordinary nodes and explain that the edges are drawn not for identification but for evaluating generalisations (see Bulbulia, Reference Bulbulia2024b).• Boxed variable

– conditioning or adjustment for X.

– conditioning or adjustment for X.• Red-boxed variable

– highlights the source of confounding bias from adjustment.

– highlights the source of confounding bias from adjustment.• Dashed circle

– no adjustment is made for a variable (implied for unmeasured confounders.)

– no adjustment is made for a variable (implied for unmeasured confounders.)•

randomisation, for example, randomisation into treatment:

randomisation, for example, randomisation into treatment:  .

.• Presenting temporal order – causal directed acyclic graphs must be – as truth in advertising implies – acyclic. Directed edges or arrows define ancestral relations. No descendant node can cause an ancestor node. Therefore causal diagrams are, by default, sequentially ordered.

Table 2. Nodes, edges, conditioning conventions

Nevertheless, to make our causal graphs more readable, we adopt the following conventions:

1. The layout of a causal diagram is structured from left to right to reflect the assumed sequence of causality as it unfolds.

2. We often index our nodes using X t to indicate their relative timing and chronological order, where t represents the time point or sequence in the timeline of events.

3. Where temporal order is uncertain or unknown, we use the notation X ϕt to propose a temporal order that is uncertain.

Typically, the timing of unmeasured confounders is unknown, except that they occur before the treatments of interest; hence, we place confounders to the left of the treatments and outcomes they are assumed to affect, but without any time indexing.

Again, temporal order is implied by the relationship of nodes and edges. However, explicitly representing the order in the layout of one's causal graph often makes it easier to evaluate, and the convention representing uncertainty is useful, particularly when the data do not ensure the relative timing of the occurrence of the variable in a causal graph.

More generally, investigators use various conventions to convey causal structures on graphs. Whichever convention we adopt must be clear.

Finally, note that all nodes and paths on causal graphs – including the absence of nodes and paths – are asserted. Constructing causal diagrams requires expert judgment of the scientific system under investigation. It is a great power given to those who construct causal graphs, and with great power comes great responsibility to be transparent. When investigators are unclear or there is debate about which graphical model fits reality, they should present multiple causal graphs. Where identification is possible in several candidate causal graphs, they should perform and report multiple analyses.

How causal directed acyclic graphs relate observations to counterfactual interventions

Ancestral relations in directed acyclic graphs

We define the relation of ‘parent’ and ‘child’ on a directed acyclic graph as follows:

1. Node A is a parent of node B if there is a directed edge from A to B, denoted A → B.

2. Node B is a child of node A if there is a directed edge from A to B, denoted A → B.

It follows that a parent and child are adjacent nodes connected by a directed edge.

We denote the set of all parents of a node B as pa(B).

In a directed acyclic graph, the directed edge A → B indicates a statistical dependency where A may provide information about B. In a causal directed acyclic graph, the directed edge A → B is interpreted as a causal relationship, meaning A is a direct cause of B.

We further define the relations of ancestor and descendant on a directed acyclic graph as follows:

1. Node A is an ancestor of node C if there exists a directed path from A to C. Formally, A is an ancestor of C if there exists a sequence of adjacent nodes (A, B 1, B 2, …, B t, C) such that A → B 1 → B 2 → ⋅ ⋅ ⋅ → B t → C.

2. Node C is a descendant of node A if there exists a directed path from A to C. Formally, C is a descendant of A if there exists a sequence of adjacent nodes (A, B 1, B 2, …, B t, C) such that A → B 1 → B 2 → ⋅ ⋅ ⋅ → B t → C.

It follows that a node can have multiple ancestors and multiple descendants.

Markov factorisation and the local Markov assumption

Pearl (Reference Pearl2009: 52) asks us to imagine the following. Suppose we have a distribution P defined on n discrete variables,X 1, X 2, …, X n. By the chain rule, the joint distribution for variables X 1, X 2, …, X n on a graph can be decomposed into the product of n conditional distributions such that we may obtain the following factorisation:

We translate nodes and edges on a graph into a set of conditional independences that a graph implies over statistical distributions.

According to the local Markov assumption, given its parents in a directed acyclic graph, a node is said to be independent of all its non-descendants. Under this assumption, we obtain what Pearl calls Bayesian network factorisation, such that:

This factorisation greatly simplifies the calculation of joint distributions encoded in a directed acyclic graph (whether causal or non-causal) by reducing the complex factorisation of conditional distributions in ${\cal P}$![]() to simpler conditional distributions involving the parent set PAj, as represented by the structural components of the graph (Lauritzen et al., Reference Lauritzen, Dawid, Larsen and Leimer1990; Pearl, Reference Pearl1988, Reference Pearl1995, Reference Pearl2009).

to simpler conditional distributions involving the parent set PAj, as represented by the structural components of the graph (Lauritzen et al., Reference Lauritzen, Dawid, Larsen and Leimer1990; Pearl, Reference Pearl1988, Reference Pearl1995, Reference Pearl2009).

Minimality assumption

The minimality assumption combines (a) the local Markov assumption with (b) the assumption that adjacent nodes on the graph are dependent. The minimality assumption asserts that the DAG is minimal with respect to the set of conditional independencies it encodes. This means no edges can be removed from the graph without altering the set of implied conditional independencies. It ensures that all adjacent nodes are dependent, and the graph does not include any unnecessary edges (Neal, Reference Neal2020).

Causal edge assumption

The causal edges assumption states that every parent is a direct cause of their children. Given the minimality assumption, the causal edges assumption allows us to interpret the conditional dependence between variables on a graph based on the causal relationships encoded by the arrangement of nodes and edges (Neal, Reference Neal2020).

Compatibility assumption

The compatibility assumption ensures that the joint distribution of variables aligns with the conditional independencies implied by the causal graph. This assumption requires that the probabilistic model conforms to the graph's structural assumptions. Demonstrating compatibility directly from data is challenging, as it involves verifying that all conditional independencies specified by the causal DAG are present in the data. Therefore, we typically assume compatibility rather than attempt to empirically prove it (Pearl, Reference Pearl2009).

Faithfulness

A causal diagram is considered faithful to a given set of data if all the conditional independencies present in the data are accurately depicted in the graph. Conversely, the graph is faithful if every dependency implied by the graph's structure can be observed in the data (Hernán & Robins, Reference Hernán and Robins2024). Faithfulness ensures that the graphical representation of relationships between variables accords with empirical evidence (Pearl, Reference Pearl2009).

We may distinguish between weak faithfulness and strong faithfulness:

• Weak faithfulness allows for the possibility that some observed independencies might occur because specific parameter values cause cancellations. It acknowledges that some conditional independencies in the data may not be reflected in the graph’s structure because they result from exact numerical coincidences.

• Strong faithfulness requires that all and only the conditional independencies that hold in the data are exactly those implied by the graph via its d-separation properties. It rules out the possibility of independencies arising from exact cancellations in the parameters.

The faithfulness assumption, whether weak or strong, is not directly testable from observed data (Pearl, Reference Pearl2009).

d-Separation

In a causal diagram, a path is ‘blocked’ or ‘d-separated’ if a node along it interrupts causation. Two variables are d-separated if all paths connecting them are blocked, making them conditionally independent. Conversely, unblocked paths result in ‘d-connected’ variables, implying potential dependence (Pearl, Reference Pearl1995, Reference Pearl2009). (Note that ‘d’ stands for ‘directional’, emphasising that the separation considers the directionality of edges. This is crucial because the concept relies on the direction of the arrows in the DAG to determine independence.)

The rules of d-separation are as follows:

1. Fork rule

–B and C are independent when conditioning on A ($B\coprod C{\rm \mid }A$

–B and C are independent when conditioning on A ($B\coprod C{\rm \mid }A$ ).

).2. Chain rule

– conditioning on B blocks the path between A and C ($A\coprod C{\rm \mid }B$

– conditioning on B blocks the path between A and C ($A\coprod C{\rm \mid }B$ ).

).3. Collider rule

–A and B are marginally independent. However, conditioning on C or any of its descendants introduces a dependence between A and B such that ($A\, \canceli{\coprod} B{\rm \mid }C$

–A and B are marginally independent. However, conditioning on C or any of its descendants introduces a dependence between A and B such that ($A\, \canceli{\coprod} B{\rm \mid }C$ ).

).

Judea Pearl proved d-separation in the 1990s (Pearl, Reference Pearl1995, Reference Pearl2009).

It follows from d-separation that:

1. An open path (no variables conditioned on) is blocked only if two arrows point to the same node: A → C ← B. The node of common effect (here C) is called a collider.

2. Conditioning on a collider does not block a path; thus,

can lead to an association between A and B in the absence of causation.

can lead to an association between A and B in the absence of causation.3. Conditioning on a descendant of a collider opens a path; for example if

, then

, then  is open.

is open.4. If a path does not contain a collider, any variable conditioned along the path blocks it; thus,

blocks the path from A to C (Hernán & Robins, Reference Hernán and Robins2024: 78; Pearl, Reference Pearl2009). Thus, in paths without colliders, conditioning on any node along the path blocks the path. In paths with colliders, conditioning on the collider or its descendants unblocks the path.

blocks the path from A to C (Hernán & Robins, Reference Hernán and Robins2024: 78; Pearl, Reference Pearl2009). Thus, in paths without colliders, conditioning on any node along the path blocks the path. In paths with colliders, conditioning on the collider or its descendants unblocks the path.

Backdoor adjustment

From d-separation, Pearl was able to define a general identification algorithm for causal identification, called the ‘backdoor adjustment theorem’ (Pearl, Reference Pearl2009).

Let us shift to the general notation that we will use in the following examples. Where A denotes the treatment, Y denotes the outcome and L denotes a set (or subset) of measured covariates. In a causal directed acyclic graph (causal DAG), we say that a set of variables L satisfies the backdoor adjustment theorem relative to the treatment A and the outcome Y if L blocks every path between A and Y that contains an arrow pointing into A (a backdoor path). Formally, L must satisfy two conditions:

1. No element of L is a descendant of A.

2. L blocks all backdoor paths from A to Y (there are no unmeasured confounders affecting both A and Y other than L.)

If L satisfies these conditions, the causal effect of A on Y can be estimated by conditioning on  (Pearl, Reference Pearl2009).

(Pearl, Reference Pearl2009).

Front door path criterion

Pearl also proves a ‘front-door adjustment’ criterion, which is rarely used in practice but is worth understanding for its conceptual value. The front-door criterion is useful when we cannot estimate the causal effect of A on Y and there is unmeasured confounding by U. Suppose further, that there is a mediator, M, that fully mediates the effect of A on Y. If A → M is unconfounded and M → Y is unconfounded, A → Y may be identified by estimating the separate identifiable paths through M. The front-door criterion is not widely used because requires measuring an appropriate mediator that fully captures the causal effect. However, understanding the front-door adjustment helps develop intuition for how estimating causal effects may be possible when there is unmeasured confounding (Pearl, Reference Pearl2009).

Pearl's structural causal models

In the potential outcomes framework, we represent interventions by setting variables to specific levels, e.g. setting the treatment to a specific value $A = \tilde{a}$![]() . We have noted that counterfactual outcomes are conceived as the outcomes that would occur if, perhaps contrary to fact, an individual's treatment was set to a specific level. We use the convention Y i(a) or equivalently to denote the counterfactual or ‘potential’ outcome for individual i when that individual's treatment is set to A i = a. Because we assume individual treatments to be independent and identically distributed (i.i.d.), we drop the subscripts when describing the potential outcomes for multiple individuals under specific levels of treatment. We denote the average of the potential outcomes as follows:

. We have noted that counterfactual outcomes are conceived as the outcomes that would occur if, perhaps contrary to fact, an individual's treatment was set to a specific level. We use the convention Y i(a) or equivalently to denote the counterfactual or ‘potential’ outcome for individual i when that individual's treatment is set to A i = a. Because we assume individual treatments to be independent and identically distributed (i.i.d.), we drop the subscripts when describing the potential outcomes for multiple individuals under specific levels of treatment. We denote the average of the potential outcomes as follows:

As noted above, we say that conditional exchangeability is satisfied if the potential outcomes are independent of the treatment assignment conditional on the measured covariates:

It is worth considering that causal directed acyclic graphs do not directly represent counterfactual outcomes. Instead, they evaluate whether causality can be identified from hypothetical interventions on the variables represented in a graph. Formally, causal directed acyclic graphs rely on Judea Pearl's do-calculus (Pearl, Reference Pearl2009), which relies on the concept of an ‘interventional distribution’. Under Pearl's do-calculus, any node in a graph can be intervened upon. Nodes and edges in a causal diagram correspond to non-parametric structural equations or what Pearl calls ‘structural causal models’ (Pearl, Reference Pearl2009). Note that non-parametric structural equations are causal-structural models. They are fundamentally different from statistical structural equation models that are employed in many human sciences. Please do not confuse non-parametric structural equation models with statistical structural equation models (VanderWeele, Reference VanderWeele2015). In a causal directed acyclic graph, non-parametric structural equations represent the underlying causal mechanisms without making specific parametric assumptions about the functional forms of relationships. It is important to note that non-parametric structural equation models, also known as structural causal models, are mathematical representations of the causal relationships between variables in a system. These equations describe the functional relationships between variables without specifying the particular functional form or the probability distributions of the variables. In contrast, statistical structural equation models, commonly used in the social sciences and psychology, make specific assumptions about the functional form of the relationships (e.g. linear, polynomial or exponential) and the probability distributions of the variables (e.g. normal, Poisson or binomial). Statistical structural equation models model observed data. Non-parametric structural equations state the assumed causal structure of the system – we do not use non-parametric structural equation models to do statistics. When we employ statistical structural equation models or any other statistical model, we must first state the assumed functional relationships that we maintain (under expert advice) hold for the data. We must do so without making assumptions about the functional form of the statistical model we will eventually employ – statistics come later, only after we have evaluated whether the causal effect we seek may be identified with data. Pearl's do-calculus and the rules of d-separation are based on non-parametric structural equations, which provide a flexible and generalisable framework for causal inference (Pearl, Reference Pearl2009).

Pearl's structural causal models work as follows.

Let L denote the common causes of treatment A and outcome Y:

• The node L in the corresponding DAG G corresponds to the non-parametric structural equation:L = f L(U L), where f L is an unspecified function and U L represents the exogenous error term or unmeasured factors affecting L.

• The treatment node A in G is associated with the non-parametric structural equation: A = f A(L, U A), where f A is an unspecified function, L represents the common causes and U A represents the exogenous error term or unmeasured factors affecting A.

• The outcome node Y in G is associated with the non-parametric structural equation: Y = f Y(A, L, U Y), where f Y is an unspecified function, A represents the treatment, L represents the common causes and U Y represents the exogenous error term or unmeasured factors affecting Y.

In Pearl's formalism, we assume that U L, U A and U Y are independent exogenous random variables. That is, we assume there are no direct arrows linking A to Y except through the common cause node L. Causal diagrams allow us to factorise the joint distribution of L, A and Y as a product of conditional probability distributions.

Define O as a distribution of independent and identically distributed observations such that O = (L, A, Y). The true distribution P O is factorised as:

where P O(L) is the marginal distribution of the covariates L; P O(A|L) is the conditional distribution of the treatment given the covariates; and P O(Y|A, L) is the conditional distribution of the outcome given the treatment and covariates.

Pearl's do-calculus allows us to evaluate the consequences of intervening on variables represented in a causal DAG to interpret probabilistic dependencies and independencies in the conditional and marginal associations presented on a graph.

Here, we have developed counterfactual contrasts using the potential outcomes framework. The potential outcomes framework considers potential outcomes to be fixed and real (even if assigned non-deterministically). Pearl develops counterfactual contrasts using operations on structural functionals, referred to as ‘do-calculus’. In Pearl's framework, we obtain counterfactual inference by assuming that the nodes in a causal directed acyclic graph correspond to a system of structural equation models, such as those we just described.

Mathematically, potential outcomes and counterfactual interventions are equivalent, such that:

where the left-hand side of the equivalence is the potential outcomes framework formalisation of a potential outcome recovered by causal consistency, and the right-hand side is given by Pearl's do-calculus, which, as just mentioned, formalises interventional distributions on nodes of a graph that correspond to structural causal models.

In practice, whether one uses Pearl's do-calculus or the potential outcomes framework to interpret causal inferences is often irrelevant to identification results. However, there are theoretically interesting debates about edge cases. For example, Pearl's structural causal models permit the identification of contrasts that cannot be falsified under any experiment (Richardson & Robins, Reference Richardson and Robins2013a). Because advocates of non-parametric structural equation models treat causality as primitive, they are less concerned with the requirement for falsification (Díaz et al., Reference Díaz, Hejazi, Rudolph and Der Laan2021, Reference Díaz, Williams and Rudolph2023; Pearl, Reference Pearl2009; Rudolph et al., Reference Rudolph, Williams and Diaz2024). Additionally, the potential outcomes framework allows for identification in settings where the error terms in a structural causal model are not independent (Bulbulia, Reference Bulbulia2024b).

I have presented the potential outcomes framework because it is easier to interpret, more general, and – to my mind – clearer and more intellectually compelling (moreover, one does not need to be a verificationist to adopt it). However, for nearly every practical purpose, the do-calculus and ‘po-calculus’ (potential outcomes framework, refer to Shpitser and Tchetgen, Reference Shpitser and Tchetgen2016) are both mathematically and practically equivalent. And remember, the nodes and edges in a causal directed acyclic graph correspond to non-parametric structural equations: these equations represent causal mechanisms without making specific assumptions about the functional form of the assumed causal relationships encoded in the causal DAG. As currently employed, the statistical structural equation models used in human sciences often make implausible (or even incoherent) causal assumptions (Bulbulia, Reference Bulbulia2024b; VanderWeele, Reference VanderWeele2015). It is essential to draw a causal directed acyclic graph (causal DAG) before considering a statistical structural equation model.

The five elementary structures of causality

Table 3 presents five elementary structures of causality from which all causal directed acyclic graphs are built. These elementary structures can be assembled in different combinations to clarify the causal relationships presented in a causal directed acyclic graph.

Table 3. The five elementary structures of causality from which all causal directed acyclic graphs can be built

Key: A directed edge (arrow) denotes causal association. The absence of an arrow denotes no causal association. Rules of d-separation: In a causal diagram, a path is ‘blocked’ or ‘d-separated’ if a node along it interrupts causation. Two variables are d-separated if all paths connecting them are blocked or if there are no paths linking them, making them conditionally independent. Conversely, unblocked paths result in ‘d-connected’ variables, implying statistical association. Refer to Pearl (Reference Pearl1995). Note that ‘d’ stands for ‘directional’.

Implication: ${\cal G}$![]() denotes a causal directed acyclic graph (causal DAG). P denotes a probability distribution function. Pearl proved that independence in a causal DAG $\textstyle{( B{\coprod} C{\rm \mid }A) {\cal G}}$

denotes a causal directed acyclic graph (causal DAG). P denotes a probability distribution function. Pearl proved that independence in a causal DAG $\textstyle{( B{\coprod} C{\rm \mid }A) {\cal G}}$![]() implies probabilistic independence $( B\coprod C{\rm \mid }A) P$

implies probabilistic independence $( B\coprod C{\rm \mid }A) P$![]() ; likewise if $( B\coprod C{\rm \mid }A) P$

; likewise if $( B\coprod C{\rm \mid }A) P$![]() holds in all distributions compatible with ${\cal G}$

holds in all distributions compatible with ${\cal G}$![]() , it follows that $( B\coprod C{\rm \mid }A) {\cal G}$

, it follows that $( B\coprod C{\rm \mid }A) {\cal G}$![]() (refer to Pearl Reference Pearl2009: 61). We read causal graphs to understand the implications of causality for relationships in observable data. However, reading causal structures from data is more challenging because the relationships in observable data are typically compatible with more than one (and typically many) causal graphs.

(refer to Pearl Reference Pearl2009: 61). We read causal graphs to understand the implications of causality for relationships in observable data. However, reading causal structures from data is more challenging because the relationships in observable data are typically compatible with more than one (and typically many) causal graphs.

The five elementary rules for causal identification

Table 4 describes five elementary rules for identifying conditional independence using directed acyclic causal diagrams. There are no shortcuts to reasoning about causality. Each causal question must be asked in the context of a specific scientific question, and each causal graph must be built under the best lights of domain expertise. However, the following five elementary rules for confounding control are implied by the theorems that underpin causal directed acyclic graphs. They may be a useful start for evaluating the prospects for causal identification across a broad range of settings.

Table 4. Five elementary rules for causal identification

Key: A denotes the treatment; Y denotes the outcome; U denotes an unmeasured confounder; L denotes a confounder; ![]() asserts causality; t subscript denotes the true relative timing of the variable; ϕt relative timing is asserted, here erroneously;

asserts causality; t subscript denotes the true relative timing of the variable; ϕt relative timing is asserted, here erroneously; ![]() indicates a path for bias linking A to Y absent causation;

indicates a path for bias linking A to Y absent causation; ![]() indicates a path for bias separating A and Y;

indicates a path for bias separating A and Y; ![]() indicates that conditioning on L introduces bias (over-conditioning bias). We include

indicates that conditioning on L introduces bias (over-conditioning bias). We include ![]() to clarify that we cannot typically be confident that all common causes of the treatment and outcome have been measured. Examples 1 and 3–5 illustrate how bias arises from erroneous variable timing: ϕt ≠ t.

to clarify that we cannot typically be confident that all common causes of the treatment and outcome have been measured. Examples 1 and 3–5 illustrate how bias arises from erroneous variable timing: ϕt ≠ t.

1. Ensure that treatments precede outcomes – this rule is a logical consequence of our assumption that causality follows the arrow of time and that a causal directed acyclic graph is faithful to this ordering. However, the assumption that treatments precede outcomes may be easily violated where investigators cannot ensure the relative timing of events from their data.

Note that this assumption does not raise concerns in settings where past outcomes may affect future treatments. Indeed, an effective strategy for confounding control in such settings is to condition on past outcomes, and where relevant, on past treatments as well. For example, if we wish to identify the causal effect of A 1 on Y 2, and repeated-measures time series data are available, it may be useful to condition such that  . Critically, the relations of variables must be arranged sequentially without cycles.

. Critically, the relations of variables must be arranged sequentially without cycles.

Causal directed acyclic graphs must be acyclic. Yet most processes in nature include feedback loops. However, there is no contradiction as long as we represent these loops as sequential events. To estimate a causal effect of Y on A, we would focus on:  . Departing from conventions we have previously used to label treatments and outcomes, here Y denotes the treatment and A denotes the outcome.

. Departing from conventions we have previously used to label treatments and outcomes, here Y denotes the treatment and A denotes the outcome.

2. Condition on common causes or their proxies – this rule applies to settings in which the treatment A and the outcome Y share common causes. By conditioning on these common causes, we block the open backdoor paths that could introduce bias into our causal estimates. Controlling for these common causes (or their proxies) helps to isolate the specific effect of A on Y. Note that we do not draw a path from A → Y in this context because it represents an interventional distribution. In a causal directed acyclic graph, conditioning does not occur on interventional distributions. We do not box A and Y.

3. Do not condition on a mediator when estimating total effects – this rule applies to settings in which the variable L is a mediator of A → Y. Recall that Pearl's backdoor path criterion requires that we do not condition on a descendant of the treatment. Here, conditioning on L violates the backdoor path criterion, risking bias for a total causal effect estimate. We must not condition on a mediator if we are interested in total effect estimates. Note we draw the path from A → Y to underscore that this specific overconditioning threat occurs in the presence of a true treatment effect. Over-conditioning bias can operate in the absence of a true treatment effect. This is important because conditioning on a mediator might create associations without causation. In many settings, ensuring accuracy in the relative timing of events in our data will prevent the self-inflicted injury of conditioning on a common effect of the treatment.

4. Do not condition on a collider – this rule applies to settings in which L is a common effect of A and Y. Conditioning on a collider may invoke a spurious association. Again, the backdoor path criterion requires that we do not condition on a descendant of the treatment. We would not be tempted to condition on L if we knew that it was an effect of A. In many settings, ensuring accuracy in the relative timing of events in our data will prevent the self-inflicted injury of conditioning on a common effect of the treatment and outcome.

5. Proxy rule: conditioning on a descendant is akin to conditioning on its parent – this rule applies to settings where L ′ is an effect from another variable L. The graph considers when L ′ is downstream of a collider. Here again, in many settings, ensuring accuracy in the relative timing of events in our data will prevent the self-inflicted injury of conditioning on a common effect of the treatment and outcome.

Summary Part 2

We use causal directed acyclic graphs to represent and evaluate structural sources of bias. We do not use these causal graphs to represent the entirety of the causal system in which we are interested, but rather only those features necessary to evaluate conditional exchangeability, or equivalently to evaluate d-separation. Moreover, causal directed acyclic graphs should not be confused with the structural equation models employed in the statistical structural equation modelling traditions (refer also to Rohrer et al., Reference Rohrer, Hünermund, Arslan and Elson2022). To repeat, although Pearl's formalism is built upon ‘Non-Parametric Structural Equation Models’, the term ‘Structural Equation Model’ can be misleading. Causal directed acyclic graphs are structural models that represent assumptions about reality, they are not statistical models. We use structural causal models to evaluate identifiability. We create causal graphs before we embark on statistical modelling. They aim to clarify how to write statistical models by elucidating which variables we must include in our statistical models and, equally important, and which variables we must exclude to avoid invalidating our causal inferences. All causal graphs are grounded in our assumptions about the structures of causation. Although it is sometimes possible – under assumptions – to automate causal discovery (Peters et al., Reference Peters, Bühlmann and Meinshausen2016) we cannot fully dispense with assumption because the causal structures of the world are underdetermined by the data (Quine, Reference Quine1981; J. M. Robins, Reference Robins1999).

The distinction between structural and statistical models is fundamental because in the absence of clearly defined causal contrasts on well-defined treatments, well-defined outcomes and well-defined populations, and absent carefully evaluated assumptions about structural sources of bias in the relationship between treatments and outcomes, the statistical structural equation modelling tradition offers no guarantees that the coefficients investigators recover are interpretable. Misunderstanding this difference between structural and statistical models has led to considerable confusion across the human sciences (Bulbulia, Reference Bulbulia2022, Reference Bulbulia2024b; VanderWeele, Reference VanderWeele2015, Reference VanderWeele2022; VanderWeele & Vansteelandt, Reference VanderWeele and Vansteelandt2022).

Part 3: how causal directed acyclic graphs clarify the importance of timing of events recorded in data

As noted in the previous section, the five elementary rules of confounding control reveal the importance of ensuring accurate timing in the occurrence of the variables whose structural features a causal directed acyclic graph encodes. We begin by considering seven examples of confounding problems resolved when accuracy in the timing of the occurrence of variables is ensured. These examples refer to causal graphs in Table 5. We use the symbol G to denote a graph. We use the convention: ${\cal G}_{\{ {\rm row}\} \{ .\} \{ 1 \;= \;{\rm problem}; \;2 \;= \;{\rm solution}\} }$![]() to indicate a causal directed acyclic graph in the table.

to indicate a causal directed acyclic graph in the table.

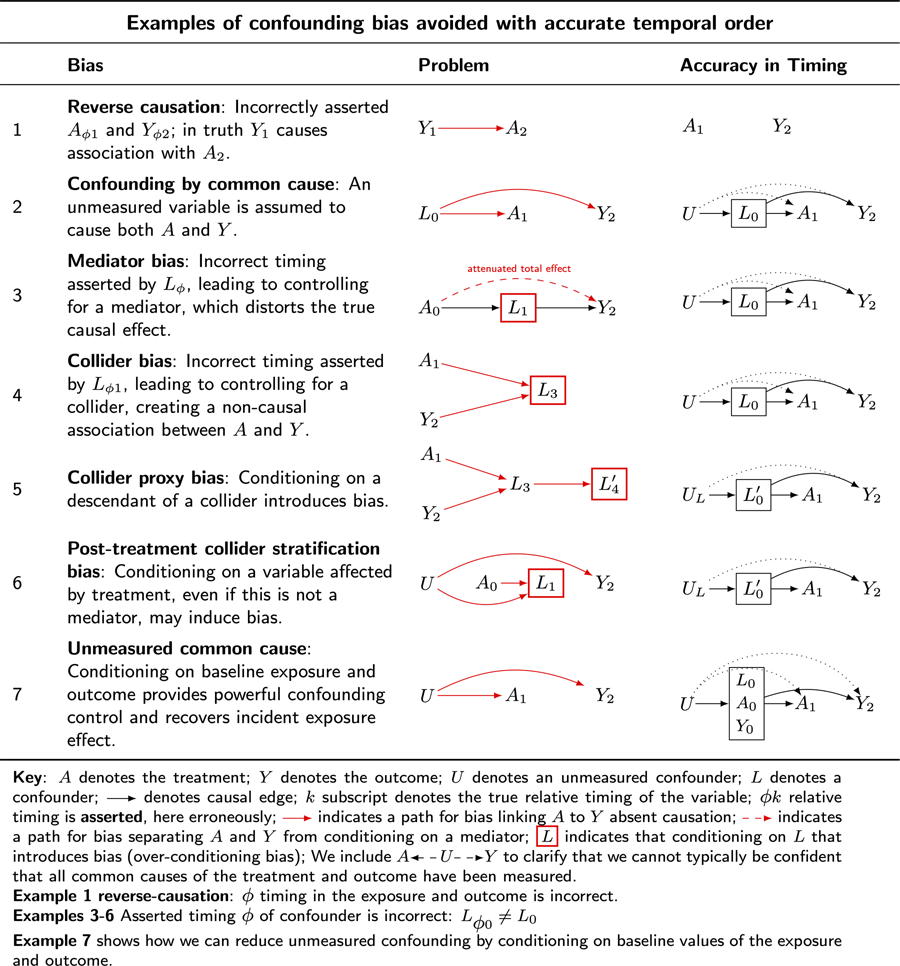

Table 5. Causal DAGs illustrate how ensuring the relative timing of the occurrence of variables of interest addresses common forms of bias when estimating causal effects

Key: A denotes the treatment; Y denotes the outcome; U denotes an unmeasured confounder; L denotes a confounder; ![]() denotes causal edge; k subscript denotes the true relative timing of the variable; ϕk relative timing is asserted, here erroneously;

denotes causal edge; k subscript denotes the true relative timing of the variable; ϕk relative timing is asserted, here erroneously; ![]() indicates a path for bias linking A to Y absent causation;

indicates a path for bias linking A to Y absent causation; ![]() indicates a path for bias separating A and Y from conditioning on a mediator;

indicates a path for bias separating A and Y from conditioning on a mediator; ![]() indicates that conditioning on L that introduces bias (over-conditioning bias). We include

indicates that conditioning on L that introduces bias (over-conditioning bias). We include ![]() to clarify that we cannot typically be confident that all common causes of the treatment and outcome have been measured.

to clarify that we cannot typically be confident that all common causes of the treatment and outcome have been measured.

Example 1, reverse-causation – ϕ timing in the exposure and outcome is incorrect.

Examples 3–6, asserted timing ϕ of confounder is incorrect: L ϕ0 ≠ L 0.

Example 7 shows how we can reduce unmeasured confounding by conditioning on baseline values of the exposure and outcome.

Example 1: reverse causation

Table 5 ${\cal G}_{3.1}$![]() illustrates bias from reverse causation. Suppose we are interested in the causal effect of marriage on well-being. If we observe that married people are happier than unmarried people, we might erroneously infer that marriage causes happiness, or happiness causes marriage (refer to McElreath, Reference McElreath2020).

illustrates bias from reverse causation. Suppose we are interested in the causal effect of marriage on well-being. If we observe that married people are happier than unmarried people, we might erroneously infer that marriage causes happiness, or happiness causes marriage (refer to McElreath, Reference McElreath2020).

Table 5 ${\cal G}_{3.2}$![]() clarifies a response. Ensure that the treatment is observed before the outcome is observed. Note further that the treatment, in this case, is not clearly specified because ‘marriage’ is unclear. There are at least four causal contrasts we might consider when thinking of ‘marriage’, namely:

clarifies a response. Ensure that the treatment is observed before the outcome is observed. Note further that the treatment, in this case, is not clearly specified because ‘marriage’ is unclear. There are at least four causal contrasts we might consider when thinking of ‘marriage’, namely:

Y(0,0) – the potential outcome when there is no marriage.

Y(0,1) – the potential outcome when there is a shift to marriage from no marriage.

Y(1,0) – the potential outcome under divorce.

Y(1,1) – the potential outcome from marriage prevalence.

Each of these four outcomes may be contrasted with the others, yielding six unique contrasts. Which do we wish to consider? ‘What is the causal effect of marriage on happiness?’ is ill-defined. This question does not uniquely state which of the six causal contrasts to consider. The first step in causal inference is to state a well-defined causal question in terms of interventions and outcomes to be compared. For a worked example refer to Bulbulia (Reference Bulbulia2024b).

Example 2: confounding by common cause

Table 5 ${\cal G}_{3.2}$![]() illustrates confounding by common cause. Suppose there is a common cause, L, of the treatment, A, and outcome,Y. In this setting, L may create a statistical association between A and Y, implying causation in its absence. Most human scientists will be familiar with the threat to inference in this setting: a ‘third variable’ leads to a statistical association between treatment and outcome absent causation.

illustrates confounding by common cause. Suppose there is a common cause, L, of the treatment, A, and outcome,Y. In this setting, L may create a statistical association between A and Y, implying causation in its absence. Most human scientists will be familiar with the threat to inference in this setting: a ‘third variable’ leads to a statistical association between treatment and outcome absent causation.

Suppose that smoking, L, is a common cause of both yellow fingers, A, and cancer,Y. Here, A and Y may show an association without causation. If investigators were to scrub the hands of smokers, this would not affect cancer rates.

Table 5 ${\cal G}_{3.2}$![]() clarifies a response. Condition on the common cause, smoking. Within strata of smokers and non-smokers, there will be no association between yellow fingers and cancer.

clarifies a response. Condition on the common cause, smoking. Within strata of smokers and non-smokers, there will be no association between yellow fingers and cancer.

Example 3: mediator bias

Table 5 ${\cal G}_{3.1}$![]() illustrates mediator bias. Conditioning on the effect of treatment blocks the flow of information from treatment to outcome, biasing the total effect estimate.

illustrates mediator bias. Conditioning on the effect of treatment blocks the flow of information from treatment to outcome, biasing the total effect estimate.

Suppose investigators are interested in whether cultural ‘beliefs in big Gods’ A affect social complexity Y. Suppose that ‘economic trade’, L, is both a common cause of the treatment and outcome. To address confounding by a common cause, we must condition on economic trade. However, timing matters. If we condition on measurements that reflect economic trade after the emergence of beliefs in big Gods, we may bias our total effect estimate.

Table 5 ${\cal G}_{3.2}$![]() clarifies a response. Ensure that measurements of economic trade are obtained for cultural histories before big Gods arise. Do not condition on post-treatment instances of economic trade.

clarifies a response. Ensure that measurements of economic trade are obtained for cultural histories before big Gods arise. Do not condition on post-treatment instances of economic trade.

Example 4: collider bias

Table 5 ${\cal G}_{4.1}$![]() illustrates collider bias. Imagine a randomised experiment investigating the effects of different settings on individuals’ self-rated health. In this study, participants are assigned to either civic settings (e.g. community centres) or religious settings (e.g. places of worship). The treatment of interest, A, is the type of setting, and the outcome, Y, is self-rated health. Suppose there is no effect of setting on self-rated health. However, suppose both setting and rated health independently influence a third variable: cooperativeness. Specifically, imagine religious settings encourage cooperative behaviour, and at the same time, individuals with better self-rated health are more likely to engage cooperatively. Now suppose the investigators decide to condition on cooperativeness, which in reality is the common effect of A and the outcome Y. Their rationale might be to study the effects of setting on health among those who are more cooperative or perhaps to ‘control for’ cooperation in the health effects of religious settings. By introducing such ‘control’, the investigators would inadvertently introduce collider bias, because the control variable is a common effect of the treatment and the outcome. If both A and Y are positively associated with L, A and Y will be negatively associated with each other. However, such an association is a statistical artefact. Were we to intervene on A, Y would not change.

illustrates collider bias. Imagine a randomised experiment investigating the effects of different settings on individuals’ self-rated health. In this study, participants are assigned to either civic settings (e.g. community centres) or religious settings (e.g. places of worship). The treatment of interest, A, is the type of setting, and the outcome, Y, is self-rated health. Suppose there is no effect of setting on self-rated health. However, suppose both setting and rated health independently influence a third variable: cooperativeness. Specifically, imagine religious settings encourage cooperative behaviour, and at the same time, individuals with better self-rated health are more likely to engage cooperatively. Now suppose the investigators decide to condition on cooperativeness, which in reality is the common effect of A and the outcome Y. Their rationale might be to study the effects of setting on health among those who are more cooperative or perhaps to ‘control for’ cooperation in the health effects of religious settings. By introducing such ‘control’, the investigators would inadvertently introduce collider bias, because the control variable is a common effect of the treatment and the outcome. If both A and Y are positively associated with L, A and Y will be negatively associated with each other. However, such an association is a statistical artefact. Were we to intervene on A, Y would not change.

Table 5 ${\cal G}_{4.2}$![]() clarifies a response. If the worry is that cooperativeness is a confounder, ensure that cooperativeness is measured before the initiation of exposure to religious settings.

clarifies a response. If the worry is that cooperativeness is a confounder, ensure that cooperativeness is measured before the initiation of exposure to religious settings.

Example 5: collider proxy bias

Table 5 ${\cal G}_{5.1}$![]() illustrates bias from conditioning on the proxy of a collider. Consider again the scenario described in Example 4: collider bias, but instead of controlling for cooperativeness, investigators control for charitable donations, a proxy for cooperativeness. Here, because the control variable is a descendant of a collider, conditioning on the proxy of the collider is akin to conditioning on the collider itself.

illustrates bias from conditioning on the proxy of a collider. Consider again the scenario described in Example 4: collider bias, but instead of controlling for cooperativeness, investigators control for charitable donations, a proxy for cooperativeness. Here, because the control variable is a descendant of a collider, conditioning on the proxy of the collider is akin to conditioning on the collider itself.

Table 5 G 5.2 clarifies a response. Do not condition on charitable donations, an effect of treatment.

Example 6: post-treatment collider stratification bias

Table 5 ${\cal G}_{6.1}$![]() illustrates post-treatment collider stratification bias. Consider again an experiment investigating the effect of religious service on self-rated health. Suppose we measure ‘religiosity’ after the experiment, along with other demographic data. Suppose further that religious setting affects religiosity, as does an unmeasured confounder, such as childhood deprivation. Suppose that childhood deprivation affects self-reported health. Although our experiment ensured randomisation of the treatment and thus ensured no unmeasured common causes of the treatment and outcome, conditioning on the post-treatment variable ‘religiosity’ opens a back-door path from the treatment to the outcome. This path is A 0

illustrates post-treatment collider stratification bias. Consider again an experiment investigating the effect of religious service on self-rated health. Suppose we measure ‘religiosity’ after the experiment, along with other demographic data. Suppose further that religious setting affects religiosity, as does an unmeasured confounder, such as childhood deprivation. Suppose that childhood deprivation affects self-reported health. Although our experiment ensured randomisation of the treatment and thus ensured no unmeasured common causes of the treatment and outcome, conditioning on the post-treatment variable ‘religiosity’ opens a back-door path from the treatment to the outcome. This path is A 0 ![]() L 1

L 1 ![]() U

U ![]() Y 2. We introduced confounding into our randomised experiment.

Y 2. We introduced confounding into our randomised experiment.