1 Introduction

Machine learning and artificial intelligence play a major role in today’s society: self-driving cars (e.g. [Reference Bachute and Subhedar3]), automated medical diagnoses (e.g. [Reference Rajkomar, Dean and Kohane41]) and security systems based on face recognition (e.g. [Reference Sharma, Bhatt and Sharma45]), for instance, are often based on certain machine learning models, such as deep neural networks (DNNs). DNNs often approximate functions that are discontinuous with respect to their input [Reference Szegedy, Zaremba, Sutskever, Bruna, Erhan, Goodfellow and Fergus48] making them susceptible to so-called adversarial attacks. In an adversarial attack, an adversary aims to change the prediction of a DNN through a directed, but small perturbation to the input. We refer to [Reference Goodfellow, Shlens and Szegedy14] for an example showing the weakness of DNNs towards adversarial attacks. Especially when employing DNNs in safety-critical applications, the training of machine learning models in a way that is robust to adversarial attacks has become a vital task.

Machine learning models are usually trained by minimising an associated loss function. In adversarially robust learning, this loss function is considered to be subject to adversarial attacks. The adversarial attack is usually given by a perturbation of the input data that is chosen to maximise the loss function. Thus, adversarial robust learning is formulated as a minmax optimisation problem. In practice, the inner maximisation problem needs to be approximated: [Reference Goodfellow, Shlens and Szegedy14] proposed the fast gradient sign method (FGSM), which perturbs the input data to maximise the loss function with a single step. Improvements of FGSM were proposed by, e.g. [Reference Kurakin, Goodfellow and Bengio25, Reference Tramèr, Kurakin, Papernot, Goodfellow, Boneh and McDaniel51, Reference Wong, Rice and Kolter57]. Another popular methodology is projected gradient descent (PGD) [Reference Madry, Makelov, Schmidt, Tsipras and Vladu32] and its variants, see, for example, [Reference Croce and Hein9, Reference Dong, Liao, Pang, Su, Zhu, Hu and Li10, Reference Maini, Wong, Kolter, H. and Singh33, Reference Mosbach, Andriushchenko, Trost, Hein and Klakow36, Reference Tramer and Boneh50, Reference Yang, Zhang, Katabi and Xu61]. Similar to FGSM, PGD considers the minmax optimisation problem but uses multi-step gradient ascent to approximate the inner maximisation problem. Notably, [Reference Wong, Rice and Kolter57] showed that FGSM with random initialisation is as effective as PGD.

Other defense methods include preprocessing (e.g. [Reference Guo, Rana, Cisse and van der Maaten16, Reference Song, Kim, Nowozin, Ermon and Kushman47, Reference Xu, Evans and Qi59, Reference Yun, Han, Chun, Oh, Yoo and Choe63]) and detection (e.g. [Reference Carlini and Wagner5, Reference Liu, Levine, Lau, Chellappa and Feizi31, Reference Metzen, Genewein, Fischer and Bischoff35, Reference Xu, Xiao, Zheng, Cai and Nevatia58]), as well as provable defenses (e.g. [Reference Gowal, Dvijotham, Stanforth, Bunel, Qin, Uesato, Arandjelovic, Mann and Kohli15, Reference Jia, Qu and Gong21, Reference Sheikholeslami, Lotfi and Kolter46, Reference Wong and Kolter55]). Various attack methods have also been proposed, see, for instance, [Reference Carlini and Wagner6, Reference Croce and Hein9, Reference Ghiasi, Shafahi and Goldstein13, Reference Wong, Schmidt and Kolter56]. More recently, there is an increased focus on using generative models to improve adversarial accuracy, see for example [Reference Nie, Guo, Huang, Xiao, Vahdat and Anandkumar38, Reference Wang, Pang, Du, Lin, Liu and Yan54, Reference Xue, Araujo, Hu and Chen60].

In the present work, we study the case of an adversary that finds their attack following a Bayesian statistical methodology. The Bayesian adversary does not find the attack through optimisation, but by sampling a probability distribution that can be derived using Bayes’ Theorem. Importantly, we study the setting in which the adversary uses a Bayesian strategy, but the machine learner/defender trains the model using optimisation, which is in contrast to [Reference Ye, Zhu, Bengio, Wallach, Larochelle, Grauman, Cesa-Bianchi and Garnett62]. Thus, our ansatz is orthogonal to previous studies of adversarial robustness by assuming that the attacker uses a significantly different technique. On the other hand, the associated Bayesian adversarial robustness problem can be interpreted as a stochastic relaxation of the classical minmax problem that replaces the inner maximisation problem with an integral. Thus, our ansatz should also serve as an alternative way to approach the computationally challenging minmax problem with a sampling based strategy. After establishing these connections, we

-

• propose Abram (short for Adversarial Bayesian Particle Sampler), a particle-based continuous-time dynamical system that simultaneously approximates the behaviour of the Bayesian adversary and trains the model via gradient descent.

Particle systems of this form have been used previously to solve such optimisation problems in the context of maximum marginal likelihood estimation, see, e.g. [Reference Johnston, Crucinio, Akyildiz, Sabanis and Girolami2] and [Reference Kuntz, Lim and Johansen24]. In order to justify the use Abram in this situation, we

-

• show that Abram converges to a McKean–Vlasov stochastic differential equation (SDE) as the number of particles goes to infinity, and

-

• give assumptions under which the McKean–Vlasov SDE converges to the minimiser of the Bayesian adversarial robustness problem with an exponential rate.

Additional complexity arises here compared to earlier work as the dynamical system and its limiting McKean–Vlasov SDE have to be considered under reflecting boundary conditions. After the analysis of the continuous-time system, we briefly explain its discretisation. Then, we

-

• compare Abram to the state of the art in adversarially robust classification of the MNIST and the CIFAR-10 datasets under various kinds of attacks.

This work is organised as follows. We introduce the (Bayesian) adversarial robustness problem in Section 2 and the Abram method in Section 3. We analyse Abram in Sections 4 (large particle limit) and 5 (longtime behaviour). We discuss different ways of employing Abram in practice in Section 6 and compare it to the state of the art in adversarially robust learning in Section 7. We conclude in Section 8.

2 Adversarial robustness and its Bayesian relaxation

In the following, we consider a supervised machine learning problem of the following form. We are given a training dataset

![]() $\{(y_1, z_1),\ldots, (y_K, z_K)\}$

of pairs of features

$\{(y_1, z_1),\ldots, (y_K, z_K)\}$

of pairs of features

![]() $y_1,\ldots, y_K \in Y \,:\!=\,\mathbb {R}^{d_Y}$

and labels

$y_1,\ldots, y_K \in Y \,:\!=\,\mathbb {R}^{d_Y}$

and labels

![]() $z_1,\ldots, z_K \in Z$

. Moreover, we are given a parametric model of the form

$z_1,\ldots, z_K \in Z$

. Moreover, we are given a parametric model of the form

![]() $g: X \times Y \rightarrow Z$

, with

$g: X \times Y \rightarrow Z$

, with

![]() $X \,:\!=\, \mathbb {R}^d$

denoting the parameter space. The goal is now to find a parameter

$X \,:\!=\, \mathbb {R}^d$

denoting the parameter space. The goal is now to find a parameter

![]() $\theta ^*$

, for which

$\theta ^*$

, for which

In practice the function

![]() $g(\!\cdot |\theta ^*)$

shall then be used to predict labels of features (especially such outside of training dataset).

$g(\!\cdot |\theta ^*)$

shall then be used to predict labels of features (especially such outside of training dataset).

The parameter

![]() $\theta ^*$

is usually found through optimisation. Let

$\theta ^*$

is usually found through optimisation. Let

![]() $\mathcal {L}:Z \times Z \rightarrow \mathbb {R}$

denote a loss function – a function that gives a reasonable way of comparing the output of

$\mathcal {L}:Z \times Z \rightarrow \mathbb {R}$

denote a loss function – a function that gives a reasonable way of comparing the output of

![]() $g$

with observed labels. Usual examples are the square loss for continuous labels and cross entropy loss for discrete labels. Then, we need to solve the following optimisation problem:

$g$

with observed labels. Usual examples are the square loss for continuous labels and cross entropy loss for discrete labels. Then, we need to solve the following optimisation problem:

where

![]() $\Phi (y,z|\theta ) \,:\!=\, \mathcal {L}(g(y|\theta ),z)$

.

$\Phi (y,z|\theta ) \,:\!=\, \mathcal {L}(g(y|\theta ),z)$

.

Machine learning models

![]() $g$

that are trained in this form are often susceptible to adversarial attacks. That means, for a given feature vector

$g$

that are trained in this form are often susceptible to adversarial attacks. That means, for a given feature vector

![]() $y$

, we can find a ‘small’

$y$

, we can find a ‘small’

![]() $\xi \in Y$

for which

$\xi \in Y$

for which

![]() $g(y+\xi |\theta ^*) \neq g(y|\theta ^*)$

. In this case, an adversary can change the model’s predicted label by a very slight alteration of the input feature. Such a

$g(y+\xi |\theta ^*) \neq g(y|\theta ^*)$

. In this case, an adversary can change the model’s predicted label by a very slight alteration of the input feature. Such a

![]() $\xi$

can usually be found through optimisation on the input domain:

$\xi$

can usually be found through optimisation on the input domain:

where

![]() $B(\varepsilon ) = \{\xi : \|\xi \|\leq \varepsilon \}$

denotes the

$B(\varepsilon ) = \{\xi : \|\xi \|\leq \varepsilon \}$

denotes the

![]() $\varepsilon$

-ball centred at

$\varepsilon$

-ball centred at

![]() $0$

and

$0$

and

![]() $\varepsilon \gt 0$

denotes the size of the adversarial attack. Hence, the attacker tries to change the prediction of the model whilst altering the model input only by a small value

$\varepsilon \gt 0$

denotes the size of the adversarial attack. Hence, the attacker tries to change the prediction of the model whilst altering the model input only by a small value

![]() $\leq \varepsilon$

. Other kinds of attacks are possible, the attacker may, e.g. try to not only change the predicted label to any other label, but rather to a particular target label, see, e.g. [Reference Kurakin, Goodfellow and Bengio26].

$\leq \varepsilon$

. Other kinds of attacks are possible, the attacker may, e.g. try to not only change the predicted label to any other label, but rather to a particular target label, see, e.g. [Reference Kurakin, Goodfellow and Bengio26].

In adversarially robust training, we replace the optimisation problem (2.1) by the minmax optimisation problem below:

Thus, we now train the network by minimising the loss also with respect to potential adversarial attacks. Finding the accurate solutions to such minmax optimisation problems is difficult: usually there is no underlying saddlepoint structure, e.g.

![]() $\Phi (y,z|\theta )$

is neither convex in

$\Phi (y,z|\theta )$

is neither convex in

![]() $\theta$

nor concave in

$\theta$

nor concave in

![]() $y$

,

$y$

,

![]() $X$

and

$X$

and

![]() $Y$

tend to be very high-dimensional spaces, and the number of data points

$Y$

tend to be very high-dimensional spaces, and the number of data points

![]() $K$

may prevent the accurate computation of gradients. However, good heuristics have been established throughout the last decade – we have mentioned some of them in Section 1.

$K$

may prevent the accurate computation of gradients. However, good heuristics have been established throughout the last decade – we have mentioned some of them in Section 1.

In this work, we aim to study a relaxed version of the minmax problem, which we refer to as the Bayesian adversarial robustness problem. This problem is given by

where the Bayesian adversarial distribution

![]() $\pi ^{\gamma, \varepsilon }_k(\cdot |\theta )$

has (Lebesgue) density

$\pi ^{\gamma, \varepsilon }_k(\cdot |\theta )$

has (Lebesgue) density

where

![]() $\gamma \gt 0$

is an inverse temperature,

$\gamma \gt 0$

is an inverse temperature,

![]() $\varepsilon \gt 0$

still denotes the size of the adversarial attack, and

$\varepsilon \gt 0$

still denotes the size of the adversarial attack, and

![]() $\mathbf {1}[\cdot ]$

denotes the indicator:

$\mathbf {1}[\cdot ]$

denotes the indicator:

![]() $\mathbf {1}[\mathrm {true}] \,:\!=\, 1$

and

$\mathbf {1}[\mathrm {true}] \,:\!=\, 1$

and

![]() $\mathbf {1}[\mathrm {false}] \,:\!=\, 0$

. The distribution

$\mathbf {1}[\mathrm {false}] \,:\!=\, 0$

. The distribution

![]() $\pi ^{\gamma, \varepsilon }_k(\cdot |\theta )$

is concentrated on the

$\pi ^{\gamma, \varepsilon }_k(\cdot |\theta )$

is concentrated on the

![]() $\varepsilon$

-ball,

$\varepsilon$

-ball,

![]() $\varepsilon \gt 0$

controls the range of the attack,

$\varepsilon \gt 0$

controls the range of the attack,

![]() $\gamma \gt 0$

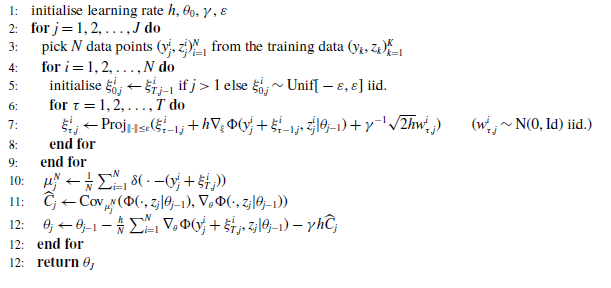

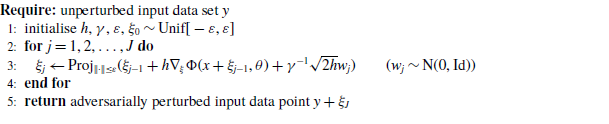

controls its focus. We illustrate this behaviour in Figure 1. Next, we comment on the mentioned relaxation and the Bayesian derivation of this optimisation problem.

$\gamma \gt 0$

controls its focus. We illustrate this behaviour in Figure 1. Next, we comment on the mentioned relaxation and the Bayesian derivation of this optimisation problem.

Figure 1.

Plots of the Lebesgue density of

![]() $\pi _1^{\gamma, \varepsilon }(\cdot |\theta _0)$

for energy

$\pi _1^{\gamma, \varepsilon }(\cdot |\theta _0)$

for energy

![]() $\Phi (y_1 + \xi, z_1|\theta _0) = (\xi -0.1)^2/2$

, choosing parameters

$\Phi (y_1 + \xi, z_1|\theta _0) = (\xi -0.1)^2/2$

, choosing parameters

![]() $\varepsilon \in \{0.025, 0.1, 0.4\}$

and

$\varepsilon \in \{0.025, 0.1, 0.4\}$

and

![]() $\gamma \in \{0.1, 10, 1000\}$

.

$\gamma \in \{0.1, 10, 1000\}$

.

2.1 Relaxation

Under certain assumptions,Footnote 1 one can show that

weakly as

![]() $\gamma \rightarrow \infty$

, see [Reference Hwang20]. Indeed, the Bayesian adversarial distribution converges to the uniform distribution over the global maximisers computed with respect to the adversarial attack. This limiting behaviour, that we can also see in Figure 1, forms the basis of simulated annealing methods for global optimisation. Moreover, it implies that the optimisation problems (2.2) and (2.3) are identical in the limit

$\gamma \rightarrow \infty$

, see [Reference Hwang20]. Indeed, the Bayesian adversarial distribution converges to the uniform distribution over the global maximisers computed with respect to the adversarial attack. This limiting behaviour, that we can also see in Figure 1, forms the basis of simulated annealing methods for global optimisation. Moreover, it implies that the optimisation problems (2.2) and (2.3) are identical in the limit

![]() $\gamma \to \infty$

, since

$\gamma \to \infty$

, since

\begin{align*} \lim _{\gamma \rightarrow \infty } \frac {1}{K}\sum _{k =1}^K &\int _{B({\varepsilon })} \Phi (y_k+\xi _k,z_k|\theta ) \pi ^{\gamma, \varepsilon }_k(\mathrm {d}\xi _i|\theta ) \\ &= \frac {1}{K}\sum _{k =1}^K \int _{B({\varepsilon })} \Phi (y_k+\xi _k,z_k|\theta ) \mathrm {Unif}(\mathrm {argmax}_{\xi \in Y} \Phi (y_k + \xi, z_k|\theta ))(\mathrm {d}\xi _i), \end{align*}

\begin{align*} \lim _{\gamma \rightarrow \infty } \frac {1}{K}\sum _{k =1}^K &\int _{B({\varepsilon })} \Phi (y_k+\xi _k,z_k|\theta ) \pi ^{\gamma, \varepsilon }_k(\mathrm {d}\xi _i|\theta ) \\ &= \frac {1}{K}\sum _{k =1}^K \int _{B({\varepsilon })} \Phi (y_k+\xi _k,z_k|\theta ) \mathrm {Unif}(\mathrm {argmax}_{\xi \in Y} \Phi (y_k + \xi, z_k|\theta ))(\mathrm {d}\xi _i), \end{align*}

and since

![]() $\xi _k \sim \mathrm {Unif}(\mathrm {argmax}_{\xi \in Y} \Phi (y_k + \xi, z_k|\theta ))$

implies

$\xi _k \sim \mathrm {Unif}(\mathrm {argmax}_{\xi \in Y} \Phi (y_k + \xi, z_k|\theta ))$

implies

![]() $\Phi (y_k+\xi _k,z_k|\theta ) = \max _{\xi \in B(\varepsilon )}\Phi (y_k+\xi, z_k|\theta )$

almost surely for

$\Phi (y_k+\xi _k,z_k|\theta ) = \max _{\xi \in B(\varepsilon )}\Phi (y_k+\xi, z_k|\theta )$

almost surely for

![]() $k =1,\ldots, K$

. A strictly positive

$k =1,\ldots, K$

. A strictly positive

![]() $\gamma$

on the other hand leads to a relaxed problem circumventing the minmax optimisation. [Reference Cipriani, Scagliotti and Wöhrer8] have also discussed this relaxation of an adversarial robustness problem in the context of a finite set of attacks, i.e. the

$\gamma$

on the other hand leads to a relaxed problem circumventing the minmax optimisation. [Reference Cipriani, Scagliotti and Wöhrer8] have also discussed this relaxation of an adversarial robustness problem in the context of a finite set of attacks, i.e. the

![]() $\varepsilon$

-ball

$\varepsilon$

-ball

![]() $B(\varepsilon )$

is replaced by a finite set. Probabilistically robust learning is another type of relaxation, see for example [Reference Bungert, Trillos, Jacobs, McKenzie, Nikolić and Wang4, Reference Robey, Chamon, Pappas and Hassani43]. Similar to our work, instead of doing the worst-case optimisation, i.e. finding the perturbation

$B(\varepsilon )$

is replaced by a finite set. Probabilistically robust learning is another type of relaxation, see for example [Reference Bungert, Trillos, Jacobs, McKenzie, Nikolić and Wang4, Reference Robey, Chamon, Pappas and Hassani43]. Similar to our work, instead of doing the worst-case optimisation, i.e. finding the perturbation

![]() $\xi$

that maximises the loss, they replace it with a probability measure on

$\xi$

that maximises the loss, they replace it with a probability measure on

![]() $\xi$

. This probability measure, however, follows a different paradigm.

$\xi$

. This probability measure, however, follows a different paradigm.

2.2 Bayesian attackers

We can understand the kind of attack that is implicitly employed in (2.3) as a Bayesian attack. We now briefly introduce the Bayesian learning problem to then explain its relation to this adversarial attack. In Bayesian learning, we model

![]() $\theta$

as a random variable with a so-called prior (distribution)

$\theta$

as a random variable with a so-called prior (distribution)

![]() $\pi _{\textrm { prior}}$

. The prior incorporates information about

$\pi _{\textrm { prior}}$

. The prior incorporates information about

![]() $\theta$

. In Bayesian learning, we now inform the prior about data

$\theta$

. In Bayesian learning, we now inform the prior about data

![]() $\{(y_1, z_1),\ldots, (y_K, z_K)\}$

by conditioning

$\{(y_1, z_1),\ldots, (y_K, z_K)\}$

by conditioning

![]() $\theta$

on that data. Indeed, we train the model by finding the conditional distribution of

$\theta$

on that data. Indeed, we train the model by finding the conditional distribution of

![]() $\theta$

given that

$\theta$

given that

![]() $g(y_k|\theta ) \approx z_k$

(

$g(y_k|\theta ) \approx z_k$

(

![]() $k =1,\ldots, K)$

. In the Bayesian setting, we represent ‘

$k =1,\ldots, K)$

. In the Bayesian setting, we represent ‘

![]() $\approx$

’ by a noise assumption consistent with the loss function

$\approx$

’ by a noise assumption consistent with the loss function

![]() $\mathcal {L}$

. This is achieved by defining the so-called likelihood as

$\mathcal {L}$

. This is achieved by defining the so-called likelihood as

![]() $\exp (\!-\Phi )$

. The conditional distribution describing

$\exp (\!-\Phi )$

. The conditional distribution describing

![]() $\theta$

is called the posterior (distribution)

$\theta$

is called the posterior (distribution)

![]() $\pi _{\textrm { post}}$

and can be obtained through Bayes’ theorem, which states that

$\pi _{\textrm { post}}$

and can be obtained through Bayes’ theorem, which states that

for measurable

![]() $A \subseteq X$

. A model prediction with respect to feature

$A \subseteq X$

. A model prediction with respect to feature

![]() $y$

can then be given by the posterior mean of the output

$y$

can then be given by the posterior mean of the output

![]() $g$

, which is

$g$

, which is

The Bayesian attacker treats the attack

![]() $\xi _k$

in exactly such a Bayesian way. They define a prior distribution for the attack, which is the uniform distribution over the

$\xi _k$

in exactly such a Bayesian way. They define a prior distribution for the attack, which is the uniform distribution over the

![]() $\varepsilon$

-ball:

$\varepsilon$

-ball:

The adversarial likelihood is designed to essentially cancel out the likelihood in the Bayesian learning problem, by defining a function that gives small mass to the learnt prediction and large mass to anything that does not agree with the learnt prediction:

Whilst this is not a usual likelihood corresponding to a particular noise model, we could see this as a special case of Bayesian forgetting [Reference Fu, He, Xu and Tao12]. In Bayesian forgetting, we would try to remove a single dataset from a posterior distribution by altering the distribution of the parameter

![]() $\theta$

. In this case, we try to alter the knowledge we could have gained about the feature vector by altering that feature vector to produce a different prediction.

$\theta$

. In this case, we try to alter the knowledge we could have gained about the feature vector by altering that feature vector to produce a different prediction.

3 Adversarial Bayesian particle sampler

We now derive a particle-based method that shall solve (2.3). To simplify the presentation in the following, we assume that

![]() $K=1$

, i.e. there is only a single data point. The derivation for multiple data points is equivalent – computational implications given by multiple data points will be discussed in Section 6. We also ignore the dependence of

$K=1$

, i.e. there is only a single data point. The derivation for multiple data points is equivalent – computational implications given by multiple data points will be discussed in Section 6. We also ignore the dependence of

![]() $\Phi$

on particular data points and note only the dependence on parameter and attack. Indeed, we write (2.3) now as

$\Phi$

on particular data points and note only the dependence on parameter and attack. Indeed, we write (2.3) now as

To solve this minimisation problem, we study the gradient flow corresponding to the energy

![]() $F$

, that is:

$F$

, that is:

![]() ${\mathrm {d}\zeta _t} = -\nabla _\zeta F(\zeta _t){\mathrm {d}t}$

. The gradient flow is a continuous-time variant of the gradient descent algorithm. The gradient flow can be shown to converge to a minimiser of

${\mathrm {d}\zeta _t} = -\nabla _\zeta F(\zeta _t){\mathrm {d}t}$

. The gradient flow is a continuous-time variant of the gradient descent algorithm. The gradient flow can be shown to converge to a minimiser of

![]() $F$

in the longterm limit if

$F$

in the longterm limit if

![]() $F$

satisfies certain regularity assumptions. The gradient of

$F$

satisfies certain regularity assumptions. The gradient of

![]() $F$

has a rather simple expression:

$F$

has a rather simple expression:

\begin{align*} \nabla _\theta F(\theta ) &= \nabla _\theta \frac { \int _{B(\varepsilon )}\Phi (\xi, \theta )\exp (\gamma \Phi (\xi, \theta )) \mathrm {d}\xi }{\int _{B(\varepsilon )} \exp (\gamma \Phi (\xi, \theta )) \mathrm {d}\xi } \\ &= \frac { \int _{B(\varepsilon )} \nabla _\theta \Phi (\xi, \theta ) \cdot \exp (\gamma \Phi (\xi, \theta )) + \gamma \nabla _\theta \Phi (\xi, \theta ) \cdot \Phi (\xi, \theta )\exp (\gamma \Phi (\xi, \theta )) \mathrm {d}\xi }{\int _{B(\varepsilon )} \exp (\gamma \Phi (\xi, \theta )) \mathrm {d}\xi } \\ &\qquad - \frac {\left ( \int _{B(\varepsilon )}\Phi (\xi, \theta )\exp (\gamma \Phi (\xi, \theta )) \mathrm {d}\xi \right )\left (\int _{B(\varepsilon )} \gamma \nabla _\theta \Phi (\xi, \theta ) \cdot \exp (\gamma \Phi (\xi, \theta )) \mathrm {d}\xi \right )}{\left (\int _{B(\varepsilon )}\exp (\gamma \Phi (\xi, \theta )) \mathrm {d}\xi \right )^2} \\ &= \int _{B({\varepsilon })} \nabla _\theta \Phi (\xi, \theta ) \pi ^{\gamma, \varepsilon }(\mathrm {d}\xi |\theta ) + \gamma \mathrm {Cov}_{\pi ^{\gamma, \varepsilon }(\cdot |\theta )}(\Phi (\cdot, \theta ), \nabla _\theta \Phi (\cdot, \theta )), \end{align*}

\begin{align*} \nabla _\theta F(\theta ) &= \nabla _\theta \frac { \int _{B(\varepsilon )}\Phi (\xi, \theta )\exp (\gamma \Phi (\xi, \theta )) \mathrm {d}\xi }{\int _{B(\varepsilon )} \exp (\gamma \Phi (\xi, \theta )) \mathrm {d}\xi } \\ &= \frac { \int _{B(\varepsilon )} \nabla _\theta \Phi (\xi, \theta ) \cdot \exp (\gamma \Phi (\xi, \theta )) + \gamma \nabla _\theta \Phi (\xi, \theta ) \cdot \Phi (\xi, \theta )\exp (\gamma \Phi (\xi, \theta )) \mathrm {d}\xi }{\int _{B(\varepsilon )} \exp (\gamma \Phi (\xi, \theta )) \mathrm {d}\xi } \\ &\qquad - \frac {\left ( \int _{B(\varepsilon )}\Phi (\xi, \theta )\exp (\gamma \Phi (\xi, \theta )) \mathrm {d}\xi \right )\left (\int _{B(\varepsilon )} \gamma \nabla _\theta \Phi (\xi, \theta ) \cdot \exp (\gamma \Phi (\xi, \theta )) \mathrm {d}\xi \right )}{\left (\int _{B(\varepsilon )}\exp (\gamma \Phi (\xi, \theta )) \mathrm {d}\xi \right )^2} \\ &= \int _{B({\varepsilon })} \nabla _\theta \Phi (\xi, \theta ) \pi ^{\gamma, \varepsilon }(\mathrm {d}\xi |\theta ) + \gamma \mathrm {Cov}_{\pi ^{\gamma, \varepsilon }(\cdot |\theta )}(\Phi (\cdot, \theta ), \nabla _\theta \Phi (\cdot, \theta )), \end{align*}

where we assume that

![]() $\Phi$

is continuously differentiable, bounded below and sufficiently regular to be allowed here to switch gradients and integrals. As usual, we define the covariance of appropriate functions

$\Phi$

is continuously differentiable, bounded below and sufficiently regular to be allowed here to switch gradients and integrals. As usual, we define the covariance of appropriate functions

![]() $f,g$

with respect to a probability distribution

$f,g$

with respect to a probability distribution

![]() $\pi$

, by

$\pi$

, by

The structure of

![]() $\nabla _\theta F$

is surprisingly simple, requiring only integrals of the target function and its gradient with respect to

$\nabla _\theta F$

is surprisingly simple, requiring only integrals of the target function and its gradient with respect to

![]() $\pi ^{\gamma, \varepsilon }$

, but, e.g. not its normalising constant. In practice, it is usually not possible to compute these integrals analytically or to even sample independently from

$\pi ^{\gamma, \varepsilon }$

, but, e.g. not its normalising constant. In practice, it is usually not possible to compute these integrals analytically or to even sample independently from

![]() $\pi ^{\gamma, \varepsilon }(\cdot |\theta )$

, which would be necessary for a stochastic gradient descent approach. The latter approach first introduced by [Reference Robbins and Monro42] allows the minimisation of expected values by replacing these expected values by sample means; see also [Reference Jin, Latz, Liu and Schönlieb22] and [Reference Latz28] for continuous-time variants. Instead, we use a particle system approach that has been studied for a different problem by [Reference Johnston, Crucinio, Akyildiz, Sabanis and Girolami2] and [Reference Kuntz, Lim and Johansen24]. The underlying idea is to approximate

$\pi ^{\gamma, \varepsilon }(\cdot |\theta )$

, which would be necessary for a stochastic gradient descent approach. The latter approach first introduced by [Reference Robbins and Monro42] allows the minimisation of expected values by replacing these expected values by sample means; see also [Reference Jin, Latz, Liu and Schönlieb22] and [Reference Latz28] for continuous-time variants. Instead, we use a particle system approach that has been studied for a different problem by [Reference Johnston, Crucinio, Akyildiz, Sabanis and Girolami2] and [Reference Kuntz, Lim and Johansen24]. The underlying idea is to approximate

![]() $\pi ^{\gamma, \varepsilon }(\cdot |\theta )$

by an overdamped Langevin dynamics, which is restricted to the

$\pi ^{\gamma, \varepsilon }(\cdot |\theta )$

by an overdamped Langevin dynamics, which is restricted to the

![]() $\varepsilon$

-Ball

$\varepsilon$

-Ball

![]() $B(\varepsilon )$

with reflecting boundary conditions:

$B(\varepsilon )$

with reflecting boundary conditions:

where

![]() $(W_t)_{t \geq 0}$

denotes a standard Brownian motion on

$(W_t)_{t \geq 0}$

denotes a standard Brownian motion on

![]() $Y$

. Alternatively, one may write the dynamics as

$Y$

. Alternatively, one may write the dynamics as

![]() $\mathrm {d}\xi _t = \nabla _\xi \Phi (\xi _t,\theta )\mathrm {d}t + \sqrt {2/\gamma }\mathrm {d}W_t$

, which is equivalent to the current form after a time re-scaling. Under weak assumptions on

$\mathrm {d}\xi _t = \nabla _\xi \Phi (\xi _t,\theta )\mathrm {d}t + \sqrt {2/\gamma }\mathrm {d}W_t$

, which is equivalent to the current form after a time re-scaling. Under weak assumptions on

![]() $\Phi$

, this Langevin dynamics converges to the distribution

$\Phi$

, this Langevin dynamics converges to the distribution

![]() $\pi ^{\gamma, \varepsilon }(\cdot |\theta )$

as

$\pi ^{\gamma, \varepsilon }(\cdot |\theta )$

as

![]() $t \rightarrow \infty$

. However, due to the heavy computational costs, in practice, we are not able to simulate the longterm behaviour of this dynamics for all fixed

$t \rightarrow \infty$

. However, due to the heavy computational costs, in practice, we are not able to simulate the longterm behaviour of this dynamics for all fixed

![]() $\theta$

to produce samples of

$\theta$

to produce samples of

![]() $\pi ^{\gamma, \varepsilon }(\cdot |\theta )$

as required for stochastic gradient descent. Instead, we run a number

$\pi ^{\gamma, \varepsilon }(\cdot |\theta )$

as required for stochastic gradient descent. Instead, we run a number

![]() $N$

of (seemingly independent) Langevin dynamics

$N$

of (seemingly independent) Langevin dynamics

![]() $(\xi _t^{1,N})_{t \geq 0}, \ldots, (\xi _t^{N,N})_{t \geq 0}$

. We then obtain an approximate gradient flow

$(\xi _t^{1,N})_{t \geq 0}, \ldots, (\xi _t^{N,N})_{t \geq 0}$

. We then obtain an approximate gradient flow

![]() $(\theta _t^N)_{t \geq 0}$

that uses the ensemble of particles

$(\theta _t^N)_{t \geq 0}$

that uses the ensemble of particles

![]() $(\xi _t^{1,N})_{t \geq 0}, \ldots, (\xi _t^{N,N})_{t \geq 0}$

to approximate the expected values in the gradient

$(\xi _t^{1,N})_{t \geq 0}, \ldots, (\xi _t^{N,N})_{t \geq 0}$

to approximate the expected values in the gradient

![]() $\nabla _\theta F$

and then feed

$\nabla _\theta F$

and then feed

![]() $(\theta _t^N)_{t \geq 0}$

back into the drift of the

$(\theta _t^N)_{t \geq 0}$

back into the drift of the

![]() $(\xi _t^{1,N})_{t \geq 0}, \ldots, (\xi _t^{N,N})_{t \geq 0}$

. Hence, we simultaneously approximate the gradient flow

$(\xi _t^{1,N})_{t \geq 0}, \ldots, (\xi _t^{N,N})_{t \geq 0}$

. Hence, we simultaneously approximate the gradient flow

![]() $(\zeta _t)_{t \geq 0}$

by

$(\zeta _t)_{t \geq 0}$

by

![]() $(\theta ^N_t)_{t \geq 0}$

and the Bayesian adversarial distribution

$(\theta ^N_t)_{t \geq 0}$

and the Bayesian adversarial distribution

![]() $(\pi ^{\gamma, \varepsilon }(\cdot |\theta ^N_t))_{t \geq 0}$

by

$(\pi ^{\gamma, \varepsilon }(\cdot |\theta ^N_t))_{t \geq 0}$

by

![]() $(\xi _t^{1,N})_{t \geq 0}, \ldots, (\xi _t^{N,N})_{t \geq 0}$

. Overall, we obtain the dynamical system

$(\xi _t^{1,N})_{t \geq 0}, \ldots, (\xi _t^{N,N})_{t \geq 0}$

. Overall, we obtain the dynamical system

\begin{align*} \mathrm {d}\theta _t^N &= - \frac {1}{N}\sum _{n=1}^N\nabla _\theta \Phi (\xi _t^{n,N},\theta _t^N)\mathrm {d}t - \gamma \widehat {\mathrm {Cov}}(\xi _t^N)\mathrm {d}t, \\ \mathrm {d}\xi _t^{i,N} &= \gamma \nabla _\xi \Phi (\xi _t^{i,N},\theta ^N_t)\mathrm {d}t + \sqrt {2}\mathrm {d}W_t^{i} \qquad (i =1,\ldots, N). \end{align*}

\begin{align*} \mathrm {d}\theta _t^N &= - \frac {1}{N}\sum _{n=1}^N\nabla _\theta \Phi (\xi _t^{n,N},\theta _t^N)\mathrm {d}t - \gamma \widehat {\mathrm {Cov}}(\xi _t^N)\mathrm {d}t, \\ \mathrm {d}\xi _t^{i,N} &= \gamma \nabla _\xi \Phi (\xi _t^{i,N},\theta ^N_t)\mathrm {d}t + \sqrt {2}\mathrm {d}W_t^{i} \qquad (i =1,\ldots, N). \end{align*}

where

![]() $(W_t^{i})_{t \geq 0}$

are mutually independent Brownian motions on

$(W_t^{i})_{t \geq 0}$

are mutually independent Brownian motions on

![]() $Y$

for

$Y$

for

![]() $i = 1,\ldots, N$

. Again, the Langevin dynamics

$i = 1,\ldots, N$

. Again, the Langevin dynamics

![]() $(\xi _t^{1,N})_{t \geq 0}, \ldots, (\xi _t^{N,N})_{t \geq 0}$

are defined on the ball

$(\xi _t^{1,N})_{t \geq 0}, \ldots, (\xi _t^{N,N})_{t \geq 0}$

are defined on the ball

![]() $B(\varepsilon )$

with reflecting boundary conditions – we formalise this fact below. The empirical covariance is given by

$B(\varepsilon )$

with reflecting boundary conditions – we formalise this fact below. The empirical covariance is given by

We refer to the dynamical system

![]() $(\theta ^N_t, \xi ^{1,N}_t,\ldots, \xi ^{N,N}_t)_{t \geq 0}$

as Abram. We illustrate the dynamics of Abram in Figure 2, where we consider a simple example.

$(\theta ^N_t, \xi ^{1,N}_t,\ldots, \xi ^{N,N}_t)_{t \geq 0}$

as Abram. We illustrate the dynamics of Abram in Figure 2, where we consider a simple example.

Figure 2.

Examples of the Abram method given

![]() $\Phi (\xi, \theta ) = \frac {1}{2}(\xi + \theta )^2$

,

$\Phi (\xi, \theta ) = \frac {1}{2}(\xi + \theta )^2$

,

![]() $\varepsilon = 1$

, and different combinations of

$\varepsilon = 1$

, and different combinations of

![]() $(\gamma, N) = (10,3)$

(top left),

$(\gamma, N) = (10,3)$

(top left),

![]() $(0.1,3)$

(top right),

$(0.1,3)$

(top right),

![]() $(10,50)$

(bottom left),

$(10,50)$

(bottom left),

![]() $(0.1, 50)$

(bottom right). In each of the four quadrants, we show the simulated path

$(0.1, 50)$

(bottom right). In each of the four quadrants, we show the simulated path

![]() $(\theta _t^N)_{t \geq 0}$

(top), the particle paths

$(\theta _t^N)_{t \geq 0}$

(top), the particle paths

![]() $(\xi _t^{1,N},\ldots, \xi _t^{N,N})_{t \geq 0}$

(centre), and the path of probability distributions

$(\xi _t^{1,N},\ldots, \xi _t^{N,N})_{t \geq 0}$

(centre), and the path of probability distributions

![]() $(\pi ^{\gamma, \varepsilon }(\cdot |\theta _t^N))_{t \geq 0}$

(bottom) that shall be approximated by the particles. The larger

$(\pi ^{\gamma, \varepsilon }(\cdot |\theta _t^N))_{t \geq 0}$

(bottom) that shall be approximated by the particles. The larger

![]() $\gamma$

leads to a concentration of

$\gamma$

leads to a concentration of

![]() $\pi ^{\gamma, \varepsilon }$

at the boundary, whilst it is closer to uniform if

$\pi ^{\gamma, \varepsilon }$

at the boundary, whilst it is closer to uniform if

![]() $\gamma$

is small. More particles lead to a more stable path

$\gamma$

is small. More particles lead to a more stable path

![]() $(\theta ^N_t)_{t \geq 0}$

. A combination of large

$(\theta ^N_t)_{t \geq 0}$

. A combination of large

![]() $N$

and

$N$

and

![]() $\gamma$

leads to convergence to the minimiser

$\gamma$

leads to convergence to the minimiser

![]() $\theta _* = 0$

of

$\theta _* = 0$

of

![]() $F$

.

$F$

.

We have motivated this particle system as an approximation to the underlying gradient flow

![]() $(\zeta _t)_{t \geq 0}$

. As

$(\zeta _t)_{t \geq 0}$

. As

![]() $N \rightarrow \infty$

, the dynamics

$N \rightarrow \infty$

, the dynamics

![]() $(\theta ^N_t)_{t \geq 0}$

does not necessarily convergence to the gradient flow

$(\theta ^N_t)_{t \geq 0}$

does not necessarily convergence to the gradient flow

![]() $(\zeta _t)_{t \geq 0}$

, but to a certain McKean–Vlasov stochastic differential equation (SDE), see [Reference McKean34]. We study this convergence behaviour in the following, as well as the convergence of the McKean–Vlasov SDE to the minimiser of

$(\zeta _t)_{t \geq 0}$

, but to a certain McKean–Vlasov stochastic differential equation (SDE), see [Reference McKean34]. We study this convergence behaviour in the following, as well as the convergence of the McKean–Vlasov SDE to the minimiser of

![]() $F$

and, thus, justify Abram as a method for Bayesian adversarial learning. First, we introduce the complete mathematical set-up and give required assumptions. To make it easier for the reader to keep track of the different stochastic processes that appear throughout this work, we summarise them in Table 1.

$F$

and, thus, justify Abram as a method for Bayesian adversarial learning. First, we introduce the complete mathematical set-up and give required assumptions. To make it easier for the reader to keep track of the different stochastic processes that appear throughout this work, we summarise them in Table 1.

Table 1. Definitions of stochastic processes throughout this work

3.1 Mean-field limit

In the following, we are interested in the mean field limit of Abram, i.e. we analyse the limit of

![]() $(\theta ^N_t)_{t \geq 0}$

as

$(\theta ^N_t)_{t \geq 0}$

as

![]() $N \rightarrow \infty$

. Thus, we can certainly assume for now that

$N \rightarrow \infty$

. Thus, we can certainly assume for now that

![]() $\gamma \,:\!=\, 1$

and

$\gamma \,:\!=\, 1$

and

![]() $\varepsilon \in (0,1)$

being fixed. We write

$\varepsilon \in (0,1)$

being fixed. We write

![]() $B \,:\!=\, B(\varepsilon )$

. Then, Abram

$B \,:\!=\, B(\varepsilon )$

. Then, Abram

![]() $(\theta ^N_t, \xi ^{1,N}_t,\ldots, \xi ^{N,N}_t)_{t \geq 0}$

satisfies

$(\theta ^N_t, \xi ^{1,N}_t,\ldots, \xi ^{N,N}_t)_{t \geq 0}$

satisfies

\begin{align} \theta ^N_t &= \theta _0 - \int _0^t\mu _s^N(\nabla _\theta \Phi (\cdot, \theta ^N_s))\mathrm {d}s - \int _0^t \mathrm {Cov}_{\mu _s^N}(\Phi (\cdot, \theta ^N_s),\nabla _\theta \Phi (\cdot, \theta ^N_s))\mathrm {d}s, \\ \xi _t^{i,N} &=\xi ^i_0 +\int _0^t\nabla _x \Phi (\xi _s^{i,N},\theta ^N_s)\mathrm {d}s + \sqrt {2}W^i_t+\int _0^tn(\xi _s^{i,N})\mathrm {d}l^{i,N}_s\qquad (i=1,\ldots, N). \nonumber \end{align}

\begin{align} \theta ^N_t &= \theta _0 - \int _0^t\mu _s^N(\nabla _\theta \Phi (\cdot, \theta ^N_s))\mathrm {d}s - \int _0^t \mathrm {Cov}_{\mu _s^N}(\Phi (\cdot, \theta ^N_s),\nabla _\theta \Phi (\cdot, \theta ^N_s))\mathrm {d}s, \\ \xi _t^{i,N} &=\xi ^i_0 +\int _0^t\nabla _x \Phi (\xi _s^{i,N},\theta ^N_s)\mathrm {d}s + \sqrt {2}W^i_t+\int _0^tn(\xi _s^{i,N})\mathrm {d}l^{i,N}_s\qquad (i=1,\ldots, N). \nonumber \end{align}

Here,

![]() $(W_t^1)_{t \geq 0},\ldots, (W_t^N)_{t \geq 0}$

are independent Brownian motions on

$(W_t^1)_{t \geq 0},\ldots, (W_t^N)_{t \geq 0}$

are independent Brownian motions on

![]() $Y$

and the initial particle values

$Y$

and the initial particle values

![]() $\xi ^1_0,\ldots, \xi ^N_0$

are independent and identically distributed. There and throughout the rest of this work, we denote the expectation of some appropriate function

$\xi ^1_0,\ldots, \xi ^N_0$

are independent and identically distributed. There and throughout the rest of this work, we denote the expectation of some appropriate function

![]() $f$

with respect to a probability measure

$f$

with respect to a probability measure

![]() $\pi$

by

$\pi$

by

![]() $\pi (f) \,:\!=\, \int _X f(\theta ) \pi (\mathrm {d}\theta ).$

We use

$\pi (f) \,:\!=\, \int _X f(\theta ) \pi (\mathrm {d}\theta ).$

We use

![]() $\mu _t^N$

to denote the empirical distribution of the particles

$\mu _t^N$

to denote the empirical distribution of the particles

![]() $(\xi _t^{1,N}, \ldots, \xi _t^{N,N})$

at time

$(\xi _t^{1,N}, \ldots, \xi _t^{N,N})$

at time

![]() $t \geq 0$

. That is

$t \geq 0$

. That is

![]() $\mu _t^N\,:\!=\,\frac {1}{N}\sum _{i=1}^N\delta (\!\cdot - {\xi _t^{i, N}})$

, where

$\mu _t^N\,:\!=\,\frac {1}{N}\sum _{i=1}^N\delta (\!\cdot - {\xi _t^{i, N}})$

, where

![]() $\delta (\!\cdot - \xi )$

is the Dirac mass concentrated in

$\delta (\!\cdot - \xi )$

is the Dirac mass concentrated in

![]() $\xi \in B$

. This implies especially that we can write

$\xi \in B$

. This implies especially that we can write

for appropriate functions

![]() $f$

and

$f$

and

![]() $g$

. The particles are constrained to stay within

$g$

. The particles are constrained to stay within

![]() $B$

by the last term in the equations of the

$B$

by the last term in the equations of the

![]() $(\xi _t^{1,N}, \ldots, \xi _t^{N,N})_{t \geq 0}$

. Here,

$(\xi _t^{1,N}, \ldots, \xi _t^{N,N})_{t \geq 0}$

. Here,

![]() $n(x)=-x/\left \|x \right \|$

for

$n(x)=-x/\left \|x \right \|$

for

![]() $x\in \partial B$

is the inner normal vector field. Although we focus on Abram

$x\in \partial B$

is the inner normal vector field. Although we focus on Abram

![]() $(\theta ^N_t, \xi ^{1,N}_t,\ldots, \xi ^{N,N}_t)_{t \geq 0}$

, we remark that the solution of equations (3.1) is

$(\theta ^N_t, \xi ^{1,N}_t,\ldots, \xi ^{N,N}_t)_{t \geq 0}$

, we remark that the solution of equations (3.1) is

![]() $(\theta ^N_t, \xi ^{1,N}_t,\ldots, \xi ^{N,N}_t, l^{1,N}_t,\ldots, l^{N,N}_t)_{t \geq 0}$

. The functions

$(\theta ^N_t, \xi ^{1,N}_t,\ldots, \xi ^{N,N}_t, l^{1,N}_t,\ldots, l^{N,N}_t)_{t \geq 0}$

. The functions

![]() $(l^{1,N}_t,\ldots, l^{N,N}_t)_{t \geq 0}$

are uniquely defined under the additional conditions:

$(l^{1,N}_t,\ldots, l^{N,N}_t)_{t \geq 0}$

are uniquely defined under the additional conditions:

-

(1)

$l^{i,N}$

’s are non-decreasing with

$l^{i,N}$

’s are non-decreasing with

$l^{i,N}(0)=0$

and

$l^{i,N}(0)=0$

and -

(2)

$\int _0^t \boldsymbol {1}[{\xi ^{i,N}_s\notin \partial B(\varepsilon )}]dl^{i,N}(s)=0$

.

$\int _0^t \boldsymbol {1}[{\xi ^{i,N}_s\notin \partial B(\varepsilon )}]dl^{i,N}(s)=0$

.

Condition (2) implies that

![]() $l^{i,N}$

can increase only when

$l^{i,N}$

can increase only when

![]() $\xi ^{i, N}$

is in

$\xi ^{i, N}$

is in

![]() $\partial B(\varepsilon )$

. Intuitively,

$\partial B(\varepsilon )$

. Intuitively,

![]() $l^{i,N}$

cancels out part of

$l^{i,N}$

cancels out part of

![]() $\xi ^{i, N}$

so that it stays inside

$\xi ^{i, N}$

so that it stays inside

![]() $B(\varepsilon )$

. For more discussion on diffusion processes with reflecting boundary conditions, see e.g. [Reference Pilipenko40]. Additionally, it is convenient to define

$B(\varepsilon )$

. For more discussion on diffusion processes with reflecting boundary conditions, see e.g. [Reference Pilipenko40]. Additionally, it is convenient to define

for any probability measure

![]() $\nu$

on

$\nu$

on

![]() $({B}, \mathcal {B}B)$

and

$({B}, \mathcal {B}B)$

and

![]() $\theta \in X$

, where

$\theta \in X$

, where

![]() $\mathcal {B}B$

denotes the Borel-

$\mathcal {B}B$

denotes the Borel-

![]() $\sigma$

-algebra corresponding to

$\sigma$

-algebra corresponding to

![]() $B$

and, following the notation above,

$B$

and, following the notation above,

![]() $\nu ( \Phi (\cdot, \theta )) = \int _B \Phi (\xi, \theta )\nu (\mathrm {d}\xi )$

. We finish this background section by defining the limiting McKean–Vlasov SDE with reflection

$\nu ( \Phi (\cdot, \theta )) = \int _B \Phi (\xi, \theta )\nu (\mathrm {d}\xi )$

. We finish this background section by defining the limiting McKean–Vlasov SDE with reflection

\begin{align} \theta _t &= \theta _0- \int _0^t\mu _s(\nabla _\theta \Phi (\cdot, \theta _s))\mathrm {d}s - \int _0^t \mathrm {Cov}_{\mu _s}(\Phi (\cdot, \theta _s),\nabla _\theta \Phi (\cdot, \theta _s))\mathrm {d}s,\\ \xi _t &=\xi _0+ \int _0^t\nabla _x \Phi (\xi _s,\theta _s)\mathrm {d}s + \sqrt {2}W_t+\int _0^tn(\xi _s)\mathrm {d}l_s, \nonumber \end{align}

\begin{align} \theta _t &= \theta _0- \int _0^t\mu _s(\nabla _\theta \Phi (\cdot, \theta _s))\mathrm {d}s - \int _0^t \mathrm {Cov}_{\mu _s}(\Phi (\cdot, \theta _s),\nabla _\theta \Phi (\cdot, \theta _s))\mathrm {d}s,\\ \xi _t &=\xi _0+ \int _0^t\nabla _x \Phi (\xi _s,\theta _s)\mathrm {d}s + \sqrt {2}W_t+\int _0^tn(\xi _s)\mathrm {d}l_s, \nonumber \end{align}

with

![]() $\mu _t$

denoting the law of

$\mu _t$

denoting the law of

![]() $\xi _t$

at time

$\xi _t$

at time

![]() $t \geq 0$

. The goal of this work is to show that the particle system (3.1) converges to this McKean–Vlasov SDEs as

$t \geq 0$

. The goal of this work is to show that the particle system (3.1) converges to this McKean–Vlasov SDEs as

![]() $N\to \infty$

and to then show that the McKean–Vlasov SDE can find the minimiser of

$N\to \infty$

and to then show that the McKean–Vlasov SDE can find the minimiser of

![]() $F$

.

$F$

.

3.2 Assumptions

We now list assumptions that we consider throughout this work. We start with the Lipschitz continuity of

![]() $\nabla \Phi$

and

$\nabla \Phi$

and

![]() $G$

.

$G$

.

Assumption 3.1 (Lipschitz). The function

![]() $\nabla _\xi \Phi$

is Lipschitz continuous, i.e. there exists a Lipschitz constant

$\nabla _\xi \Phi$

is Lipschitz continuous, i.e. there exists a Lipschitz constant

![]() $L\gt 1$

such that

$L\gt 1$

such that

for any

![]() $\xi, \tilde \xi \in B$

and

$\xi, \tilde \xi \in B$

and

![]() $\theta, \tilde \theta \in {\mathbb {R}}^n$

. Similarly, we assume that

$\theta, \tilde \theta \in {\mathbb {R}}^n$

. Similarly, we assume that

![]() $G(\theta, \mu )$

is Lipschitz in the following sense: there is an

$G(\theta, \mu )$

is Lipschitz in the following sense: there is an

![]() $L\gt 1$

such that

$L\gt 1$

such that

for any probability measures

![]() $\nu$

,

$\nu$

,

![]() $\tilde \nu$

on

$\tilde \nu$

on

![]() $(B, \mathcal {B}B)$

and

$(B, \mathcal {B}B)$

and

![]() $\theta, \ \tilde \theta \in {\mathbb {R}}^n.$

$\theta, \ \tilde \theta \in {\mathbb {R}}^n.$

In Assumption 3.1 and throughout this work,

![]() ${{\mathcal W}}_p$

denotes the Wasserstein-

${{\mathcal W}}_p$

denotes the Wasserstein-

![]() $p$

distance given by

$p$

distance given by

for probability distributions

![]() $\nu, \nu '$

on

$\nu, \nu '$

on

![]() $(X, \mathcal {B}X)$

and

$(X, \mathcal {B}X)$

and

![]() $p \geq 1$

. In addition to the Wasserstein distance, we sometimes measure the distance between probability distributions

$p \geq 1$

. In addition to the Wasserstein distance, we sometimes measure the distance between probability distributions

![]() $\nu, \nu '$

on

$\nu, \nu '$

on

![]() $(X, \mathcal {B}X)$

using the total variation distance given by

$(X, \mathcal {B}X)$

using the total variation distance given by

The Lipschitz continuity of

![]() $G$

actually already implies the Lipschitz continuity of

$G$

actually already implies the Lipschitz continuity of

![]() $\nabla _\theta \Phi$

. By setting

$\nabla _\theta \Phi$

. By setting

![]() $\nu = \delta (\cdot -\xi )$

and

$\nu = \delta (\cdot -\xi )$

and

![]() $\tilde \nu =\delta (\cdot - {\tilde \xi })$

, we have

$\tilde \nu =\delta (\cdot - {\tilde \xi })$

, we have

\begin{align*} \left \|\nabla _\theta \Phi (\xi, \tilde \theta )-\nabla _\theta \Phi (\tilde \xi, \tilde \theta ) \right \| &= \left \|G(\theta, \delta (\cdot -\xi ))-G(\tilde \theta, \delta (\cdot -\tilde \xi )) \right \| \\ &\le L\Big (\left \|\theta -\tilde \theta \right \|+{{\mathcal W}}_1(\delta (\cdot - \xi ),\delta (\cdot - \tilde \xi ))\Big ) = L\Big (\left \|\xi -\tilde \xi \right \|+\left \|\theta -\tilde \theta \right \|\Big ) . \end{align*}

\begin{align*} \left \|\nabla _\theta \Phi (\xi, \tilde \theta )-\nabla _\theta \Phi (\tilde \xi, \tilde \theta ) \right \| &= \left \|G(\theta, \delta (\cdot -\xi ))-G(\tilde \theta, \delta (\cdot -\tilde \xi )) \right \| \\ &\le L\Big (\left \|\theta -\tilde \theta \right \|+{{\mathcal W}}_1(\delta (\cdot - \xi ),\delta (\cdot - \tilde \xi ))\Big ) = L\Big (\left \|\xi -\tilde \xi \right \|+\left \|\theta -\tilde \theta \right \|\Big ) . \end{align*}

We assume throughout that the constant

![]() $L\gt 1$

to simplify the constants in the Theorem5.5. Finally, we note that Assumption 3.1 implies the well-posedness of both (3.1) and (3.2), see ([Reference Adams, dos Reis, Ravaille, Salkeld and Tugaut1], Theorems 3.1, 3.2).

$L\gt 1$

to simplify the constants in the Theorem5.5. Finally, we note that Assumption 3.1 implies the well-posedness of both (3.1) and (3.2), see ([Reference Adams, dos Reis, Ravaille, Salkeld and Tugaut1], Theorems 3.1, 3.2).

Next, we assume the strong monotonicity of

![]() $G$

, which, as we note below, also implies the strong convexity of

$G$

, which, as we note below, also implies the strong convexity of

![]() $\Phi (x,\cdot )$

for any

$\Phi (x,\cdot )$

for any

![]() $x \in B$

. This assumption is not realistic in the context of deep learning (e.g. [Reference Choromanska, Henaff, Mathieu, Arous and LeCun7]), but not unusual when analysing learning techniques.

$x \in B$

. This assumption is not realistic in the context of deep learning (e.g. [Reference Choromanska, Henaff, Mathieu, Arous and LeCun7]), but not unusual when analysing learning techniques.

Assumption 3.2 (Strong monotonicity). For any probability measure

![]() $\nu$

on

$\nu$

on

![]() $(B, \mathcal {B}B)$

,

$(B, \mathcal {B}B)$

,

![]() $G(\cdot, \nu )$

is

$G(\cdot, \nu )$

is

![]() $2\lambda$

-strongly monotone, i.e. for any

$2\lambda$

-strongly monotone, i.e. for any

![]() $\theta, \tilde \theta \in {\mathbb {R}}^n$

, we have

$\theta, \tilde \theta \in {\mathbb {R}}^n$

, we have

for some

![]() $\lambda \gt 0$

.

$\lambda \gt 0$

.

By choosing

![]() $\nu = \delta (\cdot -\xi )$

in Assumption 3.2 for

$\nu = \delta (\cdot -\xi )$

in Assumption 3.2 for

![]() $\xi \in B,$

we have

$\xi \in B,$

we have

![]() $\mathrm {Cov}_{\nu }(\Phi (\cdot, \theta ),\nabla _\theta \Phi (\cdot, \theta ))=0$

, which implies that

$\mathrm {Cov}_{\nu }(\Phi (\cdot, \theta ),\nabla _\theta \Phi (\cdot, \theta ))=0$

, which implies that

![]() $\left \langle \nabla _\theta \Phi (x,\theta ) -\nabla _\theta \Phi (x,\theta '),\theta -\theta '\right \rangle \ge 2\lambda \left \|\theta -\theta ' \right \|^2$

. Thus, the

$\left \langle \nabla _\theta \Phi (x,\theta ) -\nabla _\theta \Phi (x,\theta '),\theta -\theta '\right \rangle \ge 2\lambda \left \|\theta -\theta ' \right \|^2$

. Thus, the

![]() $2\lambda$

-strong monotonicity of

$2\lambda$

-strong monotonicity of

![]() $G$

in

$G$

in

![]() $\theta$

also implies the

$\theta$

also implies the

![]() $2\lambda$

-strong convexity of

$2\lambda$

-strong convexity of

![]() $\Phi$

in

$\Phi$

in

![]() $\theta$

.

$\theta$

.

The assumptions stated throughout this sections are fairly strong, they are satisfied in certain linear-quadratic problems on bounded domains. We illustrate this in an example below.

Example 3.3.

We consider a prototypical adversarial robustness problem based on the potential

![]() $\Phi (\xi, \theta ) \,:\!=\, \left \|\xi -\theta \right \|^2$

with

$\Phi (\xi, \theta ) \,:\!=\, \left \|\xi -\theta \right \|^2$

with

![]() $\theta$

in a bounded set

$\theta$

in a bounded set

![]() $X' \subseteq X$

– problems of this form appear, e.g. in adversarially robust linear regression. Next, we are going to verify that this problem satisfies Assumptions 3.1 and 3.2

.

$X' \subseteq X$

– problems of this form appear, e.g. in adversarially robust linear regression. Next, we are going to verify that this problem satisfies Assumptions 3.1 and 3.2

.

We have

![]() $\nabla _\xi \Phi (\xi, \theta )=2(\xi -\theta ),$

which is Lipschitz in both

$\nabla _\xi \Phi (\xi, \theta )=2(\xi -\theta ),$

which is Lipschitz in both

![]() $\theta$

and

$\theta$

and

![]() $\xi$

. Since

$\xi$

. Since

\begin{align*} \nabla _\theta \Phi (\xi, \theta )&=2(\xi -\theta ), \\ \Phi (\xi, \theta )-\int _B\Phi (\xi, \theta )\nu (\mathrm d \xi )&=\left (\left \|\xi \right \|^2-\int _B \left \|\xi \right \|^2\nu (\mathrm d \xi )\right ) -2\theta \cdot \left (\xi -\int _B \xi \nu (\mathrm d \xi )\right ), \\ \nabla _\theta \Phi (\xi, \theta )-\int _B\nabla _\theta \Phi (\xi, \theta )\nu (\mathrm d \xi ) &=-2\left (\xi -\int _B \xi \nu (\mathrm d \xi )\right ), \end{align*}

\begin{align*} \nabla _\theta \Phi (\xi, \theta )&=2(\xi -\theta ), \\ \Phi (\xi, \theta )-\int _B\Phi (\xi, \theta )\nu (\mathrm d \xi )&=\left (\left \|\xi \right \|^2-\int _B \left \|\xi \right \|^2\nu (\mathrm d \xi )\right ) -2\theta \cdot \left (\xi -\int _B \xi \nu (\mathrm d \xi )\right ), \\ \nabla _\theta \Phi (\xi, \theta )-\int _B\nabla _\theta \Phi (\xi, \theta )\nu (\mathrm d \xi ) &=-2\left (\xi -\int _B \xi \nu (\mathrm d \xi )\right ), \end{align*}

we have that

where

![]() ${\mathbb E}_\nu [\xi ]=\int _B \xi \nu (\mathrm d \xi )$

and

${\mathbb E}_\nu [\xi ]=\int _B \xi \nu (\mathrm d \xi )$

and

![]() $\mathrm {Cov}_\nu (\left \|\xi \right \|^2,\xi )= \int _B(\left \|\xi \right \|^2-{\mathbb E}_\nu [\left \|\xi \right \|^2]) (\xi -{\mathbb E}_\nu [\xi ])\nu (\mathrm d \xi )$

. Since the

$\mathrm {Cov}_\nu (\left \|\xi \right \|^2,\xi )= \int _B(\left \|\xi \right \|^2-{\mathbb E}_\nu [\left \|\xi \right \|^2]) (\xi -{\mathbb E}_\nu [\xi ])\nu (\mathrm d \xi )$

. Since the

![]() $\varepsilon$

-ball and

$\varepsilon$

-ball and

![]() $\theta \in X'$

are bounded, we have that

$\theta \in X'$

are bounded, we have that

![]() $G(\theta, \nu )$

is Lipschitz in both

$G(\theta, \nu )$

is Lipschitz in both

![]() $\theta$

and

$\theta$

and

![]() $\nu$

. Thus, it satisfies Assumption 3.1

. In order to make

$\nu$

. Thus, it satisfies Assumption 3.1

. In order to make

![]() $G(\theta, \nu )$

satisfy Assumption 3.2

, we choose

$G(\theta, \nu )$

satisfy Assumption 3.2

, we choose

![]() $\varepsilon$

small enough such that the term

$\varepsilon$

small enough such that the term

![]() $4\theta \cdot \mathrm {Var}_\nu (\xi )$

is

$4\theta \cdot \mathrm {Var}_\nu (\xi )$

is

![]() $1$

-Lipschitz. In this case, we can verify that

$1$

-Lipschitz. In this case, we can verify that

![]() $\left \langle G(\theta, \nu )-G(\theta ',\nu ),\theta -\theta '\right \rangle \ge \left \|\theta -\theta ' \right \|^2$

and, thus, Assumption 3.2

.

$\left \langle G(\theta, \nu )-G(\theta ',\nu ),\theta -\theta '\right \rangle \ge \left \|\theta -\theta ' \right \|^2$

and, thus, Assumption 3.2

.

4 Propagation of chaos

We now study the large particle limit (

![]() $N\to \infty$

) of the Abram dynamics (3.1). When considering a finite time interval

$N\to \infty$

) of the Abram dynamics (3.1). When considering a finite time interval

![]() $[0,T]$

, we see that the particle system (3.1) approximates the McKean–Vlasov SDE (3.2) in this limit. We note that we assume in the following that

$[0,T]$

, we see that the particle system (3.1) approximates the McKean–Vlasov SDE (3.2) in this limit. We note that we assume in the following that

![]() $0\lt \varepsilon \lt 1$

. Moreover, we use the Wasserstein-

$0\lt \varepsilon \lt 1$

. Moreover, we use the Wasserstein-

![]() $2$

distance instead of Wasserstein-

$2$

distance instead of Wasserstein-

![]() $1$

distance in Assumption 3.1. We have

$1$

distance in Assumption 3.1. We have

![]() ${{\mathcal W}}_1(\nu, \nu ')\le {{\mathcal W}}_2(\nu, \nu ')$

for any probability measures

${{\mathcal W}}_1(\nu, \nu ')\le {{\mathcal W}}_2(\nu, \nu ')$

for any probability measures

![]() $\nu, \nu '$

for which the distances are finite, see [Reference Villani52]. Thus, convergence in

$\nu, \nu '$

for which the distances are finite, see [Reference Villani52]. Thus, convergence in

![]() ${{\mathcal W}}_2$

also implies convergence in

${{\mathcal W}}_2$

also implies convergence in

![]() ${{\mathcal W}}_1$

. We now state the main convergence result.

${{\mathcal W}}_1$

. We now state the main convergence result.

Theorem 4.1.

Let Assumption 3.1 hold. Then, there is a constant

![]() $C_{d,T}\gt 0$

such that for all

$C_{d,T}\gt 0$

such that for all

![]() $T\ge 0$

and

$T\ge 0$

and

![]() $N\ge 1$

we have the following inequality

$N\ge 1$

we have the following inequality

\begin{align*} \sup _{t\in [0,T]}{\mathbb E}\big [\left \|\theta _t^N-\theta _t \right \|^2+{{\mathcal W}}^2_2(\mu _t^N,\mu _t)\big ]\le o_{d,T,N}\,:\!=\,C_{d,T} {\begin{cases} N^{-\alpha _d},&\text { if } d\ne 4,\\ \log (1+N)N^{-\frac {1}{2}},&\text { if }d=4, \end{cases} } \end{align*}

\begin{align*} \sup _{t\in [0,T]}{\mathbb E}\big [\left \|\theta _t^N-\theta _t \right \|^2+{{\mathcal W}}^2_2(\mu _t^N,\mu _t)\big ]\le o_{d,T,N}\,:\!=\,C_{d,T} {\begin{cases} N^{-\alpha _d},&\text { if } d\ne 4,\\ \log (1+N)N^{-\frac {1}{2}},&\text { if }d=4, \end{cases} } \end{align*}

where

![]() $\alpha _d= 2/d$

for

$\alpha _d= 2/d$

for

![]() $d\gt 4$

and

$d\gt 4$

and

![]() $\alpha _d=1/2$

for

$\alpha _d=1/2$

for

![]() $d\lt 4.$

$d\lt 4.$

The dependence of

![]() $d, T$

on

$d, T$

on

![]() $C_{d, T}$

is not explicit except in some special cases which we discuss in Section 5. The upper bound is essentially

$C_{d, T}$

is not explicit except in some special cases which we discuss in Section 5. The upper bound is essentially

![]() $N^{-2/d}+N^{-1/2}$

with the dominating term differing for

$N^{-2/d}+N^{-1/2}$

with the dominating term differing for

![]() $d\gt 4$

and

$d\gt 4$

and

![]() $d\lt 4$

. In fact, when

$d\lt 4$

. In fact, when

![]() $d\lt 4$

, the convergence rate can not be better than

$d\lt 4$

, the convergence rate can not be better than

![]() $N^{-1/2},$

see ( [Reference Fournier and Guillin11], Page 2) for an example in which the lower bound is obtained.

$N^{-1/2},$

see ( [Reference Fournier and Guillin11], Page 2) for an example in which the lower bound is obtained.

Hence, we obtain convergence of both the gradient flow approximation

![]() $(\theta ^N_t)_{t \geq 0}$

and the particle approximation

$(\theta ^N_t)_{t \geq 0}$

and the particle approximation

![]() $(\mu _t^N)$

to the respective components in the McKean–Vlasov SDE. We prove this result by a coupling method. To this end, we first collect a few auxiliary results: studying the large sample limit of an auxiliary particle system and the distance of the original particle system to the auxiliary system. To this end, we sample

$(\mu _t^N)$

to the respective components in the McKean–Vlasov SDE. We prove this result by a coupling method. To this end, we first collect a few auxiliary results: studying the large sample limit of an auxiliary particle system and the distance of the original particle system to the auxiliary system. To this end, we sample

![]() $N$

trajectories of

$N$

trajectories of

![]() $(\xi _t)_{t \geq 0}$

from equations (3.2) as

$(\xi _t)_{t \geq 0}$

from equations (3.2) as

where the Brownian motions

![]() $(W_t^1,\ldots, W_t^N)_{t \geq 0}$

are the ones from (3.1). Of course these sample paths

$(W_t^1,\ldots, W_t^N)_{t \geq 0}$

are the ones from (3.1). Of course these sample paths

![]() $(\xi _t^1,\ldots, \xi _t^N)_{t \geq 0}$

are different from the

$(\xi _t^1,\ldots, \xi _t^N)_{t \geq 0}$

are different from the

![]() $(\xi _t^{1,N},\ldots, \xi _t^{N,N})_{t \geq 0}$

in equation (3.1): Here,

$(\xi _t^{1,N},\ldots, \xi _t^{N,N})_{t \geq 0}$

in equation (3.1): Here,

![]() $(\theta _t)_{t \geq 0}$

only depends on the law of

$(\theta _t)_{t \geq 0}$

only depends on the law of

![]() $(\xi _t)_{t \geq 0}$

, whereas

$(\xi _t)_{t \geq 0}$

, whereas

![]() $(\theta _t^N)_{t \geq 0}$

depends on position of the particles

$(\theta _t^N)_{t \geq 0}$

depends on position of the particles

![]() $(\xi ^{i,N}_t)_{t \geq 0}$

. As the

$(\xi ^{i,N}_t)_{t \geq 0}$

. As the

![]() $(\xi _t^1)_{t \geq 0}, \ldots, (\xi _t^N)_{t \geq 0}$

are i.i.d., we can apply the empirical law of large numbers from [Reference Fournier and Guillin11] and get the following result.

$(\xi _t^1)_{t \geq 0}, \ldots, (\xi _t^N)_{t \geq 0}$

are i.i.d., we can apply the empirical law of large numbers from [Reference Fournier and Guillin11] and get the following result.

Proposition 4.2. Let Assumption 3.1 hold. Then,

where

![]() $o_{d,T,N}$

is the constant given in Theorem

4.1

.

$o_{d,T,N}$

is the constant given in Theorem

4.1

.

For any

![]() $i = 1,\ldots, N$

, we are now computing bounds for the pairwise distances between

$i = 1,\ldots, N$

, we are now computing bounds for the pairwise distances between

![]() $\xi ^i_t$

and

$\xi ^i_t$

and

![]() $\xi _t^{i,N}$

for

$\xi _t^{i,N}$

for

![]() $t \geq 0$

. We note again that these paths are pairwise coupled through the associated Brownian motions

$t \geq 0$

. We note again that these paths are pairwise coupled through the associated Brownian motions

![]() $(W_t^i)_{t \geq 0}$

, respectively.

$(W_t^i)_{t \geq 0}$

, respectively.

Lemma 4.3.

Let Assumption 3.1 hold and recall that

![]() $\xi _0^{i,N} = \xi _0^i$

. Then,

$\xi _0^{i,N} = \xi _0^i$

. Then,

for

![]() $t \in [0,T]$

.

$t \in [0,T]$

.

Proof. Recall that

![]() $(l^{1,N}_t,\ldots, l^{N,N}_t)_{t \geq 0}$

is non-decreasing in time and, hence, has finite total variation. We apply Itô’s formula to

$(l^{1,N}_t,\ldots, l^{N,N}_t)_{t \geq 0}$

is non-decreasing in time and, hence, has finite total variation. We apply Itô’s formula to

![]() $\left \|\xi _t^{i,N}-\xi _t^i \right \|^2$

and obtain

$\left \|\xi _t^{i,N}-\xi _t^i \right \|^2$

and obtain

\begin{align*} \left \|\xi _t^{i,N}-\xi _t^i \right \|^2= 2 \underbrace {\int _0^t \left \langle \xi _s^{i,N}-\xi _s^i,\nabla _x \Phi (\xi ^{i,N}_s,\theta ^N_s)-\nabla _x \Phi (\xi ^i_s,\theta _s)\right \rangle \mathrm {d}s}_{(I1)}\\ + \underbrace {2\int _0^t\left \langle n(\xi ^{i,N}_s), \xi _s^{i,N}-\xi _s^i \right \rangle \mathrm {d}l^{i,N}_s -2\int _0^t\left \langle n(\xi ^i_s), \xi _s^{i,N}-\xi _s^i \right \rangle \mathrm {d}l^i_s}_{(I2)}. \end{align*}

\begin{align*} \left \|\xi _t^{i,N}-\xi _t^i \right \|^2= 2 \underbrace {\int _0^t \left \langle \xi _s^{i,N}-\xi _s^i,\nabla _x \Phi (\xi ^{i,N}_s,\theta ^N_s)-\nabla _x \Phi (\xi ^i_s,\theta _s)\right \rangle \mathrm {d}s}_{(I1)}\\ + \underbrace {2\int _0^t\left \langle n(\xi ^{i,N}_s), \xi _s^{i,N}-\xi _s^i \right \rangle \mathrm {d}l^{i,N}_s -2\int _0^t\left \langle n(\xi ^i_s), \xi _s^{i,N}-\xi _s^i \right \rangle \mathrm {d}l^i_s}_{(I2)}. \end{align*}

We first argue that

![]() $(I2)\le 0.$

Recall that

$(I2)\le 0.$

Recall that

![]() $ n(x)=-x/\left \|x \right \|$

and that the processes

$ n(x)=-x/\left \|x \right \|$

and that the processes

![]() $(\xi ^{i,N}_t)_{t \geq 0}$

and

$(\xi ^{i,N}_t)_{t \geq 0}$

and

![]() $(\xi ^{i}_t)_{t \geq 0}$

take values in the

$(\xi ^{i}_t)_{t \geq 0}$

take values in the

![]() $\varepsilon$

-ball

$\varepsilon$

-ball

![]() $B$

with

$B$

with

![]() $\varepsilon \lt 1$

. Then, we have

$\varepsilon \lt 1$

. Then, we have

\begin{align*} 2\int _0^t\left \langle n(\xi ^{i,N}_s), \xi _s^{i,N}-\xi _s^i \right \rangle \mathrm {d}l^{i,N}_s&= 2\int _0^t\left \langle n(\xi ^{i,N}_s), \xi _s^{i,N}\right \rangle \mathrm {d}l^{i,N}_s- 2\int _0^t\left \langle n(\xi ^{i,N}_s), \xi _s^i \right \rangle \mathrm {d}l^{i,N}_s\\ &= -2 {\varepsilon } l^{i,N}_t- 2 \int _0^t\left \langle n(\xi ^{i,N}_s), \xi _s^i \right \rangle \mathrm {d}l^{i,N}_s\le -2{\varepsilon } l^{i,N}_t+2{\varepsilon }\int _0^t\mathrm {d}l^{i,N}_s= 0, \end{align*}

\begin{align*} 2\int _0^t\left \langle n(\xi ^{i,N}_s), \xi _s^{i,N}-\xi _s^i \right \rangle \mathrm {d}l^{i,N}_s&= 2\int _0^t\left \langle n(\xi ^{i,N}_s), \xi _s^{i,N}\right \rangle \mathrm {d}l^{i,N}_s- 2\int _0^t\left \langle n(\xi ^{i,N}_s), \xi _s^i \right \rangle \mathrm {d}l^{i,N}_s\\ &= -2 {\varepsilon } l^{i,N}_t- 2 \int _0^t\left \langle n(\xi ^{i,N}_s), \xi _s^i \right \rangle \mathrm {d}l^{i,N}_s\le -2{\varepsilon } l^{i,N}_t+2{\varepsilon }\int _0^t\mathrm {d}l^{i,N}_s= 0, \end{align*}

where the last inequality holds since

![]() $- 2 \int _0^t\left \langle n(\xi ^{i,N}_s), \xi _s^i \right \rangle \mathrm {d}l^{i,N}_s\leq 2 \int _0^t\left |\left \langle n(\xi ^{i,N}_s), \xi _s^i \right \rangle \right |\mathrm {d}l^{i,N}_s\leq 2{\varepsilon }\int _0^t\mathrm {d}l^{i,N}_s$

. Similarly, we have

$- 2 \int _0^t\left \langle n(\xi ^{i,N}_s), \xi _s^i \right \rangle \mathrm {d}l^{i,N}_s\leq 2 \int _0^t\left |\left \langle n(\xi ^{i,N}_s), \xi _s^i \right \rangle \right |\mathrm {d}l^{i,N}_s\leq 2{\varepsilon }\int _0^t\mathrm {d}l^{i,N}_s$

. Similarly, we have

Hence, we have

![]() $(I2)\le 0.$

$(I2)\le 0.$

For

![]() $(I1)$

, due to Assumption 3.1 and, again, due to the boundedness of

$(I1)$

, due to Assumption 3.1 and, again, due to the boundedness of

![]() $B,$

we have

$B,$

we have

Finally, we study the distance between

![]() $\theta _t^N$

and

$\theta _t^N$

and

![]() $\theta _t$

for

$\theta _t$

for

![]() $t \geq 0$

.

$t \geq 0$

.

Lemma 4.4. Let Assumption 3.1 hold. Then, we have

for

![]() $t \in [0,T].$

$t \in [0,T].$

Proof. Due to Assumption 3.1 and since

![]() ${{\mathcal W}}_1(\mu ^N_s,\mu _s)\le {{\mathcal W}}_2(\mu ^N_s,\mu _s)$

, we have

${{\mathcal W}}_1(\mu ^N_s,\mu _s)\le {{\mathcal W}}_2(\mu ^N_s,\mu _s)$

, we have

\begin{align} \left \|\theta _t^N-\theta _t \right \|^2 =& -2\int _0^t \left \langle \theta _s^N-\theta _s,G(\theta _s^N,\mu ^N_s)-G(\theta _s,\mu _s)\right \rangle \mathrm {d}s\nonumber \\ &\le 2L\int _0^t \left \|\theta _s^N-\theta _s \right \|\Big (\left \|\theta _s^N-\theta _s \right \|+{{\mathcal W}}_2(\mu ^N_s,\mu _s)\Big )\mathrm {d}s\nonumber \\ &\le 2L \int _0^t\left \|\theta _s^N-\theta _s \right \|^2 \mathrm {d}s+2L\int _0^t \left \|\theta _s^N-\theta _s \right \|{{\mathcal W}}_2(\mu ^N_s,\mu _s)\mathrm {d}s\nonumber \\ &\le 3L \int _0^t\left \|\theta _s^N-\theta _s \right \|^2 \mathrm {d}s+L\int _0^t {{\mathcal W}}^2_2(\mu ^N_s,\mu _s)\mathrm {d}s. \end{align}

\begin{align} \left \|\theta _t^N-\theta _t \right \|^2 =& -2\int _0^t \left \langle \theta _s^N-\theta _s,G(\theta _s^N,\mu ^N_s)-G(\theta _s,\mu _s)\right \rangle \mathrm {d}s\nonumber \\ &\le 2L\int _0^t \left \|\theta _s^N-\theta _s \right \|\Big (\left \|\theta _s^N-\theta _s \right \|+{{\mathcal W}}_2(\mu ^N_s,\mu _s)\Big )\mathrm {d}s\nonumber \\ &\le 2L \int _0^t\left \|\theta _s^N-\theta _s \right \|^2 \mathrm {d}s+2L\int _0^t \left \|\theta _s^N-\theta _s \right \|{{\mathcal W}}_2(\mu ^N_s,\mu _s)\mathrm {d}s\nonumber \\ &\le 3L \int _0^t\left \|\theta _s^N-\theta _s \right \|^2 \mathrm {d}s+L\int _0^t {{\mathcal W}}^2_2(\mu ^N_s,\mu _s)\mathrm {d}s. \end{align}

The triangle inequality implies that

\begin{align} {{\mathcal W}}^2_2(\mu ^N_s,\mu _s)\le 2{{\mathcal W}}^2_2(\mu ^N_s,N^{-1}\sum ^N_{i=1}\delta _{\xi _s^i})+2{{\mathcal W}}^2_2(N^{-1}\sum ^N_{i=1}\delta _{\xi _s^i},\mu _s)\nonumber \\ \le \frac {2}{N}\sum ^N_{i=1}\left \|\xi ^{i,N}_s-\xi _s^i \right \|^2+2{{\mathcal W}}^2_2(N^{-1}\sum ^N_{i=1}\delta _{\xi _s^i},\mu _s). \end{align}

\begin{align} {{\mathcal W}}^2_2(\mu ^N_s,\mu _s)\le 2{{\mathcal W}}^2_2(\mu ^N_s,N^{-1}\sum ^N_{i=1}\delta _{\xi _s^i})+2{{\mathcal W}}^2_2(N^{-1}\sum ^N_{i=1}\delta _{\xi _s^i},\mu _s)\nonumber \\ \le \frac {2}{N}\sum ^N_{i=1}\left \|\xi ^{i,N}_s-\xi _s^i \right \|^2+2{{\mathcal W}}^2_2(N^{-1}\sum ^N_{i=1}\delta _{\xi _s^i},\mu _s). \end{align}

Combining (4.2) and (4.3), we obtain

We now proceed to the proof of Theorem4.1.

Proof of Theorem 4.1 We commence by constructing an upper bound for

From Lemma 4.3 and Lemma 4.4, we have

Grönwall’s inequality implies that

According to Proposition 4.2, we have

whereas (4.3) implies

Therefore,

where

![]() $C_{d,T}= 2C_d(1+ Le^{(1+5L)t}).$

$C_{d,T}= 2C_d(1+ Le^{(1+5L)t}).$

5 Longtime behaviour of the McKean–Vlasov process

Theorem4.1 implies that the gradient flow approximation in Abram

![]() $(\theta _t^N)_{t \geq 0}$

converges to the corresponding part of the McKean–Vlasov SDE

$(\theta _t^N)_{t \geq 0}$

converges to the corresponding part of the McKean–Vlasov SDE

![]() $(\theta _t)_{t \geq 0}$

given in (3.2). In this section, we show that this McKean–Vlasov SDE is able to find the minimiser

$(\theta _t)_{t \geq 0}$

given in (3.2). In this section, we show that this McKean–Vlasov SDE is able to find the minimiser

![]() $\theta _*$

of

$\theta _*$

of

![]() $F = \int _{B({\varepsilon })} \Phi (\xi, \cdot ) \pi ^{\gamma, \varepsilon }(\mathrm {d}\xi |\cdot ).$

This, thus, gives us a justification to use Abram to solve the Bayesian adversarial robustness problem. We start by showing that

$F = \int _{B({\varepsilon })} \Phi (\xi, \cdot ) \pi ^{\gamma, \varepsilon }(\mathrm {d}\xi |\cdot ).$

This, thus, gives us a justification to use Abram to solve the Bayesian adversarial robustness problem. We start by showing that

![]() $F$

admits a minimiser.

$F$

admits a minimiser.

Proof. We first argue that

![]() $F$

is bounded below and obtains a minumum at some point

$F$

is bounded below and obtains a minumum at some point

![]() $\theta _*.$

From Subsection 3.2, we already know that

$\theta _*.$

From Subsection 3.2, we already know that

![]() $\Phi (0,\theta )$

is

$\Phi (0,\theta )$

is

![]() $2\lambda$

-strongly convex in

$2\lambda$

-strongly convex in

![]() $\theta$

. Without loss of generality, we assume

$\theta$

. Without loss of generality, we assume

![]() $\Phi (0,0)=0$

and

$\Phi (0,0)=0$

and

![]() $\nabla _\theta \Phi (0,0)=0$

, that is

$\nabla _\theta \Phi (0,0)=0$

, that is

![]() $\Phi (0,\theta )$

reaches its minimum

$\Phi (0,\theta )$

reaches its minimum

![]() $0$

at

$0$

at

![]() $\theta _*=0.$

Since

$\theta _*=0.$

Since

![]() $\Phi (\xi, \cdot )$

is

$\Phi (\xi, \cdot )$

is

![]() $2\lambda$

strongly convex for any

$2\lambda$

strongly convex for any

![]() $\xi \in B$

, we have that

$\xi \in B$

, we have that

Assumption 3.1 implies that,

and

where

![]() $C_0=\left \|\nabla _\xi \Phi (0,0) \right \|.$

Therefore, we have

$C_0=\left \|\nabla _\xi \Phi (0,0) \right \|.$

Therefore, we have

![]() $\Phi (\xi, \theta )\ge -L-C_0- L\left \|\theta \right \|+\lambda \left \|\theta \right \|^2,$

which is bounded below by

$\Phi (\xi, \theta )\ge -L-C_0- L\left \|\theta \right \|+\lambda \left \|\theta \right \|^2,$

which is bounded below by

![]() $-L-C_0-\frac {L^2}{4\lambda }.$

Thus,

$-L-C_0-\frac {L^2}{4\lambda }.$

Thus,

![]() $F$

is bounded below by the same value. We can always choose some

$F$

is bounded below by the same value. We can always choose some

![]() $R_0=R_0(L,\lambda, C_0)$

, such that for

$R_0=R_0(L,\lambda, C_0)$

, such that for

![]() $\left \|\theta \right \|\ge R_0,$

$\left \|\theta \right \|\ge R_0,$

![]() $ \Phi (\xi, \theta )\ge C_0+L$

. Moreover, we already have

$ \Phi (\xi, \theta )\ge C_0+L$

. Moreover, we already have

![]() $ \Phi (\xi, 0)\le L+C_0.$

Thus,

$ \Phi (\xi, 0)\le L+C_0.$

Thus,

![]() $F(\theta ) \ge C_0+L$

when

$F(\theta ) \ge C_0+L$

when

![]() $\left \|\theta \right \|\ge R_0$

and

$\left \|\theta \right \|\ge R_0$

and

![]() $F(0) \le C_0+L$

. Hence,

$F(0) \le C_0+L$

. Hence,

![]() $F$

attains its minimum on the

$F$

attains its minimum on the

![]() $R_0$

-ball

$R_0$

-ball

![]() $\{\theta \in X: \left \|\theta \right \|\le R_0\}.$

$\{\theta \in X: \left \|\theta \right \|\le R_0\}.$

Before stating the main theorem of this section – the convergence of the McKean–Vlasov SDE to the minimiser of

![]() $F$

– we need to introduce additional assumptions.

$F$

– we need to introduce additional assumptions.

Assumption 5.2 (Neumann Boundary Condition). Let

![]() $\Phi (\cdot, \theta )$

satisfy a Neumann boundary condition on

$\Phi (\cdot, \theta )$

satisfy a Neumann boundary condition on

![]() $\partial B,$

$\partial B,$

for any

![]() $\theta \in X.$

$\theta \in X.$

For a general function

![]() $\Phi$

defined on

$\Phi$

defined on

![]() $B$

, this assumption can be satisfied by smoothly extending

$B$

, this assumption can be satisfied by smoothly extending