Crossref Citations

This article has been cited by the following publications. This list is generated based on data provided by Crossref.

Liu, Chunlin

and

Rodrigues, Fagner B.

2024.

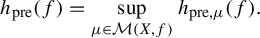

Metric Mean Dimension via Preimage Structures.

Journal of Statistical Physics,

Vol. 191,

Issue. 2,