1. Introduction

Engineering design extends beyond technical expertise, encompassing essential skills such as critical thinking, problem-solving abilities, collaborative teamwork and effective communication among team members. Engineering graduates are expected to master these skills and integrate analytical aptitude to achieve professional success (Baukal et al., 2022; Watty, 2017).

To meet these needs, higher education institutions have incorporated project-based learning (PBL) in their engineering curricula, aligning with Accreditation Board for Engineering and Technology (ABET) standards (Campbell & Colbeck, 1998). PBL allows students to take on active roles in the learning process by engaging in hands-on engineering activities such as building systems, components, or procedures; carrying out tests and experiments; and actively participating in a team to develop practical solutions (Chandrasekaran et al., 2013; Kuppuswamy & Mhakure, 2020; Ngereja et al., 2020; Shekar, 2014). Design courses at various stages of the engineering program apply PBL to familiarize students with the essential components of the design process (Agogino et al., 1992; Dally & Zhang, 1993; Froyd & Ohland, 2005; Gainen, 1995). PBL equips students with the foundational engineering principles necessary to demonstrate critical thinking, and it enhances their engagement, motivation, depth of understanding, knowledge retention, self-perception and confidence in engineering design (Doppelt, 2003; Zhou et al., 2017). PBL also provides students with the opportunity to investigate technical issues and grasp the interconnections between science and engineering ideas (Savage et al., 2009). Nonetheless, PBL can demand substantial resources in terms of time, materials and space. It presents assessment challenges due to its multidimensional nature and the subjectivity involved in grading. PBL also tends to prioritize depth over breadth of content, and student participation levels may vary (Mihić & Završki, 2017; Saptono, 2003).

The assessment of the design process is critical to ensure the development and implementation of design solutions that meet specified requirements, with effective communication of design choices and continuous improvement for optimal outcomes. Assessments of student designs frequently prioritize the final product, with limited attention given to the intricacies of the design process (Shively et al., 2018). However, while assessing the prototype and its individual components is important, assessing the process used to arrive at the final prototype is equally necessary (Gericke et al., 2022). In the context of PBL, it is essential for engineering students to adeptly communicate the design process and decisions (Senescu & Haymaker, 2013). This necessitates the development of skills that enable the use of artifacts for effective communication within and across teams in the design process (Dym et al., 2005; Senescu & Haymaker, 2013; Stockman et al., 2017).

Design artifacts serve as tangible evidence of a student’s capability for in-depth justification and meticulous documentation of the design process, systematically organizing thoughts, design decisions and arguments. As an essential part of the engineering design process, they serve various purposes such as technical documentation, information dissemination, planning and coordination, education, self-explanation, facilitation of decision-making and communication of ideas (Stockman et al., 2017). Evaluation of design artifacts is crucial to present a comprehensive assessment of students’ design thinking in the design process. However, the methods for assessing the quality of design artifacts, accessible to both designers and scholars, are not always straightforward or indicative of design proficiency (Goldstein et al., 2016).

Rubrics have proved to be valuable tools for measuring the quality of a design solution, providing flexibility and adaptability to accommodate various criteria. They also enable the establishment of common criteria across different design artifacts (Atman et al., 2007). The incorporation of rubrics in engineering education can potentially enhance learning experiences, minimize grading variations and provide increased opportunities for constructive feedback to students (Pang et al., 2022). However, limited knowledge exists regarding the use of rubrics for assessing design skills. This research is therefore important for developing tools that faculty and students can use to facilitate learning and the assessment of the engineering design process.

In PBL, insights obtained from assessing engineering design skills enable the thorough evaluation of student and team performance (Bailey & Szabo, 2006), identifying strengths and weaknesses as students progress through engineering programs. In this study, we assess and compare the design skills of first-year and third-year engineering student teams within the context of PBL. Specific attention was given to key design steps, which were adapted from a content analysis of first-year engineering students’ design texts (Moore et al., 1995) and have been used as codes in previous studies (Atman et al., 1999, 2005, 2007).

2. Relevant literature

2.1. Engineering design assessment

Engineering design skill involves the application of scientific and mathematical principles to create practical and efficient solutions (Mourtos, 2012). Assessment of engineering design skills often involves evaluating technical proficiency, innovation, feasibility of implementation, functionality, practical application of knowledge, adaptation to new tools and techniques, and adherence to engineering principles (Fortier et al., 2012). Assessment of the engineering design process, though challenging, is integral to enhancing the overall learning experience, as it offers valuable opportunities to understand what students know (Bailey & Szabo, 2006).

Assessment methodologies have included surveys, self-reported questionnaires, interviews, focus groups, tests, observation, self-reflection journals, verbal protocols and artifacts (Wind et al., 2019). Surveys, stakeholder feedback and peer reviews are indirect assessment techniques that may not specifically assess the design process knowledge embedded in reports, diaries or design-step logs (Angel et al., 2022). Written responses and design-step logs provide a more reliable and objective representation of students’ design knowledge, and they are less time-consuming and less susceptible to bias than other assessment methods such as surveys and interviews (Schubert et al., 2012).

Atman et al. (1999, 2005) conducted a verbal protocol assessment of first-year and fourth-year students and found that the seniors gathered more information, considered more alternative solutions and easily transitioned between design steps, producing higher-quality designs. Ferdiana (2020) implemented a triangular assessment approach for capstone projects by combining direct assessment of the design project product and the logbook and indirect assessment through surveys that measured students’ feedback. The final product quality was assessed using a student outcome rubric, while the logbooks evaluated the quality of the process. The surveys gauged the progress made in design thinking by the students during their participation in the design activity.

Portfolio reviews gauge the depth and breadth of students’ engineering design skills, presenting a holistic view of the development of design skills and the ability to integrate knowledge. Design logs document the application of design principles, generated ideas or arguments, assumptions, methods, progress and challenges experienced as students carry out a project or design activity. Erradi (2012) found that design logs can showcase students’ improved perceptions and can be used to assess the overall outcome of a course. In their study of engineering entrepreneurship education, Purzer and Fila (2016) found that project artifacts and deliverables such as oral presentations, design reports, design prototypes and conceptual drawings were common forms of design skill assessments.

Expert reviews play a pivotal role in providing critical evaluations of design skills, drawing on the expertise and experience of professionals in the field. Qualitative analysis involves the in-depth examination of design processes, methodologies and outcomes, whereas quantitative metrics enable the measurement of design performance based on specific parameters. A commonly used approach is the use of rubrics that outline specific criteria for evaluating design projects. These rubrics provide a structured way to assess and provide feedback on the students’ design tasks across specific design stages (Frank & Strong, 2010).

2.2. Rubrics for assessing engineering design skill

Assessment of undergraduate engineering design activities can be carried out through both direct and indirect methods (Angel et al., 2022). Direct assessment is facilitated using rubrics, which are tailored to evaluate students’ performance against predefined criteria. Rubrics have been widely used in the assessment of engineering design processes and activities across various groups. The implementation of rubrics in the classroom is a valuable tool that supports and improves student learning (Brookhart, 2013).

Rubrics consist of checklists and rating scales, which can be either analytical or holistic (Muhammad et al., 2018). When rubrics are used, assessment is more consistent and leaves less room for subjectivity. Rubrics clearly describe the expected level of performance from students in assignments, examinations, laboratory activities, internships, research papers, portfolios, group projects and project presentations based on program learning outcomes (Wolf & Stevens, 2007). Rubrics are a valuable tool for evaluating student STEM performance, as they provide clarity on learning objectives, support instructional design and delivery, ensure fair and consistent assessment and foster student improvement in learning and teamwork skills (Pang et al., 2022).

Assessing student learning using rubrics is essential for both formative and summative evaluation, providing direction and motivation for ongoing educational growth (Kennedy & Shiel, 2022). In engineering design courses, students benefit when they receive feedback from faculty on their engineering design activities and progress. Rubrics not only help to reduce variation in the scoring of engineering design work but also help to justify grades earned on design projects and communicate design project goals (Jin et al., 2015). Watson and Barrella (2017) developed a rubric to evaluate the sustainability design of 40 capstone projects completed by civil and environmental engineering seniors.

Rubrics have been explored as appropriate evaluation instruments for assessing the design process skills of students in PBL (Guo et al., 2020). Faust et al. (2012) applied rubric-based assessment to evaluate student work based on their presentations and written reports. The use of rubrics to support feedback and design process learning could provide benefits to students as they progress through engineering programs and into the engineering profession (Platanitis & Pop-Iliev, 2007). See Table 2 for an excerpt of the rubric used in this study.

3. Research aims and significance

The engineering design process investigated in this study encompasses key stages, including Problem Definition, Gathering Information, Generating Ideas, Modeling, Feasibility Analysis, Evaluation, Decision-Making, Communication, Prototyping, Testing and Collaboration. This study aims to assess and compare the engineering design process skills of first-year students with those of third-year students in an undergraduate engineering program. The rubric outlined in our previous work (Ejichukwu, 2023; Ejichukwu et al., 2022) will be used to assess and compare the engineering design skills of the two student groups in PBL courses. The overarching goal is to facilitate the continuous development of students’ engineering design skills by identifying areas of improvement as they progress in the engineering program. This research study will address the following research question (RQ).

RQ: What differences exist in the design skills of first-year students compared with the design skills of third-year students when assessed through design artifacts?

The results of this study will provide an understanding of how the design skills of first-year students differ from those of third-year students when assessed from design artifacts. The insights will be useful in enhancing students’ engineering design skill development as they advance through the engineering design education pathway.

4. Methodology

4.1. Study participants

The participants in this study were first-year and third-year students who registered in two distinct PBL courses in the fall 2019 semester. The first-year engineering students starting their journey toward a higher education and engineering specialization came from a variety of backgrounds and skill levels. Most of them stated that they had never had any previous engineering design experience. They exhibited a strong excitement to begin developing as engineers.

The third-year engineering students had advanced past foundational courses and were fully engaged in their specialized coursework and real-world applications within their chosen disciplines. They had previous experience working on challenging projects and had gained engineering work experience, and with it some design expertise, through co-ops and internships. This strengthened their critical thinking, problem-solving and project management abilities. The participants were grouped into teams of no more than four students. There were 8 third-year student teams and 25 first-year student teams, for a total of 33 student teams.

4.2. PBL courses

The Introduction to Engineering Design course (ENGR 100) is a foundational course for all incoming engineering students. It offers an overview of the engineering profession, engineering design and programming using MATLAB. The course emphasizes the design-build-test-learn cycle through a combination of lectures, hands-on laboratory activities and a Mars rover design team project. This course provides first-year students with a well-rounded learning experience. Students work in teams and complete preliminary and critical design reviews, PowerPoint presentations, written reports and a final demonstration of their fully functional prototypes.

The Manufacturing Processes I course (IMSE 382) delves into the fundamentals and principles of manufacturing processes. The course covers a range of topics, including the advantages and limitations of manufacturing processes, their impact on the mechanical and microstructural properties of engineering materials and various manufacturing techniques such as casting, heat treatments, bulk deformation processes, sheet metal working processes, processing of polymers and composites, surfaces and coatings, powder metallurgy and machining. Students learn about design for manufacturing, cost considerations and product quality measurement and have the opportunity to apply their learning through the STEM toy design project.

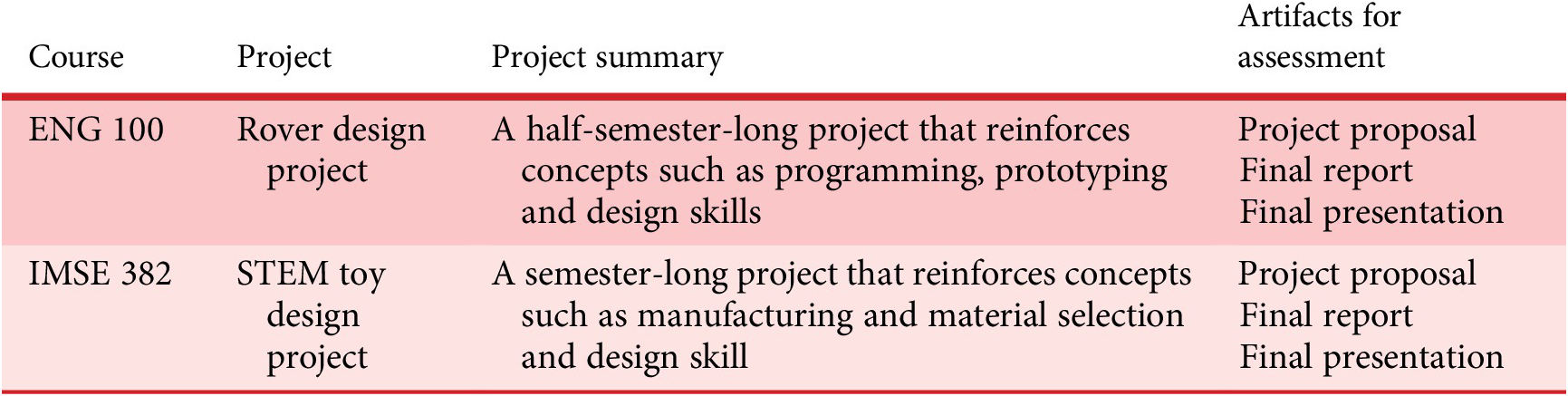

At the end of both PBL courses, student teams submit three artifacts for assessment: a written proposal, a presentation and a final report. A total of 99 project artifacts were collected from 8 third-year teams and 25 first-year teams. See Table 1 for a summary of each course, the project descriptions and a list of artifacts included in this research study.

Table 1. Design project and artifact summaries.

Table 2. Adapted rubric and Atman et al. (2007) coding scheme.

Although the course content, outcomes and duration of both course projects vary, this study focused on how the students in the different PBL courses applied their design skills to meet the set technical requirements of the projects as shown in the artifacts. The design artifacts were required to be clear and to effectively showcase the engineering design process, key decisions, material selections and all considerations made throughout the design process.

4.2.1. Project overview

The first-year engineering project required students to design a new wheel for the NASA Mars rover. The following design requirements were stated: the vehicle must not exceed 3 lb, the wheels must not be made from rubber, the vehicle must fit within a 3′ × 5′ perimeter, the wheels must be designed from two materials, the vehicle must travel at a speed greater than 0.5 m/s, and the cost must be within a $60 budget. The student teams were instructed to first identify the need and purpose of their design, followed by Gathering Information and Generating Ideas. Before deciding on a final design solution, students engaged in a group brainstorming session to generate conceptual ideas and solutions to the design challenge. From the multiple ideas generated, they had to select the most feasible design idea and develop a working prototype. Figures 1, 2 and 3 show samples from the Generating Ideas, Modeling and Testing activity stages for one group in the ENGR 100 course.

Figure 1. Samples of initial idea generation of first-year students.

Figure 2. Sample of final design selection and modeling of prototype by first-year students.

Figure 3. Finished prototype testing by first-year students.

In the Manufacturing Processes I course, students were tasked with designing and manufacturing a STEM toy to encourage children’s engagement in STEM. The toy was required to showcase a specific STEM concept, and students had to consult with the instructor to develop a manufacturing plan. The chosen STEM concept was communicated through annotated sketches, and students received the necessary machine-shop training to manufacture and test the toy. The third-year student teams generated several ideas for STEM toy designs, including the pullback windup car, Newton’s cradle, geared fidget cube, blacksmith automata, angular velocity spinning top, trammel of Archimedes and magic bearing. The materials and manufacturing processes selected depended on each STEM idea for the toy. For the pullback windup car, the toy was manufactured using machined metallic rods and 3D-printed components. The design process and final prototype for one team in the Manufacturing Processes I course are shown in Figures 4 and 5.

Figure 4. Initial idea generation for the pullback windup car of third-year students.

Figure 5. Final design of a prototype for the pullback windup car by third-year students.

4.3. Data collection

The data for analysis were obtained from a total of 99 artifacts submitted by 25 first-year student teams and 8 third-year student teams. The artifacts collected for use with the assessment rubric are detailed below.

• Project proposal document – The project proposal is a written document submitted at the start of the project. It outlines the project name and a comprehensive description of the expected outputs from each team. It also states the objectives and goals of each design team. The proposal encompasses the budget summary, project scope, design stages, methodology, project specifics and timelines.

• Project presentation document – The project teams presented their completed projects through a 15-minute oral presentation, which was attended by both faculty members and fellow students. The presentation was based on a PowerPoint document that included a title slide, an outline of the presentation, an introduction and motivation, detailed information on the solution and experimental results. The students were expected to demonstrate the tests they carried out and provide a comprehensive overview of their design process, results and conclusions. Additionally, they were expected to provide recommendations for future work. The presentation was meant to effectively communicate the team’s design process, decisions made and experimental results.

• Final report document – The final report is a comprehensive document that provides a complete account of the design and manufacturing process. It includes the project name and a detailed description of the project objectives and goals, along with the scope and budget. The report also highlights the key challenges faced during the project and the lessons learned, as well as the milestones achieved and successes of the team. This document serves as an important record of the team’s design and manufacturing experience.

4.4. Rubric for assessing design skill from design artifacts

The assessment rubric utilized for artifacts in this study builds upon our previous work (Ejichukwu et al., 2022). The rubric adapted an existing design activity coding scheme by Atman et al. (2007) that identified ten distinct design activities organized into three stages. The decision to utilize this rubric was driven by the recognition that design skills in PBL can be assessed effectively through the identified design activities. The design activities serve as the criteria to be evaluated using assigned weights that represent the extent to which the students showcased their design process skills in communicating solutions to the design problem through artifacts. Furthermore, this choice aligns seamlessly with our specific research objectives of evaluating students’ design skills directly from design artifacts. The assessment rubric specifies the skills that students should master to achieve the intended results. It further classifies them according to different skill levels and learning goals (Vargas Hernandez & Davila Rangel, 2011).

The design activity coding scheme was modified into a rubric. Excerpts from the design activity coding scheme of Atman et al. (2007) and how it was modified into a rubric are shown in Table 2.

To prevent overlap, the rubric levels were defined to be mutually exclusive. The weighted scores were assigned on a three-point scale: 0 (below proficient), 1 (proficient) and 2 (above proficient). A below-proficient score indicates a limited understanding of the content, with significant inaccuracies, material that lacks clarity and a struggle to communicate ideas. “Proficient” presents basic knowledge of the design skills but lacks depth, communicates ideas adequately and may contain minor errors. “Above proficient” showcases a solid understanding of required activities, incorporating relevant theories and concepts, while communicating ideas effectively. For more information on the detailed rubric, see Appendix A.
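The three-level scale described above can be sketched as a small data structure. This is only an illustration, not the authors' instrument: the activity list is assumed from the nine activity names that appear in the results discussion, the function name `score_artifact` is hypothetical, and the example scores are invented. The full rubric is in Appendix A.

```python
from enum import IntEnum


class Level(IntEnum):
    """Performance levels named in the text; values are the rubric scores."""
    BELOW_PROFICIENT = 0
    PROFICIENT = 1
    ABOVE_PROFICIENT = 2


# Assumption: activity names taken from the results discussion.
ACTIVITIES = [
    "Problem Definition", "Gathering Information", "Generating Ideas",
    "Modeling", "Feasibility Analysis", "Evaluation", "Decision-Making",
    "Communication and Prototyping", "Testing and Collaboration",
]


def score_artifact(scores: dict[str, int]) -> dict[str, Level]:
    """Check that every activity has a score of 0, 1 or 2 and name its level."""
    missing = [a for a in ACTIVITIES if a not in scores]
    if missing:
        raise ValueError(f"unscored activities: {missing}")
    # Level(...) raises ValueError for anything outside 0, 1, 2, which
    # keeps the levels mutually exclusive.
    return {a: Level(scores[a]) for a in ACTIVITIES}


# Hypothetical scores for one team's proposal (not data from the study).
example = dict.fromkeys(ACTIVITIES, 1)
example["Generating Ideas"] = 2
levels = score_artifact(example)
print(levels["Generating Ideas"].name)  # ABOVE_PROFICIENT
```

Using an integer-valued enumeration keeps the scores usable for later rank-based statistics while rejecting any value outside the three defined levels.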

The performance scores assigned to student team artifacts using the rubric were recorded for analysis. The design process and project deliverables were compared across the two student groups. To address potential researcher bias, we implemented a rigorous and systematic approach. This included collaborative discussions among the research team to reach consensus on necessary adaptations, pilot testing on a small sample of student artifacts to confirm reliability and validity, and ensuring inter-rater reliability in our design assessment. These measures were implemented to enhance the objectivity, validity and reliability of our study’s design evaluation framework.

4.5. Data analysis

Our research aims to compare the design skills of first-year and third-year student teams as demonstrated through their submitted design artifacts. A total of 33 student teams submitted project artifacts comprising project proposals, presentations and final reports. There were eight project teams of third-year students and twenty-five project teams of first-year students.

During the initial scoring phase, the researchers critically reviewed the written documents to identify elements of each design activity in the various design stages. The score to be assigned was based on the extent to which the design skills and the design stages were present in the work as guided by the rubric. The artifacts of all the teams for all design stages were scored and then statistically analyzed.

The null hypothesis states that there is no difference in the design skill performance of the two student groups in all stages of the engineering design process. The null hypothesis implies that any difference in design skills between the two groups is not significant and can be attributed to chance or random variation. The alternative hypothesis is that third-year engineering students would show superior design skills compared with first-year students in all stages of the engineering design process due to their prior exposure to the foundational skills of engineering design. The alternative hypothesis suggests that there is a significant difference that can be measured and attributed. To test this hypothesis, we analyzed the data using descriptive statistics and visualization techniques to gain a better understanding of the variables involved. Subsequently, we applied a Mann–Whitney nonparametric two-tailed test to determine any significant differences, with a significance level set at 0.050.

The Mann–Whitney nonparametric two-tailed test was conducted to compare the design skills exhibited by first-year and third-year students. This test is suitable for analyzing independent groups with unequal sample sizes and does not require the data to follow a normal distribution. Because it operates on ranks rather than raw values, the Mann–Whitney test is robust to outliers and retains good statistical power when the data are not normally distributed. The test assumes that the two groups are randomly drawn from the population, each measurement corresponds to a different student, and the data measurement scale is of ordinal or continuous type (Nachar, 2008). The statistical analysis was performed using Prism software and supported by data visualizations for easier interpretation.
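To make the test concrete, the following is a minimal standard-library sketch of a two-sided Mann–Whitney U test using the tie-corrected normal approximation. It is not the procedure used in the study, which was run in Prism; statistical packages may use an exact method or a continuity correction, so their p-values can differ from this sketch. The function name `mann_whitney_u` and the scores are hypothetical.

```python
import math
from collections import Counter


def mann_whitney_u(x, y):
    """Two-sided Mann-Whitney U test, tie-corrected normal approximation.

    Returns (U statistic for x, p-value). No continuity correction.
    """
    n1, n2 = len(x), len(y)
    n = n1 + n2
    counts = Counter(list(x) + list(y))

    # Assign each distinct value its midrank (average rank among ties).
    rank_of, position = {}, 0
    for value in sorted(counts):
        t = counts[value]
        rank_of[value] = position + (t + 1) / 2
        position += t

    rank_sum_x = sum(rank_of[v] for v in x)
    u_x = rank_sum_x - n1 * (n1 + 1) / 2

    # Variance of U under the null hypothesis, corrected for ties.
    tie_term = sum(t**3 - t for t in counts.values())
    variance = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if variance == 0:  # every observation identical: no evidence either way
        return u_x, 1.0

    z = (u_x - n1 * n2 / 2) / math.sqrt(variance)
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return u_x, p_value


# Hypothetical rubric scores for 25 and 8 teams (not data from the study).
first_year = [0] * 12 + [1] * 10 + [2] * 3
third_year = [1] * 3 + [2] * 5
u, p = mann_whitney_u(first_year, third_year)
print(f"U = {u:.1f}, p = {p:.4f}, significant at 0.05: {p < 0.05}")
```

Because rubric scores take only three values, ties are unavoidable, which is why the sketch uses midranks and the tie-corrected variance.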

5. Results

The general purpose of the study was to investigate how the design process skills of first-year students compare with those of third-year students across design activities when assessed from design artifacts. Our previous work (Ejichukwu et al., 2022) showed that third-year students performed better than first-year students across all the design activities; however, further statistical analysis yielded nuanced findings. The Mann–Whitney nonparametric two-tailed test was used to compare the performance scores of first-year students with those of third-year students given the different sample sizes of the two student groups. The sections below summarize the means, standard deviations and p-values for each design activity in all three design artifacts assessed.

For both student groups, the standard deviation (Std Dev) is provided for each design activity, offering insights into the variability of scores within the first-year and third-year engineering student teams. A higher standard deviation suggests greater variability among individual team scores. A moderate variability indicates some spread in the scores, whereas a low variability indicates that the teams’ scores in each group are very close to each other. The p-value displays the statistical significance of the observed differences between the two groups for each design activity. It represents the probability of obtaining results at least as extreme as those observed if there is no actual difference between the two groups. A p-value below the conventional significance level of 0.05 indicates a statistically significant difference in scores.
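As a small illustration of how the mean and standard deviation summarize team scores, the sketch below uses Python's `statistics` module on invented data. The text does not state whether a sample or population standard deviation was reported; the sample form (n - 1 denominator) is assumed here.

```python
from statistics import mean, stdev

# Hypothetical rubric scores (0, 1 or 2), not data from the study.
group_a = [1, 1, 1, 1, 1, 1, 1, 1]  # every team received the same score
group_b = [0, 0, 1, 1, 1, 1, 2, 2]  # scores spread around the same mean

mean_a, sd_a = mean(group_a), stdev(group_a)
mean_b, sd_b = mean(group_b), stdev(group_b)

# Both groups have a mean of 1, but only group_b shows variability.
print(f"group_a: mean = {mean_a:.2f}, Std Dev = {sd_a:.2f}")
print(f"group_b: mean = {mean_b:.2f}, Std Dev = {sd_b:.2f}")
```

A standard deviation of exactly zero, as in `group_a`, arises only when every team in the group receives an identical score.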

5.1. Proposal document

The authors predicted that the rubric scores of third-year students would be higher than the scores of first-year students in all design activities across the design artifacts. The statistical results summarized below compare the rubric scores of the design activities of first-year engineering students (n = 25) and third-year engineering students (n = 8) within the proposal document.

Table 3 presents a comparison of the mean scores for various design activities in the proposal document between first-year and third-year students. Across most of the listed design activities – Problem Definition, Gathering Information, Modeling, Feasibility Analysis, Decision-Making, Communication and Prototyping, and Testing and Collaboration – there is no statistically significant difference between the two groups of students, as the p-values are higher than the conventional threshold for significance (0.05). However, significant differences emerge in the Generating Ideas and Evaluation activities. In Generating Ideas, first-year students have a higher mean score (1.64) compared with third-year students (1.0), with a very low p-value (0.0005), indicating a significant difference. For Evaluation, first-year students have a lower mean score (0.20) compared with third-year students (1.0), with an even lower p-value (0.0001), again indicating a significant difference. The standard deviation for third-year students in both these activities is 0.00, which indicates that every third-year team received the same score.

Table 3. Statistical summary of ENGR 100 and IMSE 382 performance scores for the proposal.

5.2. Presentation document

The authors predicted that the rubric scores of third-year students would be higher than the scores of first-year students in all design activities across the design artifacts. Table 4 provides a comparative analysis of design activities between first-year and third-year engineering students as shown in their presentation documents. The p-values indicate the statistical significance of the observed differences between the scores after assessments using the rubric.

Table 4. Statistical summary of ENG 100 and IMSE 386 performance scores for the final presentation.

Statistically significant differences exist in the evaluation of presentation documents. For Gathering Information, there is a notable difference between first-year students, with a mean score of 0.20, and third-year students, with a mean score of 1.38. The p-value of 0.0005 indicates that this difference is statistically significant, suggesting an enhancement in students’ ability to gather and apply information for design purposes as they advance in their studies. For Generating Ideas, first-year students had a mean score of 1.04, which is significantly lower than the 1.63 mean score for third-year students, as shown by a p-value of 0.0013. This implies a substantial improvement in idea generation proficiency and possibly an increase in student academic maturity. For Communication and Prototyping, the first-year students’ mean score of 1.12 is significantly lower than the 1.50 mean score for third-year students, with a p-value of 0.0400.

This discrepancy could reflect an evolved proficiency in communication and prototyping skills that comes with increased practice and exposure to more complex projects over time. For Problem Definition, the standard deviation of 0.28 indicates that the scores of student teams in this activity are relatively close to each other. In Modeling, Feasibility Analysis, Evaluation, Decision-Making and Testing and Collaboration, the scores of first-year student teams deviate moderately from the mean. The p-values in these activities exceed the conventional threshold of 0.05, indicating no statistically significant differences between the scores of first-year and third-year students.

5.3. Final report documents

The authors predicted that the rubric scores of third-year students would be higher than the scores of first-year students in all design activities across the design artifacts. Table 5 outlines the mean scores, variability and statistical significance of differences between first-year and third-year engineering students across their final reports.

Table 5. Statistical summary of ENG 100 and IMSE 386 performance scores for the final report.

The scores are compared using p-values to determine whether the observed differences are statistically significant. Notably, statistically significant differences exist in the evaluation of the final report documents. For Gathering Information, third-year students scored substantially higher (mean = 2.0) than first-year students (mean = 0.80). The p-value of 0.0001 is well below the standard threshold of 0.05, indicating strong statistical significance. This suggests that the curriculum effectively enhances information-gathering skills as student teams progress.

For Evaluation, there is a significant difference, with first-year students scoring higher (mean = 1.64) than third-year students (mean = 1.13). This is statistically significant, with a p-value of 0.0160. It suggests that evaluation skills may peak at some point during the educational process or that different student teams may have different abilities or approaches to evaluation. For Communication and Prototyping, there is a significant improvement in communication and prototyping skills from the first-year student teams (mean = 1.04) to the third-year student teams (mean = 1.38), with a p-value of 0.0360.
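Because the direction of the significant differences varies by activity and artifact, it can help to tabulate them. The sketch below transcribes the means and p-values quoted in Sections 5.1 to 5.3 and reports which group scored higher in each significant comparison. The `Comparison` type and the helper function are illustrative names, not part of the study.

```python
from collections import Counter
from typing import NamedTuple

ALPHA = 0.05  # conventional significance threshold used in the text


class Comparison(NamedTuple):
    artifact: str
    activity: str
    first_year_mean: float
    third_year_mean: float
    p_value: float


# Values transcribed from the text of Sections 5.1-5.3.
REPORTED = [
    Comparison("proposal", "Generating Ideas", 1.64, 1.00, 0.0005),
    Comparison("proposal", "Evaluation", 0.20, 1.00, 0.0001),
    Comparison("presentation", "Gathering Information", 0.20, 1.38, 0.0005),
    Comparison("presentation", "Generating Ideas", 1.04, 1.63, 0.0013),
    Comparison("presentation", "Communication and Prototyping", 1.12, 1.50, 0.0400),
    Comparison("final report", "Gathering Information", 0.80, 2.00, 0.0001),
    Comparison("final report", "Evaluation", 1.64, 1.13, 0.0160),
    Comparison("final report", "Communication and Prototyping", 1.04, 1.38, 0.0360),
]


def higher_group(c: Comparison) -> str:
    """Name the group with the higher mean score."""
    return "third-year" if c.third_year_mean > c.first_year_mean else "first-year"


significant = [c for c in REPORTED if c.p_value < ALPHA]
favoring = Counter(higher_group(c) for c in significant)

for c in significant:
    print(f"{c.artifact:13s} {c.activity:30s} higher: {higher_group(c)}")
print(dict(favoring))
```

In this tabulation, the first-year group scores higher in two of the eight significant comparisons (Generating Ideas in the proposal and Evaluation in the final report), so the prediction that third-year students would score higher in all activities is only partly supported.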

5.4. Comparisons across artifacts for different design stages

Both student groups demonstrated a clear understanding of the design task and an improvement in their knowledge as they progressed in the project. A comparison across design artifacts within each student group is presented below.

5.4.1. First-year students

In design, communication between people is greatly aided by design representations (Krishnakumar et al., Reference Krishnakumar, Letting, Johnson, Soria Zurita and Menold2023). Visual aids such as pictures, images, hand drawings and CAD drawings effectively showcase the improvements made between the initial design idea and the final design. Most first-year engineering students relied primarily on hand sketches without proper dimensioning in their proposals. Other comparisons were made across artifacts. Figure 6 compares the three artifacts for first-year students and illustrates the varying levels of student performance in key design activities. For Problem Definition and Evaluation, the proposal scores are notably higher, suggesting a strong initial grasp of these concepts when planning their projects.

Figure 6. Proposal, presentation and final report comparison for first-year students.

A significant observation from the plot is the low performance scores in Gathering Information across all artifacts, despite progress from the proposal to the presentation and final report phases. The lowest scores (< 1) occurred in the Gathering Information activity, indicating that first-year students lack proficiency in this area. A noticeable increase occurred in the Evaluation activity as students moved from the proposal to the presentation and final report documents. The mean scores for the proposal document are generally low, indicating room for development and a need for more assistance from faculty in this phase. In the presentation and final report documents, first-year students showed proficiency (≥ 1) in Generating Ideas, Modeling, Feasibility Analysis, Evaluation, Communication and Prototyping, and Testing and Collaboration.

Testing is necessary to ensure that the final design product satisfies the specified requirements. First-year student teams’ testing scores were below proficient at the proposal phase and improved in the presentation and report artifacts. Some first-year teams stated that they conducted multiple tests to ensure the feasibility of their design and evaluate its suitability to meet the identified need. However, further analysis of their artifacts often revealed incomplete information about experimental methods and results. Communication and Prototyping are core components of PBL, and early-stage prototyping works better when done in a more deliberate and organized manner (Petrakis et al., Reference Petrakis, Wodehouse and Hird2021). The lack of detail regarding experimental methods and results aligns with previous research that suggests first-year students may feel overwhelmed during the testing and evaluation phase.

Overall, the data plot shows that the student teams’ design process, as assessed from their artifacts, improved as they progressed from the proposal to the presentation and report documents. This could be due to the iterative nature of the work, with feedback and continuous effort leading to increased skill in the projects’ later stages. These results provide valuable insights into the design performance of first-year engineering students and point to aspects of design skill development that need improvement.

5.4.2. Third-year students

The third-year students utilized tools for comprehensive CAD design and material selection in the manufacturing process. Figure 7 illustrates the variation in average performance scores, reflecting the proficiency levels of third-year student teams across all three different design artifacts, each evaluated for distinct design activities.

Figure 7. Proposal, presentation and final report comparison for third-year students.

The performance of third-year students in various design activities fluctuated from the proposal document to the final report. There is a small change in Problem Definition across the three artifacts. Gathering Information had the lowest rating (< 1) in the proposal document and the highest rating of 2 (above proficient) in the final report. The presentation and final report show satisfactory proficiency (≥ 1) in Generating Ideas, Modeling, Feasibility Analysis, Evaluation, Decision-Making, Communication and Reporting, and Collaboration. Overall, the increase in scores from the proposal document to the presentation and the final report suggests a learning curve, with design proficiency improving as the student teams progress through the design activities. The educational implications of these findings, including the impact of teaching methods, curriculum design and the progression of skills and knowledge, are discussed in Section 6.

6. Discussion

The RQ in this study is stated below.

RQ: What differences exist in the design skills of first-year students compared with the design skills of third-year students when assessed through design artifacts?

To address the RQ, the study used an assessment rubric to compare how first-year and third-year engineering students applied engineering design skills, as evidenced in their design artifacts. Each design activity is scored as 0 (below proficient), 1 (proficient) or 2 (above proficient). The proficiency scores in specific design activities indicate the students’ design process skill in each design artifact. Nuanced variations were observed. The results of this study and implications for teaching and learning in the engineering design curriculum are discussed below.

6.1. Confirmed null hypothesis in design activities

Proposal documents: In the Problem Definition, Gathering Information, Modeling, Feasibility Analysis, Decision-Making, Communication and Prototyping, Testing and Collaboration activities, the p-values are greater than the conventional threshold for significance (usually 0.05). This indicates that there is no statistically significant difference between the scores of first-year and third-year students with respect to the proposal documents. Therefore, the null hypothesis that there is no difference between the groups cannot be rejected for these activities.

Presentation documents: In the Problem Definition, Modeling, Feasibility Analysis, Evaluation, Decision-Making and Testing and Collaboration activities, the null hypothesis, which states that there is no significant difference between first-year and third-year students’ design skills, cannot be rejected because there is insufficient evidence to conclude that the design skills of the two groups differ with respect to the presentation documents.

Report documents: In Problem Definition, Generating Ideas, Modeling, Feasibility Analysis, Decision-Making and Testing and Collaboration, the null hypothesis cannot be rejected, as there are no significant differences with respect to the report documents. The lack of statistical difference in these design activities could suggest either that the first-year engineering design course, which is required for engineering majors, provides a strong foundation in engineering design skills or that these skills do not develop substantially further through the course of study.

Although the overall trend suggests that the level of design activity competence does not differ significantly between the two student groups, it should be noted that the sample size for third-year students (n = 8) is considerably smaller than that for first-year students (n = 25), which could affect the reliability of the comparison. A small sample size reduces statistical power, making it harder to detect differences that do exist (Type II error), and it may contribute to an overestimation of the effect size. Other factors that could have contributed to the absence of statistical differences in other design activities include project complexity, project duration, prior design experience, team formation and motivation. To better develop these skills, educators in engineering design courses should emphasize these design activities to their students (Patel et al., Reference Patel, Murphy, Summers and Tahera2022).
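The effect of the unequal group sizes on statistical power can be illustrated with a rough calculation. The sketch below uses a normal approximation to the power of a two-sided, two-sample comparison; it is not the analysis performed in this study, the standardized effect size of 0.8 is a hypothetical value, and the approximation tends to overstate power for groups this small.

```python
from math import sqrt
from statistics import NormalDist


def approx_power(effect_size, n1, n2, alpha=0.05):
    """Normal-approximation power of a two-sided, two-sample comparison."""
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)
    # Standardized mean difference divided by its standard error.
    noncentrality = effect_size / sqrt(1 / n1 + 1 / n2)
    # Probability that the test statistic falls in either rejection tail.
    return z.cdf(noncentrality - z_crit) + z.cdf(-noncentrality - z_crit)


d = 0.8  # hypothetical standardized effect size, not estimated from the study
power_study = approx_power(d, 25, 8)      # group sizes reported in the study
power_balanced = approx_power(d, 25, 25)  # hypothetical balanced design

print(f"n = 25 vs 8:  power ~ {power_study:.2f}")
print(f"n = 25 vs 25: power ~ {power_balanced:.2f}")
```

Under these assumptions, even a large true difference would be detected only about half the time with groups of 25 and 8, compared with roughly four times in five for two groups of 25, which is consistent with the caution about Type II error above.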

6.2. Rejected null hypothesis in design activities

Proposal documents: A comparison of first- and third-year students’ performance in the proposal document showed significant differences in the Generating Ideas and Evaluation activities, namely, a peak in the Generating Ideas category for the first-year students’ proposals, with an average score of 1.64, compared with a lower average of 1.0 for the third-year students. This significant difference, supported by a p-value of 0.0005, suggests a stronger performance in idea generation by the first-year students. This finding agrees with Hu et al. (Reference Hu, Shealy and Milovanovic2021). Possible reasons for the first-year students scoring higher in Generating Ideas in their proposals may include their frequently approaching the subject from a new angle, free from the perceived correct standards or methods of more experienced learners. First-year students may be motivated to showcase their potential, excitement and ambition to succeed in a new academic environment. The differences may be attributed to the varying course objectives. Most notably, after discussion with the faculty teaching these courses, it was discovered that the instructors of the first-year course have prioritized creativity and ideation techniques as foundational skills, leading to a higher focus on generating a variety of ideas.

There is a statistically significant difference in the Evaluation skill of the two student groups. The first-year students have a mean score of 0.20, which is lower than that of the third-year students, who have a score of 1.0. Again, for this activity, third-year students, who likely possess a deeper understanding of the subject area and associated design constraints, tend to focus more on addressing the feasibility and practical utility of these concepts. This discrepancy may suggest that as students progress through their education, they develop a more critical eye for evaluating their ideas and work, possibly due to a more developed knowledge base.

Presentation documents: In the presentation artifact, there was a statistically significant difference between the two groups, supporting the alternative hypothesis. The third-year students outperformed first-year students in Gathering Information, Generating Ideas and Communication and Prototyping. This may be attributed to their increasing capacity to conduct research and efficiently integrate information as they move through their academic program. As students gain more knowledge and experience, they become more skilled at developing workable design concepts, applying more sophisticated approaches to problem-solving and critical thinking, and persuasively conveying their ideas.

The low rubric scores of first-year students in Gathering Information agree with the results of the survey conducted by Moazzen et al. (Reference Moazzen, Miller, Wild, Jackson and Hadwin2015), in which first-year engineering students reported that they consider gathering information challenging. Most students are eager to delve into other design activities while neglecting the important step of gathering information. The noticeably low scores in Gathering Information suggest that, in PBL, the students may not have received the necessary instructional emphasis on that aspect of the design process. The difference in Communication and Prototyping could reflect an evolved proficiency in these skills that comes with increased practice and exposure to more complex projects over time.

Report documents: In this design artifact, there were significant differences in Gathering Information, Evaluation and Communication and Prototyping. Third-year students demonstrated higher proficiency in Gathering Information and in Communication and Prototyping. This may imply that third-year students are more adept at obtaining information than their first-year counterparts, as evidenced by the much higher mean ratings they received. As students advance academically, their research and analytical skills gradually improve due to increased exposure, opportunities for practical application, previous feedback and the cumulative experience gained in using resources and identifying pertinent information over time. In Evaluation, however, third-year students scored noticeably lower than first-year students. This suggests that as students gain experience, they may focus more on the technical feasibility and logical practicability of their design without much focus on correctly presenting how the design meets set criteria and specifications. This agrees with the poor evaluation skill of third-year students in Boudier et al. (Reference Boudier, Sukhov, Netz, Le Masson and Weil2023), where they explored idea generation during the evaluation activity.

The low performance of first-year students in specific design activities such as Gathering Information aligns with past research. The higher scores of the third-year students in some design activity stages could be an indication of their advanced level of expertise and competence in these areas. The results of the study indicate specific areas of design education where significant development occurs as students progress through the design process. It also highlights areas where further educational strategies might be needed to enhance the learning outcomes. The third-year student teams’ decreased score in the evaluation design activity is a deviation from the previous research of Atman et al. (Reference Atman, Cardella, Turns and Adams2005).

The differences in design skills between first-year and third-year engineering students can be influenced by a variety of factors and result in the following implications for teaching practitioners:

1. Emphasis placed by faculty on specific design activities: If faculty members place more emphasis on certain design activities within the curriculum, students are likely to develop stronger skills in those areas. For example, if Problem Definition and Evaluation are consistently highlighted throughout courses and projects, students may perform better in these areas, as reflected in the assessment of their design artifacts. For students further along in the curriculum, weak areas that are not emphasized by engineering design instructors could be overlooked, resulting in lower design proficiency in those areas. Faculty members should emphasize all design activities to ensure continuous development of the students’ design skills as they advance in the program.

2. Varying course objectives: The different courses had different objectives, which could lead to variations in skill development and skills assessment. First-year courses might focus on fundamental concepts and basic skills, while third-year courses could emphasize complex design problems and advanced skills. This progression naturally leads to differences in skill sets, with third-year students expected to have more refined skills in certain areas due to exposure to more complex and in-depth course objectives.

3. Available resources: The availability of resources such as software tools, lab equipment and materials can significantly impact the ability of students to develop certain skills. Third-year students may have access to more advanced resources, allowing them to perform better in activities that require such resources. Conversely, if first-year students have limited access, this could hinder their ability to develop certain skills.

4. Team differences: Team dynamics and the composition of group projects can influence skill development. Students often learn from their peers, so being part of a team with members who have strong skills in certain areas can enhance an individual’s abilities. If third-year students engage in more team-based projects or if their teams have a diverse set of skills, they may exhibit stronger skills in communication and collaboration.

5. Student interests: Personal interest plays a significant role in skill development. Students who are interested in certain aspects of design are likely to invest more time and effort into developing those skills. For example, students with a keen interest in prototyping may seek out additional opportunities to refine this skill, leading to higher performance in that area. Similarly, first-year students might not yet have developed clear interests, which could result in a more generalized skill set.

Overall, the development of design skills in engineering education is a complex interplay of curriculum design, resource allocation, teaching emphasis, student team dynamics and individual student interests and motivations. These factors can lead to significant variations in skill levels across different student teams. This study’s comparison between first-year and third-year students is meaningful because it evaluates the potential progression of design skills throughout the engineering curriculum. The comparison aims to ensure that students develop the desired engineering design skills as they progress in the engineering program. As such, this study has the following implications for research:

1. The findings serve as valuable feedback for skill development, curriculum development and improvement. This research suggests that an assessment rubric can be used not only to identify engineering design process knowledge proficiency in design artifacts but can also be used to indicate where student teams are placing emphasis when communicating design process knowledge.

2. Results indicate that students emphasize skills that faculty emphasize in the classroom. This research has implications for how student teams demonstrate design process knowledge based on the design practices faculty emphasize in the course.

7. Conclusion

This study underscores the vital role of dedicated efforts from faculty in enhancing the pedagogical effectiveness of engineering design courses. The introduction of PBL as a tool to enhance engineering design education has been effective at reinforcing the application of engineering design skills toward complex problems. Assessing design skills, however, remains a multifaceted challenge. This study has shown that students’ proficiency in design knowledge can be effectively conveyed and evaluated through project artifacts, assessed by a carefully structured design activity rubric. The analysis of student work yielded interesting insights into the variations in statistical differences in design proficiencies of the two student groups. Nonetheless, a progression in design proficiency was evident across both groups, indicating a cumulative improvement in their ability to apply engineering design skills over time. These findings advocate for a concentrated effort on (1) the development of foundational design skills as students engage in design activities in PBL and (2) the reinforcement of early design activities to avoid a plateau in skill advancement as students learn about design constraints, feasibility and evaluation of concepts. Educators and student groups should consider integrating the rubric as a strategic instrument to evaluate and refine engineering design skills systematically.

8. Limitations of the study

The authors acknowledge potential limitations. Because the coauthors also served as instructors for the courses under investigation, there is potential subjectivity and reviewer bias in designing and using the rubric. This could influence the ways that the results were interpreted and described. To mitigate the impact of these limitations and increase the reliability of the study’s approach and results, the first author led the research study and reviewed findings with the second and third authors. In addition, another colleague who (1) had extensive background in the engineering design process, (2) was not a member of the research team and (3) was not an instructor of the courses included in the study evaluated the methods and results to reduce the impact of subjectivity.

This investigation acknowledges potential external influences such as previous design experience, motivation, team dynamics and individual learning approaches that might impact the results. Additionally, the respective course objectives and teaching strategies adopted by the different faculty members could sway the design activities that students prioritize. Effective leadership and team communication may lead to more adept problem-solving and task management. While these factors were not the focus of this study, they can affect the execution of design tasks within teams, which in turn can shape outcomes.

The study’s insights could be limited by the variable nature of the design activities, the time allocated for task completion and the number of participants. For example, first-year students engaged in shorter projects than third-year students. The smaller group size of third-year students (n = 8) versus first-year students (n = 25) could reduce the statistical power to detect existing differences (Type II error) or potentially exaggerate the perceived effect size. Moreover, a notable lack of response variability, particularly among third-year students, could skew the p-value calculations. Finally, this study did not evaluate oral presentations, which might have offered additional insights into the students’ understanding of the design process and their ability to adapt their viewpoints, thus reflecting their command of the subject and design skills.

9. Future research

Future research should address the limitations identified. Studies could methodically examine how learning outcomes are affected by past design expertise, personal motivation and the subtleties of team building and leadership. The dynamics of team communication and how different learning styles affect the performance of individuals as well as groups should receive special attention. A larger sample size and longer design tasks, as well as longitudinal designs that track progress over multiple semesters, would not only enhance the robustness of the findings but also allow for the exploration of the development of design skills over time. Researchers may examine the difficulties and the learning curves related to more advanced design projects completed by more senior students in contrast to those completed by beginners. This may provide insight into how students’ problem-solving and design-thinking abilities develop as they go through their academic careers.

APPENDIX A The adapted design assessment rubric.

Legend: Explanation of the scoring rubric for each of the design activities.

0 – no mention or evidence of the design activity, or mere naming of the design activity with no explanation.

1 – evidence that the design activity was completed, but no elaboration.

2 – evidence goes beyond the description of the design activity and elaborates on specific strategies or techniques used to complete the design stage.
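As a minimal sketch of how scores on this scale could be aggregated, the example below averages the rubric scores for one design activity and applies the mean ≥ 1 proficiency criterion used in the results. The function names and the example scores are hypothetical and are not taken from the study.

```python
from statistics import mean

# Score levels from the legend above.
LEGEND = {0: "below proficient", 1: "proficient", 2: "above proficient"}


def activity_mean(team_scores):
    """Average the rubric scores for one design activity in one artifact."""
    for score in team_scores:
        if score not in LEGEND:
            raise ValueError(f"rubric scores must be 0, 1 or 2, got {score!r}")
    return mean(team_scores)


def meets_proficiency(team_scores):
    """Apply the 'mean score of at least 1' criterion used in the results."""
    return activity_mean(team_scores) >= 1


# Hypothetical scores for five teams on a single design activity.
example_scores = [2, 1, 1, 0, 2]
print(activity_mean(example_scores), meets_proficiency(example_scores))
```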