Impact Statement

In Bayesian system identification for structures, simplistic probabilistic models are typically used to describe the discrepancies between measurement and model predictions, which are often defined as independent and identically distributed Gaussian random variables. This assumption can be unrealistic for real-world problems, potentially resulting in underestimation of the uncertainties and overconfident predictions. We demonstrate that in a real-world case study of a twin-girder steel bridge, the inclusion of correlation is decisively favored by the data. In the proposed approach, both the functional form of the probabilistic model and the posterior distribution over the uncertain parameters of the probabilistic model are inferred from the data. A novel efficient log-likelihood evaluation method is proposed to reduce the computational cost of the inference.

1. Introduction

1.1. Motivation

Structural health monitoring (SHM) methods based on probabilistic approaches have seen significant development in recent years (Farrar and Worden, Reference Farrar and Worden2012) and have been applied for system identification and damage detection for various types of structures including bridges (Behmanesh and Moaveni, Reference Behmanesh and Moaveni2014), rail (Lam et al., Reference Lam, Hu and Wong2014), offshore oil and gas installations (Brownjohn, Reference Brownjohn2007), offshore wind farms (Rogers, Reference Rogers2018) and other civil engineering structures (Chen, Reference Chen2018). The Bayesian system identification framework established by Beck and Katafygiotis (Reference Beck and Katafygiotis1998) uses measurements of structural responses obtained from sensors in combination with computational physics models to infer uncertain parameters, calibrate models, identify structural damage, and provide insight into the structural behavior (Huang et al., Reference Huang, Shao, Wu, Beck and Li2019). In Bayesian statistics the problem is cast as a parameter estimation and model selection problem, often referred to as system identification in the SHM literature (Katafygiotis et al., Reference Katafygiotis, Papadimitriou and Lam1998). Specifically, previous knowledge about the system parameters to be inferred is represented by statistical distributions and combined with measurements to infer the posterior parameter distribution. A key advantage of this approach is that it provides a rigorous framework for combining prior knowledge and data with a probabilistic description of the uncertainties to obtain a posterior distribution over nondirectly observed parameters of interest (the so-called latent variables) using directly observed responses. For example, the rotational stiffness of a support can be estimated based on measured deflections (Ching et al., Reference Ching, Muto and Beck2006; Lam et al., Reference Lam, Yang and Au2018).

In parallel with the probabilistic methods for SHM, sensor and monitoring technologies have seen significant progress in recent years. These technologies can provide higher accuracy and improved measurement capabilities, for example, by utilizing fiber optic strain sensors (Ye et al., Reference Ye, Su and Han2014; Barrias et al., Reference Barrias, Casas and Villalba2016). Fiber optic strain sensors provide measurements with high spatial and temporal resolution as large numbers of sensors with high sampling rates are used in the same structure. System identification is carried out under the assumption that there is sufficient information in the measurements so that the data can overrule the prior assumption on the latent variables. Therefore, utilizing the additional information contained in these measurements can potentially improve the accuracy of our predictions, reduce the uncertainty on the inferred system parameters, and lead to improved physical models that can more accurately capture the structural behavior. However, when using measurements from dense sensor layouts, such as fiber optic strain sensors, the discrepancies between model prediction and observations are expected to be dependent. This dependence has to be considered in the system identification to avoid inaccurate parameter estimation and overconfidence in the model predictions.

1.2. Problem statement

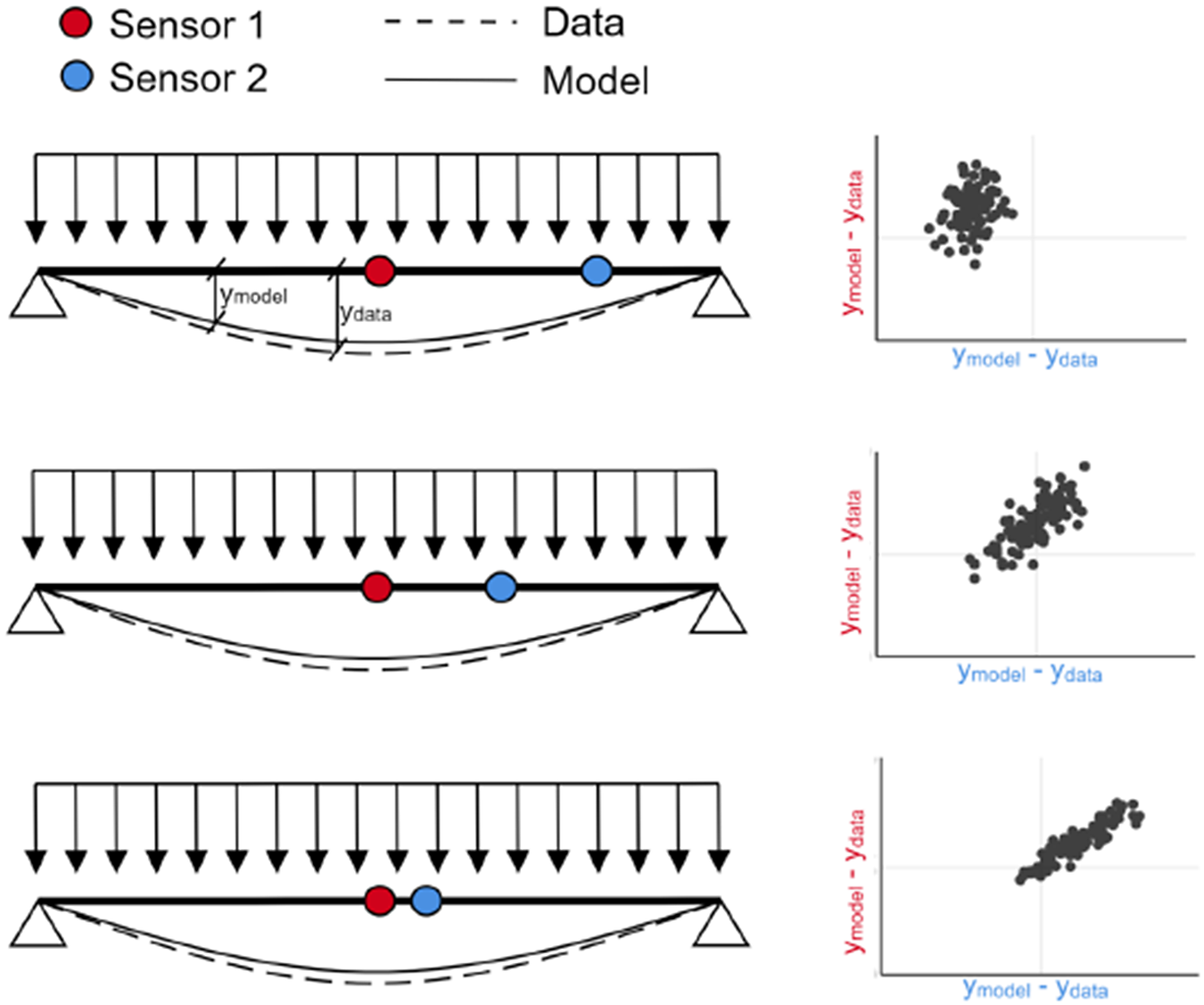

Current approaches in Bayesian inference for structures largely neglect the dependencies in the model prediction error. Instead, it is typically assumed that the prediction error is Gaussian white noise, that is, uncorrelated with zero mean (Lye et al., Reference Lye, Cicirello and Patelli2021). When using closely spaced measurements and model predictions, for example, in the case of time series with high sampling rates or spatial data from densely spaced sensors, dependencies may be present in the model prediction errors (Simoen et al., Reference Simoen, Papadimitriou, De Roeck and Lombaert1998). The strength of the correlation typically depends on the proximity of the measurements in time and the spacing of sensors on the structure. A fictitious example of a simply supported beam where the error between measurement and model prediction for two sensors is modeled as a bivariate Normal distribution, explicitly accounting for the spatial correlation of three different sets of measurements, is shown in Figure 1 for illustration purposes. Disregarding the spatial and temporal measurements correlation, by enforcing the assumption of independence can lead to large errors in the posterior distribution of the inferred parameters, as correlation has been shown to have an impact on the information content of measurements (Papadimitriou and Lombaert, Reference Papadimitriou and Lombaert2012), the maximum likelihood and maximum a posteriori estimates of the parameters of interest, and the posterior uncertainty (Simoen et al., Reference Simoen, Papadimitriou and Lombaert2013).

Figure 1. Illustration of the impact of correlation in the model prediction error for the fictitious case of a simply supported beam with two sensors.

To consider correlations in Bayesian system identification for structures poses a number of challenges for the modeler. An appropriate functional form of the prediction error correlation is not known a priori, and due to the prevalence of the independence assumption, there is limited information available on how to model it. Additionally, it is not known to what degree the correlation is problem-specific. Hence, to perform Bayesian system identification on real-world structures when spatial and/or temporal dependence are present, we identified the following open issues:

1. Appropriate models for the spatial and temporal correlations must be included in the probabilistic model that describes the uncertainties.

2. Bayesian inference must be performed in a computationally efficient manner when large datasets and combined spatial and temporal dependencies are considered.

1.3. Approach

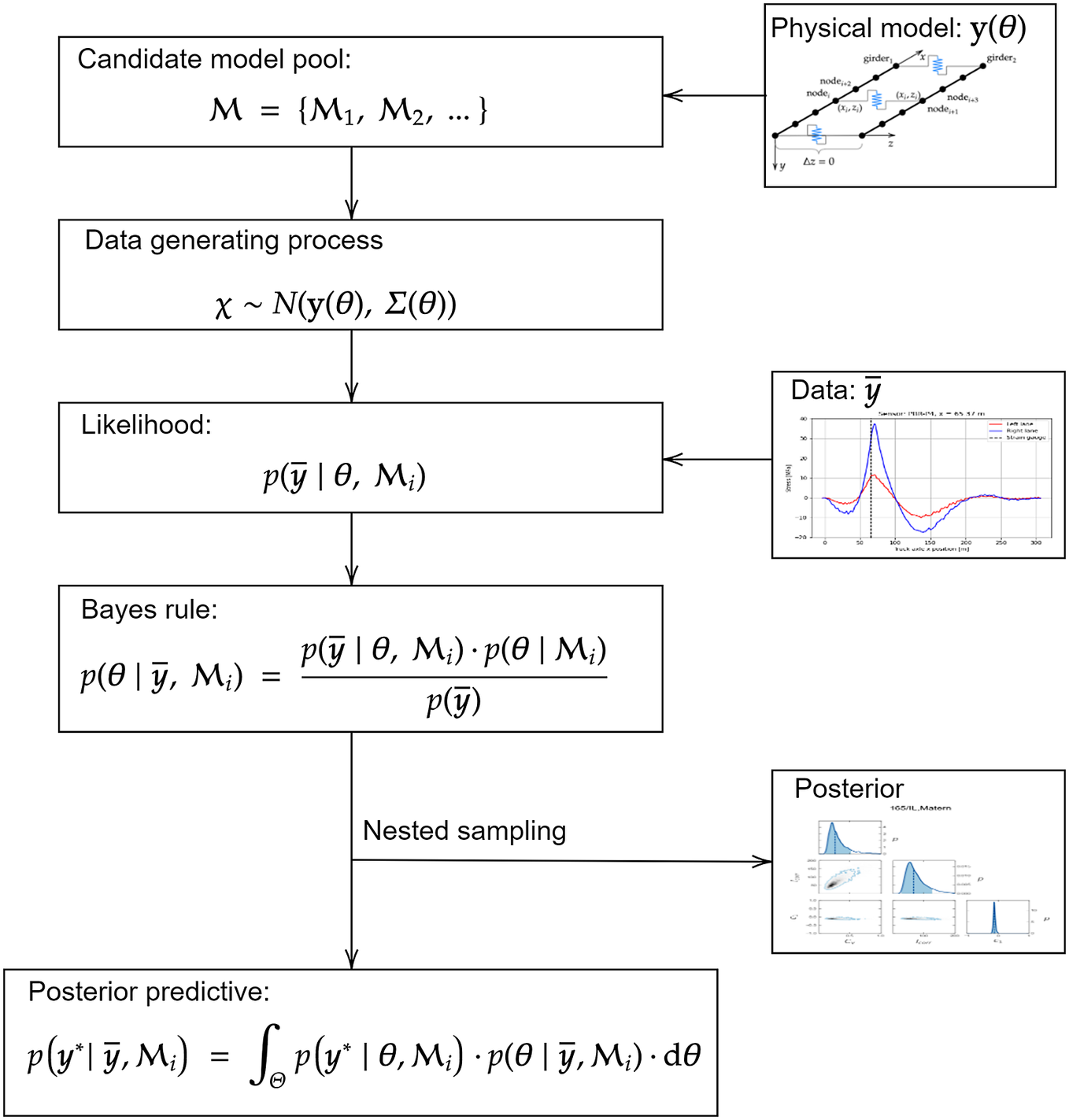

The approach proposed in this article to address the issues mentioned above can be summarized as follows. First, a mathematical model of the data-generating process is formulated. This model is composed of a physical model describing the response of the structure, and a probabilistic model describing the measurement and model prediction error including the spatial and temporal correlation. Both the measurement and model prediction error are taken as normally distributed, and the strength of the correlation is assumed to be dependent on the distance between measurements (in time and/or in space). This dependence is modeled by a set of kernel functions. A pool of candidate models is defined, with each model considering a different kernel function to describe the correlation in the physical model prediction error. Bayesian inference is performed to obtain the posterior distribution of physical and probabilistic model parameters based on the data. The posterior probability and Bayes factor are calculated for each candidate model, making it possible to evaluate how strongly a given model is supported relative to the other candidate models based on the data. The proposed approach is illustrated in Figure 2. More details on the individual building blocks are given in Section 3.

Figure 2. Overview of the Bayesian inference approach used in this work.

Second, a strategy is presented for performing system identification for relatively large datasets (

![]() $ N>{10}^2 $

for temporal dependencies and

$ N>{10}^2 $

for temporal dependencies and

![]() $ N>{10}^3 $

for combined spatial and temporal dependencies) by efficiently evaluating the log-likelihood and the evidence. We propose a procedure for exact and efficient log-likelihood calculation by (i) assuming separability of the spatial and temporal correlation (Genton, Reference Genton2007); (ii) exploiting the Markov property of the Exponential kernel (Marcotte and Allard, Reference Marcotte and Allard2018); and (iii) using the nested sampling strategy (Skilling, Reference Skilling2006) to reduce the computational cost of estimating the evidence under each model. The accuracy of the proposed approach is initially investigated in a case study using synthetic data, and subsequently, the feasibility of the approach for its use in real-world cases is demonstrated through a twin-girder steel road bridge case study. In the real-world use case, stress influence lines obtained from controlled load tests are used to estimate the posterior distribution of a set of uncertain, unobservable parameters. The accuracy and uncertainty of the posterior predictive stress distributions obtained from each candidate model are compared, to determine the benefit of using a larger dataset and considering dependencies.

$ N>{10}^3 $

for combined spatial and temporal dependencies) by efficiently evaluating the log-likelihood and the evidence. We propose a procedure for exact and efficient log-likelihood calculation by (i) assuming separability of the spatial and temporal correlation (Genton, Reference Genton2007); (ii) exploiting the Markov property of the Exponential kernel (Marcotte and Allard, Reference Marcotte and Allard2018); and (iii) using the nested sampling strategy (Skilling, Reference Skilling2006) to reduce the computational cost of estimating the evidence under each model. The accuracy of the proposed approach is initially investigated in a case study using synthetic data, and subsequently, the feasibility of the approach for its use in real-world cases is demonstrated through a twin-girder steel road bridge case study. In the real-world use case, stress influence lines obtained from controlled load tests are used to estimate the posterior distribution of a set of uncertain, unobservable parameters. The accuracy and uncertainty of the posterior predictive stress distributions obtained from each candidate model are compared, to determine the benefit of using a larger dataset and considering dependencies.

2. Previous work

In the Bayesian system identification literature, it is typically assumed that the prediction error is Gaussian white noise, that is, uncorrelated with zero mean (Mthembu et al., Reference Mthembu, Marwala, Friswell and Adhikari2011; Chiachío et al., Reference Chiachío, Chiachío, Saxena, Sankararaman, Rus and Goebel2015; Pasquier and Smith, Reference Pasquier and Smith2015; Astroza et al., Reference Astroza, Ebrahimian, Li and Conte2017). In some studies, for example, Goller and Schueller (Reference Goller and Schueller2011) and Ebrahimian et al. (Reference Ebrahimian, Astroza, Conte and Papadimitriou2018) the variance of the model prediction error is included in the vector of inferred parameters, however, dependencies are not considered. In other works, such as Simoen et al. (Reference Simoen, Roeck and Lombaert2015), Pasquier and Marcotte (Reference Pasquier and Marcotte2020), and Vereecken et al. (Reference Vereecken, Slobbe, Rózsás, Botte, Lombaert and Caspeele2022) the parameters that define the uncertainty (with or without considering dependencies) are estimated using a subset of the available data. This approach, however, results in the use of data for inferring nuisance parameters and may not be practical when limited data is available. Examples of inference of the uncertainty parameters can be found in applications outside of structural engineering, for example, in geostatistics (Diggle and Ribeiro, Reference Diggle and Ribeiro2002).

To the best of the authors knowledge, Simoen et al. (Reference Simoen, Papadimitriou and Lombaert2013) is the only work concerning model prediction error correlation in Bayesian system identification for structures and investigates the impact of considering dependencies in model prediction error in Bayesian system identification. The aforementioned study presents an approach with many similarities to the proposed one. Bayesian inference and model selection are applied to a pool of candidate models to infer the distribution of uncertain parameters and to determine the strength of the evidence in favor of each model. The physical and probabilistic parameters are inferred for a simple linear regression example as well as a reinforced concrete beam example using modal data. In both cases, the datasets are composed of synthetic observations polluted with correlated noise. Furthermore, the posterior distributions are assumed to be Gaussian, allowing for a computationally efficient asymptotic approximation to be utilized to obtain the posterior and evidence.

We focus on the feasibility of the approach in a practical application with real-world data, where the ground truth of the correlation structure and parameters are not known and the posterior and evidence are not approximated analytically. We instead utilize nested sampling to estimate the evidence, ensuring the applicability of the approach in cases where the Gaussian assumption for the posterior is not valid. Additionally, we address the issue of efficiently calculating the log-likelihood for large datasets with combined spatial and temporal dependencies under the assumption of separable space and time covariance.

3. Methods and tools

3.1. Continuous Bayes theorem

The Bayes theorem of conditional probability for continuous random variables can be written as (Gelman et al., Reference Gelman, Carlin, Stern, Dunson, Vehtari and Rubin2013):

$$ p(\boldsymbol{\theta} |\overline{\boldsymbol{y}},\mathcal{M})=\frac{p(\overline{\boldsymbol{y}}|\boldsymbol{\theta}, \mathcal{M})\cdot p(\boldsymbol{\theta} |\mathcal{M})}{\int_{\Theta}p(\overline{\boldsymbol{y}}|\boldsymbol{\theta}, \mathcal{M})\cdot p(\boldsymbol{\theta} |\mathcal{M})\cdot \mathrm{d}\boldsymbol{\theta }}, $$

$$ p(\boldsymbol{\theta} |\overline{\boldsymbol{y}},\mathcal{M})=\frac{p(\overline{\boldsymbol{y}}|\boldsymbol{\theta}, \mathcal{M})\cdot p(\boldsymbol{\theta} |\mathcal{M})}{\int_{\Theta}p(\overline{\boldsymbol{y}}|\boldsymbol{\theta}, \mathcal{M})\cdot p(\boldsymbol{\theta} |\mathcal{M})\cdot \mathrm{d}\boldsymbol{\theta }}, $$

where

![]() $ \boldsymbol{\theta} $

is a vector of uncertain parameters;

$ \boldsymbol{\theta} $

is a vector of uncertain parameters;

![]() $ \overline{\boldsymbol{y}} $

a vector of observations;

$ \overline{\boldsymbol{y}} $

a vector of observations;

![]() $ \mathcal{M} $

denotes the model;

$ \mathcal{M} $

denotes the model;

![]() $ p(\boldsymbol{\theta} |\overline{\boldsymbol{y}},\mathcal{M}) $

is the posterior distribution;

$ p(\boldsymbol{\theta} |\overline{\boldsymbol{y}},\mathcal{M}) $

is the posterior distribution;

![]() $ p(\overline{\boldsymbol{y}}|\boldsymbol{\theta}, \mathcal{M}) $

is the likelihood; and

$ p(\overline{\boldsymbol{y}}|\boldsymbol{\theta}, \mathcal{M}) $

is the likelihood; and

![]() $ p\left(\boldsymbol{\theta} |\mathcal{M}\right) $

is the prior.

$ p\left(\boldsymbol{\theta} |\mathcal{M}\right) $

is the prior.

It can be seen that

![]() $ p(\boldsymbol{\theta} |\overline{\boldsymbol{y}},\mathcal{M}) $

describes the posterior distribution of the model parameter set

$ p(\boldsymbol{\theta} |\overline{\boldsymbol{y}},\mathcal{M}) $

describes the posterior distribution of the model parameter set

![]() $ \boldsymbol{\theta} $

conditional on measurements

$ \boldsymbol{\theta} $

conditional on measurements

![]() $ \overline{\boldsymbol{y}} $

under model

$ \overline{\boldsymbol{y}} $

under model

![]() $ \mathcal{M} $

. The likelihood term gives the probability of observing

$ \mathcal{M} $

. The likelihood term gives the probability of observing

![]() $ \overline{\boldsymbol{y}} $

given parameters

$ \overline{\boldsymbol{y}} $

given parameters

![]() $ \boldsymbol{\theta} $

. Finally, the denominator on the right-hand side is known as the evidence, or marginal likelihood, and gives the likelihood of obtaining the measurements conditional on the model

$ \boldsymbol{\theta} $

. Finally, the denominator on the right-hand side is known as the evidence, or marginal likelihood, and gives the likelihood of obtaining the measurements conditional on the model

![]() $ \mathcal{M} $

. Obtaining the evidence is necessary for performing Bayesian model selection. In most practical applications this integral is high-dimensional (see e.g., Lye et al., Reference Lye, Cicirello and Patelli2021) and computationally intractable. Furthermore, the conventional Markov Chain Monte Carlo (MCMC) methods (Metropolis et al., Reference Metropolis, Rosenbluth, Rosenbluth, Teller and Teller1953; Hastings, Reference Hastings1970)—typically used in Bayesian inference—are primarily geared toward estimating the posterior, and do not compute the evidence. The nested sampling method, implemented in the Dynesty Python package (Speagle, Reference Speagle2019) is utilized to overcome this limitation. In this approach, the posterior is separated into nested slices of increasing likelihood. Weighted samples are generated from each slice and subsequently recombined to yield the posterior and evidence. Nested Sampling can deal effectively with moderate to high-dimensional problems (e.g., in problems with up to

$ \mathcal{M} $

. Obtaining the evidence is necessary for performing Bayesian model selection. In most practical applications this integral is high-dimensional (see e.g., Lye et al., Reference Lye, Cicirello and Patelli2021) and computationally intractable. Furthermore, the conventional Markov Chain Monte Carlo (MCMC) methods (Metropolis et al., Reference Metropolis, Rosenbluth, Rosenbluth, Teller and Teller1953; Hastings, Reference Hastings1970)—typically used in Bayesian inference—are primarily geared toward estimating the posterior, and do not compute the evidence. The nested sampling method, implemented in the Dynesty Python package (Speagle, Reference Speagle2019) is utilized to overcome this limitation. In this approach, the posterior is separated into nested slices of increasing likelihood. Weighted samples are generated from each slice and subsequently recombined to yield the posterior and evidence. Nested Sampling can deal effectively with moderate to high-dimensional problems (e.g., in problems with up to

![]() $ 100 $

parameters) and multi-modal posteriors. The reader is referred to Skilling (Reference Skilling2006) and Speagle (Reference Speagle2019) for detailed information on the nested sampling method.

$ 100 $

parameters) and multi-modal posteriors. The reader is referred to Skilling (Reference Skilling2006) and Speagle (Reference Speagle2019) for detailed information on the nested sampling method.

3.2. Bayesian model selection

The posterior distribution of the parameters for a given set of data is conditional on the model

![]() $ \mathcal{M} $

. Often, multiple models can be defined a priori to describe the observed behavior. To select the most plausible model, a pool of models

$ \mathcal{M} $

. Often, multiple models can be defined a priori to describe the observed behavior. To select the most plausible model, a pool of models

![]() $ \mathbf{\mathcal{M}} $

is defined and inference is performed conditional on each model

$ \mathbf{\mathcal{M}} $

is defined and inference is performed conditional on each model

![]() $ {\mathcal{M}}_i $

. The Bayes rule can then be applied to select the most likely model based on the evidence. In Hoeting et al. (Reference Hoeting, Madigan, Raftery and Volinsky1999), the following equation is provided for performing Bayesian model selection:

$ {\mathcal{M}}_i $

. The Bayes rule can then be applied to select the most likely model based on the evidence. In Hoeting et al. (Reference Hoeting, Madigan, Raftery and Volinsky1999), the following equation is provided for performing Bayesian model selection:

$$ p({\mathcal{M}}_i|\overline{\boldsymbol{y}})=\frac{p(\overline{\boldsymbol{y}}|{\mathcal{M}}_i)\cdot p({\mathcal{M}}_i)}{\sum \limits_{i=1}^Kp(\overline{\boldsymbol{y}}|{\mathcal{M}}_i)\cdot p({\mathcal{M}}_i)}, $$

$$ p({\mathcal{M}}_i|\overline{\boldsymbol{y}})=\frac{p(\overline{\boldsymbol{y}}|{\mathcal{M}}_i)\cdot p({\mathcal{M}}_i)}{\sum \limits_{i=1}^Kp(\overline{\boldsymbol{y}}|{\mathcal{M}}_i)\cdot p({\mathcal{M}}_i)}, $$

where

![]() $ p({\mathcal{M}}_i|\overline{\boldsymbol{y}}) $

is the posterior probability of model

$ p({\mathcal{M}}_i|\overline{\boldsymbol{y}}) $

is the posterior probability of model

![]() $ i $

;

$ i $

;

![]() $ p(\overline{\boldsymbol{y}}|{\mathcal{M}}_i) $

is the evidence under model

$ p(\overline{\boldsymbol{y}}|{\mathcal{M}}_i) $

is the evidence under model

![]() $ {\mathcal{M}}_i $

; and

$ {\mathcal{M}}_i $

; and

![]() $ p\left({\mathcal{M}}_i\right) $

is the prior probability of model

$ p\left({\mathcal{M}}_i\right) $

is the prior probability of model

![]() $ i $

.

$ i $

.

Given a pool of models, selecting the model that best fits the data can straightforwardly be achieved by selecting the model that minimizes a particular error metric between measurements and model outputs. However, simply choosing the model that best fits the data could potentially lead to overfitting: more complicated models would tend to fit the data best, making them the most likely in this approach even if the added complexity provides a negligible benefit. An advantage of Bayesian model selection is that it automatically enforces model parsimony, also known as Occam’s razor as discussed in MacKay (Reference MacKay2003) and Beck and Yuen (Reference Beck and Yuen2004), penalizing overly complex models. It should be emphasized that a high posterior model probability does not necessarily indicate that a particular model provides a good fit with the data, since the model probabilities are conditioned on the pool of candidate models

![]() $ \mathbf{\mathcal{M}} $

. Therefore, a high posterior model probability can only be interpreted as a particular model being more likely, relative to the other models that are considered. To aid the interpretation of the results, the relative plausibility of two models

$ \mathbf{\mathcal{M}} $

. Therefore, a high posterior model probability can only be interpreted as a particular model being more likely, relative to the other models that are considered. To aid the interpretation of the results, the relative plausibility of two models

![]() $ {\mathcal{M}}_1 $

and

$ {\mathcal{M}}_1 $

and

![]() $ {\mathcal{M}}_2 $

belonging to a class of models

$ {\mathcal{M}}_2 $

belonging to a class of models

![]() $ \mathbf{\mathcal{M}} $

can be expressed in terms of the Bayes factor:

$ \mathbf{\mathcal{M}} $

can be expressed in terms of the Bayes factor:

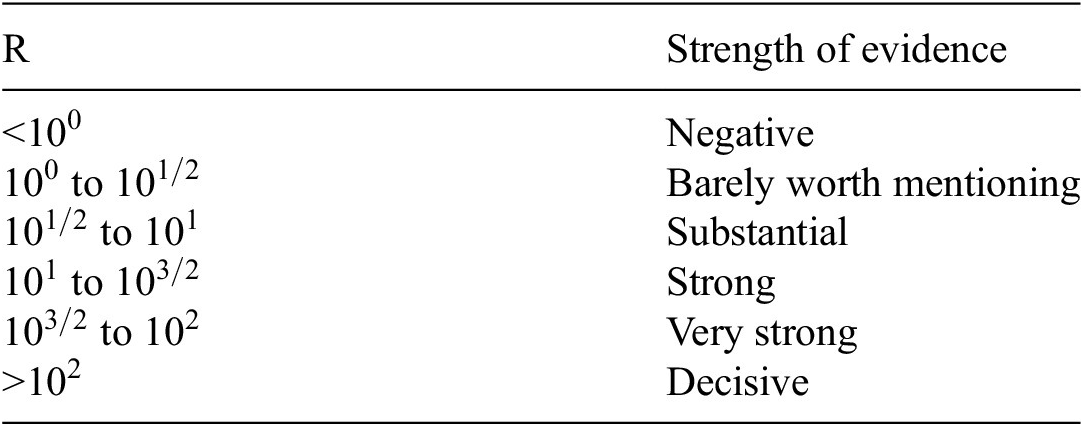

An advantage of using the Bayes factor over the posterior model probabilities for model selection is that it can be readily interpreted to indicate the support of one model over another, and thus offers a practical means of comparing different models. The interpretation of Jeffreys (Reference Jeffreys2003) is used in this work, given in Table 1.

Table 1. Interpretation of the Bayes factor from Jeffreys (Reference Jeffreys2003)

3.3. Posterior predictive distribution

Bayesian system identification can be used to obtain point estimates and posterior distributions of uncertain parameters using physical models and measurement data. However, directly using the point estimates of the inferred parameters to make predictions would result in underestimation of the uncertainty and overly confident predictions. This is due to the fact that using point estimates to make predictions disregards the uncertainty in the inferred parameters resulting from lack of data. In contrast, the posterior predictive is a distribution of possible future observations conditioned on past observations taking into account the combined uncertainty from all sources (e.g., modeling and measurement error and parameter uncertainty). The posterior predictive can be obtained as Gelman et al. (Reference Gelman, Carlin, Stern, Dunson, Vehtari and Rubin2013):

where

![]() $ {\boldsymbol{y}}^{\ast } $

is a vector of possible future observations.

$ {\boldsymbol{y}}^{\ast } $

is a vector of possible future observations.

3.4. Data-generating process

In order to perform system identification, the likelihood function is formulated based on the combination of a probabilistic model and a deterministic physical model. This coupled probabilistic-physical model is used to represent the process that is assumed to have generated the measurements, referred to as the data-generating process. Details on the deterministic physical model are provided in Section 5.2. The probabilistic model is used to represent the uncertainties that are inherent when using a model to describe a physical system. The following sources of uncertainty are considered:

-

• Measurement uncertainty

-

• Physical model uncertainty

Measurement uncertainty refers to the error between the measured response quantities and the true system response, caused by the combined influence of sensor errors and environmental noise (Kennedy and O’Hagan, Reference Kennedy and O’Hagan2001). Modeling uncertainty can contain several components and refers to the error between reality and the models used to represent it. These errors arise, for example, due to simplifications in the physical model and numerical approximations.

In this article, we consider data-generating processes based on a multiplicative and additive model prediction error, which will be explained in the following subsections. In the following expressions, Greek letters are used to represent random variables, while bold lower and upper-case letters denote vectors and matrices respectively.

3.4.1. Multiplicative model

The data-generating processes described by equation (5) are obtained by considering the discrepancies between the deterministic model output and the real system response, a process referred to as stochastic embedding in Beck (Reference Beck2010). In this model of the data-generating process, a multiplicative prediction error is considered:

where

![]() $ \boldsymbol{\chi} $

is a vector of predictions obtained from the coupled physical-probabilistic model of the data generating process;

$ \boldsymbol{\chi} $

is a vector of predictions obtained from the coupled physical-probabilistic model of the data generating process;

![]() $ \boldsymbol{Y} $

is a diagonal matrix of physical model predictions obtained as

$ \boldsymbol{Y} $

is a diagonal matrix of physical model predictions obtained as

![]() $ \boldsymbol{Y}=\operatorname{diag}\left(\boldsymbol{y}\right) $

, with

$ \boldsymbol{Y}=\operatorname{diag}\left(\boldsymbol{y}\right) $

, with

![]() $ \boldsymbol{y} $

denoting the corresponding vector of predictions;

$ \boldsymbol{y} $

denoting the corresponding vector of predictions;

![]() $ {\boldsymbol{\eta}}_m $

is a vector of multiplicative physical model error factors;

$ {\boldsymbol{\eta}}_m $

is a vector of multiplicative physical model error factors;

![]() $ \boldsymbol{\varepsilon} $

is a vector of measurement error random variables;

$ \boldsymbol{\varepsilon} $

is a vector of measurement error random variables;

![]() $ {\boldsymbol{\theta}}_s $

is a vector of physical model parameters to be estimated;

$ {\boldsymbol{\theta}}_s $

is a vector of physical model parameters to be estimated;

![]() $ {\boldsymbol{\theta}}_c $

is a vector of probabilistic model parameters to be estimated; and

$ {\boldsymbol{\theta}}_c $

is a vector of probabilistic model parameters to be estimated; and

![]() $ \boldsymbol{\theta} =\left\{{\boldsymbol{\theta}}_s,{\boldsymbol{\theta}}_c\right\} $

is the set of combined physical and probabilistic model parameters to be estimated.

$ \boldsymbol{\theta} =\left\{{\boldsymbol{\theta}}_s,{\boldsymbol{\theta}}_c\right\} $

is the set of combined physical and probabilistic model parameters to be estimated.

In this model formulation, the error in the physical model prediction is assumed to scale with the magnitude of the model output. This assumption is prevalent in the structural reliability literature (Cervenka et al., Reference Cervenka, Cervenka and Kadlec2018; Sykorka et al., Reference Sykorka, Krejsa, Mlcoch, Prieto and Tanner2018). The physical model predictions are multiplied by a factor

![]() $ {\boldsymbol{\eta}}_m $

, expressing the discrepancy between model prediction and reality. A correlated Multivariate Normal distribution with a mean of

$ {\boldsymbol{\eta}}_m $

, expressing the discrepancy between model prediction and reality. A correlated Multivariate Normal distribution with a mean of

![]() $ 1.0 $

and covariance matrix

$ 1.0 $

and covariance matrix

![]() $ {\boldsymbol{\Sigma}}_{\eta } $

is assumed for

$ {\boldsymbol{\Sigma}}_{\eta } $

is assumed for

![]() $ {\boldsymbol{\eta}}_m $

as shown in equation (6):

$ {\boldsymbol{\eta}}_m $

as shown in equation (6):

The assumption of a Gaussian distribution for

![]() $ {\boldsymbol{\eta}}_m $

is made primarily for simplicity and computational convenience. The impact of this assumption is deemed to be outside the scope of this work and is not further examined. The measurement error is taken as independent, identically distributed (i.i.d.) Gaussian random variables, distributed as

$ {\boldsymbol{\eta}}_m $

is made primarily for simplicity and computational convenience. The impact of this assumption is deemed to be outside the scope of this work and is not further examined. The measurement error is taken as independent, identically distributed (i.i.d.) Gaussian random variables, distributed as

![]() $ \boldsymbol{\varepsilon} \sim \mathcal{N}\left(0,{\sigma}_{\varepsilon}\right) $

. The assumption of Gaussian white noise for the measurement error is prevalent in the literature and is commonly used in Bayesian system identification for structures (see Section 2), stemming from the fact that measurement noise can be considered as a sum of a large number of independent random variables. Modeling the measurement error as i.i.d. realizations from a Normal distribution is therefore justified by the central limit theorem. Utilizing the affine transformation property of the Multivariate Normal distribution we obtain the following model for the data-generating process:

$ \boldsymbol{\varepsilon} \sim \mathcal{N}\left(0,{\sigma}_{\varepsilon}\right) $

. The assumption of Gaussian white noise for the measurement error is prevalent in the literature and is commonly used in Bayesian system identification for structures (see Section 2), stemming from the fact that measurement noise can be considered as a sum of a large number of independent random variables. Modeling the measurement error as i.i.d. realizations from a Normal distribution is therefore justified by the central limit theorem. Utilizing the affine transformation property of the Multivariate Normal distribution we obtain the following model for the data-generating process:

with

![]() $ \boldsymbol{I} $

being the identity matrix. The residuals between measurements and model predictions are considered as a random field, with the position of each observation defined by a spatial coordinate (representing the location of a sensor) and a temporal coordinate, denoted as

$ \boldsymbol{I} $

being the identity matrix. The residuals between measurements and model predictions are considered as a random field, with the position of each observation defined by a spatial coordinate (representing the location of a sensor) and a temporal coordinate, denoted as

![]() $ {x}_i $

and

$ {x}_i $

and

![]() $ {t}_i $

, respectively. The position of an observation

$ {t}_i $

, respectively. The position of an observation

![]() $ \overline{\boldsymbol{y}} $

is described by a two-dimensional vector

$ \overline{\boldsymbol{y}} $

is described by a two-dimensional vector

![]() $ {\boldsymbol{x}}_i=\left({x}_i,{t}_i\right) $

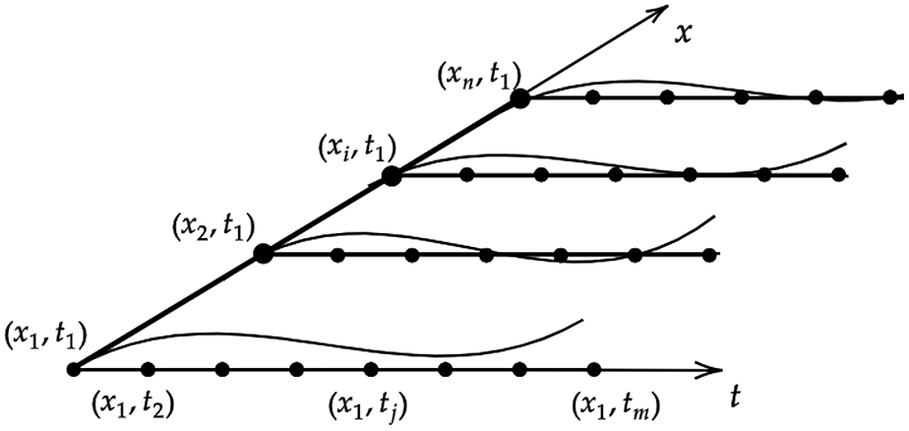

, and the random field is represented as a (not necessarily regular) grid of points, as shown in Figure 3 with

$ {\boldsymbol{x}}_i=\left({x}_i,{t}_i\right) $

, and the random field is represented as a (not necessarily regular) grid of points, as shown in Figure 3 with

![]() $ n $

denoting the total number of sensors and

$ n $

denoting the total number of sensors and

![]() $ m $

denoting the number of observations per sensor over time.

$ m $

denoting the number of observations per sensor over time.

Figure 3. Illustration of space and time coordinate system. Influence lines along the time axis

![]() $ t $

are obtained for each sensor position

$ t $

are obtained for each sensor position

![]() $ x $

.

$ x $

.

The correlation in the model prediction error between two points

![]() $ {\boldsymbol{x}}_i=\left({x}_i,{t}_i\right) $

and

$ {\boldsymbol{x}}_i=\left({x}_i,{t}_i\right) $

and

![]() $ {\boldsymbol{x}}_j=\left({x}_j,{t}_j\right) $

is obtained as the product of the spatial and temporal correlation, described in terms of the respective kernel functions:

$ {\boldsymbol{x}}_j=\left({x}_j,{t}_j\right) $

is obtained as the product of the spatial and temporal correlation, described in terms of the respective kernel functions:

where

![]() $ {k}_x\left({x}_i,{x}_j;{\boldsymbol{\theta}}_c\right) $

and

$ {k}_x\left({x}_i,{x}_j;{\boldsymbol{\theta}}_c\right) $

and

![]() $ {k}_t\left({t}_i,{t}_j;{\boldsymbol{\theta}}_c\right) $

are parametrized by the set of parameters of the probabilistic model

$ {k}_t\left({t}_i,{t}_j;{\boldsymbol{\theta}}_c\right) $

are parametrized by the set of parameters of the probabilistic model

![]() $ {\boldsymbol{\theta}}_c $

. The standard deviation of the model prediction error at a point

$ {\boldsymbol{\theta}}_c $

. The standard deviation of the model prediction error at a point

![]() $ i $

is obtained as

$ i $

is obtained as

![]() $ {\sigma}_i={C}_v\cdot {y}_i $

, where

$ {\sigma}_i={C}_v\cdot {y}_i $

, where

![]() $ {C}_v $

denotes the coefficient of variation (COV) of the model prediction error. Calculating the covariance for every pair of points yields a symmetric positive semi-definite covariance matrix

$ {C}_v $

denotes the coefficient of variation (COV) of the model prediction error. Calculating the covariance for every pair of points yields a symmetric positive semi-definite covariance matrix

![]() $ {\Sigma}_{\eta } $

that describes the covariance of the physical model prediction error for every point in the random field:

$ {\Sigma}_{\eta } $

that describes the covariance of the physical model prediction error for every point in the random field:

$$ {\boldsymbol{\Sigma}}_p=\boldsymbol{Y}{\boldsymbol{\Sigma}}_{\eta }{\boldsymbol{Y}}^T=\left[\begin{array}{ccc}{\sigma}_1^2& \dots & {\sigma}_1\cdot {\sigma}_N\cdot {\rho}_{1,N}\\ {}\vdots & \ddots & \vdots \\ {}{\sigma}_N\cdot {\sigma}_1\cdot {\rho}_{N,1}& \dots & {\sigma}_N^2\end{array}\right], $$

$$ {\boldsymbol{\Sigma}}_p=\boldsymbol{Y}{\boldsymbol{\Sigma}}_{\eta }{\boldsymbol{Y}}^T=\left[\begin{array}{ccc}{\sigma}_1^2& \dots & {\sigma}_1\cdot {\sigma}_N\cdot {\rho}_{1,N}\\ {}\vdots & \ddots & \vdots \\ {}{\sigma}_N\cdot {\sigma}_1\cdot {\rho}_{N,1}& \dots & {\sigma}_N^2\end{array}\right], $$

with

![]() $ N=n\cdot m $

.

$ N=n\cdot m $

.

3.4.2. Additive model

A coupled probabilistic-physical model of the data-generating process based on a correlated, additive model prediction error is also considered (equation (10). In this case, the model prediction error is described by an additive term, modeled as a multivariate normal distribution with zero mean and covariance

![]() $ {\boldsymbol{\Sigma}}_{\eta }({\boldsymbol{\theta}}_c) $

. Similarly to the multiplicative model, the measurement error is represented by a vector of i.i.d. normal random variables, with a mean of zero and standard deviation

$ {\boldsymbol{\Sigma}}_{\eta }({\boldsymbol{\theta}}_c) $

. Similarly to the multiplicative model, the measurement error is represented by a vector of i.i.d. normal random variables, with a mean of zero and standard deviation

![]() $ {\sigma}_{\varepsilon } $

.

$ {\sigma}_{\varepsilon } $

.

where

![]() $ {\boldsymbol{\eta}}_a $

denotes the vector of additive model prediction error.

$ {\boldsymbol{\eta}}_a $

denotes the vector of additive model prediction error.

4. Efficient log-likelihood evaluation

The assumption of a multiplicative or additive physical model uncertainty factor described by a Gaussian distribution leads to a multi-variate Gaussian likelihood description. For a given covariance matrix

![]() $ \Sigma $

, and omitting the dependence on the parameter vector

$ \Sigma $

, and omitting the dependence on the parameter vector

![]() $ \boldsymbol{\theta} $

from the right-hand side of the equation for brevity, the multivariate normal log-likelihood function can be expressed as:

$ \boldsymbol{\theta} $

from the right-hand side of the equation for brevity, the multivariate normal log-likelihood function can be expressed as:

The evaluation of the Multivariate Gaussian log-likelihood (equation (12) requires calculating the determinant (

![]() $ \mid .\mid $

) and inverse (

$ \mid .\mid $

) and inverse (

![]() $ {(.)}^{-1} $

) of the covariance matrix

$ {(.)}^{-1} $

) of the covariance matrix

![]() $ \Sigma $

. These operations typically have

$ \Sigma $

. These operations typically have

![]() $ O\left({N}^3\right) $

time complexity and

$ O\left({N}^3\right) $

time complexity and

![]() $ O\left({N}^2\right) $

memory requirements for factorizing and storing the covariance matrix respectively, making the direct evaluation of the log-likelihood infeasible for more than a few thousand points.

$ O\left({N}^2\right) $

memory requirements for factorizing and storing the covariance matrix respectively, making the direct evaluation of the log-likelihood infeasible for more than a few thousand points.

To address this issue, we present an approach for efficient log-likelihood evaluation under the multiplicative model uncertainty with additive Gaussian noise described in Section 3.4.1. For the case of additive model uncertainty, described in Section 3.4.2 we utilize an existing approach from the literature. A comparison of the average wall clock time required for log-likelihood evaluation as a function of the size of a 2-dimensional grid of measurements against a naive implementation using the full covariance matrix, for both the additive and multiplicative cases, can be found in Koune (Reference Koune2021). A Python implementation of both methods is available at https://github.com/TNO/tripy.

4.1. Efficient log-likelihood evaluation for combined spatial and temporal correlation and multiplicative model prediction uncertainty

To reduce the computational complexity for evaluating the log-likelihood under the multiplicative model uncertainty, we propose an approach that utilizes the tridiagonal inverse form of the correlation matrix that can be obtained from the Exponential kernel, as well as the Kronecker structure of the separable space and time covariance matrix.

In the following, it is assumed that the correlation is exponential in time. No assumptions are made regarding the structure of the correlation in space or the number of spatial dimensions. The

![]() $ i,j\mathrm{th} $

element of the temporal covariance matrix

$ i,j\mathrm{th} $

element of the temporal covariance matrix

![]() $ {\boldsymbol{\Sigma}}_t $

is obtained as:

$ {\boldsymbol{\Sigma}}_t $

is obtained as:

$$ {\boldsymbol{\Sigma}}_t^{i,j}={C}_{v,i}\cdot {C}_{v,j}\cdot \mathrm{exp}\left(\frac{\parallel {t}_i-{t}_j\parallel }{l_{\mathrm{corr}}}\right), $$

$$ {\boldsymbol{\Sigma}}_t^{i,j}={C}_{v,i}\cdot {C}_{v,j}\cdot \mathrm{exp}\left(\frac{\parallel {t}_i-{t}_j\parallel }{l_{\mathrm{corr}}}\right), $$

where

![]() $ {l}_{\mathrm{corr}} $

is the correlation length and

$ {l}_{\mathrm{corr}} $

is the correlation length and

![]() $ {C}_v $

is the coefficient of variation described in Section 3.4.1. It is shown by Pasquier and Marcotte (Reference Pasquier and Marcotte2020) that the inverse of the covariance matrix for this kernel function has a symmetric tridiagonal form:

$ {C}_v $

is the coefficient of variation described in Section 3.4.1. It is shown by Pasquier and Marcotte (Reference Pasquier and Marcotte2020) that the inverse of the covariance matrix for this kernel function has a symmetric tridiagonal form:

$$ {\boldsymbol{\Sigma}}_t^{-1}=\left[\begin{array}{cccccc}{d}_1& {c}_1& & & \hskip2pt & \\ {}{c}_1& {d}_2& {c}_2& & & \\ {}& {c}_2& {d}_3& {c}_3& & \\ {}& & \ddots & \ddots & \ddots & \end{array}\right]. $$

$$ {\boldsymbol{\Sigma}}_t^{-1}=\left[\begin{array}{cccccc}{d}_1& {c}_1& & & \hskip2pt & \\ {}{c}_1& {d}_2& {c}_2& & & \\ {}& {c}_2& {d}_3& {c}_3& & \\ {}& & \ddots & \ddots & \ddots & \end{array}\right]. $$

Following Cheong (Reference Cheong2016), the diagonal vectors of diagonal and off-diagonal terms in equation (14) can be obtained analytically, eliminating the need to form the full correlation and covariance matrices which is often computationally intensive due to the amount of memory and operations required. For a given vector of observations with coordinates

![]() $ \boldsymbol{t}=\left\{{t}_1,{t}_2,\dots, {t}_m\right\} $

denoting

$ \boldsymbol{t}=\left\{{t}_1,{t}_2,\dots, {t}_m\right\} $

denoting

![]() $ \Delta {t}_i=\mid {t}_i-{t}_{i-1}\mid $

for

$ \Delta {t}_i=\mid {t}_i-{t}_{i-1}\mid $

for

![]() $ i\in \left[m\right] $

yields equation (15).

$ i\in \left[m\right] $

yields equation (15).

where

![]() $ \lambda $

is the inverse of the correlation length

$ \lambda $

is the inverse of the correlation length

![]() $ {l}_{\mathrm{corr}} $

and

$ {l}_{\mathrm{corr}} $

and

![]() $ {a}_i $

is the correlation between points

$ {a}_i $

is the correlation between points

![]() $ i $

and

$ i $

and

![]() $ i-1 $

. The diagonal and off-diagonal elements of the inverse correlation matrix

$ i-1 $

. The diagonal and off-diagonal elements of the inverse correlation matrix

![]() $ {\boldsymbol{\Sigma}}_t^{-1} $

can then be obtained analytically, eliminating the need for direct inversion of

$ {\boldsymbol{\Sigma}}_t^{-1} $

can then be obtained analytically, eliminating the need for direct inversion of

![]() $ {\boldsymbol{\Sigma}}_t $

and reducing computational complexity and memory requirements:

$ {\boldsymbol{\Sigma}}_t $

and reducing computational complexity and memory requirements:

$$ {d}_1=\frac{1}{C_{v,1}^2}\cdot \frac{1}{1-{a}_2^2}, $$

$$ {d}_1=\frac{1}{C_{v,1}^2}\cdot \frac{1}{1-{a}_2^2}, $$

$$ {d}_m=\frac{1}{C_{v,m}^2}\cdot \frac{1}{1-{a}_m^2}, $$

$$ {d}_m=\frac{1}{C_{v,m}^2}\cdot \frac{1}{1-{a}_m^2}, $$

$$ {d}_{ii}=\frac{1}{C_{v,i}^2}\cdot \left(\frac{1}{1-{a}_i^2}+\frac{1}{1-{a}_{i+1}^2}-1\right), $$

$$ {d}_{ii}=\frac{1}{C_{v,i}^2}\cdot \left(\frac{1}{1-{a}_i^2}+\frac{1}{1-{a}_{i+1}^2}-1\right), $$

Furthermore, we define the combined space and time covariance which can be obtained as the Kronecker product of the temporal correlation matrix and the spatial correlation matrix,

![]() $ {\boldsymbol{\Sigma}}_{\eta }={\boldsymbol{\Sigma}}_t\otimes {\boldsymbol{\Sigma}}_x $

. Using the properties of the Kronecker product, it can be shown that the resulting inverse matrix

$ {\boldsymbol{\Sigma}}_{\eta }={\boldsymbol{\Sigma}}_t\otimes {\boldsymbol{\Sigma}}_x $

. Using the properties of the Kronecker product, it can be shown that the resulting inverse matrix

![]() $ {\boldsymbol{\Sigma}}_{\eta}^{-1} $

has a symmetric block tridiagonal form:

$ {\boldsymbol{\Sigma}}_{\eta}^{-1} $

has a symmetric block tridiagonal form:

$$ {\boldsymbol{\Sigma}}_{\eta}^{-1}=\left[\begin{array}{cccccc}{\boldsymbol{D}}_1& {\boldsymbol{C}}_1& & & \hskip2pt & \\ {}{\boldsymbol{C}}_1& {\boldsymbol{D}}_2& {\boldsymbol{C}}_2& & & \\ {}& {\boldsymbol{C}}_2& {\boldsymbol{D}}_3& {\boldsymbol{C}}_3& & \\ {}& & \ddots & \ddots & \ddots & \end{array}\right]. $$

$$ {\boldsymbol{\Sigma}}_{\eta}^{-1}=\left[\begin{array}{cccccc}{\boldsymbol{D}}_1& {\boldsymbol{C}}_1& & & \hskip2pt & \\ {}{\boldsymbol{C}}_1& {\boldsymbol{D}}_2& {\boldsymbol{C}}_2& & & \\ {}& {\boldsymbol{C}}_2& {\boldsymbol{D}}_3& {\boldsymbol{C}}_3& & \\ {}& & \ddots & \ddots & \ddots & \end{array}\right]. $$

We consider the covariance matrix for the data-generating process defined in equation (7). Expressing the physical model uncertainty covariance matrix

![]() $ {\boldsymbol{\Sigma}}_p $

in terms of the combined space and time covariance

$ {\boldsymbol{\Sigma}}_p $

in terms of the combined space and time covariance

![]() $ {\boldsymbol{\Sigma}}_{\eta } $

yields:

$ {\boldsymbol{\Sigma}}_{\eta } $

yields:

Then

![]() $ {\Sigma}_p^{-1} $

will also be block tridiagonal. However, including additive noise such that

$ {\Sigma}_p^{-1} $

will also be block tridiagonal. However, including additive noise such that

![]() $ {\boldsymbol{\Sigma}}_p=\boldsymbol{Y}{\boldsymbol{\Sigma}}_{\eta }{\boldsymbol{Y}}^T+{\sigma}_{\varepsilon}^2\boldsymbol{I} $

leads to a dense inverse matrix. To efficiently evaluate the likelihood, we aim to calculate the terms

$ {\boldsymbol{\Sigma}}_p=\boldsymbol{Y}{\boldsymbol{\Sigma}}_{\eta }{\boldsymbol{Y}}^T+{\sigma}_{\varepsilon}^2\boldsymbol{I} $

leads to a dense inverse matrix. To efficiently evaluate the likelihood, we aim to calculate the terms

![]() $ {(\overline{\boldsymbol{y}}-\boldsymbol{y})}^T{\boldsymbol{\Sigma}}_p^{-1}(\overline{\boldsymbol{y}}-\boldsymbol{y}) $

and

$ {(\overline{\boldsymbol{y}}-\boldsymbol{y})}^T{\boldsymbol{\Sigma}}_p^{-1}(\overline{\boldsymbol{y}}-\boldsymbol{y}) $

and

![]() $ \mid {\boldsymbol{\Sigma}}_p\mid $

in equation (12) without explicitly forming the corresponding matrices or directly inverting the covariance matrix, while taking advantage of the properties described previously to reduce the complexity. Algebraic manipulation of the product

$ \mid {\boldsymbol{\Sigma}}_p\mid $

in equation (12) without explicitly forming the corresponding matrices or directly inverting the covariance matrix, while taking advantage of the properties described previously to reduce the complexity. Algebraic manipulation of the product

![]() $ {(\overline{\boldsymbol{y}}-\boldsymbol{y})}^T{\boldsymbol{\Sigma}}_p^{-1}(\overline{\boldsymbol{y}}-\boldsymbol{y}) $

is performed in order to obtain an expression that can be evaluated efficiently by taking advantage of the Kronecker structure and block symmetric tridiagonal inverse of the covariance matrix. We apply the Woodbury matrix identity given below:

$ {(\overline{\boldsymbol{y}}-\boldsymbol{y})}^T{\boldsymbol{\Sigma}}_p^{-1}(\overline{\boldsymbol{y}}-\boldsymbol{y}) $

is performed in order to obtain an expression that can be evaluated efficiently by taking advantage of the Kronecker structure and block symmetric tridiagonal inverse of the covariance matrix. We apply the Woodbury matrix identity given below:

Substituting

![]() $ \boldsymbol{A}\to {\boldsymbol{\Sigma}}_{\varepsilon}^{-1} $

,

$ \boldsymbol{A}\to {\boldsymbol{\Sigma}}_{\varepsilon}^{-1} $

,

![]() $ \boldsymbol{B}\to \boldsymbol{Y} $

, and

$ \boldsymbol{B}\to \boldsymbol{Y} $

, and

![]() $ {\boldsymbol{C}}^{-1}\to {\boldsymbol{\Sigma}}_{\eta } $

, yields:

$ {\boldsymbol{C}}^{-1}\to {\boldsymbol{\Sigma}}_{\eta } $

, yields:

Applying the left and right vector multiplication by

![]() $ \boldsymbol{y} $

, the second term in the r.h.s. of equation (12) becomes:

$ \boldsymbol{y} $

, the second term in the r.h.s. of equation (12) becomes:

In the previous expression, the term

![]() $ {\boldsymbol{y}}^T{\boldsymbol{\Sigma}}_{\varepsilon}^{-1}\boldsymbol{y} $

can be efficiently evaluated as the product of vectors and diagonal matrices. Similarly, the term

$ {\boldsymbol{y}}^T{\boldsymbol{\Sigma}}_{\varepsilon}^{-1}\boldsymbol{y} $

can be efficiently evaluated as the product of vectors and diagonal matrices. Similarly, the term

![]() $ {\boldsymbol{y}}^T({\boldsymbol{\Sigma}}_{\varepsilon}^{-1}\boldsymbol{Y}) $

can be directly computed and yields a vector. We consider the following term from the r.h.s. of equation (24):

$ {\boldsymbol{y}}^T({\boldsymbol{\Sigma}}_{\varepsilon}^{-1}\boldsymbol{Y}) $

can be directly computed and yields a vector. We consider the following term from the r.h.s. of equation (24):

We note that the term

![]() $ {\boldsymbol{\Sigma}}_{\eta}^{-1}+{\boldsymbol{Y}}^T{\boldsymbol{\Sigma}}_{\varepsilon}^{-1}\boldsymbol{Y} $

is the sum of a symmetric block tridiagonal matrix

$ {\boldsymbol{\Sigma}}_{\eta}^{-1}+{\boldsymbol{Y}}^T{\boldsymbol{\Sigma}}_{\varepsilon}^{-1}\boldsymbol{Y} $

is the sum of a symmetric block tridiagonal matrix

![]() $ {\boldsymbol{\Sigma}}_{\eta}^{-1} $

and the diagonal matrix

$ {\boldsymbol{\Sigma}}_{\eta}^{-1} $

and the diagonal matrix

![]() $ {\boldsymbol{Y}}^T{\boldsymbol{\Sigma}}_{\varepsilon}^{-1}\boldsymbol{Y} $

. We can therefore take advantage of efficient algorithms for Cholesky factorization of symmetric block tridiagonal matrices and for solving linear systems using this factorization to compute

$ {\boldsymbol{Y}}^T{\boldsymbol{\Sigma}}_{\varepsilon}^{-1}\boldsymbol{Y} $

. We can therefore take advantage of efficient algorithms for Cholesky factorization of symmetric block tridiagonal matrices and for solving linear systems using this factorization to compute

![]() $ \boldsymbol{X} $

. Furthermore, the Cholesky factors obtained previously are also used to reduce the computational cost of evaluating the determinant

$ \boldsymbol{X} $

. Furthermore, the Cholesky factors obtained previously are also used to reduce the computational cost of evaluating the determinant

![]() $ \mid \boldsymbol{\Sigma} \mid =\mid {\boldsymbol{\Sigma}}_{\varepsilon }+\boldsymbol{Y}{\boldsymbol{\Sigma}}_{\eta }{\boldsymbol{Y}}^T\mid $

. Applying the determinant lemma for

$ \mid \boldsymbol{\Sigma} \mid =\mid {\boldsymbol{\Sigma}}_{\varepsilon }+\boldsymbol{Y}{\boldsymbol{\Sigma}}_{\eta }{\boldsymbol{Y}}^T\mid $

. Applying the determinant lemma for

![]() $ {\boldsymbol{\Sigma}}_p $

yields equation (26):

$ {\boldsymbol{\Sigma}}_p $

yields equation (26):

The determinant

![]() $ \mid {\boldsymbol{\Sigma}}_{\eta}\mid $

can be calculated efficiently by utilizing the properties of the Kronecker product, given that

$ \mid {\boldsymbol{\Sigma}}_{\eta}\mid $

can be calculated efficiently by utilizing the properties of the Kronecker product, given that

![]() $ \mid {\boldsymbol{\Sigma}}_{\eta}\mid =\mid {\boldsymbol{\Sigma}}_t\otimes {\boldsymbol{\Sigma}}_x\mid $

. Furthermore,

$ \mid {\boldsymbol{\Sigma}}_{\eta}\mid =\mid {\boldsymbol{\Sigma}}_t\otimes {\boldsymbol{\Sigma}}_x\mid $

. Furthermore,

![]() $ {\boldsymbol{\Sigma}}_{\varepsilon } $

is a diagonal matrix meaning that the determinant can be trivially obtained. Finally, we have previously calculated the Cholesky factorization of the term

$ {\boldsymbol{\Sigma}}_{\varepsilon } $

is a diagonal matrix meaning that the determinant can be trivially obtained. Finally, we have previously calculated the Cholesky factorization of the term

![]() $ {\boldsymbol{\Sigma}}_{\eta}^{-1}+{\boldsymbol{Y}}^T{\boldsymbol{\Sigma}}_{\varepsilon}^{-1}\boldsymbol{Y} $

. Using the fact that the determinant of a block triangular matrix is the product of the determinants of its diagonal blocks and the properties of the determinant, the first expression in the r.h.s. of equation (26) can be computed with:

$ {\boldsymbol{\Sigma}}_{\eta}^{-1}+{\boldsymbol{Y}}^T{\boldsymbol{\Sigma}}_{\varepsilon}^{-1}\boldsymbol{Y} $

. Using the fact that the determinant of a block triangular matrix is the product of the determinants of its diagonal blocks and the properties of the determinant, the first expression in the r.h.s. of equation (26) can be computed with:

where the matrix

![]() $ \boldsymbol{L} $

is the lower triangular Cholesky factor of

$ \boldsymbol{L} $

is the lower triangular Cholesky factor of

![]() $ {\boldsymbol{\Sigma}}_{\eta}^{-1}+{\boldsymbol{Y}}^T{\boldsymbol{\Sigma}}_{\varepsilon}^{-1}\boldsymbol{Y} $

. Since each block

$ {\boldsymbol{\Sigma}}_{\eta}^{-1}+{\boldsymbol{Y}}^T{\boldsymbol{\Sigma}}_{\varepsilon}^{-1}\boldsymbol{Y} $

. Since each block

![]() $ {\boldsymbol{L}}_{ii} $

is also triangular, the evaluation of the determinant has been reduced to evaluation of the determinant of each triangular block

$ {\boldsymbol{L}}_{ii} $

is also triangular, the evaluation of the determinant has been reduced to evaluation of the determinant of each triangular block

![]() $ {\boldsymbol{L}}_{ii} $

, which is equal to the product of its diagonal elements.

$ {\boldsymbol{L}}_{ii} $

, which is equal to the product of its diagonal elements.

Using the above calculation procedure, an efficient solution can also be obtained for the case of only temporal correlation, where

![]() $ {\boldsymbol{\Sigma}}_t^{-1} $

has the symmetric tridiagonal form given in equation (14). The term

$ {\boldsymbol{\Sigma}}_t^{-1} $

has the symmetric tridiagonal form given in equation (14). The term

![]() $ {\boldsymbol{\Sigma}}_t^{-1}+{\boldsymbol{Y}}^T{\boldsymbol{\Sigma}}_{\varepsilon}^{-1}\boldsymbol{Y} $

will be the sum of a symmetric tridiagonal and a diagonal matrix. From a computational viewpoint, this property is advantageous as it allows for a solution to the system of equations equation (25) with

$ {\boldsymbol{\Sigma}}_t^{-1}+{\boldsymbol{Y}}^T{\boldsymbol{\Sigma}}_{\varepsilon}^{-1}\boldsymbol{Y} $

will be the sum of a symmetric tridiagonal and a diagonal matrix. From a computational viewpoint, this property is advantageous as it allows for a solution to the system of equations equation (25) with

![]() $ O(N) $

operations using the Thomas algorithm (Quarteroni et al., Reference Quarteroni, Sacco and Saleri2007). Alternatively, for improved efficiency and numerical stability, a Cholesky decomposition can be applied to solve the linear system and calculate the determinants of the symmetric tridiagonal terms in equation (26).

$ O(N) $

operations using the Thomas algorithm (Quarteroni et al., Reference Quarteroni, Sacco and Saleri2007). Alternatively, for improved efficiency and numerical stability, a Cholesky decomposition can be applied to solve the linear system and calculate the determinants of the symmetric tridiagonal terms in equation (26).

4.2. Efficient log-likelihood evaluation for combined spatial and temporal correlation and additive model prediction uncertainty

To reduce the computational complexity of the log-likelihood evaluation in the case of additive model prediction uncertainty and combined spatial and temporal correlation, we use an approach that utilizes the properties of the Kronecker product and the eigendecomposition of the separable covariance matrix. For a detailed description of this approach, the reader is referred to Stegle et al. (Reference Stegle, Lippert, Mooij, Lawrence and Borgwardt2011).

5. Description of the IJssel bridge case study

5.1. Description of the structure

The IJssel bridge is a twin-girder steel plate road bridge that carries traffic over the river IJssel in the direction of Westervoort. It consists of an approach bridge and a main bridge, of which the latter is of interest in this case. The main bridge has a total length of

![]() $ 295 $

m and five spans with lengths of

$ 295 $

m and five spans with lengths of

![]() $ 45 $

,

$ 45 $

,

![]() $ 50 $

,

$ 50 $

,

![]() $ 105 $

,

$ 105 $

,

![]() $ 50 $

, and

$ 50 $

, and

![]() $ 45 $

m. In total, the bridge has 12 supports. An elevation view of the structure is shown in Figure 4. The supports at pillar H are hinges, while the rest are roller bearings in the longitudinal directions. The roller bearings at pillars G and K can resist uplift forces. The deck structure of the bridge is composed of two steel girders with variable height, ranging from

$ 45 $

m. In total, the bridge has 12 supports. An elevation view of the structure is shown in Figure 4. The supports at pillar H are hinges, while the rest are roller bearings in the longitudinal directions. The roller bearings at pillars G and K can resist uplift forces. The deck structure of the bridge is composed of two steel girders with variable height, ranging from

![]() $ 2.4 $

to

$ 2.4 $

to

![]() $ 5.3 $

m, and cross-beams with a spacing of approximately

$ 5.3 $

m, and cross-beams with a spacing of approximately

![]() $ 1.8 $

m. The main girders and cross beams support the steel deck plate. The deck plate has a thickness of

$ 1.8 $

m. The main girders and cross beams support the steel deck plate. The deck plate has a thickness of

![]() $ 10 $

or

$ 10 $

or

![]() $ 12\ \mathrm{mm} $

and

$ 12\ \mathrm{mm} $

and

![]() $ 160\times 8 $

mm longitudinal bulb stiffeners. The cross beams are placed with a center-to-center distance of

$ 160\times 8 $

mm longitudinal bulb stiffeners. The cross beams are placed with a center-to-center distance of

![]() $ 1.75 $

to

$ 1.75 $

to

![]() $ 1.80 $

m and are composed of a

$ 1.80 $

m and are composed of a

![]() $ 500\times 10 $

mm web with a

$ 500\times 10 $

mm web with a

![]() $ 250\times 12 $

mm welded flange. The cross beams are tapered in the parts that extend beyond the main girders and the beam height is reduced to

$ 250\times 12 $

mm welded flange. The cross beams are tapered in the parts that extend beyond the main girders and the beam height is reduced to

![]() $ 200 $

mm at the beam ends. The two main girders are coupled with K-braces located below every second or third cross beam, with a distance of

$ 200 $

mm at the beam ends. The two main girders are coupled with K-braces located below every second or third cross beam, with a distance of

![]() $ 5.4 $

m on average.

$ 5.4 $

m on average.

Figure 4. Elevation view of the IJsselbridge (top), and typical cross-section including K-brace (bottom), with lengths shown in meters (from Rijkswaterstaat).

5.2. Description of the physical model

A two-dimensional twin-girder finite element (FE) model based on Euler-Bernoulli beam elements is used to model the IJsselbridge, shown in Figure 5. Each element has four degrees of freedom (DOFs): two translations and two rotations. The variable geometrical properties of the steel girders along the longitudinal axis are taken into account by varying the structural properties of the individual beam elements, where each element has a prismatic cross-section. In addition to the main girder, half the width of the deck plate and the corresponding longitudinal stiffeners are also considered in the calculation of the structural properties for each cross-section along the

![]() $ x $

-axis. The maximum beam element length can be specified in order to approximate the variable geometry of the main girders along the length of the bridge to the required precision. A maximum element length of

$ x $

-axis. The maximum beam element length can be specified in order to approximate the variable geometry of the main girders along the length of the bridge to the required precision. A maximum element length of

![]() $ 2.0 $

m was used for all simulations, resulting in a model with

$ 2.0 $

m was used for all simulations, resulting in a model with

![]() $ {n}_{\mathrm{node}}=193 $

nodes and

$ {n}_{\mathrm{node}}=193 $

nodes and

![]() $ {n}_{\mathrm{DOF}}=386 $

DOFs. The coupling between the main girders due to combined stiffness of the deck, crossbeams, and K-braces is simulated by vertical translational springs, placed approximately at the positions of the K-braces that connect the two main girders of the IJssel bridge. Six pinned supports are specified for each girder at locations corresponding to pillars F through L. Independent rotational springs are defined at the supports to simulate the friction at the support bearings and to account for the possibility of partial locking.

$ {n}_{\mathrm{DOF}}=386 $

DOFs. The coupling between the main girders due to combined stiffness of the deck, crossbeams, and K-braces is simulated by vertical translational springs, placed approximately at the positions of the K-braces that connect the two main girders of the IJssel bridge. Six pinned supports are specified for each girder at locations corresponding to pillars F through L. Independent rotational springs are defined at the supports to simulate the friction at the support bearings and to account for the possibility of partial locking.

Figure 5. Illustration of the IJsselbridge FE model (left), lateral load function (right), and parametrization of the FE model (bottom).

During the measurement campaign, the bridge was closed for traffic and only loaded by a heavy weighted truck at the left and right lanes. To account for the position of the truck along the transverse direction (

![]() $ z $

-axis)—which is not included in the FE model—each load is multiplied by a value of the Lateral Load Function (LLF), as illustrated in Figure 5 (right). The LLF is taken as a linear function, with slope and intercept coefficients such that: (i) a point load applied directly on a girder does not affect the other girder; and (ii) a load applied at the center of the bridge deck is equally distributed between the left and right girders.

$ z $

-axis)—which is not included in the FE model—each load is multiplied by a value of the Lateral Load Function (LLF), as illustrated in Figure 5 (right). The LLF is taken as a linear function, with slope and intercept coefficients such that: (i) a point load applied directly on a girder does not affect the other girder; and (ii) a load applied at the center of the bridge deck is equally distributed between the left and right girders.

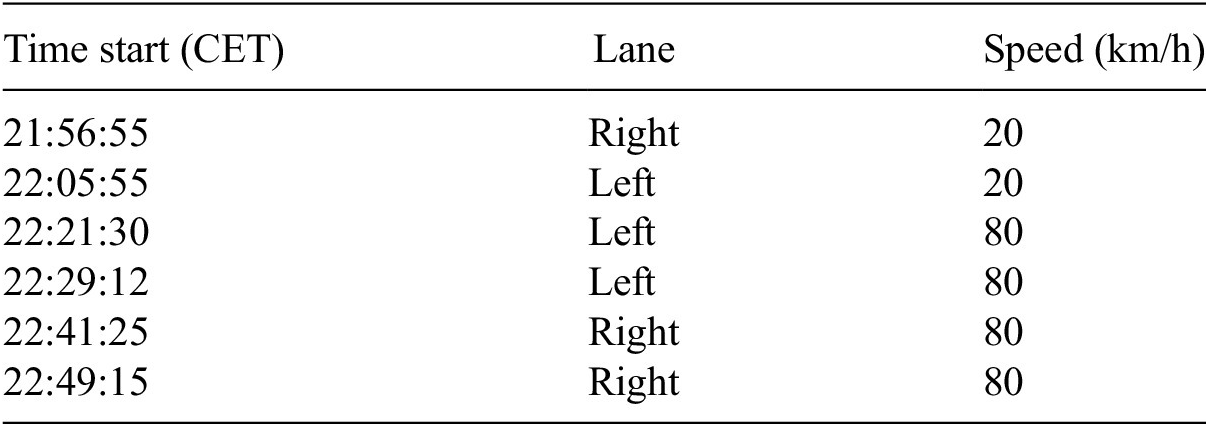

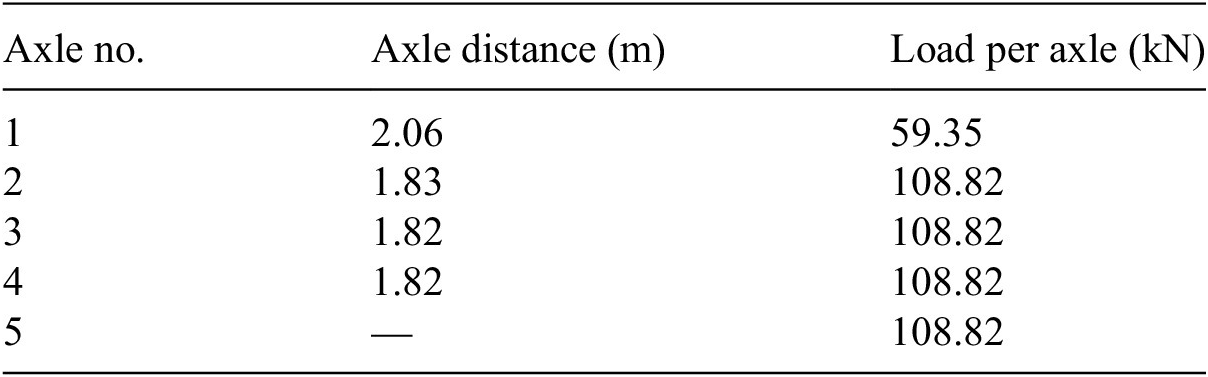

5.3. Measurements

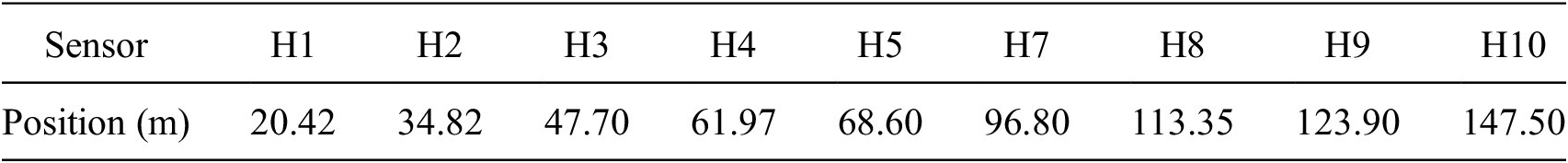

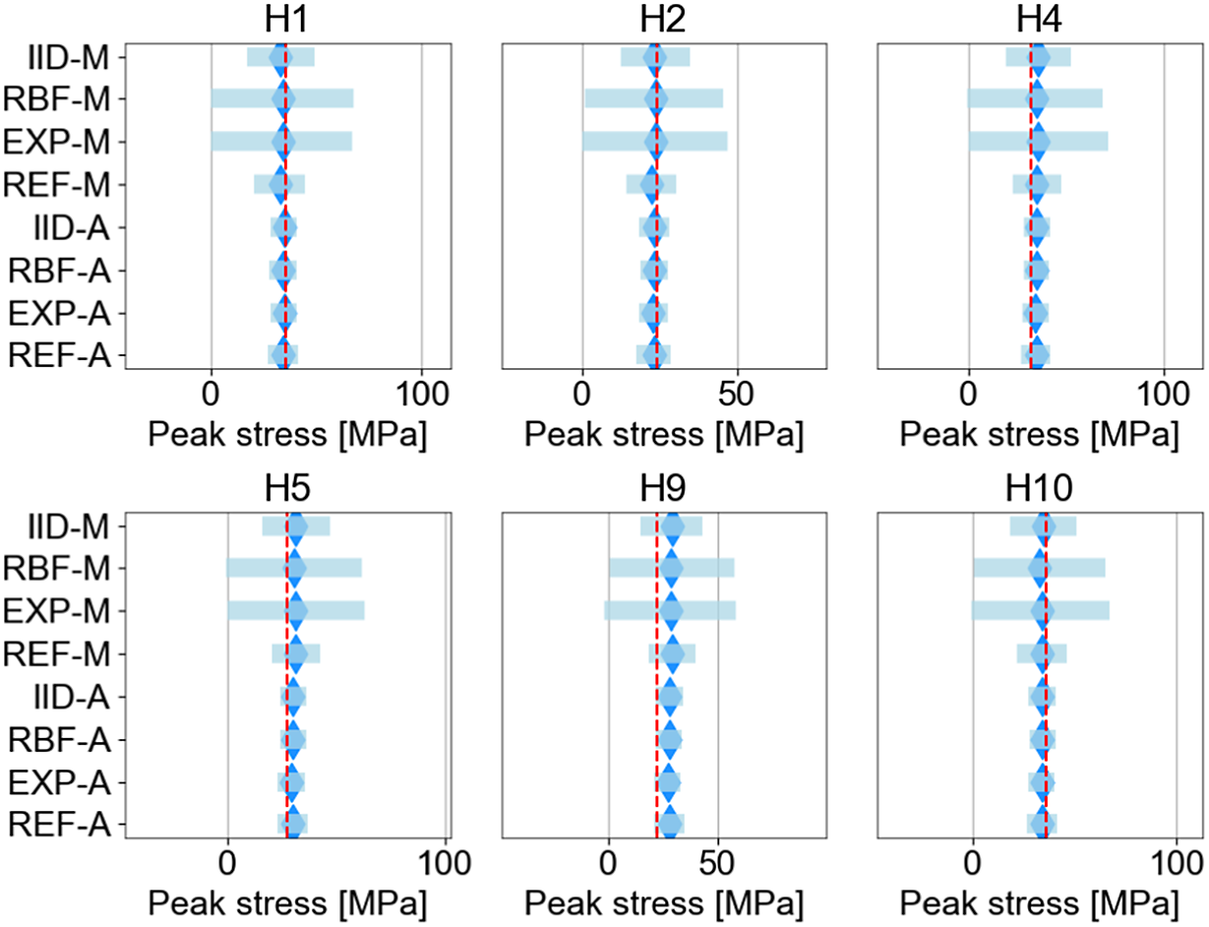

The data used in this study is obtained from a measurement campaign performed under controlled load tests. A total of 34 strain measurement sensors were placed on the top and bottom flanges of the steel girders, the cross beams, the longitudinal bulb stiffeners, and the concrete approach bridge, to measure the response of the structure to traffic loads. In this article, we use only a subset of sensors that are placed on the center of the bottom flange of the right main girder, since they measure the global response of the bridge. These sensors are denoted with the prefix “H” (Figure 6). The exact position of each considered sensor along the length of the bridge and the sensor label are provided in Table 2. It should be noted that the authors were given access to the experimental data and details regarding the sensor network, data acquisition system, and experimental procedure of the measurement campaign, however, the location of the sensors was not chosen specifically for the purposes of the present study. Additional information regarding the measurement campaign can be found in Appendix B.

Figure 6. Approximate location of sensors on the right girder. The prefix “H” is used to denote the sensors on the main structure of the IJsselbridge. Adapted from a Rijkswaterstaat internal report.

Table 2. Names, labels, and positions of strain gauges placed on the IJsselbridge main girder

Note. The positions are measured from pillar F (see Figure 4).

Time series of the strain

![]() $ \varepsilon $

at a sampling rate of

$ \varepsilon $

at a sampling rate of

![]() $ 50 $

Hz are obtained from each sensor and postprocessed to yield the corresponding influence lines. These strain influence lines are converted to stress influence lines using Hooke’s law

$ 50 $

Hz are obtained from each sensor and postprocessed to yield the corresponding influence lines. These strain influence lines are converted to stress influence lines using Hooke’s law

![]() $ \sigma =E\cdot \varepsilon $

, where

$ \sigma =E\cdot \varepsilon $

, where

![]() $ \sigma $

denotes the stress and

$ \sigma $

denotes the stress and

![]() $ E $

denotes Young’s modulus. The latter is taken as

$ E $

denotes Young’s modulus. The latter is taken as

![]() $ E=210 $

GPa, as specified in the IJsselbridge design. Each sensor yields two influence lines, one for each lane that was loaded during the measurement campaign. Linear interpolation is performed to obtain the stresses at the locations along the length of the bridge corresponding to the locations of the nodes of the FE model. The processed influence lines are plotted in Figure 7.

$ E=210 $

GPa, as specified in the IJsselbridge design. Each sensor yields two influence lines, one for each lane that was loaded during the measurement campaign. Linear interpolation is performed to obtain the stresses at the locations along the length of the bridge corresponding to the locations of the nodes of the FE model. The processed influence lines are plotted in Figure 7.

Figure 7. Stress influence lines obtained from the measurement campaign. The blue and red lines correspond to the measured response for different truck positions in the transverse direction of the bridge.

It is noted that significant discrepancies were observed between the measurements and FE model predictions for a number of sensors during a preliminary comparison of model predictions using a model fitted to the measurements with conventional numerical optimization. It was determined, after verifying the validity of the measurements, that this can be attributed to the simplicity of the model, which was not able to capture the structural behavior at a number of sensor locations. The following list is a summary of the physical simplifications and assumptions that could potentially contribute to the observed discrepancies:

-

• Only limited number of structural elements are explicitly modeled, with elements such as stiffeners, K-braces, the steel deck, and cross-beams being only considered implicitly (e.g., by modifying the cross-sectional properties of the elements representing the main girders), or omitted entirely.

-

• The 3D distribution of forces within the elements is neglected, loads are lumped to the closest node and supports are considered as points, potentially overestimating the stresses.

-

• Although likely negligible, the stiffness of the deck and cross-beams between the two girders is only considered implicitly as lumped stiffness in the vertical springs.

-

• Variations in the geometry and cross-section properties of the K-braces along the length of the bridge are not taken into account.

-

• Shear lag in the deck is not modeled.

The uncertainty in the physical model prediction resulting from the aforementioned simplifications and misspecifications is accounted for in the Bayesian inference by considering the additive and multiplicative error models, introduced in Section 3.4.

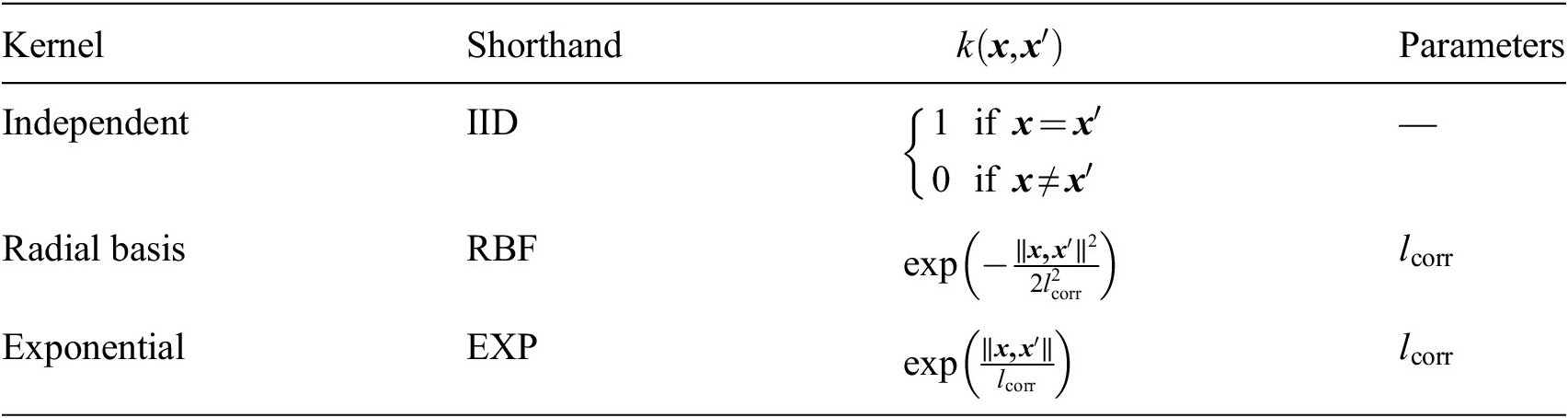

5.4. Correlation functions

Correlation functions also referred to as kernels or kernel functions in the literature and throughout this work, are positive definite functions of two Euclidean vectors

![]() $ k\left(\boldsymbol{x},{\boldsymbol{x}}^{\prime };{\boldsymbol{\theta}}_c\right) $

(Duvenaud, Reference Duvenaud2014) that describe the correlation between points

$ k\left(\boldsymbol{x},{\boldsymbol{x}}^{\prime };{\boldsymbol{\theta}}_c\right) $

(Duvenaud, Reference Duvenaud2014) that describe the correlation between points

![]() $ \boldsymbol{x} $

and

$ \boldsymbol{x} $

and

![]() $ {\boldsymbol{x}}^{\prime } $

. Table 3 provides a summary of the kernel functions used to model the correlation in the model prediction error in this article. These kernel functions were chosen due to their wide adoption in statistical applications, ease of implementation, and small number of parameters. Additionally, these kernel functions were empirically found to result in more accurate posterior and posterior predictive distributions among a group of candidate kernel functions in Koune (Reference Koune2021).

$ {\boldsymbol{x}}^{\prime } $

. Table 3 provides a summary of the kernel functions used to model the correlation in the model prediction error in this article. These kernel functions were chosen due to their wide adoption in statistical applications, ease of implementation, and small number of parameters. Additionally, these kernel functions were empirically found to result in more accurate posterior and posterior predictive distributions among a group of candidate kernel functions in Koune (Reference Koune2021).

Table 3. List of correlation functions and corresponding parameters

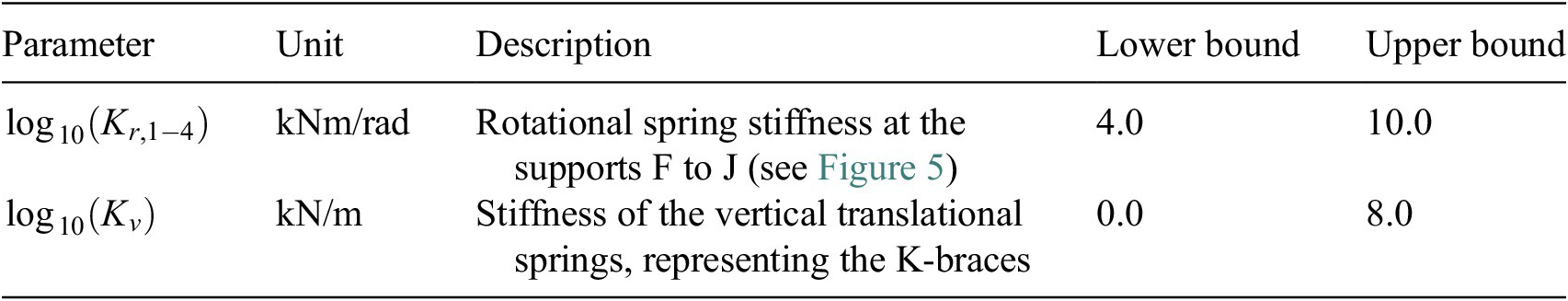

5.5. Physical model parameters

The set of physical model parameters to be inferred,

![]() $ {\boldsymbol{\theta}}_s $

, is shown in Figure 5. The choice of the uncertain physical model parameters is based primarily on sensitivity analyses, the damage mechanisms expected to affect the behavior of the structure and consultation with steel bridge experts. Independent rotational springs are defined at supports F, G, H, and J, with the corresponding rotational spring stiffnessses denoted as

$ {\boldsymbol{\theta}}_s $

, is shown in Figure 5. The choice of the uncertain physical model parameters is based primarily on sensitivity analyses, the damage mechanisms expected to affect the behavior of the structure and consultation with steel bridge experts. Independent rotational springs are defined at supports F, G, H, and J, with the corresponding rotational spring stiffnessses denoted as

![]() $ {K}_{r,1} $

through

$ {K}_{r,1} $

through

![]() $ {K}_{r,4} $

, to simulate the friction at the support bearings and to account for the possibility of partial locking. An analysis of the sensitivity of the stress response to the stiffness of the support bearings indicated that the stiffness at supports K and L has negligible influence on the stress at the sensor locations. Therefore, the bearings at supports K and L are assumed to be hinges with no rotational stiffness. Furthermore, the riveted connections present in the K-braces result in high uncertainty on their stiffness, while additionally this stiffness was found to have a significant effect on the FE model response. The stiffness parameter of the vertical springs,

$ {K}_{r,4} $

, to simulate the friction at the support bearings and to account for the possibility of partial locking. An analysis of the sensitivity of the stress response to the stiffness of the support bearings indicated that the stiffness at supports K and L has negligible influence on the stress at the sensor locations. Therefore, the bearings at supports K and L are assumed to be hinges with no rotational stiffness. Furthermore, the riveted connections present in the K-braces result in high uncertainty on their stiffness, while additionally this stiffness was found to have a significant effect on the FE model response. The stiffness parameter of the vertical springs,

![]() $ {K}_v $

, is assumed to be equal for all vertical springs along the length of the bridge. Both the vertical and rotational spring stiffness values span several orders of magnitude. We therefore represent these parameters in terms of their base-10 logarithms. In addition to the more natural and convenient representation, the parametrization by the base-10 logarithm yields a reduction of the relative size of the prior to the posterior, and therefore a reduction of the number of samples required for convergence when using nested sampling.

$ {K}_v $

, is assumed to be equal for all vertical springs along the length of the bridge. Both the vertical and rotational spring stiffness values span several orders of magnitude. We therefore represent these parameters in terms of their base-10 logarithms. In addition to the more natural and convenient representation, the parametrization by the base-10 logarithm yields a reduction of the relative size of the prior to the posterior, and therefore a reduction of the number of samples required for convergence when using nested sampling.

Table 4 summarizes the prior distributions that are used for

![]() $ {\log}_{10}\left({K}_r\right) $

and

$ {\log}_{10}\left({K}_r\right) $

and

![]() $ {\log}_{10}\left({K}_v\right) $

. The prior distributions are determined using a combination of engineering judgment and sensitivity analysis. Additional details of the sensitivity analysis for the rotational and vertical spring stiffness parameters can be found in Appendix A. It should be noted that the impact of the physical model parameterization on the Bayesian model selection is not considered in this study. The feasibility of inferring parameters of the probabilistic model, and selecting the most likely model from a pool of candidate models, when a large number of physical model parameters is present is left as a potential topic for future work.

$ {\log}_{10}\left({K}_v\right) $

. The prior distributions are determined using a combination of engineering judgment and sensitivity analysis. Additional details of the sensitivity analysis for the rotational and vertical spring stiffness parameters can be found in Appendix A. It should be noted that the impact of the physical model parameterization on the Bayesian model selection is not considered in this study. The feasibility of inferring parameters of the probabilistic model, and selecting the most likely model from a pool of candidate models, when a large number of physical model parameters is present is left as a potential topic for future work.

Table 4. Description and uniform prior distribution bounds of physical model parameters

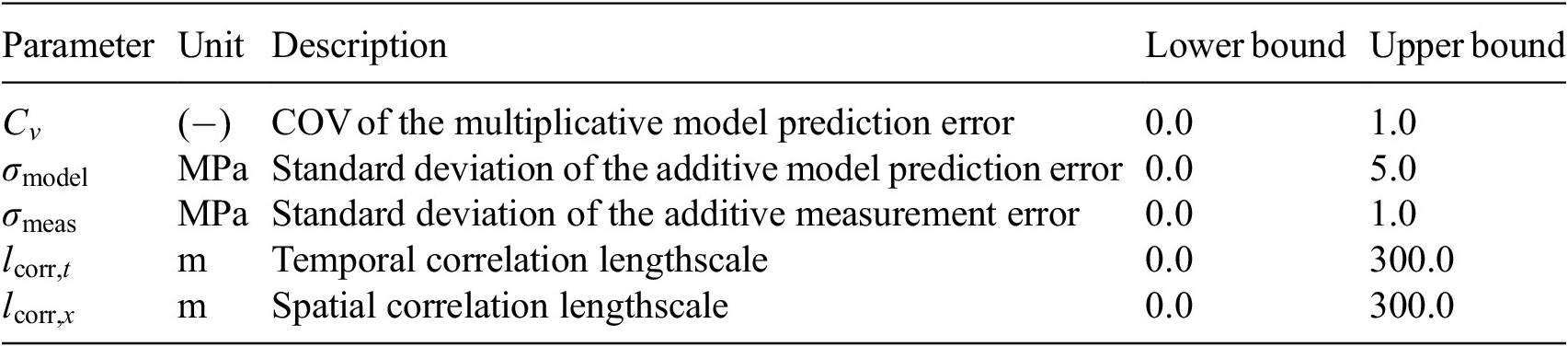

5.6. Probabilistic model parameters

Table 5 provides an overview of the set of probabilistic model parameters to be inferred,

![]() $ {\boldsymbol{\theta}}_c $

, and their prior distributions. Details of the probabilistic model formulations for each of the cases investigated are provided in Sections 6 and 7. The support (domain) of the priors must be defined such that it is possible to capture the structure of the correlations in the residuals between model predictions and measurements. It should be noted that choosing the priors for the parameters of the probabilistic model is not a simple task as no information on the correlation structure is available. Furthermore, a poor choice of prior can significantly impact the inference and prediction, leading to wide credible intervals (Fuglstad et al., Reference Fuglstad, Simpson, Lindgren and Rue2018). Uniform priors are chosen with supports that are wide enough to capture a range of correlations that are expected to be present in the measurements.

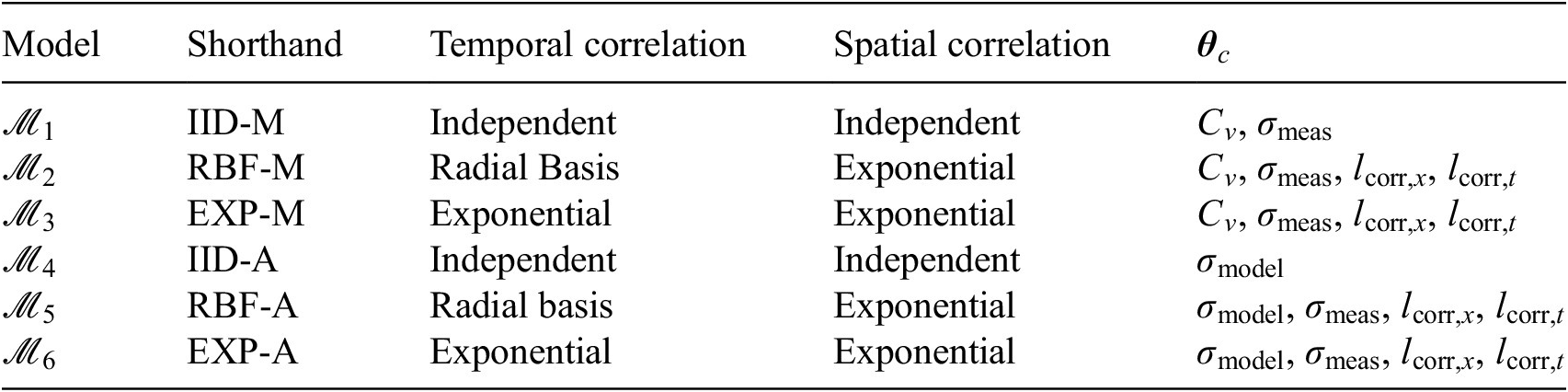

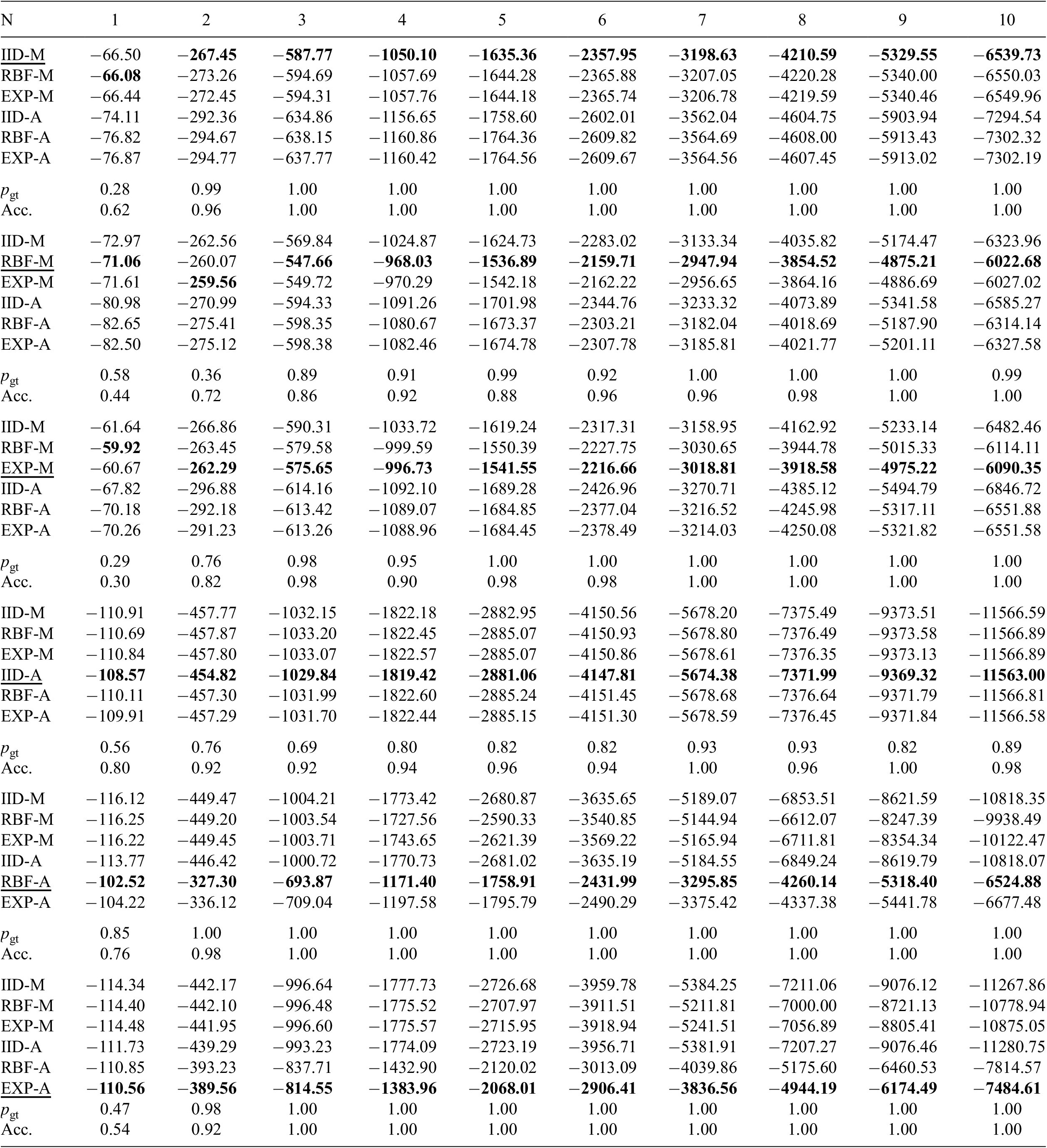

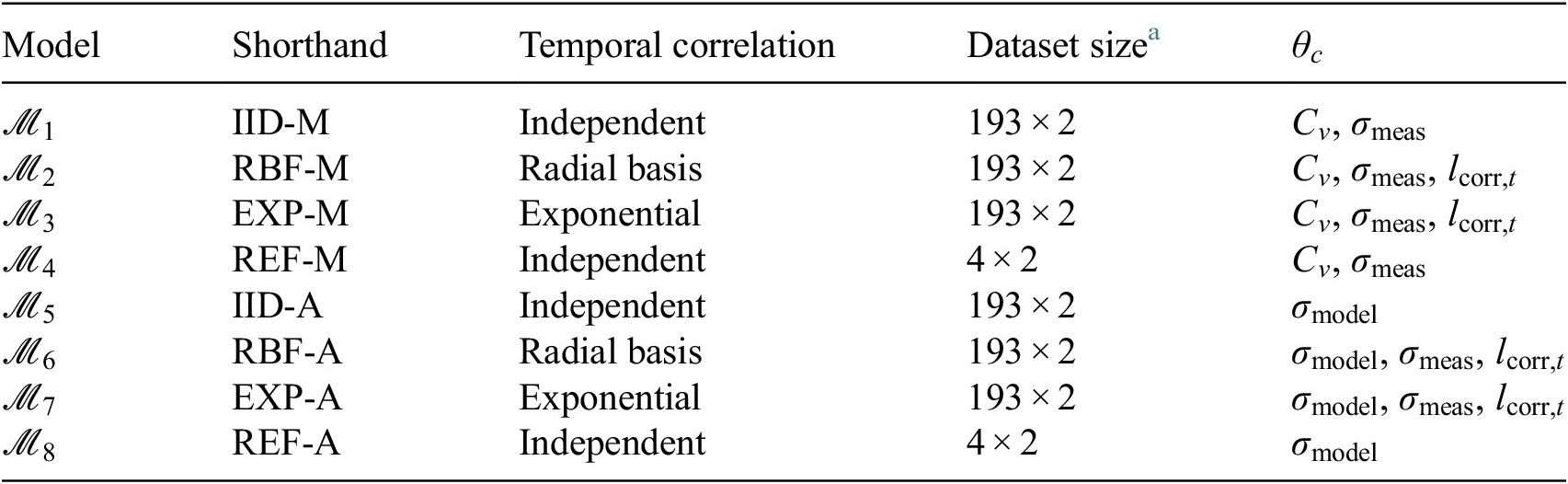

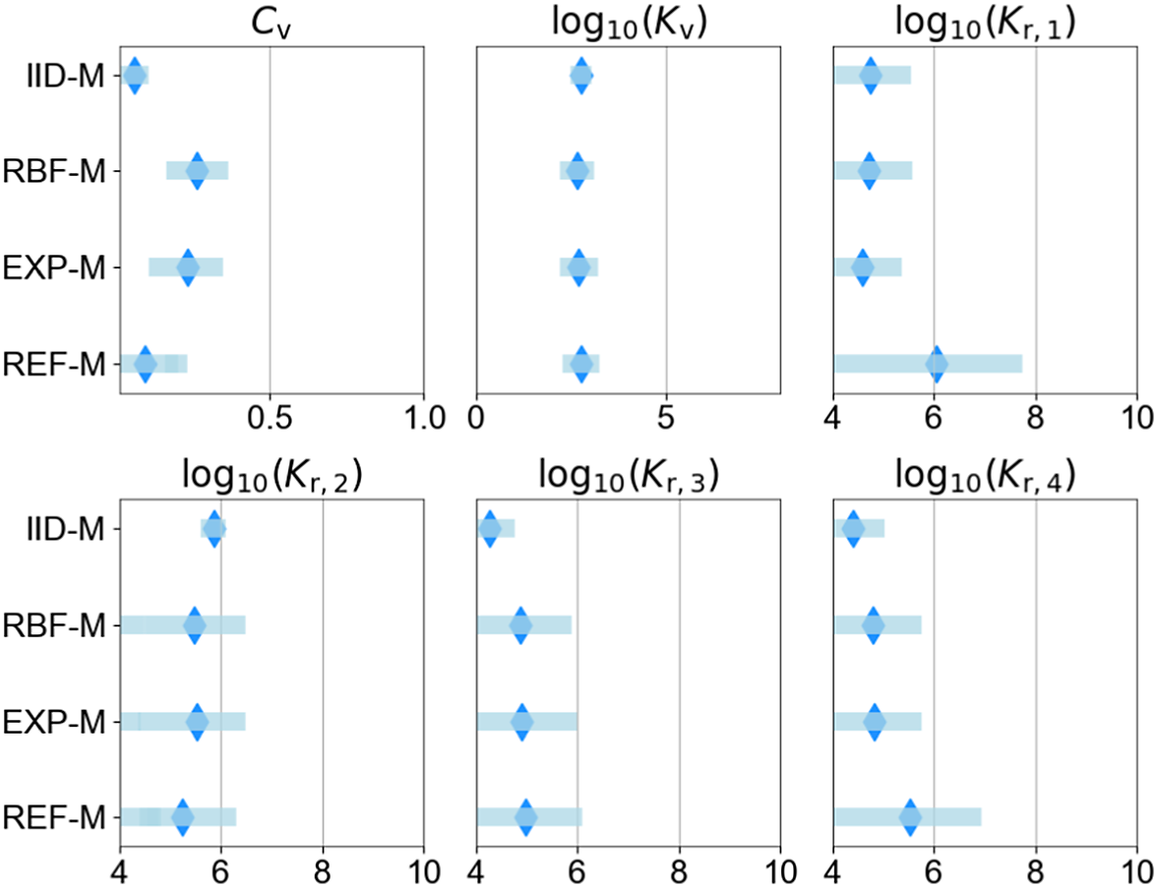

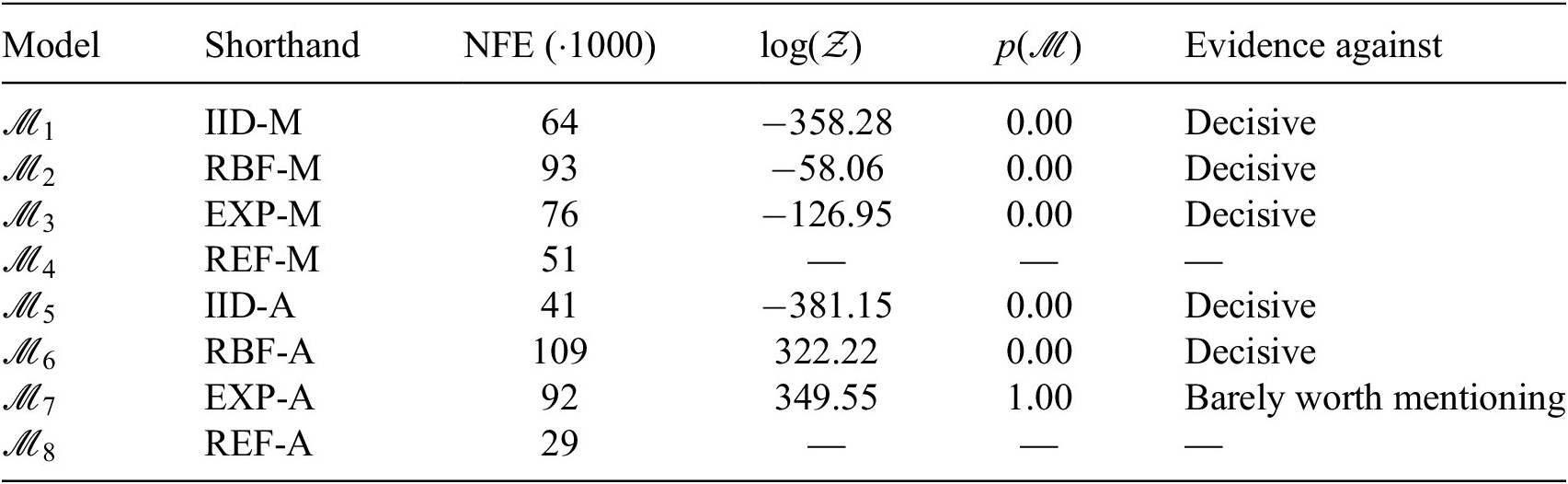

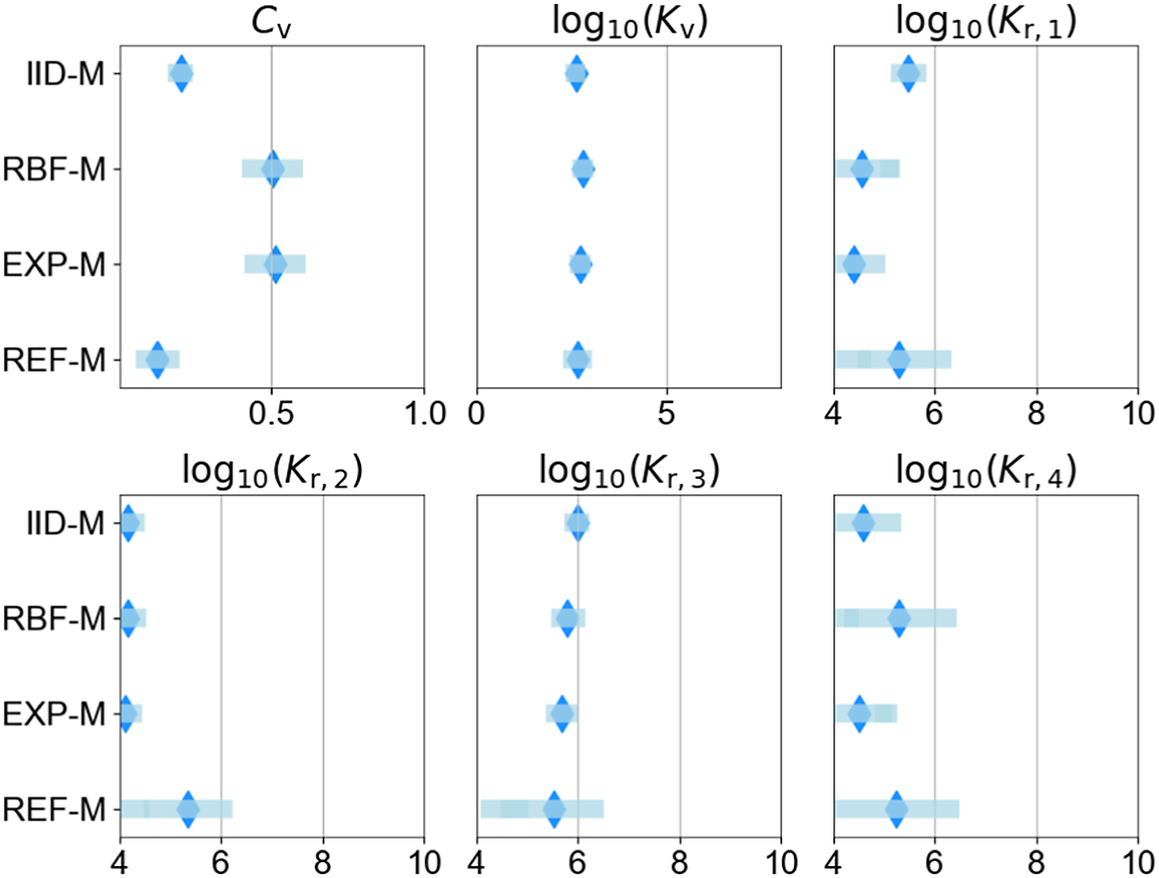

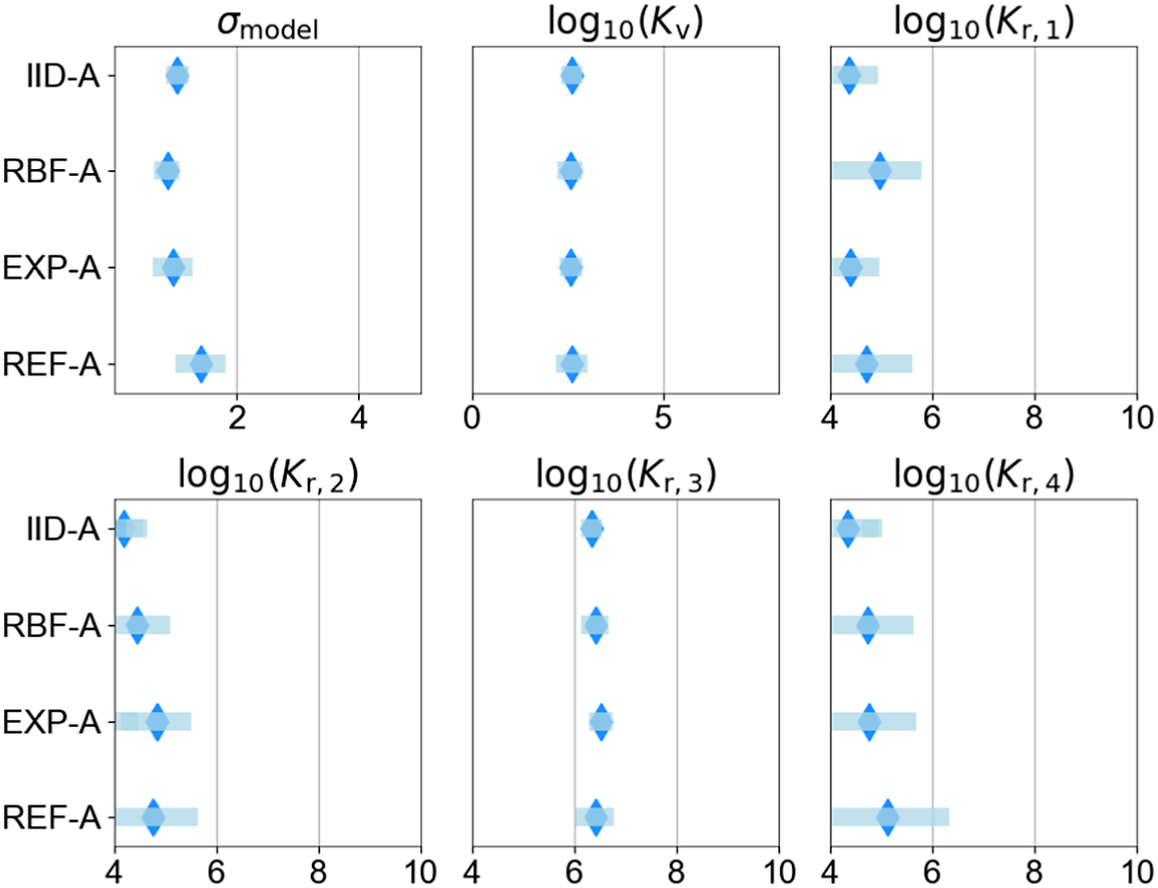

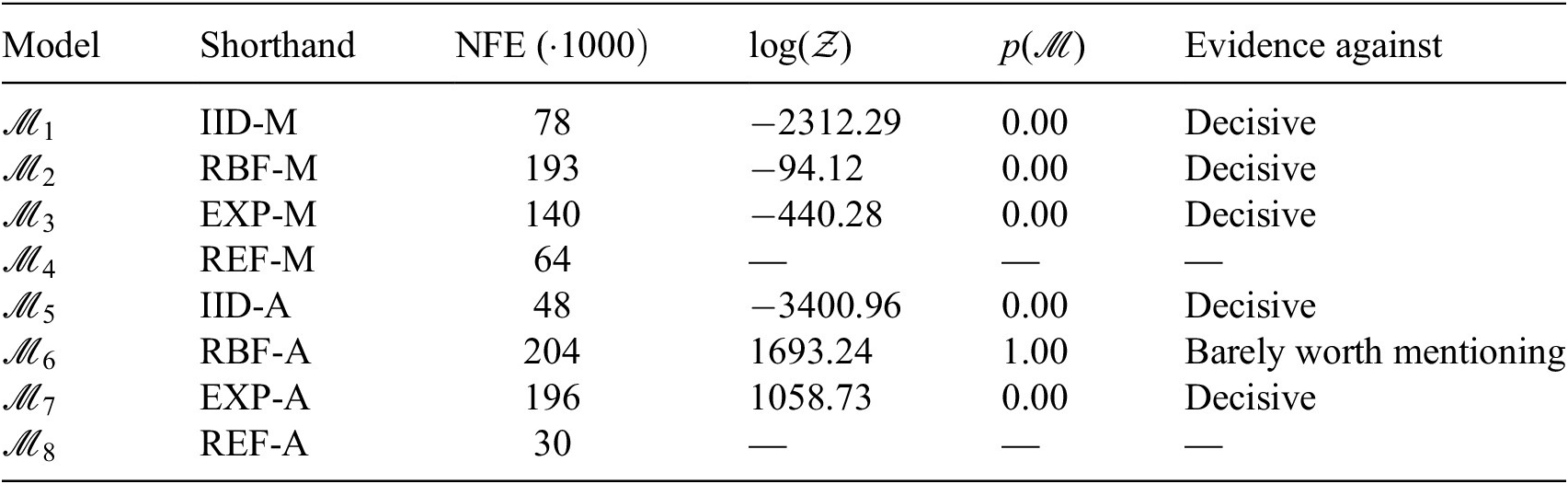

$ {\boldsymbol{\theta}}_c $