1. Introduction

A sequence $(x(n))_{n\geq 1}\subseteq [0,1)$ is uniformly distributed modulo $1$ if the proportion of points in any subinterval $I \subseteq [0,1)$ converges to the size of the interval: $\#\{n\leq N:\ x(n)\in I\}\sim N|I|$, as $N\rightarrow \infty$. The theory of uniform distribution dates back to 1916, to a seminal paper of Weyl [Wey16], and constitutes a simple test of pseudo-randomness. A well-known result of Fejér (see [KN74, p. 13]) implies that for any $A>1$ and any $\alpha >0$ the sequence
\[ (\alpha (\log n)^{A} \,\mathrm{mod}\, 1)_{n\geq 1} \]
is uniformly distributed, while for $A=1$ the sequence is not uniformly distributed. In this paper, we study stronger, local tests of pseudo-randomness for this sequence.
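The dichotomy between $A>1$ and $A=1$ can be probed numerically. The following Python sketch (the helper names `proportion_in_interval` and `log_power` are our own, hypothetical choices) counts how often $x(n)=\alpha(\log n)^A \,\mathrm{mod}\, 1$ lands in $I=[0,1/2)$. For $A=1$ and $\alpha=1$, summing the lengths of the intervals $[e^j,e^{j+1/2})$ shows that the proportion at $N\approx e^{K}$ is close to $1/(\sqrt{e}+1)\approx 0.378$, while at $N\approx e^{K+1/2}$ it is close to $\sqrt{e}/(\sqrt{e}+1)\approx 0.622$, so it cannot converge to $|I|=1/2$. Finite computations of this kind only illustrate the statement.

```python
import math


def proportion_in_interval(omega, N, a, b):
    """Return #{n <= N : x(n) in [a, b)} / N, where x(n) = omega(n) mod 1."""
    count = 0
    for n in range(1, N + 1):
        x = omega(n) % 1.0  # reduce modulo 1
        if a <= x < b:
            count += 1
    return count / N


def log_power(A, alpha=1.0):
    """Return the map n -> alpha * (log n)^A."""
    return lambda n: alpha * math.log(n) ** A


# A = 1: the proportion in [0, 1/2) oscillates and has no limit.
p_low = proportion_in_interval(log_power(1), int(math.exp(10.0)), 0.0, 0.5)
p_high = proportion_in_interval(log_power(1), int(math.exp(10.5)), 0.0, 0.5)
print(p_low, p_high)

# A = 2: Fejer's theorem predicts that this proportion tends to 1/2 as N grows.
print(proportion_in_interval(log_power(2), 10**5, 0.0, 0.5))
```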

Given an increasing $\mathbb{R}$-valued sequence $(\omega (n))=(\omega (n))_{n>0}$, we denote the sequence modulo $1$ by
\[ x(n) := \omega(n) \,\mathrm{mod}\, 1. \]

Furthermore, let $u_N(1) \le u_N(2) \le \dots \le u_N(N)$ denote the first $N$ elements $x(1), \ldots, x(N)$ of the sequence, arranged in increasing order. With that, we define the gap distribution of $(x(n))$ as the limiting distribution (if it exists): for $s>0$,
\[ P(s) := \lim_{N\rightarrow\infty} \frac{\#\{n\leq N:\ N\|u_N(n+1)-u_N(n)\| < s\}}{N}, \]
where $\|\cdot \|$ denotes the distance to the nearest integer, and $u_N(N+1)= u_N(1)$. Thus, $P(s)$ represents the limiting proportion of (scaled) gaps between (spatially) neighboring elements in the sequence which are less than $s$. We say that a sequence has Poissonian gap distribution if $P(s) = 1- e^{-s}$, the value expected for independent, uniformly distributed random points in the unit interval.
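The definition of $P(s)$ translates directly into a finite-$N$ statistic $P_N(s)$. Below is a minimal Python sketch (the function names are our own, hypothetical choices): it sorts $x(1),\ldots,x(N)$, includes the wrap-around gap coming from $u_N(N+1)=u_N(1)$, and measures gaps with $\|\cdot\|$. For equally spaced points every scaled gap equals $1$, which gives a simple sanity check; the usage lines compare $P_N(s)$ for $((\log n)^3)$ with $1-e^{-s}$, as in Figure 1, without any claim about the size of the discrepancy at this $N$.

```python
import math


def nearest_int_dist(t):
    """||t||: distance from t to the nearest integer."""
    return abs(t - round(t))


def gap_distribution(xs, s):
    """Empirical P_N(s) = #{n <= N : N ||u_N(n+1) - u_N(n)|| < s} / N.

    xs holds x(1), ..., x(N) in [0, 1); sorting gives u_N(1) <= ... <= u_N(N),
    and the index (i + 1) % N implements the convention u_N(N+1) = u_N(1).
    """
    N = len(xs)
    u = sorted(xs)
    count = sum(
        1 for i in range(N) if N * nearest_int_dist(u[(i + 1) % N] - u[i]) < s
    )
    return count / N


def log_power_sequence(N, A, alpha=1.0):
    """Return [x(1), ..., x(N)] with x(n) = alpha * (log n)^A mod 1."""
    return [(alpha * math.log(n) ** A) % 1.0 for n in range(1, N + 1)]


xs = log_power_sequence(10**5, 3)
for s in (0.5, 1.0, 2.0):
    print(s, gap_distribution(xs, s), 1 - math.exp(-s))
```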

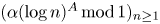

Figure 1. From left to right, the histograms represent the gap distribution density at time $N$ of $(\log n)_{n\geq 1}$, $((\log n)^{2})_{n > 0}$, and $((\log n)^{3})_{n > 0}$ when $N=10^{5}$; the curve is the graph of $x \mapsto e^{-x}$. Note that $(\log n)$ is not uniformly distributed, and its gap distribution is not Poissonian.

Our main theorem is the following result.

Theorem 1.1 Let $\omega (n):= \alpha ( \log n)^{A}$ for $A>1$ and any $\alpha >0$. Then $x(n)$ has Poissonian gap distribution.

In fact, this theorem follows (via the method of moments) from Theorem 1.2 below, which states that for every $m \ge 2$ the $m$-point correlation function of this sequence is Poissonian. By that we mean the following. Let $m\geq 2$ be an integer, and let $f \in C_c^\infty (\mathbb{R}^{m-1})$ be a test function, which can be thought of as a smooth stand-in for the characteristic function of a Cartesian product of compact intervals in $\mathbb{R}^{m-1}$. Let $[N]:=\{1,\ldots,N\}$ and define the $m$-point correlation of $(x(n))$, at time $N$, to be

\begin{equation} R^{(m)}(N,f) := \frac{1}{N}\sum_{\boldsymbol{\mathbf{n}} \in [N]^m}^\ast f(N\|x(n_1)-x(n_2)\|,N \|x(n_2)-x(n_3)\|, \ldots, N\|x(n_{m-1})-x(n_{m})\|), \end{equation}


where $\sum\limits^\ast$ denotes a sum over $m$-tuples with pairwise distinct entries. Thus, the $m$-point correlation measures how correlated points are on the scale of the average gap between neighboring points (which is $N^{-1}$). We say that $(x(n))$ has Poissonian $m$-point correlation if
\[ \lim_{N\rightarrow\infty} R^{(m)}(N,f) = \int_{\mathbb{R}^{m-1}} f(\boldsymbol{\mathbf{x}})\,d\boldsymbol{\mathbf{x}} \]
for every such $f$; that is, if the $m$-point correlation converges to the value expected for independent, uniformly distributed random points in the unit interval.
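For small $N$ the correlation sum $R^{(m)}(N,f)$ can be evaluated by brute force, which makes the normalization concrete. The Python sketch below (our own, hypothetical helper names) loops over ordered $m$-tuples with pairwise distinct entries, which is exactly what `itertools.permutations` enumerates. For illustration we use indicator functions rather than smooth $f$. With equally spaced points and $f$ the indicator of $[-3/2,3/2]$, each point has exactly two neighbours at scaled distance $1$, so $R^{(2)}(N,f)=2$, whereas the Poissonian value is $\int_{\mathbb{R}} f = 3$: such a lattice-like sequence is far from Poissonian.

```python
import itertools


def nearest_int_dist(t):
    """||t||: distance from t to the nearest integer."""
    return abs(t - round(t))


def m_point_correlation(xs, f, m):
    """Brute-force R^{(m)}(N, f) = (1/N) * sum over distinct m-tuples.

    f takes the m - 1 arguments N ||x(n_i) - x(n_{i+1})||, i = 1, ..., m - 1.
    The cost is N!/(N - m)! evaluations of f, so N must stay small.
    """
    N = len(xs)
    total = 0.0
    for tup in itertools.permutations(range(N), m):  # pairwise distinct indices
        args = [N * nearest_int_dist(xs[tup[i]] - xs[tup[i + 1]])
                for i in range(m - 1)]
        total += f(*args)
    return total / N


def box2(t):
    """Indicator of [-3/2, 3/2]."""
    return 1.0 if abs(t) <= 1.5 else 0.0


def box3(t1, t2):
    """Indicator of [-3/2, 3/2]^2."""
    return 1.0 if abs(t1) <= 1.5 and abs(t2) <= 1.5 else 0.0


N = 40
grid = [(n / N) % 1.0 for n in range(1, N + 1)]  # equally spaced points
print(m_point_correlation(grid, box2, 2))  # 2.0
print(m_point_correlation(grid, box3, 3))  # 2.0
```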

Theorem 1.2 Let $\omega (n):= \alpha (\log n)^{A}$ for $A>1$ and any $\alpha >0$. Then $x(n)$ has Poissonian $m$-point correlations for all $m \ge 2$.

It should be noted that Theorem 1.2 is far stronger than Theorem 1.1. In addition to the gap distribution, Theorem 1.2 allows us to recover a wide variety of statistics such as the $i$th nearest neighbor distribution for any $i \ge 1$.

For our analysis, it is more useful to have good control on the support of the Fourier transform of $f$, and not crucial that $f$ be compactly supported. Thus, we restate Theorem 1.2 here for this class of $f$. We prove that Theorem 1.2 follows from Theorem 1.3 in § 8.

Theorem 1.3 Let $\omega (n):=\alpha (\log n)^A$ for $A>1$ and any $\alpha > 0$. Then, for any $m \ge 2$, we have
\[ \lim_{N\rightarrow\infty} R^{(m)}(N,f) = \int_{\mathbb{R}^{m-1}} f(\boldsymbol{\mathbf{x}})\,d\boldsymbol{\mathbf{x}} \]
for any positive function $f \in C^\infty (\mathbb{R}^{m-1})$ whose Fourier transform $\widehat{f}$ has compact support.

Here we use $\widehat{f}$ to denote the Fourier transform of $f$. To avoid carrying a constant through, we assume the support of $\widehat{f}$ is contained in $(-1,1)$.
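Non-negative test functions with compactly supported Fourier transform are easy to write down; the paper does not fix one here, and the following is our own illustrative choice. The rescaled Fejér kernel $f(x) = (\sin(\pi x/2)/(\pi x/2))^2$ is non-negative and, assuming the convention $\widehat f(\xi)=\int f(x)e(-x\xi)\,dx$, satisfies $\widehat f(\xi) = 2\max(0,1-2|\xi|)$ (rescale the classical fact that $(\sin \pi x/(\pi x))^2$ has Fourier transform $\max(0,1-|\xi|)$), so $\operatorname{supp}\widehat f = [-1/2,1/2] \subset (-1,1)$. The Python sketch below checks this numerically with a truncated midpoint rule; truncating to $|x|\le L$ introduces an error of order $1/L$.

```python
import math


def fejer(x):
    """f(x) = (sin(pi x / 2) / (pi x / 2))^2, a non-negative Fejer-type kernel."""
    if x == 0.0:
        return 1.0
    y = math.pi * x / 2.0
    return (math.sin(y) / y) ** 2


def fejer_hat_numeric(xi, L=1000.0, h=0.01):
    """Approximate int_R f(x) e(-x xi) dx by the midpoint rule on [-L, L].

    Since f is even, the integral equals 2 * int_0^L f(x) cos(2 pi x xi) dx.
    """
    total = 0.0
    for k in range(int(L / h)):
        x = (k + 0.5) * h
        total += fejer(x) * math.cos(2.0 * math.pi * x * xi)
    return 2.0 * h * total


def fejer_hat_exact(xi):
    """Closed form 2 * max(0, 1 - 2|xi|), supported in [-1/2, 1/2]."""
    return 2.0 * max(0.0, 1.0 - 2.0 * abs(xi))


for xi in (0.0, 0.25, 0.75):
    print(xi, fejer_hat_numeric(xi), fejer_hat_exact(xi))
```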

Previous work

The study of the uniform distribution and fine-scale local statistics of sequences modulo $1$ has a long history, which we outlined in more detail in a previous paper [LST24]. For the sequence $(\alpha n^\theta \,\mathrm{mod}\,1)_{n\geq 1}$, there have been many attempts to understand the local statistics, in particular the pair correlation (when $m=2$). It is known that for any $\theta \neq 1$, if $\alpha$ belongs to a set of full measure, then the pair correlation function is Poissonian [RS98, AEM21, RT22]. However, there are very few explicit (i.e. non-metric) results. When $\theta = 2$, Heath-Brown [Hea10] gave an algorithmic construction of certain $\alpha$ for which the pair correlation is Poissonian; however, this construction does not yield an explicit value of $\alpha$. When $\theta = 1/2$ and $\alpha^2 \in \mathbb{Q}$, the problem lends itself to tools from homogeneous dynamics. This was exploited by Elkies and McMullen [EM04], who showed that the gap distribution is not Poissonian, and by El-Baz, Marklof and Vinogradov [EMV15], who showed that the sequence $(\alpha n^{1/2}\, \mathrm{mod}\,1)_{n\in \mathbb{N} \setminus \square}$, where $\square$ denotes the set of squares, does have Poissonian pair correlation.

With these sparse exceptions, the only explicit results occur when the exponent $\theta$ is small. If $\theta \le 14/41$, the authors and Sourmelidis [LST24] showed that the pair correlation function is Poissonian for all values of $\alpha >0$. This was later extended by the authors [LT21] to show that these monomial sequences exhibit Poissonian $m$-point correlations (for $m\geq 3$) for any $\alpha >0$ if $\theta < 1/(m^2+m-1)$. To the best of our knowledge, the former is the only explicit result proving Poissonian pair correlations for sequences modulo $1$, and the latter result is the only result proving convergence of the higher-order correlations to any limit.

The authors’ previous work motivates a natural question: what about sequences which grow more slowly than any power of $n$? It is natural to hypothesize that such sequences might exhibit Poissonian $m$-point correlations for all $m$. However, there is a constraint. Marklof and Strömbergsson [MS13] have shown that the gap distribution of $((\log n)/(\log b) \,\mathrm{mod}\, 1)_{n\geq 1}$ exists for $b>1$ and is not Poissonian (thus the correlations cannot all be Poissonian). However, they also showed that in the limit as $b$ tends to $1$, this limiting distribution converges to the Poissonian distribution (see [MS13, (74)]). Thus, the natural question becomes: what can be said about sequences growing faster than $\log n$ but more slowly than any power of $n$?

With that context in mind, our result has several implications. First, it provides the only example at present of an explicit sequence whose $m$-point correlations can be shown to converge to the Poissonian limit for every $m$ (and thus whose gap distribution is Poissonian). Second, it answers the natural question implied by our previous work on monomial sequences. Finally, it answers the natural question implied by Marklof and Strömbergsson's result on logarithmic sequences.

1.1 Plan of the paper

The proof of Theorem 1.2 adds several new ideas to the method developed in [LT21], which on its own is insufficient for the definitive results established here. Broadly, the argument proceeds in three steps; below we describe the difficulties and innovations in each.

In the remainder of this work we take $\alpha = 1$; the same proof applies to general $\alpha$ after straightforward adaptations. Fix $m \ge 2$ and assume the sequence has Poissonian $j$-point correlation for $2\le j< m$.

Step 1. Remove the distinctness condition in the $m$-point correlation by relating the completed correlation to the $m$th moment of a random variable. This adds a new frequency variable, with the benefit of decorrelating the sequence elements. Then we perform a Fourier decomposition of this moment and, using a combinatorial argument from [LT21, § 3], we reduce the problem of convergence for the moment to convergence of one particular term to an explicit ‘target’. This step works quite similarly to what we did in [LT21].

Step 2. Using various partitions of unity, we further reduce the problem to an asymptotic evaluation of the $L^m([0,1])$-norm of a two-dimensional exponential sum. Then we apply van der Corput's $B$-process in each of the two variables. In contrast to our argument in [LT21], we can no longer use the form of the sequence to perform explicit computations throughout. Instead, a more fundamental understanding of how the two $B$-processes work is now required. In fact, after the first application of the $B$-process we end up with an implicitly defined phase function. Surprisingly, after the second application of the $B$-process (in the other variable) we can show that a manageable phase function arises! This is the content of Lemma 4.10, and we believe this by-product of our investigation to be of some independent interest. Understanding the resulting phase function is crucial for the next step. Furthermore, a simple computation shows that if we stop at this step and apply the triangle inequality, the resulting error term is of size $O((\log N)^{(A+1)m})$.

Step 3. Finally, we expand the $L^m([0,1])$-norm, giving an oscillatory integral. Then, using a localized version of van der Corput's lemma, we achieve an extra saving which bounds the error term by $o(1)$. In [LT21] we used classical theorems from linear algebra to justify that this localized version of van der Corput's lemma is applicable, by showing that Wronskians of a family of relevant curves are uniformly bounded from below. In the present situation, the underlying geometry and Wronskians are considerably more involved. After several initial manipulations we reduce matters to determinants of generalized Vandermonde matrices. To handle those we rely on recent work of Khare and Tao [KT21], which is precise enough to single out (by a small logarithmic gain) the largest contribution to the Wronskian and thereby complete the argument.

Notation

Throughout, we use the usual Bachmann–Landau notation: for functions $f,g:X \rightarrow \mathbb{R}$, defined on some set $X$, we write $f \ll g$ (or $f=O(g)$) to denote that there exists a constant $C>0$ such that $\vert f(x)\vert \leq C \vert g(x) \vert$ for all $x\in X$; a subscript, as in $O_{C,\delta}$, indicates that the implied constant may depend on the subscripted parameters. Moreover, let $f\asymp g$ denote $f \ll g$ and $g \ll f$, and let $f = o(g)$ denote that $\frac{f(x)}{g(x)} \to 0$.

Given a Schwartz function $f: \mathbb{R}^m \to \mathbb{R}$, let $\widehat{f}$ denote the $m$-dimensional Fourier transform:
\[ \widehat{f}(\boldsymbol{\xi}) := \int_{\mathbb{R}^m} f(\boldsymbol{\mathbf{x}})\, e(-\boldsymbol{\mathbf{x}}\cdot\boldsymbol{\xi})\,d\boldsymbol{\mathbf{x}}. \]
Here, and throughout, we let $e(x):= e^{2\pi i x}$. Moreover, we use the convention $\frac{0}{0}=0$ to avoid extra conditions on summations.

All of the sums which appear range over integers in the indicated interval. We will frequently take sums over multiple variables; thus, if $\boldsymbol{\mathbf{u}}$ is an $m$-dimensional vector, for brevity, we write

Moreover, all $L^p$ norms are taken with respect to Lebesgue measure; we often do not include the domain when it is obvious. Let

For ease of notation, $\varepsilon >0$ may vary from line to line by a bounded constant.

2. Preliminaries

The following stationary phase principle is derived from the work of Blomer, Khan and Young [BKY13, Proposition 8.2]. In applications we will not need the full asymptotic expansion, but it yields a particularly good error term, which is essential to our argument.

Proposition 2.1 (Stationary phase expansion)

Let $w\in C_{c}^{\infty }(\mathbb{R})$ be supported in a compact interval $J$ of length $\Omega _w>0$ such that there exists a $\Lambda _w>0$ for which
\[ w^{(j)}(x) \ll_j \frac{\Lambda_w}{\Omega_w^{j}} \]
for all $j\in \mathbb{N}$. Suppose $\psi$ is a smooth function on $J$ such that there exists a unique critical point $x_{0}$ with $\psi '(x_{0})=0$. Suppose there exist values $\Lambda _{\psi }>0$ and $\Omega _\psi >0$ such that
\[ \psi''(x) \gg \frac{\Lambda_\psi}{\Omega_\psi^{2}} \quad \text{and} \quad \psi^{(j)}(x) \ll_j \frac{\Lambda_\psi}{\Omega_\psi^{j}} \]

for all $j > 2$. Moreover, let $\delta \in (0,1/10)$ and $Z:=\Omega _w + \Omega _{\psi }+\Lambda _w+\Lambda _{\psi }+1$. If
\begin{equation} \Lambda_{\psi}\geq Z^{3\delta}\quad \mathrm{and}\quad \Omega_w\geq\frac{\Omega_{\psi}Z^{\frac{\delta}{2}}}{\Lambda_{\psi}^{1/2}}\end{equation}
hold, then
\[ I := \int_{\mathbb{R}} w(x)\, e(\psi(x))\,dx \]
has the asymptotic expansion

\[ I=\frac{e(\psi(x_{0}))}{\sqrt{\psi''(x_{0})}} \sum_{0\leq j \leq3C/\delta}p_{j}(x_{0})+O_{C,\delta}(Z^{-C}) \]


for any fixed $C\in \mathbb{Z}_{\geq 1}$; here

where

In a slightly simpler form we have the following lemma.

Lemma 2.2 (First-order stationary phase)

Let $\psi$ and $w$ be smooth, real-valued functions defined on a compact interval $[a, b]$, with $w(a) = w(b) = 0$. Suppose there exist constants $\Lambda _\psi,\Omega _w,\Omega _\psi \geq 3$ satisfying (2.1), with $Z$ as in Proposition 2.1 and $\Lambda _w=1$, such that

\begin{equation} \psi^{(j)}(x) \ll \frac{\Lambda_\psi}{ \Omega_\psi^j},\quad w^{(j)} (x)\ll \frac{1}{\Omega_w^j}\quad \text{and}\quad \psi^{(2)} (x)\gg \frac{\Lambda_\psi}{\Omega_\psi^2} \end{equation}


for all $j=0,1,2,\ldots$ and all $x\in [a,b]$. If $\psi ^\prime (x_0)=0$ for a unique $x_0\in [a,b]$, and if $\psi ^{(2)}(x)>0$ on $[a,b]$, then

\[ \int_{a}^{b} w(x) e(\psi(x)) \,d x = \frac{e(\psi(x_0) + 1/8)}{\sqrt{|\psi^{(2)}(x_0)|}} w(x_0) + O\bigg(\frac{\Omega_\psi^3}{\Lambda_{\psi}^{3/2}\Omega_w^2} + \frac{1}{Z}\bigg). \]


If instead $\psi ^{(2)}(x)<0$ on $[a,b]$, then the same equation holds with $e(\psi (x_0) + 1/8)$ replaced by $e(\psi (x_0) - 1/8)$.

Proof. We apply Proposition 2.1 with $\Lambda _w=1$ and $C=1$. In this case the first error term comes from the term $p_1$ in the expansion. All higher-order terms give a smaller contribution; see [BKY13, p. 20] for a more detailed explanation.
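Lemma 2.2 can be sanity-checked on a model case. The Python sketch below uses our own example, not one from the paper: $w(x)=\sin^2(\pi x)$ on $[0,1]$ and $\psi(x)=\lambda(x-1/2)^2$, so that $x_0=1/2$, $\psi(x_0)=0$ and $\psi''=2\lambda$, and the main term is $e(\pm 1/8)/\sqrt{2|\lambda|}$. Comparing with the corresponding Gaussian integrals over $\mathbb{R}$, one finds that the relative error of the main term is approximately $\sin(\pi/(4|\lambda|))$, i.e. of order $1/|\lambda|$, consistent with an error of size $\Lambda_\psi^{-3/2}$ against a main term of size $\Lambda_\psi^{-1/2}$.

```python
import cmath
import math


def e(t):
    """e(t) = exp(2 pi i t)."""
    return cmath.exp(2j * math.pi * t)


def oscillatory_integral(w, psi, a, b, steps=100_000):
    """Midpoint-rule approximation of int_a^b w(x) e(psi(x)) dx."""
    h = (b - a) / steps
    total = 0j
    for k in range(steps):
        x = a + (k + 0.5) * h
        total += w(x) * e(psi(x))
    return total * h


def stationary_phase_main_term(w, psi, psi2, x0):
    """e(psi(x0) + 1/8) w(x0) / sqrt(|psi''(x0)|), with -1/8 if psi'' < 0."""
    shift = 0.125 if psi2(x0) > 0 else -0.125
    return e(psi(x0) + shift) * w(x0) / math.sqrt(abs(psi2(x0)))


def relative_error(lam):
    """Relative error of the Lemma 2.2 main term for the model example."""
    w = lambda x: math.sin(math.pi * x) ** 2  # w(0) = w(1) = 0
    psi = lambda x: lam * (x - 0.5) ** 2      # unique critical point x0 = 1/2
    psi2 = lambda x: 2.0 * lam                # constant second derivative
    exact = oscillatory_integral(w, psi, 0.0, 1.0)
    main = stationary_phase_main_term(w, psi, psi2, 0.5)
    return abs(exact - main) / abs(main)


for lam in (200.0, -200.0):
    print(lam, relative_error(lam), math.sin(math.pi / (4 * abs(lam))))
```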

We also need the following version of van der Corput's lemma [Ste93, Chapter VIII, Proposition 2].

Lemma 2.3 (van der Corput's lemma)

Let $[a,b]$ be a compact interval. Let $\psi,w:[a,b]\rightarrow \mathbb{R}$ be smooth functions. Assume $\psi ''$ does not change sign on $[a,b]$ and that for some $j\geq 1$ and $\Lambda >0$ the bound
\[ |\psi^{(j)}(x)| \geq \Lambda \]
holds for all $x\in [a,b]$. Then
\[ \bigg|\int_a^b w(x)\, e(\psi(x))\,dx\bigg| \ll \Lambda^{-1/j}\bigg(|w(b)| + \int_a^b |w'(x)|\,dx\bigg), \]
where the implied constant depends only on $j$.
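Lemma 2.3 with $j=2$ can be illustrated by the model integral $I(\lambda)=\int_0^1 e(\lambda x^2)\,dx$ (our own example), where $\psi''=2\lambda=:\Lambda$ and $w\equiv 1$, so that $|w(b)|+\int|w'|=1$. The lemma predicts $|I(\lambda)|\ll\Lambda^{-1/2}$; comparing with the Fresnel integral $\int_0^\infty e(\lambda x^2)\,dx = e(1/8)/(2\sqrt{2\lambda})$, the quantity $\sqrt{2\lambda}\,|I(\lambda)|$ should in fact stay within roughly $0.04$ of $1/2$ for $\lambda\ge 10$, the deviation coming from the endpoint $x=1$. The Python sketch below checks this for a few values of $\lambda$.

```python
import cmath
import math


def model_integral(lam, steps=100_000):
    """Midpoint approximation of I(lam) = int_0^1 e(lam x^2) dx."""
    h = 1.0 / steps
    total = 0j
    for k in range(steps):
        x = (k + 0.5) * h
        total += cmath.exp(2j * math.pi * lam * x * x)
    return total * h


# psi(x) = lam x^2 has psi'' = 2 lam = Lambda (take j = 2) and w = 1,
# so Lemma 2.3 predicts |I(lam)| << Lambda^(-1/2).
scaled = {lam: math.sqrt(2.0 * lam) * abs(model_integral(lam))
          for lam in (10.0, 100.0, 1000.0)}
print(scaled)
```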

Generalized Vandermonde matrices

One of the primary difficulties which we will encounter in § 6 is that derivatives of exponentials (which arise as inverses of the logarithms defining our sequence) grow in complexity with each differentiation. To handle this we appeal to a recent result of Khare and Tao [KT21], which requires us to set up some notation. Given an $M$-tuple $\boldsymbol{\mathbf{u}} \in \mathbb{R}^M$, let
\[ V(\boldsymbol{\mathbf{u}}) := \prod_{1\leq i<j\leq M} (u_j - u_i) \]
denote the Vandermonde determinant. Furthermore, given two tuples $\boldsymbol{\mathbf{u}}$ and $\boldsymbol{\mathbf{n}}$, we define

\[ \boldsymbol{\mathbf{u}}^{\boldsymbol{\mathbf{n}}} := u_1^{n_1} \cdots u_M^{n_M}\quad \mbox{and}\quad \boldsymbol{\mathbf{u}}^{\circ\boldsymbol{\mathbf{n}}} := \begin{pmatrix} u_1^{n_1} & u_1^{n_2} & \cdots & u_1^{n_M}\\ u_2^{n_1} & u_2^{n_2} & \cdots & u_2^{n_M}\\ \vdots & \vdots & \ddots & \vdots \\ u_M^{n_1} & u_M^{n_2} & \cdots & u_M^{n_M} \end{pmatrix}, \]

the latter being a generalized Vandermonde matrix. Finally, let $\boldsymbol{\mathbf{n}}_{\min} :=(0,1, \ldots, M-1)$. Khare and Tao established the following lemma.

Lemma 2.4 [KT21, Lemma 5.3]

Let $K$ be a compact subset of the cone
\[ \{\boldsymbol{\mathbf{n}} \in \mathbb{R}^M:\ n_1 < n_2 < \cdots < n_M\}. \]
Then there exist constants $C,c>0$ such that
\[ c\, V(\boldsymbol{\mathbf{u}})\, \boldsymbol{\mathbf{u}}^{\boldsymbol{\mathbf{n}}-\boldsymbol{\mathbf{n}}_{\min}} \leq \det\big(\boldsymbol{\mathbf{u}}^{\circ\boldsymbol{\mathbf{n}}}\big) \leq C\, V(\boldsymbol{\mathbf{u}})\, \boldsymbol{\mathbf{u}}^{\boldsymbol{\mathbf{n}}-\boldsymbol{\mathbf{n}}_{\min}} \]
for all $\boldsymbol{\mathbf{u}} \in (0,\infty)^M$ with $u_1 \le \dots \le u_M$ and all $\boldsymbol{\mathbf{n}} \in K$.
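The two-sided bound in Lemma 2.4 can be explored numerically. The Python sketch below (our own helpers, with a plain Gaussian-elimination determinant) computes the ratio $\det(\boldsymbol{\mathbf{u}}^{\circ\boldsymbol{\mathbf{n}}})/(V(\boldsymbol{\mathbf{u}})\,\boldsymbol{\mathbf{u}}^{\boldsymbol{\mathbf{n}}-\boldsymbol{\mathbf{n}}_{\min}})$, which Lemma 2.4 bounds above and below for $\boldsymbol{\mathbf{n}}$ in a compact set $K$. For $\boldsymbol{\mathbf{n}}=\boldsymbol{\mathbf{n}}_{\min}$ the ratio is exactly $1$ (the classical Vandermonde identity), and for $M=2$ it equals $(1-r^d)/(1-r)$ with $r=u_1/u_2$ and $d=n_2-n_1$, which indeed lies between $\min(1,d)$ and $\max(1,d)$. The code assumes the entries of $\boldsymbol{\mathbf{u}}$ are distinct, so that $V(\boldsymbol{\mathbf{u}})\neq 0$.

```python
import math


def det(matrix):
    """Determinant by Gaussian elimination with partial pivoting."""
    a = [row[:] for row in matrix]
    n = len(a)
    result = 1.0
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        if a[pivot][col] == 0.0:
            return 0.0
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            result = -result
        result *= a[col][col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    return result


def vandermonde(u):
    """V(u) = prod_{i < j} (u_j - u_i)."""
    M = len(u)
    return math.prod(u[j] - u[i] for i in range(M) for j in range(i + 1, M))


def generalized_vandermonde(u, n):
    """The matrix u^{o n}, whose (i, k) entry is u_i ** n_k."""
    return [[ui ** nk for nk in n] for ui in u]


def khare_tao_ratio(u, n):
    """det(u^{o n}) / (V(u) * u^{n - n_min}) with n_min = (0, 1, ..., M-1)."""
    monomial = math.prod(u[i] ** (n[i] - i) for i in range(len(u)))
    return det(generalized_vandermonde(u, n)) / (vandermonde(u) * monomial)


print(khare_tao_ratio([1.0, 2.0, 3.0], [0, 1, 2]))
print(khare_tao_ratio([1.0, 2.0], [0.5, 2.5]))
```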

3. Combinatorial completion

The proof of Theorem 1.3 follows an inductive argument. Thus, fix $m \ge 2$ and assume $(x(n))$ has Poissonian $j$-point correlations for all $2 \le j < m$. Let $f$ be a positive $C^\infty (\mathbb{R})$ function with $\widehat{f} \in C_c^\infty (\mathbb{R})$, and define
\[ S_N(s,f) := \sum_{n \in [N]} \sum_{k \in \mathbb{Z}} f(N(\omega(n) + k + s)). \]

Note that if $f$ were the indicator function of an interval $I$, then $S_N(s,f)$ would count the number of points of $(x(n))_{n \le N}$ which land in the shifted interval $I/N - s$ (modulo $1$). Now consider the $m$th moment of $S_N$. Then one can show that (see [LT21, § 3])

\begin{align}
\mathcal{M}^{(m)}(N) &:= \int_0^1 S_N(s,f)^m \,{d}s \nonumber\\
&\; = \int_0^1 \sum_{\boldsymbol{\mathbf{n}} \in [N]^m}\sum_{\boldsymbol{\mathbf{k}} \in \mathbb{Z}^m} f(N(\omega(n_1)+k_1 + s)) \cdots f(N(\omega(n_m) +k_m + s))\,{d} s \nonumber\\
&\; = \frac{1}{N}\sum_{\boldsymbol{\mathbf{n}} \in [N]^m}\sum_{\boldsymbol{\mathbf{k}}\in \mathbb{Z}^{m-1}} F(N(\omega(n_1)-\omega(n_2) +k_1),\ldots, N(\omega(n_{m-1})-\omega(n_m) +k_{m-1})),
\end{align}


where

\begin{align*} & F(z_1,z_2, \ldots, z_{m-1}) \\ &\quad := \int_{\mathbb{R}} f(s)f(z_1+z_2+\cdots + z_{m-1}+s) f(z_2+\cdots + z_{m-1}+s)\cdots f(z_{m-1}+s)\,d s. \end{align*}


As such, we can relate the $m$th moment of $S_N$ to the $m$-point correlation of $(x(n))$ tested against $F$. Note that since $\widehat {f}$ has compact support, $\widehat {F}$ also has compact support. To recover the $m$-point correlation in full generality, we replace the moment $\int S_N(s,f)^m \,{d}s$ with the mixed moment $\int \prod _{i=1}^m S_N(s,f_i) \,{d} s$ for a collection of functions $f_i:\mathbb {R}\to \mathbb {R}$. The proof below applies in this generality; however, for ease of notation we only explain the details in the former case.
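The last equality in (3.1) holds for any real sequence, so it can be checked numerically. The Python sketch below does this for $m=2$ under assumptions that are ours and purely illustrative: $f(x)=e^{-\pi x^2}$ (a Gaussian, whose Fourier transform is not compactly supported, although the identity does not need this), the hypothetical sequence $\omega(n)=(\log n)^2$, and $S_N(s,f)=\sum_{n\le N}\sum_{k}f(N(\omega(n)+k+s))$ as in the middle line of (3.1). For $m=2$ one has $F(z)=\int f(s)f(z+s)\,ds$, which for this Gaussian equals $e^{-\pi z^2/2}/\sqrt{2}$.

```python
import math

N = 5      # number of points (small, for illustration)
K = 30     # truncation of the k-sums; omitted terms are below double precision
M = 512    # quadrature nodes for the s-integral over [0, 1)


def f(x: float) -> float:
    # Hypothetical test function: the Gaussian exp(-pi x^2).
    return math.exp(-math.pi * x * x)


def F(z: float) -> float:
    # m = 2: F(z) = int f(s) f(z + s) ds = exp(-pi z^2 / 2) / sqrt(2) for this Gaussian.
    return math.exp(-math.pi * z * z / 2) / math.sqrt(2)


# Hypothetical sequence omega(n) = (log n)^2 for n = 1, ..., N.
omega = [math.log(n) ** 2 for n in range(1, N + 1)]


def S(s: float) -> float:
    # S_N(s, f) = sum_{n <= N} sum_k f(N(omega(n) + k + s)), truncated to |k| <= K.
    return sum(f(N * (w + k + s)) for w in omega for k in range(-K, K + 1))


# Left-hand side: int_0^1 S_N(s, f)^2 ds. S_N is smooth and 1-periodic in s,
# so the equally spaced (trapezoidal) rule converges extremely fast.
lhs = sum(S(j / M) ** 2 for j in range(M)) / M

# Right-hand side for m = 2: (1/N) sum_{n1, n2} sum_k F(N(omega(n1) - omega(n2) + k)).
rhs = sum(F(N * (w1 - w2 + k))
          for w1 in omega for w2 in omega for k in range(-K, K + 1)) / N

print(lhs, rhs)
```

The two printed numbers agree up to rounding error; the diagonal terms $n_1=n_2$ are included on both sides, since (3.1) sums over all of $[N]^m$.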

3.1 Combinatorial target

We will need the following combinatorial definitions to explain how to prove convergence of the $m$-point correlation from (3.1). Given a partition $\mathcal {P}$ of $[ m]$, we say that $j\in [m]$ is isolated if $j$ belongs to a partition element of size $1$. A partition is called non-isolating if no element is isolated, and isolating otherwise. For example, for the partition $\mathcal {P} = \{\{1,3\}, \{4\}, \{2,5,6\}\}$ of $[6]$, the element $4$ is isolated, and thus $\mathcal {P}$ is isolating.
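To make these definitions concrete, the following Python sketch enumerates all set partitions of $[m]$ and keeps the non-isolating ones, that is, the index set $\mathscr{P}_m$ appearing in Lemma 3.1. The function names are hypothetical and chosen only for illustration; since the enumeration is exhaustive (the number of partitions grows like the Bell numbers), it is only practical for small $m$.

```python
from typing import Iterator, List

Partition = List[List[int]]


def set_partitions(elements: List[int]) -> Iterator[Partition]:
    """Yield every set partition of `elements`; blocks are lists."""
    if not elements:
        yield []
        return
    first, rest = elements[0], elements[1:]
    for partition in set_partitions(rest):
        # Insert `first` into each existing block in turn ...
        for i in range(len(partition)):
            yield partition[:i] + [[first] + partition[i]] + partition[i + 1:]
        # ... or let it form a new singleton block.
        yield [[first]] + partition


def is_isolating(partition: Partition) -> bool:
    """A partition is isolating if some element lies in a block of size 1."""
    return any(len(block) == 1 for block in partition)


def non_isolating_partitions(m: int) -> List[Partition]:
    """All non-isolating partitions of [m] = {1, ..., m}."""
    return [p for p in set_partitions(list(range(1, m + 1))) if not is_isolating(p)]


print(is_isolating([[1, 3], [4], [2, 5, 6]]))
print([len(non_isolating_partitions(m)) for m in range(1, 8)])
```

For instance, the sketch confirms that the partition above is isolating, and that $[4]$ has exactly four non-isolating partitions: $\{\{1,2,3,4\}\}$ and the three pairings.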

Now consider the middle line of (3.1). We apply Poisson summation to each of the $k_i$ sums; that is, we insert

\begin{equation} \sum_{k \in \mathbb{Z}} f(N(\omega(n)+k + s)) = \frac{1}{N}\sum_{k\in \mathbb{Z}} e(k(\omega(n)+s)) \widehat{f}\bigg(\frac{k}{N}\bigg) \end{equation}

yielding

\begin{equation} \mathcal{M}^{(m)}(N) = \frac{1}{N^m} \int_0^1 \sum_{\boldsymbol{\mathbf{n}} \in [N]^m} \sum_{\boldsymbol{\mathbf{k}} \in \mathbb{Z}^m} \widehat{f}\bigg(\frac{\boldsymbol{\mathbf{k}}}{N}\bigg) e(\boldsymbol{\mathbf{k}}\cdot \omega(\boldsymbol{\mathbf{n}}) + \boldsymbol{\mathbf{k}}\cdot \boldsymbol{\mathbf{1}}s)\,{d} s, \end{equation}


where $\omega (\boldsymbol {\mathbf {n}}) := (\omega (n_1), \omega (n_2), \ldots, \omega (n_m))$ and $\widehat {f}(\frac {\boldsymbol {\mathbf {k}}}{N}) := \prod _{i=1}^m \widehat {f}(\frac {k_i}{N})$.
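The Poisson summation step (3.2) can be sanity-checked numerically. The Python sketch below rests on assumptions of ours: the Fourier convention $\widehat f(\xi)=\int f(x)e(-x\xi)\,dx$ with $e(x)=e^{2\pi i x}$, and the Gaussian $f(x)=e^{-\pi x^2}$, which is its own Fourier transform under this convention. The Gaussian does not have compactly supported $\widehat f$, but Poisson summation holds for all Schwartz functions, so the identity can still be tested. The values of $N$, $\omega(n)$ and $s$ are arbitrary.

```python
import cmath
import math


def e(x: float) -> complex:
    # e(x) = exp(2 pi i x)
    return cmath.exp(2j * math.pi * x)


def f(x: float) -> float:
    return math.exp(-math.pi * x * x)


def f_hat(xi: float) -> float:
    # Fourier transform of the Gaussian under the convention above: again exp(-pi xi^2).
    return math.exp(-math.pi * xi * xi)


def poisson_sides(N: int, omega_n: float, s: float, K: int = 200):
    """Both sides of (3.2), with the k-sums truncated to |k| <= K."""
    lhs = sum(f(N * (omega_n + k + s)) for k in range(-K, K + 1))
    rhs = sum(e(k * (omega_n + s)) * f_hat(k / N) for k in range(-K, K + 1)) / N
    return lhs, rhs


lhs, rhs = poisson_sides(N=7, omega_n=0.3719, s=0.6)
print(lhs, rhs.real, abs(rhs.imag))
```

The imaginary part of the right-hand side vanishes up to rounding because $\widehat f$ is even, and the real parts of both sides agree to machine precision.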

In [LT21, § 3] we showed that if

\[ \mathcal{E}(N):= \frac{1}{N^m} \int_0^1 \sum_{\boldsymbol{\mathbf{n}} \in [N]^m} \sum_{\substack{\boldsymbol{\mathbf{k}} \in (\mathbb{Z}^{\ast})^m}} \widehat{f}\bigg(\frac{\boldsymbol{\mathbf{k}}}{N}\bigg) e( \boldsymbol{\mathbf{k}}\cdot \omega(\boldsymbol{\mathbf{n}}) + \boldsymbol{\mathbf{k}}\cdot \boldsymbol{\mathbf{1}}s)\,{d} s, \]

where $\mathbb{Z}^{\ast}:=\mathbb{Z}\setminus\{0\}$, then, for fixed $m$ and assuming the inductive hypothesis, Theorem 1.3 reduces to the following lemma.

Lemma 3.1 Let $\mathscr {P}_m$ denote the set of non-isolating partitions of $[m]$. We have that

where each partition is written as $\mathcal {P} = (P_1, P_2, \ldots, P_d)$ and $|P_i|$ denotes the size of $P_i$.

3.2 Dyadic decomposition

It is convenient to decompose the sums over $n$ and $k$ within $S_N(s,f)$ into (nearly) dyadic ranges in a smooth manner. Given $N$, let $Q>1$ be the unique integer with $e^{Q}\leq N < e^{Q+1}$. We now describe a smooth partition of unity that approximates the indicator function of $[1,N]$. Strictly speaking, these partitions depend on $Q$, but we suppress this from the notation. Furthermore, since we want asymptotics for $\mathcal {E}(N)$, some care is needed at the right endpoint of $[1,N]$, where a finer-than-dyadic decomposition is required. We now make this precise; a detailed construction can be found in the Appendix. For $0\le q < Q$ we let $\mathfrak {N}_q: \mathbb {R}\to [0,1]$ denote a smooth function for which

and such that $\mathfrak {N}_{q}(x) + \mathfrak {N}_{q+1}(x) = 1$ for $x\in [e^q,e^{q+1})$. Now, for $q \ge Q$, we let the $\mathfrak {N}_q$ form a smooth partition of unity for which

\begin{equation} \sum_{q=0}^{2Q-1} \mathfrak{N}_q (x) = \begin{cases} 1 & \mbox{if } 1< x < e^{Q},\\ 0 & \mbox{if } x< 1/2 \mbox{ or } x > N + \dfrac{3N}{\log(N)} \end{cases} \end{equation}

and

while $\operatorname {supp}(\mathfrak {N}_Q) \subset (0.9\cdot e^{Q-1}, 1.1\cdot e^{Q})$. Let $\Vert g \Vert _{\infty }$ denote the sup norm of a function $g : \mathbb {R} \to \mathbb {R}$. We impose the following condition on the derivatives:

\begin{equation} \Vert \mathfrak{N}_{q}^{(j)}\Vert_{\infty} \ll \begin{cases} e^{-qj} & \mbox{for } q < Q,\\ (e^{Q}/Q)^{-j} & \mbox{for } q \ge Q, \end{cases} \end{equation}

for $j \ge 1$. For technical reasons, we assume that $\mathfrak {N}_q^{(1)}$ changes sign only once.
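One standard way to build functions of the kind required for $q<Q$ is to work in the variable $t=\log x$ and take differences of a $C^\infty$ step function $\chi$. The Python sketch below is such a construction under our own choices; it is not necessarily the construction in the Appendix, and it does not model the finer pieces $q\ge Q$ near the right endpoint. It sets $\mathfrak N_q(x)=\chi(\log x-q+1)-\chi(\log x-q)$, so $\mathfrak N_q$ is supported in $(e^{q-1},e^{q+1})$ and $\mathfrak N_q+\mathfrak N_{q+1}=1$ on $[e^q,e^{q+1})$ because the sum telescopes. Since $\frac{d}{dx}\chi(\log x-c)=\chi'(\log x-c)/x$, each derivative costs a factor of order $e^{-q}$ on the support, in line with the first case of (3.7); moreover $\mathfrak N_q$ first increases and then decreases, so $\mathfrak N_q^{(1)}$ changes sign once.

```python
import math


def _psi(t: float) -> float:
    # exp(-1/t) for t > 0 and 0 otherwise: C^infinity but not analytic at 0.
    return math.exp(-1.0 / t) if t > 0 else 0.0


def smooth_step(t: float) -> float:
    # C^infinity step: 0 for t <= 0, 1 for t >= 1, strictly increasing on (0, 1).
    # The denominator never vanishes, since t > 0 or 1 - t > 0.
    a, b = _psi(t), _psi(1.0 - t)
    return a / (a + b)


def bump(q: int, x: float) -> float:
    """Hypothetical e-adic bump N_q(x), supported in (e^{q-1}, e^{q+1})."""
    if x <= 0:
        return 0.0
    t = math.log(x)
    return smooth_step(t - q + 1) - smooth_step(t - q)


Q = 6
for x in (1.0, 2.5, 10.0, 100.0):
    # The sum over 0 <= q < Q telescopes to 1 on [1, e^{Q-1}].
    print(x, sum(bump(q, x) for q in range(Q)))
```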

Notice that (3.5) implies

\[ \sum_{n \in \mathbb{Z}}\sum_{q=0}^{2Q-2} \mathfrak{N}_q (n) \sum_{k \in \mathbb{Z}} f(N(\omega(n) +k +s)) \leq S_N(s,f) \leq\sum_{n\in \mathbb{Z}}\sum_{q=0}^{2Q-1} \mathfrak{N}_q (n) \sum_{k \in \mathbb{Z}} f(N(\omega(n) +k +s)). \]


We focus on the upper bound; the lower bound can be treated similarly. Applying Poisson summation for every fixed $n$ and using the positivity of the inner sum, we obtain

\[ S_N(s,f)\leq \frac{1}{N}\sum_{n \in \mathbb{Z}}\sum_{q=0}^{2Q-1} \mathfrak{N}_q(n) \sum_{k \in \mathbb{Z}} \widehat{f} \bigg(\frac{k}{N}\bigg) e(k(\omega(n) +s)). \]


Next, by positivity, we have that

\begin{equation} \mathcal{M}^{(m)}(N) \le \int_0^1 \bigg(\frac{1}{N}\sum_{q=0}^{2Q-1} \sum_{n \in \mathbb{Z}} \mathfrak{N}_{q}(n)\sum_{k \in \mathbb{Z}} \widehat{f}\bigg(\frac{k}{N}\bigg) e( k \omega(n) + ks)\bigg)^m {d} s. \end{equation}


All frequencies $\boldsymbol {\mathbf {k}}$ with $k_j=0$ for at least one index $1\leq j\leq m-1$ contribute to $\mathcal {M}^{(m)}(N)$ exactly

\[ \sum_{i=1}^{m-1} \begin{pmatrix} m-1\\ i \end{pmatrix}\widehat{f}(0)^{i}\int_0^1 \bigg(\frac{1}{N}\sum_{n \in [N]}\sum_{k \neq 0} \widehat{f}\bigg(\frac{k}{N}\bigg) e( k \omega(n) + ks)\bigg)^{m-i} {d} s. \]


Subtracting exactly this term from both sides of (3.8) and using our inductive assumption that the moments $\mathcal {M}^{(m-i)}(N)$ converge for $1\leq i\leq m-2$ yields

\begin{equation} \mathcal{E}(N) \le \int_0^1\bigg(\frac{1}{N}\sum_{q=0}^{2Q-1} \sum_{n \in \mathbb{Z}} \mathfrak{N}_{q}(n)\sum_{k\neq 0}\widehat{f}\bigg(\frac{k}{N}\bigg) e(k \omega(n) + ks)\bigg)^m {d} s +o(1). \end{equation}

The same argument can be used to yield

\[ \mathcal{E}(N)+o(1) \ge \int_0^1\bigg(\frac{1}{N}\sum_{q=0}^{2Q-2} \sum_{n \in \mathbb{Z}} \mathfrak{N}_{q}(n)\sum_{k\neq 0}\widehat{f}\bigg(\frac{k}{N}\bigg) e( k \omega(n) + ks)\bigg)^m {d} s . \]


We decompose the $k$ sums similarly, although, thanks to the compact support of $\widehat {f}$, we need not worry about the range $k\ge N$. Let $\mathfrak {K}_u:\mathbb {R} \to [0,1]$ be smooth functions such that, for $U :=\lceil \log N \rceil$,

\[ \sum_{u=-U}^{U} \mathfrak{K}_u(k) =\begin{cases} 1 & \mbox{if } \vert k\vert \in [1, N),\\ 0 & \mbox{if } \vert k\vert < 1/2 \mbox{ or } \vert k\vert > 2N, \end{cases} \]


and the symmetry $\mathfrak {K}_{-u}(k) = \mathfrak {K}_{u}(-k)$ holds for all $u,k> 0$. Similarly,

\begin{align*} & \operatorname{supp}(\mathfrak{K}_u) \subset[e^{u }/3, 3e^{u})\quad \mbox{if } u \ge 0,\quad \mbox{and } \\ & \Vert \mathfrak{K}_{u}^{(j)} \Vert_{\infty} \ll e^{-|u|j},\quad \mbox{for all } j\ge 1. \end{align*}


As for $\mathfrak {N}_q$, we also assume that $\mathfrak {K}_u^{(1)}$ changes sign exactly once.

Therefore, a central role is played by the smoothed exponential sums

\begin{equation} \mathcal{E}_{q,u}(s):=\frac{1}{N}\sum_{k\in \mathbb{Z}} \mathfrak{K}_{u}(k) \widehat{f}\bigg(\frac{k}{N}\bigg)e( ks)\sum_{n\in \mathbb{Z}} \mathfrak{N}_{q}(n)e( k\omega(n) ). \end{equation}


Notice that (3.9) and the compact support of $\widehat {f}$ imply

\[ \mathcal{E}(N) \ll \bigg\Vert \sum_{u=-U}^{U}\sum_{q=0}^{2Q-1} \mathcal{E}_{q,u}\bigg\Vert_{L^m(\mathbb{R})}^m. \]


Now write

\[ \mathcal{F}(N) := \frac{1}{N^m}\sum_{\boldsymbol{\mathbf{q}}\in [0,2Q-1]^m} \sum_{\boldsymbol{\mathbf{u}} \in [-U,U]^m}\sum_{\boldsymbol{\mathbf{k}},\boldsymbol{\mathbf{n}} \in \mathbb{Z}^m} \mathfrak{K}_{\boldsymbol{\mathbf{u}}}(\boldsymbol{\mathbf{k}})\mathfrak{N}_{\boldsymbol{\mathbf{q}}}(\boldsymbol{\mathbf{n}}) \int_0^1\widehat{f}\bigg(\frac{\boldsymbol{\mathbf{k}}}{N}\bigg) e( \boldsymbol{\mathbf{k}}\cdot \omega(\boldsymbol{\mathbf{n}}) + \boldsymbol{\mathbf{k}}\cdot \boldsymbol{\mathbf{1}} s)\, {d} s, \]

where $\mathfrak {N}_{\boldsymbol {\mathbf {q}}}(\boldsymbol {\mathbf {n}}) := \mathfrak {N}_{q_1}(n_1)\mathfrak {N}_{q_2}(n_2)\cdots \mathfrak {N}_{q_m}(n_m)$ and $\mathfrak {K}_{\boldsymbol {\mathbf {u}}}(\boldsymbol {\mathbf {k}}) := \mathfrak {K}_{u_1}(k_1)\mathfrak {K}_{u_2}(k_2)\cdots \mathfrak {K}_{u_m}(k_m)$. Our goal is to establish that $\mathcal {F}(N)$ equals the right-hand side of (3.4) up to an $o(1)$ term. Since the same asymptotic can be established for the lower bound, we may then conclude the asymptotic for $\mathcal {E}(N)$. As the details are identical, we focus only on $\mathcal {F}(N)$.

Fixing $\boldsymbol {\mathbf {q}}$ and $\boldsymbol {\mathbf {u}}$, we let

\[ \mathcal{F}_{\boldsymbol{\mathbf{q}},\boldsymbol{\mathbf{u}}}(N)=\frac{1}{N^m}\int_0^1\sum_{\substack{\boldsymbol{\mathbf{n}},\boldsymbol{\mathbf{k}} \in \mathbb{Z}^m}} \mathfrak{N}_{\boldsymbol{\mathbf{q}}}(\boldsymbol{\mathbf{n}})\mathfrak{K}_{\boldsymbol{\mathbf{u}}}(\boldsymbol{\mathbf{k}}) \widehat{f}\bigg(\frac{\boldsymbol{\mathbf{k}}}{N}\bigg) e(\boldsymbol{\mathbf{k}}\cdot \omega(\boldsymbol{\mathbf{n}}) + \boldsymbol{\mathbf{k}}\cdot \boldsymbol{\mathbf{1}}s) \,{d} s. \]


Remark In the following sections, we fix $\boldsymbol {\mathbf {q}}$ and $\boldsymbol {\mathbf {u}}$. Because of the way we have defined $\mathfrak {N}_q$, there are two cases: $q< Q$ and $q\ge Q$. The only real difference between them lies in the bounds (3.7), which differ by a power of $Q = \lfloor \log N \rfloor$. To keep the notation simple, we assume that $q < Q$ and work with the first bound. In practice, the logarithmic correction does not affect any of the results or proofs.

4. Applying the $B$-process

4.1 Degenerate regimes

Fix $\delta = 1/(m + 1)$. We say that $(q,u)\in [2 Q] \times [-U,U]$ is degenerate if either

holds. Otherwise, $(q,u)$ is called non-degenerate. Let $\mathscr {G}(N)$ denote the set of all non-degenerate pairs $(q,u)$. In this section it suffices to suppose that $u>0$ (and therefore $k>0$). Next, we show that degenerate pairs $(q,u)$ contribute a negligible amount to $\mathcal {F}(N)$.

First, assume $q \le \delta Q$. Expanding the $m$th power, evaluating the $s$-integral and estimating trivially, we obtain

\begin{align*} \Vert \mathcal{E}_{q,u} \Vert_{L^m}^m &= \int_0^1\bigg(\frac{1}{N} \sum_{k \in \mathbb{Z}} \mathfrak{K}_u(k)\widehat{f}\bigg(\frac{k}{N}\bigg) e(ks) \sum_{n \in \mathbb{Z}} \mathfrak{N}_{q}(n)e(k\omega(n))\bigg)^m {d}s\\ &\ll \frac{1}{N^m}\sum_{\substack{k_i \asymp e^u,\\ i =1,\ldots m}} \max_x\bigg(\widehat{f}\bigg(\frac{x}{N}\bigg)\bigg)^m\sum_{\substack{n_i \asymp e^q,\\ i =1,\ldots m}} \bigg|\int_0^1 e((k_1+ \cdots + k_m)s)\,{d}s\bigg|\\ &\ll \frac{1}{N^m} \#\{k_1,\ldots,k_m\asymp e^{u}: k_1 +\cdots +k_m = 0 \}N^{m\delta}\ll N^{m\delta-1}. \end{align*}


Since $m\delta-1=-1/(m+1)<0$, this bound tends to $0$ as $N\to\infty$. If $u< q^{(A-1)/2}$ and $q>\delta Q$, then we can apply the Euler summation formula, followed by van der Corput's lemma with $j=1$, to conclude that

where the numerator is the size of the support of $\mathfrak {N}_q$ and the denominator is the maximum value of $k \omega '(x)$ for $x$ in that support. Hence,

Note that

\[ \sum_{q\leq Q}\frac{e^{q}}{q^{A-1}}\ll\int_{1}^{Q}\frac{e^{q}}{q^{A-1}}{d}q= \int_{1}^{Q/2}\frac{e^{q}}{q^{A-1}}{d}q+\int_{Q/2}^{Q} \frac{e^{q}}{q^{A-1}}{d}q\ll e^{Q/2}+\frac{1}{Q^{A-1}} \int_{Q/2}^{Q}e^{q}\,{d}q\ll\frac{e^{Q}}{Q^{A-1}}. \]
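The estimate above says that the sum is dominated by its last few terms. A quick numerical sanity check in Python (an illustration, not a proof), for a few sample values of $A$ chosen by us, shows the ratio of the two sides staying bounded; for large $Q$ it approaches $1/(1-e^{-1})\approx 1.58$, the value suggested by comparing the sum with a geometric series.

```python
import math


def ratio(Q: int, A: float) -> float:
    """(sum_{1 <= q <= Q} e^q / q^(A-1)) divided by (e^Q / Q^(A-1))."""
    total = sum(math.exp(q) / q ** (A - 1) for q in range(1, Q + 1))
    return total / (math.exp(Q) / Q ** (A - 1))


for A in (1.5, 2.0, 3.0):
    print(A, [round(ratio(Q, A), 3) for Q in (10, 50, 200)])
```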

Thus,

\[ \bigg\Vert \sum_{\delta Q\leq q\leq Q} \sum_{u\leq q^{(A-1)/2}} \mathcal{E}_{q,u}\bigg\Vert_\infty \ll\frac{1}{N} \sum_{q\leq Q} \sum_{u\leq q^{(A-1)/2}}\sum_{k\asymp e^{u}}\frac{1}{k} \frac{e^{q}}{q^{A-1}}\ll\frac{1}{N} \frac{e^{Q}}{Q^{A-1}} \sum_{u\leq Q^{(A-1)/2}}1\leq\frac{1}{Q^{{(A-1)/2}}}. \]


Taking the $L^m$-norm then yields

\[ \bigg\Vert \sum_{(q,u)\in [2Q]\times[-U,U] \setminus \mathscr{G}(N)}\mathcal{E}_{q,u}\bigg\Vert_{L^m}^m \ll_{\delta} \log(N)^{-\rho}, \]


for some $\rho >0$. Hence, the triangle inequality implies

\begin{equation} \mathcal{F}(N) =\bigg\Vert \sum_{(q,u) \in \mathscr{G}(N)}\mathcal{E}_{q,u} \bigg\Vert_{L^m}^m + O((\log N)^{-\rho}). \end{equation}

Next, in preparation for dismissing a further degenerate regime, let $w,W$ denote strictly positive numbers satisfying $w< W$. Consider

where $\| \cdot \|$ denotes the distance to the nearest integer. As in [LT21, Proof of Lemma 4.1], we shall need the following lemma.

Lemma 4.1 If $W<1/10$, then

\[ \sum_{e^{u}\leq |k|< e^{u+1}}g_{w,W}(k)\ll\bigg(e^{u}+\frac{1}{w}\bigg)\log(1/W) \]


where the implied constant is absolute.

Proof. The proof is elementary, hence we only sketch the main idea. If $e^{u}w <1$, then we obtain the bound $(1/w) \log (1/W)$; otherwise we obtain the bound $e^{u}\log (1/W)$. Focusing on the latter case, we first distinguish between those $x$ which contribute $\frac {1}{\|xw\|}$ and those which contribute $\frac {1}{W}$, and then count how many contribute the latter. For the former, since consecutive points $xw$ are spaced $w<W$ apart, we can convert the sum into $e^uw$ many integrals of the form $(1/w) \int _{W/w}^{1/w} (1/x) \,{d}x$.
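Assuming, as the proof sketch suggests, that $g_{w,W}(x)=\min(1/\|xw\|,1/W)$, Lemma 4.1 can be probed numerically. The Python check below (that form of $g_{w,W}$ and the parameter grid are our assumptions) compares the sum in Lemma 4.1 with $(e^u+1/w)\log(1/W)$ for several choices with $w<W<1/10$ and reports the largest ratio, which stays bounded as the lemma predicts. The standing assumption $w<W$ matters here: for $w=1/2$, say, half of the points $kw$ are integers, and the sum is then of size $e^u/W$.

```python
import math


def g(x: float, w: float, W: float) -> float:
    # Assumed form: g_{w,W}(x) = min(1/||x w||, 1/W), ||.|| = distance to the nearest integer.
    d = abs(x * w - round(x * w))
    return 1.0 / max(d, W)  # equals min(1/d, 1/W), and 1/W when d == 0


def dyadic_sum(u: int, w: float, W: float) -> float:
    # Sum of g over e^u <= |k| < e^{u+1}; g(-k) = g(k), so we double the positive part.
    lo, hi = math.ceil(math.exp(u)), math.ceil(math.exp(u + 1))
    return 2 * sum(g(k, w, W) for k in range(lo, hi))


def worst_ratio() -> float:
    worst = 0.0
    for W in (0.09, 0.01, 0.001):
        for c in (0.5, 0.1234, 0.01):  # w = c * W, so that w < W
            w = c * W
            for u in range(10):
                bound = (math.exp(u) + 1 / w) * math.log(1 / W)
                worst = max(worst, dyadic_sum(u, w, W) / bound)
    return worst


print(worst_ratio())
```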

With the previous lemma at hand, we can show that an additional degenerate regime is negligible. Specifically, the first step of the $B$-process is an application of Poisson summation. Depending on the new summation variable, there may or may not be a stationary point. The following lemma allows us to dismiss the contribution when there is no stationary point. Fix $k\asymp e^{u}$ and let $[a,b]:=\mathrm {supp}(\mathfrak {N}_{q})$. Consider

\[ \mathrm{Err}(k):=\sum_{\underset{m_{q}(r)>0}{r\in\mathbb{Z}}} \int_{\mathbb{R}} e(\Phi_r(x)) \mathfrak{N}_{q}(x)\,{d}x, \]


where

Our next aim is to show that the smooth exponential sum

\[ \mathrm{Err}_{u}(s):=\sum_{k\in\mathbb{Z}}e(ks)\mathrm{Err}(k)\mathfrak{K}_{u}(k) \widehat{f}\bigg(\frac{k}{N}\bigg) \]


is small on average over $s$.

Lemma 4.2 Fix any constant $C>0$. Then the bound

\begin{equation} I_{\boldsymbol{\mathbf{u}}}:=\int_0^1\prod_{i=1}^m \operatorname{Err}_{u_i}(s)\,{d}s\ll Q^{-C}N^{m} \end{equation}


holds uniformly in $Q^{{(A-1)/2}}\leq \boldsymbol {\mathbf {u}}\ll Q$ (understood componentwise).

Proof. Let $\mathcal {L}_{\boldsymbol {\mathbf {u}}}$ denote the truncated sub-lattice of $\mathbb {Z}^{m}$ consisting of all $\boldsymbol {\mathbf {k}}\in \mathbb {Z}^{m}$ such that $k_{1}+\cdots +k_{m}=0$ and $\vert k_{i}\vert \asymp e^{u_i}$ for all $i\leq m$. The set $\mathcal {L}_{\boldsymbol {\mathbf {u}}}$ arises from

\begin{equation} I_{\boldsymbol{\mathbf{u}}}=\sum_{\underset{i\leq m}{|k_{i}|\asymp e^{u}}}\bigg(\bigg(\prod_{i\leq m} \mathrm{Err}(k_{i})\mathfrak{K}_{u}(k_{i})\widehat{f}\bigg(\frac{k_i}{N}\bigg)\bigg) \int_{0}^{1}e((k_{1}+\cdots+k_{m})s)\,{d}s\bigg)\ll \sum_{\boldsymbol{\mathbf{k}}\in\mathcal{L}_{\boldsymbol{\mathbf{u}}}} \bigg(\prod_{i\leq m}\mathrm{Err}(k_{i})\bigg).\end{equation}


Partial integration and the dyadic decomposition allow one to show that the contribution of the terms with $\vert r\vert \geq Q^{O(1)}$ to $\operatorname {Err}(k_i)$ is $O(Q^{-C})$ for any $C>0$. Hence, from van der Corput's lemma (Lemma 2.3) with $j=2$ and the assumption $m_{q}(r)>0$, we infer

where the implied constant is absolute. Notice that $\omega '(a)\asymp q^{A-1}e^{-q}=:w$ and

Thus, $\mathrm {Err}(k)\ll g_{w,W}(k) Q^{O(1)}$. On $\mathcal {L}_{\boldsymbol {\mathbf {u}}}$ the entry $k_m=-(k_1+\cdots+k_{m-1})$ is determined by $k_1,\ldots,k_{m-1}$; hence, using $\mathrm {Err}(k_{i})\ll g_{w,W}(k_{i}) Q^{O(1)}$ for $i< m$ and $\mathrm {Err}(k_{m})\ll Q^{O(1)}/W$ in (4.3) produces the estimate

\begin{equation} I_{\boldsymbol{\mathbf{u}}}\ll\frac{Q^{O(1)}}{W}\sum_{\underset{i< m}{\vert k_{i}\vert\asymp e^{u}}} \bigg(\prod_{i< m}g_{w,W}(k_{i})\bigg)=\frac{Q^{O(1)}}{W} \bigg(\sum_{\vert k \vert \asymp e^{u}}g_{w,W}(k)\bigg)^{m-1}.\end{equation}


Suppose $W\geq N^{-\varepsilon }$. Then $g_{w,W}(k)\leq N^{\varepsilon }$ and we obtain

Now suppose $W< N^{-\varepsilon }\leq 1/10$. Then Lemma 4.1 is applicable and yields

Plugging this into (4.4) and using $1/W\ll e^{q-u/2}q^{(1-A)/2}\ll Ne^{-u/2}$ shows that

Because $u\geq Q^{(A-1)/2}$, we certainly have $e^{-u/2}\ll Q^{-C}$ for any $C>0$, and thus the proof is complete.
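For completeness, here is the elementary step behind the last claim: a positive power of $Q$ eventually dominates any fixed multiple of $\log Q$. Fix $C>0$; since $A>1$ and $u\geq Q^{(A-1)/2}$,

\[ e^{-u/2}\le \exp\Big(-\tfrac{1}{2}Q^{(A-1)/2}\Big)=Q^{-C}\exp\Big(C\log Q-\tfrac{1}{2}Q^{(A-1)/2}\Big)\le Q^{-C}, \]

where the last inequality holds as soon as $Q^{(A-1)/2}\geq 2C\log Q$, that is, for all sufficiently large $Q$ (depending on $A$ and $C$).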

4.2 First application of the $B$-process

First, following the lead set out in [Reference Lutsko, Sourmelidis and TechnauLST24], we apply the $B$-process in the $n$-variable. Assume without loss of generality that $k >0$ (if $k <0$, we take complex conjugates and use the assumption, also made without loss of generality, that $f$ is even).

Then, after applying the $B$-process, the phase function $x\mapsto k\omega (x) - rx$ will be transformed to

\[ \phi(k,r): = k\omega(x_{k,r}) - r x_{k,r}\quad \mathrm{where}\ x_{k,r} := \begin{cases} \widetilde{\omega}\bigg(\dfrac{r}{k}\bigg) & \text{if } \dfrac{r}{k}>e^{A-1},\\ 3 & \text{otherwise,} \end{cases} \]


and $\widetilde {\omega }(x):= (\omega ^{\prime })^{-1}(x)$ is the inverse of the derivative of $\omega$. Importantly, the next proposition states that $\mathcal {E}_{q,u}$ is well approximated by

\begin{equation} \mathcal{E}_{q,u}^{(B)}(s):=\frac{e(-1/8)}{N}\sum_{k > 0} \mathfrak{K}_{ u}(k)\widehat{f} \bigg(\frac{ k}{N}\bigg) e(ks)\sum_{r\geq 0} \frac{\mathfrak{N}_{q}(x_{k,r})}{\sqrt{|k\omega^{\prime\prime}(x_{k,r})|}}e(\phi(k,r)). \end{equation}


We comment on the definition of $x_{k,r}$ and $\mathcal {E}_{q,u}^{(B)}$ in the next remark.

Remark Recall that we currently work in the non-degenerate regime, $q\geq \delta Q$. Note that $x_{k,r}$ is the stationary point of the map $x \mapsto k \omega (x)-rx$ when $r/k > e^{A-1}$ (i.e. when there is a unique stationary point in the relevant regime). On the other hand, $\mathfrak {N}_q(3)=0$ once $Q$ is sufficiently large, since $\inf (\operatorname {supp}(\mathfrak {N}_q)) \rightarrow \infty$ as $Q\rightarrow \infty$. Thus, $\mathfrak {N}_q(x_{k,r})$ is well defined and smooth as a function of $k,r\geq 0$.
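The following Python sketch makes the definitions of $x_{k,r}$ and $\phi(k,r)$ concrete. It assumes the model $\omega(x)=(\log x)^{A}$ (consistent with $\omega(e^{q})=q^{A}$ in the proof of Proposition 4.3) and the sample value $A=2$; both are illustrative choices, and the helper names are hypothetical. For this model $\omega'$ is decreasing on $x>e^{A-1}$, so $\widetilde\omega$ is computed by bisection as the inverse of $\omega'$ on that branch.

```python
import math

# Illustrative model (assumption): omega(x) = (log x)^A with A = 2.
A = 2.0


def omega(x: float) -> float:
    return math.log(x) ** A


def omega_p(x: float) -> float:
    """omega'(x) = A (log x)^(A-1) / x."""
    return A * math.log(x) ** (A - 1) / x


def omega_tilde(y: float) -> float:
    """Inverse of omega' on the branch x > e^(A-1), where omega' is decreasing.

    Bisection in t = log x; requires 0 < y < omega'(e^(A-1)).
    """
    t_lo = t_hi = A - 1.0
    if not 0.0 < y < omega_p(math.exp(t_lo)):
        raise ValueError("y lies outside the range of omega' on this branch")
    while omega_p(math.exp(t_hi)) > y:  # push t_hi past the solution
        t_hi += 1.0
    for _ in range(200):
        t_mid = 0.5 * (t_lo + t_hi)
        if omega_p(math.exp(t_mid)) > y:
            t_lo = t_mid  # omega' still too large: the solution lies to the right
        else:
            t_hi = t_mid
    return math.exp(0.5 * (t_lo + t_hi))


def x_kr(k: float, r: float) -> float:
    """Stationary point of x -> k*omega(x) - r*x, i.e. the solution of omega'(x) = r/k."""
    return omega_tilde(r / k)


def phi(k: float, r: float) -> float:
    """Phase after the first B-process: k*omega(x_{k,r}) - r*x_{k,r}."""
    x = x_kr(k, r)
    return k * omega(x) - r * x


if __name__ == "__main__":
    k, r = 1000.0, 53.0
    x = x_kr(k, r)
    print(x, k * omega_p(x) - r)  # x is close to 200 and the residual is ~0
    h = 1e-3
    dphi = (phi(k + h, r) - phi(k - h, r)) / (2 * h)
    print(dphi, omega(x))  # d(phi)/dk agrees with omega(x_{k,r})
```

The last check illustrates the identity $\partial_k\phi(k,r)=\omega(x_{k,r})$, which follows from $k\omega'(x_{k,r})=r$ by the chain rule and is relevant when locating the stationary point of $k\mapsto\phi(k,r)-(h-s)k$ in the second application of the $B$-process.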

Proposition 4.3 If $u\geq Q^{(A-1)/2}$, then

uniformly for all non-degenerate $(u,q)\in \mathscr {G}(N)$.

Proof. Let $[a,b]:= \operatorname {supp}(\mathfrak {N}_q)$, let $\Phi _r(x):= k\omega (x) - rx$, and let $m(r):= \min \{|\Phi _r^\prime (x)|:\ x \in [a,b]\}$. As usual when applying the $B$-process, we first apply Poisson summation and integration by parts:

\[ \sum_{n \in \mathbb{Z}} \mathfrak{N}_q(n) e(k\omega(n)) = \sum_{r \in \mathbb{Z}} \int_{-\infty}^\infty \mathfrak{N}_q(x) e(\Phi_r(x)) \,{d}x = M(k) + \operatorname{Err}(k), \]


where $M(k)$ gathers the contributions of those $r\in \mathbb {Z}$ with $m(r)=0$ (i.e. those for which $\Phi_r$ has a stationary point in $[a,b]$) and $\operatorname {Err}(k)$ gathers the contributions of those $r$ with $m(r)>0$.
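The first equality in the Poisson-summation display above is exact for the smooth, compactly supported function $x\mapsto\mathfrak{N}_q(x)e(k\omega(x))$. The Python sketch below checks it numerically. It is only an illustration and assumes $\omega(x)=(\log x)^{A}$ with $A=2$, a standard bump on $(150,300)$ as a hypothetical stand-in for $\mathfrak{N}_q$, and $k=100$. For these choices the values of $r$ with $m(r)=0$ are $r=4,5,6$, and truncating the dual sum to $-2\le r\le 12$ loses only a negligible amount because the omitted integrals have no stationary point and a large phase derivative.

```python
import cmath
import math

A = 2.0                 # assumption: omega(x) = (log x)^A
LO, HI = 150.0, 300.0   # support of a hypothetical bump standing in for N_q


def omega(x):
    return math.log(x) ** A


def e(t):
    """Additive character e(t) = exp(2*pi*i*t)."""
    return cmath.exp(2j * math.pi * t)


def bump(x):
    """Smooth (C-infinity) bump supported on (LO, HI)."""
    t = (2.0 * x - LO - HI) / (HI - LO)  # rescale the support to (-1, 1)
    if t * t >= 1.0:
        return 0.0
    return math.exp(-1.0 / (1.0 - t * t))


def lhs(k):
    """Sum over integers n of bump(n) * e(k * omega(n))."""
    return sum(bump(n) * e(k * omega(n)) for n in range(int(LO), int(HI) + 1))


def dual_integral(k, r, steps=20000):
    """Integral of bump(x) e(k omega(x) - r x) dx by the trapezoid rule.

    The integrand vanishes to all orders at LO and HI, so only interior nodes
    are needed and the rule converges very quickly.
    """
    h = (HI - LO) / steps
    total = 0j
    for i in range(1, steps):
        x = LO + i * h
        total += bump(x) * e(k * omega(x) - r * x)
    return h * total


def rhs(k, r_min, r_max):
    """Poisson-dual side, truncated to r_min <= r <= r_max."""
    return sum(dual_integral(k, r) for r in range(r_min, r_max + 1))


if __name__ == "__main__":
    k = 100
    print(abs(lhs(k) - rhs(k, -2, 12)))  # tiny: only r = 4, 5, 6 are stationary
```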

In the notation of Lemma 2.2, let $w(x):= \mathfrak {N}_q(x)$, $\Lambda _{\psi } := \omega (e^q)e^{u} = q^{A}e^{u}$, and $\Omega _\psi = \Omega _{w} := e^q$. Since $(u,q)$ is non-degenerate, we have $\Lambda _\psi /\Omega _\psi \gg q$, and hence

\begin{equation} M(k) = e(-1/8)\sum_{r\geq 0} \frac{\mathfrak{N}_{q}(x_{k,r})}{\sqrt{|k\omega^{\prime\prime}(x_{k,r})|}} e(\phi(k,r)) + O((q \Lambda_\psi^{1/2+O(\varepsilon)})^{-1}). \end{equation}

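Each term in the main part of $M(k)$ above is the classical stationary-phase approximation to one integral $\int w(x)e(\Phi_r(x))\,dx$; the factor $e(-1/8)$ reflects that $\omega''<0$ at the stationary point. The Python sketch below compares a single such integral with $e(-1/8)\,w(x_{k,r})\,|k\omega''(x_{k,r})|^{-1/2}e(\phi(k,r))$. As before, $\omega(x)=(\log x)^{A}$ with $A=2$, the bump $w$ on $(150,300)$, and the values $k=1000$, $r=53$ are illustrative assumptions, not taken from the text.

```python
import cmath
import math

A = 2.0                 # assumption: omega(x) = (log x)^A
LO, HI = 150.0, 300.0   # support of a hypothetical bump w standing in for N_q


def omega(x):
    return math.log(x) ** A


def omega_p(x):
    return A * math.log(x) ** (A - 1) / x


def omega_pp(x):
    L = math.log(x)
    return A * ((A - 1) * L ** (A - 2) - L ** (A - 1)) / x ** 2


def e(t):
    """Additive character e(t) = exp(2*pi*i*t)."""
    return cmath.exp(2j * math.pi * t)


def bump(x):
    t = (2.0 * x - LO - HI) / (HI - LO)  # rescale the support to (-1, 1)
    return math.exp(-1.0 / (1.0 - t * t)) if t * t < 1.0 else 0.0


def stationary_point(k, r):
    """Solve k * omega'(x) = r in (LO, HI) by bisection.

    Assumes k*omega'(LO) > r > k*omega'(HI); omega' decreases on (LO, HI).
    """
    lo, hi = LO, HI
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if k * omega_p(mid) > r:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def integral(k, r, steps=40000):
    """Integral of w(x) e(k omega(x) - r x) dx by the trapezoid rule."""
    h = (HI - LO) / steps
    total = 0j
    for i in range(1, steps):
        x = LO + i * h
        total += bump(x) * e(k * omega(x) - r * x)
    return h * total


def main_term(k, r):
    """e(-1/8) w(x0) |k omega''(x0)|^(-1/2) e(phi); the -1/8 uses omega''(x0) < 0."""
    x0 = stationary_point(k, r)
    amp = bump(x0) / math.sqrt(abs(k * omega_pp(x0)))
    return e(-1.0 / 8.0) * amp * e(k * omega(x0) - r * x0)


if __name__ == "__main__":
    k, r = 1000.0, 53.0
    exact, approx = integral(k, r), main_term(k, r)
    print(abs(exact - approx) / abs(approx))  # small relative discrepancy
```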

Summing (4.7) against $N^{-1} \mathfrak {K}_{u}(k) \widehat {f}( k/N) e(ks)$ for $k\geq 0$ gives rise to $\mathcal {E}_{q,u}^{\mathrm {(B)}}$. The term coming from

can be bounded sufficiently well using Lemma 4.2 and the triangle inequality.

Since $x_{k,r}$ is roughly of size $e^{q}$, if we stopped here and applied the triangle inequality to (4.5), we would get

\begin{align*} |\mathcal{E}_{q,u}^{(B)}(s)|\ll\frac{1}{N}\sum_{k > 0} \mathfrak{K}_{u}(k) e^q \frac{1}{\sqrt{k}} \frac{k}{e^q} \ll\frac{1}{N} e^{3u/2} \ll N^{1/2}. \end{align*}

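The first bound in the display above can be unpacked as follows. This is a heuristic sketch that additionally assumes $\omega(x)=(\log x)^{A}$, $|\mathfrak{N}_q|\ll 1$, $|\widehat f|\ll 1$, and that $\mathfrak{K}_u\ll 1$ is supported on $k\asymp e^{u}$; powers of $q$ are tracked only up to $Q^{O(1)}$. For $x\asymp e^{q}$ one has $\omega'(x)\asymp q^{A-1}e^{-q}$ and $|\omega''(x)|\asymp q^{A-1}e^{-2q}$. The values of $r$ with $\mathfrak{N}_q(x_{k,r})\neq 0$ satisfy $r=k\omega'(x_{k,r})$ with $x_{k,r}\in\operatorname{supp}(\mathfrak N_q)$, so they lie in an interval of length $O(kq^{A-1}e^{-q})$, and this length is $\gg 1$ because $\Lambda_\psi/\Omega_\psi\gg q$ in the non-degenerate regime. Hence

\[ \sum_{r\geq 0}\frac{|\mathfrak N_q(x_{k,r})|}{\sqrt{|k\omega''(x_{k,r})|}} \ll \frac{kq^{A-1}}{e^{q}}\cdot\frac{e^{q}}{\sqrt{k}\,q^{(A-1)/2}} = k^{1/2}q^{(A-1)/2}, \qquad |\mathcal E^{(B)}_{q,u}(s)|\ll \frac{Q^{O(1)}}{N}\sum_{k\asymp e^{u}}k^{1/2}\ll \frac{e^{3u/2}}{N}\,Q^{O(1)}. \]

The final step $e^{3u/2}/N\ll N^{1/2}$ in the display above is equivalent to $e^{u}\ll N$.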

Hence, we still need to find a saving of a factor of size $N^{1/2}$. To achieve most of this, we now apply the $B$-process in the $k$-variable. This will require the following a priori bounds.

4.3 Amplitude bounds

Before proceeding with the second application of the $B$-process, we require bounds on the amplitude function

and its derivatives, which are provided by the following lemma.

Lemma 4.4 For any pair $q,u$ as above and any $j \ge 1$, we have the bounds

where the implicit constant in the exponent depends on $j$ but not on $q,u$. Moreover,

Proof. First, note that since $\Psi _{q,u}$ is a product of functions of $k$, if we can establish (4.8) for each of these functions, then the overall bound will hold for $\Psi _{q,u}(k,r,s)$ by the product rule. Moreover, the bound is obvious for $\mathfrak {K}_u(k)$, $\widehat {f}(k/N)$, and $k^{-1/2}$.

Thus, consider first $\partial _k \mathfrak {N}_q(x_{k,r}) = \mathfrak {N}_q^\prime (x_{k,r}) \partial _k(x_{k,r})$. By assumption, since $x_{k,r} \asymp e^{q}$, we have $\mathfrak {N}_q^\prime (x_{k,r}) \ll e^{-q}$. Again, by repeated application of the chain and product rules, it suffices to show that $\partial _k^j x_{k,r} \ll e^{q-uj}Q^{O(1)}$. To that end, begin with the equation

Hence, $\widetilde {\omega }^\prime (\omega ^\prime (x)) = \frac {1}{\omega ^{\prime \prime }(x)}$, which we can write as

where $f_1$ is a rational function. Now we take $j-1$ derivatives of each side. Inductively, one sees that there exist rational functions $f_j$ such that

Setting $x= x_{k,r}=\widetilde \omega (r/k)$ then gives

With (4.9), we can use repeated application of the product rule to bound

\begin{align*} \partial_k^{j} x_{k,r} &= \partial_k^{j} \widetilde{\omega}(r/k)\\ &= -\partial_k^{j-1} \widetilde{\omega}^\prime (r/k)\bigg(\frac{r}{k^2}\bigg)\\ &\ll \widetilde{\omega}^{(j)} (r/k)\bigg(\frac{r}{k^2}\bigg)^{j} + \widetilde{\omega}^\prime (r/k)\bigg(\frac{r}{k^{1+j}}\bigg)\\ &\ll x_{k,r}^{j+1} f_j(\log(x_{k,r}))\bigg(\frac{r}{k^2}\bigg)^{j} + x_{k,r}^2 f_1(\log(x_{k,r}))\bigg(\frac{r}{k^{1+j}}\bigg). \end{align*}


Now recall that $k \asymp e^{u}$, $x_{k,r} \asymp e^{q}$, and $r \asymp e^{u-q}q^{A-1}$; thus

\begin{align*} \partial_k^{j} x_{k,r} &\ll \bigg(e^{q(j+1)} \bigg(\frac{e^{u-q}}{e^{2u}}\bigg)^{j} + e^{2q}\bigg(\frac{e^{u-q}}{e^{(1+j)u}}\bigg)\bigg)Q^{O(1)}\\ &\ll e^{q-ju}Q^{O(1)}. \end{align*}


Hence, $\partial _k^{j} \mathfrak {N}_q(x_{k,r}) \ll e^{-ju} Q^{O(1)}$.

The same argument shows that $\partial _k^{j} (1/\sqrt {|\omega ^{\prime \prime }(x_{k,r})|}) \ll e^{q-ju} Q^{O(1)}$.
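As a consistency check on the bound $\partial_k^{j}x_{k,r}\ll e^{q-ju}Q^{O(1)}$, the case $j=1$ can be made explicit under the additional assumption $\omega(x)=(\log x)^{A}$ (consistent with $\omega(e^{q})=q^{A}$ in the proof of Proposition 4.3). Differentiating $\omega'(x_{k,r})=r/k$ with respect to $k$ gives

\[ \omega''(x_{k,r})\,\partial_k x_{k,r}=-\frac{r}{k^{2}}=-\frac{\omega'(x_{k,r})}{k}, \qquad\text{so}\qquad \partial_k x_{k,r}=-\frac{\omega'(x_{k,r})}{k\,\omega''(x_{k,r})}=\frac{x_{k,r}}{k}\cdot\frac{\log x_{k,r}}{\log x_{k,r}-(A-1)}, \]

using $\omega'(x)=A(\log x)^{A-1}/x$ and $\omega''(x)=A(\log x)^{A-2}\big((A-1)-\log x\big)/x^{2}$. Since $\log x_{k,r}\asymp q\to\infty$, the last factor is $1+O(1/q)$, so $\partial_k x_{k,r}\asymp e^{q-u}$ when $k\asymp e^{u}$ and $x_{k,r}\asymp e^{q}$.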

4.4 Second application of the $B$-process

We now apply the $B$-process in the $k$-variable. At the present stage, the phase function is $\phi (k,r)+ks$. Thus, for $h \in \mathbb {Z}$, let $\mu = \mu _{h,r,s}$ be the unique stationary point of $k \mapsto \phi (k,r) - (h-s)k$. Namely,

After the second application of the $B$-process, the phase will be transformed to