Child and Adolescent Mental Health Services (CAMHS) have been at the forefront of widespread attention given mounting needs, workforce shortages, and the task of implementing cost-effective measures.

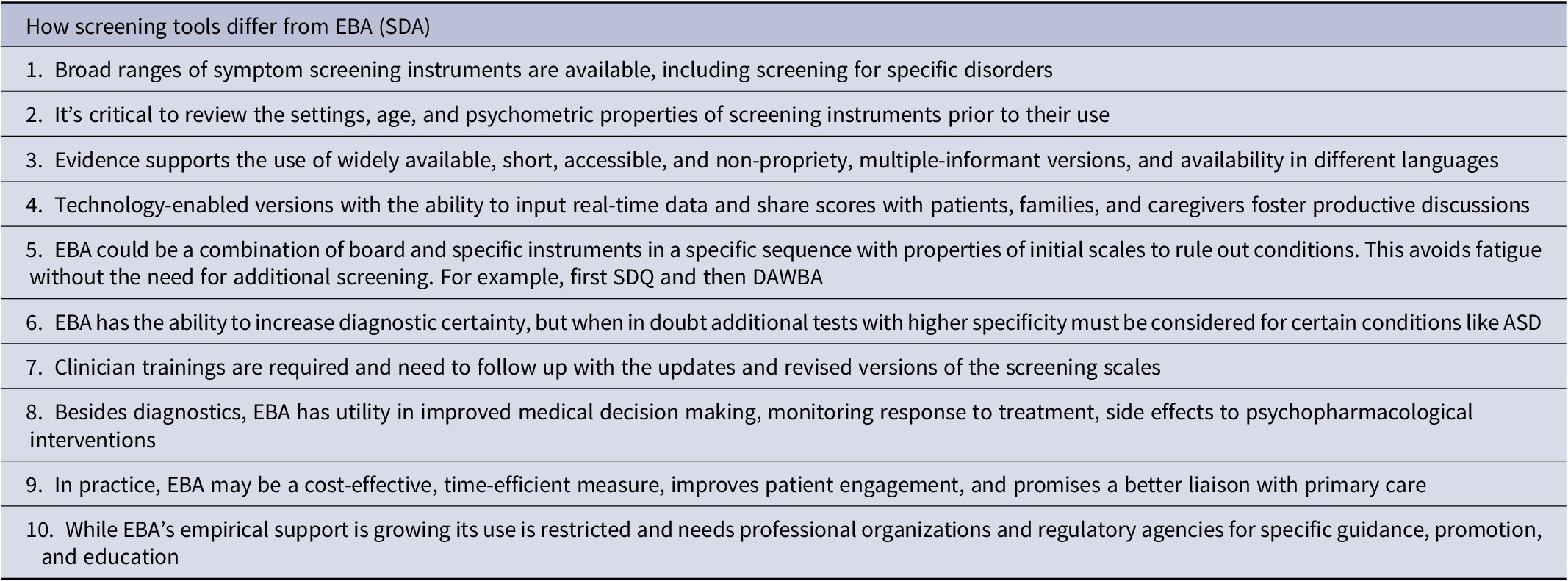

The current categorical systems present a unique set of diagnostic challenges. Some criteria require multiple informants, close attention to the detail of responses, and ascertainment of language and cognitive barriers, all of which are error-prone and time-consuming. The traditional assessment of psychiatric phenomenology depends on subjective accounts of symptoms and implicit heuristics. When clinicians rely on clinical judgment alone, they may be prone to biases that lead to misdiagnosis and missed diagnoses. Therefore, the call to standardize these evaluation processes has merit. The current use of objective rating scales as screening questionnaires is discretionary and varies across the clinical spectrum despite their high validity and internal consistency (Table 1). Amidst these challenges, many support implementing precision measurement-based strategies to reduce diagnostic uncertainty and thereby provide cost-effective, equitable access to healthcare. However, there is no consensus or guidance about “which and how” to implement measurement-based care (MBC).Reference Becker-Haimes, Tabachnick, Last, Stewart, Hasan-Granier and Beidas1

Table 1. Measurement-Based Care

Screening for neurodevelopmental disorders such as autism spectrum disorder (ASD) remains challenging and controversial. The sensitivity and positive predictive value (PPV) of a commonly used screening tool, the Modified Checklist for Autism in Toddlers (M-CHAT), were in the range of 33.1% to 38.8% and 14.6% to 17.8%, respectively. While the burden of missed or late diagnosis of ASD continues to mount, the scientific basis for controversial population-based universal screening grows. With advances in artificial intelligence, however, software such as the Cognoa ASD Diagnosis Aid has been developed to assist in evaluating individuals at risk of ASD. Besides costs, time, and training, there are also concerns about the validity of such tools when the DSM or ICD is revised in future editions. The widely used SNAP-IV Rating Scale in the United States is a revision of the Swanson, Nolan, and Pelham (SNAP) Questionnaire from 1983. Its current version includes items from the DSM-IV (1994) criteria for Attention-Deficit/Hyperactivity Disorder (ADHD) but has not been updated to the DSM-5 criteria.
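The sensitivity and PPV figures quoted for the M-CHAT both derive from a simple cross-tabulation of screening result against eventual diagnosis. The following is a minimal Python sketch of that arithmetic. The counts are invented for illustration (they are not data from any M-CHAT study) and were chosen only so that the results fall inside the ranges reported above.

```python
def sensitivity(tp: int, fn: int) -> float:
    """Share of children with the condition whom the screen flags."""
    return tp / (tp + fn)


def positive_predictive_value(tp: int, fp: int) -> float:
    """Share of positive screens that are confirmed as true cases."""
    return tp / (tp + fp)


# Hypothetical counts for illustration only; not taken from any study.
true_positives = 35    # screened positive, later diagnosed
false_negatives = 65   # screened negative, later diagnosed
false_positives = 180  # screened positive, not diagnosed

sens = sensitivity(true_positives, false_negatives)
ppv = positive_predictive_value(true_positives, false_positives)
print(f"sensitivity = {sens:.1%}, PPV = {ppv:.1%}")
```

With counts like these, roughly two thirds of true cases are missed by the screen and more than four in five positive screens are not confirmed, which is the practical meaning of the low figures cited.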

Evidence-based assessments (EBA), also referred to as standardized diagnostic assessment (SDA) tools, are not the same as simply incorporating screening; they are more advanced measures delivered with validated instruments to generate diagnoses.Reference Ford, Last and Henley2 They work by developing models that synthesize the probabilities of having a diagnosis. However, there are many caveats to these approaches. Firstly, the instruments vary in sensitivity and specificity, are time-consuming, and are not always free. Secondly, multi-informant broad scales may yield better scores for many disorders, but they require an office visit for the clinician's contextual interpretation. Thirdly, both short and longer questionnaires have strengths and weaknesses, including differences in how well they predict internalizing or externalizing disorders and low specificity for complex presentations such as autism spectrum disorder (ASD), which may require additional screening.Reference Aydin, Siebelink and Crone3

Given these barriers, novel approaches to these complex diagnostic challenges have emerged.Reference Aydin, Siebelink and Crone3 One study combined referral letters with two specific but different types of screening instruments, along with clinician ratings, to predict the clinical diagnosis before the formal visit. The Strengths and Difficulties Questionnaire (SDQ) is a brief psychological assessment tool for 2–17-year-olds, with parent and teacher versions for younger children and a self-report version for those aged 11 years and above. The Development and Well-Being Assessment (DAWBA) is a package of interviews, questionnaires, and rating techniques designed to generate ICD and DSM diagnoses for 2–17-year-olds. Most sections of the DAWBA can only be skipped if the start-of-section screening questions are negative and the relevant SDQ score is close to the average (<80th centile). This dual requirement is designed to prevent “respondent fatigue” from leading to too many sections being skipped.
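The dual skip requirement described above amounts to a logical AND of two conditions. The Python sketch below only illustrates that rule as stated in the text. The function name, argument names, and the representation of the SDQ score as a centile are assumptions made here, and this is not the DAWBA software's actual implementation.

```python
SDQ_SKIP_CENTILE = 80.0  # threshold quoted in the text (<80th centile)


def can_skip_section(screen_negative: bool, sdq_centile: float) -> bool:
    """Return True only when both conditions for skipping are met.

    screen_negative: start-of-section screening questions were all negative.
    sdq_centile: centile of the relevant SDQ score (hypothetical input format).
    """
    return screen_negative and sdq_centile < SDQ_SKIP_CENTILE


# Negative screen and SDQ score near average: the section may be skipped.
print(can_skip_section(True, 55.0))
# Negative screen but elevated SDQ score: the section is still asked.
print(can_skip_section(True, 92.0))
# Positive screen: the section is asked regardless of the SDQ score.
print(can_skip_section(False, 40.0))
```

Requiring both conditions means that a respondent who simply answers "no" to the screening questions cannot bypass a section when the SDQ already points to difficulties in that area.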

Patients and families were provided with links for online completion of the SDQ and DAWBA questionnaires. The clinician then combined self-reported symptoms with SDQ scores and DAWBA probability band scores and developed a probable clinical profile before the office visit. Aydin et al.’s study was statistically designed to estimate the incremental value of referral letters, the SDQ, the DAWBA, and the clinician-rated DAWBA. These approaches provide a panoramic overview of the clinical presentation, with more specific or sensitive scores in the SDQ and balanced scores for 17 disorders in the DAWBA. Besides being cost-effective, the approach captures DSM criteria and allows families time to complete the questionnaires and review the scores. The study also had several limitations: lack of blinding, missing data, and the absence of specific psychometric properties of the SDQ in identifying ASD. There are also costs associated with using proprietary scales like the DAWBA, in addition to the clinician time and training needed to score the instruments.Reference Aydin, Siebelink and Crone3

EBA develops a unique patient profile from self-reported symptoms and incrementally added screening tools; if implemented in practice, it has the potential to produce a paradigm shift in CAMHS operations. One merit is that many patients with undiagnosed internalizing disorders might never need a referral to CAMHS and could be effectively treated within primary care. Gains in diagnostic accuracy and reductions in the time and cost of services could be a boon to a CAMHS workforce that is already spread thin and carrying an unsustainable burden. The recent study suggests that EBA could address CAMHS’s long wait times and the health care inequality in which children from lower-income families have poor access to services.Reference Aydin, Siebelink and Crone3 EBA enables clinicians to systematically measure patients’ symptoms and treatment responses, thereby enhancing their clinical decision-making and improving outcomes. To evaluate the overall effectiveness of EBA, a multicenter randomized controlled trial, the standardized diagnostic assessment for children and adolescents with emotional difficulties (STADIA) trial, is being conducted.Reference Day, Wyatt and Bhardwaj4 Lastly, Kiddie-Computerized Adaptive Tests (K-CATs) are computerized adaptive tests (CATs) based on multidimensional item response theory. These tests can be completed within 8 minutes and show strong validity for diagnoses (AUCs from 0.83 for generalized anxiety disorder to 0.92 for major depressive disorder) and for suicidal ideation (AUC = 0.996).Reference Gibbons, Kupfer and Frank5
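The AUC values quoted for the K-CATs can be read as the probability that a randomly chosen child with the diagnosis receives a higher test score than a randomly chosen child without it. The Python sketch below computes an AUC in exactly that pairwise way. The scores are invented for illustration and are not K-CAT data, and the function name is an assumption introduced here.

```python
from typing import Sequence


def auc(case_scores: Sequence[float], non_case_scores: Sequence[float]) -> float:
    """Pairwise AUC: fraction of (case, non-case) pairs in which the case
    scores higher, with tied pairs counted as half."""
    wins = 0.0
    for case in case_scores:
        for non_case in non_case_scores:
            if case > non_case:
                wins += 1.0
            elif case == non_case:
                wins += 0.5
    return wins / (len(case_scores) * len(non_case_scores))


# Hypothetical test scores for illustration only; not taken from any study.
cases = [0.9, 0.8, 0.6, 0.4]      # children with the diagnosis
non_cases = [0.7, 0.3, 0.2, 0.1]  # children without the diagnosis

print(f"AUC = {auc(cases, non_cases):.3f}")
```

An AUC of 0.5 would mean the score ranks cases and non-cases no better than chance, while a value close to 1, as reported for suicidal ideation, means cases are almost always ranked above non-cases.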

Despite strong empirical support, MBC remains elusive without a coherent position from professional organizations and regulatory agencies. EBA has several benefits in diagnostics, and it has the potential to improve quality, safety, and clinical outcomes in CAMHS.

Author Contributions

Conceptualization: M.G., N.G.; Writing—original draft: M.G.; Writing—review and editing: M.G., N.G.

Disclosures

The authors declare none.