1 Introduction

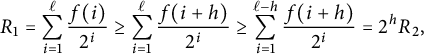

There are numerous works aiming at sharp geometric bounds on the mixing time of a finite Markov chain. Examples include Morris and Peres’ evolving sets bound [Reference Morris and Peres39], expressed in terms of the expansion profile, and the related bound by Fountoulakis and Reed [Reference Fountoulakis and Reed19]. The sharpest geometric bounds on the uniform (a.k.a. $L_{\infty }$) mixing time are given in terms of the log-Sobolev constant (see [Reference Diaconis and Saloff-Coste16] for a survey on the topic) and the spectral profile bound, due to Goel et al. [Reference Goel, Montenegro and Tetali20]. Both determine the uniform mixing time up to a multiplicative factor of order $\log \log [ 1/\min \pi (x)] $, where throughout $\pi $ denotes the stationary distribution (see [Reference Diaconis and Saloff-Coste16, Reference Kozma32]). Readers not familiar with mixing time definitions can find them in Section 2.2. Other notions and definitions used below can be found in Sections 1.5 and 1.7.

Geometric bounds of this type are robust under bounded perturbations of the edge weights and, in the bounded degree setup, also under quasi-isometries. That is, changing some of the edge weights by at most a multiplicative constant factor can change these geometric bounds only by a corresponding constant factor. A natural question, with obvious implications for the potential sharpness of such geometric bounds, is whether mixing times are themselves robust under small changes to the geometry of the Markov chain. For instance, can bounded perturbations of the edge weights change the mixing time by more than a constant factor? Similarly, how far apart can the mixing times of simple random walks (SRWs) on two quasi-isometric graphs of bounded degree be? Different variants of this question were asked by various authors, such as Pittet and Saloff-Coste [Reference Pittet and Saloff-Coste42, Section 6], Diaconis and Saloff-Coste [Reference Diaconis and Saloff-Coste16, p. 720], and Aldous and Fill [Reference Aldous and Fill3, Open Problem 8.23].

Ding and Peres [Reference Ding and Peres17] constructed a sequence of bounded degree graphs such that the order of the total variation mixing time strictly increases as a result of a certain sequence of bounded perturbations of the edge weights (Footnote 1). In [Reference Hermon24], a similar example is constructed in which the uniform mixing time is sensitive to bounded perturbations of the edge weights, as well as to a quasi-isometry. All these examples are based on the “perturbed tree” example of T. Lyons [Reference Lyons37] (simplified by Benjamini [Reference Benjamini7]). In particular, they are highly non-transitive, and a priori it appears that the mechanism behind such examples could not be imitated by a transitive example. It remained an open problem to determine whether the total variation mixing time of random walk on vertex-transitive graphs is robust under small perturbations. This was asked by Ding and Peres [Reference Ding and Peres17, Question 1.4] (see also [Reference Kozma32, p. 3] and [Reference Pittet and Saloff-Coste42, Section 6]). In this paper, we give a negative answer to this question, even when the small perturbation preserves transitivity.

We denote the group of permutations of n elements by $\mathfrak {S}_n$. Recall that a transposition is an element of $\mathfrak {S}_n$ which exchanges two values and keeps all the rest fixed.

Theorem 1.1 There exists a pair of sequences of sets of transpositions $S_n$ and $S_n'$ such that the Cayley graphs $\operatorname {\mathrm {Cay}}(\mathfrak {S}_{n},S_n)$ and $\operatorname {\mathrm {Cay}}(\mathfrak {S}_{n},S_n')$ are $(3,0)$-quasi-isometric and

Further, $S_n \subset S_n' \subset S_n^3:=\{xyz:x,y,z \in S_n \}$.

Of course, $\log \log \log |\mathfrak {S}_{n}|\asymp \log \log n$. We formulated the theorem in this way because the size of the group is the more natural object in this context. Let us remark that the ratio of the mixing times in our example is probably indeed $\asymp \log \log \log |\mathfrak {S}_{n}|$, but for brevity we prove only the lower bound.

The mixing times in Theorem 1.1 are the total variation ones. In what follows, whenever we write mixing time without mentioning the metric, we always mean the total variation mixing time. The behavior described in Theorem 1.1 cannot occur for the uniform mixing time, which in the transitive setup is quasi-isometry invariant (see Theorem 2.5).

1.1 Variations on a theme

A related question, asked by Itai Benjamini (private communication), is whether there exists some absolute constant $C>0$ such that for every finite group G and all symmetric sets of generators S and $S'$ with $S \subset S'$, the mixing time of SRW on the Cayley graph of G with respect to $S'$ is at most $C \frac {|S'|}{|S|} $ times the mixing time of SRW on the Cayley graph of G with respect to S (a set S is called symmetric if $S=S^{-1}:=\{s^{-1}:s \in S\}$). Our example also disproves this. In fact, $S \subset S' \subseteq S^3$ and $|S'|-|S| \le \sqrt { |S|}$, where $S^i:=\{s_1s_2\cdots s_i:s_1,\ldots ,s_i \in S\}$ for $i \in \mathbb {N}$. The definition of an $(a,b)$-quasi-isometry (see Section 1.5) gives that if $S \subseteq S' \subseteq S^i$, then $\operatorname {\mathrm {Cay}}(G,S)$ and $\operatorname {\mathrm {Cay}}(G,S')$ are $(i,0)$-quasi-isometric.
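The quasi-isometry statement can be sanity-checked by brute force on a small group. The following Python sketch is our own illustration, not part of the construction: the choices $G=\mathfrak {S}_4$, $S$ = the adjacent transpositions and $S'=S\cup \{(1\,3)\}$ (written `(0 2)` in the 0-indexed code) are hypothetical. Since left multiplication is a graph automorphism of a Cayley graph, $d(g,h)=|g^{-1}h|$, so it suffices to compare word lengths, which the sketch computes by breadth-first search and then checks $|g|_{S'}\le |g|_S\le i\,|g|_{S'}$ for $i=3$.

```python
from collections import deque


def compose(p, q):
    """Product p*q of permutations stored as tuples (apply q first, then p)."""
    return tuple(p[q[k]] for k in range(len(q)))


def transposition(n, a, b):
    """The transposition of S_n exchanging a and b (0-indexed)."""
    p = list(range(n))
    p[a], p[b] = p[b], p[a]
    return tuple(p)


def word_lengths(n, gens):
    """Graph distance from the identity in Cay(S_n, gens), with edges g -- g*s."""
    identity = tuple(range(n))
    dist = {identity: 0}
    queue = deque([identity])
    while queue:
        g = queue.popleft()
        for s in gens:
            h = compose(g, s)
            if h not in dist:
                dist[h] = dist[g] + 1
                queue.append(h)
    return dist


def product_set(gens, i):
    """S^i = {s_1 s_2 ... s_i : s_j in S}."""
    current = {tuple(range(len(gens[0])))}
    for _ in range(i):
        current = {compose(g, s) for g in current for s in gens}
    return current


n, i = 4, 3
S = [transposition(n, k, k + 1) for k in range(n - 1)]  # hypothetical S
S_prime = S + [transposition(n, 0, 2)]                   # hypothetical S', (0 2) = s0 s1 s0

d_S = word_lengths(n, S)
d_S_prime = word_lengths(n, S_prime)
ok = all(d_S_prime[g] <= d_S[g] <= i * d_S_prime[g] for g in d_S)
print(set(S) <= set(S_prime) <= product_set(S, i), ok)  # prints: True True
```

The lower inequality holds because $S\subseteq S'$, and the upper one because every generator in $S'$ is a word of length at most $i$ in $S$.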

The reason that $|S'|-|S|\le \sqrt {|S|}$ is explained in the proof sketch below (Section 1.3): the two underlying graphs share a complete graph on some set K with $|K|\asymp n$. Hence, this complete graph has $\asymp n^2$ edges, whereas there are only $o(n)$ additional edges. We could have increased $S_n$ by including in it all $|K|!$ permutations of the elements of K, while keeping $S_n' \setminus S_n$ the same set (of size $o(n)$), thus making $\frac { |S_{n}'|-|S_n|}{|S_n|} $ tremendously smaller.

We will also be interested in weighted versions of the problem, as these allow us to define “weak” perturbations in a natural way. Let $\Gamma $ be a group, and let $W=(w(s):s \in \Gamma )$ be symmetric weights (i.e., $w(s)=w(s^{-1})$) such that the support of W generates $\Gamma $. The discrete-time lazy random walk on $\Gamma $ with respect to W is the process with transition probabilities $P(g,g)=1/2$ and $P(g,gs)=\frac {w(s)}{2\sum _{r \in S}w(r)}$ for all $g,s \in \Gamma $, where S denotes the support of W. We denote its TV (total variation) mixing time by $t_{\mathrm {mix}}(\operatorname {\mathrm {Cay}}(\Gamma ,W))$. In continuous time, let $R=(r(s):s \in \Gamma )$ be symmetric rates. The continuous-time random walk on $\Gamma $ with respect to R is the process that has infinitesimal transition rates $r(s)$ between g and $gs$ for all $g,s \in \Gamma $. Denote its mixing time by $t_{\mathrm {mix}}(\operatorname {\mathrm {Cay}}(\Gamma ,R))$. As in the unweighted case, due to the group symmetry, the invariant distribution is uniform, and the TV distance between it and the distribution of the walk at any given time is independent of the initial state.
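To make the weighted definitions concrete, here is a minimal Python sketch (an illustration under our own assumptions, not code from the paper). It builds the lazy transition matrix on $\operatorname {\mathrm {Cay}}(\mathfrak {S}_3,W)$ using all three transpositions, one of them given the hypothetical weight $6/5$, and computes the TV mixing time from every starting permutation in exact rational arithmetic. The threshold $1/4$ is the usual convention and is assumed here; the paper's precise definitions are in Section 2.2. One can check that the resulting matrix is doubly stochastic, so the uniform distribution is invariant, and that the mixing time is the same from every initial state.

```python
from fractions import Fraction
from itertools import permutations


def compose(p, q):
    """Product p*q of permutations stored as tuples (apply q first, then p)."""
    return tuple(p[q[k]] for k in range(len(q)))


def lazy_cayley_matrix(n, weights):
    """Transition matrix of the lazy walk on Cay(S_n, W).

    weights maps each generator (a permutation tuple) to w(s) > 0 and is assumed
    symmetric, w(s) = w(s^{-1}); P(g, g) = 1/2 and P(g, gs) = w(s) / (2 * sum_r w(r)).
    """
    elements = list(permutations(range(n)))
    index = {g: k for k, g in enumerate(elements)}
    total = sum(weights.values())
    m = len(elements)
    P = [[Fraction(0)] * m for _ in range(m)]
    for g in elements:
        P[index[g]][index[g]] += Fraction(1, 2)
        for s, w in weights.items():
            P[index[g]][index[compose(g, s)]] += Fraction(w) / (2 * total)
    return elements, P


def tv_distance(mu, nu):
    return sum(abs(a - b) for a, b in zip(mu, nu)) / 2


def mixing_time(P, start, eps=Fraction(1, 4), t_max=1000):
    """Smallest t with ||P^t(start, .) - uniform||_TV <= eps (exact arithmetic)."""
    m = len(P)
    mu = [Fraction(int(k == start)) for k in range(m)]
    uniform = [Fraction(1, m)] * m
    for t in range(t_max + 1):
        if tv_distance(mu, uniform) <= eps:
            return t
        mu = [sum(mu[a] * P[a][b] for a in range(m)) for b in range(m)]
    raise RuntimeError("not mixed within t_max steps")


n = 3
transpositions = [g for g in permutations(range(n))
                  if sum(g[k] != k for k in range(n)) == 2]
weights = {s: Fraction(1) for s in transpositions}
weights[transpositions[0]] = Fraction(6, 5)  # hypothetical bounded perturbation
elements, P = lazy_cayley_matrix(n, weights)
times = [mixing_time(P, k) for k in range(len(elements))]
```

Exact `Fraction` arithmetic is used so that the comparison with the threshold cannot be affected by rounding; for larger groups, floating point would be the practical choice.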

Recall that $\mathfrak {S}_n$ is the symmetric group (the group of permutations of n elements). The following is the promised weighted version of our main result.

Theorem 1.2 For every $f: \mathbb {N} \to [1,\infty )$ satisfying $1 \ll f(n) \le \log \log \log n$, there exist a sequence $(S_n)_{n =3}^{\infty }$ of sets of transpositions $S_n\subset \mathfrak {S}_{n}$ and a sequence of weights $(W_n)_{n=3}^{\infty }$ such that $W_n=(w_n(s))$ is supported on $S_n$ and satisfies $1 \le w_{n}(s) \le 1+\left (f(n!)/\log \log n\right )^{1/4}$ for all $s \in S_n$, and such that

Similarly, in continuous time, if we set $R_{n}=W_n$ (for the above $W_n$), we get that

We remark that the power $1/4$ is not optimal (it was not a priority for us to optimize it). As before, $|S_n|\asymp n^2$.

1.2 A non-transitive example

Our third result shows that if one is willing to consider non-transitive instances, then one can indeed have a bounded degree example whose (usual worst-case) mixing time is of strictly smaller order than the mixing time starting from the best initial state (i.e., the one from which the walk mixes fastest) after a small perturbation. In all previous constructions of graphs with a sensitive mixing time, there was a large set such that, starting from it, the walk mixes rapidly both before and after the perturbation, and the mixing time is governed by the hitting time of this set (which is sensitive by construction). In particular, the mixing time started from the best initial state is not sensitive.

Let G be a connected graph. Let $W=(w(e):e \in E(G))$ be positive edge weights. Consider the lazy random walk $(X_k)_{k=0}^\infty $ on $G,$ i.e., the process with transition probabilities $P(x,y)=\frac {w(xy)}{2\sum _{z}w(xz)} $ and $P(x,x)=\frac {1}{2}$ for all neighboring $x,y \in G$. For $x \in G,$ we define the mixing time starting from x by

With this definition, the usual mixing time $t_{\mathrm {mix}}(G,W)$ (see Section 2.2) is equal to $\max _xt_{\mathrm {mix}}(G,W,x)$.

Theorem 1.3 There exist a sequence of finite graphs $L_n=(V_n,E_n)$ of diverging sizes and uniformly bounded degree (i.e., $\sup _n \max _{v \in V_n}\deg v<\infty $) and a sequence of symmetric edge weights $W_n=(w_n(e):e \in E_{n})$ such that $1 \le w_{n}(e) \le 1+\delta _n$ for all $e \in E_{n}$ and such that

for some $\delta _n\to 0$.

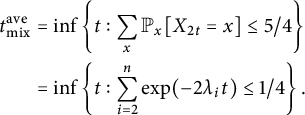

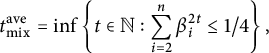

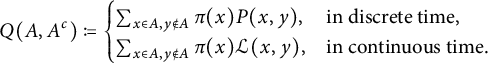

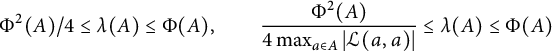

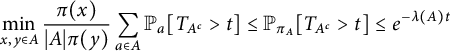

It follows from Theorem 1.3 that the average TV mixing time, by which we mean $\inf \{t:\sum _{x}\pi (x)\|{\mathbb P}_x(X_t = \cdot )-\pi \|_{\mathrm {TV}} \le 1/4 \}$, can be sensitive to perturbations. This is in contrast with the average $L_2$ mixing time (see Section 2.2). This gives a negative answer to a question of Addario-Berry (private communication).
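The per-start mixing time $t_{\mathrm {mix}}(G,W,x)$, the worst-case mixing time $t_{\mathrm {mix}}(G,W)=\max _x t_{\mathrm {mix}}(G,W,x)$ and the average TV mixing time can be compared directly on a toy example. The Python sketch below is our illustration: the weighted path on four vertices is hypothetical, and so is the threshold $1/4$ for $t_{\mathrm {mix}}(G,W,x)$, chosen to match the average-mixing formula above. It computes the distance profiles $t\mapsto \|{\mathbb P}_x(X_t = \cdot )-\pi \|_{\mathrm {TV}}$ exactly, using that the stationary distribution satisfies $\pi (x)\propto \sum _z w(xz)$. Because each profile is non-increasing in $t$, the worst-case time coincides with the maximum of the per-start times, and the average time lies between the smallest and the largest per-start time.

```python
from fractions import Fraction


def lazy_walk(vertices, weights):
    """Lazy walk P(x,y) = w(xy) / (2 sum_z w(xz)), P(x,x) = 1/2, and its stationary law.

    weights maps frozenset({x, y}) to a positive edge weight.
    """
    weights = {e: Fraction(w) for e, w in weights.items()}
    deg = {x: sum(w for e, w in weights.items() if x in e) for x in vertices}
    P = {x: {y: Fraction(0) for y in vertices} for x in vertices}
    for x in vertices:
        P[x][x] += Fraction(1, 2)
    for e, w in weights.items():
        x, y = tuple(e)
        P[x][y] += w / (2 * deg[x])
        P[y][x] += w / (2 * deg[y])
    total = sum(deg.values())
    pi = {x: deg[x] / total for x in vertices}  # reversible: pi(x) proportional to deg(x)
    return P, pi


def step(mu, P):
    """One step of the chain applied to the distribution mu."""
    return {y: sum(mu[x] * P[x][y] for x in mu) for y in mu}


def tv_profile(P, pi, x, t_max):
    """d_x(t) = ||P_x(X_t = .) - pi||_TV for t = 0, ..., t_max."""
    mu = {y: Fraction(int(y == x)) for y in pi}
    profile = []
    for _ in range(t_max + 1):
        profile.append(sum(abs(mu[y] - pi[y]) for y in pi) / 2)
        mu = step(mu, P)
    return profile


def first_time_below(values, eps=Fraction(1, 4)):
    return next(t for t, v in enumerate(values) if v <= eps)


vertices = [0, 1, 2, 3]  # hypothetical toy example: a weighted path
weights = {frozenset({0, 1}): 1, frozenset({1, 2}): Fraction(11, 10),
           frozenset({2, 3}): 1}
P, pi = lazy_walk(vertices, weights)
T = 40
d = {x: tv_profile(P, pi, x, T) for x in vertices}
t_from = {x: first_time_below(d[x]) for x in vertices}
t_worst = first_time_below([max(d[x][t] for x in vertices) for t in range(T + 1)])
t_avg = first_time_below([sum(pi[x] * d[x][t] for x in vertices) for t in range(T + 1)])
```

On such a tiny graph the three quantities are of course of the same order; Theorem 1.3 is about how their orders can separate after a perturbation as the graphs grow.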

As in Theorem 1.1, the change in the order of the mixing time in Theorem 1.3 (the inverse of the $\delta _n$ in (1.3)) is $o(\log \log \log |V_{n} |)$. If we replace the condition $w_n\le 1+\delta _n$ with $w_n\le 1+c,$ then the change in the order of the mixing time can be as large as $\log \log \log |V_{n} |$.

Let us quickly sketch the construction of Theorem 1.3 (full details are in Section 4). Let n be some number, and let $S_n$ be the set of transpositions from Theorem 1.2. Let H be a large, fast mixing graph, and let A be some subset of the vertices of H with $|A|=|S_n|$ and with the vertices of A far apart from one another. The graph L of Theorem 1.3 has as its vertex set $\mathfrak {S}_n\times H$ (we are using here the same notation for the graph and its set of vertices). We choose the edges of L such that random walk on L has the following behavior. Its H projection is just SRW on the graph H. Its $\mathfrak {S}_n$ projection is also SRW on $\operatorname {\mathrm {Cay}}(\mathfrak {S}_n,S_n)$, but slowed down significantly: any given transposition $s\in S_n$ can be applied only when a corresponding vertex of A is reached in the second coordinate. The perturbation consists of perturbing only the $\mathfrak {S}_n$ projection. We defer all other details to Section 4.

1.3 A proof sketch

We will now sketch the proof of our main result, Theorem 1.1 (the proof of Theorem 1.2 is very similar). Readers who intend to read the full proof can safely skip this section.

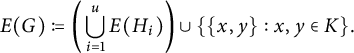

Random walk on $\operatorname {\mathrm {Cay}}(\mathfrak {S}_{n},S_n)$ with $S_n$ composed of transpositions is identical to the interchange process on the graph G on n vertices in which $\{x,y\}$ is an edge if and only if the transposition $(x,y)\in S_n$. Hence, we need to construct two graphs G and $G'$ on n vertices, estimate the mixing times of the two interchange processes, and show that the corresponding Cayley graphs are quasi-isometric.
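The identification can be seen concretely: if $\sigma (v)$ denotes the label of the particle at vertex $v$, then right-multiplying $\sigma $ by the transposition $(x\,y)$ swaps the values at positions $x$ and $y$, i.e., it swaps the two particles across the edge $\{x,y\}$. The Python sketch below is a hypothetical illustration (with $S_n$ taken to be the transpositions $(0\,k)$, so that $G$ is a star, and with 0-indexed vertices) that drives both descriptions with the same random choices; they stay equal at every step. Laziness and continuous time are omitted for brevity.

```python
import random


def compose(p, q):
    """Product p*q of permutations stored as tuples (apply q first, then p)."""
    return tuple(p[q[k]] for k in range(len(q)))


def transposition(n, a, b):
    p = list(range(n))
    p[a], p[b] = p[b], p[a]
    return tuple(p)


def edge_of(t):
    """The edge {x, y} of G corresponding to the transposition t = (x y)."""
    return frozenset(k for k in range(len(t)) if t[k] != k)


def interchange_step(sigma, edge):
    """Swap the particles sitting at the two endpoints of the edge.

    sigma[v] is the label of the particle currently at vertex v.
    """
    x, y = sorted(edge)
    s = list(sigma)
    s[x], s[y] = s[y], s[x]
    return tuple(s)


n = 5
S = [transposition(n, 0, k) for k in range(1, n)]  # hypothetical S_n: G is a star
G = {edge_of(t) for t in S}

rng = random.Random(0)
walk = particles = tuple(range(n))
for _ in range(200):
    s = rng.choice(S)                                    # one random generator for both
    walk = compose(walk, s)                              # Cayley walk: g -> g s
    particles = interchange_step(particles, edge_of(s))  # interchange process on G
```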

Our two graphs have the form “gadget plus complete graph.” Namely, there is a relatively small part D of the graph, which we nickname “the gadget,” and all vertices in $G\setminus D$ are connected to one another. While D and the corresponding $D'$ in $G'$ will be small (we will have $|D|=|D'|$), they dominate the mixing time of the interchange process.

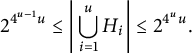

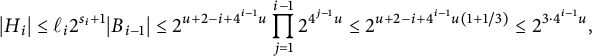

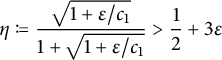

To describe the gadget, let $u\in {\mathbb N}$ and $\epsilon \in (0,\frac 12)$ be some parameters. The gadget will have u “stages” $H_1,\dotsc ,H_u$ (the gadget is almost $\cup _{i=1}^u H_i$, but not quite). We obtain each $H_i$ by “stretching” the edges of some graph $H_i'$ which is a union of binary trees of depth $4^{i-1}u$ (note that $H_i'$ has depth exponential in i and hence has volume doubly exponential in i). To get $H_i$, replace each edge of $H_i'$ with a path of length $\ell _i$. Namely, for each edge $\{x,y\}$ of $H_i'$, we add $\ell _i-1$ new vertices (denote them by $v_1,\dotsc ,v_{\ell _i-1}$, and denote also $v_0=x$ and $v_{\ell _i}=y$), connect $v_j$ to $v_{j+1}$ for all $j\in \{0,\dotsc ,\ell _i-1\}$, and remove the edge $\{x,y\}$.
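The stretching operation is easy to state in code. The following Python sketch is an illustration only: the vertex names and the values `depth = 3`, `ell = 4` are hypothetical (in the text the depth of $H_i'$ is $4^{i-1}u$ and the stretch is $\ell _i$). It builds a complete binary tree and replaces each of its edges by a path of length `ell`, adding `ell - 1` new vertices per edge. The result is again a tree, with `ell` times as many edges as the original.

```python
def binary_tree_edges(depth):
    """Edges of a complete binary tree of the given depth.

    Vertices are 0/1 strings: the root is '' and the children of w are w+'0' and w+'1'.
    """
    edges, frontier = [], ['']
    for _ in range(depth):
        children = []
        for w in frontier:
            for b in '01':
                edges.append((w, w + b))
                children.append(w + b)
        frontier = children
    return edges


def stretch(edges, ell):
    """Replace every edge {x, y} by a path x = v_0, v_1, ..., v_ell = y.

    The new internal vertex v_j of edge (x, y) is named (x, y, j), 1 <= j <= ell - 1;
    the original edge itself is not kept.
    """
    stretched = []
    for x, y in edges:
        path = [x] + [(x, y, j) for j in range(1, ell)] + [y]
        stretched.extend(zip(path, path[1:]))
    return stretched


depth, ell = 3, 4  # hypothetical values
H_prime = binary_tree_edges(depth)
H = stretch(H_prime, ell)
vertices = {v for e in H for v in e}
```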

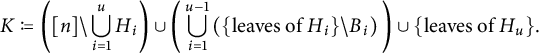

We still need to explain how many trees are in each $H_i$ and how they are connected to one another and to the rest of the graph. For this, we need the parameter $\epsilon $, which at this point can be thought of as a sufficiently small constant. For each vertex in each of the trees (before stretching), we label its children arbitrarily “left” and “right.” For each leaf $x\in H_i$, we define $g(x)$ to be the number of left turns in the (unique) path from the root to x. We now let

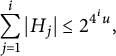

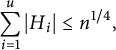
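Every root-to-leaf path in a tree of depth $d$ consists of $d$ turns, each left or right, so over the $2^d$ leaves the count $g$ is binomially distributed: exactly $\binom {d}{k}$ leaves have $g(x)=k$. The Python sketch below tabulates $g$ and compares the fraction of leaves with unusually many left turns to the Hoeffding bound $e^{-2\epsilon ^2 d}$. It is an illustration under hypothetical choices: a left turn is encoded as `'0'`, $d=16$, $\epsilon =0.1$, and the threshold $(\frac 12+\epsilon )d$ is only a stand-in that need not coincide with the definition of $B_i$ above.

```python
from itertools import product
from math import comb, exp


def leaves(depth):
    """Leaves of a complete binary tree as strings of turns ('0' = left, '1' = right)."""
    return [''.join(turns) for turns in product('01', repeat=depth)]


def g(leaf):
    """Number of left turns on the unique root-to-leaf path."""
    return leaf.count('0')


depth, eps = 16, 0.1
counts = [0] * (depth + 1)
for x in leaves(depth):
    counts[g(x)] += 1

threshold = (0.5 + eps) * depth
fraction_many_left = sum(c for k, c in enumerate(counts) if k >= threshold) / 2 ** depth
hoeffding_bound = exp(-2 * eps ** 2 * depth)
print(f"{fraction_many_left:.4f} <= {hoeffding_bound:.4f}")
```

The bound decays exponentially in the depth, which is why leaves with a large excess of left turns form an atypical set when the depth is large.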

The sets

![]() $B_i$

are used twice. First, we use them to decide how many trees will be in each

$B_i$

are used twice. First, we use them to decide how many trees will be in each

![]() $H_i$

. For

$H_i$

. For

![]() $i=1,$

we let

$i=1,$

we let

![]() $H_1$

be one tree. For every

$H_1$

be one tree. For every

![]() $i>1$

, we let

$i>1$

, we let

![]() $H_i$

have

$H_i$

have

![]() $|B_{i-1}|$

trees, and identify each point of

$|B_{i-1}|$

trees, and identify each point of

![]() $B_{i-1}$

with one of the roots of one of the trees in

$B_{i-1}$

with one of the roots of one of the trees in

![]() $H_i$

. Second, we use the

$H_i$

. Second, we use the

![]() $B_i$

to connect the

$B_i$

to connect the

![]() $H_i$

to the complete graph. Every leaf of

$H_i$

to the complete graph. Every leaf of

![]() $H_i$

which is not in

$H_i$

which is not in

![]() $B_i$

is identified with a vertex of the complete graph (the complete graph K will be of size

$B_i$

is identified with a vertex of the complete graph (the complete graph K will be of size

![]() $n-o(n)$

, much larger than

$n-o(n)$

, much larger than

![]() $\cup _{i=1}^u H_i$

which will be of size

$\cup _{i=1}^u H_i$

which will be of size

![]() $O(n^{1/4})$

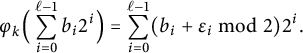

, and so most of the vertices of K are not identified with a vertex of the gadget). This terminates the construction of G (see Figure 1). Experts will clearly notice that this is a variation on the perturbed tree idea. In other words, while the perturbed tree itself (as noted above) is highly non-transitive, one can use it as a basis for transitive example by examining the interchange process on it.

$O(n^{1/4})$

, and so most of the vertices of K are not identified with a vertex of the gadget). This terminates the construction of G (see Figure 1). Experts will clearly notice that this is a variation on the perturbed tree idea. In other words, while the perturbed tree itself (as noted above) is highly non-transitive, one can use it as a basis for transitive example by examining the interchange process on it.

Figure 1: The gadget. Vertices marked with small squares actually belong to the complete graph rather than to the gadget.

The graph $G'$ is almost identical; the only difference is that in each path corresponding to a left turn we add short bridges. Namely, consider one such path and denote its vertices $v_0,\dotsc ,v_{\ell _i}$ as above. Then in $G'$ we add edges between $v_{2j}$ and $v_{2j+2}$ for all $j\in \{0,\dotsc ,\ell _i/2-1\}$.

Why this choice of parameters? It is motivated by a heuristic that for such graphs, namely, a gadget connected to a large complete graph, the mixing time of the interchange process is the time by which all particles have left the gadget (they do not all have to be outside the gadget at the same time; it is enough that each particle has left the gadget at least once by this time). See Section 1.4 for some context for this heuristic. Thus, we construct the $H_i$ such that the time it takes all particles to leave $H_i$ is approximately independent of i. Indeed, the time a particle takes to traverse a single stretched edge is approximately $\ell _i^2 \asymp 4^{u-i}$, while each tree of $H_i$ has depth $4^{i-1}u$ (in the sense that this is the depth of the tree before its edges have been stretched), and the particle has to traverse all levels of $H_i$, so it exits $H_i$ after time approximately $4^{u-1}u$, which is independent of i. This holds for all particles simultaneously, because the probability that a particle takes more than $\lambda \cdot 4^{u-1}u$ time to traverse the tree (for some $\lambda>1$) is exponentially small in the number of layers $4^{i-1}u$; hence, if $\lambda $ is sufficiently large, this does not happen to any of the particles in the tree, which has approximately $2^{4^{i-1}u}$ particles. In the roughest possible terms, the growing height of the trees is dictated by the growing number of vertices (which must grow because $H_i$ has many more roots than $H_{i-1}$, since each $x\in B_{i-1}$ is a root of $H_i$), while the decreasing stretching balances the growing height to get an approximately uniform expected exit time. The only exception is $H_1$, whose height is dictated not by the number of roots (clearly, as there is only one), but by the stretching.
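In formulas, the heuristic balances the two competing effects as follows. This is only a restatement of the estimates above, up to constant factors; the second identity is the time needed to traverse all u stages one after another, which reappears in the lower bound for $G'$.

```latex
\[
\underbrace{\ell_i^{2}}_{\text{one stretched edge}}\cdot
\underbrace{4^{i-1}u}_{\text{levels of } H_i}
\;\asymp\; 4^{u-i}\cdot 4^{i-1}u \;=\; 4^{u-1}u \quad (1\le i\le u),
\qquad
\sum_{i=1}^{u} 4^{u-1}u \;=\; 4^{u-1}u^{2}.
\]
```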

With the definitions of G and $G'$ done, estimating the mixing times is relatively routine, so we make only two remarks in this quick sketch. How do we translate the fact that all particles visited the complete graph into an upper bound on the mixing time? We use a coupling argument. We couple two instances $\sigma $ and $\sigma '$ of the interchange process (in continuous time) using the same clocks and letting them walk identically unless $\sigma (x)=\sigma '(y)$ for an edge $\{x,y\}$ that is about to ring, in which case we apply the transposition to exactly one of $\sigma $ or $\sigma '$, reducing the number of disagreements (this coupling involves a standard trick of doubling the rates and censoring each step with probability 1/2). The fact that the complete graph is much larger and has many more edges simplifies our analysis (the reader can find the details of the coupling in Section 3.2).
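A discrete-time rendering of one ring of this coupling may help. In the Python sketch below (our simplification, not the paper's exact construction), each step picks a uniformly random edge, playing the role of the next clock to ring, together with a fair coin implementing the censoring. Normally both copies apply the transposition if and only if the coin says so; if the edge carries a matching pair, i.e., $\sigma (x)=\sigma '(y)$ or, with the endpoints relabeled, $\sigma (y)=\sigma '(x)$, exactly one copy moves. Each copy is still marginally a lazy interchange process, since it moves with probability $1/2$ whatever the other copy does, and the number of positions where $\sigma $ and $\sigma '$ disagree never increases. The complete graph $K_6$, the starting permutations and the seed are hypothetical choices.

```python
import random
from itertools import combinations


def swap(perm, x, y):
    """Return a copy of perm with the particles at vertices x and y exchanged."""
    q = list(perm)
    q[x], q[y] = q[y], q[x]
    return q


def coupled_step(sigma, sigma_p, edge, coin):
    """One ring of the (rate-doubled) clock of edge {x, y}, with a shared fair coin."""
    x, y = edge
    if sigma[x] == sigma_p[y] or sigma[y] == sigma_p[x]:
        # Matching pair: move exactly one copy, creating at least one new agreement.
        return (swap(sigma, x, y), sigma_p) if coin else (sigma, swap(sigma_p, x, y))
    # Otherwise the two copies move identically (both or neither).
    return (swap(sigma, x, y), swap(sigma_p, x, y)) if coin else (sigma, sigma_p)


def disagreements(sigma, sigma_p):
    return sum(a != b for a, b in zip(sigma, sigma_p))


n = 6
edges = list(combinations(range(n), 2))  # complete graph K_n
rng = random.Random(1)
sigma, sigma_p = list(range(n)), list(reversed(range(n)))
history = [disagreements(sigma, sigma_p)]
for _ in range(5000):
    sigma, sigma_p = coupled_step(sigma, sigma_p, rng.choice(edges), rng.random() < 0.5)
    history.append(disagreements(sigma, sigma_p))
```

On a complete graph a matching edge exists whenever $\sigma \ne \sigma '$, which is one way to see why the large complete graph makes the coupling easy to analyze.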

The lower bound for the mixing time on $G'$ uses the standard observation that adding those edges between $v_{2j}$ and $v_{2j+2}$ makes left turns more likely to be taken than right turns, transforming $B_i$ from an atypical set (with respect to the hitting distribution of the leaf set of $H_i$) into a typical one; hence, the particle that started at the root of $H_1$ has high probability of traversing all the $H_i$ before entering the complete graph for the first time. This, of course, takes it $4^{u-1}u^2$ time units (compare with the mixing time bound of $4^{u-1}u$ for the interchange process on G). The mixing time of the interchange process on $G'$ is also bounded by the time by which all particles have left the gadget, but we found no way to use this. We simply bound the time at which a single particle leaves the gadget and obtain our estimate.

1.4 The mixing time of the interchange process

Since our proof revolves around estimating the mixing time of the interchange process on some graph, let us spend some time on a general discussion of this topic. We first mention some conjectures relating the mixing time of the interchange process on a finite graph G to that of $|G|$ independent random walks on G.

Given a finite graph $G=(V,E)$ and edge rates $R,$ the corresponding n-fold product chain is the continuous-time Markov chain on $V^n$ in which each coordinate evolves independently as a random walk on G with edge rates R. This is a continuous-time walk on the n-fold Cartesian product of G with itself, whose symmetric edge rates $R_n$ are given by

$$ R_n\big((v_1,\ldots,v_{k-1},v_k,v_{k+1},\ldots,v_n),(v_1,\ldots,v_{k-1},v_k',v_{k+1},\ldots,v_n)\big)=R(v_k,v_k') $$

for all $v_1,\ldots ,v_n,v_k' \in V$ and $k \in [n]$. We shall refer to this Markov chain as n independent random walks on G with edge rates R and denote its (TV) mixing time by $ t_{\mathrm {mix}}(n \text { independent RWs on } G,R)$. As usual, the mixing time is defined with respect to the worst starting tuple of n points, which turns out to be the tuple in which all n points start from the worst starting point for a single walk on G with edge rates R.
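As a concrete check of this definition, here is a small Python sketch (the function names and the 3-vertex path are hypothetical choices of ours) that builds the jump rates of n independent walks: every transition changes exactly one coordinate k, from $v_k$ to $v_k'$, at rate $R(v_k,v_k')$. It then confirms that the total jump rate out of a tuple is the sum of the jump rates of its coordinates, as independence requires.

```python
from itertools import product

def product_chain_rates(vertices, rates, n):
    """Jump rates R_n of n independent walks on V^n.

    rates[(u, w)] is the rate R(u, w) of a single walk; a transition of the
    product chain changes exactly one coordinate k, from v_k to w, at rate R(v_k, w).
    """
    R_n = {}
    for state in product(vertices, repeat=n):
        for k in range(n):
            for w in vertices:
                r = rates.get((state[k], w), 0.0)
                if w != state[k] and r > 0:
                    new_state = state[:k] + (w,) + state[k + 1:]
                    R_n[(state, new_state)] = r  # only coordinate k moves
    return R_n

def out_rate(rate_dict, state):
    """Total rate of leaving `state`."""
    return sum(r for (s, _), r in rate_dict.items() if s == state)

# Path 0 - 1 - 2 with symmetric unit rates (an illustrative choice).
path_rates = {(0, 1): 1.0, (1, 0): 1.0, (1, 2): 1.0, (2, 1): 1.0}
R2 = product_chain_rates([0, 1, 2], path_rates, n=2)
print(out_rate(R2, (0, 1)))  # R-out-rate of 0 plus that of 1: 1 + 2 = 3
```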

Oliveira [Reference Oliveira40] conjectured that there exists an absolute constant $C>0$ such that the TV mixing time of the interchange process on an n-vertex graph G with rates R, i.e., $t_{\mathrm {mix}}(\operatorname {\mathrm {Cay}}(\mathfrak {S}_n,R))$, is at most $C t_{\mathrm {mix}}(n \text { independent RWs on }G,R)$. See [Reference Hermon and Salez30, Conjecture 2] and [Reference Hermon and Pymar29, Question 1.12] for two different strengthened versions of this conjecture, and [Reference Hermon and Salez30] for a positive answer for high-dimensional products.

For the related exclusion process, some progress on Oliveira’s conjecture was made in [Reference Hermon and Pymar29]. Returning to the interchange process, the following more refined question is asked in the same paper [Reference Hermon and Pymar29, Question 1.12]: Is $t_{\mathrm {mix}}(\operatorname {\mathrm {Cay}}(\mathfrak {S}_n,R))$ equal, up to universal constants, to the mixing time of n independent random walks on $(G,R)$ starting from n distinct locations? (See [Reference Hermon and Pymar29] for precise definitions.) Our result is thus related to finding graphs G such that the mixing time of $|G|$ independent SRWs with edge rates $1$ on G, starting from distinct initial locations, is sensitive under small perturbations. In fact, the graphs we construct in this paper satisfy this property too, but in the interest of brevity, we will not prove this claim (the proof is very similar to the one we provide for the interchange process). This conjectured relation between the exclusion process and independent random walks is behind the heuristic we employed (and mentioned in Section 1.3) to construct our example.

As we now explain, if we did not require the initial locations to be distinct (as is the case in Oliveira’s conjecture), such sensitivity could not occur. It is easy to show (e.g., [Reference Hermon and Pymar29]) that when $|G|=n,$

$$ t_{\mathrm{mix}}(n \text{ independent RWs on } G,R) \asymp t_{\mathrm{rel}}(G,R)\log n, $$

where $t_{\mathrm {rel}}(G,R)$ is the relaxation time of $(G,R)$, defined as the inverse of the second smallest eigenvalue of $-\mathcal {L}$, where $\mathcal L$ is the infinitesimal Markov generator of the walk $(G,R)$. The relaxation time is robust under small perturbations (see Section 2.1), and hence so is $ t_{\mathrm {mix}}(n \text { independent RWs on }G,R)$. Our result that the mixing time is sensitive does not contradict Oliveira’s conjecture, as he conjectured only an upper bound (which, in our case, is sharp for neither $S_n$ nor $S_n'$).
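The relaxation time and its robustness can be explored numerically on small examples. The Python sketch below assumes symmetric rates (so that the stationary distribution is uniform and $-\mathcal L$ is a symmetric matrix), computes $t_{\mathrm{rel}}$ from the spectrum of $-\mathcal L$ with the classical cyclic Jacobi eigenvalue method, and then multiplies each edge rate by a random factor in $[1,2]$. By the Courant–Fischer characterization of the spectral gap, $t_{\mathrm{rel}}$ can then change by at most a factor 2. Function names are ours, and the 6-cycle is only an illustrative choice (there $t_{\mathrm{rel}} = 1/(2(1-\cos(2\pi/6))) = 1$).

```python
import math
import random

def minus_generator(n, rates):
    """Matrix of -L for symmetric rates given as {(u, v): R(u, v)}, one key per edge."""
    M = [[0.0] * n for _ in range(n)]
    for (u, v), r in rates.items():
        M[u][v] -= r
        M[v][u] -= r
        M[u][u] += r
        M[v][v] += r
    return M

def jacobi_eigenvalues(A, tol=1e-12, max_sweeps=100):
    """Eigenvalues (ascending) of a symmetric matrix by cyclic Jacobi rotations."""
    a = [row[:] for row in A]
    n = len(a)
    for _ in range(max_sweeps):
        off = math.sqrt(sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j))
        if off < tol:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if abs(a[p][q]) < 1e-15:
                    continue
                # Rotation chosen so that the new entry a[p][q] vanishes.
                theta = (a[q][q] - a[p][p]) / (2 * a[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta * theta + 1))
                c = 1 / math.sqrt(t * t + 1)
                s = t * c
                for k in range(n):  # update columns p and q
                    akp, akq = a[k][p], a[k][q]
                    a[k][p], a[k][q] = c * akp - s * akq, s * akp + c * akq
                for k in range(n):  # update rows p and q
                    apk, aqk = a[p][k], a[q][k]
                    a[p][k], a[q][k] = c * apk - s * aqk, s * apk + c * aqk
    return sorted(a[i][i] for i in range(n))

def relaxation_time(n, rates):
    """Inverse of the second smallest eigenvalue of -L (the smallest one is 0)."""
    return 1 / jacobi_eigenvalues(minus_generator(n, rates))[1]

cycle6 = {(min(i, (i + 1) % 6), max(i, (i + 1) % 6)): 1.0 for i in range(6)}
rng = random.Random(0)
perturbed = {e: r * rng.uniform(1.0, 2.0) for e, r in cycle6.items()}
print(relaxation_time(6, cycle6))     # 1 up to rounding
print(relaxation_time(6, perturbed))  # lies in [0.5, 1]
```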

Loosely speaking, in order to make the mixing time of n independent random walks starting at distinct locations of smaller order than (the robust quantity) $t_{\mathrm {rel}}(G,R) \log n$, it is necessary that the eigenvector corresponding to the smallest non-zero eigenvalue of $-\mathcal L$ be localized on a set of cardinality $n^{o(1)}$. This is a crucial observation in tuning the parameters in our construction, and it explains why for smaller areas of the graph (namely, $H_i$ with small index i) we “stretch” edges by a larger factor. This is the opposite of what is done in [Reference Hermon24].

Lastly, we comment that, in contrast with a single random walk, in order to change the mixing time of n independent random walks starting from n distinct initial locations, it does not suffice for the perturbation to change only the typical behavior of the walk; rather, it must significantly change the probabilities of some events in a sufficiently strong quantitative manner. See [Reference Hermon24] for a related discussion of why it is much harder to construct an example where the uniform mixing time is sensitive than one where the TV mixing time is sensitive.

1.5 Quasi-isometries and robustness

Since we hope this note will be of interest to both group theorists and Markov chain experts, let us take this opportunity to compare two similar notions related to comparing the geometry of two graphs or of two reversible Markov chains. The first is the notion of quasi-isometry, which is more geometric in nature. The second is the notion of robustness, which is more analytic. In particular, we are interested in properties which are preserved by these notions.

This discussion is an important part of the background, but let us advise readers that it is not necessary for appreciating our results, as they apply in both cases. For example, Theorem 1.1 shows that the mixing time is neither quasi-isometry invariant nor robust.

A quasi-isometry (defined first in [Reference Gromov23]) between two metric spaces X and Y is a map $\phi :X\to Y$ such that for some numbers $(a,b)$ we have

$$ \tfrac{1}{a}\,d(x,y)-b\le d(\phi(x),\phi(y))\le a\,d(x,y)+b \qquad \text{for all } x,y\in X, $$

where d denotes the distance (in X or in Y, as appropriate). Further, we require that, for every $y\in Y,$ there is some $x\in X$ such that $d(\phi (x),y)\le a+b$. We say that X and Y are $(a,b)$ quasi-isometric if such a $\phi $ exists. (Our choice of definition is unfortunately only partially symmetric. If $\phi :X\to Y$ is an $(a,b)$ quasi-isometry, then one may construct a quasi-isometry $\psi :Y\to X$ with the same a but perhaps with a larger b.)
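For finite graphs, the definition can be checked mechanically from graph distances. The Python sketch below assumes the two-sided bound $\frac1a d(x,y)-b\le d(\phi(x),\phi(y))\le a\,d(x,y)+b$ together with the requirement that every point of Y lie within distance $a+b$ of the image of $\phi$; the function names and the example (the 8-cycle mapped onto the 4-cycle by $x\mapsto\lfloor x/2\rfloor$) are hypothetical illustrations of ours.

```python
from collections import deque

def bfs_distances(adj, source):
    """Graph distances from `source` in a connected graph given as adjacency lists."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for v in adj[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return dist

def is_quasi_isometry(adj_x, adj_y, phi, a, b):
    """Check the two-sided distance bounds and the coarse surjectivity condition."""
    dx = {u: bfs_distances(adj_x, u) for u in adj_x}
    dy = {u: bfs_distances(adj_y, u) for u in adj_y}
    for x in adj_x:
        for y in adj_x:
            d_image = dy[phi(x)][phi(y)]
            if not (dx[x][y] / a - b <= d_image <= a * dx[x][y] + b):
                return False
    image = {phi(x) for x in adj_x}
    # Every point of Y must lie within distance a + b of the image of phi.
    return all(min(dy[z][w] for w in image) <= a + b for z in adj_y)

def cycle(m):
    """Adjacency lists of the m-cycle, m >= 3."""
    return {i: [(i - 1) % m, (i + 1) % m] for i in range(m)}

def halve(x):
    """Hypothetical map from the 8-cycle onto the 4-cycle."""
    return x // 2

print(is_quasi_isometry(cycle(8), cycle(4), halve, a=2, b=1))  # True
print(is_quasi_isometry(cycle(8), cycle(4), halve, a=1, b=0))  # False
```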

For a property of random walk that is defined naturally on infinite graphs, we say that it is quasi-isometrically invariant if, whenever G and H are two quasi-isometric infinite graphs, the property holds for G if and only if it holds for H (the graphs are made into metric spaces with the graph distance). Examples include a heat kernel on-diagonal upper bound of polynomial type [Reference Carlen, Kusuoka and Stroock12], an off-diagonal upper bound [Reference Grigor’yan21], and a corresponding lower bound [Reference Boutayeb10, Reference Grigor’yan, Hu, Lau, Bandt, Zähle and Mörters22]. A particularly famous example is the Harnack inequality [Reference Barlow and Murugan5]. For a quantitative property of random walk naturally defined on finite graphs, such as the mixing time, one says that it is invariant to quasi-isometries if, whenever G and H are $(a,b)$-quasi-isometric, the property changes by at most a constant factor that depends only on a and b and not on other parameters. Similar notions may be defined for Brownian motion on Riemannian manifolds, and one may even ask questions like “if a manifold M is quasi-isometric to a graph G and Brownian motion on M satisfies some property, does random walk on G satisfy an equivalent property?”; a number of examples of this behavior are known.

The notion of robustness does not have a standard definition; in particular, the definitions in [Reference Ding and Peres17] and [Reference Hermon24] differ (and also differ from the definition we will use in this paper). Nevertheless, they all share a common thread: a definition for Markov chains that implies that the property in question is preserved under quasi-isometries of graphs of bounded degree, but that makes sense also without any a priori bound on the transition probabilities. Here, we will use the following definition. Let $\mathcal M$ be the set of finite state Markov chains. We say that a function $q:\mathcal M\to [0,\infty ]$ is robust if, for any $A \in (0,1], $ there exists some $K \in (0,1] $ such that the following holds. Assume M and $M'$ are two irreducible reversible Markov chains on the same finite state space V with stationary distributions $\pi $ and $\pi '$ and transition matrices P and $P'$ satisfying

$$ \begin{align} \begin{aligned} \forall \, x &\in V,& A\pi'(x) &\le \pi(x)\le \tfrac1A\pi'(x), \\ \forall \, f &\in \mathbb{R}^V,& A\mathcal{E'}(f,f)&\le \mathcal{E}(f,f)\le \tfrac1A\mathcal{E'}(f,f), \end{aligned} \end{align} $$

where $\mathcal {E}(f,f)$ and $\mathcal {E}'(f,f)$ are the corresponding Dirichlet forms, namely,

$$ \mathcal{E}(f,f)=\frac{1}{2}\sum_{u,v\in V}\pi(u)P(u,v)\big(f(u)-f(v)\big)^2, $$

and similarly for $\mathcal {E}'$. Then $q(M)\ge Kq(M')$.
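For walks on weighted graphs with the same edge set, a convenient sufficient condition for (1.5) is an edgewise one: if both $\pi(x)/\pi'(x)$ and $\pi(u)P(u,v)/(\pi'(u)P'(u,v))$ lie in $[A,1/A]$ for all vertices and edges, then (1.5) holds with that A, since the Dirichlet form is a nonnegative combination of the edge terms. The Python sketch below (hypothetical function names; a certificate only, not a necessary condition) computes the best such A for a bounded perturbation of edge weights and spot-checks the Dirichlet form comparison on random test functions.

```python
import random

def network_chain(n, conductances):
    """pi and Q(u, v) = pi(u) P(u, v) for the walk with conductances {(u, v): c}."""
    total = 2 * sum(conductances.values())
    pi = [0.0] * n
    for (u, v), c in conductances.items():
        pi[u] += c / total
        pi[v] += c / total
    Q = {e: c / total for e, c in conductances.items()}
    return pi, Q

def dirichlet_form(Q, f):
    """E(f, f): the 1/2 over ordered pairs becomes a single sum over edges."""
    return sum(q * (f[u] - f[v]) ** 2 for (u, v), q in Q.items())

def edgewise_constant(n, c, c_prime):
    """Largest A with all vertex and edge ratios in [A, 1/A] (equal edge sets assumed).

    This certifies (1.5) with this A; it is sufficient, not necessary.
    """
    pi, Q = network_chain(n, c)
    pi2, Q2 = network_chain(n, c_prime)
    ratios = [pi[x] / pi2[x] for x in range(n)] + [Q[e] / Q2[e] for e in c]
    return min(min(r, 1 / r) for r in ratios)

path = {(0, 1): 1.0, (1, 2): 1.0, (2, 3): 1.0}
path_perturbed = {(0, 1): 1.0, (1, 2): 2.0, (2, 3): 1.0}  # one edge weight doubled
A = edgewise_constant(4, path, path_perturbed)
print(A)  # 2/3 up to rounding
```

Here the binding constraint is the doubled middle edge, whose edge measure drops from $1/6$ to... rather rises from $1/6$ to $1/4$, giving the ratio $2/3$.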

We also define robustness for Markov chains in continuous time; in this case, we replace $P(u,v)$ above with $\mathcal L(u,v)$, the infinitesimal rate of transition from u to v, but otherwise the definition remains the same.

If P and $P'$ are SRWs on $(a,b)$ quasi-isometric graphs with the same vertex set (with the quasi-isometry being the identity), whose maximal degrees are at most D, then (1.5) holds with some A depending only on $(a,b,D)$ [Reference Diaconis and Saloff-Coste14]. Thus, a robust quantity is also quasi-isometry invariant between graphs of bounded degree on the same vertex set.

Each notion has its advantages and disadvantages relative to the other. Quasi-isometry has the flexibility that the spaces compared need not be identical or even of the same type; indeed, the fact that a Lie group (a continuous metric space, indeed a manifold) is quasi-isometric to any cocompact lattice in it (a discrete metric space) plays an important role in group theory. Robustness has the advantage that unbounded degrees are handled seamlessly.

Returning to our results, since the examples of our Theorem 1.1 are not of bounded degree, it is natural to ask whether they satisfy a comparison of Dirichlet forms of the form (1.5). In fact, they do, because in these examples our pair of sets of generators $S_n$ and $S_n'$ (from the statement of Theorem 1.1) satisfies, for all n, that $S_n \subset S_n'$ and that any $s' \in S_n' \setminus S_n$ can be written as $s_{1}(s')s_2(s')s_3(s') \in S_n^3=\{xyz:x,y,z \in S_n \} $ in a manner satisfying that

It is standard and not difficult to see that (1.6) implies the comparison of Dirichlet forms condition (1.5) (see, e.g., [Reference Berestycki8, Theorem 4.4]). Thus, the examples of Theorem 1.1 also satisfy (1.5) with A being a universal constant. We remark that, in general, $S \subset S' \subseteq S^3$ is sufficient for deriving (1.5) only with an A that may depend on $|S'|$.
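To make the condition $S\subset S'\subseteq S^3$ concrete: a transposition can often be written as a product of three generators, e.g., $(a\,c)=(a\,b)(b\,c)(a\,b)$, so adding $(a\,c)$ to a generating set containing $(a\,b)$ and $(b\,c)$ stays inside $S^3$. The Python sketch below checks this by brute force in $\mathfrak S_4$. The generating set (the three "path" transpositions) is a hypothetical toy example of ours, not the $S_n$ of Theorem 1.1, and the sketch does not address condition (1.6).

```python
from itertools import product

def transposition(n, a, b):
    """The permutation of {0, ..., n-1} swapping a and b, as a tuple of images."""
    perm = list(range(n))
    perm[a], perm[b] = perm[b], perm[a]
    return tuple(perm)

def compose(p, q):
    """The permutation i -> p[q[i]] (apply q first, then p)."""
    return tuple(p[q[i]] for i in range(len(q)))

def cube(S):
    """The set S^3 = {xyz : x, y, z in S}."""
    return {compose(x, compose(y, z)) for x, y, z in product(S, repeat=3)}

def extra_generators_in_cube(S, S_prime):
    """Check that S is contained in S_prime and every element of S_prime - S lies in S^3."""
    S3 = cube(S)
    return S <= S_prime and all(s in S3 for s in S_prime - S)

n = 4
t = {(a, b): transposition(n, a, b) for a in range(n) for b in range(a + 1, n)}
S = {t[(0, 1)], t[(1, 2)], t[(2, 3)]}  # hypothetical "path" generators
print(compose(t[(0, 1)], compose(t[(1, 2)], t[(0, 1)])) == t[(0, 2)])  # True
print(extra_generators_in_cube(S, S | {t[(0, 2)], t[(1, 3)]}))  # True
print(extra_generators_in_cube(S, S | {t[(0, 3)]}))  # False: (0 3) needs 5 path steps
```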

1.6 Remarks and open problems

We start with a remark on the Liouville property problem, which was a significant motivation for this work. An infinite graph with finite degrees is called Liouville if every bounded harmonic function is constant (a function f on the vertices of a graph is called harmonic if $f(x)$ is equal to the average of f on the neighbors of x for all x).

An open problem in geometric group theory is whether the Liouville property is quasi-isometry invariant in the setup of Cayley graphs (and, in the spirit of the aforementioned question of Benjamini, whether it is preserved under deletion of some generators, possibly by passing to a subgroup if the smaller set of generators does not generate the group). The problem of stability of the Liouville property is related to that of mixing times. Indeed, the example of Lyons [Reference Lyons37] mentioned above, which is the basis for all previous examples of sensitivity, was in fact an example of the instability of the Liouville property (for non-transitive graphs).

A result of Kaimanovich and Vershik (see [Reference Kaimanovich and Vershik31] or [Reference Lyons and Peres35, Chapter 14]) states that for Cayley graphs, the Liouville property is equivalent to the walk having zero speed. Of course, since our graphs are finite, there is no unique number to be designated as “speed,” as in the Kaimanovich–Vershik setting. Still, it seems natural to study the behavior of $\textrm {dist}(X_t,1)$ as a function of t, where $X_t$ is the random walk, 1 is the identity permutation (and the starting point of the walker), and dist is the graph distance in the relevant Cayley graph (with respect to $S_n$ or $S_n'$, as the case may be). Interestingly, perhaps, these functions increase linearly for the better part of the process for both our $S_n$ and $S_n'$, so we cannot reasonably claim to show some version of instability of the speed for finite graphs. (We will not prove this claim, but it is not difficult.)
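As a point of reference for $\textrm{dist}(X_t,1)$, consider the special case in which the generating set consists of all transpositions (the Cayley graph of the interchange process on the complete graph; this is an assumption for illustration, not the $S_n$ of Theorem 1.1). There the distance has a classical closed form: n minus the number of cycles of the permutation. The Python sketch below (function names are ours) verifies this against breadth-first search for $n=4$.

```python
from collections import deque
from itertools import permutations

def num_cycles(perm):
    """Number of cycles (fixed points included) of a permutation given as a tuple."""
    seen = [False] * len(perm)
    count = 0
    for i in range(len(perm)):
        if not seen[i]:
            count += 1
            j = i
            while not seen[j]:
                seen[j] = True
                j = perm[j]
    return count

def cayley_distance(perm):
    """Distance to the identity when all transpositions are generators."""
    return len(perm) - num_cycles(perm)

def all_transpositions(n):
    gens = []
    for a in range(n):
        for b in range(a + 1, n):
            perm = list(range(n))
            perm[a], perm[b] = b, a
            gens.append(tuple(perm))
    return gens

def bfs_from_identity(n, gens):
    """Graph distance from the identity in the Cayley graph of the symmetric group."""
    identity = tuple(range(n))
    dist = {identity: 0}
    queue = deque([identity])
    while queue:
        p = queue.popleft()
        for s in gens:
            q = tuple(p[s[i]] for i in range(n))  # one step: p -> p s
            if q not in dist:
                dist[q] = dist[p] + 1
                queue.append(q)
    return dist

dist = bfs_from_identity(4, all_transpositions(4))
print(all(dist[p] == cayley_distance(p) for p in permutations(range(4))))  # True
```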

Due to the relation to the Liouville problem, there is interest in reducing the degrees in Theorem 1.1. We note that since our $S_n$ is a set of transpositions, we must have $|S_{n}| \le \binom {n}{2}\asymp \left (\frac {\log | \mathfrak {S}_{n}|}{\log \log |\mathfrak {S}_{n}|}\right )^{2}$. As explained in the proof sketch section above, in our construction, there is a set $K$ such that $|K| = n(1-o(1))$ and all of the transpositions of the form $(a,b)$ with $a,b \in K$ belong to $S_n$. Hence $|S_n| \asymp n^2$.
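The asymptotic relation $\binom{n}{2}\asymp\big(\log|\mathfrak S_n|/\log\log|\mathfrak S_n|\big)^2$ follows from Stirling's formula; here is a short derivation (ours, included only to spell out the arithmetic):

$$ \begin{aligned} \log|\mathfrak{S}_n| &= \log n! = n\log n - n + O(\log n) = n\log n\,(1+o(1)),\\ \log\log|\mathfrak{S}_n| &= \log n + \log\log n + o(1) = \log n\,(1+o(1)),\\ \Big(\frac{\log|\mathfrak{S}_n|}{\log\log|\mathfrak{S}_n|}\Big)^{2} &= n^{2}(1+o(1)) \asymp \binom{n}{2}. \end{aligned} $$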

Let us mention two possible approaches to reducing the size of $S_n$. The first is to replace the complete graph over K in the construction by an expander. In this case, we would have $|S_{n}| \asymp n$. It seems reasonable that this approach works, but we have not pursued it. Let us remark at this point that the mixing time of the interchange process on an expander is not known, the best known upper bound being $\log ^2 n$ [Reference Alon and Kozma4] (see also [Reference Hermon and Pymar29]).

The second, and more radical, approach is to replace the $\binom {|K|}{2}$ transpositions corresponding to pairs from K by some number m (importantly, independent of n) of random permutations of the set K, obtained by picking m independent random perfect matchings of the set K and, for each perfect matching, taking the permutation that transposes each matched pair. (If $|K|$ is odd, we keep one random element unmatched.) Note that the Cayley graph then no longer corresponds to an interchange process, and that approximately $n^2$ elements have been replaced by a constant number. The degree would still be unbounded because of the other part of the graph. Again, we did not pursue this approach. One might wonder whether it is possible to replace the entire graph, not just K, by matchings, but this changes the mixing time significantly.
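The random generators of this second approach are easy to sample. In the Python sketch below (a hypothetical illustration; the function name, the choice $m=3$, and the toy set $K=\{0,\dots,6\}$ are ours), a uniformly random perfect matching is obtained by shuffling K and pairing consecutive entries, and each matching is turned into the involution that swaps the matched pairs; when $|K|$ is odd, the last element of the shuffled list (a uniformly random element) stays fixed.

```python
import random

def random_matching_permutation(K, rng):
    """Involution of K swapping the pairs of a uniform random perfect matching.

    Shuffling K uniformly and pairing consecutive entries yields a uniform perfect
    matching; if |K| is odd, the last (uniformly random) element stays unmatched.
    """
    elems = list(K)
    rng.shuffle(elems)
    perm = {x: x for x in elems}
    for i in range(0, len(elems) - 1, 2):
        a, b = elems[i], elems[i + 1]
        perm[a], perm[b] = b, a
    return perm

rng = random.Random(0)
m = 3  # hypothetical number of matchings, independent of n
K = list(range(7))
generators = [random_matching_permutation(K, rng) for _ in range(m)]
print([sum(g[x] == x for x in K) for g in generators])  # one fixed point each: [1, 1, 1]
```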

Question 1.1 Can one take the set of generators $S_n$ to be of constant size? (Certainly not with transpositions, but with general subsets of $\mathfrak {S}_n$, or with other groups.) If not, can one take $|S_n|$ to diverge arbitrarily slowly as a function of $|G_n|$? Is there a relation between the degree of the graph and the maximal amount of distortion of the mixing time which is possible?

A related question is the following.

Question 1.2 Does the aforementioned question of Benjamini have an affirmative answer for bounded degree Cayley graphs?

Here are two questions about the sharpness of our $\log \log \log $ term.

Question 1.3 Does there exist a sequence of finite groups $G_n$ of diverging sizes, and sequences of generators $S_n \subset S_n' \subseteq S_n^i$ for some $i \in \mathbb {N}$ (independent of n) for all n, such that $|S_n'| \lesssim |S_n|$ and

Question 1.4 Can one have in the setup of Theorem 1.3

The opposite inequalities to (1.7) and (1.8) hold since the spectral gap is a quasi-isometry invariant (see Section 2.1) and, on the other hand, determines the mixing time of a random walk on an n-vertex graph up to a factor $2 \log n$ (see, e.g., [Reference Levin, Peres, Wilmer, Propp and Wilson33, Section 12.2]).

Our last question pertains to Theorem 2.5. It is inspired by a question of Itai Benjamini on the Liouville property in the infinite setting.

Question 1.5 Let $G=(V,E)$ be a finite connected vertex-transitive graph. Is the uniform (or $L^2$) mixing time robust under bounded perturbations of the edge weights? (Of course, this is open only when the perturbation does not respect the transitivity.) Likewise, does there exist some $C(a,b,d)>0$ (independent of G) such that if the degree of G is d and $G$ is $(a,b)$-quasi-isometric to $G'$ (which, again, need not be vertex-transitive), then the uniform mixing times of the SRWs on the two graphs differ by at most a factor $C(a,b,d)$?

We end the introduction with a few cases for which the mixing time is known to be robust. Robustness of the TV and $L_{\infty }$ mixing times for all reversible Markov chains under changes to the holding probabilities (i.e., under changing the weight of each loop by at most a constant factor) was established in [Reference Peres and Sousi41] by Peres and Sousi and in [Reference Hermon and Peres28] by Hermon and Peres. Boczkowski, Peres, and Sousi [Reference Boczkowski, Peres and Sousi9] constructed an example demonstrating that this may fail without reversibility. Robustness of the TV and $L_{\infty }$ mixing times for general (weighted) trees under bounded perturbations of the edge weights was established in [Reference Peres and Sousi41] by Peres and Sousi and in [Reference Hermon and Peres28] by Hermon and Peres. Robustness of TV mixing times for general trees under quasi-isometries (where one of the graphs need not be a tree, but is “tree-like” in that it is quasi-isometric to a tree) was established in [Reference Addario-Berry and Roberts1] by Addario-Berry and Roberts.

In many cases, known robust quantities provide upper and lower bounds on the mixing time which match up to a constant factor. For example, in the torus $\{1,\dotsc ,\ell \}^d$ with nearest neighbor lattice edges, the mixing time is bounded above by the isoperimetric profile bound on the mixing time [Reference Morris and Peres39] and below by the inverse of the spectral gap. For a fixed $d,$ both bounds are $\Theta (\ell ^2)$. As both quantities are robust, any graph quasi-isometric to the torus would have mixing time $\Theta ( \ell ^2)$, as in the torus. In fact, the same holds for bounded degree Cayley graphs of moderate growth (see, e.g., [Reference Hermon and Pymar29, Section 7]). Moderate growth is a technical condition, due to Diaconis and Saloff-Coste [Reference Diaconis and Saloff-Coste15], who determined the order of the mixing time and the spectral gap for such Cayley graphs. Breuillard and Tointon [Reference Breuillard and Tointon11] showed that for Cayley graphs of bounded degree this condition is equivalent, in some precise quantitative sense, to the condition that the diameter is at least polynomial in the size of the group.
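A quick numerical illustration of the spectral-gap lower bound on the torus: for the continuous-time walk with rate 1 on each edge (a normalization we assume here; other normalizations change only constants depending on d), the eigenvalues of $-\mathcal L$ are sums over coordinates of $2(1-\cos(2\pi k/\ell))$, so for $\ell\ge 3$ the smallest non-zero one is $2(1-\cos(2\pi/\ell))$ for every d, and $t_{\mathrm{rel}}\approx \ell^2/(4\pi^2)$. The function name below is ours.

```python
import math

def torus_relaxation_time(ell):
    """t_rel of the rate-1-per-edge walk on the torus {1,...,ell}^d, ell >= 3.

    The smallest non-zero eigenvalue of -L is 2(1 - cos(2*pi/ell)), whatever d is.
    """
    return 1 / (2 * (1 - math.cos(2 * math.pi / ell)))

for ell in (4, 10, 100, 1000):
    t_rel = torus_relaxation_time(ell)
    # The last column tends to 1, i.e., t_rel is asymptotic to ell^2 / (4 pi^2).
    print(ell, t_rel, t_rel * 4 * math.pi ** 2 / ell ** 2)
```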

Lastly, in a recent work [Reference Lyons and White36], R. Lyons and White showed that for finite Coxeter systems, increasing the rates of one or more generators does not increase the $L_p$ distance between the distribution of the walk at a given time $t$ and the uniform distribution, for any $p \in [1,\infty ]$. Since multiplying all rates by exactly a factor $C$ changes the mixing time by exactly a factor $1/C$, this implies that the mixing time is robust under bounded perturbations of the rates of the generators.
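The last implication can be spelled out in two lines. The notation below is introduced only for this sketch:

- $\mathcal L_{\mathbf r}$ is the generator of the continuous-time walk with rate vector $\mathbf r$.
- $d_{\mathbf r}(t)$ is the worst-case $L_p$ distance to uniform at time $t$.
- $t_{\mathrm{mix}}(\mathbf r)=\inf\{t: d_{\mathbf r}(t)\le\varepsilon\}$ for some fixed threshold $\varepsilon$.

Suppose $\mathbf r\le\mathbf r'\le C\mathbf r$ coordinatewise, with $C\ge 1$.

```latex
\begin{align*}
  \mathcal L_{C\mathbf r} = C\,\mathcal L_{\mathbf r}
    &\;\Longrightarrow\; e^{t\mathcal L_{C\mathbf r}} = e^{(Ct)\mathcal L_{\mathbf r}}
    \;\Longrightarrow\; t_{\mathrm{mix}}(C\mathbf r) = t_{\mathrm{mix}}(\mathbf r)/C,\\
  \mathbf r \le \mathbf r' \le C\mathbf r
    &\;\Longrightarrow\; d_{C\mathbf r}(t) \le d_{\mathbf r'}(t) \le d_{\mathbf r}(t)
    \quad \text{for all } t \ge 0 \quad \text{(monotonicity in the rates)},\\
  &\;\Longrightarrow\; t_{\mathrm{mix}}(\mathbf r)/C
    \le t_{\mathrm{mix}}(\mathbf r') \le t_{\mathrm{mix}}(\mathbf r).
\end{align*}
```

Only the first line uses the exact scaling. The second and third lines use nothing beyond the monotonicity result quoted above.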

1.7 Notation

We denote

![]() $[n]=\{1,\dotsc ,n\}$

. We denote by

$[n]=\{1,\dotsc ,n\}$

. We denote by

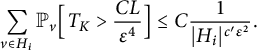

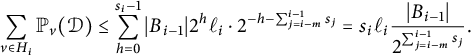

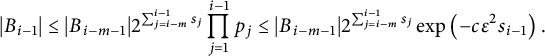

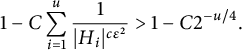

![]() $\mathbb {P}_v$

probabilities of random walk starting from v, which should be a vertex of the relevant graph. We denote by c and C arbitrary positive universal constants which may change from place to place. We will use c for constants which are small enough and C for constants which are large enough. We will occasionally number them for clarity. We denote

$\mathbb {P}_v$

probabilities of random walk starting from v, which should be a vertex of the relevant graph. We denote by c and C arbitrary positive universal constants which may change from place to place. We will use c for constants which are small enough and C for constants which are large enough. We will occasionally number them for clarity. We denote

![]() $X\lesssim Y$

for

$X\lesssim Y$

for

![]() $X\le CY$

and

$X\le CY$

and

![]() $X\asymp Y$

for

$X\asymp Y$

for

![]() $X\lesssim Y$

and

$X\lesssim Y$

and

![]() $Y\lesssim X$

. We denote

$Y\lesssim X$

. We denote

![]() $X\ll Y$

for

$X\ll Y$

for

![]() $X=o(Y)$

. Throughout, we do not distinguish between a graph G and its set of vertices, denoting the latter by G as well. The set of edges of G will be denoted by

$X=o(Y)$

. Throughout, we do not distinguish between a graph G and its set of vertices, denoting the latter by G as well. The set of edges of G will be denoted by

![]() $E(G)$

.

$E(G)$

.

2 Preliminaries

Definition 2.1 Let $\Gamma $ be a finitely generated group, and let $S$ be a finite set of generators satisfying $s\in S\iff s^{-1}\in S$. We define the Cayley graph of $\Gamma $ with respect to $S$, denoted by $\operatorname {\mathrm {Cay}}(\Gamma ,S)$, as the graph whose vertex set is $\Gamma $ and whose edges are

Definition 2.2 Let $G$ be a weighted graph, and let $(r(e))_{e\in E(G)}$ be the weights. The interchange process on $G$ is a continuous-time process in which a distinct particle is placed on every vertex. Each edge $e$ of $G$ is equipped with an independent Poisson clock which rings at rate $r(e)$. When the clock rings, the two particles at the two endpoints of $e$ are exchanged.

The interchange process is always well defined for finite graphs, which is the case of interest here. For infinite graphs, it is well defined under some mild conditions on the degrees and on $r$. The interchange process on a graph $G$ of size $n$ is equivalent to a continuous-time random walk $X_t$ on $\mathfrak {S}_n$. The generating set $S$ of this walk consists of all transpositions $(xy)$ (in cycle notation) such that $xy$ is an edge of $G$, and the rate of the transposition $(xy)$ is $r(xy)$. The position of the $i{\textrm {th}}$ particle at time $t$ is then $X_t^{-1}(i)$, where the inverse is taken in $\mathfrak {S}_n$.
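The correspondence between particles and permutations can be sketched in a few lines of Python (standard library only). The sketch adopts one convention of our own: $X_t$ is stored as the list `occupant`, where `occupant[v]` is the label of the particle at vertex $v$. Under this convention a ring of the clock on $xy$ replaces $X_t$ by $X_t\circ(xy)$, and the position of particle $i$ is $X_t^{-1}(i)$.

The clocks are simulated with the standard Gillespie method. The next ring occurs after an exponential time with rate $\sum_e r(e)$, at an edge chosen with probability proportional to $r(e)$. All function names are hypothetical.

```python
import random


def compose(sigma, tau):
    """Return the permutation sigma o tau, i.e. v -> sigma[tau[v]]."""
    return [sigma[tau[v]] for v in range(len(tau))]


def inverse(sigma):
    """Return sigma^{-1} as a list."""
    inv = [0] * len(sigma)
    for v, i in enumerate(sigma):
        inv[i] = v
    return inv


def transposition(n, x, y):
    """The transposition (x y) in S_n, as a list."""
    tau = list(range(n))
    tau[x], tau[y] = y, x
    return tau


def simulate_interchange(n, rates, t_end, rng):
    """Run the interchange process on vertices 0..n-1 up to time t_end.

    rates maps an edge (x, y) to its rate r(xy) > 0. Returns occupant,
    where occupant[v] is the particle at vertex v (initially particle v).
    """
    edges = list(rates)
    weights = [rates[e] for e in edges]
    total = sum(weights)
    occupant = list(range(n))
    t = rng.expovariate(total)  # time of the first ring
    while t <= t_end:
        x, y = rng.choices(edges, weights=weights)[0]
        # Exchanging the two particles is right multiplication by (x y).
        occupant = compose(occupant, transposition(n, x, y))
        t += rng.expovariate(total)  # waiting time until the next ring
    return occupant


# Example: the path 0-1-2-3 with unequal rates.
rng = random.Random(1)
occ = simulate_interchange(4, {(0, 1): 1.0, (1, 2): 2.0, (2, 3): 0.5}, 3.0, rng)
positions = inverse(occ)  # positions[i] = vertex of particle i, i.e. X_t^{-1}(i)
print(occ, positions)
```

Composing full permutations costs $O(n)$ per ring. A direct swap of `occupant[x]` and `occupant[y]` is equivalent, but the version above keeps the group-theoretic description visible.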

2.1 Comparison of Dirichlet forms

Recall the condition (1.5) for comparison of Dirichlet forms. When it holds, it implies a comparison of the eigenvalues: if $0=\lambda _1 \le \lambda _2 \le \cdots \le \lambda _{n}$ and $0=\lambda _1 '\le \lambda _2 '\le \cdots \le \lambda _{n}'$ are the eigenvalues of $I-P$ and $I-P'$, respectively, then under (1.5) (see, e.g., [Reference Aldous and Fill3, Corollary 8.4] or [Reference Berestycki8, Corollary 4.1]),

The same inequality holds for the eigenvalues of the Markov generators $-\mathcal L$ and $-\mathcal L'$ in continuous time. Here $\mathcal L(x,y)=r(xy)$ for $x \neq y$ and $\mathcal L(x,x)=-\sum _{y:\, y \neq x}r(xy)$, where $r(xy)$ is the rate of the edge $(xy)$, with the convention that $r(xy)=0$ if $xy \notin E$. The proof is the same as in the discrete case (see, again, [Reference Berestycki8, Corollary 4.1]). The quantity $\lambda _2$ is called the spectral gap. It follows that the spectral gap is robust under bounded perturbations of the edge weights.
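This robustness can be checked numerically on a small example. The sketch below (Python, standard library only) does the following:

- It builds the matrix of $-\mathcal L$ for a weighted cycle.
- It computes its eigenvalues with the classical cyclic Jacobi rotation method.
- It compares $\lambda_2$ for unit rates with $\lambda_2'$ for rates $r'$ satisfying $r\le r'\le 3r$.

In this special case the comparison reduces to an elementary Loewner-order fact. Both $(-\mathcal L')-(-\mathcal L)$ and $3(-\mathcal L)-(-\mathcal L')$ are weighted graph Laplacians, hence positive semidefinite, so $\lambda_k\le\lambda_k'\le 3\lambda_k$ for every $k$. This illustrates the comparison above but is not a substitute for the general statement. The cycle, the rates and the function names are chosen only for illustration.

```python
import math


def generator(n, rates):
    """Matrix of -L for symmetric rates {(x, y): r(xy)} on vertices 0..n-1."""
    a = [[0.0] * n for _ in range(n)]
    for (x, y), r in rates.items():
        a[x][y] -= r
        a[y][x] -= r
        a[x][x] += r
        a[y][y] += r
    return a


def jacobi_eigenvalues(a, tol=1e-24, max_sweeps=100):
    """Eigenvalues (ascending) of a real symmetric matrix, cyclic Jacobi method."""
    n = len(a)
    a = [row[:] for row in a]
    for _ in range(max_sweeps):
        off = sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j)
        if off < tol:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if abs(a[p][q]) < 1e-300:
                    continue
                # Rotation chosen so that the updated entry a[p][q] vanishes.
                theta = (a[q][q] - a[p][p]) / (2.0 * a[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta * theta + 1.0))
                c = 1.0 / math.sqrt(t * t + 1.0)
                s = t * c
                for k in range(n):  # columns p, q:  A <- A J
                    akp, akq = a[k][p], a[k][q]
                    a[k][p] = c * akp - s * akq
                    a[k][q] = s * akp + c * akq
                for k in range(n):  # rows p, q:  A <- J^T A
                    apk, aqk = a[p][k], a[q][k]
                    a[p][k] = c * apk - s * aqk
                    a[q][k] = s * apk + c * aqk
    return sorted(a[i][i] for i in range(n))


def spectral_gap(n, rates):
    """lambda_2 of -L: its smallest nonzero eigenvalue on a connected graph."""
    return jacobi_eigenvalues(generator(n, rates))[1]


n = 8
cycle = {(x, (x + 1) % n): 1.0 for x in range(n)}
perturbed = {e: 1.0 + 2.0 * (e[0] % 2) for e in cycle}  # rates alternate 1, 3
gap, gap_perturbed = spectral_gap(n, cycle), spectral_gap(n, perturbed)
# For unit rates on the n-cycle, lambda_2 = 2 - 2*cos(2*pi/n).
print(gap, 2 - 2 * math.cos(2 * math.pi / n), gap_perturbed)
```

Jacobi's method is chosen only because it is short and needs no external library. For anything beyond toy sizes, a LAPACK-backed symmetric eigensolver is the natural choice.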

2.2 Mixing times

We now define the relevant notions of mixing: total variation, $L_2$ and uniform. We start with the total variation mixing time, which is the topic of this paper and which we will simply call the mixing time.

Definition 2.3 Let $X_t$ be a Markov chain on a finite state space (in continuous or discrete time) with stationary measure $\pi $, and denote the probability that $X_t=y$ conditioned on $X_0=x$ by $P_t(x,y)$. Then the mixing time is defined by

In discrete time, we often assume that $X_t$ is lazy, i.e., that at each step ${\mathbb P}(X_{t+1}=X_t)\ge \frac 12$, and we will not state this explicitly. In particular, the mixing time in Theorem 1.1 is for the lazy chain. (Without laziness, issues of bipartiteness and near bipartiteness arise, which have little theoretical or practical interest; see, e.g., [Reference Basu, Hermon and Peres6, Remark 1.9] and [Reference Hermon and Peres27, Reference Peres and Sousi41].)
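The effect of laziness can be seen numerically. The sketch below (Python, standard library only) computes $\max_x\|P_t(x,\cdot)-\pi\|_{TV}$ for a doubly stochastic transition matrix, so that $\pi$ is uniform, by iterating the chain. It returns the first $t$ at which this quantity is at most $1/4$.

The threshold $1/4$ is an assumed, though common, convention; the threshold in Definition 2.3 may differ. The function names are hypothetical.

- For simple random walk on an even cycle, the non-lazy walk is periodic, so its distance to $\pi$ never drops below $1/2$.
- The lazy walk, which holds with probability $1/2$, mixes in time of order $n^2$.

```python
def cycle_walk(n, lazy):
    """Transition matrix of SRW on Z_n; the lazy version holds with prob. 1/2."""
    hold = 0.5 if lazy else 0.0
    move = (1.0 - hold) / 2.0
    p = [[0.0] * n for _ in range(n)]
    for x in range(n):
        p[x][x] += hold
        p[x][(x + 1) % n] += move
        p[x][(x - 1) % n] += move
    return p


def step(mu, p):
    """One step of the chain: the row vector mu times the matrix p."""
    n = len(p)
    return [sum(mu[x] * p[x][y] for x in range(n)) for y in range(n)]


def tv_to_uniform(mu):
    """Total variation distance between mu and the uniform distribution."""
    n = len(mu)
    return 0.5 * sum(abs(m - 1.0 / n) for m in mu)


def mixing_time(p, eps=0.25, t_max=10**5):
    """First t with max_x ||P_t(x, .) - pi||_TV <= eps (p doubly stochastic).

    Returns None if this does not happen by time t_max.
    """
    n = len(p)
    rows = [[1.0 if y == x else 0.0 for y in range(n)] for x in range(n)]  # P_0
    for t in range(t_max + 1):
        if max(tv_to_uniform(mu) for mu in rows) <= eps:
            return t
        rows = [step(mu, p) for mu in rows]
    return None


print(mixing_time(cycle_walk(8, True)), mixing_time(cycle_walk(16, True)))
# The non-lazy walk on an even cycle alternates between the two parity
# classes, so its distance to uniform stays >= 1/2 and this prints None.
print(mixing_time(cycle_walk(8, False), t_max=200))
```

Tracking every starting point is wasteful for a vertex-transitive chain such as the cycle, where one row suffices. The general form is kept so that the function matches the worst-case definition.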

The other notions we are interested in are the $L_2$ and uniform mixing times and the average $L_2$ mixing time. Here are the relevant definitions.

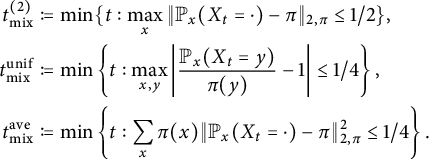

Definition 2.4 Let $X_t$, $\pi $ and