Introduction

The electrocardiogram (ECG) is a non-invasive diagnostic tool that is regularly performed at the bedside in the emergency department (ED).Reference Fisch 1 Electrocardiographic abnormalities may be the first indication of ischemia, metabolic disturbance, or life-threatening arrhythmias.Reference Fisch 1 To ensure timely diagnosis and appropriate management of patients, emergency medicine (EM) physicians must provide rapid and reliable interpretation of ECGs, and EM programs must ensure competence of their trainees in this skill.

There is little evidence-based literature on the optimal techniques for learning, maintaining and assessing competency in ECG interpretation.Reference Salerno, Alguire and Waxman 2 In 2001, the American College of Cardiology and the American Heart Association (ACC/AHA) produced a Clinical Competence Statement on Electrocardiography in which they defined the minimum education, training, experience, and cognitive and technical skills necessary for the competent reading and interpretation of adult ECGs.Reference Kadish, Buxton and Kennedy 3 Unfortunately, this document was not evidence-based, did not address ECG interpretation in the EM context, and was not endorsed by the Society for Academic Emergency Medicine.Reference Michelson and Brady 4

Research in cognitive psychology and medical education suggests that ECG diagnosis involves two distinct reasoning systems: analytic and non-analytic.Reference Evans 5 , Reference Norman and Eva 6 The analytic system involves a controlled, systematic consideration of features and their relation to potential diagnoses. The non-analytic system involves rapid processes such as pattern recognition, in which the correct diagnosis is recognized by its similarity to illness scripts: mental representations of clinical knowledge built from cases seen in the past.Reference Eva 7 , Reference Charlin, Boshuizen and Custers 8 Recent studies support an additive model of diagnostic reasoning that highlights the importance of both these feature-oriented and similarity-based strategies, suggesting that trainees base their interpretation on what they have previously seen.Reference Ark, Brooks and Eva 9

Competence has been defined as the relationship between a person’s abilities and the tasks he or she is required to perform in a particular situation in the real world.Reference Epstein 10 Sherbino et al propose that emergency physicians’ competence should be: 1) based on abilities, 2) derived from a set of domains that define the field of EM, 3) measurable, and 4) specific to the EM context.Reference Epstein 10 , Reference Sherbino, Bandiera and Frank 11

Evidence suggests that EM residents are able to interpret ECGs. A 2004 survey showed that the majority of residency program directors in the United States were “comfortable” or “very comfortable” with their senior residents’ ability to interpret ECGs.Reference Pines, Perina and Brady 12 However, these abilities have not yet been specifically defined for the EM context, nor can they yet be measured.

To address the competence of EM residents in adult ECG interpretation, we must first identify the adult ECG knowledge specifically required of EM trainees. This study sought to define a list of adult ECG diagnoses and/or findings relevant to the EM training context.

Methods

After obtaining approval from the Institutional Review Board (IRB), we used the Delphi technique to develop consensus among a panel of EM residency program directors. The Delphi technique aggregates opinions anonymously through a series of questionnaires, or “rounds,” conducted by electronic exchange. It is an iterative process that systematically collects judgments on a particular topic using sequential questionnaires interspersed with summarized feedback. In our study, panelists were given a questionnaire (round 1) and asked to provide answers. For round 2, panelists were sent the results of round 1, which included the average rating of each item, panelist comments, and new items suggested by panel members. Panelists were asked to reconsider and potentially change their ratings, taking into account the ratings given by the other panel members and their comments, and to rate the new items. This process was repeated over subsequent rounds.

Consensus was defined as at least 75% agreement on the rating of an item at round 2 or later. In the absence of consensus, stability of opinion was assessed. Stability was measured as the consistency of answers between successive rounds and was defined as a shift of 20% or less between rounds. Because the literature disagrees on how consensus and stability should be defined, these thresholds were set a priori, based on values used in similar studies.Reference Holey, Feeley and Dixon 13 - Reference Scheibe, Skutsch and Schofer 16 Once an item achieved consensus or stability, it was removed from further rounds of the Delphi process. The process was “quasi-anonymous”: respondents’ identities were known only to the primary investigator (PI), to allow for reminders and feedback in subsequent rounds, while participants’ ratings and opinions remained anonymous to the other members of the panel.
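The consensus and stability rules described above can be expressed as a short per-item decision procedure. The Python sketch below is illustrative only and is not the software used in the study. It assumes that the same panelists rate an item in every round, that “agreement” means the share of panelists choosing the most common rating, and that the 20% “shift” is the share of panelists who changed their rating between consecutive rounds; the Methods do not specify these operational details, and all function names are hypothetical.

```python
from collections import Counter
from typing import List, Optional, Sequence

CONSENSUS_THRESHOLD = 0.75  # from the Methods: at least 75% agreement
STABILITY_THRESHOLD = 0.20  # from the Methods: shift of 20% or less


def agreement(ratings: Sequence[int]) -> float:
    """Share of panelists giving the most common (modal) rating."""
    top_count = Counter(ratings).most_common(1)[0][1]
    return top_count / len(ratings)


def shift(previous: Sequence[int], current: Sequence[int]) -> float:
    """Assumed stability measure: share of panelists whose rating changed."""
    if len(previous) != len(current):
        raise ValueError("sketch assumes the same panelists in both rounds")
    changed = sum(1 for a, b in zip(previous, current) if a != b)
    return changed / len(current)


def classify_item(rounds: List[Sequence[int]]) -> Optional[str]:
    """Return 'consensus', 'stability', or None for one item.

    rounds[0] holds the round 1 ratings. Consensus is checked first
    (hierarchically), and only from round 2 onward; stability is checked
    only when consensus is absent. The first rule met ends the item's
    participation, mirroring its removal from later rounds.
    """
    for i in range(1, len(rounds)):
        if agreement(rounds[i]) >= CONSENSUS_THRESHOLD:
            return "consensus"
        if shift(rounds[i - 1], rounds[i]) <= STABILITY_THRESHOLD:
            return "stability"
    return None
```

For example, with hypothetical ratings from eight panelists, `classify_item([[3, 2, 3, 4, 2, 3, 3, 2], [3, 2, 3, 3, 2, 3, 3, 2]])` returns `"stability"`: round 2 agreement is only 5/8, but just one panelist (1/8) changed their rating.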

Study design, setting and population

We sought a panel of participants who would be knowledgeable about the topic being investigated, reasonably impartial, and interested in the research. We identified a purposive sample that included all EM training program directors in Canada (from both the Royal College of Physicians and Surgeons of Canada training programs and the College of Family Physicians of Canada fellowship programs), as well as an EM physician with expertise in emergency electrocardiography. These individuals were invited to participate as panelists in the online consensus-building process.

Sample size

A sample size of 31 participants was expected to provide representative information while maximizing the chances of an adequate response rate. The literature suggests that there is little benefit to panels that exceed 30 participants.Reference Delbecq and Van de Ven 17 The study’s a priori minimum acceptable number of respondents was 10.Reference Parenté and Anderson-Parenté 18

Questionnaire development

To develop an extensive list of possible ECG diagnoses and/or findings, one of the investigators (CP) conducted a detailed review of the published literature in cardiology and EM. The starting point for this search was the American Board of Internal Medicine 94-item question/answer sheet for the ECG portion of the cardiovascular disease board examination.Reference Auseon, Schaal and Kolibash 19 This list was compared with several EM and cardiology textbooks, and missing diagnoses and/or findings were added.Reference Chan, Brady and Harrigan 20 - Reference Surawicz and Knilans 23 The resulting list was then reviewed and modified by two EM physicians with ≥5 years of clinical practice. Finally, in the first two rounds of the Delphi process, participants were asked whether they would add any other ECG diagnoses and/or findings to the list, and those they identified were added to subsequent rounds. Each individual diagnosis and/or finding (with a brief description where necessary) was a separate item on the Delphi questionnaire.

The Delphi questionnaire was developed using a web-based survey tool (FluidSurveys®, available at www.fluidsurveys.com). Panelists were asked to rate each item’s relevance to EM trainees on a 4-point Likert scale anchored by the following descriptors and assigned ratings:

1) It is not important for EM trainees to be able to identify this diagnosis (1 point)
2) It would be nice for EM trainees to be able to identify this diagnosis/finding (2 points)
3) EM trainees should be able to identify this diagnosis/finding (3 points)
4) EM trainees must be able to identify this diagnosis/finding (4 points)

Panelists had the option of choosing “I am unfamiliar with this diagnosis,” which was assigned a rating of zero points. Panel members were given the opportunity to make comments about items and add any additional diagnoses at their discretion. The questionnaire was pilot tested with two EM physicians, and final edits were made to the questionnaire based on their feedback.
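The scoring scheme above maps each response option to a number of points. The sketch below shows one way to encode it; the short response keys and function names are hypothetical, and it assumes (the text does not say) that “I am unfamiliar with this diagnosis” responses, scored as zero, were included when averaging an item’s ratings.

```python
from statistics import mean
from typing import List, Sequence

# Points assigned to each response option, as described in the Methods.
# The keys are hypothetical short labels for the questionnaire descriptors.
POINTS = {
    "unfamiliar": 0,     # "I am unfamiliar with this diagnosis"
    "not_important": 1,  # "It is not important ... to identify"
    "nice": 2,           # "It would be nice ... to identify"
    "should": 3,         # "EM trainees should be able to identify"
    "must": 4,           # "EM trainees must be able to identify"
}


def score_responses(responses: Sequence[str]) -> List[int]:
    """Convert one item's response labels into Likert points."""
    return [POINTS[r] for r in responses]


def item_mean(responses: Sequence[str]) -> float:
    """Mean rating for one item, counting 'unfamiliar' as 0 (assumption)."""
    return mean(score_responses(responses))
```

Under this assumption a single “unfamiliar” response has a large effect on a small panel: three hypothetical ratings of must, must, and should average about 3.67, but adding one “unfamiliar” response lowers the mean to 2.75.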

Data collection

The online survey instrument was used to create, distribute, collect, and analyze responses. An introductory email and consent form were sent to all potential participants, and upon their agreement to participate, they were directed to round 1 of the Delphi questionnaire. Reminder invitations were sent at weekly intervals (three reminders per round). Participation in the panel was voluntary.

Data analysis

Statistical analysis of item ratings in each of the three rounds involved calculating measures of central tendency (means, medians, and modes) and dispersion (standard deviations and inter-quartile ranges) for all items. Because the ratings were ordinal data, medians and inter-quartile ranges were reported to panelists in rounds 2 and 3. For the purposes of the analysis, mean rating cut-offs for the different categories were arbitrarily defined: “EM trainees MUST be able to identify” (mean Likert rating >3.8), “EM trainees SHOULD be able to identify” (mean Likert rating 2.8-3.8), “it would be NICE for EM trainees to identify” (mean Likert rating 1.8 to <2.8), and “it is NOT IMPORTANT for EM trainees to identify” (mean Likert rating <1.8). Table 1 presents the number of diagnoses in each category. Repeated-measures analysis of variance was used to assess differences in standard deviations across rounds. Participant comments were not systematically analyzed.
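The descriptive statistics and category cut-offs described above can be sketched with Python’s standard `statistics` module. This is an illustration under assumptions, not the study’s analysis code: the function names are hypothetical, and the inter-quartile range uses the module’s default quantile method, which may differ from whatever method the survey tool applied. Per the stated ranges, a mean of exactly 3.8 falls in SHOULD and a mean of exactly 2.8 also falls in SHOULD.

```python
from statistics import mean, median, mode, quantiles, stdev
from typing import Dict, Sequence, Union


def category(mean_rating: float) -> str:
    """Map an item's mean Likert rating to the study's rating category."""
    if mean_rating > 3.8:
        return "MUST"
    if mean_rating >= 2.8:   # 2.8-3.8 inclusive
        return "SHOULD"
    if mean_rating >= 1.8:   # 1.8 to <2.8
        return "NICE"
    return "NOT IMPORTANT"   # <1.8


def summarize(ratings: Sequence[int]) -> Dict[str, Union[float, str]]:
    """Central tendency, dispersion, and category for one item's ratings.

    Requires at least two ratings (for stdev and quantiles).
    """
    q1, _, q3 = quantiles(ratings, n=4)  # quartile cut points
    item_mean = mean(ratings)
    return {
        "mean": item_mean,
        "median": median(ratings),
        "mode": mode(ratings),
        "sd": stdev(ratings),        # sample standard deviation
        "iqr": q3 - q1,
        "category": category(item_mean),
    }
```

For instance, hypothetical ratings of 4, 4, 4, and 3 have a median of 4 but a mean of 3.75, so the item falls in SHOULD rather than MUST. This illustrates why the choice of mean-based cut-offs matters even though medians were what panelists saw.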

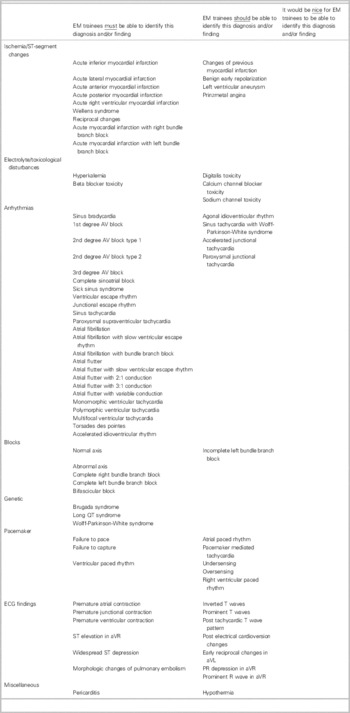

Table 1 Diagnoses and/or findings in each rating category

Abbreviations: EM, Emergency Medicine; ECG, Electrocardiogram.

Results

Of the 31 faculty members invited to participate in the Delphi process, 22 agreed, and 16 of the 22 (73%) panelists completed all three rounds of the Delphi consensus-gathering exercise. Except for one expert in EM electrocardiography, all panel members were program directors of accredited EM training programs in Canada, representing 11 different training programs. In round 1, a total of 118 adult ECG diagnoses and/or findings were rated. Panel members suggested three additional diagnoses, bringing the total number of items rated to 121 (Appendix A). The mean standard deviation across all 121 items decreased throughout the process: round 1 = 0.62, round 2 = 0.45, round 3 = 0.32. Analysis of variance showed that the standard deviations of successive rounds differed substantially from one another. For 112 of the 121 items, the standard deviations decreased progressively over the three rounds; for the remaining nine items, they remained virtually unchanged. Overall, 78 diagnoses reached consensus (Table 2), 42 achieved stability, and one diagnosis achieved neither consensus nor stability (Appendix A).
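The participation figures and the convergence pattern reported above can be checked with simple arithmetic. The sketch below is a hypothetical helper, not part of the study’s analysis. It computes the response rates from the reported counts and tests whether a sequence of standard deviations decreases strictly from round to round. It does not reproduce the analysis of variance.

```python
from typing import Dict, Sequence


def response_rates(invited: int, agreed: int, completed: int) -> Dict[str, float]:
    """Participation ratios at each stage of recruitment."""
    return {
        "agreed_of_invited": agreed / invited,
        "completed_of_agreed": completed / agreed,
        "completed_of_invited": completed / invited,
    }


def is_converging(sds: Sequence[float]) -> bool:
    """True if each round's SD is strictly smaller than the previous round's."""
    return all(later < earlier for earlier, later in zip(sds, sds[1:]))


def count_converging(per_item_sds: Dict[str, Sequence[float]]) -> int:
    """Number of items whose SD decreased progressively across rounds."""
    return sum(1 for sds in per_item_sds.values() if is_converging(sds))


if __name__ == "__main__":
    # Counts and mean SDs as reported in the Results.
    for name, value in response_rates(invited=31, agreed=22, completed=16).items():
        print(f"{name}: {value:.1%}")
    print(is_converging([0.62, 0.45, 0.32]))
```

Run as a script, this prints completion rates of 72.7% of those who agreed and 51.6% of those invited. It also confirms that the reported mean standard deviations decrease strictly across the three rounds. Applied to per-item standard deviations, `count_converging` would give the count of progressively converging items (112 of 121 in this study).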

Table 2 ECG diagnoses and/or findings achieving consensus

* Achieved neither consensus nor stability

Discussion

This study described the consensus opinion of Canadian academic EM residency program directors about which adult ECG diagnoses and/or findings EM physicians must know. The Delphi process allowed for repeated iterations of item rating and led to a reasonable consensus.Reference Smith and Simpson 24 Experts do not yet agree on a single valid measure of consensus in Delphi studies. This study used the hierarchical process described by Dajani et al,Reference Dajani, Sincoff and Talley 25 which first assesses participant consensus (the percentage of participants agreeing on a particular response) and then, in the absence of consensus, stability of opinion (consistency of answers between successive rounds of the questionnaire without meeting the predefined criteria for consensus); this approach is consistent with other Delphi studies.Reference Holey, Feeley and Dixon 13 - Reference Murry and Hammons 15 , Reference Dajani, Sincoff and Talley 25 , Reference Williams and Webb 26 The demonstration of convergence (a progressive decrease in the range and standard deviation of responses as rounds progressReference Greatorex and Dexter 27) suggested increasing panelist agreement and further supported the study conclusions. The anonymous nature of the process limited the influence of strong personalities and other group dynamics.Reference Williams and Webb 26

Overall, participants agreed that EM trainees must or should be able to identify the majority of the adult ECG findings and/or diagnoses. This finding is notable because it indicates that there is no distinct EM-specific list of diagnoses and/or findings, of the kind previously suggested in the literature, to use as a basis for a curriculum or assessment strategy.Reference Michelson and Brady 4 In fact, this study found that the knowledge expectations for EM trainees were similar to those for other practitioners who routinely interpret ECGs, such as cardiologists and internal medicine specialists.Reference Michelson and Brady 4 However, these findings do not imply that EM ECG competency should be reduced to a checklist to be mastered, an arbitrary number of ECGs to be interpreted over the course of training, or successful completion of a board examination.Reference Kadish, Buxton and Kennedy 3 , Reference Michelson and Brady 4 , Reference Govaerts 28

Defining EM competency in ECG interpretation must involve integrating ECG knowledge with the skills, judgment, and attitudes linked to the professional practice of EM.Reference Frank, Snell and Cate 29 The competency we should aim for is that EM trainees can correctly interpret ECGs (regardless of the specific diagnosis and/or findings) and can facilitate care in the context of a patient’s clinical presentation, including the provision of time-sensitive interventions, resuscitation, or safe discharge home. Most EM education does (and should) occur in the context of professional practice, as competence is highly situational and cannot be separated from practice.Reference Dall'Alba and Sandberg 30 Over the course of their residency, EM trainees routinely interpret ECGs and make management decisions based on their interpretations under the supervision of qualified EM faculty members. Studies suggest that they do this well.Reference Pines, Perina and Brady 12 , Reference Zappa, Smith and Li 31 , Reference Westdorp, Gratton and Watson 32 The value of identifying a long, comprehensive list of ECG diagnoses and/or findings is that it suggests practice-based ECG instruction in the ED may be inadequate on its own. Although trainees may encounter many of the important diagnoses during their clinical rotations in the ED, it is unlikely that each trainee will encounter all of the diagnoses identified in this study.
Given this, and given that a significant component of diagnostic reasoning is based on what a trainee has previously seen, supplemental education may be required to develop the illness scripts needed for the practice of EM.Reference Ark, Brooks and Eva 9 This supplemental education may be learner-driven, with an individual trainee using the list of diagnoses and/or findings to “gauge” their learning and undertaking self-study in areas where they perceive deficiencies. Alternatively, educators can use the list to identify components that are routinely not well addressed by practice-based ECG education and use this knowledge to guide the development of educational strategies and/or assessments.
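As a concrete illustration of how a trainee or educator might use such a list to “gauge” learning, the sketch below compares a rated list against the set of diagnoses a trainee has logged and returns the gaps, highest-priority category first. It is a hypothetical example: the item names are placeholders, not diagnoses or ratings from this study, and the function names are not part of any existing tool.

```python
from typing import Dict, List, Set, Tuple

# Priority order of the study's rating categories (lower sorts first).
PRIORITY = {"MUST": 0, "SHOULD": 1, "NICE": 2, "NOT IMPORTANT": 3}


def coverage_gaps(required: Dict[str, str], encountered: Set[str]) -> List[Tuple[str, str]]:
    """Items not yet encountered, sorted by category priority, then name."""
    gaps = [(item, cat) for item, cat in required.items() if item not in encountered]
    return sorted(gaps, key=lambda pair: (PRIORITY[pair[1]], pair[0]))


def coverage(required: Dict[str, str], encountered: Set[str]) -> float:
    """Fraction of listed items that the trainee has encountered."""
    if not required:
        return 1.0
    return sum(1 for item in required if item in encountered) / len(required)


# Hypothetical list and trainee log; names and categories are placeholders.
required = {"Item A": "MUST", "Item B": "SHOULD", "Item C": "MUST", "Item D": "NICE"}
encountered = {"Item A", "Item E"}
print(coverage_gaps(required, encountered))
print(coverage(required, encountered))
```

Here the trainee has seen one of the four listed items (coverage 0.25), and the remaining gaps are ordered Item C (MUST), Item B (SHOULD), Item D (NICE). Sorting by category is one possible design choice for prioritizing self-study or curriculum content.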

Limitations

This study had several limitations. Selection of the items for the ratings list was carried out by a single individual, although the likelihood that important diagnoses or findings were missed was minimized by having other physicians and another investigator review the list, and by allowing panel participants to add any diagnosis or finding that they felt was missing. A second limitation was the panel size of 22 participants, 16 of whom completed all three rounds of the Delphi process. Although a larger panel would have generated more data, it would also have increased the risk of losing participants to rater fatigue. We believe that, despite its small size, the panel achieved good consensus. A final limitation concerns the selection of panel participants. EM program directors were chosen because of their clinical experience and their likelihood of completing the questionnaire, given their involvement in EM education. Although the group was heterogeneous in terms of geography and training program, as program directors they could all be directly affected by the results of this study, which may have biased their willingness to participate or their individual responses. Furthermore, their concentration in academic settings may have made them more likely to rate all diagnoses as potentially important rather than focusing on what is most clinically relevant to a practicing EM physician in the community. The authors intend to repeat a similar study in different populations to further examine this possibility.

Conclusion

We have categorized adult ECG findings and diagnoses within an EM training context. These findings have potentially important applications to EM trainee education. For example, this study can serve as a needs assessment to inform the design and development of a curriculum for EM trainees’ interpretation of adult ECGs that is more attuned to the realities of Canadian EM practice, and to inform how these competencies are assessed.

Acknowledgements

We are grateful to the educators who voluntarily served on the panel of raters and agreed to be part of the TRACE (TRAinee Competence in ECG interpretation) Consortium: Kirk Magee, Dalhousie University; Daniel Brouillard, Université Laval; Pierre Desaulniers, Université de Montréal; Ian Preyra, McMaster University; Joel Turner, McGill University; Wes Palatnick, University of Manitoba; Rob Woods, University of Saskatchewan; Sandy Dong, University of Alberta; Ian Rigby, University of Calgary; Brian Chung, University of British Columbia; Richard Kohn, McGill University; Christine Richardson, University of Western Ontario; M Greidanus, University of Calgary; Jennifer Puddy, University of Calgary; Patrick Ling, University of Saskatchewan; and Amal Mattu, University of Maryland. We would like to acknowledge the following people for their help with this project: Dr. Ken Doyle (survey review) and Xiaoqing Xue (ANOVA).

Competing interests: None declared.

Supplementary material

To view supplementary material for this article, please visit http://dx.doi.org/10.1017/cem.2014.58