CLINICIAN'S CAPSULE

What is known about the topic?

Emergency department (ED) visits deemed inappropriate are often targeted to reduce ED use, but most evaluations use clinician judgment to establish appropriateness.

What did this study ask?

How reliably do clinicians characterize the appropriateness of an ED return visit when guided by standardized criteria?

What did this study find?

Despite guidance, clinicians agree poorly on appropriateness of a scheduled return pediatric ED visit.

Why does this study matter to clinicians?

Future efforts targeting “inappropriate” ED visits should not rely on clinical judgment to determine visit appropriateness.

INTRODUCTION

Pediatric emergency departments (EDs) face increasing visit volumes, often leading to overcrowding (Doan, Genuis and Yu [1]). ED overcrowding is associated with increased wait times, reduced quality of care, decreased satisfaction, and adverse health outcomes (Bond, Ospina and Blitz [2]). While delay in transfer of boarded patients is a common cause of crowding in general EDs, increased input is a primary cause in pediatric EDs (Stang, McGillivray and Bhatt [3]). As a result, efforts to mitigate ED crowding have sought to identify factors associated with “inappropriate” use (Hilditch, Scheftsik-Pedery, Swain, Dyson and Wright [4]).

Unfortunately, there is no consensus definition of ED visit appropriateness. A wide range of methods have been used to evaluate appropriateness of ED visits, but few have been validated. A 2011 systematic review of the ED literature counted 51 different approaches, including implicit criteria (patient's self-assessment or physician assessment); explicit criteria; triage score; symptoms or tests and procedures; and hospital admission. This variability highlights the complexity of defining appropriate emergency use (Durand, Gentile and Devictor [5]).

Despite widespread use of implicit clinician judgment to classify ED visit appropriateness, little is known about the reliability of this methodology. This brief report sought to measure agreement among clinician reviewers on the appropriateness of a scheduled ED return visit with and without the aid of a standardized three-question guide.

METHODS

Study design

We conducted a retrospective cohort study of all ED visits from May 1, 2012, to April 30, 2013, with a return visit within 7 days of the index visit. The study protocol was approved with waived consent by the institution's Research Ethics Board as a sub-analysis of a larger study (Doan, Goldman and Meckler [6]).

To evaluate the reliability of clinician judgment to determine visit appropriateness, we compared the determination of a primary clinician reviewer guided by survey questions against that of the index visit treating clinician. We then assessed interrater agreement among three clinician reviewers on a random subset of 90 scheduled ED return visits.

Study setting and population

We conducted this study at a tertiary pediatric ED that cares for >40,000 children aged <17 years annually. We limited the study cohort to a subset of scheduled ED return visits determined appropriate by the index visit clinician. We excluded scheduled returns for parenteral antibiotic therapy, as data from this cohort were previously published (Xu and Doan [7]).

Study protocol

The primary reviewer reviewed the entire cohort of scheduled ED return visits guided by a two-part survey and was asked to determine, using clinical judgment, whether the visit was necessary (Supplemental Online Appendix 1). Two additional blinded reviewers subsequently reviewed a random subset of 90 visits using the same survey guide and were asked to evaluate the necessity of the visit. Finally, a standardized algorithm of three dichotomous questions (did the visit result in an admission; were pediatric ED-specific diagnostic/therapeutic interventions administered; and did the visit need to be scheduled during nonoffice hours) was compared with clinical judgment regarding visit appropriateness.
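The three-question algorithm described above can be sketched in code. The source does not state how the three answers are combined, so the rule used here (a "yes" to any one question classifies the visit as appropriate) is an assumption, and the class and field names are hypothetical labels chosen for illustration only.

```python
from dataclasses import dataclass


@dataclass
class ReturnVisit:
    """Answers to the three dichotomous questions (field names are hypothetical)."""
    admitted: bool                  # did the visit result in an admission?
    ed_specific_intervention: bool  # pediatric ED-specific diagnostic/therapeutic intervention?
    needed_nonoffice_hours: bool    # did the visit need to be scheduled during nonoffice hours?


def algorithm_appropriate(visit: ReturnVisit) -> bool:
    # ASSUMPTION: any single "yes" makes the visit appropriate.
    # The combination rule is not specified in the source text.
    return (
        visit.admitted
        or visit.ed_specific_intervention
        or visit.needed_nonoffice_hours
    )


# Hypothetical visit: no admission, but an ED-specific intervention was given.
example = ReturnVisit(admitted=False, ed_specific_intervention=True,
                      needed_nonoffice_hours=False)
example_result = algorithm_appropriate(example)
```

Because every input is a yes/no answer taken from the chart, two reviewers who record the same answers necessarily reach the same classification, which is the property that distinguishes this approach from implicit judgment.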

Patient visit information sets and evaluations were created online using REDCap and exported for analysis.

Measures

The primary objective was to measure agreement on the appropriateness of the return visit, both between the primary reviewer and the index treating clinician and among all three clinician reviewers. The secondary objective was to evaluate agreement between the primary reviewer's clinical judgment and the three-question structured algorithm.

Data analysis

We used descriptive statistics to report on all outcomes as reviewed by the primary clinician reviewer. Agreement among the three reviewers was reported using Fleiss’ kappa statistic. The primary objective sample size was determined by the total number of scheduled ED return visits meeting inclusion criteria. To estimate an expected 85% agreement with ±7.5% precision at 95% confidence, 90 randomly selected visits were assessed by three reviewers. We used Microsoft Excel for Mac 2017 Version 15.4 and Stata Version 11.0 for analyses.
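The sample size reasoning above can be checked with a short calculation. This sketch assumes the standard normal-approximation formula for estimating a single proportion, n = z² p(1 − p) / d²; the source does not name the formula the authors used, and the function name is illustrative.

```python
import math
from statistics import NormalDist


def sample_size_for_proportion(p: float, half_width: float,
                               confidence: float = 0.95) -> int:
    """Smallest n giving a CI of +/- half_width around proportion p.

    ASSUMPTION: normal (Wald) approximation; not confirmed by the source.
    """
    # Two-sided critical value, about 1.96 for 95% confidence.
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return math.ceil(z ** 2 * p * (1 - p) / half_width ** 2)


# Expected agreement of 85% with +/- 7.5% precision at 95% confidence.
required_n = sample_size_for_proportion(p=0.85, half_width=0.075)
print(required_n)  # 88
```

Under this assumption roughly 88 visits are required, which is consistent with the 90 visits that were reviewed.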

RESULTS

Study population

Between May 1, 2012, and April 30, 2013, there were a total of 42,413 pediatric ED visits: 2,962 (6.98%) index visits had one or more associated returns to the ED and 669 (22.6%) were scheduled. After excluding scheduled outpatient parenteral antibiotic therapy visits, the final cohort included 207 (30.9%) index visits with 232 scheduled returns.
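Each percentage in the cohort derivation above uses the preceding count as its denominator. The following minimal check reproduces the reported figures from the counts given in the text; the variable and function names are illustrative.

```python
# Counts taken from the text above.
total_visits = 42_413        # all pediatric ED visits in the study year
index_with_return = 2_962    # index visits with one or more returns
scheduled = 669              # of those, scheduled returns
final_cohort = 207           # after excluding parenteral antibiotic visits


def pct(part: int, whole: int, digits: int = 1) -> float:
    """Percentage of `whole` represented by `part`, rounded to `digits`."""
    return round(100 * part / whole, digits)


pct_with_return = pct(index_with_return, total_visits, digits=2)  # of all visits
pct_scheduled = pct(scheduled, index_with_return)                 # of index visits with a return
pct_final = pct(final_cohort, scheduled)                          # of scheduled returns

print(pct_with_return, pct_scheduled, pct_final)  # 6.98 22.6 30.9
```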

The cohort's mean age was 4.6 years (95% confidence interval [CI], 3.3–5.9) and 54.1% were male. More than 75% were triaged Canadian Triage and Acuity Scale (CTAS) 3 or 4.

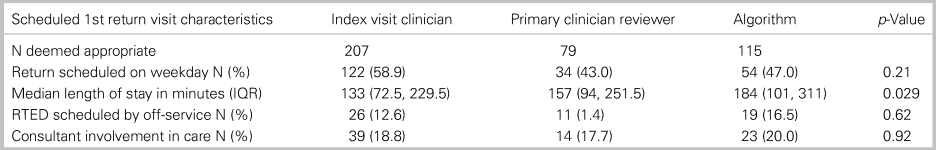

Appropriateness using clinical judgment

Among the 207 index visits associated with at least one scheduled ED return visit, the primary reviewer identified 79 (38.2%, 95% CI, 32.6–45.2%) as appropriate using clinical judgment (Table 1). For the subset of 90 cases evaluated by the two additional reviewers, there was 67.4% (95% CI, 60.4–74.4%) agreement among reviewers with a Fleiss’ kappa of 0.30 (95% CI, 0.17–0.44).
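For readers unfamiliar with the statistic reported above, Fleiss' kappa compares the observed agreement among a fixed number of raters with the agreement expected by chance. The sketch below implements the standard formula in plain Python; the ratings are hypothetical toy data and not the study data, and the authors' own analysis was run in Stata.

```python
def fleiss_kappa(counts):
    """Fleiss' kappa for a table where counts[i][j] is the number of
    raters who assigned subject i to category j.

    Every subject must be rated by the same number of raters.
    """
    n_subjects = len(counts)
    n_raters = sum(counts[0])
    if any(sum(row) != n_raters for row in counts):
        raise ValueError("each subject needs the same number of ratings")

    # Observed agreement: mean proportion of agreeing rater pairs per subject.
    p_bar = sum(
        (sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in counts
    ) / n_subjects

    # Chance agreement: sum of squared overall category proportions.
    n_categories = len(counts[0])
    p_e = sum(
        (sum(row[j] for row in counts) / (n_subjects * n_raters)) ** 2
        for j in range(n_categories)
    )
    return (p_bar - p_e) / (1 - p_e)


# HYPOTHETICAL data: 4 visits, 3 reviewers, columns = [appropriate, not appropriate].
toy_counts = [[3, 0], [0, 3], [2, 1], [1, 2]]
kappa = fleiss_kappa(toy_counts)
print(round(kappa, 2))  # 0.33
```

In this toy table the reviewers agree unanimously on only half of the visits, and with both categories used equally often the chance-corrected agreement is low even though many individual rater pairs agree.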

Table 1. Visit characteristics for scheduled first ED return visits judged by clinician as appropriate, stratified by method of determination (index visit clinician, primary clinician reviewer, and algorithm)

IQR = interquartile range; RTED = return to ED.

Appropriateness using standardized algorithm

The three-question standardized algorithm based on the primary reviewer's survey responses identified 115/207 (55.6%) return visits as appropriate (Supplemental Online Appendix 2). Agreement among the three reviewers increased to 83.7% (95% CI, 77.7–89.7%), with a Fleiss’ kappa of 0.67 (95% CI, 0.55–0.79), using the algorithm.

DISCUSSION

In our pediatric ED cohort of scheduled ED return visits, we found poor reliability of clinician judgment to determine visit appropriateness, despite guidance from survey questions highlighting specific patient and visit characteristics. Although pediatric emergency clinicians surveyed in 2005 agreed that implicit or physician chart review was the most effective way to evaluate visit appropriateness, our results suggest that clinician judgment is unreliable (Brousseau, Mistry and Alessandrini [8]).

Our findings were consistent with earlier studies of clinician judgment in adult emergency visits. O'Brien and colleagues compared the use of triage complaint, 10 explicit criteria, and consensus of two ED clinicians (O'Brien, Shapiro, Woolard, O'Sullivan and Stein [9]). Kappas ranged from fair to moderate: triage and explicit, 0.39; triage and physicians, 0.42; and explicit and physicians, 0.42; agreement between the two emergency physicians, 0.4 (O'Brien, Shapiro, Woolard, O'Sullivan and Stein [9]).

No previous studies have reported interrater reliability for clinician assessment of ED visit appropriateness in the pediatric population. De Angelis described the development of a set of criteria based primarily on diagnoses and complaints applied by pediatricians to determine appropriateness of ED visits made by patients in their practice (Deangelis, Fosarelli and Duggan [10]). Neither the original study nor subsequent publications using these criteria, however, have reported criteria reliability or validity (Deangelis, Fosarelli and Duggan [10]).

While our study did not use validated explicit criteria, we found an increase in agreement when limiting reviewers’ input to three objective criteria, which improved agreement from 32% to 52% over clinical judgment alone. These results further support the use of explicit rather than implicit approaches in future quality improvement or research.

Limitations

This retrospective review used abstracted data from the medical chart, and limited detail may have contributed to the poor agreement between reviewers and the index treating clinician. Our algorithm is neither validated nor intended as a gold standard for visit appropriateness; rather, it was used to compare a structured approach using dichotomous questions with clinical judgment. Finally, our cohort of scheduled ED return visits at a single center was not meant to define appropriate visits and may not be generalizable. This should not affect the primary objective, which was to measure agreement among clinician reviewers using clinical judgment of appropriateness.

CONCLUSIONS

Although increasing visit volumes contribute to pediatric ED crowding, and unnecessary visits have been the subject of prior efforts to mitigate overcrowding, our results demonstrated poor reliability of clinician judgment to determine appropriateness of ED visits. Given the complexities in defining visit appropriateness and poor reliability of clinician judgment, future projects targeting appropriateness of ED utilization should favor objective or explicit criteria rather than clinician judgment alone.

Supplemental material

The supplemental material for this article can be found at https://doi.org/10.1017/cem.2019.473.

Acknowledgments

The authors acknowledge Karly Stillwell, the divisional research coordinator, for her generous assistance in this study.

Competing interests

The authors have no conflicts of interest to disclose.