Introduction

Populations of the eastern spruce budworm, Choristoneura fumiferana (Clemens) (Lepidoptera: Tortricidae), erupt periodically and can cause widespread defoliation of spruce-fir stands across northern North America. Although pathogens can play a key role in driving forest insect population cycles (Myers 1993), their importance in spruce budworm outbreaks is less evident. Pathogens causing lethal infections, such as viruses, bacteria, and fungi, typically occur at low frequencies or in isolated epizootics and are believed to have generally low or, at best, localised population impacts (Neilson 1963; Vandenberg and Soper 1978; Strongman et al. 1997; Lucarotti et al. 2004). In contrast, microsporidiosis caused by infection with Nosema fumiferanae (Thomson) (Microsporidia: Nosematidae) is a widespread and highly prominent disease in high-density populations. This intracellular parasite is transmitted both horizontally (larva to larva) and vertically (female to offspring) (Thomson 1958a; Wilson 1982; Campbell et al. 2007; van Frankenhuyzen et al. 2007) and commonly reaches high prevalence during the collapsing phase of an outbreak (Thomson 1960; Wilson 1977, 1987; Eveleigh et al. 2012). Analysis of spruce budworm population cycles led Royama (1984) to suggest that N. fumiferanae may contribute to the oscillations as part of an unidentified complex of mortality agents that, in concert with parasitism, acts as the primary driver.
Subsequent analyses of population trends have not produced evidence to support such a role (e.g., Régnière and Nealis 2007, 2008), and recent studies have shown that population impacts of infection on generation survival are limited (Eveleigh et al. 2012).

Infection by N. fumiferanae is known to negatively affect processes throughout the budworm’s life cycle, from early-instar survival to female longevity and fecundity (Thomson 1958a, 1958b; Bauer and Nordin 1989; van Frankenhuyzen et al. 2007). Even at high infection intensities these effects have been shown to be subtle, and they are unlikely to exert a strong regulatory influence on their own (Régnière 1984). However, microsporidiosis may act in concert with other factors that affect budworm survival and recruitment in older outbreaks. We postulate that defoliation of the host tree is one such factor. Host-plant feedbacks mediated by defoliation and foliage depletion strongly influence generation survival and fecundity throughout the decline of an outbreak (Blais 1953; Nealis and Régnière 2004; Régnière and Nealis 2007, 2008). Analysis of long-term population trends in collapsing outbreaks provides some evidence that the effects of N. fumiferanae infection on fecundity are modulated by foliage depletion (Nealis and Régnière 2004). Depletion of the current year’s foliage at high larval densities forces late instars to feed on old (previous years’) needles, commonly referred to as back-feeding, especially on balsam fir (Abies balsamea Linnaeus; Pinaceae). Because old foliage is of suboptimal nutritional quality (Carisey and Bauce 1997), back-feeding has subtle deleterious effects on larval survival, development, pupal weight, and fecundity (Blais 1952, 1953) that are not unlike those resulting from N. fumiferanae infection.
Microsporidiosis and back-feeding are likely to occur together in high-density populations and, if they interact, could affect generation survival and recruitment to a much greater extent than either factor alone. In this paper we report the results of two laboratory experiments conducted to test the hypothesis that the adverse effects of microsporidiosis and back-feeding exacerbate each other.

Materials and methods

Study organisms

Microsporidia-free, second-instar C. fumiferana were obtained from the Insect Production Unit at the Great Lakes Forestry Centre (GLFC), Sault Ste. Marie, Ontario, Canada, and reared on artificial diet without antimicrobial agents (Grisdale 1984).

Spores of N. fumiferanae were obtained from infected larvae collected in an infestation near Sault Ste. Marie in 2008. The larvae were homogenised, and spores were harvested by repeated centrifugation and stored in 50% glycerol in liquid nitrogen. Before Nosema-free, laboratory-reared larvae were infected, the glycerol was removed from the thawed suspension by repeated centrifugation (3×15 minutes, 3200 rpm), and the spore concentration was determined by direct counting with a haemocytometer. The spore suspension was then diluted with distilled water to a stock suspension of 1×10⁷ spores per mL. Spore burdens in larvae, pupae, and adult females throughout this study were estimated by direct counting of 10-µL aliquots from 0.5-mL distilled-water homogenates, using a haemocytometer and a phase-contrast microscope. The detection threshold for this technique is ~2.5×10⁴ spores per last instar, pupa, or adult.
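The concentration arithmetic behind the spore counts and the stock dilution can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' protocol: the function names are hypothetical, and the volume of suspension actually counted on the haemocytometer is not reported. Back-calculating from the figures given (0.5-mL homogenate, ~2.5×10⁴ spores detection threshold), one spore seen in an assumed counted volume of 0.02 µL corresponds to that threshold.

```python
def spores_per_individual(mean_count: float, counted_volume_ul: float,
                          homogenate_ml: float = 0.5) -> float:
    """Estimate total spores in one homogenised larva, pupa or adult.

    mean_count        -- spores counted over the examined grid area
    counted_volume_ul -- suspension volume over that grid area, in µL (assumed)
    homogenate_ml     -- volume of the homogenate in mL (0.5 mL in this study)
    """
    spores_per_ml = mean_count / counted_volume_ul * 1000.0  # 1 mL = 1000 µL
    return spores_per_ml * homogenate_ml


def stock_volume_ml(measured_per_ml: float, target_per_ml: float,
                    final_ml: float) -> float:
    """Volume of counted suspension to make up to final_ml with water (C1*V1 = C2*V2)."""
    if target_per_ml > measured_per_ml:
        raise ValueError("suspension is already below the target concentration")
    return target_per_ml * final_ml / measured_per_ml


# One spore over an assumed 0.02 µL counted volume in a 0.5-mL homogenate
print(spores_per_individual(1, 0.02))    # 25000.0 spores per individual
# Hypothetical counted suspension of 4e8 spores/mL made up to 10 mL of a 1e7 stock
print(stock_volume_ml(4e8, 1e7, 10.0))   # 0.25 (mL of suspension, plus 9.75 mL water)
```

The 4×10⁸ spores per mL figure in the usage lines is a placeholder; only the 1×10⁷ target comes from the text.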

Production of vertically infected larvae

Infecting early instars by spreading spores on the surface of their artificial diet often results in low and variable levels of infection in late instars (K.v.F., personal observations). The reason is that N. fumiferanae has a 10-day to 15-day incubation period in spruce budworm larvae (Campbell et al. 2007), so the 2–3 weeks between spore ingestion and pupation allow only limited proliferation of the pathogen. Because transmission of N. fumiferanae from mother to offspring is highly efficient in spruce budworm (van Frankenhuyzen et al. 2007), this problem was avoided by using maternally infected larvae.

Colonies of infected and uninfected larvae were established as follows. For each of the two experiments, ~2000 larvae from one rearing cohort were infected by spreading 0.1 mL of the stock spore suspension on the surface of artificial diet in 25-mL creamer cups, each of which then received 20–25 early-third instars. Another ~2000 larvae from the same rearing cohort were placed in cups without spores. After nine days of feeding, larvae were transferred to Nosema-free diet and reared to the adult stage. Single-pair matings were conducted following the procedure of Stehr (1954). Upon egg hatch, first instars were allowed to spin hibernacula in cheesecloth on Parafilm and were placed at 4 °C to enter diapause as second instars. Upon completion of a 24-week diapause, offspring were pooled and reared at a density of 20–30 larvae per 25-mL diet cup until the peak-fourth instar, and at five larvae per cup thereafter, until they reached the first day of the sixth stadium, which was timed to coincide with the natural flush of balsam fir buds.

Maternal spore burden (number of spores per moth) was used to select infected families for production of larval test populations after completion of diapause. Females whose offspring were used in experiment 1 carried on average 1.1±0.3×10⁶ spores per moth (mean±SE, n=50). In experiment 2, the average intensity of maternal infection was increased to 5.3±1.9×10⁷ spores per moth (n=36) by rearing larvae through a second generation after the initial infection.

Experimental design

Interaction between infection and back-feeding was investigated in two consecutive experiments designed to address different objectives (see below). Both experiments were conducted in June (2010 and 2011), when balsam fir branch tips with naturally flushed buds were available from ~15-year-old trees growing on the GLFC site. Branch tips were collected when the buds had fully flushed and were stored at 8 °C for a few days until a sufficient number of one-day-old sixth instars were available from both the infected and uninfected colonies. Infected and uninfected control larvae were allocated to two feeding regimes: in one treatment, feeding on previous years’ needles was forced by removing all current year’s growth from the tips (back-feeding), while in the other treatment current year’s growth was left in place (regular-feeding). Cups in both experiments were maintained at 21 °C under a 16:8 h light:dark photoperiod and were checked daily to replace foliage as needed until pupation. One-day-old pupae were sexed and weighed and, in experiment 1, homogenised for determination of spore burdens. Effects of infection, back-feeding, and their interaction were examined using the following response variables: larval survival (from day 1 of instar 6 to pupation), larval development time (number of days to reach pupation), and pupal mass (fresh weight of one-day-old pupae).

A key objective of experiment 1 was to examine effects of Nosema infection on growth and development as a function of individual spore burdens in one-day-old surviving pupae. This required rearing infected larvae individually to avoid horizontal transmission. A total of 186 uninfected control larvae and 369 infected larvae were divided between the two feeding treatments and reared individually in 75-mL plastic cups until pupation. To obtain the broadest possible range of infection intensities in the test population, we used offspring from all F1 females, including those with spore burdens below the direct-counting detection threshold. As a result, ~40% of the females had spore burdens below the threshold for transmission of disease to 100% of their offspring (5×10⁵ spores per moth; van Frankenhuyzen et al. 2007), which was reflected in a high proportion of surviving pupae with zero spore counts (160 of 340 survivors). Pupae with zero spore counts were omitted from the analysis because of their uncertain infection status. In anticipation of this reduction, the initial sample size of infected larvae was doubled relative to the uninfected controls.
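The two thresholds that governed family selection can be expressed as a simple classification of maternal spore burden. The sketch below is illustrative only: the two constants come from the text (the ~2.5×10⁴ detection threshold of direct counting and the 5×10⁵ spores per moth needed for transmission to all offspring), while the function names and category labels are hypothetical.

```python
DETECTION_LIMIT = 2.5e4     # spores per individual, direct haemocytometer counting
FULL_TRANSMISSION = 5e5     # spores per moth for transmission to 100% of offspring


def maternal_class(spores_per_moth: float) -> str:
    """Classify a female by her spore burden (category labels are hypothetical)."""
    if spores_per_moth < DETECTION_LIMIT:
        return "below detection"
    if spores_per_moth < FULL_TRANSMISSION:
        return "detectable, transmission possibly incomplete"
    return "complete transmission expected"


def fraction_below(burdens: list[float], threshold: float = FULL_TRANSMISSION) -> float:
    """Share of females whose spore burden falls below a threshold."""
    return sum(b < threshold for b in burdens) / len(burdens)
```

A female scored "below detection" may still be infected, which is why offspring from such families can yield pupae of uncertain status. The experiment 2 cutoff of 2×10⁶ spores per moth lies well inside the last category.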

In experiment 2, the main objective was to investigate interactive effects at a high intensity of infection. F2 females selected for production of infected test larvae had spore burdens above 2×10⁶ spores per moth, which was sufficiently high to ensure complete transmission from mothers to offspring. This was confirmed on a subsample of larvae taken from the pool of infected larvae before the experiment was set up, all of which were infected (mean: 6.4±0.9×10⁶ spores per early-fifth instar, n=49). A total of 120 uninfected and 160 infected larvae were divided between the two feeding treatments and, for efficiency, reared in groups of 10 larvae per 300-mL cup (Dixie D8, Georgia Pacific Canada Consumer Products Inc., Brampton, Ontario, Canada). Final larval numbers in the various treatments were determined by availability and other logistical constraints.

Data analysis

Statistical analyses were conducted with Minitab (release 16.0). Main effects (feeding regime, infection, gender) and their interactions on pupal mass and larval development time were tested in a three-way analysis of variance (general linear model procedure) using type III sums of squares. Means are reported as least-squares means±SE and were compared where needed using Tukey’s test (P<0.05). The contribution of infection intensity in survivors with detectable spore burdens (experiment 1) was examined by regression analysis (general regression procedure) with Ln(number of spores per pupa) as an additional predictor. Survival data were analysed using binary logistic regression.
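The experiment 1 regression, with feeding regime as a factor and Ln(spores per pupa) as a covariate and no interaction term, amounts to fitting two parallel lines. A minimal least-squares sketch in plain Python follows. It is not Minitab's general regression procedure: it omits the Box-Cox transformation of development time and reports no standard errors or F tests, and the function names and the 0/1 coding of back-feeding are assumptions.

```python
import math


def solve_linear(a: list[list[float]], b: list[float]) -> list[float]:
    """Solve a small square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular design: both feeding groups and a spread of burdens are needed")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back-substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_parallel_lines(y: list[float], back_feeding: list[int],
                       spores: list[float]) -> tuple[float, ...]:
    """Least-squares fit of y = b0 + b1*back_feeding + b2*ln(spores).

    back_feeding is coded 0 (regular-feeding) or 1 (back-feeding). With no
    interaction term both regimes share the slope b2 (parallel lines), and b1
    is the difference between their intercepts.
    """
    rows = [[1.0, float(bf), math.log(s)] for bf, s in zip(back_feeding, spores)]
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(3)]
    return tuple(solve_linear(xtx, xty))
```

Zero spore counts must be excluded before fitting, as they were in the study, because ln(0) is undefined.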

Results and discussion

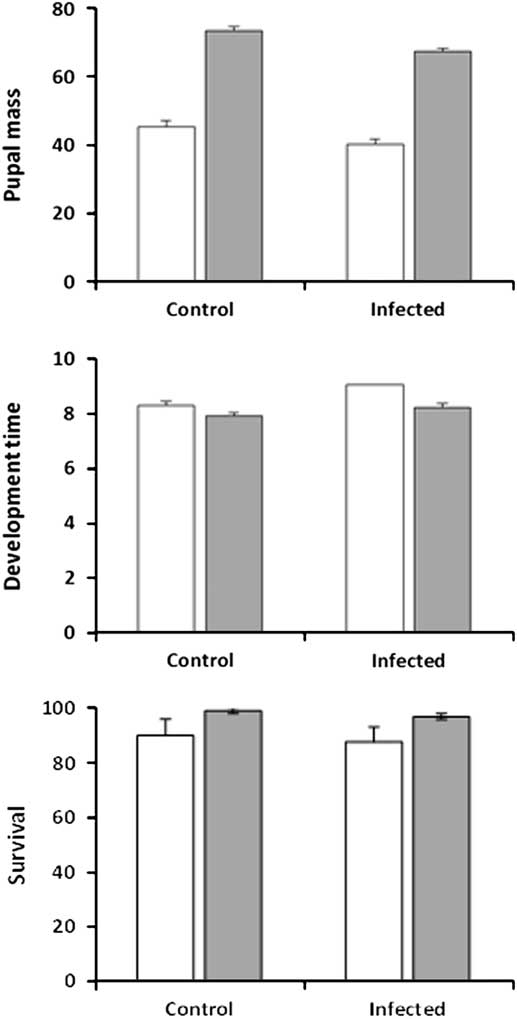

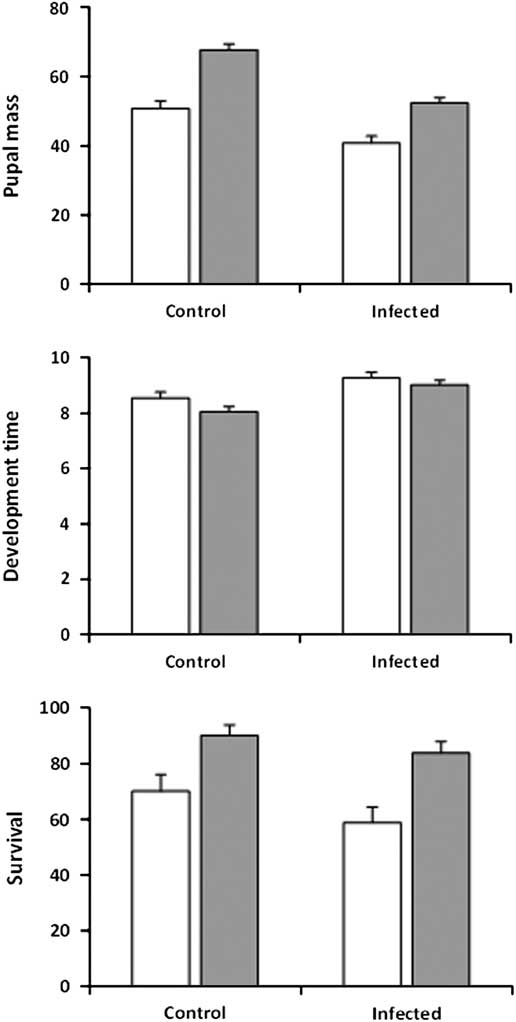

In experiment 1, pupal mass was significantly affected by the three main effects of infection, feeding regime, and larval sex, but not by any of their interactions (Table 1; Fig. 1, top). Feeding regime had the largest impact on pupal mass: larvae in the regular-feeding treatment produced pupae that were on average 65% heavier than those of larvae forced to feed on old foliage (regular-feeding: 70.4 mg±1.10, n=204; back-feeding: 42.7±1.19, n=152). In comparison, infection had a marginal effect on pupal mass, with uninfected control larvae producing pupae that were on average only 10% heavier than pupae produced by infected larvae (control: 59.4±1.17, n=176; infected: 53.8±1.13, n=180). The effect of infection was also outweighed by a between-gender difference, as females were 22% heavier than males (female: 62.2±1.49, n=59; male: 50.9±0.65, n=297). The three main effects were also significant in the analysis of development time (number of days to pupation), again with no significant interactions (Table 1; Fig. 1, middle). Gender had a larger effect on development time than did infection or feeding regime. Female larvae took 15% longer to develop than male larvae (8.9±0.10 versus 7.7±0.04 days), compared with only a 5–6% increase in infected larvae over control larvae (8.6±0.08 versus 8.1±0.09) and in larvae in the back-feeding treatment over larvae in the regular-feeding treatment (8.6±0.09 versus 8.1±0.08). Survival to the pupal stage was significantly affected by feeding regime (n=386; z=−2.23; P=0.025), but the effect was small (regular-feeding: 98.0%±0.95, n=208; back-feeding: 85.4%±2.65, n=178) (Fig. 1, bottom). Infection was not a significant predictor of larval survival (z=−0.84; P=0.399), with infected and control larvae having similar survival on average (infected: 90.0%±2.13, n=200; control: 94.6%±1.66, n=186) (Fig. 1, bottom). The interaction between infection and feeding regime was not significant (z=0.19; P=0.847).
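The percentages in this paragraph are ratios of least-squares means expressed relative to the smaller mean. The short check below recomputes the experiment 1 pupal-mass comparisons from the means quoted above; the helper name is hypothetical.

```python
def percent_heavier(a: float, b: float) -> float:
    """Percentage by which mean a exceeds mean b, relative to b."""
    return (a / b - 1.0) * 100.0


# Least-squares means of pupal mass (mg), experiment 1
comparisons = {
    "regular-feeding vs back-feeding": (70.4, 42.7),
    "control vs infected": (59.4, 53.8),
    "female vs male": (62.2, 50.9),
}
for label, (a, b) in comparisons.items():
    print(f"{label}: {percent_heavier(a, b):.0f}% heavier")
# prints 65%, 10% and 22% heavier, respectively

# The same feeding-regime difference expressed relative to the larger mean
print(f"{(1.0 - 42.7 / 70.4) * 100.0:.0f}% lighter")  # 39% lighter
```

The choice of denominator matters: the same difference reads as a 65% increase or a 39% reduction depending on which mean serves as the reference.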

Fig. 1 Pupal mass (mg, top), development time (days to onset of pupation, middle), and survival to pupation (%, bottom) for sixth-instar spruce budworms that were not infected (control) or infected by Nosema fumiferanae at low intensity (infected) when feeding on current-year foliage (regular-feeding, grey bars) or previous-year foliage (back-feeding, open bars).

Table 1 Main effects of Nosema infection (infection: infected versus control), feeding treatment (feeding: back-feeding versus regular-feeding), gender (sex: males versus females) and their interactions on mass of one-day-old pupae and on development time from the first day of the sixth larval stage to the onset of pupation in experiments 1 and 2.

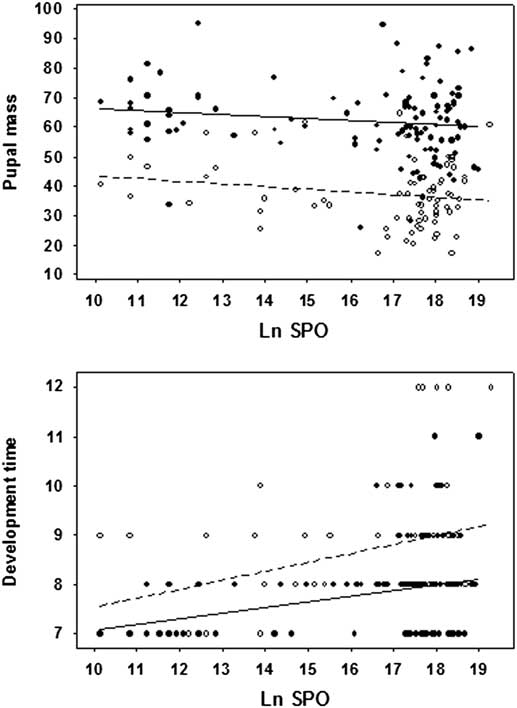

Spore burdens in survivors in the infected treatment ranged from the detection limit of 2.5×10⁴ spores to a maximum of 1.8×10⁸, with an average burden of 4.7±0.3×10⁷ spores per pupa (n=180). Spore burden alone was a significant predictor (F⩾5.3, P⩽0.022) but accounted for only a small proportion of the total variation in pupal mass and development time (2.3% and 12.5%, respectively). Spore burden contributed more strongly to the prediction of development time and pupal mass when feeding regime was included as a factor and non-significant interactions were removed; the resulting regression models (Table 2) accounted for 51.5% and 28.9% of the total variation, respectively. Pupal mass declined and development time increased linearly with Ln(spore burden), in parallel in both feeding treatments (Fig. 2). Significant effects of feeding regime (F>37.4, P<0.001) indicate that the regression lines for the back-feeding and regular-feeding treatments had different intercepts, while the absence of interactions between spore burden and feeding regime (F⩽1.44, P⩾0.230) indicates that the slopes did not differ. The effect of spore burden was limited: an increase of nearly four orders of magnitude, from ~2.5×10⁴ to 1.8×10⁸ spores per pupa, resulted in a predicted decrease in pupal mass of only 10–17% and a similarly small predicted delay in larval development of 15–17%.
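Because Ln(spores per pupa) enters the models linearly, the predicted effect across the observed range is the fitted slope multiplied by the width of that range in natural-log units. The arithmetic below spells out that width for the range quoted in this paragraph; the slope argument is a placeholder rather than a value from Table 2, and the names are illustrative.

```python
import math

LOW, HIGH = 2.5e4, 1.8e8   # spores per pupa: detection limit and observed maximum

fold = HIGH / LOW
orders_of_magnitude = math.log10(fold)
ln_units = math.log(fold)  # width of the range on the LnSpo scale used as predictor

print(f"{fold:.0f}-fold, {orders_of_magnitude:.2f} orders of magnitude, {ln_units:.2f} LnSpo units")
# 7200-fold, 3.86 orders of magnitude, 8.88 LnSpo units


def predicted_change(slope_per_ln_unit: float) -> float:
    """Change in the response across the full burden range for a given LnSpo slope.

    The slope passed in is a placeholder; the fitted coefficients are those of Table 2.
    """
    return slope_per_ln_unit * ln_units
```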

Fig. 2 Pupal mass (mg, top) and development time (days to onset of pupation, bottom) for sixth-instar spruce budworms feeding on current-year foliage (regular-feeding, closed symbols) or previous-year foliage (back-feeding, open symbols) in relation to the burden of Nosema fumiferanae spores in surviving pupae. Ln Spo=Ln (number of spores per pupa).

Table 2 Larval development time and pupal mass as a function of feeding regime (feeding, as in Table 1) and spore burden (LnSpo).

Notes:

* Box-Cox transformed number of days.

≅ Ln (number of spores per pupa).
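Development time in Table 2 was Box-Cox transformed, but the power parameter (λ) is not reported. For reference, the standard Box-Cox family and its inverse are sketched below; any λ passed to these functions is hypothetical. Some software uses the unscaled power y^λ instead. The two forms differ only by a linear rescaling, so a linear regression on either gives the same R² and test statistics.

```python
import math


def box_cox(y: float, lam: float) -> float:
    """Box-Cox transform of a positive value y with power parameter lam."""
    if y <= 0:
        raise ValueError("Box-Cox requires positive values")
    if lam == 0:
        return math.log(y)
    return (y ** lam - 1.0) / lam


def inverse_box_cox(w: float, lam: float) -> float:
    """Back-transform a value on the Box-Cox scale to the original units (days)."""
    if lam == 0:
        return math.exp(w)
    return (lam * w + 1.0) ** (1.0 / lam)
```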

Results obtained at the higher infection intensity in experiment 2 were comparable, in that both pupal mass and development time were significantly affected by infection, feeding regime, and sex, with no significant interactions (Table 1; Fig. 3, top and middle). Infection had a larger, and feeding regime a smaller, effect on pupal mass than in experiment 1. Uninfected control pupae were on average 27% heavier than infected pupae (control: 59.2 mg±1.34, n=96; infected: 46.5±1.28, n=114), while pupae from larvae in the regular-feeding treatment were 31% heavier than pupae from larvae forced to feed on old foliage (regular-feeding: 60.0 mg±1.19, n=121; back-feeding: 45.8±1.42, n=89). Female pupae were on average 35% heavier than males (female: 60.7 mg±1.33, n=104; male: 45.1±1.29, n=106), and female larvae took 17% longer to reach the pupal stage than males (9.4±0.13 versus 8.0±0.13 days). The effect of infection on development time was also more pronounced than in experiment 1, with infected larvae taking 10% longer to develop to the pupal stage than uninfected control larvae (9.1±0.12 versus 8.3±0.13 days). As in experiment 1, larvae in the back-feeding treatment developed about 5% more slowly than larvae in the regular-feeding treatment (8.9±0.14 versus 8.5±0.12 days). Although survival of infected larvae to the pupal stage was lower than that of uninfected control larvae (infected: 71.2%±3.5, n=160; control: 80.0%±3.6, n=120) (Fig. 3, bottom), the effect was not significant (z=−1.06; P=0.290). Feeding regime did have a significant effect on survival (z=−2.62; P=0.009), with larvae in the back-feeding treatment having lower survival (regular-feeding: 86.4%±2.90, n=140; back-feeding: 63.6%±4.08, n=140) (Fig. 3, bottom). The interaction between infection and feeding regime was not significant (z=0.10; P=0.920).
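The P values reported for the survival analyses can be recovered from the z statistics, assuming these are Wald statistics for the logistic-regression coefficients referred to a standard normal distribution. A short check follows. Because z is reported to two decimals, the recomputed P values may differ from the reported ones in the third decimal.

```python
import math


def two_sided_p(z: float) -> float:
    """Two-sided P value for a z statistic: 2 * (1 - Phi(|z|)) = erfc(|z| / sqrt(2))."""
    return math.erfc(abs(z) / math.sqrt(2.0))


# z statistics reported for survival to pupation in experiment 2
for term, z in [("infection", -1.06), ("feeding regime", -2.62), ("interaction", 0.10)]:
    print(f"{term}: z = {z:+.2f}, P = {two_sided_p(z):.3f}")
```

The same function applies to the experiment 1 survival statistics.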

Fig. 3 Pupal mass (mg, top), development time (days to onset of pupation, middle), and survival to pupation (%, bottom) for sixth-instar spruce budworms that were not infected (control) or infected by Nosema fumiferanae at high intensity (infected) when feeding on current-year foliage (regular-feeding, grey bars) or previous-year foliage (back-feeding, open bars).

Results of our study confirm conclusions from previous studies that microsporidiosis and feeding on the previous year’s foliage affect budworm fitness in a similar fashion, and that both cause relatively small reductions in survival and pupal mass and small increases in larval development time. Effects of infection were more pronounced at higher infection intensities, but deleterious effects were small even at very high individual spore burdens. Our study adds the new insight that infection and back-feeding do not exacerbate each other but act in a simple, additive fashion. For each of the three response variables, the sum of the changes due to infection and to back-feeding was similar to the difference observed between uninfected larvae in the regular-feeding treatment and infected larvae in the back-feeding treatment (Table 3). The lack of interaction between back-feeding and Nosema infection was consistent across the range of experimental conditions represented by the two experiments, including differences in rearing conditions (singly or in groups), infection intensity, and an unintended gender bias in the selection of test larvae in experiment 1, suggesting that the absence of interaction is robust. Infection intensities in our test populations were well within the range reported for females from outbreak populations (Wilson 1987; Eveleigh et al. 2007; van Frankenhuyzen et al. 2007), suggesting that the absence of interaction between back-feeding and Nosema infection observed in the laboratory is likely to be relevant to field populations.

Table 3 Additivity of sublethal effects of Nosema infection and back-feeding on spruce budworm in experiments 1 and 2.

Notes: Magnitude of effects is calculated as % increase (+) or decrease (−) in mean response variable relative to uninfected-control or regular-feeding treatment.

* Infected versus control larvae in regular-feeding treatment.

≅ Control larvae in back-feeding versus regular-feeding treatment.

⊥ Infected larvae in back-feeding treatment versus control larvae in regular-feeding treatment.
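The additivity check summarised in Table 3 compares the combined effect with the sum of the separate effects. As defined in the notes, each effect is a percentage change relative to control larvae in the regular-feeding treatment. A minimal sketch follows. The function names are hypothetical, and because the underlying cell means are not restated in the text, any numbers passed to it are placeholders.

```python
def pct_change(mean: float, reference: float) -> float:
    """Percent increase (+) or decrease (-) of a treatment mean relative to a reference mean."""
    return (mean - reference) / reference * 100.0


def additivity(control_regular: float, infected_regular: float,
               control_back: float, infected_back: float) -> dict[str, float]:
    """Separate, summed and combined effects following the Table 3 notes.

    All effects are relative to control larvae in the regular-feeding treatment.
    """
    infection = pct_change(infected_regular, control_regular)   # infected vs control, regular-feeding
    back_feeding = pct_change(control_back, control_regular)    # control larvae, back- vs regular-feeding
    combined = pct_change(infected_back, control_regular)       # infected back-feeding vs control regular
    return {
        "infection": infection,
        "back_feeding": back_feeding,
        "sum_of_effects": infection + back_feeding,
        "combined": combined,
    }
```

Using one common reference mean keeps all three percentages on the same scale, so the summed and combined effects can be compared directly.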

The data presented here thus clearly refute our hypothesis that infection by N. fumiferanae and forced feeding on the previous year’s foliage by late instars interact in affecting spruce budworm recruitment in older outbreak populations. The role of N. fumiferanae in spruce budworm outbreak dynamics therefore remains enigmatic. Although the pathogen builds to high prevalence in the course of an outbreak, the deleterious effects of infection on survival and fitness are relatively small and apparently not exacerbated by the similar effects of concurrent back-feeding. It is possible that N. fumiferanae infection interacts with foliage depletion through mechanisms other than back-feeding, such as feedbacks involving flower production (van Frankenhuyzen et al. 2011), early-instar dispersal (van Frankenhuyzen et al. 2007; Régnière and Nealis 2008), or moth dispersal (Eveleigh et al. 2007). Such interactions require further investigation.

Acknowledgements

This study was funded by the Canadian Forest Service under the integrated pest management project.