1. Introduction

Since the launch of ChatGPT in late 2022, generative artificial intelligence (AI) systems have garnered global attention at an unprecedented level. Although US tech companies are generally regarded as “first-movers,” especially in rolling out different commercial and industry applications of such technology, the rapid development and deployment of generative AI systems based on advanced large language models (LLMs) have extended beyond Silicon Valley. As a significant player in AI globally, China has also seen the mushrooming of generative AI applications for public consumption, including Baidu’s “Wenxin Yiyan,” Huawei’s “Pangu,” Tencent’s “Hunyuan Assistant,” Alibaba’s “Tongyi Qianwen,” SenseTime’s “Ririxin” and ByteDance’s “Dou Bao.”

The swift rise of new LLMs poses a range of risks and challenges, which have led to calls for strengthening AI regulation around the world. Two types of regulatory approaches have emerged. One approach favours a lighter-touch regulatory path. The other approach, perhaps most exemplified by the EU’s AI Act, is a more stringent approach that advocates for dedicated regulatory bodies and requires riskier AI applications to be subject to rigorous testing and pre-market approval processes. Some commentators anticipate that, just as the EU’s General Data Protection Regulation has had a global impact, the AI Act is likely to extend the so-called “Brussels effect” to the field of AI regulation (Rotenberg & Hickok, 2022).

While the AI Act has garnered significant global attention, China’s AI regulations have received comparatively little notice. This is despite China being, in 2023 (before the passage of the AI Act), the “first country to implement detailed, binding regulations on some of the most common applications of AI” (Sheehan, 2024). Because China is a leading AI power, its regulatory approach could significantly influence global AI governance trends and shape international standards. Our paper aims to address this knowledge gap by critically examining China’s approach to regulating generative AI to date. We focus on the Interim Measures for the Management of Generative Artificial Intelligence Services (“the Measures”) issued in July 2023 by several ministries and departments under the State Council, the executive arm of the Chinese state.

According to Article 2 of the Measures, their regulatory scope covers services that provide generated text, images, audio, video, and other content to the public within the territory of China. The Measures do not cover research and development (R&D) or enterprise and industry applications that do not directly provide services to the public. The regulation touches on a range of risks associated with the application of generative AI, including content security, personal data protection, data security and intellectual property violations, among others. It also introduces a multi-tiered system of obligations on providers of generative AI services to mitigate such risks.

At the time of their introduction in July 2023, the Measures were the first legally binding instrument in the world specifically aimed at regulating generative AI. Some have described this regulation as reflecting a “vertical approach” to AI regulation (Jia et al., 2023). Such an approach seeks to address specific risks arising from particular types of AI technologies. In contrast, a “horizontal approach,” which is largely reflected in the EU’s AI Act, encompasses comprehensive and overarching regulations that apply broadly to AI systems (Shaughnessy & Sheehan, 2023). Moreover, China’s approach has been described as “highly reactive” and “adaptive,” with regulatory bodies swiftly responding to sudden changes and uncertainties (Migliorini, 2024; A. H. Zhang, 2022). Over the past decade, China’s cyber laws and regulations have reflected a dual strategy of maintaining control over a variety of risks emerging from a rapidly advancing technological landscape, while simultaneously fostering development and innovation within that same environment.

To contextualise the Measures, section 2 of this article provides an overview of China’s approach to regulating AI to date. Section 3 examines the Measures’ underlying policy motivations related to AI development and security. Section 4 sheds light on the key provisions of the Measures, focusing on the principal obligations on generative AI service providers to safeguard information content security. Section 5 addresses the remaining challenges in the implementation of the Measures and considers the future trajectory of China’s AI governance.

2. Overview of China’s approach to regulating AI

China’s AI governance has been primarily characterised by a state-led approach that aims to harness AI’s potential for the country’s international competitiveness, economic growth and social governance (Roberts et al., 2021). This approach is intrinsically tied to China’s ambitions of becoming a global leader in AI technology. Similarly, it has been observed that “China’s engagement with the Internet has been guided by its techno-nationalist strategy of development, coupled with the imperative of maintaining social stability” (Ebert Stiftung, 2023).

The country’s New Generation Artificial Intelligence Development Plan, launched in 2017, outlined a strategy to be a globally dominant player in AI by 2030 (State Council, 2017). This global leadership role includes the commercialisation of AI, as well as the shaping of technical and ethical standards. Compared with the more market-driven approaches seen in the US or the rights-based frameworks prevalent in the EU, China’s AI governance has generally prioritised security interests and social stability while seeking to promote development of the AI industry.

In terms of “methods” for regulating emerging technologies, two primary pathways have been observed. The first pathway involves establishing rules that are tailored to specific technological applications or scenarios. The second pathway adopts a more comprehensive approach that encompasses all potential applications or scenarios (Hacker et al., 2023). Some commentators have referred to “vertical” and “horizontal” approaches to AI regulation (Shaughnessy & Sheehan, 2023). Vertical approaches typically emphasise distinct regulatory frameworks for specific applications or manifestations of the technology. On the other hand, horizontal approaches favour the development of broad regulatory frameworks that cover almost all potential uses of AI.

The EU has leaned towards a horizontal approach, with the AI Act representing “a single piece of horizontal legislation to fix the broad scope of what applications of AI are to be regulated” (Shaughnessy & Sheehan, 2023). Nevertheless, the rapid advancements in and public adoption of new generative AI tools have challenged the AI Act’s originally proposed categories of risk that applied broadly to all AI applications. This prompted changes to the final version of the Act that placed foundation models and generative AI under a specific category of general-purpose AI (GPAI). Such advanced AI models and systems are regulated through a separate tiered approach, with more stringent obligations for “high-impact” GPAI models that pose a “systemic risk,” as defined in Articles 3 (64) and (65) of the EU’s AI Act. These include foundation models trained with significant amounts of data, and where their advanced levels of complexity, capabilities and performance can disseminate systemic risks along the value chain.

China has primarily adopted a “vertical” approach to regulating AI to date. An important advantage of this approach is the speed at which legally binding instruments can be introduced or amended to respond to emerging issues. For example, on 11 April 2023, the Cyberspace Administration of China (CAC), the primary internet and cyber regulator in China, released an initial draft of the Measures for public feedback, with a submission deadline of 10 May 2023. The entire regulatory process, including drafting the initial version, gathering public input, conducting discussions and revisions, and obtaining final approval from relevant authorities, was completed within three months. The Measures were promulgated on 13 July 2023 by the CAC (as the lead) along with the National Development and Reform Commission, Ministry of Education, Ministry of Science and Technology, Ministry of Industry and Information Technology, Ministry of Public Security and National Radio and Television Administration. The Measures took effect on 15 August 2023. This rapid regulatory process contrasts with the legislative process of higher-level laws by the National People’s Congress, which can take several years.

At the time of writing, China has not established a more comprehensive legislative framework on AI. Policymakers have instead focused on administrative regulations that target specific AI developments or applications considered to be “riskier” in terms of security or safety. Besides the Measures, China’s AI regulations include the Provisions on the Management of Algorithmic Recommendations in Internet Information Services (“Algorithmic Recommendations Provisions”) and the Regulations on the Deep Synthesis Management of Internet Information Service (“Deep Synthesis Regulations”), both introduced by the CAC with a range of other government authorities in 2021 and 2022 respectively.

A common thread running through these regulations is their emphasis on information and content safety and security risks arising from AI. In an important standards framework on AI Safety Governance issued by the National Technical Committee 260 on Cybersecurity of the Standardization Administration of China (2024, September), risks of information and content safety were identified in Section 3.2.1(a) as follows:

AI-generated or synthesized content can lead to the spread of false information, discrimination and bias, privacy leakage, and infringement issues, threatening the safety of citizens’ lives and property, national security, ideological security, and causing ethical risks. If users’ inputs contain harmful content, the model may output illegal or damaging information without robust security mechanisms.

The Algorithmic Recommendations Provisions aim to control algorithms used for information dissemination, introducing new obligations for online news platforms that require them to intervene in content recommendations. The Provisions also grant users specific rights, including the ability to turn off algorithmic recommendation services, the option to delete tags, and the right to receive explanations when an algorithm significantly affects their interests. The Deep Synthesis Regulations address risks in the information environment arising from “deep synthesis technology.” Instead of using the politically charged term “deepfakes,” the regulations employ the more neutral technical term “deep synthesis technology.” This is defined as the use of deep learning, augmented reality, and other algorithms to enable content synthesis or generation, encompassing text, images, audio, video, virtual scenes and more.

Technical standards and guidelines play an important role in the operationalisation of laws and regulations. Since the introduction of the Measures, the National Technical Committee 260 on Cybersecurity of the Standardization Administration of China released the Implementation Guidelines on Cybersecurity Standards for Content Labelling Method in Generative Artificial Intelligence Services TC260-PG-20233A on 25 August 2023. While such Guidelines are “voluntary” in nature, they enable regulators to test adoption among industry actors before developing mandatory standards or further regulations later on. This approach can be seen in the CAC’s release of a draft regulation and a draft mandatory standard on AI labelling in September 2024 (discussed later in section 4.3). Other technical standards specifically related to the Measures at the time of writing include the Basic Security Requirements for Generative Artificial Intelligence Services TC260-003 introduced by Technical Committee 260 on 29 February 2024. This set of mandatory technical standards aims to provide basic safety and security requirements for generative AI service providers, covering corpus, model, security measures, and security assessments. It applies to service providers in relation to their obligations to conduct security assessments and improve security levels under the Measures. The standards also provide an important point of reference for relevant regulatory authorities to assess the security level of generative AI services.

High-level policy documents play an important role in shaping the trajectory of laws and regulations. One important document is the aforementioned New Generation Artificial Intelligence Development Plan, which proposed three phases of development in China’s AI governance framework (State Council, 2017). In the first phase, by 2020, China will “initially establish AI ethical norms, policies, and regulations in some areas.” By 2025, the second phase, there will be “initial establishment of AI laws and regulations, ethical norms and policy systems, and the formation of AI security assessment and control capabilities.” Finally, by 2030, China will have “constructed more comprehensive AI laws and regulations, and an ethical norms and policy system.” This Plan, while broadly framed, is being implemented. The first phase saw the release of various guidelines and ethical norms by relevant government bodies. At the highest level, the General Offices of the CPC Central Committee and the State Council (2022) issued guidelines in 2022 aimed at enhancing ethical governance in science and technology. The Ministry of Science and Technology (2021) also released a document that outlined the fundamental ethical principles guiding China’s AI policy framework. The next phase of implementation included the introduction of administrative regulations including the Algorithmic Recommendations Provisions, the Deep Synthesis Regulations and the Measures.

At the time of writing, there are signs of regulatory efforts towards comprehensive AI legislation. In June 2023, an AI Law was added to the State Council’s Legislative Plan (State Council, 2023). The new law is expected to expand upon current regulations, forming a more comprehensive legislative framework that will become the cornerstone of China’s AI policy (Sheehan, 2023). In August 2023, the Chinese Academy of Social Sciences (2023), an important and influential think tank regularly involved in lawmaking processes, released a Model AI Law 1.1 Expert Draft. More comprehensive AI legislation in China may eventually resemble the EU’s AI Act. However, the journey from initial legislative planning to the eventual enactment of such a law at the national level could take a few years. Meanwhile, regulators have swiftly introduced specific measures to mitigate the risks posed by new, potentially disruptive technologies.

It is important to note that administrative regulations like the Measures, which have “interim” in their title, are often used by lawmakers to test certain approaches before more significant and higher-level legal instruments are considered. In this way, lawmakers can undertake and learn from some degree of regulatory experimentation. The term “interim” implies that the Measures are likely to be temporary, allowing for future regulatory development. Such an approach has been described as an “iterative” model of governance to address new problems and challenges as they arise, in which administrative regulations and guidelines are continuously introduced, updated, replaced and/or repealed (Sheehan, 2023). However, this can also result in a patchwork of multiple, overlapping regulatory instruments in the lead-up to more comprehensive legislation.

While it may be state-led, China’s regulatory approach to AI is not as “top-down” as some may assume. Indeed, the development and passing of China’s AI regulations have involved a wide range of stakeholders, including different policymakers and regulatory bodies as well as key players outside the party-state structure. These non-state actors have included technology companies, technologists, academic experts, journalists and policy researchers. This involvement of different actors serves multiple policy objectives and creates a negotiable policy space where these actors can influence China’s present and future AI regulations (Migliorini, 2024). Sheehan has described these actors’ roles as “a mix of public advocacy, intellectual debate, technical workshopping, and bureaucratic wrangling” (Sheehan, 2024).

An example of this dynamic, multi-actor policy process can be gleaned from the initial draft of the Measures that was released for public consultation in April 2023. The initial draft did not merely restrict its application to public-facing generative AI services. The said draft also imposed additional obligations on generative AI service providers, such as requiring providers to fine-tune their models within three months of discovering unlawful content. Following feedback from different actors during the public consultation, the final version of the Measures limited its scope to public-facing service providers and reduced the range of obligations on service providers.

As we have seen, China’s regulation of AI has evolved rapidly, based on a vertical, iterative approach that targets particular technological developments and applications that regulators consider to pose a high degree of risk, with a consistent focus on AI-based content security or safety risks. The balancing act between maintaining control over such risks and fostering industry development and innovation is particularly evident in the regulation of generative AI, where the potential for both breakthrough innovations and significant risks to information and content security is high. Having established this regulatory context, we now turn our attention to a more detailed examination of the policy objectives underlying the Measures in the next section.

3. The Measures: balancing AI development and security

Confronted with the potentially transformative impact of advanced AI systems and models, Chinese policymakers are grappling with the challenge of balancing two main regulatory goals: AI development and security. The notion of “development” in this context refers to China’s desire to propel the growth of the AI industry and boost its standing as a global AI powerhouse. Meanwhile, “security” (sometimes referred to as “safety”) has been the centrepiece of all of China’s cyber and technology-related laws and regulations to date. For policymakers, there is a fundamental need for guardrails to prevent and mitigate a range of security risks associated with the rapid development of emerging AI technologies (Qiao, 2023).

The dual pillars of “development” and “security” are reflected in Article 1 of the Measures. Article 1 sets out the following regulatory objectives: fostering responsible growth of generative AI while ensuring national security and public interests and protection of the rights of citizens, legal entities, and other organisations. Additionally, Article 3 reinforces the equal importance of “development” and “security” as well as emphasises the principle of balancing innovation promotion with lawful governance.

Although both terms were presented side-by-side in policy statements related to the Measures, “development” appeared to be consistently placed before “security.” The emphasis on development became more pronounced in the final version of the Measures in comparison with the initial draft that focused much more on security concerns. Some prominent scholars in China have argued that the primary “insecurity” is the underdevelopment of China’s AI industry. Notably, Professor Liming Wang has underscored that, when compared to other potential risks, the most significant danger for China is the risk of falling behind technologically (L. M. Wang, 2023).

A key question is the extent to which and how the Measures will support the development of the generative AI industry in China. Within the Chinese legal framework, regulations often derive their authority from higher-level laws. Article 1 of the Measures explicitly cites, as its legal foundation, the Law on the Promotion of Scientific and Technological Progress (2021 amendment). The Law on the Promotion of Scientific and Technological Progress has the overarching goal of

comprehensively promoting scientific and technological progress, harnessing the role of science and technology as the primary productive force, innovation as the primary driving force, and talent as the primary resource. Facilitating the transformation of scientific and technological achievements into practical productive forces, driving technological innovation to support and lead economic and social development, and comprehensively building a socialist modernised country.

By anchoring the Measures to the Law on the Promotion of Scientific and Technological Progress, there is an emphasis on aligning the development of generative AI with broader policy objectives aimed at fortifying China’s technological advancements. In essence, the Measures are linked to a wider strategy of Beijing for achieving global leadership as well as self-sufficiency in respect of AI development. As early as 2017, the Chinese leadership made it clear that “China should strengthen deployment around core technologies, top talents, standards, and norms, aiming to gain leadership in the new round of international scientific and technological competition (in AI)” (State Council, 2017).

Article 2 of the Measures is of particular significance, delineating the Measures’ application to “services that provide generated text, images, audio, video, and other content to the public within the territory of the People’s Republic of China using generative AI technology.” Article 2 further makes it clear that the regulatory scope excludes “industry organisations, enterprises, education and research institutions, public cultural institutions, and relevant professional institutions that do not provide generative AI services to the general public.” As discussed earlier, the draft version had originally contemplated a much wider regulatory scope. The change made in the final version of the Measures, which excludes uses of generative AI for scientific research and enterprise or industrial uses from its scope, reflects more strongly the goal of facilitating more widespread adoption and further development of the technology (Xu, 2023).

An important example of policymakers’ attempts to balance development and security concerns in the Measures is reflected by the distinction between the technology itself and the services built upon it. This differentiation seeks to recognise that certain areas of generative AI development and application require more stringent oversight, while other areas may benefit from a more permissive or light-touch approach. In practice, this means that regulation tends to focus on areas where public interests, safety and security are most at stake.

The Measures make an important distinction between “core” generative AI technologies on the one hand, and services arising from these technologies on the other. The former is primarily addressed in Chapter II of the Measures, titled “Technology Development and Governance,” which emphasises the promotion of innovation and the application of generative AI across diverse industries and domains. The provisions here contain words such as “encourage,” “support” and “promote,” particularly in relation to “trial and error” in technology R&D. Chapter II reflects policymakers’ consideration that the Measures should not excessively interfere with the development of core generative AI technologies.

The Measures focus on regulating service providers. According to Article 22, generative AI service providers are organisations or individuals who offer services based on generative AI. This includes services provided through application programming interfaces or other methods. The obligations on providers of generative AI services under Chapter II of the Measures include ensuring that pre-training, optimisation training and all data-related activities adhere to existing laws and regulations (particularly the Cybersecurity Law, Data Security Law, and intellectual property laws). Service providers must also ensure that the data sources and foundational models used are lawfully obtained and employ effective measures to increase the quality of the training data by “enhancing its truthfulness, accuracy, objectivity, and diversity.” Chapter III of the Measures, titled “Service Standards,” outlines a range of obligations on generative AI service providers. These obligations include safeguarding user personal information, meeting requirements regarding user identification, delivering secure and stable services, and reporting unlawful content, among others.

While the intention of differentiating between technology and services is to promote technological development while mitigating risks associated with generative AI applications, the Measures have been criticised for their broad definition of “service providers.” This broad definition includes services that develop foundation models. There have been calls for more granularity in regulatory oversight over different types of actors involved in generative AI technology development and service provision, an issue that we will return to later in this paper.

On the whole, the Measures attempt to avoid excessive interference in technological development. Nevertheless, there are also considerable provisions that lean towards prioritising security over developmental objectives, particularly in addressing content risks associated with generative AI. This emphasis aligns with the broader trajectory of AI regulations in China, as discussed earlier, which reflect the importance of maintaining control over the information environment. In the following section, we assess the key provisions of the Measures, focusing on information and content safety obligations imposed on generative AI service providers.

4. Key obligations on regulated actors

While the Measures set out a range of obligations on various actors, the main provisions are targeted at services involved in the generation, processing and delivery of content using generative AI. For policymakers, the ever-increasing capabilities of the technology pose significant content security risks; for instance, they can make it very challenging for humans or even AI to identify false or misleading content. The Measures represent a shift in the existing regulatory landscape for information content security, blurring traditional distinctions between content producers and service providers.

4.1 Existing content production and service provision regulations

By way of background, existing regulatory frameworks for information content security in China have traditionally distinguished between content production and technical service provision. Content producers generally bear direct liability for content-related infringements. Service providers refer to organisations or individuals that provide online information service activities that assist in carrying out such infringements. The latter are held liable only when they have knowledge or should have known about the direct infringement (Liu, 2016). This distinction is reflected in the framework for regulating liability for harms caused by content generated or shared on platforms (Yao & Li, 2023). A common approach taken by Chinese courts has been to characterise a platform’s role in information dissemination as either content provision or technical services, as echoed in Provisions of the Supreme People’s Court on Several Issues Concerning the Application of Law in the Trial of Civil Disputes Involving Infringement of the Right to Online Communication (2020).

A key framework for information content governance in China is set out by the Provisions on the Governance of the Online Information Content Ecosystem (the Provisions), an administrative regulation introduced in 2020. The Provisions distinguish between online information content producers (Chapter II), online information content service platforms (Chapter III) and online information content service users (Chapter IV). According to Article 41 of the Provisions, online information content producers are organisations or individuals involved in creating, duplicating or disseminating online information content. Online information content service platforms refer to online information service providers offering content transmission services. Finally, online information content service users are organisations or individuals that use such services. Article 8 of the Provisions requires online information content service platforms to meet their obligations to manage information content (such as taking appropriate measures when discovering illegal or harmful content) and enhance the governance of their information content ecosystems.

It is important to understand the concept of “principal obligations” (zhuti zeren) in the above regulatory framework. The concept was originally used in a political context, referring to the responsibilities of party organisations and government departments. In 2014, it was introduced for the first time in the legal context in the amendment to Article 3 of the Workplace Safety Law as the basis of a corporation’s responsibility for workplace safety. However, when applied in the context of information content regulation, this concept has not been thoroughly clarified and explained. One scholar suggests that the term can be understood as the ultimate responsibility of the service platform provider for any unlawful activity that takes place on the platform (Ye, 2018). Some have argued that it refers to a form of strict liability (Liu, 2022). This conceptual ambiguity gives rise to regulatory implementation and compliance challenges in practice.

The Deep Synthesis Regulations follow a similar distinction between content providers and technical service providers. They outline three main categories of regulated entities: (1) deep synthesis service providers, (2) deep synthesis service technology support providers and (3) deep synthesis service users. The Deep Synthesis Regulations impose a range of information security-related obligations on deep synthesis service providers, such as establishing management rules, strengthening content review and having in place mechanisms for “debunking rumours” (Article 7 to Article 12 of the Regulations). Such obligations are similar to those of online information content service platforms under the Provisions on the Governance of the Online Information Content Ecosystem. In other words, deep synthesis technology service providers must comply with relevant obligations related to information content management.

Policymakers to date have not provided an official explanation on the relationship between the Deep Synthesis Regulations and the Measures. It has been suggested that existing regulations are sufficient to address the content-related risks associated with generative AI (Tang, 2023). However, we contend that the advancements in the LLMs underlying the latest generative AI applications since the launch of ChatGPT have considerably magnified the degree of such risks beyond that envisaged by the Deep Synthesis Regulations. This amplification of risks necessitated the swift introduction of the Measures by Chinese policymakers, only months after the introduction of the Deep Synthesis Regulations.

4.2 Dual obligations on generative AI service providers

Article 9 of the Measures makes it clear that generative AI service providers must assume the obligations of content producers and meet relevant obligations as related to information content security. This is the first time in Chinese regulations that AI service providers are deemed “content producers” and assigned relevant obligations.

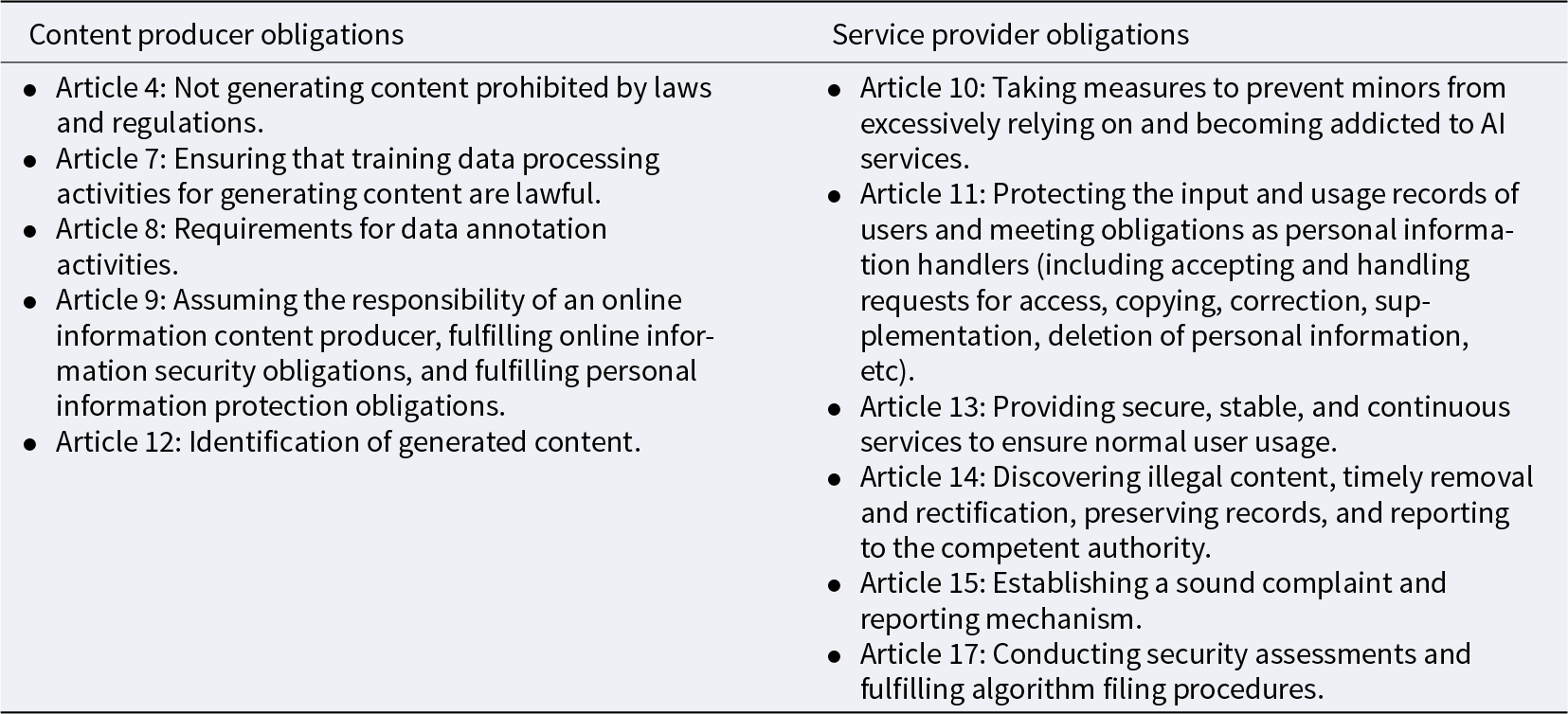

The Measures impose a dual set of obligations on generative AI service providers relating to both content generation and the provision of technical services (see Table 1 below). Article 14 requires service providers, upon discovering illegal content, to promptly respond by stopping its generation, halting its transmission, and/or removing it. Furthermore, they must implement AI model optimisation and training to rectify the situation, and report incidents to regulators. According to Article 10, service providers must take appropriate measures against users engaged in illegal activities, including warnings, restricting functionalities, and suspending or terminating services.

Table 1. Summary of obligations of service providers under the Measures

These obligations on generative AI service providers under the Measures are consistent with the set of “principal obligations” for information management imposed on online information content service platforms under the Provisions on the Governance of the Online Information Content Ecosystem as mentioned earlier. At the same time, the Measures specify in Chapter III (titled “Service Standards”) that generative AI service providers must assume the obligations of online information content producers “in accordance with the law.” Such obligations include labelling the generated content (Article 12), safeguarding personal data (Article 9) and establishing a comprehensive complaint reporting mechanism (Article 15).

Under the Measures, generative AI service providers bear direct liability for content generation as well as indirect liability for failing to take effective measures to stop the dissemination of unlawful content. Some commentators have argued that this dual set of obligations is too onerous for generative AI service providers and does not create a favourable environment for the healthy development of the industry (Yao & Li, 2023). Others have pointed out that other regulations to date, including the Provisions on the Governance of the Online Information Content Ecosystem and the Deep Synthesis Regulations, have separated the obligations of information content producers from those of technology service providers (Xu, 2023).

Yet, we contend that the Measures are responsive to the characteristics of generative AI services that entail advanced technological integration of content production, service provision and user utilisation. The obligations on generative AI service providers reflect the significance of certain service providers’ involvement in the content generation process. While user prompts are part of the process, the pre-training and fine-tuning of the AI models by service providers also directly affect content generation.

4.3 Algorithm disclosure and AI-generated content labelling

An emerging trend in AI regulatory tools involves various disclosure requirements, driven by the need for greater algorithmic transparency. A primary goal of these requirements is to enhance the foreseeability of and control over associated risks through the disclosure of key information about the underlying algorithms. Several regulatory tools in this realm can be found in the Measures, which are centred around algorithm filing for certain types of generative AI services and the labelling of AI-generated content. This is where the Measures intersect with the two other AI-related regulations discussed in section 2.

According to Article 17 of the Measures, providers of generative AI services with “public opinion properties or social mobilisation capacity” must comply with the Algorithmic Recommendations Provisions. The Algorithmic Recommendations Provisions established a mandatory algorithm filing and registry system for algorithm recommendation service providers with “public opinion properties or social mobilisation capacity.” These service providers are typically the larger social media platforms. Such providers must file with the CAC certain information on their services and algorithms, including the service form, fields of application, algorithm type, algorithm self-assessment, content to be displayed and other relevant information. If such information changes, the providers must also undertake modification procedures through the filing system within 10 days of the change. The providers must make their filing index public. Where relevant, providers must also carry out security assessments with the CAC.
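To make the filing requirement more concrete, the following is a minimal, illustrative sketch of how a provider might track a filing record and the deadline for modifying it. It is not drawn from the CAC's filing system. The class name, field names and helper functions are hypothetical, and the sketch assumes that the 10-day window is counted in calendar days from the date of the change.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Assumption: the 10-day window is counted in calendar days.
MODIFICATION_WINDOW_DAYS = 10


@dataclass
class AlgorithmFiling:
    """Hypothetical record of the information filed with the regulator."""
    service_form: str
    fields_of_application: str
    algorithm_type: str
    self_assessment: str
    content_to_be_displayed: str
    filing_index: str  # hypothetical identifier that the provider makes public


def modification_deadline(change_date: date) -> date:
    """Return the last day on which a changed filing can still be updated."""
    return change_date + timedelta(days=MODIFICATION_WINDOW_DAYS)


def is_modification_overdue(change_date: date, today: date) -> bool:
    """Return True if the modification window has already closed."""
    return today > modification_deadline(change_date)
```

Under these assumptions, a change made on 1 March 2024 would need to be reflected in the filing by 11 March 2024.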

Labelling AI-generated content can also be considered another form of transparency-enhancing tool for generative AI. AI labelling requirements can be found in the EU AI Act, EU Digital Services Act and the US Executive Order on the Safe, Secure, and Trustworthy Development and Use of AI. Labelling involves embedding identifiable information or markers within the content itself, indicating its origin or creator. This serves as a means of disclosing the content’s AI-generated nature and its source.

Article 12 of the Measures requires generative AI service providers to label generated content such as images and videos in accordance with the Deep Synthesis Regulations. Two key provisions of the Deep Synthesis Regulations are particularly relevant here. Article 16 specifies that service providers must use technical measures to add unobtrusive marks that do not affect the user’s experience. However, where deep synthesis services may “lead to public confusion or misidentification,” significant markings must be provided to the service users, according to Article 17 of the Deep Synthesis Regulations. There is no definition of what could “lead to public confusion or misidentification.” Indeed, a broad interpretation of this concept could affect everyday uses of generative AI services by the public (Yao & Li, 2023).

As mentioned earlier, the National Technical Committee 260 on Cybersecurity of Standardization Administration of China (2023, August 25) issued technical guidelines on various methods for content labelling by AI-generated content service providers. According to the guidelines, implicit labels, such as those embedded in file metadata, should not be directly perceptible to humans but should be extractable from the content using technical means. Explicit labelling, on the other hand, involves inserting a semi-transparent label in the interactive interface or background of the content. The label should at least contain information such as “AI-generated.”
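The difference between the two labelling methods can be illustrated with a short sketch. The example below is purely illustrative and does not reproduce the technical specifications in the guidelines. It assumes text content, a visible prefix as the explicit label and a simple metadata dictionary as the implicit label. All names, including `provider_id` and `content_id`, are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional

EXPLICIT_LABEL = "AI-generated"  # minimum wording for the visible label


@dataclass
class LabelledContent:
    body: str  # what the user sees, including the explicit label
    metadata: dict = field(default_factory=dict)  # hidden from the user


def label_content(text: str, provider_id: str, content_id: str) -> LabelledContent:
    """Attach an explicit (visible) and an implicit (metadata) label."""
    visible = f"[{EXPLICIT_LABEL}] {text}"
    hidden = {
        "ai_generated": True,
        "provider_id": provider_id,  # hypothetical field
        "content_id": content_id,    # hypothetical field
    }
    return LabelledContent(body=visible, metadata=hidden)


def extract_implicit_label(item: LabelledContent) -> Optional[dict]:
    """Recover the implicit label by technical means, if one is present."""
    if item.metadata.get("ai_generated"):
        return item.metadata
    return None
```

In this sketch the explicit label is visible to anyone who reads the content, whereas the implicit label is only recoverable by software that inspects the metadata. Real implementations for images, audio or video would more likely rely on watermarking or format-specific metadata fields.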

The substance of these guidelines has been developed into draft administrative regulations and mandatory standards. On 14 September 2024, the CAC released two draft regulatory instruments for public consultation. The first is the draft Measures for the Labelling of AI-Generated Synthetic Content, which seeks to regulate how Internet Information Service Providers (IISPs) label AI-generated content. The regulation, in its draft version, requires both explicit and implicit labelling of such content. It further specifies when and how these labels must be applied. IISPs must also regulate the dissemination of AI-generated content on their platforms, verifying and adding labels as necessary. The draft also outlines obligations for internet application distribution platforms and sets rules for interactions between IISPs and users regarding labelling. The second is a related national standard, the Cybersecurity Technology – Labelling Method for Content Generated by AI, which provides detailed technical requirements for both explicit and implicit labelling. The standard, which was developed through extensive consultation with industry experts and enterprises, is intended to be mandatory.

Overall, most of the labelling obligations are imposed on the AI service provider, not the user. The user’s obligations are limited to not destroying or tampering with the labelling or markings. Article 18 of the Deep Synthesis Regulations stipulates that “no organisation or individual shall use technical means to delete, tamper with, or conceal deep synthesis marks.” As examined below, the Measures impose significantly fewer obligations on users of generative AI services compared to the extensive responsibilities placed on service providers.

4.4 Obligations on users of generative AI services

While regulators recognise the need to distinguish between generative AI service providers and users, this distinction is not always straightforward in practice. This blurred line reflects the unique nature of generative AI, where outputs are often a result of complex interactions between the AI model and user inputs. The Measures attempt to address this complexity by regulating certain aspects of user behaviour and conduct, acknowledging the dual role of users as both consumers and co-creators of AI-generated content. This is reflected in Article 4 of the Measures, which imposes obligations on both providers and users to avoid generating false or harmful information, avoid infringing upon others’ rights, and improve the accuracy and reliability of generated content.

The Measures also incorporate provisions aimed at empowering users and ensuring accountability of service providers. Article 18 grants users the right to file complaints or reports with the relevant authorities if they find the service provider is not complying with laws, administrative regulations or the provisions of the Measures. Complementing this, Article 15 requires service providers to establish a sound mechanism for promptly receiving and handling public complaints and reports and providing feedback on the outcomes.

However, the Measures do not address appeal and recourse mechanisms for situations where service providers infringe upon users’ rights, such as imposing unreasonable restrictions on use and/or termination of services. For example, if a service provider unilaterally determines that a user has generated illegal content and decides to restrict the user’s access or terminate the service, the Measures do not specify whether the user has the right to appeal to the service provider or another body, and if so, what the procedure for such an appeal should be. This regulatory gap could potentially lead to arbitrary decisions by service providers, with users having limited recourse to challenge these actions. It may also hinder the development of fair and consistent standards for content moderation across different service providers.

Nevertheless, an analysis of the Measures reveals an apparent imbalance in the obligations imposed on service providers versus users. While service providers face a range of obligations and potential liabilities, the obligations placed on users appear to be relatively light in comparison. Article 4, while placing high-level obligations on users, does not attribute direct legal liability to them. This approach raises questions regarding how user obligations will be enforced in practice. The disparity in obligations between service providers and users under the Measures could potentially lead to scenarios where service providers bear disproportionate responsibility for AI-generated content that is substantially influenced or directed by user inputs.

5. Challenges and outlook for regulating generative AI in China

Having examined the key provisions of the Measures, we now turn our attention to the challenges and regulatory gaps that might be addressed and consider the impact of the Measures on China’s ongoing efforts to develop a comprehensive AI Act. We will further examine how China’s approach might influence AI governance and regulation at a global level.

5.1 Filling the regulatory gaps

Overall, the Measures were introduced relatively quickly, and the matters they address are pitched at a broad, principled level. This has left significant room for interpretation and implementation. Many provisions remain relatively high-level and lack specific operational details, which can make them difficult for service providers and enforcement authorities to implement. Some obligations will need to rely on subsequent rules, standards, or guidelines to ensure implementation. Examples in this category include the interpretation of “the accuracy and reliability of generated content” in Article 4, disputes over intellectual property rights of generated content in Article 7, the user complaint reporting system in Article 15 and the interaction between service providers and regulatory authorities during supervisions and inspections in Article 19.

The Measures focus on the administrative obligations of service providers but overlook civil obligations. The content security obligations under the Measures mainly manifest as public law obligations. Their enforcement primarily follows public law procedures, with regulatory authorities (government departments) holding generative AI service providers accountable. Article 21 of the Measures primarily entails administrative sanctions like warnings, notifications, criticisms, and corrective actions. Such an approach neglects situations where private individuals or entities could initiate legal actions against service providers.

While the Chinese Civil Code could potentially fill in this gap, there are limitations. For example, Chinese laws and regulations currently lack provisions regarding the determination of damages and the burden of proof for civil liability arising from AI. This gap is particularly significant given the complexity, autonomy and opacity of AI systems. The Measures do not specify damages caused by AI services or the burden of proof for establishing damages. In contrast, the EU has been seeking to develop rules applicable to civil liability for persons harmed by AI (the AI Liability Directive) alongside the AI Act. We propose that this is an area where Chinese legislators could introduce more targeted civil liability provisions. These provisions should address unique challenges such as the complexity of determining causation and the potential for autonomous decision-making. If introduced, such provisions may end up in comprehensive AI legislation (discussed below).

In our view, implementation guidelines and technical standards are likely to become increasingly important, such as the abovementioned standards issued by the National Technical Committee 260 on Cybersecurity of Standardization Administration of China. The two draft instruments released for public consultation by the CAC in September 2024, the Measures for the Labelling of AI-Generated Synthetic Content and the Cybersecurity Technology – Labelling Method for Content Generated by AI, will provide more detailed compliance directions for regulated actors once they take effect. For regulatory authorities, effectively coordinating the relationship between “hard law” instruments like laws and regulations and “soft law” tools will become a crucial aspect of AI governance in China.

5.2 A more balanced approach to content producer obligations

The current provisions of the Measures reflect a regulatory approach that prioritises control at the service provider level. While this may simplify enforcement and align with existing content regulation frameworks, it may not fully address the unique challenges posed by generative AI.

We propose that the Measures should delineate a clearer and more balanced system of responsibility for different regulated actors. Generative AI service providers are designated as “content producers” under the Measures, which means that they assume almost all responsibilities for information content security. Despite Article 4 stating that both users and service providers have responsibility, all obligations found in Chapter IV are directed at service providers. Given that users actively contribute to content creation and dissemination on platforms, requiring some accountability on the part of users could foster more responsible usage and deter unlawful activities.

Some have called for greater responsibility on users and platforms that disseminate the generated content (Dong & Chen, 2024). Nevertheless, the Measures’ broad definition of generative AI service providers encompasses a wide range of platforms in China that offer generative AI-powered services to the public. Consequently, these platforms are subject to the stringent content producer obligations outlined in the Measures.

To strike a balance between generative AI development and security, the Measures should consider compliance “safe harbours.” Such a system would still place “content producer” responsibility on service providers but offer exemption from liability under certain circumstances (Shen, 2023). Safe harbours are commonly found in the context of online service providers’ liability for user-generated content. A well-known example is the US Digital Millennium Copyright Act, which includes safe harbour provisions for internet service providers (ISPs). These provisions protect ISPs from copyright infringement liability for content posted by users if the ISPs meet specific requirements, such as promptly removing infringing content upon receiving a valid takedown notice from the copyright holder. Safe harbour provisions for generative AI service providers can help to prevent or mitigate content-related risks while supporting innovation and industry development goals.

Furthermore, the tiered approach espoused in Article 3 of the Measures needs to deploy a variety of regulatory approaches and tools to achieve both development and security goals. Currently, the Measures do not distinguish between foundation model providers and providers of “downstream” generative AI application services. Both categories of providers are deemed “service providers” and therefore subject to the same obligations under the Measures. A more reasonable approach for balancing both policy goals would be to adopt a multi-tiered governance system that provides room for foundation model providers to experiment, while requiring greater regulatory scrutiny for “downstream” providers with reasonable safe harbours.

As generative AI and LLMs advance at a rapid pace, policymakers face the challenge of developing more sophisticated regulatory frameworks. These frameworks must address the intricate nature of AI-based content generation, which blurs the lines between user input and machine output. The Measures reflect an important first step. We propose that the implementation of the Measures needs to balance user empowerment with appropriate levels of user responsibility and accountability. This could involve implementing a tiered system of user responsibility based on the level of human input and the intended use of the generated content. Additionally, policymakers need to establish clearer guidelines for determining liability in cases where harmful content emerges from the complex interaction between user prompts and AI systems.

5.3 Developing a comprehensive AI law

Many provisions in the Measures represent restatements or refinements of existing regulations, including those concerning generation of false information, data tagging, preventing discrimination in algorithmic design, algorithm transparency, personal information protection and user complaint mechanisms, among others. As analysed in section 4, the obligations on content producers reflect existing obligations found in the Provisions on the Governance of the Online Information Content Ecosystem. Furthermore, the Measures directly incorporate provisions from existing regulations. For instance, the obligations related to algorithm filing and security assessment under Article 17 of the Measures are directly linked to the Algorithmic Recommendations Provisions. The requirements for labelling AI-generated content under Article 12 of the Measures incorporate those of the Deep Synthesis Regulations.

In our view, there is not a great deal of novel institutional design in the Measures. One commentator points out that the new obligations introduced by the Measures centre on two aspects: generative AI service providers taking on the obligations of content producers and specific requirements relating to training data of generative AI models (X.R. Wang, 2023).

We suggest that the Measures may be intentionally conservative in their regulatory architecture. On the one hand, the Measures can be seen as a prompt response by policymakers to the risks and challenges posed by rapidly evolving AI technologies. On the other hand, Chinese policymakers are seeking coherence and consistency among different AI-related laws and regulations, recognising the downsides and costs of regulatory fragmentation. At the time of writing, it appears that China is working on establishing general legislation similar to the EU’s AI Act. China has undertaken such an approach in other areas of technology regulation, with the enactment of comprehensive laws such as the Cybersecurity Law, the Data Security Law and the Personal Information Protection Law. These national-level laws provided the foundation for introducing a range of regulatory instruments such as those addressing algorithm assessment and filing and deepfake prevention, as mentioned earlier. If China goes down this path, we believe two aspects of regulatory design should be considered by legislators.

First, the primary focus of a general AI law should be on integrating disparate regulatory approaches, standardising existing rules and addressing gaps. For example, an algorithm filing system has been introduced, but the Algorithmic Recommendations Provisions do not lay out the next phase of the system. In particular, there needs to be further clarification on whether registration serves as a prerequisite for ex-ante liability or as a basis for ex-post liability. This distinction will shape how the parties involved perceive the registration process and how it affects them in practice. A general AI law needs to address important gaps like these, or at least lay the foundation for doing so.

Second, general AI legislation must clearly define the liability of different regulated actors, considering the complexity, autonomy and opacity of AI systems and their applications. As mentioned earlier, specific provisions covering issues like damage determination and burden of proof are crucial. Establishing clear rules for different types of redress (from administrative to civil claims) can help to mitigate technology risks while reducing compliance costs for businesses and regulatory challenges for government agencies.

5.4 Implications for global AI governance

In recent years, the global competition in AI has expanded from the technological domain to the regulatory domain. Several Chinese scholars have pointed out that the Measures not only respond to the regulatory and governance needs of domestic generative AI technology and industry but also emphasise China’s increasing involvement in global AI governance and rule-making (L. Zhang, 2023). As countries grapple with intense competition in AI technology, several major powers are also engaged in a global race to set the rules and standards that should govern and regulate AI. At the time of writing, the EU has been the most active player in seeking to establish global standards through the introduction of the AI Act.

China also hopes that the introduction of the Measures will showcase its pioneering, agile approach to AI governance (Yi, 2023). Formulating standards and norms has consistently been an important aspect of China’s AI development plans, as reflected in the 2017 New Generation Artificial Intelligence Development Plan. It remains to be seen whether the regulatory approaches and tools reflected in the Measures will be adopted and adapted elsewhere. China’s model may not be easily adaptable in jurisdictions with more open information environments.

We agree with the proposition that “despite China’s drastically different political system, policymakers in the United States and elsewhere can learn from its regulations” (Sheehan, 2023). China’s “vertical,” “adaptive” approach to regulating AI to date, as well as the technical and bureaucratic tools arising from China’s AI regulations, such as algorithmic disclosure, labelling of AI-generated content and technical standards, can be applied in other parts of the world. There is no doubt that different policy motivations (and prioritisation thereof) will continue to shape each jurisdiction’s stance towards AI governance. The idea of regulatory interoperability and consistency has underpinned the quest for global governance of AI (Engler, 2022). The risks associated with AI are not confined to individual countries but are international in scope, requiring coordinated international action.

Promoting international cooperation on AI governance faces numerous real-world obstacles arising from the geopolitical landscape and technological competition between major players. Fostering domestic AI development around the concept of digital sovereignty could have negative spillover effects on the global AI market. At the same time, a “race to the bottom” where countries compete to have more lenient regulations can exacerbate the risks of significant harms that such technologies can create (Criddle et al., 2023).

6. Conclusion

The Measures focus on information content security risks arising from applications of generative AI for the public. Our analysis has shed light on the primary obligations assigned to service providers. While the Measures establish some broad principles and rules for regulating generative AI, a more intricate regulatory framework of “hard” and “soft” law instruments will be essential for effective implementation. A more nuanced approach to regulating the diverse actors and activities, such as a clearer delineation of user liability and certain exemptions from liability for service providers, requires further consideration by policymakers, especially if China is heading towards a more comprehensive AI legal framework. The rapid advancements in generative AI pose universal regulatory challenges. Globally, countries are navigating new territory when it comes to coordinating a cohesive approach. China’s path in international AI cooperation and competition remains uncertain.

Competing interests

The author declares none.