1. Introduction

1.1. Models Behaving Badly

In our digital society, financial decisions rely increasingly on computer models, so when things go wrong, those models are potential blame candidates.

In some cases, there is unambiguously a human blunder (or in more sinister cases, human manipulation). For example, a spreadsheet reference may link to the wrong cell; formulas that should update are replaced by hard-coded numbers; or rows are omitted from a spreadsheet sum that should have been included. We have tabulated some of these examples below.

Model errors are not always so clear-cut. More often, modellers may adopt approaches that seemed reasonable at the time, even if with hindsight other approaches would be better. Model calibration may assume that the future is like the past, but, following a large loss, it becomes clear that other parameter values would better capture the range of outcomes. We show some examples below.

Finally, some model errors can occur even if a model is correctly specified and coded, if assumed approximations or algorithms break down. We state some examples below.

These are all examples of model risk events, and, we propose, all events that could have been prevented or at least significantly mitigated, by applying the principles of sound model risk management described in this paper.

In the actuarial world, we use models more than in nearly any other industry, so we are particularly exposed to model risk. And as with the use of models in any field, our desire and need to use them is greatest when the future is not going to be just like the past – yet this is the riskiest of situations, in which the trustworthiness of the model will be most in doubt.

In the remainder of this section, we briefly discuss the use of models in the financial world and the definition of model risk used in this report, along with its limitations.

In section 2, a comprehensive Model Risk Management Framework is proposed, addressing issues such as model risk governance and controls, model risk appetite and model risk identification. These ideas are illustrated by discussing high-profile case studies where model risk has led to substantial financial losses.

In section 3, we explore cultural aspects of model risk, by identifying the different ways in which models are (not) used in practice and the different types of model risk each of those generates. We associate this discussion with the Cultural Theory of risk, originating in anthropology and draw implications for model governance.

In section 4, we provide a detailed discussion of the mechanisms that induce the financial impact of model risk. The challenges of quantifying such a financial impact are demonstrated by case studies of life insurance proxy models, longevity risk models, and models used in financial advice; and we also consider the application to models in broader fields such as environmental models.

Overall, conclusions from the report are set out in section 5.

1.2. What are Models and Why We Need Them

A wide array of quantitative models is used every day in the financial world, to support the operations of organisations such as insurers, banks, and regulators. They range from simple formulae to complex mathematical structures. They may be implemented in a spreadsheet or by sophisticated commercial software. In the face of such variety, what makes a model a model? In the words of the Board of Governors of the Federal Reserve System (2011):Footnote 1

“[T]he term model refers to a quantitative method, system, or approach that applies statistical, economic, financial, or mathematical theories, techniques, and assumptions to process input data into quantitative estimates. A model consists of three components: an information input component, which delivers assumptions and data to the model; a processing component, which transforms inputs into estimates; and a reporting component, which translates the estimates into useful business information”.

The use of models is dictated by the complexity of the environment that financial firms navigate, of the portfolios they construct, and of the strategies they employ. Human intuition and reasoning are not enough to deal with such complexity. For example, evaluating the impact of a change in interest rates on the value of a portfolio of life policies requires the evaluation of cashflows for individual contracts (or groups of contracts), a computationally intensive exercise. Furthermore, the very idea of the “value” of a portfolio requires a model, based on assumptions and techniques drawn from areas including statistics and financial economics. Hence, while the definition of models above may include a wide range of types of model, our attention is focussed on complex computational models, such as those used in insurance pricing or capital management. Such models generally employ Monte Carlo simulation and generate as outputs probability distributions and risk metrics for various quantities of interest.

For models to be useful, they must represent real-world relations in a simplified way. Simplification cannot be avoided, given the complexity of the relationships that make modelling necessary. But simplification is also necessary in order to focus attention on those relationships that are pertinent to the application at hand and to satisfy constraints of computational power.Footnote 2

Decision making often requires quantities that can only be obtained as outputs of a quantitative model; for example, to calculate capital requirements via a value-at-risk (VaR) principle, a probability distribution of future values of a relevant portfolio quantity (e.g. net asset position) needs to be determined. It is understood that the VaR calculated through the model is (at best) a reasonable approximation. The extent to which models can be successfully used to provide such approximations, with acceptable errors will be further discussed in section 4.

However, the (in)accuracy of a model’s outputs and predictions is not the sole determinant of its usefulness. Indicatively, models can be used for:

-

- Identifying relations and interactions between input risk factors that are not self-evident.

-

- Communicating uncertainties using a commonly understood technical language.

-

- Answering “what if” questions through sensitivity analysis.

-

- Identifying sensitivities of outputs to particular inputs, thus providing guidance on areas that warrant further investigation.

-

- Revealing inconsistencies and discrepancies in other (simpler or indeed more complex) models.

Thus, models are not only necessary for decision making, but more generally as tools for reasoning about the business environment and the organisation’s strategies.

1.3. What is Model Risk?

The definition of Model Risk adopted for the purposes of this Working Party’s Report, again follows the guidance of the Federal Reserve (2011):

“The use of models invariably presents model risk, which is the potential for adverse consequences from decisions based on incorrect or misused model outputs and reports. Model risk can lead to financial loss, poor business and strategic decision making, or damage to a bank’s reputation. Model risk occurs primarily for two reasons:

-

∙ The model may have fundamental errors and may produce inaccurate outputs when viewed against the design objective and intended business uses. […]

-

∙ The model may be used incorrectly or inappropriately”.

In the rest of this section, we discuss this definition and its link to subsequent sections of the report.

The first stated reason for the occurrence of model risk is the potential for fundamental errors producing inaccurate outputs. There are many circumstances in which such errors can occur. These may be coding errors, incorrect transcription of portfolio structure into mathematical language, inadequate approximations, use of inappropriate data, or omission of material risks.Footnote 3

Some model errors, in particular those arising from mathematical inconsistencies and implementation errors, we can aim to eliminate by rigorous validation procedures and, more broadly, a robust model risk management process as elaborated in section 2. But a model by its very definition cannot be “correct” as it cannot be identified with the system it is meant to represent: some divergence between the two has to be tolerated, as formalised by a stated model risk appetite (section 2.4). Such divergence may be termed “model error”, but it is not only an unavoidable but an essential feature of modelling: simplification is exactly what makes models useful.

What is of interest in the management of model risk is thus not model error itself, but the materiality of its consequences. Model risk is a consequence of the model’s use in the organisation; a model that is not in use does not generate model risk. The possible financial impact of model risk will depend on the very specific feature of the business use that the model is put into, for example, pricing, financial planning, hedging, or capital management. The plausible size and direction of such financial impact provides us with a measure of the materiality of model risk, in the context of a specific application – a detailed discussion of this point is given in section 4.1. Furthermore, communication of model risk materiality to the board is a key issue in the governance of model risk (section 2.8).

This leads us to the second stated source of model risk: incorrect or inappropriate use. Given the likely presence of some form of model error, there will be applications where the plausible financial impact of model error will be outside model risk-appetite limits. The use of the model in such applications would indeed be inappropriate. Furthermore, a model originally developed with a particular purpose in mind, may be misused if employed for another purpose for which it is no longer fit, as demonstrated in the case studies of section 2.10.

A complication in the determination of plausible ranges of model error – and consequently ranges of financial impact – arises in the context of quantifying uncertainty.Footnote 4 In some types of model error, such as the approximation error introduced by the use of Proxy Models in modelling life portfolios (section 4.2), quantification of its range is possible by technical arguments.Footnote 5 However, in other applications, particularly those involving statistical estimation, such quantification is fraught with problems. The randomness of variables such as asset returns and claims severities makes estimates of their statistical behaviour uncertain; in other words we can never be confident that the right distribution or parameters have been chosen.

Ignorance of such a “correct model” makes the assessment of the fitness for purpose of the model in use a non-trivial question. Techniques for addressing this issue are discussed through a case study on longevity risk in section 4.3. But we note that a quantitative analysis of model uncertainty, for example, via arguments based on statistical theory, will itself be subject to model error, albeit of a different kind. Thus, model error remains elusive and the boundary of what we consider model error and what we view as quantified uncertainty keeps moving.

This particular difficulty relates to the fundamental question of whether model risk can be managed using standard tools and frameworks in risk management. At the heart of this question lie two distinct interpretations of the nature of modelling and, thus, model risk.

-

i. Modelling may be viewed as one more business activity that leads to possible benefits and also risks. In that case, model risk is a particular form of operational risk and it can be (albeit partially and imperfectly) quantified and managed using similar frameworks to those used for other types of risk. Implicit in this view is an emphasis on models as parts of more general business processes and not as drivers of decisions.

-

ii. Alternatively, one can argue that model risk is fundamentally different in nature to other risks. Model risk is pervasive: how can you manage the risk that the very tools you use to manage risk are flawed? Model risk is elusive: how can you quantify model risk without a second-order “model of model risk”, which may itself be wrong? The emphasis in this view is on the difficulties of representing the world with models. Implicit in such emphasis is the assumption that a correct answer to important questions asked in the business exists, which model uncertainty does not allow us to reach.

The reality lies between these two ends of the spectrum. Whilst there are aspects of standard operational risk management that can be applied to managing model risk, there are also important nuances to model risk that make it unique. In section 2, we therefore explain how current thinking, tools, and best practices in risk management can be leveraged and tailored to effectively manage model risk. In section 4, we then explore further the specific nuances and challenges around the quantification of model risk, where an explicit link between models and decisions is necessary in order to explore the financial implications of model risk.

The presence of uncertainties that cannot be resolved by scientific elaboration implies that differing views of a model’s accuracy and acceptable use can legitimately co-exist inside an organisation. Ways of using models differ not only in their relation to particular applications, but also in relation to the overall modelling cultures prevalent within the organisation. In some circumstances, model outputs mechanically drive decisions; in others the model provides one source of management information (MI) among many; in some contexts, the model outputs may be completely overridden by expert judgement. Different applications and circumstances may justify different approaches to model use and generate different sorts of (non-)model risks. In the presence of deep uncertainties, a variety of perceptions of the legitimate use of models is helpful for model risk management, as is further explored in section 3.

Finally, we note that the focus of this report is on technical model risks, arising primarily from errors in a quantitative model leading to decisions that have adverse financial consequences. Behavioural model risks, that is, risks arising from a change in behaviour caused by extensive use of a model (e.g. overconfidence or disregard for non-modelled risks) are only briefly touched upon in section 3. Systemic model risks, arising from the coordination of market actors through use of similar models and decision processes, remain outside the scope of this report.

1.4. Summary

In this section we argued that:

-

- Model risk is a pervasive problem across industries, where complex quantitative models are used, with substantial financial impact.

-

- Model risk occurs primarily for two reasons:

-

1. The model may have fundamental errors and may produce inaccurate outputs when viewed against the design objectives and intended uses.

-

2. The model may be used incorrectly or inappropriately.

-

-

- In the context of model uncertainty, avoiding fundamental errors can be impossible and working out the appropriate use of models can be technically challenging. Therefore development and adoption of a comprehensive Model Risk Management Framework is required.

2. How Can Model Risk Be Managed?

2.1. Real-Life Case Studies

In section 1 we explained what model risk is and the sort of major consequences that model risk events have had – particularly in recent years as models have become more prominent and complex. However, models are necessary as they are crucial to sound decision making, understanding the business environment, setting strategies, etc. So it is vital therefore to properly manage model risk.

We now consider three specific case studies to provide examples of the different aspects of models and model use that can give rise to model risk events. This will help illustrate the importance and benefits that can be gained from a Model Risk Management Framework that we set out in sections 2.2–2.9. We will then revisit the case studies in section 2.10 and look at which elements of the framework were deficient or not fully present, and consider the specific improvements that could have been made.

2.1.1. Long-Term Capital Management (LTCM) hedge fund

Synopsis. LTCM is a typical example of having a sophisticated model but not understanding its mechanisms and the factors that could affect the model. See, for example, the article “Long-term capital management: it’s a short-term memory” in the New York Times (Lowenstein Reference Lowenstein2008).

Background. The hedge fund was formed in 1993 by renowned Salomon Brothers bond trader John Meriwether. Principal shareholders included the Nobel prize-winning economists Myron Scholes and Robert Merton, who were both also on the Board of Directors. Investors paid a minimum of $10 million to get into the fund which consisted of high net worth individuals and financial institutions such as banks and pensions funds.

LTCM started with just over $1 billion in initial assets and focussed on bond trading with the strategy being to take advantage of arbitrage between securities that were incorrectly priced relative to each other. This involved hedging against a fairly regular range of volatility in foreign currencies and bonds using complex models.

What happened?. The fund achieved spectacular annual returns of 42.8% in 1995 and 40.8% in 1996. This performance was achieved, moreover, after management had taken 27% off the top in fees.

As the fund grew, management felt the need to adopt a more aggressive strategy, and move into a wider range of investments. They pursued riskier opportunities, including venturing into merger arbitrage (i.e. bets on whether or not mergers would take place), and used their models to identify merger arbitrage opportunities.

As arbitrage margins are very small, the fund took on more and more leveraged positions in order to make significant profits. At one point the fund had a debt to equity ratio of 25:1 ($125 billion of debt to £4.7 billion of equity). The notional value of their derivative position was $1.25 trillion (mainly in interest rate derivatives).

Then, in 1998, a trigger event occurred, the Russian Financial Crisis, when Russia declared it was devaluing its currency and effectively defaulted on its bonds. The effects of this were felt around the world – European markets fell by around 35% and the US markets fell by around 20%.

As a result of the economic crisis, many of the Banks and Pension funds invested in LTCM moved close to collapse. As investors sold European and Japanese bonds, the impact on LTCM was devastating. In less than 1 year, the fund lost $4.4 billion of its $4.7 billion in capital through market losses and forced liquidations.

It was feared that LTCM’s failure could cause a chain reaction of catastrophic losses in the financial markets. After an initial buy-out bid was rejected, the Federal Reserve stepped in and organised a $3.6 billion bailout to avert the possibility of a collapse of the wider financial system.

2.1.2. West Coast rail franchise

Synopsis. The £9 billion West Coast Main Line rail franchise contract is a non-financial services example of a failure in model validation.

Background. Virgin had been operating the West Coast Main Line rail up to the point of the franchise renewal in 2012. Both they and First Group bid for the franchise; after assessment by the Department for Transport, the franchise was initially awarded to First Group.

What happened?. Virgin requested a judicial review of the decision, based on questions over the forecasting and risk models used by the Department for Transport. The transport secretary agreed for an independent review to be performed.

The resulting Laidlaw report (Department for Transport and Patrick McLoughlin, 2012) found that there had been technical modelling flaws with incorrect economic assumptions used. Mistakes were made in the way in which inflation and passenger numbers were taken into account and values for these two variables were understated by up to 50%.

As a result, First Group’s bid seemed more attractive, as they were much more optimistic about how passengers and revenues could grow in the future.

The Department for Transport provided guidance to the bidders, but used different assumptions to the bidders, which created inconsistencies and confusion.

Economic assumptions were only checked at a late stage, when the Department for Transport calculated the size of the risk bond to be paid by the bidder. Errors were also found to affect the risk evaluations, which were therefore incorrect, such that the risk was understated.

The review found that external advisors had spotted some of the mistakes, hence early warning signs that things were going wrong, but there was no formal escalation of these mistakes and therefore incorrect reports were circulated.

The whole fiasco led to a huge embarrassment for the Department for Transport, with taxpayers having to pay more than £50 million for their error. Questions were raised over senior management and their levels of understanding. Three senior officials were suspended as a result and Virgin continued to run the service for another 2 years.

2.1.3. JP Morgan (JPM)

Synopsis. Named the “London Whale” by the press after the size of the trades, this was a case of poor model risk management combined with broader risk management issues that led to JPM making losses of £6 billion and being fined £1 billion. See, for example, the 2013 BBC News articles “‘London Whale’ traders charged in US over $6.2bn loss” (BBC News, 2013a) and “JP Morgan makes $920m London Whale payout to regulators” (BBC News, 2013b).

Background. JPM’s Chief Investment Office (CIO) was responsible for investing excess bank deposits in a low-risk manner. In order to manage the bank’s risk, the CIO bought synthetic CDS derivatives (their synthetic credit portfolio or SCP), which were designed to hedge against big downturns in the economy.

In fact, the SCP portfolio increased more than tenfold from $4 billion in 2010 to $51 billion in 2011 and then tripled to $157 billion in early 2012.

What happened?. The SCP portfolio, whilst initially intended as a risk management tool, instead became a speculative tool and source of profit for the bank as the financial crisis ended, and again in 2011. The CIO was short-selling SCP and betting that there would be an upturn in the market.

Existing risk controls flagged these trades that were effectively ten times more risky than the agreed guidelines. This resulted in the CIO breaching five of JPM’s critical risk controls more than 330 times.

Instead of scaling back the risk, however, JPM changed the way it measured risk, by changing its VaR metric in January 2012. Unfortunately, there was an error in the spreadsheet used to measure the revised VaR, which meant the risk was understated by 50%. This error enabled traders to continue building big bets.

As they were making large trades in a relatively small market, others noticed and took opposing positions, including a number of hedge funds and even another JPM company.

In early 2012, the European sovereign debt crisis took hold and markets reversed, leading to trading losses totalling more than $6 billion.

This prompted an inquiry by the Federal Reserve, the SEC, and ultimately the FBI, which resulted in JPM having to pay fines of $1 billion. The CEO of JPM was found to have withheld information from regulators on the Bank’s daily losses, and the incident claimed the jobs of several top JPM executives.

2.2. The Model Risk Management Framework

The concept of model risk and the management of it could reasonably be viewed as less mature than for other risk types (e.g. market, credit, insurance, operational, liquidity), which appear in standard regulatory frameworks such as Solvency II. In particular, an independent industry research survey on model risk management (Chartis Research Ltd, 2014) highlighted that few firms (only 12% of those surveyed) have a comprehensive model risk management programme, although a large proportion see model risk management as a high or their highest priority. The survey also identified that there are significant organisational and structural challenges to model risk management (lack of clear ownership of and senior engagement in model risk, siloed management of model risk), and that model risk appetite and Model Risk Policy are key to the overarching process. Further, the survey explains that whilst model risk is generally seen as an operational risk, it does not fit the operational risk definitions well. We have therefore in this section aimed to develop an enterprise-wide framework for managing model risks, to be fit to address the issues experienced in the real-life case studies, and to address these specific challenges identified.

Different organisations view and categorise model risk in different ways. Although the consequences of model risk events occurring are mainly financial, some organisations may treat model risk as a subset of both financial and operational risk.

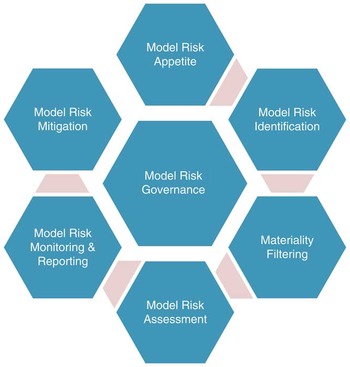

In this paper, we have used established operational risk management processes as the starting point for the management of model risk. From this, we have developed a framework for managing model risks, based on industry best practice, regulatory developments (e.g. the US Federal Reserve’s Supervisory Guidance on Model Risk Management mentioned in Chapter 1, Solvency II), good practice general risk management principles, and consideration of the specific nuances of model risk as a risk type. The proposed Model Risk Management Framework is represented diagrammatically in Figure 1.

Figure 1 The Model Risk Management Framework

The terminology used may differ slightly to that used across organisations, but the concepts that are described in more detail in the following sections should be commonly understood.

For illustrative purposes the framework has been described in the language and context of a company, however, the framework is intended to be applicable more widely than this (e.g. to pension schemes, consultancies, mutual societies, regulators) so that the specific named bodies here (Board, Risk Function, etc.) can equally be replaced by the equivalent bodies or individuals in a non-corporate organisation.

We now go through each component of the Model Risk Management Framework in turn, setting out the detailed principles that we would expect a company to tailor and apply to their organisation in order to effectively manage model risk.

2.3. Model Risk Governance

We begin with governance, which is central in Figure 1 and to the Model Risk Management Framework.

In order to put in place appropriate governance around model risk, an organisation should establish an overarching Model Risk Policy, which sets out the roles and responsibilities of the various stakeholders in the model risk management process, accompanied by more detailed Modelling Standards, which set out specific requirements for the development, validation, and use of models.

2.3.1. Model Risk Policy

The Board. Overall responsibility for managing model risk must lie with the Board. This is because the consequences of model risk events can impact the financial strength of the company, as we have seen from the Introduction, and because the Board is ultimately responsible for the results and decisions of the organisation, which are built upon potentially multiple layers of models. As we will see in the section on model risk appetite, the scope and content of the model risk framework will be driven by how willing the Board is to accept the results from complex financial models.

In order to fulfil its role in managing model risk, the responsibilities of the Board could be as follows:

-

∙ specifying its appetite for model risk;

-

∙ maintaining an appropriate governance structure to ensure material models operate properly on an ongoing basis;

-

∙ ensuring there are sufficient resources available to develop, operate, and validate material models, and the appropriate skills and training are in place; and

-

∙ ensuring that all relevant personnel are aware of the procedures for the proper discharge of their responsibilities around models.

Clearly, it is important to consider proportionality and apply pragmatism in order to ensure the Board meets these responsibilities. In particular, the Board would not, in general, be expected to get involved in the detail of individual models or their associated risks (other than perhaps for regulatory capital models), however, they would be expected to define, for their key model applications (e.g. financial reporting, capital and reserving, and pricing, models) the degree of error they are willing to tolerate, as they sponsor the resources required to support that degree of tolerable error.

The Risk Function. The Board generally delegates the responsibility for day-to-day management of risks to senior management. Given that model risk is a less mature risk type and it often will not have a single owner at an enterprise level (as there are a huge number of individual models), it may be most appropriate for the Board to delegate responsibility to the Risk Function (or equivalent senior management body) to set and maintain an effective Model Risk Management Framework.

In order to fulfil its role in managing model risk, the responsibilities of the Risk Function could therefore be as follows:

-

∙ establishing and maintaining the Model Risk Management Framework;

-

∙ setting and maintaining Standards for modelling (see section 2.3.2), and monitoring compliance with these Standards;

-

∙ maintenance of the company’s aggregate model inventory (see section 2.5);

-

∙ setting and maintaining thresholds for materiality filtering (see section 2.6), and oversight of the application of these materiality thresholds;

-

∙ monitoring the company’s position against the Board’s model risk appetite (see section 2.4), and where necessary proposing relevant management actions to bring the model risk profile back into appetite (see section 2.9);

-

∙ ensuring model risk assessments are carried out (see section 2.7);

-

∙ ensuring material models and model developments are appropriately validated on a sufficiently timely and regular basis;

-

∙ identifying emerging model risks within the company; and

-

∙ model risk reporting (see section 2.8) to the Board and its delegated committee structure.

Model owners. The implementation of the Model Risk Management Framework is then the responsibility of the individuals responsible for each model, with subject matter support from the Risk Function. Therefore, for each model used in the organisation, the business must assign a model owner responsible for that model.

In order to identify who “owns” (is responsible for) each model, the company is likely to consider who developed the model. Often, however, model developers may have moved on or worked for an external party. Therefore, the individual(s) responsible for a model should be the person(s) responsible for the use of the outputs of the model. A model is fundamentally just a tool to provide information for a specific use (e.g. to feed into financial results or regulatory submissions, to determine a price for a transaction, to assess the pricing level of a product, etc.). Therefore, the individual who uses the outputs of the model to meet the responsibilities of their role, should be responsible for the model. So the onus is on the model user to “own” the model and ensure that they understand it, which will require appropriate knowledge transfer to be undertaken with the model developer. The same applies when the model user changes. To avoid key person dependencies for important models, it is sensible to ensure that more than one individual has a deep understanding of the model.

Where there are multiple users of a model, a primary owner should be allocated, responsible for ensuring the model complies with the Model Risk Management Framework. Depending on the different applications of the model in this case, it may be appropriate for the primary owner to be a more senior individual in the organisation commensurate with the materiality of the most important applications. Secondary users will still need to comply with the general requirements of the Model Risk Management Framework but can place some reliance on the primary owner, for example, for the documentation of the model and the generic expert judgements and limitations, overseeing validation, etc. However, where the secondary users make changes to the model, or any documentation, expert judgements, limitations, and model output reports are specific to their use, they will need to ensure these meet the requirements of the Framework.

As the use of models has become much more widespread, and models in organisations have become more complex, in recent years, not all model users will have the requisite technical skills to be able to fully comply with the Framework requirements or indeed maintain their models. Where this is the case where they should work with the Risk Function for support in understanding how to apply the Framework, and engage the support of the model developers, Actuarial Function, or other relevant experts for support in understanding the technical aspects of the model. However, they still retain responsibility and as a minimum should maintain an overall understanding of the model and its key underlying assumptions and limitations.

The primary responsibility of the model owner is to ensure that the model complies with the requirements of the Model Risk Management Framework. This involves capturing the model in the organisation’s model inventory, assessing it against the materiality criteria, and ensuring that the model complies with the Modelling Standards applicable to its risk rating – that is, that the model is properly developed, implemented, and used, and has undergone appropriate validation and approval. In particular, the model owner should be able to actively focus on the Standards around model use – that is, are the applications that the model is being used for appropriate, and are the outputs from the model properly understood. The model owner is also responsible for providing all necessary information for validation of the model.

Governance committee. Like any other type of risk, there needs to be formal governance around the management of model risk. There needs to be clarity over the committee structure that will oversee model risk and receive regular model risk MI reports. The precise committee structure will depend on the nature of the organisation and on the Board’s view of the relative importance of the risk. Appropriate governance could certainly be achieved through the existing committee structure; it is not necessary to introduce new committees specifically for the governance of model risk. For example, it may be more practical for a company to govern insurance pricing models in the same forum as where insurance pricing is governed, rather than to separate out the governance of models. However, it is important that there is clarity of oversight of model risk as there can be elements of cross-over between financial and operational risks (and the expertise for their management) that stem from model risk.

Once we have identified the committee responsible for oversight of model risk, we need to ensure that there is appropriate representation on the committee, and an appropriate governance process around model risk management that embraces all types of model risk cultures, in order to achieve optimal model risk management results. This is discussed further in section 3.

In order to fulfil its responsibilities around model risk management, the terms of reference of the governance committee could include the following

-

∙ Exercise governance and oversight over the development, operation, and validation of all material models used by the company, to ensure that they are fit for purpose, adequately utilised in the business, and comply with regulatory requirements.

-

∙ Monitor the model inventory for completeness and adequacy.

-

∙ Review and approve material model changes and developments.

-

∙ Review all model validation reports, approve the associated action plans to address findings, and monitor progress against agreed action plans.

-

∙ Manage model risk within the Board’s model risk appetite.

-

∙ Monitor emerging model risks within the company.

-

∙ Monitor compliance of models with the Modelling Standards, escalate breaches as appropriate, and consider the appropriateness of proposed rectification plans.

Internal audit. Internal audit is responsible for evaluating the adequacy and effectiveness of the overall system of governance around model development, operation, and validation.

2.3.2. Modelling Standards

In order to embed the Model Risk Management Framework into the running of the business, as described above the Risk Function (or equivalent) should set minimum standards that model owners and validators are expected to adhere to. This is a familiar concept for many insurers in respect of Solvency II internal models. Companies could therefore develop similar but higher level standards that would be applicable to all business material models (i.e. those models that exceed the materiality thresholds as defined in section 2.6). These standards would contain only those specific requirements that senior management expect around the modelling process in order for the Board to gain comfort over the fitness for purpose of these models. Furthermore, these Standards could be applied in a graduated or differential manner according to the materiality rating of the model. For example, models could be categorised as immaterial, low materiality, medium materiality, or high materiality through the materiality filtering process; and the Standards could then explicitly separate out the “base” requirements applicable to all material models, the additional requirements applicable to all models rated medium or higher, and the further requirements only applicable to high materiality models.

In general, the Modelling Standards should typically include requirements in the areas set out in Figure 2.

Figure 2 Modelling Standards

2.4. Model Risk Appetite

The explicit consideration of an appetite for model risk is a relatively new concept, but for effective management of any risk, the Board’s appetite for that risk needs to be defined and articulated into a risk-appetite statement. Expression of the Board’s appetite for model risk is the second vital step in model risk management, following the establishment of Policy.

Risk appetite is defined as the amount and type of risk that an organisation is willing to take in order to meet their strategic objectives. Each organisation has a different view on, or appetite for, different risks, including model risk, which will depend on their sector, culture, and objectives. Specifically in the case of model risk, the Board has to establish the extent of its willingness, or otherwise, to accept results from complex models.

Furthermore, the Board’s appetite for model risk is likely to vary depending on the purpose for which a model is being used. This should be considered when determining the materiality criteria used to assess which models are most significant for the organisation (an issue addressed below in section 2.6). By definition, the Model Risk Management Framework should be applied to those models which are most business-critical for the purposes of decision making, financial reporting, etc. Therefore, the appetite for errors in these, and hence model risk, will be the lowest.

As with any risk, the risk appetite for model risk should be articulated in the form of appetite statements or risk tolerances, translated into specific metrics with associated limits for the extent of model risk the Board is prepared to take. For example, some of the metrics that could be considered in a model risk-appetite statement are as follows:

-

∙ aggregate quantitative model risk exposure (see section 2.7);

-

∙ extent to which all models used have been identified and risk assessed;

-

∙ extent to which models are compliant with Standards applicable to their materiality rating;

-

∙ extent to which uncertainty around model outputs is transparently presented to model users;

-

∙ number of high-risk-rated models;

-

∙ cumulative amounts or numbers of model errors;

-

∙ number of models rated not fit for purpose through independent validation;

-

∙ scale or number of noted model weaknesses;

-

∙ the scale or number of model developments needed to address errors or weaknesses;

-

∙ the duration of outstanding or overdue remediation actions;

-

∙ the number of overdue validations; and

-

∙ the number or scales of model-related internal audit issues.

As mentioned in section 2.3.1, it is important to consider proportionality and apply pragmatism in the Board’s role on setting model risk appetite. For example, a short set of questions focussing on the aspects above of most relevance to the organisation and the most material model applications, can both engage the Board or relevant sub-committee and make the process simple and efficient.

The company’s position against the model risk appetite (i.e. the company’s “model risk profile”) should be monitored by the body responsible for model risk governance on a regular (e.g. quarterly) basis, and should allow management to identify whether the company is within or outside appetite.

2.5. Model Risk Identification

With the Model Risk Policy framed and the Board’s appetite for model risk established, the next step is to identify the model risks that a company is exposed to. In order to do this, it is necessary to identify all existing models, and key model changes, or new developments.

In terms of existing models, a model inventory or log should be created, in which each team or Function is required to list the models it uses. This does need to include obsolete models that are no longer used. All models across the organisation fitting the definition in section 1.2 should be considered, for example, spreadsheets used to calculate policy values, and not just those used to produce financial projections and statutory results.

In an insurance company, for example, the model inventory may include the following types of models:

-

∙ reserving;

-

∙ regulatory capital;

-

∙ EEV/MCEV;

-

∙ product pricing;

-

∙ economic capital;

-

∙ ALM;

-

∙ transaction support;

-

∙ forecasting;

-

∙ benefit illustration;

-

∙ claims values;

-

∙ experience analysis; and

-

∙ reinsurance.

It is helpful to categorise the different types of models into groupings – either by purpose/application (as above) or by process (e.g. asset valuation, liability cashflow, aggregation, etc.). This allows the organisation to build up a picture of where the model usage is and which areas run the most model risk. It may be difficult to find a categorisation that fits all models well, but it is important to agree on an approach which is practical for managing the organisation’s models. For example, where model inventories are used to identify when models are next due for validation, categorisation by purpose may be more helpful. Similarly, spreadsheets often hand-off from one to another (typical “chains” can easily comprise 50 separate spreadsheets, each run by different people and stored in different locations, etc.) so it can be helpful to group these together as a single “model” by considering the end purpose/application of these spreadsheets.

The model inventory should capture key features for each model, including but not limited to:

-

∙ the model owner;

-

∙ what the model is called;

-

∙ the name of the model platform or system;

-

∙ a brief description of what the model is used for;

-

∙ an overview of how the model works;

-

∙ the frequency of its use;

-

∙ the key assumptions or inputs;

-

∙ where on the network the model is stored;

-

∙ etc.

The inventory could also take account of any model hierarchy and dependencies, as it is common for some models to be inter-related. In its simplest form, this might just be where a group model takes the results from the models of its subsidiaries. Alternatively, it may be more complex, for example, where the results of cashflow or balance sheet models are used in further models that stress the results under a range of scenarios. This could be incorporated in a number of ways, depending on the organisation and range of models. One way to accommodate this would be to allocate each model as Level 1, 2, 3, etc. within a hierarchy and use unique reference numbers for the models to demonstrate which depend on which. For particularly complex model interdependencies, a diagram may help illustrate the links.

The information captured in the model inventory will be crucial in making the materiality assessment for each model (see section 2.6) to determine the risk rating of each model and hence the extent to which the Model Risk Management Framework needs to be applied.

Once created it is imperative that the log is maintained, because the business will evolve and new models will regularly be developed. In addition, previously non-material models can become material and vice versa.

In the same way, an organisation should create and maintain a model development log or inventory – that is, a log of the planned and in progress material model changes and developments, in order to identify risks from model changes or new model developments. The model development log should contain similar information to the model inventory, for example:

-

∙ the model purpose/application

-

∙ a brief description of the model development;

-

∙ scope of the model development;

-

∙ rationale for model development;

-

∙ the name of the model platform or system for the model development;

-

∙ current status of the model development;

-

∙ model development impact (quantitative);

-

∙ model development impact (qualitative);

-

∙ model development validation rating;

-

∙ key validation issues identified;

-

∙ key actions outstanding to complete and approve the development;

-

∙ etc.

2.6. Materiality Filtering

The model inventory and development log will likely identify a large number of models and model developments in an organisation. A materiality filter should therefore be applied (in line with the firm’s model risk appetite) to identify the models and model developments which are material (i.e. present a material risk) to the organisation as a whole, and which thus need to be more robustly managed. Materiality criteria should therefore be defined to determine which models in the model inventory and which model developments in the model development log are viewed as material, with these models then being managed in line with the Model Risk Management Framework. Furthermore, the framework and the materiality criteria could be enhanced to include graduations of thresholds – to differentiate between immaterial, low materiality, medium materiality, and high materiality models, with each category of models being subject to appropriate standards under the Framework. The remainder of this section only considers the simple case of models either being subject to the Framework requirements or not.

As discussed in section 2.4, the key determinant of which models are viewed as being subject to the Model Risk Management Framework is the organisation’s appetite for model risk. The less appetite the Board has for model risk, the more models and more model developments it should wish to see captured under the Model Risk Management Framework – hence the materiality criteria should be “tighter”/more stringent. In reality, there will be a practical limit to the number of models and developments that can be caught under the framework, depending on the resources the company is willing to invest in managing model risk (in the same way as for other risks). So there must be a balance in setting the materiality criteria to ensure the Model Risk Management Framework should apply to those models deemed most business-critical.

Such business-critical models will often be used in the quantification of financial measures. Therefore model risk materiality may be defined in terms of:

-

∙ profit;

-

∙ reserves;

-

∙ capital;

-

∙ asset valuation;

-

∙ price;

-

∙ sales;

-

∙ embedded value;

-

∙ cashflow;

-

∙ etc.

We may also want to consider the complexity of the models when determining model risk materiality. For example, a simple deterministic model with a handful of inputs and requiring just one or two assumptions, will likely be much less prone to model error than a complex stochastic asset liability projection model with a vast number of different inputs and assumptions that considers the interactions of multiple risk factors and lines of business.

Assessment of materiality based on such quantitative measures must be underpinned by a qualitative assessment, made by the relevant model owner. This qualitative assessment should encompass criteria such as:

-

∙ What is the extent of reliance on the results of the model for decision making?

-

∙ How important are the decisions being made (e.g. do they impact on the organisation’s strategic objectives)?

-

∙ How sensitive are the results to changes in parameters or assumptions?

-

∙ What is the potential for adverse customer impact due to the model results?

-

∙ etc.

To make the qualitative aspects useable in the materiality assessment, a standardised approach will be necessary to reach an overall conclusion on the qualitative aspects of materiality. This might be achieved through comparison of the model, for each of the criteria considered, against a set of descriptions, corresponding to points on a scale of materiality. The overall assessment might be then the most material outcome on any criteria considered or some (weighted) average of the results for each of the criteria.

Together, the information and data captured in the model inventory and model development log must be sufficient to enable the quantitative and qualitative materiality assessment to be made.

To illustrate the suggested approach, we have considered the example of the West Coast Main Line bid (section 2.1.2). Here, the quantitative assessment would naturally have been at the highest rating given the purpose of the model was to secure the promised multi-billion franchise payments (through quantifying the funding arrangements of the bidding entity).

From a qualitative perspective, section 2.1.2 describes heavy reliance on the model, with the output being highly relevant to the assessment of the bids (so of “strategic” importance, given the Department for Transport’s objective to re-franchise the railway). The decision also had a national impact on “customers”, given that it would affect the 26 million annual journeys made by the travelling public (as referenced in the Department for Transport’s Stakeholder Briefing Document, 2011). These factors would each have indicated a high qualitative rating.

Whether the model, as used, would have been sensitive to changes in assumptions is unclear, given the resulting problems that emerged. However, given the combination of high quantitative and qualitative ratings, a future model of this nature would be subject to the proposed Model Risk Management Framework, which would require such testing.

2.7. Model Risk Assessment

Once we have identified the models and model developments which are material, the next step is to assess the extent of model risk for each material model or model development. This should involve carrying out both a quantitative assessment, and a qualitative assessment, and considering both gross and net of controls. We can then aggregate this to derive an overall company-level model risk assessment. The process for carrying out this assessment is described in detail below.

2.7.1. Quantitative assessment for an individual model or model development

In order to quantify the model risk inherent in a model or model development, the following approach can be taken (analogous to the way any other risks would be measured):

-

1. Where there is a reasonable analytical measurement approach of estimating the financial impact of the model risk, this should be used (see section 4).

-

2. Where an analytical model risk measurement approach is not available, an “operational risk style” scenario-based approach should be taken, considering any relevant available data, to quantify the financial impact of the model risk.

To the extent possible, these assessments should be carried out using both gross and net of controls (i.e. before and after allowing for the application of the Model Risk Policy and Standards per section 2.3 in the model/model development), albeit acknowledging any allowance can only be crude/high level. For example, if we are using the scenario-based approach, then the scenario causing a loss may differ, or the frequency or severity assessments of the scenario may differ, depending on if we are applying the Policy and Standards or not.

If we consider the different ways in which model risk is generated: human blunders/errors; inappropriate use; and model uncertainty; then it is likely to be virtually impossible to derive a meaningful quantitative assessment of human blunders, it will be very challenging but may be possible at a high level to quantify the impact of inappropriate use of models, and it should be possible to derive a meaningful quantification of model uncertainty. For example, where it cannot be evidenced that software or spreadsheets have been through recognised testing protocols, then the models and output will generally be expected to be less accurate. Therefore, the quantitative assessments of model risk for individual models or model developments are most likely to relate to model uncertainty – this is covered in more detail in section 4.

2.7.2. Qualitative assessment for an individual model or model development

A qualitative assessment of the model risk should also be carried out.

On a “gross of controls” basis, the high/medium/low materiality assessment from section 2.6 could translate directly to a high/medium/low-risk assessment.

On a “net of controls” basis, we would consider the fitness for purpose of the model or model development, and depending on this we could reduce the risk rating accordingly from the “gross of controls” assessment. So, for example, if a model was assessed as high materiality but had been recently robustly validated as fit for purpose with no material open issues, the net model risk assessment may be low risk.

The fitness for purpose of a model or model development is determined by considering the effectiveness of the model or model development against each area of the Model Risk Policy and Standards (i.e. what is the quality of the data, has the model been developed subject to proper change controls, has the model been properly documented, are the key assumptions and limitations properly understood, is it being used for appropriate applications, etc.). This could be informed by the latest model validation report in respect of the model or model development; or alternatively the model risk governance body could commission the model validator to carry out the assessment, or equivalently commission the model developer to carry out the assessment and the model validator to peer review and challenge the assessment. Examples of possible diagrammatic and dashboard presentations of qualitative net of controls model risk assessments are set out in Figures 3 and 4 in section 2.8.3.

Figure 3 Diagrammatic presentation of qualitative model risk assessment

Figure 4 Dashboard presentation of qualitative model risk assessment

2.7.3. Enterprise-wide model risk assessment

In order to assess the overall model risk profile of the company, we make an assessment based on the risk assessments of the individual material models used in the organisation. Again, there will be a quantitative and a qualitative assessment.

The quantitative assessment will aggregate the individual quantitative model risk assessments, using appropriate correlation factors. Given the likely crudeness of the individual assessments, a broad-brush low positive correlation may be reasonable.

The qualitative assessment will consider the qualitative metrics in the model risk appetite – for example, the extent to which all models used have been identified and risk assessed, the extent to which models are compliant with Standards applicable to their materiality rating, the number of high-risk-rated models, cumulative amounts of model errors, etc.

2.8. Model Risk Monitoring and Reporting

Given that the Board has ultimate responsibility for managing the model risk of an organisation, it is important that the model risk MI presented to the Board and its delegated committee structure enables effective oversight of model risk.

2.8.1. Style

The MI should be set out in terms which are meaningful to the Board and relevant in the context of the Board’s objectives. Furthermore, the MI should be tailored to the cultures of the stakeholders on the Board and relevant sub-committees (this is elaborated upon in section 3).

2.8.2. Content

The model risk MI would be expected to cover the following as a minimum:

-

∙ The organisation’s overall model risk profile compared with its agreed appetite (as per section 2.4).

-

∙ Any recommended management actions to be taken where necessary to manage the company’s model risk within appetite (as per section 2.9).

-

∙ Monitoring of the material models in the model inventory in scope of the Model Risk Management Framework (as per sections 2.5 and 2.6), including materiality rating (low/medium/high), and quantitative and qualitative model risk assessments (as per section 2.7).

-

∙ Key model developments in progress or recently completed (as per section 2.5).

-

∙ Outcomes of model validations, highlighting any issues or areas of weakness.

-

∙ Actions being taken by management to address any model validation issues, weaknesses, or breaches of Modelling Standards, and progress against these actions.

-

∙ Any emerging trends or risks with model risk, whether within the organisation or from information/reports from other companies.

The MI should focus on the company’s material models, and the extent of reporting should be proportionate to the materiality of the model(s) and their use(s). The level of trust that the Board has in complex models, and the level of complexity of the organisation’s model inventory, will be key in determining the detail of reporting.

The majority of the content areas are self-explanatory or covered in the relevant subsections in this section; one aspect that merits further detail is around material model monitoring.

2.8.3. Material model monitoring

In defining model risk in section 1.3, two main generators of risk were identified – fundamental errors and incorrect or inappropriate use. The potential for errors is a more “familiar” risk for companies, and operational risk reporting MI could be the starting point for developing MI around this aspect. For example, typical operational risk MI covers the frequency and severity assessments of top operational risk events, the effectiveness of controls, and metrics such as observed losses, which would be relevant to model risk.

The risk arising from incorrect or inappropriate use of a model is however a less-familiar concept, so reporting on this risk may require a new approach to be developed. Different decision makers will take account of different pieces of information in coming to their decisions, and the emphasis on the model may vary. However, there is a danger that a decision may be made entirely based on a model’s results (our “Confident Model Users” perspective in section 3), without appreciation of the potential range of outcomes, or that information from a model is ignored in coming to a decision (which might be the style of the “Intuitive Decision Maker” or the “Uncertainty Avoider” in section 3). Therefore, the MI on model use risk should focus on the effectiveness of the model owners in communicating the extent of risk inherent in a model to the model users, and on the effectiveness of the model users in understanding, processing, and feeding this information into their decisions. For example, in order for a model owner to effectively communicate the extent of risk inherent in a model to the model users, good practice model output documentation (as per the Modelling Standards) should:

-

∙ set out the purpose(s) of the model;

-

∙ be appropriate to the expertise of the user audience;

-

∙ provide a reasonable range or confidence interval around the model result (e.g. by using sensitivities to key expert judgements);

-

∙ convey the uncertainty inherent in the qualitative aspects of the modelling process as well;

-

∙ summarise the key expert judgements and limitations underlying the model;

-

∙ describe the extent of challenge and scrutiny that the model has been through and why this is sufficient for the relevant use;

-

∙ explain how the model or model component fits into the overall modelling process (e.g. using a flowchart showing the data in-flows and out-flows); and

-

∙ use diagrams where appropriate to support the explanation of more technical/complex modelling concepts (e.g. section 4.3 sets out an example where there are two entirely differently shaped curves which produce the same point estimate mortality assumption – if the size and timing of cashflows is related to the shape of the curve, the choice of curve may have a significant financial impact).

The volume of information required to communicate model risk can be extensive, so a high level summarised approach may assist in conveying the key messages and areas of risk to model users.

For example, Figures 3 and 4 are simple illustrations of a diagram and a dashboard using an RAG (red/amber/green) rating to reflect the extent of model risk, considering impact and probability:

In order to monitor model use risk, we may therefore monitor the effectiveness with which the output documentation (or other format of communication) of material models covers each of the above good practice areas; and from Board or committee minutes we could monitor how this information has been taken into account in informing the relevant decisions.

2.9. Model Risk Mitigation

As mentioned in section 2.4, the model risk appetite should be monitored by the body responsible for model risk governance on a regular basis, and should allow management to identify whether the company is within or outside its risk appetite. If the organisation is outside its appetite then the model risk governance body should recommend relevant actions to bring the company back into its appetite within a reasonable timeframe. Possible model risk mitigation actions that the model risk governance body may recommend in order to bring the company’s risk profile back into appetite may include, for example:

-

∙ Model developments or changes should be carried out to remediate known material issues (these may have been identified by the model user, model developer, model validator, or otherwise).

-

∙ Additional validation of the model is necessary when a new model risk has emerged or an existing model risk has changed (e.g. if new information comes to light on longevity risk or if there is greater concern over pandemic risk due to a new emerging virus).

-

∙ An overlay of expert judgement should be applied to the model output to address the uncertainty inherent in the model. For example, if there is significant uncertainty in one of the underlying assumptions and hence there is a range of plausible results, then an expert judgement may be applied to identify a more appropriate result within the range of reasonable outcomes. Alternatively, the expert judgement may be an entirely independent and objective scenario assessment to complement the modelled result, or replace the use of a model altogether.

-

∙ The Modelling Standards, or the application of the Modelling Standards, should be enhanced (e.g. specific requirements in the Standards that applied only to high materiality models could be extended to apply also to medium materiality models).

-

∙ If appropriate, additional prudence may be applied in model assumptions, or explicit additional capital may be held, to reflect the risk inherent in the model. For example, additional capital may be held in line with the quantitative model risk assessment.

2.10. Case Studies

Having set out the suggested framework for managing model risk, we now consider the real-life case studies from section 2.1 and identify the areas of the Model Risk Management Framework, which were deficient or not fully present, and consider the specific improvements that could have been made, in order to bring the Framework to life.

2.10.1. LTCM hedge fund

Model Risk Management Framework assessment. The diagram considers where the risks arose for LTCM.

Model use. There was an over-reliance on the model and the assumption that it would always work the same way, even when LTCM moved away from their original investment strategy. The model was tailored to arbitrage, but not merger arbitrage. This resulted in LTCM taking on more risk than they could manage.

Model methodology and expert judgement. There was no transparency externally of either the investment strategy or the underlying model. Investors were only told that the strategy involved bond arbitrage. At the time, hedge funds were not subject to the same level of regulation as other investments, so that there was effectively no regulatory oversight of LTCM or their complicated models.

Model validation and external triggers. There was inadequate review of the model and its objectives when the trading strategy was changed (to include merger arbitrage). Insufficient consideration was given to the impact of low probability events such as systemic risk. This reinforced the need for and benefits of stress testing models for extreme events (in respect of both the underlying strategy, and in respect of the extension of modelling from fixed income arbitrage to merger arbitrage).

2.10.2. West Coast rail franchise

Model Risk Management Framework assessment. The diagram considers where the risks arose for the West Coast Main Line bid.

Data quality and Expert judgement. Mistakes were made in the inflation assumptions used, which were understated by 50%. These should have been checked for reasonableness, particularly as the Virgin bid would have been based on data that was complete and appropriate. This resulted in clear bias, as First Group assumed more optimistic values for inflation in the later phases of the franchise period.

Model validation. Clearly, the extent of the validation performed was inadequate as it did not identify the technical flaws in modelling or inconsistent assumptions found by the subsequent review. Fundamentally, the First Group model’s results did not sound reasonable. Richard Branson famously said that “he would run the service for free if their assumptions were correct”. Another validation flaw was that the Department for Transport’s models were not shared with bidders. Had all bidders used the same models (rather than each using their own different models) then this would have permitted the Department for Transport to compare the rival bids and identify any inconsistencies.

Model governance. There was a lack of understanding around the model by the stakeholders and overall insufficient transparency, governance, and oversight of the project. Roles and responsibilities for functions, committees, and boards within the Department for Transport were not clear. There were early warning signs that things were going wrong when external advisors spotted mistakes, but these were not communicated or formally escalated and incorrect reports were circulated to decision makers. Ultimately, there was lack of accountability, which resulted in the taxpayer paying the burden.

2.10.3. JPM

Model Risk Management Framework assessment. In this case, poor model risk management is not the only issue but also broader failings in risk management generally.

Model use. The CIO was supposed to be investing deposits in a low-risk manner, but their activities meant the WHOLE bank exceeded VaR limits for 4 consecutive days. Management claimed not to understand the risk model, but a number of CIO staff were on the executive committee.

Model validation and changes. When existing controls highlighted the level of risk, changes to the controls were implemented but errors meant that these understated the risk by half. The controls were adjusted to suit the (in)validity of the model, not the other way around. The broader risk management issues meant that the further increase in risk-taking was not queried. The subsequent investigations found that the spreadsheet used to calculate the revised VaR metrics was created very quickly, although JPM claimed that it had been in development for a year. An internal report by JPM, however, did identify inadequate approval and implementation of the VaR model.

Model governance and expert judgements. The internal JPM report stated that CIO judgement, execution, and escalation in Q1 2012 were poor and that CIO oversight and controls did not evolve in line with the increased risk and complexity of their activities. The subsequent Senate report said that the CIO risk dashboard was “flashing red and sounding alarms”. The regulator also came in for criticism for its oversight; this inappropriate activity by JPM was first noticed in 2008, but was not followed up.

2.11. Conclusion

The Model Risk Management Framework presents a relatively simple approach for managing model risk. Clearly, it requires a not insubstantial investment of time, particularly from the Risk Function and from model owners, to implement, however, much of what is involved is common sense and good business practice. In particular, in the modern day environment the Board and Senior Management expect that results reported to them have been properly checked and that the models used to generate them are fully understood.

We have seen from the various case studies that model risk can cause very large losses or errors, and that a well-implemented Model Risk Management Framework could potentially have averted these. In the past 10–15 years, companies have generally implemented stronger controls around regulatory and embedded value reporting models. The Model Risk Management Framework builds on this to create an enterprise-wide framework that fits all material models that an organisation uses, embraces thought leadership and best practice from recent market and regulatory developments, and applies sound risk management principles that are already embedded for more established risk types.

In summary, a Model Risk Management Framework should cover:

-

∙ The Board’s appetite for model risk and the articulation of this into a clear statement.

-

∙ The identification of model risks that the organisation is exposed to and which of these are sufficiently material to warrant more comprehensive management.

-

∙ The quantitative and qualitative assessment of these model risks.

-

∙ The monitoring of these risk assessments by the relevant governance body, which should take action, where necessary, to bring the model risk back into line with the Board’s appetite.

Of course any risk management framework can only ever be as good as the people who use it. This will depend on how well-embedded risk culture is in the organisation more generally.

3. Governance and Model Culture

3.1. Introduction

In section 2, we described a range of techniques that have been developed in recent years to manage more effectively the portfolio of models and their associated risks within an organisation. These techniques relate to the consideration of model risk as a risk to be managed as any other; the identification and ranking of models (through an inventory and model risk assessment); and the reduction of model risk through validation and change control. Furthermore, restrictions are imposed on the use of models (so that decisions are only supported by models when the models are “fit for purpose”), as well as requirements introduced for a “use test” (so that models are adapted in response to feedback from the users).

These are common features of Model Risk Management Frameworks which have been developed as a response to some of the shortcomings of models noted through the case studies described in section 2.10, including the specific issues identified during the financial crisis, such as a lack of understanding of the assumptions on which models were based and the appropriateness of such assumptions in a range of market conditions. In this, we recognise that this report is building on the shoulders of existing thinking on Model Risk Management, such as the guidance issued by the Federal Reserve (2011) and by HM Treasury (2013).

Whilst market practice has significantly advanced in recent years, as in, for example, the level of control and governance activities in respect of specific models (such as internal models under Solvency II), in many cases more still needs to be done to embed these techniques across all models used in an organisation.

Notwithstanding the scope for further embedding of Model Risk Management Frameworks, in this section we consider how to further build on such frameworks. Model risk is a consequence of the way that a model is used in decision making. Presuming that decisions are generally driven by model outputs according to uncontested principles, makes both management and quantification of model risk possible, as discussed in sections 2 and 4, respectively. Such a presumption is necessary in order to frame model risk in a tractable way.

However, we know that often decisions are not made purely based on the output of models and that different stakeholders within an organisation will tend to disagree about the ways in which models can and should be used in decision making. In this section we work towards a classification of alternative ways of (not) using models in decision making and discuss how the recognition of such distinct perspectives on modelling can enhance the practice of model risk management. In particular, building on the arguments of section 2.3 it is seen that a wide representation of stakeholders, beyond model users and developers, is necessary in model governance.

3.2. Classifying Perceptions of Appropriate Model Use

Our proposed categorisation of different attitudes to models and modelling is given in Figure 5. Each of the four quadrants depicted represents a particular attitude towards models and their uses. These are not intended as psychological profiles; rather they are generic perceptions that can be held by different stakeholders at different times, depending on their position within an organisation and the specific processes they are involved in.

Figure 5 Alternative perceptions of modelling and its uses

In this representation, the horizontal axis reflects the perceived legitimacy of modelling: in the right half-plane, it is believed that models should be used in decision making, such that the emphasis is on measurement, computation, and mathematical abstraction. In the left half-plane, models are afforded an insubstantial role in decision making – here the emphasis is on intuition and subjective belief.

The vertical axis reflects concern with uncertainty. Stakeholders in the top half-plane are confident in their processes leading to good decisions and are generally not concerned about model uncertainty. Agents in the bottom half-plane are less sure of themselves; in particular, model uncertainty is a major concern for them.

Using (or indeed not using) models in a way consistent with the perceptions of each quadrant generates different sorts of risks, as is discussed below.

3.2.1. Confident Model Users

At top-right, Confident Model Users are keen to use their models in order to take decisions that are optimal. For them, decision making is a process that can and should be driven by modelling. A sophisticated complex model that gives detailed MI and can be used extensively across the enterprise is considered desirable.

The possibility that the model may be substantially flawed is not seriously considered by such agents. Slipping into Rumsfeld-speak, their concern is with Known Knowns: the aspects of the risk environment that can be readily identified and quantified.

Such agents are likely to ignore for too long evidence discordant with their models. They will also be less concerned about whether the model being used is appropriate to the particular purpose to which it is currently being put. In this quadrant, the main risk consists of model inaccuracies driving wrong decisions. This is indeed one of the key kinds of model risk that the Model Risk Management Frameworks described so far seek to address.

3.2.2. Conscientious Modellers