Richer and more realistic assumptions do not suffice to make a theory successful. Scientists use theories as a bag of working tools, and they will not take the burden of a heavier bag unless the new tools are very useful. Prospect Theory was accepted by many scholars not because it is ‘true’ but because the concepts that it added to utility theory … were worth the trouble; they yielded new predictions that turned out to be true. We were lucky.

Daniel Kahneman (2011, p. 288)

I first met Danny Kahneman in 1971 when he and Amos Tversky came to Eugene, Oregon to spend their sabbatical at the Oregon Research Institute where I worked. They had just finished their first collaborative study, ‘Belief in the Law of Small Numbers’, and in the year they spent in Oregon, they completed several more pathbreaking studies on the availability and representativeness heuristics and set a solid foundation for what became famous as the heuristics and biases approach to the study of judgment under uncertainty. Their experiments were quite simple, often set in the context of story problems, and the findings they produced were stunning, revealing ways of thinking that, in certain circumstances, led to serious errors in probabilistic judgments and risky decisions.

My colleagues Sarah Lichtenstein, Baruch Fischhoff (a student of Amos and Danny’s), and I were similarly engaged in conducting laboratory experiments on preferences among gambles, trying to understand the ways that people processed information about probabilities and consequences. This led to invitations to meet with engineers and physicists who were studying the risks posed to society by emerging technologies such as nuclear power, radiation, chemicals in pesticides, spacecraft, medicinal drugs, and so on. Developers, managers, and regulators of these emerging technologies looked to the young discipline of risk analysis for answers to foundational questions such as ‘How should risk be assessed?’ and ‘How safe is safe enough?’

Risk assessment became increasingly politicized and contentious as the public, fearful of catastrophic accidents in the nuclear power and chemical domains, began to object to the building of new facilities in their backyards. Industrialists and their risk assessors grew frustrated and angry with activists who contested the safety of what were thought to be miracle technologies. Critics of these technologies were derided as being ignorant and irrational, relying on subjective risk perceptions rather than on rational analysis of the objective, that is, real, risks. On numerous occasions, I found myself invited as the lone social scientist in a large meeting of engineers and risk analysts who demanded to know why my colleagues and I were not educating the public about the safety and acceptability of their miracle technologies based on what they considered to be the real risks.

The heuristics and biases discovered by Danny and Amos, along with powerful framing and response-mode effects, describe processes of human thinking in the face of risk that likely are common not only among laypersons but also among experts judging risk intuitively and even analytically (Slovic et al., 1981). These findings shattered the illusion of objectivity, showing risk to be extraordinarily complex and the assessment of it to depend on thoroughly subjective judgments. This proved to me that our young field of research on judgment and decision-making had something important to say about decisions involving risk.

As I wrote to Danny shortly before he died:

Thank you for more than a half-century of friendship and inspiration. You are in my thoughts daily as I try to do what I can to bring some sanity into this dangerous and often bizarre world. You have profoundly changed my life and my life’s work for the better and I know there are countless others who would say the same.

I was a painfully shy and introverted young man when I began my career, so underconfident and anxious when I gave my first conference talk that I had to take valium to calm my nerves. A decade or so later, I confidently faced hundreds of physicists, engineers, and nuclear power officials, exhorting them to attend carefully to your work with Amos on heuristics and biases when assessing the safety of nuclear power reactors. My confidence was buoyed by the power of your ideas that so powerfully demonstrated the potential for research on judgment under uncertainty to make the world safer and more rational.

Among the many things you taught me and motivated me to study was the need to think carefully about the vast discrepancy between how we ought to value lives and how we do value them when making decisions. What can be more important than this to spend one’s life studying and trying to communicate to the world?

In the remainder of this brief paper, I will trace the research path that I and many fine colleagues took over several decades to explore the powerful implications of prospect theory for valuing lives and creating a saner and more humane world.

Prospect theory

Prospect theory (Kahneman and Tversky, 1979) is arguably the most important descriptive theoretical framework ever developed in the field of decision-making. It is the foundation for the 2002 Nobel Prize in Economic Sciences awarded to Danny and, according to Google Scholar, has been cited more than 80,000 times in journal articles in business, finance, economics, law, management, medicine, psychology, political science, and many other disciplines.

The heart of the theory is the value function (Figure 1), proposing that the carriers of value are positive or negative changes from a reference point. Kahneman (2011) observed that ‘if prospect theory had a flag, this image would be drawn on it’ (p. 282). The function is nonlinear, reflecting diminishing sensitivity to changes in magnitude. In the positive domain, for example, protecting or saving lives, the function rises steeply from the reference point or origin, typically zero lives. The importance of saving one life is great when it is the first or only life saved but diminishes marginally as the total number of lives saved increases. Thus, psychologically, the importance of saving one life is diminished against the background of a larger threat – we will likely not ‘feel’ much different, nor value the difference, between saving 87 lives and saving 88 if these prospects are presented to us separately. In fact, experiments studying donations to aid needy children have found that the curvature begins surprisingly early, even with rather small numbers. In a separate evaluation, the perceived importance of protecting two children in need may not feel twice as important as protecting one. And the function may level off quickly. You likely will not feel more concerned about protecting six children in need than about protecting five. My colleagues and I referred to this as psychophysical numbing, as it follows the same diminishing responsiveness as the eye when light energy increases and the ear when sound energy increases. In a dark room, you can see a faint light that will no longer be visible in a bright room. The whisper that you hear in a quiet room becomes inaudible in a noisy environment. We sense our ‘feelings of risk’ as much as we sense the brightness of light and the loudness of sounds. And the ability to hear faint sounds, see dim lights, and protect a single life is highly adaptive for survival.

Figure 1. Value function in prospect theory. Source: Kahneman and Tversky (1979).
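The diminishing sensitivity of the value function can be sketched numerically. The following is only an illustration under stated assumptions: it uses the power-function form and the parameter values (an exponent of 0.88 and a loss-aversion coefficient of 2.25) that Tversky and Kahneman estimated in their later 1992 work, not anything specified in the 1979 paper or in this essay, and the function name `value` is hypothetical.

```python
def value(x, alpha=0.88, lam=2.25):
    """Illustrative prospect-theory value function (assumed power form).

    x is a change from the reference point: gains are positive,
    losses are negative. alpha controls curvature (diminishing
    sensitivity); lam makes losses loom larger than gains.
    Both defaults are assumptions taken from later estimates.
    """
    if x >= 0:
        return x ** alpha
    return -lam * ((-x) ** alpha)


# Marginal value of the first life saved versus the 88th life saved.
first_life = value(1) - value(0)
eighty_eighth_life = value(88) - value(87)

print(f"marginal value of 1st life:  {first_life:.3f}")
print(f"marginal value of 88th life: {eighty_eighth_life:.3f}")
```

Under these assumed parameters, the 88th life adds roughly half as much value as the first. The donation experiments described above suggest that the curvature for lives can be considerably sharper than this particular parameterization implies.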

But sensitivity to very small amounts of light and sound energy, and powerful emotions that motivate caring greatly for one or a small number of lives, come at a cost. The delicate structures of the eye and ear and our emotional systems cannot scale up proportionately as the amounts of energy and the numbers of lives keep increasing. These systems asymptote to protect us from being overwhelmed by sights, sounds, and, yes, emotions. Robert Jay Lifton (1967) first documented the concept of psychic numbing after the atomic bombings in Japan, when aid workers had to become unfeeling to do their jobs without becoming overwhelmed by their emotions. Similar numbing was necessary to enable medical personnel to function in hospitals overwhelmed with severely ill COVID-19 patients.

However, diminishing marginal value for lives is not always beneficial.

My reaction to first seeing the value function was to run to Amos Tversky saying ‘This is terrible. It means that a life that is so important if it is the only life at risk, loses its value when others are also at risk. Is this how we want to behave? We need to study this more’.

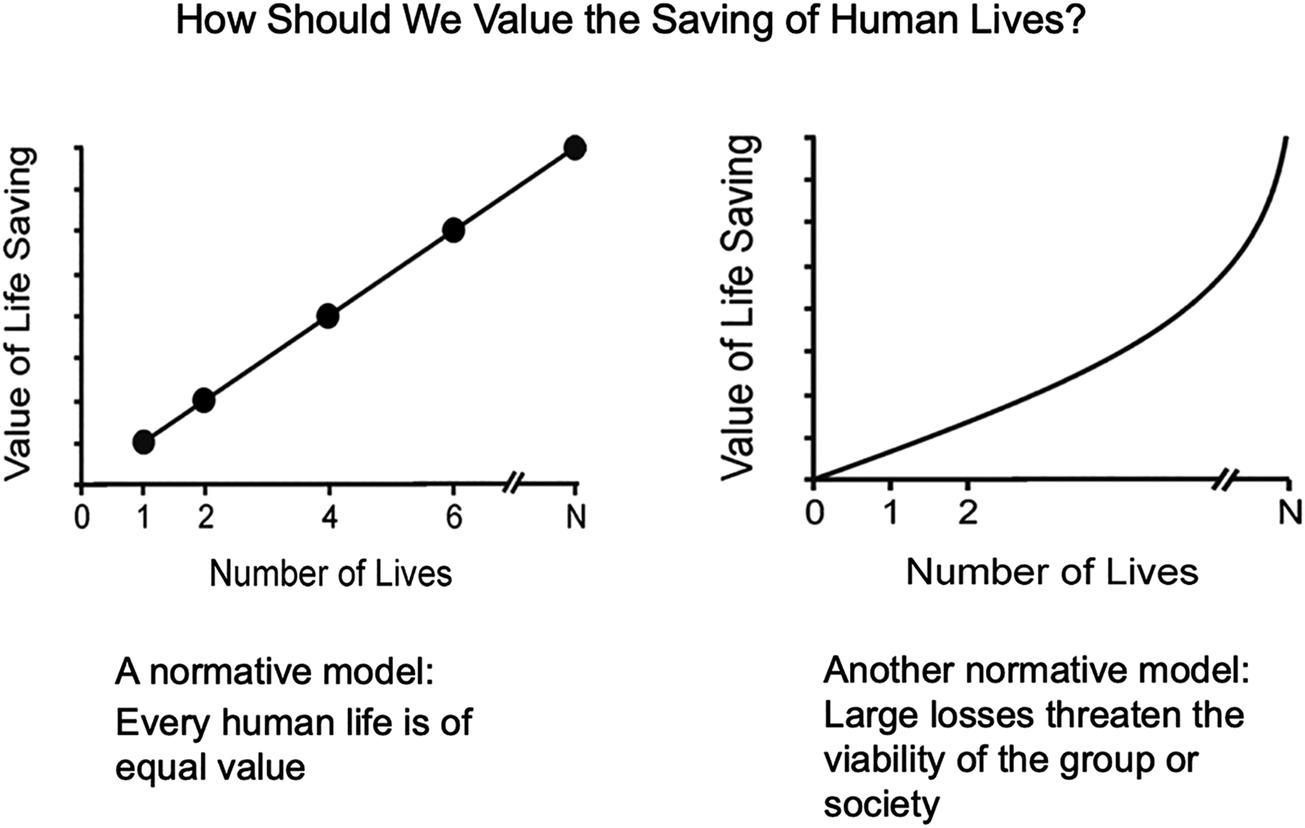

In fact, there are normative models for how we should value lives, based on what Danny and others would later call ‘slow thinking’, that are quite different from the value function – see Figures 2 and 3, for example.

Figure 2. Two normative models for the valuing of human lives. Source: Slovic (2007).

Figure 3. Two descriptive models for the valuing of human lives. Source: Slovic (2007).

The left side of Figure 2 depicts a model that assumes every life is intrinsically of equal value, so as the number of lives at stake increases, the overall value of protecting them just adds them up, as represented by a linear function. A tweak on that model occurs when you are getting to a tipping point where the next lives lost would lead to the extinction of a group or a species. Then those lives become even more valuable to protect than the ones that came before them and the value function curves upward in a nonlinear fashion, as shown on the right side of Figure 2.

But our actions in the face of threats to large numbers of lives do not seem to follow either of these normative models, and this, in part, is because our feelings override our analytic judgments. These feelings value individual lives greatly but tend to be insensitive to large losses of life. Research finds support for two descriptive models that tell us how we often do value the saving of human lives (Figure 3). On the left side of this figure is the value function of prospect theory. It starts strongly with high values for small numbers of lives, especially the first or only life at risk, which motivates great protective or rescue efforts. Kogut and Ritov (2005b) have named this the singularity effect. But additional lives at risk typically evoke smaller and smaller changes in response (psychic numbing).

Fetherstonhaugh et al. (1997) demonstrated psychic numbing in the context of evaluating people’s willingness to enact various lifesaving interventions. In a study involving a hypothetical grant-funding agency, respondents were asked to indicate the number of lives a medical research institute would have to save to merit the receipt of a $10 million grant. Nearly two-thirds of the respondents raised their minimum benefit requirements to warrant funding when there was a larger at-risk population, with a median value of 9,000 lives needing to be saved when 15,000 were at risk (implicitly valuing each life saved at $1,111), compared with a median of 100,000 lives needing to be saved out of 290,000 at risk (implicitly valuing each life saved at $100). Thus, respondents saw saving 9,000 lives in the smaller population as more valuable than saving more than ten times as many lives in the larger population. The same study also found that people were less willing to send aid that would save 4,500 lives in Rwandan refugee camps as the size of the camps’ at-risk populations increased.
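The implicit valuations quoted above follow from dividing the grant by the median number of lives respondents required. A minimal sketch of that arithmetic, using only the figures given in the text (the helper name `implied_value_per_life` is hypothetical, and the share-of-population figure is a derived quantity added here for comparison):

```python
GRANT_DOLLARS = 10_000_000  # grant size stated in the study description


def implied_value_per_life(grant_dollars, lives_required):
    """Dollars of grant money per life that respondents required to be saved."""
    return grant_dollars / lives_required


# (population at risk, median number of lives required to merit the grant)
conditions = [
    (15_000, 9_000),
    (290_000, 100_000),
]

for at_risk, lives_required in conditions:
    per_life = implied_value_per_life(GRANT_DOLLARS, lives_required)
    share = lives_required / at_risk
    print(f"{at_risk:>7,} at risk: ${per_life:,.0f} per life, "
          f"{share:.0%} of the at-risk population")

# How many times more lives were required in the larger population.
lives_ratio = conditions[1][1] / conditions[0][1]
print(f"lives required, larger vs. smaller population: {lives_ratio:.1f}x")
```

The same $10 million is thus spread over about eleven times as many lives in the larger population, which is what drives the implied value per life down from roughly $1,111 to $100.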

However, research has also found something that prospect theory missed. In some cases, as the number of lives at risk increases, it is not just that we become increasingly insensitive to any additional lives – we actually may care less about what is happening, something known as compassion fade or compassion collapse (Västfjäll et al., 2014).

Compassion’s collapse is dramatically illustrated by the genocide in Rwanda in 1994 where about 800,000 people were murdered in 100 days. The world knew about this, watched from afar while it happened, and did nothing to stop it. Over the years, there have been numerous episodes of genocide and mass atrocities where little was done to intervene and stop the bloodshed (Slovic, 2007; Power, 2002). This decline in response as the number of people at risk increases is also documented in controlled experiments. A study in Israel by Kogut and Ritov (2005a) illustrates the decline in the value of lives even with small increases in number. They showed a picture of eight children in need of $300,000 for cancer treatment, and people were asked to donate money to help them. In other separate conditions, they took pictures of individual children out of the group photo. Each child was said to need $300,000 for treatment, and donations were requested. They found that the donations were much higher for single children than for the group of eight. My colleagues and I have conducted other studies, showing that a decline in compassion may begin with as few as two lives (Västfjäll et al., 2014). We do not concentrate our attention as closely on two people at risk. When our attention gets divided, our feelings are lessened, and we may respond less to two people than to one individual. This decline may continue as the number of lives at risk increases.

The importance of understanding and combating the implications of the value function

With many fine colleagues, I have been studying the societal and civilizational implications of the value function for more than a quarter-century. It has been fascinating trying to discover the powerful implications of the innocent-looking S-shaped line shown in Figure 1.

Part of this research was done in controlled experiments, such as those described above with tasks such as charitable donations, as a means of examining how context and sociocultural factors influenced the valuation of varying numbers of lives. These studies consistently revealed psychic numbing and, later, compassion fade and collapse (Västfjäll et al., 2014, 2015; Dickert et al., 2015; Kogut et al., 2015). We confirmed that these effects were primarily driven by fast intuitive feelings, resulting from what Danny and others called System 1 thinking (Kahneman, 2011). This led us to assert that our feelings do not do arithmetic well. They do not add; rather, they average, and they do not multiply either, something we characterized as the (deadly) arithmetic of compassion (Slovic and Slovic, 2015). This is exemplified by the shocking statement that, in many important circumstances, ‘the more who die, the less we care’.

Subsequently, we linked numbing to inattention in decisions where multiple and sometimes conflicting attributes had to be weighed and integrated into a judgment or choice. Rather than a compensatory integration process being applied, with the proverbial weighing and balancing of competing objectives and values, all too often some limited subset of the information drew all or most of the attention, allowing the conflict to disappear and turning a difficult decision into an easy one. We recognized this as an instance of ‘the prominence effect’ (Tversky et al., 1988).

During an election season, for example, prominence is highlighted by single-issue voters, who say their vote will be decided by the candidate’s stance on one issue dear to them, such as closing the border to immigration, overlooking the many ways in which the candidate’s adverse qualities and harmful plans will damage them and society.

I invoked psychic numbing and prominence to explain why genocides and other mass atrocities have occurred frequently since, after the Holocaust, good people and their leaders vowed ‘never again’ to let such mass abuses of humanity occur. Despite heartfelt assertions that endangered people absolutely needed to be protected from genocidal abuse, leaders have done little to stop it, instead making decisions not to act based on various security considerations that were prominent (Slovic, 2015).

What I learned from this was that, when we are emotionally numb to tragedy, no matter how many lives are affected, our limited attentional capacity, like a spotlight with a very narrow beam, becomes captured by more imminent and tangible concerns, such as the physical, monetary, or political costs of trying to save lives that have little emotional meaning to us. Danny, having studied attention early in his career, had a name for our blindness to information not in the attentional spotlight (Kahneman, 2011): ‘What you see is all there is’ (p. 85).

After studying genocide, I began to focus my limited attention on another threat to humanity, nuclear war. I was contacted by Daniel Ellsberg, who had been ‘in the room’ with many nuclear weapons strategists during the 1960s and was writing the book The Doomsday Machine (Ellsberg, 2017), describing this experience. He had heard about psychic numbing and wondered whether it was relevant. It was.

As I discovered when I began studying numbing in warfare, history corroborated the laboratory findings.

Even before they had nuclear weapons, U.S. commanders in WWII did not refrain from using conventional firebombs to attack cities and civilians with destructiveness comparable to or even greater than that which occurred in Hiroshima and Nagasaki. General Curtis LeMay orchestrated a relentless firebombing campaign against 63 Japanese cities, killing hundreds of thousands of Japanese civilians (100,000 in Tokyo in one night). Only Kyoto, Hiroshima, and Nagasaki were spared. LeMay observed: ‘Killing Japanese didn’t bother me very much … It was getting the war over that bothered me. So I wasn’t worried particularly about how many people we killed’ (Rhodes, 1996, p. 21).

Ellsberg (2017) reported numerous examples of numbness to immense potential losses of life. For example, technological advances allowed the substitution of H-bombs for A-bombs in planning for a possible war against the Soviet bloc, thus raising the expected death toll from executing the U.S. nuclear war plan from about 15 million in 1955 to more than 200 million in 1961. ‘This change was introduced not because it was judged by anyone to be necessary, but because it was simply what the new, more efficient nuclear bombs – cheaper but vastly larger in yield than the old ones – could and would accomplish when launched against the same targets’ (p. 270).

Ellsberg notes that ‘the risk the presidents and Joint Chiefs were consciously accepting, … involved the possible ending of organized society – the very existence of cities – in the northern hemisphere, along with the deaths of nearly all its human inhabitants’ (p. 272). Later, he was informed that U.S. planners had been targeting, and were ready to kill, more than 600 million people in the Soviet Union and China.

Struggling to comprehend the meaning of 600 million deaths, Ellsberg thought: ‘That’s a hundred Holocausts’.

The next frontier

In the middle of the last century, Herbert Simon laid out an alternative to the assumption of rationality underlying economic theory. His principle of bounded rationality asserted that human behavior is guided by simplified models of the world that could only be understood in terms of psychological properties of perception, thinking, and learning which differ greatly from economic models of rationality (Simon, 1957). Amos Tversky and Danny Kahneman’s pioneering research, with help from many others inspired by them, deepened our understanding of what bounded rationality really means for judgments and decision-making in the face of risk. This sparked a revolution that dethroned Homo Economicus, the rational man, and led to a new discipline, behavioral economics, grounded in psychology as Simon foresaw.

But Homo Economicus is still alive in the management of the world’s nuclear weapons programs. Almost 80 years of nuclear peace have led to confidence that deterrence strategies work, based on the assumption that rational actors won’t act rashly in the face of mutually assured destruction. Nuclear weapons are instruments of peace, say military leaders and government officials (e.g., Mies, 2012), as they continually enlarge their arsenals and improve ways to deliver the bombs with hypersonic missiles and from platforms in space, confident that rational actors will never use them.

Research inspired by Tversky and Kahneman is beginning to show how well-known heuristics and biases, as well as noise (Kahneman et al., 2021), can increase the risk that deterrence will fail and nuclear weapons will again be used against a threatening adversary (Slovic et al., 2024). This sobering view demands strong new measures to minimize the devastation that the next nuclear war will create. Another behavioral revolution is needed, this time in the war room. On the flag of that revolution should be the value function of prospect theory.