I. INTRODUCTION

With the advent of virtual and augmented reality comes the birth of a new medium: live captured three-dimensional (3D) content that can be experienced from any point of view. Such content ranges from static scans of compact 3D objects to dynamic captures of non-rigid objects such as people, to captures of rooms including furniture, public spaces swarming with people, and whole cities in motion. For such content to be captured at one place and delivered to another for consumption by a virtual or augmented reality device (or by more conventional means), the content needs to be represented and compressed for transmission or storage. Applications include gaming, tele-immersive communication, free navigation of highly produced entertainment as well as live events, historical artifact and site preservation, acquisition for special effects, and so forth. This paper presents a novel means of representing and compressing the visual part of such content.

Until this point, two of the more promising approaches to representing both static and time-varying 3D scenes have been polygonal meshes and point clouds, along with their associated color information. However, both approaches have drawbacks. Polygonal meshes represent surfaces very well, but they are not robust to noise and other structures typically found in live captures, such as lines, points, and ragged boundaries that violate the assumptions of a smooth surface manifold. Point clouds, on the other hand, have a hard time modeling surfaces as compactly as meshes.

We propose a hybrid between polygonal meshes and point clouds: polygon clouds. Polygon clouds are sets of polygons, often called a polygon soup. The polygons in a polygon cloud are not required to represent a coherent surface. Like the points in a point cloud, the polygons in a polygon cloud can represent noisy, real-world geometry captures without any assumption of a smooth 2D manifold. In fact, any polygon in a polygon cloud can be collapsed into a point or line as a special case. The polygons may also overlap. On the other hand, the polygons in the cloud can also be stitched together into a watertight mesh if desired to represent a smooth surface. Thus polygon clouds generalize both point clouds and polygonal meshes.

For concreteness, we focus on triangles instead of arbitrary polygons, and we develop an encoder and decoder for sequences of triangle clouds. We assume a simple group of frames (GOF) model, where each GOF begins with an Intra (I) frame, also called a reference frame or a key frame, which is followed by a sequence of Predicted (P) frames, also called inter frames. The triangles are assumed to be consistent across frames. That is, the triangles' vertices are assumed to be tracked from one frame to the next. The trajectories of the vertices are not constrained. Thus the triangles may change from frame to frame in location, orientation, and proportion.

For geometry encoding, redundancy in the vertex trajectories is removed by a spatial orthogonal transform followed by temporal prediction, allowing low latency. For color encoding, the triangles in each frame are projected back to the coordinate system of the reference frame. In the reference frame the triangles are voxelized in order to ensure that their color textures are sampled uniformly in space regardless of the sizes of the triangles, and in order to construct a common vector space in which to describe the color textures and their evolution from frame to frame. Redundancy of the color vectors is removed by a spatial orthogonal transform followed by temporal prediction, similar to redundancy removal for geometry. Uniform scalar quantization and entropy coding matched to the spatial transform are employed for both color and geometry.

We compare triangle clouds to both triangle meshes and point clouds in terms of compression, for live captured dynamic colored geometry. We find that triangle clouds can be compressed nearly as well as triangle meshes while being far more flexible in representing live captured content. We also find that triangle clouds can be used to compress point clouds with significantly better performance than previously demonstrated point cloud compression methods. Since we are motivated by virtual and augmented reality (VR/AR) applications, for which encoding and decoding have to have low latency, we focus on an approach that can be implemented at low computational complexity. A preliminary part of this work with more limited experimental evaluation was published in [Reference Pavez and Chou1].

The paper is organized as follows. After a summary of related work in Section II, preliminary material is presented in Section III. Components of our compression system are described in Section IV, while the core of our system is given in Section V. Experimental results are presented in Section VI. The conclusion is in Section VII.

II. RELATED WORK

A) Mesh compression

3D mesh compression has a rich history, particularly from the 1990s forward. Overviews may be found in [Reference Alliez, Gotsman, Dodgson, Floater and Sabin2–Reference Peng, Kim and Jay Kuo4]. Fundamental is the need to code mesh topology, or connectivity, such as in [Reference Mamou, Zaharia and Prêteux5,Reference Rossignac6]. Beyond coding connectivity, coding the geometry, i.e., the positions of the vertices, is also important. Many approaches have been taken, but one significant and practical approach to geometry coding is based on “geometry images” [Reference Gu, Gortler and Hoppe7] and their temporal extension, “geometry videos” [Reference Briceño, Sander, McMillan, Gortler and Hoppe8,Reference Collet9]. In these approaches, the mesh is partitioned into patches, the patches are projected onto a 2D plane as charts, non-overlapping charts are laid out in a rectangular atlas, and the atlas is compressed using a standard image or video coder, compressing both the geometry and the texture (i.e., color) data. For dynamic geometry, the meshes are assumed to be temporally consistent (i.e., connectivity is constant frame-to-frame) and the patches are likewise temporally consistent. Geometry videos have been used for representing and compressing free-viewpoint video of human actors [Reference Collet9]. Key papers on mesh compression of human actors in the context of tele-immersion include [Reference Doumanoglou, Alexiadis, Zarpalas and Daras10,Reference Mekuria, Sanna, Izquierdo, Bulterman and Cesar11].

B) Motion estimation

A critical part of dynamic mesh compression is the ability to track points over time. If a mesh is defined for a keyframe, and the vertices are tracked over subsequent frames, then the mesh becomes a temporally consistent dynamic mesh. There is a huge body of literature on 3D tracking, 3D motion estimation or scene flow, 3D interest point detection and matching, 3D correspondence, non-rigid registration, and the like. We are particularly influenced by [Reference Dou12–Reference Newcombe, Fox and Seitz14], all of which produce in real time, given data from one or more RGBD sensors for every frame t, a parameterized mapping $f_{\theta _t}:\mathbb{R}^3\rightarrow \mathbb{R}^3$ that maps points in frame t to points in frame t+1. Though corrections may need to be made at each frame, chaining the mappings together over time yields trajectories for any given set of points. Compressing these trajectories is similar to compressing motion capture (mocap) trajectories, which has been well studied. [Reference Hou, Chau, Magnenat-Thalmann and He15] is a recent example with many references. Compression typically involves an intra-frame transform to remove spatial redundancy and either temporal prediction (if low latency is required) or a temporal transform (if the entire clip or GOF is available) to remove temporal redundancy, as in [Reference Hou, Chau, Magnenat-Thalmann and He16].

C) Graph signal processing

Graph Signal Processing (GSP) has emerged as an extension of the theory of linear shift invariant signal processing to the processing of signals on discrete graphs, where the shift operator is taken to be the adjacency matrix of the graph, or alternatively the Laplacian matrix of the graph [Reference Sandryhaila and Moura17,Reference Shuman, Narang, Frossard, Ortega and Vandergheynst18]. Critically sampled perfect reconstruction wavelet filter banks on graphs were proposed in [Reference Narang and Ortega19,Reference Narang and Ortega20]. These constructions were used for dynamic mesh compression in [Reference Anis, Chou and Ortega21,Reference Nguyen, Chou and Chen22]. In particular, [Reference Anis, Chou and Ortega21] simultaneously modifies the point cloud and fits triangular meshes. These meshes are time consistent, and come at different levels of resolution, which are used for multi-resolution transform coding of motion trajectories and color.

D) Point cloud compression using octrees

Sparse Voxel Octrees (SVOs) were developed in the 1980s to represent the geometry of 3D objects [Reference Jackins and Tanimoto23,Reference Meagher24]. Recently SVOs have been shown to have highly efficient implementations suitable for encoding at video frame rates [Reference Loop, Zhang and Zhang25]. In the guise of occupancy grids, they have also had significant use in robotics [Reference Elfes26–Reference Pathak, Birk, Poppinga and Schwertfeger28]. Octrees were first used for point cloud compression in [Reference Schnabel and Klein29]. They were further developed for progressive point cloud coding, including color attribute compression, in [Reference Huang, Peng, Kuo and Gopi30]. Octrees were extended to the coding of dynamic point clouds (i.e., point cloud sequences) in [Reference Kammerl, Blodow, Rusu, Gedikli, Beetz and Steinbach31]. The focus of [Reference Kammerl, Blodow, Rusu, Gedikli, Beetz and Steinbach31] was geometry coding; their color attribute coding remained rudimentary. Their method of inter-frame geometry coding was to take the exclusive-OR (XOR) between frames and code the XOR using an octree. Their method was implemented in the Point Cloud Library [Reference Rusu and Cousins32].

E) Color attribute compression for static point clouds

To better compress the color attributes in static voxelized point clouds, Zhang et al. used transform coding based on the Graph Fourier Transform (GFT), recently proposed in the context of GSP [Reference Zhang, Florêncio and Loop33]. While transform coding based on the GFT has good compression performance, it requires eigen-decompositions for each coded block, and hence may not be computationally attractive. To improve the computational efficiency, while not sacrificing compression performance, Queiroz and Chou developed an orthogonal Region-Adaptive Hierarchical Transform (RAHT) along with an entropy coder [Reference de Queiroz and Chou34]. RAHT is essentially a Haar transform with the coefficients appropriately weighted to take the non-uniform shape of the domain (or region) into account. As its structure matches the SVO, it is extremely fast to compute. Other approaches to non-uniform regions include the shape-adaptive discrete cosine transform [Reference Cohen, Tian and Vetro35] and color palette coding [Reference Dado, Kol, Bauszat, Thiery and Eisemann36]. Further approaches based on a non-uniform sampling of an underlying stationary process can be found in [Reference de Queiroz and Chou37], which uses the Karhunen–Loève transform matched to the sample, and in [Reference Hou, Chau, He and Chou38], which uses sparse representation and orthogonal matching pursuit.
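As an illustration of the weighted Haar structure described above, the following minimal sketch applies a single two-point RAHT butterfly. It assumes the form reported in [Reference de Queiroz and Chou34], in which two coefficients with weights $w_a$ and $w_b$ are rotated by an orthonormal matrix built from $\sqrt{w_a}$ and $\sqrt{w_b}$. The function names are hypothetical, and the hierarchical traversal of the octree that repeatedly applies this step is omitted.

```python
import math


def raht_butterfly(a, b, wa, wb):
    """One two-point weighted Haar step (sketch, hypothetical name).

    a, b   : coefficients of two sibling regions
    wa, wb : their weights (number of voxels each region covers)
    Returns (low, high, merged_weight).
    """
    s = math.sqrt(wa + wb)
    ca, cb = math.sqrt(wa) / s, math.sqrt(wb) / s
    low = ca * a + cb * b      # passed up to the next level
    high = -cb * a + ca * b    # quantized and entropy coded
    return low, high, wa + wb


def raht_butterfly_inverse(low, high, wa, wb):
    """Inverse of raht_butterfly; the matrix is orthonormal, so this is its transpose."""
    s = math.sqrt(wa + wb)
    ca, cb = math.sqrt(wa) / s, math.sqrt(wb) / s
    return ca * low - cb * high, cb * low + ca * high


# Three voxels with the same value: every high-pass coefficient vanishes,
# even though the second merge combines regions of unequal weight (2 and 1).
low, high, w = raht_butterfly(5.0, 5.0, 1, 1)
low2, high2, w2 = raht_butterfly(low, 5.0, w, 1)
```

With equal weights the step reduces to the ordinary Haar transform; the weights only matter when the merged regions contain different numbers of occupied voxels.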

F) Dynamic point cloud compression

Thanou et al. [Reference Thanou, Chou and Frossard39,Reference Thanou, Chou and Frossard40] were the first to deal fully with dynamic voxelized point clouds, by finding matches between points in adjacent frames, warping the previous frame to the current frame, predicting the color attributes of the current frame from the quantized colors of the previous frame, and coding the residual using the GFT-based method of [Reference Zhang, Florêncio and Loop33]. Thanou et al. used the XOR-based method of Kammerl et al. [Reference Kammerl, Blodow, Rusu, Gedikli, Beetz and Steinbach31] for inter-frame geometry compression. However, the method of [Reference Kammerl, Blodow, Rusu, Gedikli, Beetz and Steinbach31] proved to be inefficient, in a rate-distortion sense, for anything except slowly moving subjects, for two reasons. First, the method “predicts” the current frame from the previous frame, without any motion compensation. Second, the method codes the geometry losslessly, and so has no ability to perform a rate-distortion trade-off. To address these shortcomings, Queiroz and Chou [Reference de Queiroz and Chou41] used block-based motion compensation and rate-distortion optimization to select between coding modes (intra or motion-compensated coding) for each block. Further, they applied RAHT to coding the color attributes (in intra-frame mode), color prediction residuals (in inter-frame mode), and the motion vectors (in inter-frame mode). They also used in-loop deblocking filters. Mekuria et al. [Reference Mekuria, Blom and Cesar42] independently proposed block-based motion compensation for dynamic point cloud sequences. Although they did not use rate-distortion optimization, they used affine transformations for each motion-compensated block, rather than just translations. Unfortunately, it appears that block-based motion compensation of dynamic point cloud geometry tends to produce gaps between blocks, which are perceptually more damaging than indicated by objective metrics such as the Hausdorff-based metrics commonly used in geometry compression [Reference Mekuria, Li, Tulvan and Chou43].

G) Key learnings

Some of the key learnings from the previous work, taken as a whole, are that

• Point clouds are preferable to meshes for resilience to noise and to the non-manifold structures found in real-world signals, especially for real-time capture where the computational cost of heavy duty pre-processing (e.g., surface reconstruction, topological denoising, charting) can be prohibitive.

• For geometry coding in static scenes, point clouds appear to be more compressible than meshes, even though the performance of point cloud geometry coding seems to be limited by the lossless nature of the current octree methods. In addition, octree processing for geometry coding is extremely fast.

• For color attribute coding in static scenes, both point clouds and meshes appear to be well compressible. If charting is possible, compressing the color as an image may win out due to the maturity of image compression algorithms today. However, direct octree processing for color attribute coding is extremely fast, as it is for geometry coding.

• For both geometry and color attribute coding in dynamic scenes (or inter-frame coding), temporally consistent dynamic meshes are highly compressible. However, finding a temporally consistent mesh can be challenging from a topological point of view as well as from a computational point of view.

In this work, we aim to achieve the high compression efficiency possible with intra-frame point cloud compression and inter-frame dynamic mesh compression, while simultaneously achieving the high computational efficiency possible with octree-based processing, as well as its robustness to real-world noise and non-manifold data. First, we attain higher computational efficiency for temporal coding by exploiting available motion information for inter-frame prediction, instead of performing motion estimation at the encoder side as in [Reference Anis, Chou and Ortega21,Reference Thanou, Chou and Frossard39,Reference Thanou, Chou and Frossard40]. In practice, motion trajectories can be obtained in real time using several existing approaches [Reference Dou12–Reference Newcombe, Fox and Seitz14]. Second, for efficient and low complexity transform coding, we follow [Reference de Queiroz and Chou34].

H) Contributions and main results

Our contributions, which are summarized in more detail in Section VII, include

(i) Introduction of triangle clouds, a representation intermediate between triangle meshes and point clouds, for efficient compression as well as robustness to real-world data.

(ii) A comprehensive algorithm for triangle cloud compression, employing novel geometry, color, and temporal coding.

(iii) Reduction of inter- and intra-coding of color and geometry to compression of point clouds with different attributes.

(iv) Implementation of a low complexity point cloud transform coding system suitable for real time applications, including a new fast implementation of the transform from [Reference de Queiroz and Chou34].

We demonstrate the advantages of polygon clouds for compression through extensive coding experiments evaluated using a variety of distortion measures. Our main findings are summarized as follows:

• Our intra-frame geometry coding is more efficient than previous intra-frame geometry coding based on point clouds by 5–10x or more in geometry bitrate,

• Our inter-frame geometry coding is better than our intra-frame geometry coding by 3x or more in geometry bitrate,

• Our inter-frame color coding is better than our/previous intra-frame color coding by up to 30% in color bitrate,

• Our temporal geometry coding is better than recent dynamic mesh compression by 6x in geometry bitrate, and

• Our temporal coding is better than recent point cloud compression by 33% in overall bitrate.

Our results also reveal the hyper-sensitivity of color distortion measures to geometry compression.

III. PRELIMINARIES

B) Dynamic triangle clouds

A dynamic triangle cloud is a numerical representation of a time changing 3D scene or object. We denote it by a sequence $\lbrace {\cal T}^{(t)} \rbrace $ where ${\cal T}^{(t)}$ is a triangle cloud at time t. Each frame ${\cal T}^{(t)}$ is composed of a set of vertices ${\cal V}^{(t)}$, faces (polygons) ${\cal F}^{(t)}$, and color ${\cal C}^{(t)}$.

The geometry information (shape and position) consists of a list of vertices ${\cal V}^{(t)}=\lbrace v_i^{(t)} : i=1,\,\ldots ,\, N_p\rbrace $, where each vertex $v_i^{(t)}=[x_i^{(t)},\,y_i^{(t)},\,z_i^{(t)}]$ is a point in 3D, and a list of triangles (or faces) ${\cal F}^{(t)}=\lbrace f_m^{(t)}: m=1,\,\ldots ,\,N_f \rbrace $, where each face $f_m^{(t)}=[i_m^{(t)},\,j_m^{(t)},\,k_m^{(t)}]$ is a vector of indices of vertices from ${\cal V}^{(t)}$. We denote by ${\bf V}^{(t)}$ the $N_p \times 3$ matrix whose i-th row is the point $v_i^{(t)}$, and similarly, we denote by ${\bf F}^{(t)}$ the $N_f \times 3$ matrix whose m-th row is the triangle $f_m^{(t)}$. The triangles in a triangle cloud do not have to be adjacent or form a mesh, and they can overlap. Two or more vertices of a triangle may have the same coordinates, thus collapsing into a line or point.

The color information consists of a list of colors ${\cal C}^{(t)}=\lbrace c_n^{(t)} : n=1,\,\ldots ,\, N_c\rbrace $, where each color $c_n^{(t)}=[Y_n^{(t)},\,U_n^{(t)},\,V_n^{(t)}]$ is a vector in YUV space (or other convenient color space). We denote by ${\bf C}^{(t)}$ the $N_c \times 3$ matrix whose n-th row is the color $c_n^{(t)}$. The list of colors represents the colors across the surfaces of the triangles. To be specific, $c_n^{(t)}$ is the color of a “refined” vertex $v_r^{(t)}(n)$, where the refined vertices are obtained by uniformly subdividing each triangle in ${\cal F}^{(t)}$ by upsampling factor U, as shown in Fig. 1(b) for U=4. We denote by ${\bf V}_r^{(t)}$ the $N_c \times 3$ matrix whose n-th row is the refined vertex $v_r^{(t)}(n)$. ${\bf V}_r^{(t)}$ can be computed from ${\cal V}^{(t)}$ and ${\cal F}^{(t)}$, so we do not need to encode it, but we will use it to compress the color information. Thus frame t of the triangle cloud can be represented by the triple ${\cal T}^{(t)}=({\bf V}^{(t)},{\bf F}^{(t)},{\bf C}^{(t)})$.

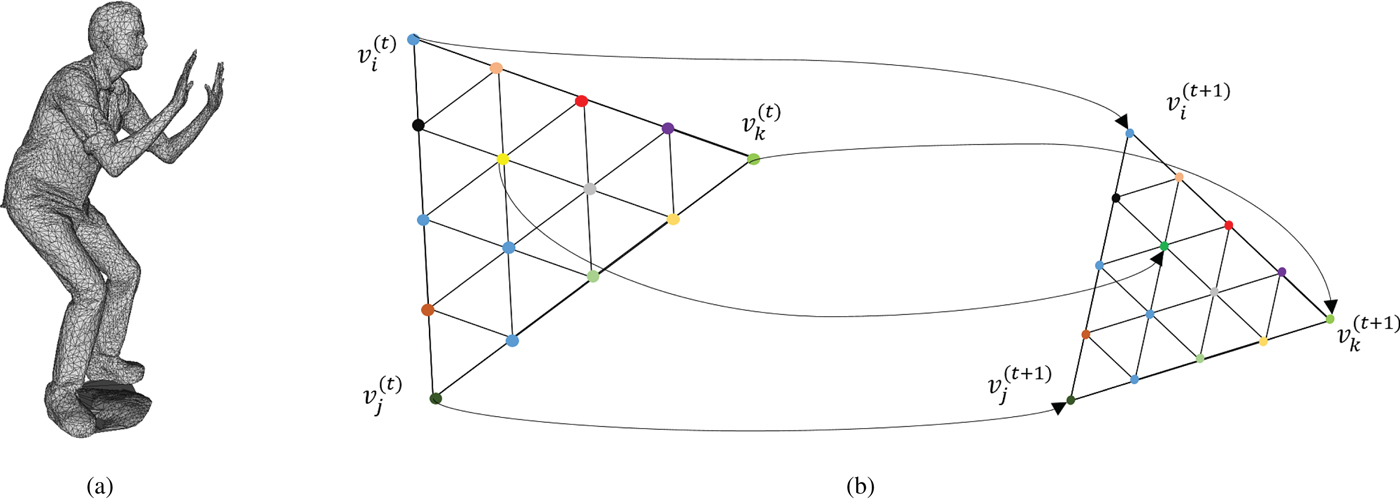

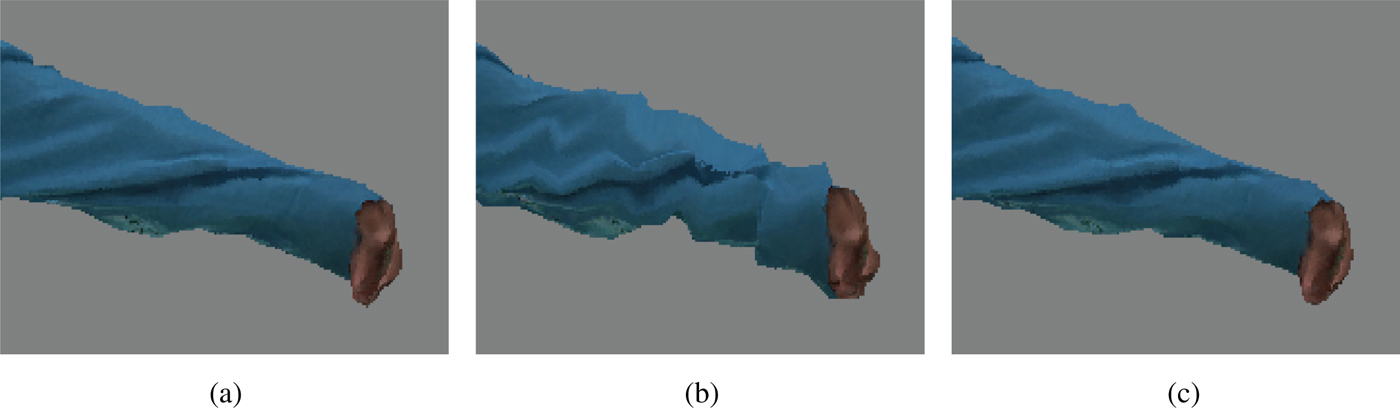

Fig. 1. Triangle cloud geometry information. (b) A triangle in a dynamic triangle cloud is depicted. The vertices of the triangle at time t are denoted by $v_i^{(t)},\,v_j^{(t)},\,v_k^{(t)}$. Colored dots represent “refined” vertices, whose coordinates can be computed from the triangle's coordinates using Alg. 5. Each refined vertex has a color attribute. (a) Man mesh. (b) Correspondences between two consecutive frames.

Note that a triangle cloud can be equivalently represented by a point cloud given by the refined vertices and the color attributes $({\bf V}_r^{(t)},\,{\bf C}^{(t)})$. For each triangle in the triangle cloud, there are $(U+1)(U+2)/2$ color values distributed uniformly on the triangle surface, with coordinates given by the refined vertices (see Fig. 1(b)). Hence the equivalent point cloud has $N_c = N_f(U+1)(U+2)/2$ points. We will make use of this point cloud representation property in our compression system. The up-sampling factor U should be high enough so that it does not limit the color spatial resolution obtainable by the color cameras. In our experiments, we set U=10 or higher. Setting U higher does not typically affect the bit rate significantly, though it does affect memory and computation in the encoder and decoder. Moreover, for U=10, the number of triangles is much smaller than the number of refined vertices ($N_f \ll N_c$), which is one of the reasons we can achieve better geometry compression using a triangle cloud representation instead of a point cloud.
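The count $(U+1)(U+2)/2$ can be checked with a small sketch that enumerates refined vertices on a uniform barycentric grid. This is an illustrative assumption about how the subdivision can be carried out, not a transcription of Algorithm 5; the function name `refine_triangle` is hypothetical.

```python
def refine_triangle(vi, vj, vk, U):
    """Return refined vertices of one triangle on a uniform barycentric grid.

    vi, vj, vk : 3D corner coordinates (tuples of numbers)
    U          : upsampling factor (positive integer)
    Each point is (a*vi + b*vj + c*vk) / U with integers a + b + c = U.
    """
    points = []
    for a in range(U + 1):
        for b in range(U + 1 - a):
            c = U - a - b
            points.append(tuple((a * p + b * q + c * r) / U
                                for p, q, r in zip(vi, vj, vk)))
    return points


# For U = 4 each triangle carries (4+1)(4+2)/2 = 15 color samples;
# for U = 10 it carries (10+1)(10+2)/2 = 66.
samples_u4 = refine_triangle((0, 0, 0), (1, 0, 0), (0, 1, 0), 4)
samples_u10 = refine_triangle((0, 0, 0), (1, 0, 0), (0, 1, 0), 10)
```

Because the grid depends only on the triangle corners and U, the decoder can regenerate the same refined vertices from the decoded geometry, which is why only the colors need to be transmitted for them.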

C) Compression system overview

In this section, we provide an overview of our system for compressing dynamic triangle clouds. We use a GOF model, in which the sequence is partitioned into GOFs. The GOFs are processed independently. Without loss of generality, we label the frames in a GOF $t=1,\,\ldots ,\,N$. There are two types of frames: reference and predicted. In each GOF, the first frame (t=1) is a reference frame and all other frames ($t=2,\,\ldots ,\,N$) are predicted. Within a GOF, all frames must have the same number of vertices, triangles, and colors: $\forall t\in [N]$, ${\bf V}^{(t)} \in \mathbb{R}^{N_p\times 3}$, ${\bf F}^{(t)} \in [N_p]^{N_f \times 3}$ and ${\bf C}^{(t)} \in \mathbb{R}^{N_c \times 3}$. The triangles are assumed to be consistent across frames so that there is a correspondence between colors and vertices within the GOF. In Fig. 1(b), we show an example of the correspondences between two consecutive frames in a GOF. Different GOFs may have different numbers of frames, vertices, triangles, and colors.

We compress consecutive GOFs sequentially and independently, so we focus on the system for compressing an individual GOF ![]() $({\bf V}^{(t)},\,{\bf F}^{(t)},\,{\bf C}^{(t)})$ for

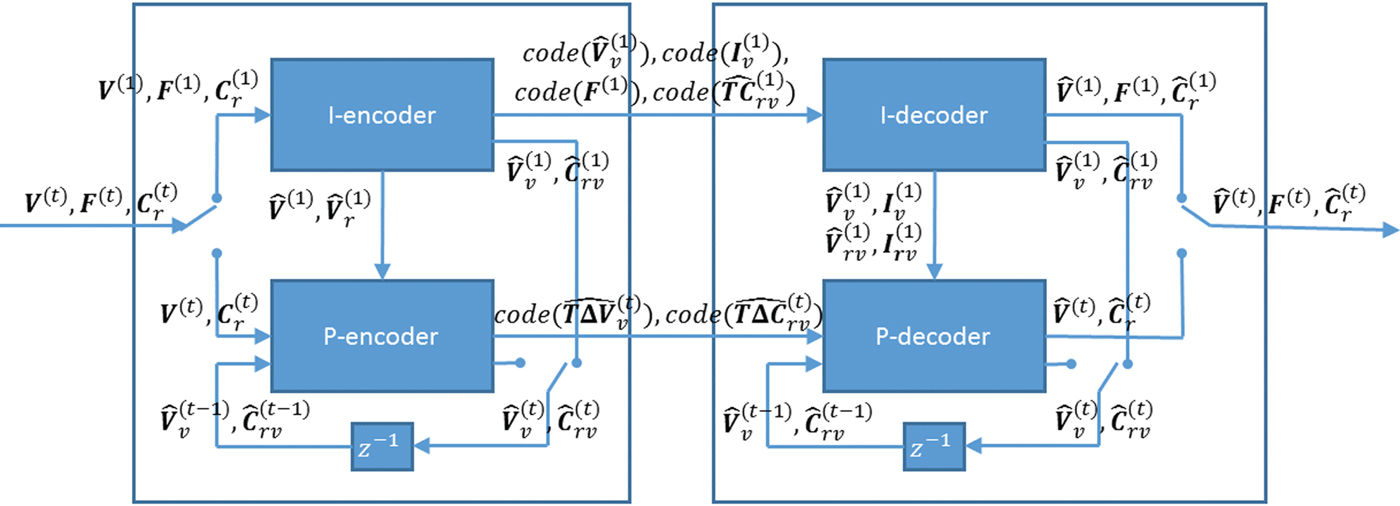

$({\bf V}^{(t)},\,{\bf F}^{(t)},\,{\bf C}^{(t)})$ for ![]() $t \in [N]$. The overall system is shown in Fig. 2, where

$t \in [N]$. The overall system is shown in Fig. 2, where ![]() ${\bf C}^{(t)}$ is known as

${\bf C}^{(t)}$ is known as ![]() ${\bf C}_r^{(t)}$ for reasons to be discussed later.

${\bf C}_r^{(t)}$ for reasons to be discussed later.

Fig. 2. Encoder (left) and decoder (right). The switches are in the t=1 position, and flip for t>1.

For all frames, our system first encodes geometry, i.e. vertices and faces, and then color. The color compression depends on the decoded geometry, as it uses a transform that reduces spatial redundancy.

For the reference frame at t=1, also known as the intra (I) frame, the I-encoder represents the vertices ${\bf V}^{(1)}$ using voxels. The voxelized vertices ${\bf V}_v^{(1)}$ are encoded using an octree. The connectivity ${\bf F}^{(1)}$ is compressed losslessly using an entropy coder. The connectivity is coded only once per GOF since it is consistent across the GOF, i.e., ${\bf F}^{(t)}={\bf F}^{(1)}$ for $t\in [N]$. The decoded vertices $\hat {{\bf V}}^{(1)}$ are refined and used to construct an auxiliary point cloud $(\hat {{\bf V}}_r^{(1)},\,{\bf C}_r^{(1)})$. This point cloud is voxelized as $(\hat {{\bf V}}_{rv}^{(1)},\,{\bf C}_{rv}^{(1)})$, and its color attributes are then coded as $\widehat {{\bf TC}}_{rv}^{(1)}$ using the region adaptive hierarchical transform (RAHT) [Reference de Queiroz and Chou34], uniform scalar quantization, and adaptive Run-Length Golomb-Rice (RLGR) entropy coding [Reference Malvar44]. Here, ${\bf T}$ denotes the transform and hat denotes a quantity that has been compressed and decompressed. At the cost of additional complexity, the RAHT could be replaced by transforms with higher performance [Reference de Queiroz and Chou37,Reference Hou, Chau, He and Chou38].
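To make the octree coding of the voxelized vertices concrete, the following minimal sketch serializes a set of occupied voxels as one occupancy byte per internal node, visited breadth-first, and decodes it again. The function names, the child-bit ordering, and the absence of any entropy coding are assumptions made for illustration; the coder actually used in the system is specified in the Appendix and may differ in these details.

```python
def encode_octree(voxels, depth):
    """Serialize occupied voxels as breadth-first occupancy bytes (sketch).

    voxels : set of (x, y, z) integer tuples, each coordinate in [0, 2**depth)
    depth  : number of octree levels
    """
    nodes = [(0, 0, 0)]            # root cell
    out = []
    for level in range(depth):
        shift = depth - level - 1
        # Cells occupied at the next (finer) level.
        occupied = {(x >> shift, y >> shift, z >> shift) for x, y, z in voxels}
        next_nodes = []
        for nx, ny, nz in nodes:
            byte = 0
            for child in range(8):
                cell = (2 * nx + ((child >> 2) & 1),
                        2 * ny + ((child >> 1) & 1),
                        2 * nz + (child & 1))
                if cell in occupied:
                    byte |= 1 << child
                    next_nodes.append(cell)
            out.append(byte)
        nodes = next_nodes
    return out


def decode_octree(occupancy, depth):
    """Inverse of encode_octree: rebuild the set of occupied voxels."""
    nodes = [(0, 0, 0)]
    stream = iter(occupancy)
    for _ in range(depth):
        next_nodes = []
        for nx, ny, nz in nodes:
            byte = next(stream)
            for child in range(8):
                if byte & (1 << child):
                    next_nodes.append((2 * nx + ((child >> 2) & 1),
                                       2 * ny + ((child >> 1) & 1),
                                       2 * nz + (child & 1)))
        nodes = next_nodes
    return set(nodes)


example_voxels = {(0, 0, 0), (7, 7, 7), (3, 4, 5), (3, 4, 4)}
example_bytes = encode_octree(example_voxels, 3)
```

The byte stream describes only which voxels are occupied, so this representation is lossless at the chosen voxel resolution.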

For predicted (P) frames at t>1, the P-encoder computes prediction residuals from the previously decoded frame. Specifically, for each t>1 it computes a motion residual $\Delta {\bf V}_v^{(t)}={\bf V}_v^{(t)}-\hat {{\bf V}}_v^{(t-1)}$ and a color residual $\Delta {\bf C}_{rv}^{(t)}={\bf C}_{rv}^{(t)}-\hat {{\bf C}}_{rv}^{(t-1)}$. These residuals are attributes of the auxiliary point clouds $(\hat {{\bf V}}_v^{(1)},\,\Delta {\bf V}_v^{(t)})$ and $(\hat {{\bf V}}_{rv}^{(1)},\,\Delta {\bf C}_{rv}^{(t)})$, respectively. Transform coding is then applied, again using the RAHT followed by uniform scalar quantization and entropy coding.
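The key point of the P-frame scheme is that each residual is formed against the previously decoded frame rather than the previous original frame, so quantization error does not accumulate over the GOF. The sketch below illustrates this closed-loop prediction for a scalar attribute per voxel. It is a simplification under stated assumptions: the RAHT and the entropy coder are omitted, the first frame is predicted from zero instead of being intra coded, and the function names are hypothetical.

```python
def encode_gof(frames, step):
    """Closed-loop predictive quantization of one GOF (sketch).

    frames : list of frames, each a list of floats of identical length
    step   : uniform scalar quantizer step size
    Returns one list of integer symbols per frame.
    """
    prev_hat = [0.0] * len(frames[0])   # decoder-side reconstruction so far
    symbols = []
    for frame in frames:
        residual = [x - p for x, p in zip(frame, prev_hat)]
        q = [round(r / step) for r in residual]          # uniform scalar quantization
        symbols.append(q)
        # Track what the decoder will reconstruct, not the original frame.
        prev_hat = [p + qi * step for p, qi in zip(prev_hat, q)]
    return symbols


def decode_gof(symbols, step):
    """Rebuild every frame by accumulating dequantized residuals."""
    prev_hat = [0.0] * len(symbols[0])
    decoded = []
    for q in symbols:
        prev_hat = [p + qi * step for p, qi in zip(prev_hat, q)]
        decoded.append(list(prev_hat))
    return decoded


gof = [[0.0, 1.0, 2.0], [0.3, 1.4, 2.2], [0.9, 1.1, 2.6]]
gof_symbols = encode_gof(gof, 0.25)
gof_decoded = decode_gof(gof_symbols, 0.25)
```

In this sketch the reconstruction error of every frame stays within half a quantizer step, regardless of the position of the frame in the GOF.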

It is important to note that we do not directly compress the list of vertices ${\bf V}^{(t)}$ or the list of colors ${\bf C}^{(t)}$ (or their prediction residuals). Rather, we voxelize them first with respect to their corresponding vertices in the reference frame, and then compress them. This ensures that (1) if two or more vertices or colors fall into the same voxel, they receive the same representation and hence are encoded only once, and (2) the colors (on the set of refined vertices) are resampled uniformly in space regardless of the density, size or shapes of triangles.

In the next section, we detail the basic elements of the system: refinement, voxelization, octrees, and transform coding. Then, in Section V, we detail how these basic elements are put together to encode and decode a sequence of triangle clouds.

IV. REFINEMENT, VOXELIZATION, OCTREES, AND TRANSFORM CODING

In this section, we introduce the most important building blocks of our compression system. We describe their efficient implementations, with detailed pseudo code that can be found in the Appendix.

A) Refinement

A refinement refers to a procedure for dividing the triangles in a triangle cloud into smaller (equal sized) triangles. Given a list of triangles ${\bf F}$, its corresponding list of vertices ${\bf V}$, and upsampling factor U, a list of “refined” vertices ${\bf V}_r$ can be produced using Algorithm 5. Step 1 (in Matlab notation) assembles three equal-length lists of vertices (each as an $N_f\times 3$ matrix), containing the three vertices of every face. Step 5 appends linear combinations of the faces' vertices to a growing list of refined vertices. Note that since the triangles are independent, the refinement can be implemented in parallel. We assume that the refined vertices in ${\bf V}_r$ can be colored, such that the list of colors ${\bf C}$ is in 1-1 correspondence with the list of refined vertices ${\bf V}_r$. An example with a single triangle is depicted in Fig. 1(b), where the colored dots correspond to refined vertices.
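As an illustration of refinement, the following Python sketch samples each triangle on a regular barycentric grid. Algorithm 5 is not reproduced in this section, so the sampling pattern, the ordering of the output, and the 0-based face indices are assumptions made for illustration; only the general idea (appending linear combinations of each face's three vertices) is taken from the text.

```python
def refine(vertices, faces, U):
    """Return a list of refined vertices.

    vertices: list of (x, y, z) tuples
    faces:    list of (i1, i2, i3) index triples (0-based, an assumption)
    U:        upsampling factor (positive integer)

    Each face contributes (U + 1) * (U + 2) / 2 points, placed at the
    barycentric coordinates (1 - a - b, a, b) with a = i/U and b = j/U.
    """
    refined = []
    for i1, i2, i3 in faces:
        v1, v2, v3 = vertices[i1], vertices[i2], vertices[i3]
        for i in range(U + 1):
            for j in range(U + 1 - i):
                a, b = i / U, j / U
                refined.append(tuple(
                    (1 - a - b) * p + a * q + b * r
                    for p, q, r in zip(v1, v2, v3)))
    return refined
```

Each face is processed independently of the others, which is what makes a parallel implementation straightforward. Points on edges shared by adjacent triangles are generated more than once in this sketch; such duplicates are merged later by voxelization.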

B) Morton codes and voxelization

A voxel is a volumetric element used to represent the attributes of an object in 3D over a small region of space. Analogous to 2D pixels, 3D voxels are defined on a uniform grid. We assume the geometric data live in the unit cube $[0,\,1)^3$, and we partition the cube uniformly into voxels of size $2^{-J} \times 2^{-J} \times 2^{-J}$.

Now consider a list of points ${\bf V}=[v_i]$ and an equal-length list of attributes ${\bf A}=[a_i]$, where $a_i$ is the real-valued attribute (or vector of attributes) of $v_i$. (These may be, for example, the list of refined vertices ${\bf V}_r$ and their associated colors ${\bf C}_r={\bf C}$ as discussed above.) In the process of voxelization, the points are partitioned into voxels, and the attributes associated with the points in a voxel are averaged. The points within each voxel are quantized to the voxel center. Each occupied voxel is then represented by the voxel center and the average of the attributes of the points in the voxel. Moreover, the occupied voxels are put into Z-scan order, also known as Morton order [Reference Morton45]. The first step in voxelization is to quantize the vertices and to produce their Morton codes. The Morton code m for a point $(x,\,y,\,z)$ is obtained simply by interleaving (or “swizzling”) the bits of x, y, and z, with x being higher order than y, and y being higher order than z. For example, if $x=x_4x_2x_1$, $y=y_4y_2y_1$, and $z=z_4z_2z_1$ (written in binary), then the Morton code for the point would be $m=x_4y_4z_4x_2y_2z_2x_1y_1z_1$. The Morton codes are sorted, duplicates are removed, and all attributes whose vertices have a particular Morton code are averaged.
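The bit interleaving can be made concrete with a short Python sketch. The function name `morton_code` and the bit-by-bit loop are illustrative choices, not the paper's implementation; the bit order (x above y above z within each triple) follows the description above.

```python
def morton_code(x, y, z, J):
    """Interleave the J low-order bits of integer coordinates x, y, z.

    The most significant triple comes first, and within each triple
    x is the highest-order bit, then y, then z.
    """
    m = 0
    for bit in range(J - 1, -1, -1):
        triple = (((x >> bit) & 1) << 2) | (((y >> bit) & 1) << 1) | ((z >> bit) & 1)
        m = (m << 3) | triple
    return m
```

For example, with J = 3, x = 101, y = 011, and z = 110 in binary, the triples are 101, 011, and 110, so the Morton code is 101011110 in binary.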

Algorithm 1 Voxelization (voxelize)

The procedure is detailed in Algorithm 1. ${\bf V}_{int}$ is the list of vertices with their coordinates, previously in $[0,\,1)$, now mapped to integers in $\{0,\,\ldots ,\,2^J-1\}$. ${\bf M}$ is the corresponding list of Morton codes. ${\bf M}_v$ is the list of Morton codes, sorted with duplicates removed, using the Matlab function unique. ${\bf I}$ and ${\bf I}_v$ are vectors of indices such that ${\bf M}_v={\bf M}({\bf I})$ and ${\bf M}={\bf M}_v({\bf I}_v)$, in Matlab notation. (That is, the $i_v$th element of ${\bf M}_v$ is the ${\bf I}(i_v)$th element of ${\bf M}$ and the ith element of ${\bf M}$ is the ${\bf I}_v(i)$th element of ${\bf M}_v$.) ${\bf A}_v=[\bar a_j]$ is the list of attribute averages

$$\bar{a}_j = \frac{1}{N_j}\sum_{i:\,{\bf M}(i) = {\bf M}_v(j)} a_i,$$

where $N_j$ is the number of elements in the sum. ${\bf V}_v$ is the list of voxel centers. The complexity of voxelization is dominated by sorting of the Morton codes, thus the algorithm has complexity ${\cal O}(N\log N)$, where N is the number of input points.
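The voxelization steps can be sketched in Python as follows. This is a simplified illustration rather than a transcription of Algorithm 1: attributes are scalars, indices are 0-based (Matlab's are 1-based), the index vector ${\bf I}$ is not returned, and the helper `morton` is repeated so that the block is self-contained. Placing each voxel center at $(k + 0.5)\,2^{-J}$ is an assumption.

```python
def morton(x, y, z, J):
    """Interleave the bits of x, y, z (x highest within each triple)."""
    m = 0
    for bit in range(J - 1, -1, -1):
        m = (m << 3) | (((x >> bit) & 1) << 2) | (((y >> bit) & 1) << 1) | ((z >> bit) & 1)
    return m


def voxelize(points, attrs, J):
    """Voxelize points in [0,1)^3 carrying scalar attributes.

    Returns (V_v, A_v, M_v, I_v): voxel centers, averaged attributes,
    sorted unique Morton codes, and indices with M[i] == M_v[I_v[i]].
    """
    scale = 1 << J
    V_int = [tuple(int(c * scale) for c in p) for p in points]
    M = [morton(*v, J) for v in V_int]
    M_v = sorted(set(M))                      # the sort dominates the cost
    position = {m: j for j, m in enumerate(M_v)}
    I_v = [position[m] for m in M]

    sums = [0.0] * len(M_v)
    counts = [0] * len(M_v)
    coords = [None] * len(M_v)
    for v, a, j in zip(V_int, attrs, I_v):
        sums[j] += a
        counts[j] += 1
        coords[j] = v                         # same for all points in voxel j
    A_v = [s / n for s, n in zip(sums, counts)]
    V_v = [tuple((k + 0.5) / scale for k in c) for c in coords]
    return V_v, A_v, M_v, I_v
```

With J = 2, the points (0.1, 0.1, 0.1) and (0.12, 0.1, 0.1) fall into the same voxel, so their attributes are averaged and the voxel is represented once.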

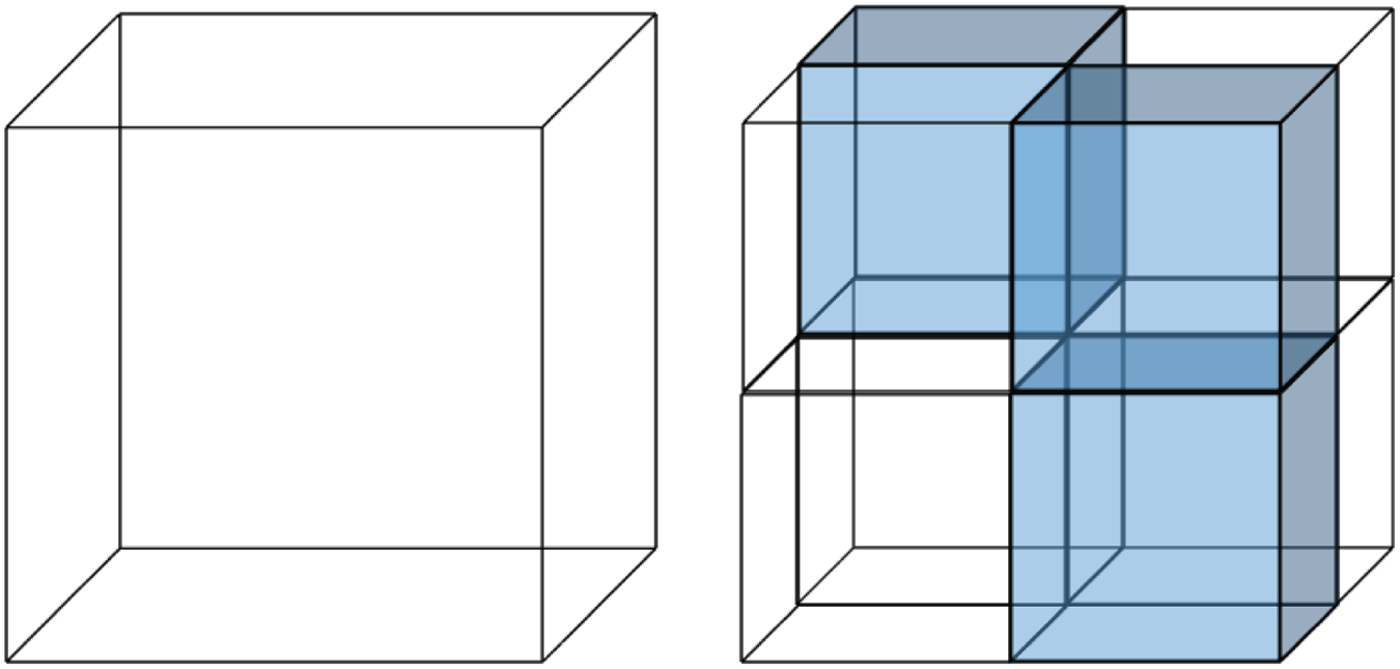

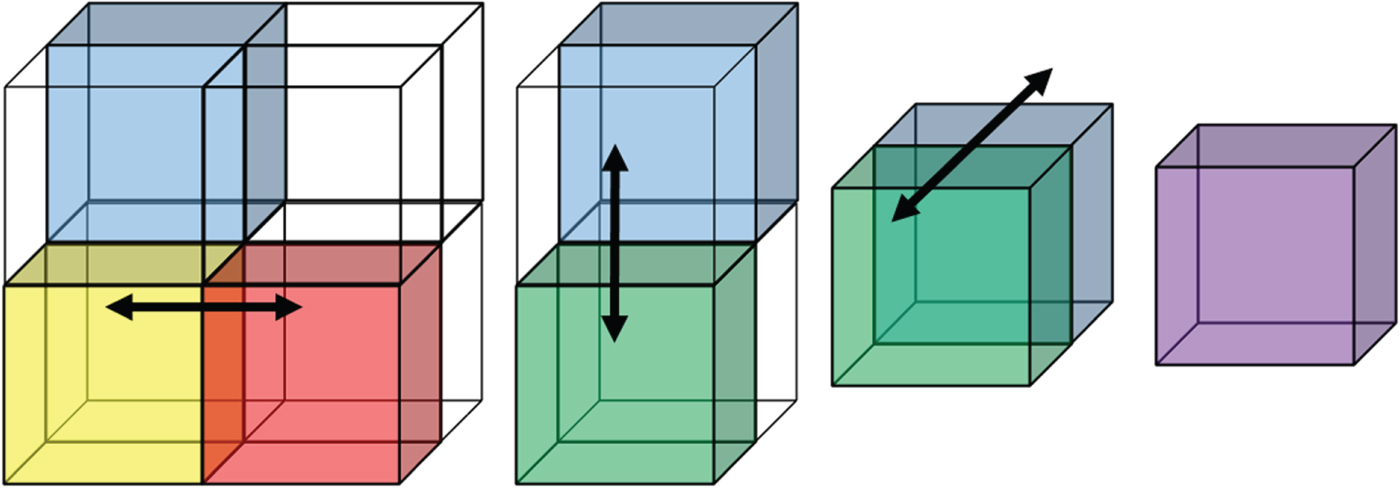

C) Octree encoding

Any set of voxels in the unit cube, each of size $2^{-J} \times 2^{-J} \times 2^{-J}$, designated occupied voxels, can be represented with an octree of depth J [Reference Jackins and Tanimoto23,Reference Meagher24]. An octree is a recursive subdivision of a cube into smaller cubes, as illustrated in Fig. 3. Cubes are subdivided only as long as they are occupied (i.e., contain any occupied voxels). This recursive subdivision can be represented by an octree with depth $J$, where the root corresponds to the unit cube. The leaves of the tree correspond to the set of occupied voxels.

Fig. 3. Cube subdivision. Blue cubes represent occupied regions of space.

There is a close connection between octrees and Morton codes. In fact, the Morton code of a voxel, which has length $3J$ bits broken into J binary triples, encodes the path in the octree from the root to the leaf containing the voxel. Moreover, the sorted list of Morton codes results from a depth-first traversal of the tree.

Each internal node of the tree can be represented by one byte, to indicate which of its eight children are occupied. If these bytes are serialized in a depth-first traversal of the tree, the serialization (which has a length in bytes equal to the number of internal nodes of the tree) can be used as a description of the octree, from which the octree can be reconstructed. Hence the description can also be used to encode the ordered list of Morton codes of the leaves. This description can be further compressed using a context adaptive arithmetic encoder. However, for simplicity, in our experiments, we use gzip instead of an arithmetic encoder.

In this way, we encode any set of occupied voxels in a canonical (Morton) order.
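The byte-per-node description can be sketched in Python as follows. The functions `octree_bytes` and `octree_decode` are hypothetical names, and the convention that child c sets bit 7 - c of the occupancy byte is an assumption; the text specifies only that each internal node is described by one byte and that the bytes are serialized depth-first. Further compression of the byte sequence (gzip in the experiments) is not shown.

```python
def octree_bytes(codes, J):
    """Serialize sorted unique Morton codes (3*J bits each) as one
    occupancy byte per internal node, in depth-first order."""
    out = []

    def visit(group, level):
        shift = 3 * (J - level - 1)           # position of this level's triple
        children = {}
        for m in group:
            children.setdefault((m >> shift) & 7, []).append(m)
        byte = 0
        for c in children:
            byte |= 1 << (7 - c)              # assumed bit convention
        out.append(byte)
        if level + 1 < J:                     # children of the last level are leaves
            for c in sorted(children):
                visit(children[c], level + 1)

    if codes:
        visit(list(codes), 0)
    return out


def octree_decode(data, J):
    """Rebuild the sorted list of Morton codes from the byte sequence."""
    it = iter(data)
    codes = []

    def visit(prefix, level):
        byte = next(it)
        for c in range(8):
            if byte & (1 << (7 - c)):
                child = (prefix << 3) | c
                if level + 1 < J:
                    visit(child, level + 1)
                else:
                    codes.append(child)

    if data:
        visit(0, 0)
    return codes
```

Because children are visited in increasing order of their three-bit label, the decoder emits the Morton codes already sorted, which is the canonical order referred to above.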

D) Transform coding

In this section, we describe the RAHT [Reference de Queiroz and Chou34] and its efficient implementation. RAHT is a sequence of orthonormal transforms applied to attribute data living on the leaves of an octree. For simplicity, we assume the attributes are scalars. This transform processes voxelized attributes in a bottom-up fashion, starting at the leaves of the octree. The inverse transform reverses this order.

Consider eight adjacent voxels, three of which are occupied, having the same parent in the octree, as shown in Fig. 4. The colored voxels are occupied (have an attribute) and the transparent ones are empty. Each occupied voxel is assigned a unit weight. For the forward transform, transformed attribute values and weights will be propagated up the tree.

Fig. 4. One level of RAHT applied to a cube of eight voxels, three of which are occupied.

One level of the forward transform proceeds as follows. Pick a direction (x, y, or z), then check whether there are two occupied cubes that can be processed along that direction. In the leftmost part of Fig. 4 there are only three occupied cubes, red, yellow, and blue, having weights $w_{r}$, $w_{y}$, and $w_{b}$, respectively. To process in the direction of the x-axis, since the blue cube does not have a neighbor along the horizontal direction, we copy its attribute value $a_b$ to the second stage and keep its weight $w_{b}$. The attribute values $a_y$ and $a_r$ of the yellow and red cubes can be processed together using the orthonormal transformation

$$\begin{bmatrix} a_g^0 \\ a_g^1 \end{bmatrix} = \frac{1}{\sqrt{w_y + w_r}} \begin{bmatrix} \sqrt{w_y} & \sqrt{w_r} \\ -\sqrt{w_r} & \sqrt{w_y} \end{bmatrix} \begin{bmatrix} a_y \\ a_r \end{bmatrix},$$

where the transformed coefficients $a_g^0$ and $a_g^1$, respectively, represent low pass and high pass coefficients appropriately weighted. Both transform coefficients now represent information from a region with weight $w_g=w_y+w_r$ (green cube). The high pass coefficient is stored for entropy coding along with its weight, while the low pass coefficient is further processed and put in the green cube. For processing along the y-axis, the green and blue cubes do not have neighbors, so their values are copied to the next level. Then we process in the z direction using the same transformation in (2) with weights $w_g$ and $w_b$.
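The weighted $2 \times 2$ transform and its inverse can be written as a small Python sketch. The function names `raht_pair` and `iraht_pair` are hypothetical, and the sketch covers only a single pair of occupied neighbors, not the full traversal of the octree given in Algorithms 3 and 4.

```python
import math


def raht_pair(a1, w1, a2, w2):
    """Apply the weighted orthonormal 2x2 transform to two occupied
    neighbors; returns (low pass, high pass, combined weight)."""
    s = math.sqrt(w1 + w2)
    c1, c2 = math.sqrt(w1) / s, math.sqrt(w2) / s
    low = c1 * a1 + c2 * a2
    high = -c2 * a1 + c1 * a2
    return low, high, w1 + w2


def iraht_pair(low, high, w1, w2):
    """Inverse of raht_pair: multiply by the transposed matrix."""
    s = math.sqrt(w1 + w2)
    c1, c2 = math.sqrt(w1) / s, math.sqrt(w2) / s
    return c1 * low - c2 * high, c2 * low + c1 * high
```

Applied to the example of Fig. 4 with a constant attribute a on all three voxels, the yellow and red cubes give a zero high pass coefficient and a low pass coefficient $a\sqrt{2}$ with weight 2. Combining that with the blue cube (weight 1) again gives a zero high pass coefficient and a DC value $a\sqrt{3}$, so all of the signal energy ends up in the single coefficient with the largest weight.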

This process is repeated for each cube of eight subcubes at each level of the octree. After J levels, there remains one low pass coefficient that corresponds to the DC component; the remainder are high pass coefficients. Since after each processing of a pair of coefficients, the weights are added and used during the next transformation, the weights can be interpreted as being inversely proportional to frequency. The DC coefficient is the one that has the largest weight, as it is processed more times and represents information from the entire cube, while the high pass coefficients, which are produced earlier, have smaller weights because they contain information from a smaller region. The weights depend only on the octree (not the coefficients themselves), and thus can provide a frequency ordering for the coefficients. We sort the transformed coefficients in order of decreasing weight.

Finally, the sorted coefficients are quantized using uniform scalar quantization, and are entropy coded using adaptive Run Length Golomb-Rice coding [Reference Malvar44]. The pipeline is illustrated in Fig. 5.

Fig. 5. Transform coding system for voxelized point clouds.

Note that the RAHT has several properties that make it suitable for real-time compression. At each step, it applies several $2 \times 2$ orthonormal transforms to pairs of voxels. By using the Morton ordering, the j-th step of the RAHT can be implemented with worst-case time complexity ${\cal O}({N}_{vox,j})$, where $N_{vox,j}$ is the number of occupied voxels of size $2^{-j} \times 2^{-j} \times 2^{-j}$. The overall complexity of the RAHT is ${\cal O}(J N_{vox,J})$. This can be further reduced by processing cubes in parallel. Note that within each GOF, only two different RAHTs will be applied multiple times. To encode motion residuals, a RAHT will be implemented using the voxelization with respect to the vertices of the reference frame. To encode color of the reference frame and color residuals of predicted frames, the RAHT is implemented with respect to the voxelization of the refined vertices of the reference frame.

Efficient implementations of RAHT and its inverse are detailed in Algorithms 3 and 4, respectively. Algorithm 2 is a prologue to each and needs to be run twice per GOF. For completeness we include in the Appendix our uniform scalar quantization in Algorithm 7.

Algorithm 2 Prologue to RAHT and its Inverse (IRAHT) (prologue)

Algorithm 3 Region Adaptive Hierarchical Transform

Algorithm 4 Inverse Region Adaptive Hierarchical Transform

V. ENCODING AND DECODING

In this section, we describe in detail encoding and decoding of dynamic triangle clouds. First we describe encoding and decoding of reference frames. Following that, we describe encoding and decoding of predicted frames. For both reference and predicted frames, we describe first how geometry is encoded and decoded, and then how color is encoded and decoded. The overall system is shown in Fig. 2.

A) Encoding and decoding of reference frames

For reference frames, encoding is summarized in Algorithm 8, while decoding is summarized in Algorithm 9, which can be found in the Appendix. For both reference and predicted frames, the geometry information is encoded and decoded first, since color processing depends on the decoded geometry.

1) Geometry encoding and decoding

We assume that the vertices in ${\bf V}^{(1)}$ are in Morton order. If not, we put them into Morton order and permute the indices in ${\bf F}^{(1)}$ accordingly. The lists ${\bf V}^{(1)}$ and ${\bf F}^{(1)}$ are the geometry-related quantities in the reference frame transmitted from the encoder to the decoder. ${\bf V}^{(1)}$ will be reconstructed at the decoder with some loss as $\hat {{\bf V}}^{(1)}$, and ${\bf F}^{(1)}$ will be reconstructed losslessly. We now describe the process.

At the encoder, the vertices in ${\bf V}^{(1)}$ are first quantized to the voxel grid, producing a list of quantized vertices $\hat {{\bf V}}^{(1)}$, the same length as ${\bf V}^{(1)}$. There may be duplicates in $\hat {{\bf V}}^{(1)}$, because some vertices may have collapsed to the same grid point. $\hat {{\bf V}}^{(1)}$ is then voxelized (without attributes), the effect of which is simply to remove the duplicates, producing a possibly slightly shorter list $\hat {{\bf V}}_v^{(1)}$ along with a list of indices ${\bf I}_v^{(1)}$ such that (in Matlab notation) $\hat {{\bf V}}^{(1)}=\hat {{\bf V}}_v^{(1)}({\bf I}_v^{(1)})$. Since $\hat {{\bf V}}_v^{(1)}$ has no duplicates, it represents a set of voxels. This set can be described by an octree. The byte sequence representing the octree can be compressed with any entropy encoder; we use gzip. The list of indices ${\bf I}_v^{(1)}$, which has the same length as $\hat {{\bf V}}^{(1)}$, indicates, essentially, how to restore the duplicates, which are missing from $\hat {{\bf V}}_v^{(1)}$. In fact, the indices in ${\bf I}_v^{(1)}$ increase in unit steps for all vertices in $\hat {{\bf V}}^{(1)}$ except the duplicates, for which there is no increase. The list of indices is thus a sequence of runs of unit increases alternating with runs of zero increases. This binary sequence of increases can be encoded with any entropy encoder; we use gzip on the run lengths. Finally, the list of faces ${\bf F}^{(1)}$ can be encoded with any entropy encoder; we again use gzip, though algorithms such as [Reference Mamou, Zaharia and Prêteux5,Reference Rossignac6] might also be used.

The decoder entropy decodes $\hat {{\bf V}}_v^{(1)}$, ${\bf I}_v^{(1)}$, and ${\bf F}^{(1)}$, and then recovers $\hat {{\bf V}}^{(1)}=\hat {{\bf V}}_v^{(1)}({\bf I}_v^{(1)})$, which is the quantized version of ${\bf V}^{(1)}$, to obtain both $\hat {{\bf V}}^{(1)}$ and ${\bf F}^{(1)}$.
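The handling of duplicate vertices can be sketched in Python as follows. The function names are hypothetical, indices are 0-based, and the sketch assumes the quantized vertices are already in Morton order so that duplicates are adjacent. The entropy coding of the run lengths (gzip in the experiments) is not shown.

```python
def dedupe_sorted(V_hat):
    """Remove adjacent duplicates from a Morton-ordered vertex list.

    Returns (V_v, I_v, increments), where V_hat[i] == V_v[I_v[i]] and
    increments[i] is 1 when vertex i starts a new voxel and 0 when it
    repeats the previous one.
    """
    V_v, I_v, increments = [], [], []
    for v in V_hat:
        if not V_v or v != V_v[-1]:
            V_v.append(v)
            increments.append(1)
        else:
            increments.append(0)
        I_v.append(len(V_v) - 1)
    return V_v, I_v, increments


def run_lengths(bits):
    """Lengths of alternating runs, starting with a run of ones."""
    runs, current, count = [], 1, 0
    for b in bits:
        if b == current:
            count += 1
        else:
            runs.append(count)
            current, count = b, 1
    runs.append(count)
    return runs


def restore(V_v, runs):
    """Decoder side: rebuild the full vertex list from the unique
    voxels and the run lengths of the index increments."""
    V_hat, idx, bit = [], -1, 1
    for r in runs:
        for _ in range(r):
            idx += bit
            V_hat.append(V_v[idx])
        bit ^= 1
    return V_hat
```

Only the unique voxels (through the octree) and the run lengths need to be transmitted; the decoder's `restore` step plays the role of the Matlab indexing $\hat {{\bf V}}_v^{(1)}({\bf I}_v^{(1)})$.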

2) Color encoding and decoding

The RAHT, required for transform coding of the color signal, is constructed from an octree, or equivalently from a voxelized point cloud. We first describe how to construct such a point set from the decoded geometry.

Let ${\bf V}_r^{(1)}=refine({\bf V}^{(1)},\,{\bf F}^{(1)},\,U)$ be the list of “refined vertices” obtained by upsampling, by factor U, the faces ${\bf F}^{(1)}$ whose vertices are ${\bf V}^{(1)}$. We assume that the colors in the list ${\bf C}_r^{(1)}={\bf C}^{(1)}$ correspond to the refined vertices in ${\bf V}_r^{(1)}$. In particular, the lists have the same length. Here, we subscript the list of colors by an “r” to indicate that it corresponds to the list of refined vertices.

When the vertices ${\bf V}^{(1)}$ are quantized to $\hat {{\bf V}}^{(1)}$, the refined vertices change to $\hat {{\bf V}}_r^{(1)}=refine(\hat {{\bf V}}^{(1)},\,{\bf F}^{(1)},\,U)$. The list of colors ${\bf C}_r^{(1)}$ can also be considered as indicating the colors on $\hat {{\bf V}}_r^{(1)}$. The list ${\bf C}_r^{(1)}$ is the color-related quantity in the reference frame transmitted from the encoder to the decoder. The decoder will reconstruct ${\bf C}_r^{(1)}$ with some loss as $\hat {{\bf C}}_r^{(1)}$. We now describe the process.

At the encoder, the refined vertices $\hat {{\bf V}}_r^{(1)}$ are obtained as described above. These vertices and the color information form a point cloud $(\hat {{\bf V}}_r^{(1)},\,{\bf C}_r^{(1)})$, which will be voxelized and compressed as follows. The vertices $\hat {{\bf V}}_r^{(1)}$ and their associated color attributes ${\bf C}_r^{(1)}$ are voxelized using (1), to obtain a list of voxels $\hat {{\bf V}}_{rv}^{(1)}$, the list of voxel colors ${\bf C}_{rv}^{(1)}$, and the list of indices ${\bf I}_{rv}^{(1)}$ such that (in Matlab notation) $\hat {{\bf V}}_r^{(1)}=\hat {{\bf V}}_{rv}^{(1)}({\bf I}_{rv}^{(1)})$. The list of indices ${\bf I}_{rv}^{(1)}$ has the same length as $\hat {{\bf V}}_r^{(1)}$, and contains for each vertex in $\hat {{\bf V}}_r^{(1)}$ the index of its corresponding vertex in $\hat {{\bf V}}_{rv}^{(1)}$. Particularly, if the upsampling factor U is large, there may be many refined vertices falling into each voxel. Hence the list $\hat {{\bf V}}_{rv}^{(1)}$ may be significantly shorter than the list $\hat {{\bf V}}_r^{(1)}$ (and the list ${\bf I}_{rv}^{(1)}$). However, unlike the geometry case, in this case, the list ${\bf I}_{rv}^{(1)}$ need not be transmitted since it can be reconstructed from the decoded geometry.

The list of voxel colors ${\bf C}_{rv}^{(1)}$, each with unit weight (see Section IVD), is transformed by RAHT to an equal-length list of transformed colors ${\bf TC}_{rv}^{(1)}$ and associated weights ${\bf W}_{rv}^{(1)}$. The transformed colors are then uniformly quantized with stepsize $\Delta _{color,intra}$ to obtain $\widehat {{\bf TC}}_{rv}^{(1)}$. The quantized RAHT coefficients are entropy coded as described in Section IV using the associated weights, and are transmitted. Finally, $\widehat {{\bf TC}}_{rv}^{(1)}$ is inverse transformed by RAHT to obtain $\hat {{\bf C}}_{rv}^{(1)}$. These represent the quantized voxel colors, and will be used as a reference for subsequent predicted frames.

At the decoder, similarly, the refined vertices $\hat {{\bf V}}_r^{(1)}$ are recovered from the decoded geometry information by first upsampling, by factor U, the faces ${\bf F}^{(1)}$ whose vertices are $\hat {{\bf V}}^{(1)}$ (both of which have already been decoded in the geometry step). $\hat {{\bf V}}_r^{(1)}$ is then voxelized (without attributes) to produce the list of voxels $\hat {{\bf V}}_{rv}^{(1)}$ and the list of indices ${\bf I}_{rv}^{(1)}$ such that $\hat {{\bf V}}_r^{(1)}=\hat {{\bf V}}_{rv}^{(1)}({\bf I}_{rv}^{(1)})$. The weights ${\bf W}_{rv}^{(1)}$ are recovered by using RAHT to transform a null signal on the vertices $\hat {{\bf V}}_r^{(1)}$, each with unit weight. Then $\widehat {{\bf TC}}_{rv}^{(1)}$ is entropy decoded using the recovered weights and inverse transformed by RAHT to obtain the quantized voxel colors $\hat {{\bf C}}_{rv}^{(1)}$. Finally, the quantized refined vertex colors can be obtained as $\hat {{\bf C}}_r^{(1)}=\hat {{\bf C}}_{rv}^{(1)}({\bf I}_{rv}^{(1)})$.
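The indexing relations above can be illustrated with a minimal NumPy sketch. It is not the paper's implementation: the function name `voxelize`, the cubic grid of side `voxel_size`, and the toy data are assumptions made for illustration. It shows only the unique voxel list, the index list, and the per-voxel point counts (the leaf-level weights that arise when every vertex has unit weight), not the RAHT itself.

```python
import numpy as np

def voxelize(points, voxel_size):
    """Quantize points to an integer grid (assumed cubic voxels).

    Returns the unique voxels, an index list mapping every input point to
    its voxel, and the number of points that fell into each voxel.
    """
    grid = np.floor(np.asarray(points, dtype=float) / voxel_size).astype(np.int64)
    voxels, inverse, counts = np.unique(
        grid, axis=0, return_inverse=True, return_counts=True)
    return voxels, inverse.reshape(-1), counts

# Toy refined vertices; the first two fall into the same voxel.
points = np.array([[0.1, 0.2, 0.3],
                   [0.4, 0.1, 0.2],
                   [1.5, 0.2, 0.3],
                   [0.2, 1.7, 0.9]])
voxels, idx, counts = voxelize(points, 1.0)

# Quantized vertices are recovered by indexing the voxel list: V_r = V_rv(I_rv).
quantized_points = voxels[idx]

# Decoded voxel colors (made-up values) are mapped back to the refined
# vertices with the same index list: C_r = C_rv(I_rv).
voxel_colors = np.array([[255, 0, 0], [0, 255, 0], [0, 0, 255]])
vertex_colors = voxel_colors[idx]
```

Because the decoder derives `idx` and `counts` from geometry it has already decoded, neither needs to be transmitted.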

B) Encoding and decoding of predicted frames

We assume that all N frames in a GOF are aligned. That is, the lists of faces, ${\bf F}^{(1)},\,\ldots ,\,{\bf F}^{(N)}$, are all identical. Moreover, the lists of vertices, ${\bf V}^{(1)},\,\ldots ,\,{\bf V}^{(N)}$, all correspond in the sense that the ith vertex in list ${\bf V}^{(1)}$ (say, $v^{(1)}(i)=v_i^{(1)}$) corresponds to the ith vertex in list ${\bf V}^{(t)}$ (say, $v^{(t)}(i)=v_i^{(t)}$), for all $t=1,\,\ldots ,\,N$. The sequence $(v^{(1)}(i),\,\ldots ,\,v^{(N)}(i))$ is the trajectory of vertex i over the GOF, $i=1,\,\ldots ,\,N_p$, where $N_p$ is the number of vertices.

Similarly, when the faces are upsampled by factor U to create new lists of refined vertices, ${\bf V}_r^{(1)},\,\ldots ,\,{\bf V}_r^{(N)}$, and their colors, ${\bf C}_r^{(1)},\,\ldots ,\,{\bf C}_r^{(N)}$, the $i_r$th elements of these lists also correspond to each other across the GOF, $i_r=1,\,\ldots ,\,N_c$, where $N_c$ is the number of refined vertices, or the number of colors.

The trajectory $\{(v^{(1)}(i),\,\ldots ,\,v^{(N)}(i)):i=1,\,\ldots ,\,N_p\}$ can be considered an attribute of vertex $v^{(1)}(i)$, and likewise the trajectories $\{(v_r^{(1)}(i_r),\,\ldots ,\,v_r^{(N)}(i_r)):i_r=1,\,\ldots ,\,N_c\}$ and $\{(c_r^{(1)}(i_r),\,\ldots ,\,c_r^{(N)}(i_r)):i_r=1,\,\ldots ,\,N_c\}$ can be considered attributes of refined vertex $v_r^{(1)}(i_r)$. Thus the trajectories can be partitioned according to how the vertex $v^{(1)}(i)$ and the refined vertex $v_r^{(1)}(i_r)$ are voxelized. As for any attribute, the average of the trajectories in each cell of the partition is used to represent all trajectories in the cell. Our scheme codes these representative trajectories. This could be a problem if trajectories diverge from the same, or nearly the same, point, for example, when clapping hands separate. However, this situation is usually avoided by restarting the GOF by inserting a keyframe, or reference frame, whenever the topology changes, and by using a sufficiently fine voxel grid.
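Voxelization with attributes, as described above, can be sketched as follows. This is an illustrative sketch rather than the paper's code: the function name `voxelize_with_attributes`, the cubic grid of side `voxel_size`, and the toy trajectories are assumptions. Each vertex of the reference frame carries its whole trajectory as an attribute, and trajectories that fall into the same cell are replaced by their average.

```python
import numpy as np

def voxelize_with_attributes(ref_points, attributes, voxel_size):
    """Group reference-frame points by voxel and average their attributes.

    ref_points : (P, 3) positions in the reference frame
    attributes : (P, ...) one attribute per point (e.g. an (N, 3) trajectory)
    Returns the unique voxels, the index list, and the per-voxel mean attribute.
    """
    grid = np.floor(np.asarray(ref_points, dtype=float) / voxel_size).astype(np.int64)
    voxels, inverse, counts = np.unique(
        grid, axis=0, return_inverse=True, return_counts=True)
    inverse = inverse.reshape(-1)
    attrs = np.asarray(attributes, dtype=float)
    sums = np.zeros((len(voxels),) + attrs.shape[1:])
    np.add.at(sums, inverse, attrs)               # accumulate attributes per cell
    means = sums / counts.reshape((-1,) + (1,) * (attrs.ndim - 1))
    return voxels, inverse, means

# Three vertices over a 2-frame GOF; vertices 0 and 1 share a voxel in frame 1.
ref = np.array([[0.2, 0.2, 0.2], [0.6, 0.4, 0.1], [2.3, 0.0, 0.0]])
traj = np.array([[[0.2, 0.2, 0.2], [0.2, 0.2, 1.2]],
                 [[0.6, 0.4, 0.1], [0.6, 0.4, 3.1]],
                 [[2.3, 0.0, 0.0], [2.3, 0.0, 0.5]]])
voxels, idx, rep_traj = voxelize_with_attributes(ref, traj, 1.0)
```

In this toy case vertices 0 and 1 end up 1.9 units apart along z in the second frame, yet both are represented by the single averaged trajectory `rep_traj[0]`. This is the divergence problem noted above, which a finer grid or a new keyframe mitigates.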

In this section, we show how to encode and decode the predicted frames, i.e., frames $t=2,\,\ldots ,\,N$, in each GOF. The frames are processed one at a time, with no look-ahead, to minimize latency. The pseudocode can be found in the Appendix: encoding is detailed in Algorithm 10, and decoding in Algorithm 11.

1) Geometry encoding and decoding

At the encoder, for frame t, as for frame 1, the vertices ${\bf V}^{(1)}$, or equivalently the vertices $\hat {{\bf V}}^{(1)}$, are voxelized. However, for frame t>1 the voxelization occurs with attributes ${\bf V}^{(t)}$. In this sense, the vertices ${\bf V}^{(t)}$ are projected back to the reference frame, where they are voxelized like attributes. As for frame 1, this produces a possibly slightly shorter list $\hat {{\bf V}}_v^{(1)}$ along with a list of indices ${\bf I}_v^{(1)}$ such that $\hat {{\bf V}}^{(1)}=\hat {{\bf V}}_v^{(1)}({\bf I}_v^{(1)})$. In addition, it produces an equal-length list of representative attributes, ${\bf V}_v^{(t)}$. Such a list is produced every frame. Therefore, the previous frame can be used as a prediction. The prediction residual $\Delta {\bf V}_v^{(t)}={\bf V}_v^{(t)}-\hat {{\bf V}}_v^{(t-1)}$ is transformed, quantized with stepsize $\Delta _{motion}$, inverse transformed, and added to the prediction to obtain the reproduction $\hat {{\bf V}}_v^{(t)}$, which goes into the frame buffer. The quantized transform coefficients are entropy coded. We use adaptive RLGR as the entropy coder. In this process, the prediction residuals correspond to attributes of a point cloud given by the triangles' vertices at the reference frame. Note that the RAHT used to transform the prediction residual $\Delta {\bf V}_v^{(t)}$ is built using the voxelized point cloud at the reference frame $\hat {{\bf V}}_v^{(1)}$, which is different from the RAHT used for color coding.
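The closed-loop prediction described above can be sketched in a few lines. This is a simplified illustration under stated assumptions: a generic orthonormal matrix `Q` stands in for RAHT, entropy coding is omitted, and the names `encode_predicted_frame`, `forward`, and `inverse` are hypothetical. The point it shows is that the encoder predicts from its own reproduction, not from the original previous frame, so quantization error does not accumulate over the GOF.

```python
import numpy as np

def encode_predicted_frame(current, prev_reproduction, step, forward, inverse):
    """One step of closed-loop predictive coding.

    Returns the integer coefficients (what an entropy coder would receive)
    and the reproduction that goes into the frame buffer.
    """
    residual = current - prev_reproduction            # prediction residual
    coeffs = forward(residual)                        # transform (RAHT in the paper)
    q = np.round(coeffs / step).astype(np.int64)      # uniform scalar quantization
    reproduction = prev_reproduction + inverse(q * step)
    return q, reproduction

rng = np.random.default_rng(0)
n_voxels = 8
# Assumption: a random orthonormal basis used as a stand-in for RAHT.
Q, _ = np.linalg.qr(rng.standard_normal((n_voxels, n_voxels)))
forward = lambda x: Q.T @ x
inverse = lambda c: Q @ c

step = 0.5                                            # plays the role of the motion stepsize
frames = [rng.standard_normal((n_voxels, 3)) for _ in range(4)]
buffer = frames[0].copy()                             # pretend frame 1 is reproduced exactly
max_err = 0.0
for frame in frames[1:]:
    q, buffer = encode_predicted_frame(frame, buffer, step, forward, inverse)
    max_err = max(max_err, float(np.abs(frame - buffer).max()))
```

With an orthonormal transform, each coefficient is off by at most half a step, so every reproduced coordinate stays within `step / 2 * sqrt(n_voxels)` of the original in every frame, regardless of the frame's position in the GOF.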

At the decoder, the entropy code for the quantized transform coefficients of the prediction residual is received, entropy decoded, inverse transformed, inverse quantized, and added to the prediction to obtain $\hat {{\bf V}}_v^{(t)}$, which goes into the frame buffer. Finally, $\hat {{\bf V}}^{(t)}=\hat {{\bf V}}_v^{(t)}({\bf I}_v^{(1)})$ is sent to the renderer.
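The decoder side can be sketched in the same spirit. Again this is an illustration, not the paper's Algorithm 11: the function name `decode_predicted_frame` is hypothetical, the identity map stands in for the inverse RAHT, and the coefficient values are made up. Since the transform is linear and a single stepsize is used, scaling by the stepsize before or after the inverse transform gives the same result.

```python
import numpy as np

def decode_predicted_frame(q, prev_reproduction, step, inverse):
    """Rebuild the current voxel attributes from entropy-decoded integers."""
    return prev_reproduction + inverse(q * step)

# Frame buffer holding the previous reproduction for two voxels.
prev = np.array([[0.0, 0.0, 0.0],
                 [10.0, 0.0, 0.0]])
q = np.array([[1, 0, -2],
              [0, 3, 0]])            # made-up entropy-decoded coefficients
step = 0.5
index = np.array([0, 1, 1, 0])       # index list from the reference frame

# Assumption: identity used as a stand-in for the inverse RAHT.
recon = decode_predicted_frame(q, prev, step, lambda c: c)

# Expand per-voxel values to per-vertex values before rendering.
vertices = recon[index]
```

Here `recon` replaces `prev` in the frame buffer for the next frame, and `vertices` is what would be handed to the renderer.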

2) Color encoding and decoding

At the encoder, for frame t>1, as for frame t=1, the refined vertices $\hat {{\bf V}}_r^{(1)}$ are voxelized with attributes ${\bf C}_r^{(t)}$. In this sense, the colors ${\bf C}_r^{(t)}$ are projected back to the reference frame, where they are voxelized. As for frame t=1, this produces a significantly shorter list $\hat {{\bf V}}_{rv}^{(1)}$ along with a list of indices ${\bf I}_{rv}^{(1)}$ such that $\hat {{\bf V}}_r^{(1)}=\hat {{\bf V}}_{rv}^{(1)}({\bf I}_{rv}^{(1)})$. In addition, it produces a list of representative attributes, ${\bf C}_{rv}^{(t)}$. Such a list is produced every frame. Therefore, the previous frame can be used as a prediction. The prediction residual $\Delta {\bf C}_{rv}^{(t)}={\bf C}_{rv}^{(t)}-\hat {{\bf C}}_{rv}^{(t-1)}$ is transformed, quantized with stepsize $\Delta _{color,inter}$, inverse transformed, and added to the prediction to obtain the reproduction $\hat {{\bf C}}_{rv}^{(t)}$, which goes into the frame buffer. The quantized transform coefficients are entropy coded. We use adaptive RLGR as the entropy coder.

At the decoder, the entropy code for the quantized transform coefficients of the prediction residual is received, entropy decoded, inverse transformed, inverse quantized, and added to the prediction to obtain $\hat {{\bf C}}_{rv}^{(t)}$, which goes into the frame buffer. Finally, $\hat {{\bf C}}_r^{(t)}=\hat {{\bf C}}_{rv}^{(t)}({\bf I}_{rv}^{(1)})$ is sent to the renderer.

C) Rendering for visualization and distortion computation

The decompressed dynamic triangle cloud given by $\lbrace \hat {{\bf V}}^{(t)},\,\hat {{\bf C}}_r^{(t)},\,{\bf F}^{(t)}\rbrace _{t=1}^N$ may have varying density across triangles, resulting in some holes or transparent-looking regions, which are not satisfactory for visualization. We apply the triangle refinement function from Algorithm 5 to the set of vertices and faces and produce the redundant representation $\lbrace \hat {{\bf V}}_r^{(t)},\,\hat {{\bf C}}_r^{(t)},\,{\bf F}_r^{(t)}\rbrace _{t=1}^N$. This sequence consists of a dynamic point cloud $\lbrace \hat {{\bf V}}_r^{(t)},\,\hat {{\bf C}}_r^{(t)}\rbrace _{t=1}^N$, whose colored points lie on the surfaces of triangles given by $\lbrace \hat {{\bf V}}_r^{(t)},\,{\bf F}_r^{(t)}\rbrace _{t=1}^N$. This representation is further refined using a similar method to increase the spatial resolution, adding a linear interpolation function for the color attributes as shown in Algorithm 6. The output is a denser point cloud, denoted by $\lbrace \hat {{\bf V}}_{rr}^{(t)},\,\hat {{\bf C}}_{rr}^{(t)}\rbrace _{t=1}^N$. We use this denser point cloud for visualization and distortion computation in the experiments described in the next section.
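One plausible way to densify colored triangles with linear color interpolation is sketched below. It is not a transcription of Algorithm 5 or 6: the regular barycentric sampling pattern, the function name `refine_triangles`, and the toy triangle are assumptions chosen for illustration. Each triangle is sampled at the barycentric coordinates $(i/U,\,j/U,\,1-(i+j)/U)$, and the same weights are used to interpolate the vertex colors.

```python
import numpy as np

def refine_triangles(vertices, colors, faces, U):
    """Sample each triangle on a regular barycentric grid of resolution U.

    vertices : (P, 3) positions, colors : (P, 3) per-vertex colors,
    faces    : (F, 3) integer vertex indices.
    Returns (F * K, 3) points and colors, with K = (U + 1) * (U + 2) / 2.
    """
    bary = np.array([(i, j, U - i - j)
                     for i in range(U + 1)
                     for j in range(U + 1 - i)], dtype=float) / U
    tri_v = vertices[faces]                        # (F, 3, 3) corner positions
    tri_c = colors[faces]                          # (F, 3, 3) corner colors
    pts = np.einsum('kb,fbd->fkd', bary, tri_v).reshape(-1, 3)
    cols = np.einsum('kb,fbd->fkd', bary, tri_c).reshape(-1, colors.shape[1])
    return pts, cols

# One triangle with red, green, and blue corners.
vertices = np.array([[0.0, 0.0, 0.0], [2.0, 0.0, 0.0], [0.0, 2.0, 0.0]])
colors = np.array([[255.0, 0.0, 0.0], [0.0, 255.0, 0.0], [0.0, 0.0, 255.0]])
faces = np.array([[0, 1, 2]])
pts, cols = refine_triangles(vertices, colors, faces, U=2)
```

With `U=2` each triangle yields six points: its three corners and the three edge midpoints, each midpoint colored with the average of its two corner colors. Triangles that share vertices or edges produce duplicate points under this scheme, which a subsequent voxelization would merge.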

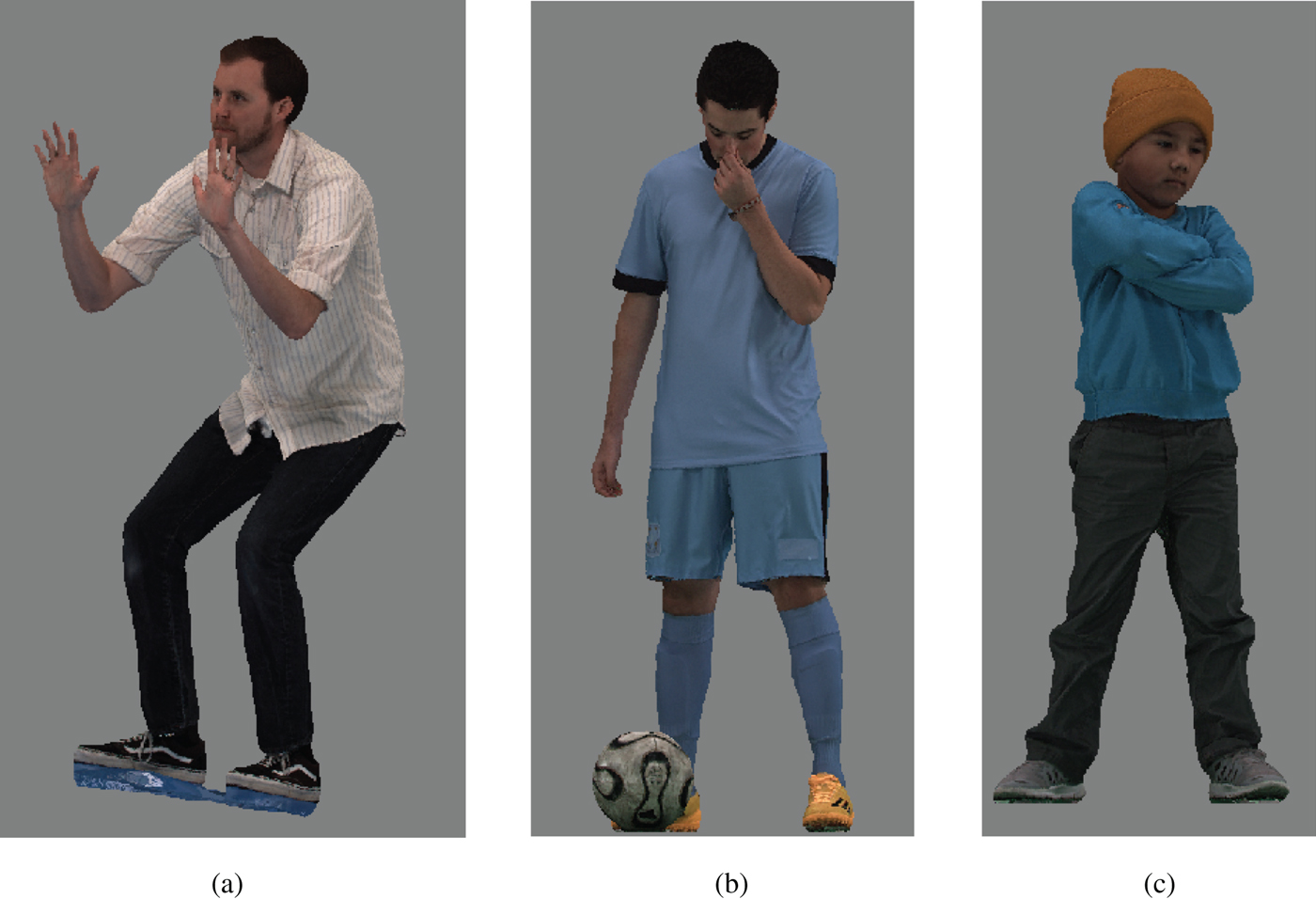

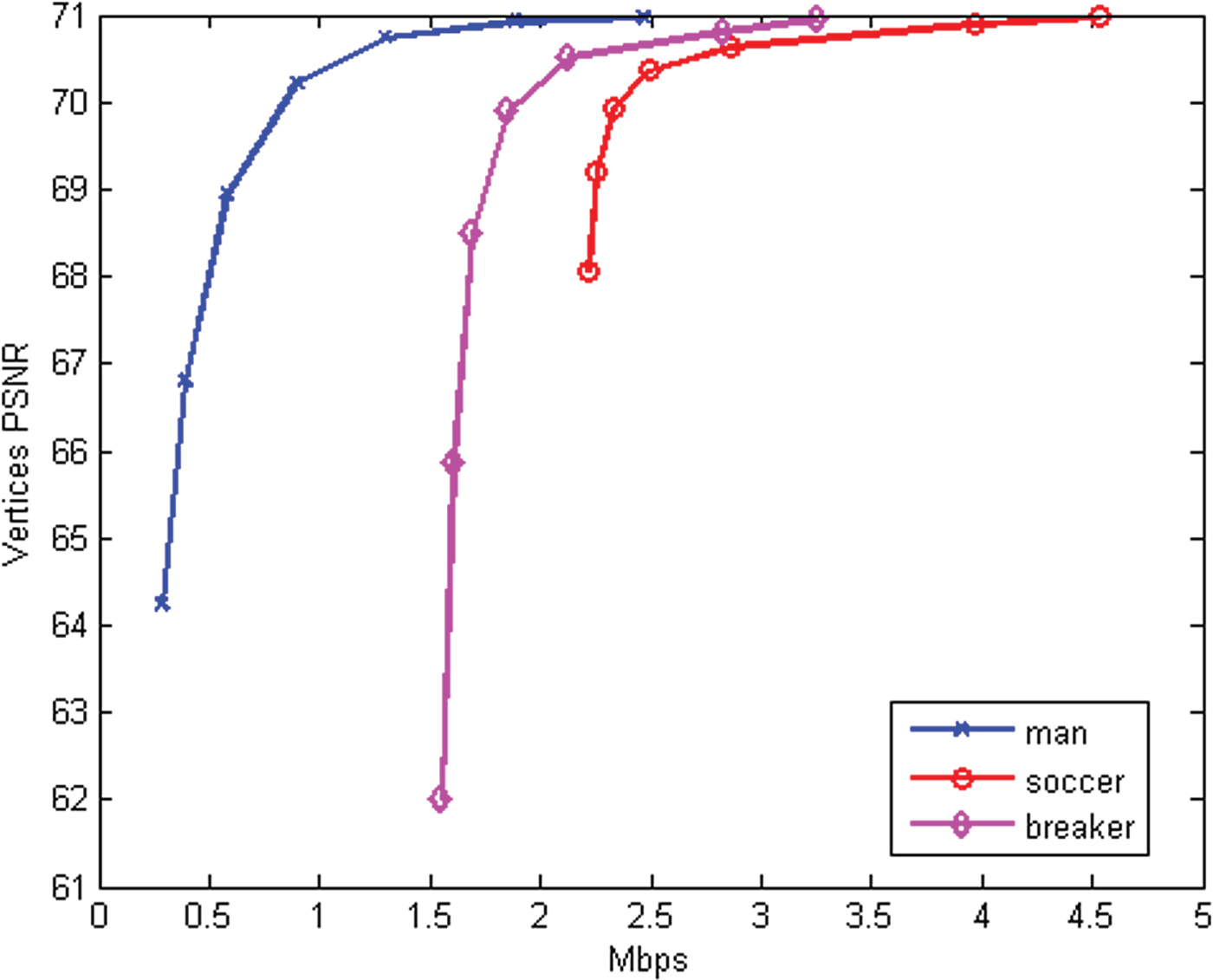

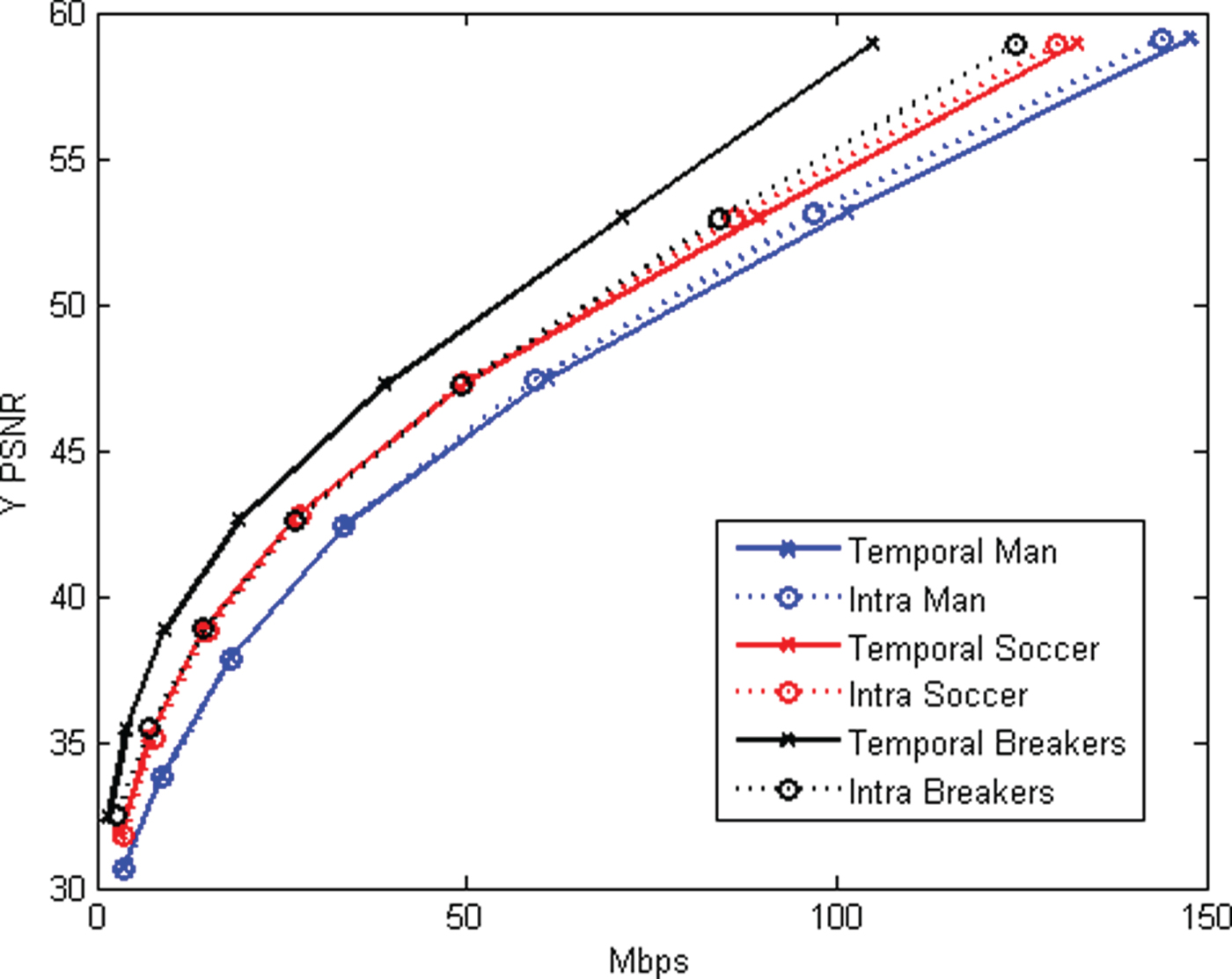

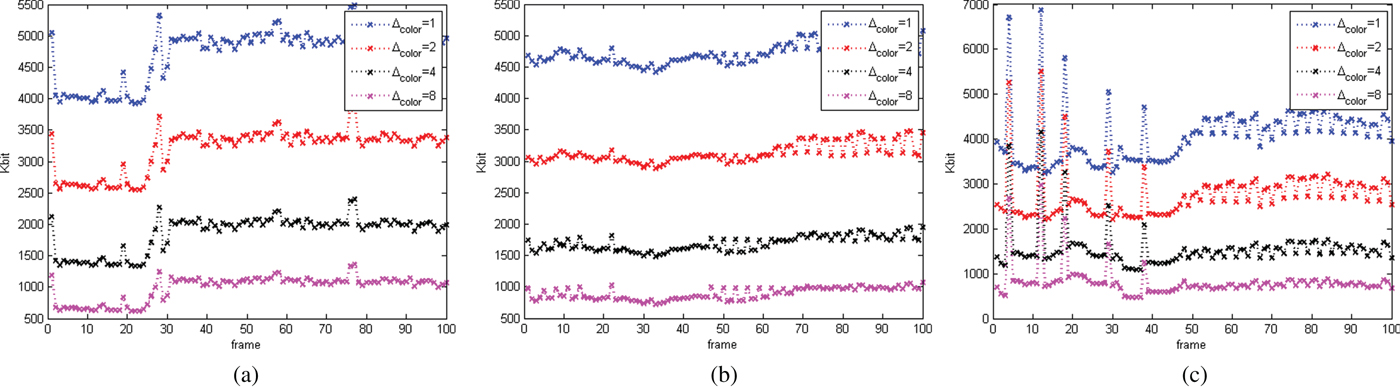

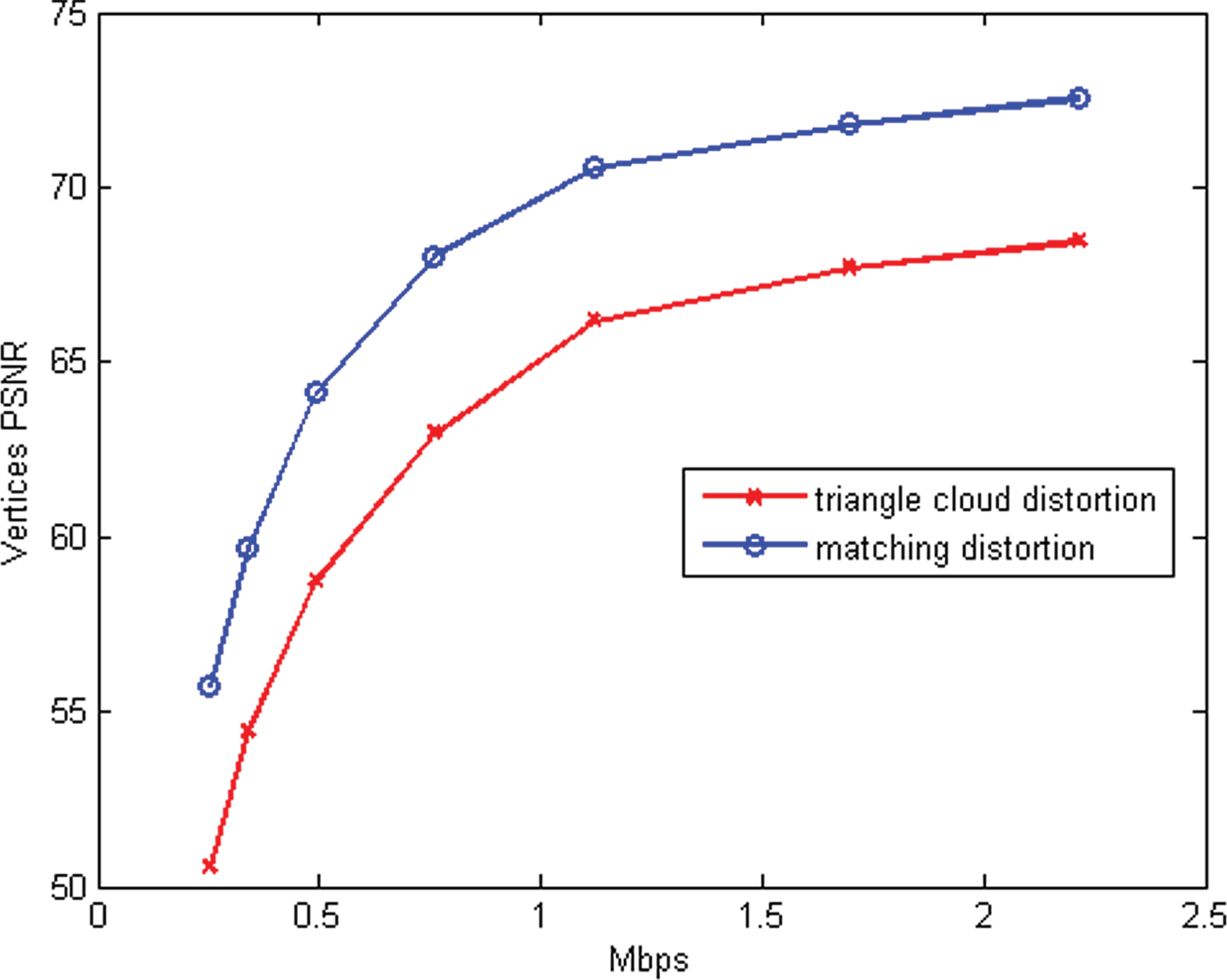

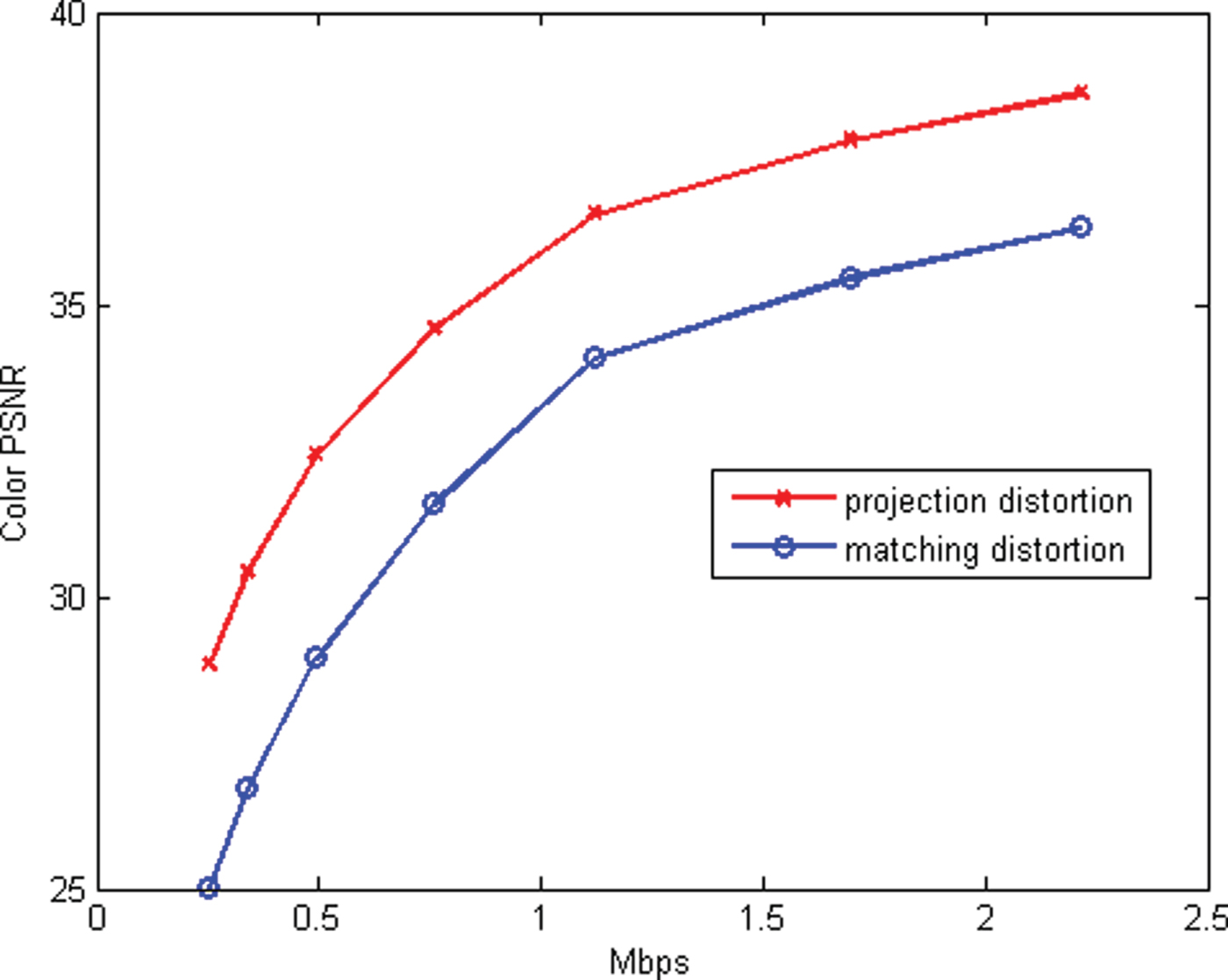

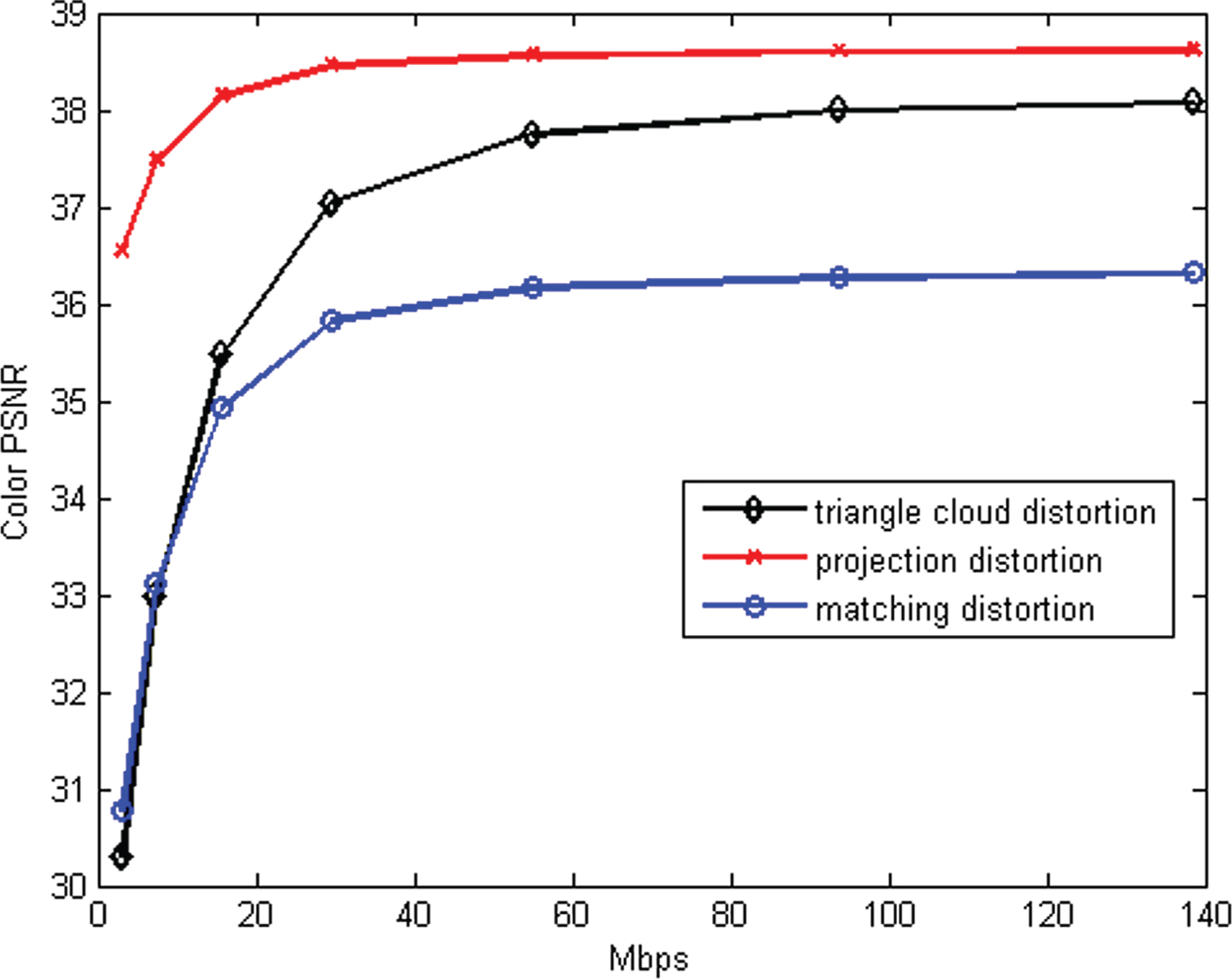

VI. EXPERIMENTS