1. Introduction

Long-dated contingent claims are relevant in insurance, pension fund management and derivative valuation. This paper proposes a shift in the valuation and production of long-term contracts, away from classical no-arbitrage valuation and towards less expensive valuation under the real-world probability measure. In contrast to risk-neutral valuation, which uses the savings account as reference unit, the proposed real-world valuation employs the long-term best-performing portfolio, the numéraire portfolio of the equity market, as benchmark and reference unit. The numéraire portfolio is the strictly positive, tradable portfolio that, when used as benchmark, makes all benchmarked non-negative portfolios supermartingales. This means that their current benchmarked values are greater than or equal to their expected future benchmarked values. Furthermore, the benchmarked real-world value of a benchmarked contingent claim is proposed to equal its real-world conditional expectation. This yields the minimal possible value for the benchmarked contingent claim. It turns out that the pooled total benchmarked hedge error of a well-diversified book of contracts issued by an insurance company can practically vanish through diversification when the number of contracts becomes large. In long-term asset and liability valuation, real-world valuation can lead to significantly lower values than those suggested by classical valuation arguments, which assume the existence of an equivalent risk-neutral probability measure.

Under the benchmark approach (BA), described in Platen & Heath (2010), instead of relying on the domestic savings account as the reference unit, a benchmark in the form of the best-performing, strictly positive, tradable portfolio is chosen as numéraire. More precisely, the BA employs the numéraire portfolio as benchmark, whose origin can be traced back to Long (1990), and which is equal to the growth optimal portfolio; see Kelly (1956). In Bühlmann & Platen (2003), a discrete time version of the BA was introduced into the actuarial literature. The current paper aims to popularise its continuous time version, which allows one to produce long-term payoffs less expensively than the classical risk-neutral production methodology.

In recent years, the problem of accurately valuing long-term assets and liabilities held by insurance companies, banks and pension funds has become increasingly important. How these institutions perform such valuations often remains unclear. The recent experience with low interest rate environments and the economic impacts of a pandemic suggests that some major changes are due in these industries concerning the valuation and production methods employed. One possible explanation for the need for change, to be explored in this article, is that the risk-neutral valuation paradigm itself may be too expensive, especially when it is applied to the valuation of long-term contracts. It leads to a more expensive production method than necessary, as will be explained in the current paper.

The structure of the paper is as follows: section 2 gives a brief survey of the literature on valuation methods in insurance and finance. Section 3 introduces the benchmark approach (BA). Real-world valuation is described in section 4. Section 5 illustrates three examples of real-world valuation and hedging of long-term annuities, with annual payments linked to a mortality index, a savings account or an equity index, possibly involving a guaranteed death benefit equal to a roll-up of the initial premium. Section 6 concludes.

2. Valuation methods for long-term contracts

One of the most dynamic areas in the current risk management literature is the valuation of long-term contracts, including variable annuities. The latter represent long-term contracts with payoffs that depend on insured events and on underlying assets that are traded in financial markets. The valuation methods can be categorised into three main types: actuarial valuation or expected present value, risk-neutral valuation and utility maximisation valuation.

Actuarial valuation (expected present value)

One of the pioneers of calculating present values of contingent claims for life insurance companies was James Dodson, whose work is described in the historical accounts of Turnbull (2016) and Dodson (1995). The application of such methods to with-profits policies ensued. In the late 1960s, US life insurers entered the variable annuity market, as mentioned in Sloane (1970), where such products required assumptions on the long-term behaviour of equity markets. Many authors have analysed various actuarial models of the long-term evolution of stochastic equity markets, such as Wise (1984b) and Wilkie (1985, 1987, 1995).

Since the work of Redington (1952), the matching of well-defined cash flows with liquidly traded ones, while minimising the risk of reserves, has been a widely used valuation method in insurance. For instance, Wise (1984a, b, 1987a, b, 1989), Wilkie (1985) and Keel & Müller (1995) study contracts when a perfect match is not possible.

Risk-neutral valuation

The main stream of research, however, follows the concept of no-arbitrage valuation in the sense of Ross (1976) and Harrison & Kreps (1979). This approach has been widely used in finance, where it appears in the guise of risk-neutral valuation. The earliest applications of no-arbitrage valuation to variable annuities are in the papers by Brennan & Schwartz (1976, 1979) and Boyle & Schwartz (1977), which extend the Black-Scholes-Merton option valuation (see Black & Scholes 1973 and Merton 1973) to the case of equity-linked insurance contracts.

The Fundamental Theorem of Asset Pricing, in its most general form formulated by Delbaen & Schachermayer (1998), establishes a correspondence between the “no free lunch with vanishing risk” no-arbitrage concept and the existence of an equivalent risk-neutral probability measure. This important result demonstrates that theoretically founded classical no-arbitrage pricing requires the restrictive assumption that an equivalent risk-neutral probability measure exists. In such a setting, the effect of stochastic interest rates on the risk-neutral value of a guarantee is crucial in insurance and has been discussed by many authors, such as Bacinello & Ortu (1993, 1996), Aase & Persson (1994) and Huang & Cairns (2004, 2005).

Risk-neutral valuation for incomplete markets

In reality, one has to deal with the fact that markets are incomplete and insurance payments are not fully hedgeable. The choice of a risk-neutral pricing measure is, therefore, not unique, as pointed out by Föllmer & Sondermann (1986) and Föllmer & Schweizer (1991), for example. Hofmann et al. (1992), Gerber & Shiu (1994), Gerber (1997), Jaimungal & Young (2005), Duffie & Richardson (1991) and Schweizer (1992) address this issue by suggesting certain mean-variance hedging methods based on a form of variance- or risk-minimising objective, assuming the existence of a particular risk-neutral measure. In the latter case, the so-called minimal equivalent martingale measure, due to Föllmer & Schweizer (1991), emerges as the pricing measure. This valuation method is also known as local risk minimisation and was considered by Möller (1998, 2001), Schweizer (2001) and Dahl & Möller (2006) for the valuation of insurance products.

Expected utility maximisation

Another approach involves the maximisation of expected terminal utility; see Karatzas et al. (1991), Kramkov & Schachermayer (1999) and Delbaen et al. (2002). In this case, the valuation is based on a particular form of utility indifference pricing. This form of valuation has been applied by Hodges & Neuberger (1989) and later by Davis (1997) and others. It has been used to value equity-linked insurance products by Young & Zariphopoulou (2002a, b), Young (2003) and Moore & Young (2003).

Typically in the context of expected utility maximisation, there is an ongoing debate on the links between the valuation of insurance liabilities and financial economics, for which the reader is referred to Reitano (1997), Longley-Cook (1998), Babbel & Merrill (1998), Möller (1998, 2002), Phillips et al. (1998), Girard (2000), Lane (2000) and Wang (2000, 2002). Equilibrium modelling from a macro-economic perspective has been the focus of a line of research that can be traced back to Debreu (1982), Starr (1997) and Duffie (2001).

Stochastic discount factors

Several no-arbitrage pricing concepts have been popular in finance that are equivalent to the risk-neutral approach. For instance, Cochrane (2001) employs the notion of a stochastic discount factor. The use of a state-price density, a deflator or a pricing kernel has been considered by Constantinides (1992), Cochrane (2001) and Duffie (2001), respectively. Another way of describing classical no-arbitrage pricing was pioneered by Long (1990) and further developed in Bajeux-Besnainou & Portait (1997) and Becherer (2001), who use the numéraire portfolio as numéraire instead of the savings account and employ the real-world probability measure as pricing measure to recover risk-neutral prices.

Real-world valuation under the benchmark approach

The previously mentioned line of research involving the numéraire portfolio comes closest to the form of real-world valuation under the benchmark approach (BA) proposed in Platen (2002b) and Platen & Heath (2010). The primary difference is that the BA no longer assumes the existence of an equivalent risk-neutral probability measure. In so doing, it makes a much richer class of models available for consideration and permits several self-financing portfolios to replicate the same contingent claim, from which it can select the least expensive one as the corresponding production process. Even in a complete market, the BA can hedge many typical payoffs less expensively than classical no-arbitrage pricing allows. Throughout this article, we use the terms replicate and hedge interchangeably in respect of the payoff of a given contingent claim.

The BA employs the best-performing, strictly positive, tradable portfolio as benchmark and makes it the central reference unit for modelling, valuation and hedging. A well-diversified equity index can be used as benchmark, as explained in Platen & Heath (2010) and Platen & Rendek (2012). In some sense, real-world valuation can be interpreted along the lines of budgeting. Since we will demonstrate that one can hedge real-world values, it is about calculating what a contingent claim is expected to cost when producing it through hedging.

All valuations are performed under the real-world probability measure and, therefore, labelled “real-world valuations.” When an equivalent risk-neutral probability measure exists for a complete market model, real-world valuation yields the same value as risk-neutral valuation. When there is no equivalent risk-neutral probability measure for the market model, then risk-neutral prices can still be employed, as we demonstrate in the paper, but these are usually more expensive than the respective real-world prices.

In Du & Platen (2016), the concept of benchmarked risk minimisation has been introduced, which yields via the real-world value the minimal possible value of a not fully hedgeable contingent claim and minimizes the fluctuations of the profits and losses when denominated in units of the numéraire portfolio. The profit and loss for the hedge portfolio of a contingent claim is defined as the theoretical value minus the realized gains from trade minus the initial value. Risk minimisation that is close to the previously mentioned concept of local risk minimisation of Föllmer & Schweizer (1991) and Föllmer & Sondermann (1986) was studied under the benchmark approach in Biagini et al. (2014).

Stochastic mortality rates

Note that stochastic mortality rates are easily incorporated in the pricing of insurance products, as demonstrated by Milevsky & Promislow (2001), Dahl (2004), Kirch & Melnikov (2005), Cairns et al. (2006a, b, 2008), Biffis (2005), Melnikov & Romaniuk (2006, 2008) and Jalen & Mamon (2008). Most of these authors assume that the market is complete with respect to mortality risk, which means that it can be removed by diversification.

3. Benchmark approach

Since the BA is crucial for the less expensive production method proposed in this paper, and since its continuous time version has not been outlined in an actuarial journal, this and the following sections give a survey of the BA, which goes beyond the results presented in Platen (2002b) and Platen & Heath (2010). Consider a market comprising a finite number $J+1$ of primary security accounts. An example of such a security could be an account containing shares of a company with all dividends reinvested in that stock. A savings account held in some currency is another example of a primary security account. In reality, time is continuous, and this paper considers continuous time models that are described by Itô stochastic differential equations. These can provide compact and elegant mathematical descriptions of asset value dynamics. We work on a filtered probability space $(\Omega , {\mathcal{F}},{\underline{\mathcal{F}}} , P )$ with filtration ${\underline{\mathcal{F}}} = ({\mathcal{F}}_t)_{t\ge 0}$ satisfying the usual conditions, as in Karatzas & Shreve (1991).

The key assumption of the BA is that there exists a best-performing, strictly positive, tradable portfolio in the given investment universe, which we specify later on as the numéraire portfolio. This benchmark portfolio can be interpreted as a universal currency. Its existence turns out to be sufficient for the formulation of powerful results concerning diversification, portfolio optimisation and valuation.

The benchmarked value of a security represents its value denominated in units of the benchmark, the numéraire portfolio. Denote by ${\hat{S}}^j_t$ the benchmarked value of the jth primary security account, $j \in \{ 0,1,\ldots ,J\}$, at time $t \geq 0$. The 0th primary security account is chosen to be the savings account of the domestic currency. The particular dynamics of the primary security accounts are not important for the formulation of several statements presented below. For simplicity, taxes and transaction costs are neglected in the paper.

The market participants can form self-financing portfolios with primary security accounts as constituents. A portfolio at time t is characterized by the number $\delta^j_t$ of units held in the jth primary security account, $j \in \{ 0,1,2,\ldots ,J \}$, $t \geq 0$. Assume for any given strategy $\delta=\{\delta_t=(\delta^0_t,\delta^1_t,\ldots , \delta^J_t )^\top, t \geq 0 \}$ that the values $\delta^0_t,\delta^1_t,\ldots ,\delta^J_t $ depend only on information available at the time t. The value of the benchmarked portfolio, which means its value denominated in units of the benchmark, is given at time t by the sum

\begin{equation} {\hat{S}}^\delta_t = \sum_{j=0}^J \delta^j_t\,{\hat{S}}^j_t, \end{equation}

for $t \geq 0$. Since at any finite time, there is only finite total wealth available in the market, the paper considers only strategies whose benchmark and associated benchmarked portfolio values remain finite at all finite times.
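The sum defining the benchmarked portfolio value can be sketched in a few lines of Python. This is only an illustration: the three accounts, their prices, the holdings and the benchmark value are hypothetical numbers, and the benchmarked prices are obtained by dividing domestic-currency prices by the benchmark value, in line with the definition of a benchmarked value as a value denominated in units of the benchmark.

```python
from typing import Sequence


def benchmarked_portfolio_value(units: Sequence[float],
                                benchmarked_prices: Sequence[float]) -> float:
    """Return the sum over j of delta^j_t * S_hat^j_t at one point in time."""
    if len(units) != len(benchmarked_prices):
        raise ValueError("need one holding per primary security account")
    return sum(d * s for d, s in zip(units, benchmarked_prices))


# Hypothetical market with J + 1 = 3 primary security accounts (assumed numbers).
benchmark_value = 200.0            # value of the benchmark in domestic currency
prices = [1.0, 50.0, 80.0]         # account values in domestic currency; index 0 is the savings account
holdings = [100.0, 2.0, 1.5]       # units delta^j_t held in each account

# Benchmarked prices: account values denominated in units of the benchmark.
benchmarked_prices = [p / benchmark_value for p in prices]

value_hat = benchmarked_portfolio_value(holdings, benchmarked_prices)
value_domestic = value_hat * benchmark_value   # convert back to domestic currency
```

Multiplying the benchmarked value by the benchmark value recovers the portfolio value in domestic currency, here 100 + 100 + 120 = 320 up to floating-point rounding.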

Let $E_t(X)=E(X|{\mathcal{F}}_t)$ denote the expectation of a random variable X under the real-world probability measure P, conditioned on the information available at time t captured by ${\mathcal{F}}_t$ (e.g. see section 8 of Chapter 1 of Shiryaev 1984). This allows us to formulate the main assumption of the BA as follows:

Assumption 1. There exists a strictly positive benchmark, called the numéraire portfolio (NP), such that each benchmarked non-negative portfolio ${\hat{S}}^\delta_t$ forms a supermartingale, which means that

\begin{equation} {\hat{S}}^\delta_t \geq E_t\left({\hat{S}}^\delta_s\right) \end{equation}

for all $0 \leq t \leq s < \infty$.

Inequality (2) can be referred to as the supermartingale property of benchmarked securities. In the sense of (2), the benchmark represents the best-performing portfolio, since every benchmarked non-negative portfolio either trends downward in the mean or has no trend. In general, the numéraire portfolio (NP) coincides with the growth optimal portfolio, which is the portfolio that maximizes expected logarithmic utility; see Kelly (1956). Since only the existence of the numéraire portfolio is required, the benchmark approach reaches beyond the classical no-arbitrage modelling world.

According to Assumption 1, the current benchmarked value of a non-negative portfolio is greater than or equal to its expected future benchmarked values. Assumption 1 guarantees several fundamental properties of any useful financial market model without assuming a particular dynamic for the asset values. For example, it implies the absence of economically meaningful arbitrage, which means that any strictly positive portfolio remains finite at any finite time because the best-performing portfolio, the NP, has this property. As a consequence of the supermartingale property (2) and because a non-negative supermartingale that reaches zero is absorbed at zero, no wealth can be created from zero initial capital under limited liability.

Recall that for the classical risk-neutral valuation, the corresponding no-arbitrage concept is formalised as “no free lunch with vanishing risk” (NFLVR); see Delbaen & Schachermayer (1994). The BA assumes that the NP remains finite at any finite time, which is equivalent to the “no unbounded profits with bounded risk” (NUPBR) no-arbitrage concept; see Karatzas & Kardaras (2007). Under the BA, an equivalent martingale measure is not required to exist, and therefore, benchmarked portfolio strategies are permitted to form strict supermartingales and not only martingales, a phenomenon which we exploit in the current paper.

Another fundamental property that follows directly from the supermartingale property (2) is that the benchmark, the NP, is unique. To see this, consider two strictly positive portfolios that are supposed to represent the benchmark. The first portfolio, when expressed in units of the second one, must satisfy the supermartingale property (2). By the same argument, the second portfolio, when expressed in units of the first one, must also satisfy the supermartingale property. Consequently, by Jensen’s inequality both portfolios must be identical. Thus, the value process of the benchmark that starts with given strictly positive initial capital is unique. Due to possible redundancies in the set of primary security accounts, this does not imply uniqueness for the trading strategy generating the benchmark.
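The Jensen step in this argument can be spelled out as follows. This is a sketch of the standard reasoning, and the ratio $X$ is notation introduced here only for illustration. Let $S^{1}$ and $S^{2}$ be two strictly positive candidate benchmarks with the same initial value and set $X_t = S^{1}_t/S^{2}_t$, so that $X_0 = 1$. Applying the supermartingale property (2) first with $S^{2}$ and then with $S^{1}$ as benchmark gives $E_t(X_s) \leq X_t$ and $E_t(1/X_s) \leq 1/X_t$ for $0 \leq t \leq s < \infty$. Since $x \mapsto 1/x$ is strictly convex on $(0,\infty)$, Jensen's inequality yields

\begin{equation*} \frac{1}{X_t} \geq E_t\left(\frac{1}{X_s}\right) \geq \frac{1}{E_t(X_s)} \geq \frac{1}{X_t}, \end{equation*}

so that all inequalities hold with equality. Equality in Jensen's inequality for a strictly convex function forces $X_s$ to coincide almost surely with $E_t(X_s) = X_t$, and taking $t = 0$ gives $X_s = 1$ for all $s \geq 0$.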

Assumption 1 is satisfied for any reasonable financial market model. It simply asserts the existence of a best-performing portfolio that does not reach infinity at any finite time. This requirement can be interpreted as the absence of economically meaningful arbitrage, which means that the benchmark and all associated benchmarked self-financing portfolios remain finite at finite times. In Theorem 14.1.7 of Chapter 14 of Platen & Heath (2010), Assumption 1 has been verified for jump diffusion markets, which cover a wide range of possible market dynamics. Karatzas & Kardaras (2007) and Christensen & Larsen (2007) show that Assumption 1 is satisfied for any reasonable semimartingale model. Note that Assumption 1 permits us to model benchmarked primary security accounts that are not martingales. This is necessary for realistic long-term market modelling, as will be demonstrated in section 5.

By referring to results in Platen (2004), Le & Platen (2006), Platen & Rendek (2012) and (2017), one can say that the benchmark portfolio is not only a theoretical construct, but can be approximated by well-diversified portfolios, for example by the MSCI world stock index for the global equity market or the S&P500 total return index for the US equity market.

A special type of security emerges when equality holds in relation (2).

Definition. A security is called fair if its benchmarked value ${\hat{V}}_t$ forms a martingale; that is, the current value of the process $\hat{V}$ is the best forecast of its future values, which means that

\begin{equation} {\hat{V}}_t = E_t\left({\hat{V}}_s\right) \end{equation}

for all $0 \leq t \leq s < \infty$.

Note that the above notion of a fair security employs the NP as the benchmark. The BA allows us to consider securities that are not fair. This important flexibility is missing in the classical no-arbitrage approach, which essentially always assumes equality in (3). Securities that are not fair will be required when modelling the market realistically over long time periods.

4. Real-world valuation

As stated earlier, the most obvious difference between the BA and the classical risk-neutral approach is the choice of the pricing measure. The former uses the real-world probability measure with the NP as numéraire for valuation, while the savings account is the chosen numéraire under the risk-neutral approach, which assumes the existence of an equivalent risk-neutral probability measure. Under the risk-neutral approach, this assumption is imposed in addition to our Assumption 1 and, therefore, significantly reduces the class of models and phenomena considered. The supermartingale property (2) ensures that the expected return of a benchmarked non-negative portfolio can be at most zero. In the case of a fair benchmarked portfolio, the expected return is precisely zero. The current benchmarked value of such a portfolio is, therefore, the best forecast of its benchmarked future values. The risk-neutral approach assumes that for complete markets the savings account is fair, which seems to be at odds with evidence; see for example Baldeaux et al. (2015) and (2018).

Under the benchmark approach, there can be many supermartingales that approach the same future random value of a payoff. In other words, there are many portfolio strategies which replicate the same given contingent claim $H_T$ at a future time T. Among such portfolio strategies, we identify the minimal replicating portfolio strategy to determine the portfolio which replicates the payoff $H_T$ with the smallest initial value. Within a family of non-negative supermartingales, the supermartingale with the smallest initial value turns out to be the corresponding martingale; see Proposition 3.3 in Du & Platen (2016). This basic fact allows us to deduce directly the following Law of the Minimal Price:

Theorem. (Law of the Minimal Price) If a fair portfolio replicates a given non-negative payoff at some future time, then this portfolio represents the minimal replicating portfolio among all non-negative portfolios that replicate this payoff.

For a given payoff, there may exist self-financing replicating portfolios that are not fair. Consequently, the classical Law of One Price is no longer enforced under the BA. However, the above Law of the Minimal Price provides instead a consistent, unique minimal value system for all hedgeable contracts with finite expected benchmarked payoffs. When contingent claims are priced by formally applying the risk-neutral pricing rule, which currently seems widely practised, no economically meaningful arbitrage can be made from holdings in the savings account, the contingent claim and the underlying asset. However, such prices are not always minimal and, therefore, can be more expensive than necessary.

It follows for a given hedgeable payoff that the corresponding fair hedge portfolio represents the least expensive hedge portfolio. From an economic point of view, investors prefer more to less and this is, therefore, also the value in a liquid, competitive market where diversifiable uncertainty is diversified. As will be demonstrated in section 5, there may exist several self-financing portfolios that hedge one and the same payoff. It is the fair portfolio that hedges the payoff at minimal cost. We emphasize that risk-neutral valuation, based purely on hedging via classical no-arbitrage arguments, see Ross (1976) and Harrison & Kreps (1979), may lead to more expensive values than those given by the corresponding fair value.

Now, consider the problem of valuing a given payoff to be delivered at a maturity date $T \in (0,\infty)$. Define a benchmarked contingent claim ${\hat{H}}_T$ as a non-negative, $\mathcal{F}_T$-measurable payoff denominated in units of the benchmark with finite expectation

\begin{equation} E_0\left({\hat{H}}_T\right) < \infty. \end{equation}

If for a benchmarked contingent claim ${\hat{H}}_T$, $T \in(0, \infty)$, there exists a benchmarked fair portfolio ${\hat{S}}^{\delta_{\hat{H}_T}}$, which replicates this claim at maturity T, that is ${\hat{H}}_T ={\hat{S}}^{\delta_{\hat{H}_T}}_T$, then, by the above Law of the Minimal Price, its minimal replicating benchmarked value process is at time $t \in[0,T]$ given by the real-world conditional expectation

\begin{equation} {\hat{S}}^{\delta_{\hat{H}_T}}_t = E_t\left({\hat{H}}_T\right). \end{equation}

Multiplying both sides of equation (5) by the value of the benchmark in domestic currency at time t, denoted by $S^*_t$, one obtains the real-world valuation formula

\begin{equation} S^{\delta_{\hat{H}_T}}_t = {\hat{S}}^{\delta_{\hat{H}_T}}_t\,S^*_t = S^*_t\,E_t\left(\frac{H_T}{S^*_T}\right), \end{equation}

where $H_T={\hat{H}}_T\,S^*_T$ is the payoff denominated in domestic currency and $S^{\delta_{\hat{H}_T}}_t$ the fair value at time $t \in [0,T]$ denominated in domestic currency.

Formula (6) is called the real-world valuation formula because it involves the conditional expectation $E_t$ with respect to the real-world probability measure P. It only requires the existence of the numéraire portfolio and the finiteness of the expectation in (4). These two conditions can hardly be weakened. By introducing the concept of benchmarked risk minimisation in Du & Platen (2016), it has been shown that the above real-world valuation formula also provides the natural valuation for non-hedgeable contingent claims when one aims to diversify as much as possible the benchmarked non-hedgeable parts of contingent claims.

An important application for the real-world valuation formula (6) arises when $H_T$ is independent of $S^*_T$, which leads to the generalized actuarial valuation formula

\begin{equation} S^{\delta_{\hat{H}_T}}_t = P(t,T) \, E_t(H_T) . \end{equation}

The derivation of (7) from (6) exploits the fact that the expectation of a product of independent random variables equals the product of their expectations. One discounts in (7) by multiplying the real-world expectation $E_t(H_T)$ with the fair zero coupon bond value

\begin{equation} P(t,T) = S^*_t \, E_t\left( \frac{1}{S^*_T} \right) . \end{equation}

Note that the fair zero coupon bond is under realistic assumptions less expensive than the respective classical risk-neutral zero coupon bond. When using the risk-neutral zero coupon bond in (7), then the actuarial valuation formula emerges, which has been used as a valuation rule by actuaries for centuries to determine the net present value of a claim. This important formula follows here under respective assumptions as a direct consequence of real-world valuation.
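The factorisation behind the generalized actuarial valuation formula can be checked numerically. The following is a minimal Monte Carlo sketch at time $t=0$, not the paper's computation: the lognormal benchmark, the two-point claim and all parameter values are hypothetical choices made only for illustration. It compares the direct real-world value $S^*_0\,E(H_T/S^*_T)$ with the product of $E(H_T)$ and the fair bond value $S^*_0\,E(1/S^*_T)$ when $H_T$ is drawn independently of $S^*_T$.

```python
import math
import random


def simulate_values(n_paths=100_000, seed=1):
    """Compare the direct real-world value with the actuarial product form.

    Hypothetical toy inputs: the benchmark S*_T is lognormal and the payoff
    H_T is an independent two-point claim. Neither is taken from the paper.
    """
    rng = random.Random(seed)
    s_star_0 = 1.0                        # benchmark value today (assumed)
    mu, sigma, horizon = 0.08, 0.2, 10.0  # illustrative benchmark dynamics
    claim_prob, claim_size = 0.3, 100.0   # illustrative independent claim

    sum_ratio = 0.0    # accumulates H_T / S*_T
    sum_payoff = 0.0   # accumulates H_T
    sum_inverse = 0.0  # accumulates 1 / S*_T
    for _ in range(n_paths):
        z = rng.gauss(0.0, 1.0)
        log_growth = (mu - 0.5 * sigma ** 2) * horizon + sigma * math.sqrt(horizon) * z
        s_star_T = s_star_0 * math.exp(log_growth)
        h_T = claim_size if rng.random() < claim_prob else 0.0
        sum_ratio += h_T / s_star_T
        sum_payoff += h_T
        sum_inverse += 1.0 / s_star_T

    real_world_value = s_star_0 * sum_ratio / n_paths   # S*_0 E(H_T / S*_T)
    fair_bond = s_star_0 * sum_inverse / n_paths        # S*_0 E(1 / S*_T)
    actuarial_value = fair_bond * sum_payoff / n_paths  # fair bond times E(H_T)
    return real_world_value, actuarial_value


real_world_value, actuarial_value = simulate_values()
print(real_world_value, actuarial_value)
```

Under independence the two estimates should agree up to sampling error. If the claim were made to depend on the benchmark, the product form would in general no longer match the direct value.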

The following discussion aims to highlight the link between real-world valuation and risk-neutral valuation. Risk-neutral valuation uses as its numéraire the domestic savings account process $S^0=\{S^0_t \, , \, t \geq 0 \}$, denominated in units of the domestic currency. Under certain assumptions, which will be described below, one obtains risk-neutral values from the real-world valuation formula by rewriting the real-world valuation formula (6) in the form

\begin{equation} S^{\delta_{{\hat{H}}_T}}_t = E_t \left( \frac{\Lambda_T}{\Lambda_t} \,\frac{S^0_t}{S^0_T} \,H_T \right) , \end{equation}

employing the normalized benchmarked savings account $\Lambda_t = \frac{S^0_t\,S^*_0}{S^*_t\,S^0_0}$ for $t \in [0,T]$. Note that $\Lambda_0=1$ and when assuming that the putative risk-neutral measure Q is an equivalent probability measure we get

\begin{equation} E_t \left( \frac{\Lambda_T}{\Lambda_t} \,\frac{S^0_t}{S^0_T} \,H_T \right) = E_t^Q \left( \frac{S^0_t}{S^0_T} \,H_T \right) , \end{equation}

because $\Lambda_t$ represents the respective (Radon-Nikodym derivative) density for Q at time t and $E^Q_t$ denotes the conditional expectation under Q. We remark that Q is an equivalent probability measure if and only if $\Lambda_t$ forms a true martingale, which means that the benchmarked savings account ${\hat{S}}^0_t$ needs to form in this case a true martingale. It is the assumption of this restrictive martingale property that appears to be unrealistic for long-term modelling and leads to the current unnecessarily expensive production costs for pension and insurance payouts.
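As a small illustration of the definition $\Lambda_t = \frac{S^0_t\,S^*_0}{S^*_t\,S^0_0}$, the sketch below computes the candidate density process from two aligned series. The function name and the toy numbers are hypothetical and are not the historical data used in the paper.

```python
def density_process(savings_account, benchmark):
    """Return Lambda_t = (S0_t * S*_0) / (S*_t * S0_0) for each observation."""
    if len(savings_account) != len(benchmark):
        raise ValueError("both series must have the same length")
    s0_0, s_star_0 = savings_account[0], benchmark[0]
    return [
        (s0_t * s_star_0) / (s_star_t * s0_0)
        for s0_t, s_star_t in zip(savings_account, benchmark)
    ]


# Hypothetical toy series, not the Shiller data referred to in the text.
savings = [1.00, 1.03, 1.06, 1.10]
index = [50.0, 56.0, 54.0, 66.0]
lam = density_process(savings, index)
print(lam)
```

By construction the first value equals one. A path of $\Lambda_t$ that drifts downwards over long horizons is what one would expect from a strict supermartingale rather than a true martingale, although a single path cannot settle the question.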

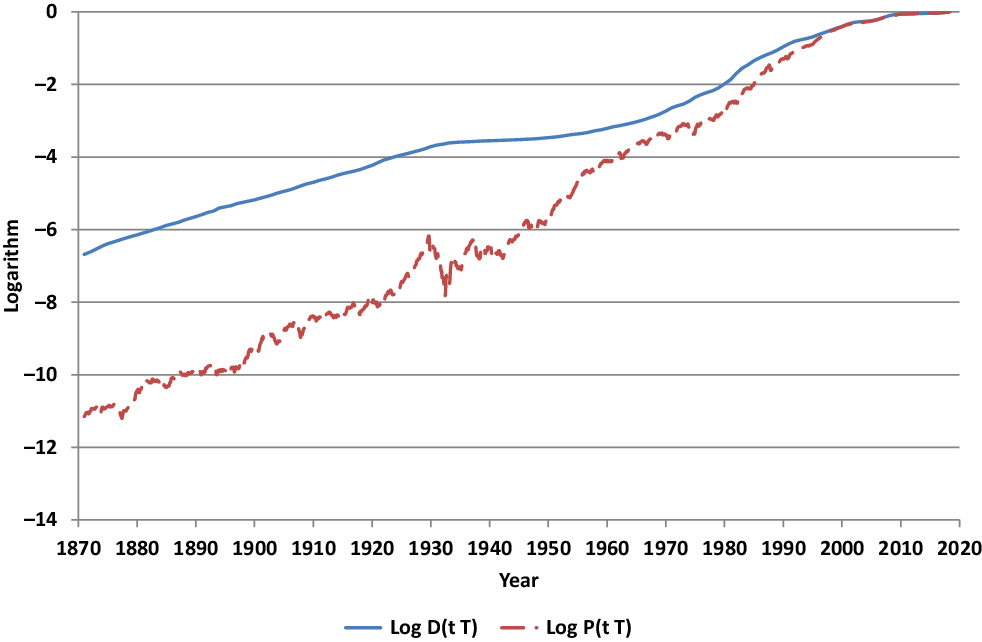

For illustration, let us interpret throughout this paper the S&P500 total return index as benchmark and numéraire portfolio for the US equity market. Its monthly observations in units of the US dollar savings account are displayed in Figure 1 for the period from January 1871 until March 2017. The logarithms of the S&P500 and the US dollar savings account are exhibited in Figure 2. One clearly notes the higher long-term growth rate of the S&P500 when compared with that of the savings account, a stylized empirical fact which is essential for the existence of the stock market.

Figure 1 Discounted S&P500 total return index.

Figure 2 Logarithms of S&P500 and savings account.

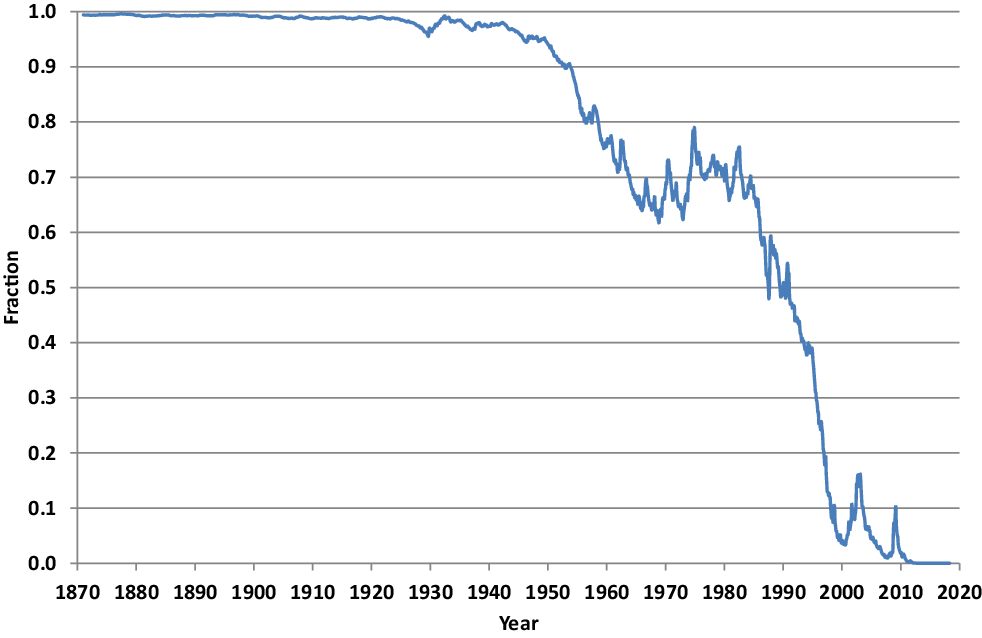

The normalized inverse of the discounted S&P500 allows us to plot in Figure 3 the resulting density process $\Lambda=\{\Lambda_t \, , \, t \in [0,T]\}$ of the putative risk-neutral measure Q as it appears in (9). Although one only has one sample path to work with, it seems unlikely that the path displayed in Figure 3 is the realisation of a true martingale. Due to its obvious systematic downward trend, it seems more likely to be the trajectory of a strict supermartingale. This is confirmed by fitting models to the index: the fits always choose the parameters that yield a strict supermartingale model when this is possible; see Baldeaux et al. (2018). In this case, the density process would not describe a probability measure and one could expect substantial overpricing to occur in risk-neutral valuations of long-term contracts when simply assuming that the density process is a true martingale. Note that this martingale condition is the key assumption of the theoretical foundation of classical risk-neutral valuation; see Delbaen & Schachermayer (1998). In current industry practice and most theoretical work, this assumption is typically made. Instead of working on the filtered probability space $(\Omega , {\mathcal{F}}, {\underline{\mathcal{F}}}, P)$, one works on the filtered probability space $(\Omega , {\mathcal{F}}, {\underline{\mathcal{F}}}, Q)$ assuming that there exists an equivalent risk-neutral probability measure Q without ensuring that this is indeed the case.

In the case of a complete market, we have seen that the benchmarked savings account has to be a martingale to ensure that risk-neutral prices are theoretically founded as intended. We observed in (9) and (10) that in this case, the real-world and the risk-neutral valuation coincide. In the case when the benchmarked savings account is not a true martingale one can still perform formally risk-neutral pricing. For a hedgeable non-negative contingent claim one obtains then a self-financing hedge portfolio with values that represent the formally obtained risk-neutral value. This portfolio process, when benchmarked, is a supermartingale as a consequence of Assumption 1. Therefore, employing formally obtained risk-neutral prices by the market does not generate any economically meaningful arbitrage in the sense that it does not allow a market participant’s total positive wealth to generate infinite wealth over any finite time period. However, this may generate some classical form of arbitrage in the sense that contingent claims can be less expensively produced than suggested by classical risk-neutral valuation, as discussed in Loewenstein & Willard (2000) and Platen (2002a). Under the BA, such classical forms of arbitrage are allowed to exist and can be systematically exploited, as we will demonstrate later on for the case of long-dated bonds and annuity contracts. If one includes the value processes of these contracts as primary security accounts in the given investment universe, then the best-performing portfolio, the numéraire portfolio, still remains the same and thus finite at any finite time. This means, in an extended market, which also trades at the same time risk-neutral and fair contract values, there is no economically meaningful arbitrage because no positive total wealth process of a market participant can generate infinite wealth in finite time.

Figure 3 Plot of the sample path $t\mapsto \Lambda_t $ of the Radon-Nikodym derivative $\Lambda_t$ in respect of the putative risk-neutral measure.

Finally, consider the valuation of non-hedgeable contingent claims. Recall that the conditional expectation of a square integrable random variable can be interpreted as a least-squares projection; see Shiryaev (1984). Consequently, the real-world valuation formula (6) provides, with its conditional expectation, the least-squares projection of a given square integrable benchmarked payoff into the set of possible current benchmarked values. It is well known that in a least-squares projection the forecasting error has mean zero and minimal variance; see Shiryaev (1984). Therefore, the benchmarked profit and loss, the hedge error, has mean zero and minimal variance. More precisely, as shown in Du & Platen (2016), under benchmarked risk minimisation the Law of the Minimal Price ensures through its real-world valuation that the value of the contingent claim is the minimal possible value and the benchmarked profit and loss has minimal fluctuations and is a local martingale orthogonal to all benchmarked traded wealth.

In an insurance company, the benchmarked profits and losses of diversified benchmarked contingent claims are pooled. If these benchmarked profits and losses are generated by sufficiently independent sources of uncertainty, then it follows via the Law of Large Numbers that the total benchmarked profit and loss for an increasing number of benchmarked contingent claims is not only a local martingale starting at zero, but also a process with an asymptotically vanishing quadratic variation or variance as described in Proposition 4.3 in Du & Platen (2016). In this manner, an insurance company or pension fund can, in theory, asymptotically remove the diversifiable uncertainty in its business by pooling its benchmarked profits and losses. Only the non-diversifiable uncertainty remains, which is captured by the tradeable benchmark, the numéraire portfolio, and can be hedged. This shows that real-world valuation makes perfect sense from the perspective of an institution with a large pool of sufficiently different contingent claims that aims for the least expensive production in its business.
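The pooling effect can be illustrated with a toy simulation. This is only a sketch under strong simplifying assumptions that are ours, not the paper's: the benchmarked hedge errors are taken to be independent and identically distributed with mean zero and unit variance, and the pooled profit and loss is measured per contract, that is, normalised by the number of contracts.

```python
import random
import statistics


def pooled_error_variance(n_contracts, n_trials=2000, seed=7):
    """Variance of the average hedge error over a pool of contracts.

    Assumption: each benchmarked hedge error is an independent draw with
    mean zero and unit variance (uniform on [-sqrt(3), sqrt(3)]).
    """
    rng = random.Random(seed)
    half_width = 3 ** 0.5
    averages = []
    for _ in range(n_trials):
        total = sum(rng.uniform(-half_width, half_width) for _ in range(n_contracts))
        averages.append(total / n_contracts)
    return statistics.pvariance(averages)


for n in (1, 10, 100):
    print(n, pooled_error_variance(n))
```

In this idealised setting the variance per contract shrinks roughly in proportion to one over the number of contracts. The hedgeable, non-diversifiable exposure to the benchmark is not modelled here.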

5. Valuation of long-term annuities

This section illustrates the real-world valuation methodology in the context of long-term contracts, where we focus here on basic annuities or tontines. More complicated annuities, life insurance products, pensions and also equity-linked long-term contracts can be treated similarly. All show, in general, a similar effect where real-world values become significantly lower than prices formed under classical risk-neutral valuation. The most important building blocks of annuities, and also many other insurance-type contracts, are zero coupon bonds. This section will, therefore, study first the valuation and hedging of zero coupon bonds. It will then apply these findings to some basic annuity and compare its real-world value with its classical risk-neutral value.

5.1 Savings bond

To make the illustrations reasonably realistic, the following study considers the US equity market as investment universe. It uses the US one-year cash deposit rate as short rate when constructing the savings account. The S&P500 total return index is chosen as proxy for the numéraire portfolio, the benchmark. Monthly S&P500 total return data is sourced from Robert Shiller’s website (http://www.econ.yale.edu/shiller/data.htm) for the period from January 1871 until May 2018. The savings account discounted S&P500 total return index has been already displayed in Figure 1.

For simplicity, and to make the core effect very clear, assume that the short rate is a deterministic function of time. By making the short rate random, one would only complicate the exposition and would obtain very similar and even slightly more pronounced differences between real-world and risk-neutral valuation, due to the effect of stochastic interest rates on bond prices as a consequence of Jensen’s inequality. A similar comment applies to the choice of the S&P500 total return index as proxy for the numéraire portfolio or benchmark. Very likely, there exist better proxies for the numéraire portfolio, see for example Le & Platen (2006) or Platen & Rendek (2017). As will become clear, their larger long-term growth rates would make the effect to be demonstrated even more pronounced.

The first aim of this section is to illustrate the fact that under the BA there may exist several self-financing portfolios that replicate the payoff of one dollar at maturity of a zero coupon bond. Let

\begin{equation} D(t,T) = \frac{S^0_t}{S^0_T} \end{equation}

denote the risk-neutral price at time $t \in [0,T]$ of the so-called savings bond with maturity T, where $S^0_t$ denotes the value of the savings account at time t. According to our assumption of a deterministic short rate, we compute monthly values of $S^0_t$ via the recursive formula $S^0_{t+1/12} = S^0_{t} \{ 1 + R(t,t+1)/12\}$, where $R(t,t+1)$ is the one-year cash rate from our data set over the period from t to $t+1$ and where $S^0_{1/1/1871} = 1$. Therefore, the risk-neutral price of the savings bond at time t equals the quotient of the historical data value $S^0_t$ over $S^0_{1/4/2018}$. Obviously, the savings bond price is the price of a zero coupon bond under risk-neutral valuation and other classical valuation approaches. The upper graph in Figure 4 exhibits the logarithm of the savings bond price, with maturity in May 2018 valued at the time shown on the x-axis. The benchmarked value of this savings bond, which equals its value denominated in units of the S&P500, is displayed as the upper graph in Figure 5. This trajectory appears to be more likely that of a strict supermartingale than that of a true martingale.
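The construction of the savings account and the savings bond described above can be sketched in a few lines. The function names and the three monthly rates are hypothetical placeholders rather than the historical data set, and the savings bond is taken to be the ratio of the savings account value at time t to its value at the last observation date, as stated in the text.

```python
def savings_account(one_year_rates, start_value=1.0):
    """Roll the savings account forward monthly: S0_{t+1/12} = S0_t * (1 + R / 12).

    `one_year_rates` holds one annualised cash rate per month, as decimals.
    The returned list has one more entry than the input.
    """
    values = [start_value]
    for rate in one_year_rates:
        values.append(values[-1] * (1.0 + rate / 12.0))
    return values


def savings_bond_prices(account_values):
    """Savings bond value S0_t / S0_T, with T the last observation date."""
    terminal = account_values[-1]
    return [value / terminal for value in account_values]


# Hypothetical rates for three months, not the historical cash rates.
rates = [0.06, 0.06, 0.03]
account = savings_account(rates)
bond = savings_bond_prices(account)
print(account, bond)
```

With a deterministic short rate the savings bond is simply a rescaled savings account, which is why its path in units of the benchmark mirrors that of the benchmarked savings account.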

Figure 4 Logarithms of values of savings bond and fair zero coupon bond.

Figure 5 Values of benchmarked savings bond and benchmarked fair zero coupon bond.

As pointed out previously and shown in Baldeaux et al. (2015, 2018), an equivalent risk-neutral probability measure is unlikely to exist for any realistic model for the US market. This is also consistent with the practice of financial planning. Financial planning recommends investing in the equity market at a young age and shifting wealth into fixed income securities closer to retirement. This type of strategy is widely acknowledged to be more efficient than investing all wealth in a savings bond, which represents the classical risk-neutral strategy for obtaining bond payoffs at maturity. The BA allows us to make the financial planning strategy rigorous, and both the savings account and a less-expensive fair bond are possible without creating economically meaningful arbitrage. Based on the absence of an equivalent risk-neutral probability measure, this paper provides the theoretical reasoning for the financial planning strategy. Moreover, it will quantify such a strategy rigorously under the assumption of a stylized model. The absence of an equivalent risk-neutral probability measure is also consistent with the existence of target date funds, which pay cash at maturity and have a glide path that invests early on in risky securities. This glide path can also be made rigorous in a similar manner as described below for the fair zero coupon bond.

5.2 Fair zero coupon bond

Under real-world valuation, the time t value of the fair zero coupon bond with maturity date T is denoted by

\begin{equation} P(t,T) = S^*_t \, E_t\left( (S^*_T)^{-1} \right) \end{equation}

and results from the real-world valuation formula (6), see also (8). It provides the minimal possible price for a self-financing portfolio that replicates $\$1$ at maturity T. The underlying assets involved are the benchmark (here the S&P500 total return index) and the savings account of the domestic currency, here the US dollar. Both securities will appear in the corresponding hedge portfolio, which shall replicate at maturity the payoff of one dollar.

To calculate the value of a fair zero coupon bond, one has to compute the real-world conditional expectation in (12). For this calculation, one needs to employ a model for the real-world distribution of the random variable $(S^*_T)^{-1}$. Any model that models the benchmarked savings account as a strict supermartingale will value the above benchmarked fair zero coupon bond less expensively than the corresponding benchmarked savings bond. Figure 5 displays, in addition to the benchmarked savings bond, the value of a benchmarked fair zero coupon bond, which will be derived below, under a respective model. One notes the significantly lower initial value of the benchmarked fair zero coupon bond. Also visually, its benchmarked value appears to be a reasonable forecast of its future benchmarked values, reflecting its martingale property. In Figure 4, the logarithm of the fair zero coupon bond is shown together with the logarithm of the savings bond value. The fair zero coupon bond price at time t is computed using (18), where the parameter values of the model explained later on are given in (17) and $D(t,T)$ in (11). One notes that the fair zero coupon bond essentially follows the benchmark for many years early on and later glides more and more towards the savings account. The strategy that delivers this hedging portfolio will be discussed in detail below.

We interpret the value of the savings bond as the one obtained by formal risk-neutral valuation. As shown in relation (10), this value is greater than or equal to that of the fair zero coupon bond. For readers who want some economic explanation for the observed value difference, one could argue that the savings bond gives the holder the right to liquidate the contract at any time without costs. On the other hand, a fair zero coupon bond is akin to a term deposit without the right to access the assets before maturity. One could say that the savings bond carries a “liquidity premium” on top of the value for the fair zero coupon bond. Under the classical no-arbitrage paradigm, with its Law of One Price (see e.g. Taylor 2002), there is only one and the same value process possible for both instruments, which is that of the savings bond. The BA opens with its real-world valuation concept the possibility to model costs for early liquidation of financial instruments. The fair zero coupon bond is the least liquid instrument that delivers the bond payoff, and therefore, the least expensive zero coupon bond. The savings bond is more liquid and, therefore, more expensive.

5.3 Fair zero coupon bond for the minimal market model

The benchmarked fair zero coupon value at time $t\in [0,T]$ is the best forecast of its benchmarked payoff ${\hat{S}}^0_T = (S^*_T)^{-1}$. It provides the minimal self-financing portfolio value process that hedges this benchmarked contingent claim. To facilitate a tractable evaluation of a fair zero coupon bond, we employ a continuous time model for the benchmarked savings account that models it as a strict supermartingale. The inverse of the benchmarked savings account is the discounted numéraire portfolio ${\bar{S}}^*_t=\frac{S^*_t}{S^0_t} = ({\hat{S}}^0_t)^{-1}$. In the illustrative example we present, it is the discounted S&P500, which, as discounted numéraire portfolio, satisfies in a continuous market model the stochastic differential equation (SDE)

\begin{equation} d{\bar{S}}^*_t = \alpha_t \, dt + \sqrt{{\bar{S}}^*_t \, \alpha_t} \, dW_t \end{equation}

for $t\ge 0$ with ${\bar{S}}^*_0 >0$, see Platen & Heath (2010), Formula (13.1.6). Here $W=\{W_t, t\ge 0\}$ is a Wiener process, and $\alpha=\{\alpha_t,t\ge 0\}$ is a strictly positive process, which models the trend $\alpha_t={\bar{S}}^*_t \theta^2_t$ of ${\bar{S}}^*_t$, with $\theta_t$ denoting the market price of risk. Since in (13) $\alpha_t$ can be a rather general stochastic process, the parametrisation of the SDE (13) does so far not constitute a model. For constant market price of risk, one obtains the Black-Scholes model, which has been the standard market model. In the long term, it yields a benchmarked savings account that is a true martingale and, therefore, admits an equivalent risk-neutral probability measure, which appears to be unrealistic for long-term modelling.

The trend or drift in the SDE (13) can be interpreted economically as a measure for the discounted fundamental value of wealth generated per unit of time by the underlying economy. To construct a respective model, we assume in the following that the drift of the discounted S&P500 total return index grows exponentially with a constant net growth rate $\eta > 0$. At time t, the drift of the discounted S&P500 total return index ${\bar{S}}^*_t$ is then modelled by the exponential function

\begin{equation} \alpha_t = \alpha \exp\{\eta \, t\} . \end{equation}

This yields the stylized version of the minimal market model (MMM), see Platen (2001, 2002a), which emerges from (13). We know explicitly the transition density of the resulting time-transformed squared Bessel process of dimension four, ${\bar{S}}^*$, see Revuz & Yor (1999). It equals

\begin{equation}p_{{\bar{S}}^*}(t,x_t;T,x_T) = \frac{1}{2\,(\varphi_T - \varphi_t)} \left(\frac{x_T}{x_t}\right)^{\frac{1}{2}} \exp\left\{-\frac{x_t + x_T}{2\,(\varphi_T - \varphi_t)}\right\} I_1\left(\frac{\sqrt{x_t\, x_T}}{\varphi_T - \varphi_t}\right),\end{equation}

where $I_1$ denotes the modified Bessel function of the first kind with index one, and where

\begin{equation}\varphi_t = \frac{1}{4}\int_0^t \alpha_s\, ds = \frac{\alpha}{4\,\eta}\,(\exp\{\eta\, t\} - 1)\end{equation}

is also the quadratic variation of $ \sqrt{{\bar{S}}^*} $. The corresponding distribution function of ${\bar{S}}^*_T/(\varphi_T - \varphi_t)$, given ${\bar{S}}^*_t = x_t$, is that of a non-central chi-squared random variable with four degrees of freedom and non-centrality parameter $x_t/(\varphi_T - \varphi_t)$.
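As a plausibility check on the density described above, the following minimal Python sketch evaluates the transition density of a squared Bessel process of dimension four and verifies numerically that it integrates to one and has mean $x_t + 4(\varphi_T - \varphi_t)$, the mean implied by the non-central chi-squared law. The function names, the power-series evaluation of $I_1$ and the input values `x_t = 2.0`, `dphi = 0.5` are illustrative assumptions, not taken from the paper.

```python
import math


def bessel_i1(z, rel_tol=1e-16, max_terms=500):
    """Modified Bessel function of the first kind, order one, by power series."""
    half = 0.5 * z
    term = half  # k = 0 term of sum_k (z/2)^(2k+1) / (k! (k+1)!)
    total = term
    for k in range(1, max_terms):
        term *= half * half / (k * (k + 1))
        total += term
        if term <= rel_tol * total:
            break
    return total


def transition_density(x_t, x_T, dphi):
    """Density of the process at x_T given value x_t, with dphi = phi_T - phi_t."""
    if x_T <= 0.0:
        return 0.0
    return (
        0.5 / dphi
        * math.sqrt(x_T / x_t)
        * math.exp(-(x_t + x_T) / (2.0 * dphi))
        * bessel_i1(math.sqrt(x_t * x_T) / dphi)
    )


def trapezoid(f, a, b, n):
    """Composite trapezoidal rule with n equal steps on [a, b]."""
    h = (b - a) / n
    inner = sum(f(a + i * h) for i in range(1, n))
    return h * (0.5 * f(a) + inner + 0.5 * f(b))


# Hypothetical illustrative inputs (not values from the paper).
x_t, dphi = 2.0, 0.5
mass = trapezoid(lambda x: transition_density(x_t, x, dphi), 0.0, 80.0, 8000)
mean = trapezoid(lambda x: x * transition_density(x_t, x, dphi), 0.0, 80.0, 8000)
print(mass, mean, x_t + 4.0 * dphi)
```

The series for $I_1$ is adequate for moderate arguments; for very short horizons, where $\varphi_T - \varphi_t$ is small and the Bessel argument is large, a scaled Bessel routine from a numerical library would be the safer choice.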

Using the above transition density function, we apply standard maximum likelihood estimation to monthly data for the discounted S&P500 total return index over the period from January 1871 to January 1932, giving the following estimates of the parameters $\alpha$ and $\eta$,

\begin{align}\alpha &= 0.005860\, (0.000613 ),\nonumber \\[5pt] \eta &= 0.049496 \, (0.002968) ,\end{align}

where the standard errors are shown in brackets. In Appendix A, we explain the estimation method used and supply parameter estimates over other periods, where it is evident that the estimates are consistent over time.

These estimates for the net growth rate $\eta$ are consistent with estimates from various other sources in the literature, where the net growth rate of the US equity market during the last century has been estimated at about 5%; see for instance Dimson et al. (2002).

Under the stylized MMM, the explicitly known transition density of the discounted numéraire portfolio ${\bar{S}}^*_t$ yields for the fair zero coupon bond price by (12) the explicit formula

\begin{equation}P(t,T) = D(t,T) \left(1 - \exp\left\{\frac{- 2\,\eta\,{\bar{S}}^*_t} {\alpha\,(\exp\{\eta\,T\} - \exp\{\eta\,t\})} \right\}\right)\end{equation}

for $t \in [0,T)$, which was first pointed out in Platen (2002b). The lower graph in Figure 5 displays the trajectory of the benchmarked fair zero coupon bond value with maturity T in May 2018. By (18), the value of the benchmarked fair zero coupon bond always remains below that of the benchmarked savings bond, where we interpret the latter as the formally obtained risk-neutral zero coupon bond value. The fair zero coupon bond value provides the minimal portfolio process for hedging the given payoff under the assumed MMM. Other benchmarked portfolios with the same payoff need to form strict supermartingales and, therefore, yield higher value processes. One such example is given by the benchmarked savings bond. Recall that the benchmarked fair zero coupon bond is a martingale. It is minimal among the supermartingales that represent benchmarked self-financing portfolios replicating the payment of one dollar at maturity.
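The step from the transition density to the bond formula can be sketched as follows. This is a reconstruction under two assumptions that are not spelled out in this section: that (12) gives $P(t,T) = S^*_t\, E_t(1/S^*_T)$, and that the savings account factor $D(t,T) = S^0_t/S^0_T$ can be taken outside the conditional expectation. Write $\Delta\varphi = \varphi_T - \varphi_t$ and $\lambda = {\bar{S}}^*_t/\Delta\varphi$. The ratio $Y = {\bar{S}}^*_T/\Delta\varphi$ is non-central chi-squared with four degrees of freedom and non-centrality parameter $\lambda$, that is, a Poisson mixture with mean $\lambda/2$ of central chi-squared random variables with $4+2n$ degrees of freedom, each of which has inverse moment $1/(2+2n)$. Hence

\begin{align*} E_t\left(\frac{1}{Y}\right) &= \sum_{n=0}^{\infty} e^{-\lambda/2}\, \frac{(\lambda/2)^n}{n!}\, \frac{1}{2+2n} = \frac{1 - e^{-\lambda/2}}{\lambda}, \\[5pt] P(t,T) &= D(t,T)\, \frac{{\bar{S}}^*_t}{\Delta\varphi}\, E_t\left(\frac{1}{Y}\right) = D(t,T) \left(1 - \exp\left\{-\frac{{\bar{S}}^*_t}{2\,\Delta\varphi}\right\}\right). \end{align*}

If the drift is $\alpha_t = \alpha \exp\{\eta\, t\}$ and $\varphi_t = \frac{1}{4}\int_0^t \alpha_s\, ds$, then $\Delta\varphi = \alpha\,(\exp\{\eta\,T\} - \exp\{\eta\,t\})/(4\,\eta)$, and the exponent becomes $-2\,\eta\,{\bar{S}}^*_t / \{\alpha\,(\exp\{\eta\,T\} - \exp\{\eta\,t\})\}$, which is the expression that also appears in the hedge ratio of Section 5.5.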

5.4 Comparison of values of savings and fair zero coupon bond

In this subsection, we compare the values of the savings bond and the fair zero coupon bond.

Figure 6 exhibits with its upper graph the trajectory of the savings bond and with its lower graph that of the fair zero coupon bond in US dollar denomination. Closer to maturity, the fair zero coupon bond’s value merges asymptotically with the savings bond value. Both self-financing portfolios replicate the payoff at maturity. Most important is the observation that they start with significantly different initial values. The fair zero coupon bond exploits the strict supermartingale property of the benchmarked savings account by targeting its value at maturity, whereas the savings bond ignores it. Two self-financing replicating portfolios are displayed in Figure 6. Such a situation, where two self-financing portfolios with different initial values replicate the same contingent claim, is impossible under the classical no-arbitrage paradigm. However, under the BA this is a natural situation.

Figure 6 Values of savings bond and fair zero coupon bond.

In the above example for the parameters estimated during the period from January 1871 to January 1932, the savings bond with maturity in May 2018 has in January 1932 a value of $D(0,T) = S^0_0/S^0_T \approx \$0.026085 $, where we have substituted from our data set the values $S^0_0 = 20.809541 $ and $S^0_T = 797.7633 $. The fair zero coupon bond is far less expensive and valued at only $P(0,T) = D(0,T) (1-\exp (-2\eta {\bar{S}}^*_0 / \{ \alpha (\exp(\eta T) - 1)\})) \approx \$0.000657 $, where we have substituted the value of ${\bar{S}}^*_0$, inferred from the values of $S^0_0$ and $S^*_0 = 45.498333 $ in our data set, and the values of $\alpha$ and $\eta$ given in (17). The fair zero coupon bond with term to maturity of more than 80 years costs here less than 3% of the savings bond. This reveals a substantial premium in the value of the savings bond.
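The arithmetic of this example can be retraced with a short Python sketch. It uses only the data values and parameter estimates quoted above. One point is an assumption on our part and not stated in the text: the quoted value of about \$0.000657 is recovered when time is measured in years from January 1871, the start of the estimation window, so that January 1932 corresponds to $t = 61$, May 2018 to $T = 147\tfrac{1}{3}$, and the difference of exponentials is $\exp\{\eta\,T\} - \exp\{\eta\,t\}$. The variable and function names are illustrative.

```python
import math

# Parameter estimates from (17) and data values quoted in the text.
ALPHA = 0.005860
ETA = 0.049496
S0_START = 20.809541      # savings account in January 1932
S0_END = 797.7633         # savings account in May 2018
INDEX_START = 45.498333   # S&P500 total return index in January 1932

# ASSUMPTION (not stated in the text): time in years since January 1871.
T_START = 1932 - 1871             # January 1932
T_END = 2018 - 1871 + 4.0 / 12.0  # May 2018


def fair_bond(savings_bond, x_t, t, T, alpha=ALPHA, eta=ETA):
    """Fair zero coupon bond value under the stylized MMM."""
    exponent = 2.0 * eta * x_t / (alpha * (math.exp(eta * T) - math.exp(eta * t)))
    # -expm1(-u) equals 1 - exp(-u) and is accurate for small u.
    return savings_bond * -math.expm1(-exponent)


D0T = S0_START / S0_END             # savings bond value
x0 = INDEX_START / S0_START         # discounted index value
P0T = fair_bond(D0T, x0, T_START, T_END)

print(f"savings bond: {D0T:.6f}")
print(f"fair bond:    {P0T:.6f}")
print(f"ratio:        {P0T / D0T:.4f}")
```

Under this time convention, the ratio of the fair bond to the savings bond comes out below 3%, in line with the statement above.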

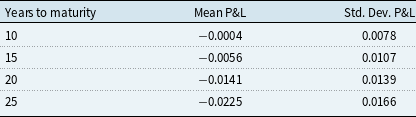

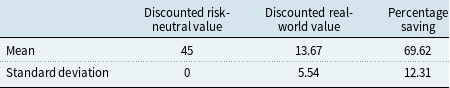

Of course, annuities usually involve shorter terms to maturity. Therefore, using the estimated parameters, we repeated the study for all possible zero coupon bonds with terms of 10, 15, 20, 25, 30 and 35 years whose initiation and maturity dates fall into the period starting in January 1932 and ending in May 2018. Table 1 displays the average percentage value of the risk-neutral over the fair bond. One notes that for a 25-year bond, one saves on average about 20% of the risk-neutral value, which is a substantial part and typical for pension products.

Table 1. Mean values of zero coupon bonds of prescribed terms to maturity whose start and end dates lie between January 1932 and May 2018.

By describing below the hedging strategy that generates the fair zero coupon bond, we propose a realistic way of changing industry practice from the classical risk-neutral production to the less expensive benchmark production.

5.5 Hedging of a fair zero coupon bond

The benefits of the proposed benchmark production methodology can be harvested if the respective hedging strategy is followed. The hedging strategy by which this is achieved follows under the MMM from the explicit fair zero coupon bond valuation formula (18). At time $t \in [0,T)$, the corresponding theoretical number of units of the S&P500 to be held in the hedge portfolio follows, similar to the well-known Black-Scholes delta hedge ratio, as the partial derivative of the bond value with respect to the underlying, and is given by the formula

\begin{eqnarray} \delta^*_t &=& \frac{\partial {\bar{P}}(t,T)}{\partial {\bar{S}}^*_t} \nonumber \\[5pt] &=& D(0,T)\, \exp\left\{\frac{- 2\,\eta\,{\bar{S}}^*_t} {\alpha\,(\exp\{\eta\,T\} - \exp\{\eta\,t\})} \right\} \, \frac{2\,\eta} {\alpha\,(\exp\{\eta\,T\} - \exp\{\eta\,t\})}. \end{eqnarray}

Here $D(0,T)$ is the respective value of the savings bond.
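The hedge ratio can be checked numerically against a finite-difference derivative. The sketch below assumes that the discounted bond value has the form ${\bar{P}}(t,T) = D(0,T)\,(1 - \exp\{-c_t\, {\bar{S}}^*_t\})$ with $c_t = 2\,\eta / \{\alpha\,(\exp\{\eta\,T\} - \exp\{\eta\,t\})\}$, which is the form whose partial derivative reproduces the displayed formula. The function names and the time convention (years since January 1871) are assumptions made for illustration.

```python
import math


def hedge_coefficient(t, T, alpha, eta):
    """c_t = 2 eta / (alpha (exp(eta T) - exp(eta t)))."""
    return 2.0 * eta / (alpha * (math.exp(eta * T) - math.exp(eta * t)))


def discounted_fair_bond(x, t, T, d0T, alpha, eta):
    """Assumed form of the discounted fair bond value as a function of x."""
    c = hedge_coefficient(t, T, alpha, eta)
    return d0T * -math.expm1(-c * x)


def delta(x, t, T, d0T, alpha, eta):
    """Units of the discounted index held, as in the displayed hedge ratio."""
    c = hedge_coefficient(t, T, alpha, eta)
    return d0T * math.exp(-c * x) * c


# Inputs quoted in the text; t and T in years since January 1871 (assumption).
alpha, eta = 0.005860, 0.049496
d0T = 20.809541 / 797.7633
x = 45.498333 / 20.809541
t, T = 61.0, 147.0 + 4.0 / 12.0

h = 1e-4  # step for the central finite difference
numeric = (
    discounted_fair_bond(x + h, t, T, d0T, alpha, eta)
    - discounted_fair_bond(x - h, t, T, d0T, alpha, eta)
) / (2.0 * h)
analytic = delta(x, t, T, d0T, alpha, eta)
print(analytic, numeric)
```

Agreement between the two numbers confirms only that the formula is the derivative of the assumed bond value; it does not test the quality of the hedge on market data.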

One may argue that transaction costs play a role and could make the hedge critically more expensive than shown. Here we argue that the hedger will sell the index when it is just reaching new high levels, where most investors are buyers, and will buy the index when it reaches new relatively low values, where many investors typically sell the index. This hedging strategy will have a stabilising effect on the financial market if it is pursued by many institutions. Note that brokers often waive transaction costs for those who supply liquidity to the market, as this strategy does. In practice, implementing the hedging strategy is achieved straightforwardly using buy and sell limit orders, which are permitted on most financial exchanges.

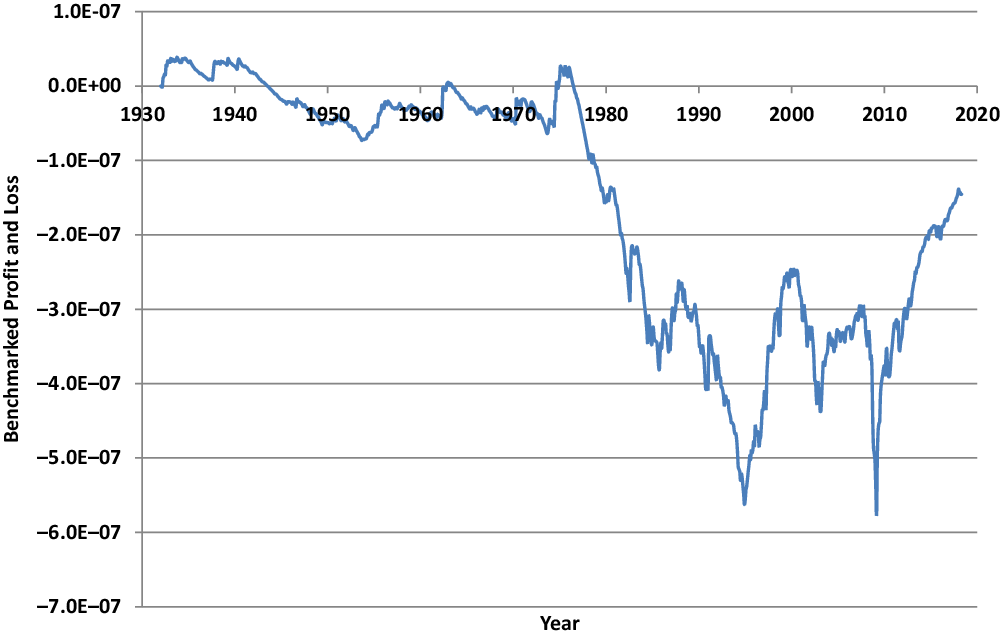

The resulting fraction of wealth to be held at time t in the S&P500, as it evolves for the given example of zero coupon bond valuation from January 1932 to maturity, is shown in Figure 7. The remaining wealth is always invested in the savings account.

Figure 7 Fraction of wealth invested in the S&P500.
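A rough sketch of how such a fraction could be computed is given below. It assumes that the fraction equals the value of the index holding divided by the value of the hedge portfolio, $\delta^*_t\, {\bar{S}}^*_t / {\bar{P}}(t,T)$, with ${\bar{P}}(t,T) = D(0,T)\,(1 - \exp\{-c_t\, {\bar{S}}^*_t\})$ and $c_t = 2\,\eta / \{\alpha\,(\exp\{\eta\,T\} - \exp\{\eta\,t\})\}$, so that $D(0,T)$ cancels and the fraction reduces to $u/(e^{u} - 1)$ with $u = c_t\, {\bar{S}}^*_t$. This reduction, the function name and the time convention (years since January 1871) are our assumptions; the second evaluation uses a hypothetical index level purely for illustration.

```python
import math


def index_fraction(x, t, T, alpha, eta):
    """Fraction of hedge portfolio wealth held in the index (assumed form)."""
    c = 2.0 * eta / (alpha * (math.exp(eta * T) - math.exp(eta * t)))
    u = c * x
    if u > 700.0:
        # exp(u) would overflow; the fraction is numerically zero here.
        return 0.0
    return u / math.expm1(u)


alpha, eta = 0.005860, 0.049496
T = 147.0 + 4.0 / 12.0  # May 2018, in years since January 1871 (assumption)

# January 1932, with the discounted index value inferred from the quoted data.
x_start = 45.498333 / 20.809541
frac_start = index_fraction(x_start, 61.0, T, alpha, eta)

# One month before maturity, with a HYPOTHETICAL discounted index value.
x_late = 10.0
frac_late = index_fraction(x_late, T - 1.0 / 12.0, T, alpha, eta)

print(frac_start, frac_late)
```

Under these assumptions, almost all wealth is held in the index at initiation, and the holding shifts towards the savings account as maturity approaches.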

To demonstrate how realistic the hedge of the fair zero coupon bond payoff is for the given delta under the stylized MMM with monthly reallocation, a self-financing hedge portfolio is formed. The delta hedge is performed, where the self-financing hedge portfolio starts in January 1932, which ensures that the hedge simulations employing the fitted parameters in (17) are out-of-sample. Each month, the fraction invested in the S

![]() $\&$