The ability of policy-making, elected representatives to manage the behavior of policy-implementing, unelected agents is vital to democratic responsiveness and accountability. Accurate information is necessary for effective agency management, enabling representatives to evaluate agent behavior, assess outcomes, and update legislation. Unfortunately, legislators are typically unable to observe bureaucratic actions directly, relying instead on reports from the agents themselves. Thus, the relationship between representatives and bureaucrats is often defined by stark informational asymmetries that disadvantage legislators, allowing agencies to operate with undesirably high levels of autonomy and low levels of accountability.

Well-resourced legislatures can mitigate these asymmetries by using their oversight and budgeting powers to compel transparency from their respective agencies. However, many representative bodies are resource deprived—lacking time, expertise, etc.—and therefore unable to coerce agencies to share information on their actions and outcomes. These shortcomings are particularly salient in local (i.e., city, county) government, where assemblies are often acutely underresourced. The inability of local legislatures to compel transparency has consequences for both local and state legislative bodies in the US, as state policy is routinely implemented by local agencies (e.g., public health clinics, schools, water authorities). Therefore, state representatives rely on local agencies for policy-relevant information, yet local assemblies often lack the capacity to compel this information.

We argue that in hierarchical governing systems—federalism (e.g., Argentina, India) or unitary systems with devolved competencies (e.g., Denmark, Japan)—higher-level legislative bodies may engage in direct oversight of lower-level agencies to ameliorate this problem, using traditional legislative instruments to compel transparency among local agents. That is, the very resources that enable state legislatures to effectively oversee state agencies can also be deployed downward, allowing state legislatures to provide oversight of, and compel information sharing by, agencies organized at the county and municipal levels. As a result, where state legislatures have the capacity to effectively pursue these avenues we should observe more accurate agency reporting.

To demonstrate this, we study the transparency of American police agencies, a canonical “street-level” bureaucracy (Lipsky 1980) and an arm of government that remains understudied in political science (Soss and Weaver 2017). Police enjoy extraordinary discretion in exercising their power—including life and death decisions—making oversight and management crucial. Yet, most police agencies are organized at the municipal or county level, where the oversight capacity of elected representatives may be limited. Drawing from the literature on legislative capacity and oversight as well as real-world examples of legislative investigation, we argue that high-capacity state legislatures can use their oversight authority to compel state and substate police agencies to report relevant information. By increasing the transparency of state, county, and municipal agencies, state legislatures can reduce informational asymmetries between bureaucrats and elected representatives at each of these levels of government, providing support for policy making and agency control.

We test the implications of our argument in a multipart analysis. First, we analyze administrative records of 19,095 state, county, and municipal police agencies’ compliance with official requests for information over nearly six decades, finding strong evidence for a positive relationship between state legislative capacity and agency transparency. This is the largest agency-level analysis of police to date. Second, to assess the accuracy of agency data transmissions, we compare self-reports of police lethality to crowdsourced estimates. We find that where state legislatures lack the capacity for effective oversight, police agencies systematically underreport their lethality, annually concealing hundreds of killings from the official record. Finally, we analyze the effect of a state policy intervention on deadly force reporting, demonstrating that substate agencies are responsive to state legislative intercession.

In summary, we present an intuitive argument for how hierarchical governing structures can reduce informational asymmetries that may inhibit policy making or allow unelected agents to operate with undesirable levels of autonomy. We then present evidence that the capacity for state legislative oversight can increase the transparency of state, county, and municipal agencies. Our argument and findings add to a growing literature on how legislative capacity shapes both the influence of bureaucrats over policy outcomes (e.g., Kroeger 2022) and the ability of legislators to manage agency behavior (e.g., Boehmke and Shipan 2015; Lillvis and McGrath 2017). Additionally, our analysis contributes to research on “democratic deficits” in the American states (e.g., Landgrave and Weller 2020; Lax and Phillips 2012) by demonstrating that deficiencies in legislative capacity allow for less transparent and therefore less accountable agencies. Finally, we believe that our argument begins to rectify a shortcoming in existing interdisciplinary “institutional” analyses (Thompson [1967] 2017) of police, highlighting the dearth of attention paid to electoral institutions and the potential for “legal accountability” (Romzek and Dubnick 1987) vis-à-vis police. We hope that others will follow in this vein, and we encourage our colleagues to devote greater attention to the “second face of the American state” by both applying classic political economic models of governance and innovating new approaches to the study of police (Soss and Weaver 2017, 567).

On police behavior more directly, our results illuminate the extent of police lethality and reveal systematic deficiencies in public records. Though government manipulation of official data is a well-recognized problem in autocracies (Hollyer, Rosendorff, and Vreeland 2011), we show that these problems are also manifest in the United States—official statistics on crime and policing are systematically biased and cannot be taken at face value. Unfortunately, this means that thousands of academic articles on crime and policing are likely to be fundamentally flawed. This underscores the importance of understanding the potential limitations of official data to scientific research, especially where government officials may have incentives to conceal or manipulate data. The Trump administration provides a recent example, as evidence indicates attempts to manipulate data on climate change (Friedman 2019), crime (Malone and Asher 2017), and public health (Stolberg 2020). We conclude the manuscript by encouraging our colleagues to study the political economy of data gathering and dissemination.

Engaging Interdisciplinary Police Research

Research on policing is extensive and interdisciplinary, yet it largely fails to consider how interbranch politics affect law enforcement. Borrowing the Thompson ([1967] 2017) model of organizations, this research can be classified into three broad categories: “technical,” how police perform discrete tasks; “managerial,” how police organize themselves; and “institutional,” how police interact with nonpolice stakeholders, which is our focus. To date, the vast majority of institutional research focuses on what Romzek and Dubnick (1987) call political accountability—answering to residents—as opposed to legal accountability—answering to elected representatives—despite the fact that police (excepting elected sheriffs) are not directly accountable to residents and elected representatives have greater power to shape police behaviors. The existing literature’s relative inattention to how political institutions influence policing presents an opportunity for substantial contribution by political scientists. In the following, we briefly review police research in each of these main categories, arguing that more attention needs to be paid to the role of elected representatives as central actors in the police ecosystem, especially by political scientists.

Institutional (or interactive) studies seek to situate our understanding of police within a broader societal context. Most of this work focuses on sentiment toward police in the mass public, including research in criminology (Hawdon, Ryan, and Griffin 2003), law (Sunshine and Tyler 2003), sociology (Desmond, Papachristos, and Kirk 2016), and more.[Footnote 1] The political science research on policing also tends to focus primarily on this police–resident perspective (see Soss and Weaver 2017)—for example, studying the sociopolitical implications of police contact (e.g., White 2019)—rather than on police interactions with the legislatures (or even executives) to whom police are accountable. Even when research specifically targets police oversight, one does not find application of general theory on the role of elected representatives and their oversight competencies. Instead, this research tends to analyze specific case studies of civilian complaint processing (e.g., Goldsmith 1991).

To demonstrate this point, consider Prenzler and Ronken’s (2001) typology of police oversight models. The authors outright dismiss the possibility of oversight by elected representatives, instead classifying models into three categories: (a) internal affairs (i.e., oversight bodies housed within-agency), (b) civilian review (i.e., external bodies reviewing internal oversight practices), and (c) civilian control (i.e., external bodies with investigation and sanctioning authority). To a political scientist, dismissing the role of elected representatives is a critical omission because representatives are the voice and hands of citizens in government—the possibility of civilian control of police, or any other bureaucracy, in the absence of action by elected representatives is vanishingly small. As we elaborate below, though many local elected representatives lack the capacity for meaningful oversight, state legislatures often do have sufficient power to influence police behaviors. Here we follow and echo Soss and Weaver’s (2017) advice to study police as part of government, incentivized by and accountable to other governmental actors.

Political science engagement with policing research should not be limited to institutional studies alone, as each arm of policing literature builds upon and complements the others. For example, technical studies focus on the execution and efficacy of police activities, including research on deescalation tactics (Todak and James 2018), race–gender bias in policing (Ba et al. 2021), the effects of police staffing on crime intervention and deterrence (Levitt 1997), and so on. These studies inform institutional research in general by showing us what police actually do and our research in particular by clarifying the need to consider features of communities (e.g., racial composition) and agencies (e.g., staffing) in our empirical analysis. With few exceptions (including Levitt 1997), these studies tend to take policy as given but, moving forward, must consider the broader political environment that produces policy and structures incentives for compliance. For example, Mughan, Li, and Nicholson-Crotty (2020) present evidence that electoral accountability may deter sheriffs from abusing property-seizure policies.

Managerial studies, which focus on within-agency organizational choices (e.g., Goldstein 1963), have clear connections with institutional research. For example, Farris and Holman (2017) show that the political orientations of sheriffs shape their departments’ immigration enforcement strategies. But these studies, too, tend to think of agencies in a vacuum. Even reform-oriented research, including analyses of “community policing” (Weisburd and Braga 2019) or sociologically oriented research on “police culture” (Campeau 2015; Crank 2014), comes largely from the managerial perspective, implicitly assuming only police themselves have influence. Ultimately, however, agency heads are externally accountable, and we need to consider how this structures their incentives in making decisions that guide subordinate behavior. For example, recent work by Eckhouse (2021) nicely demonstrates the potential gains from marrying managerial and institutional approaches, offering a theory of how police (re)shape their behavior in response to monitoring regimes.

Moving police research forward requires deeper integration across these literatures and more meaningful cross-field collaboration. Political scientists can aid these efforts by recognizing police departments as government agencies and offering theories of how their location within this broader institutional context structures police incentives. For example, legislatures can issue policy-specific mandates, oversee police operations, alter police budgets, etc. Therefore, political scientists must bring the legislature into our understanding of policing. In the next sections, we participate in this effort by considering the hierarchical structure of government in which police operate, the potential policy tools that are at the disposal of the legislature vis-à-vis police, and the de facto budget constraints on potential legislative oversight activity.

Legislative Capacity and Bureaucratic Control

Under nearly all modern governing arrangements, the elected representatives that write, propose, and pass policies into law delegate the enforcement of these laws to unelected agents. The level of autonomy enjoyed by these agents is a function of the ex ante, de jure discretion afforded to them in implementing policy. Importantly, however, this autonomy is also a function of these agents’ de facto ability to resist ex post oversight. Although nearly all democratic legislatures possess constitutional authority for both ex ante design and ex post oversight, a legislature’s ability to use these tools is subject to a budget constraint: legislative capacity. Legislatures’ endowments of resources (i.e., capacity) for conducting legislative work such as gathering information, writing comprehensive proposals, practicing oversight, and, if necessary, issuing sanctions to exert control over wayward agencies vary considerably. This is familiar in US state politics research, where state legislative capacity has been tracked for several decades (e.g., Bowen and Greene 2014; Squire 2007).

Recent research indicates that higher-capacity legislatures design more detailed proposals that leave less room for bureaucratic discretion (Huber and Shipan 2002; Vakilifathi 2019). Higher-capacity legislatures also conduct more rigorous oversight of the bureaucracy, monitoring implementation for concordance with legislative intent (Boehmke and Shipan 2015; Lillvis and McGrath 2017). As a result of this design and oversight capacity, voters in states with well-resourced legislatures are more likely to get the policy outcomes they desire (Fortunato and Turner 2018; Lax and Phillips 2012). We continue in this emergent stream of research, identifying the potential role of state legislative capacity for oversight of county and municipal agencies.

State Legislative Oversight of Police Agencies

The level of discretion police officers enjoy is extraordinary, as are the potential consequences of their actions (Lipsky 1980). Even routine, relatively minor decisions—for example, assessing parking fines—can be costly for citizens. At the extreme, officer discretion is a matter of life and death, as police are permitted to subdue civilians with force, including the use of electroshock weapons and firearms. Although discretion may be necessary given the complex and unpredictable environments in which police operate, the severity of the potential consequences for residents from engagement with police also demands robust oversight. This need for discretion and oversight creates a well-known problem with a similarly well-known potential solution in principal-agent arrangements: it can be prohibitively costly to monitor police in real time, but ex post scrutiny may be applied to compel preferred behaviors (McCubbins and Schwartz 1984).

Transparent reporting is one such behavior. We assume that legislatures generally prefer transparency because they (a) require information for policy design and (b) cannot manage agency actions in a state of ignorance. This information includes statistics on crime, records of calls for assistance and corresponding dispatch, agency expenditures, the use of force by officers, etc. Without this information, representatives cannot develop high-quality policy instruments or ensure the conformity of policy execution with legislative intent. Furthermore, legislators have a strong interest—both intrinsic and electoral—in protecting the public welfare. This requires not only creating effective anticrime policy but also constraining abuse of the state’s monopoly on violence. Faithful reporting by police is necessary to successfully pursue these objectives.

Conversely, police agencies have strong incentives toward opacity. First, existing theoretical research argues that the executive can leverage its informational advantage to obtain greater resources (e.g., Banks and Weingast 1992). Qualitative studies of police budgeting strategy support this theoretical consensus: informational advantage is critical and agency heads report exploiting it several times over in interviews with Coe and Wiesel (2001). Second, keeping the legislature in the dark allows agencies to operate with autonomy, able to enforce policy as they see fit, and therefore extract benefits from drift. This is true for both on-the-ground execution by individual officers and broad administrative priorities by agencies, as research indicates that police are averse to information-intensive, line-item budgets that constrain spending discretion (Rubin 2019). Third, opacity allows agencies to operate with impunity, free from fear of reprisal when drifting from their mandate. In the case of police agencies, such drift is particularly onerous given the potential costs of officer abuse.

Police have strong incentives for opacity regarding use of force in particular, as the revelation of police brutality or killings may draw public ire and, with it, the potential for official sanctions; offending officers and their supervisors are more likely to be disciplined, dismissed, or face criminal charges in such climates. These incentives are so strong that police agencies have been accused of falsifying coroner’s reports to conceal their lethality (Egel, Chabria, and Garrison 2017). Evidence indicates police willingness to conceal abuses in other areas as well: inflating clearance rates (Yeung et al. 2018), timecard manipulation (Rocheleau 2019), indiscriminate property seizure (Mughan, Li, and Nicholson-Crotty 2020), and so on. Incentives for opacity extend beyond concealing abuse of office, as recent research by Eckhouse (2021) shows that police systematically manipulate crime statistics, reclassifying rapes as “unfounded,” to improve their metrics.

Apart from deselecting agency leaders, a power that state legislatures only enjoy directly in reference to state-level agents,[Footnote 2] what can legislatures do to encourage transparency? Generally, legislatures have two instruments: sunshine and budget. With the former (sunshine), legislatures may use investigatory powers to discover and publicize missteps to shame agencies into better performance. All state legislative chambers have the authority to subpoena records on expenditures and assets, formal and informal procedure, internal communication, and other data critical to overcoming their informational disadvantage. Using subpoena power, they may also compel testimony from rank-and-file bureaucrats, agency leaders, and outside experts in order to help decipher the information they have gathered or to report on specific decisions, events, and procedures. These testimonial periods serve to generate additional information and/or shame bureaucrats, which elsewhere has been shown to be effective in promoting compliance (e.g., Hafner-Burton 2008).

These are well-designed coercive instruments; thus, the threat of subpoena—backed by the power of contempt—is typically sufficient for compliance with the legislature’s demands. Indeed, a “direct threat” of scrutiny or an explicit ultimatum is rarely required for agency compliance. Instead, an “indirect threat” of scrutiny, produced by a legislature that has, over time, created a culture of compliance by engaging in rigorous oversight, can compel preferred behaviors. In other words, oversight of any agency in the state creates positive externalities for compliance among every agency in the state, as it establishes a legislature’s reputation for scrutiny.[Footnote 3] Of course, legislatures are at times forced to use their coercive powers. For example, in 2018, the Maryland General Assembly formed a special committee, which used its subpoena power extensively, to investigate the Baltimore Police Department’s gun-tracing task force (Fenton 2020). That same year, the Florida House issued a series of subpoenas to the Broward County and Palm Beach County Sheriff’s Offices in its investigation of police conduct during the Parkland shooting (Koh 2018), and the Nebraska legislature subpoenaed the head of its State Department of Correctional Services to deliver extensive information and testimony regarding its lethal injection protocol (Duggan 2018).

These investigations may reveal issues that require legislative solutions to guide future behavior, including the provision of additional resources to agencies, the closure of statutory loopholes, etc. Should agencies remain recalcitrant, however, the legislature may employ its ultimate coercive power: reduced agency funding (or the threat thereof). Importantly, the budgetary powers of state legislatures apply to state and substate agencies because states provide direct funding to county and municipal police, as they do for county and municipal public health clinics, schools, etc. In 2017, 32% of total local government revenue came from state transfers (Tax Policy Center 2017), providing great leverage to state legislatures in coercing preferred behaviors from substate actors. Moreover, local governments actively lobby states for these resources on a regular basis (Payson 2020), affording legislatures repeated opportunities for influence.

Although all state legislatures possess the de jure powers of oversight and budgeting, their de facto capacity to engage in these behaviors—their time and resources for legislative work—varies considerably. Squire (2007) documents these resource endowments by tracking the degree to which legislatures differ in session length, representative salaries, and staff allocations, which determine (respectively) the time available for legislative activity, members’ ability to invest in expertise (or become “full-time” legislators), and the labor available to legislators for information gathering, etc. For example, although representatives in California have ample resources for investigating and scrutinizing various agencies, representatives in New Hampshire are resource starved. California’s legislators are paid an annual salary of $114,877 plus $206 for each session-day, have an average of 18 staffers, and meet nearly every business day. New Hampshire General Court members, on the other hand, are paid just $100 per year total, share 150 staffers among 424 members, and meet fewer than 25 days per year. Our central expectation is that agencies will be more forthcoming—that is, police will share more information—when the legislature’s capacity for compliance-inducing behaviors is greater. Therefore, when the legislature’s resource endowment increases, so too will agencies’ tendency to transmit information.[Footnote 4]
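The size of the California and New Hampshire gap is easier to see as simple ratios. The short sketch below uses only the figures quoted in the paragraph above; the variable names are illustrative, and the calculation is not the capacity measure used in the analysis (it ignores session length and the California per diem).

```python
# Figures as quoted in the text above; variable names are illustrative.
ca_staff_per_member = 18      # average staffers per California legislator
nh_total_staff = 150          # staffers shared across the New Hampshire General Court
nh_members = 424              # New Hampshire General Court members

# Staff available per New Hampshire member, and the California-to-New Hampshire ratio.
nh_staff_per_member = nh_total_staff / nh_members
staff_ratio = ca_staff_per_member / nh_staff_per_member

ca_salary = 114_877           # annual salary, excluding the $206 session-day payment
nh_salary = 100               # annual pay
salary_ratio = ca_salary / nh_salary

print(f"NH staff per member: {nh_staff_per_member:.2f}")
print(f"CA-to-NH staff ratio: {staff_ratio:.1f}")
print(f"CA-to-NH base salary ratio: {salary_ratio:.0f}")
```

On these figures, a New Hampshire member has access to roughly a third of a staffer, so the per-member staffing gap is about fiftyfold and the base salary gap exceeds a thousandfold.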

We have made the case that state legislatures require agency transparency, particularly in the case of crime and policing, but must also establish why compelling transparency from local agencies requires state legislatures—why must state legislatures direct their oversight capacity downward to county and municipal agencies in order to get these data? After all, county boards and municipal councils are the representative bodies to which these agencies are immediately accountable. First, many city and county legislatures may lack the capacity for meaningful oversight, as these bodies meet infrequently and their members are often part-time workers. For example, data on city councilor compensation in California and Florida reveal that the median councilor is paid just $5,562 annually, with 78% falling under the national poverty line and 11% paid nothing at all. Second, local bodies may lack the political will to oversee their own police agencies, necessitating state legislative involvement. Councilors may be unwilling to scrutinize their police agencies for fear of electoral reprisals, producing a kind of regulatory capture. Zimring (2017) discusses these local conflicts of interest regarding police accountability at length, and political science research shows that police use their localized influence to extract substantial rents from municipal governments (Anzia and Moe 2015). These problems dissipate at the state level as membership is geographically diverse, allowing representatives from one city to lead scrutiny of police in another.

Taken together, these factors reveal that local oversight may prove insufficient and state intercession may often be necessary, especially given the inherent complexities in evaluating police behaviors and budgeting practices. This may help explain why previous research has shown that local policing is responsive to state-level political processes at similar (Chaney and Saltzstein 1998) or greater levels (Levitt 1997) than to municipal politics. Thus, if state legislatures play the role we argue, the effects of legislative capacity should be detectable at the state, county, and municipal levels.[Footnote 5]

Further discussion of this relationship, including more detailed description of state legislatures’ investigatory powers and additional examples of state legislative oversight of police agencies, at all levels, across 11 states, is given in Appendix A. We also show a strong, positive relationship between legislative capacity and legislative responsiveness to the George Floyd protests in May–June of 2020. Collectively, this discussion supports our argument that strong state legislatures often assume an oversight role vis-à-vis local agencies, which we test empirically in the following sections.

Research Design

We evaluate our central expectation—that the transparency of state and substate police agencies is increasing with state legislative capacity—in two main studies.[Footnote 6] Both rely on data from the Department of Justice’s (DOJ) Uniform Crime Reporting (UCR) program, which is administered by the Federal Bureau of Investigation (FBI 2020):

The FBI’s Uniform Crime Reporting (UCR) Program serves as the national repository for crime data collected by law enforcement. Its primary objective is to generate reliable information for use in law enforcement administration, operation, and management. Considered a leading social indicator, UCR data is used for monitoring fluctuations in crime levels, evaluating policies, and regulating staffing levels. In addition to the American public relying on the data for information, criminologists, sociologists, legislators, city planners, the media, and other students of criminal justice use them for a variety of research and planning purposes.

Each year, the UCR provides every police agency in the US with a detailed framework for classifying, counting, and reporting events, including, but not limited to, homicide, so-called justifiable homicide by a police officer, sexual assault, and “clearances.” Importantly, participation is voluntary—at the discretion of individual agencies—with many agencies choosing not to participate. Moreover, the DOJ does not audit these data or sanction individual agencies for nonparticipation. This is important for the research design because it means that all police agencies receive an identical stimulus from the DOJ but no centralized incentive to comply.

Given the voluntary nature of the program, UCR data are often (rightly) maligned as inadequate for assessing patterns of crime. For example, Maltz and Targonski (2002, 299) note the extent of data missingness and conclude that the “crime data, as they are currently constituted, should not be used, especially in policy studies.” Regarding killings by police, specifically, Zimring (2017, 29) writes that “the voluntary nature of the reporting system means that a significant number of killings by police do not get included in the official numbers.” Although we discuss these limitations in the following sections, it is important to note that, for the purposes of our study, this is a feature and not a bug. We are interested not in explaining variation in crime itself but variation in data sharing by police. Therefore, it is imperfect compliance with the UCR program that enables us to effectively test our argument on the role of legislative capacity. Specifically, compliance with UCR requests should be greater for agencies facing the threat of legislative scrutiny, the credibility of which is increasing in the state legislature’s oversight capacity. From this perspective, the UCR data requests are analogous to an annual audit study of all police agencies where the treatment is time- and state-varying legislative capacity.

We outline above why legislatures would prefer police agencies to share data, but why would legislatures want to compel participation in the UCR in particular? First, UCR participation has substantial efficiency advantages over alternative means of data collection, at least from the legislature’s perspective. When legislatures want information on crime, policing, and public safety, they may either solicit this information on an ad hoc basis—requiring the design, funding, and implementation of data-collection regimes—or they may encourage their agencies to participate in the UCR at a substantial resource savings. Second, UCR data allow for comparison that may be of interest to legislatures. Because it is a national program, states are able to compare outcomes on important metrics using common definitions and criteria. This allows legislatures to, for example, evaluate whether innovations used in other states have proven effective and are worth deeper consideration. With ad hoc, individualized data alone, such comparison is difficult.[Footnote 7]

Taken together, UCR participation has the potential to yield information that is more valuable than innovating a new program, at a lower cost. This is likely why most states delegate the entire enterprise of crime and policing data collection and dissemination to the UCR. We see this manifest in reliance on UCR statistics in state legislative reports on crime and policing (e.g., Joint Legislative Audit and Review Commission 2008), or state legislative directives to begin reporting certain information into the UCR (Texas SB 349 [2021]). Of course, these directives only have teeth in proportion to the legislature’s capacity to compel compliance. When states do create their own programs for data dissemination, they tend to be a state-specific reporting of UCR-submitted data, as in Maryland and South Carolina (Criminal Justice Information Services 2019; Williams Reference Williams2020), or UCR data supplemented by collecting additional information that the UCR does not request. For example, North Carolina’s Highway Patrol traffic stop database was designed to collect a specific type of data—driver demographics and various outcomes of traffic stops made by state troopers—outside the purview of the UCR.Footnote 8 Stated alternatively, the existence and low cost (to states) of the UCR has effectively preempted the development of alternative, state-designed crime and policing data infrastructure.

To estimate the effect of state legislative capacity on police agency transparency, we undertake two empirical studies. First, we evaluate whether agencies are more likely to comply with DOJ data requests as their state legislature’s capacity for oversight increases, by analyzing administrative records indicating UCR participation for all municipal, county, and state police agencies in the 50 statesFootnote 9 between 1960 and 2017 in a generalized difference-in-differences (DiD) framework. The data reveal strong evidence for a plausibly causal effect of capacity on UCR compliance.

Second, we take advantage of several recent initiatives to crowdsource reliable data on police lethality, including well-known projects by The Washington Post and The Guardian, to assess the accuracy of data reported by agencies.Footnote 10 It is widely accepted that these crowdsourced data provide more accurate information on the extent of killings by police than do official statistics (Zimring Reference Zimring2017).Footnote 11 Moreover, these projects are among the only systematic efforts by nonpolice to compile information overlapping the UCR and therefore present a rare opportunity to systematically scrutinize the accuracy of UCR data. Our expectation is that higher legislative capacity will be associated with more transparent reporting of deadly force. The data support this, indicating fewer reporting discrepancies in states with high-capacity legislatures. Finally, we assess the effect of a policy intervention that mandates external investigation of deadly force in seven states and find plausibly causal evidence that state legislative intercession increases police transparency.

Study 1: UCR Compliance

Our administrative data include all state, county, and municipal police agencies in the 50 states from 1960 to 2017, indicating whether or not agencies complied with annual UCR data requests. The data are split into two sample periods—1960–1994 and 1995–2017—because the records before 1995 were obtained as physical media and then parsed from a series of quite large, unformatted (or unusually formatted) text documents.Footnote 12 Therefore, we cannot be completely certain that we have an exhaustive accounting for the 1960–1994 period and analyze the periods separately to avoid contaminating the latter, exhaustive sample. There are 19,095 active agencies across the two periods.Footnote 13

We do not analyze the scope or accuracy of the data submitted in this section, merely the most basic level of compliance: whether the agency provided any information at all in response to the DOJ’s request. This is common in the audit studies that are similar in design to ours. In general, compliance trends upward over the sample period, with about 37% of agencies reporting in 1960 compared with 76% in 2017. Parsing these data further, in the older sample (1960–1994) only 1% of agencies comply every year, 15% never comply, and 84% comply intermittently. In the more recent sample (1995–2017), 48% of agencies comply every year, 8% never comply, and 44% comply intermittently.
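The three-way breakdown above (agencies that comply every year, never, or intermittently) can be illustrated with a minimal sketch. The function names and the toy records below are hypothetical and are not the authors' data or code; the sketch assumes each agency's history is a list of yearly yes/no indicators.

```python
from collections import Counter

def classify_compliance(reported_by_year):
    """Label an agency by its reporting history.

    reported_by_year: non-empty list of booleans, one per sample year,
    True if the agency submitted any data at all that year.
    """
    if all(reported_by_year):
        return "always"
    if not any(reported_by_year):
        return "never"
    return "intermittent"

def compliance_shares(histories):
    """Share of agencies per category, given {agency_id: [bool, ...]}."""
    counts = Counter(classify_compliance(h) for h in histories.values())
    total = sum(counts.values())
    return {label: counts[label] / total
            for label in ("always", "never", "intermittent")}

# Hypothetical toy records for illustration only.
toy = {
    "agency_a": [True, True, True],
    "agency_b": [False, False, False],
    "agency_c": [True, False, True],
    "agency_d": [False, True, True],
}
shares = compliance_shares(toy)
```

Here compliance is coded at the most basic level, matching the text: any submission in a year counts as compliance, regardless of scope or accuracy.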

Our capacity measure is an adaptation of the Squire (Reference Squire2007) index—the industry standard measure of capacity—which combines the institutional endowments discussed above: legislator compensation, length of legislative sessions, and legislative staff. As Squire notes, the original measure is calculated intermittently and always relative to Congress, making the index inappropriate for dynamic analyses like ours. Given these limitations, we instead gather raw data on legislator compensation, session length, and staff expenditures for each legislative session and scale the values into a summary measure using Quinn’s (Reference Quinn2004) factor analytic model.Footnote 14 Because our adapted measure uses inflation-adjusted dollar amounts and scales all years jointly, it is better suited for our over-time analysis.Footnote 15 In addition to the modified Squire index, we also include the imposition of legislative term limits as an additional proxy for capacity, as term limits cause a sudden and permanent reduction to aggregate experience and institutional memory.

To sum up, the outcome in Study 1 is a police agency’s choice to comply with a UCR data request. The treatment variables are the resource-based legislative capacity measure and term limit imposition, and we use a generalized difference-in-differences design, relying on two-way (state and year) fixed effects, for identification (Angrist and Pischke Reference Angrist and Pischke2008).Footnote 16 We estimate and report the results for several variations of this model on both samples, starting with reduced-form models where UCR participation is regressed on capacity and term limits with only state and year fixed effects.
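The logic of a two-way fixed effects estimator can be sketched as follows. This is a simplified illustration under stated assumptions, not the authors' specification: it assumes a balanced panel, a single continuous regressor, and noise-free toy data, whereas the article's models use agency-level binary outcomes and additional covariates. The function name `twfe_slope` and all values are hypothetical.

```python
def twfe_slope(panel):
    """Two-way fixed effects slope via the within (double-demeaning) transform.

    panel: dict mapping (unit, period) -> (x, y). Must be balanced, because
    simple double demeaning is only exact for balanced panels.
    """
    units = sorted({u for u, _ in panel})
    periods = sorted({t for _, t in panel})
    assert len(panel) == len(units) * len(periods), "balanced panel required"

    def demean(idx):
        vals = {k: v[idx] for k, v in panel.items()}
        grand = sum(vals.values()) / len(vals)
        unit_mean = {u: sum(vals[(u, t)] for t in periods) / len(periods)
                     for u in units}
        time_mean = {t: sum(vals[(u, t)] for u in units) / len(units)
                     for t in periods}
        # Subtract unit and period means, add back the grand mean.
        return {(u, t): vals[(u, t)] - unit_mean[u] - time_mean[t] + grand
                for (u, t) in vals}

    x_dm, y_dm = demean(0), demean(1)
    num = sum(x_dm[k] * y_dm[k] for k in panel)
    den = sum(x_dm[k] ** 2 for k in panel)
    return num / den

# Hypothetical toy panel: y = unit effect + year effect + 0.5 * x, no noise.
unit_fe = {"A": 1.0, "B": 2.0, "C": -1.0}
time_fe = {0: 0.0, 1: 0.3, 2: 0.6}
x_vals = {("A", 0): 0, ("A", 1): 1, ("A", 2): 1,
          ("B", 0): 0, ("B", 1): 0, ("B", 2): 1,
          ("C", 0): 0, ("C", 1): 0, ("C", 2): 0}
panel = {(u, t): (x, unit_fe[u] + time_fe[t] + 0.5 * x)
         for (u, t), x in x_vals.items()}
slope = twfe_slope(panel)
```

Because the unit and year effects are removed by the transform, the estimate depends only on within-state, over-time variation in the regressor that is not shared across states in a given year.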

Next, we generalize and estimate more fully specified models. First, we separately evaluate the influence of capacity on agencies with appointed and elected heads. DeHart (Reference DeHart2020) notes that sheriffs are unusual among American police agency heads, as nearly all of them are elected, whereas state and municipal agency heads are appointed. Being elected rather than appointed should partially insulate sheriffs from state legislative pressures because elections both change the relevant principal and endow sheriffs with greater legitimacy, enhancing public support for the office and the individuals who hold it. To assess whether elected (relative to appointed) agency heads are less sensitive to state-legislative scrutiny, we use DeHart’s 2020 classification of all US counties as electing or appointing their sheriffs and interact an indicator for elections with our covariates of interest.

Second, given the low risk of simultaneity (i.e., UCR compliance having an effect on legislative capacity)Footnote 17 and the careful attention paid to measurement, the biggest threat to sound inference in our analysis is potential confounding. Although the DiD estimator should be reasonably robust to the omission of potential confounders (e.g., Angrist and Pischke Reference Angrist and Pischke2008), we sought to further assuage potential concerns that our results are driven by omitted variable bias. Therefore, we gathered data to account for potential confounders including indicators for agency type (city, county, state, and whether or not the sheriff’s office is noted in the constitution, following Falcone and Wells [Reference Falcone and Wells1995]),Footnote 18 the size of the population to which the agency is accountable, how many officers and civilians each agency employs, government partisanship, and the percentage of Black residents. In addition to our measure of government partisanship, we also account for time-varying perturbations from party control by including Party × Year fixed effects—where the former indicates either Democratic or Republican (relative to divided) control of the legislature and the latter indicates the year of observation. Collectively, these covariates help account for local oversight capacity, agencies’ resources for compliance, and political preferences, among other factors.

In Table 1 we present estimates from reduced-form and fully specified DiD models for each of the two samples. The estimates reveal strong evidence for an effect of state legislative capacity on UCR participation and therefore strong support for our argument that capacity encourages police transparency. In all models, the point estimates are positive, large, and many times greater than their standard error estimate. Substantively, the effect of capacity is remarkable. For example, in the latter sample, if all legislatures had resources equivalent to those of Texas, currently about the mean level of capacity, and increased their resources to the level of one of the higher-capacity legislatures, like Michigan—which would not be an unprecedented change (Squire and Hamm Reference Squire and Hamm2005)—the predicted effect on the probability of UCR participation would be an increase of over 0.06. This translates to an expectation of between 1,200 and 1,300 more agencies complying with UCR data requests each year. Footnote 19
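As a rough consistency check on the figures above, the predicted number of additional complying agencies can be related back to a change in participation probability. The sketch below assumes the 19,095 active agencies reported for the two periods combined serve as the base; the exact number of agencies in the latter sample is not stated here, so the implied probabilities are approximate.

```python
# Assumption: use the 19,095 active agencies reported across both periods
# as the base; the latter-sample count may differ somewhat.
N_AGENCIES = 19_095

def implied_probability_change(additional_agencies, n_agencies=N_AGENCIES):
    """Change in participation probability implied by a count of agencies."""
    return additional_agencies / n_agencies

low = implied_probability_change(1_200)
high = implied_probability_change(1_300)
```

Under this assumption both implied values fall between 0.06 and 0.07, in line with the stated increase of over 0.06.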

Table 1. Legislative Capacity on UCR Participation

Term limit imposition also yields the anticipated effect, sharply reducing compliance probability by about 0.07 in the earlier sample (where there is less variability on the covariate) and 0.17 in the latter sample. Furthermore, the effect of legislative capacity is muted for agencies with elected heads in the latter sample and washed out completely in the earlier sample. Likewise, term limit effects are muted for elected heads in the latter sample, but the interaction is null in the earlier sample. The data suggest that election, relative to appointment, insulates some heads from legislative scrutiny.

In Appendix B, we report the results for all control variables and show that the central result is robust to an array of alternative specifications including interacting capacity with partisan legislative control; analyzing state, county, and municipal agencies separately; and employing an alternative measure of capacity derived from Vannoni, Ash, and Morelli (Reference Vannoni, Ash and Morelli2021). The effect of capacity remains positive, substantively large, and statistically significant under all conditions: increasing state legislative capacity is associated with increases in state, county, and municipal police agency transparency. Finally, we replicate our analysis by examining compliance with the 2016 iteration of the Law Enforcement Management and Administrative Statistics survey (administered by the Bureau of Justice Statistics) and find the central relationship reported here manifest in those data as well: police agencies in states with higher-capacity legislatures are significantly more likely to comply with requests for data.

Although there may, of course, be some additional risk of confounding despite our various checks, the robustness of our results to the inclusion of a variety of observed potential confounds gives us confidence in the direction and relative size of our effects. Overturning these results would require an unmeasured time-varying variable that (a) is jointly related to participation and capacity (i.e., relevant to the main effect), (b) has heterogeneous over-time variation across states (i.e., does not produce a trend proxied by the year fixed effects), and (c) is not already proxied for by the current time-varying controls. Furthermore, sensitivity analyses following Cinelli and Hazlett (Reference Cinelli and Hazlett2020) reveal that such a confounder would need to predict both capacity and UCR participation over 10 times better than does our most predictive control variable (population, which is correlated with compliance: β > 0.06 and t > 50 in both samples) to wash out the capacity effect. Although this is possible, we cannot think of particular covariates satisfying these conditions; otherwise, we would have included them in the model.
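One summary statistic from the sensitivity framework cited above is the robustness value, which can be computed from a coefficient's t-statistic and residual degrees of freedom. The sketch below shows that general formula only; it is not the benchmarking comparison against population that the text reports, and the inputs are hypothetical because the relevant t-statistics and degrees of freedom are not given here.

```python
import math

def partial_r2(t_stat, dof):
    """Partial R^2 of a regressor with the outcome, from its t-statistic."""
    return t_stat ** 2 / (t_stat ** 2 + dof)

def robustness_value(t_stat, dof, q=1.0):
    """Minimum strength of association (partial R^2 with both treatment and
    outcome) a confounder needs to reduce the estimate by a fraction q."""
    f = q * abs(t_stat) / math.sqrt(dof)  # scaled partial Cohen's f
    return 0.5 * (math.sqrt(f ** 4 + 4 * f ** 2) - f ** 2)

# Hypothetical inputs for illustration; not taken from the article's tables.
rv = robustness_value(t_stat=10.0, dof=10_000)
r2 = partial_r2(t_stat=10.0, dof=10_000)
```

With these made-up inputs, a confounder would need to explain roughly 9.5% of the residual variance of both the treatment and the outcome to drive the estimate to zero.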

At minimum, we have shown that there are systematic differences in UCR participation across states and that these are robustly correlated with state legislative capacity. Although the magnitude of this effect may be better identified in subsequent studies, we believe that first revealing this relationship provides substantial value for researchers in two ways. First, our analysis suggests that political oversight is a fruitful area for future research, as political scientists increasingly analyze policing. Second, our analysis provides analysts with information on where, and a plausible explanation for why, data on crime are likely to be of lower or higher quality. Although we do not study crime itself as an outcome here, our analysis has clear implications for research that does.

Study 2: Underreporting Deadly Force

The UCR is the only comprehensive, national effort by the US government to build an accounting of police lethality, but these data are inaccurate.Footnote 20 The intermittent UCR compliance discussed above makes it clear that any accounting of crime or police activity contained in the UCR is incomplete. Furthermore, given the evidence that noncompliance is determined in part by political concerns and recognition of the substantial incentive police agencies have to obfuscate (or exaggerate) certain behaviors and outcomes, the information that is contained in the UCR is almost certainly inaccurate and biased toward reflecting favorably on police agencies. Such bias is likely acute in the case of police use of lethal force or what the UCR calls “justifiable homicide by law enforcement,” and indeed we find that underreporting is pervasive.

Unfortunately, UCR estimates of police lethality have been the standard in academic research for decades. Jacobs and O’Brien (Reference Jacobs and O’Brien1998, 846) went so far as to defend the data as “likely to be accurate because such homicides are difficult to conceal.” As recently as Holmes, Painter, and Smith (Reference Holmes, Painter and Smith2019), these data have been used as credible estimates of police lethality despite discussions of the well-known limitations of these data in academic research (e.g., Klinger Reference Klinger2012) and in the documentation of the data themselves.Footnote 21 Though previous research recognizes UCR shortcomings, recognition alone is insufficient. The fact that the wealthiest and most durable democracy in history does not know how many of its residents are killed by agents of the state is alarming and warrants study such that we may understand the political processes that produce (or permit) this downward bias in the official statistics and how the bias may potentially be alleviated.

We argue that one political factor driving this opacity, or bias, is the inability of legislatures to shape the behavior of police agencies. We evaluate this argument by comparing the UCR’s official statistics to deadly force counts from several crowdsourced initiatives organized in recent years: The Guardian’s The Counted (2015–2016), The Washington Post’s Fatal Force (2015–2016), Mapping Police Violence (2013–2016), Killed by Police (2014–2016), and Fatal Encounters (2013–2016).Footnote 22 Although not ground-truth data, these projects represent the best available estimates on the extent of police killings. Even former FBI Director James Comey has recognized the greater accuracy of these crowdsourced data, calling the UCR statistics “embarrassing and ridiculous” by comparison (Kindy Reference Kindy2015). A central feature of these data is that each case is independently vetted and confirmed, and indeed an independent report issued by the DOJ suggests they provide a superior accounting (Banks Reference Banks, Ruddle, Kennedy and Planty2016). Thus, discrepancies between UCR and the crowdsourced data provide an estimate of (known) undercounting—the number of known police killings that could have been officially reported but were not. Assessing difference across sources in this manner is common in event data analysis of political violence (e.g., Davenport and Ball Reference Davenport and Ball2002).

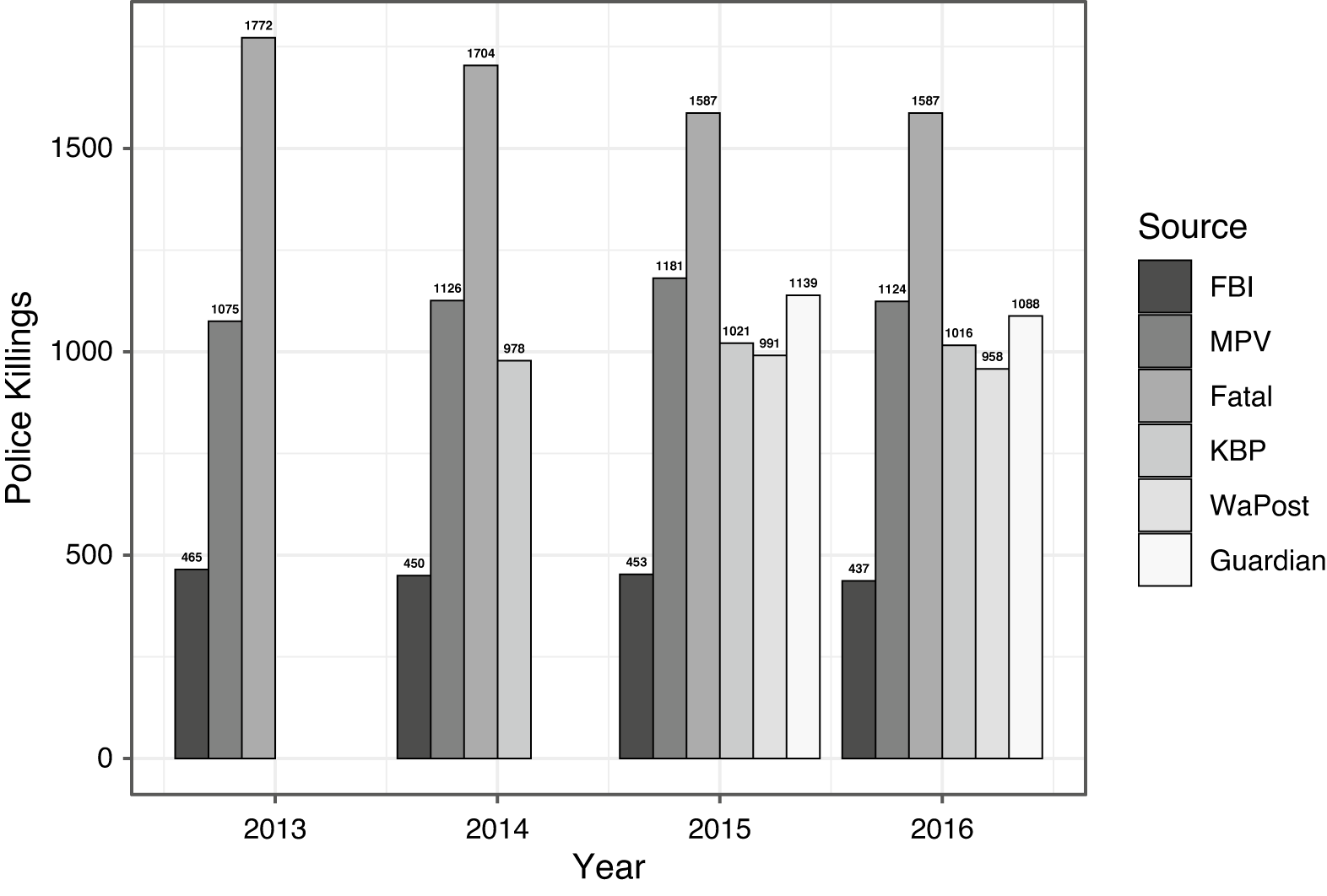

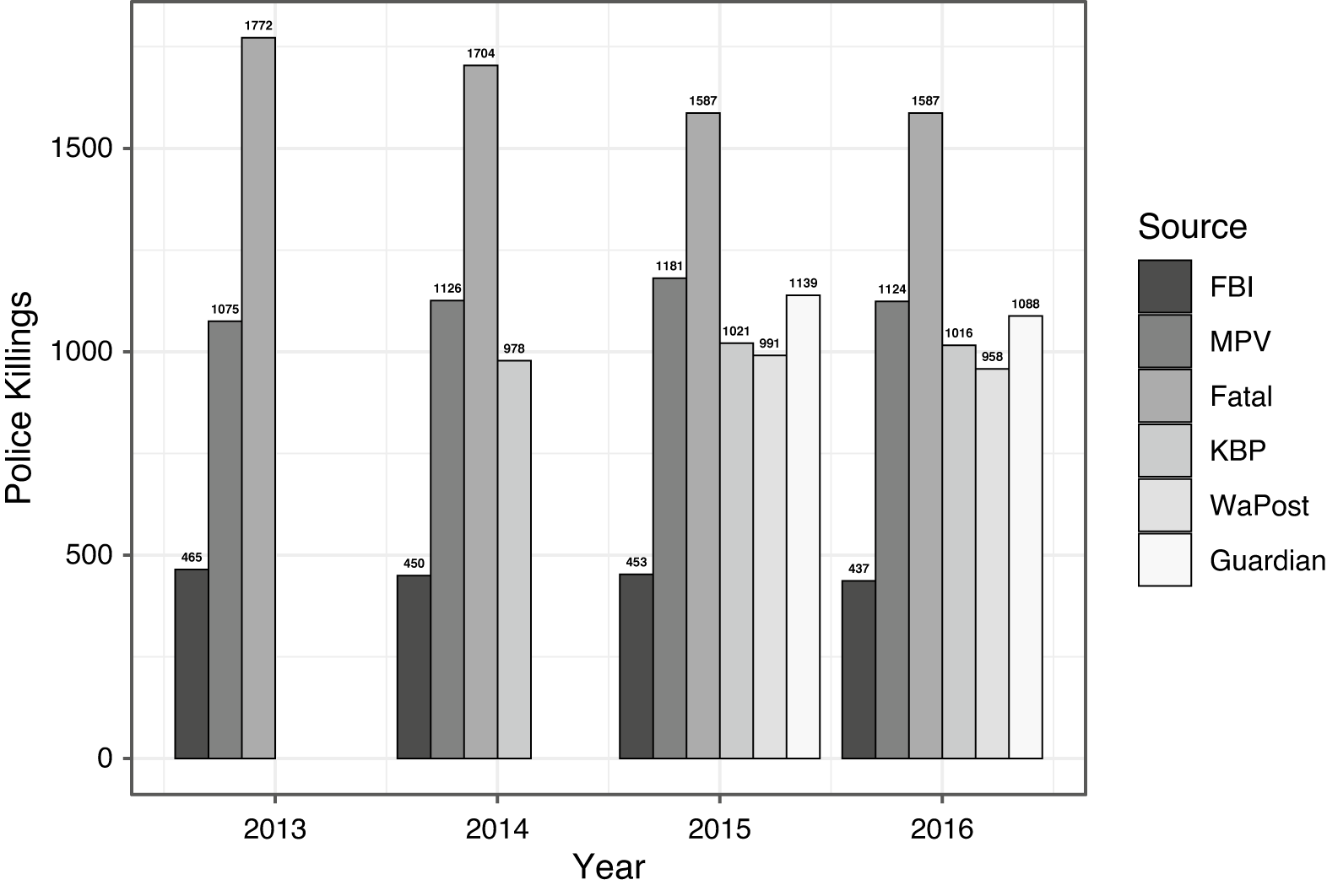

We plot these data in Figures 1 and 2 to demonstrate the systematic underreporting of police killings in the UCR. Figure 1 gives aggregate lethality figures by year, and there are two clear patterns. First, the figures are relatively consistent across time within reporting group. Second, the UCR underestimates police killings by at least 50% relative to crowdsourced estimates every year. We note that Fatal Encounters is a high outlier because it counts “deaths in custody” (e.g., dying of a heart attack while in custody awaiting arraignment) in addition to “justifiable homicides.” Though Fatal Encounters’ counts are likely to be biased upward as a result, these estimates are still useful to include in our comparison set, especially because we know the source and direction of the bias.

Figure 1. Police Killings by Source (2013–2016)

Figure 2. Comparing 2015 Police Killings per 100,000 Residents across the United States

Figure 2 decomposes the 2015 estimates to demonstrate state-level differences in reporting on the incidence of police killings per 100,000 residents. Again, there are two central patterns. The first is the striking degree of variability in the incidence of police killings across the United States. For example, police killings are a relatively rare occurrence in Connecticut but an order of magnitude higher in New Mexico. In 2015, police lethality in all crowdsourced data sources is also substantially greater than the official estimates of drug-related homicides (452) and gang-related homicides (757). The second main pattern is the substantial variability in degree of underreporting. For example, Michigan reports almost all known police killings, whereas Florida fails to report any.

This variability in underreporting is the focus of Study 2, as we argue that a significant portion of it can be attributed to systematic differences in capacity for legislative oversight. To test this, we undertake two additional analyses. First, we compare rates of underreporting of police lethality across states to the states’ legislative capacity. The data indicate that states lacking the capacity for oversight have significantly higher numbers of unreported police killings. Second, we provide evidence for a plausibly causal effect of state legislative intercession on police transparency by analyzing agency-reported deadly force numbers pre–post a policy intervention in seven states. This analysis suggests that the intervention significantly reduces the number of unreported police killings.

To measure the underreporting of police lethality, we calculate the difference between the publicly gathered data and the data contained in the UCR in each state-year (e.g., $\text{WaPost}_{AZ,2016} - \text{UCR}_{AZ,2016}$)—the number of known police killings unreported in official statistics, scaled per 100,000 residents.Footnote 23 The effective sample period for these data is 2013–2016, which limits our modeling options because capacity does not vary meaningfully within states over this period and term limits do not vary at all. Given the lack of within-unit variation in these main predictors, we focus on modeling the cross-sectional variation and, therefore, do not include state fixed effects. Instead, we use state random effects to account for within-unit correlation in unobservables and additional control variables to account for potential state-specific confounders. Theoretically, these are the state’s available resources for collecting and disseminating information on police killings and the preferences of police agencies and legislatures for transparency. Operationally, these enter the model as the state’s wealth (median income), the average number of agency employees (officers and civilians), share of Black population, the partisan control of government, and the political preferences of police agencies. Agency conservatism is captured using Bonica’s (Reference Bonica2016) preference estimates derived from campaign contribution data, and we use the mean estimated ideal point for all contributors working in law enforcement, where greater values indicate greater conservatism.Footnote 24 Research on the connection between political preferences and preferences for police militarism or violence suggests this is the best available measure (Christie, Petrie, and Timmins Reference Christie, Petrie and Timmins1996; Gerber and Jackson Reference Gerber and Jackson2017). Baseline categories for legislative and gubernatorial control are divided control and independent, respectively.Footnote 25, Footnote 26
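The underreporting measure described in this paragraph is a simple scaled difference, sketched below. The function name and the numbers are hypothetical; only the definition (crowdsourced count minus UCR count, per 100,000 residents, by state-year) comes from the text.

```python
def unreported_per_100k(crowdsourced_count, ucr_count, population):
    """Known police killings missing from the UCR, per 100,000 residents,
    for one state-year."""
    return (crowdsourced_count - ucr_count) / population * 100_000

# Hypothetical state-year: 40 killings in a crowdsourced database,
# 10 in the UCR, and 6 million residents.
rate = unreported_per_100k(crowdsourced_count=40, ucr_count=10,
                           population=6_000_000)
```

In this made-up example the state-year has 0.5 known but unreported killings per 100,000 residents.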

Before turning to our results, it is important to recall precisely what we are modeling: a police agency’s propensity to report a killing, given that the killing has occurred. Therefore, the relevant covariates to include are predictors of the choice to report—that is, preferences and resources—not predictors of the event itself, such as local rates of violent crime. Importantly, in addition to being an irrelevant control variable, any official measure of crime would be subject to the same deficiencies as are the UCR estimates of police lethality and would therefore induce estimation bias.

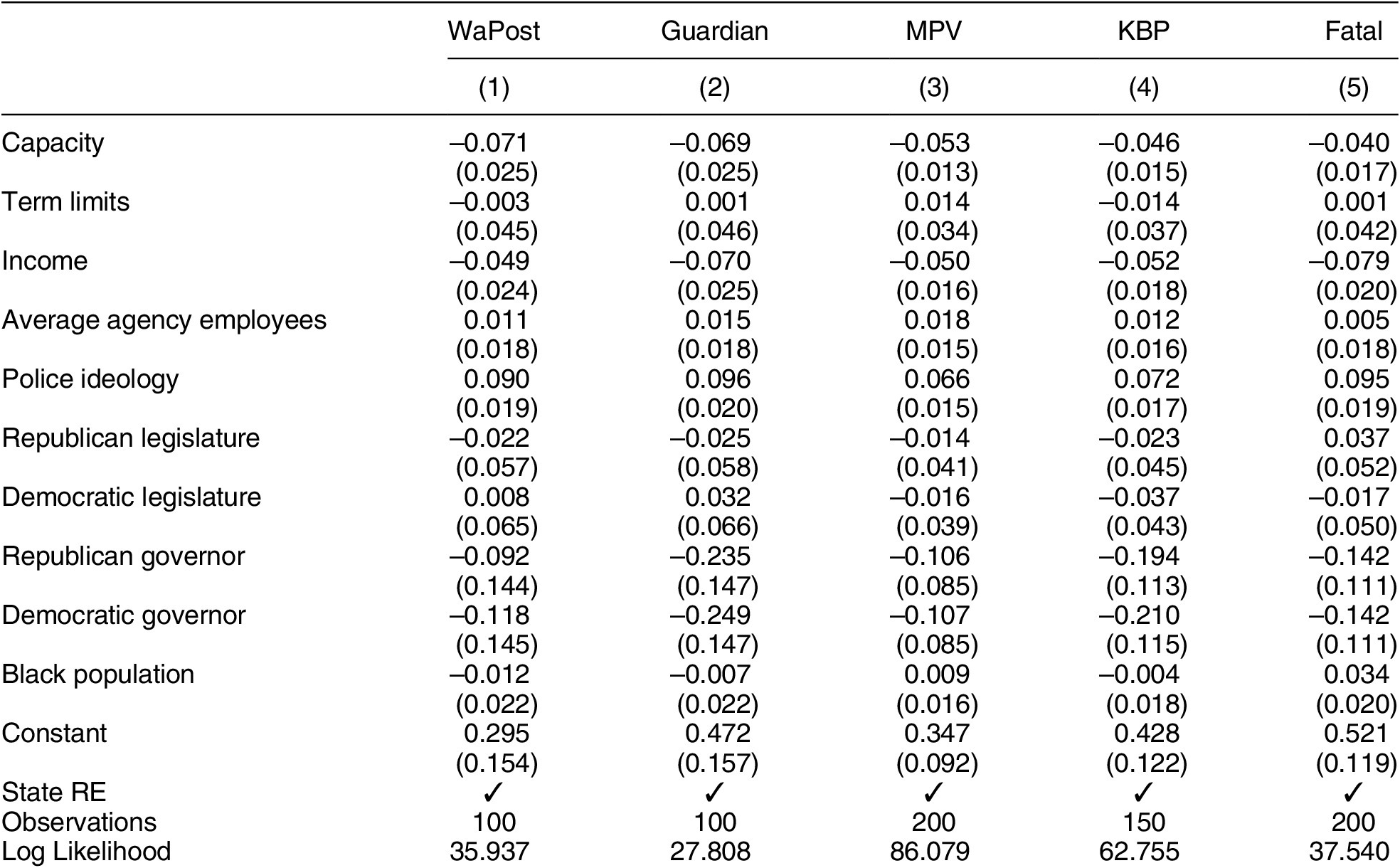

The results are provided in Table 2, with separate models for each crowdsourced database. All covariates are standardized to facilitate interpretation, with each coefficient indicating the effect of a one-standard-deviation (or binary) increase in the predictor. As can be seen, state legislative capacity is consistently associated with less underreporting in official statistics, as agencies in states with higher legislative capacity are more likely to enter police killings in official reports across all data sources. The results indicate that a one-standard-deviation increase in legislative capacity is associated with around 0.035–0.075 fewer unreported police killings per 100,000 residents—or an average of 2.2–4.8 per state annually. Converting all states from their true capacity to the sample maximum (California) is predicted to reduce unreported killings by 284–670 per annum. Also, as expected, the estimate from the Fatal Encounters analysis is smaller and has a larger relative standard error, reflecting our qualitative understanding that these data bias undercounts upward from the true value.Footnote 27 Finally, the term limits indicator, which we would expect to have a positive effect on underreporting, has an effective zero estimate in all models. The strong negative relationship between transparency and term limits revealed in the first study is not manifest here.
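The conversion from a per-100,000 rate to an average per-state count can be reproduced with a short calculation. The average state population of 6.4 million used below is an assumption (roughly the US population divided by 50 in the mid-2010s), not a figure taken from the article.

```python
# Assumption: average state population of about 6.4 million,
# i.e. roughly the mid-2010s US population divided by 50.
AVG_STATE_POP = 6_400_000

def per_state_count(rate_per_100k, population=AVG_STATE_POP):
    """Convert a rate per 100,000 residents into an expected count."""
    return rate_per_100k * population / 100_000

low = per_state_count(0.035)   # lower end of the reported range
high = per_state_count(0.075)  # upper end of the reported range
```

Under that assumption the two ends of the range come out at about 2.2 and 4.8 fewer unreported killings per state annually, matching the figures in the text.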

Table 2. Police Killings Unreported to UCR

To be clear, causal identification in this analysis is complicated by the lack of random treatment assignment, the sparsity of the outcome data, the time invariance of the main predictors, and our inability to include state fixed effects. Each precludes standard design- or model-based strategies for causal inference. Although reasonable people can disagree on what can be drawn from observational data analysis without ideal identification strategies, at minimum our analysis makes novel use of crowdsourced data to identify the set of states where police killings are most likely to be underreported and demonstrates that this underreporting is significantly negatively correlated with legislative capacity. Should researchers have alternative (i.e., noncapacity) theories explaining this relationship, we strongly encourage them to pursue this in future research. To further investigate our capacity-based argument, however, we can assess the causal effect of legislative intercession on the transparent reporting of deadly force, even if we cannot assess the causal effect of legislative capacity per se, which is of course an antecedent factor to intercession.

Since 2011, California, Colorado, Connecticut, Illinois, New York, Utah, and Wisconsin have implemented policies requiring and regulating the investigation of officer-involved deaths.Footnote 28 Consistent with our general argument, these laws are positively correlated with legislative capacity ($p = 0.017$). Each of these laws prescribes a systematic investigation protocol for officer-involved deaths (or use of force) by actors outside of the officer’s agency and mandates the application of the protocol. Therefore, these laws prescribe a level of transparency and formal memorialization of the events. This both reduces the incentive to obfuscate in official statistics by expanding the number of actors with knowledge of the event and increases the probability of being held accountable for underreporting. Our empirical expectation is that this policy intervention should reduce the number of unreported police killings. To test this, we estimate a series of DiD models where the outcome is the difference between the publicly gathered data and UCR data in each state-year (e.g., $\text{WaPost}_{AZ,2016} - \text{UCR}_{AZ,2016}$). Our covariate of interest is the intervention status for the state-year. We expect the treatment to have a negative effect—to reduce the number of unreported killings.Footnote 29


Table 3 presents the DiD analyses of the effects of the investigation policy. Every model recovers a negative and significant estimate, revealing evidence for a plausibly causal effect of state legislative intercession on the transparent reporting of deadly force. Substantively, our results suggest that, by imposing investigation policies, the treated states improved the accuracy of police self-reporting by an average of 5 to 10 deaths annually. These results, taken together with the robust negative correlations between capacity and underreporting in Table 2, provide compelling evidence for our overarching argument that endowing state legislatures with the resources for rigorous oversight (or policy intervention) can substantially improve bureaucratic transparency across levels of government. This, in turn, should help legislatures acquire information that is necessary for policy design as well as help direct and constrain the behavior of agencies, ultimately helping align government action with voters’ preferences.
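The core comparison behind a difference-in-differences estimate can be sketched in its simplest two-group, two-period form. This is a stylized illustration with made-up numbers, not the models reported in Table 3, which use state-year data and may differ in specification; the function names are hypothetical.

```python
def mean(values):
    return sum(values) / len(values)

def did_2x2(treated_pre, treated_post, control_pre, control_post):
    """Change in the treated group minus change in the control group."""
    treated_change = mean(treated_post) - mean(treated_pre)
    control_change = mean(control_post) - mean(control_pre)
    return treated_change - control_change

# Hypothetical unreported killings per 100,000 residents by state-year.
effect = did_2x2(
    treated_pre=[0.30, 0.34],   # adopting states, before the law
    treated_post=[0.20, 0.24],  # adopting states, after the law
    control_pre=[0.28, 0.32],   # non-adopting states, same early years
    control_post=[0.27, 0.31],  # non-adopting states, same later years
)
```

A negative value, as in this toy example, indicates that underreporting fell more in adopting states than in non-adopting states over the same period, which is the direction the text expects.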

Table 3. Difference-in-Differences Analysis of State Investigation Laws

Discussion

We have argued that state legislatures—which depend upon state, county, and municipal agencies for policy-relevant information—can direct their scrutiny powers downward, helping to compel bureaucratic transparency of state and substate agencies. Thus, increasing state legislative capacity can increase information sharing and ultimately improve legislative management of agencies at each of these levels. An analysis of administrative records of 19,095 police agencies’ compliance with official data requests over nearly six decades yielded strong evidence for a plausibly causal effect of capacity on state, county, and municipal agency transparency. We then assessed the accuracy of reported data by comparing the number of known unreported police killings in each state over recent years to states’ legislative capacity, finding a robust negative association—states with higher-capacity legislatures had significantly lower rates of unreported police lethality. A DiD analysis of a transparency intervention provided complementary evidence that state legislative intercession improves reporting of police lethality.

Taken together, we believe this provides compelling evidence that (a) oversight can ameliorate informational asymmetries between unelected government agents and elected representatives and (b) state legislative oversight capacity can be directed downward to scrutinize substate agencies. This contributes to our understanding of interbranch conflict across levels of government, the broader implications of investing in legislative capacity, and the link between these investments and democratic responsiveness. Furthermore, police present a difficult test of our argument on the potential for state legislative bodies to affect local-agency behaviors. Relative to other agencies, police are generally popular (Gallup 2019) and possess strong unions, which may make police less sensitive to legislative demands for transparency. This suggests that our findings here should generalize well to other departments of the executive.

Even absent these broader implications for oversight, we believe that the specific analysis of policing here is important. As Zimring (Reference Zimring2017) notes, the volume of killings by police is immense, with roughly 1,000 deaths per year, over half of which go unreported in official statistics. This is nearly 40 times the lethality threshold used in some measures of civil conflict. Yet, this particular form of political violence receives comparatively little attention in political science research. We believe that our approach and findings here, complementing Soss and Weaver’s (Reference Soss and Weaver2017) argument to study police within the broader context of governance and democracy, suggest a potentially fruitful path toward better understanding how police behaviors may be brought in line with public sentiment. Our work also contributes to a fast-growing literature on the deep and wide-ranging implications of legislative capacity, an area of legislative research of which scholars have barely begun to scratch the surface. Furthermore, research on the relationship between governing bodies across different levels is growing but overwhelmingly focused on bottom-up interactions (e.g., the lobbying of Congress or state legislatures by municipal governments: Loftis and Kettler Reference Loftis and Kettler2015; Payson Reference Payson2020), whereas this article suggests that top-down interactions also deserve attention.

In addition, we provide evidence that the gathering and dissemination of official data is politically motivated, obscuring our understanding of government action. There are several implications. First, if agency behaviors are conditioned by expectations of scrutiny, then coercing transparency may effectively decrease police violence. Second, insights from previous studies using official statistics on crime and policing should not be taken on faith and may warrant reexamination, especially studies that use UCR data without accounting for the considerable selection bias in their generation and dissemination: given the above analyses, it is difficult to think of any circumstance under which UCR data are appropriate for studying the causes of crime. Third, researchers need to consider the data-production process explicitly and apart from the event-generation process (Cook and Weidmann Reference Cook and Weidmann2019), especially when data providers have interests that are inconsistent with transparency.

To see how this matters, consider research by Knox, Lowe, and Mummolo (2020) showing that official statistics can mask racially biased policing by recording only the conditions of interactions (e.g., the race and speed of a driver an officer has pulled over) and not recording the conditions of interaction opportunities (e.g., the race and speed of all drivers an officer observed). Importantly, the authors’ estimates of the extent to which recorded data obscure the true nature of the event-generating process assume that the data-generating process is unbiased (e.g., that the race and speed of all drivers an officer has pulled over are faithfully recorded). Our analyses show that this almost certainly is not the case, so we may consider the bias estimated by Knox, Lowe, and Mummolo (2020) a lower bound, at least in the case of behaviors or events, like racially biased use of force, that bear potential disciplinary costs.

Carefully considering how and why our realized data deviate from ground-truth events is important in areas aside from crime as well. Just as police have incentives to underreport their lethality, school personnel have incentives to manipulate test scores (Jacob and Levitt 2003) and the Environmental Protection Agency may face pressure to underestimate pollution-related deaths (Friedman 2019). Moreover, as news media consumption becomes increasingly segmented and local media divestment continues (Peterson 2021), the ability of the press to serve as a watchdog declines. Therefore, better understanding political avenues for ensuring more accurate government data reporting is increasingly important. This matters both for less salient issue areas where auxiliary media-based data are not easily and readily available and, in the future, for high-salience issues as media resources continue to be diminished. Good governance and good science require good data, and we simply cannot rely on media to fill in gaps in the official statistics. Thus, we urge our colleagues to study the political economy of official statistics, critically scrutinizing the accuracy, reliability, and political determinants of official data needed for government accountability and scientific inquiry.

SUPPLEMENTARY MATERIALS

To view supplementary material for this article, please visit http://doi.org/10.1017/S0003055422000624.

DATA AVAILABILITY STATEMENT

Research documentation and data that support the findings of this study are openly available at the American Political Science Review Dataverse: https://doi.org/10.7910/DVN/MFFV3C.

ACKNOWLEDGMENTS

This paper benefited from feedback from participants at the 2019 Annual Meeting of the Southern Political Science Association; workshops at American University, ETH-Zurich, Stony Brook University, University of California, San Diego, and University of St. Gallen; comments from Mallory Compton, Andrew Flores, Gerald Gamm, Mary Kroeger, Casey LaFrance, Josh McCrain, Rob McGrath, Ken Meier, Jonathan Mummolo, Tessa Provins, and Ian Turner; and consultation with Elizabeth Davis and Zhen Zeng at the Bureau of Justice Statistics. We are also grateful to the editor and three anonymous reviewers for constructive comments, criticisms, and suggestions. Any errors of omission or commission are of the authors’ making.

CONFLICT OF INTEREST

The authors declare no ethical issues or conflicts of interest in this research.

ETHICAL STANDARDS

The authors affirm this research did not involve human subjects.
