1. Introduction

Let

![]() $X = (X_1, \ldots, X_n)$

be a random vector with coordinates in a Polish space E, and let

$X = (X_1, \ldots, X_n)$

be a random vector with coordinates in a Polish space E, and let

![]() $f\,:\, E^n \to \mathbb{R}$

be a measurable function such that f(X), for n large, is square-integrable. For a large class of such functions f it is expected that as n grows without bound, f(X) behaves like a normal random variable. To quantify such estimates one is interested in bounding the distance between f(X) and the normal random variable

$f\,:\, E^n \to \mathbb{R}$

be a measurable function such that f(X), for n large, is square-integrable. For a large class of such functions f it is expected that as n grows without bound, f(X) behaves like a normal random variable. To quantify such estimates one is interested in bounding the distance between f(X) and the normal random variable

![]() $\mathcal{N} \sim N (m_f, \sigma_f^2)$

where

$\mathcal{N} \sim N (m_f, \sigma_f^2)$

where

![]() $m_f = \mathbb{E}[f(X)]$

and

$m_f = \mathbb{E}[f(X)]$

and

![]() $\sigma_f^2 = Var (f(X))$

. Two such distances of interest are the Kolmogorov distance

$\sigma_f^2 = Var (f(X))$

. Two such distances of interest are the Kolmogorov distance

and the Wasserstein distance

where this last supremum is taken over real-valued Lipschitz functions h such that

![]() $|h(x) - h(y)| \leq |x - y|$

for all

$|h(x) - h(y)| \leq |x - y|$

for all

![]() $x, y \in \mathbb{R}$

.

$x, y \in \mathbb{R}$

.

For the case where the components of X are independent random variables, upper bounds on

![]() $d_W(f(X), \mathcal{N})$

were first obtained in [Reference Chatterjee2], and these were extended to

$d_W(f(X), \mathcal{N})$

were first obtained in [Reference Chatterjee2], and these were extended to

![]() $d_K(f(X), \mathcal{N})$

in [Reference Lachièze-Rey and Peccati14]. Both results rely on a class of difference operators that will be described in Section 2.

$d_K(f(X), \mathcal{N})$

in [Reference Lachièze-Rey and Peccati14]. Both results rely on a class of difference operators that will be described in Section 2.

Very few results address the (weakly) dependent case, and in the present work we provide estimates on

![]() $d_K(f(X), \mathcal{N})$

and

$d_K(f(X), \mathcal{N})$

and

![]() $d_W(f(X), \mathcal{N})$

when X is generated by a hidden Markov model. Such models are of interest because of their many applications in fields such as computational biology and speech recognition; see e.g. [Reference Durbin, Eddy, Krogh and Mitchison7]. Recall that a hidden Markov model (Z, X) consists of a Markov chain

$d_W(f(X), \mathcal{N})$

when X is generated by a hidden Markov model. Such models are of interest because of their many applications in fields such as computational biology and speech recognition; see e.g. [Reference Durbin, Eddy, Krogh and Mitchison7]. Recall that a hidden Markov model (Z, X) consists of a Markov chain

![]() $Z = (Z_1, \ldots, Z_n)$

which emits the observed variables

$Z = (Z_1, \ldots, Z_n)$

which emits the observed variables

![]() $X = (X_1, \ldots, X_n)$

. The possible states in Z are each associated with a distribution on the values of X. In other words the observation X is a mixture model where the choice of the mixture component for each observation depends on the component of the previous observation. The mixture components are given by the sequence Z. Note also that given Z, X is a Markov chain.

$X = (X_1, \ldots, X_n)$

. The possible states in Z are each associated with a distribution on the values of X. In other words the observation X is a mixture model where the choice of the mixture component for each observation depends on the component of the previous observation. The mixture components are given by the sequence Z. Note also that given Z, X is a Markov chain.

The content of the paper is as follows. Section 2 contains a short overview of results on normal approximation in the independent setting and introduces a simple transformation involving independent and identically distributed (i.i.d.) random variables that allows us to adapt these estimates to the hidden Markov model. Moreover, further quantitative bounds are provided for the special case when f is a Lipschitz function with respect to the Hamming distance. Applications to variants of the ones analyzed in [Reference Chatterjee2] and [Reference Lachièze-Rey and Peccati14] are developed in Sections 3 and 4, leading to an extra log-factor in the various rates obtained there. The majority of the more technical computations are carried out and presented in Section 5.

2. Main results

For a comprehensive review of Stein’s method we refer the reader to [Reference Chen, Goldstein and Shao4]. The exchangeable pairs approach was outlined in [Reference Chatterjee2]. We now recall below a few of its main points.

Let

![]() $W \,{:}\,{\raise-1.5pt{=}}\, f(X)$

. Originally in [Reference Chatterjee2], and then in [Reference Lachièze-Rey and Peccati14], various bounds on the distance between W and the normal distribution were obtained through a variant of Stein’s method. As is well known, Stein’s method is a way to obtain normal approximations based on the observation that the standard normal distribution

$W \,{:}\,{\raise-1.5pt{=}}\, f(X)$

. Originally in [Reference Chatterjee2], and then in [Reference Lachièze-Rey and Peccati14], various bounds on the distance between W and the normal distribution were obtained through a variant of Stein’s method. As is well known, Stein’s method is a way to obtain normal approximations based on the observation that the standard normal distribution

![]() $\mathcal{N}$

is the only centered and unit-variance distribution that satisfies

$\mathcal{N}$

is the only centered and unit-variance distribution that satisfies

for all absolutely continuous g with almost-everywhere (a.e.) derivative g

′ such that

![]() $\mathbb{E}|g^{\prime}(\mathcal{N})| < \infty$

(see [Reference Chen, Goldstein and Shao4]), and for the random variable W,

$\mathbb{E}|g^{\prime}(\mathcal{N})| < \infty$

(see [Reference Chen, Goldstein and Shao4]), and for the random variable W,

![]() $|\mathbb{E}[ W g(W) - g^{\prime}(W)]|$

can be thought of as a distance measuring the proximity of W to

$|\mathbb{E}[ W g(W) - g^{\prime}(W)]|$

can be thought of as a distance measuring the proximity of W to

![]() $\mathcal{N}$

. In particular, for the Kolmogorov distance, the solutions

$\mathcal{N}$

. In particular, for the Kolmogorov distance, the solutions

![]() $g_t$

to the differential equation

$g_t$

to the differential equation

are absolutely continuous with a.e. derivative such that

![]() $\mathbb{E}\big| g^{\prime}_t(\mathcal{N})\big| < \infty$

(see [Reference Chen, Goldstein and Shao4]). Then,

$\mathbb{E}\big| g^{\prime}_t(\mathcal{N})\big| < \infty$

(see [Reference Chen, Goldstein and Shao4]). Then,

Further properties of the solutions

![]() $g_t$

allow for upper bounds on

$g_t$

allow for upper bounds on

![]() $\mathbb{E}\big[ g^{\prime}_t (W) - W g_t(W)\big]$

using difference operators associated with W that were introduced in [Reference Chatterjee2] (see [Reference Lachièze-Rey and Peccati14]). This is called the generalized perturbative approach in [Reference Chatterjee3], and we describe it next. First, we recall the perturbations used to bound the right-hand side of (1) in [Reference Chatterjee2, Reference Lachièze-Rey and Peccati14]. Let

$\mathbb{E}\big[ g^{\prime}_t (W) - W g_t(W)\big]$

using difference operators associated with W that were introduced in [Reference Chatterjee2] (see [Reference Lachièze-Rey and Peccati14]). This is called the generalized perturbative approach in [Reference Chatterjee3], and we describe it next. First, we recall the perturbations used to bound the right-hand side of (1) in [Reference Chatterjee2, Reference Lachièze-Rey and Peccati14]. Let

![]() $X^{\prime} = \big(X^{\prime}_1, \ldots, X^{\prime}_n\big)$

be an independent copy of X, and let

$X^{\prime} = \big(X^{\prime}_1, \ldots, X^{\prime}_n\big)$

be an independent copy of X, and let

![]() $W^{\prime} = f(X^{\prime})$

. Then (W, W

′) is an exchangeable pair, since it has the same joint distribution as (W

′, W). A perturbation

$W^{\prime} = f(X^{\prime})$

. Then (W, W

′) is an exchangeable pair, since it has the same joint distribution as (W

′, W). A perturbation

![]() $W^A = f^A(X) \,{:}\,{\raise-1.5pt{=}}\, f\big(X^A\big)$

of W is defined through the change

$W^A = f^A(X) \,{:}\,{\raise-1.5pt{=}}\, f\big(X^A\big)$

of W is defined through the change

![]() $X^A$

of X as follows:

$X^A$

of X as follows:

\begin{align}\notag X_i^{A} =\left\{ \begin{array}{l@{\quad}l} X^{\prime}_i & \mbox{if } i \in A, \\[5pt] X_i & \mbox{if } i \notin A, \end{array}\right.\end{align}

\begin{align}\notag X_i^{A} =\left\{ \begin{array}{l@{\quad}l} X^{\prime}_i & \mbox{if } i \in A, \\[5pt] X_i & \mbox{if } i \notin A, \end{array}\right.\end{align}

for any

![]() $A \subseteq [n] \,{:}\,{\raise-1.5pt{=}}\, \{1, \ldots, n\}$

, including

$A \subseteq [n] \,{:}\,{\raise-1.5pt{=}}\, \{1, \ldots, n\}$

, including

![]() $A = \emptyset$

. With these definitions, still following [Reference Chatterjee2], difference operators are defined for any

$A = \emptyset$

. With these definitions, still following [Reference Chatterjee2], difference operators are defined for any

![]() $\emptyset \subseteq A \subseteq [n]$

and

$\emptyset \subseteq A \subseteq [n]$

and

![]() $i \notin A$

, as follows:

$i \notin A$

, as follows:

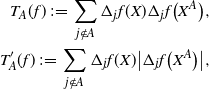

Moreover, set

\begin{align}\notag T_A(f) \,{:}\,{\raise-1.5pt{=}}\, \sum_{j \notin A} \Delta_j f(X) \Delta_j f\big(X^A\big), \\\notag T^{\prime}_A (f) \,{:}\,{\raise-1.5pt{=}}\, \sum_{j \notin A} \Delta_j f(X) \big|\Delta_j f\big(X^A\big)\big|,\end{align}

\begin{align}\notag T_A(f) \,{:}\,{\raise-1.5pt{=}}\, \sum_{j \notin A} \Delta_j f(X) \Delta_j f\big(X^A\big), \\\notag T^{\prime}_A (f) \,{:}\,{\raise-1.5pt{=}}\, \sum_{j \notin A} \Delta_j f(X) \big|\Delta_j f\big(X^A\big)\big|,\end{align}

and for

![]() $k_{n, A} = 1 / \Big(\begin{array}{c}n\\|A|\end{array}\Big) (n - |A|)$

, set

$k_{n, A} = 1 / \Big(\begin{array}{c}n\\|A|\end{array}\Big) (n - |A|)$

, set

\begin{align}\notag T_n(f) \,{:}\,{\raise-1.5pt{=}}\, \sum_{\emptyset \subseteq A \subsetneq [n] } k_{n, A} T_A(f), \\\notag T^{\prime}_n(f) \,{:}\,{\raise-1.5pt{=}}\, \sum_{\emptyset \subseteq A \subsetneq [n]} k_{n, A} T^{\prime}_A (f).\end{align}

\begin{align}\notag T_n(f) \,{:}\,{\raise-1.5pt{=}}\, \sum_{\emptyset \subseteq A \subsetneq [n] } k_{n, A} T_A(f), \\\notag T^{\prime}_n(f) \,{:}\,{\raise-1.5pt{=}}\, \sum_{\emptyset \subseteq A \subsetneq [n]} k_{n, A} T^{\prime}_A (f).\end{align}

Now, for

![]() $W = f(X_1, \ldots, X_n)$

such that

$W = f(X_1, \ldots, X_n)$

such that

![]() $\mathbb{E} [W ]= 0$

,

$\mathbb{E} [W ]= 0$

,

![]() $0 < \sigma^2 = \mathbb{E} \big[W^2\big] < \infty$

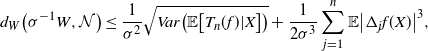

, and assuming all the expectations below are finite, [Reference Chatterjee2, Theorem 2.2] gives the bound

$0 < \sigma^2 = \mathbb{E} \big[W^2\big] < \infty$

, and assuming all the expectations below are finite, [Reference Chatterjee2, Theorem 2.2] gives the bound

\begin{align} d_W\big(\sigma^{-1} W, \mathcal{N}\big) \leq \frac{1}{\sigma^2} \sqrt{Var \big( \mathbb{E}\big[ T_n(f) |X \big] \big) } + \frac{1}{2 \sigma^3} \sum_{j = 1}^n \mathbb{E} \big| \Delta_j f(X)\big|^3,\end{align}

\begin{align} d_W\big(\sigma^{-1} W, \mathcal{N}\big) \leq \frac{1}{\sigma^2} \sqrt{Var \big( \mathbb{E}\big[ T_n(f) |X \big] \big) } + \frac{1}{2 \sigma^3} \sum_{j = 1}^n \mathbb{E} \big| \Delta_j f(X)\big|^3,\end{align}

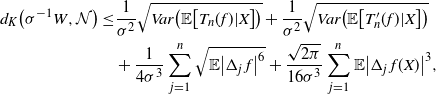

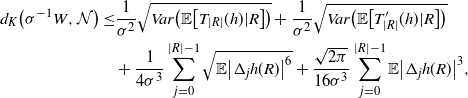

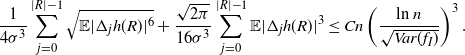

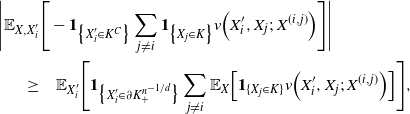

while [Reference Lachièze-Rey and Peccati14, Theorem 4.2] yields

\begin{align}\notag d_K\big(\sigma^{-1} W, \mathcal{N}\big) \leq & \frac{1}{\sigma^2} \sqrt{Var\big( \mathbb{E} \big[ T_n(f) | X\big]\big)} + \frac{1}{\sigma^2} \sqrt{Var\big( \mathbb{E} \big[ T^{\prime}_n (f) | X\big]\big)} \\ & + \frac{1}{4 \sigma^3} \sum_{j = 1}^n \sqrt{\mathbb{E} \big| \Delta_j\, f\big|^6} + \frac{\sqrt{2 \pi} } {16 \sigma^3} \sum_{j = 1}^n \mathbb{E} \big|\Delta_j\, f(X)\big|^3,\end{align}

\begin{align}\notag d_K\big(\sigma^{-1} W, \mathcal{N}\big) \leq & \frac{1}{\sigma^2} \sqrt{Var\big( \mathbb{E} \big[ T_n(f) | X\big]\big)} + \frac{1}{\sigma^2} \sqrt{Var\big( \mathbb{E} \big[ T^{\prime}_n (f) | X\big]\big)} \\ & + \frac{1}{4 \sigma^3} \sum_{j = 1}^n \sqrt{\mathbb{E} \big| \Delta_j\, f\big|^6} + \frac{\sqrt{2 \pi} } {16 \sigma^3} \sum_{j = 1}^n \mathbb{E} \big|\Delta_j\, f(X)\big|^3,\end{align}

where in both cases

![]() $\mathcal{N}$

is now a standard normal random variable.

$\mathcal{N}$

is now a standard normal random variable.

Our main abstract result generalizes (2) and (3) to the case when X is generated by a hidden Markov model. It is as follows.

Proposition 2.1. Let

![]() $(Z, X)$

be a hidden Markov model with Z an aperiodic time-homogeneous and irreducible Markov chain with finite state space

$(Z, X)$

be a hidden Markov model with Z an aperiodic time-homogeneous and irreducible Markov chain with finite state space

![]() $\mathcal{S}$

, and X taking values in a non-empty finite

$\mathcal{S}$

, and X taking values in a non-empty finite

![]() $\mathcal{A}$

. Let

$\mathcal{A}$

. Let

![]() $W \,{:}\,{\raise-1.5pt{=}}\, f(X_1, \ldots, X_n)$

with

$W \,{:}\,{\raise-1.5pt{=}}\, f(X_1, \ldots, X_n)$

with

![]() $\mathbb{E}[W] = 0$

and

$\mathbb{E}[W] = 0$

and

![]() $0 < \sigma^2 = \mathbb{E}\big[W^2\big] < \infty$

. Then there exist a finite sequence of independent random variables

$0 < \sigma^2 = \mathbb{E}\big[W^2\big] < \infty$

. Then there exist a finite sequence of independent random variables

![]() $R = \big(R_0, R_1, \ldots, R_{|\mathcal{S}|(n-1)}\big)$

, with

$R = \big(R_0, R_1, \ldots, R_{|\mathcal{S}|(n-1)}\big)$

, with

![]() $R_i$

taking values in

$R_i$

taking values in

![]() $\mathcal{S} \times \mathcal{A}$

for

$\mathcal{S} \times \mathcal{A}$

for

![]() $i = 0, \ldots, |\mathcal{S}|(n-1)$

, and a measurable function

$i = 0, \ldots, |\mathcal{S}|(n-1)$

, and a measurable function

![]() $h\,:\, (\mathcal{S} \times \mathcal{A})^{|\mathcal{S}|(n-1)+1} \longrightarrow \mathbb{R}$

such that

$h\,:\, (\mathcal{S} \times \mathcal{A})^{|\mathcal{S}|(n-1)+1} \longrightarrow \mathbb{R}$

such that

![]() $h\big(R_0, \ldots, R_{|\mathcal{S}| (n-1)} \big)$

and

$h\big(R_0, \ldots, R_{|\mathcal{S}| (n-1)} \big)$

and

![]() $f(X_1, \ldots, X_n)$

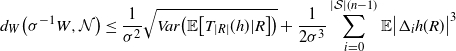

are identically distributed. Therefore,

$f(X_1, \ldots, X_n)$

are identically distributed. Therefore,

\begin{align} d_W\big(\sigma^{-1} W, \mathcal{N} \big) \leq \frac{1}{\sigma^2} \sqrt{Var\big( \mathbb{E}\big[T_{|R|}(h) |R \big] \big)} + \frac{1}{2 \sigma^3} \sum_{i = 0}^{|\mathcal{S}|(n-1)} \mathbb{E}\big| \Delta_i h(R)\big|^3\end{align}

\begin{align} d_W\big(\sigma^{-1} W, \mathcal{N} \big) \leq \frac{1}{\sigma^2} \sqrt{Var\big( \mathbb{E}\big[T_{|R|}(h) |R \big] \big)} + \frac{1}{2 \sigma^3} \sum_{i = 0}^{|\mathcal{S}|(n-1)} \mathbb{E}\big| \Delta_i h(R)\big|^3\end{align}

and

\begin{align}\notag d_K \big(\sigma^{-1} W, \mathcal{N}\big) \leq & \frac{1}{\sigma^2} \sqrt{Var\big( \mathbb{E} \big[ T_{|R|}(h) | R\big]\big)} + \frac{1}{\sigma^2} \sqrt{Var\big( \mathbb{E} \big[ T^{\prime}_{|R|} (h) | R\big]\big)} \\ & + \frac{1}{4 \sigma^3} \sum_{j = 0}^{|R| - 1} \sqrt{\mathbb{E} \big| \Delta_j h(R)\big|^6} + \frac{\sqrt{2 \pi} } {16 \sigma^3} \sum_{j = 0}^{|R| - 1} \mathbb{E} \big|\Delta_j h(R)\big|^3,\end{align}

\begin{align}\notag d_K \big(\sigma^{-1} W, \mathcal{N}\big) \leq & \frac{1}{\sigma^2} \sqrt{Var\big( \mathbb{E} \big[ T_{|R|}(h) | R\big]\big)} + \frac{1}{\sigma^2} \sqrt{Var\big( \mathbb{E} \big[ T^{\prime}_{|R|} (h) | R\big]\big)} \\ & + \frac{1}{4 \sigma^3} \sum_{j = 0}^{|R| - 1} \sqrt{\mathbb{E} \big| \Delta_j h(R)\big|^6} + \frac{\sqrt{2 \pi} } {16 \sigma^3} \sum_{j = 0}^{|R| - 1} \mathbb{E} \big|\Delta_j h(R)\big|^3,\end{align}

where

![]() $\mathcal{N}$

is a standard normal random variable.

$\mathcal{N}$

is a standard normal random variable.

At first glance the above results might appear to be simple corollaries to (2) and (3). Indeed, as is well known, every Markov chain (in a Polish space) admits a representation via i.i.d. random variables

![]() $U_1, \ldots, U_n$

, uniformly distributed on (0, 1), and the inverse distribution function. Therefore,

$U_1, \ldots, U_n$

, uniformly distributed on (0, 1), and the inverse distribution function. Therefore,

![]() $f(X_1, \ldots, X_n) \overset{d}{=} h(U_1, \ldots, U_n)$

, for some function h, where, as usual,

$f(X_1, \ldots, X_n) \overset{d}{=} h(U_1, \ldots, U_n)$

, for some function h, where, as usual,

![]() $\overset{d}{=}$

indicates equality in distribution. However, providing quantitative estimates for

$\overset{d}{=}$

indicates equality in distribution. However, providing quantitative estimates for

![]() $\mathbb{E} |\Delta_j h(U_1, \ldots, U_n)|$

via f seems to be out of reach, since passing from f to h involves the ‘unknown’ inverse distribution function. For this reason, we develop for our analysis a more amenable, although more restrictive, choice of i.i.d. random variables, which is described intuitively in the next paragraph and then again in greater detail in Section 2.1.

$\mathbb{E} |\Delta_j h(U_1, \ldots, U_n)|$

via f seems to be out of reach, since passing from f to h involves the ‘unknown’ inverse distribution function. For this reason, we develop for our analysis a more amenable, although more restrictive, choice of i.i.d. random variables, which is described intuitively in the next paragraph and then again in greater detail in Section 2.1.

Consider

![]() $R = \big(R_0, \ldots, R_{|\mathcal{S}|(n-1)}\big)$

as stacks of independent random variables on the

$R = \big(R_0, \ldots, R_{|\mathcal{S}|(n-1)}\big)$

as stacks of independent random variables on the

![]() $|\mathcal{S}|$

possible states of the hidden chain that determine the next step in the process, with

$|\mathcal{S}|$

possible states of the hidden chain that determine the next step in the process, with

![]() $R_0$

specifying the initial state. Each

$R_0$

specifying the initial state. Each

![]() $R_i$

takes values in

$R_i$

takes values in

![]() $\mathcal{S} \times \mathcal{A}$

and is distributed according to the transition probability from the present hidden state. Then, one has

$\mathcal{S} \times \mathcal{A}$

and is distributed according to the transition probability from the present hidden state. Then, one has

![]() $f\big(X_1, \ldots , X_n\big) \overset{d}{=} h \big(R_0, \ldots , R_{|\mathcal{S}|(n-1)}\big)$

, for

$f\big(X_1, \ldots , X_n\big) \overset{d}{=} h \big(R_0, \ldots , R_{|\mathcal{S}|(n-1)}\big)$

, for

![]() $h = f \circ \gamma$

, where the function

$h = f \circ \gamma$

, where the function

![]() $\gamma$

translates between R and X. This construction is carried out in more detail in the next section. Further note that when

$\gamma$

translates between R and X. This construction is carried out in more detail in the next section. Further note that when

![]() $(X_i)_{i \geq 1}$

is a sequence of independent random variables, the hidden chain in the model consists of a single state, and then the function

$(X_i)_{i \geq 1}$

is a sequence of independent random variables, the hidden chain in the model consists of a single state, and then the function

![]() $\gamma$

is the identity function and so

$\gamma$

is the identity function and so

![]() $h = f$

.

$h = f$

.

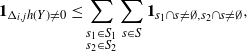

In order for Proposition 2.1 to be meaningful, a further quantitative study of the terms in the upper bounds is necessary. It turns out that the variance terms determine the order of decay; see Remark 5.2. Nevertheless, we obtain additional quantitative estimates for all the terms involved; the proofs are presented in Section 5.

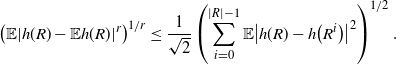

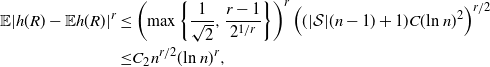

Proposition 2.2. With the notation as above, let f be Lipschitz with respect to the Hamming distance, i.e., let

for every

![]() $x, y \in \mathcal{A}^n$

and where

$x, y \in \mathcal{A}^n$

and where

![]() $c > 0$

. Then, for any

$c > 0$

. Then, for any

![]() $r > 0$

,

$r > 0$

,

for n large enough and

![]() $C_1, C_2 > 0$

depending on r and the parameters of the model.

$C_1, C_2 > 0$

depending on r and the parameters of the model.

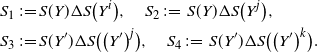

Let R

′ and R

′′ be independent copies of R, and let

![]() $\widetilde{\mathbf{R}}$

be the random set of recombinations of R, R

′, and R

′′. The set

$\widetilde{\mathbf{R}}$

be the random set of recombinations of R, R

′, and R

′′. The set

![]() $\widetilde{\mathbf{R}}$

consists of

$\widetilde{\mathbf{R}}$

consists of

![]() $3^{|R|}$

random vectors of size

$3^{|R|}$

random vectors of size

![]() $|R|$

:

$|R|$

:

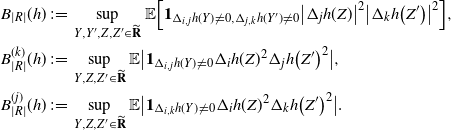

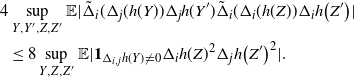

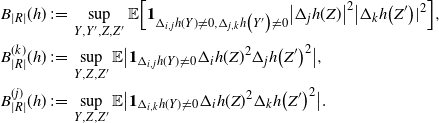

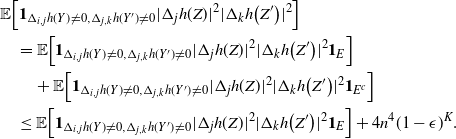

Let

\begin{align}\begin{split} B_{|R|}(h) \,{:}\,{\raise-1.5pt{=}}\, & \sup_{Y, Y^{\prime}, Z, Z^{\prime} \in \widetilde{\mathbf{R}}} \mathbb{E} \Big[\mathbf{1}_{\Delta_{i,j} h(Y) \neq 0, \Delta_{j,k} h(Y^{\prime}) \neq 0} \big|\Delta_j h(Z)\big|^2 \big|\Delta_k h\big(Z^{\prime}\big)\big|^2\Big],\\ B_{|R|}^{(k)}(h) \,{:}\,{\raise-1.5pt{=}}\, & \sup_{Y, Z, Z^{\prime} \in \widetilde{\mathbf{R}} } \mathbb{E} \big| \mathbf{1}_{\Delta_{i,j} h(Y) \neq 0} \Delta_i h(Z)^2 \Delta_j h\big(Z^{\prime}\big)^2\big|, \\ B_{|R|}^{(j)} (h) \,{:}\,{\raise-1.5pt{=}}\, & \sup_{Y, Z, Z^{\prime} \in \widetilde{\mathbf{R}}} \mathbb{E} \big| \mathbf{1}_{\Delta_{i,k} h(Y) \neq 0} \Delta_i h(Z)^2 \Delta_k h\big(Z^{\prime}\big)^2\big|.\end{split}\end{align}

\begin{align}\begin{split} B_{|R|}(h) \,{:}\,{\raise-1.5pt{=}}\, & \sup_{Y, Y^{\prime}, Z, Z^{\prime} \in \widetilde{\mathbf{R}}} \mathbb{E} \Big[\mathbf{1}_{\Delta_{i,j} h(Y) \neq 0, \Delta_{j,k} h(Y^{\prime}) \neq 0} \big|\Delta_j h(Z)\big|^2 \big|\Delta_k h\big(Z^{\prime}\big)\big|^2\Big],\\ B_{|R|}^{(k)}(h) \,{:}\,{\raise-1.5pt{=}}\, & \sup_{Y, Z, Z^{\prime} \in \widetilde{\mathbf{R}} } \mathbb{E} \big| \mathbf{1}_{\Delta_{i,j} h(Y) \neq 0} \Delta_i h(Z)^2 \Delta_j h\big(Z^{\prime}\big)^2\big|, \\ B_{|R|}^{(j)} (h) \,{:}\,{\raise-1.5pt{=}}\, & \sup_{Y, Z, Z^{\prime} \in \widetilde{\mathbf{R}}} \mathbb{E} \big| \mathbf{1}_{\Delta_{i,k} h(Y) \neq 0} \Delta_i h(Z)^2 \Delta_k h\big(Z^{\prime}\big)^2\big|.\end{split}\end{align}

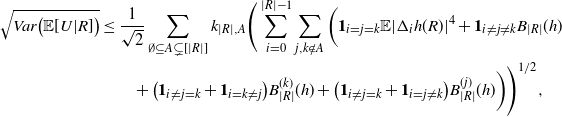

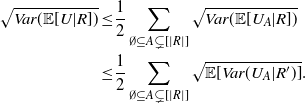

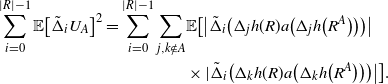

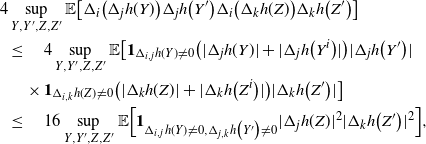

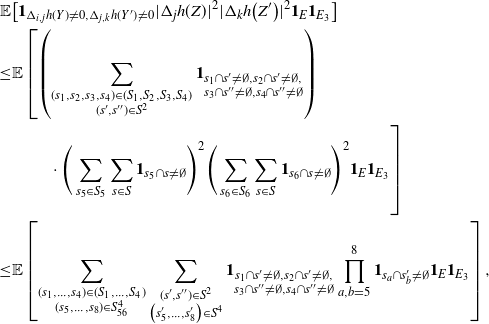

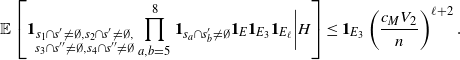

Then the following general bound for the variance terms holds.

Proposition 2.3. With the notation as above and for

![]() $U = T_{|R|}(h)$

or

$U = T_{|R|}(h)$

or

![]() $U = T^{\prime}_{|R|}(h)$

, we have

$U = T^{\prime}_{|R|}(h)$

, we have

\begin{align}\notag & \sqrt{Var\big(\mathbb{E}[U|R]\big)} \leq \frac{1}{\sqrt{2}} \sum_{\emptyset \subseteq A \subsetneq[|R|]} k_{|R|, A} \Bigg( \sum_{i = 0}^{|R| -1} \sum_{j, k \notin A}\bigg( \mathbf{1}_{i = j = k} \mathbb{E} |\Delta_i h(R)|^4 + \mathbf{1}_{i \neq j \neq k} B_{|R|}(h) \\\notag & \quad \quad \quad \quad \quad \quad \quad \quad + \big(\mathbf{1}_{i \neq j = k} + \mathbf{1}_{i = k \neq j} \big) B_{|R|}^{(k)}(h) + \big(\mathbf{1}_{i \neq j = k} + \mathbf{1}_{i = j \neq k} \big) B_{|R|}^{(j)}(h) \bigg) \Bigg)^{1/2},\end{align}

\begin{align}\notag & \sqrt{Var\big(\mathbb{E}[U|R]\big)} \leq \frac{1}{\sqrt{2}} \sum_{\emptyset \subseteq A \subsetneq[|R|]} k_{|R|, A} \Bigg( \sum_{i = 0}^{|R| -1} \sum_{j, k \notin A}\bigg( \mathbf{1}_{i = j = k} \mathbb{E} |\Delta_i h(R)|^4 + \mathbf{1}_{i \neq j \neq k} B_{|R|}(h) \\\notag & \quad \quad \quad \quad \quad \quad \quad \quad + \big(\mathbf{1}_{i \neq j = k} + \mathbf{1}_{i = k \neq j} \big) B_{|R|}^{(k)}(h) + \big(\mathbf{1}_{i \neq j = k} + \mathbf{1}_{i = j \neq k} \big) B_{|R|}^{(j)}(h) \bigg) \Bigg)^{1/2},\end{align}

where

![]() $[|R|] \,{:}\,{\raise-1.5pt{=}}\, \{1, \ldots, |R| \}$

.

$[|R|] \,{:}\,{\raise-1.5pt{=}}\, \{1, \ldots, |R| \}$

.

Note that in Proposition 2.3, the underlying function f is not assumed to be Lipschitz. Moreover, the function h is not symmetric, and therefore the expression above cannot be simplified further, in contrast to the similar results in [Reference Lachièze-Rey and Peccati14]. The proofs of Propositions 2.2 and 2.3 are technical and are delayed to Sections 5.1 and 5.2 respectively.

2.1. Additional details on the construction of R

Let (Z, X) be a hidden Markov model with Z an aperiodic time-homogeneous and irreducible Markov chain on a finite state space

![]() $\mathcal{S}$

, and with X taking values in an alphabet

$\mathcal{S}$

, and with X taking values in an alphabet

![]() $\mathcal{A}$

. Let P be the transition matrix of the hidden chain, and let Q be the

$\mathcal{A}$

. Let P be the transition matrix of the hidden chain, and let Q be the

![]() $|\mathcal{S}| \times |\mathcal{A}|$

probability matrix for the observations; i.e.,

$|\mathcal{S}| \times |\mathcal{A}|$

probability matrix for the observations; i.e.,

![]() $Q_{ij}$

is the probability of seeing output j if the latent chain is in state i. Let the initial distribution of the hidden chain be

$Q_{ij}$

is the probability of seeing output j if the latent chain is in state i. Let the initial distribution of the hidden chain be

![]() $\mu$

. Then

$\mu$

. Then

Next we introduce a sequence of independent random variables

![]() $R_0, \ldots, R_{|\mathcal{S}|(n-1)}$

taking values in

$R_0, \ldots, R_{|\mathcal{S}|(n-1)}$

taking values in

![]() $\mathcal{S} \times \mathcal{A}$

and a function

$\mathcal{S} \times \mathcal{A}$

and a function

![]() $\gamma$

such that

$\gamma$

such that

![]() $\gamma\big(R_0, \ldots, R_{|\mathcal{S}| (n-1) }\big) = \big(Z_1, \ldots, Z_n;\, X_1, \ldots , X_n\big)$

. For any

$\gamma\big(R_0, \ldots, R_{|\mathcal{S}| (n-1) }\big) = \big(Z_1, \ldots, Z_n;\, X_1, \ldots , X_n\big)$

. For any

![]() $s, s^{\prime} \in \mathcal{S}$

,

$s, s^{\prime} \in \mathcal{S}$

,

![]() $x \in \mathcal{A}$

, and

$x \in \mathcal{A}$

, and

![]() $i \in \{0, \ldots, n-1\}$

, let

$i \in \{0, \ldots, n-1\}$

, let

The random variables

![]() $R_i$

are well defined since

$R_i$

are well defined since

![]() $\sum_x Q_{s, x} = 1$

for any

$\sum_x Q_{s, x} = 1$

for any

![]() $s \in \mathcal{S}$

and

$s \in \mathcal{S}$

and

![]() $\sum_s P_{s^{\prime}, s} = \sum_s \mu(s) = 1$

for any

$\sum_s P_{s^{\prime}, s} = \sum_s \mu(s) = 1$

for any

![]() $s^{\prime} \in \mathcal{S}$

. One can think of the variables

$s^{\prime} \in \mathcal{S}$

. One can think of the variables

![]() $R_i$

as a set of instructions indicating where the hidden Markov model goes next. The function

$R_i$

as a set of instructions indicating where the hidden Markov model goes next. The function

![]() $\gamma$

reconstructs the realization

$\gamma$

reconstructs the realization

![]() $(Z_i, X_i)_{i \geq 1}$

sequentially from the sequence

$(Z_i, X_i)_{i \geq 1}$

sequentially from the sequence

![]() $(R_i)_{i \geq 0}$

. In particular,

$(R_i)_{i \geq 0}$

. In particular,

![]() $\gamma$

captures the following relations:

$\gamma$

captures the following relations:

One can also think of the sequence

![]() $(R_i)_{i \geq 0}$

as

$(R_i)_{i \geq 0}$

as

![]() $|\mathcal{S}|$

stacks of random variables on the

$|\mathcal{S}|$

stacks of random variables on the

![]() $|\mathcal{S}|$

possible states of the latent Markov chain, and the values being rules for the next step in the model. Note that only one variable on the ith level of the stack will be used to determine the

$|\mathcal{S}|$

possible states of the latent Markov chain, and the values being rules for the next step in the model. Note that only one variable on the ith level of the stack will be used to determine the

![]() $(i+1)$

th hidden and observed pair. Furthermore, the distribution of the random variables

$(i+1)$

th hidden and observed pair. Furthermore, the distribution of the random variables

![]() $R_i$

for

$R_i$

for

![]() $i \geq 1$

encodes the transition and output probabilities in the P and Q matrices of the original model.

$i \geq 1$

encodes the transition and output probabilities in the P and Q matrices of the original model.

Thus one can write

![]() $f\big(X_1, \ldots, X_n\big) = h\big(R_0, \ldots , R_{|\mathcal{S}| (n-1) }\big)$

, for

$f\big(X_1, \ldots, X_n\big) = h\big(R_0, \ldots , R_{|\mathcal{S}| (n-1) }\big)$

, for

![]() $h \,{:}\,{\raise-1.5pt{=}}\, f \circ \gamma$

, where the function

$h \,{:}\,{\raise-1.5pt{=}}\, f \circ \gamma$

, where the function

![]() $\gamma$

does the translation from

$\gamma$

does the translation from

![]() $(R_i)_{i \geq 0}$

to

$(R_i)_{i \geq 0}$

to

![]() $\big(Z_i, X_i\big)_{i \geq 1}$

as described above.

$\big(Z_i, X_i\big)_{i \geq 1}$

as described above.

Let

![]() $R^{\prime} = \big(R^{\prime}_0, \ldots, R_{|\mathcal{S}| (n-1)}^{\prime}\big)$

be an independent copy of R. Let

$R^{\prime} = \big(R^{\prime}_0, \ldots, R_{|\mathcal{S}| (n-1)}^{\prime}\big)$

be an independent copy of R. Let

![]() $A \subseteq \{0, 1, \ldots, |\mathcal{S}|(n-1)\}$

, and let the change

$A \subseteq \{0, 1, \ldots, |\mathcal{S}|(n-1)\}$

, and let the change

![]() $R^A$

of R be defined as follows:

$R^A$

of R be defined as follows:

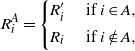

\begin{align} R_i^A = \left\{ \begin{array}{r@{\quad}l} R^{\prime}_i & \mbox { if } i \in A, \\[4pt] R_i & \mbox{ if } i \notin A, \end{array}\right.\end{align}

\begin{align} R_i^A = \left\{ \begin{array}{r@{\quad}l} R^{\prime}_i & \mbox { if } i \in A, \\[4pt] R_i & \mbox{ if } i \notin A, \end{array}\right.\end{align}

where, as before, when

![]() $A = \{j\}$

we write

$A = \{j\}$

we write

![]() $R^j$

instead of

$R^j$

instead of

![]() $R^{\{j\}}$

.

$R^{\{j\}}$

.

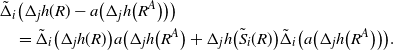

Recall that the ‘discrete derivative’ of h with a perturbation A is

Then (4) and (5) follow from (2) and (3), respectively, since when (Z, X) is a hidden Markov model one writes

where the sequence

![]() $(R_i)_{i \geq 0}$

is a sequence of independent random variables.

$(R_i)_{i \geq 0}$

is a sequence of independent random variables.

Remark 2.1. (i) The idea of using stacks of independent random variables to represent a hidden Markov model is somewhat reminiscent of Wilson’s cycle popping algorithm for generating a random directed spanning tree; see [Reference Wilson17]. The algorithm has also been related to loop-erased random walks in [Reference Gorodezky and Pak9].

(ii) If S consists of a single state, making the hidden chain redundant, there is a single stack of instructions. This corresponds to the independent setting of [Reference Chatterjee2] and [Reference Lachièze-Rey and Peccati14], and then

![]() $\gamma$

is just the identity function.

$\gamma$

is just the identity function.

(iii) The same approach, via the use of instructions, is also applicable when

![]() $\mathcal{A}$

and

$\mathcal{A}$

and

![]() $\mathcal{S}$

are infinite countable. The

$\mathcal{S}$

are infinite countable. The

![]() $Q_{s,x}$

no longer form a finite matrix, but the same definition holds as long as

$Q_{s,x}$

no longer form a finite matrix, but the same definition holds as long as

![]() $\sum_{x \in \mathcal{A}} Q_{s,x} = 1$

for all

$\sum_{x \in \mathcal{A}} Q_{s,x} = 1$

for all

![]() $s \in \mathcal{S}$

. We need a countably infinite number of independent instructions to encode

$s \in \mathcal{S}$

. We need a countably infinite number of independent instructions to encode

![]() $(Z_i, X_i)_{1 \leq i \leq n}$

. In particular, let

$(Z_i, X_i)_{1 \leq i \leq n}$

. In particular, let

![]() $R_0$

and

$R_0$

and

![]() $\big(R_{i, s}\big)_{1 \leq i \leq n, s \in \mathcal{S}}$

be such that

$\big(R_{i, s}\big)_{1 \leq i \leq n, s \in \mathcal{S}}$

be such that

Then the function

![]() $\gamma$

reconstructs

$\gamma$

reconstructs

![]() $\big(Z_i, X_i\big)_{1 \leq i \leq n}$

from

$\big(Z_i, X_i\big)_{1 \leq i \leq n}$

from

![]() $R_0$

and

$R_0$

and

![]() $\big(R_{i,s}\big)_{1 \leq i \leq n, s \in \mathcal{S}}$

via

$\big(R_{i,s}\big)_{1 \leq i \leq n, s \in \mathcal{S}}$

via

(iv) It is possible to obtain bounds on the various terms involved in Proposition 2.2 and Proposition 2.3 in the case when

![]() $|\mathcal{S}|$

is a function of n. Assuming that there exists a general deterministic upper bound

$|\mathcal{S}|$

is a function of n. Assuming that there exists a general deterministic upper bound

![]() $g_r(n)$

on

$g_r(n)$

on

![]() $\mathbb{E} |\Delta_i h|^r$

, one can bound the non-variance terms in Proposition 2.2 by

$\mathbb{E} |\Delta_i h|^r$

, one can bound the non-variance terms in Proposition 2.2 by

![]() $C |\mathcal{S}| \left(\sqrt{ g_6(n) } + g_3(n) \right) /\sigma^3 $

. The variance terms, using Proposition 2.3, will then be bounded by

$C |\mathcal{S}| \left(\sqrt{ g_6(n) } + g_3(n) \right) /\sigma^3 $

. The variance terms, using Proposition 2.3, will then be bounded by

![]() $C \sqrt{ |\mathcal{S}| g_4(n) + |\mathcal{S}|^3 ( A ) } /\sigma^2$

, where A is a complicated expression that depends on the particular problem as well as on

$C \sqrt{ |\mathcal{S}| g_4(n) + |\mathcal{S}|^3 ( A ) } /\sigma^2$

, where A is a complicated expression that depends on the particular problem as well as on

![]() $\mathbb{E}|\Delta_i h|^r$

and

$\mathbb{E}|\Delta_i h|^r$

and

![]() $|\mathcal{S}|$

.

$|\mathcal{S}|$

.

The next crucial part in the analysis is the key result we use everywhere: if a change in an instruction propagates X levels (a random variable), then

![]() $\mathbb{P} (X > K) \leq (1 - \epsilon)^K$

, for some absolute

$\mathbb{P} (X > K) \leq (1 - \epsilon)^K$

, for some absolute

![]() $0 < \epsilon < 1$

. If

$0 < \epsilon < 1$

. If

![]() $|\mathcal{S}|$

is finite, this holds under some standard assumptions on the model. If

$|\mathcal{S}|$

is finite, this holds under some standard assumptions on the model. If

![]() $|\mathcal{S}|$

grows with n, then under some minor additional restrictions in the hidden Markov model, we have

$|\mathcal{S}|$

grows with n, then under some minor additional restrictions in the hidden Markov model, we have

![]() $\mathbb{P}(X > K) \leq (1 - 1/|\mathcal{S}|)^K$

. Then K can be chosen to be at most a small power of n (we take

$\mathbb{P}(X > K) \leq (1 - 1/|\mathcal{S}|)^K$

. Then K can be chosen to be at most a small power of n (we take

![]() $K = \text{ln}\ n$

in the finite case, which explains the logarithmic factors in our bounds). For meaningful bounds,

$K = \text{ln}\ n$

in the finite case, which explains the logarithmic factors in our bounds). For meaningful bounds,

![]() $|\mathcal{S}|$

could grow at most like

$|\mathcal{S}|$

could grow at most like

![]() $\text{ln}\ n$

. However, much more fine-tuning is necessary in the actual proof.

$\text{ln}\ n$

. However, much more fine-tuning is necessary in the actual proof.

For some recent results (different from ours) on normal approximation for functions of general Markov chains we refer the reader to [Reference Chen, Shao and Xu5].

3. Covering process

Although our framework was initially motivated by [Reference Houdré and Kerchev11] and the problem of finding a normal approximation result for the length of the longest common subsequences in dependent random words, some applications to stochastic geometry are presented below. Our methodology can be applied to other related settings, in particular to the variant of the occupancy problem introduced in the recent article [Reference Grabchak, Kelbert and Paris10] (see Remark 4.1).

Let

![]() $(K, \mathcal{K})$

be the space of compact subsets of

$(K, \mathcal{K})$

be the space of compact subsets of

![]() $\mathbb{R}^d$

, endowed with the hit-and-miss topology. Let

$\mathbb{R}^d$

, endowed with the hit-and-miss topology. Let

![]() $E_n$

be a cube of volume n, and let

$E_n$

be a cube of volume n, and let

![]() $C_1, \ldots, C_n$

be random variables in

$C_1, \ldots, C_n$

be random variables in

![]() $E_n$

, called germs. In the i.i.d. setting of [Reference Lachièze-Rey and Peccati14], each

$E_n$

, called germs. In the i.i.d. setting of [Reference Lachièze-Rey and Peccati14], each

![]() $C_i$

is sampled uniformly and independently in

$C_i$

is sampled uniformly and independently in

![]() $E_n$

; i.e., if

$E_n$

; i.e., if

![]() $T \subset E_n$

with measure

$T \subset E_n$

with measure

![]() $|T|$

, then

$|T|$

, then

for all

![]() $i \in \{1, \ldots, n\}$

.

$i \in \{1, \ldots, n\}$

.

Here, we consider

![]() $C_1, \ldots, C_n$

, generated by a hidden Markov model in the following way. Let

$C_1, \ldots, C_n$

, generated by a hidden Markov model in the following way. Let

![]() $Z_1, \ldots, Z_n$

be an aperiodic irreducible Markov chain on a finite state space

$Z_1, \ldots, Z_n$

be an aperiodic irreducible Markov chain on a finite state space

![]() $\mathcal{S}$

. Each

$\mathcal{S}$

. Each

![]() $s \in \mathcal{S}$

is associated with a measure

$s \in \mathcal{S}$

is associated with a measure

![]() $m_s$

on

$m_s$

on

![]() $E_n$

. Then for each measurable

$E_n$

. Then for each measurable

![]() $T \subseteq E_n$

,

$T \subseteq E_n$

,

Assume that there are constants

![]() $0 < c_m \leq c_M$

such that for any

$0 < c_m \leq c_M$

such that for any

![]() $s \in \mathcal{S}$

and measurable

$s \in \mathcal{S}$

and measurable

![]() $T \subseteq E_n$

,

$T \subseteq E_n$

,

Note that

![]() $c_m = c_M = 1$

recovers the setting of [Reference Lachièze-Rey and Peccati14].

$c_m = c_M = 1$

recovers the setting of [Reference Lachièze-Rey and Peccati14].

Let

![]() $K_1, \ldots, K_n$

be compact sets (grains) with

$K_1, \ldots, K_n$

be compact sets (grains) with

![]() $Vol (K_i) \in (V_1, V_2)$

(absolute constants) for

$Vol (K_i) \in (V_1, V_2)$

(absolute constants) for

![]() $i = 1,\ldots, n$

. Let

$i = 1,\ldots, n$

. Let

![]() $X_i = C_i + K_i$

for

$X_i = C_i + K_i$

for

![]() $i = 1, \ldots, n$

be the germ–grain process. Consider the closed set formed by the union of the germs translated by the grains

$i = 1, \ldots, n$

be the germ–grain process. Consider the closed set formed by the union of the germs translated by the grains

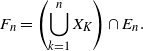

\begin{align}\notag F_n = \left( \bigcup_{k =1}^n X_K\right) \cap E_n.\end{align}

\begin{align}\notag F_n = \left( \bigcup_{k =1}^n X_K\right) \cap E_n.\end{align}

We are interested in the volume covered by

![]() $F_n$

,

$F_n$

,

and the number of isolated grains,

Theorem 3.1. Let

![]() $\mathcal{N}$

be a standard normal random variable. Then, for all

$\mathcal{N}$

be a standard normal random variable. Then, for all

![]() $n \in \mathbb{N}$

,

$n \in \mathbb{N}$

,

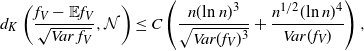

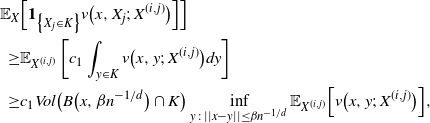

\begin{align} d_K\left(\frac{f_V - \mathbb{E} f_V}{\sqrt{Var\, f_V}} , \mathcal{N} \right) \leq C \left( \frac{ n(\text{ln}\ n)^3} {\sqrt{Var(f_V)^3}} + \frac{ n^{1/2} (\text{ln}\ n)^4}{ Var(f_V)} \right), \end{align}

\begin{align} d_K\left(\frac{f_V - \mathbb{E} f_V}{\sqrt{Var\, f_V}} , \mathcal{N} \right) \leq C \left( \frac{ n(\text{ln}\ n)^3} {\sqrt{Var(f_V)^3}} + \frac{ n^{1/2} (\text{ln}\ n)^4}{ Var(f_V)} \right), \end{align}

\begin{align} d_K\left(\frac{f_I - \mathbb{E} f_I}{\sqrt{Var\, f_I}} , \mathcal{N} \right) \leq C \left( \frac{ n(\text{ln}\ n)^3} {\sqrt{Var(f_I)^3}} + \frac{n^{1/2} (\text{ln}\ n)^4} {Var(f_I)}\right), \end{align}

\begin{align} d_K\left(\frac{f_I - \mathbb{E} f_I}{\sqrt{Var\, f_I}} , \mathcal{N} \right) \leq C \left( \frac{ n(\text{ln}\ n)^3} {\sqrt{Var(f_I)^3}} + \frac{n^{1/2} (\text{ln}\ n)^4} {Var(f_I)}\right), \end{align}

for some constant

![]() $C > 0$

independent of n.

$C > 0$

independent of n.

The study of the order of growth of

![]() $Var f_I$

and

$Var f_I$

and

![]() $Var f_V$

is not really part of the scope of the present paper. In the independent case, there are constants

$Var f_V$

is not really part of the scope of the present paper. In the independent case, there are constants

![]() $0 < c_V \leq C_V$

such that

$0 < c_V \leq C_V$

such that

![]() $c_V n \leq Var f_V \leq C_V n$

and

$c_V n \leq Var f_V \leq C_V n$

and

![]() $c_V n \leq Var f_I \leq C_V n $

for n sufficiently large (see [Reference Kendall and Molchanov13, Theorem 4.4]). In our dependent setting, a variance lower bound of order n will thus provide a rate of order

$c_V n \leq Var f_I \leq C_V n $

for n sufficiently large (see [Reference Kendall and Molchanov13, Theorem 4.4]). In our dependent setting, a variance lower bound of order n will thus provide a rate of order

![]() $(\log n)^4/\sqrt{n}$

.

$(\log n)^4/\sqrt{n}$

.

The proofs of the normal approximations for

![]() $f_V$

and

$f_V$

and

![]() $f_I$

are carried out in Sections 3.1 and 3.2. Many of the more technical computations are carried out in Section 5.

$f_I$

are carried out in Sections 3.1 and 3.2. Many of the more technical computations are carried out in Section 5.

3.1. Normal approximation for

$\textbf{\textit{f}}_{\textbf{\textit{V}}}$

$\textbf{\textit{f}}_{\textbf{\textit{V}}}$

Write

![]() $f_V\big(X_1, \ldots, X_n\big) = h\big(R_0, \ldots, R_{|\mathcal{S}|(n-1)}\big)$

for a set of instructions R defined as in Section 2.1. The volume of each grain is bounded by

$f_V\big(X_1, \ldots, X_n\big) = h\big(R_0, \ldots, R_{|\mathcal{S}|(n-1)}\big)$

for a set of instructions R defined as in Section 2.1. The volume of each grain is bounded by

![]() $V_2$

, so

$V_2$

, so

![]() $f_V$

is Lipschitz with respect to the Hamming distance, with constant

$f_V$

is Lipschitz with respect to the Hamming distance, with constant

![]() $V_2$

. Proposition 2.1 holds, and from Proposition 2.2, the non-variance terms in the bounds in Proposition 2.1 are bounded by

$V_2$

. Proposition 2.1 holds, and from Proposition 2.2, the non-variance terms in the bounds in Proposition 2.1 are bounded by

![]() $C (\text{ln}\ n)^3 / \sqrt{n}$

. Here and below, C is a constant, independent of n, which can vary from line to line. Indeed, for instance,

$C (\text{ln}\ n)^3 / \sqrt{n}$

. Here and below, C is a constant, independent of n, which can vary from line to line. Indeed, for instance,

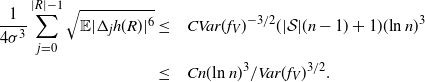

\begin{align}\notag \frac{1}{4 \sigma^3} \sum_{j = 0}^{|R| - 1} \sqrt{ \mathbb{E} |\Delta_j h(R)|^6} \leq & \quad C Var(f_V)^{-3/2} (|\mathcal{S}|(n-1) + 1) (\text{ln}\ n )^3 \\ \leq & \quad C n(\text{ln}\ n)^3 / Var(f_V)^{3/2}.\end{align}

\begin{align}\notag \frac{1}{4 \sigma^3} \sum_{j = 0}^{|R| - 1} \sqrt{ \mathbb{E} |\Delta_j h(R)|^6} \leq & \quad C Var(f_V)^{-3/2} (|\mathcal{S}|(n-1) + 1) (\text{ln}\ n )^3 \\ \leq & \quad C n(\text{ln}\ n)^3 / Var(f_V)^{3/2}.\end{align}

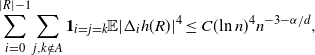

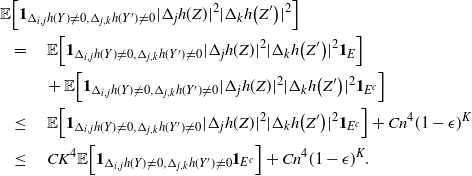

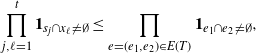

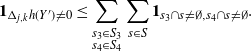

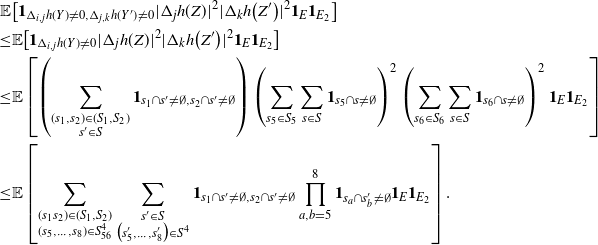

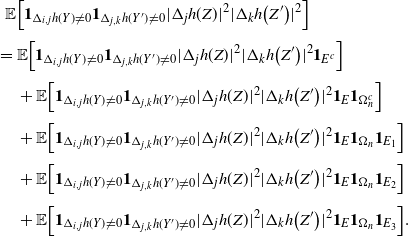

To analyze the bound on the variance terms given by Proposition 2.3, first note that

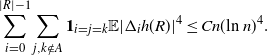

\begin{align}\notag \sum_{i = 0}^{|R| -1} \sum_{j, k \notin A} \mathbf{1}_{i = j = k} \mathbb{E} | \Delta_i h(R)|^4 \leq Cn (\text{ln}\ n)^4,\end{align}

\begin{align}\notag \sum_{i = 0}^{|R| -1} \sum_{j, k \notin A} \mathbf{1}_{i = j = k} \mathbb{E} | \Delta_i h(R)|^4 \leq Cn (\text{ln}\ n)^4,\end{align}

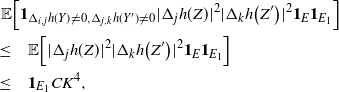

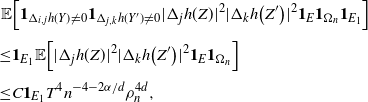

using Proposition 2.2. The other terms are bounded as follows.

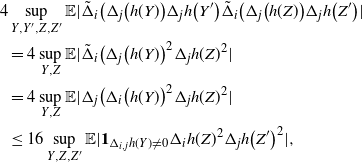

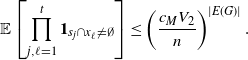

Proposition 3.1. Let

![]() $A \subsetneq [ | R | ] $

, and let

$A \subsetneq [ | R | ] $

, and let

![]() $B_{|R|}(h)$

,

$B_{|R|}(h)$

,

![]() $B_{|R|}^k(h)$

, and

$B_{|R|}^k(h)$

, and

![]() $B_{|R|}^j(h)$

be as in (6). Then

$B_{|R|}^j(h)$

be as in (6). Then

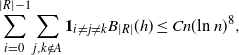

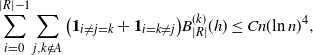

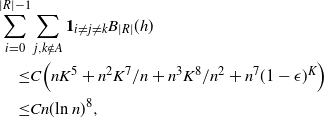

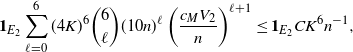

\begin{align} \sum_{i = 0}^{|R| -1} & \sum_{j, k \notin A} \mathbf{1}_{i \neq j \neq k} B_{|R|}(h) \leq Cn (\text{ln}\ n)^8, \qquad\qquad\end{align}

\begin{align} \sum_{i = 0}^{|R| -1} & \sum_{j, k \notin A} \mathbf{1}_{i \neq j \neq k} B_{|R|}(h) \leq Cn (\text{ln}\ n)^8, \qquad\qquad\end{align}

\begin{align}\sum_{i = 0}^{|R| -1} & \sum_{j, k \notin A} \big(\mathbf{1}_{i \neq j = k} + \mathbf{1}_{i = k \neq j} \big) B_{|R|}^{(k)}(h) \leq Cn (\text{ln}\ n)^4, \end{align}

\begin{align}\sum_{i = 0}^{|R| -1} & \sum_{j, k \notin A} \big(\mathbf{1}_{i \neq j = k} + \mathbf{1}_{i = k \neq j} \big) B_{|R|}^{(k)}(h) \leq Cn (\text{ln}\ n)^4, \end{align}

\begin{align} \sum_{i = 0}^{|R| -1} & \sum_{j, k \notin A} \big(\mathbf{1}_{i \neq j = k} + \mathbf{1}_{i = j \neq k} \big) B_{|R|}^{(j)}(h) \leq Cn (\text{ln}\ n)^4, \end{align}

\begin{align} \sum_{i = 0}^{|R| -1} & \sum_{j, k \notin A} \big(\mathbf{1}_{i \neq j = k} + \mathbf{1}_{i = j \neq k} \big) B_{|R|}^{(j)}(h) \leq Cn (\text{ln}\ n)^4, \end{align}

for some constant

![]() $C > 0$

that does not depend on n.

$C > 0$

that does not depend on n.

Proof. See Section 5.3 for the proof of the first bound. The others follow similarly.

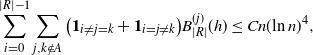

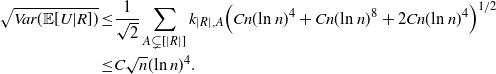

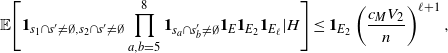

The bound on the variance terms in Proposition 2.3 becomes

\begin{align}\notag \sqrt{Var(\mathbb{E}[U|R])} \leq & \frac{1}{\sqrt{2}} \sum_{A \subsetneq[|R|]} k_{|R|, A} \big( C n (\text{ln}\ n)^4 + Cn (\text{ln}\ n)^8 + 2 C n (\text{ln}\ n)^4 \big)^{1/2}\\ \leq & C \sqrt{n} (\text{ln}\ n)^4.\end{align}

\begin{align}\notag \sqrt{Var(\mathbb{E}[U|R])} \leq & \frac{1}{\sqrt{2}} \sum_{A \subsetneq[|R|]} k_{|R|, A} \big( C n (\text{ln}\ n)^4 + Cn (\text{ln}\ n)^8 + 2 C n (\text{ln}\ n)^4 \big)^{1/2}\\ \leq & C \sqrt{n} (\text{ln}\ n)^4.\end{align}

3.2. Normal approximation for

$\textbf{\textit{f}}_{\textbf{\textit{I}}}$

$\textbf{\textit{f}}_{\textbf{\textit{I}}}$

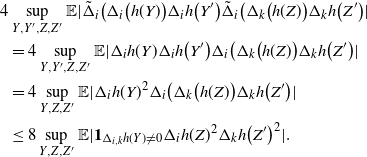

The proof of (9) is more involved since the function

![]() $f_I$

is not Lipschitz. Abusing notation, write

$f_I$

is not Lipschitz. Abusing notation, write

![]() $f_I\big(X_1, \ldots, X_n\big) = h\big(R_0, \ldots, R_{|\mathcal{S}|(n-1)}\big)$

for a set of instructions R as in Section 2.1. Proposition 2.1 holds, and, as in our analysis for

$f_I\big(X_1, \ldots, X_n\big) = h\big(R_0, \ldots, R_{|\mathcal{S}|(n-1)}\big)$

for a set of instructions R as in Section 2.1. Proposition 2.1 holds, and, as in our analysis for

![]() $f_V$

, we proceed by estimating the non-variance terms in the bounds. The following holds.

$f_V$

, we proceed by estimating the non-variance terms in the bounds. The following holds.

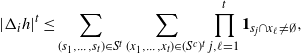

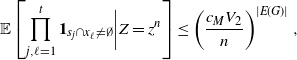

Proposition 3.2. For any

![]() $t = 1, 2, \ldots$

and

$t = 1, 2, \ldots$

and

![]() $i \in \{0, \ldots, |\mathcal{S}|(n-1)\}$

,

$i \in \{0, \ldots, |\mathcal{S}|(n-1)\}$

,

where

![]() $C = C(t) > 0$

.

$C = C(t) > 0$

.

Proof. See Section 5.4. The approach is similar to the one employed for Proposition 2.2 and uses a graph representation.

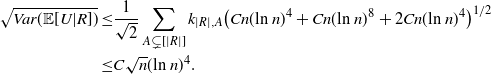

Therefore, for the non-variance term in Proposition 2.1, we have

\begin{align} \frac{1}{4 \sigma^3} \sum_{j = 0}^{|R| - 1} \sqrt{\mathbb{E} | \Delta_j h(R)|^6} + \frac{\sqrt{2 \pi} } {16 \sigma^3} \sum_{j = 0}^{|R| - 1} \mathbb{E} |\Delta_j h(R)|^3\leq C n \left( \frac{\text{ln}\ n }{ \sqrt{ Var(f_I) } }\right)^3.\end{align}

\begin{align} \frac{1}{4 \sigma^3} \sum_{j = 0}^{|R| - 1} \sqrt{\mathbb{E} | \Delta_j h(R)|^6} + \frac{\sqrt{2 \pi} } {16 \sigma^3} \sum_{j = 0}^{|R| - 1} \mathbb{E} |\Delta_j h(R)|^3\leq C n \left( \frac{\text{ln}\ n }{ \sqrt{ Var(f_I) } }\right)^3.\end{align}

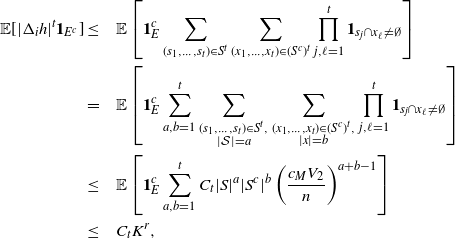

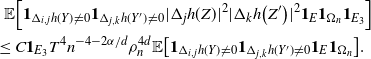

We are left to analyze the bound on the variance terms given by Proposition 2.3. First, note that using Proposition 3.2,

\begin{align}\notag \sum_{i = 0}^{|R| -1} \sum_{j, k \notin A} \mathbf{1}_{i = j = k} \mathbb{E} | \Delta_i h(R)|^4 \leq Cn (\text{ln}\ n)^4.\end{align}

\begin{align}\notag \sum_{i = 0}^{|R| -1} \sum_{j, k \notin A} \mathbf{1}_{i = j = k} \mathbb{E} | \Delta_i h(R)|^4 \leq Cn (\text{ln}\ n)^4.\end{align}

Proposition 3.3. Let

![]() $A \subsetneq [ | R | ] $

, and let

$A \subsetneq [ | R | ] $

, and let

![]() $B_{|R|}(h)$

,

$B_{|R|}(h)$

,

![]() $B_{|R|}^k(h)$

, and

$B_{|R|}^k(h)$

, and

![]() $B_{|R|}^j(h)$

be as in (6). Then the bounds (11), (12), and (13) hold in this setting as well.

$B_{|R|}^j(h)$

be as in (6). Then the bounds (11), (12), and (13) hold in this setting as well.

Proof. See Section 5.5.

The bound on the variance terms in Proposition 2.3 becomes

\begin{align}\notag \sqrt{Var(\mathbb{E}[U|R])} \leq & \frac{1}{\sqrt{2}} \sum_{A \subsetneq[|R|]} k_{|R|, A} \Big( C n (\text{ln}\ n)^4 + Cn (\text{ln}\ n)^8 + 2 C n (\text{ln}\ n)^4 \Big)^{1/2}\\ \leq & C \sqrt{n} (\text{ln}\ n)^4.\end{align}

\begin{align}\notag \sqrt{Var(\mathbb{E}[U|R])} \leq & \frac{1}{\sqrt{2}} \sum_{A \subsetneq[|R|]} k_{|R|, A} \Big( C n (\text{ln}\ n)^4 + Cn (\text{ln}\ n)^8 + 2 C n (\text{ln}\ n)^4 \Big)^{1/2}\\ \leq & C \sqrt{n} (\text{ln}\ n)^4.\end{align}

4. Set approximation with random tessellations

Let

![]() $K \subseteq [0,1]^d$

be compact, and let X be a finite collection of points in K. The Voronoi reconstruction, or the Voronoi approximation, of K based on X is given by

$K \subseteq [0,1]^d$

be compact, and let X be a finite collection of points in K. The Voronoi reconstruction, or the Voronoi approximation, of K based on X is given by

For

![]() $x \in [0,1]^d$

, denote by

$x \in [0,1]^d$

, denote by

![]() $V(x;\, X)$

the Voronoi cell with nucleus x within X, given by

$V(x;\, X)$

the Voronoi cell with nucleus x within X, given by

where

![]() $(X, x) = X \cup \{x\}$

, and where, as usual,

$(X, x) = X \cup \{x\}$

, and where, as usual,

![]() $||\cdot||$

is the Euclidean norm in

$||\cdot||$

is the Euclidean norm in

![]() $\mathbb{R}^d$

. The volume approximation of interest is

$\mathbb{R}^d$

. The volume approximation of interest is

In [Reference Lachièze-Rey and Peccati14],

![]() $X = (X_1, \ldots, X_n)$

is a vector of n i.i.d. random variables uniformly distributed on

$X = (X_1, \ldots, X_n)$

is a vector of n i.i.d. random variables uniformly distributed on

![]() $[0,1]^d$

. Here, we consider

$[0,1]^d$

. Here, we consider

![]() $X_1, \ldots, X_n$

generated by a hidden Markov model in the following way. Let

$X_1, \ldots, X_n$

generated by a hidden Markov model in the following way. Let

![]() $Z_1, \ldots, Z_n$

be an aperiodic irreducible Markov chain on a finite state space

$Z_1, \ldots, Z_n$

be an aperiodic irreducible Markov chain on a finite state space

![]() $\mathcal{S}$

. Each

$\mathcal{S}$

. Each

![]() $s \in \mathcal{S}$

is associated with a measure

$s \in \mathcal{S}$

is associated with a measure

![]() $m_s$

on

$m_s$

on

![]() $[0,1]^d$

. Then for each measurable

$[0,1]^d$

. Then for each measurable

![]() $T \subseteq [0,1]^d$

,

$T \subseteq [0,1]^d$

,

Assume, moreover, that there are constants

![]() $0 < c_m \leq c_M$

such that for any

$0 < c_m \leq c_M$

such that for any

![]() $s \in \mathcal{S}$

and measurable

$s \in \mathcal{S}$

and measurable

![]() $T \subseteq [0,1]^n$

,

$T \subseteq [0,1]^n$

,

Recall the notions of Lebesgue boundary of K given by

and

where d(x, A) is the Euclidean distance from

![]() $x \in \mathbb{R}^d$

to

$x \in \mathbb{R}^d$

to

![]() $A \subseteq \mathbb{R}^d$

.

$A \subseteq \mathbb{R}^d$

.

Now, for

![]() $\beta > 0$

, let

$\beta > 0$

, let

Next, recall that K is said to satisfy the (weak) rolling ball condition if

Our main result is as follows.

Theorem 4.1. Let

![]() $K \subseteq [0,1]^d$

satisfy the rolling ball condition. Moreover, assume that there exist

$K \subseteq [0,1]^d$

satisfy the rolling ball condition. Moreover, assume that there exist

![]() $S_-(K), \, S_+(K), \, \alpha > 0$

such that

$S_-(K), \, S_+(K), \, \alpha > 0$

such that

Then, for

![]() $n \geq 1$

,

$n \geq 1$

,

where

![]() $C > 0$

is a constant not depending on n.

$C > 0$

is a constant not depending on n.

As in [Reference Lachièze-Rey and Peccati14], we split Theorem 4.1 into two results. The first one establishes a central limit theorem.

Proposition 4.1. Let

![]() $0 < \sigma^2 = Var( \varphi(X))$

. Assume that

$0 < \sigma^2 = Var( \varphi(X))$

. Assume that

![]() $Vol(\partial K^r) \leq S_+(K) r^{\alpha}$

for some

$Vol(\partial K^r) \leq S_+(K) r^{\alpha}$

for some

![]() $S_+(K), \alpha > 0$

. Then, for

$S_+(K), \alpha > 0$

. Then, for

![]() $n \geq 1$

,

$n \geq 1$

,

where

![]() $C > 0$

is a constant not depending on n.

$C > 0$

is a constant not depending on n.

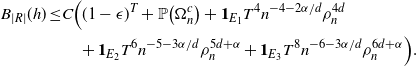

The second result introduces bounds on the variance under some additional assumptions.

Proposition 4.2. Let

![]() $K \subseteq [0,1]^d$

satisfy the rolling ball condition. Moreover, assume that there exist

$K \subseteq [0,1]^d$

satisfy the rolling ball condition. Moreover, assume that there exist

![]() $S_-(K), \, S_+(K), \, \alpha > 0$

such that

$S_-(K), \, S_+(K), \, \alpha > 0$

such that

Then, for n sufficiently large,

for some

![]() $C_d^-, C_d^+ > 0$

.

$C_d^-, C_d^+ > 0$

.

It is clear that Theorem 4.1 will be proved once Proposition 4.1 and Proposition 4.2 are established.

4.1. Proof of Proposition 4.1

Again, as before, we introduce a set of instructions R and a function h such that

![]() $h(R) = \varphi(X)$

. We apply Proposition 2.1, and the initial step is to bound

$h(R) = \varphi(X)$

. We apply Proposition 2.1, and the initial step is to bound

![]() $\mathbb{E}|\Delta_i h(R)|^r$

, where

$\mathbb{E}|\Delta_i h(R)|^r$

, where

![]() $ r > 0$

. In fact, the following holds.

$ r > 0$

. In fact, the following holds.

Proposition 4.3. Under the assumptions of Proposition 4.1,

where

![]() $c_{d,r , \alpha}$

depends on the parameters of the model and the dimension d, as well as on r and

$c_{d,r , \alpha}$

depends on the parameters of the model and the dimension d, as well as on r and

![]() $\alpha$

. Moreover, for

$\alpha$

. Moreover, for

![]() $n,q \geq 1$

,

$n,q \geq 1$

,

for some

![]() $C_{d, r, \alpha} > 0$

.

$C_{d, r, \alpha} > 0$

.

Before presenting the proof, we introduce some notation. Recall that

![]() $x, y \in [0,1]^d$

are said to be Voronoi neighbors within the set X if

$x, y \in [0,1]^d$

are said to be Voronoi neighbors within the set X if

![]() $V(x;\, X) \cap V(y;\, X) \neq \emptyset$

. In general, the Voronoi distance

$V(x;\, X) \cap V(y;\, X) \neq \emptyset$

. In general, the Voronoi distance

![]() $d_V(x, y;\, X)$

between x and y within X is given by the smallest

$d_V(x, y;\, X)$

between x and y within X is given by the smallest

![]() $k \geq 1$

such that there exist

$k \geq 1$

such that there exist

![]() $x = x_0, x_1 \in X, \ldots, x_{k-1} \in X, x_k = y$

such that

$x = x_0, x_1 \in X, \ldots, x_{k-1} \in X, x_k = y$

such that

![]() $x_i, x_{i+1}$

are Voronoi neighbors for

$x_i, x_{i+1}$

are Voronoi neighbors for

![]() $i = 0, \ldots, k-1$

.

$i = 0, \ldots, k-1$

.

Denote by

![]() $v(x, y;\, X) = Vol\big(V(y;\, X) \cap V(x;\, (y, X)) \big)$

the volume that

$v(x, y;\, X) = Vol\big(V(y;\, X) \cap V(x;\, (y, X)) \big)$

the volume that

![]() $V(y;\,X)$

loses when x is added to X. Then, for

$V(y;\,X)$

loses when x is added to X. Then, for

![]() $x \notin X$

,

$x \notin X$

,

Let

![]() $R_k(x;\, X)$

be the distance from x to the farthest point in the cell of a kth-order Voronoi neighbor in X; i.e., for

$R_k(x;\, X)$

be the distance from x to the farthest point in the cell of a kth-order Voronoi neighbor in X; i.e., for

![]() $X = (X_1, \ldots, X_n)$

,

$X = (X_1, \ldots, X_n)$

,

with

![]() $R(x;\, X) \,{:}\,{\raise-1.5pt{=}}\, R_1(x;\, X)$

. If x does not have kth-order neighbors, take

$R(x;\, X) \,{:}\,{\raise-1.5pt{=}}\, R_1(x;\, X)$

. If x does not have kth-order neighbors, take

![]() $R_k(x;\, X) = \sqrt{d}$

. Then

$R_k(x;\, X) = \sqrt{d}$

. Then

where

![]() $\kappa_d = \pi^{d/2} / \Gamma(d/2 + 1)$

is the volume of the unit ball in

$\kappa_d = \pi^{d/2} / \Gamma(d/2 + 1)$

is the volume of the unit ball in

![]() $\mathbb{R}^d$

.

$\mathbb{R}^d$

.

Proof of Proposition 4.3. The proof relies on two results. First, we have the following technical lemma which is established in Section 5.6.

Lemma 4.1. Assume there exist

![]() $S_+(K), \alpha > 0$

such that

$S_+(K), \alpha > 0$

such that

![]() $Vol(\partial K^r) \leq S_+(K) r^{\alpha}$

for all

$Vol(\partial K^r) \leq S_+(K) r^{\alpha}$

for all

![]() $r > 0$

. Let

$r > 0$

. Let

Then, for some

![]() $c_{d, qd + \alpha, k} >0$

,

$c_{d, qd + \alpha, k} >0$

,

for all

![]() $n \geq 1$

,

$n \geq 1$

,

![]() $q \geq 1$

.

$q \geq 1$

.

Second, within our framework, we have the following version of [Reference Lachièze-Rey and Peccati14, Proposition 6.4] where S(R) is the original set of points generated by R and

![]() $S(R^i)$

is the set of points generated after the change in the instruction

$S(R^i)$

is the set of points generated after the change in the instruction

![]() $R_i$

.

$R_i$

.

Proposition 4.4. (i) If, for every

![]() $s \in S(R) \setminus S\big(R^i\big)$

, the set

$s \in S(R) \setminus S\big(R^i\big)$

, the set

![]() $R_1(s, S(R))$

, which contains s and all its neighbors, is either entirely in K or entirely in

$R_1(s, S(R))$

, which contains s and all its neighbors, is either entirely in K or entirely in

![]() $K^c$

, then

$K^c$

, then

![]() $\Delta_i h(R)= 0$

. A similar result holds for

$\Delta_i h(R)= 0$

. A similar result holds for

![]() $s \in S\big(R^i\big) \setminus S(R)$

and the set

$s \in S\big(R^i\big) \setminus S(R)$

and the set

![]() $R_1\big(s, S\big(R^i\big)\big)$

.

$R_1\big(s, S\big(R^i\big)\big)$

.

(ii) Assume

![]() $|i - j|$

is large enough so that

$|i - j|$

is large enough so that

![]() $(S\big(R^i\big) \setminus S(R) ) \cup (S(R^j) \setminus S(R)) = S\big(R^{ij}\big) \setminus S(R)$

, where

$(S\big(R^i\big) \setminus S(R) ) \cup (S(R^j) \setminus S(R)) = S\big(R^{ij}\big) \setminus S(R)$

, where

![]() $S\big(R^{ij}\big)$

is the set of points generated after the changes in both

$S\big(R^{ij}\big)$

is the set of points generated after the changes in both

![]() $R_i$

and

$R_i$

and

![]() $R_j$

. Suppose that for every

$R_j$

. Suppose that for every

![]() $s_1 \in S\big(R^i\big) \Delta S(R)$

and

$s_1 \in S\big(R^i\big) \Delta S(R)$

and

![]() $s_2 \in S\big(R^j\big) \Delta S(R)$

, at least one of the following holds:

$s_2 \in S\big(R^j\big) \Delta S(R)$

, at least one of the following holds:

-

1.

$d_V( s_1, s_2;\, S\big(R^{ij}\big) \cap S(R)) \geq 2$

, or

$d_V( s_1, s_2;\, S\big(R^{ij}\big) \cap S(R)) \geq 2$

, or -

2.

$d_V\big(s_1, \partial K;\, S\big(R^{ij}\big) \cap S(R)\big) \geq 2$

and

$d_V\big(s_1, \partial K;\, S\big(R^{ij}\big) \cap S(R)\big) \geq 2$

and

$d_V\big(s_2, \partial K;\, S\big(R^{ij}\big) \cap S(R) \big) \cap S(R)) \geq 2$

.

$d_V\big(s_2, \partial K;\, S\big(R^{ij}\big) \cap S(R) \big) \cap S(R)) \geq 2$

.

Then

![]() $\Delta_{i,j} h(R) = 0$

.

$\Delta_{i,j} h(R) = 0$

.

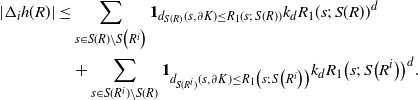

Now, write

\begin{align}\notag |\Delta_i h(R)| \leq & \sum_{s \in S(R) \setminus S\big(R^i\big)} \mathbf{1}_{d_{S(R)}(s, \partial K) \leq R_1(s;\, S(R))} k_d R_1(s;\, S(R))^d \\\notag & + \sum_{s \in S(R^i) \setminus S(R)} \mathbf{1}_{d_{S(R^i)}(s, \partial K) \leq R_1\big(s;\, S\big(R^i\big)\big)} k_d R_1\big(s;\, S\big(R^i\big)\big)^d.\end{align}

\begin{align}\notag |\Delta_i h(R)| \leq & \sum_{s \in S(R) \setminus S\big(R^i\big)} \mathbf{1}_{d_{S(R)}(s, \partial K) \leq R_1(s;\, S(R))} k_d R_1(s;\, S(R))^d \\\notag & + \sum_{s \in S(R^i) \setminus S(R)} \mathbf{1}_{d_{S(R^i)}(s, \partial K) \leq R_1\big(s;\, S\big(R^i\big)\big)} k_d R_1\big(s;\, S\big(R^i\big)\big)^d.\end{align}

As before, for some

![]() $T > 0$

, there exist an event E and

$T > 0$

, there exist an event E and

![]() $\epsilon > 0$

such that, conditioned on E,

$\epsilon > 0$

such that, conditioned on E,

![]() $|S(R^i) \setminus S(R)| = |S(R) \setminus S(R^i)| \leq T$

and

$|S(R^i) \setminus S(R)| = |S(R) \setminus S(R^i)| \leq T$

and

![]() $\mathbb{P}(E^c) \leq (1 - \epsilon)^T$

. Then, from Lemma 4.1, there exist

$\mathbb{P}(E^c) \leq (1 - \epsilon)^T$

. Then, from Lemma 4.1, there exist

![]() $S_+(K), \alpha > 0$

such that

$S_+(K), \alpha > 0$

such that

where

![]() $c_{d,r , \alpha}$

depends on the parameters of the model and the dimension d, as well as on r and

$c_{d,r , \alpha}$

depends on the parameters of the model and the dimension d, as well as on r and

![]() $\alpha$

. If

$\alpha$

. If

![]() $T = c\text{ln}\ n$

for a suitable

$T = c\text{ln}\ n$

for a suitable

![]() $c > 0$

, then

$c > 0$

, then

as desired. An application of the rth-moment Efron–Stein inequality (see [Reference Houdré and Ma12, Reference Rhee and Talagrand16]) then yields (23).

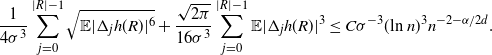

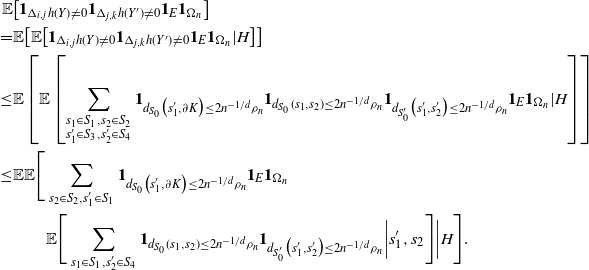

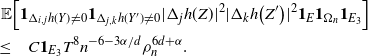

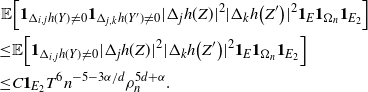

For the non-variance term in Theorem 2.1, we have

\begin{align} \frac{1}{4 \sigma^3} \sum_{j = 0}^{|R| - 1} \sqrt{\mathbb{E} | \Delta_j h(R)|^6} + \frac{\sqrt{2 \pi} } {16 \sigma^3} \sum_{j = 0}^{|R| - 1} \mathbb{E} |\Delta_j h(R)|^3 \leq C \sigma^{-3} (\text{ln}\ n)^3 n^{- 2 - \alpha /2d}.\end{align}

\begin{align} \frac{1}{4 \sigma^3} \sum_{j = 0}^{|R| - 1} \sqrt{\mathbb{E} | \Delta_j h(R)|^6} + \frac{\sqrt{2 \pi} } {16 \sigma^3} \sum_{j = 0}^{|R| - 1} \mathbb{E} |\Delta_j h(R)|^3 \leq C \sigma^{-3} (\text{ln}\ n)^3 n^{- 2 - \alpha /2d}.\end{align}

To analyze the bound on the variance terms given by Proposition 2.3, first note that

\begin{align}\notag \sum_{i = 0}^{|R| -1} \sum_{j, k \notin A} \mathbf{1}_{i = j = k} \mathbb{E} | \Delta_i h(R)|^4 \leq C(\text{ln}\ n)^4 n^{- 3 - \alpha /d},\end{align}

\begin{align}\notag \sum_{i = 0}^{|R| -1} \sum_{j, k \notin A} \mathbf{1}_{i = j = k} \mathbb{E} | \Delta_i h(R)|^4 \leq C(\text{ln}\ n)^4 n^{- 3 - \alpha /d},\end{align}

again using Proposition 4.3. The other terms are bounded as follows.

Proposition 4.5. Let

![]() $A \subsetneq [ | R | ] $

, and let

$A \subsetneq [ | R | ] $

, and let

![]() $B_{|R|}(h), B_{|R|}^k(h)$

and

$B_{|R|}(h), B_{|R|}^k(h)$

and

![]() $B_{|R|}^j(h)$

be as in (6). Then, for

$B_{|R|}^j(h)$

be as in (6). Then, for

![]() $\epsilon > 0$

,

$\epsilon > 0$

,

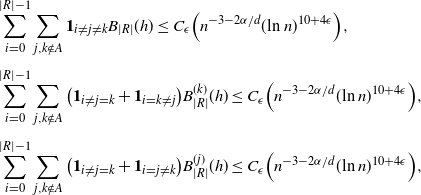

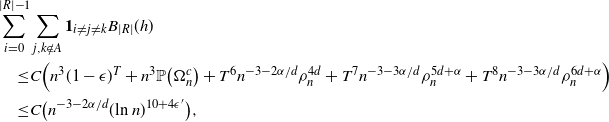

\begin{align}\notag \sum_{i = 0}^{|R| -1} & \sum_{j, k \notin A} \mathbf{1}_{i \neq j \neq k} B_{|R|}(h) \leq C_\epsilon \Big( n^{-3 - 2 \alpha/d} (\text{ln}\ n)^{10 + 4 \epsilon}\Big), \\[4pt]\notag \sum_{i = 0}^{|R| -1} & \sum_{j, k \notin A} \big(\mathbf{1}_{i \neq j = k} + \mathbf{1}_{i = k \neq j} \big) B_{|R|}^{(k)}(h) \leq C_\epsilon \Big( n^{-3 - 2 \alpha/d} (\text{ln}\ n)^{10 + 4 \epsilon}\Big) , \\[4pt]\notag \sum_{i = 0}^{|R| -1} & \sum_{j, k \notin A} \big(\mathbf{1}_{i \neq j = k} + \mathbf{1}_{i = j \neq k} \big) B_{|R|}^{(j)}(h) \leq C_\epsilon \Big( n^{-3 - 2 \alpha/d} (\text{ln}\ n)^{10 + 4 \epsilon}\Big) ,\end{align}

\begin{align}\notag \sum_{i = 0}^{|R| -1} & \sum_{j, k \notin A} \mathbf{1}_{i \neq j \neq k} B_{|R|}(h) \leq C_\epsilon \Big( n^{-3 - 2 \alpha/d} (\text{ln}\ n)^{10 + 4 \epsilon}\Big), \\[4pt]\notag \sum_{i = 0}^{|R| -1} & \sum_{j, k \notin A} \big(\mathbf{1}_{i \neq j = k} + \mathbf{1}_{i = k \neq j} \big) B_{|R|}^{(k)}(h) \leq C_\epsilon \Big( n^{-3 - 2 \alpha/d} (\text{ln}\ n)^{10 + 4 \epsilon}\Big) , \\[4pt]\notag \sum_{i = 0}^{|R| -1} & \sum_{j, k \notin A} \big(\mathbf{1}_{i \neq j = k} + \mathbf{1}_{i = j \neq k} \big) B_{|R|}^{(j)}(h) \leq C_\epsilon \Big( n^{-3 - 2 \alpha/d} (\text{ln}\ n)^{10 + 4 \epsilon}\Big) ,\end{align}

for some constant

![]() $C_\epsilon> 0$

that does not depend on n.

$C_\epsilon> 0$

that does not depend on n.

Proof. See Section 5.7 for the proof of the first bound. The others follow similarly.

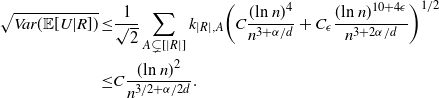

The bounds on the variance terms in Proposition 2.3 become

\begin{align}\notag \sqrt{Var(\mathbb{E}[U|R])} \leq & \frac{1}{\sqrt{2}} \sum_{A \subsetneq[|R|]} k_{|R|, A} \bigg( C \frac{(\text{ln}\ n)^4}{n^{3 + \alpha/d} } + C_\epsilon \frac{(\text{ln}\ n)^{10 + 4 \epsilon}}{n^{3 + 2\alpha /d}} \bigg)^{1/2}\\ \leq & C \frac{(\text{ln}\ n)^2 }{n^{3/2 + \alpha/2d}}.\end{align}

\begin{align}\notag \sqrt{Var(\mathbb{E}[U|R])} \leq & \frac{1}{\sqrt{2}} \sum_{A \subsetneq[|R|]} k_{|R|, A} \bigg( C \frac{(\text{ln}\ n)^4}{n^{3 + \alpha/d} } + C_\epsilon \frac{(\text{ln}\ n)^{10 + 4 \epsilon}}{n^{3 + 2\alpha /d}} \bigg)^{1/2}\\ \leq & C \frac{(\text{ln}\ n)^2 }{n^{3/2 + \alpha/2d}}.\end{align}

4.2. Proof of Proposition 4.2

The upper bound follows immediately from Proposition 4.3.

Recall the following result ([Reference Lachièze-Rey and Peccati14, Corollary 2.4]) concerning the variance. Let

![]() $X \,{:}\,{\raise-1.5pt{=}}\, (X_1, \ldots, X_n) \in E^n$

, where E is a Polish space. If X

′ is an independent copy of X, and

$X \,{:}\,{\raise-1.5pt{=}}\, (X_1, \ldots, X_n) \in E^n$

, where E is a Polish space. If X

′ is an independent copy of X, and

![]() $f\,:\, E^n \to \mathbb{R}$

is measurable, with

$f\,:\, E^n \to \mathbb{R}$

is measurable, with

![]() $\mathbb{E}\big[ f(X)^2\big] < \infty$

,

$\mathbb{E}\big[ f(X)^2\big] < \infty$

,

In our setting we take

![]() $f = \varphi$

. Unlike in [Reference Lachièze-Rey and Peccati14], the function

$f = \varphi$

. Unlike in [Reference Lachièze-Rey and Peccati14], the function

![]() $\varphi$

is not symmetric, and the right-hand side of (26) cannot be simplified. The lower bound provided by [Reference Lachièze-Rey and Peccati14, Corollary 2.4] recovers the correct order of growth of the variance, which is enough for our purposes. However, more precise generic results are also available; see e.g. [Reference Bousquet and Houdré1].

$\varphi$

is not symmetric, and the right-hand side of (26) cannot be simplified. The lower bound provided by [Reference Lachièze-Rey and Peccati14, Corollary 2.4] recovers the correct order of growth of the variance, which is enough for our purposes. However, more precise generic results are also available; see e.g. [Reference Bousquet and Houdré1].

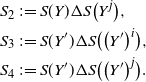

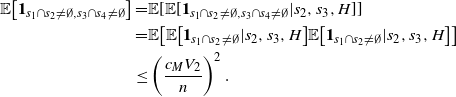

Let H be the realization of the hidden chain for X. By the law of total variance,

![]() $Var(\varphi(X)) \geq Var(\varphi(X) | H)$

. Let X

′ be an independent copy of X, given H. Note that, given H,

$Var(\varphi(X)) \geq Var(\varphi(X) | H)$

. Let X

′ be an independent copy of X, given H. Note that, given H,

![]() $(X_i)_{i = 1, \ldots, n}$

and

$(X_i)_{i = 1, \ldots, n}$

and

![]() $\big(X^{\prime}_i\big)_{ i = 1, \ldots, n}$

are independent random variables which are not identically distributed.

$\big(X^{\prime}_i\big)_{ i = 1, \ldots, n}$

are independent random variables which are not identically distributed.

Applying (26) to

![]() $\varphi(X | H)$

, we obtain

$\varphi(X | H)$

, we obtain

where

![]() $X^i = \big(X_1, \ldots, X_{i-1}, X^{\prime}_i, X_{i+1}, \ldots, X_n\big)$

, and

$X^i = \big(X_1, \ldots, X_{i-1}, X^{\prime}_i, X_{i+1}, \ldots, X_n\big)$

, and

![]() $\mathbb{E}^H$

indicates that H is given. (To simplify notation in what follows we drop the H.) The difference from the proof in [Reference Lachièze-Rey and Peccati14] is that now the variables are no longer identically distributed. Write

$\mathbb{E}^H$

indicates that H is given. (To simplify notation in what follows we drop the H.) The difference from the proof in [Reference Lachièze-Rey and Peccati14] is that now the variables are no longer identically distributed. Write

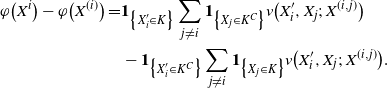

where

![]() $X^{(i)} = \big(X_1, \ldots, X_{i-1}, X_{i+1}, \ldots, X_n\big)$

. By Lemma 4.1,

$X^{(i)} = \big(X_1, \ldots, X_{i-1}, X_{i+1}, \ldots, X_n\big)$

. By Lemma 4.1,

We are left to study

![]() $\mathbb{E} \big[ \varphi\big(X^i\big) - \varphi\big(X^{(i)}\big)\big]$

. Recall that

$\mathbb{E} \big[ \varphi\big(X^i\big) - \varphi\big(X^{(i)}\big)\big]$

. Recall that

\begin{align}\notag \varphi\big(X^i\big) - \varphi\big(X^{(i)}\big) = & \mathbf{1}_{\big\{X^{\prime}_i \in K\big\}} \sum_{j \neq i} \mathbf{1}_{\big\{X_j \in K^C\big\}} v\big(X^{\prime}_i, X_j;\, X^{(i,j)}\big) \\\notag & - \mathbf{1}_{\big\{X^{\prime}_i \in K^C\big\}} \sum_{j \neq i} \mathbf{1}_{\big\{X_j \in K\big\}} v\big(X^{\prime}_i, X_j;\, X^{(i,j)}\big).\end{align}

\begin{align}\notag \varphi\big(X^i\big) - \varphi\big(X^{(i)}\big) = & \mathbf{1}_{\big\{X^{\prime}_i \in K\big\}} \sum_{j \neq i} \mathbf{1}_{\big\{X_j \in K^C\big\}} v\big(X^{\prime}_i, X_j;\, X^{(i,j)}\big) \\\notag & - \mathbf{1}_{\big\{X^{\prime}_i \in K^C\big\}} \sum_{j \neq i} \mathbf{1}_{\big\{X_j \in K\big\}} v\big(X^{\prime}_i, X_j;\, X^{(i,j)}\big).\end{align}

Now, for the case

![]() $X^{\prime}_i \in K^C$

(the other case being equivalent), we have

$X^{\prime}_i \in K^C$

(the other case being equivalent), we have

\begin{align}\notag \Bigg|\mathbb{E}_{X, X^{\prime}_i} & \Bigg[ - \mathbf{1}_{\big\{X^{\prime}_i \in K^C\big\}} \sum_{j \neq i} \mathbf{1}_{\big\{X_j \in K\big\}} v\Big(X^{\prime}_i, X_j;\, X^{(i,j)}\Big) \Bigg] \Bigg| \\\notag \geq & \quad \mathbb{E}_{X^{\prime}_i} \Bigg[\mathbf{1}_{\big\{X^{\prime}_i \in \partial K_+^{n^{-1/d}} \big\}} \sum_{j \neq i} \mathbb{E}_X\Big[ \mathbf{1}_{\{X_j \in K\}} v\Big(X^{\prime}_i, X_j;\, X^{(i,j)}\Big) \Big] \Bigg],\end{align}

\begin{align}\notag \Bigg|\mathbb{E}_{X, X^{\prime}_i} & \Bigg[ - \mathbf{1}_{\big\{X^{\prime}_i \in K^C\big\}} \sum_{j \neq i} \mathbf{1}_{\big\{X_j \in K\big\}} v\Big(X^{\prime}_i, X_j;\, X^{(i,j)}\Big) \Bigg] \Bigg| \\\notag \geq & \quad \mathbb{E}_{X^{\prime}_i} \Bigg[\mathbf{1}_{\big\{X^{\prime}_i \in \partial K_+^{n^{-1/d}} \big\}} \sum_{j \neq i} \mathbb{E}_X\Big[ \mathbf{1}_{\{X_j \in K\}} v\Big(X^{\prime}_i, X_j;\, X^{(i,j)}\Big) \Big] \Bigg],\end{align}

since

![]() $v\big(X^{\prime}_i, X_j;\, X^{(i,j)}\big) \geq 0$

. Then

$v\big(X^{\prime}_i, X_j;\, X^{(i,j)}\big) \geq 0$

. Then

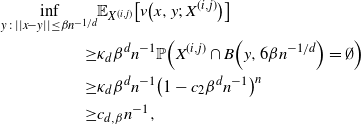

\begin{align}\notag \mathbb{E}_X & \Big[ \mathbf{1}_{\big\{X_j \in K\big\}} v\big(x, X_j;\, X^{(i,j)}\big) \Big]\Big ] \\\notag \geq & \mathbb{E}_{X^{(i,j)}} \left[ c_1 \int_{y \in K} v\big(x, y;\, X^{(i,j)}\big) dy \right] \\\notag \geq & c_1 Vol\big(B\big(x, \beta n^{-1/d}\big) \cap K\big) \inf_{y \,:\, ||x- y || \leq \beta n^{-1/d}} \mathbb{E}_{X^{(i,j)}}\Big[ v\big(x,y;\, X^{(i,j)}\big)\Big],\end{align}

\begin{align}\notag \mathbb{E}_X & \Big[ \mathbf{1}_{\big\{X_j \in K\big\}} v\big(x, X_j;\, X^{(i,j)}\big) \Big]\Big ] \\\notag \geq & \mathbb{E}_{X^{(i,j)}} \left[ c_1 \int_{y \in K} v\big(x, y;\, X^{(i,j)}\big) dy \right] \\\notag \geq & c_1 Vol\big(B\big(x, \beta n^{-1/d}\big) \cap K\big) \inf_{y \,:\, ||x- y || \leq \beta n^{-1/d}} \mathbb{E}_{X^{(i,j)}}\Big[ v\big(x,y;\, X^{(i,j)}\big)\Big],\end{align}

using the independence after conditioning on H and the properties of the model. We want to find an event that implies that

![]() $v\big(x,y;\, X^{(i,j)}\big) \geq c n^{-1}$

. One instance is when no point of

$v\big(x,y;\, X^{(i,j)}\big) \geq c n^{-1}$

. One instance is when no point of

![]() $X^{(i,j)}$

falls in

$X^{(i,j)}$

falls in

![]() $B\big(y, 6 \beta n^{-1/d}\big)$

. Indeed, then

$B\big(y, 6 \beta n^{-1/d}\big)$

. Indeed, then

![]() $B\big(y, 3 \beta n^{-1/d}\big) \subset V\big(y, X^{(i,j)}\big)$

. The distance between y and x is less than

$B\big(y, 3 \beta n^{-1/d}\big) \subset V\big(y, X^{(i,j)}\big)$

. The distance between y and x is less than

![]() $\beta n^{-1/d}$

, and so there is

$\beta n^{-1/d}$

, and so there is

![]() $z \in B\big(y, 3 \beta n^{-1/d}\big)$

, namely

$z \in B\big(y, 3 \beta n^{-1/d}\big)$

, namely

![]() $z = x + \beta n^{-1/d} (x - y)/||x-y||$

, such that

$z = x + \beta n^{-1/d} (x - y)/||x-y||$

, such that

Then

![]() $v\big(x,y;\, X^{(i,j)}\big) \geq Vol\big(B\big(z, \beta n^{-1/d}\big) = \kappa_d \beta^d n^{-1}$

. Finally,

$v\big(x,y;\, X^{(i,j)}\big) \geq Vol\big(B\big(z, \beta n^{-1/d}\big) = \kappa_d \beta^d n^{-1}$

. Finally,

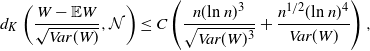

\begin{align}\notag \inf_{y \,:\, ||x- y || \leq \beta n^{-1/d}} & \mathbb{E}_{X^{(i,j)}}\big[ v\big(x,y;\, X^{(i,j)}\big)\big] \\\notag \geq & \kappa_d \beta^d n^{-1} \mathbb{P} \Big( X^{(i,j)} \cap B\Big(y, 6 \beta n^{-1/d}\Big) = \emptyset\Big) \\\notag \geq & \kappa_d \beta^d n^{-1} \big( 1 - c_2 \beta^d n^{-1}\big)^n \\\notag \geq & c_{d, \beta} n^{-1},\end{align}

\begin{align}\notag \inf_{y \,:\, ||x- y || \leq \beta n^{-1/d}} & \mathbb{E}_{X^{(i,j)}}\big[ v\big(x,y;\, X^{(i,j)}\big)\big] \\\notag \geq & \kappa_d \beta^d n^{-1} \mathbb{P} \Big( X^{(i,j)} \cap B\Big(y, 6 \beta n^{-1/d}\Big) = \emptyset\Big) \\\notag \geq & \kappa_d \beta^d n^{-1} \big( 1 - c_2 \beta^d n^{-1}\big)^n \\\notag \geq & c_{d, \beta} n^{-1},\end{align}

for some

![]() $c_{d, \beta} > 0$

depending on the parameters of the model, the dimension d, and

$c_{d, \beta} > 0$

depending on the parameters of the model, the dimension d, and

![]() $ \beta$

. Then

$ \beta$

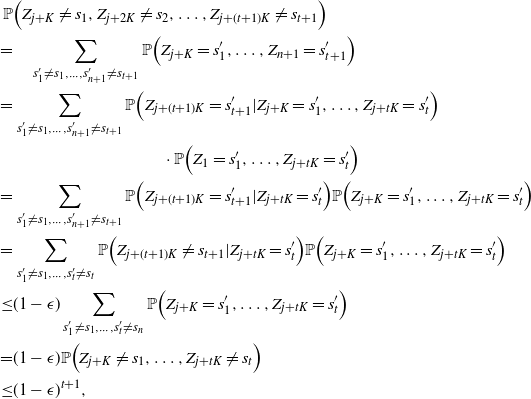

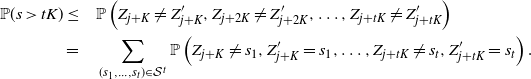

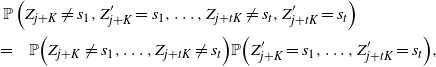

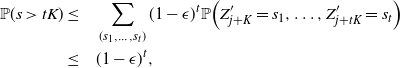

. Then