Introduction

We did not always want what we want today. A child who wanted to become a firefighter now prefers to work as a tax accountant. In fact, your authors did not always want to write a book about preference change, but at some point, they started to prefer doing so over other pastimes. Our preferences changed. Why does such change occur?

Sometimes our preferences change because we learn new things about the world; for example, one might want to write a philosophy book to fill a gap in the literature and learn that there is a distinct lack of introductions to preference change. However, not all changes in preferences lend themselves to being explained this way. Sometimes, what we want just changes fundamentally, not because we acquire information about something we already want.

This view contrasts with traditional decision theory, which posits agents who possess stable preferences and make rational decisions based on them. Some researchers, especially economists, have held that preferences are given and that decision theory need not consider how preferences are constructed. They might even assert that their decision models do not address psychological processes.Footnote 1

We believe that decision theory should consider the psychological reality of preference change. We did not always want to work on philosophy and might lose our taste for it. Any account of us as practical agents calls for a theory of preference change to account for these personal transformations. Philosophers have many reasons to be interested in such theories of preference change, both from a descriptive and a normative perspective.

Regarding the descriptive project, philosophers might debate the reality of fundamental preference change. Are there really preferences that serve as the grounds for all other preferences? Maybe the traditional view was correct all along, and despite appearances, all that changes is our information about the world.

From a normative perspective, philosophers have proposed constraints on how our preferences should change. Philosophers might argue that when the reasons that motivate us change, so should our preferences. If we were no longer motivated by the reason that philosophy is an intellectual endeavour, then perhaps we should come to prefer carpentry as a career, but probably not mathematical logic.

Other philosophers debate how we can and should choose as rational agents in light of our changing preferences, addressing both a descriptive and normative question. If your preferred career might differ after a course of studies, how can you choose a course of studies as a rational agent? Which preferences should you consider?

All these discussions centre on preference change, but they have largely remained disconnected. This Element seeks to bring the strands together and reveal how they address being a changing agent in a complex world. In doing so, we will also draw on practical philosophers who work on topics such as rationality and autonomy. While much of this work has a synchronic perspective (i.e. it focuses on a single point in time), notable exceptions exist (e.g. Bratman 2007).

To connect the existing philosophical work on preference change and show how it relates to our practical agency, we address three questions:

1. What is (fundamental) preference change?

2. How should we model preference change?

3. How should we choose in light of preference change?

These questions follow an intuitive progression. First, we must determine the phenomenon of interest: how does fundamental preference change fit into the basic picture of practical rationality endorsed by decision theory? Then, we ask how this phenomenon can be modelled. What constraints are there on its dynamics? Finally, the changes in preference, which will hopefully be better understood after the first two sections, raise the question of how to choose as a changing self. How can we make rational decisions based on what we prefer while being aware of the instability of our preferences? We proceed from the conceptual basis via modelling to practical conduct.

Our discussion of these questions, while not settling them conclusively, will give the reader an idea of what is at stake and how the various debates are connected. Building upon this foundation, the reader might turn themselves into an author.

1 Preferences and Preference Change

But doth not the appetite alter? A man loves the meat in his youth that he cannot endure in his age.

How should a rational agent choose between the options that are available to them? Decision theorists frequently answer this question using assumptions about preference relations. A rational agent chooses one option over another because they prefer it. When we are concerned with preference change, we are concerned with changes to these preference relations.

This section will introduce preference relations, their mental reality, and their role in decision theory. We will discuss what makes some preferences fundamental and argue that such fundamental preferences sometimes change. We begin by summarising the formal properties of the preference relation used by decision theorists to provide a foundation for our discussions.

Preference Relations

Preference relations are usually defined either in terms of a weak preference relation (≽) or in terms of a strict preference relation (≻) and an indifference relation (∼). These relations have intuitive interpretations in terms of wants, for example:

1. An agent weakly prefers reading a book to attending a concert if the agent wants to read at least as much as they want to attend the concert.

2. An agent strictly prefers hiking to watching a movie if the agent wants to hike more than they want to watch a movie.

3. An agent is indifferent between hiking and reading a book if the agent wants both of them equally.

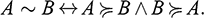

The three preference relations can be used to define each other using the following formulas (where ‘↔’ stands for ‘if and only if’ and ‘¬’ for ‘not’):

For example, an agent weakly prefers reading a book to attending a concert if and only if they strictly prefer reading or are indifferent between reading and a concert.
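To make the interdefinability concrete, here is a minimal Python sketch, assuming a finite set of options and a weak preference relation stored as a set of ordered pairs; the option names and the relation itself are hypothetical. Strict preference and indifference are read off the weak relation, and the last function checks that recombining them gives back the weak relation, as the first biconditional requires.

```python
# Hypothetical weak preference relation over three options, stored as
# ordered pairs: (x, y) in WEAK means "x is weakly preferred to y" (x ≽ y).
WEAK = {
    ("book", "book"), ("concert", "concert"), ("hike", "hike"),
    ("book", "concert"),                 # reading is (here, strictly) preferred to the concert
    ("hike", "book"), ("book", "hike"),  # indifferent between hiking and reading
    ("hike", "concert"),
}


def strict(x, y, weak=WEAK):
    """x ≻ y  ↔  x ≽ y and ¬(y ≽ x)."""
    return (x, y) in weak and (y, x) not in weak


def indifferent(x, y, weak=WEAK):
    """x ∼ y  ↔  x ≽ y and y ≽ x."""
    return (x, y) in weak and (y, x) in weak


def weak_from_parts(x, y, weak=WEAK):
    """x ≽ y  ↔  x ≻ y or x ∼ y; should reproduce membership in `weak`."""
    return strict(x, y, weak) or indifferent(x, y, weak)


options = ["book", "concert", "hike"]
assert all(weak_from_parts(x, y) == ((x, y) in WEAK) for x in options for y in options)
```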

Using weak preference as the basic relation from which one derives the others makes some formal proofs easier. However, strict preference and indifference can be more intuitive since, in everyday language, we tend to use ‘prefer’ to express something similar to a strict preference relation. When Ishmael informs us that ‘Queequeg, for his own private reasons, preferred his own harpoon’ (Melville 1951: chapter 13), he means to convey that Queequeg wanted to use his own harpoon rather than one provided by the whaling ship. We will use weak preference as well as strict preference and indifference, depending on the context. However, given their intuitive character, we favour strict preference and indifference.

We have specified these relations through intuitive examples, but it is commonly assumed that they must fulfil certain formal requirements that provide further specification. These might constrain the change in our preferences. We introduce a selection of these principles for illustration and use them in later discussions (for a more detailed presentation, see Hansson and Grüne-Yanoff (2017) and Bridges and Mehta (1995)).

Let A and B be any two alternatives from a set of mutually exclusive alternatives over which the strict preference and indifference relations are specified. Such a set of alternatives could, for example, be a prospective student’s choice among university programmes, where only one can be selected. Then, the following requirements are considered part of the meaning of the overall preference relations (using ‘→’ for ‘if’ and ‘¬’ for ‘not’):Footnote 2

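Asymmetry of strict preference:

$$A \succ B \rightarrow \neg (B \succ A)$$

Symmetry of indifference:

$$A \sim B \rightarrow B \sim A$$

Reflexivity of indifference:

$$A \sim A$$

Incompatibility of strict preference and indifference:

$$A \succ B \rightarrow \neg (A \sim B)$$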

These requirements are rather basic and can be considered part of the semantic core of the preference conceptions. For example, it is hard to see how anyone could strictly prefer a physics course to a philosophy course and at the same time also strictly prefer the same philosophy course to the same physics course (asymmetry). Similarly, if the formal relation of indifference is supposed to be anything like our ordinary concept of indifference, an agent must be indifferent between a philosophy course and the very same philosophy course (reflexivity).Footnote 3
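The four basic requirements can be checked mechanically when the relations are finite. The sketch below is illustrative only: it assumes strict preference and indifference are given as sets of ordered pairs, and the function name `check_basic_requirements` is our own. It reports which requirements are violated, using the course example from the text.

```python
def check_basic_requirements(alternatives, strict, indiff):
    """Return the names of the basic requirements the given relations violate.

    `strict` and `indiff` are sets of ordered pairs (x, y), read as x ≻ y and x ∼ y.
    """
    violations = []
    if any((y, x) in strict for (x, y) in strict):
        violations.append("asymmetry of strict preference")
    if any((y, x) not in indiff for (x, y) in indiff):
        violations.append("symmetry of indifference")
    if any((x, x) not in indiff for x in alternatives):
        violations.append("reflexivity of indifference")
    if strict & indiff:
        violations.append("incompatibility of strict preference and indifference")
    return violations


courses = {"physics", "philosophy"}
indiff = {(c, c) for c in courses}  # each course is indifferent to itself
incoherent = {("physics", "philosophy"), ("philosophy", "physics")}
print(check_basic_requirements(courses, incoherent, indiff))
# ['asymmetry of strict preference']
```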

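In addition to these largely conceptual restrictions, preference relations are also frequently considered transitive and complete. Transitivity of strict preference and indifference requires, for any three alternatives A, B, and C (using ‘∧’ for ‘and’):

Transitivity of preference:

$$(A \succ B \land B \succ C) \rightarrow A \succ C$$

Transitivity of indifference:

$$(A \sim B \land B \sim C) \rightarrow A \sim C$$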

Completeness demands that either strict preference or indifference holds between any two options, that is (using ‘∨’ for ‘or’),

Completeness:

$$A \succ B \lor A \sim B \lor B \succ A$$

An agent’s preferences are complete for a given set of alternatives if and only if, for every two alternatives in the set, the agent either strictly prefers one alternative or is indifferent between them.
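Transitivity and completeness can be tested in the same way. In this sketch (hypothetical helper names, relations again as sets of ordered pairs), a listener who strictly prefers Bowie to Britney and Britney to the Bee Gees, but has no preference between Bowie and the Bee Gees, fails both properties; adding the preference for Bowie over the Bee Gees repairs both.

```python
from itertools import combinations


def is_transitive(rel):
    """(x R y and y R z) → x R z for every chain of pairs in `rel`."""
    return all((x, z) in rel for (x, y) in rel for (y2, z) in rel if y == y2)


def is_complete(alternatives, strict, indiff):
    """For every two distinct alternatives: x ≻ y, y ≻ x or x ∼ y."""
    return all(
        (x, y) in strict or (y, x) in strict or (x, y) in indiff or (y, x) in indiff
        for x, y in combinations(alternatives, 2)
    )


artists = ["Bowie", "Britney", "Bee Gees"]
indiff = {(a, a) for a in artists}  # reflexive indifference only
partial = {("Bowie", "Britney"), ("Britney", "Bee Gees")}
full = partial | {("Bowie", "Bee Gees")}
```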

A preference relation that fulfils the sketched requirements is, in formal terms, at least a total pre-order.Footnote 4 Such a pre-order and stricter orderings would guarantee many useful properties, but transitivity and completeness have been the subject of particular debate. These properties can be questioned from both a descriptive and a normative perspective (i.e. by questioning whether they describe actual human agents and whether rational agents should fulfil them).

One motivation to question completeness is straightforward: it seems unlikely that one agent could have determinate preferences for all possible comparisons. One might doubt that an agent selecting from a movie streaming service has a complete preference relation over all options. In everyday discourse, we are unlikely to blame the agent for failing to be completely opinionated.

Violations of transitivity might seem more egregious, especially from a normative perspective. If Lane prefers listening to Bowie over listening to Britney and prefers Britney over the Bee Gees, then it is at least odd not to prefer Bowie over the Bee Gees as well. However, empirical results suggest that human agents do not always conform to this principle (Tversky 1969; Fishburn 1991), and philosophers have also developed examples in which violations of transitivity appear normatively acceptable (e.g. Quinn (1990); but see also the influential money-pump arguments in favour of transitivity: Davidson et al. (1955) and Gustafsson (2010, 2013)).

For the purposes of our investigation, we are not interested in settling the status of these purported rationality restrictions but in how they relate to preference change. Three such connections are worth highlighting.

First, if completeness is not guaranteed, preference change includes cases where preference relations between alternatives are added or lost. The individual selecting from the streaming service undergoes preference change when they settle on an ordering over all the available options. Such cases are not covered by every preference change model, for example, reason-based decision theory (Dietrich and List 2013a, 2013b, 2016b), which will be discussed later.

Second, the preference change model might itself be required to produce preference relations that are transitive or complete or that meet other rationality criteria. In that case, a preference between two alternatives often cannot change in isolation, because an isolated change might violate these criteria. If Lane prefers Bowie to Britney, Britney to the Bee Gees, and Bowie to the Bee Gees, and then switches her preference between Bowie and the Bee Gees, she also has to change her preference between Bowie and Britney or between Britney and the Bee Gees to avoid a violation of transitivity.
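One way to see why Lane’s isolated switch is ruled out: a set of strict preferences can be arranged into a single best-to-worst ranking only if it contains no cycle. The sketch below is ours, not part of any model discussed here; it uses Python’s standard `graphlib.TopologicalSorter` (Python 3.9+) to look for such a ranking and returns `None` when a cycle makes one impossible.

```python
from graphlib import CycleError, TopologicalSorter


def ranking(strict_pairs):
    """Best-to-worst ranking consistent with the strict preferences, or None if
    they contain a cycle (so no transitive strict ordering can extend them)."""
    # Map each option to the options strictly preferred to it; the sorter emits
    # every option after all of its predecessors, so better options come first.
    graph = {}
    for better, worse in strict_pairs:
        graph.setdefault(worse, set()).add(better)
        graph.setdefault(better, set())
    try:
        return list(TopologicalSorter(graph).static_order())
    except CycleError:
        return None


before = {("Bowie", "Britney"), ("Britney", "Bee Gees"), ("Bowie", "Bee Gees")}
isolated_switch = {("Bowie", "Britney"), ("Britney", "Bee Gees"), ("Bee Gees", "Bowie")}
second_change = {("Britney", "Bowie"), ("Britney", "Bee Gees"), ("Bee Gees", "Bowie")}
```

With only the Bowie/Bee Gees preference switched, `ranking` finds a cycle; once the Bowie/Britney preference changes as well, a consistent ranking (Britney, the Bee Gees, Bowie) exists again.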

Third, an actual or impending violation of the requirements might not only constrain preference change but also trigger it. If Logan realises that he prefers Shakespeare to Goethe and Melville to Shakespeare, this might prompt him to also prefer Melville to Goethe, thereby avoiding a violation of transitivity.

The second and third connections differ, in that only the third is concerned with the sources of preference change. The second constrains preference change without describing its source. As we will see, models of preference change differ in whether they incorporate such sources or provide more general restrictions.

A Mentalist Conception of Preferences

How does the formal description of preference relations map onto actual agents, especially human agents? When a decision theorist talks about the preferences of a human agent, which facts about the agent make their claims true or false?

Throughout this Element, we will assume that preference relations, including the indifference relation, describe mental states (i.e. ‘preference’ and ‘indifference’ denote mental states that human agents can be in). These mental states relate to two alternatives (i.e. their content is a comparison between two alternatives), and we call these mental states ‘preference states’. Rory’s preference for listening to Bowie over the Backstreet Boys is a preference state realised by her neural system. As such, the state has causal powers that guide her choice. In a situation where Rory has to choose between listening to Bowie and the Backstreet Boys, her preference will cause her to choose Bowie.Footnote 5

The ontological status of preferences is controversial, and for a long time, the mentalist conception of preferences we just outlined was the minority position. Behaviourism (i.e. theories according to which preference relations merely describe behaviour) dominated the debate.Footnote 6 In particular, economists have commonly assumed, and many still do, that preferences are not realised as mental states. Rather, talk of preference serves as shorthand for describing human behaviour. This behaviouristic interpretation is connected to what is known as revealed preference theory, specifically to the assumption that one alternative is strictly preferred to another if and only if the other is never chosen over the first when both are available (cf. Bradley 2017: 45; Hansson and Grüne-Yanoff 2017).Footnote 7
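The revealed preference biconditional can be stated as a procedure over choice records. The following is a minimal sketch under our own assumptions: observations are (menu, choice) pairs, the data are invented, and we additionally require at least one observation in which both alternatives were available, since otherwise the biconditional would hold vacuously. On this reading, the inferred relation is nothing over and above the choice record, which is exactly what the behaviouristic interpretation intends.

```python
# Invented choice records: (menu of available options, option chosen).
observations = [
    ({"Bowie", "Backstreet Boys"}, "Bowie"),
    ({"Bowie", "Backstreet Boys", "Britney"}, "Bowie"),
    ({"Backstreet Boys", "Britney"}, "Britney"),
]


def revealed_strict_preference(a, b, records):
    """a counts as strictly preferred to b iff b is never chosen from a menu
    containing both (and, as our extra assumption, such a menu was observed)."""
    relevant = [choice for menu, choice in records if a in menu and b in menu]
    return bool(relevant) and b not in relevant
```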

The behaviourist conception of preference survived even after behaviourism had largely been abandoned in psychology, but the general reasons for abandoning behaviourism transfer to preferences. If talk of preferences only re-describes behaviour in other terms, then it does not explain behaviour. Yet Rory might say she listens to Bowie instead of the Backstreet Boys because she prefers Bowie’s music, and offering such explanations at least appears possible. Relatedly, if attributing preferences to agents is uniquely successful as an explanatory project, then this gives us good reason to believe that agents indeed have preferences. If I can predict and explain Rory’s music-listening behaviour by attributing various preferences to her, I appear to have captured something causally effective in the world.Footnote 8

The content and exact functional role of preferences as mental states remain hotly debated. One of the most prominent options is the all-things-considered judgement interpretation of preferences, defended by Daniel Hausman (2012). Others have questioned whether such judgements are cognitively plausible (Angner 2018) or have sought to tie preferences more closely to choice dispositions (e.g. Bradley 2017: 47; Guala 2019).

The exact description of preference change will clearly depend on which theory one endorses, but for our purposes, it will suffice to assume that preferences are mental entities that, under conducive circumstances, cause choice behaviour and that can be described using the type of formalism outlined earlier, even though they might not meet all the rationality criteria commonly expressed using that formalism.

The mentalist conception of preferences will repeatedly influence our discussion of preference change. We are interested in why and how individuals change their minds, not just that we can express changes in behaviour as changes in preference. If formal models serve to describe humans with mental states and if the description does not sufficiently correspond to their mental states, or at least a relevant aspect of them, then the models have failed.Footnote 9

Deciding in Light of Uncertainty

So far, we have limited our description of human choice to preferences, but that is insufficient to handle choice under uncertainty (i.e. when we can only assign degrees of probability to how the world is). For this, we need fully fledged decision theories. Since the basic picture of preference relations can be developed in multiple ways, multiple versions of decision theory exist. Two especially influential versions are those developed by Leonard Savage (1954) and Richard Jeffrey (1990 [1965]). These versions are interchangeable for many purposes, but the treatment of preference change is not one of them. Therefore, an overview of their differences is required.

Savage-Type Decision Theory

In order to describe a decision under uncertainty, Savage-type decision theory distinguishes:

Acts

States of the world

Consequences

For example, the act might be applying to a university, the relevant state of the world is whether the university is disposed to accept your application, and the consequence is whether you have made the effort of applying and whether you are accepted.Footnote 10 We can express this situation in Table 1.

Table 1 Basic components of Savage-type decision theory

| | Disposed to Accept | Disposed not to Accept |
|---|---|---|
| Apply | Effort and Accepted | Effort and Not Accepted |
| Not Apply | No Effort and Not Accepted | No Effort and Not Accepted |

One might assume that you prefer making the effort and being accepted to making no effort and not being accepted, which in turn you might prefer to having wasted the effort without being accepted.Footnote 11 According to standard decision theory, whether you should apply then depends on the strength of your preferences and the probability you assign to the university being disposed to accept your application.

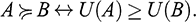

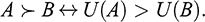

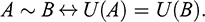

What we introduced here as the strength of preferences is more commonly described using a utility function. A utility function, U(·), takes an alternative as input and returns a real number as output. If one takes a mental realist perspective on utilities, they can be understood as degrees to which the alternative is wanted. We do not commit ourselves to such a perspective, but it provides an intuitive means to understand the following key formulas linking preferences and utilities:

$$A \succcurlyeq B \leftrightarrow U(A) \geq U(B)$$

Alternative A is at least as preferred as B if and only if A’s utility is at least as large as B’s.

$$A \succ B \leftrightarrow U(A) > U(B)$$

Alternative A is strictly preferred to B if and only if A’s utility is larger than B’s.

$$A \sim B \leftrightarrow U(A) = U(B)$$

The agent is indifferent between A and B if and only if A’s utility is identical to B’s.

These connecting biconditionals can still be posited from a non-realist perspective, which treats utility as a formal tool. Formally, a utility function can be derived from preferences, provided they fulfil certain conditions, including those discussed in the previous section (see Savage 1954; Kreps 1988). In light of this derivation, one might consider the utility function a useful shorthand for describing the preferences.Footnote 12
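The biconditionals linking preferences and utilities amount to reading comparisons off numbers. A small sketch, assuming utilities stored in a dictionary with arbitrary illustrative values for the consequences of the application example: because comparisons use >, = and < on real numbers, the resulting relation is automatically complete and transitive. Any strictly increasing rescaling leaves these pairwise comparisons unchanged (expected-utility comparisons, by contrast, are preserved only under positive affine rescalings such as the one used here).

```python
# Illustrative utilities for the consequences of the application example.
U = {
    "Effort & Accepted": 20,
    "No Effort & Not Accepted": 0,
    "Effort & Not Accepted": -10,
}


def compare(a, b, utility):
    """Read the preference between a and b off a utility function:
    a ≻ b iff U(a) > U(b), a ∼ b iff U(a) = U(b), otherwise b ≻ a ('≺')."""
    if utility[a] > utility[b]:
        return "≻"
    if utility[a] == utility[b]:
        return "∼"
    return "≺"


# A strictly increasing rescaling (here 2u + 5) leaves every comparison unchanged.
rescaled = {c: 2 * u + 5 for c, u in U.items()}
same_order = all(compare(a, b, U) == compare(a, b, rescaled) for a in U for b in U)
```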

The Savage framework has two utility functions: the utility function over outcomes and the expected utility (EU) function. The fundamental utility function ranges over the consequences, such as the case where someone has applied to a university and been accepted. The EU function ranges over the acts and provides the average utility of the outcomes for this act weighted by the probability of the state of the world for that outcome.

To illustrate this concept, we have assigned arbitrary numbers in our example that allow us to calculate the EU (Table 2).

Table 2 Applying the Savage-type decision theory

| | P(Disposed to Accept) = 0.4 | P(Disposed not to Accept) = 0.6 |
|---|---|---|
| Apply | U(Effort & Accepted) = 20 | U(Effort & Not Accepted) = −10 |
| Not Apply | U(No Effort & Not Accepted) = 0 | U(No Effort & Not Accepted) = 0 |

In the outlined case, the state of the world where the university is disposed to accept has a probability of 40 per cent (i.e. P(Disposed to Accept) = 0.4). The utility for spending the effort and getting accepted is 20 on our scale. Based on these numbers, we can calculate the EU for both acts:

EU(Apply) = P(Disposed to Accept) × U(Effort & Accepted) + P(Disposed not to Accept) × U(Effort & Not Accepted) = 0.4 × 20 + 0.6 × (−10) = 8 − 6 = 2

EU(Not Apply) = 0.4 × 0 + 0.6 × 0 = 0.

Assuming that EU is to be maximised, this calculation suggests that you should put in the effort and apply (2 > 0).
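The calculation can be reproduced in a few lines. The sketch below encodes Table 1 and the numbers of Table 2 as Python dictionaries (the names `P`, `U`, `ACTS` and `expected_utility` are our own) and computes the expected utility of each act as the probability-weighted sum over states.

```python
# Probabilities of the states and utilities of the consequences (Table 2).
P = {"Disposed to Accept": 0.4, "Disposed not to Accept": 0.6}
U = {
    "Effort & Accepted": 20,
    "Effort & Not Accepted": -10,
    "No Effort & Not Accepted": 0,
}
# Each act maps every state to the consequence it produces there (Table 1).
ACTS = {
    "Apply": {"Disposed to Accept": "Effort & Accepted",
              "Disposed not to Accept": "Effort & Not Accepted"},
    "Not Apply": {"Disposed to Accept": "No Effort & Not Accepted",
                  "Disposed not to Accept": "No Effort & Not Accepted"},
}


def expected_utility(act, probs=P, utils=U, acts=ACTS):
    """Sum over states of P(state) × U(consequence of the act in that state)."""
    return sum(probs[s] * utils[acts[act][s]] for s in probs)


best = max(ACTS, key=expected_utility)  # the EU-maximising act
```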

Expected utilities, and with them the preferences over acts, can nonetheless change. Savage-type decision theory distinguishes two routes by which a preference over acts (apply vs. not apply) can change: a new utility function over outcomes or Bayesian updating (a change in subjective probabilities).
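The two routes can be illustrated with the application example. In this sketch the revised numbers are hypothetical: lowering the probability of acceptance from 0.4 to 0.2 (as might result from updating on new evidence) and lowering the utility of wasted effort from −10 to −20 each make EU(Apply) negative, so the preference over acts reverses, once with the utilities held fixed and once with the probabilities held fixed.

```python
def eu_apply(p_accept, u_accepted, u_rejected):
    """EU(Apply) in the two-state problem of Table 2."""
    return p_accept * u_accepted + (1 - p_accept) * u_rejected


def preferred_act(p_accept, u_accepted, u_rejected):
    # EU(Not Apply) is 0 here because U(No Effort & Not Accepted) = 0 in both
    # states; a tie is resolved in favour of not applying.
    return "Apply" if eu_apply(p_accept, u_accepted, u_rejected) > 0 else "Not Apply"


baseline = preferred_act(0.4, 20, -10)       # EU(Apply) = 8 - 6 = 2
new_beliefs = preferred_act(0.2, 20, -10)    # EU(Apply) = 4 - 8 = -4
new_utilities = preferred_act(0.4, 20, -20)  # EU(Apply) = 8 - 12 = -4
```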

This distinction has played a major role in debates about preference change, especially because it limits the types of preference changes that can exist. Therefore, we will return to it later.

While the framework of Savage-type decision theory is useful for many purposes, and we will draw on it accordingly, it unduly restricts the discussion of preference change.Footnote 13

First, Savage-type decision theory precludes all discussions of preference changes over alternatives for which the framework does not assign a preference order. For example, there are no preferences over states of the world. Such a limitation does not seem psychologically required. For example, you might prefer that a university be disposed to accept you, even if the question of applying is left out of the picture. It is not obviously wrong to want the world to be a certain way, even if it being this way does not affect any choices or outcomes.

Second, preference change is limited by the dependence relations postulated by Savage’s framework. Specifically, the framework requires the utility of consequences to be independent of both the state of the world in which they are realised and the act that brings them about. The preferences over acts derive from the preferences over consequences (and the probabilities assigned to states) and not vice versa. However, at least at first glance, a consequence might become more appealing by virtue of the act that leads to it. For example, one might pursue another university degree because one has come to prefer studying to working in industry. A preference change of this sort would be ruled out by the framework, at least as long as one describes studying as an act.

While one can often solve such issues by re-describing the decision problem (i.e. by specifying its ontology differently), it can become unwieldy or unintuitive to do so. In such cases, the decision theory developed by Richard Jeffrey and Ethan Bolker is often more appropriate.

Jeffrey-Type Decision Theory

The Jeffrey–Bolker framework is very flexible because it takes propositions as its basic elements rather than consequences, states, and acts. Therefore, preferences in this framework describe which propositions an agent would rather see realised. For example, an agent might prefer the proposition that the university is disposed to accept an application over the proposition that the university is not disposed to accept an application. Since propositions can describe acts, states of the world, and consequences alike, the result is a more general theory of decision-making.Footnote 14

Furthermore, instead of a utility function and an EU function, Jeffrey-type decision theory introduces what is called a desirability function, which maps propositions to real numbers. The differences between the desirability and standard utility functions can be relatively subtle. In the standard interpretation of utility, a high utility indicates that the consequence is highly wanted or, in the case of EU, that the act is highly choice-worthy. Desirability, however, is often interpreted as an indicator of news value: the more the news of a certain proposition being true is wanted, the higher the proposition’s desirability.Footnote 15 Therefore, the desirability of a logical truth is zero, since such a proposition being true cannot be news (for a logically omniscient agent).
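One standard way to make the news-value reading concrete on a finite model is to treat a proposition as a set of possible worlds and its desirability as the probability-weighted average value of its worlds, shifted so that the tautology scores zero. The sketch below assumes this construction, with two hypothetical worlds and invented values; it illustrates the normalisation rather than reproducing the Jeffrey–Bolker axiomatisation itself.

```python
from fractions import Fraction

# Two hypothetical worlds with credences and (invented) values.
credence = {"disposed_accept": Fraction(2, 5), "disposed_reject": Fraction(3, 5)}
value = {"disposed_accept": 20, "disposed_reject": -10}


def average_value(worlds):
    """Probability-weighted average value of the worlds in a proposition."""
    mass = sum(credence[w] for w in worlds)
    return sum(credence[w] * value[w] for w in worlds) / mass


def desirability(proposition):
    """Desirability normalised so that the tautology (all worlds) gets 0."""
    return average_value(proposition) - average_value(credence.keys())


tautology = set(credence)
```

Here the news that the university is disposed to accept has desirability 18 and the news that it is not has desirability −12, so the first proposition is preferred to the second, while the tautology, which brings no news, has desirability 0.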

Unlike the Savage-type framework, Jeffrey’s framework has only one desirability function and, therefore, nothing corresponding to the distinction between the utility and EU functions (see Bradley and Stefánsson (2017: 492–493) for an interesting interpretation of desirability measures). This result also follows from the unified ontology of propositions.

Despite these differences between utility and desirability, the two types of function can nonetheless be treated as largely equivalent for our purposes, because the formulas connecting preferences and utility also apply to the desirability function. More particularly, writing v(·) for the desirability function, it is the case that

$$A \succcurlyeq B \leftrightarrow v(A) \geq v(B)$$

and so on for strict preference and indifference.

Generally, we try as much as possible to adhere to the vocabulary of preferences instead of the utility or desirability function. However, since the literature on preference change also frequently uses these functions, we will use them whenever it simplifies the overall presentation.

Following these introductions, we turn to preference change, especially the difference between derived and fundamental preference change.

Derived and Fundamental Preference Change

That some preferences can and do change is hard to dispute. Suppose we offered you a choice between two lottery tickets, one in our left hand and one in our right. You have to choose between these two tickets, and we will give you whichever one you choose. Presumably, you will be largely indifferent between these two options. However, if you learn that the ticket in the left hand is the winning ticket for this week’s draw, your indifference will dissolve, and you will suddenly develop a clear preference in favour of it.

The process you undergo in this example is an instance of preference change, broadly construed, but this type of preference change has not greatly vexed contemporary decision theorists and philosophers. It is clear that, in this example, information drives the change in preferences. You always wanted a winning lottery ticket; you just did not know from the start that the ticket in the left hand was the winning one. What changed was the information you had, which then affected preferences derived from that information, but your fundamental preferences remained unchanged.
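The lottery example can be sketched as a derived preference computed from fixed fundamental utilities and changing beliefs. The utilities for a winning and a losing ticket (1 and 0) and the prior probability of winning are hypothetical; only the belief changes between the two comparisons, yet the preference over the two tickets goes from indifference to a strict preference for the left one.

```python
# Hypothetical fundamental utilities: these stay fixed throughout.
U_WIN, U_LOSE = 1.0, 0.0


def ticket_value(p_win):
    """Expected utility of holding a ticket believed to win with probability p_win."""
    return p_win * U_WIN + (1 - p_win) * U_LOSE


def derived_preference(p_left_wins, p_right_wins):
    """Preference between the two tickets, derived from beliefs plus fixed utilities."""
    left, right = ticket_value(p_left_wins), ticket_value(p_right_wins)
    if left > right:
        return "left ≻ right"
    if right > left:
        return "right ≻ left"
    return "left ∼ right"


before = derived_preference(1e-6, 1e-6)  # no information about either ticket
after = derived_preference(1.0, 0.0)     # learned that the left ticket wins
```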

We intuitively distinguish between our fundamental preferences and the preferences we derive from them in light of information (Figure 1).

This distinction between the two types of preferences and their relation to beliefs can be found in the literature under many names. Some authors prefer to distinguish intrinsic (i.e. fundamental) from extrinsic (i.e. derived) preferences (e.g. Binmore 2008: 5–6; Spohn 2009). In economics, authors distinguish between endogenous and exogenous preference change.

Both the Savage- and Jeffrey-type decision theories are well equipped to deal with derived preference change, such as in the lottery ticket example. According to Savage-type decision theory, you learn something about the states of the world, namely, that the left ticket is the winning one. This piece of information affects the EU calculation. The fundamental preferences, described by utilities over consequences, remain untouched. In the standard model, all information processing is assumed to be captured by the calculation of EU.

Since Jeffrey-type decision theory does not distinguish between utility and EU, it accounts for new information differently: through conditioning. The simplest and best-known form of conditioning is classical Bayesian conditioning, in which agents update their degrees of belief upon learning that a proposition is true, using their conditional degrees of belief. That is, when one learns some proposition A, the resulting degrees of belief, Q, follow the formula:

$$Q(\cdot) = P(\cdot \mid A)$$

where P(·|A) denotes the previous degrees of belief conditional on the truth of A, which are often calculated using Bayes’ theorem. For example, your initial probability of the left ticket winning might be P(left ticket winning) = 0.001 per cent. However, the conditional probability of the left ticket winning, given that the winning number is 12345, might have been P(left ticket winning|winning number is 12345) = 99.999 per cent, since that is the number you saw on the ticket.Footnote 16 After learning that the winning number is indeed 12345, your new subjective probability function assigns Q(left ticket winning) = 99.999 per cent.
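Conditioning on a finite set of worlds is easy to sketch. The joint distribution below is invented so as to match the numbers in the text, P(left ticket winning) = 0.001 per cent and P(left ticket winning | winning number is 12345) = 99.999 per cent; exact fractions avoid rounding noise. The helper names `prob_of` and `condition` are our own.

```python
from fractions import Fraction

# Invented joint distribution over worlds (left_ticket_wins, winning_number_is_12345).
TOTAL = 10**10
P = {
    (True, True): Fraction(99_999, TOTAL),
    (False, True): Fraction(1, TOTAL),
    (True, False): Fraction(1, TOTAL),
    (False, False): Fraction(TOTAL - 100_001, TOTAL),
}


def left_wins(world):
    return world[0]


def number_is_12345(world):
    return world[1]


def prob_of(event, dist):
    """Probability of an event (a predicate on worlds) under a distribution."""
    return sum(p for w, p in dist.items() if event(w))


def condition(dist, evidence):
    """Classical Bayesian conditioning: Q(w) = P(w | evidence)."""
    p_e = prob_of(evidence, dist)
    return {w: (p / p_e if evidence(w) else Fraction(0)) for w, p in dist.items()}


Q = condition(P, number_is_12345)  # learn that the winning number is 12345
```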

Such new degrees of belief then lead to changes in desirability because of the axiomatic connections between degrees of belief and desirability in Jeffrey’s framework. Therefore, Bayesian conditioning captures many cases of non-fundamental preference change. However, it is not the only form of conditioning. Bradley (2017) provides an excellent overview and discussion of these different forms of conditioning, and we will later turn to one of them as a model of preference change.

In the following, we are concerned with fundamental preference change (i.e. the change of fundamental preferences). In the Savage-type decision theory, such preference change occurs when either (1) the preference ordering of the consequences or (2) the situation (i.e. the states, consequences, and/or acts) changes.

Jeffrey-type decision theory does not directly distinguish between fundamental and derived preferences because all preferences are taken to range over propositions. As mentioned earlier, there is also no distinction between basic and expected desirability. Nonetheless, one can introduce a distinction between more and less fundamental preferences into Jeffrey’s apparatus, as shown in Bradley (2009) and outlined in Appendix A.

Our proposal, which is compatible with the Jeffrey-type decision theory, is that fundamental preferences are those that are mentally more fundamental. In fact, mentalism offers two closely intertwined ways of establishing which preferences are fundamental.

First, one can take a metaphysical perspective and argue that mental states stand in asymmetric metaphysical determination relations to each other. Your preference for the winning over the losing lottery ticket determines your preference for the lottery ticket in the left hand once you know it is the winning one. In the following, we will use the term ‘grounding’ for these metaphysical determination relations, but the specific grounding analysis is not decisive. For our purposes, what matters is that one state determines another in an asymmetric, non-causal manner. A preference state not grounded by any other preference states (together with beliefs) would be fundamental.

Second, one can take a normative perspective and attribute justification relations between preference states. The preference for a lottery win and the information that the lottery ticket in the left hand is the winning ticket justify the preference for the left over the right ticket. In the cases we consider, the justification derives from rationality as captured by decision theory. A preference is then fundamental if and only if it is not justified by any other existing preferences (together with the relevant beliefs). When we are pressed to justify our preferences repeatedly, we reach a point where we claim to just like one alternative more than another, seemingly having reached a normatively fundamental preference.

We make the optimistic assumption that the grounding and the justification relations overlap in a well-functioning mind. This overlap partially occurs because both the grounding and the justification relation depend on the content of the involved mental states. That is, the preference for the winning lottery ticket and the belief that the left ticket is the winning ticket ground and justify the preference for the left lottery ticket by virtue of the content of these mental states.

When this overlap between justification and grounding exists, our derived preferences are grounded in preferences that justify holding them. Descriptive and normative decision theories are in agreement in this case, in that they would lead to the same model of the agent. Therefore, a mismatch can often be considered a failure of rationality.

Different types of mismatch will lead to problems of varying significance. A major problem would arise if a preference were (partially) grounded in its opposite; for example, if A ≻ B were grounded in B ≽ A. Under normal circumstances, one should not prefer watching a movie to reading a book because one prefers reading a book to watching a movie. If such grounding occurred, one would be guaranteed to have inconsistent preferences.

Less serious, but also problematic, would be if one preference state (partially) grounded another while not justifying it (together with the relevant information). Consider the case where a preference for Bowie over Bee Gees grounds your preference for studying mathematics over sociology, despite no information providing the justificatory link between these two preferences. This outcome would be odd, to say the least, and could be considered a local breakdown of rational agency.

Whether a preference state is fundamental varies between individuals. To give an example informed by the history of philosophy, some might prefer to act according to God’s commands because they prefer doing good and believe God to command the good. In contrast, others might prefer to do good because they prefer to act according to God’s commands and believe God to command doing the good. In these two cases, the grounding and justification relations are reversed, but both are plausible mental constellations in which rational agents can find themselves. This example also shows that the content of mental states alone cannot explain the overlap between grounding and justification. There are also psychological facts that fix the direction of fundamentality.

The distinction between fundamental and derived preferences is further muddled by the fact that some preferences appear to be both.Footnote 17 For example, a citizen might fundamentally prefer democracy over authoritarianism while also preferring it because they believe democracy is more effective in combating corruption. Such cases suggest two interpretive possibilities.

First, one could argue that there is one preference that is justified by other preferences and beliefs but would persist without such justification and grounding. The preference for democracy is justified by other beliefs and preferences but robust to their counterfactual absence.

Second, one might postulate two preferences that just happen to share their content. The citizen would have two preferences with the content of preferring democracy over authoritarianism.

The difference in interpretation hinges on the individuation of preference states (i.e. whether to count them based on their content or source). We believe the decision between these options to be partially a matter of psychology. With either interpretation, however, fundamental preferences exist. Therefore, such cases will not pose much of a problem in the following.

The Definition of Fundamental Preference Change

Fundamental preference change occurs if and only if at least one mentally fundamental preference state changes, including cases where a fundamental preference is added or lost. It follows that not all preference change caused by acquiring information is derived preference change. Information acquisition only leads to derived preference change if it exerts its effect via the grounding or justification relations between beliefs and derived preferences. Therefore, showing that a process causing a change in fundamental preference is also a process of information acquisition does not demonstrate that the preference change is derived. For example, an individual who was always outgoing and keen on social events might lose such preferences after receiving a traumatising letter informing them of the death of a loved one. Their preferences change because the acquired information causally affects their fundamental social preferences, not because they are grounded in even more fundamental ones that relate to the information. The letter changes who they are and does not just teach them new facts about the world.

However, derived preference change is always the result of a change in a fundamental preference or in information. In the lottery ticket example, every change is clearly due to the new information about which is the winning ticket. If you then lost your preference for wealth over poverty, this would also explain why your derived preference for the left over the right ticket vanished.
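To make the two routes to derived preference change concrete, the following minimal sketch models the lottery example. It computes a derived preference over tickets from a fundamental preference over outcomes together with a credence about which ticket wins. The expected-value rule, the numbers, and all function names are our own illustrative assumptions, not a model proposed in the text. Changing the credence (new information) and removing the fundamental preference each alter the derived preference.

```python
# Hypothetical toy model of the lottery ticket example: a derived preference
# over tickets computed from a fundamental preference over outcomes plus a
# credence about which ticket wins. All values are illustrative.

def ticket_value(ticket, p_win, outcome_value):
    """Expected value of holding `ticket`, given credences that each ticket wins."""
    p = p_win[ticket]
    return p * outcome_value["wealth"] + (1 - p) * outcome_value["poverty"]


def derived_preference(a, b, p_win, outcome_value):
    """True if the agent strictly prefers ticket a to ticket b."""
    return ticket_value(a, p_win, outcome_value) > ticket_value(b, p_win, outcome_value)


wealth_over_poverty = {"wealth": 1.0, "poverty": 0.0}  # fundamental preference
no_preference = {"wealth": 0.0, "poverty": 0.0}        # fundamental preference lost

before_news = {"left": 0.5, "right": 0.5}
after_news = {"left": 1.0, "right": 0.0}               # the left ticket wins

print(derived_preference("left", "right", before_news, wealth_over_poverty))  # False
print(derived_preference("left", "right", after_news, wealth_over_poverty))   # True
print(derived_preference("left", "right", after_news, no_preference))         # False
```

The second line reflects a change in information. The third reflects a change in the fundamental preference while the information stays fixed.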

Having laid the conceptual foundation, we turn to the question of whether such fundamental preference change exists and is not merely a conceptual possibility.

Denial of Fundamental Preferences

The existence of fundamental preference change depends on the existence of fundamental preferences. In response to Binmore’s notion of intrinsic preferences, which play a similar role to fundamental preferences in our discussion, Bradley (2017: 24) suggested denying that such preferences exist. However, Binmore’s intrinsic preferences also have to fulfil what he calls the Aesop principle: ‘Preferences, beliefs and assessments of what is feasible should all be independent of each other’ (see Bradley 2017: 23, which slightly simplifies Binmore 2008: 5).

The exact nature of the independence – whether it is probabilistic, causal, explanatory, or any other form of independence – is not entirely clear. However, according to Bradley, Binmore asserted that ‘[a] preference for one thing over another is intrinsic …if nothing we can learn would change it’ (Bradley 2017: 24).Footnote 18

However, denying such information-resistant intrinsic preferences poses no problem in investigating and modelling fundamental preference change. As already mentioned, we expect fundamental preferences to be affected by learning new information. Consider again the case of the individual who loses their preferences for social entertainment due to receiving a traumatising letter informing them of the death of a loved one. Their original preferences cannot be intrinsic in the sense of Binmore since they were affected by information acquisition. However, they are fundamental in the sense used by us (i.e. they are mental states that are not further justified or grounded by other preference states).

One might, however, also be tempted to deny the existence of fundamental preferences as described by us. Bradley’s discussion of Binmore suggests at least one reason for doing so:

Being wealthy, attractive and in good health are no doubt all things that we might desire under a wide range of circumstances, but not in circumstances when those arouse such envy that others will seek to kill us or when they are brought about at great suffering to ourselves or others. Even rather basic preferences, such as for chocolate over strawberry ice cream, are contingent on beliefs.

This passage can be read in two ways: one that does not threaten our project and one that does. On first reading, Bradley’s examples suggest that whatever preferences human agents have, they are limited to specific situations, and in other situations, agents will exhibit different preferences. However, that is entirely compatible with our project since we argue that fundamental preferences are subject to change. In some situations, an agent will have a fundamental preference for chocolate over strawberry ice cream, but in other situations, they will no longer do so after a preference change.

On the second reading, our preferences are always already conditional on other preferences and beliefs that we can ascribe to human agents. That is, not only would human agents have different preferences when faced with other situations, but all their preferences already depend on further preferences and beliefs that ground or justify them. We are committed to denying this and will provide two arguments: one based on the structure of preferences and one based on everyday discourse.

We can think of preferences as forming a network related by grounding and justifying relations. For no fundamental preferences to exist, the network must include at least one cycle or be infinite. Endorsing an infinite set of preferences conflicts with the assumed cognitive reality of preferences. Assuming that preferences are mental states, they have to be realised and stored in some form, and the human cognitive system only has a finite storage capacity.Footnote 19
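The structural point can be checked on small examples. In a finite network without cycles, at least one preference is grounded by no other preference. The sketch below represents a grounding network as a mapping from each preference to the preferences that ground it. It uses Python’s standard `graphlib` module (Python 3.9+) to detect cycles. The labels and the two example networks are hypothetical, and beliefs are omitted for simplicity.

```python
from graphlib import TopologicalSorter, CycleError


def fundamental(grounds):
    """Preferences not grounded by any other preference.

    `grounds` maps each preference to the set of preferences that ground it.
    """
    nodes = set(grounds) | {g for parents in grounds.values() for g in parents}
    return {n for n in nodes if not grounds.get(n)}


def is_circular(grounds):
    """True if the grounding relation contains a cycle."""
    try:
        # static_order() raises CycleError when no topological order exists
        list(TopologicalSorter(grounds).static_order())
        return False
    except CycleError:
        return True


acyclic = {
    "left ticket > right ticket": {"wealth > poverty"},
    "philosophy > finance": {"thought > money"},
}
circular = {
    "philosophy > finance": {"thought > money"},
    "thought > money": {"philosophy > finance"},
}

print(sorted(fundamental(acyclic)))  # ['thought > money', 'wealth > poverty']
print(fundamental(circular))         # set()
print(is_circular(circular))         # True
```

Only the circular network lacks a fundamental preference, which matches the claim that, for a finite network, cycles are the only way to avoid fundamental preferences.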

Cycles are cognitively more plausible but still controversial if the relations between preferences are those of grounding and justification, which are commonly assumed to be non-cyclical. Cycles would be much more plausible if the relevant relations between preference states were those of counterfactual dependence: if I did not have these preferences, I would not have this other one. However, grounding and justification are the defining relations in our conception, and cycles create conceptual worries for these relations.

Grounding, for example, is often made intuitive using the notion of fundamentality, but this intuitive connection would be lost if grounding allowed for cycles. How could the grounding fact be more fundamental than the grounded fact if the relationship could be reversed?Footnote 20

The use of circular justification resembles a fallacious form of circular reasoning. In everyday discourse, it is at least odd to justify one’s preference for a career in philosophy over a career in finance with a preference for thought over money and then, upon further inquiry, to justify the latter preference with a preference for a career in philosophy over a career in finance. It is more natural for the chain of justification to end with a preference that is not justified further than for it to loop back on itself (but see Harman 1986: chapter 4 for a different view).

In addition to this argument about the structure of preferences, there is the fact that in everyday life, we often appeal to brute preferences, especially regarding tastes. Rory just prefers the fragrance of old books to that of Chanel No. 5. We might be able to describe the causes of her preference, pointing to formative experiences, such as the joyful reading in her childhood of a second-hand copy of Pippi Longstocking, but this falls short of deriving her preference. No other preferences exist that justify or ground this preference for the fragrance of books (cf. Binmore 2008: 5–6), even though a ceteris paribus clause might constrain the situations over which it ranges.

We have outlined two arguments for the existence of fundamental preferences, and in the following sections, we will simply assume their existence. However, not all would be lost even if we had failed to convince. Without fundamental preferences, a closely related notion remains available: desire-driven attitude change (see Bradley 2017: 209–11). Denying fundamental preferences leads to a view of attitudes as a connected network. This network can change due to changes in belief attitudes within it, changes in desire attitudes, or both. Desire-driven attitude change occurs when a change to desire attitudes leads to a change in the network. The standard decision theory models, which fail to account for fundamental preference change, also do not allow for desire-driven attitude change. Much of our presentation could be reformulated in terms of desire-driven attitude change.Footnote 21

Explaining Preference Change Away

While most decision theorists tend to accept the existence of fundamental preferences, they have long been tempted to explain away fundamental preference change. Economists have especially tended in that direction. Avoiding fundamental preference change would make decision theory simpler and, thereby, more elegant. Economists, who tend to follow the Savage-type theory, could abide by the simple table of consequences, states, and acts and the basic preference orderings over the consequences. Such simplicity is not to be given up lightly.

One of the most influential attempts to explain away preference change can be found in the work of Stigler and Becker (1977). Their ambitious paper De Gustibus Non Est Disputandum (there is no disputing about tastes) suggests that there are no cases of fundamental preference change and that fundamental preferences are universal. The argumentative strategy is to suggest that ‘no other approach of comparable generality and power is available’ (Stigler and Becker 1977: 77) and then to deal with some of the biggest challenges to the assumption of stable and universal preferences, including addiction and fashion.

Discussing the case of heroin addiction, Stigler and Becker (1977: 80) propose that there exists an underlying commodity called ‘euphoria’ that can be produced with input from heroin. To model addiction, one can then assume that

1. the consumption of euphoria reduces what, in economic terms, would be the future stock of euphoria capital, raising the costs of producing euphoria in the future;

2. the demand for euphoria is sufficiently inelastic that heroin use would increase over time.

With these assumptions, an economic model of addiction behaviour can be created without postulating fundamental preference change.
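The two assumptions can be turned into a small simulation in which preferences never change but heroin use still rises. The functional forms here are our own hypothetical choices, not Stigler and Becker’s specification. Producing one unit of euphoria is assumed to require 1/capital units of heroin. Each unit of euphoria consumed is assumed to shrink the stock of euphoria capital by a fixed fraction. Demand is treated as perfectly inelastic, which is the extreme case of the inelasticity they assume.

```python
def heroin_inputs(periods, euphoria=1.0, capital=1.0, depletion=0.2):
    """Heroin used per period by an agent with fixed preferences.

    Hypothetical assumptions, not Stigler and Becker's specification:
    - one unit of euphoria requires 1 / capital units of heroin, so a smaller
      stock of euphoria capital makes euphoria more costly to produce;
    - consuming euphoria shrinks the capital stock by a fixed fraction per unit;
    - demand is perfectly inelastic: the agent consumes the same euphoria
      every period, whatever its cost.
    """
    if not 0 <= depletion * euphoria < 1:
        raise ValueError("depletion * euphoria must lie in [0, 1)")
    inputs = []
    for _ in range(periods):
        inputs.append(euphoria / capital)    # heroin needed this period
        capital *= 1 - depletion * euphoria  # consumption depletes the stock
    return inputs


path = heroin_inputs(5)
print([round(h, 3) for h in path])  # [1.0, 1.25, 1.562, 1.953, 2.441]
```

The rising heroin input is driven entirely by the rising cost of producing a fixed quantity of euphoria, so no change in tastes is needed to produce escalating use.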

Fashions are another candidate for preference change that might bother economists. Individuals who preferred a suit jacket with shoulder pads in the 80s no longer do so, even though, at first glance, it is hard to see what new relevant information they would have acquired. The visual experience was presumably the same back then as it is now. So, one might think that what has changed is the basic taste for certain visual experiences over others, perhaps simply because we have become accustomed to them. However, Stigler and Becker again suggest that the behaviour towards fashion is best explained by a preference for an underlying commodity, in this case style as a form of social distinction. In order to achieve this form of distinction, fashion items must be new and ‘the newness must be of a special sort that requires a subtle prediction of what will be approved novelty’ (Stigler and Becker 1977: 88). Therefore, fashion will be subject to change, and being fashionable (i.e. achieving social distinction via fashion) requires skill and effort.

With these and other examples, Stigler and Becker show how fundamental preference change can be explained away, in that a stable-preference model of the phenomenon can be found. However, showing that such stable-preference models are available is not quite the same as showing that they should be used to explain away preference change. As usual, many models with mutually inconsistent interpretations will fit the same data, so we must consider their plausibility and various epistemic virtues. Therefore, to challenge Stigler and Becker’s approach, one can point either to cases where a model with preference change is more plausible due to known causal paths or to cases where a model with preference change enjoys other epistemic virtues to at least the same degree as a stable-preference model.

To illustrate how a preference change model might be more plausible due to known causal pathways, consider Stigler and Becker’s heroin example again. We know that drugs such as heroin interfere with the normal functioning of the human neural system. Given the assumption that preferences are mental states realised by the neural system, there is a clear causal pathway of how heroin might affect fundamental preferences. A substrate that grounds preferences is affected by its consumption, sometimes to such an extent that it would be surprising if no fundamental preferences were affected. So, at least for those accepting the mentalist conception of preferences, there are good reasons to believe that fundamental preference change occurs in cases involving marked changes to the neural system.

Heroin consumption is not the only event where we have good reasons to believe that there is an effect on the neural substrate of preferences. Many of these events, such as sleep deprivation and brain damage, may appear deviant (i.e. as an aberration from proper functioning). However, humans also undergo events during a healthy life that might affect their fundamental preferences. For example, one might expect the physical maturation of children and pregnancy to causally affect the realisers of preferences. Any stable-preference model that deals with such instances would also have to provide arguments as to why the common assumption of causal effects is wrong, something Stigler and Becker do not provide.Footnote 22

Having discussed how known causal pathways might support preference change models, we now consider other epistemic virtues that might support preference change. As quoted towards the beginning of this section, Stigler and Becker (1977: 77) have suggested that ‘no other approach [than that of stable and universal tastes] of comparable generality and power is available’. However, taken at face value, this claim is wrong. Generality and power on their own do not appear to favour a model with stable preferences over a model with changeable preferences. After all, models with changing preferences are (typically) a generalisation of models with stable preferences. To be charitable, one should not take the claim of Stigler and Becker at face value. Instead, their point must be that models with stable preferences have sufficient generality and power to explain all phenomena while being simpler and avoiding ad hoc attributions of preference change.

It is undoubtedly true that adding preference change can complicate models, but sometimes the efforts to avoid preference change also lead to considerable complications. In the case of both addiction and fashion, Stigler and Becker had to introduce some underlying object of interest – euphoria or social distinction – in addition to further assumptions, such as an inelastic demand for euphoria. At least in some cases, the required assumptions will be so complex that a model attributing preference change is simpler. To quote Bradley’s response to Stigler and Becker: ‘The sorts of suppositions that will need to be made about changes to underlying beliefs in order to preserve the invariance of tastes may well be as ad hoc as the assumptions about taste changes that they are supposed to replace and may be no more constrained by the empirical evidence’ (Bradley 2009: 239).

The accusation of making ad hoc assumptions cuts both ways, and sometimes the assumptions required to vindicate stable tastes might be even more ad hoc than the attribution of changing preferences. Therefore, an appeal to epistemic virtues alone does not vindicate stable-preference models.

This argument against the position taken by Stigler and Becker can be generalised to work against a strategy for avoiding the attribution of fundamental preference change (described and criticised by Dietrich and List 2013b: 627–28). This strategy has two steps:

1. The first introduces a sufficiently fine ontology of alternatives over which fixed preferences are taken to range.

2. The second then explains away any apparent examples of fundamental preference change as the result of information acquisition.

Consistent with this general strategy, Stigler and Becker can also be understood as fine-graining alternatives. For example, they fine-grain the choice between fashion items by introducing social distinction to the goods that matter and then explain away preference change as a change in information about social distinction. What has changed is not a fundamental preference relation that ranges over alternatives such as suits with and without extravagant shoulder pads, but the information about which of those suits contribute to (positive) social distinction.
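The fine-graining move can be illustrated with a minimal sketch. The agent’s fundamental utilities range over fine-grained outcomes (social distinction or its absence) and never change. Only the agent’s credence about which suit confers distinction changes. The derived preference over suits nevertheless reverses. The expected-utility rule, the utilities, and the credences are hypothetical values chosen for illustration.

```python
# Fixed (hypothetical) utilities over fine-grained outcomes: what the agent
# cares about is social distinction, not shoulder pads as such.
UTILITY = {"distinction": 1.0, "no distinction": 0.0}


def suit_value(suit, credence):
    """Expected utility of wearing `suit`, where credence[suit] is the agent's
    credence that the suit confers social distinction."""
    p = credence[suit]
    return p * UTILITY["distinction"] + (1 - p) * UTILITY["no distinction"]


def preferred_suit(credence):
    """The suit with the highest expected utility."""
    return max(credence, key=lambda suit: suit_value(suit, credence))


credence_1980s = {"shoulder pads": 0.9, "no shoulder pads": 0.2}
credence_today = {"shoulder pads": 0.05, "no shoulder pads": 0.6}

print(preferred_suit(credence_1980s))  # shoulder pads
print(preferred_suit(credence_today))  # no shoulder pads
```

`UTILITY` is identical in both periods. The apparent change of taste is represented entirely as a change in information, which is what the two-step strategy requires.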

A general problem with this strategy becomes apparent at this higher level of abstraction. Any preference change would have to satisfy the constraints of Bayesian information learning, including dynamical consistency (see Dietrich and List 2013b: 628). While one might be able to meet those constraints by fine-graining alternatives and making more assumptions about the agent’s situation, the resulting model might very well be less appealing than one that includes preference change. Therefore, Dietrich and List end their discussion of this strategy with a similar conclusion to Bradley:

Interpretationally, the main cost of remodelling every preference change in informational terms would be a significant expansion of the ontology over which the agent would have to hold beliefs and preferences. This is a cognitively demanding model of an agent, which does not seem to be psychologically plausible. We would preserve rational choice theory’s parsimony with respect to the assumption of fixed preferences only at the expense of sacrificing parsimony with respect to the cognitive complexity ascribed to the agent.

Explaining away fundamental preference change in all cases is a strategy unlikely to succeed. The epistemic virtues of a stable-preference theory do not cover its costs. Nonetheless, those convinced by the reality of fundamental preference change, including your humble authors, are well-advised to consider explanatory strategies such as those of Stigler and Becker. It can be all too easy to postulate preference change to explain whatever puzzle is at hand, especially without any rigorous preference change models. Someone convinced by the ubiquity of fundamental preference change might have failed to consider the role of social distinction in fashion, as described by Stigler and Becker. Identifying the exact limits of these different types of models is a philosophically interesting challenge. They might all make the same predictions about behavioural data but differ profoundly in their description of human agency.

Furthermore, in 1977, Stigler and Becker could have justifiably claimed that no models incorporating preference change had reached the level of sophistication and rigour achieved by stable-preference models. As we will see, the situation has improved since then, even though models of fundamental preference change remain underdeveloped. However, the solution is not to avoid preference change attribution and add increasingly dubious assumptions to stable-preference models but to develop models of preference change. Philosophical work remains to be done in laying the conceptual and theoretical foundations for such models. We turn to this project in the next chapter.

2 Models of Preference Change

Two Input-Assimilation Models of Preference Change

So far, we have introduced the nature of preferences and decision theory and defended the existence of fundamental preference change. We now turn to specific models of preference change.

Conceptually, we can distinguish between normative and descriptive models of preference change. While normative models prescribe how individuals’ preferences should change over time, descriptive models describe how preferences do change over time (without assuming that they should change optimally).Footnote 23 However, many models of preference change involve both descriptive and normative aspects and are, in fact, mixed models.

To illustrate, consider one of the earliest formal models of preference change, proposed by Cohen and Axelrod (1984). They outlined a dynamic model in which an agent adopts a policy for action, observes the results of this policy, updates their beliefs and preferences in light of those results, and then implements a correspondingly changed policy. Setting aside its further details, it is striking how their model combines descriptive and normative aspects. It makes the descriptive proposal that surprise causes preference change,Footnote 24 but it is also normative because a preference-changing agent performs better in maximising an output than a static agent, at least for an extensive range of parameters. Given that arguments in decision theory often take the form that achieving more is better than less (if you are so smart, why aren’t you rich?), Cohen and Axelrod’s (1984) approach also makes a normative case for their specific version of preference change.

In this chapter, we will see that more recent models of preference change, including our own proposal, also incorporate both normative and descriptive assumptions. We start with two comparable approaches, one based on preference logic and one based on Jeffrey-type Bayesian decision theory. Both types of approach produce ‘input-assimilation’ models and answer the following question (Hansson 1995: 2, 2001: 43):Footnote 25 given that one or more preferences have locally changed (input), how can the overall preference ordering of the agent be updated (assimilated)? Such models are normative, in that they prescribe that an agent should strive to maintain consistency in their preferences over time and rule out certain types of preference changes as irrational. However, descriptive considerations will also become apparent.

Preference-Logic Models of Preference Change

Multiple preference logic-based approaches to preference change exist, but we focus on an AGM-based model.Footnote 26 The AGM model was originally a model of belief change and is named after three eminent researchers, Alchourrón, Gärdenfors, and Makinson (Alchourrón et al. 1985). Only later were its ideas used to model preference change.

Hansson and Grüne-Yanoff have offered some of the most prominent applications of the AGM model to preference change (Hansson 1995; Grüne-Yanoff and Hansson 2009; Grüne-Yanoff 2013).Footnote 27 These applications are based on the formal preference relations discussed in chapter 1, which are incorporated into propositional logic without addressing uncertainty, unlike the Savage- and Jeffrey-type decision theories.

We start with a set of alternatives, A, which might, for example, be a set of book genres over which an agent has preferences:

A = {SciFi, Crime, Fantasy}

In this context, we can represent a preference relation R as a set of tuples, each comprising two alternatives that are compared. For example, if Lane prefers science fiction (SciFi) to crime and fantasy literature and also prefers crime to fantasy literature, then her preference ranking can be represented as follows:

R = {⟨SciFi, SciFi⟩, ⟨Crime, Crime⟩, ⟨Fantasy, Fantasy⟩, ⟨SciFi, Crime⟩, ⟨SciFi, Fantasy⟩, ⟨Crime, Fantasy⟩}

Each tuple ranks the first option at least as highly as the second. In this example, Lane is shown to have a reflexive and transitive weak preference relation.
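The set-of-tuples representation translates directly into code, which makes it easy to check properties such as reflexivity and transitivity mechanically. In the sketch below, Lane’s ranking (SciFi over crime over fantasy) is encoded as a Python set of pairs, where a pair (x, y) reads ‘x is at least as good as y’. The function names are our own, and strict preference is defined in the usual way from the weak relation.

```python
from itertools import product

GENRES = {"SciFi", "Crime", "Fantasy"}

# Lane's weak preference relation: a pair (x, y) reads "x is at least as good as y".
R_LANE = {
    ("SciFi", "SciFi"), ("Crime", "Crime"), ("Fantasy", "Fantasy"),
    ("SciFi", "Crime"), ("SciFi", "Fantasy"), ("Crime", "Fantasy"),
}


def is_reflexive(R, alternatives):
    return all((x, x) in R for x in alternatives)


def is_transitive(R):
    # whenever x ≽ y and y ≽ z, require x ≽ z
    return all((x, z) in R for (x, y), (y2, z) in product(R, R) if y == y2)


def is_complete(R, alternatives):
    return all((x, y) in R or (y, x) in R for x, y in product(alternatives, repeat=2))


def strictly_prefers(R, x, y):
    """Strict preference x ≻ y: x ≽ y holds but y ≽ x does not."""
    return (x, y) in R and (y, x) not in R


print(is_reflexive(R_LANE, GENRES), is_transitive(R_LANE))  # True True
print(strictly_prefers(R_LANE, "SciFi", "Fantasy"))         # True
print(is_transitive(R_LANE - {("SciFi", "Fantasy")}))        # False
```

Dropping a single tuple breaks transitivity. This shows how easily the representation accommodates non-standard preferences.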

Such a preference relation R then renders valid a set of preference sentences [R] similar to the semantics of propositional logic (see again Hansson 1995: 8; Grüne-Yanoff 2013: 2626). For example, the tuple ⟨SciFi, Fantasy⟩ validates the proposition that Lane weakly prefers SciFi to Fantasy (i.e. the preference sentence ‘SciFi ≽ Fantasy’). Expressed formally:

SciFi ≽ Fantasy ∈ [R] if and only if ⟨SciFi, Fantasy⟩ ∈ R

The absence of a preference sentence, represented by the variable α, from the set of sentences validates its negation:

¬α ∈ [R] if and only if α ∉ [R]

Hansson and Grüne-Yanoff also used the connectives of propositional logic (i.e. conjunction, disjunction, and the material conditional). The formal description of conjunction would be:

α ∧ β ∈ [R] if and only if α ∈ [R] and β ∈ [R]

In essence, the basic preference sentences express a simple (weak) preference for one alternative over another and further sentences are constructed using negation and standard logical connectives.
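The recursive definition of [R] can be written as a small evaluator. The encoding of sentences as nested tuples is our own hypothetical choice. The clauses follow the text: an atomic sentence x ≽ y holds if the pair is in R, negation holds when the sentence is absent, and the remaining connectives are read as in propositional logic.

```python
# Lane's relation again, as a set of pairs (x, y) meaning x ≽ y.
R_LANE = {
    ("SciFi", "SciFi"), ("Crime", "Crime"), ("Fantasy", "Fantasy"),
    ("SciFi", "Crime"), ("SciFi", "Fantasy"), ("Crime", "Fantasy"),
}


def validates(R, sentence):
    """True if relation R validates `sentence`, i.e. the sentence is in [R].

    Sentences are nested tuples (our own encoding):
      ("pref", x, y)        x ≽ y
      ("not", a)            ¬a
      ("and", a, b)         a ∧ b
      ("or", a, b)          a ∨ b
      ("implies", a, b)     a → b (material conditional)
    """
    op, *args = sentence
    if op == "pref":
        return tuple(args) in R
    if op == "not":
        return not validates(R, args[0])
    if op == "and":
        return validates(R, args[0]) and validates(R, args[1])
    if op == "or":
        return validates(R, args[0]) or validates(R, args[1])
    if op == "implies":
        return not validates(R, args[0]) or validates(R, args[1])
    raise ValueError(f"unknown connective: {op!r}")


scifi_over_fantasy = ("pref", "SciFi", "Fantasy")
fantasy_over_scifi = ("pref", "Fantasy", "SciFi")
print(validates(R_LANE, scifi_over_fantasy))                               # True
print(validates(R_LANE, ("not", fantasy_over_scifi)))                      # True
print(validates(R_LANE, ("and", scifi_over_fantasy, fantasy_over_scifi)))  # False
```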

Representing a preference relation as a set of tuples and the associated sentences allows for incomplete, intransitive, and further unusual preferences. However, despite this flexibility, Hansson and Grüne-Yanoff consider such a set of tuples insufficient to represent certain plausible states of mind. Specifically, agents might be in a state where they hold one or another preference but have not settled on one yet.

Consider a case in which the agent knows that they prefer either The Dispossessed (D) or The Lord of the Rings (LotR) to Death on the Nile (DotN) but cannot remember which. Here, the preference sentence D ≻ DotN ∨ LotR ≻ DotN holds, but neither D ≻ DotN nor LotR ≻ DotN holds.Footnote 28 A single preference relation cannot represent this state: it validates a disjunction only if it validates at least one disjunct. To solve this problem, Hansson introduced the preference model R, a set of preference relations, and stipulated that a preference sentence holds for R if and only if every relation in R validates it. In the case of the three books, R might consist of two preference relations, R1 and R2, where the first validates the first disjunct (D ≻ DotN) and the second validates the second (LotR ≻ DotN).
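A minimal sketch shows how a set of relations lets the disjunction hold while neither disjunct does. We use our own tuple encoding of sentences, with only the two clauses the example needs, strict preference and disjunction. The relations R1 and R2 are assumed to contain only the reflexive pairs plus the one comparison each is meant to validate.

```python
def validates(R, sentence):
    """Whether a single relation R validates a sentence (our own tuple encoding)."""
    op, *args = sentence
    if op == "strict":  # x ≻ y: x ≽ y but not y ≽ x
        x, y = args
        return (x, y) in R and (y, x) not in R
    if op == "or":
        return any(validates(R, a) for a in args)
    raise ValueError(f"unknown connective: {op!r}")


def holds(model, sentence):
    """A sentence holds for a preference model (a set of relations)
    if and only if every relation in the model validates it."""
    return all(validates(R, sentence) for R in model)


books = ("D", "LotR", "DotN")
reflexive = {(b, b) for b in books}
R1 = reflexive | {("D", "DotN")}     # validates D ≻ DotN
R2 = reflexive | {("LotR", "DotN")}  # validates LotR ≻ DotN
model = [R1, R2]

d_first = ("strict", "D", "DotN")
lotr_first = ("strict", "LotR", "DotN")
print(holds(model, ("or", d_first, lotr_first)))        # True
print(holds(model, d_first), holds(model, lotr_first))  # False False
```

Each relation on its own behaves classically. The non-classical behaviour of disjunction only appears at the level of the model.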

The formal apparatus allowed Hansson (1995: 10, 2001: chapter 4) to describe four elementary types of preference change:

1. Revision: a new preference sentence (e.g. A ≽ B) is included in the preference model R.

2. Contraction: a preference sentence is lost from the preference model R.

3. Addition: a new alternative becomes available for the preference model R.

4. Subtraction: an alternative for the preference model R is lost.

Hansson provides a complete description of these types, but we will limit ourselves to a largely informal discussion of how to make the four types of preference change specific. After all, there are many ways to include a sentence in a preference model.

To visualise the problem, consider a case where an agent neither weakly prefers the movie Totoro to Bambi nor vice versa. Assume that their preference model R includes only the preference relations R1 and R2, where the first validates only Totoro ≽ Bambi and the second only Bambi ≽ Totoro, so that neither validates both. If the agent undergoes a revision by which Totoro ≽ Bambi comes to hold for them, then the second relation (R2) has to change. It could, however, change in either of two ways:

Totoro ≽ Bambi is simply added to what R2 validates, in which case R2 would specify indifference between the two movies.

Or, in addition, the earlier Bambi ≽ Totoro is removed from R2, in which case the agent represented by R now strictly prefers Totoro.

The description given so far does not settle which version is correct.

To make the four types of preference change specific, Hansson suggests that the preference model after the change should be maximally similar to the model before it. Put differently: the change should be conservative. This move requires a notion of distance. A simple one counts how many preference sentences are true for the first model but not the second and vice versa, and then adds the two counts together. The maximally similar model is the one for which this sum is smallest.
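The simple counting distance can be applied to the Totoro and Bambi example. In this sketch, we count only atomic sentences x ≽ y with x ≠ y and leave out reflexive pairs, which never change. Each negated sentence flips together with its atom, so including negations would double both distances without changing which candidate is closer. Restricting the count to atomic sentences is our assumption, and the sketch is not Hansson’s exact metric. Only R2 is compared because R1 already validates the input sentence and need not change.

```python
from itertools import product


def atomic_sentences(R, alternatives):
    """Atomic sentences x ≽ y (x != y) validated by R, written as pairs."""
    return {(x, y) for x, y in product(alternatives, repeat=2) if x != y and (x, y) in R}


def distance(R_old, R_new, alternatives):
    """Sentences true for one relation but not the other, counted both ways and added."""
    old = atomic_sentences(R_old, alternatives)
    new = atomic_sentences(R_new, alternatives)
    return len(old - new) + len(new - old)


def most_similar(R_old, candidates, alternatives):
    """The candidate relation at the smallest distance from R_old."""
    return min(candidates, key=lambda R: distance(R_old, R, alternatives))


movies = ("Totoro", "Bambi")
R2 = {("Bambi", "Totoro")}                 # validates only Bambi ≽ Totoro
indifference = R2 | {("Totoro", "Bambi")}  # first option: add Totoro ≽ Bambi
strict_totoro = {("Totoro", "Bambi")}      # second option: also drop Bambi ≽ Totoro

print(distance(R2, indifference, movies))   # 1
print(distance(R2, strict_totoro, movies))  # 2
print(most_similar(R2, [strict_totoro, indifference], movies) == indifference)  # True
```

Under this particular count, the conservative revision leaves the agent indifferent between the two movies rather than strictly preferring Totoro.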

However, Hansson (1995: 12) suggests a slightly more complex distance metric, under which some preferences give way before others. Specifically, he proposes that the revision operation includes a so-called priority index that specifies alternatives that are ‘loosened’ during the revision. When multiple ways to undergo preference change are open, changes concerning the loosened alternatives should take priority. For example, when Lane re-reads The Lord of the Rings and concludes that it is better than Dune, her experience might loosen her preferences over SciFi and Fantasy books but not those over travel guides. On Hansson’s account, this means that if a change in Lane’s preferences over genre novels can help avoid a change in her preferences over travel guides, then the former change will occur. Her preferences over genre novels have been loosened and so should have priority in change.
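One way to capture this priority is a lexicographic ranking of candidate revisions. The ranking first minimises changes to sentences involving non-loosened alternatives and only then minimises the total number of changes. This lexicographic rule is our hypothetical reading of ‘priority’, not Hansson’s formal definition. Candidate revisions are represented simply as the sets of sentences they change. The titles ‘Foundation’ and ‘Earthsea’ and the placeholders ‘Guide A’ and ‘Guide B’ are illustrative assumptions.

```python
def touches_protected(change, loosened):
    """True if a changed sentence (x, y) involves an alternative that is not loosened."""
    x, y = change
    return x not in loosened or y not in loosened


def priority_key(changes, loosened):
    """Rank a candidate revision (a set of changed sentences): first by changes to
    non-loosened alternatives, then by the total number of changes."""
    protected = sum(touches_protected(c, loosened) for c in changes)
    return (protected, len(changes))


def choose_revision(candidates, loosened):
    return min(candidates, key=lambda changes: priority_key(changes, loosened))


# Hypothetical candidates; both include the input sentence LotR ≽ Dune.
loosened = {"LotR", "Dune", "Foundation", "Earthsea"}
adjust_genre_novels = {("LotR", "Dune"), ("LotR", "Foundation"), ("Earthsea", "Dune")}
adjust_travel_guides = {("LotR", "Dune"), ("Guide A", "Guide B")}
candidates = [adjust_travel_guides, adjust_genre_novels]

print(min(candidates, key=len) == adjust_travel_guides)              # True
print(choose_revision(candidates, loosened) == adjust_genre_novels)  # True
```

A plain count of changes would pick the smaller revision that touches the travel guides. The priority ordering instead accepts more changes among the loosened genre novels in order to leave the travel guides untouched.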

The general conservative constraints imposed by Hansson and Grüne-Yanoff can be motivated in at least three ways (see Grüne-Yanoff 2013: 2629). First, the change process might have cognitive costs. Presumably, the agent seeks to ensure that the resulting new preferences are consistent and fulfil various prior commitments. The smaller the change in preferences, the fewer the opportunities to newly violate these restrictions.

Second, the existing preferences might have been reached through an investment, either cognitively or otherwise, and therefore can be seen as a form of ‘accumulated capital’ (Grüne-Yanoff 2013: 2629). Regarding biographies, Rory might prefer The Power Broker to The Life of Johnson because she spent months reading both volumes and evaluating their multifaceted qualities. Given the effort it takes to establish such well-considered preferences on the matter, they should not be discarded without need.

Third, the functioning of preferences in human conduct might require stability. Grüne-Yanoff highlights the role of preferences in personal identity and long-term planning. Some social coordination functions might also be better served by stability than by fluctuations that the event inducing the preference change does not force.

All three considerations are most easily understood and supported if preferences are assumed to be cognitively real. If preferences were merely used to describe behaviour, there would be no cognitive cost, and it would be challenging to see how they could accumulate cognitive capital or play a direct role in cognitive life. Recovering these three reasons from a behaviourist perspective would require considerable conceptual work. Therefore, the arguments by Hansson and Grüne-Yanoff illustrate the deep intertwinement between the ontology of preferences and theories of preference change. This intertwinement also extends to descriptive and normative modelling: the cognitive roles ascribed by these arguments are a matter of descriptive inquiry and modelling, but they are used to impose normative constraints.

As the name suggests, preference logic deals with preferences, but decision theorists prefer to work with utility or desirability functions. We now discuss how Bayesian decision theorists can deal with fundamental preference change.

Bayesian Models of Preference Change

The flexibility of the Bayesian framework makes it comparatively easy to introduce fundamental preference change into Jeffrey-type decision theory. While the conditioning approach was initially intended to deal with new evidence, there is no reason to limit it in such a way. Bradley’s (2017) work on conditioning explicitly covers changes in preferences, including what he describes as desire-driven changes. Given the variety of ways of conditioning, not all of them can be covered; instead, we focus here on what Bradley calls ‘generalised conditioning’.

Let P and V stand for the old degrees of belief and desirability and Q and W for the new degrees of belief and desirability (i.e. the degrees after the change has occurred). Furthermore, we need the notion of a partition of the alternatives, which in Jeffrey’s framework are propositions. A partition of a set of propositions is a set of non-empty subsets that do not share any elements but together include all elements in the original set of propositions. So, if the propositions are:

tomorrow I read The Power Broker,

tomorrow I will work on preference change, or

tomorrow is a Sunday,

then one partition would be the set {{tomorrow I read The Power Broker, tomorrow is a Sunday}, {tomorrow I will work on preference change}}. For brevity, we write the partition A = {αi}, where α1, α2, and so on are the various members of the partition, which are themselves sets of propositions.
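To make the definition concrete, the sketch below checks whether a collection of blocks partitions the three example propositions and enumerates every partition of them. The propositions are abbreviated as strings, and the helper names `is_partition` and `partitions` are hypothetical.

```python
READ = "tomorrow I read The Power Broker"
WORK = "tomorrow I will work on preference change"
SUNDAY = "tomorrow is a Sunday"
universe = {READ, WORK, SUNDAY}

def is_partition(blocks, universe):
    """True if the blocks are non-empty, pairwise disjoint and jointly cover universe."""
    if any(not block for block in blocks):
        return False
    union = set()
    for block in blocks:
        if union & block:          # shares an element with an earlier block
            return False
        union |= block
    return union == universe

def partitions(items):
    """Generate every partition of a list of items, each as a list of sets."""
    if not items:
        yield []
        return
    first, rest = items[0], items[1:]
    for smaller in partitions(rest):
        # put `first` into each existing block in turn ...
        for i in range(len(smaller)):
            yield smaller[:i] + [smaller[i] | {first}] + smaller[i + 1:]
        # ... or into a block of its own
        yield [{first}] + smaller

example = [{READ, SUNDAY}, {WORK}]
overlapping = [{READ, SUNDAY}, {SUNDAY, WORK}]
all_partitions = list(partitions(sorted(universe)))

print(is_partition(example, universe))      # True
print(is_partition(overlapping, universe))  # False
print(len(all_partitions))                  # 5
```

A three-element set has five partitions; the one given in the text is among them, while a collection whose blocks overlap fails the test.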

Given this notation, the new pair of degrees (Q, W) can be obtained from the old pair (P, V) by generalised conditioning if and only if for all propositions β and for all αi in A (such that P(β|αi) > 0), the following equations hold (2017: 202):

$$Q(\beta) = \sum_i P(\beta \mid \alpha_i) \cdot Q(\alpha_i)$$

The first equation describes the new degrees of belief. For each element αi of the partition, the old conditional probability of β given αi is multiplied by the new probability of αi, and these products are summed across the partition. More important for our purposes is the second equation, which describes the new degrees of desirability:

$$W(\beta) = \sum_i \big[ V(\beta \mid \alpha_i) + W(\alpha_i) \big] \cdot Q(\alpha_i \mid \beta)$$

Given the connection between desirability and preferences (i.e. an alternative is weakly preferred to another if and only if it has at least as high a desirability), the second equation describes a type of preference change. These equations might appear opaque, and we cannot reproduce here the well-developed justification in Bradley’s (2017) book and (2007) paper. However, to hint at some of the intuition behind the desirability equation, note that, since the conditional desirabilities V(β|αi) are held fixed, it amounts to $W(\beta) = \sum_i W(\alpha_i \wedge \beta) \cdot Q(\alpha_i \mid \beta)$. That is, deriving the new desirability for β involves taking the new joint desirability of β with each proposition from the partition and then weighing this joint desirability by the new conditional probability of the relevant proposition from the partition given β. Roughly, we consider the new desirability of all ways in which β could be true and weigh them by the relevant probability.
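The two equations can be checked on a small numerical example. The sketch below is a hypothetical toy model, not Bradley’s own construction: four equiprobable worlds with made-up desirabilities, propositions as sets of worlds, the desirability of a proposition as the probability-weighted average of its worlds’ desirabilities, and conditional desirability V(β|αi) = V(β ∧ αi) − V(αi). It builds (Q, W) by holding fixed, within each partition cell, the probabilities of worlds given the cell and their desirabilities relative to the cell, then checks both equations for β = {w1, w3}. The usual normalisation V(⊤) = 0 is ignored for simplicity.

```python
from fractions import Fraction as F

# Hypothetical toy model: worlds with prior probability P and desirability V.
P_world = {"w1": F(1, 4), "w2": F(1, 4), "w3": F(1, 4), "w4": F(1, 4)}
V_world = {"w1": F(4), "w2": F(0), "w3": F(2), "w4": F(-2)}

def prob(p, X):
    return sum(p[w] for w in X)

def des(p, v, X):
    """Desirability of X: probability-weighted average of world desirabilities."""
    return sum(p[w] * v[w] for w in X) / prob(p, X)

def generalised_conditioning(p, v, partition, q_cells, w_cells):
    """New world-level (Q, W) from new cell probabilities and desirabilities,
    keeping P(w | cell) and V(w) - V(cell) fixed within each cell."""
    q, w_new = {}, {}
    for cell, q_c, w_c in zip(partition, q_cells, w_cells):
        p_c, v_c = prob(p, cell), des(p, v, cell)
        for world in cell:
            q[world] = p[world] / p_c * q_c
            w_new[world] = v[world] - v_c + w_c
    return q, w_new

alpha = [{"w1", "w2"}, {"w3", "w4"}]  # the partition A = {α1, α2}
Q, W = generalised_conditioning(P_world, V_world, alpha,
                                q_cells=[F(3, 4), F(1, 4)],
                                w_cells=[F(-1), F(5)])

beta = {"w1", "w3"}
# First equation: Q(β) = Σ_i P(β|αi) · Q(αi)
rhs_q = sum(prob(P_world, beta & a) / prob(P_world, a) * prob(Q, a) for a in alpha)
# Second equation: W(β) = Σ_i [V(β|αi) + W(αi)] · Q(αi|β)
rhs_w = sum((des(P_world, V_world, beta & a) - des(P_world, V_world, a) + des(Q, W, a))
            * prob(Q, beta & a) / prob(Q, beta) for a in alpha)

print(prob(Q, beta) == rhs_q, des(Q, W, beta) == rhs_w)  # True True
```

Here Q(β) = 1/2 and W(β) = 5/2, and both right-hand sides agree exactly because `Fraction` avoids rounding error.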

Generalised conditioning covers cases where preferences over a given set of alternatives change direction. Not covered are cases where:

1. an agent acquires or loses a preference between two alternatives;

2. an agent becomes aware or loses awareness of an alternative.

In his effort to make Bayesian decision theory more realistic, Bradley has provided a way of dealing with these cases. The core idea will be broadly familiar since it also takes inspiration from the AGM model of belief change (see Bradley 2017: 245). As we did in our discussion of Hansson and Grüne-Yanoff, we will provide a broad outline without all the formal details.

To address the loss and gain of preferences, Bradley introduces the notion of avatars. The main intuition is that an agent can be thought of as a group of agents, called avatars, that disagree amongst themselves. If Rory has not yet settled on the desirability of various courses she might pick this term, she can be modelled as having multiple avatars that differ regarding the desirability of these courses while sharing attitudes regarding other alternatives on which Rory has a settled state of mind.Footnote 29 For example, Rory1, her first avatar, might assign V(biology) = 10 and V(sociology) = 5, while Rory2 might assign the numbers in reverse (i.e. V(biology) = 5 and V(sociology) = 10). If Rory the person settles on the first desirabilities, this can be modelled as her losing the second avatar that was previously in contradiction with this assignment.

The avatar approach greatly resembles the preference-logic approach of having a preference model R that is a set of multiple preference relations R1, R2, …, Rn. In both cases, the represented agent is said to prefer one alternative over another if and only if all components (i.e. all avatars or all preference relations) validate such a preference. Nonetheless, there are considerable differences. First, Bradley’s avatars are taken to have desirability and probability functions, which impose stricter criteria on the avatars than the existence of a preference model R requires. Second, Bradley’s approach does not include the notion of preference sentences, which could then be extended using logical connectives.Footnote 30
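A minimal sketch of the avatar idea, using Rory’s two avatars from the example. For simplicity it gives each avatar only a desirability assignment (Bradley’s avatars also carry probability functions) and applies the unanimity rule just described. The function name `weakly_prefers` is hypothetical.

```python
# Rory's avatars: each assigns a desirability to each course.
avatars = [
    {"biology": 10, "sociology": 5},   # Rory1
    {"biology": 5, "sociology": 10},   # Rory2
]

def weakly_prefers(avatars, a, b):
    """The agent weakly prefers a to b iff every avatar gives a at least b's desirability."""
    return all(av[a] >= av[b] for av in avatars)

print(weakly_prefers(avatars, "biology", "sociology"))   # False
print(weakly_prefers(avatars, "sociology", "biology"))   # False

# Rory settles on the first assignment: the conflicting avatar is dropped.
settled = [av for av in avatars if av["biology"] >= av["sociology"]]
print(weakly_prefers(settled, "biology", "sociology"))   # True
```

Before settling, the avatars disagree, so Rory has no preference either way; losing Rory2 produces the preference for biology.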

These differences could be addressed by extending and adapting Hansson’s preference logic and Bradley’s Bayesian approach. One could require the preference model R to be such that the relations it includes allow the construction of desirability functions, and one might define a language of preference sentences for Bradley’s framework. However, there are reasons not to do so. The two approaches have different purposes. The preference-logic approach is better suited to cases where the modeller wants to make minimal assumptions. Bradley’s extension is appropriate in cases where the full power of Bayesian decision theory is required.

Having covered losing or acquiring a preference state, we turn to the remaining option: becoming aware or losing awareness of an alternative. To model such changes in awareness, Bradley distinguishes the modeller’s domain from the agent’s. Simply put, there is a truly available set of alternatives and a subset of which the agent is aware. From the perspective of Bradley’s project, the question is which restrictions rationality imposes on extending and restricting the agent’s domain of awareness. Bradley (2017: 255–60) primarily endorses conservative criteria that describe the preservation of attitudes. In the case of domain extension, the agent’s new probabilities and desirabilities, conditional on the original domain, should be the same as the original unconditional probabilities and desirabilities. In the case of domain restriction, the new probabilities and desirabilities should be the same as the old probabilities and desirabilities conditioned on what is to become the new smaller domain of alternatives. Put differently, when extending one’s domain, the previous attitudes are preserved as attitudes conditioned on the previous domain. In contrast, when restricting one’s domain, the previous attitudes conditioned on the sub-domain are preserved as unconditional attitudes.
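The two conservative criteria can be sketched for probabilities over a small finite domain. This is a hypothetical illustration: the alternatives, their probabilities, and the weight given to the newly noticed alternative are made up, alternatives are treated as atoms, and desirabilities are left out for brevity.

```python
from fractions import Fraction as F

def condition(p, S):
    """P(· | S): renormalise the probability mass inside S."""
    total = sum(p[w] for w in S)
    return {w: p[w] / total for w in S}

# Hypothetical domain of which the agent is currently aware.
old = {"film": F(1, 2), "book": F(1, 3), "walk": F(1, 6)}

# Restriction: the agent stops considering "walk"; the new unconditional
# probabilities are the old ones conditioned on the smaller domain.
restricted = condition(old, {"film", "book"})

# Extension: the agent becomes aware of "concert". The weight it receives
# (here 1/4) is a hypothetical free parameter; conservativeness only requires
# that the new probabilities conditional on the old domain equal the old ones.
new_weight = F(1, 4)
extended = {w: p * (1 - new_weight) for w, p in old.items()}
extended["concert"] = new_weight

print(restricted)                              # film 3/5, book 2/5
print(condition(extended, set(old)) == old)    # True
```

Note that the criteria leave open how much weight a newly noticed alternative receives; the sketch simply fixes it by hand.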

We have already encountered conservative requirements in discussing Hansson’s and Grüne-Yanoff’s approaches. However, as with the case of avatars and preference models, there are significant differences. Grüne-Yanoff (2013) has criticised in detail the fact that Bradley’s approach does not include a similarity measure between desirability functions that would govern how such desirabilities change. In Bradley’s approach, an agent will not necessarily develop the most similar desirability function, given the required changes and the constraints of rationality. The rigidity that Bradley formalised using conditional attitudes does not restrict change as strongly as a similarity requirement would. While similarity measures could also be introduced into Bradley’s approach, doing so is not without challenges (see Grüne-Yanoff 2013 for details).

Beyond Input-Assimilation Models: Sources of Change

In this section, we move beyond input-assimilation models of preference change and consider how to model the sources of change. Such models need to specify both constraints on how preferences change and the conditions under which preferences change at all. To distinguish them from input-assimilation models, we call them sources-of-change models.

The reader might wonder whether sources-of-change models fall into the domain of philosophy. In particular, one might believe that rationality imposes negligible constraints on the sources of preference change. Making this assumption, one might hold that models of these sources are purely psychological, not normative and of no philosophical interest. Unsurprisingly, we disagree with this view, and we do so for at least two reasons:

1. Philosophers are not just investigating normative questions but are also invested in descriptive endeavours. For example, philosophers of the social sciences pursue questions about the descriptive adequacy of agency models, including sources-of-change models. Considering the conceptual space of such models is a worthy endeavour for philosophers.

2. Sources of preference change and their impact fall into the domain of practical rationality. One way to see this is to consider our normative attitudes towards such change. For example, preference change is often considered unreasonable due to its source: one should not change one’s preference for one’s life partner because of the current weather. Being a fair-weather partner is improper. Indeed, it might not only be morally reprehensible; if such unconnected patterns proliferate, one might question whether the system is still a rational agent. Sources-of-change models might allow us to describe such constraints and to criticise systems that fail to respect them.

In the following, we introduce reason-based decision theory and the commitment-based theory of preference change as two sources-of-change models.

Reason-Based Decision Theory

When Ishmael told us that ‘Queequeg, for his own private reasons, preferred his own harpoon’, he implied that our preferences could be grounded in reasons. Developed by Dietrich and List (2013a, 2013b, 2016b), reason-based decision theory captures how shifts in such motivating reasons induce preference change.

You might be motivated by the appeal of being famous and therefore prefer a career as a social media influencer to that of a decision theorist one day, while the next day, being famous does not serve as a motivating reason to you anymore, and your preference reverses. You stopped considering that reason, and, as a result, your preferences are no longer the same.Footnote 31
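A minimal sketch of the influencer example, in the spirit of reason-based decision theory: each option has a set of properties, and the agent ranks options by comparing only those properties that currently serve as motivating reasons. The property labels and the additive weights are hypothetical; Dietrich and List allow a general weighing relation over sets of properties, of which adding up weights is just one simple case.

```python
# Hypothetical properties of each option and hypothetical additive weights.
PROPERTIES = {
    "social media influencer": {"famous"},
    "decision theorist": {"intellectually engaging"},
}
WEIGHTS = {"famous": 3, "intellectually engaging": 2}

def score(option, motivating):
    """Total weight of the option's properties that are currently motivating reasons."""
    return sum(WEIGHTS[p] for p in PROPERTIES[option] & motivating)

def weakly_prefers(x, y, motivating):
    return score(x, motivating) >= score(y, motivating)

day_one = {"famous", "intellectually engaging"}
day_two = {"intellectually engaging"}   # fame no longer motivates

print(weakly_prefers("social media influencer", "decision theorist", day_one))  # True
print(weakly_prefers("social media influencer", "decision theorist", day_two))  # False
```

Nothing about the options changes between the two days; only the set of motivating reasons does, and the preference reverses.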

In order to model such preference changes, reason-based decision theory postulates: