Series Preface

The Elements in Forensic Linguistics series from Cambridge University Press publishes (1) descriptive linguistics work documenting a full range of legal and forensic texts and contexts; (2) empirical findings and methodological developments to enhance research, investigative advice, and evidence for courts; and (3) explorations and development of the theoretical and ethical foundations of research and practice in forensic linguistics. Highlighting themes from the second and third categories, The Idea of Progress in Forensic Authorship Analysis tackles questions within what is often seen as a core area of forensic linguistics – authorship analysis.

Authorship analysis has been a key area of interest for the author, Tim Grant, since he received his PhD in 2003. Since that time, he has contributed as a researcher on a range of interdisciplinary publications, and he has worked as a practitioner on a variety of civil and criminal cases for both the prosecution and defence. This Element, the first in our series, is a culmination of those experiences.

Drawing on these perspectives, Grant’s goals here are trifold – (1) to engage with ideas from the philosophy of science in order to take a critical retrospective view of the history of authorship analysis work; (2) to describe and address key concerns of how authorship analysis work has progressed in forensic science from both stylistic and stylometric traditions; and (3) to set out an agenda for future research, including the establishment of more reliable case formulation protocols and more robust external validity-testing practices.

Given the important nature of authorship analysis in the field of forensic linguistics, we are looking forward to more upcoming Elements that will continue to enhance the discussion of best protocols and practices in the field.

Series Editor

Many persons engaged in research into authorship assume that existing theory and technology make attribution possible though perhaps elusive.

from a paper delivered at

Prologue: The Dhiren Barot Case

On 12 August 2004, at 11:20 a.m., I received a call at work from a police investigator from SO13, the London Metropolitan Police’s antiterrorism branch.

At the time, I was employed at the Forensic Section of the School of Psychology at the University of Leicester and I had just recently been awarded my PhD in forensic authorship analysis under the supervision of Malcolm Coulthard. The caller started by saying they had tried to find Malcolm but he was out of the country, and they went on to ask about forensic linguistics and whether I could prove a suspect had written a particular document. The caller explained they had arrested a high-value terrorist in London and unless they could prove he had written a particular document, they would have to release him in forty hours’ time. They needed my help.

I quickly established that the anonymous document was about thirty pages long and that there were also a small number of comparison documents. I was told there were currently no digital versions of the documents – although if that’s what I needed, I was assured, the police team would type them up at speed. Time, not resources, was the problem. I said that I could help but also that I could not possibly do this on my own. I asked if I could gather a small team. I didn’t have much time; Scotland Yard was to send a fast car to pick me up which would arrive at my home in an hour and a half.

So, trying to keep the adrenaline rush out of my voice, I quickly made a round of calls. First, I recruited my University of Leicester colleague Jessica Woodhams. She is a forensic psychologist and was then in her first lecturing job having previously worked as a crime analyst at the Metropolitan Police. Jess was interested in the links between my work in authorship analysis and her work in case linkage analysis in sexual crime, and we had had a lot of useful and interesting methodological discussions. Next, I called Janet Cotterill from Cardiff University. Janet had been a PhD student with me under Malcolm Coulthard and she had also had some rare casework experience. At this stage, I had only been involved in two very small cases – most of my knowledge was theoretical, not applied. I also asked Janet to recruit Sam Tomblin (now Sam Larner), who had impressed me a year before at an academic conference in mid-Wales.

I fully briefed Jess and Janet over the phone and told them to pack an overnight bag. Initially, the plan was to bring the Cardiff team to London by helicopter – in the event we all made the journey to New Scotland Yard in police cars with lights and sirens going. By 3:00 p.m., Jess and I were in a small, airless room high up in the tower block that is New Scotland Yard reading a document entitled The Rough Cut to the Gas Limo Project. Janet and Sam arrived shortly afterwards, breathless and slightly sick from the journey.

I was nervous and fully aware that this case was a massive break for me personally and that it could launch my career as a forensic linguistic practitioner. I was blissfully unaware that in almost everything that had happened so far, that in almost every decision I had made, and that in almost every opportunity for a decision that had passed me by unnoticed, I had made a bad choice. With nearly twenty years of hindsight, research, and experience, I now know I had already compromised the integrity of that authorship analysis before it had begun.

This hindsight is not just personal; we should hope that, with the intervening years of research into forensic authorship analysis, and into forensic science more generally, the discipline of forensic authorship analysis has progressed. This Cambridge Element questions the nature of progress in forensic authorship analysis and attempts to identify where, in research and practice, real progress can be claimed, and also where no progress appears to have been made. The hope is that by critically applying hindsight to research and practice in forensic authorship analysis, and through understanding progress in the broader contexts of forensic science, we can build on such progress that has been achieved. Further to this, the ambition is that by focussing attention on this idea of progress, we can accelerate improvement in the practice and the standing of the discipline.

I hope this Element reaches different audiences. The primary audience is students and researchers in forensic linguistics. I have written with advanced undergraduate and postgraduate linguistics students in mind, but I also hope students and researchers in other forensic sciences will be helped in understanding the nature, strengths, and limitations of authorship analysis evidence.

Further to this, there are of course practitioner audiences – both forensic linguistic practitioners themselves (currently a fairly limited group) and lawyers and other investigators who might want to achieve greater understanding of this form of linguistic evidence.

This text is intended as an academic text and my forays into the history and philosophy of science are perhaps of less practical use, but they are important in understanding how authorship analysis is progressing and where it has not progressed so far. I hope I can interest all these readerships in this material as much as in the more focussed discussion of authorship analysis work.

1 The Idea of the Idea of Progress

The idea of progress is itself problematic and contestable.

In 1980, Robert Nisbet wrote his important book History of the Idea of Progress in which he tracks the idea of progress in civilisation, thinking, and science from its origins in Greek, Roman, and medieval societies to the present day. The work originated from a shorter essay (Nisbet, 1979) before being fully developed, and in his introduction to the 2009 edition, Nisbet reasserts that ‘the idea of progress and also the idea of regress or decline are both with us today in intellectual discourse’ (Nisbet, 2009, p. x). In these works, Nisbet argues that the origins of the idea of progress for the ancient Greeks came from a fascination with what he calls knowledge about, and the realisation that knowledge about the world was different from other forms of knowledge (such as spiritual knowledge) in that it allowed for an incremental acquisition of this special sort of knowledge, and that this accumulation of knowledge over time led, in turn, to a betterment of life for the individual and for society.

One competing discussion to the idea of scientific progress as accumulated acquisition of knowledge is proposed in the work of Thomas Kuhn and his idea of scientific revolutions and of paradigm shifts in science. Kuhn (1962) contends that science is not merely the incremental accumulation of knowledge about but that it is also an inherently social enterprise and methods of science and interpretations of meanings in science undergo periodic radical shifts. Like Nisbet, Kuhn is a historian of science and of ideas, and he identifies a number of points of scientific revolution in the history of Western civilisation. These include in astronomy the shift from Ptolemaic to Copernican ideas and then the shift from Copernican to Galilean ideas, created by Kepler’s and then Newton’s observations and shifts in thinking. Kuhn insists that each of these shifts is not entirely based in normal science – the accumulation of knowledge about – but that it also involves a mix of social change and, at the particular point in time, the promise of an as-yet-unrealised greater coherence of theoretical explanation provided by the new paradigm. In each period of normal science, anomalous observations grow until the whole body of knowledge and thinking itself requires reinterpretation.

One of the most radical and controversial aspects of the Kuhnian idea of science is that of incommensurability between paradigms. That science carried out in one paradigm is incommensurate with science carried out in another puts cross-paradigm conversation beyond comprehension – the science of paradigm A cannot be engaged with or critiqued by the tools provided by the science of paradigm B or vice versa. This may suggest a strongly relativistic interpretation of Kuhn, but this is rejected by him in the final chapter of The Structure of Scientific Revolutions (Kuhn, 1962), which is called ‘Progress through Revolutions’, and is further reinforced in a postscript to the 1970 second edition, where Kuhn suggests that a new paradigm does in fact build upon its predecessor, carrying forward a large proportion of the actual problem-solving provided by the scientific insights of that preceding work. In the second-edition postscript, Kuhn (1970) proposes that it would be possible to reconstruct the ordering of paradigms on this basis of their content and without other historical knowledge. Philosophers of science argue over incommensurability and whether it is inherently coherent, and whether it is possible to maintain as a non-relativist position (as Kuhn does). There seems to be no current consensus on these questions (see e.g. Siegel, 1987 and Sankey, 2018), but Kuhn’s focus is on the utility of scientific insights as linking paradigms. Hollinger (1973), in a discussion on Kuhn’s claims to objectivity, suggests Kuhn believes that ‘Ideas “work” when they predict the behaviour of natural phenomena so well that we are enabled to manipulate nature or stay out of its way’ (p. 381). This focus on ideas that work has to ground all paradigms of science, and this prevents a radical relativist position from emerging.

Focussing on utility aligns these ideas of science and progress with a further set of discussions on the nature of scientific reasoning as ‘inference to the best explanation’, introduced by Harman (1965). Both Kuhn’s and Harman’s conceptions of science are not altogether fixed but also not altogether relativistic as ‘best explanations’ and ‘useful ideas’ will change according to social contexts. Appeals to utility do seem to constrain a fully relativist position whilst allowing for scientific revolutions and paradigm shifts.

There is a final theme in discussions of scientific reasoning that links closely to the idea of ‘inference to the best explanation’, and this is the theme of demonstrability. Newton (1997) argues that scientific truths must be demonstrably true – they cannot for example be personal – but without a means of demonstration (as may be the case with spiritual truths) nor can scientific truths be private. This idea of demonstrability is one on which modern scientific practices of peer review and publication sit. The first test is that of a reviewer, who must agree that a finding has been demonstrated; the second test is that of public dissemination, where the wider community gets to consider the adequacy of that demonstration.

One area where demonstrability can create difficulty is in that of scientific prediction. Predictions need always to be grounded in first describing (or modelling) an evidence base. Typically, it is possible to demonstrate the adequacy of a predictive model. Most TV weather forecasts, for example, spend some time describing the status quo – what is happening with regard to high- and low-pressure systems and the locations of current storms or sunshine. Tomorrow’s forecast uses this description and extrapolates. Only when tomorrow comes can the accuracy of the prediction itself be demonstrated. The science of weather forecasting can be demonstrated in terms of how often tomorrow’s forecast came true – for example, my local TV station may get it right eight days out of ten.

Some predictions, however, are predictions of states of affairs which cannot be demonstrated, and these include ‘predictions’ of what happened in the past. To continue the example of weather predictions, it might be asked whether we can ‘predict’ what the weather was in a location before records began. I may want to know how wet or cold it was on the day on which the roof was completed at the Norman manor house at Aston (then Enstone) in Birmingham, UK. To make such a prediction, I need to create a model, starting with today’s climate descriptions. Evaluation of the model involves looking for independent confirmation of my prediction, but there is a point at which my weather model for medieval Warwickshire is undemonstrable – it can be neither verified nor falsified – and this creates an issue as to the scientific status of such predictions. Even where the passage of time is much less than nine hundred years, demonstration of prediction of past events is difficult and needs to be done with care. One solution to this problem is termed validation. Validation is the demonstration that predictions can be reliable (or demonstrating the extent to which they are reliable) in cases where outcomes are known, thus demonstrating that the method is likely to be reliable where the outcome is unknown. As such, validation is an indirect form of scientific demonstration, but it may be the only form of demonstration available. An obvious problem with this idea of validation is that the known situations used to validate a model have to be as similar as possible to the situation of the prediction where the outcome was unknown. In predicting weather in the past, there is a danger that because of climate differences, modern weather models that can be validated today may be invalid in making predictions about the historic past and this will undermine the strength of the validation.

These considerations are important in our contexts because a further example of predicting into the unknown past is that of casework in forensic science. In forensic science, the task in essence is to demonstrate to the courts what happened in the (typically not so distant) past. In these cases too, the issue of validation as an indirect demonstration of a scientific proposition is key, and as we shall see, the issue of whether the validation data are similar to the case data can be all important.
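The logic of validation can be made concrete with a small sketch. It scores a method only on cases where the outcome is known, in the manner of the forecast that comes true eight days out of ten. The function name and the ten days of weather data are hypothetical and invented purely for illustration; the sketch says nothing about how similar the validation cases are to the case of interest, which remains the critical question.

```python
def validation_accuracy(predictions, outcomes):
    """Proportion of known-outcome cases in which the prediction matched."""
    if len(predictions) != len(outcomes):
        raise ValueError("each prediction needs exactly one known outcome")
    if not predictions:
        raise ValueError("validation needs at least one known-outcome case")
    hits = sum(p == o for p, o in zip(predictions, outcomes))
    return hits / len(predictions)


# Invented data: ten days of forecasts paired with the weather later observed.
forecasts = ["rain", "sun", "sun", "rain", "sun", "rain", "rain", "sun", "sun", "rain"]
observed = ["rain", "sun", "rain", "rain", "sun", "rain", "sun", "sun", "sun", "rain"]

print(validation_accuracy(forecasts, observed))  # 0.8
```

A rate obtained in this way supports a prediction about an unknown outcome only to the extent that the known cases resemble the unknown one.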

When considering the idea of progress in forensic science, and specifically in forensic authorship analysis, we need to consider what progress might look like. We need to ask whether there has been progress in knowledge about authorship and in knowledge about how authors’ styles of writing differ from one another and how one author’s style might vary over time. We need also to bring to consciousness that there may be competing paradigms for approaching authorship problems, and whether these paradigms are in fact incommensurable, or whether amounts of insight and knowledge from one paradigm can be adopted into another to create progress. We need to consider issues of utility of basic understandings about the nature of authorship for the progression of work in authorship analysis, and we need to consider issues of validation and demonstrability of results, particularly as most authorship analyses are essentially predictions about what has happened in the recent past. All of this needs to be considered when addressing the idea of progress in forensic authorship analysis.

One further thought Nisbet acknowledges is that in all cultural, scientific, and technological endeavours, progress is not inevitable and decline is possible. It may be that in adopting newer methods, the results may seem (or may actually be) less good than before – it also may or may not be the case that as those new methods mature, they then overtake the status quo in their utility. Decline and progress are flip sides of the same idea, and it seems incoherent to suggest that progress and not decline is possible. As well as the possibility of decline, Nisbet acknowledges that the negative consequences of progress may receive greater emphasis and create opposition to progress. As forensic authorship analysis progresses in utility, concerns over infringement of privacy may rightly increase, and progress in the area may become a victim of a catastrophic success in providing an unexpected and shocking identification of a specific individual in a high-profile controversy. Apparent progress can often be seen to catalyse opposition. Thus in 1995, a young but retired academic, Theodore John Kaczynski, published a treatise against progress which he called The Industrial Society and Its Future. This text had significant consequences for a number of individuals whom Kaczynski maimed and killed, and it also carried implications for the progress of forensic authorship analysis.

2 Forensic Authorship Analysis

A Brief History of Forensic Authorship Analysis

It can be hard to declare the origin of any practical discipline as the practice will almost certainly have been occurring before it was noticed, labelled, and written about. In the English-speaking world, it is common to point to Jan Svartvik’s (1968) short study on the language of The Evans’ Statement with its subtitle that coined the term ‘forensic linguistics’. Svartvik sets out linguistic grounds for the contention that Timothy Evans’ apparent confession to the murder of his wife and baby daughter was in fact more likely authored by an interviewing police officer. Evans was hanged on 9 March 1950, convicted of the murder of his daughter (but not his wife). Just three years later, John Christie, another occupant at 10 Rillington Place, confessed to the murder of his wife, and was subsequently shown to be a serial murderer. This led to a re-evaluation of Evans’ conviction, a number of media campaigns and appeals, and eventually Public Inquiries examining the circumstances of the Evans case. Svartvik’s analysis contributed to the Public Inquiry held at the Royal Courts of Justice between 22 November 1965 and 21 January 1966 – and it is this Inquiry that finally led to Evans’ posthumous pardon in October 1966.

Svartvik first discusses the issues of the transcription of Evans’ spoken statement in the context that Evans had been assessed as having a ‘mental age’ of ten to eleven and was largely illiterate, and sets out linguistic structures that he believes were typical of Evans’ language and that would persist beyond the transcription conventions of two separate police officers. Svartvik aims to be ‘maximally objective: in our case this means that the features can be unambiguously stated to be open to outside inspection, and that they can be quantified and subjected to significance testing’ (Svartvik, 1968, p. 24). He notes that this restricts him to high-frequency features, which in turn ‘immediately rules out lexical features’ (p. 25), and comments that there is no ‘background material’, no comparison corpus on which he can rely. He does, however, compare Evans’ statements with that taken from Christie in his eventual prosecution.

As well as trying to be maximally objective, Svartvik in a footnote points out that his analysis is based firmly in linguistic research in syntax. Given his constraints, Svartvik focusses on clauses and clause types and cites the literature to support the typology he uses. Specifically, Svartvik examines the difference in distribution of relative clauses with mobile relators, where the subject can occur before or after the relator (e.g. then and also can occur as I then or then I, or I also or also I, etc.) and also elliptic subject linking clauses (which have no overt subject). He compares the statements compiled by the two police officers and creates comparisons within the statements, between sections that compromise Evans and sections that do not contain confessional material. He notes that in the crucial disputed sections of these statements, there is a significant increase in mobile relator clauses and ellipsed subject clauses associated with the police language. Svartvik states early in the report that he ‘cannot hope to arrive at any firm legal conclusions; the interest of [his] study is primarily linguistic and only secondarily of a legal nature’ (p. 19), and his conclusion is thus that of a correlation between ‘the observed linguistic discrepancies’ (p. 46) with the contested sections of the statements.

After Svartvik’s contribution to forensic authorship analysis in the late 1960s, the next reports of casework in forensic authorship analysis come in the 1970s through the work of Hannes Kniffka (reported in Kniffka 1981, 1990, 1996, 2007), which reflect his work in the German language. Kniffka reports work in several cases drawing on his understandings of sociolinguistics and of contact linguistics. Of five early cases he reports from the 1970s, two are reports of disputed meaning and three are reports of disputed authorship. These relate to disputes over an article in the press, minutes of a meeting, and a particular edition of a company newsletter (Kniffka, 2007). Kniffka does not provide full details of his methods for analysis in these cases, but he does indicate the type of analysis in which he engages. For example, in the case about who wrote the edition of a company newspaper, he writes of analysis:

On several linguistic levels, including grammar (morphology, syntax, lexicon, word order), and linguistic and text-pragmatic features, several idiosyncrasies, ‘special usages’, errors and deviations can be found in an exactly parallel fashion [between the disputed material and the known material].

This description suggests a broader scope in developing authorial features from a text than that applied by Svartvik, and that Kniffka brought a fuller range of linguistic knowledge and analyses to bear in this case, and presumably in other authorship cases of the time.

In the late 1980s and early 1990s, Malcolm Coulthard in the UK became involved in a series of cases which questioned the authenticity of a number of confession statements associated with serious crimes in and around the city of Birmingham. Throughout the 1980s, a regional police unit, the West Midlands Serious Crime Squad (and later the Regional Crime Squad), came under increasing pressure as it became apparent that they were involved in serious mismanagement, corruption, and demonstrated fabrication of evidence including in witness statements. In 1987, there was a turning-point case in which Paul Dandy was acquitted on the basis that a confession statement was shown to have been fabricated. The evidence for the defence came from the then novel Electro-Static Detection Analysis or ESDA technique, which is used to reveal microscopic divots and troughs left in the surface of paper by writing on it, irrespective of whether any ink stain was present. In the case of Paul Dandy, an ESDA analysis showed a one-line admission had been added to his otherwise innocuous statement, and the court released him on the basis of this evidence, but not before he spent eight months on remand in solitary confinement (Kaye, 1991). This practice of adding words to a statement to turn it into a confession became known in UK policing circles as ‘verballing’ and was informally acknowledged to have been a common practice of the Serious Crime Squad. A difficulty for many appeals, however, was that the original pads on which statements had been written had been destroyed. This meant that the ESDA technique could not be used and that all that remained was the language itself.

Coulthard gave linguistic evidence at a series of high-profile appeals, including the appeals of the Birmingham Six, who were convicted in 1975 of setting bombs for the Irish Republican Army (IRA) which killed 21 people and injured 182; the Bridgewater Four, who were convicted of killing twelve-year-old paperboy Carl Bridgewater by shooting him in the head after he apparently disturbed a burglary; and also at the trial of Ronnie Bolden in 1989. The Birmingham Six achieved successful appeals in 1991, the Bridgewater Four’s convictions were quashed in 1997, and Bolden was acquitted. After the Bolden trial, the West Midlands Serious Crime Squad was disbanded. There were a number of other less high-profile cases from the West Midlands Serious Crime Squad and the Regional Crime Squad, to which Coulthard also contributed. He brought some of these cases to a newly formed forensic linguistic research group at the University of Birmingham. This was attended by a group of academics and PhD students including myself, Janet Cotterill, Frances Rock, Chris Heffer, Alison Johnson, Sue Blackwell, and, briefly, John Olsson. At these sessions, alongside more typical research presentations, case materials were considered, analysed, and discussed and some of the case analyses made it into Coulthard’s work in investigations and in court.

In addition to these West Midlands cases, Coulthard was involved in the successful 1998 appeal of Derek Bentley’s conviction. His analysis of Bentley’s confession was reported in the first volume of the International Journal of Forensic Linguistics, now renamed Speech, Language and the Law (Coulthard, 1994). Coulthard’s analysis uses a feature similar to that identified by Svartvik – the mobile relator – and Coulthard shows, by using corpus methods, how the I then form, which was present in the Bentley statement, was more typical in the police register than the then I form, which was more typically used by the general population – as represented by the (then impressive) 1.5 million words of the COBUILD Spoken English Corpus. This finding matched Svartvik (1968, Table 6, p. 34), where the same pre-positioned subject in I then appears to mark that the statement has been authored by the police officer.
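The kind of count that underlies such a comparison can be sketched briefly. This is an illustration only, not a reconstruction of Coulthard’s corpus method: the function name is hypothetical, the sample sentences are invented rather than taken from any case statement, and the crude tokenisation ignores punctuation, so a pair of words that straddles a sentence boundary would also be counted.

```python
import re


def mobile_relator_counts(text):
    """Count adjacent 'I then' versus 'then I' word pairs in a text."""
    tokens = re.findall(r"[A-Za-z']+", text)
    pairs = list(zip(tokens, tokens[1:]))
    # The pronoun must be capital 'I'; 'then' may be capitalised sentence-initially.
    i_then = sum(1 for a, b in pairs if a == "I" and b.lower() == "then")
    then_i = sum(1 for a, b in pairs if a.lower() == "then" and b == "I")
    return {"I then": i_then, "then I": then_i}


# Invented sample text, for illustration only.
sample = "I then went to the door. Then I saw him. I then ran away."
print(mobile_relator_counts(sample))  # {'I then': 2, 'then I': 1}
```

Counts such as these become informative only when set against a base rate from a reference corpus of known composition, which is the step that Coulthard’s use of corpora supplied.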

Coulthard’s use of corpora to understand base rates of a feature in a general population is a mark of significant progress over Svartvik – to whom the technological innovation of large, computerised corpora was unavailable, and who bemoaned the lack of ability to make proper between- and within-individual comparisons. Indeed, Svartvik notes that ‘[f]or a proper evaluation of the results of [his] study it would be desirable to have a better knowledge of the linguistic phenomena than we now have’ (Svartvik, 1968, p. 39). He then goes on to speculate on the effect of emotional and non-emotional content on this style feature:

It would also be valuable to know more about clause type usage generally and its expected range of variation in one idiolect, or, more specifically, in a statement made by one person on one occasion.

Svartvik is forced to rely on internal comparisons within Evans’ confessions and external comparison with the case materials of Christie’s confessions in order to provide contrast and background information. The contrast between Svartvik’s approach of using a small set of highly similar within-genre texts, and Coulthard’s approach of using a significantly sized population sample of texts across genres remains a twin-track approach used today to understand the distribution and distinctiveness of authorship features. As we shall see in what follows, each has its strengths and weaknesses.

Svartvik and Coulthard, however, have plenty in common in their approaches. They both focus on verballing in disputed confessions, and this introduces advantages and disadvantages in their analyses. The suspect witness statement of its time was a well-defined genre, and as Svartvik (1968) discusses, this introduces two specific issues: the role of the police officer as amanuensis and the introduction of features of their habitual style, and the fact itself of the creation of a written text from the distinctive style of spoken answers. It is through this fog of transformation that Svartvik and Coulthard are attempting to identify the voice, the authorship, of the defendants in their cases. On some occasions, as in Coulthard’s analysis of the Bentley confession, it was sufficient to determine that the text could not be verbatim Bentley, and that it must have been the product of a dialogue rather than a monologue from Bentley. These findings were contrary to the sworn statements of police officers in the original trial and thus were considered grounds for appeal. This, however, represents a rather indirect form of authorship analysis. On other occasions, the authorship analysis for both Coulthard and Svartvik is more direct. Thus Svartvik suggests that ‘substandard usage’, such as Evans’ double negative ‘“She never said no more about it”’ (Svartvik, 1968, p. 22), is probably a trace of his spontaneous speech in the statements. In both cases, however, the nature of the task addressed and the texts analysed are working against strong, direct conclusions of authorship.

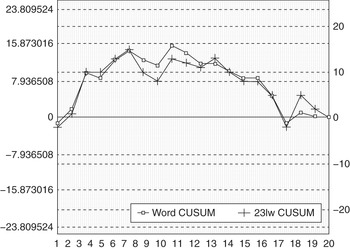

An important and revealing but ultimately fruitless detour in the history of forensic authorship analysis was the invention and application of cumulative sum (CUSUM) analysis. Morton (1991) set out a graphical and apparently general technique for spotting the language of one author inserted in a text written by another. The plots comprised two values derived from a text plotted in parallel sentence by sentence – typically the values were sentence length and the number of two- and three-letter words in that sentence, although Morton, and later Farringdon (1996), experimented with other measures (see Figure 1). An insertion of an external author was apparently revealed in a divergence of the two lines on the graph. The CUSUM technique was initially successfully used by defence teams in multiple cases including the appeal in London of Tommy McCrossen in July 1991, the trial in November 1991 of Frank Beck in Leicester, the trial of Vincent Connell in Dublin in December 1991, and the pardon for Nicky Kelly from the Irish government in April 1992 (Holmes and Tweedie, 1995, p. 20). The technique ‘demonstrated’ that these confession statements had been subjected to verballing and, in the McCrossen case at least, convinced the Court of Appeal that this was a wrongful conviction.

Figure 1 Example CUSUM chart reproduced from Reference GrantGrant (1992, p. 5) erroneously showing insertions in a text by a second author at sentences 6 and 17
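
The two plotted series can be made concrete with a short sketch. It assumes the usual formulation of a CUSUM chart, in which each line is the running total of a value's deviation from its mean across the sentences of the text. The function names and the simple tokeniser are illustrative assumptions rather than Morton's own procedure, and the rescaling needed to overlay the two lines on one graph is omitted. The sketch is offered only to show what the charts displayed, not as a usable method, since the technique was subsequently discredited.

```python
import re
from itertools import accumulate


def cusum(values):
    """Running total of each value's deviation from the series mean."""
    mean = sum(values) / len(values)
    return list(accumulate(v - mean for v in values))


def cusum_series(sentences):
    """Return the two series of a CUSUM chart for a list of sentences.

    Assumption: words are runs of ASCII letters; a 'short word' has
    two or three letters, as in the measure described in the text.
    """
    tokenised = [re.findall(r"[A-Za-z]+", s) for s in sentences]
    lengths = [len(words) for words in tokenised]
    short_words = [sum(1 for w in words if len(w) in (2, 3))
                   for words in tokenised]
    return cusum(lengths), cusum(short_words)


if __name__ == "__main__":
    text = ["It is a cat.", "The dog ran to the old barn and sat.", "We go."]
    length_line, short_word_line = cusum_series(text)
    print(length_line)
    print(short_word_line)
```

On a chart, the two returned lists would be plotted against sentence number. Because each series sums deviations from its own mean, both lines necessarily return to zero at the final sentence, whatever the text.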

Such cases quickly attracted media attention and academic analysis which wholly discredited the technique. Holmes and Tweedie’s (1995) review of the evidence cites no fewer than five published academic studies between 1992 and 1994, all of which find the technique seriously wanting (Canter, 1992; de Haan and Schils, 1993; Hardcastle, 1993; Hilton and Holmes, 1993; Sanford et al., 1994). Errors of misattribution and missed attribution were easily demonstrated. It was shown how the graph itself could be manipulated, and when visual comparisons were replaced with statistical methods, no significant effects could be found to uphold the claims of CUSUM advocates. In spite of this, the legacy of CUSUM in the UK lasted a decade longer. By 1998, the UK Crown Prosecution Service (CPS) made a statement of official guidance that forensic linguistic evidence of authorship was unreliable, that it should not be used by prosecutors, and that it should be vigorously attacked if used by defence. This guidance was drafted to take in all forms of linguistic evidence, not just CUSUM-based authorship analysis. It was only during the preparation of the 2005 prosecution of Dhiren Barot that this guidance was narrowed to pick out only CUSUM as a demonstrably bad technique for forensic authorship analysis. This was a result of Janet Cotterill and me pointing out to the prosecutors that the negative guidance remained on the books and might undermine the case against Barot.

Even before Coulthard was untangling the confession statements written by the West Midlands Serious Crime Squad and other disreputable British police officers, forensic authorship analysis was developing in the USA. Richard Bailey (1979) presented a paper at a symposium at Aston University held in April 1978 on ‘Computer-Aided Literary and Linguistic Research’. In his paper called ‘Authorship Attribution in a Forensic Setting’, Bailey addressed the authorship of a number of statements made by Patty Hearst, who was both a kidnap victim and also went on to join her kidnappers in a spree of armed robberies. Bailey’s analysis was an early stylometric analysis – he used twenty-four statistical measures, some of which were extracted from the texts by newly written software routines called EYEBALL (Ross, 1977). It seems Bailey’s analysis was not used in court but may have provided support to the prosecution legal team. It also seems likely that Bailey’s contribution was the same as that presented at the 1978 Aston symposium. Further to the Hearst case, Bailey also mentions in the Aston paper that he was aware of two subsequent US trials where authorship analysis was not admitted as evidence.

It thus appears that the earliest courtroom discussion of an authorship analysis in the United States was in Brisco v. VFE Corp. and related cross-actions in 1984. This case involved no fewer than five linguists: Gerald McMenamin, Wally Chafe, Ed Finegan, Richard Bailey, and Fred Brengelman (Finegan, 1990). [Footnote 3] McMenamin provided an initial report for VFE (and its owners, Cottrell) and attributed a libellous letter to Brisco, using a method he later refers to as forensic stylistics (McMenamin, 1993). Chafe was then hired by VFE/Cottrell and affirmed that he believed that both McMenamin’s methods and his conclusion were good. Finegan was then hired by Brisco’s lawyers and his report was critical of the VFE’s linguists’ methods; he was subsequently backed up by Bailey, who examined all the available reports and supported Finegan’s critiques. Finally, in view of the two critical reports of the original authorship analysis, VFE/Cottrell conducted a further deposition on the evidence with Brengelman as a third linguist for them, the fifth overall on this case.

The outcome of the Brisco case is somewhat confusing. McMenamin certainly testified at trial and his evidence went unrebutted. The fact that he went unchallenged, however, appears to be because after Brengelman’s deposition and before the trial, the issue of authorship was agreed to be settled. Additionally, it appears that the whole trial, including McMenamin’s evidence, was ‘vacated’ on grounds separate from the authorship evidence – this means the case was made void, as if the trial had never occurred. Finegan writes in personal communication that ‘The judgment in the case – which included ascribing the authorship of the defamatory document as established by a linguist’s expert testimony – was vacated subsequently by the very same trial judge, who later reinstated it, only to have it subsequently vacated by the California Appellate Court,’ and also that then ‘aspects of the case were appealed to the California Supreme Court, which declined hearing it’. McMenamin’s testimony in this case seems therefore to remain vacated and to have a status akin to that of Schrödinger’s cat – it was both the first instance (that I can find) of a linguist giving authorship evidence in an American court, and also, it never happened.

Irrespective of the status of McMenamin’s Schrödinger’s testimony, it is clear that he (along with others) went on to provide linguistic evidence of authorship in a number of cases in the late 1980s and 1990s and through to the present day. [Footnote 4] Details of his approach and analysis methods are provided in two books (McMenamin, 1993, 2002). Forensic Stylistics in 1993 may be the first book specifically on forensic authorship analysis, and in it McMenamin reports that his approach relies on the basic theoretical idea from stylistics. This is that in every spoken or written utterance an individual makes a linguistic selection from a group of choices, and also, that in making such selections over time, the individual may habitually prefer one stylistic option over others. His method is to derive sets of features from each text he examines, often starting with the anonymous or disputed texts, and then demonstrating if these features mark the author’s habitual stylistic selections in known texts by suspect authors. This stylistics basis for authorship analysis was also (somewhat unwittingly) applied as an approach in what is still one of the most high-profile authorship analysis cases worldwide – the investigation into the writings of the Unabomber.

Jim Fitzgerald, as a Supervisory Special Agent in the FBI, reports visiting Roger Shuy at Georgetown University in the summer of 1995 to hear Shuy’s profile of The Industrial Society and Its Future, the then anonymous Unabomber manifesto (Fitzgerald, 2017). Shuy suggested that the writer of the manifesto was a forty- to sixty-year-old, that they were a native English speaker raised in the Chicago area, and that they had been a reader of the Chicago Tribune. As reported, Shuy’s opinion was also that the writer was likely white, but he didn’t rule out that he might be African-American or have been exposed to African-American Vernacular English (AAVE). Excepting this last hedging about the AAVE influence, Shuy’s profile proved remarkably accurate.

The publication of the manifesto in the Washington Post and in the New York Times in 1995 resulted in the recognition by members of Theodore Kaczynski’s family that the themes and the writing style in the manifesto were similar to his other writings. The family provided letters and documents received from Kaczynski, and this enabled Fitzgerald and the FBI to write a ‘Comparative Authorship Project’ affidavit which compared the anonymous documents with the letters from Kaczynski. The affidavit was used to obtain a search warrant for Kaczynski’s remote Montana cabin. The analysis was based on more than six hundred comparisons of the writer’s selections of words and phrases across the two document sets: some were ‘virtually identical word-for-word matches of entire sentences’ (Fitzgerald, 2017, p. 487), while many others were ‘somewhat similar’ or ‘very similar’. Fitzgerald at that time claimed no expertise in linguistics and simply presented the points in common.

In the subsequent legal challenge to the analysis, Robin Lakoff, acting for the defence, argued that these co-selections of stylistic choices were insufficient evidence to amount to ‘probable cause’ to support the search warrant. In response to Lakoff, the prosecution hired Don Foster, a literary linguistics expert, who defended Fitzgerald’s analysis (Foster, 2000). The final judgement in this dispute was that the language analysis provided sufficient evidence to amount to probable cause, and thus the search was upheld as legal. With this finding, the considerable further written material and bomb-making materials found at Kaczynski’s cabin could be admitted at trial. In January 1998, in the face of this overwhelming evidence and as part of a plea bargain to avoid the death penalty, Kaczynski pleaded guilty to the charges against him and was sentenced to life imprisonment without the possibility of parole.

Fitzgerald’s work is clearly an authorship analysis and an analysis of the language evidence. That it is similar to McMenamin’s stylistic approach seems to be a coincidence and may be because Fitzgerald drew on his behavioural analysis training. Behavioural analysts and psychological profilers examine crime scene behaviours to produce profiles and to link crimes committed by the same offender. In case linkage work, behaviours are coded as selections within potential repertoires of choices required to achieve different crimes (Woodhams et al., 2007). Thus, in certain sexual crimes, controlling a victim is a required aim, but this might be achieved by verbal coercion, by physical threats, by tying up the victim, or by other means. The repeated co-selection of behaviours within these repertoires can be seen as evidence that a pair of unsolved crimes may have the same offender. [Footnote 5] To a degree, Fitzgerald’s analysis is unusual in comparison with the analyses by the linguists Svartvik, Kniffka, Coulthard, and McMenamin. Most expert linguists would have been unlikely to comment on common content or themes across the queried and known documents, seeing this as beyond their expertise. Similarly, as linguists they would probably also have been less interested in linguistic content that was merely very similar or somewhat similar. The word-for-word matches of entire sentences, however, would still be considered strong linguistic evidence of authorship, and the idea of a set of linguistic choices identifying a specific author certainly has traction (Larner, 2014; Wright, 2017) and is referred to by Coulthard (2004) as a co-selection set.

Since the turn of the century, the number of authorship cases has grown considerably. Perhaps building on Kniffka’s early work, the German federal police (Bundeskriminalamt (BKA)) have uniquely maintained a forensic linguistic unit within the official forensic science division. Within the UK, a number of high-profile forensic authorship analyses have been taken to court, and in R v. Hodgson (2009), Coulthard’s evidence of text messaging was appealed and his approach and opinions were upheld (Coulthard et al., 2017). There have been many more cases where authorship evidence has been provided for criminal prosecution or defence but not reached the courts. This year, a full forty-three years after Richard Bailey first spoke at Aston University on forensic authorship analysis, the Aston Institute for Forensic Linguistics held a symposium on ‘International Approaches to Forensic Linguistic Casework’ at which there were discussions of cases from Australia, Canada, Greece, India, Indonesia, Norway, Portugal, South Africa, Spain, Sweden, and Turkey as well as from Germany, the UK and the USA. [Footnote 6]

A Taxonomy of Authorship Analysis

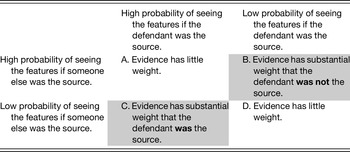

The focus in this Section is on the structure of authorship questions and the tasks which are carried out. As we have seen from its history, authorship analysis is not a unified endeavour and authorship analysis tasks vary according to the specifics of the case. As will be seen, the general term authorship analysis is preferred and used in contrast to authorship attribution as in many cases neither the aim nor the outcome of the linguistic analysis is an identification of an individual. Such analyses can roughly be broken down into three principal tasks, each of which can contain a number of sub-tasks as illustrated in Figure 2.

Figure 2 Taxonomy of authorship analysis problems

In Figure 2, the abbreviations Q-documents and K-documents are used to indicate Queried/Questioned documents and Known documents, respectively.

Comparative authorship analysis (CAA) can be roughly split into closed-set problems, in which there is a limited, known set of candidate authors, and open-set problems, where the number of candidate authors is unknown or very large. Closed-set problems are best classified in terms of how many candidate authors there are, from binary problems, to small, medium, and large sets of possible authors. The size of the set of candidate authors will to a great extent determine the method applied and the chances of success. Clearly, defining a small set as five or fewer and a large set as twenty or more is an arbitrary distinction born out of practice, but it seems to work as a rule of thumb. Open-set problems are often also referred to as verification tasks, but in forensic work this would also include exclusion tasks. Verification tasks also occur when all documents are in fact by unknown authors and the task is to determine whether one anonymous text has the same author as a set of further anonymous texts. In problems with very many candidate authors, approaches are very different, and here are referred to as author search problems.
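
The rule of thumb for closed-set problems can be written out as a small sketch. The thresholds for small (five or fewer) and large (twenty or more) come from the paragraph above. Treating a binary problem as exactly two candidates and a medium set as anything in between are assumptions made for illustration, as is the function name.

```python
def classify_closed_set(n_candidates: int) -> str:
    """Label a closed-set problem by its number of candidate authors.

    Thresholds follow the rule of thumb in the text (small: five or
    fewer; large: twenty or more). 'binary' as exactly two candidates
    and 'medium' as six to nineteen are illustrative assumptions.
    """
    if n_candidates < 2:
        raise ValueError("a closed set needs at least two candidate authors")
    if n_candidates == 2:
        return "binary"
    if n_candidates <= 5:
        return "small"
    if n_candidates < 20:
        return "medium"
    return "large"


if __name__ == "__main__":
    for n in (2, 4, 12, 25):
        print(n, classify_closed_set(n))
```

A single candidate author is deliberately rejected here, since that situation is a verification task rather than a closed-set comparison.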

In comparative authorship analyses which are forensic, the question as to who defines the set of candidate authors is critical and different from other contexts. Scholarship in biblical, literary, and other forms of academic authorship attribution can allow the researcher to consider many types of evidence, and the scholar may engage in historical research, take into account factors such as inks or types of paper, or examine the content in terms of the psychology or opinions of the writer. All these extralinguistic factors may help determine a set of candidate authors in a specific case. When Fitzgerald investigated the Unabomber manifesto, and with his training as a behavioural analyst, he had no reason to ignore common thematic content between the known and anonymous texts or the extralinguistic forms of evidence in the case. Indeed, it was his duty to identify and evaluate all possible forms of evidence to contribute to the search for the offender. However, generally a linguist’s expert role is separate from that of a general investigator, and it is the police (or other investigators) who define the scope of the closed set. This also makes the police responsible for the evidence that supports the contention that there is indeed a closed set. When this occurs, this can then allow the linguist to write a conditional opinion, constraining their responsibility. For example, it might be possible to write that of this set of authors there is stronger evidence that author A wrote the queried text than for any of the other candidate authors.

Verification tasks (and the reciprocal exclusion tasks) occur when there might be a small set of known documents (K-documents) and a single queried or anonymous document (Q-document). The issue is whether a single Q-document has the same author as the K-documents, and these cases are structurally harder than classification tasks. One problem with these tasks is that analyses tend to express an idea of stylistic distance. This notion of distance is in fact metaphorical and there is no principled measure of how close or distant in style any two texts are, and no well-defined threshold that makes two texts close enough or far enough apart to include them or exclude them as having been written by the same author. The hardest verification task of all is one in which there is a single Q-text and a single K-text. One reason for this is that in any verification task, the K-texts have to be assumed to be representative of the writing style of the author. Although this is an assumption without foundation, it is a necessary assumption to set up a pattern of features against which the Q-text is compared. The assumption can be strengthened by the number and diversity of K-texts, but when there is just a single K-text, this is a very weak assumption indeed.

Identification of precursory authorship includes plagiarism enquiries and identification of textual borrowing that may contribute to allegations of copyright infringement. Such cases require examination of the relationships between texts, and identification and interpretation of whether shared phrases and shared vocabulary might arise independently. Such enquiries rely on the idea of ‘uniqueness of utterance’ (Coulthard, 2004), that is, that accidental, exact textual reproduction between unrelated documents is highly unlikely. They are included here as a form of authorship analysis as it is often the case that the matter at question is whether a particular author really can claim a text as their own, or whether it was originally authored by someone else.

Sociolinguistic profiling is a form of authorship analysis that takes a different approach. Rather than focussing on a comparison between individuals, it focusses on social and identity variables in order to group language users. The range of possible variables is potentially enormous and has been subject to varying degrees of study, including with regard to issues discussed below of the relationships between possible essential characteristics and performed identities. [Footnote 7] Nini (2015) provides a survey of the more common variables including gender, age, education, and social class, with gender being by far the most well studied. Other language influence detection (OLID) is the task of identifying which languages, other than the principal language in which a text is written, might be influencing the linguistic choices in that target language. For example, an individual might be writing in English on the Internet, but it might be possible to discover both Arabic and French interlingual features influencing that English, and this then might inform understanding of the writer’s sociolinguistic history. This form of analysis is more commonly referred to as native language identification (NLI or NLID), but as Kredens et al. (2019a) point out, the idea of a single ‘native’ language that might be identified is problematic – many individuals are raised in multilingual environments, and some might fairly be described as simultaneous bilinguals or multilinguals with contact from several languages affecting their production of a target language.
There is a further profiling task perhaps exemplified by Svartvik’s and Coulthard’s identification of police register in Evans’ and Bentley’s witness statements, or by Shuy’s identification that the use of ‘devil strip’ indicates the writer as having linguistic history in Akron, Ohio (reported in Hitt, 2012). [Footnote 8] This task is to identify a register or dialect that might indicate the community from which the author is drawn. Such a community might be a professional community, a geographic community, or a community of practice (defined by Wenger et al. (2002) as a community based in exchanged knowledge and regular shared activity). To acquire and use the identifying linguistic idiosyncrasies of such a community indicates at least strong linguistic contact with members of the community and possibly membership in it. Sociolinguistic profiling might therefore enable a linguist to draw a sketch of an anonymous offender and so assist investigators in their search for a suspect. In most jurisdictions, such profiling work will never be admitted in court and a report is typically viewed as an intelligence product rather than as evidential. Grant and MacLeod (2020) provide a discussion of the various ways forensic authorship analyses can be used outside of the provision of actual evidence in court.

Author search is a less well-studied form of authorship analysis. The task here is to devise search terms from the text of an anonymous writer, often to find online examples of other writings by the same author. These techniques might be used, for example, to identify an anonymous blogger or to link two anonymous user accounts across dark web fora to indicate that the same individual is behind those accounts. Or they might be used to find an anonymous dark web writer by identifying their writings on the clear web. Koppel et al. (2006b) and Koppel et al. (2011) report authorship experiments involving 10,000 authors and Narayanan et al. (2012) describe their study using a billion-word blog corpus with 100,000 authors. Using three blog posts to search, they can identify an anonymous author 20 per cent of the time and get a top twenty result 35 per cent of the time. Developments since Narayanan include Ruder et al.’s (2016) work, which provides cross-genre examples (with texts from email, the Internet Movie Database (IMDb), blogs, Twitter, and Reddit), and Theóphilo et al. (2019), who tackle this problem using the very short texts of Twitter. Kredens et al. (2019b) refer to this task in the forensic context as ‘linguistically enabled offender identification’ and have described a series of studies and experiments across two corpora of forum messages. In the first experiment, with a corpus of 418 million words from 114,000 authors, they first used a single message-board post as a basis for a search, and in their results, which rank likely authors from the rest of the corpus, they achieved 47.9 per cent recall in the first thirty positions in the ranking (average hit rank = 7.2, median = 4).
This means that in nearly half the experiments, other posts by the anonymous author appeared in the top thirty search hits. When they took fifty randomly selected posts from the author, rather than a single post, their results improved to achieve 89.7 per cent recall in the first thirty predictions (average hit rank = 2.7, median = 1). In an even bigger experiment with a bulletin board corpus of 2 billion words from 588,000 authors, results with a single input post were reduced to 19.2 per cent recall in the first thirty predictions (average hit rank = 5.46, median = 3); and with fifty random posts from a single author as input to 45.1 per cent (average hit rank = 5.46, median = 3). These results demonstrate both the plausibility and the power of author search approaches, and also the difficulty of scaling the search pool up to a full internet-scale technology.
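
The figures reported for these experiments (recall in the first thirty positions, average hit rank, median hit rank) can be illustrated with a brief sketch. It assumes that each search experiment yields the rank at which the true author first appears, or nothing if the author is not retrieved, and that the average and median hit ranks are computed only over searches that succeed within the cut-off. That second point is an assumption, as is the function name; the source studies may define the measures differently.

```python
from statistics import mean, median


def search_summary(hit_ranks, k=30):
    """Summarise author-search results.

    hit_ranks: one entry per search experiment, giving the 1-based rank
    of the true author in the returned list, or None if not retrieved.
    Assumption: hit-rank averages are taken over hits within the top k.
    """
    hits = [r for r in hit_ranks if r is not None and r <= k]
    return {
        "recall_at_k": len(hits) / len(hit_ranks),
        "mean_hit_rank": mean(hits) if hits else None,
        "median_hit_rank": median(hits) if hits else None,
    }


if __name__ == "__main__":
    # Five invented searches: three hits inside the top thirty,
    # one hit at rank 40, and one search that never finds the author.
    print(search_summary([1, 4, None, 40, 10]))
```

With the invented ranks above, three of five searches count as hits, so recall in the first thirty positions is 0.6, with the hit-rank statistics taken over ranks 1, 4, and 10.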

Across these varied authorship analysis tasks, the nature of the genre and the length of the text can be important. It perhaps goes without saying that shorter texts provide less information to an authorship analysis than longer texts, so attributing a single Short Message Service (SMS) text message may be more challenging than attributing a blog post, a book chapter, or an entire book. On the other hand, there may be several hundred or even thousands of known text messages in an authorship problem, and this can compensate for the shortness of individual messages. Grieve et al. (2019) suggest that more standard methods of authorship analysis struggle with texts of fewer than 500 words, and this can lead to approaches that concatenate very short texts. Unfortunately, this is precisely the territory in which forensic authorship analysis is situated. Ehrhardt (2007), for example, reports that for the German Federal Criminal Police Office, the average length of criminal texts considered between 2002 and 2005 was 248 words, with most being shorter than 200 words. Cross-genre comparative authorship problems, comparing, say, diary entries to a suicide note, or social media data to emails, or to handwritten letters, also raise difficulties as the affordances and constraints for different genres naturally affect the individual style. Litvinova and Seredin (2018) describe cross-genre problems as being ‘the most realistic, yet the most difficult scenario’ (p. 223), and there are a limited number of studies which attempt to address this issue directly (see also e.g. Kestemont et al., 2012).

Any discussion of authorship analysis requires some understanding of the process of authorship. Love (2002) writes usefully about the separate functions that occur in the process of authorship, listing precursory authorship (the dependence on prior texts and sources whether directly quoted or not), executive authorship (the wordsmithing itself in stringing together words into phrases or sentences), revisionary authorship (involving heavy or lighter editing), and finally declarative authorship (involving the signing off of a document which might be individual or organisational). These functions have been discussed in some detail in Grant (2008), but it suffices to say here that each of these functions can be fulfilled by one or more different individuals. Thus, many authors may write precursory sources used in a text, many writers might contribute actual phrases or sentences to a text, many authors may edit or change a text, and many may truthfully declare it to be theirs. Authorship attribution can only rationally be applied to texts where preliminary analysis or argument can support an assumption that a single individual was responsible for writing the text or a specified portion of it. In forensic casework, it might be reasonable to assume a single writer is involved if the queried document is a sexually explicit communication, but less so if it is a commercial extortion letter or a defamatory blog post. This reflection points to potential tasks which are currently generally unexplored in the authorship analysis literature, tasks around determining how many authors might have contributed to a text and in what ways.

Finally, there is a set of further tasks which are related to authorship analysis but which are not directly of concern here. Grant and MacLeod (2020) in their study of online undercover policing write of authorship synthesis, which they break down into identity assumption, where an individual’s written presence online needs to be seamlessly taken over by an undercover operative, and linguistic legend building, where the task is to create a linguistic style that is sufficiently different from their own but that can be used consistently to create an online persona. In discussions of authorship disguise there are also obfuscation tasks (see e.g. Juola and Vescovi, 2011) in which the task is to hide the style of the writer as a defence against all the types of authorship analysis described in this Section.

Approaches to Forensic Authorship Analysis

In terms of broad approach, authorship analyses can be split into stylistic analyses and stylometric analyses, and both approaches can be applied to the range of tasks described earlier in this Element. The stylistic approach can be characterised as an approach where in each case, markers of authorship will be elicited from every text and thus the markers of authorship will vary from case to case. In contrast, stylometric approaches attempt validation of markers of authorship in advance of tackling a specific problem and thus hope to create a set of general reliable measures of any author’s style. Neither stylistic nor stylometric approaches necessarily require a manual analysis or a computational approach, but typically stylistic approaches tend to be associated with hand analysis and stylometric approaches with computational analysis. Since its origins, forensic authorship analysis has involved the descriptive linguistic and statistical approaches, as exemplified by Svartvik, and computational techniques, as foreshadowed in Bailey’s computer-assisted analysis and in Coulthard’s corpus work in the Bentley case. Stylistic and stylometric approaches are sometimes seen as in opposition to one another, and possibly from incommensurable paradigms, but again, as exemplified by Svartvik and Coulthard, this does not have to be the case.

When considering the question of progress in forensic authorship analysis, this might address different aspects of the tasks which can be seen as three categories of research:

(1) The linguistic individual: questions of why there might be within-individual linguistic consistency and between-individual linguistic distinctiveness, and to what extent any or all of us possess a discernible ‘idiolect’;

(2) Markers of authorship: questions about the best methods to describe texts and to draw out any individuating features in those texts (sometimes referred to as markers of authorship), and about the best methods for partialing out influences on style other than authorship – for example, from the communities of practice from which an author is drawn, from genre, or from the mode of production of a text;

(3) Inference and decision-making: questions about the best methods to draw logical conclusions about authorship and how to weigh and present those conclusions.

Each of these areas is now addressed.

The Linguistic Individual

Discussions of the linguistic individual often begin with the idea of idiolect. Originating with Bloch (1948) and then Hockett (1958), the concept of ‘idiolect’ indicated a pattern of language associated with a particular individual in a particular context. It has been developed in contemporary forensic linguistic discussions, which often begin with the well-cited quotation from Coulthard that

The linguist approaches the problem of questioned authorship from the theoretical position that every native speaker has their own distinct and individual version of the language they speak and write, their own idiolect, and … this idiolect will manifest itself through distinctive and idiosyncratic choices in texts.

This raises empirical questions as to whether what Coulthard asserts is true, or partly true, and theoretical questions as to whether it has to be true to provide a sound foundation for forensic authorship analysis. Grant (2010, 2020) distinguishes between population-level distinctiveness, which aligns with Coulthard’s theoretical position here, and pairwise distinctiveness between two specific authors, which does not require that ‘every native speaker has their own distinct and individual version of the language’. Authorship analyses which rely on population-level distinctiveness require large-scale empirical validation projects to demonstrate how select baskets of features can fully individuate one author from any others. Other forms of authorship analysis, though, with smaller sets of potential authors, need only rely on distinctiveness within that group or between pairs of authors in that group.

Further theoretical questions arise as to what might explain both within-author consistency and between-author distinctiveness. Grant and MacLeod (2018) argue that these might be best explained through the idea that the individual draws on identity resources which both provide linguistic choices and constrain the choices available to them. These resources include relatively stable resources such as an individual’s sociolinguistic history and their physicality in terms of their physical brain, their age, biological sex, and ethnicity (which index their identity positions relative to others and so shape their linguistic choices). Alongside these are more dynamic resources which explain within-author variation such as the changing contexts or genres of interactions and the changing audiences and communities of practice with which they engage. Such discussions raise the further possibility that some individuals might be more distinctive than others. This possibility is given some empirical support by Wright’s (2013, 2017) study of emails in the Enron corpus, and it has implications for the practice of authorship analysis and for the validation of methods of authorship analysis.

Markers of Authorship

The distinction between stylistic and stylometric approaches tends to drive the type of linguistic features which are considered as potential markers of authorship for use in an analysis.

As might be expected when asking a stylistician to examine a text, features are observed from what Kniffka (2007) refers to as different ‘linguistic levels’ (p. 60). Kniffka refers to grammar, linguistic, and text-pragmatic features as well as idiosyncrasies, ‘special usages’, errors, and deviations. Further to this, McMenamin (1993) in his impressive appendices provides annotated bibliographies of descriptive markers and quantitative indicators of authorial style. These are grouped into types of features in terms of

usage (including spelling, punctuation, and emphases and pauses indicated in writing);

lexical variation;

grammatical categories;

syntax (including phrasal structures, collocations, and clause and sentence structures);

text and discourse features (including narrative styles and speech events, coherence and cohesion, topic analysis, introductions, and conclusions);

functional characteristics (including proportion of interrogatives vs declaratives);

content analysis;

cross-cultural features; and

quantitative features of word, clause, and sentence length, frequency, and distributions.

Grant and MacLeod (2020), following Herring’s (2004) approach to computer-mediated discourse analysis, group authorship features as:

structural features (incorporating morphological features including spelling idiosyncrasies, syntactic features, and punctuation features);

meaning features (including semantic idiosyncrasies and pragmatic habits);

interactive features (openings and closings to messages and including turn-taking and topic-control); and

linguistic features indicative of social behaviours (such as rhetorical moves analysis as applied to sexual grooming conversations; see e.g. Chiang and Grant, 2017).

Whilst there are differences in principles of organisation between these groupings of features, one thing they all have in common is an attempt to move above structural-level features of word choice, syntax, and punctuation. Understanding authorship style in terms of a text’s organisation, meanings, and functions also allows a rich, multidimensional description of a text, which may allow a description of what Grant and MacLeod (2020) refer to as a ‘complete model of the linguistic individual’ (p. 94). Grant and MacLeod also suggest that the higher-level linguistic features go less noticed by writers and therefore might be where individuals leak their true identities when attempting authorship disguise.

In contrast, and with some notable exceptions, stylometric studies tend to focus on features from the ‘lower’ structural levels of language description. There have been a series of reviews of stylometric features. Grieve (2007) examines various features including the frequency and distribution of characters (specifically alphabetical characters and punctuation marks) and words. He also tests a set of vocabulary richness measures, the frequency of words in particular sentence positions, and the frequency of collocations. Finally, Grieve examines character-level n-gram frequency (from bi-grams to 9-grams).

N-gram measures are frequently used in stylometric studies and simply take each sequence of units that arise from a text. Grieve (2007) examines character n-grams, but other researchers also use word n-grams and parts-of-speech n-grams. These are sometimes referred to as character-grams, word-grams, and POS-grams, respectively. Thus, taking an extract from Dickens’ famous opening to A Tale of Two Cities:

It was the best of times, it was the worst of times, it was the age of wisdom, it was the age of foolishness …

In this portion, we can extract the character sequence ‘it_was_the_’, which can be counted three times as a character 11-gram. The initial occurrence ‘It_was_the_’ (beginning with the uppercase ‘I’) is not within this count, but the shorter 10-gram ‘t_was_the_’ does occur four times. In this example, the capitalisation of ‘I’ is counted as a different character, but a decision could be made to ignore capitalisation. Additionally, the same stretch of text, ‘it_was_the_’, could count as a word 3-gram with four occurrences, or indeed a part-of-speech 3-gram (pronoun-verb-determiner) also with four occurrences. It is important to note that when all n-grams are extracted from a text, these n-grams may or may not carry interpretable linguistic information. Thus, in the Dickens text, the character 2-gram ‘es’ has four occurrences (in ‘best’, twice in ‘times’, and in ‘foolishness’), but it is difficult to argue that this textual signal carries truly linguistic information. If, on the other hand, you calculate all character 2- to 9-grams from a text, then you can expect that some of these will pick up understandable linguistic markers such as the regular use of morphological features in a text indicating a preferred tense by one author, or a characteristic use of a prepositional ‘of’ over the genitive apostrophe by another author.
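The counts for ‘it_was_the_’ in the Dickens extract can be checked with a short sketch. It is an illustration only and is not drawn from Grieve (2007): the helper names `char_ngrams` and `word_ngrams` are hypothetical, spaces are written as underscores to match the notation above, and the tokeniser (lowercased alphabetic strings) is a deliberately crude assumption.

```python
import re
from collections import Counter

TEXT = ("It was the best of times, it was the worst of times, "
        "it was the age of wisdom, it was the age of foolishness")


def char_ngrams(text, n, ignore_case=False):
    """Count every character n-gram, writing spaces as underscores."""
    if ignore_case:
        text = text.lower()
    text = text.replace(" ", "_")
    return Counter(text[i:i + n] for i in range(len(text) - n + 1))


def word_ngrams(text, n):
    """Count word n-grams over lowercased alphabetic tokens (a crude tokeniser)."""
    tokens = re.findall(r"[a-z]+", text.lower())
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


eleven = char_ngrams(TEXT, 11)
print(eleven["it_was_the_"])                                   # 3 (case-sensitive)
print(eleven["It_was_the_"])                                   # 1 (capitalised opening)
print(char_ngrams(TEXT, 10)["t_was_the_"])                     # 4
print(char_ngrams(TEXT, 11, ignore_case=True)["it_was_the_"])  # 4
print(word_ngrams(TEXT, 3)[("it", "was", "the")])              # 4
```

The `ignore_case` flag makes the capitalisation decision explicit: the analyst chooses it, and the counts change with that choice.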

That character n-grams can both be very useful as authorship features and also have no linguistic meaning can be a real issue in forensic authorship analyses. The problem is that of explicability in court. An expert witness who can describe and fully explain what it is that one author does that a second author does not do adds not only credibility to their testimony, but also validity to their analysis in terms of providing demonstrable truths. Without linguistic explanation of features, there can be an over-reliance on black-box systems, equivalent to reliance on mere authority of an expert witness in court. This is only partially countered by well-designed validation studies. Limitations of approaches that use opaque features and methods are picked up in the Section on Progress in Stylistics and Stylometry (see Footnote 9).

In his study, Grieve (2007) finds that character n-grams (up to about 6-grams) can be useful as authorship markers along with various measures of word and punctuation distribution, and shows how with a decreasing number of candidate authors in a closed set, other features, including some measures of lexical richness and average word and sentence length, might have some role to play, but generally lack a strong predictive power.

Stamatatos (2009) also provides a survey of stylometric authorship analysis methods; although his interest is more in the computational processes used in combining features and producing outcomes rather than the features themselves, he does use a very similar set of features to Grieve. These include:

lexical features including word length, sentence length, vocabulary richness features, word n-grams, and word frequencies;

character features including n-grams and character frequencies (broken down into letters, digits, etc.);

syntactic features based on computational part-of-speech tagging and various chunking and parsing approaches; and

semantic features such as synonyms and semantic dependency analysis.
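A minimal sketch of the first two groups, the lexical and character features, is given here. It is not Stamatatos’s implementation: the function name `surface_features` is hypothetical, the sentence splitter and tokeniser are crude assumptions, and the type-token ratio is only the simplest of the vocabulary richness measures and is known to be sensitive to text length. The syntactic and semantic groups need taggers and parsers and are not attempted. The text is assumed to be non-empty.

```python
import re
from collections import Counter


def surface_features(text):
    """Return a few simple lexical and character features for one non-empty text."""
    # Assumption: sentences end at '.', '!' or '?'; real texts need a better splitter.
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text.lower())
    # Character frequencies broken down into broad classes.
    char_classes = Counter(
        "letter" if c.isalpha() else
        "digit" if c.isdigit() else
        "space" if c.isspace() else
        "other"
        for c in text
    )
    return {
        "mean_word_length": sum(len(w) for w in words) / len(words),
        "mean_sentence_length": len(words) / len(sentences),  # in words
        "type_token_ratio": len(set(words)) / len(words),
        "char_classes": dict(char_classes),
    }


features = surface_features("The cat sat. The cat ran away!")
for name, value in features.items():
    print(name, value)
```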

Stamatatos does not specifically provide a feature-by-feature evaluation, but he does comment that in stylometric work, the most important criterion for selecting features is their frequency. Essentially, without large counts of features there is insufficient potential variability between authors for stylometric algorithms to find points of discrimination. This computational hunger for bigger counts of features, and so distributional variance between authors, drives not only the types of feature, but also a requirement of sufficient length of text. Furthermore, Stamatatos cites Koppel et al. (2006a) who suggest that the mathematical instability of features is also important. This refers to features where there is more linguistic affordance for choice between alternatives (within a particular genre of text), which are thus more likely to create usable markers of authorship.

Argamon (2018) explicitly acknowledges that in feature selection for computational stylometric analyses there is a compromise:

Choice of such features must balance three considerations: their linguistic significance, their effectiveness at measuring true stylometric similarity, and the ease with which they can be identified computationally.

He acknowledges that some linguistically meaningful features such as metaphor are not yet sufficiently computationally tractable and points to a classic feature, the relative use of function words, as the kind of feature that does meet these criteria. Function word analysis originates with Mosteller and Wallace’s (1964) classic study of the authorship of The Federalist Papers and is now included in many current stylometric studies. Argamon further points out:

Function word use (a) does not vary substantially with topic (but does with genre and register) and (b) constitutes a good proxy for a wide variety of syntactic and discourse-level phenomena. Furthermore, it is largely not under conscious control, and so should reduce the risk of being fooled by deception.
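The ‘relative use of function words’ can be made concrete with a short sketch that converts raw counts into rates per 1,000 tokens, so that texts of different lengths can be compared. The word list, the function name `function_word_profile`, and the tokeniser are all assumptions made for illustration; published studies typically use much longer lists.

```python
import re
from collections import Counter

# A tiny illustrative list (an assumption, not taken from any cited study).
FUNCTION_WORDS = ("the", "of", "and", "to", "in", "on", "upon", "by", "while", "whilst")


def function_word_profile(text, vocabulary=FUNCTION_WORDS):
    """Return each function word's rate per 1,000 tokens in a non-empty text."""
    tokens = re.findall(r"[a-z]+", text.lower())
    counts = Counter(tokens)
    return {word: 1000 * counts[word] / len(tokens) for word in vocabulary}


profile = function_word_profile("It was the best of times, it was the worst of times")
for word, rate in profile.items():
    print(f"{word:>7}: {rate:6.1f} per 1,000 tokens")
```

Because the profile is built from a fixed, closed list, profiles from different texts share the same dimensions and can be passed directly to a similarity measure or classifier.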

Using function words to point to discourse-level phenomena is similar to the route Argamon takes to provide more linguistically informed features. Argamon and Koppel (2013) show how function words like conjunctions, prepositions, and modals (amongst others) can be used in authorship problems and then can be interpreted as linguistically meaningful features in a systemic functional context. A similar approach is taken by Nini and Grant (2013), who suggest that such a tactic can bridge the gap between stylistic and stylometric approaches to authorship analysis, and by Kestemont (2014), who argues that function words can usefully be reinterpreted as functors to create linguistically explicable features.

Stylometric methods thus tend to use a fairly small and closed set of features across authorship problems (although n-gram analysis might be seen as a method for generating many features from a text). This has meant that much of the focus in stylometry has shifted to the methods of decision-making through the application of different computational approaches to the classification of texts. Stylometry has given rise to a series of authorship competitions run by an academic collective under the umbrella of PAN (https://pan.webis.de) and although in PAN competitions there is some variation in the baskets of features used by different teams addressing different problems, the overlap between feature sets is more striking. Evidence from PAN suggests that the current idea of progress in computational forensic authorship analysis tasks will come from better classifiers and deep-learning approaches (or perhaps approaches that enable fuller understanding of the classifications), rather than progress in broadening the features that might be indicative of authorial style. In contrast, stylistic analysis might be criticised for allowing too much variation in both features and methods between analysts. Feature elicitation from texts requires a more rigorous protocol for deriving features, and more rigour is required for making authorship decisions on the basis of those features. For both approaches there needs to be a greater focus on protocols that will ensure that the features extracted can discriminate between authors, rather than between genres, registers, or topics of texts. This is an issue to which we return.

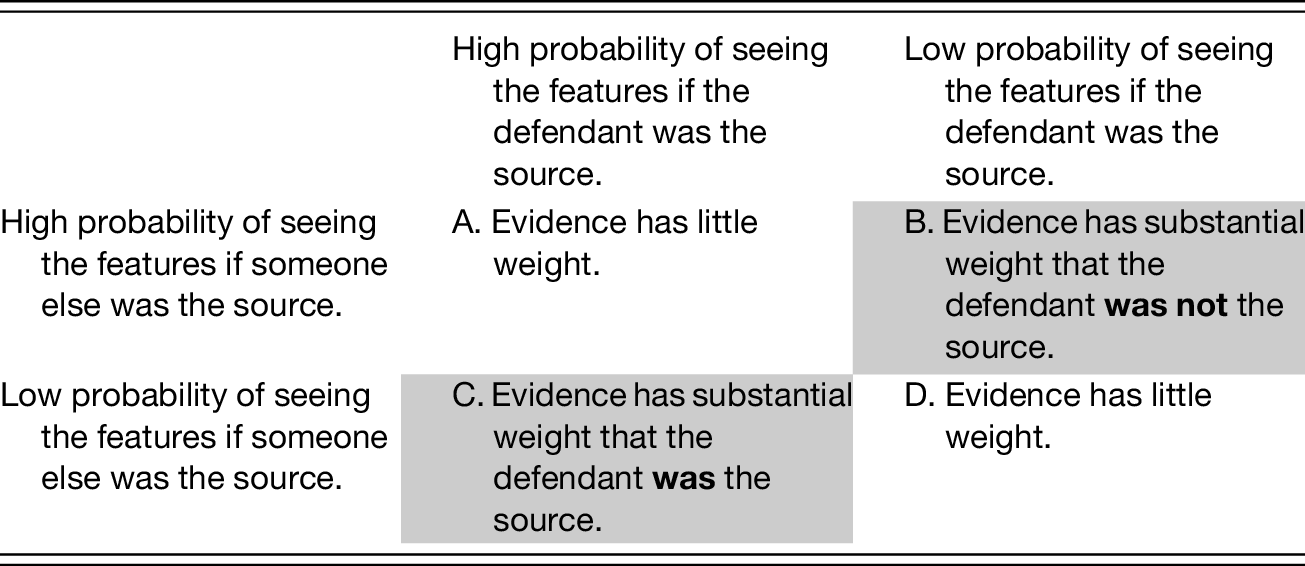

Inference and Decision-Making

The principal purpose of Mosteller and Wallace’s (1964) book on the authorship of The Federalist Papers was not the authorship question per se. The focus in fact was on how Bayesian approaches to likelihood could be used in making complex decisions such as authorship questions. As they wrote later:

A chief motivation for us was to use the Federalist problem as a case study for comparing several different statistical approaches, with special attention to one, called the Bayesian method, that expresses its final results in terms of probabilities, or odds, of authorship.
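What it means to express a result as ‘odds of authorship’ can be sketched in a few lines: the posterior odds are the prior odds multiplied by a likelihood ratio for each piece of evidence. The sketch below is a much-simplified illustration and not a reconstruction of Mosteller and Wallace’s models. The Poisson assumption, the independence of words, the function names, and every rate and count are invented for the example.

```python
import math


def poisson_log_pmf(count, rate_per_1000, n_tokens):
    """Log probability of `count` occurrences under a Poisson model (rate > 0)."""
    mean = rate_per_1000 * n_tokens / 1000
    return count * math.log(mean) - mean - math.lgamma(count + 1)


def log_posterior_odds(counts, n_tokens, rates_a, rates_b, prior_odds=1.0):
    """Log odds for author A over author B, treating the words as independent."""
    total = math.log(prior_odds)
    for word, count in counts.items():
        total += poisson_log_pmf(count, rates_a[word], n_tokens)
        total -= poisson_log_pmf(count, rates_b[word], n_tokens)
    return total


# Hypothetical rates per 1,000 words for two candidate authors (invented values).
rates_a = {"upon": 3.0, "whilst": 0.5}
rates_b = {"upon": 0.2, "whilst": 0.5}
# Hypothetical counts observed in a 2,000-word questioned text.
observed = {"upon": 6, "whilst": 1}

odds = math.exp(log_posterior_odds(observed, 2000, rates_a, rates_b))
print(f"posterior odds A:B = {odds:.3g}")
```

In this toy case only ‘upon’ shifts the odds, because the two authors are assumed to use ‘whilst’ at the same rate and its likelihood ratio is therefore 1.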

This focus on methods of decision-making has followed through into much stylometric research. As noted earlier in this Element, the principal innovation across most of the PAN studies has been in methods of decision-making in terms of using advanced algorithmic techniques. This innovation includes novel ways to measure textual similarity or difference and innovation in the application of machine-learning and classification techniques to answer various authorship problems. Argamon (2018) provides a discussion of such techniques for the non-expert and points out that analysts should not assume that methodological trials generalise to real-world authorship attributions; rather, they need to ‘evaluate the chosen method on the given documents in the case’ (p. 13).