1 Introduction

1.1 The Rise of Generative AI

Computer science education is undergoing a transformation as generative AI reshapes how we learn, teach, and create knowledge. Generative AI quickly advanced from niche to commodity in November 2022 with the launch of ChatGPT by OpenAI (Thorbecke Reference Thorbecke2023). At the one-year anniversary of the launch, news outlets categorized ChatGPT as “Changing the Tech World” (Edwards Reference Edwards2023b) and starting an “AI Revolution” (Thorbecke Reference Thorbecke2023). In that first year, competitors also released AI chatbots, including Google’s Bard and Amazon’s Q (DeVon Reference DeVon2023). OpenAI also released DALL-E, software that produces artwork based on user-provided text prompts, in October 2023. More recently, the availability of multimodal models, which process and integrate multiple types of input such as text, images, and audio, has expanded rapidly, with both closed models (developed and maintained by private organizations and accessible only through web requests) and open models (freely accessible for use and for running on local hardware) contributing to the fast growing landscape of generative AI tools. The wide availability of such a range of generative AI models offers enormous potential for innovation, especially within education, but it also raises a number of pressing ethical questions.

One major concern is the use of copyrighted work to train models. Authors and artists have sued after their creative works have been used to train models that then produce similar outputs without compensation or credit (Katersky Reference Katersky2023; Edwards Reference Edwards2023a). Ethical concerns about generative AI have resulted in the United States’ National Science Foundation issuing two major guidelines involving proposal creation and review (Notice to research community: Use of generative artificial intelligence technology in the NSF merit review process 2023). First, proposers are now “encouraged” to disclose the extent to which they used generative AI to develop their proposal. Second, reviewers are prohibited from uploading any content from proposals because of concerns that models may use that as training text. This could result in the model returning that same text to other users as responses to related prompts, breaching the confidentiality of the proposals and potentially leading to unintentional plagiarism.

Another major concern is how the use of generative AI may impact professionals, particularly regarding authorship and credit in academic and creative fields. For instance, the Writers Guild of America held a 148-day strike partly over disagreements related to credit and income due to the increasing use of generative AI in scriptwriting (Coyle Reference Coyle2023). In addition, some companies have replaced customer service representatives with ChatGPT because it responded to customer questions as well or better than many customer service agents (Verma Reference Verma2023). In the academic sphere, policies are evolving to address these concerns; for example, the Association of Computing Machinery (ACM) stipulates that while generative AI tools like ChatGPT can be used to create content, their use must be fully disclosed in an acknowledgments section, and they cannot be listed as authors of a published work.

Finally, there are examples from several fields of authors using ChatGPT and passing off its output as their own writing, then being subsequently discovered due to inaccuracies in the information that ChatGPT returns. For example, two lawyers and their firm were fined for submitting formal court documents generated by ChatGPT that contained fictitious legal research (Neumeister Reference Neumeister2023). Vanderbilt University faced backlash after it sent an email to students reflecting on a tragic shooting at Michigan State University, which was later revealed to have been drafted using ChatGPT – a decision widely criticized for its perceived lack of genuine human empathy (Powers Reference Powers2023). Similarly, recent controversies have arisen with the use of other generative AI tools in creative and commercial contexts, such as advertisements for Google Gemini (that suggested using it to draft personal letters) and for Toys ‘R’ Us (which used OpenAI’s Sora model to generate a full-length video), both of which sparked debates about the erosion of human creativity and authenticity. A judge in Colombia even asked ChatGPT for help with case law, asking, “Is an autistic minor exonerated from paying fees for their therapies?” to which ChatGPT responded, “Yes, this is correct. According to the regulations in Colombia, minors diagnosed with autism are exempt from paying fees for their therapies” (Stanly Reference Stanly2023). Although this response agreed with the judge’s decision, the use of ChatGPT for guiding legal decisions generated criticism at the time.

1.2 Challenges and Opportunities in Education

In the field of education in general, there are major concerns about students using ChatGPT to cheat. A BestCollegesSurvey of 10,000 current undergraduate and graduate students found that 51 percent of college students believe that using such tools for assignments and exams is cheating, compared with only 20 percent who disagreed (Nietzel Reference Nietzel2023). In early 2023, coinciding with the first school term where ChatGPT was widely available and well known, concerns were voiced on a national scale. Many instructors at Harvard prohibited students from using ChatGPT, considering it a form of academic dishonesty (Duffy and Weil Reference Duffy and Weil2023), and articles hypothesized about using it for cheating (Vicci Reference Vicci2023). There may be some data to back up this hypothesis – use of ChatGPT tailed off in June 2023, corresponding with summer break, then increased again in September (Barr Reference Barr2023). Some good news, however, is that an annual survey performed by Stanford researchers showed that the percentage of high school students reporting that they engaged in at least one “cheating” behavior in the past month had remained unchanged (Spector Reference Spector2023). This suggests that while the tools may change, new technologies may not necessarily be encouraging a greater proportion of students to use them dishonestly.

The field of computer science has not been immune to legal controversies regarding AI-based code generation. For instance, a class action lawsuit was filed in November 2022 against GitHub Copilot by a group of software engineers, alleging that GitHub, Microsoft, and OpenAI violated copyright, privacy, and business laws by using copyrighted developer source code without permission to train their models (Park et al. Reference Park, Kietzmann and Killoran2023). The plaintiffs argued that Copilot often produced code suggestions that were identical to the original copyrighted works without proper attribution or consent. Although GitHub and its partners contested these claims, the lawsuit raised ethical and legal questions about AI’s reliance on publicly available code. Despite such controversies, discussions in the popular media around tools like GitHub Copilot and ChatGPT often focus on their performance and providing guidance on their use, rather than focusing on the ethical implications of AI-generated code or predicting significant job losses within the computing industry.

From an academic perspective, large language models (LLMs) were first considered by the computer science education community in February 2022, at the Australasian Computing Education conference, when Finnie-Ansley et al. presented their paper “The Robots are Coming: Exploring the Implications of OpenAI Codex on Introductory Programming” (Finnie-Ansley et al. Reference Finnie-Ansley, Denny, Becker, Luxton-Reilly and Prather2022). This was the first paper published in a computing education venue that explicitly assessed the capabilities of an LLM designed for code generation, OpenAI’s Codex, when applied to standard introductory programming (CS1) problems. The paper compared the performance of the Codex model to the performance of a cohort of students on the same set of programming problems, finding that the model’s performance would see it rank in the 17th position out of a class of 71 students. In addition, an analysis involving several variations of the classic “Rainfall” problem (Fisler Reference Fisler2014) revealed that from the same input prompt, the Codex model would produce multiple unique solutions varied in length, syntax, and algorithmic approach. The authors concluded this work by stating

“we have examined what could be considered an emergent existential threat to the teaching and learning of introductory programming. With some hesitation we have decided to state how we truly feel – the results are stunning – demonstrating capability far beyond that expected by the authors.”.

This paper appeared at a time when awareness of generative AI was not yet widespread in the academic community, and thus set the stage for a broader discussion of the implications of this new technology for educators and for classroom practice.

Subsequent discussion and position papers, including those by Denny, Prather, et al. (Reference Denny, Prather and Becker2024), Becker et al. (Reference Becker, Denny, Finnie-Ansley, Luxton-Reilly, Prather and Santos2023), and Prather, Denny et al. (Reference Prather, Denny and Leinonen2023), have further explored both the opportunities and challenges that LLMs present to computing education. Among the challenges highlighted are concerns related to academic integrity, such as the potential for increased plagiarism and unauthorized code use; the danger of students becoming too reliant on AI, which might hinder their understanding of core programming concepts; and the risk of novices being misled by inappropriate or confusing outputs. In contrast, the opportunities presented by LLMs may be transformative. The ability to generate high-quality educational resources, provide instant feedback, and shift the educational focus toward solving more complex, engaging problems rather than a more narrow focus on low-level coding and related syntax issues represents potentially significant positive changes for computing education.

1.3 Stucture and Audience

In this Element, we consider and discuss the ways in which generative AI (GenAI) could be used in computer science education, both positive and negative. While computer science instruction covers a broad set of topics, including but not limited to how hardware and networks work, how to code, how to analyze code, and how to prove properties of code, most of the existing efforts and literature have focused on tertiary-level coding courses. As a result, much of the related work that is discussed in our Element focuses on the use of LLMs in such contexts. We do, however, provide some insights into the challenges and opportunities for using LLMs more broadly, such as in K–12 education or for instruction on specific topics such as formal proofs.

This Element is written with several audiences in mind. Educators not familiar with large language models can gain insight into the impacts, both positive and negative, of their uses, as well as some of the strategies people are exploring that range from embracing their use to mitigating the downsides of their use. For this audience, we have included some background on how large language models work, and we define vocabulary as it arises. Computer science education researchers who are interested in entering this emerging research area will learn about the latest research and where to seek additional information. For this audience, we have carefully cited the research we describe, including much of the most recent work published as of August 2024.

We begin in Section 2 by providing insight into how large language models work, starting with more simple technologies that are easier to reason about and ending with their current capabilities with respect to computer science education. We then describe the key findings of several studies on both instructor and student perceptions of the use of generative AI in computer science classrooms in Section 3.

The next part of the Element explores current uses of generative AI. First, we present a variety of ways instructors could use generative AI tools to improve the way that they prepare for instruction (Section 4). Then, in Section 5, we explore a range of uses of generative AI tools during instruction. Finally, we discuss how to integrate these separate capabilities into unified end-user tools in Section 6.

The final part of this Element tackles bigger implications of the technology. Section 7 discusses how learners and educators may be impacted by students’ misuse of generative AI tools. Then, in Section 8, we look to the future and consider how computer science education might change in response to the adoption of GenAI in industry. If industry is embracing generative AI coding tools, what different skills should we be teaching to help computer science students be the most efficient, skilled software developers upon graduation? Finally, we present a case study of a course designed and piloted at the University of California, San Diego, which fully embraces the use of generative AI. This innovative course defines learning goals that match industry needs in the presence of generative AI tools and includes training for students on GitHub Copilot, as well as the integration of more ambitious and interesting coding projects made possible due to the availability of Copilot. The course also adjusts how assessments are run and graded to provide a balance between encouraging the use of Copilot and ensuring that students are still learning the necessary technical material.

2 Understanding Large Language Models and ChatGPT

To better understand the strengths and weaknesses of chatbots such as ChatGPT, we begin by providing a brief introduction to the technology. We start by presenting a related technology – a machine learning image classifier. We then discuss LLMs, along with some of their common behaviors. Finally, we provide a summary of evaluations of the capabilities of LLMs for performing coding-related tasks.

Vocabulary

Image Classifier: A machine learning model designed to classify or recognize objects, animals, or other entities in images by analyzing their visual features, such as a giraffe, bus, or person’s face.

Generative AI: Artificial intelligence models that generate new content, such as text, images, audio, or video, based on learned patterns from large datasets.

Large Language Models (LLMs): A type of generative AI model trained on large text datasets to produce coherent and contextually appropriate text outputs by generating sequences of “tokens” (words or phrases).

ChatGPT: A conversational large language model (LLM) developed by OpenAI and designed to generate human-like responses in a dialogue format.

Hallucination: A phenomenon where a large language model generates incorrect or nonsensical responses that appear plausible but are not grounded in its training data or factual information.

2.1 Image Classifier

An image classifier is a program that can recognize images, such as a giraffe, bus, or person’s face. Creating an image classifier consists of two phases: training and use.

The training phase is how the classifier learns to associate attributes of the image with the results. In order to train a classifier, a database of pictures that have already been “labeled” (tagged with what is in the picture) is needed. For example, if an “animal” classifier is wanted, then a database of pictures with different types of animals is required, each labeled with the type of animal in the picture. For a vehicle classifier, a database of pictures with different types of vehicles is needed, each labeled with the type of vehicle in the picture. A program analyzes the database of pictures, determining the characteristics that distinguish, say, pictures of motorcycles from pictures of trucks.

The attributes of the training set are a critical component of the accuracy of the trained model. A “good” training set would include a wide variety of instances for each type of item – including different colors, camera angles, and other features. Not surprisingly, the more labeled images an image classifier has, the more accurate it is at classifying images. In addition, an image classifier is unable to place something in a category for which it was not trained without extra computation. If the training set is too small, then the classifier might get trained on “red herrings” – attributes that just happen to be in the pictures. For example, if someone trained the animal classifier on snakes that all live in the desert and squirrels that all live in the forest, then a picture of a snake in a forest might be identified as a squirrel, and a picture of a squirrel in a desert might be identified as a snake.

Some databases may even contain pictures that have been labeled unknowingly. For example, to automatically tag a particular friend when a picture is uploaded to Facebook®, Facebook needs an image classifier. Its training data on a specific friend would consist of all the pictures of that friend that have been labeled by users. Additionally, completing security puzzles by Captcha, such as clicking on all the boxes in a picture that contain traffic lights, motorcycles, or trucks, contributes labeled images to improve an image classifier.

There are major ethical concerns with image recognition being used for facial recognition of humans because of the interaction between people of color, the inaccuracy of models on those people, and historical injustices. Clearview AI has gone a step further than Facebook, mining social media to create an extensive database of images and names, leading to a proposed fine by the Netherlands (Corder Reference Corder2024). The database is marketed to law enforcement to identify suspects in crimes, which raises ethical questions, since the data is not verified to be correct, and arrests of innocent civilians have occurred because of misidentification in such systems (Johnson Reference Johnson2023). Facial recognition has been shown to be especially poor on individuals with darker skin (Sanford Reference Sanford2024); this could be because of either poor training sets or the challenge of distinguishing their features in poor lighting. Therefore, one must consider not only the technological perspective of AI, but also its impact on different populations.

2.2 Large Language Models

Large language models are a type of artificial intelligence model designed to generate text by predicting the next word, or “token,” in a sequence. Unlike image classifiers, which rely on labeled datasets to learn from, LLMs use a method called self-supervised learning. In self-supervised learning, the model learns from vast amounts of text data without needing explicit labels. Instead, the model uses parts of the text to predict other parts (enabling it to verify these predictions automatically), making the process of data preparation more scalable and efficient.

So, in essence, an LLM simply predicts tokens (or words). Consider a very basic sentence (which we can think of as a “prompt”), which is incomplete:

Prompt: “The cat sat on the”

An LLM is able to predict the most likely next word (token) to follow the sequence “The cat sat on the”. It does this by considering the context and calculating the probability distribution for all possible next words it has learned from its training. Based on the patterns it has learned, the model might generate a frequency distribution like “mat”: 60 percent, “floor”: 20 percent, “chair”: 10 percent, “table”: 5 percent, “roof”: 3 percent, and so on.

In this example, the word “mat” has the highest probability (60 percent) because the phrase “The cat sat on the mat” is a common phrase the model has likely seen many times in its training data. Therefore, the model is most likely to select “mat” as the next word. However, one parameter of an LLM is the “temperature” value, and this setting controls the randomness of the output. Thus, it might occasionally choose another word like “floor” or “chair,” and would be more likely to do so with a higher “temperature” setting. Finally, after selecting “mat,” the LLM can repeat the process all over again – thus generating a coherent sentence that aligns with the patterns it has learned from its training data.

During training, an LLM analyzes large datasets comprising diverse types of text, such as books, articles, and web content. Because LLMs are trained to predict tokens based on their context, they can generate a wide range of text outputs, from simple factual answers to more complex tasks like writing essays, generating code, or summarizing long documents. However, they are not perfect; their outputs are based on probabilities derived from their training data, which means they can sometimes generate inaccurate or even nonsensical responses, often referred to as “hallucinations.”

One of the most well-known tools that uses an LLM to generate text is ChatGPT, developed by OpenAI. ChatGPT leverages the LLM architecture and generates human-like responses by predicting tokens that fit well in a conversation. To make its responses more useful, safe, and aligned with what a human would expect, OpenAI used a technique called Reinforcement Learning from Human Feedback (RLHF). In this process, human trainers rated the model’s responses, giving positive feedback for answers that align well with conversational norms and factual accuracy, and negative feedback for responses that were misleading or unhelpful.

The probabilistic generation of tokens helps explain some of the strengths and weaknesses of ChatGPT. If there are many examples in the training set similar to what is being generated, the model can draw from a robust probability distribution, resulting in highly accurate and predictable outputs. For example, the question “is the world round?” will likely result in the output “Yes,” as this is a common question–answer pair in the training data. Similarly, asking ChatGPT to finish a series of fairly unique, famous quotes that would have appeared many times in its training set typically results in the expected output. For example: “With great power” … “comes great responsibility” (Spider-Man); “Don’t let the muggles” … “get you down” (Harry Potter). For more complex tasks that do not have direct matches in the training data, ChatGPT relies on its ability to detect and combine patterns it has learned during training. For instance, if asked to generate a resume for a specific computer scientist, ChatGPT might draw on general information about typical resume structures and content it has seen, as well as any relevant information it has learned about computer scientists in general. The result is a resume that appears plausible and well-structured. However, because ChatGPT generates content based on patterns rather than specific knowledge, the output may contain a mix of both accurate and inaccurate information. This blending of learned patterns allows ChatGPT to produce creative and contextually appropriate text, but it also means that users need to verify the details to ensure they are correct.

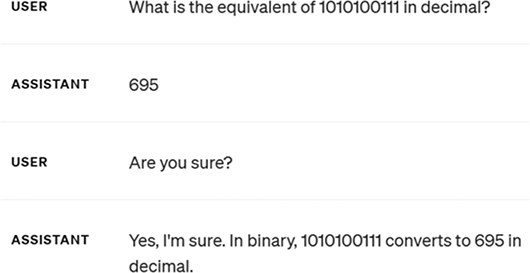

As mentioned earlier, when an LLM provides an incorrect answer to a factual question, it is sometimes called a hallucination. Another interesting characteristic of LLMs is that they are sometimes characterized as producing responses that are confidently incorrect. This refers to ChatGPT answering a question incorrectly with the same wording and confidence as answering a question accurately, with no mitigating language that a human might use (e.g., “I’m not sure, but this might be the answer”). See, for example, the output in Figure 1. Viewing LLMs as probabilistically generating answers that fit the pattern again explains this trend. ChatGPT was built to generate likely answers that fit a pattern, not factual answers. Therefore, it is always necessary to fact-check what an LLM produces, even when it does not “admit” to being unsure. This can have negative effects if people are not aware of these limitations. For example, ChatGPT has been shown to produce inaccurate responses when asked questions about drug information; ChatGPT’s answers to nearly three-quarters of drug-related questions reviewed by pharmacists were incomplete or wrong. In one case, researchers asked ChatGPT whether a drug interaction exists between the COVID-19 antiviral Paxlovid and the blood-pressure-lowering medication verapamil, and ChatGPT indicated no interactions had been reported for this combination of drugs (ASHP 2023).

Figure 1 A modern LLM appearing “confidently incorrect” (screenshot from March 2024). The correct answer is 679.

While LLMs like ChatGPT are updated periodically, they do not continuously scrape the internet for new information. Instead, they are retrained on new datasets at regular intervals, incorporating updated knowledge and fine-tuning from user interactions and feedback. However, promising techniques are emerging for improving the accuracy of LLM-generated responses in specific contexts. One effective approach is Retrieval-Augmented Generation (RAG), which combines the power of LLMs with external databases. Unlike traditional LLMs, which rely solely on their training data, RAG allows the model to retrieve relevant information from an external database or knowledge source before generating a response. This means that instead of guessing based on patterns it has seen during training, the model can refer to up-to-date or domain-specific information to provide more accurate and contextually relevant answers. For example, a model enhanced with RAG could retrieve the latest medical guidelines to answer a question about drug interactions more reliably. Another advancement in LLM technology is the use of chain-of-thought prompting, a method inspired by how humans approach complex problems. Instead of generating a single, immediate answer, the model explicitly articulates its reasoning process step by step before arriving at its final output. Such an approach can improve the ability of the model for tackling difficult or multistep problems, and by showing these steps it makes its reasoning more apparent to users.

2.3 LLMs for Coding

What about generating source code for a program? Large language models have been trained on enormous quantities of publicly accessible code from GitHub repositories and programming tutorials on the web (M. Chen et al. Reference Chen, Tworek and Jun2021). Given that programming languages have a much smaller set of grammatical rules than, say, natural languages, LLMs are especially good at producing code that is syntactically correct. In addition, their ability to make predictions based on context (such as detailed problem specifications) means they are often highly capable of producing correct solutions to programming problems.

A focus of much of the early work within the computing education community involved exploring the breadth of problems to which generative AI models could be applied. However, measuring the capabilities of such models is challenging given the rapid pace at which improvements are being made. For example, the results from “The Robots Are Coming” paper published in February 2022 (Finnie-Ansley et al. Reference Finnie-Ansley, Denny, Becker, Luxton-Reilly and Prather2022) were replicated in July 2023 – less than 18 months later – by a working group exploring LLMs in the context of computing education (Prather, Denny et al. Reference Prather, Denny and Leinonen2023). Compared to the original performance of the Codex model (which scored an average of 78.3% across two exams), the GPT-4 model (which was the state-of-the-art model at the time of the working group’s replication) exhibited dramatic improvement, scoring an average of 97% over two programming exams.

In general, LLMs have shown considerable proficiency in programming tasks, even beyond typical CS1-type problems. For example, Cipriano and Alves (Reference Cipriano and Alves2023) explored object-oriented programming (OOP) assignments, where students are typically asked to follow a set of best practices to design and implement a suite of interrelated classes (either by composition or inheritance). Using the GPT-3 model, they found that it was able to generate code that would achieve good scores when evaluated against unit tests, but that minor compilation and logic errors were still somewhat common. Interestingly, the specifications for the assignments that they evaluated were written in Portuguese, which did not appear to negatively impact performance despite the fact that Portuguese content makes up only a very small fraction of the model training sets.Footnote 1 Similarly, in follow-up work to their original paper, Finnie-Ansley et al. (Reference Finnie-Ansley, Denny and Luxton-Reilly2023) explored the performance of the Codex model on a collection of CS2 problems from a standard data structures and algorithms course. They found that when compared to the performance of students who had tackled the same problems on a test, the Codex model’s performance would place it just within the top quartile of the class.

Of course, while code-writing questions are common, there are many other kinds of problems of relevance to computing courses. For example, multiple-choice questions are in common use in large classes and are frequently used to assess code comprehension skill (Petersen, Craig, and Zingaro Reference Petersen, Craig and Zingaro2011). Savelka et al.’s investigation into the evolving efficacy of LLMs from GPT-3 to GPT-4 highlights a progressive improvement in answering multiple-choice questions for programming classes (Savelka, Agarwal, An et al. Reference Savelka, Agarwal, Bogart and Sakr2023; Savelka et al. (Reference Savelka, Agarwal, An, Bogart and Sakr2023). This suggests an increasing capacity of these models to mimic, if not surpass, human-level understanding in standard assessment formats.

Another question format that is unique to programming education is Parsons problems, which require the rearrangement of initially scrambled lines of code (Ericson et al. Reference Ericson, Denny and Prather2022). Unlike code-writing questions, where a code-generating AI model has the flexibility to synthesize code one token at a time based on the preceding tokens, Parsons problems provide the constraint that a solution must be formed using only the code blocks provided. Reeves et al. (Reference Reeves, Sarsa and Prather2023) examined the performance of the Codex model on a suite of Parsons problems obtained from a review of the literature. They found that the model could successfully solve around half of the Parsons problems, and observed that Codex would very rarely modify or add new lines of code, but that incorrectly indenting the code was the most common reason for failure. This investigation into solving Parsons problems with text-based models was complemented by the work of Hou et al. (Reference Hou, Man and Mettille2024), exploring the capabilities of so-called ‘vision’ models that could be provided with a bitmap version of a Parsons problem produced via a screenshot. In a comparison of two popular multimodal models, they found that at the time of their evaluation, GPT-4V clearly outperformed Bard, solving around 97% of the problems presented to it. Hou et al. conclude that the use of visual-based problems by educators, as a way to reduce student reliance on text-based LLMs for solving coursework, is unlikely to be a viable solution in the long term. They also recommend that computing educators reconsider their assessment practices in light of such impressive model performance.

3 Educator and Student Perceptions

Given the rapidly evolving capabilities of generative AI tools, it is essential to understand how they are perceived by both educators and students within the context of computing education. Several studies have shed light on these perceptions, revealing a complex landscape of excitement, concern, and adaptation strategies.

3.1 Educator Perceptions

Educator views on generative AI tools span a spectrum that is nicely captured in the title of the paper by Lau and Guo: “From ‘Ban It Till We Understand It’ to ‘Resistance is Futile.”’ (Lau and Guo Reference Lau and Guo2023). In this work, they investigated the perspectives of university programming instructors on adapting their courses in response to the increasing use among students of AI code generation and explanation tools like ChatGPT and GitHub Copilot. Conducted in early 2023, this research involved semistructured interviews with 20 introductory programming instructors from diverse geographic locations and institution types. By focusing on exploring immediate reactions and longer-term plans, the study aimed to gather a broad spectrum of strategies and viewpoints on integrating or resisting AI coding tools in educational settings. In the short term, instructors were predominantly concerned about AI-assisted cheating, leading to immediate measures like placing a greater emphasis on exam scores, banning AI tools, and exposing students to the tools’ capabilities and limitations. Looking further ahead, instructors diverged into two main camps: those aiming to resist AI tool usage, focusing on teaching programming fundamentals, and those embracing AI tools, viewing them as preparation for future job environments. This division underscores the lack of consensus and the exploratory nature of current approaches to AI in computing education.

Strategies to resist AI tool usage included designing “AI-proof” assignments, reverting to paper-based exams, and emphasizing code reading and critique over programming tasks. Instructors who were more open to integrating AI tools proposed using these technologies to offer personalized learning experiences, assisting with teaching tasks, and redefining the curriculum with a greater emphasis on software design, collaboration with AI, and creative project work. Overall, the findings suggest a need for adaptive teaching strategies that either incorporate AI as a tool for learning and development or reinforce traditional coding skills in novel ways that are resistant to trivial LLM-generated solutions. Lau and Guo also proposed a diverse set of open research questions that help plot a course for continued investigation within the community.

In more recent work, Sheard et al. (Reference Sheard, Denny and Hellas2024) conducted interviews with 12 instructors from Australia, Finland, and New Zealand. These interviews aimed to capture a diverse set of perspectives on the use of AI tools in computing education, including current practices and planned modifications in response to AI tools. In general, instructors acknowledged the potential of AI tools to support and enhance learning by generating code examples and providing personalized feedback. However, concerns were raised about students potentially bypassing crucial learning processes and over-relying on these tools, which could lead to a shallow understanding of programming concepts. Participants also noted significant challenges to traditional assessment methods, raising concerns about academic integrity and the potential for cheating. Such concerns are exemplified in the following quote from one participant in the study: “we are clearly living in a time in which we have to completely rethink computing education, and particularly the assessment side of computing education, because we can no longer assess students in any of the ways we have been trying to assess them.” There was general agreement from the participants of this interview study that the integration of AI tools should be at a point in the curriculum where students have already acquired foundational programming skills. Note, this view is not universal within the community, with some researchers suggesting a “prompts first” approach, in which students begin by learning to prompt AI models, is what is needed (Reeves et al. Reference Reeves, Prather and Denny2024).

3.2 Student Perceptions

Students appear to share some of the same concerns as educators. Prather, Reeves, et al. (Reference Prather, Reeves and Denny2023) provide an insightful exploration into novice programmers’ interactions with GitHub Copilot. Students reported a mix of awe and unease, with some finding the tool’s predictiveness both helpful and unsettling. This latter feeling, which inspired the title of their paper, was typified in the comment from one student: “I thought it was weird that it knows what I want.” While Copilot was praised for its ability to streamline coding tasks, concerns were raised regarding overreliance on the tool and potential barriers to learning fundamental problem-solving skills. One participant even expressed concern that the tool would make them a “worse problem solver” as they begin to rely on it more. Additionally, the study unveiled cognitive and metacognitive challenges students face, prompting a discussion on the need for educational tools to better support learning processes.

A comprehensive report of an ITiCSE Working Group that convened in July 2023 combined insights from a broad survey involving both educators and students across 17 countries (Prather, Denny et al. Reference Prather, Denny and Leinonen2023). Two separate surveys were developed for students and instructors, covering topics from generative AI tool use and ethical considerations to perceived impacts on education and future employment. Both groups reported similar patterns in using generative AI for code writing and for text-based tasks, indicating a growing familiarity with these technologies. There was also a strong consensus on the irreplaceable role of human instructors despite acknowledging the growing relevance of generative AI tools in computing education and future careers. Especially among upper-level students, generative AI tools appear to be becoming a significant resource for student assistance, indicating a shift in help-seeking preferences. Among the implications of this work, as identified by the report authors, is that educators should be aware of technological advancements so that they can effectively integrate generative AI tools into their teaching practices.

4 Class Preparation

One way that computing educators can clearly leverage generative AI is in the creation of high-quality learning resources. Such resources can be static (i.e. generated in bulk and distributed via an appropriate platform) or dynamic (i.e. generated on demand by students). Among the many possible resources that can be produced by generative AI tools, code explanations, programming exercises, and worked examples have recently been proposed and studied. In this section, we first present traditional teaching techniques, followed by the ways in which LLMs can assist.

4.1 Example Code Explanations

We first consider textbook examples. MacNeil et al. (Reference MacNeil, Tran and Hellas2023), integrated LLM-generated code explanations into an interactive e-book on web software development. Three types of explanations were generated for code snippets: line-by-line explanations, a list of important concepts, and a high-level summary. Table 1 shows examples of explanations generated by GPT-3 for a code snippet to create a simple server that counts the number of POST requests. The line-by-line explanation (a) highlights aspects of the syntax and terminology in every line of code, the listing concepts (b) are explanations of important concepts used in the code, whereas the summarization (c) provides a high-level explanation of the purpose of the code.

Table 1 Examples of three types of code explanations generated by GPT-3 for a code snippet for a server that counts POST requests; adapted from MacNeil et al. (Reference MacNeil, Tran and Hellas2023).

They found that student engagement varied based on code snippet complexity, explanation type, and snippet length, but that overall the majority of students found the code explanations helpful. Similarly, Leinonen, Denny, et al. (Reference Leinonen, Denny and MacNeil2023) compared the quality of code explanations generated by students and LLMs in a CS1 course context, finding that students rated LLM-created explanations as significantly easier to understand and more accurate summaries of code than those created by students. Both studies highlight the potential of LLMs as tools for generating educational content in computing courses. There remain, however, some concerns regarding the accuracy and completeness of LLM-generated code explanations, indicating the need for oversight or additional processing before these explanations are presented to learners.

4.2 Worked Examples

Worked examples have been well researched in introductory programming courses (Muldner, Jennings, and Chiarelli (Reference Muldner, Jennings and Chiarelli2022)). Worked examples involve a problem statement with a step-by-step annotated solution that illustrates the problem-solving process (see Table 2). Worked examples can take several forms.

Table 2 An exemplar step-by-step worked example of the problem: Write a Python program to print out the maximum value in a 2-d array

| Step 1: Initialize the variable to store the maximum value |

| max_value = array[0][0] |

| Step 2: Iterate through the array |

| max_value = array[0][0] |

| for row in array: |

| for element in row: |

| Step 3: Compare each element and update the maximum value |

| max_value = array[0][0] |

| for row in array: |

| for element in row: |

| if element > max_value: |

| max_value = element |

| Step 3: Print out the output |

| max_value = array[0][0] |

| for row in array: |

| for element in row: |

| if element > max_value: |

| max_value = element |

| print(max_value) |

Modeled worked examples occur during a lecture. An instructor writes code, narrating their thought process as they do so. They may make mistakes, requiring them to make modifications in real-time. The alternative is to provide static code all at once, explaining how it works after it is all displayed. The effectiveness of such modeling is unclear. In one study, modeling examples led to no differences between groups on exams, assignments, and overall course scores; a benefit was only observed on the final course project (Rubin Reference Rubin2013). In another study, there were no significant differences (Raj et al. Reference Raj, Gu and Zhang2020). LLMs may not be obviously appropriate for this kind of modeling, given that they generate static text. However, with an appropriate display interface it may be possible to use an LLM – perhaps including and correcting some deliberate mistakes – to simulate a teacher modeling a worked example. Indeed, Jury et al. (Reference Jury, Lorusso, Leinonen, Denny and Luxton-Reilly2024) evaluated a novel LLM-powered worked example tool in an introductory programming course. Their tool, “WorkedGen”, leveraged an LLM to generate interactive worked examples in a large first-year Python programming course to evaluate the quality and effectiveness of the LLM-generated worked examples. The evaluation focused on the clarity of explanations, the breakdown of worked examples into well-defined steps, and the tool’s overall usefulness to novice programmers. In general, the LLM-generated explanations were clear, and the code provided alongside explanations was deemed valuable. In the classroom deployment, students expressed that the LLM-generated worked examples were useful for their learning, indicating a positive perception of the tool. In particular, students appreciated the ability to engage further with a given worked example by selecting keywords, code lines, and personalized questions, which were custom features within the tool.

The bulk of research on worked examples in introductory coding instruction has been on supplementing example solutions with subgoals. Subgoals are high-level statements about the purpose of the example solution steps. Worked examples with subgoals have been found to be beneficial in several settings. An initial study compared examples annotated with subgoals (create component, set output, set conditions, define variables) to unannotated examples for students learning to create Android App Inventor programs. The researchers found significantly better results on both an immediate post-test and a delayed post-test (Margulieux, Catrambone, and Guzdial (Reference Margulieux, Catrambone and Guzdial2016)). A lab replication study involving university students replicated the results (Margulieux and Catrambone Reference Margulieux and Catrambone2016). A more realistic classroom setting found that the sections with subgoal-annotated worked examples performed significantly better on quizzes and had a lower dropout rate (Margulieux, Morrison, and Decker (Reference Margulieux, Morrison and Decker2020)).

4.3 Programming Exercises

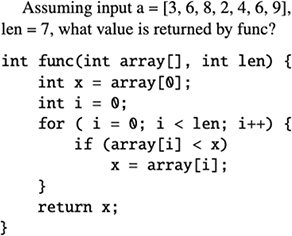

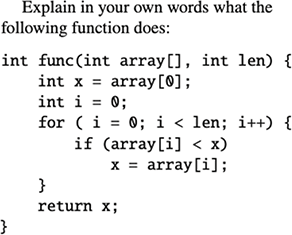

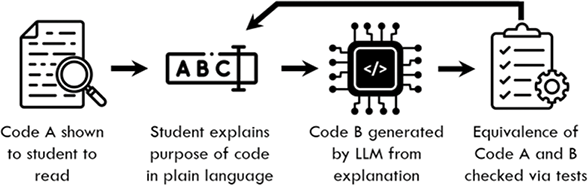

One of the first papers in the computing education literature to explore the generation of learning resources focused on programming exercises. Traditionally, there have been four major categories of programming exercises that assess and develop student skills: Tracing, Explain in Plain English (EiPE), Parson’s Problems, and coding problems (see Figures 2–5).

Figure 2 Tracing

Figure 3 Explain in Plain English (EiPE)

Figure 4 Parsons

Figure 5 Coding

Sarsa et al. (Reference Sarsa, Denny, Hellas and Leinonen2022) explored the creation of programming exercises with sample solutions and test cases, as well as code explanations. The rationale behind the research stems from the challenges educators face in developing a comprehensive set of novel exercises and the demand for active learning opportunities in programming education. A total of 240 programming exercises were generated, and a subset was evaluated both qualitatively and quantitatively for the sensibleness, novelty, and readiness for use of the generated content. The authors found that a significant majority of the automatically generated programming exercises were both sensible and novel, with many being ready to use with minimal modifications. They also found that it was possible to influence the content of the exercises effectively by specifying both programming concepts and contextual themes in the input to the Codex model. The ability to contextualize learning resources could potentially lead to more personalized and engaging learning experiences, especially if exercises can be tailored to individual interests through contextual themes (Del Carpio Gutierrez, Denny, and Luxton-Reilly (Reference Del Carpio Gutierrez, Denny and Luxton-Reilly2024); Leinonen, Denny, and Whalley (Reference Leinonen, Denny and Whalley2021)). While most of the generated exercises included sample solutions and automated tests, the quality of the test cases was variable, and in some instances, the tests did not entirely align with the solutions, suggesting the need for some manual intervention or regeneration of tests. Future research directions posed by the authors include refining the generation process to improve test case quality, and exploring the generation of more complex exercises and assignments, as well as investigating student engagement and learning outcomes when using automatically generated content. Recent work exploring the generation of test cases has been promising; for example, Alkafaween et al. (Reference Alkafaween, Albluwi and Denny2024) demonstrated that LLM-generated test suites for CS1-level programming problems are not only able to correctly identify most valid student solutions but are also, in many cases, as comprehensive as instructor-created test suites.

4.4 Block-Based Programming Assignments

Culturally Competent Projects

Vocabulary

Culturally Responsive Pedagogy: A learner-centered approach to teaching that incorporates students’ cultural identities and lived experiences into the classroom to facilitate their engagement and academic success.

Cultural Competence: The ability of an individual to honor their cultural backgrounds while developing understanding in at least one other culture.

Reskinning: The process of modifying the visual presentation of a Scratch project by changing the sprites and backdrop to match with a new theme while keeping the code untouched.

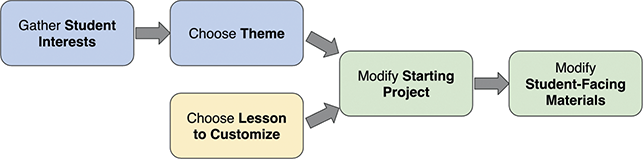

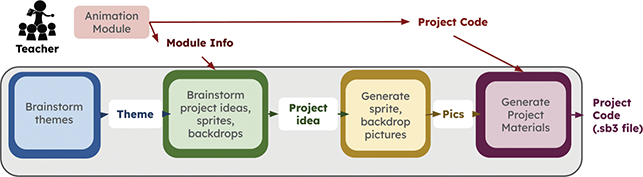

Large language models may also prove helpful in customizing existing assignments to an instructor’s local population (creating culturally competent projects). Cultural competence is an aspect of culturally responsive pedagogy (CRP). It emphasizes facilitating students to honor their cultural backgrounds while developing understanding in at least one other culture through the learning materials and activities (Davis et al. Reference Davis, White, Dinah and Scott2021). At the K–8 level, the literature clearly emphasizes the value and importance of CRP (Gay Reference Gay2018; Ladson-Billings Reference Ladson-Billings1995) and students’ sense of belonging (Maslow Reference Maslow1958, Reference Maslow1962), especially in computing disciplines and among learners from under-represented groups in computing. While there are several existing culturally responsive CS curricula, a fixed set of materials will not stay responsive across time, location, and population. Thus, empowering teachers to created localized instructional materials is the next essential step.

Figure 6 presents two sample Scratch projects from the Scratch Encore curriculum (Franklin et al. Reference Franklin, Weintrop and Palmer2020). The two projects are situated in different contexts/themes but teach the same CS material/concept (animating a sprite using a repeat loop with multiple costumes and, optionally, movement), largely with the same code. From the programmer’s perspective, they have approximately the same number of sprites, scripts, and blocks. From the user’s perspective, the projects involve one sprite animating in place (flags versus monkey), and two sprites animating across the screen (red boat and blue boat versus bee and snake). They only differ by theme: one represents a Dragon Boat Festival race (Figure 6, top), the other represents an animal race (Figure 6, bottom).

Figure 6 Examples of Scratch projects from Scratch Encore (Franklin et al. Reference Franklin, Weintrop and Palmer2020). The two projects are technically similar, only differing by theme.

Unfortunately, especially at the K–8 level, teachers are unlikely to have the expertise to efficiently create customized versions of lessons. The customization process is shown in Figure 7. There are two parts of the process that can be performed in parallel – choosing what project they want to customize and gathering their students’ interests. The teacher can gather students’ interests in many ways: via a survey, initial getting-to-know-you projects about themselves, or small group discussions with prompts. Then the teacher thinks about the attributes of the project (in this case, three sprites, two of which go across the screen left to right, and the third that animates in place) and chooses a theme that both matches the technical aspects of the sample project and will resonate with their students (especially students who might normally feel left out).

Figure 7 The steps in the process of customizing a Scratch project;

Recent work by Tran et al. (Reference Tran, Killen, Palmer, Weintrop and Franklin2024) explored the challenges teachers face when attempting to customize Scratch Encore lessons. Teachers were learning how to customize an existing base Scratch project as well as the corresponding student-facing worksheets. Researchers created extensive scaffolds, including instruction and a step-by-step guide for each project. The authors introduced reskinning – the process of modifying the visual presentation of the existing project by changing the sprites and backdrop to match with a new theme while keeping the code untouched. Using the reskinning approach, a teacher can quickly create a third, culturally competent version of the Scratch projects shown in Figure 6. They can incorporate a theme that may attract their students’ attention (by changing the sprites and backdrop) while preserving the structure of the base project’s code. It is critical that all existing technical attributes are followed because, for example, a project that is too easy may result in students not having sufficient knowledge to learn the next module/lesson, or a project that is too complicated may overwhelm students and cause fatigue. Even with these scaffolds, though, teachers struggled with three critical steps: (1) choosing a project that integrates the theme with the technical attributes, (2) creating sprites in a timely manner (often spending too much time tweaking the figures), and (3) modifying student-facing materials.

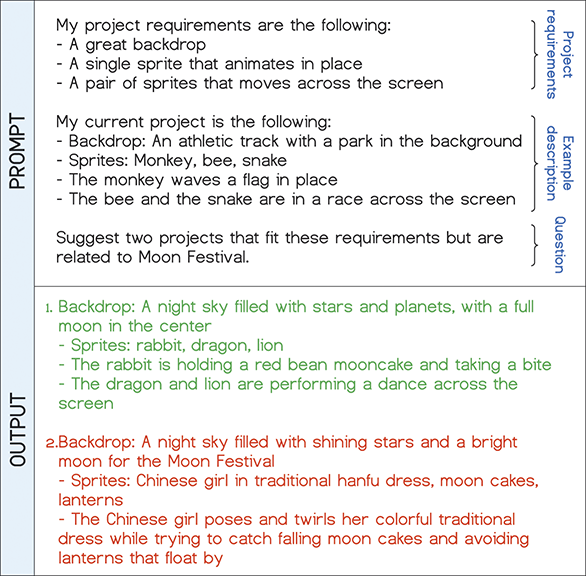

While this reskinning approach is promising, the concern about the cognitive load on teachers and the many demands on their time, especially during the school year, remains unaddressed. Generative AI could assist with these challenges. Given the ability of LLMs to generate novel human-like text responses, Tran examined the potential of teachers using LLMs to brainstorm culturally competent Scratch project ideas (Tran Reference Tran2023). The author prompted GPT-3 to suggest project ideas that technically match with a base project from Scratch Encore but related to a different theme. Their initial attempts at prompt engineering produced many positive results while also highlighting some outputs that are shallow in terms of theme. The prompt to generate project ideas for the Animation module and an example output from GPT-3 are shown in Figure 8. As we can see, the qualified project idea (in green) is in a ready-to-use state – a teacher can implement it through reskinning the Animal Race project. On the other hand, the disqualified project (in red) involves many complexity issues (e.g., a positional and timing dependency between the girl, the falling moon cakes, and the floating lanterns). In a more recent study, Tran et al. (Reference Tran, Gonzalez-Maldonado, Zhou and Franklin2025) performed a systematic evaluation of 300 customized Scratch project ideas generated by GPT-3. Specifically, the authors qualitatively evaluated each generated idea for quality of theme and alignment with the base project’s code and found that 81% of the generated ideas satisfied their evaluation metrics. At the same time, they identified two major shortcomings: the presence of potentially insensitive and inaccurate elements and code complexity when implementing the generated ideas in Scratch; both can be resolved with minimal modifications by teachers.

Figure 8 A query for GPT-3 to generate project ideas based on the Animal Race project from Scratch Encore. Suggested project ideas are related to the Moon Festival. The qualified idea is in green, and the disqualified idea is in red.

Further, text-to-image generative AI could potentially be used to generate starting sprite and backdrop images, allowing teachers to spend less time searching the internet for appropriate media. Relatively straightforward prompt engineering could be used to produce images that are suitable for Scratch: two-dimensional, cartoon-like, and with white backgrounds.

While existing research has shown the promising application of LLMs in the creation of culturally competent projects, it is critical to further approach this direction with caution due to the impressionable nature of young children and the well-documented evidence of cultural and social biases in LLM outputs (Kotek Dockum, and Sun Reference Kotek, Dockum and Sun2023; Liang et al. Reference Liang, Wu, Morency and Salakhutdinov2021; Nadeem et al. Reference Nadeem, Bethke and Reddy2020; Tao et al. Reference Tao, Viberg, Baker and Kizilcec2024). Tran et al., in their later study (2025), have concluded that LLM-generated content is not ready as student-facing materials at the K–8 level, and emphasized the important role of teachers in performing human filtering before bringing LLM-generated materials into the classroom. Teachers should review LLM-generated content for culturally insensitive elements that do not align with their expertise, and perform a thorough content review to filter out or adjust inappropriate projects; additional training and scaffolds may be needed to support teachers in handling this.

Block-Based Code

Another potential application of LLMs at the pre-university level is in the creation of coding solutions and the evaluation of student projects, from a teacher’s perspective. The major difference between the K–12 and the college settings is the use of block-based rather than text-based programming languages.

Given that block-based languages are precluded from the text-based nature of available LLM code-generation tools, researchers have attempted to use commercially available LLM models to perform two tasks: (1) creating sample solutions to block-based assignments and (2) automatically analyzing student Scratch projects (Gonzalez-Maldonado, Liu, and Franklin Reference Gonzalez-Maldonado, Liu and Franklin2025). To achieve that, they “trained” an existing LLM by creating two transpilers to convert Scratch code into a language that the model is more familiar with (Python and S-Expressions). Their evaluation of the LLM performance on both tasks indicated that prompt engineering alone is insufficient to guide the model to reliably produce high-quality outputs. For projects of medium complexity, the LLM consistently generated solutions that do not follow correct block-based syntax or only produced correct solutions in a few instances with correct syntax. In terms of analyzing student code, the study found a correlation between scores assigned by an existing auto-grader and those assigned by the LLM, but there remained great discrepancies between the “actual” scores and the LLM-generated scores. While current LLM models, without fine-tuning, are not ready to use for block-based programming class preparation, this study provides valuable insights into this novel application of LLMs in K–12 CS education.

4.5 Assessment Questions

Creating equivalent problems is not only useful for cultural competence. It also provides a solution for running testing facilities at scale as university class sizes grow.

There is a desire to have live coding exercises during exams. Allowing students to use their own devices can be challenging, because it may be impossible to monitor their use of the internet to look up references or even solutions to the exam questions. On the other hand, testing facilities large enough to support a multi-hundred-student exam can be prohibitively expensive. Fowler et al. (Reference Fowler, Smith and Zilles2024) studied dynamically creating equivalent coding problems so that a single computer lab could be used for a class of students, with each student making an appointment for their exam. Since the questions are dynamically generated (with constraints), reporting exact questions to others is unlikely to give them a significant advantage. In this work, the authors chose very specific problem types (e.g., find the largest element in an array) and determined which other closely related, but not identical, problems (e.g., find the smallest element in an array) were of equivalent difficulty. Once a single problem has enough variations, then it can be put into the bank, and one of the variations is chosen at the moment of the exam.

The process of creating and validating identical problems with slight technical variations is very time consuming, and the authors of the previous study did not utilize generative AI. Instead, they analyzed common problem types and hand-coded the transformation that generated different versions of the problem from one starting problem.

One potential approach would therefore be to leverage the work tailoring programming exercises to individual interests (Del Carpio Gutierrez et al. Reference Del Carpio Gutierrez, Denny and Luxton-Reilly2024; Leinonen et al. Reference Leinonen, Denny and Whalley2021) to assessment questions in order to mask the fact that the problems are technically identical. Such an approach, once validated on a subset, could lead to a much higher variety of potentially equivalent problems. Hand-coding each different possibility would be prohibitive, but using generative AI would provide a wealth of creative, interesting problems very quickly, even on demand.

5 Class Instruction

Generative AI can also be used to provide help directly to learners in ways that enhance learning. As class sizes increase, the opportunities students have for synchronous help from instructional staff decrease.

The integration of AI directly into development environments, and as digital teaching assistants (Hicke et al. Reference Hicke, Agarwal, Ma and Denny2023), represents a significant shift in educational support. Such tools can provide instant and personalized support outside the traditional classroom setting. Furthermore, the adaptation of curricula and teaching materials, including innovative textbooks like that of Porter and Zingaro (Reference Porter and Zingaro2023), which teaches Python programming using tools like Copilot and ChatGPT, illustrates the evolving nature of educational delivery. The advent of generative AI in computing education represents both a challenge and an opportunity. Educators are tasked with integrating these technologies into the curriculum in a way that enhances learning while preparing students for a future where AI is a fundamental aspect of problem-solving.

In this section, we first explore how current class instruction can be enhanced through generative AI tools. We divide this discussion into two types. First, we consider ways a student, on their own, could use generative AI to assist in tasks they have traditionally completed on their own. Next, we consider instances where they would traditionally reach out to instructional staff (when they are stuck). We recognize that this is not a hard and fast line; it is merely a way of organizing the many ideas on how to leverage generative AI technology. Finally, we explore a more transformative idea, in which generative AI is provided more autonomy in directing the learning process.

5.1 Student Resources

Explaining example code

While textbooks provide high-quality instruction, students supplement formal instruction with searches on the internet. The code they find online may be related to the topic, but it may be poorly documented, and include neither a high-level explanation nor line-by-line comments. The same approach that was previously described to generate explanations for online textbooks could also be used directly by students to enhance the learning potential of code found on the internet.

Explaining Concepts

Analogies can be a particularly effective type of explanation, as they help students bridge the gap between existing knowledge and unfamiliar concepts. The flexibility of LLMs has shown they are capable of generating cross-domain analogies which could be applied in a range of creative contexts (Ding et al. Reference Ding, Srinivasan, Macneil and Chan2023). This is especially important in educational contexts, given that creating effective educational analogies is difficult even for experienced instructors.

Bernstein et al. (Reference Bernstein, Denny and Leinonen2024) explored this idea in an introductory programming context by having students generate analogies for understanding recursion, a particularly challenging threshold concept in computer science (Sanders and McCartney Reference Sanders and McCartney2016). Their study involved 385 first-year students who were asked to generate their own analogies using ChatGPT. The students were provided a code snippet and were tasked with creating recursion-based analogies, optionally including personally relevant topics in their prompts. A wide range of topics were specified by students, including common topics such as cooking, books, and sports, which also appeared in ChatGPT-generated analogies when a topic was not specified. Other topics specified by students, such as video games and board games, were not present in the themes that were generated by ChatGPT. This suggests that while LLMs can generate bespoke analogies on-demand, students may benefit from being “in-the-loop”, as the inclusion of their personal interests and experiences can lead to more diverse and engaging analogies. Students reported enjoying the activity and indicated that the generated analogies improved their understanding and retention of recursion. This approach underscores how generative AI can be leveraged in computing education: not just as a tool for generating explanations, but as a means of empowering students to create their own, tailored learning resources.

5.2 Assignment Assistance

With respect to assignment assistance, we consider two aspects: how the feedback is delivered and what kind is required.

Providing feedback is critical for student learning. Research on best practices for feedback show that the most effective feedback is specific (Hattie and Timperley Reference Hattie and Timperley2007), timely (Opitz, Ferdinand, and Mecklinger Reference Opitz, Ferdinand and Mecklinger2011), aligned toward a student goal (Hattie and Timperley Reference Hattie and Timperley2007), and surprising (Malone Reference Malone1981). In addition, it is helpful to think about several different types of help a student might need (Franklin et al. Reference Franklin, Conrad and Boe2013), beyond “help me finish this assignment." Some students merely need confirmation they are on the right track, especially if they are not confident in their abilities. Others need just a reminder of a keyword or concept, allowing them to look at their notes or elsewhere to put that concept into practice. Some students know what concept to use but have a small conceptual barrier that needs to be resolved before they can move on. Finally, some may need to have an entire set of concepts retaught to them.

With synchronous, interactive support, a human teaching assistant can provide the right level of help – asking a series of probing questions to hone in on the topic and on the level of help the learner needs. However, in large classes, human TAs may not always be available, with scheduled office hours at inconvenient times for some learners or not fully used when they are offered (M. Smith et al. Reference Smith, Chen, Berndtson, Burson and Griffin2017). Face-to-face support also doesn’t always serve all students fairly, with some students reluctant to ask for help, while others may dominate the available time (A. J. Smith et al. Reference Smith, Boyer, Forbes, Heckman and Mayer-Patel2017).

Automated feedback can be provided in several ways. For each general mechanism, we first consider how assistance has been provided as class sizes have increased, prior to LLMs. We then present several projects that have evaluated LLMs for providing help to students. It is important to note that LLM technology has been improving rapidly – a technique evaluated just two years ago may be much more successful today.

Discussion Boards

A discussion board is an online forum where students can ask questions of the entire class or just to the instructional staff. While neither synchronous nor interactive, a discussion board has two main benefits. First, peers can answer each others’ questions, lowering the burden for instructional staff and providing potentially faster responses. Second, students can look at previous posts and find answers to their questions without needing to ask.

Prior research analyzing student use of a discussion board examined posts from 395 students across two courses, revealing three major results (Vellukunnel et al. Reference Vellukunnel, Buffum and Boyer2017). First, many posts related to logistical or relatively shallow questions. This is positive, because the discussion relieved instructional staff of the burden of answering simple questions while allowing in-person office hours to focus more on deep questions related to understanding. Second, the largest portion of questions reflected some level of constructive problem-solving activity, indicating that students were receiving substantive help. Finally, asking questions on the forum was positively correlated with course grades. The study did not, however, answer questions about whether the time instructional staff spent answering questions was lower than without the forum, nor whether peers provided high-quality assistance.

Early work proposed an automated discussion-bot that would answer student questions on discussion forums (Feng et al. Reference Feng, Shaw, Kim and Hovy2006). Instead of using a large language model trained on vast amounts of data on the internet, the bot used information retrieval and natural language processing techniques to mine answers from an annotated corpus of 1236 archived discussions and 279 course documents. They tested their bot with 66 questions, finding only 14 answers were determined to be exactly correct, whereas half were considered “good answers.”

More recently, the use of LLMs to assist with discussion forums has been explored (Zhang et al. Reference Zhang, Jaipersaud and Ba2023). The authors used an LLM to classify questions as conceptual, homework, logistics, and not answerable (by the LLM). Using GPT-3, they achieved 81% classification accuracy overall, extending to 93% accuracy for classifying unanswerable questions, meaning the approach can effectively ignore questions that it cannot answer, which could reduce the occurrence of unhelpful responses or hallucinations.

Liu and M’Hiri (Reference Liu and M’Hiri2024) took this one step further by simulating an LLM-powered teaching assistant on questions collected from the discussion board of a massive introductory programming course. Using LLM chains – a prompt engineering technique where several different prompts iteratively build toward a final solution: the first prompt classifies the question being answered, then uses that classification to inform what model/parameters/prompt to use to generate a candidate answer. Another prompt then assesses the quality of the answer. The authors ran a series of student questions through the model and found that the LLM-powered virtual TA:

was proficient at categorizing questions as either homework questions, coding feedback requests, or explanation requests;

provided more detailed responses than those provided by the human TAs;

matched the accuracy of TA responses with regard to non-assignment-specific questions;

had a tendency to include an overwhelming amount of information.

They concluded that while their findings are promising, human oversight is still required.

Automated Test Suites and Feedback

Another automated approach is to provide a test suite that generates feedback for students in the form of which tests their solution passed and failed. While this is useful in supporting students in some ways, creating test cases is a critical skill, both for test-driven developmentFootnote 2 and debugging. In test-driven development, test cases are created before the main code in order to help the programmer test code as it is developed. It can also be a useful metacognitive technique to help students form a correct mental model of the problem they are working on. One study found that students in an intervention group that created test cases prior to coding exhibited similar completion rates as the control group but with significantly fewer errors related to incorrect mental models (Denny et al. Reference Denny, Prather and Becker2019). This provides support for the design decision in 2005 for Web-CAT (a popular automated assessment tool) to support student submission of test cases in addition to their solution code (Allowatt and Edwards Reference Allowatt and Edwards2005).

The advantage of automated test suites, with respect to the features of effective feedback, is that it is timely. The responses are often nearly instantaneous. Unfortunately though, the form of the feedback is nonspecific and the information returned can vary greatly. The least information that could be provided would be just a final numeric score, giving the student an indication of how correct their code is. Other instructors may provide information about every test case, highlighting which ones failed (e.g., the test inputs and desired outputs). While this allows the student to start debugging on their own, it has been shown to encourage students to “debug their program into existence” whereby students lose track of the learning goals and focus exclusively on passing the automated test-cases (Zamprogno, Holmes, and Baniassad Reference Zamprogno, Holmes and Baniassad2020).

In practice, however, students are unlikely to have automated suites that provide them a numerical quality score when developing code in the real world. LLMs have the potential to unlock an entirely new type of automated feedback that more closely aligns with real-world coding practices. They can provide students with suggested code edits/next steps, meaningful feedback on code readability, efficiency, and design patterns. This type of feedback, which is often overlooked in traditional education settings where class sizes commonly reach hundreds of students, can help bridge the gap between academic learning and industry practices.

Nguyen and Allan (Reference Nguyen and Allan2024) explored the potential of generating this type of ‘formative’ feedback using LLMs. The authors used GPT-4 and “few-shot learning” – providing the model with some examples of the expected input and output within the prompt – to generate individualized feedback for 113 student submissions. They prompted the model to generate feedback on the student’s conceptual understanding, syntax, and time complexity and also had the model generate guiding questions or suggest follow-up actions. The group found that the feedback provided by the LLM was generally correct. The model provided correct evaluations of conceptual understanding for 92% of the submissions, syntax for 89% of submissions, and time complexity for 90% of submissions. The model was also found to be useful at generating code suggestions and hints (92% of submissions received code suggestions that were at least “somewhat correct” and 95% of hints were conceptually correct). Despite these promising results, the authors also identified several issues including a significant difference in model performance across different programming assignments and a tendency for GPT’s suggestions to lead to a suboptimal solution.

Code Explanations

We previously discussed using LLMs to generate code explanations for textbooks (MacNeil et al. Reference MacNeil, Tran and Hellas2023, Leinonen, Denny, et al. Reference Leinonen, Denny and MacNeil2023). One intriguing possibility is to leverage this same functionality to generate explanations of a student’s own code in order to help them understand and debug code they have written.

Balse et al. (Reference Balse, Kumar, Prasad and Warriem2023) used an LLM, specifically GPT-3.5-turbo, to generate explanations for logical errors in code written by students in an introductory programming course (CS1). The authors aimed to determine if LLM-generated explanations could support teaching assistants (TAs) in providing feedback to students efficiently. The quality of LLM-generated explanations was evaluated in two ways: the TA’s perception of explanation quality (comparing LLM-generated to peer-generated) and a detailed manual analysis of the LLM-generated explanations for all selected buggy student solutions. They found that TAs rated the quality of LLM-generated explanations comparably to peer-generated explanations. However, a manual analysis revealed that 50% of LLM explanations contained at least one incorrect statement, although 93% correctly identified at least one logical error.

Synchronous Assistance

LLMs show great promise for providing on-demand help at a large scale, especially if they can be designed to respond to student queries in a similar way to trained teaching assistants. This idea has been of significant interest in the computing education community.

Liffiton et al. (Reference Liffiton, Sheese, Savelka and Denny2024) describe a novel tool called “CodeHelp”, which incorporates “guardrails” to avoid directly revealing solutions. Deployed in a first-year computer and data science course, the tool’s effectiveness, student usage patterns, and perceptions were analyzed over a 12-week period. Rather than replacing existing instructional support, the goal of the work was to complement educator help by offloading simple tasks and being available at times that would inconvenient for the teacher or teaching assistants.

CodeHelp was well received by both students and instructors for its availability and assistance with debugging, although challenges included ensuring relevancy and appropriateness of the AI-generated responses and preventing over-reliance. A follow-up study by Sheese et al. (Reference Sheese, Liffiton, Savelka and Denny2024), investigated students’ patterns of help-seeking when utilizing CodeHelp, analysing more than 2,500 student queries. The study involved manual categorization of the queries into the types of assistance sought (e.g., debugging, implementation, conceptual understanding), alongside automated analysis of query characteristics (e.g., information provided by students). The authors found that the majority of queries were seeking immediate help with programming assignments rather than focusing on in-depth conceptual understanding. In addition, students often provided minimal information in their queries, pointing to a need for further instruction on how to effectively communicate with the tool.

Yang et al. (Reference Yang, Zhao, Xu, Brennan and Schneider2024) further expanded on this work by conducting think-aloud sessions aimed at exploring student help-seeking behaviors during the debugging process. They developed the “CS50 Duck” chatbot, a “pedagogically-minded subject-matter expert that guides students towards solutions and fosters learning.” Notably the CS50 Duck made use of retrieval-augmented generation (RAG) in order to pull information from a “ground truth” database of lecture captions so as to ensure responses were correct and more relevant to the content covered by the specific course. The authors identified several broad categories of help-seeking behavior that students exhibited when interacting with their chatbot:

Code Comprehension Instances where a student asked the chatbot to explain a snippet of code or its expected output