1 Algorithms for Measurement Invariance Testing: Contrasts and Connections

There are many reasonable places to start in an introduction to the study of measurement invariance and differential item functioning (DIF). Though it may seem overly broad, we can start by stating the goal of measurement in general. If we administer a set of items, it is typically because we assume that they indirectly assess some underlying quality which cannot be easily observed. These quantities are typically referred to as latent variables, and they represent some of the constructs in which developmental scientists are often most interested.

The study of measurement invariance and differential item functioning concerns the question of whether this measurement process unfolds equally well for all participants. If features of individual participants – which often include demographic variables such as age, sex, gender, or race, but can be any person- or context-specific quantity – are associated with different relations between latent variables and their observable measures, our inferences about this latent variable will be biased. Moreover, when we aim to compare participants who differ in these dimensions, we may make erroneous conclusions.

An example of a recent analysis of DIF helps to understand the issues at hand. Anxious symptoms are quite common among children on the autism spectrum, and it is therefore critical to have measures of anxiety that function well in this population. One recent study (Reference Schiltz and MagnusSchiltz & Magnus, 2021) analyzed how well a parent-report measure, the Screen for Child Anxiety Related Disorders (SCARED; Reference Birmaher, Khetarpal and BrentBirmaher et al., 1997), measures symptoms of anxiety disorders in a sample of

![]() children on the autism spectrum (mean age = 11.18 years). Among other covariates, the authors examined the effects of children’s social functioning, as measured by the Social Communication Questionnaire (SCQ; Reference Rutter, Bailey and LordRutter et al., 2003), on the link between SCARED scores and the latent variables they aim to represent, in this case five dimensions of anxiety (panic disorder, generalized anxiety disorder, separation anxiety, social anxiety, school avoidance). They found that certain social anxiety items – particularly “feels shy with people he/she does not know well” and “is shy” – were less commonly endorsed among parents whose children showed high SCQ scores, regardless of the child’s level of social anxiety. This last portion of the findings is critical: the fact that SCQ score was negatively related to endorsement of these items even after controlling for the latent variable, social anxiety, means that children’s social functioning may have a negatively biasing effect on their parents’ reports of their social anxiety. In other words, if a parent rates their child highly in these social anxiety symptoms on the SCARED, this may reflect deficits in the child’s social functioning, rather than the child’s experience of anxiety. Studying measurement invariance and DIF in these items is one way to avoid making such an error.

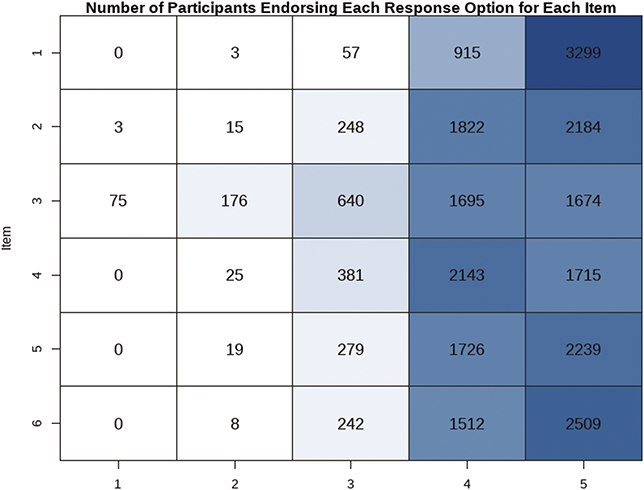

children on the autism spectrum (mean age = 11.18 years). Among other covariates, the authors examined the effects of children’s social functioning, as measured by the Social Communication Questionnaire (SCQ; Reference Rutter, Bailey and LordRutter et al., 2003), on the link between SCARED scores and the latent variables they aim to represent, in this case five dimensions of anxiety (panic disorder, generalized anxiety disorder, separation anxiety, social anxiety, school avoidance). They found that certain social anxiety items – particularly “feels shy with people he/she does not know well” and “is shy” – were less commonly endorsed among parents whose children showed high SCQ scores, regardless of the child’s level of social anxiety. This last portion of the findings is critical: the fact that SCQ score was negatively related to endorsement of these items even after controlling for the latent variable, social anxiety, means that children’s social functioning may have a negatively biasing effect on their parents’ reports of their social anxiety. In other words, if a parent rates their child highly in these social anxiety symptoms on the SCARED, this may reflect deficits in the child’s social functioning, rather than the child’s experience of anxiety. Studying measurement invariance and DIF in these items is one way to avoid making such an error.

1.0.1 Terminology and History

The study of measurement bias has a rich intellectual history, with many different researchers addressing these issues at many different times in the past century (Reference MillsapMillsap, 2011). The complexity of this landscape has led to a somewhat challenging terminological issue: the terms measurement invariance and differential item functioning effectively refer to the same concept in different ways. In general, researchers using structural equation modeling (SEM), particularly confirmatory factor analysis (CFA), developed the term measurement invariance to describe an assumption: that the items in a given test measure the latent variable equally for all individuals (Reference MeredithMeredith, 1993; Reference MillsapMillsap, 2011; Reference Putnick and BornsteinPutnick et al., 2016). At the same time, research arising mostly from the item response theory (IRT) tradition uses the term differential item functioning, often abbreviated DIF, to describe cases in which this assumption is violated: that is, items or sets thereof in which measurement parameters differ across participants (Reference Holland and ThayerHolland & Thayer, 1986; Reference Osterlind and EversonOsterlind & Everson, 2009; Reference Thissen, Steinberg, Wainer, Holland and WainerThissen et al., 1993). For readers interested in the long history of the study of measurement invariance and DIF, which extends back over a century, are referred to one historical review (Reference Millsap, Meredith, Cudeck and MacCallumMillsap & Meredith, 2007), which provides a fascinating overview of this literature.

However, for the purposes of this Element, there are two important things to note. First is that the study of DIF and measurement invariance have largely come together in the past two decades, perhaps due to the wider adoption of models (e.g., nonlinear factor models, discussed in the next section) which can be extended to accommodate many models within SEM and IRT (Reference BauerBauer, 2017; Reference Knott and BartholomewKnott & Bartholomew, 1999; Reference MillsapMillsap, 2011; Reference Skrondal and Rabe-HeskethSkrondal & Rabe-Hesketh, 2004). The concordance between these two traditions’ treatment of the same phenomenon has been noted many times before (Reference Reise, Widaman and PughReise et al., 1993; Reference Stark, Chernyshenko and DrasgowStark et al., 2004, Reference Stark, Chernyshenko and Drasgow2006), and contemporary modeling tools have been useful in helping researchers to unify these two perspectives. The second thing to note is that the terms will be used interchangeably throughout this Element. In general, measurement invariance will mostly be referred to when discussing an assumption (e.g., ensuring that different types of measurement invariance are satisfied), whereas DIF will be used to describe an effect (e.g., DIF effects were found for some items in this test). However, some slippage between the terms is unavoidable, given that – as we will see shortly – the two refer to virtually identical concepts.

For more information about the terminology used in this Element, readers are referred to the Glossary in Table 1.

Table 1 Glossary of terms used throughout the Element

| Term | Definition |

|---|---|

| alignment | An approximate method of estimating DIF effects which aims to minimize differences between groups in intercept and slope parameters in the context of a multiple-groups model. |

| approximate methods | A set of methods, encompassing alignment and regularization, which use penalized estimation to minimize the number of small DIF effects found. |

| configural invariance | The most basic form of measurement invariance, which requires that the number of factors and patterns of loadings is the same across groups. |

| differential item functioning (DIF) | Differences between participants in the measurement parameters for a particular item. Essentially the relational opposite of measurement invariance – that is, a model with DIF lacks measurement invariance. |

| effect size | For the purposes of this Element, the magnitude of a DIF effect either in terms of the differences in parameters or implied scores. |

| factor scores | Estimates generated from a factor model of each individual participant’s level of the latent variable. |

| focal group | In a multiple-groups model, the group for which differences in measurement parameters are of interest; compared to a reference group. Note that, absent substantive hypotheses, “focal” and “reference” groups can just be referred to by number (e.g., groups 1, 2, and 3). |

| likelihood ratio test | An inferential test which compares the fit of one model to another, nested model. For the purposes of this Element, to test the hypothesis that a model with certain DIF or impact parameters fits better than one without them. |

| measurement invariance | Equality between participants in measurement parameters, either for a single item or an entire test. Essentially the relational opposite of DIF – that is, a model with DIF lacks measurement invariance. |

| metric invariance | A form of measurement invariance which requires that factor loadings are equal across participants. Sometimes referred to as “weak metric invariance.” |

| multiple-groups model | For the purposes of this Element, a way to formulate DIF which treats DIF as between-group differences in parameter values. |

| nonuniform DIF | Differences across participants in an item which are not constant across all levels of the latent variable. Typically codified as differences between item loadings. |

| pre-estimation approaches | For the purposes of this Element, methods of determining the presence and magnitude of DIF effects which do not require a model to be estimated. |

| reference group | In a multiple-groups model, the group to which the focal group is compared in terms of measurement parameters. Note that, absent substantive hypotheses, “focal” and “reference” groups can just be referred to by number (e.g., groups 1, 2, and 3). |

| regression-based | For the purposes of this Element, a way to formulate DIF which treats measurement parameters as outcomes in a regression. Contrasted with multiple-groups model. |

| regularization | An approximate method of estimating DIF effects which penalizes DIF effects in the estimation of a factor model, with the goal of retaining only those effects that are meaningful. |

1.0.2 Organization of This Element

The main goals of this Element are to give developmental scientists as comprehensive a summary of measurement invariance and DIF research as possible, with the goal of helping them to incorporate the theory and practice of this research area into their own work. We begin with as complete a description of latent variable models as possible. The Element aims to provide a mathematical framework for the specific discussion of exactly what measurement invariance and DIF are. We will then mathematically define the types of DIF effects one might encounter, as well as different fields’ frameworks for studying DIF in general. Readers already familiar with the different types of measurement invariance (e.g., configural, metric, scalar) and DIF (e.g., uniform, nonuniform) may wish to skip this portion, particularly if they are already familiar with nonlinear latent variable models.

The second portion of this Element concerns the different extensions of the nonlinear factor model which can be used to test the assumption of measurement invariance, or detect and account for DIF. First, we discuss the two main modeling frameworks for addressing these questions, with an eye toward comparing and contrasting different fields’ and models’ definitions of DIF. We then move on to an exploration of different algorithms used to locate DIF, with a similar goal of examining differences and similarities between different fields. We will end with a set of recommendations for how to incorporate the study of DIF into one’s measurement-related research.

2 Latent Variable Models

In order to formulate what measurement invariance is, we must first introduce latent variable models. Most of the models in which measurement invariance is considered fall under the heading of nonlinear factor analysis (Reference Bauer and HussongBauer & Hussong, 2009; Reference Knott and BartholomewKnott & Bartholomew, 1999; Reference Skrondal and Rabe-HeskethSkrondal & Rabe-Hesketh, 2004), a broad framework which encompasses most CFA and IRT models. Critically, although this section aims to comprehensively introduce latent variable models, including the nonlinear factor model, it should not be the reader’s first introduction to either latent variable models or the generalized linear modeling framework. If either of those are new to the reader, it is suggested that they review a tutorial on factor analysis (e.g., Reference HoyleHoyle, 1995) or logistic regression and the generalized linear model in general (e.g., Reference Hosmer Jr, Lemeshow and SturdivantHosmer Jr et al., 2013; Reference McCullagh and NelderMcCullagh & Nelder, 2019). Those wishing for a comprehensive introduction to nonlinear factor analysis are referred to other work which covers this topic extensively (Reference Knott and BartholomewKnott & Bartholomew, 1999; Reference Skrondal and Rabe-HeskethSkrondal & Rabe-Hesketh, 2004).

Although we aim to present measurement invariance in an accessible way, a substantial number of equations in this section are unavoidable. Our hope is that, with some exposure to latent variable models and the generalized linear modeling framework, readers will be able to follow along with this section. The equations themselves do not require more than the above prerequisites (at least some prior exposure to latent variables and regression models outside of linear regression) to understand. Readers familiar with the contents of this section may wish to skim it to get the notation which will be used later on.

2.1 The Common Factor Model

Assume that we have a sample of

![]() participants, to whom we administer a set of

participants, to whom we administer a set of

![]() items. The response of the

items. The response of the

![]() th participant

th participant

![]() to the

to the

![]() item

item

![]() is denoted

is denoted

![]() . Note that we will refer to

. Note that we will refer to

![]() as an “item” or a “response variable” interchangeably throughout this text. We assume that the items are measures of a set of

as an “item” or a “response variable” interchangeably throughout this text. We assume that the items are measures of a set of

![]() latent variables

latent variables

![]() . Each individual has an implicit value of each latent variable, denoted

. Each individual has an implicit value of each latent variable, denoted

![]() . In a typical common factor model, we assume that the items are related to the latent variables as follows:

. In a typical common factor model, we assume that the items are related to the latent variables as follows:

(1)

(1)

For each item,

![]() is the intercept; this represents the predicted value of

is the intercept; this represents the predicted value of

![]() for an individual whose value on the latent variable(s) which load on

for an individual whose value on the latent variable(s) which load on

![]() is 0. As with intercepts in linear regression, we can effectively think of it as the overall “level” of the item – we predict that participants will, on average, have high overall levels of

is 0. As with intercepts in linear regression, we can effectively think of it as the overall “level” of the item – we predict that participants will, on average, have high overall levels of

![]() regardless of their level of the latent variable. The effect of the latent variable is conveyed by

regardless of their level of the latent variable. The effect of the latent variable is conveyed by

![]() , the factor loading. As with a slope in a linear regression, for each one-unit shift in the

, the factor loading. As with a slope in a linear regression, for each one-unit shift in the

![]() th latent variable we predict a

th latent variable we predict a

![]() -unit shift in the item. Finally,

-unit shift in the item. Finally,

![]() represents the error term, which is subject

represents the error term, which is subject

![]() ’s deviation from their predicted value of item

’s deviation from their predicted value of item

![]() . These error terms are assumed to have a mean of 0, and a variance of

. These error terms are assumed to have a mean of 0, and a variance of

![]() . Error terms are generally considered to be uncorrelated across items – that is

. Error terms are generally considered to be uncorrelated across items – that is

![]() , which represents the covariance between the error terms for items

, which represents the covariance between the error terms for items

![]() and

and

![]() , is assumed to be 0.

, is assumed to be 0.

Latent variables may covary with one another, with an

![]() covariance matrix

covariance matrix

![]() . Each diagonal element of

. Each diagonal element of

![]() , here denoted

, here denoted

![]() , represents the variance of the

, represents the variance of the

![]() latent variable; off-diagonal elements of the matrix, denoted

latent variable; off-diagonal elements of the matrix, denoted

![]() , represent the covariance between the

, represent the covariance between the

![]() th and

th and

![]() th latent variables. Latent variables may also have their own means; the mean of the

th latent variables. Latent variables may also have their own means; the mean of the

![]() th latent variable is denoted

th latent variable is denoted

![]() .

.

Some constraints must be imposed on these parameters in order for the model to be identified. There are two common options. First, we can fix one item’s value of

![]() to 0 and its value of

to 0 and its value of

![]() to 1 for each latent variable. This item is often referred to as the reference variable and, when this approach is used, the latent variable is on the same scale as this item. Alternatively, we could set the variances

to 1 for each latent variable. This item is often referred to as the reference variable and, when this approach is used, the latent variable is on the same scale as this item. Alternatively, we could set the variances

![]() to 1 and the mean

to 1 and the mean

![]() to 0 for all latent variables. In this case, the loading

to 0 for all latent variables. In this case, the loading

![]() may now be considered the predicted change in

may now be considered the predicted change in

![]() associated with a one-standard deviation shift in the latent variable

associated with a one-standard deviation shift in the latent variable

![]() ; the intercept

; the intercept

![]() may now be considered the predicted value of

may now be considered the predicted value of

![]() when all latent variables are at their means. As we will see shortly, assessing measurement invariance involves adding a number of new parameters to the model, which will complicate model identification; this is discussed in detail below.

when all latent variables are at their means. As we will see shortly, assessing measurement invariance involves adding a number of new parameters to the model, which will complicate model identification; this is discussed in detail below.

2.2 Nonlinear Factor Models

The common factor model presented above is the foundation of most psychological researchers’ understanding of latent variable models. However, in order for us to understand the relations among different latent variable models, it is necessary to extend it. In particular, the above model assumes that items

![]() meet the same general assumptions as the dependent variable in a linear regression. For our purpose, the most problematic of these assumptions is that the residuals of the items are normally distributed. This assumption requires, among other things, that our outcome be continuous, which we know many psychological outcomes are not (even if we erroneously treat them that way). In particular, it is common for us to be working with ordinal items resulting from a Likert scale (e.g., responses ranging from 1 to 5 assessing a participant’s agreement with a given statement) or even binary items (e.g., a yes–no response to a question about psychiatric symptoms).

meet the same general assumptions as the dependent variable in a linear regression. For our purpose, the most problematic of these assumptions is that the residuals of the items are normally distributed. This assumption requires, among other things, that our outcome be continuous, which we know many psychological outcomes are not (even if we erroneously treat them that way). In particular, it is common for us to be working with ordinal items resulting from a Likert scale (e.g., responses ranging from 1 to 5 assessing a participant’s agreement with a given statement) or even binary items (e.g., a yes–no response to a question about psychiatric symptoms).

Thus, our discussion of DIF will use a nonlinear factor analysis formulation. Although we will attempt to describe the nonlinear factor analysis formulation, we suggest that this not be the reader’s first introduction to nonlinear factor models and generalized linear models more generally, as mentioned above. However, for review, a brief description follows.

The major advantage of nonlinear factor models is that they allow the relation between items and latent variables (i.e., the one expressed in Equation 1) to take a nonlinear form, corresponding to items

![]() of different scales. The shift to nonlinear variables requires two major innovations on the model presented above. The first is what is known as a linear predictor, which we denote

of different scales. The shift to nonlinear variables requires two major innovations on the model presented above. The first is what is known as a linear predictor, which we denote

![]() , and which is formulated as follows:

, and which is formulated as follows:

(2)

(2)

Note that this is basically the exact same equation as Equation 1, except without the error term. The purpose of the linear predictor becomes a bit clearer when we introduce the second component of nonlinear factor models, known as the link function. In order to get to our expected value of

![]() , we must take our linear predictor

, we must take our linear predictor

![]() and place it inside the inverse link function. Let us unpack each term in this statement. The expected value of

and place it inside the inverse link function. Let us unpack each term in this statement. The expected value of

![]() is whatever value we would predict for subject

is whatever value we would predict for subject

![]() on item

on item

![]() . For binary items, this is the probability that that particular subject will give a response of 1 (e.g., say “yes” to a yes–no question, get a question correct on a test of ability, endorse a given symptom on a symptom inventory) based on their level of the latent variable. We will denote this

. For binary items, this is the probability that that particular subject will give a response of 1 (e.g., say “yes” to a yes–no question, get a question correct on a test of ability, endorse a given symptom on a symptom inventory) based on their level of the latent variable. We will denote this

![]() (following the notation of Reference Bauer and HussongBauer and Hussong (2009)).

(following the notation of Reference Bauer and HussongBauer and Hussong (2009)).

As for the inverse link function, let us first define the link function,

![]() :

:

(3)

(3)

To put Equation 3 colloquially, we can define the link function

![]() as “whatever function we apply to the expected value of

as “whatever function we apply to the expected value of

![]() to get to

to get to

![]() .” Being that the inverse link function, denoted

.” Being that the inverse link function, denoted

![]() , is the inverse of

, is the inverse of

![]() , it can be colloquially defined as “whatever function we apply to

, it can be colloquially defined as “whatever function we apply to

![]() to get to the expected value of

to get to the expected value of

![]() .” Note that the function

.” Note that the function

![]() must be bijective in order to have an inverse.

must be bijective in order to have an inverse.

Mathematically, it is defined as follows:

(4)

(4)

Thus, in order to get to

![]() , our expected value for

, our expected value for

![]() , our model is implicitly estimating the linear predictor

, our model is implicitly estimating the linear predictor

![]() and applying the inverse link function. As far as our choices of link and inverse link functions, they depend on the type of data we are interested in modeling. One reasonable choice for binary data is the logit link function, whose inverse will yield a number between 0 and 1, making it well-suited to modeling a probability. As noted above, when we are working with binary data, the expected value of

and applying the inverse link function. As far as our choices of link and inverse link functions, they depend on the type of data we are interested in modeling. One reasonable choice for binary data is the logit link function, whose inverse will yield a number between 0 and 1, making it well-suited to modeling a probability. As noted above, when we are working with binary data, the expected value of

![]() (

(

![]() ) is the probability that a given individual endorses item

) is the probability that a given individual endorses item

![]() – that is,

– that is,

![]() . Thus, we will use the logit function to translate

. Thus, we will use the logit function to translate

![]() to

to

![]() , and the inverse of that function to translate

, and the inverse of that function to translate

![]() to

to

![]() . The logit link function is defined as follows:

. The logit link function is defined as follows:

where

![]() denotes the natural logarithm. Conversely, the inverse logit link function is defined as follows:

denotes the natural logarithm. Conversely, the inverse logit link function is defined as follows:

(6)

(6)

where

![]() is shorthand for

is shorthand for

![]() . If the reader wishes to see that Equation 6 is indeed the inverse of Equation 5, they may choose a value of

. If the reader wishes to see that Equation 6 is indeed the inverse of Equation 5, they may choose a value of

![]() , which can be any number between 0 and 1, and plug it into Equation 5. Then take the value of

, which can be any number between 0 and 1, and plug it into Equation 5. Then take the value of

![]() obtained from Equation 5 and plug it into Equation 6. This transformation should yield the originally chosen value of

obtained from Equation 5 and plug it into Equation 6. This transformation should yield the originally chosen value of

![]() .

.

Putting it all together, we can now finally formulate how the latent variable itself is related to the expected value of a binary item under a nonlinear factor analysis. Combining the original linear formulation in Equation 2 and the inverse link function in Equation 4 we get the following:

(7)

(7)

Note that we have the same exact parameters linking the latent variable

![]() to the item

to the item

![]() : intercept

: intercept

![]() and loading

and loading

![]() . The difference is that now

. The difference is that now

![]() is related by these parameters to the latent variable through a nonlinear function as opposed to a linear one. If the reader wishes to test this out, an example is given in Table 2, in which we have each individual’s values of

is related by these parameters to the latent variable through a nonlinear function as opposed to a linear one. If the reader wishes to test this out, an example is given in Table 2, in which we have each individual’s values of

![]() ,

,

![]() ,

,

![]() , and

, and

![]() for pre-set values of

for pre-set values of

![]() and

and

![]() .

.

Table 2 Example values of latent variables (

), linear predictors (

), linear predictors (

), endorsement probabilities (

), endorsement probabilities (

), and outcomes (

), and outcomes (

), for a nonlinear factor analysis with

), for a nonlinear factor analysis with

and

and

| ID |

|

|

|

|

|---|---|---|---|---|

| 1 | 0.5723 | 1.1084 | 0.2482 | 0 |

| 2 | − 0.0034 | 0.245 | 0.4391 | 1 |

| 3 | − 2.7187 | − 3.828 | 0.9787 | 1 |

| 4 | − 0.6953 | − 0.793 | 0.6885 | 0 |

| 5 | 1.3079 | 2.2118 | 0.0987 | 0 |

| 6 | − 0.9547 | − 1.182 | 0.7653 | 1 |

| 7 | − 1.7034 | − 2.3051 | 0.9093 | 1 |

| 8 | 0.204 | 0.5559 | 0.3645 | 0 |

| 9 | 0.3321 | 0.7482 | 0.3212 | 0 |

| 10 | 0.7218 | 1.3326 | 0.2087 | 0 |

There is one more step to understanding nonlinear factor analysis, and that is defining the probability function. Note that the inverse link function does not give us

![]() but its expected value – that is, for a binary item it will not give us a value of

but its expected value – that is, for a binary item it will not give us a value of

![]() (which can only take a value of 0 or 1) but rather

(which can only take a value of 0 or 1) but rather

![]() , the probability that

, the probability that

![]() will be 1. Notice also that our linear predictor has no error term in it – we have not accounted for any source of randomness or error. Thus, the probability function is what links the expected value

will be 1. Notice also that our linear predictor has no error term in it – we have not accounted for any source of randomness or error. Thus, the probability function is what links the expected value

![]() to the value of

to the value of

![]() we ultimately obtain. In the case of binary response variables, we generally assume that

we ultimately obtain. In the case of binary response variables, we generally assume that

![]() follows a Bernoulli distribution with probability

follows a Bernoulli distribution with probability

![]() .

.

Colloquially, this means the following. The variable

![]() is random and we don’t know whether it will be 0 or 1, but we do know the probability with which it will be 1, and that probability is

is random and we don’t know whether it will be 0 or 1, but we do know the probability with which it will be 1, and that probability is

![]() . A person with

. A person with

![]() will most likely give a value of 1, a person with

will most likely give a value of 1, a person with

![]() will most likely give a value of 0, and a person with

will most likely give a value of 0, and a person with

![]() is just as likely to give a value of 0 or 1, but any one of these people could theoretically give a value of 0 or 1. Note, for instance, in Table 2, that the observed values of

is just as likely to give a value of 0 or 1, but any one of these people could theoretically give a value of 0 or 1. Note, for instance, in Table 2, that the observed values of

![]() take values of 0 or 1, and may even (as in the case of the 8th observation) take a value of 0 if the probability of endorsing the item is over 0.5.

take values of 0 or 1, and may even (as in the case of the 8th observation) take a value of 0 if the probability of endorsing the item is over 0.5.

Once we combine all three of these things – the linear predictor, the link function, and the probability function – we have all the information we need to think about factor analysis, and thus DIF, in a way that encompasses many different types of models across disciplines. For instance, the formulation provided above for binary variables, using an inverse logit function to link the linear predictor to the probability of endorsing an item, is actually the same as the two-parameter logistic model in IRT (Reference BirnbaumBirnbaum, 1969). This model is frequently applied to binary data in an IRT setting.

2.2.1 Extensions to Ordinal Data

The above model will suffice for binary data, but ordinal data requires a slightly more complicated extension of this formulation. A model arising from IRT for ordinal data is known as the graded response model (Reference Samejima, Linden and HambletonSamejima, 1997). Like the formulation given in Equation 6, we are modeling probabilities. Given an ordinal item with

![]() categories

categories

![]() , we model the probability of endorsing category

, we model the probability of endorsing category

![]() or lower. For instance, in a three-level item, rather than modeling the probability that a participant endorses option 1, 2, or 3, we model the probability that the participant endorses option 1; option 2 or 1; or option 3, 2, or 1. Note that the probability of endorsing option 3, 2, or 1 is exactly 1 – the participant must endorse one of these three options. So we only model

or lower. For instance, in a three-level item, rather than modeling the probability that a participant endorses option 1, 2, or 3, we model the probability that the participant endorses option 1; option 2 or 1; or option 3, 2, or 1. Note that the probability of endorsing option 3, 2, or 1 is exactly 1 – the participant must endorse one of these three options. So we only model

![]() probabilities – in this case, the probability of endorsing option 2 or lower, and the probability of endorsing option 1. Note also that the case of binary data is actually a special case of this model – that is, it is a model with

probabilities – in this case, the probability of endorsing option 2 or lower, and the probability of endorsing option 1. Note also that the case of binary data is actually a special case of this model – that is, it is a model with

![]() categories.

categories.

Whereas in binary data we used

![]() to represent the probability of endorsing a given item, here we use

to represent the probability of endorsing a given item, here we use

![]() to represent the cumulative probability of endorsing category

to represent the cumulative probability of endorsing category

![]() or lower. In the three-level example just given, thus, every participant would have an implied value of

or lower. In the three-level example just given, thus, every participant would have an implied value of

![]() , the probability of endorsing response option 1, and

, the probability of endorsing response option 1, and

![]() , the probability of endorsing response option 2 or response option 1. Each value of

, the probability of endorsing response option 2 or response option 1. Each value of

![]() has its own linear predictor, which we will denote

has its own linear predictor, which we will denote

![]() . This linear predictor is given by:

. This linear predictor is given by:

(8)

(8)

The new parameter in this equation,

![]() , is the threshold parameter. This parameter represents the value of the latent variable a participant must exceed in order to get a score exceeding

, is the threshold parameter. This parameter represents the value of the latent variable a participant must exceed in order to get a score exceeding

![]() . One critical note is that all possible values of

. One critical note is that all possible values of

![]() and the intercept

and the intercept

![]() cannot all be identified; one of these values must be fixed. The typical solution is to set the intercept

cannot all be identified; one of these values must be fixed. The typical solution is to set the intercept

![]() to zero in the event that individual thresholds are estimated. Readers who would like a comprehensive description of the

to zero in the event that individual thresholds are estimated. Readers who would like a comprehensive description of the

![]() parameter are referred to the original formulation of the model (Reference Bauer and HussongBauer & Hussong, 2009), where it is explained more completely.

parameter are referred to the original formulation of the model (Reference Bauer and HussongBauer & Hussong, 2009), where it is explained more completely.

2.2.2 A Note about Matrices and Vectors

In many cases it is useful to consider all of the above parameters in terms of matrices or vectors, which contain all of the relevant parameters (e.g., all of the loadings, all of the intercepts) for a given model. It is not critical to understand matrix algebra fully to make this distinction; the important thing to know is that, when we designate a matrix or vector of parameters as invariant or noninvariant, that generally means that we are applying the distinction to all of the relevant parameters. For instance, we can consider the factor loadings, each indexed

![]() , as part of a matrix of dimension

, as part of a matrix of dimension

![]() ,

,

![]() .

.

If we say that we are testing the invariance of

![]() , we mean that we are testing the hypothesis that all of the loadings, for all items measuring all factors, are invariant across groups. Similarly, we can place all of the intercepts, individually denoted

, we mean that we are testing the hypothesis that all of the loadings, for all items measuring all factors, are invariant across groups. Similarly, we can place all of the intercepts, individually denoted

![]() , into a

, into a

![]() -length vector, which we will denote

-length vector, which we will denote

![]() . If we are using a model for ordinal data with individual thresholds for each response category, we can put these into a

. If we are using a model for ordinal data with individual thresholds for each response category, we can put these into a

![]() matrix

matrix

![]() . With respect to the distribution of the latent variable itself, we can think of the means of each factor as being stored in the

. With respect to the distribution of the latent variable itself, we can think of the means of each factor as being stored in the

![]() -length vector

-length vector

![]() , and the covariance matrix of the latent variables as fitting into the

, and the covariance matrix of the latent variables as fitting into the

![]() covariance matrix

covariance matrix

![]() . We can also refer to the error terms as a

. We can also refer to the error terms as a

![]() matrix

matrix

![]() .

.

Finally, we can even refer to the data itself as a matrix. The entire collection of all of the items for all of the participants, individually denoted

![]() for participant

for participant

![]() on item

on item

![]() , can simply be referred to as a

, can simply be referred to as a

![]() matrix

matrix

![]() . Similarly, if we believe that subjects each have their own (unobserved) values of the latent variables, we could consider the

. Similarly, if we believe that subjects each have their own (unobserved) values of the latent variables, we could consider the

![]() matrix of these latent variable values, individually denoted

matrix of these latent variable values, individually denoted

![]() for participant

for participant

![]() on latent variable

on latent variable

![]() ,

,

![]() .

.

Though all of these quantities are different from one another, with some consisting of measurement parameters (i.e.,

![]() ,

,

![]() ,

,

![]() ), others consisting of latent variable parameters (i.e.,

), others consisting of latent variable parameters (i.e.,

![]() and

and

![]() ), and others not being parameters at all (i.e.,

), and others not being parameters at all (i.e.,

![]() ,

,

![]() ), the point is the same: when we refer to a given condition applying to a matrix or a vector, we mean that the condition applies to all of the individual elements within that matrix or vector. This way of referring to the elements of a matrix or vector will become an important one when we distinguish two different ways of thinking about measurement invariance and DIF, as some methods consider individual parameters, whereas others consider the entire matrix thereof.

), the point is the same: when we refer to a given condition applying to a matrix or a vector, we mean that the condition applies to all of the individual elements within that matrix or vector. This way of referring to the elements of a matrix or vector will become an important one when we distinguish two different ways of thinking about measurement invariance and DIF, as some methods consider individual parameters, whereas others consider the entire matrix thereof.

3 What is Measurement Invariance? What is DIF?

With the nonlinear factor model defined, we are now able to provide formal definitions of measurement invariance within it. Recall that measurement invariance is thought of as the assumption that the relation between the latent variable and the item is constant across all values of other covariates – these can be demographic variables, such as age, or theory-specific variables, such as SDQ score in our first example. We can define the assumption of measurement invariance mathematically as follows.

(9)

(9)

Here the items and latent variable are referred to as

![]() and

and

![]() , just as before; the new addition is

, just as before; the new addition is

![]() , which represents participant

, which represents participant

![]() ’s value of some variable

’s value of some variable

![]() . Note that we use the term covariate to refer to this variable. Other terms which are sometimes used are background variable or predictor. This covariate can be anything about the participant – gender, age, race, location, and so on. What

. Note that we use the term covariate to refer to this variable. Other terms which are sometimes used are background variable or predictor. This covariate can be anything about the participant – gender, age, race, location, and so on. What

![]() refers to is the probability distribution of

refers to is the probability distribution of

![]() , conditional on

, conditional on

![]() ; that is, it represents the value of the

; that is, it represents the value of the

![]() item the

item the

![]() subject will get, based on their value of the latent variable. Similarly,

subject will get, based on their value of the latent variable. Similarly,

![]() refers to the

refers to the

![]() subject’s value on the item given their value of both the latent variable and the covariate

subject’s value on the item given their value of both the latent variable and the covariate

![]() .

.

What this effectively means is: in the absence of measurement bias on the basis of covariate

![]() , there will be no more information about

, there will be no more information about

![]() that

that

![]() can give us over and above the latent variable itself. Suppose, for example, that we are trying to determine whether an item (

can give us over and above the latent variable itself. Suppose, for example, that we are trying to determine whether an item (

![]() ) measuring children’s math ability (

) measuring children’s math ability (

![]() ) is free of measurement bias on the basis of gender (

) is free of measurement bias on the basis of gender (

![]() ). If there is no measurement bias, then a girl and a boy who each have the same value of

). If there is no measurement bias, then a girl and a boy who each have the same value of

![]() , or the same level of math ability, should get the same exact score on

, or the same level of math ability, should get the same exact score on

![]() . Their score is entirely dependent on the latent variable and nothing else. The challenge, of course, is that we never know someone’s value of

. Their score is entirely dependent on the latent variable and nothing else. The challenge, of course, is that we never know someone’s value of

![]() , because it is latent – we must infer whether measurement bias is present using a few modifications of the latent variable models shown above.

, because it is latent – we must infer whether measurement bias is present using a few modifications of the latent variable models shown above.

The condition shown in Equation 9 is described as full invariance (Reference MillsapMillsap, 2011). Though it describes the ideal situation, in reality it is difficult to achieve – it is generally improbable that there will be absolutely no differences on the basis of some extraneous covariates on any of a test’s items. It is also very difficult to test this condition mathematically. Usually, we are testing a somewhat weaker condition, which is referred to as first-order invariance (Reference MillsapMillsap, 2011). The equation corresponding to first-order invariance is as follows:

(10)

(10)

What this means is that the expected value of

![]() , conditional on the latent variable

, conditional on the latent variable

![]() , does not depend on the covariate

, does not depend on the covariate

![]() . The formulation in Equation 10 is subtly but powerfully different from the one offered earlier. Put another way, it means: even if we cannot guarantee that everything about

. The formulation in Equation 10 is subtly but powerfully different from the one offered earlier. Put another way, it means: even if we cannot guarantee that everything about

![]() is the same across all levels of

is the same across all levels of

![]() , we can guarantee that our predictions for values of

, we can guarantee that our predictions for values of

![]() does not depend on levels of

does not depend on levels of

![]() . Using the example above, this formulation essentially means: an item measuring children’s math ability is free of bias on the basis of gender if, given information about a child’s math ability, knowing their gender would not cause us to make a different prediction about their score on this item. There may be subtle differences in sources of error, which cause actual scores on the item to deviate from predicted scores, but the predicted score would be the same across gender.

. Using the example above, this formulation essentially means: an item measuring children’s math ability is free of bias on the basis of gender if, given information about a child’s math ability, knowing their gender would not cause us to make a different prediction about their score on this item. There may be subtle differences in sources of error, which cause actual scores on the item to deviate from predicted scores, but the predicted score would be the same across gender.

In addition to representing a less stringent condition for the data to meet, this formulation of first-order invariance also allows us to define measurement bias mathematically using the terms we have already presented. Note that, in Equations 3–8, the expected value of

![]() (there denoted

(there denoted

![]() ) is entirely a function of the latent variable

) is entirely a function of the latent variable

![]() and the measurement parameters,

and the measurement parameters,

![]() ,

,

![]() , and, if thresholds are being modeled,

, and, if thresholds are being modeled,

![]() . Thus, since we are dealing with expected values, looking for measurement bias means looking for differences across values of

. Thus, since we are dealing with expected values, looking for measurement bias means looking for differences across values of

![]() in these parameters. So we can mathematically define measurement bias as: any differences on the basis of any covariate

in these parameters. So we can mathematically define measurement bias as: any differences on the basis of any covariate

![]() in the values of the measurement parameters.

in the values of the measurement parameters.

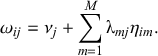

We will now formally define the different types of measurement noninvariance in terms of the different parameters they affect, and the corresponding effects they have on the expected values of items. Using this formulation, we are now ready to distinguish between the different types of DIF we can observe. For this, we refer to Figures 1 and 2, in which the relation between

![]() and

and

![]() are shown based on different values of the parameters introduced in the previous section. Plots such as these are termed trace line plots, and they can be useful tools for understanding the differences between groups in measurement.

are shown based on different values of the parameters introduced in the previous section. Plots such as these are termed trace line plots, and they can be useful tools for understanding the differences between groups in measurement.

We are presenting differences in these parameters in the context of a multiple-groups scenario with two groups. The grouping variable will be denoted

![]() , and it will have two levels, Group 0

, and it will have two levels, Group 0

![]() and Group 1

and Group 1

![]() . Assume that group membership is a known variable (e.g., treatment group vs. control group, or groupings based on self-reported gender and age) rather than an unobserved subgroup. We will refer to the groups in this case as the reference group for

. Assume that group membership is a known variable (e.g., treatment group vs. control group, or groupings based on self-reported gender and age) rather than an unobserved subgroup. We will refer to the groups in this case as the reference group for

![]() and the focal group for

and the focal group for

![]() . This terminology stems from the case in which there is one group with known measurement parameters (the reference group:

. This terminology stems from the case in which there is one group with known measurement parameters (the reference group:

![]() ), and one in which measurement bias is a concern (the focal group;

), and one in which measurement bias is a concern (the focal group;

![]() ): but we can apply it to any two-group case. After this section we will describe the actual model formulation for measurement models which allow DIF. As will be shown, we can model DIF on the basis of many different types of variables, not just grouping variables. However, because it is easier to present differences in terms of a simple comparison, we will first present the types of DIF in the two-group case, without loss of generality.

): but we can apply it to any two-group case. After this section we will describe the actual model formulation for measurement models which allow DIF. As will be shown, we can model DIF on the basis of many different types of variables, not just grouping variables. However, because it is easier to present differences in terms of a simple comparison, we will first present the types of DIF in the two-group case, without loss of generality.

3.1 Differences in the Overall Level of the Item:

As noted earlier, the intercept parameter

![]() denotes the intercept for item

denotes the intercept for item

![]() . This parameter represents the predicted value of the item for a subject with a value of 0 for all of the latent variables. If there is measurement bias in this parameter for grouping variable

. This parameter represents the predicted value of the item for a subject with a value of 0 for all of the latent variables. If there is measurement bias in this parameter for grouping variable

![]() , this means that one of the groups gives higher or lower predicted values of the items than the other, controlling for the latent variable.

, this means that one of the groups gives higher or lower predicted values of the items than the other, controlling for the latent variable.

The interpretation of this parameter depends on the type of item

![]() . If

. If

![]() is a continuous item, then the intercept represents the actual value we predict someone with

is a continuous item, then the intercept represents the actual value we predict someone with

![]() for all

for all

![]() to endorse. So if members of Group 1 have a higher value of

to endorse. So if members of Group 1 have a higher value of

![]() than members of Group 0, then members of Group 1 are predicted to give higher overall responses to

than members of Group 0, then members of Group 1 are predicted to give higher overall responses to

![]() , even if they have the same value of the latent variable

, even if they have the same value of the latent variable

![]() . For instance, suppose that we are measuring a single latent variable, responsive parenting, and

. For instance, suppose that we are measuring a single latent variable, responsive parenting, and

![]() is the amount of time a parent spends playing with their child during a free-play task. Further suppose that parents in Group 1 (which could be any grouping variable – older parents relative to younger ones, male parents relative to non-male ones, parents in a treatment condition relative to the control group) have higher values of

is the amount of time a parent spends playing with their child during a free-play task. Further suppose that parents in Group 1 (which could be any grouping variable – older parents relative to younger ones, male parents relative to non-male ones, parents in a treatment condition relative to the control group) have higher values of

![]() than those in Group 0. In this case, if we took two parents who had the exact same level of responsiveness, but one parent was from Group 1 and the other was from Group 0, we would predict that the parent from Group 1 plays with their child more than the one from Group 0, even though in reality they are equally responsive. One possible relation between the item and the latent variable is shown in the top left portion of Figure 1. Notice that the lines are parallel: it is only their intercept, representing their overall level of responsiveness, that has changed.

than those in Group 0. In this case, if we took two parents who had the exact same level of responsiveness, but one parent was from Group 1 and the other was from Group 0, we would predict that the parent from Group 1 plays with their child more than the one from Group 0, even though in reality they are equally responsive. One possible relation between the item and the latent variable is shown in the top left portion of Figure 1. Notice that the lines are parallel: it is only their intercept, representing their overall level of responsiveness, that has changed.

Figure 1 Examples of uniform DIF in continuous, binary, and ordinal items

Note. Figures plot the expected value of

![]() against the latent variable

against the latent variable

![]() . Note the different intercept values for continuous and binary items, and different threshold values for ordinal items, in the upper left-hand corner of the graphs.

. Note the different intercept values for continuous and binary items, and different threshold values for ordinal items, in the upper left-hand corner of the graphs.

If

![]() is binary or ordinal, the interpretation of

is binary or ordinal, the interpretation of

![]() changes. If

changes. If

![]() is binary,

is binary,

![]() represents the probability of endorsing the item. Suppose we are still measuring responsive parenting, but the item

represents the probability of endorsing the item. Suppose we are still measuring responsive parenting, but the item

![]() in this case is a binary response variable of whether the parent endorses the item: “I try to take my child’s moods into account when interacting with them.” In this case, if members of Group 1 had a higher value of

in this case is a binary response variable of whether the parent endorses the item: “I try to take my child’s moods into account when interacting with them.” In this case, if members of Group 1 had a higher value of

![]() than those in Group 0, then we would predict that a parent from Group 1 who has the same level of responsiveness as another parent in Group 0, would nevertheless be more likely to endorse this item than their counterpart in Group 0. The interpretation is only slightly different for ordinal items. If the item were instead a five-level ordinal one, a higher value of

than those in Group 0, then we would predict that a parent from Group 1 who has the same level of responsiveness as another parent in Group 0, would nevertheless be more likely to endorse this item than their counterpart in Group 0. The interpretation is only slightly different for ordinal items. If the item were instead a five-level ordinal one, a higher value of

![]() in Group 1 would be interpreted as a higher probability of endorsing any category

in Group 1 would be interpreted as a higher probability of endorsing any category

![]() , relative to category

, relative to category

![]() (i.e., the next category down). In this case, we would predict that a parent in Group 1 is more likely than a parent in Group 0 to endorse a higher category, even at the same level of responsiveness. Note that this difference is the exact same across all categories – that is, if Group 1 has a higher value of

(i.e., the next category down). In this case, we would predict that a parent in Group 1 is more likely than a parent in Group 0 to endorse a higher category, even at the same level of responsiveness. Note that this difference is the exact same across all categories – that is, if Group 1 has a higher value of

![]() , then they are more likely to endorse category 4 relative to category 3, category 3 versus 2, category 2 versus 1, and category 1 versus 0. A possible relation between the item and the latent variable is shown in Figure 1, both for the binary and the ordinal cases. Note that, just as in the case of continuous variables, the difference between the trace lines is best described as a horizontal shift, whereby one trace line is associated with higher values of

, then they are more likely to endorse category 4 relative to category 3, category 3 versus 2, category 2 versus 1, and category 1 versus 0. A possible relation between the item and the latent variable is shown in Figure 1, both for the binary and the ordinal cases. Note that, just as in the case of continuous variables, the difference between the trace lines is best described as a horizontal shift, whereby one trace line is associated with higher values of

![]() than the other at every value of

than the other at every value of

![]() . That is, while the expected value of

. That is, while the expected value of

![]() may change, it does so uniformly.

may change, it does so uniformly.

3.2 Differences in the Relation Between the Latent Variable and the Item:

If

![]() can be considered as the intercept for a given item, the loading

can be considered as the intercept for a given item, the loading

![]() represents the regression coefficient that conveys the effect of the latent variable

represents the regression coefficient that conveys the effect of the latent variable

![]() on this item. Thus, if there are differences on the basis of grouping variable

on this item. Thus, if there are differences on the basis of grouping variable

![]() in

in

![]() , that means that there are differences in the nature of the relation between the latent variable and the items. As with

, that means that there are differences in the nature of the relation between the latent variable and the items. As with

![]() , the interpretation of between-group differences in

, the interpretation of between-group differences in

![]() differs based on the scale of the item. However, in both cases it can be interpreted as a regression coefficient, with

differs based on the scale of the item. However, in both cases it can be interpreted as a regression coefficient, with

![]() as the predictor and

as the predictor and

![]() as the outcome. The type of regression coefficient is simply a matter of which type of item we are using – if

as the outcome. The type of regression coefficient is simply a matter of which type of item we are using – if

![]() is continuous,

is continuous,

![]() is essentially a linear regression coefficient, but if

is essentially a linear regression coefficient, but if

![]() is binary,

is binary,

![]() will be a logistic regression coefficient, and so on.

will be a logistic regression coefficient, and so on.

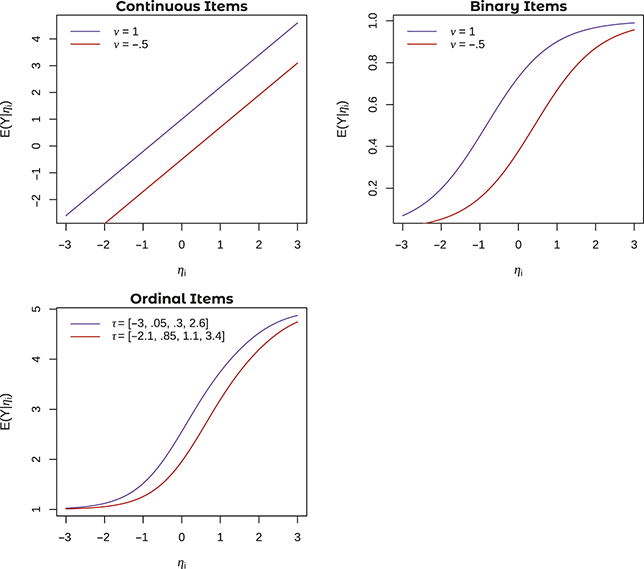

One possible set of relations between

![]() and

and

![]() with different values of

with different values of

![]() is shown in Figure 2. Notice a critical difference between the lines shown here and those shown for between-group differences in

is shown in Figure 2. Notice a critical difference between the lines shown here and those shown for between-group differences in

![]() : here the lines are not parallel, both for continuous and binary values of

: here the lines are not parallel, both for continuous and binary values of

![]() . The relation between

. The relation between

![]() and

and

![]() is stronger in one group than another. Notice that this is the case for all types of items (i.e., continuous, binary, and ordinal). In one group, the predicted value of

is stronger in one group than another. Notice that this is the case for all types of items (i.e., continuous, binary, and ordinal). In one group, the predicted value of

![]() increases more quickly with corresponding increases in

increases more quickly with corresponding increases in

![]() than the other group – that is, the relation between

than the other group – that is, the relation between

![]() and

and

![]() is stronger in this group.

is stronger in this group.

Figure 2 Examples of nonuniform DIF in continuous, binary, and ordinal items

Note. Figures plot the expected value of

![]() against the latent variable

against the latent variable

![]() . Note the different loading values for all items in the upper left-hand corners of the graph.

. Note the different loading values for all items in the upper left-hand corners of the graph.

Consider our responsive parenting example from earlier. Suppose that item

![]() here is the parent’s recall of the number of times they drove their children to school. (Note that, this being a count variable, it would likely be better modeled using a Poisson or negative binomial regression than the linear regression we are proposing here. This example is just an illustration; we will treat it as normally distributed for the purpose of argument.) Further suppose that

here is the parent’s recall of the number of times they drove their children to school. (Note that, this being a count variable, it would likely be better modeled using a Poisson or negative binomial regression than the linear regression we are proposing here. This example is just an illustration; we will treat it as normally distributed for the purpose of argument.) Further suppose that

![]() is a grouping variable based on whether the family lived in an urban, suburban, or rural location. In this case, the number of times the parent drove their child to school may not be a particularly good measure of responsiveness, as it would likely show a weaker relation to responsiveness among urban parents. Parents in urban locations may be very responsive but just not have occasion to drive their children to school, opting instead to accompany them on public transit or walk them to school. In this case, the slope linking

is a grouping variable based on whether the family lived in an urban, suburban, or rural location. In this case, the number of times the parent drove their child to school may not be a particularly good measure of responsiveness, as it would likely show a weaker relation to responsiveness among urban parents. Parents in urban locations may be very responsive but just not have occasion to drive their children to school, opting instead to accompany them on public transit or walk them to school. In this case, the slope linking

![]() to

to

![]() ,

,

![]() , would be smaller among urban parents than rural or suburban ones, indicating that as responsiveness increased we would not expect a corresponding increase in

, would be smaller among urban parents than rural or suburban ones, indicating that as responsiveness increased we would not expect a corresponding increase in

![]() . Similarly, if

. Similarly, if

![]() were a binary item asking a parent to recall the last time they drove their child to school, taking a value of 0 if the occasion was over a week ago and a value of 1 if it was within the past week,

were a binary item asking a parent to recall the last time they drove their child to school, taking a value of 0 if the occasion was over a week ago and a value of 1 if it was within the past week,

![]() would also be smaller. In this case, it would simply mean that, relative to rural or suburban parents, urban parents’ probability of driving their children to school does not increase with corresponding increases in responsiveness.

would also be smaller. In this case, it would simply mean that, relative to rural or suburban parents, urban parents’ probability of driving their children to school does not increase with corresponding increases in responsiveness.

3.3 Differences in the Probability of Endorsing Specific Levels of each Item:

In an item with multiple possible categories a participant could endorse, recall that there are category-specific threshold parameters, denoted

![]() for category

for category

![]() of item

of item

![]() measuring latent variable

measuring latent variable

![]() . A difference between groups may mean many different things, depending on the nature of the item and the differences found therein. In general, such differences occur only if one group is more or less likely to endorse a specific category than the other group, over and above differences in the latent variable.

. A difference between groups may mean many different things, depending on the nature of the item and the differences found therein. In general, such differences occur only if one group is more or less likely to endorse a specific category than the other group, over and above differences in the latent variable.

For instance, suppose we have a four-level ordinal item, and one group has a lower threshold for

![]() . What this means is that members of this group are more likely to endorse category 4 than the other group. A between-groups difference such as this one may happen, for instance, if category 4 refers to an event which is extremely common in one group due to factors which are unrelated to the latent variable. Suppose that in our responsive parenting example we had a four-level ordinal item which asked the parent how frequently in the past week they had helped their child get dressed, with response options including never (1), occasionally (2), often (3), and always (4). If we created a grouping variable on the basis of the child’s age, putting children under three years in the younger group and children over three years in the older group, we might predict that parents with children in the younger group are more likely to endorse the “always” option than those with children in the younger group. Though many children under three years can do some dressing-related tasks, the majority are not able to complete all of the tasks involved in dressing themselves. Perhaps parents of children in the older group would have more latitude to interpret the question – interpreting it, for instance, as the frequency with which they engaged in age-normative tasks relating to dressing oneself such as helping to pick out a matching outfit or helping the child to tie their shoes. But the parents of children in the younger group would be much more likely to say “always.”

. What this means is that members of this group are more likely to endorse category 4 than the other group. A between-groups difference such as this one may happen, for instance, if category 4 refers to an event which is extremely common in one group due to factors which are unrelated to the latent variable. Suppose that in our responsive parenting example we had a four-level ordinal item which asked the parent how frequently in the past week they had helped their child get dressed, with response options including never (1), occasionally (2), often (3), and always (4). If we created a grouping variable on the basis of the child’s age, putting children under three years in the younger group and children over three years in the older group, we might predict that parents with children in the younger group are more likely to endorse the “always” option than those with children in the younger group. Though many children under three years can do some dressing-related tasks, the majority are not able to complete all of the tasks involved in dressing themselves. Perhaps parents of children in the older group would have more latitude to interpret the question – interpreting it, for instance, as the frequency with which they engaged in age-normative tasks relating to dressing oneself such as helping to pick out a matching outfit or helping the child to tie their shoes. But the parents of children in the younger group would be much more likely to say “always.”

As one might intuit from the very specific nature of this example that differences in individual thresholds are often difficult to hypothesize a priori. As will be seen shortly, they can also be computationally challenging to model, which leads to some methodological researchers recommending that such differences be modeled sparingly (Reference Gottfredson, Cole and GiordanoGottfredson et al., 2019).

Differences That Do Not Represent Measurement Bias

We have stated that measurement bias is present if there are differences between groups in the measurement parameters. However, there is another reason that we may observe differences between groups in the latent variables: it may be the case that there actually are differences in the latent variable. In particular, the latent variable mean

![]() , as well as latent variable variances

, as well as latent variable variances

![]() and covariances

and covariances

![]() may differ between groups. We will use the term impact to describe such differences.

may differ between groups. We will use the term impact to describe such differences.

Differences between groups in

![]() , which we will refer to as mean impact, are some of the between-group differences in which researchers are often most interested. Consider our responsive parenting example. In this case, we may be interested in whether one group is actually more responsive on average than the other group. For instance, there is evidence that chaotic home environments (i.e., environments lacking in routine and structure) are conducive to less responsive parenting (Vernon-Feagans et al., 2016; Wachs et al., 2013). If we separated our sample into groups according to household chaos level, we may well find that the mean of responsive parenting was higher among parents who reported living in less chaotic homes, relative to their high-chaos counterparts. Similarly, if we were conducting an intervention trial and measuring postintervention levels of responsiveness, perhaps we would find mean differences on the basis of condition, with those in the treatment condition showing higher means than those in the control condition.

, which we will refer to as mean impact, are some of the between-group differences in which researchers are often most interested. Consider our responsive parenting example. In this case, we may be interested in whether one group is actually more responsive on average than the other group. For instance, there is evidence that chaotic home environments (i.e., environments lacking in routine and structure) are conducive to less responsive parenting (Vernon-Feagans et al., 2016; Wachs et al., 2013). If we separated our sample into groups according to household chaos level, we may well find that the mean of responsive parenting was higher among parents who reported living in less chaotic homes, relative to their high-chaos counterparts. Similarly, if we were conducting an intervention trial and measuring postintervention levels of responsiveness, perhaps we would find mean differences on the basis of condition, with those in the treatment condition showing higher means than those in the control condition.

Differences between groups in the variance components, which we refer to as variance impact, is also common. It may be the case, for instance, that certain groups of parents show more variability in their responsiveness than others. For instance, it may be the case that, in addition to being more responsive overall, parents in less-chaotic homes are more uniformly responsive than those in highly chaotic homes. That is, though we do not have evidence to cite here as in the case of latent variable means, we might speculate that parents living in highly predictable environments are generally all highly responsive, which would mean that the variance of responsiveness in that group would be low since all parents show the same high level of responsiveness. By contrast, parents living in chaotic environments could be more variable in their overall level of responsiveness.

We will now map the above-mentioned differences in the parameters, as defined in this section, onto terminologies and conventions from the SEM and IRT traditions of latent variable modeling.

4 Codifying Measurement Noninvariance and Differential Item Functioning in Different Latent Variable Frameworks

Though measurement invariance and DIF refer to the same fundamental concept, SEM and IRT have different ways of thinking about it. As noted earlier, questions of measurement invariance are typically explored in the SEM framework, particularly within the common factor model; by contrast, the study of DIF has arisen largely from the IRT literature. Critically, field-specific distinctions have faded in recent decades, with both fields’ methods generally being united under the heading of nonlinear factor analysis (Reference Wirth and EdwardsWirth & Edwards, 2007). However, the historical differences between the fields matter, because the procedures, goals, and types of items associated with these two modeling traditions have given rise to a number of differences which the reader is likely to hear as they study measurement-related questions. So we will make a few generalizations about the differences between the two fields, recognizing that they are oversimplified, in the interest of providing a broad summary of a complicated distinction.

The broadest distinction comes down to differences in the patterns of measurement bias that are interpreted, as well as what these patterns are called. We explore these patterns now.

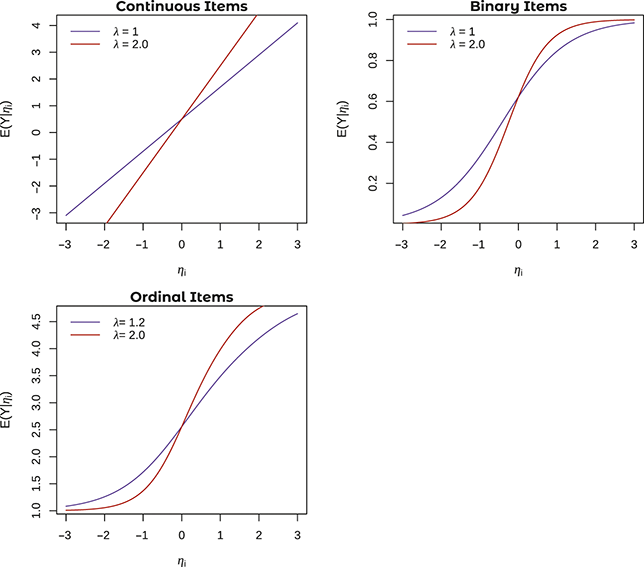

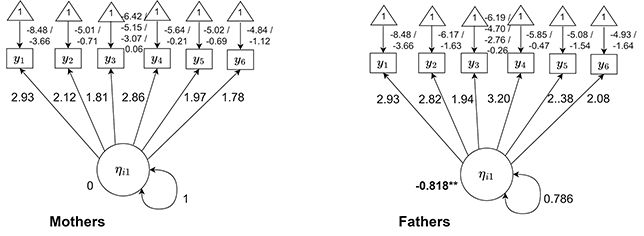

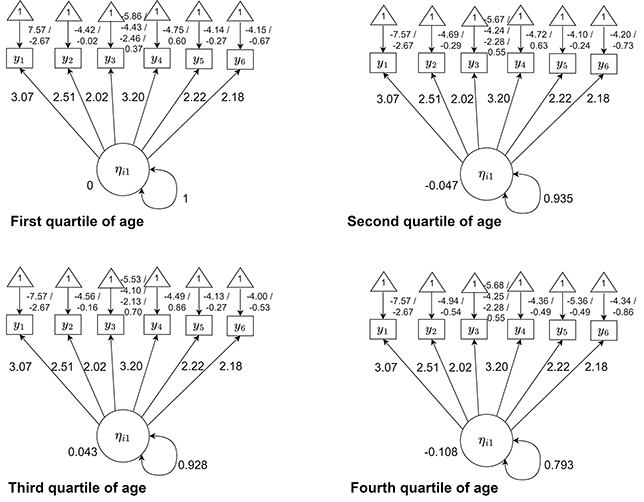

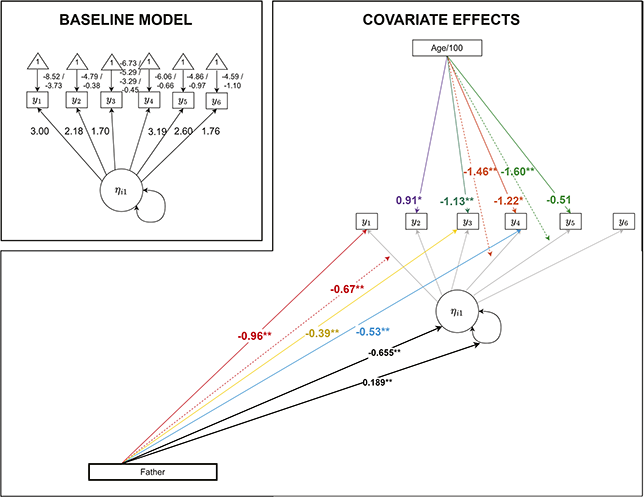

4.1 Types of Invariance in SEM

In general, within SEM the question of invariance is considered at the level of the test, with increasingly stringent invariance conditions which must be met for the results to be interpreted (Reference MeredithMeredith, 1993). These levels are shown in Figure 3. There are a few things to note about the depiction of invariance in Figure 3, some of which reflect broader points about measurement invariance in SEM. First, we are considering a two-group case. The path diagram on the left is for our first group (here denoted Group 0) and the path diagram on the right is our second group (here denoted Group 1). We will discuss multiple-group analysis shortly (“Multiple-groups formulation”). In the meantime, note that the depiction here includes two types of parameters: intercepts

![]() and loadings

and loadings

![]() . This

. This

![]() subscript, which is simply the last number in the subscript of parameters that are allowed to vary across groups, represents group membership. Second, notice that there are no threshold parameters

subscript, which is simply the last number in the subscript of parameters that are allowed to vary across groups, represents group membership. Second, notice that there are no threshold parameters