1 Introduction

1.1 The Danish National Early Language Assessment Project

This Element describes the development and psychometric properties of two language assessment instruments for Danish that were commissioned by the Danish government ministries for children and education. They were developed by the authors of this Element and their colleagues for use in early childhood education and care (ECEC) centers, to be administered by the children’s usual ECEC educators. The background for this governmental commission was a heightened awareness of (1) the great variation in language development already apparent by the end of infancy (Fenson et al., 2007), (2) the association between early language development and later educational achievement (Bleses et al., 2016) and, further downstream, many other life outcomes, and (3) the fact that the provision of publicly subsidized early childhood care from about age 1 along with subsidized public ECEC centers, as well as free regular schools later, did little to close the achievement gap between advantaged and disadvantaged children.

The contextual factors that led to the development of these instruments are present in many other countries and regions, and the preparation of this Element was in part motivated by the belief that aspects of both the instrument design itself and the process by which it was developed will be helpful to researchers and clinicians in other contexts. For closely related languages and similar cultures – for example, other Germanic languages and many other Indo-European languages with alphabetic orthography – a relatively straightforward adaptation might be made. For more diverse languages and writing systems, the process of reviewing basic language development research with an eye toward the practical evaluation of individual differences by educators, and evidence for its success, may be the most helpful contribution. In Section 5, we discuss some of the lessons learned from this project; some readers may find that section helpful before turning to the details of the specific Danish project.

1.2 The Purpose of Language and Language Assessment

The mastery of language is among the most central and significant accomplishments of early childhood due to its exceptionally wide range of functions that permeate individual and social life (Hoff, 2014; Luria, 1981; Wells, 2007). Language has a “public face” in its use for the communication of wants, information, emotional states, and relationships. It has a “private face” as well, as a tool for planning, thought, self-regulation and other aspects of executive functioning, and more. Drawing on both types of functioning, language can serve as a crucial tool for joint efforts and other forms of cooperation. The oral language skills developed in early childhood are also an essential foundation for literacy (Bleses et al., 2016; Harlaar et al., 2008), which will extend all the just-mentioned functions.

Consequently, variation in early language skills – impairments, variation within the normal range, and exceptional ability – has diverse and far-reaching consequences for almost all life domains, including education, vocation, social relationships, mental health, and life satisfaction. There has been an increasing worldwide awareness of and societal focus on this variability in recent decades. Several factors have led to the focus on variability. One is the overall contrast in success between oral language and literacy. The great majority of children (estimates of 93–95 percent: Leonard, 2014) growing up in environments free from the grossest kinds of deprivation attain oral language skills that, while variable, do not substantially impair participation in society. Fewer children – an estimated 80–90 percent – will attain levels of literacy that do not substantially reduce their life opportunities (Kamhi & Catts, 2012). Because literacy demands differ greatly across educational, occupational, and social contexts, there is no single criterion for success; literacy is best considered as a continuous variable for which low ability is an indicator of risk. Another factor is that variability in both early language skills and consequent literacy is substantially correlated with socially relevant factors such as poverty, low parental education, and immigrant status (Hart & Risley, 1995; Hoff, 2006). Thus it is connected to cultural values of opportunity and social mobility across generations. In addition, early language abilities do significantly predict literacy skills even beyond the prediction from the social factors mentioned here, a result that has motivated the examination of distinct aspects of early language development that may be particularly important for literacy. These issues are also relevant and of social concern in Denmark, a relatively affluent welfare society. In fact, social mobility in Denmark is relatively poor, particularly in light of the huge public investments in children and youth. Although Denmark ranks high on the often-cited Global Social Mobility Index, that index is in fact a measure of presumed drivers of mobility, such as healthcare and education access (World Economic Forum, 2020), rather than of mobility itself. But income mobility in Denmark is largely due to redistributional policies, and educational mobility is comparable to that of the US in spite of Denmark’s tuition-free education and monthly student benefits on which people can live while taking part in education (Landersø & Heckman, 2017).

The research findings just mentioned confirm that the rate of early language development can have a continuing influence, and consequently that effective early intervention has the potential to make a pivotal difference in a child’s life. Early language development can either set an initial trajectory toward success and the fulfilment of potential or toward the risk of disappointment and failure. Of course, whether an assessment of delayed language development proves life-changing or not depends on the existence and accessibility of adequate follow-up procedures. In addition to the practical value for individual children, the systematic examination of large samples of language assessments is a powerful tool for researchers who seek to identify the range of factors and their interactions that influence children’s language and early literacy development.

Thus, in the area of language development, as in most other aspects of behavior and development, assessment tools are central to valid work, both in research and in applied work such as clinical and educational practice. With respect to applied work, there are four broad functions of assessment (Snow & van Hemel, 2008). Each of them has its own set of features of highest priority; no one assessment instrument can be ideal for all of them. The first is screening and prediction; that is, identifying children who are at risk of later significant impairments. These children may or may not show current impairments (screening vs. prediction). For this function, the desirable properties of the assessment include rapidity of administration, minimal requirements for child compliance, and diagnostic validity, especially at the lower extremes of performance, not just correlations across the full range. The second is identification of service eligibility. Based on current performance, the results of longitudinal predictive research, and resource availability, some children can be offered interventions that go beyond the existing universal programs. Service delivery decisions are inherently based on a categorical classification, though it may comprise more than two categories, as in response-to-intervention (RTI) approaches (Fuchs et al., 2012). The criteria are somewhat arbitrary, because virtually all measures and predictive correlations are continuous, without all-or-nothing cut-off points. That is, in most cases we are dealing with quantitative variation along a dimension, not qualitatively different categories. For this function, the desirable properties of the assessment include reliability and validity (which take priority over cost), breadth of assessment (multiple aspects of language), and high-quality norms for the measure. The third function is to obtain diagnostic information, a more detailed characterization of the impairment that can guide the design of effective intervention for the individual child or group of children. For this function, the most desirable features of the assessment include sensitivity to the academic and cultural importance of the skills and an identification of good points of “leverage” for affecting the broader language system. Finally, the fourth function of assessment is to evaluate the efficacy of the intervention, which is a core concept of “evidence-based practice.” Desirable properties of efficacy measures include repeatability for monitoring change over time, moderate cost in time and money, the inclusion of “near” and “far” skills relative to the intervention to gauge the generalizability of gains, and demonstrated sensitivity to change.

1.3 Typical and Atypical Development in the Preschool Years

The knowledge and skills that comprise competence in any language are complex and diverse. Linguists and others who study language often group them into four broad categories, all of which are developing in the preschool period (Hoff, 2014), although the exact number of categories and the boundaries between them vary across linguistic theories (e.g., Crystal, 2010). The first is phonology, the sound system of a language. Core aspects of phonology are the set of sounds used in the language and their contextually conditioned realization, along with the permitted sequential patterns of sounds; that is, the phonotactics of the language. For example, some Bantu languages of southern Africa include clicks – sounds produced by the intake of air (specifically, an ingressive, velaric airstream) – in contrast to the more frequently used sounds in most of the world’s languages, which are produced by exhaling air from the lungs (specifically, an egressive, pulmonic airstream); Mandarin includes tones to contrast meaning; English has an unusually large set of vowels, and Danish an even larger one that, depending on the specific phonological analysis, outnumbers the set of consonants. The word-initial sequence kn is not permitted in English, though it is in certain related languages such as German or Danish; certain sequences of three consonants such as str are permitted in English but not in Japanese.

The second category is lexicon (alternative terms often used are vocabulary and semantics). It includes knowledge of the basic units of meaning, which are sometimes words and sometimes parts of words (derivational morphemes) that can be used to form new words by adding to a root word. For example, if narg is a verb, then a narger is someone who nargs. The prefix un– can be used to change the meaning of many adjectives into their opposite; for example, unhappy, uncool. Note that in a number of linguistic theories, knowledge with respect to units smaller than words is classified with morphology (and syntax) rather than lexicon.

The third category is morphology and syntax (sometimes collectively referred to as grammar), which is focused on combinations of the smaller units of meaning. It includes inflectional morphology, in which the addition of inflectional morphemes does not change the meaning of the word but adds something to it; for example, dog plus the ending –s means more than one dog. It also includes syntax – the rules for combining meaningful elements to generate a complex meaning. For example, in English, The man bites the dog means something different from The dog bites the man despite the identical words used; word order is a primary mechanism in this and many other languages. In numerous other languages, similar types of meaning difference are signified by the use of inflection and prepositions, while word order is more flexible, for example, in German or Spanish.

Finally, the fourth category is pragmatics – the knowledge that makes it possible to express one’s own feelings and wishes, to comment, to make requests, and to perform other functions, as well as the ability to engage in coherent, meaningful conversation and narrative and to use context and knowledge of the world to help interpret intended meanings. Humor and irony are also important contributors to communicative effectiveness, as is the ability to indicate the speaker’s view of the truth or validity of what is being said. For example, languages may have regular means for expressing the source of information and the speaker’s confidence in it. An important component of pragmatics is the knowledge of how to be polite (or impolite, when that is the desired act).

Table 1 presents some major milestones of language development in these four domains between birth and school age (Hoff, 2014; Lightfoot et al., 2013; Saxton, 2017). It should be kept in mind that this information is based on the development of monolingual children in typical environments for their language community. The table is a broad summary generalization across languages, but it should be acknowledged that the largest body of evidence is on English and that there are variations across languages as well as language domains. In Danish, early vocabulary development is somewhat slower on average than for other European languages (Bleses et al., 2008b), and selected aspects of morphological development are slower than in other Nordic languages (Bleses, Basbøll, et al., 2011), whereas early phonological development is relatively advanced in Danish children (Clausen & Fox-Boyer, 2017). However, at the level of detail reported here, the table is generally accurate.

Table 1 Some major milestones of language development in four domains during the preschool period

| Domain | Approximate age (months) | Milestone |
|---|---|---|
| Phonology | Birth | |
| Phonology | 6–7 | |
| Phonology | 10–12 | Canonical babbling (CVCV sequences) with stress and intonation |
| Phonology | 30–36 | Completion (or near-completion) of phonemic inventory |
| Phonology | 36–42 | Beginnings of phonological awareness, e.g., rhyme |
| Lexicon | 4–6 | Recognizes own name |
| Lexicon | 8–10 | Understands a few words |
| Lexicon | 12–14 | Produces first word |
| Lexicon | 18–20 | |
| Lexicon | 28–30 | 500-word productive vocabulary |
| Lexicon | 60 | 6,000-word vocabulary |
| Morphology and syntax | 18–20 | First word combinations |
| Morphology and syntax | 24–30 | Grammatical morphemes (plural, past, etc.) |
| Morphology and syntax | 27–33 | Negative and question forms |
| Morphology and syntax | 36–42 | First complex (multiclause) sentences |
| Morphology and syntax | 42–48 | Use of derivational morphology to understand and create new words |
| Pragmatics | 7–10 | Use of vocalizations and gestures to attract adult attention, get desired objects and actions |
| Pragmatics | 14–20 | Expanding range of communicative purposes of language, e.g., greetings, comments, requests for absent object |
| Pragmatics | 24–30 | |
| Pragmatics | 30–36 | Creation of indirect requests, e.g., “You’re sitting on my dolly” |
| Pragmatics | 36–48 | Development of narrative skills |

It is also important to realize that these are just averages, and there is considerable variability even among typically developing children. There are moderate correlations among the various aspects of language development; there is also some evidence for causal effects among them, such as lexical development serving to stimulate morphological development, but it also appears that environments that are facilitative for one aspect of language are usually beneficial for other aspects. In any case, the correlations are far from perfect, and that fact implies that in many situations, it is important to assess two or more of them. Also, given the near-universal use of vocabulary size as a measure, it is important to acknowledge that words differ in their ease of acquisition, even when the frequency with which they are heard by children is taken into account. For example, word class (nouns, verbs, adjectives, etc.), imageability of the word meaning (dog vs. happy vs. idea), and the number of similar-sounding words all influence the typical age at which particular words are acquired (Hansen, 2017).

The skills just described can be viewed as the primary oral language skills. All of them are foundational for literacy development, but lexicon and pragmatics are especially important both theoretically and for assessment. There is a large body of evidence that early vocabulary size is a predictor of later literacy (Bleses et al., 2016; Lee, 2011). One reason for the prediction is that for early readers, it is necessary to know a word in order to be able to read it; another is that the knowledge of other words in a sentence can facilitate the reading of each individual word and eventually learning the meaning of new words. Among pragmatic skills, the ability to understand and produce narratives is important for literacy (National Early Literacy Panel, 2008). Narratives – that is, stories – are the first step in mastering decontextualized language; that is, language about objects, people, and events remote in time and space. Ultimately, almost all print will be decontextualized in this sense.

There are other language skills, not as essential for face-to-face conversation but crucial for later literacy, namely preliteracy skills (Catts et al., 2015). For sound-based writing systems typical of European languages and many others, the most important of these, and among the best early predictors of literacy, are those that require awareness and manipulation of sounds (National Early Literacy Panel, 2008). For purposes of talking about a feline, cat can be considered a single unit; in contrast, to understand or generate rhymes – that is, to realize that some parts of the words cat and hat are the same and some are different – it is necessary to mentally divide the sound of the word into smaller units. This ability is an aspect of phonological awareness. A related test of phonological awareness is the deletion task; for example, “say window without dow” or “say sat without sss.” Phonological memory – the ability to hold a sequence of sounds in memory and reproduce it later – is also highly relevant for literacy in sound-based writing systems. The nonword repetition task, which removes meaning as a possible cue in order to assess the purely phonological skill, is the most commonly used measure of this skill (Hoff et al., 2008). An example is “say volpitate.”

There are other important pre-reading domains (National Early Literacy Panel, 2008). They include letter knowledge, both recognizing the shapes and having some sense of the sound they denote, and other concepts of print, such as the direction of reading a page (left to right vs. right to left), the order of pages, and an awareness of whether it is the pictures or the abstract shapes on the page – the letters – that are being read.

Although there is great commonality in language development within a language community, there is also great variation, particularly in rate of development, which motivates the development and use of assessments due to the predictive significance of early variation. The variation is seen even among typically developing children, as well as in children with specific impairments such as autism spectrum disorder, Down Syndrome, and Developmental Language Disorder (this clinical term is related to Specific Language Impairment but differs in that in Developmental Language Disorder, a nonverbal–verbal discrepancy is not required; the term is increasingly preferred) (Paul et al., 2018). Most early impairments can be seen as delay in rate, rather than a qualitative difference. However, for certain clinical categories, there are also qualitative differences in the relationship among language components. For example, children with Down Syndrome often have relative strengths in pragmatics; children with autism spectrum disorder may not show the usual superiority of comprehension to production.

A wide range of biological and environmental factors influence or at least predict rate of development. Biological factors include genetic endowment, gender, and prenatal, perinatal, and other biomedical factors. Environmental factors include family socioeconomic status (SES; often indexed as parental education level), early language input (quantity and quality of child-directed language), and any intervention received. It is important to recognize that biological and environmental influences interact in multiple ways. For example, the input a child receives may be influenced by the child’s gender, level of language ability, or simple talkativeness. SES may influence prenatal and perinatal experience due to, on the positive side, the availability of good medical care, and on the negative side, the influence of stress, poverty, or drugs. A family history of difficulty in learning oral language or reading probably presents both a biological and an environmental risk to the child.

Although many developmental disorders (e.g., Down Syndrome, Fragile X, autism spectrum disorder) have as one of their consequences impairment in language development, the majority of children with language delay do not present with such a primary disorder (Leonard, 2014). The language disorder is the primary disorder, though there may be some other closely related problems, such as with executive functioning or symbolic play. This fact is another motivation for the early assessment of language itself, as it may be the earliest sign of developmental difficulty. Nevertheless, it should be kept in mind that the significant overall prediction from early language delay to later development is still relatively modest (Dollaghan, 2013) and thus of limited clinical use by itself. Ideally it should be integrated with other types of information such as the nature of early language input, gender, and other factors listed previously (Rescorla & Dale, 2013).

An inherent challenge in all assessment of language is that while delay is a continuous variable, without any qualitatively defined “cut-off points” (Leonard, 2014; Rescorla & Dale, 2013), service eligibility generally requires a classification criterion. Although we have evidence that, overall, the more significant the delay early in development, the more likely it is that there will be difficulties later, that evidence still does not identify a specific criterion. Pragmatic issues such as the availability, nature, and cost of interventions are likely to determine the cut-off point to be used (but see the discussion of RTI in Section 1.4).
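
The tension between a continuous score and a categorical eligibility decision can be illustrated with a short sketch. The Python below is hypothetical throughout: the function name `flag_lowest_percent`, the scores, and the two percentages are invented for illustration and do not reflect the cut-offs used by any instrument discussed in this Element. It applies a nearest-rank percentile cut-off to the same set of scores at two different levels, showing that the choice of cut-off, not any break in the score distribution, determines how many children are classified as eligible.

```python
def flag_lowest_percent(scores, percent):
    """Flag children whose score falls in the lowest `percent` percent.

    Uses a nearest-rank rule on the sorted scores; `percent` is an
    integer between 1 and 100. Returns (flags, threshold), where
    flags[i] is True when scores[i] is at or below the threshold.
    """
    ranked = sorted(scores)
    # Ceiling of percent * n / 100 in integer arithmetic, at least 1.
    k = max(1, (percent * len(ranked) + 99) // 100)
    threshold = ranked[k - 1]
    return [s <= threshold for s in scores], threshold


# Hypothetical continuous scores for twenty children.
scores = [55, 61, 48, 70, 66, 52, 59, 73, 45, 64,
          68, 57, 50, 62, 71, 47, 60, 65, 54, 69]

flags_5, threshold_5 = flag_lowest_percent(scores, 5)
flags_15, threshold_15 = flag_lowest_percent(scores, 15)

print(sum(flags_5), "child(ren) flagged at the 5 percent cut-off")
print(sum(flags_15), "child(ren) flagged at the 15 percent cut-off")
```

In this invented sample, moving the cut-off from the lowest 5 percent to the lowest 15 percent changes the number of flagged children from one to three, although nothing about the children or their scores has changed.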

1.4 Language Assessment in an Educational Context

A broad characterization of the functions of assessment was presented in Section 1.2. In this section, which is focused on language and preliteracy assessment, we consider some of the most frequent purposes of assessment in an educational context (Snow & van Hemel, 2008). The most common of these is to identify children with specific individual needs relative to the goals and methods of the educational setting. Achieving this purpose may include screening if the assessment is just a first step, administered to all children, to be followed by in-depth assessment when indicated by the initial results, or it may be diagnostic, when the results are used directly in decisions concerning educational management and service delivery. An increasingly common variation on the diagnostic function is the core component of RTI approaches (Fuchs et al., 2012). Rather than applying a dichotomous classification criterion to all children, in RTI, continuous monitoring of children can lead to increasingly intensive interventions, first within the classroom and then moving to individualized services as needed. Conversely, good performance following that intervention will return children to the regular program. Language assessment may also serve to evaluate children’s mastery of a second language, which is the language of instruction, to determine whether further second language instruction is needed. Low scores on these assessments need not imply that the student has an impairment, only that the level of mastery of the language of instruction in school is not yet sufficient for academic learning. Language assessment may also be a useful component of monitoring the effectiveness of interventions in many early childhood research projects when these interventions enroll children in the thousands, making research-based pre-testing and post-testing practically impossible. There is a growing awareness that the monitoring of effectiveness is important for everyday clinical practice as well; even educational programs using well-established curricula need to evaluate how well they are serving their students. Finally, language assessments provide a basis for providing feedback to parents, along with educator observations of their children’s participation in the programs.
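
The RTI logic described earlier in this section, in which repeated monitoring moves a child toward more intensive support or back toward the regular program, can be sketched as a simple tier-tracking routine. The Python below rests on assumptions that are not in the source: the three tier labels, the single fixed benchmark, and the rule of moving one tier at a time per assessment are all hypothetical simplifications, and the names `TIERS`, `next_tier`, and `track_tiers` are invented for this illustration.

```python
# Hypothetical tier labels, ordered from least to most intensive.
TIERS = ["universal program", "classroom-based support", "individualized services"]


def next_tier(current, score, benchmark):
    """Return the tier index after one monitoring assessment.

    Assumed rule (not from the source): a score below the benchmark
    moves the child one tier up in intensity; a score at or above the
    benchmark moves the child one tier back toward the regular program.
    """
    if score < benchmark:
        return min(current + 1, len(TIERS) - 1)
    return max(current - 1, 0)


def track_tiers(scores, benchmark):
    """Apply next_tier across repeated assessments, starting in tier 0."""
    tier = 0
    history = []
    for score in scores:
        tier = next_tier(tier, score, benchmark)
        history.append(tier)
    return history


# Hypothetical monitoring scores for one child across four assessments.
history = track_tiers([40, 42, 55, 58], benchmark=50)
for step, tier in enumerate(history, start=1):
    print(f"assessment {step}: {TIERS[tier]}")
```

The point of the sketch is only that placement is revisited at every assessment rather than fixed by a single dichotomous classification; real RTI implementations typically use more than one data point and professional judgment before changing a child's level of support.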

Informal observational assessment of language skills in an educational context has its own distinctive challenges. Both the group setting and the predominance of teacher-led activities imply that individual children have less opportunity to speak, and therefore there is less basis for observational assessment. Many contexts and topics of language use occur much less frequently, if at all, in the classroom compared to the home setting. On the other hand, new and important contexts will emerge or be more frequent in the classroom, such as language about more complex and/or abstract topics and responding to teacher “test questions.”

Although both receptive and expressive language are essential skills and need to receive attention in assessment, receptive skills take on an even greater role in the classroom due to the emphasis in early education on introducing new concepts and relevant language for those concepts. This new language is much more likely to be mastered first at a receptive level rather than at an expressive level (Bornstein & Hendricks, 2012; Fenson et al., 2007). The effective assessment of receptive skills is often more difficult than noting whether the child can produce a word.

A third challenge reflects the increasing prevalence of bilingual and multilingual children with diverse other languages in the classroom (Hoff, 2015; Paradis et al., 2021). For these children, it is often the case that the language of instruction is a second language. Depending on the purpose of the assessment, it may be appropriate to assess only in the second language; for example, to evaluate the efficacy of instruction in second-language oral or written skills. But this narrow focus may be inappropriate when the goal is to decide whether the child has impaired language skills (Paul et al., 2018). For that purpose, assessment in the first language is also essential, but this prescription may be difficult when there are many first languages represented in the classroom, with varying availability (including none) of assessment tools in those languages (see Section 1.6 below). Note, however, that ambiguity in the interpretation of second-language assessment results is mainly confined to bilingual children who obtain low assessment scores. When bilingual children obtain second-language assessment scores within the typical range for native monolingual children, there is little reason to suspect impaired fundamental language skills.

Language assessment techniques for young children fall into three main categories (Marchman & Dale, 2018; Snow & van Hemel, 2008). The first is structured testing. Structured tests are highly efficient with respect to testing time, and they are well-suited for evaluating receptive skills; for example, with picture-pointing tasks. However, they do require a certain amount of time and, more importantly, training on the part of those doing the assessment, as both the administration and the scoring of individual items can be highly complex. Above all, they require the cooperation of the young child, which is particularly challenging below 2½–3 years.

A second assessment format is language sampling, widely used in basic research on early language development. A major strength of this observational technique is that it does not require the imposition of constraints on the child’s behavior. However, it requires highly trained personnel for eliciting, transcribing, and analyzing the child’s language. Transcription and analysis are extremely time-consuming. Two other limitations of language sampling are, first, that it is better suited for studies of language production than of comprehension and, second, that there are substantial contextual effects such that words and grammatical structures that are in fact within the child’s repertoire may not occur in a given situation if the occasion does not arise. For example, words for extended kinship relationships (e.g., grandfather), toys, bathing, and sleeping are less likely to occur in the classroom, while more advanced words for spatial relationships, quantity, and time are more likely to occur there than at home.

A third approach has become increasingly common in studies of early child language, and that is parent report (Fenson et al., 2007). Parent report is based on extensive experience with the child, and experience that is highly diverse: at play, during meals, while bathing, at bedtime, and with themselves, the other parent, grandparents, siblings, and friends. The strength of parent report is that it represents an aggregation across a period of time and many situations, and thus it is less affected by contextual bias. Considerable research evidence has confirmed that parent report is most effective when it is focused on current function, not retrospective reports such as “When did your child say her first word?” and when it utilizes a recognition format rather than recall; for example, “Have you heard your child say green?” rather than “How many (or what) color words has your child said?” Although parents can often report on children’s comprehension ability, this method appears most valid in the assessment of production. Recent research has extended parent report beyond vocabulary and grammar to other dimensions of language such as intelligibility (McLeod et al., 2015) and pragmatics (O’Neill, 2007).

1.5 Educator-Administered Language Assessment

Three generalizations point toward educator-administered assessment. First, the most frequent purposes of assessment in early childhood education programs are screening and monitoring child language development and the effectiveness of ECEC intervention. Second, interaction in the classroom is distinctive in involving multiple children and linguistic predominance by educators. Third, the resources available in terms of educator time and training are limited. Given these points, educator-administered assessment in the form of reports and direct testing appears to be the most appropriate. Two such measures are the focus of this Element.

Parental report can also be of value, particularly with respect to the generalization of the linguistic skills that are the focus of the program to other settings and conversational partners. However, there are limitations to parent report as a primary measure of children in educational settings (Bleses, Jensen, et al., 2018; Dale, 1996).

Some parents may have low literacy levels, which hampers the validity of a questionnaire-based assessment (Roberts et al., 1999), and an interview format for the measure may be difficult or expensive to arrange. For some proportion of children of immigrant families, the language being assessed – the language of the classroom – is a second language, in which parents may have limited fluency and which they may not speak much at home. This linguistic mismatch is of even greater significance when some of the assessment is directed toward “pre-academic language” of numerical and spatial concepts, causality, classification, etc., which is less likely to be used in the home. In fact, a previous version of LA 3–6 used parent report. However, feedback from educators gave the clear picture that obtaining these reports from parents was a huge and often frustrating task and that the reports were disproportionately often not completed by the parents of the children who, statistically, were at the greatest risk for language development issues, namely parents in at-risk families.

Educator-based assessment of language has several potential advantages as well as challenges. Educators have sustained experience with individual children, which is not available to more specialized professionals such as speech-language therapists, who may have only one session or a small number of sessions with a given child and who are, in any case, typically of limited availability in early childhood programs. At the same time, ECEC educators have experience with multiple children, providing a stronger basis for comparative evaluation among children than is possible for parents. They may or may not have received some training in language and/or assessment. They can provide both reports and simple test information about children. They are well-positioned to conduct repeated assessments to monitor the efficacy of intervention programs. There is also growing evidence that during the school years, teachers can provide valid assessments of, for example, reading (Dale, Harlaar, & Plomin, 2005). Another argument is that educators can use the result to improve language experiences for individual children as part of their educational practice.

Two recent educator-based language assessment projects have provided encouraging results. Both of the projects were based on adaptations of the MacArthur-Bates Communicative Development Inventories (CDIs; Fenson et al., 2007). Bleses, Jensen, et al. (2018) constructed a short version of the Danish adaptation of the CDI: Words & Sentences for 18–34-month-olds. The measure, CDI: Educator, included a seventy-item vocabulary checklist, with words selected from the longer CDI: Words & Sentences for their appropriateness to educators’ classroom experience with children, as well as questions concerning the child’s use of decontextualized language. The reliability and validity of the measure were judged adequate based on a sample of 5,097 children. Garmann et al. (2019) piloted the use of a Norwegian adaptation of the MacArthur-Bates CDI-III (Fenson et al., 2007), which is a brief measure for 3-year-olds. CDI-III reports from the parents of twenty-eight children were compared to those of educators in the children’s ECEC centers. The parents gave slightly higher scores than the educators did, but parent and educator scores were significantly correlated (intra-class correlations of between 0.47 and 0.71). In addition, CDI-III scores were correlated with spontaneous speech measures. CDI-III vocabulary scores were significantly correlated with number of word types in spontaneous speech (0.48), and CDI-III sentence complexity scores were significantly correlated with mean length of utterance in spontaneous speech (0.41). CDI-III grammar scores were, however, not significantly correlated with the number of grammatical errors in spontaneous speech. The authors concluded that educator reports of child language skills can provide good estimates of children’s skills for some but not all language domains.

At the same time, there are challenges for educator-based assessment. Although the classroom (and the teacher’s previous experience) provides a basis for comparison, it is inherently a divided attention situation, as the educator must attend both to other children and to the full range of goals of the program, including behavior management. Another challenge is the opportunity for bias, even if unintentional. The bias may stem from the belief that the program is successfully facilitating growth, or from expectations based on a child’s previous assessment or other skills, such as social-emotional skills. The potential challenge of bias applies to direct testing as well as to reporting; becoming an effective test administrator requires the development of skills to minimize unintentional cuing of the correct response or incorrect coding of responses that are not entirely clear (Sattler, 2001). Overall, testing requires a more skeptical perspective on a child’s performance than the supportive perspective essential to good teaching, and this necessitates the careful wording of instructions.

1.6 Assessment of Children Learning Two or More Languages

The assessment instruments discussed in this Element are focused, with one exception to be discussed later, on the acquisition of Danish. Danish is the language of education in schools for virtually all children in Denmark and a key skill for successful experience in the larger society. Assessment of Danish is relevant and sufficient for determining how well children have currently mastered Danish, for identifying children who will need additional help, along with information for designing that help, and for evaluating ECEC programs. However, monolingual (here Danish-only) assessment is not sufficient or appropriate for determining whether a bilingual child has a language impairment in cases when bilingual children receive relatively low assessment results (whereas relatively high scores in the Danish assessment suggest no general language impairment, in which case Danish assessment is sufficient). This caution concerning low assessment scores is due to the fact that young bilingual children typically learn each of their languages at a slower rate than monolingual children learning that language (Bosch & Sebastián-Gallés, 2003; Hoff et al., 2012; Paradis et al., 2021). When competence in both languages is taken into account by adding measures such as vocabulary across languages, scores are quite similar for bilingual and monolingual children. These are not surprising facts; bilingual children have had less input in each language, because their experience is divided by language. And in fact there is much evidence that parents’ estimates of the time spent in each language predict children’s relative proficiency in each language (Hoff et al., 2012; Paradis et al., 2021).

The task of obtaining and integrating information about both languages in order to identify a language impairment is a very challenging one, given the increasing number of bilingual children and the number and diversity of other languages found in many countries, including Denmark, at present. For many languages there are no well-validated language measures, and even when they do exist, they are likely to require some ability in the language being tested.

Parent report measures are available for a wider range of languages, but they vary in degree of validation and in the extent to which some ability in the language is necessary to interpret the parent’s responses. Educators cannot be expected to have the training and experience to deal with these issues or the technical issues of combining information from two or more languages. For this reason, there has been an attempt to develop a language-independent measure, one that could be translated into other languages as needed. Measures of this type typically consist of questions that rely on parents’ general knowledge of children’s language at this stage of life, such as “For your child’s age, does your child have difficulty pronouncing words?” and “Do you have to repeat questions or directions to your child more often than to other children?” (Restrepo, 1998). As will be discussed in Section 3.5.4, a measure of this type is included in LA 3–6.

With some, albeit limited, information about mastery of the other language, along with information about the child’s relative exposure to the two languages, it is possible to make an initial judgment as to whether there is an impairment. Nevertheless, when the evidence suggests a possible impairment is present, it is best to refer the child for more detailed evaluation by a speech and language professional who can call on more complex methods of assessment, such as dynamic assessment.

But why is it that establishing norms for bilingual language development is such a difficult, if not impossible, task? Bilingual or second-language acquisition varies depending on a range of developmental and contextual factors such as: At what age did second-language acquisition commence? How many languages is the child exposed to? What proportion of each language is the child exposed to? How closely related are the languages that the child is acquiring? Because of the multiple combinations of answers to such questions, a considerable number of disparate norms would have to be developed. To illustrate the difficulties, assuming four ages of learning the second language (ages 0, 1, 2, or 3), three levels of the amount of second-language input (approximately 25%, 50%, or 75%), there would be 4 × 3 = 12 basic contexts for children learning just two languages, with different developmental trajectories likely in each case. Separate norms would have to be developed for each minority language (e.g., Turkish, Arabic, Polish, etc.), meaning that to assess bilingual speakers of just the 10 largest minority languages in a given country fairly, 120 norms would have to be developed. However, even this calculation does not take into account children with more than two languages, and it also implies the very unlikely assumption that relative language input in the first and second language remains stable over time. Ideally, second-language norms should also be established for each language combination, doubling the size of the feat to be accomplished.
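The arithmetic in this illustration can be written out as a short Python sketch. The variable names are hypothetical, and the figures are simply those assumed in the illustration above; the sketch is not a claim about how many norms would be needed in any real setting.

```python
from itertools import product

# Figures assumed in the illustration above (not empirical values).
ages_of_onset = [0, 1, 2, 3]        # age at which second-language learning begins
input_levels = [25, 50, 75]         # approximate percent of second-language input
n_minority_languages = 10           # e.g., the ten largest minority languages

# Every combination of onset age and input level is one basic context.
contexts = list(product(ages_of_onset, input_levels))

# One set of norms per context and per minority language.
n_norms = len(contexts) * n_minority_languages

# Norms for each language combination as well would double the task.
n_norms_doubled = 2 * n_norms

print(len(contexts), n_norms, n_norms_doubled)
```

Adding a further factor, such as a third language or input that changes over time, multiplies the count again, which is the point of the illustration.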

Even if time and money were no issue, it would be a practical impossibility to establish such norms, at least if the aim were norms with the same degree of quantitative robustness and age resolution (monthly) as those for the monolingual instruments described in Section 3.6. There are not enough children. In the light of these and other issues discussed in Section 1.5, our approach is to guide practitioners’ interpretation of single-language (Danish) results for bilingual children by taking into account questions about the child’s language acquisition history and context, such as those we have outlined. In addition, certain dimensions of language and preliteracy development are especially informative in the case of bilingual children, which will be discussed in Section 3.5.3.

2 Educational Language Assessment in the Context of a National Screening Program

Language assessment programs are typically placed in health services for reasons of accessibility, as is the case, for example, in Norway, which in many ways is very similar to Denmark, the focus of this Element (Klem et al., 2015). In contrast, the Danish language assessment instruments are designed to be administered by the educational staff in ECEC centers serving children aged 1 to 5 years and in Grade 0 (approximately age 6) as a tool to carry out the national language assessment program of children, which covers children in the age range of 2 to 6 years. According to current legislation (2020), each municipality must ensure that a language assessment is carried out for all children whom ECEC educators suspect may have nontypical or slow Danish language development and all children not in childcare. There are no requirements to use a specific language assessment instrument. However, regulations about who must have a language assessment have changed over the years.

In 2007, when the first version of the Danish assessment instrument was launched, the Danish government decided to implement language assessment for all 3-year-olds as an integrated part of educational practice in ECEC centers. Denmark has a near-universal ECEC system, and municipalities are obliged to ensure the availability of daycare for all families. Consequently, 97 percent of all 3–5-year-old children are enrolled in ECEC (Danish Ministry for Social Affairs, 2015). The number of infants and toddlers in ECEC has increased and, according to the Ministry for Children and Education, three out of four children are enrolled in ECEC even before they turn 3. On average, these children are about 10 months old when they start in center-based childcare or the parallel option of professionally supervised childcare in the private home of a home-based childcare provider (Ministry for Children and Social Affairs, 2018a). The universal ECEC system is therefore a highly suitable context for reaching nearly all children in Denmark.

By embedding the language assessment in an educational setting, the use of the Danish language assessment is broader than typical national assessment programs. The assessment instruments are intended to serve as a tool for the early detection of signs of language delays or disorders in individual children by assessing a broad array of language and preliteracy domains. However, at the same time, the instruments are intended to serve to inform the staff about the strengths and weaknesses of each individual child’s Danish language and literacy development with the aim of tailoring the educational program to the needs of each child in the ECEC center.

The Danish government commissioned the researchers behind this Element to develop the instrument, taking into account the broader educational purpose of the language assessment while at the same time ensuring scientific standards. Situational assessment in an educational setting has implications for the development of the instruments, in particular concerning the specific procedures used to assess each domain. For instance, even though nonword repetition is a standard procedure for assessing phonological awareness, we found via pilot testing that this task cannot be expected to be administered reliably by educational staff, who are not trained in phonetics. We followed a consistent policy of discarding otherwise plausible procedures or specific tests when pilot tests demonstrated that educational staff could not administer them reliably (e.g., the assessment of narrative skills).

A general lesson to be learned from embedding language assessment in an ECEC context with the purpose of assessing all or a great proportion of children is that educators may initially doubt whether they can do it. There may also be some initial resistance from some educators or factions within the ECEC community. However, our experience over the last fifteen years has been of a steady decline in resistance to language assessment in the ECEC context to the point where it is virtually nonexistent now.

3 A Language Assessment Instrument for 3–6-Year-Olds

3.1 Background

The first version of our language assessment instrument – Screening instruments for 3-year-olds (Bleses et al., 2010) – was developed on the basis of the available international research on the assessment of children’s language development (see, for example, the report by Snow & van Hemel, 2008). The aim of this work was to identify the range of skills emerging in early childhood that have continuing predictive significance (including language development and early literacy) and to identify appropriate methods for measuring their development. Based on the view that the assessment of children (including language development) has one fundamental purpose, namely to support the best possible development of all children, the report recommends two general guidelines for the development of assessment materials: (1) the purpose of the assessment must be to provide a basis for decisions on the part of the ECEC center, and (2) the assessment should be part of a systematic and coherent effort that encompasses health, educational, and family aspects of the child’s life. These two general guidelines have been followed in the development of the first and subsequent updated versions of the Danish-language assessment instruments described in this Element. The Snow & van Hemel (2008) report for the National Academy of Sciences also strongly recommends that assessment materials be evidence-based in terms of what is measured, how it is measured, and the way in which the measurement is subsequently used.

In keeping with these recommendations, the first version of the language assessment instrument from 2007, as well as all subsequent updates and new developments, was developed on the basis of a number of general principles: The selection of the language and literacy dimensions to be assessed is based on research documenting the relationship of early language and literacy skills to later reading skills. Subscales and individual items have been tested in multiple pilot studies. Standardized age- and gender-specific norms are provided. Fixed cut-off points for typical versus nontypical language development are established to standardize the interpretation of results. Standardized administration procedures have been developed and described. Internal and external psychometric properties are documented and are accessible to users.

Based on the requirements on the part of the Ministry for Children and Education and the research-based guidelines mentioned earlier in this section, the first version included subscales assessing language production (vocabulary, complex grammar, and pronunciation), language comprehension, and aspects of pragmatic skills (Bleses et al., 2010). As the name indicates, the first version targeted only 3-year-old children; that is, children around 36 months of age. The latest version, Language Assessment 3–6 (hereafter LA 3–6), which is documented in this section, covers ages up to 6 years. Although the number and type of subscales have changed in several revisions in order to improve the reliability and validity of the instrument – using data from both norming studies and from “real-life” language assessments – the assessment instrument still assesses production, comprehension, and pragmatic skills.

Other revisions of the assessment have been implemented as a consequence of changes to the legislation governing the national language assessment program. In 2011, the age range of the language assessment instrument was expanded to include the assessment of children just before school start (age 5) (Bleses, Lum, et al., 2011). In 2015, the current language assessment instrument was finalized. The main change in the features compared to earlier versions is that the current instrument can be used to assess children in the entire age range from around 3 years (specifically 34 months) to around 6 years (specifically 71 months) with monthly norms, rather than being restricted to just 3-year-olds (version 1) or specific ages (version 2). Moreover, the language assessment instrument should make it possible to assess the development of Danish skills in children who acquire Danish as an additional language, but with detailed instructions provided in the manual on how to interpret results for this subgroup of children (see also Sections 3.5.3 and 4.6.3).

Given that the instrument measures several aspects of language and preliteracy skills, it is important that each subtest is as compact as possible. Needless to say, a test session with lengthy subtests that drag on for an hour or more would not yield valid estimates of a young child’s skills and, more importantly, would probably be an unpleasant experience for the child. In addition, in a context where ECEC educators administer language assessments to all – or at least a large proportion of all – children, expediency is important.

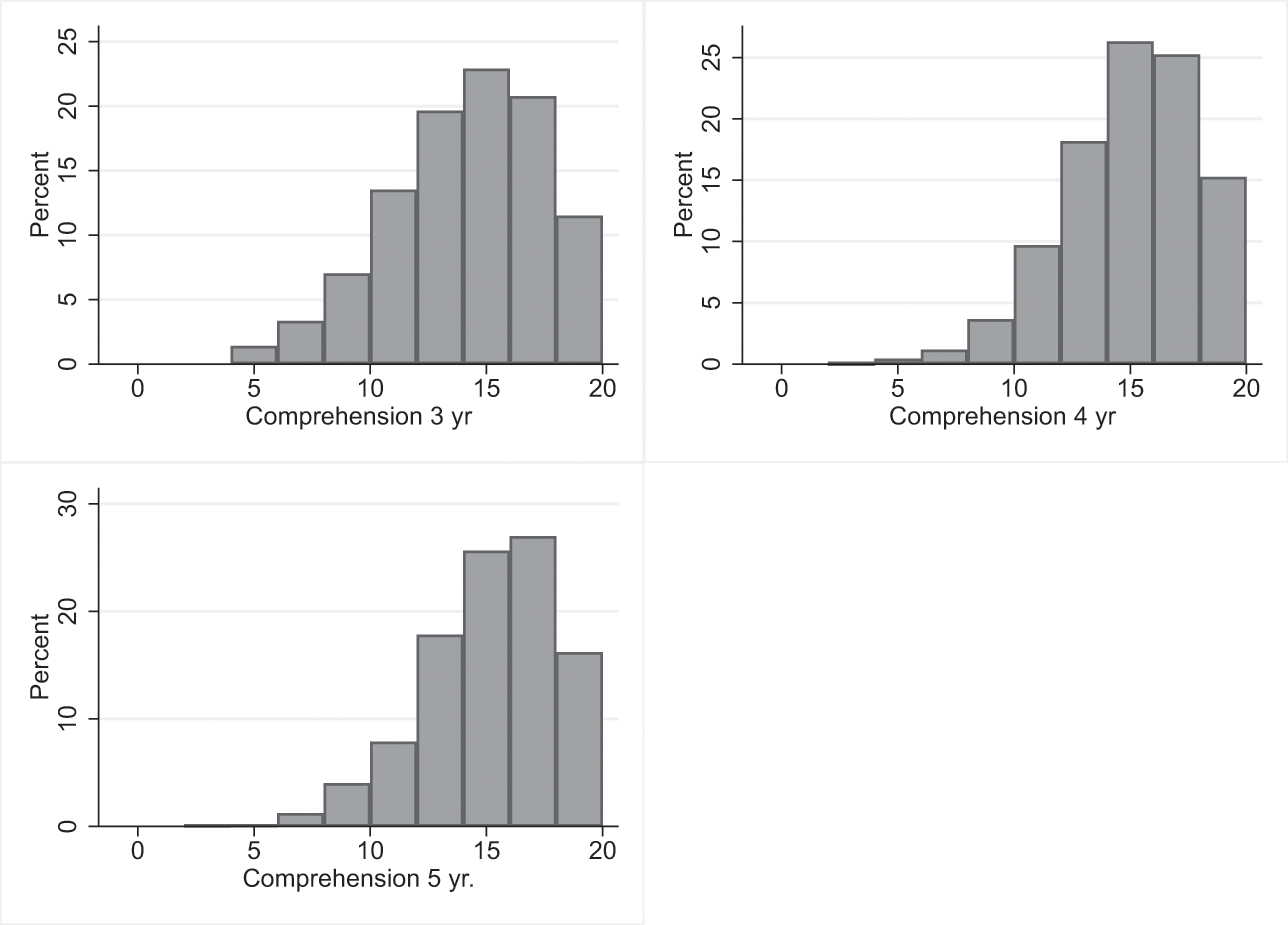

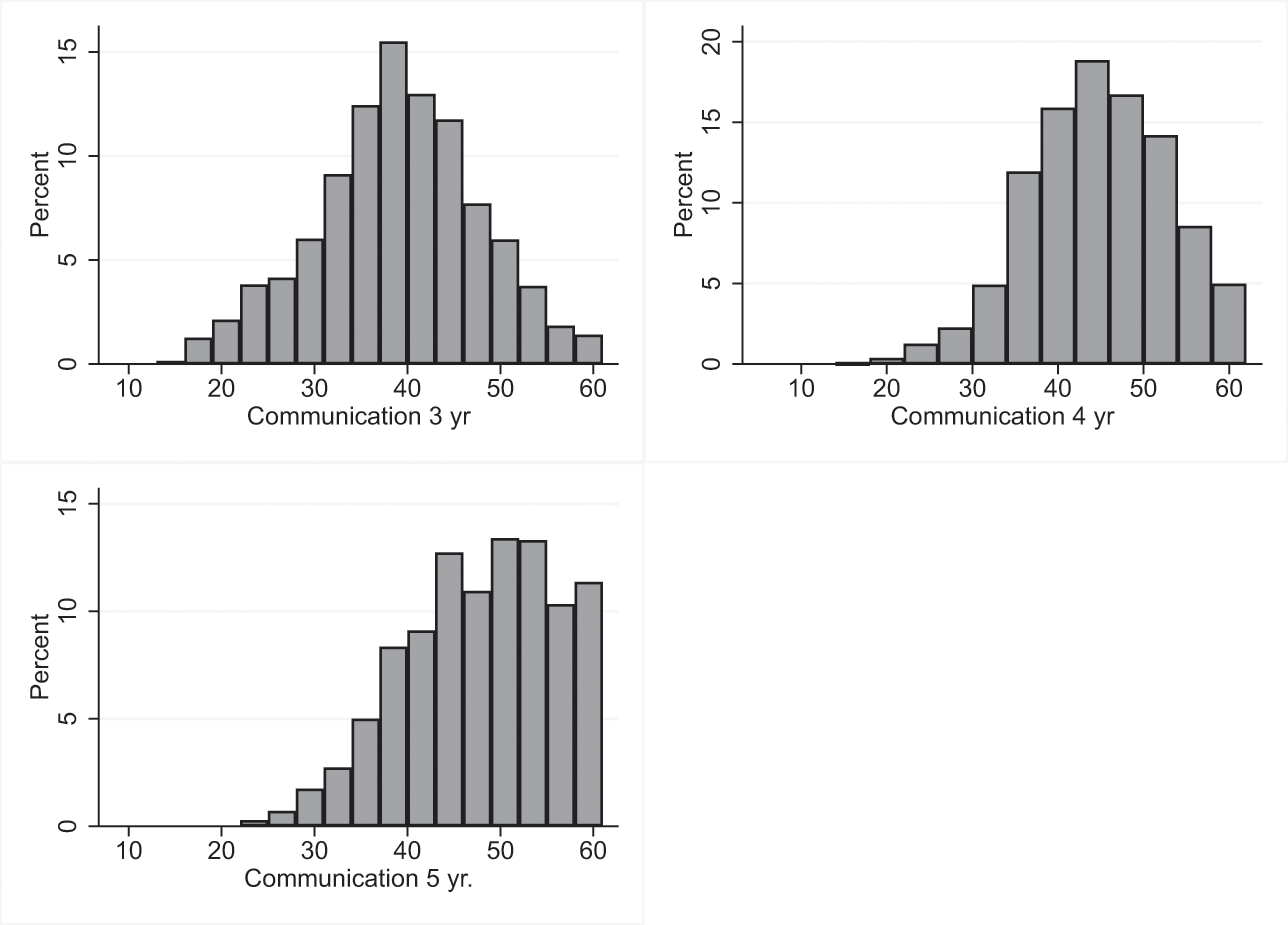

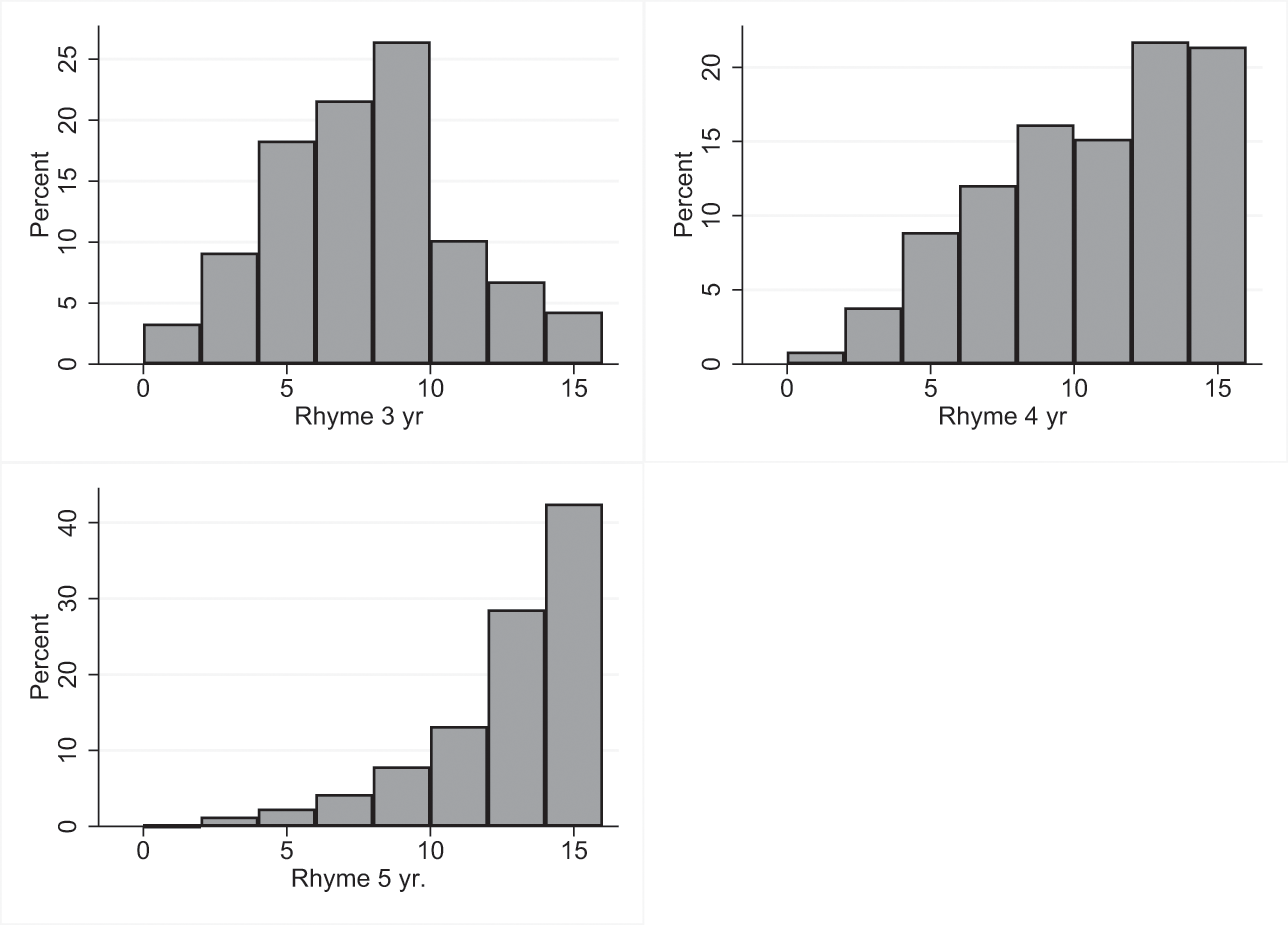

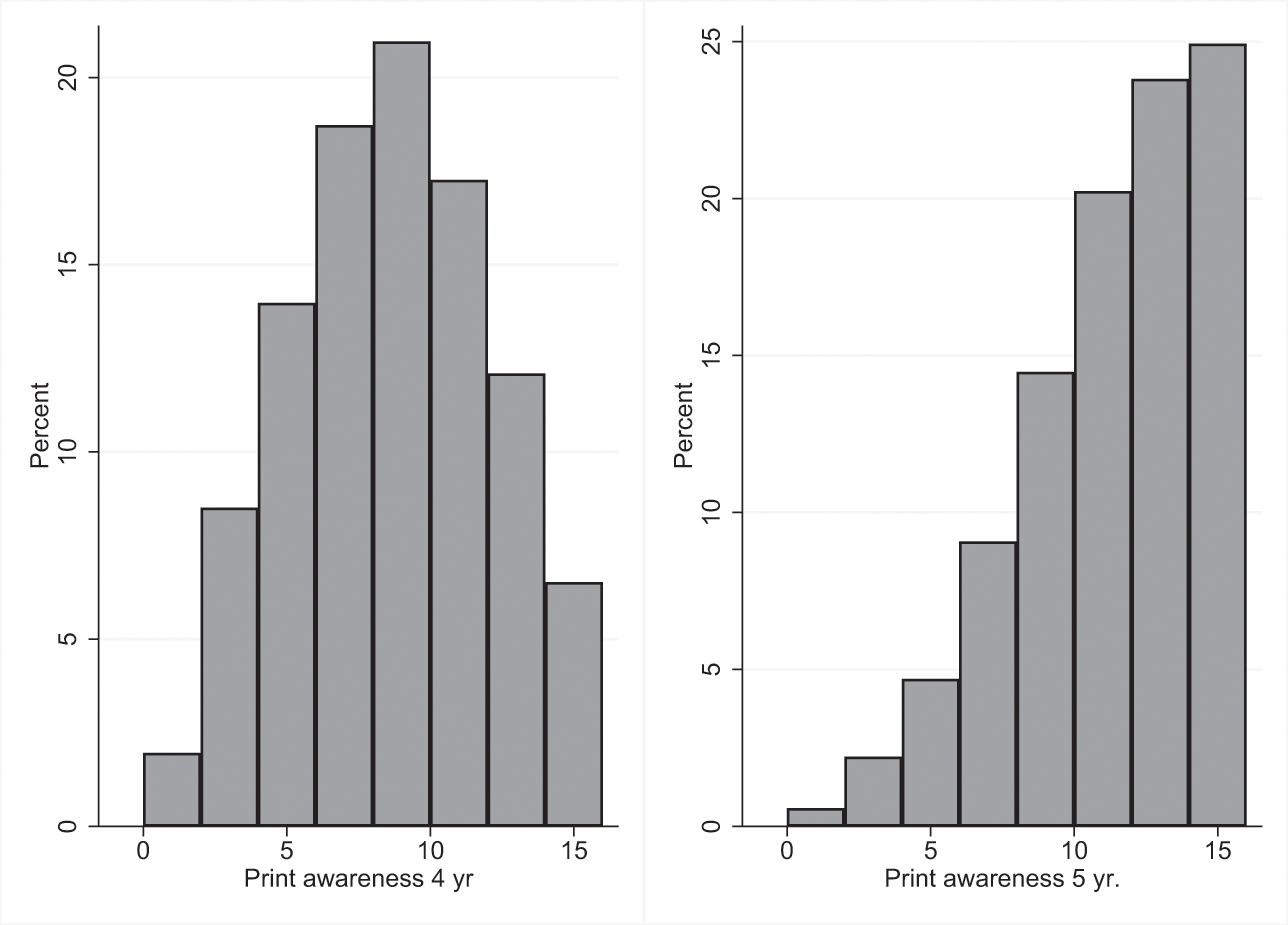

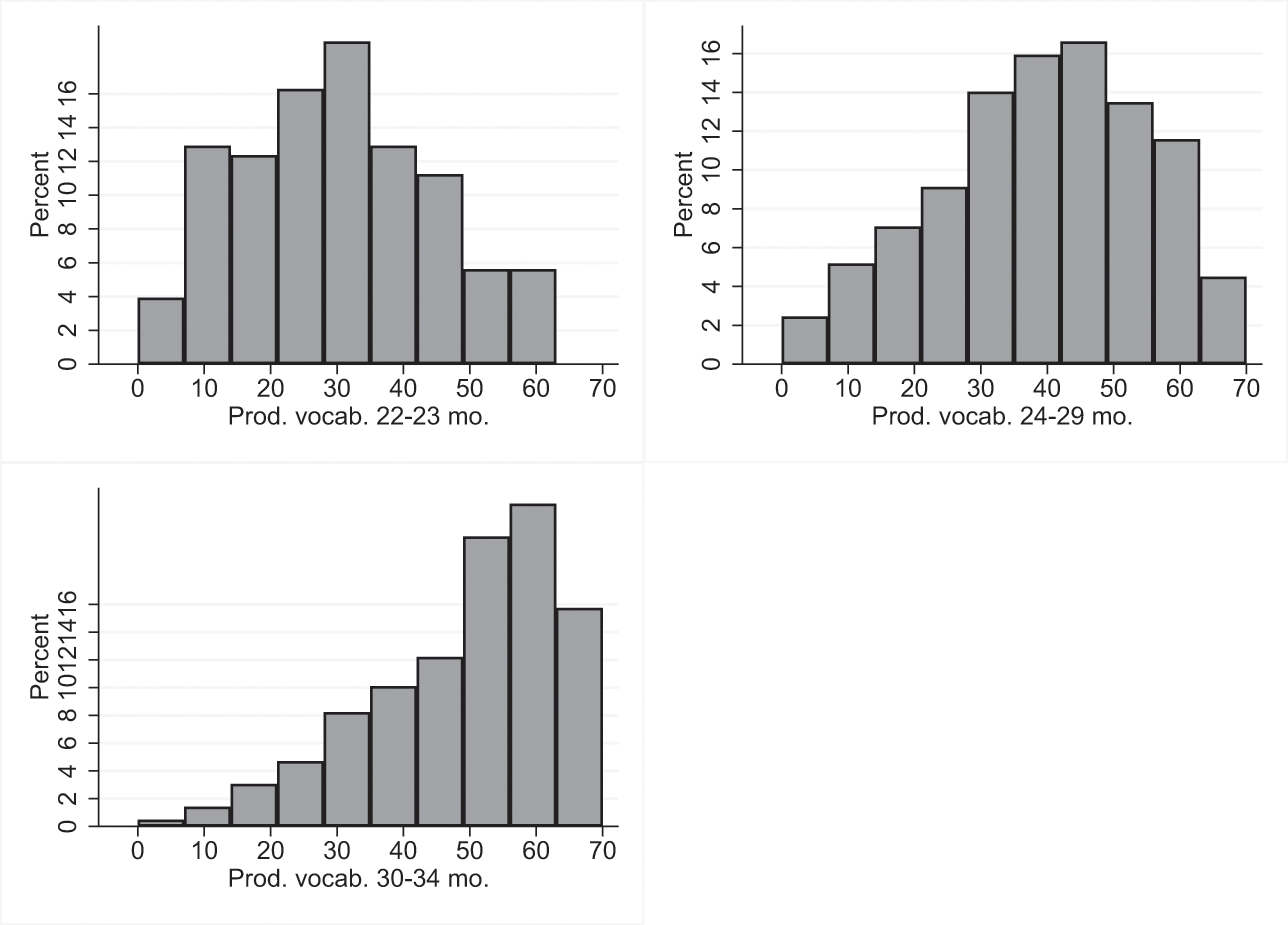

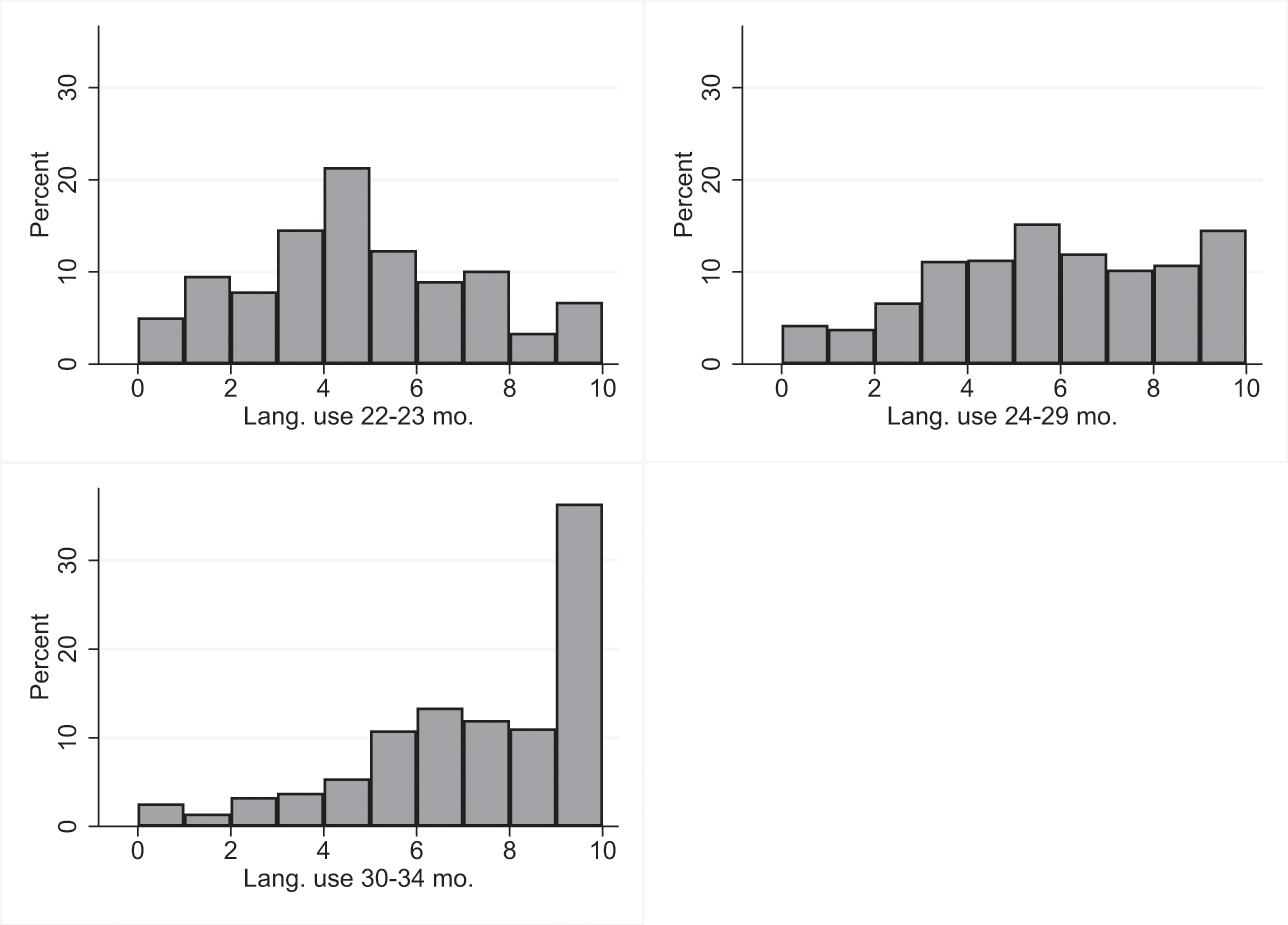

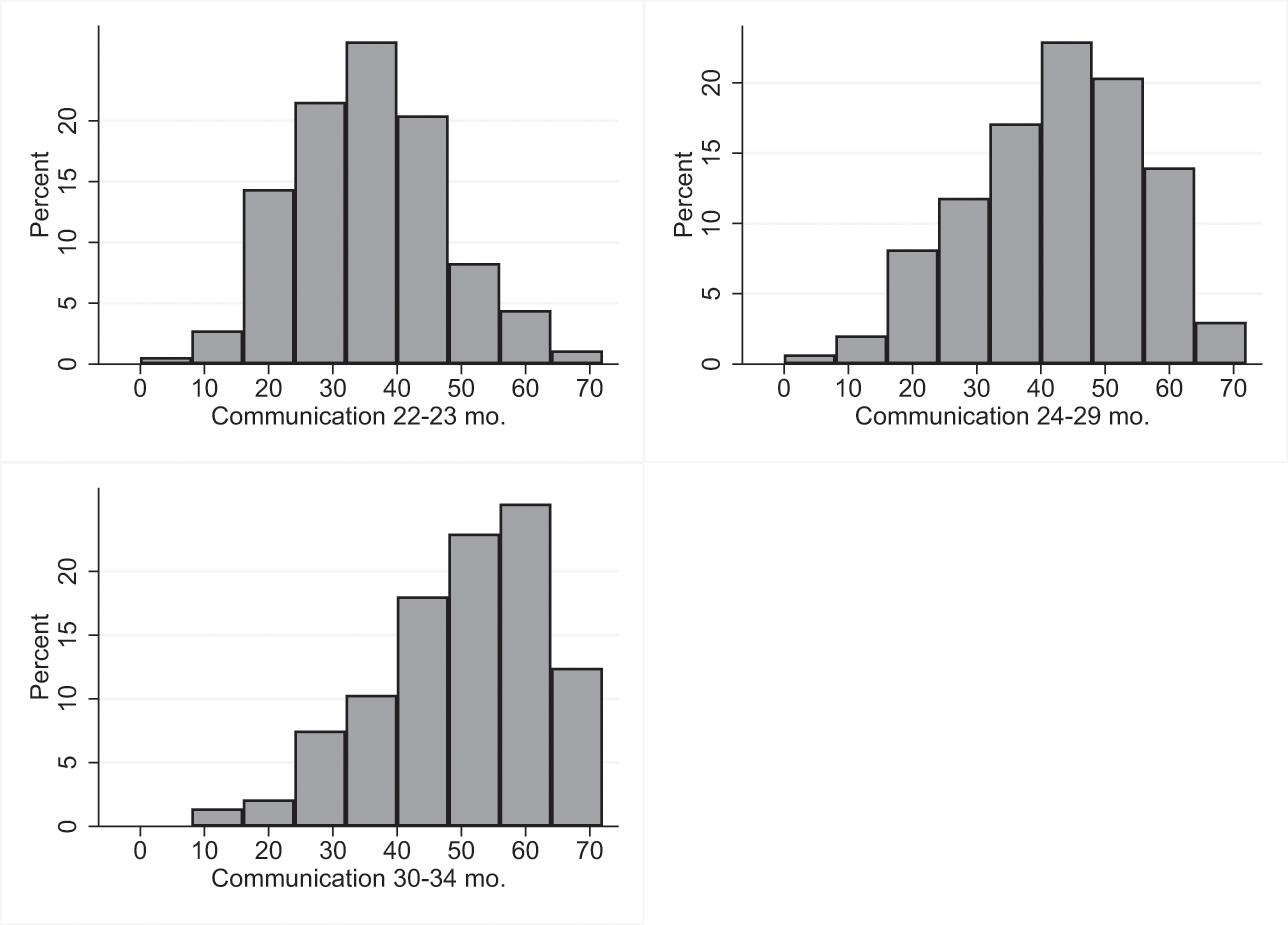

Therefore, via pilot tests, we have worked on reducing the number of items in each subtest to a minimum, which was still sufficient for obtaining a gradient in scores, although a normal distribution of scores was not always possible. One consequence of the move to limit the number of items is that we give age-dependent items for the subtests on Productive Vocabulary and Language Comprehension. For these subtests, some of the items change between age 3 and age 4 and between age 4 and age 5. A downside of this approach is that raw scores are not comparable between ages. This, however, is not a real problem for use by practitioners because the raw scores are translated into percentile scores using age-specific (monthly) and gender-specific norms. The age-dependent shift in items is only a problem if researchers want to use raw scores. One solution to this problem is to apply the inverse normal distribution function to the percentile scores to obtain a normal distribution of scores that would lend themselves to typical statistical analyses. An alternative solution is to assume that older children who receive harder items know all of the easier items that were only administered to younger children. Each solution has advantages and disadvantages, but the design of the subscale was ultimately developed with priority given to making it a relatively quick and reliable assessment for use in an educational context, with research purposes having only secondary priority.
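As a rough illustration of the first solution, the conversion from percentile scores to normally distributed scores can be sketched in Python. This is a minimal sketch and not the procedure used in the project: the function name `percentile_to_z` and the clamping margin `eps` are assumptions introduced here, and clamping is only one possible way of handling percentiles of 0 or 100, for which the inverse normal distribution function is undefined.

```python
from statistics import NormalDist

def percentile_to_z(percentile, eps=0.5):
    """Map a percentile score (0-100) to a standard normal score.

    `eps` is a hypothetical clamping margin: percentiles below `eps` or
    above 100 - `eps` are pulled inward so the inverse CDF is defined.
    """
    clamped = min(max(percentile, eps), 100 - eps)
    # Inverse of the standard normal cumulative distribution function.
    return NormalDist().inv_cdf(clamped / 100)

# Hypothetical percentile scores for a handful of children.
percentiles = [5, 25, 50, 75, 95]
z_scores = [percentile_to_z(p) for p in percentiles]
```

The resulting scores are symmetric around zero (the 50th percentile maps to 0), which is what makes them usable in analyses that assume approximately normal data.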

A reasonable question to ask is whether ECEC educators, who are not speech-language specialists, can reliably assess speech and language skills in children. In Section 3.7, we provide psychometric analyses that support the assumption that educators can do this. In addition, a recently published analysis of a precursor to LA 3–6 indicates that measurement error is relatively low when ECEC educators carry out the assessment (Haghish et al., 2021). The version examined by Haghish and colleagues had a high degree of overlap with LA 3–6. The data used were pretest data collected for two parallel language interventions for 3–6-year-olds (Bleses, Højen, Dale, et al., 2018; Bleses, Højen, Justice, et al., 2018). Using hierarchical linear modeling (Raudenbush & Bryk, 2002) with the five levels – child, intervention group, educator, classroom, ECEC center – Haghish and colleagues were able to estimate the degree of measurement error associated with the educator level. The degree of estimated measurement error (or variance inflation factor) at the educator level ranged from 4 percent for the subscale Letter Identification to 19 percent for the subscale Rhyme Detection.

In the following sections, we describe the current version of the instrument LA 3–6. Note that the age range implied in “3–6” does not include ages all the way up to just before age 7 but rather from just before the 3rd birthday (34 months of age) until the last month before the 6th birthday (71 months of age).

3.2 Selection of Subscales

Based on international and Danish research on children’s early language development (Bleses et al., 2007; Bornstein et al., 2004; Fenson et al., 2007; Hoff, 2013, 2014; Wehberg et al., 2007), we identified important milestones in the language development of 3–5-year-old children (see Section 1.3).

The selection of individual items was based partially on previous research, as previously mentioned (Snow & van Hemel, 2008), and partially on empirical results from a large-scale study of 6,000 children aged 8–36 months (Bleses et al., 2008a) and 13,000 children aged 3–6 years (Bleses, Højen, Dale, et al., 2018; Bleses, Højen, Justice, et al., 2018) combined with educational expert knowledge and feedback from users.

We want to stress the importance of involving representatives of future users – that is, educators – in the development of the material. They may give information about limitations on facilities that may make an otherwise good test hard to administer in practice. In addition, information for users may help encourage the acceptance of the instruments. For example, many users may have an opinion on whether or not a given picture is a suitable picture to illustrate a particular word. Many users may believe that a prototypical illustration of a word, yielding high identification rates, is by definition the best illustration. This relates to the different goals of ECEC educators and speech-language therapists. Whereas speech-language therapists are trained to obtain accurate assessments of children, including exposing and identifying weaknesses, ECEC educators are trained to help children succeed.

Finally, as previously mentioned, it was critical for us to include only tasks and items that were practical and suitable for reliable use by educational staff who were not trained speech and language specialists.

3.3 Content

It was a requirement of the Ministry for Children and Education, which commissioned the instrument, that it be sensitive to language development across the entire scale of language skills; that is, ranging from children who are very delayed to the most advanced children. Thus this instrument contrasts with many other instruments whose primary purpose is to identify children with poor language development and therefore do not distinguish between children with typical versus advanced language development. If, however, equivalent sensitivity across the entire range of skills could not be obtained, sensitivity at the low end of scores was a priority for our instrument.

In order to ensure that developmentally appropriate items are administered to children at each age within the target range, the items used at the three ages change somewhat in the Vocabulary and Language Comprehension subscales, as noted. For the Vocabulary subscale (twenty-five items), ten items were changed between ages 3 and 4 and between ages 4 and 5. In other words, the overlap between ages 3 and 4 and between ages 4 and 5 comprised fifteen items or 60 percent. The overlap between age 3 and age 5 comprised five items or 20 percent. For the Language Comprehension subscale (twenty items), five items changed between age 3 and age 4 and between age 4 and age 5. In other words, the overlap between ages 3 and 4 and between ages 4 and 5 comprised fifteen items or 75 percent. The overlap between ages 3 and 5 comprised ten items or 50 percent.
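The overlap percentages follow directly from the subscale length and the number of items exchanged at each age step. The short Python sketch below makes the calculation explicit; the function name `overlap_shares` is hypothetical, and the sketch assumes that the items exchanged between ages 4 and 5 are drawn from those already present at age 3, which is what the reported figures imply.

```python
def overlap_shares(n_items, n_changed_per_step):
    """Return the share of items shared by adjacent ages and by ages 3 and 5.

    Assumes the same number of items is exchanged at each age step and that
    items exchanged at the second step were already present at age 3.
    """
    shared_adjacent = n_items - n_changed_per_step
    shared_extremes = n_items - 2 * n_changed_per_step
    return shared_adjacent / n_items, shared_extremes / n_items

# Vocabulary: twenty-five items, ten exchanged at each step.
vocabulary = overlap_shares(25, 10)

# Language Comprehension: twenty items, five exchanged at each step.
comprehension = overlap_shares(20, 5)

print(vocabulary, comprehension)
```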

The instrument consists of subscales measuring oral language skills as well as subscales measuring preliteracy skills. Table 2 provides an overview of the subscales and the ages at which they are administered. Note two distinct types of age dependency in the administration: (1) whether or not a subscale is administered at a given age, and (2) whether the particular items administered are age dependent.

3.4 Administration

For the administration of LA 3–6, the following materials have been developed (see Table 3). All materials have been developed for paper format but have been integrated into an IT platform by commercial as well as governmental suppliers, the latter offering the platform free of charge. The purpose of IT platforms is to facilitate the easy and consistent implementation of the measure, along with the recording of responses and the conversion to norm-based percentile scores for documentation of the individual child’s skills and skill development over time. The platform enables educators to use a tablet or laptop computer to read directions and record the child’s responses. Some platforms also enable the visual presentation of stimuli, although to optimize comparability with the presentation mode of stimuli in the norming study, we recommend presenting stimuli from a picture booklet. That said, it is an open question whether contemporary and future children who may be more familiar with tablets than with books are comparable in this regard with the children in the norming study, which was conducted in 2015, when young children were probably less familiar with tablets.

Table 3 Overview of which materials are used for which parts of the assessment process

| Material | Administration | Results | Interpretation | Intervention |
|---|---|---|---|---|
| Assessment form | ✓ | | | |
| Picture booklet | ✓ | | | |
| Results report (IT system) | ✓ | ✓ | ✓ | |
| Supplementary questions to parents | ✓ | | | |
| Manual | ✓ | ✓ | ✓ | |

3.4.1 Assessment Form

The assessment form includes all subscales (i.e., Vocabulary, Rhyme Detection, etc.) and their associated test items. For each subscale there is a brief guide containing a description of the subscale, information about which materials are to be used, and a description of how to perform the assessment task with the child (e.g., rhyming task or language comprehension task) and how it is scored. The child’s response can be manually recorded on paper and subsequently entered in the IT system, or it can be registered directly in the IT system. This requires that the assessor has a computer or tablet at hand; feedback from users indicates that some experience is required to use a computer or tablet for registering the child’s response while at the same time keeping a full focus on the child in order to keep the child engaged in the tasks.

3.4.2 Assessment of the Child on Each Subscale

Four of the assessment subscales require the use of materials for the child to look at; that is, pictures or letters. These subscales are Language Comprehension, Productive Vocabulary, Rhyme Detection, and Letter Knowledge. Examples of these are shown in Figures 1–3.

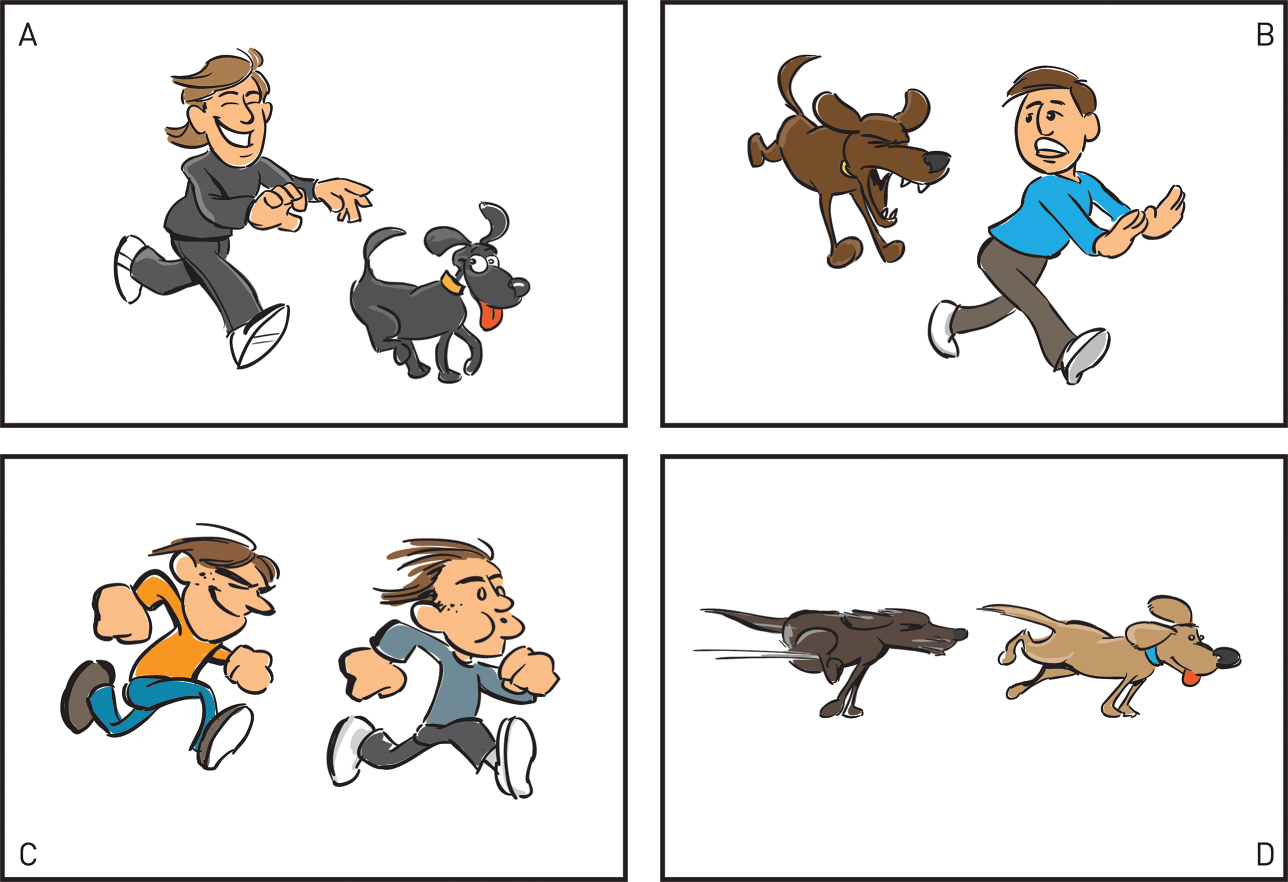

Figure 1 Example of pictures for Language Comprehension. The child has to point to one of four pictures corresponding to a sentence uttered by the administrator

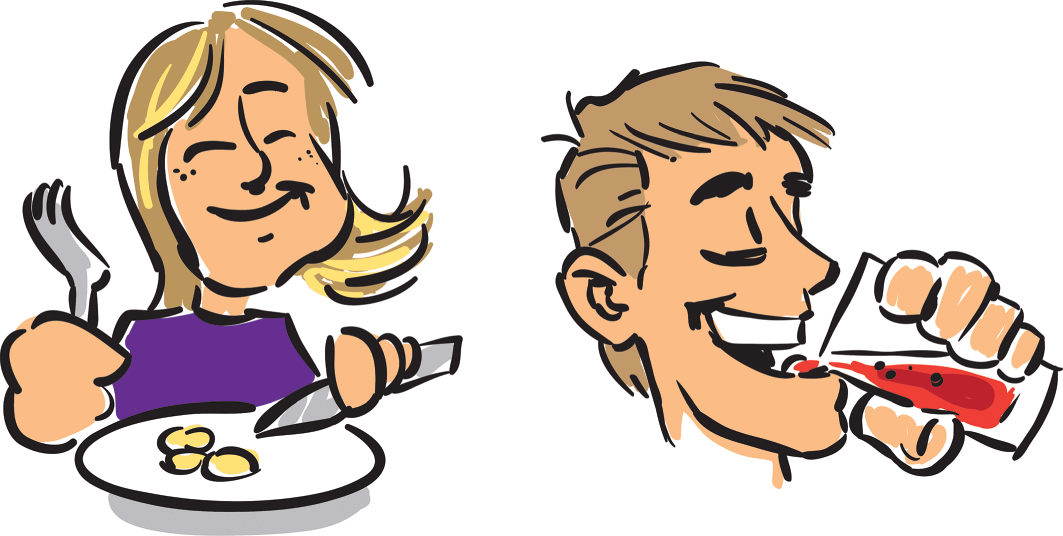

Figure 2 Example of word elicitation pictures for Productive Vocabulary assessment. Contrasting pictures are used to elicit less tangible or illustratable words such as “drinking”: The woman is eating. What is the man doing?

Figure 3 Example of pictures for a Rhyme Detection item using the three words hus [huːʔs] (“house”), mus [muːʔs] (“mouse”), and sol [soːʔl] (“sun”).

For Language Comprehension, the child is asked to choose one of four pictures corresponding to a sentence uttered by the administrator; for example: Where is the boy being chased by the dog? (see Figure 1).

For Productive Vocabulary, the child is asked to label an element of a picture. Two-thirds of the words are nouns, two words are adverbs (place and time), and the remaining words are approximately equal numbers of adjectives and verbs. For nouns, the child is simply asked What is this? For other categories, where it might not be obvious which of the multiple elements of the picture is to be named, two contrasting pictures are given to help elicit the relevant word; for example: The woman is eating. What is the man doing? (see Figure 2).

For Rhyme Detection, three pictures are shown, and the child is asked to point to the two pictures of objects whose names are words that rhyme. The administrator names the three pictures and asks the child to point to “the two things that sound alike; those that rhyme” (see Figure 3).

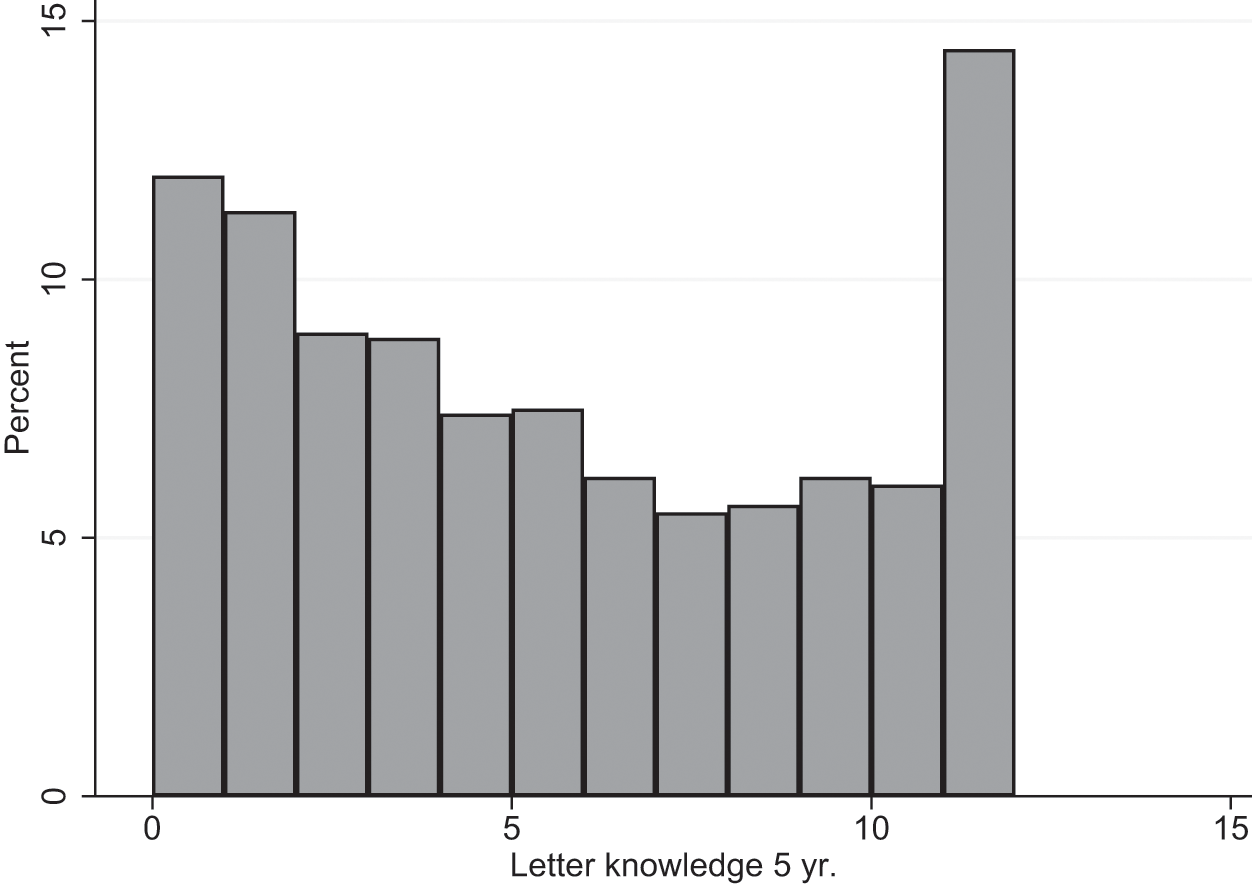

For assessment of Letter Knowledge, twelve capital letters are shown in a table, and the child is asked to name each of the letters: I C J F E G O S A L D K. There are twenty-nine letters in the Danish alphabet. Testing all letters would be time-consuming; therefore, a subset of letters that had been identified and used in a previous version of the instrument was carried over to the present version.

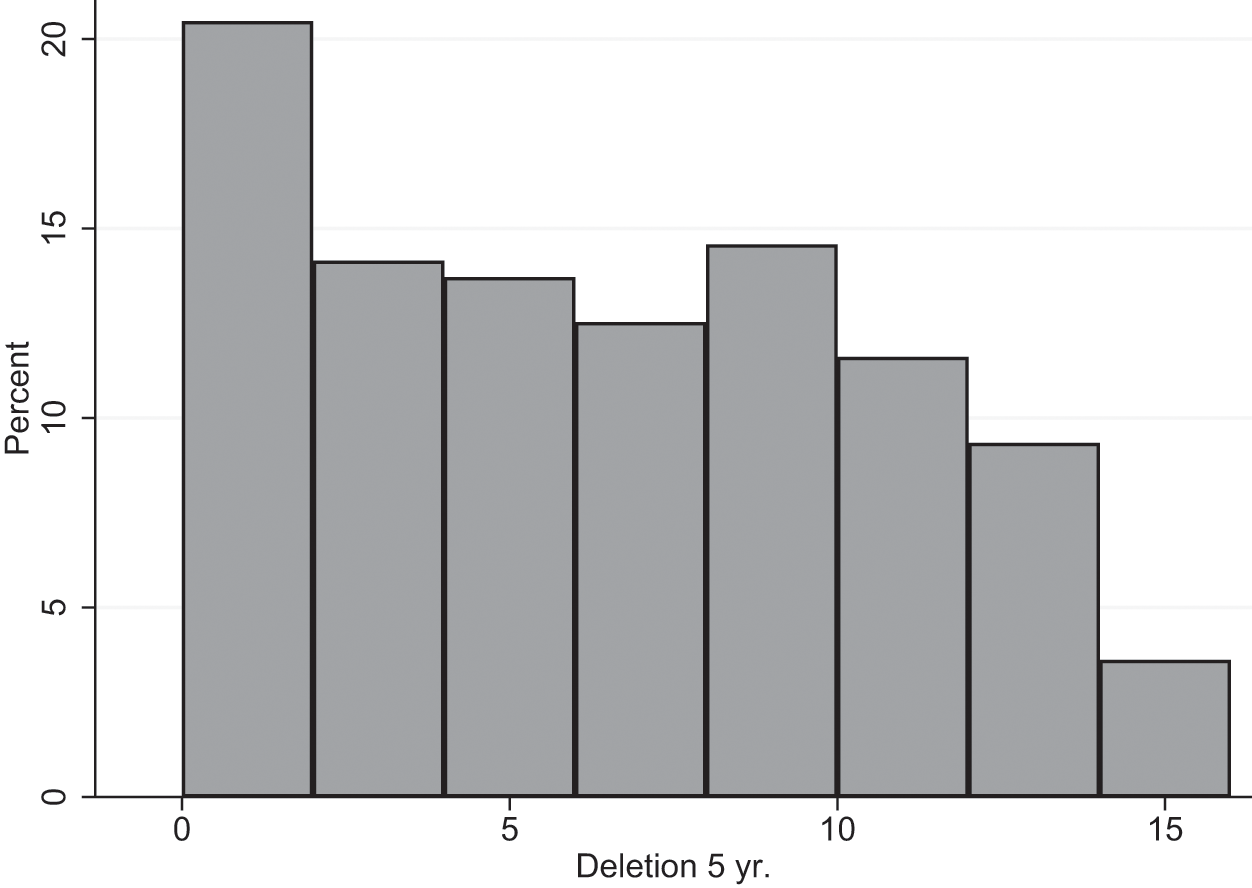

The Deletion task has three blocked levels of difficulty that require the child to say what is left after deleting (1) one word-part of a compound (e.g., Say snowman without man), (2) a syllable (e.g., Say window without dow), or (3) a sound (e.g., Say cold without khh). The deleted part can be at either the beginning or the end of the word. The remaining part always forms a word in its own right (such as snow, win, and old in the English examples).

For the assessment of Print Awareness, the assessor uses a picture book of their own choice (not provided with the test). The book must have both text and pictures. The assessor asks the child sixteen questions related to book reading, generally increasing in difficulty, starting with handing the book to the child and asking What is this? Later questions pertain to where to start reading on a page and pointing out examples of a word, the last word on a page, or the last letter in a given word.

For assessment of the child’s communicative skills, the child is not directly involved. An educator who is familiar with the child from day-to-day care rates how frequently (never, seldom, often, or always) a child would give a particular communicative response in a hypothetical communicative situation. For example, A child or an adult talks to the child on the playground. The child reacts by answering the other child/the adult. More advanced examples include You are talking to the child, but the child seems to not understand. The child asks clarifying questions and At home, the child has heard their parents talk about an experience. The child explains to you what the parents were talking about.

3.5 Results of the Assessment

3.5.1 The Results Report

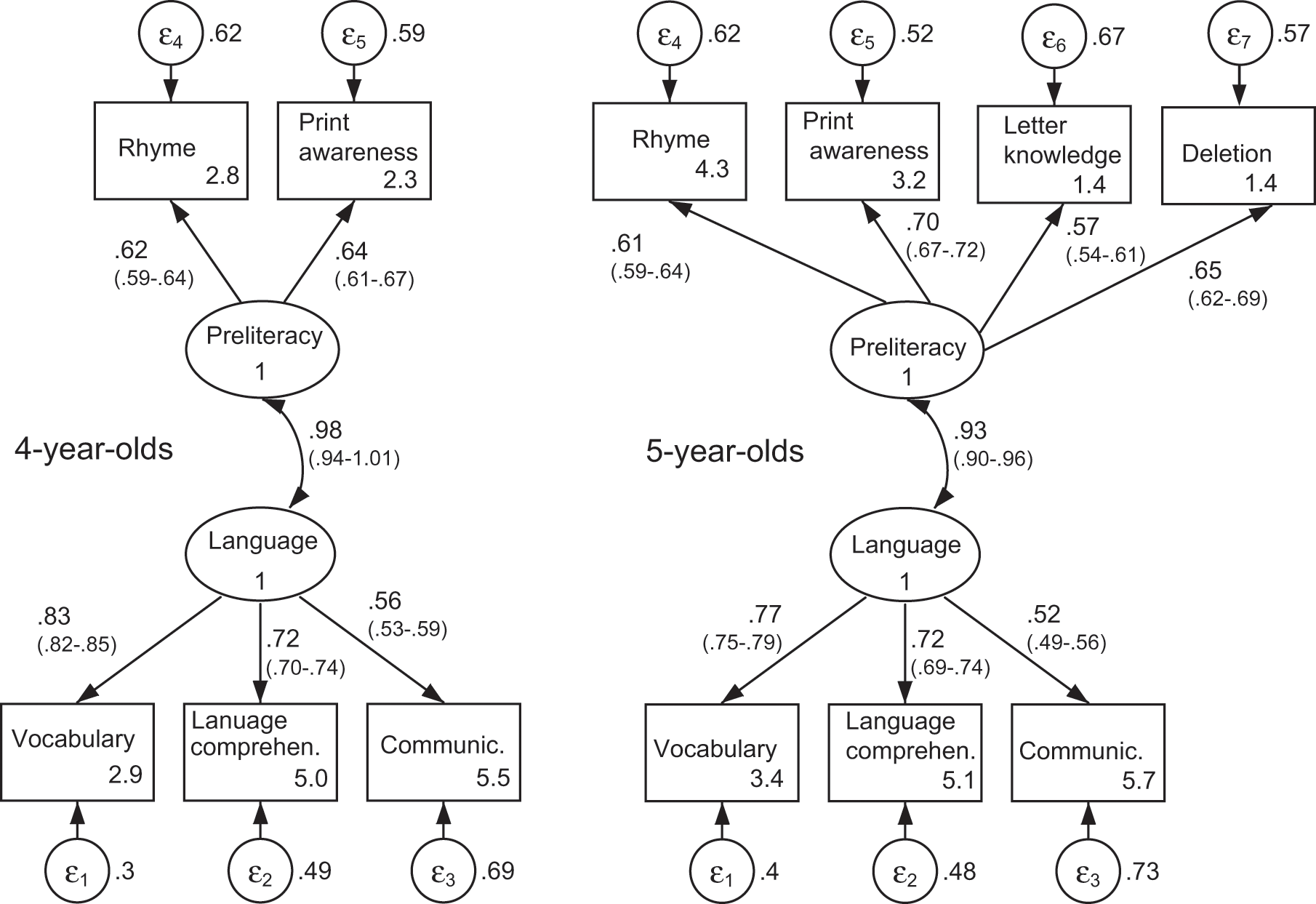

Based on the number of items answered correctly and the age and gender of the child, the child’s performance can be compared with norms (available to potential IT platform contractors upon request from the Danish Ministry of Education). The resulting report contains two overall summary scores expressed as percentiles: a language summary score and a preliteracy summary score. The language summary score is generated by standardizing the language subscale scores (Vocabulary, Language Comprehension, and Communication), averaging the standardized scores, and subsequently converting the mean to a percentile score (see Section 3.7.4). Likewise, the preliteracy subscale scores (Rhyme, Print Awareness, Letter Naming, and Deletion) are standardized and averaged to generate the preliteracy summary score. Note, however, that the administration of preliteracy subscales depends on age (see Table 2). Because the only preliteracy subscale administered to 3-year-olds is Rhyme, no preliteracy summary score is generated for 3-year-olds. For each summary score, the mean is referenced against the norms for the child’s age and gender to produce a language summary percentile score and a preliteracy summary percentile score.
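The three steps just described (standardize, average, convert to a percentile) can be illustrated with a minimal Python sketch. It is not the procedure implemented in the IT system, which is described in Section 3.7.4 and may differ in detail. The norm means and standard deviations, the reference sample, the child's raw scores, and the function names are all invented for illustration, because the actual norms are not reproduced here.

```python
from statistics import mean

# Hypothetical norm parameters (mean, SD) per language subscale for ONE
# age-and-gender group. These numbers are invented for illustration.
HYPOTHETICAL_NORMS = {
    "Vocabulary": (20.0, 5.0),
    "Language Comprehension": (15.0, 4.0),
    "Communication": (30.0, 6.0),
}


def mean_standardized_score(raw_scores, norms=HYPOTHETICAL_NORMS):
    """Standardize each subscale raw score and average the results."""
    z_scores = [
        (raw_scores[name] - norm_mean) / norm_sd
        for name, (norm_mean, norm_sd) in norms.items()
    ]
    return mean(z_scores)


def percentile_rank(value, reference_values):
    """Percentage of reference values at or below `value`, kept within 1-100."""
    at_or_below = sum(1 for ref in reference_values if ref <= value)
    rank = round(100 * at_or_below / len(reference_values))
    return max(1, min(100, rank))


# Invented example: one child's raw scores and a tiny reference sample of
# mean standardized scores for the same age-and-gender group.
child = {"Vocabulary": 25.0, "Language Comprehension": 15.0, "Communication": 24.0}
reference_sample = [-2.0, -1.0, 0.0, 1.0, 2.0]

summary_z = mean_standardized_score(child)
summary_percentile = percentile_rank(summary_z, reference_sample)
```

In this invented case the child is one standard deviation above the norm on one subscale and one below on another, so the mean standardized score is zero and the child falls in the middle of the small reference sample.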

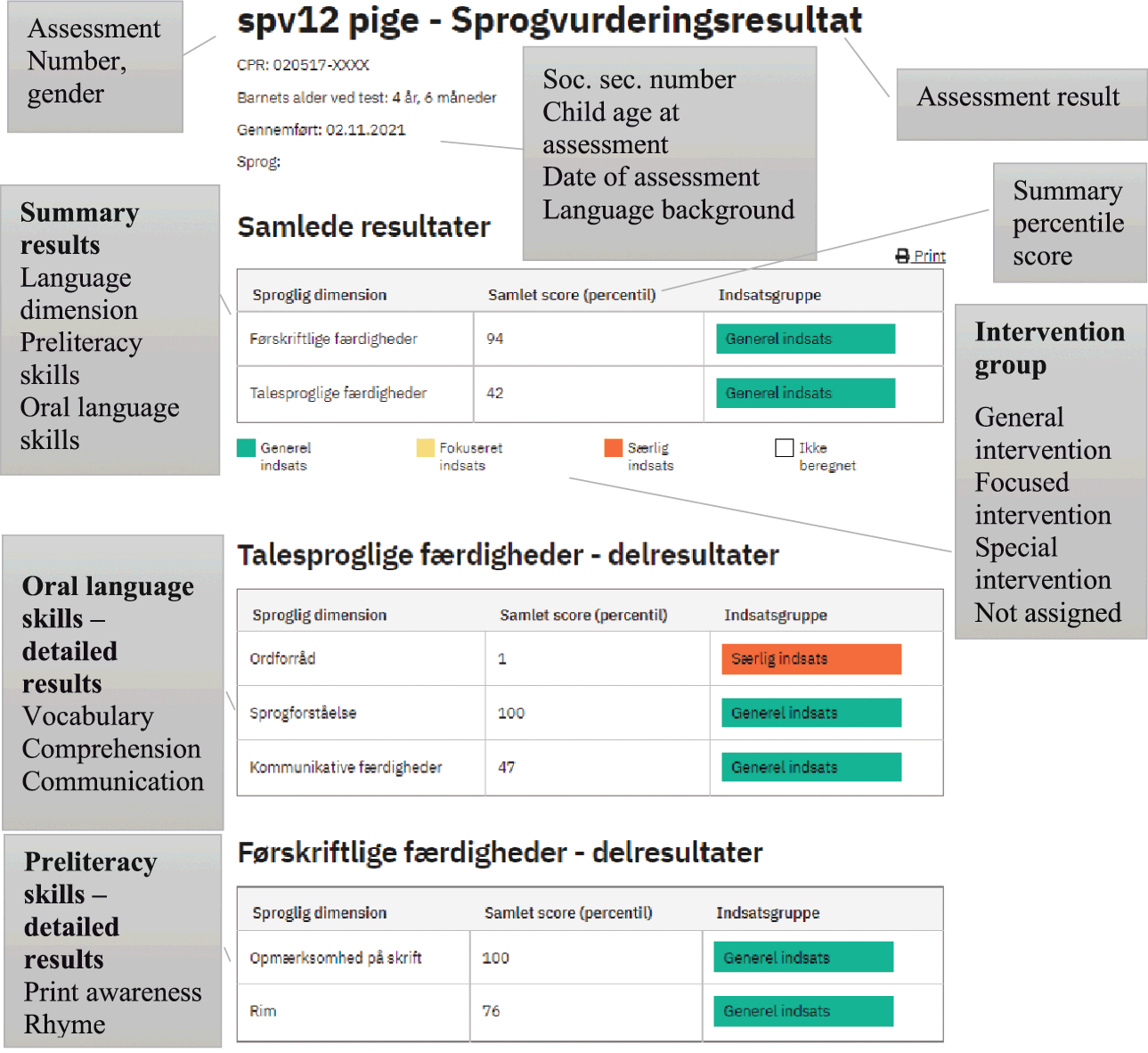

The report also shows the percentile scores of each of the subscales completed by the child to help generate a “language profile” for each child; that is, a profile showing which dimensions of language or preliteracy skills are strong or weak in the child. The two summary scores and the language profile scores also indicate the age- and gender-specific 5th and 15th percentiles. An example of a results report is given in Figure 4 for a fictional child.

Figure 4 Example results report showing percentile scores for the two summary scores and some of the individual subscale scores for a fictional child

The 5th and 15th percentile cut-off points were set to facilitate the best use of later resources. The requirements of later intervention based on the results of individual children were operationalized into three levels of skill development for each subscale as well as for the summary scores for language and preliteracy – (1) “low” (percentiles 1–5), (2) “at-risk” (percentiles 6–15), and (3) “typical” (percentiles 16–100) – for determining the language support and educational needs of each child. Rather than outputting the categories low, at-risk, and typical for each child, the report assigns a corresponding intervention category; namely, “Special intervention” (percentiles 1–5), “Focused intervention” (percentiles 6–15), and “General intervention” (percentiles 16–100).
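The mapping from percentile score to intervention category follows directly from the two cut-off points. The short Python sketch below shows one way to express it; the function name is hypothetical and the code is an illustration of the rule stated above, not an excerpt from the IT system.

```python
def intervention_category(percentile):
    """Map a percentile score (1-100) to the category shown in the report."""
    if not 1 <= percentile <= 100:
        raise ValueError("percentile must be between 1 and 100")
    if percentile <= 5:
        return "Special intervention"   # skill level "low"
    if percentile <= 15:
        return "Focused intervention"   # skill level "at-risk"
    return "General intervention"       # skill level "typical"
```

The same rule applies to each subscale and to the two summary scores, so a child may receive different categories on different rows of the report.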

Although using percentiles has the advantage of yielding comparable scores across ages, we have also consistently found that the concept of percentiles is difficult to communicate to users who are ECEC professionals but not necessarily used to manipulating numbers. However, it is usually possible to explain the concept by beginning with the concept of halves, the upper half and the lower half of scores, then progressing to the concepts of quartiles, deciles, and percentiles.

3.5.2 Interpretation of Results

We recommend in the manual that the lowest-scoring 5 percent of the children be referred to a professional speech therapist for a complete professional speech pathology assessment and, potentially, specialized intervention. This cut-off point corresponds to the existing mean referral rate to speech therapists across municipalities in Denmark for 3-year-olds of 4.3 percent (Reusch, 2006). Children with scores between the 6th and 15th percentiles are referred to the ECEC center for focused intervention and a later reevaluation for language delay. It is not within the scope of the instrument and manual to specify appropriate interventions, but the manual does give examples of how to target the specific weaknesses of a child according to their profile (e.g., low scores on Rhyme or Vocabulary). The percentile scores for each subscale may help guide educators in best supporting the child by identifying specific domains within language and preliteracy for which the child needs the most support. The 15th percentile cut-off point is based on findings from studies of language delay, which have reported prevalence rates ranging from 2.3 percent to 19 percent (Nelson et al., 2006). The remaining 85 percent of the children can be regarded as having language development within the normal range. Thus, these children are recommended to continue the general or usual instructional activities in the ECEC center.

3.5.3 Interpretation of Results for Bilingual and Multilingual Children

The LA 3–6 was developed specifically to provide a norm-referenced assessment of Danish language development in children learning Danish only. However, a large and growing proportion of children in Denmark speak one or more languages at home in addition to Danish. Therefore, assessing only Danish-language skills does not provide a full picture of the overall language skills of children learning multiple languages. Although it would be highly useful to establish norms for bilingual children – either for both/all of a child’s languages or just the majority language – this is not feasible, because no one set of norms would be sufficient, as discussed in Section 1.6.

With the given limitations of only assessing the majority language – Danish – in bilingual and multilingual children, it is important to give extra attention to the interpretation of their assessment results, as their development is highly variable. First, even though more bilingual than monolingual children score below the 15th percentile cut-off point, a large number of bilingual children do not. For these children, there is little reason to suppose that they have any problems with language development, because they score within the typical language development range for monolingual children even in their second language. Second, educators are advised to base their evaluation of the child’s language development more on preliteracy subscales than on oral language subscales. This is because bilingual children have been found to resemble or even exceed monolingual children with respect to preliteracy skills such as rhyming, deletion, and letter knowledge, presumably because such skills translate better between languages than do oral language skills and/or because the bilingual experience facilitates better metalinguistic and phonological awareness (Hammer et al., 2014; Højen et al., 2019; Højen et al., 2021). Therefore, low scores on, for example, Vocabulary and Language Comprehension should raise less concern if the child scores highly on, for example, Rhyme and Deletion than if the child generally scores low on both oral language and preliteracy subscales. In the former case, it is likely that the child has had too little exposure to Danish to score within the typical range for oral language skills. Educators are encouraged to seek information from the child’s parents about when the child began to be regularly exposed to Danish and the proportion of Danish input in order to best interpret low scores in the language assessment.

3.5.4 Supplementary Questions for Parents of Bilingual and Multilingual Children

To provide additional help with the interpretation of the assessment results of these children, we compiled ten language-independent questions in a questionnaire inspired by Restrepo (1998). The questions are primarily for parents of bilingual children with low percentile scores in the language assessment. In these cases, it is crucial, but difficult, to determine whether the low scores are due to general language learning difficulties or due to the child being in the early phase of Danish second-language acquisition. The questions for parents are given in Table 4.

Table 4 Supplementary questions primarily for parents of bilingual children to help educators determine whether low scores on the Danish majority language assessment are indicative of general language learning difficulties or due to the child being in the early phase of majority language acquisition

| Language domain | Specific question |
|---|---|
| General | 1. For your child’s age, does your child have problems communicating with you? |
| | 2. Do your friends or family think that your child is delayed in talking? |
| | 3. Did either of the parents have difficulties learning to talk or learning to read? |
| Pronunciation | 4. For your child’s age, does your child have difficulty pronouncing words? |
| | 5. Do your friends or family think that your child is difficult to understand? |
| Vocabulary | 6. Do you think your child has difficulty learning new words? |
| | 7. Does your child often point or use a general word like “that thing” rather than the specific word; for example, “the ball”? |
| Grammar/production | 8. For your child’s age, does your child produce very short sentences? |
| | 9. Is it hard to tell if your child is talking about one or more objects or people, because your child uses the words incorrectly? For example, saying “I got a good friends.” |
| | 10. For your child’s age, do they often make mistakes when talking about things in the past? For example, saying “Yesterday, I play with Tom!” |

As the wording shows, the questions must be answered with reference to the child’s age, and as such, the questions require some insight from the parents with respect to normal language development. The questionnaire was developed as an addendum to LA 3–6 and has not been validated. Therefore, it should be seen as a tool to help interpret the results of bilingual children when they receive low scores in the LA 3–6 proper, along with any other information available on the child’s mastery of the first language. The questions may be answered for just the minority language spoken at home or for both the minority and the majority language.

3.6 Norming Study

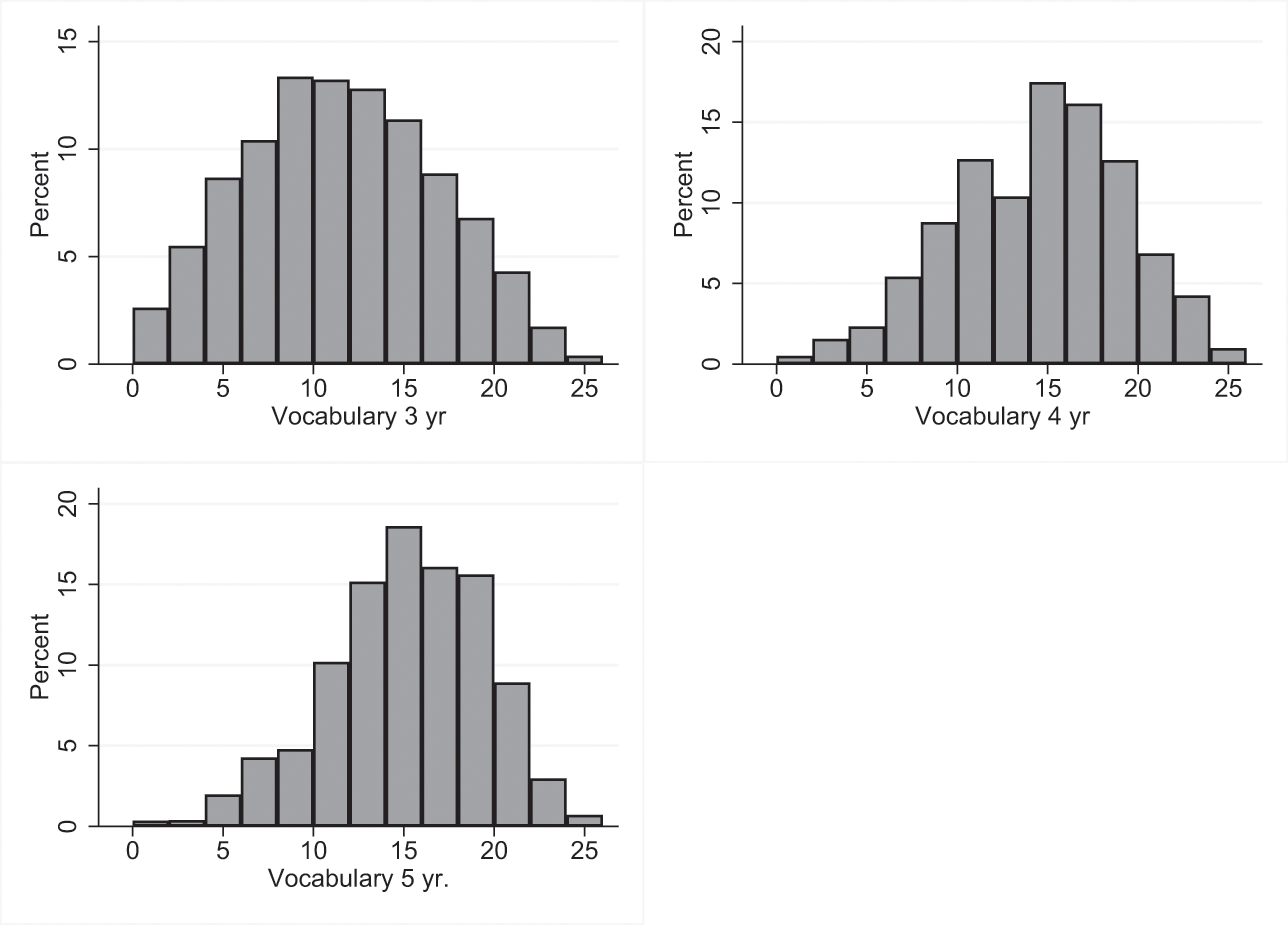

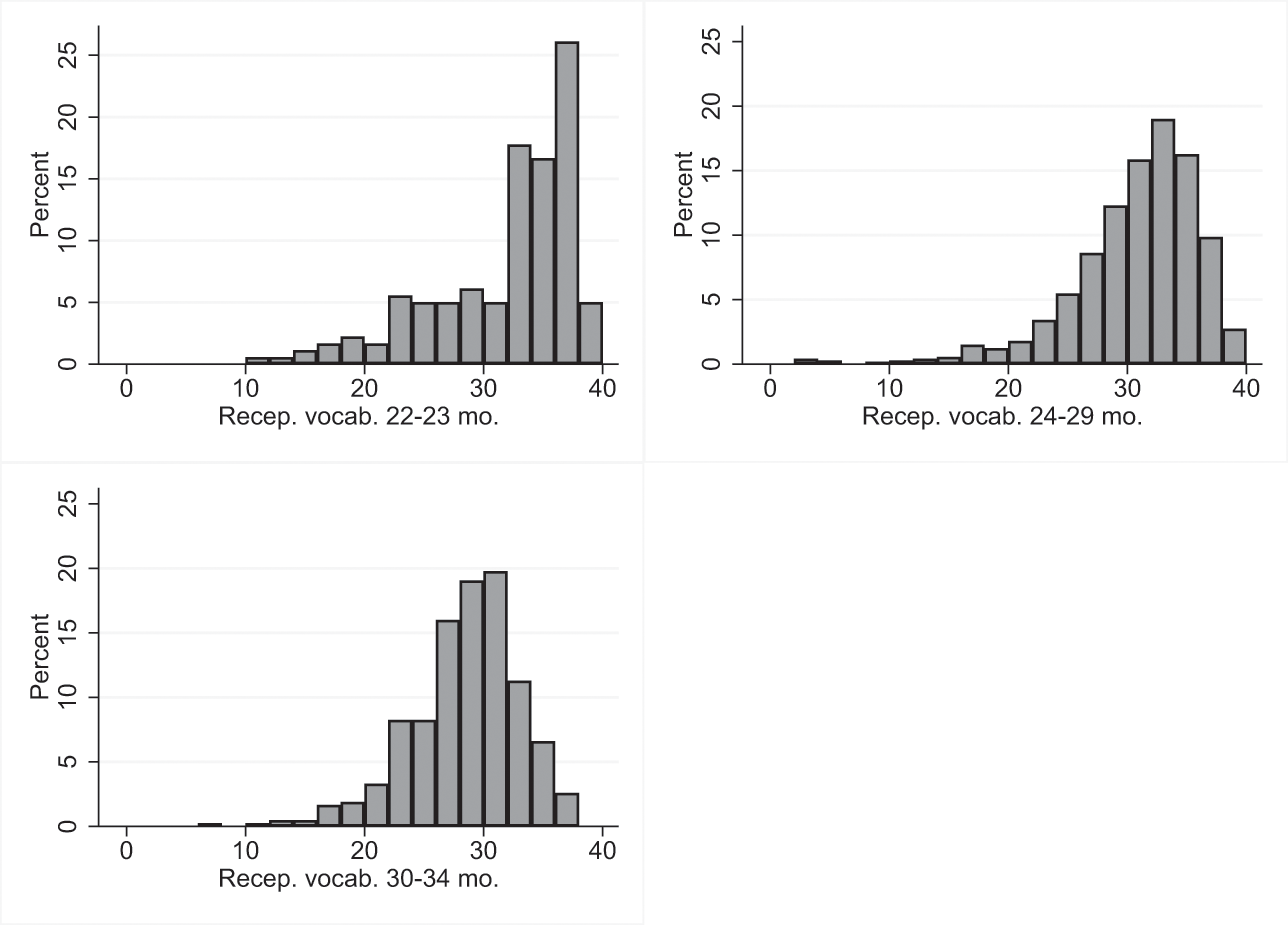

A norming study was conducted to develop age-specific (monthly) and gender-specific norms based on a population-representative sample of children. The norms were expressed as percentile scores, so that in later use of the instrument, it could be determined that a raw score of, for example, 14.5 on a subscale for a 40-month-old girl corresponds to a percentile score of 55; that is, a little above the median. For a 44-month-old girl, the same raw score would correspond to a slightly lower percentile score; for example, 45. One advantage of percentiles over standard scores is that percentiles are more accurate in identifying low extreme levels.
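To make the age dependence of the norms concrete, the following Python sketch looks up a percentile score in a small table keyed by age in months and gender. Only the two values taken from the example above (a raw score of 14.5 giving percentile 55 at 40 months and 45 at 44 months) come from the text, and even those are given there as illustrations. All other table entries, the table layout, and the function name are hypothetical; the sketch does not show the format in which the actual norms are distributed.

```python
from bisect import bisect_right

# Hypothetical norm table: (age in months, gender) -> list of
# (minimum raw score, percentile) rows sorted by raw score.
# Only the 14.5 rows echo the illustrative example in the text.
HYPOTHETICAL_NORM_TABLE = {
    (40, "girl"): [(10.0, 20), (14.5, 55), (18.0, 80)],
    (44, "girl"): [(10.0, 12), (14.5, 45), (18.0, 72)],
}


def percentile_for(raw_score, age_months, gender, table=HYPOTHETICAL_NORM_TABLE):
    """Return the percentile of the highest row whose threshold is <= raw_score."""
    rows = table[(age_months, gender)]
    thresholds = [threshold for threshold, _ in rows]
    index = bisect_right(thresholds, raw_score)
    if index == 0:
        return 1  # below the lowest tabulated threshold
    return rows[index - 1][1]


same_raw_at_40_months = percentile_for(14.5, 40, "girl")
same_raw_at_44_months = percentile_for(14.5, 44, "girl")
```

The same raw score yields a lower percentile for the older child because the comparison group of 44-month-olds scores higher on average.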

3.6.1 Sample